Abstract

Gibbs ringing is a feature of MR images caused by the finite sampling of the acquisition space (k-space). It manifests itself with ringing patterns around sharp edges which become increasingly significant for low-resolution acquisitions. In this paper, we model the Gibbs artefact removal as a constrained variational problem where the data discrepancy, represented in denoising and convolutive form, is balanced to sparsity-promoting regularization functions such as Total Variation, Total Generalized Variation and \(L_1\) norm of the Wavelet transform. The efficacy of such models is evaluated by running a set of numerical experiments both on synthetic data and real acquisitions of brain images. The Total Generalized Variation penalty coupled with the convolutive data discrepancy term yields, in general, the best results both on synthetic and real data.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Acquired MR signals lie in a frequency-phase space known as k-space, and MR images are obtained via the inverse Fourier transform (FT) of a finite k-space acquisition. Because of scan time and SNR limitations, the outer parts of the k-space, containing the high-frequency information of the image, are generally not recorded. Therefore, all MR images are affected by Gibbs ringing, a well-known artefact consisting of spurious oscillations in the proximity of sharp image gradients at tissue boundaries. These artifacts often occur at high-contrast interfaces since the partial k-space sampling cuts off the high-frequency components in these zones. Such artifacts degrade the quality of the images and make quantitative measurement difficult, hampering clinical diagnosis. Although Gibbs ringing is a feature of all MR images, it becomes increasingly significant for low resolution or quantitative modalities such as diffusion MRI and when it is necessary to increase the resolution of MR images to facilitate the doctors’ diagnosis. In these cases, the MR images are reconstructed on a finer grid by using zero-filling (ZF), which sets the missing high-frequency components to zero, causing severe ringing artifacts.

Moreover, in many MR imaging applications, a short scan time is required to observe dynamic processes such as the beating heart, the passage of a contrast bolus guidance of an interventional procedure, or to reduce artifacts from physiological motion [1,2,3,4]. In such applications, only a portion of the k-space is acquired, and the missing data are usually simply replaced by zeros. Despite the intensive research into acquisition and reconstruction algorithms, the ZF method is still most commonly used by clinical doctors since, although presenting truncation artifacts, it is stable and fast.

For all these reasons, a significant quantity of MR images containing truncation artifacts is archived and re-exploited for the purpose of diagnosis, treatment evaluation and disease monitoring. Therefore, the development of effective post-processing approaches to remove truncation artifacts from these acquired MR images are highly desirable. In particular, it is desirable to remove as much as possible the ringing artifacts without adding excessive blur to the images.

There is a considerable amount of literature on the post-processing of MR images, which can be summarized as follows. One approach consists in recovering the missing information by data extrapolation, see [5] and reference therein. Variational approaches have been proposed based on the Total Variation regularization [2,3,4, 6, 7], the \(L_1\) norm of the shearlet transform [8, 9] and the weighted Total Variation [10, 11]. Finally, [12] proposes a method based on space domain filters presenting a Matlab software tool which is often used in clinical practice.

In the present paper, we model the Gibbs artefact removal as a constrained variational problem balancing data discrepancy and sparsity-promoting regularization functions such as Total Variation (TV), Total Generalized Variation (TGV) and \(L_1\) norm of Wavelet transform (L1W). The data discrepancy can be represented by the absolute distance between the data and the unknown enhanced image (denoising model), or it can reproduce the truncation in the k-space as a convolution of the underlying image with a sinc-function.

Concerning the regularization functions, there are several papers in the literature about the Total Variation (TV) and second-order Total Variation (Total Generalized Variation) applied to the denoising model [4, 6]. Moreover, the Wavelet transform has proven to be effective in noise removal [13] and regularization based on wavelet transform is investigated in the compressed sensing context (see [14] and references therein).

To the best of our knowledge, in the context of Gibbs artefact removal, a thorough investigation of TV, TGV and L1W for the convolution model is not present in the literature. For this reason, we focus our analysis on the denoising and convolutive models with such regularization functions. The variational problems are solved by adapting the Alternate Directions Method of Multipliers (ADMM) to the specific objective functions.

This work aims to assess the efficacy of the proposed variational models by running an extended set of numerical experiments in which the ringing artifacts are introduced on synthetic and real data on high resolution acquired brain images.

The structure of the paper is the following: Sect. 2 discusses in detail the models and their numerical implementation; Sect. 3 presents the most significant numerical results. Finally, Sect. 4 presents some conclusions.

2 Models and methods

The Gibbs ringing artifacts arise because the Fourier series cannot represent a discontinuity within a finite number of terms. The ringing effect is especially visible for acquisitions at low resolution around sharp edges. Low pass filtering techniques reduce this artefact at the expense of introducing image blur. For this reason, edge preserving variational filtering techniques are studied. One approach [2, 3] is to compute the enhanced image \(x_e\) by solving the following denoising problem:

where \(y\in {\mathbb {R}}^n\), \(x\in {\mathbb {R}}^n \), \(n=n_xn_y\), respectively are the data and unknown images of size \(n_x \times n_y\), and \({\mathcal {R}}(x)\) represents an edge-preserving penalty function.

A sharp cut-off or truncation in the k-space is equivalent to a convolution in the spatial domain with a sinc function. In the discrete FT reconstruction of MR images, the cut-off frequency equals the frequency of the sinc function that is convolved with the image. The oscillating lobes of the sinc function result in the ringing pattern around sharp edges. Therefore, an enhanced image \(x_c\) can be obtained by the following convolutive model:

Concerning the regularization functions, we focus our analysis on models (1) and (2) with the following regularization functions:

-

Total Variation function [15], defined in discrete form as:

$$\begin{aligned} {\mathcal {R}}(x)= TV(x) \equiv \Vert \nabla x \Vert _1, \end{aligned}$$where \(\nabla \in {\mathbb {R}}^{2n \times n}\) represents the discrete gradient operator.

-

Second order Total Generalized Variation (TGV) function [16]:

$$\begin{aligned} {\mathcal {R}}(x)= TGV(x) \equiv \min \limits _{w\in {\mathbb {R}}^{2n}} \displaystyle {\alpha _0 \left\| \nabla x - w\right\| _1} + {\alpha _1 \left\| Ew\right\| _1}, \quad \alpha _0,\alpha _1\in (0,\,1) \end{aligned}$$where \(E \in {\mathbb {R}}^{4n \times 2n} \) represents the symmetrized derivative operator.

-

\(L_1\) norm of the discrete wavelet transform W [17]:

$$\begin{aligned} {\mathcal {R}}(x)=\Vert Wx \Vert _1. \end{aligned}$$

where \(W\in {\mathbb {R}}^{n \times n}\) represents an orthogonal wavelet transform.

We solve the optimization problems (1) and (2) by the ADMM method by reformulating them as separable convex optimization problems with two blocks. Firstly, for the reader’s convenience, let us recall the classical two-blocks ADMM method for the solution of the following problem

where \(A_i\in {\mathbb {R}}^{p\times n_i}\) (\(i=1,2\)) are given linear operators, \(b\in {\mathbb {R}}^p\) is a given vector and \(\Omega _i\subset {\mathbb {R}}^{n_1}\) (\(i=1,2\)) are closed convex sets. The functions \(f_i:{\mathbb {R}}^{n_i}\rightarrow {\mathbb {R}}\) (\(i=1,2\)) are convex functions on \(\Omega _i\), respectively. The augmented Lagrangian function of problem (3) has the form

where \(\mu \in {\mathbb {R}}^p\) is a vector of Lagrange multipliers and \(\rho >0\) is a penalty parameter. Given a chosen initial point \((x^{(0)},z^{(0)},\mu ^{(0)})\in {\mathbb {R}}^{n_1+n_2+p}\), the ADMM method consists of the iteration

As far as TV and L1W regularization is concerned, we can reformulate problems (1) and (2) as follows

where H is the identity matrix for model (1) or it derives from the discretization of the convolution product with the sinc finction for model (2), and \(L=\nabla \) or \(L=W\) for TV and L1W regularization, respectively. Clearly, problem (5) is a separable problem of the form (3) with \(f_1(x) = \frac{\lambda }{2} \Vert Hx-y\Vert ^2\), \(f_2(z)=\Vert z\Vert _1\), \(A_1=L\), \(A_2=-I\), \(b=0\), \(\Omega _1=\{x \ : \ x\ge 0 \}\); we can now apply the ADMM method to problem (5). In iterations (4), the subproblem in x is a bound constrained quadratic programming problem; we solve it by the variable projection (VP) method introduced in [18], a gradient projection method using a limited minimization rule as linesearch [19] and the Barzilai and Borwei rule [20] for steplength selection. The subproblem in z can be rewritten as

This subproblem is separable and can be solved exactly by computing the proximal operator of \(\Vert z\Vert _1\).

For the solution of problems (1) and (2) with TGV regularization, we use a two-blocks ADMM method originally proposed in [21] for the restoration of directional images under Poisson noise. To this end, we reformulate the problems as follows:

where \(z_1\in {\mathbb {R}}^n\), \(z_2\in {\mathbb {R}}^{2n}\), \(z_3\in {\mathbb {R}}^{4n}\), \(z_4\in {\mathbb {R}}^{n}\), and \(\chi _{{\mathbb {R}}_+^n}(\cdot )\) is the characteristic function of the nonnegative orthant in \({\mathbb {R}}^n\). By introducing the auxiliary variables \({\tilde{x}} = ( x, w )\) and \(z = ( z_1, z_2, z_3, z_4 )\) we can reformulate problem (7) as a problem of the form (3) where we define the two separable functions as

and the matrices \(A_1\in {\mathbb {R}}^{8n\times 3n}\) and \(A_2\in {\mathbb {R}}^{8n\times 8n}\) as

The ADMM iterations can now be applied to problem (7); we underline that both the \({\tilde{x}}\) and z subproblems can be solved exactly at a low cost and we refer the reader to [21] for a deeper discussion on the solution of the ADMM subproblems.

The ADMM method for solving two-block convex minimization problems has been studied extensively in the literature and its global convergence has been proved [22, 23] when the two subproblems are solved exactly. This is the case of our version of ADMM specialized for TGV regularization. In our implementation of ADMM for TV and L1W regularization, the x subproblem is solved to a high precision, i.e., the iterations of the inner VP method have been stopped when the relative distance between two successive values of the objective function becomes less than \(10^{-6}\). We have observed practical convergence of the corresponding ADMM scheme. However, we underline that variants of inexact ADMM have been proposed which are based on different strategies for the control of the lack of exactness [24,25,26,27].

3 Numerical results

To assess the effectiveness of the proposed models and regularization functions, we developed numerical tests using synthetic and brain images acquired by MRI scanner. The numerical results have been computed using Matlab R2021a on a Intel Core i5 processor with 2.50GHZ and Windows operating system.

In our experiments, the matrix W of L1W regularization represents an orthogonal Haar wavelet transform with four levels. As regards the implementation of the ADMM method, the initial guess is chosen as the null vector, i.e., \(x^{(0)}=0\), \(z^{(0)}=0\) and \(\mu ^{(0)}=0\). Moreover, the ADMM iterations are terminated when

or after a maximum number of 100 iterations. The VP method for the solution of the inner x subproblems, for TV and L1W regularization, is arrested when the residual norm becomes less than \(10^{-6}\) or after 1000 iterations. The values of the TGV weights are chosen as \(\alpha _0 = \beta \) and \(\alpha _1=(1-\beta )\) with \(\beta =0.5\). Indeed we found that, for smaller values of \(\beta \), TGV is less effective in removing Gibbs artifacts while, for larger values, the restored MR images tend to be too smooth. Finally, the value of the ADMM penalty parameter is set as \(\rho = 10\). Obviously, the choice of \(\rho \) affects the ADMM convergence rate but it also influences the quality of the restored images which are too smooth, for large value of \(\rho \). The choice \(\rho =10\) gives a good tradeoff between convergence rate and solution quality.

Synthetic data

Simulated \(128\times 128\) raw MRI data have been created by using the Matlab function mriphantom.m [28]. This function analytically generates raw data from k-space coordinates along a Cartesian trajectory using the continuous Shepp and Logan head phantom function. Noisy k-space data is also used by adding Gaussian white noise of level equal to 0.025.

Figure 1 shows the exact phantom image and the image obtained via inverse FT of the simulated k-data without and with added noise. In order to better visualize the ringing artifacts in the printed figures, Fig. 1 also shows the negative images whose colormap limits are set to [-0.5,0]. Since artifacts are more evident in the phantom negative images, we will always show them in the following.

To compare the models, we heuristically compute the optimal value of the regularization parameter corresponding to a particular error metric. In preliminary investigations, we considered the most commonly used error metrics [29]: Root Mean Square Error (RMSE), Improved Signal to Noise Ratio (ISNR), Structural SIMilarity index (SSIM), Feature SIMilarity index (FSIM). Repeating the same analysis for all the models and the regularization functions, we observed that the best RMSE and ISNR values are obtained for too small \(\lambda \) values, producing excessively blurred images. Conversely, both optimal SSIM and FSIM values are obtained for larger values of \(\lambda \) giving results of better visual quality; in most cases \(\lambda _\text {SSIM} < \lambda _\text {FSIM}\). As an example, we report in Fig. 2 the values of RMSE, ISNR, SSIM and FSIM computed by the TGV-sinc model for different \(\lambda \) and represent the optimal value with a red circle. We observe that RMSE, ISNR are optimal at the same \(\lambda \) while SSIM and FSIM are optimal for larger \(\lambda \) values.

In Fig. 3, we represent the images computed by the TGV-sinc model with the best regularization parameter for each metric (corresponding to the red circle in Fig. 2). It is evident that the RMSE and ISNR are not proper metrics to measure the quality of the computed images, while SSIM and FSIM give visually similar results. For this reason, in the remaining tests, we use the SSIM as metric to heuristically set the optimal value of the regularization parameter for each model and regularization functional.

For all the considered models, in Table 1, we report the value of the regularization parameters (determined as previously explained) and the corresponding RMSE, ISNR and SSIM values; we also report the number of ADMM iterations (column iter) and computation time in seconds (column time), using both noiseless and noisy data. In all cases, the best SSIM values (highlighted in bold) are reached by TGV regularization, while the worst SSIM values are always reached by L1W regularization. Moreover, L1W regularization has the highest computational cost, as reported in the last two columns of Table 1.

For the noiseless data, Figs. 4 and 5 show the reconstructions obtained by the denoise (1) and sinc (2) models, respectively. The error images are also depicted, i.e., the absolute difference between the exact phantom image and the restored one. For better visualization of the differences, the negative values of the error images are displayed with colormap limits set to [-0.1,0]. Finally, for the noisy data, Figs. 6 and 7 display the restored and error images obtained by model (1) and (2), respectively. A visual inspection of the figures shows that TGV regularization is always more effective in removing ringing artifacts than both TV and L1W regularization. Moreover, from Figs. 4-7, we observe that the TGV-sinc model can remove ringing artifacts more effectively as compared to the TGV-denoise model, even if the SSIM values reached by the TGV-denoising model are slightly higher than those of TGV-sinc model (see Table 1 where the best values are written in bold). Hence, for synthetic data, the TGV-sinc model appears the more appropriate model for the removal of ringing artifacts.

Noiseless synthetic data: reconstructed (top) and difference (bottom) images obtained by model (1)

Noiseless synthetic data: reconstructed (top) and difference (bottom) images obtained by model (2)

Noisy synthetic data: reconstructed (top) and difference (bottom) images obtained by model (1)

Noisy synthetic data: reconstructed (top) and difference (bottom) images obtained by model (2)

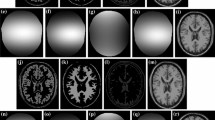

Real MRI data We consider the problem of obtaining a high-resolution MR image from a low-resolution one. This process is often required for clinical purposes, posterior analysis, or postprocessing, such as registration or segmentation. Figure 8 shows the exact high resolution image of size \(256\times 256\) and the acquired low resolution image of size \(128\times 128\).

Since the denoising model (1) is generally less effective, we focus our attention on the sinc model (2) coupled with TV, L1W and TGV regularizations. Figure 9 reports the high-resolution images obtained by ZF and the sinc model with the considered regularization functionals. The red arrows highlight ringing artifacts in the ZF image; by comparing the high-resolution images, we observe that both TV and TGV regularization effectively remove the artifacts, but, as expected, TV tends to produce small flat regions in the image. On the other hand, L1W performs worse, and some artifacts are still present in the corresponding high-resolution image. Finally, Table 2 displays the numerical results confirming the superiority of TGV regularization.

To evaluate the performance of the proposed approach in the presence of noisy data, we add Gaussian white noise of level 0.1 to the low-resolution k-space data. Figure 10 shows the obtained high resolution images. It is evident that the ZF and L1W images are degraded by noise, while TV and TGV regularization can remove noise from the high-resolution image. The numerical results are reported in Table 2; they show TGV superior accuracy, as measured by the SSIM index.

4 Conclusion

In this paper, we investigate the efficacy of the denoising and sinc convolution model in removing Gibbs artifacts using TV, TGV and L1W penalties. From observing the results, we conclude that the sinc convolution model coupled with TGV penalty is, in general, the most effective and that L1W produces the worst results in almost all cases.

Therefore, future developments will focus on the automatic setting of the TGV parameters by exploiting the Uniform Penalty principle [30, 31].

References

Piccolomini, E.L., Zama, F., Zanghirati, G., Formiconi, A.: Regularization methods in dynamic MRI. Appl. Math. Comput. 132(2–3), 325–339 (2002)

Landi, G., Piccolomini, E.L.: A total variation regularization strategy in dynamic MRI. Optim. Methods and Softw. 20(4–5), 545–558 (2005)

Landi, G., Piccolomini, E.L., Zama, F.: A total variation-based reconstruction method for dynamic MRI. Comput. Math. Methods Med. 9(1), 69–80 (2008)

Veraart, J., Fieremans, E., Jelescu, I.O., Knoll, F., Novikov, D.S.: Gibbs ringing in diffusion MRI. Magn. Reson. Med. 76(1), 301–314 (2016)

Luo, J., Wang, S., Li, W., Zhu, Y.: Removal of truncation artefacts in magnetic resonance images by recovering missing spectral data. J. Magn. Reson. 224, 82–93 (2012)

Krylov, A., Nasonov, A.: Adaptive total variation deringing method for image interpolation. In: 2008 15th IEEE International Conference on Image Processing, pp. 2608–2611 (2008). IEEE

Block, K.T., Uecker, M., Frahm, J.: Suppression of MRI truncation artifacts using total variation constrained data extrapolation. Int. J. Biomedical Imaging 2008, 184123 (2008)

Liu, R.W., Shi, L., Yu, S.C.H., Wang, D.: Hybrid regularization for compressed sensing mri: Exploiting shearlet transform and group-sparsity total variation. In: 2017 20th International Conference on Information Fusion (Fusion), pp. 1–8 (2017). https://doi.org/10.23919/ICIF.2017.8009783

Aelterman, J., Luong, H.Q., Goossens, B., Pižurica, A., Philips, W.: Augmented lagrangian based reconstruction of non-uniformly sub-nyquist sampled mri data. Signal Process. 91(12), 2731–2742 (2011)

Zhang, M., Kumar, K., Desrosiers, C.: A weighted total variation approach for the atlas-based reconstruction of brain mr data. In: 2016 IEEE International Conference on Image Processing (ICIP), pp. 4329–4333 (2016). https://doi.org/10.1109/ICIP.2016.7533177

Calatroni, L., Lanza, A., Pragliola, M., Sgallari, F.: Adaptive parameter selection for weighted-TV image reconstruction problems. J. Phys: Conf. Ser. 1476(1), 012003 (2020). https://doi.org/10.1088/1742-6596/1476/1/012003

Kellner, E., Dhital, B., Kiselev, V.G., Reisert, M.: Gibbs-ringing artifact removal based on local subvoxel-shifts. Magn. Reson. Med. 76(5), 1574–1581 (2016)

Donoho, D.L.: De-noising by soft-thresholding. IEEE Trans. Inf. Theory 41(3), 613–627 (1995)

Song, L.-X., Zhang, J.-G., Wang, Q.: MRI reconstruction based on three regularizations: total variation and two wavelets. Biomed. Signal Process. Control 30, 64–69 (2016)

Rudin, L.I., Osher, S., Fatemi, E.: Nonlinear total variation based noise removal algorithms. Physica D 60(1–4), 259–268 (1992)

Bredies, K., Kunisch, K., Pock, T.: Total generalized variation. SIAM J. Imag. Sci. 3(3), 492–526 (2010)

Mallat, S.: A Wavelet Tour of Signal Processing. Elsevier, NL (1999)

Ruggiero, V., Zanni, L.: Projection-type methods for large convex quadratic programs: theory and computational experience. J. Optim. Theory Appl. 104, 281–299 (2000)

Bertsekas, D.P.: Nonlinear Programming. Athena Scientific, U.S.A (1999)

Barzilai, J., Borwein, J.M.: Two point step size gradient methods. IMA J. Numerical Anal. 8, 141–148 (1988)

di Serafino, D., Landi, G., Viola, M.: Directional tgv-based image restoration under poisson noise. Journal of Imaging 7(6), 99 (2021)

Eckstein, J., Bertsekas, D.P.: On the Douglas-Rachford splitting method and the proximal point algorithm for maximal monotone operators. Math. Program. 55, 293–318 (1992)

Luenberger, D.G., Ye, Y.: Linear and Nonlinear Programming, 4th edn. Springer, Switzerland (2016)

Hager, W.W., Zhang, H.: Inexact alternating direction methods of multipliers for separable convex optimization. Comput. Optim. Appl. 73, 201–235 (2019)

Ng, M.K., Wang, F., Yuan, X.: Inexact alternating direction methods for image recovery. SIAM J. Sci. Comput. 33(4), 1643–1668 (2011). https://doi.org/10.1137/100807697

Chen, L., Sun, D., Toh, K.: An efficient inexact symmetric gauss-seidel based majorized admm for high-dimensional convex composite conic programming. Math. Program. 161, 237–270 (2017)

Eckstein, J., Yao, W.: Approximate admm algorithms derived from lagrangian splitting. Comput. Optim. Appl. 68, 363–405 (2017)

Ouwerkerk, R.: mriphantom. MATLAB Central File Exchange (2022)

Sara, U., Akter, M., Uddin, M.S.: Image quality assessment through FSIM, SSIM, MSE and PSNR-a comparative study. J. Computer and Commun. 7(3), 8–18 (2019)

Bortolotti, V., Brown, R., Fantazzini, P., Landi, G., Zama, F.: Uniform Penalty inversion of two-dimensional NMR relaxation data. Inverse Prob. 33(1), 015003 (2016)

Bortolotti, V., Landi, G., Zama, F.: 2DNMR data inversion using locally adapted multi-penalty regularization. Comput. Geosci. 25(3), 1215–1228 (2021)

Acknowledgements

We are profoundly grateful to Professor Ilio Galligani for his passionate teaching of numerical analysis as a means of tackling the challenges of real problems. We honour him for having enthusiastically initiated us into the study of numerical problems in medical imaging.

Funding

Open access funding provided by Alma Mater Studiorum - Università di Bologna within the CRUI-CARE Agreement.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors have no conflicts of interest to declare that are relevant to the content of this article.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Landi, G., Zama, F. A variational approach to Gibbs artifacts removal in MRI. Ann Univ Ferrara 68, 465–481 (2022). https://doi.org/10.1007/s11565-022-00431-8

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11565-022-00431-8