Abstract

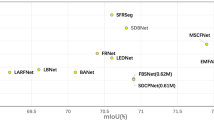

Unstructured road scenes have fuzzy, complex boundaries and are difficult to segment. To address this, this paper takes BiSeNet as the baseline model and proposes a real-time segmentation model based on partial convolution. First, FasterNet, which is built on partial convolution, is adopted as the backbone network and improved with operators that achieve higher floating-point operations per second (FLOPS), which increases inference speed. Second, the model structure is optimized: the inefficient spatial path is removed and shallow features of the context path take over its role, which reduces model complexity. Third, a Residual Atrous Spatial Pyramid Pooling Module is proposed to replace the single context embedding module of the original model, which extracts multi-scale context information more effectively and improves segmentation accuracy. Fourth, the feature fusion module is upgraded to the proposed Dual Attention Features Fusion Module, which helps the model understand image context through cross-level feature fusion. The proposed model reaches an inference speed of 78.81 f/s, which meets the real-time requirements of unstructured road scene segmentation. In terms of accuracy, it achieves a Mean Intersection over Union of 72.63% and a Macro F1 of 83.20%, showing significant advantages over other advanced real-time segmentation models. The model therefore meets the accuracy and speed requirements of segmentation in complex and variable unstructured road scenes and provides a reference for the development of autonomous driving technology in such scenes. Code is available at https://github.com/BaiChunhui2001/Real-time-segmentation.
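The two accuracy metrics quoted above can be illustrated with a minimal sketch. It assumes the usual definitions, in which Mean Intersection over Union and Macro F1 are unweighted averages of per-class scores derived from a pixel-level confusion matrix. The function names and the 3-class `toy_conf` matrix are hypothetical and chosen only for illustration; the paper's actual evaluation code and class handling (for example, ignored labels) are not described in the abstract.

```python
def per_class_counts(conf):
    """Return (tp, fp, fn) for each class of a square confusion matrix.

    conf[t][p] is the number of pixels whose true class is t and whose
    predicted class is p (assumed layout for this sketch).
    """
    n = len(conf)
    counts = []
    for c in range(n):
        tp = conf[c][c]
        fn = sum(conf[c]) - tp                       # true class c, predicted otherwise
        fp = sum(conf[r][c] for r in range(n)) - tp  # predicted c, true class otherwise
        counts.append((tp, fp, fn))
    return counts


def mean_iou(conf):
    """Unweighted mean of per-class IoU = tp / (tp + fp + fn)."""
    scores = []
    for tp, fp, fn in per_class_counts(conf):
        denom = tp + fp + fn
        if denom > 0:  # skip classes absent from both labels and predictions
            scores.append(tp / denom)
    return sum(scores) / len(scores)


def macro_f1(conf):
    """Unweighted mean of per-class F1 = 2*tp / (2*tp + fp + fn)."""
    scores = []
    for tp, fp, fn in per_class_counts(conf):
        denom = 2 * tp + fp + fn
        if denom > 0:
            scores.append(2 * tp / denom)
    return sum(scores) / len(scores)


# Hypothetical 3-class confusion matrix (rows: true class, columns: predicted class).
toy_conf = [
    [50, 5, 5],
    [10, 30, 0],
    [0, 5, 45],
]

if __name__ == "__main__":
    print(f"mIoU:     {mean_iou(toy_conf):.4f}")
    print(f"Macro F1: {macro_f1(toy_conf):.4f}")
```

For any class, F1 is at least as large as IoU, which is consistent with the reported Macro F1 being higher than the reported Mean Intersection over Union.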



Acknowledgements

This study was supported by the Yunnan Provincial Science and Technology Major Project (No. 202202AE090008), titled “Application and Demonstration of Digital Rural Governance Based on Big Data and Artificial Intelligence”.

Author information

Authors and Affiliations

Contributions

Chunhui Bai wrote the main manuscript; Chunhui Bai and Lilian Zhang prepared the visualizations; Lutao Gao performed the data curation; Linnan Yang revised the manuscript; Lin Peng and Peishan Li performed the formal analysis. All authors reviewed the manuscript.

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.


About this article

Cite this article

Bai, C., Zhang, L., Gao, L. et al. Real-time segmentation algorithm of unstructured road scenes based on improved BiSeNet. J Real-Time Image Proc 21, 91 (2024). https://doi.org/10.1007/s11554-024-01472-2


DOI: https://doi.org/10.1007/s11554-024-01472-2