Abstract

Purpose

Specialized robotic and surgical tools are increasing the complexity of operating rooms (ORs), requiring elaborate preparation, especially when techniques or devices are used for the first time. Spatial planning can improve efficiency and identify procedural obstacles ahead of time, but real ORs are rarely available for optimizing space utilization. Methods for creating reconstructions of physical setups, i.e., digital twins, are therefore needed to enable immersive spatial planning of such complex environments in virtual reality.

Methods

We present a neural rendering-based method to create immersive digital twins of complex medical environments and devices from casual video capture, enabling spatial planning of surgical scenarios. To evaluate our approach, we recreate two operating rooms and ten objects through neural reconstruction, then conduct a user study with 21 graduate students carrying out planning tasks in the resulting virtual environments. We analyze task load, presence, and perceived utility, as well as exploration and interaction behavior, compared with low-visual-complexity versions of the same environments.

Results

Results show significantly increased perceived utility and presence in the neural reconstruction-based environments, combined with higher perceived workload and exploratory behavior. There was no significant difference in interactivity.

Conclusion

We explore the feasibility of using modern reconstruction techniques to create digital twins of complex medical environments and objects. Without requiring expert knowledge or specialized hardware, users can create, explore, and interact with objects in virtual environments. The results indicate benefits such as high perceived utility while the approach remains technically approachable, suggesting promise for spatial planning and beyond.


Introduction

The increasing number of machines, robots, and tools in the modern operating room (OR), driven by technological advancement and specialization, poses a challenge for space management and complexity reduction [1]. Planning the required tools and their positioning in advance is essential for novel procedures, as it streamlines processes and improves patient safety, operating efficiency, and communication [2]. This becomes even more difficult for first-time, specialized, rare, or unique procedures, for which prior knowledge is limited and there is little room for trial and error [3]. Fast and simple decision-making is therefore crucial, as needs can change rapidly and unpredictably [4].

However, the current situation is problematic, as planning the space requirements and layout of the needed tools and machinery can be prohibitively difficult and costly. The targeted spaces are often unavailable due to the high demand for and cost of ORs, and because planners or the machinery might still be remote at the time of planning [5]. Most planning happens in two dimensions on computer screens, and approaches using low-fidelity or artist-created 3D or virtual reality (VR) visualizations are only just becoming available. Hence, methods are needed that enable virtual spatial planning and exploration in a realistic scenario with the relevant objects. Such VR environments can work in remote setups and do not require access to the facilities. These methods would help anticipate and address potential needs and problems in advance, streamlining implementation. Reducing the need for layout revisions and equipment repositioning can in turn help reduce procedure times, lowering costs and the chance of complications.

Current software solutions for this situation, while helpful, have significant limitations. They are usually costly and labor-intensive [3], making them hard to adapt or extend with new needs or machines, especially for novices. Creating digital assets of rooms and tools is also expensive and complex. LiDAR scanning and photogrammetry are precise but require expertise and post-processing [6]. SLAM-like methods are fast and straightforward to use but produce noisy, low-quality output [7]. Neural radiance fields (NeRFs) can generate realistic images, but their spatial reconstruction performance can be inadequate, they are poorly compatible with existing rendering pipelines, and they have high computational demands [8]. In addition, the suitability of state-of-the-art model output as VR environments is not yet well understood.

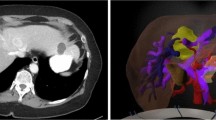

Our approach uses neural surface reconstruction of casual video captures to create digital twins of complex medical environments, such as ORs, and objects, as displayed in Fig. 1. We export these reconstructions as textured polygon meshes compatible with existing rendering pipelines and various downstream applications. Our process is mostly automated, minimizing the need for specialized 3D capture hardware and post-processing. To display these digital twins, we devised a VR environment accessible through standalone headsets. This environment allows users to explore as well as interact with and manipulate objects, helping them plan upcoming procedures. To evaluate our approach, we ran a user study in which participants adjusted the layout of virtual ORs using two different reconstruction styles: neural reconstruction and whiteboxed reconstruction. We collected both subjective and objective data, revealing significantly increased perceived utility and presence in the neural reconstruction-based environments, combined with higher perceived workload and exploratory behavior.

Our focus in this work is to evaluate whether neural reconstruction techniques would be useful, and potentially even beneficial, in a practical scenario in which they might realistically be used. We evaluate this using a mostly automatic way of creating the visualizations, with clinical planning as an example scenario. The key contributions of our work include:

1. A framework for creating digital twins of complex medical environments and objects using neural reconstruction of casual video capture.

2. An evaluation of a state-of-the-art neural reconstruction technique for creating accurate digital twins of OR settings.

3. Preliminary insights into the cognitive impact of operating room planning within neural reconstructions compared to whiteboxed environments in VR, focusing on aspects such as workload, sense of presence, and perceived utility, through a user study with non-expert participants.

4. An analysis of objective factors, including user exploration and interaction patterns within these virtual environments.

The remainder of this work is organized as follows: The next section reviews the most important related work. Our reconstruction approach, rooms, and objects are explained in the Methods section. The Evaluation section describes the application, tasks, measures, study population, and procedure. Subjective and objective outcomes are presented with detailed statistics in the Results section. The Discussion section interprets the main findings and addresses limitations, followed by a conclusion.

Related work

Spatial planning within ORs has become increasingly complex with the influx of devices and tools. Current practice predominantly relies on graph-theoretic approaches to facilities layout planning [9]. However, these methods are reaching their limits in flexibility and precision and struggle to adapt to the dynamics of modern OR environments, even though design quality is a key determinant of outcomes [10]. In parallel, VR is increasingly adopted for spatial planning, and initial findings suggest that high fidelity in VR environments can enhance spatial understanding and decision-making [11].

To overcome the challenges of spatial representation and the shortcomings of traditional modeling methods, there is a growing trend toward incorporating various data acquisition techniques. Integrating point cloud data with CAD models, for example, streamlines the modeling process while maintaining accuracy and increasing efficiency [12]. Recently, NeRFs have gained traction as a way to render arbitrary views of a reconstructed scene from monocular RGB images [8], and ongoing research into improving their accuracy and speed [13] is making practical applications increasingly feasible. Relying on NeRFs directly as an environment representation is nevertheless challenging: they have demanding runtime compute requirements and do not explicitly define surfaces, which are important for collision handling and thus for interaction. Further, the scene geometry is usually modeled by a density network. This makes it difficult to export a well-defined 3D surface for use in other rendering applications, because the density threshold is scene-specific and does not always yield the desired results. Neural surface reconstruction methods, which combine neural rendering with explicit surface reconstruction, can provide high-fidelity, detailed 3D models because they optimize a signed distance field (SDF) [14] instead of a density approximator, so the surface is directly given by the zero level set of the SDF. These methods can be adapted to offer a robust and portable solution for reconstructing complex environments for digital twin-based scenario modeling and planning, providing a practical way to visualize and navigate ORs.
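The difference between density thresholding and an SDF zero level set can be illustrated with a toy example. The sketch below is not part of the described pipeline: it assumes an analytic sphere SDF and a hypothetical logistic density derived from it, marches a single ray, and reports where each field crosses a chosen level. The SDF surface is fixed at its zero crossing, whereas the surface extracted from the density moves with the chosen threshold.

```python
import math


def sphere_sdf(p, radius=1.0):
    """Signed distance to a sphere at the origin: negative inside, zero on the surface."""
    return math.sqrt(sum(c * c for c in p)) - radius


def toy_density(p, radius=1.0, sharpness=10.0):
    """Hypothetical NeRF-like density in [0, 1] that rises smoothly across the same sphere."""
    return 1.0 / (1.0 + math.exp(sharpness * sphere_sdf(p, radius)))


def first_crossing(field, level, origin, direction, t_max=10.0, steps=1000, iters=50):
    """Return the first ray parameter t at which field(origin + t * direction) crosses `level`.

    Uniform marching brackets the crossing, then bisection refines it.
    Returns None if no crossing is found within t_max.
    """
    def f(t):
        p = tuple(o + t * d for o, d in zip(origin, direction))
        return field(p) - level

    prev_t, prev_v = 0.0, f(0.0)
    for i in range(1, steps + 1):
        t = t_max * i / steps
        v = f(t)
        if (prev_v > 0) != (v > 0):  # sign change brackets the crossing
            lo, hi = prev_t, t
            for _ in range(iters):
                mid = 0.5 * (lo + hi)
                if (f(lo) > 0) != (f(mid) > 0):
                    hi = mid
                else:
                    lo = mid
            return 0.5 * (lo + hi)
        prev_t, prev_v = t, v
    return None


origin, direction = (0.0, 0.0, -3.0), (0.0, 0.0, 1.0)
t_sdf = first_crossing(sphere_sdf, 0.0, origin, direction)  # ~2.0, i.e. z = -1 on the sphere
for threshold in (0.1, 0.5, 0.9):
    t_density = first_crossing(toy_density, threshold, origin, direction)
    print(f"density threshold {threshold}: surface at t = {t_density:.3f} (SDF: {t_sdf:.3f})")
```

In this toy setting, thresholds of 0.1 and 0.9 shift the density-based surface by roughly 0.22 units in either direction around the SDF surface at t = 2, which is the scene-specific ambiguity that SDF-based methods avoid.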

Methods

Digital twin creation

We created virtual analogs of two operating rooms and ten objects using a custom implementation of Neuralangelo [14]. We used the suggested parameters, except that we reduced the hash map size to \(2^{21}\) for objects. Our creation pipeline was as follows: record a video of the target object or room with a smartphone, prepare the data and train the network, extract the mesh, cut off excess geometry, decimate the mesh, and create a mapped texture. Training took around 40–50 h on an NVIDIA Quadro RTX 6000 (see “Appendix C”). Further acceleration of the reconstruction process by factors of \(>50\) is possible [13]. Data were collected with off-the-shelf hardware (a recent-model iPhone). Apart from cutting off superfluous parts to ensure stable performance and quality, the meshes were not beautified. The outcomes represent the state of the art in realistic neural reconstruction; examples of the expected visual quality can be found in [14]. While our method excels at reconstructing shapes and fine details from limited source material, reflective and uniformly colored surfaces can be challenging. The capabilities of the rendering hardware further limit the achievable quality. This procedure was chosen to require no expensive hardware and little expert time or knowledge, and the few remaining manual steps could possibly be automated.
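The data preparation step is not specified further in the text. Neural surface reconstruction pipelines commonly extract frames from the video and estimate camera poses with structure-from-motion on a subset of them, so temporal subsampling is a typical first step. The sketch below assumes uniform subsampling to a hypothetical target frame count; the function name and the 30 fps and 300-frame figures are illustrative, not values from this study.

```python
def select_frame_indices(total_frames, target):
    """Pick `target` evenly spaced frame indices from a video, keeping the first and last frame."""
    if total_frames <= 0 or target <= 0:
        return []
    if target >= total_frames:
        return list(range(total_frames))
    if target == 1:
        return [0]
    # Integer arithmetic keeps the spacing exact and the indices strictly increasing.
    return [i * (total_frames - 1) // (target - 1) for i in range(target)]


# Illustrative only: a 3 min object video recorded at an assumed 30 fps.
total = 3 * 60 * 30
indices = select_frame_indices(total, 300)
print(len(indices), indices[:3], indices[-1])
```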

Rooms

We reconstructed two environments: a mock OR mainly used for experiments on novel robotic systems, and a real OR in daily use in a hospital. The rooms were reconstructed from around 20–25 min of wide-angle video each, depending on the complexity and details. To optimize the visuals for VR hardware, we split the meshes into two parts of around 400k faces, each with a 4k texture. This improved the visual quality and reduced the creation time and hardware requirements. Impressions from the reconstructions of the real and mock OR are shown in Figs. 1 and 2a, respectively.
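The text does not describe how the room meshes were divided into two parts. One simple possibility, sketched below under that assumption, is to sort faces by their centroid along the axis of largest extent and cut the sorted list in half, which yields two parts of equal face count (about 400k each for a room mesh of roughly 800k faces). The function name is hypothetical; a real pipeline would additionally re-index the vertices of each part and bake a separate texture per part.

```python
def split_mesh_in_two(vertices, faces):
    """Split triangle faces into two equally sized parts along the longest centroid axis.

    vertices: list of (x, y, z) tuples; faces: list of (i, j, k) vertex-index triples.
    Returns (axis, first_faces, second_faces); both parts still index the shared vertex list.
    """
    if not faces:
        return 0, [], []
    centroids = [
        tuple(sum(vertices[v][axis] for v in face) / 3.0 for axis in range(3))
        for face in faces
    ]
    extents = [
        max(c[axis] for c in centroids) - min(c[axis] for c in centroids)
        for axis in range(3)
    ]
    axis = extents.index(max(extents))
    order = sorted(range(len(faces)), key=lambda f: centroids[f][axis])
    half = len(faces) // 2
    return axis, [faces[f] for f in order[:half]], [faces[f] for f in order[half:]]


# Four small triangles laid out along the x axis.
verts, tris = [], []
for x in (0.0, 10.0, 20.0, 30.0):
    base = len(verts)
    verts += [(x, 0.0, 0.0), (x + 1.0, 0.0, 0.0), (x, 1.0, 0.0)]
    tris.append((base, base + 1, base + 2))
axis, part_a, part_b = split_mesh_in_two(verts, tris)
print(axis, part_a, part_b)
```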

Objects

We further created ten reconstructions of objects found in or around the rooms used in the experiment: a hospital bed, rolling chair, surgical robot, rollable table with equipment, surgeon lookalike, trolley with medical material, rollable tracking system, tracking system on a tripod, workshop trolley, and a rollable digital monitor. The objects were reconstructed from around 2–4 min of video each. Mesh decimation depended on object complexity; the resulting objects had 20k to 60k faces and a 2k texture each. Samples of input images and resulting models can be seen in Fig. 3.
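To put these asset sizes in perspective, the arithmetic below estimates face counts and uncompressed texture memory for one room and one instance of each object. It assumes 8-bit RGBA textures without mipmaps; in practice, GPU texture compression formats used on standalone headsets (for example ASTC) reduce the memory footprint substantially, while a full mipmap chain adds about one third.

```python
MIB = 1024 * 1024


def texture_mib(size_px, bytes_per_texel=4):
    """Uncompressed size of one square texture in MiB (base level only, no mipmaps)."""
    return size_px * size_px * bytes_per_texel / MIB


room_faces = 2 * 400_000                  # two room parts of about 400k faces each
room_tex_mib = 2 * texture_mib(4096)      # one 4k texture per room part
object_faces_max = 10 * 60_000            # upper end of the 20k-60k range for ten objects
object_tex_mib = 10 * texture_mib(2048)   # one 2k texture per object

print(f"room: {room_faces:,} faces, {room_tex_mib:.0f} MiB of textures")
print(f"objects: up to {object_faces_max:,} faces, {object_tex_mib:.0f} MiB of textures")
```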

Evaluation

We performed a within-subject repeated-measures experiment with reconstruction type (neural reconstruction vs. whiteboxed reconstruction) and environment type (real vs. mock OR) as independent factors. Thus, each participant evaluated both reconstruction types in both environments.

Virtual reality application

The VR application was developed with Unity 2022.3.7 because of its ease of development and deployment to VR headsets. We created whiteboxed versions of the environments and objects as a second reconstruction level for the experimental application, see Fig. 2. The whiteboxed versions were based on the neural reconstructions and represent the quality level one could realistically achieve using CAD modeling software in reasonable time, without resorting to specialized hardware or relying on experts to model the environment faithfully. In addition to the 2 rooms and 10 objects, the application contains a training scene, shown before the actual experiment starts. Users can look around and move freely in the virtual environment, physically within an area of around \(1~\hbox {m}^{2}\) and virtually via teleportation. They can create, move (near and far interaction, one- and two-handed), and delete objects. The VR application mirrors the positions of the user and the objects onto the supervisor’s laptop. The system was developed to run locally on a Meta Quest 2 head-mounted display (HMD), with its controllers used for input. In the experimental setup, we use a secondary laptop to let the study supervisor issue commands and load environments remotely on the headset. Communication between the laptop and the HMD was wireless via Wi-Fi.
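The paper states that user and object positions are mirrored to the supervisor's laptop over Wi-Fi but does not describe the message format. As an assumption for illustration, the sketch below encodes one snapshot of the head pose and all object poses as JSON; the schema, class names, and quaternion component order are hypothetical, and the transport layer is omitted. The actual application was built in Unity, so a production version would be written in C#; Python is used here only to show the structure.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class Pose:
    position: tuple  # metres, (x, y, z)
    rotation: tuple  # unit quaternion, (x, y, z, w) by assumption


@dataclass
class SceneSnapshot:
    timestamp: float                              # seconds since session start
    head: Pose                                    # HMD pose of the participant
    objects: dict = field(default_factory=dict)   # object id -> Pose


def encode(snapshot):
    """Serialize a snapshot to UTF-8 JSON bytes for sending to the supervisor laptop."""
    return json.dumps(asdict(snapshot)).encode("utf-8")


def decode(payload):
    """Rebuild a SceneSnapshot from bytes produced by encode()."""
    raw = json.loads(payload.decode("utf-8"))

    def pose(d):
        return Pose(tuple(d["position"]), tuple(d["rotation"]))

    return SceneSnapshot(
        raw["timestamp"],
        pose(raw["head"]),
        {obj_id: pose(p) for obj_id, p in raw["objects"].items()},
    )


snap = SceneSnapshot(
    12.5,
    Pose((0.0, 1.6, 0.0), (0.0, 0.0, 0.0, 1.0)),
    {"robot-1": Pose((1.2, 0.0, 2.0), (0.0, 0.7071, 0.0, 0.7071))},
)
assert decode(encode(snap)) == snap  # lossless round trip
```

JSON keeps the sketch readable; a compact binary encoding would reduce bandwidth if poses were streamed at headset frame rate.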

Tasks

To engage participants in the virtual environment, we gave them a simple OR planning task. They had to place, move, and remove objects while considering the objective at hand. The instructions were displayed next to the application menu and included information about the target objects, the constraints to apply, and the objectives. While the tasks were simple enough to be approachable within the VR exposure time, they were inspired by realistic planning scenarios. Additionally, some objects were already placed in the scene to represent an existing setup; these could be moved or removed. We designed the tasks to encourage participants to experience the room and objects and to stay engaged during the VR trials, ensuring comparable exposure. We therefore deliberately left the tasks loosely specified to allow exploration. Actual planning outcomes were not quantified. See “Appendix A” for examples.

Measures

Perceived utility

A custom scale was used to assess the perceived utility of the application. It consisted of eight questions on three subscales: confidence, memorability, and utility. All questions were answered on a 7-point Likert scale with endpoints fully disagree (1) and fully agree (7). The questions can be found in “Appendix B.”

Presence

We used the Igroup Presence Questionnaire (IPQ) by Schubert [15] to measure presence in the virtual environment. It consists of 14 items on four subscales (general presence, spatial presence, involvement, and perceived realism), rated on 7-point Likert scales with varying endpoints.
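Both the custom utility scale and the IPQ are scored per subscale. A common approach, sketched below, averages the item responses of each subscale after reverse-coding negatively worded items. The item identifiers, subscale mapping, and reversed items in the example are hypothetical placeholders; the actual assignments are given by the IPQ documentation and, for the utility scale, by Table 3 in Appendix B.

```python
from statistics import mean


def score_subscales(responses, subscales, reversed_items=frozenset(), scale_min=1, scale_max=7):
    """Average Likert responses per subscale.

    responses: item id -> rating in [scale_min, scale_max]
    subscales: subscale name -> list of item ids
    reversed_items: item ids whose scale direction is flipped before averaging
    """
    def value(item):
        rating = responses[item]
        if not scale_min <= rating <= scale_max:
            raise ValueError(f"{item}: rating {rating} outside [{scale_min}, {scale_max}]")
        return scale_min + scale_max - rating if item in reversed_items else rating

    return {name: mean(value(i) for i in items) for name, items in subscales.items()}


# Hypothetical three-item example on a 1-7 scale.
answers = {"q1": 7, "q2": 1, "q3": 4}
print(score_subscales(answers, {"a": ["q1", "q2"], "b": ["q3"]}, reversed_items={"q2"}))
```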

Perceived task load

To assess workload and performance, the NASA Task Load Index (NASA-TLX) [16] was used with all six subscales (mental demand, physical demand, temporal demand, performance, effort, and frustration) and without pairwise comparisons (raw TLX).
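Without pairwise comparisons, an overall raw TLX score is simply the unweighted mean of the six subscale ratings on the 0 to 100 scale, as sketched below; the study reports the subscales individually, and the ratings in the example are made up.

```python
from statistics import mean

TLX_SUBSCALES = ("mental", "physical", "temporal", "performance", "effort", "frustration")


def raw_tlx(ratings):
    """Raw TLX: unweighted mean of the six subscale ratings (0-100), no pairwise weighting."""
    missing = [s for s in TLX_SUBSCALES if s not in ratings]
    if missing:
        raise KeyError(f"missing subscales: {missing}")
    if any(not 0 <= ratings[s] <= 100 for s in TLX_SUBSCALES):
        raise ValueError("ratings must lie between 0 and 100")
    return mean(ratings[s] for s in TLX_SUBSCALES)


example = {"mental": 60, "physical": 30, "temporal": 40,
           "performance": 70, "effort": 50, "frustration": 20}
print(raw_tlx(example))
```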

Motion sickness

The Fast Motion Sickness Scale (FMSS) by Keshavarz and Hecht [17], a single-question measure, was used to check whether participants were negatively affected by the experience. The scale ranges from 0 (no sickness) to 100 (very high sickness).

Lastly, we asked participants to rate their familiarity with the presented virtual environment on a 7-point Likert scale. The endpoints of the scale were completely unknown (1) and very familiar (7).

We further evaluated participants’ behavior inside the virtual environment as objective measures. Regarding locomotion, we logged the summed head rotation in degrees, the summed head movement in meters, and the total number of teleportations. We further logged participants’ interactions: objects created, objects deleted, and objects moved, plus, every 4 s, whether the participant was moving an object during that time span. As with the questionnaires, these data were recorded for each VR exposure.
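The paper lists the logged quantities but not how they were computed. The sketch below shows one plausible way, assuming head positions (in meters) and orientation quaternions sampled at a fixed rate, rotation measured as the angle between consecutive orientations, and manipulation recorded as timestamps at which an object was being moved; all function names are hypothetical.

```python
import math


def path_length(positions):
    """Summed head movement in meters over consecutive (x, y, z) samples."""
    return sum(math.dist(a, b) for a, b in zip(positions, positions[1:]))


def summed_rotation_deg(quaternions):
    """Summed head rotation in degrees between consecutive unit quaternions.

    The angle between orientations q1 and q2 is 2 * acos(|q1 . q2|); the absolute
    value accounts for q and -q describing the same rotation.
    """
    total = 0.0
    for q1, q2 in zip(quaternions, quaternions[1:]):
        dot = abs(sum(a * b for a, b in zip(q1, q2)))
        total += math.degrees(2.0 * math.acos(min(1.0, dot)))  # clamp rounding noise
    return total


def active_slots(manipulation_times, slot_s=4.0):
    """Number of distinct slot_s-second slots in which something was being moved."""
    return len({int(t // slot_s) for t in manipulation_times})


identity = (1.0, 0.0, 0.0, 0.0)  # (w, x, y, z)
yaw_90 = (math.cos(math.pi / 4), 0.0, math.sin(math.pi / 4), 0.0)  # 90 degrees about the y axis
print(path_length([(0, 0, 0), (3, 4, 0), (3, 4, 2)]))
print(summed_rotation_deg([identity, yaw_90, identity]))
print(active_slots([0.5, 1.0, 5.0, 13.9]))
```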

Testing procedure

The experiment took approximately 1 h to complete. First, the study information was read to the participants, who then gave verbal consent. Participants provided demographic background (age, gender, current occupation, VR experience, and medical knowledge) and a baseline FMSS rating. Next, they completed a short VR training session of around 5 min to familiarize themselves with the VR application. Afterward, they started with one of the four conditions in counterbalanced order and worked on a task in the virtual environment for 5 min. The instructions were given within the environment using text displays next to the menu. After the 5 min were over, participants took off the HMD and answered the post-condition questionnaires on a computer. This procedure was carried out for all four conditions, so that participants experienced both reconstruction types in both rooms. After completing the last condition, they had the option to leave comments on their experience.
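The paper reports a counterbalanced order without naming the scheme. A standard option for four conditions is a balanced Latin square (Williams design), in which every condition appears once in each position and immediately precedes every other condition exactly once. The sketch below assumes that scheme; the condition labels follow the 2 x 2 design, while the function names are hypothetical.

```python
from itertools import product

CONDITIONS = [f"{recon} / {room}"
              for recon, room in product(("neural", "whiteboxed"), ("real OR", "mock OR"))]


def balanced_latin_square(n):
    """Williams design for an even number of conditions: rows are presentation orders."""
    if n % 2:
        raise ValueError("this simple construction requires an even number of conditions")
    first, lo, hi = [0], 1, n - 1
    while len(first) < n:  # first row: 0, 1, n-1, 2, n-2, ...
        first.append(lo)
        lo += 1
        if len(first) < n:
            first.append(hi)
            hi -= 1
    return [[(c + r) % n for c in first] for r in range(n)]


def order_for_participant(p, conditions=CONDITIONS):
    """Condition order for participant index p, cycling through the rows of the square."""
    square = balanced_latin_square(len(conditions))
    return [conditions[i] for i in square[p % len(conditions)]]


for p in range(4):
    print(p, order_for_participant(p))
```

If such a square were used with 21 participants, the four orders could not occur equally often; cyclic assignment gives one order six times and the other three five times each.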

Participants

We recruited 21 participants via mailing lists and word of mouth to achieve reasonable statistical power. Of these participants, nine identified as female and twelve as male. The mean age was \(M = 24.57\) (\(\hbox {SD} = 1.66\)) years, and all participants were graduate students. Nine participants had little VR experience (fewer than five uses overall), nine had some VR experience with occasional use, and three reported high experience with regular use. Prior medical knowledge, rated on a 7-point Likert scale from no knowledge (1) to detailed knowledge (7), had a mean of \(M = 2.90\) (\(\hbox {SD} = 1.58\)). One participant had to be excluded from the analysis of the objective measures due to technical issues. Participants received no compensation. Exclusion criteria were being underage and severe uncorrected visual impairment.

Results

We used SPSS version 29 for the statistical evaluation, calculating two-way repeated-measures tests with a significance level of 0.05. While we are mainly interested in the effects of the reconstruction type, we included the room as a factor to be able to evaluate its effects. We did not test for sphericity, as both factors have only two levels, for which the assumption holds trivially. We tested all data for normality using the Shapiro–Wilk test; the data were either normally distributed or showed only minor violations on some scales, which are unlikely to affect outcomes at our sample size.
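Why sphericity need not be tested here can be made concrete: for a within-subject factor with two levels, the effect has a single numerator degree of freedom, and the repeated-measures F statistic for its main effect equals the squared paired t statistic computed on each participant's contrast between the two levels (averaged over the other factor). The sketch below, with hypothetical function names and toy data, computes this F together with partial eta squared, \(\eta_p^2 = F\,df_1/(F\,df_1 + df_2)\). Applied to the reported room effect on TLX performance, \(F(1, 20)=11.310\) gives \(\eta_p^2 \approx .361\).

```python
import math
from statistics import mean, stdev


def partial_eta_squared(F, df1, df2):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return F * df1 / (F * df1 + df2)


def main_effect_2x2(scores):
    """Main effect of factor A in a 2 x 2 within-subject design.

    scores[s][a][b] is the score of subject s at level a of factor A and level b of factor B.
    Returns (F, df1, df2, partial eta squared) with df1 = 1 and df2 = n - 1.
    """
    contrasts = [(s[0][0] + s[0][1]) / 2 - (s[1][0] + s[1][1]) / 2 for s in scores]
    n = len(contrasts)
    t = mean(contrasts) / (stdev(contrasts) / math.sqrt(n))  # paired t on the contrasts
    F = t * t
    return F, 1, n - 1, partial_eta_squared(F, 1, n - 1)


toy = [[[c, c], [0.0, 0.0]] for c in (1.0, 2.0, 3.0)]  # per-subject contrasts 1, 2, 3
print(main_effect_2x2(toy))
print(round(partial_eta_squared(11.310, 1, 20), 3))
```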

Subjective measures

Table 1 lists all main effects of reconstruction type on perceived utility, presence, and workload.

Perceived utility

We find a significant difference in perceived utility (see “Appendix B”) between the reconstruction types, \(F(3,18)=22.661\), \(p<.001\), Wilks’ \(\Lambda =.209\), \(\eta _{p}^2=.791\). Neither the effect of the room (\(F(3,18)=2.644\), \(p=.080\), Wilks’ \(\Lambda =.694\), \(\eta _{p}^2=.306\)) nor the interaction (\(F(3,18)=1.062\), \(p=.390\), Wilks’ \(\Lambda =.850\), \(\eta _{p}^2=.150\)) reached significance. All three subscales were rated significantly higher in the neural reconstruction-based environment. To assess the reliability and internal consistency of our questionnaire, we calculated Cronbach’s alpha; the scale was highly reliable [18], with \(\alpha =.929\).
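Cronbach's alpha compares the sum of the item variances with the variance of the total score, \(\alpha = \frac{k}{k-1}\left(1 - \frac{\sum_i \sigma_i^2}{\sigma_X^2}\right)\) for k items. A minimal sketch with a hypothetical function name follows; the toy data are not from the study.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha; items[i][r] is the response of respondent r to item i."""
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    totals = [sum(responses) for responses in zip(*items)]  # total score per respondent
    item_variance = sum(variance(item) for item in items)
    return k / (k - 1) * (1 - item_variance / variance(totals))


print(cronbach_alpha([[2, 3, 3, 4], [3, 3, 4, 5]]))  # two items, four respondents
```

Using sample variances throughout is harmless here, because the n - 1 denominators cancel in the ratio.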

Presence

As for perceived utility, we calculated the effects on presence with the four IPQ subscales as input to a MANOVA. There was a significant difference in overall presence between the two visualization types, \(F(4, 17)=68.304\), \(p<.001\), Wilks’ \(\Lambda =.059\), \(\eta _{p}^2=.941\). There was no significant effect of the room (\(F(4,17)=1.059\), \(p=.407\), Wilks’ \(\Lambda =.801\), \(\eta _{p}^2=.199\)) and no significant interaction effect (\(F(4,17)=1.370\), \(p=.286\), Wilks’ \(\Lambda =.756\), \(\eta _{p}^2=.244\)). Exploring the four subscales, we find all of them significantly higher in the neural reconstruction-based visualization.
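Because each tested effect has a single hypothesis degree of freedom (two levels per factor), Wilks' \(\Lambda\) converts exactly to F via \(F = \frac{1-\Lambda}{\Lambda}\cdot\frac{df_2}{df_1}\), and the multivariate partial eta squared equals \(1-\Lambda\). The small check below, with hypothetical function names, recomputes \(\Lambda\) and \(\eta_p^2\) from the reported F values for perceived utility and presence; all of them agree with the reported values to three decimals.

```python
def wilks_to_f(wilks_lambda, df1, df2):
    """Exact F for a multivariate effect with one hypothesis degree of freedom (s = 1)."""
    return (1 - wilks_lambda) / wilks_lambda * df2 / df1


def f_to_wilks(F, df1, df2):
    """Inverse of wilks_to_f."""
    return 1 / (1 + F * df1 / df2)


# (label, F, df1, df2, reported Wilks' lambda, reported partial eta squared)
reported = [
    ("utility: reconstruction", 22.661, 3, 18, 0.209, 0.791),
    ("utility: room", 2.644, 3, 18, 0.694, 0.306),
    ("utility: interaction", 1.062, 3, 18, 0.850, 0.150),
    ("presence: reconstruction", 68.304, 4, 17, 0.059, 0.941),
    ("presence: room", 1.059, 4, 17, 0.801, 0.199),
    ("presence: interaction", 1.370, 4, 17, 0.756, 0.244),
]
for label, F, df1, df2, lam, eta in reported:
    lam_from_f = f_to_wilks(F, df1, df2)
    print(f"{label}: lambda {lam_from_f:.3f} (reported {lam}), "
          f"eta_p^2 {1 - lam_from_f:.3f} (reported {eta})")
```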

Perceived task load

Analyzing the reconstruction type, we find the mental demand, physical demand, and effort subscales significantly different between the conditions, whereas the temporal demand, performance, and frustration subscales do not differ significantly. Participants generally reported higher workload in the neural reconstruction-based environment than in the whiteboxed environment, although frustration was slightly lower. Additionally, comparing the rooms irrespective of reconstruction type, we find performance (\(F(1, 20)=11.310\), \(p=.003\), \(\eta _{p}^2=.361\)) significantly higher in the mock OR (\(M=76.1\); \(\hbox {SE}=2.729\)) than in the real OR (\(M=67.7\); \(\hbox {SE}=3.466\)).

Familiarity with environment

Participants rated their familiarity with the neural reconstructed mock OR at \(M=5.81\) (\(\hbox {SD}=1.08\)), with the whiteboxed mock OR at \(M=4.14\) (\(\hbox {SD}=1.90\)), with the neural reconstructed real OR at \(M=5.29\) (\(\hbox {SD}=1.31\)), and with the whiteboxed real OR at \(M=3.33\) (\(\hbox {SD}=1.88\)).

Motion sickness

We found no statistically significant difference in reported sickness between the baseline measurement before the experiment and the measurements after each condition, \(F(2.751, 55.024)=0.238\), \(p=.854\), \(\eta _{p}^2=.012\). Reported values are Greenhouse–Geisser corrected.
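The fractional degrees of freedom follow from the Greenhouse–Geisser correction, which multiplies both degrees of freedom by an estimate \(\hat\varepsilon\) of the departure from sphericity. With five sickness measurements (baseline plus four conditions) and 21 participants, the uncorrected degrees of freedom are 4 and 80, so the reported values correspond to \(\hat\varepsilon \approx .688\). The sketch below computes the standard Greenhouse–Geisser estimate from the covariance matrix of the repeated measurements; the function names and example matrices are illustrative.

```python
def greenhouse_geisser_epsilon(cov):
    """Greenhouse-Geisser epsilon from the k x k covariance matrix of k repeated measures.

    Ranges from 1 / (k - 1) (maximal violation) to 1 (sphericity holds).
    """
    k = len(cov)
    grand_mean = sum(sum(row) for row in cov) / (k * k)
    diag_mean = sum(cov[i][i] for i in range(k)) / k
    row_means = [sum(row) / k for row in cov]
    numerator = (k * (diag_mean - grand_mean)) ** 2
    denominator = (k - 1) * (
        sum(x * x for row in cov for x in row)
        - 2 * k * sum(m * m for m in row_means)
        + k * k * grand_mean ** 2
    )
    return numerator / denominator


def corrected_df(epsilon, k, n):
    """Greenhouse-Geisser corrected (df1, df2) for a one-way repeated-measures ANOVA."""
    return epsilon * (k - 1), epsilon * (k - 1) * (n - 1)


compound_symmetric = [[2.0, 1.0, 1.0], [1.0, 2.0, 1.0], [1.0, 1.0, 2.0]]
unequal_variances = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 10.0]]
print(greenhouse_geisser_epsilon(compound_symmetric))  # sphericity holds
print(greenhouse_geisser_epsilon(unequal_variances))
print(corrected_df(0.688, k=5, n=21))
```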

Objective measures

Tracked head movement and rotation were both significantly higher in the neural reconstruction-based environments. Visualization type, however, had no impact on teleportation, which instead depended mostly on room size. Table 2 lists the effects on all objective measures in detail. We quantified participants’ interactivity both as the number of 4-s time slots spent manipulating objects and as the sum of all discrete object interaction events. In neither case was there a significant difference in interactivity between the conditions.

Qualitative comments

Although post-exposure comments were optional, 18 participants commented positively on the reconstruction quality and the realistic, detail-rich environments of the neural reconstruction-based scenarios. Participants also praised the ease of use of the VR application controls. Negative comments noted that the environment sometimes lacked detail, that the resolution was not high enough, or that flat surfaces were not completely flat. Some users further reported problems interacting with the menu and objects as desired and difficulties understanding and implementing the tasks.

Discussion

Perceived utility, including all of its subscales, was significantly higher in the neural reconstruction-based digital twins. This suggests that this reconstruction type provided users with additional details and information that they considered worthwhile for making more informed decisions. Users experienced higher mental and physical workload in the neural digital twins. We expected this, as there are more details to process and take in, as reflected in the increased head movement and rotation. While it might be worthwhile to investigate ways of directing users’ attention to reduce this workload, it should be noted that higher workload does not necessarily mean that participants were mentally overloaded or performed worse; future studies could explore this relationship further. Interactivity did not increase, indicating that the more realistic visuals did not inspire more thorough planning. Combined with the increased perceived utility, this suggests that the visual detail, rather than the amount of interaction and planning, was responsible for the higher perceived utility. Future work could investigate whether hybrid approaches combining the two visualization strategies lead to better outcomes. In such cases, lower-detail environments could also be generated automatically, strategically leaving out unimportant details while keeping all relevant information.

The significantly higher TLX performance score in the mock OR compared with the real OR possibly indicates that the tasks were harder there, or that participants were less sure about their performance with a real surgical environment. The absence of a difference in TLX performance between reconstruction types, despite the difference in perceived utility, might indicate that novice users did not know how to properly evaluate their planning outcome but still believed that the visualization would be useful for experts solving clinical planning tasks. The overall increase in presence indicates the suitability of the environment: high realism reflects a high level of detail, and high involvement and spatial presence match the increased head rotation and users actively exploring and understanding the environment. The familiarity ratings are high for the neural reconstruction of the real OR (\(M=5.29\)), even though most participants are very unlikely to know the room. The higher realism possibly evokes memories of other ORs participants have seen, which indicates suitability for learning in the right context [19], as context-based environmental cues help users recall information. Our approach works well for different environments and achieves high reconstruction quality, making it worth investigating as a pedagogical tool. These results further indicate that future planning tools could invest in enabling users to supply their own video material for processing and subsequent planning.

Limitations

We compared two VR visualization types chosen to be realistic and easily achievable, and therefore lack a comparison with current advanced planning tools. Further, our sample is limited to graduate students. Despite their partial medical knowledge, the results cannot be generalized to planning experts without further evidence. However, we believe that the study gives substantial indications for the feasibility of the approach. Finally, our tasks were fictitious examples and may not reflect the full complexity of real procedures, so some of our findings might not generalize beyond the specific scenarios of this study. Further studies should therefore substantiate the present results, possibly with clinical professionals, real planning or emergency tasks, and an expanded set of environments and objects. Integration into simulation environments could help quantify planning success in addition to field tests. Discrete event simulation has been shown to measure and compare virtual ORs along multiple axes, such as spatial or hygienic requirements [20], and could potentially integrate such analyses directly into the virtual environment.

Conclusion

Our results indicate that neural reconstructed digital twins are suitable for medical planning tasks. Using such visualizations increased perceived utility and presence compared with a low-visual-fidelity environment. Moreover, we show that current neural reconstructions can be created and used with off-the-shelf hardware, offering potential real-world benefits.

References

Wong SW, Smith R, Crowe P (2010) Optimizing the operating theatre environment. ANZ J Surg 80(12):917–924. https://doi.org/10.1111/j.1445-2197.2010.05526.x

Zhao Y, Mourshed M, Wright J (2009) Factors influencing the design of spatial layouts in healthcare buildings. In: Association of Researchers in Construction Management, ARCOM

Barbagallo S, Corradi L, Goyet J, Iannucci M, Porro I, Rosso N, Tanfani E, Testi A (2015) Optimization and planning of operating theatre activities: an original definition of pathways and process modeling. BMC Med Inform Decis Mak 15(1):38. https://doi.org/10.1186/s12911-015-0161-7

Romeijn HE, Zenios SA (2008) Introduction to the special issue on operations research in health care. Oper Res 56(6):1333–1334. https://doi.org/10.1287/opre.1080.0647

Cardoen B, Demeulemeester E, Beliën J (2010) Operating room planning and scheduling: a literature review. Eur J Oper Res 201(3):921–932. https://doi.org/10.1016/j.ejor.2009.04.011

Raj T, Hashim FH, Huddin AB, Ibrahim MF, Hussain A (2020) A survey on LiDAR scanning mechanisms. Electronics 9(5):741. https://doi.org/10.3390/electronics9050741

Taheri H, Xia ZC (2021) SLAM; definition and evolution. Eng Appl Artif Intell 97:104032. https://doi.org/10.1016/j.engappai.2020.104032

Mildenhall B, Srinivasan PP, Tancik M, Barron JT, Ramamoorthi R, Ng R (2020) NeRF: representing scenes as neural radiance fields for view synthesis. arXiv:2003.08934

Assem M, Ouda BK, Wahed MA (2012) Improving operating theatre design using facilities layout planning. In: 2012 Cairo international biomedical engineering conference (CIBEC). IEEE, Giza, pp 109–113. https://doi.org/10.1109/CIBEC.2012.6473314

Brownlee SA, Whitson PJ, Ibrahim AM (2019) Measuring and improving the design quality of operating rooms. Surg Infect 20(2):102–106. https://doi.org/10.1089/sur.2018.291

Szczepańska A, Kaźmierczak R, Myszkowska M (2021) Virtual reality as a tool for public consultations in spatial planning and management. Energies 14(19):6046. https://doi.org/10.3390/en14196046

Gong L, Berglund J, Fast-Berglund Å, Johansson B, Wang Z, Börjesson T (2019) Development of virtual reality support to factory layout planning. Int J Interact Des Manuf (IJIDeM) 13(3):935–945. https://doi.org/10.1007/s12008-019-00538-x

Müller T, Evans A, Schied C, Keller A (2022) Instant neural graphics primitives with a multiresolution hash encoding. ACM Trans Graph 41(4):1–15. https://doi.org/10.1145/3528223.3530127

Li Z, Müller T, Evans A, Taylor RH, Unberath M, Liu M-Y, Lin C-H (2023) Neuralangelo: high-fidelity neural surface reconstruction. In: 2023 IEEE/CVF conference on computer vision and pattern recognition (CVPR). IEEE, Vancouver, pp 8456–8465. https://doi.org/10.1109/CVPR52729.2023.00817

Schubert TW (2003) The sense of presence in virtual environments: a three-component scale measuring spatial presence, involvement, and realness. Z Medienpsychol 15(2):69–71. https://doi.org/10.1026//1617-6383.15.2.69

Hart SG, Staveland LE (1988) Development of NASA-TLX (task load index): results of empirical and theoretical research. Adv Psychol, vol 52. North-Holland, Amsterdam, pp 139–183

Keshavarz B, Hecht H (2011) Validating an efficient method to quantify motion sickness. Hum Factors J Hum Factors Ergon Soc 53(4):415–426. https://doi.org/10.1177/0018720811403736

Tavakol M, Dennick R (2011) Making sense of Cronbach’s alpha. Int J Med Educ 2:53–55. https://doi.org/10.5116/ijme.4dfb.8dfd

Essoe JK-Y, Reggente N, Ohno AA, Baek YH, Dell’Italia J, Rissman J (2022) Enhancing learning and retention with distinctive virtual reality environments and mental context reinstatement. npj Sci Learn 7(1):1–14

Neumann J, Angrick C, Höhn C, Zajonz D, Ghanem M, Roth A, Neumuth T (2020) Surgical workflow simulation for the design and assessment of operating room setups in orthopedic surgery. BMC Med Inform Decis Mak 20(1):145. https://doi.org/10.1186/s12911-020-1086-3

Funding

Open Access funding enabled and organized by Projekt DEAL. This work was supported by the Digital Health Innovation Platform (d.hip), the NSF under Award No. 2239077, the Link Foundation Fellowship for Modeling, Training, and Simulation, and Johns Hopkins Internal Funds.


Ethics declarations

Conflict of interest

The authors have no conflict of interest to declare.

Ethical approval

The study was approved by the Johns Hopkins University Institutional Review Board (HIRB00017775) and conducted in accordance with the 1964 Declaration of Helsinki and its later amendments or comparable ethical standards.

Informed consent

Informed consent was obtained from all individual participants included in the study.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

The electronic supplementary material is available in the online version of this article.

Supplementary file 1 (MP4, 69,243 KB)

Appendices

Appendix A: Tasks

Tasks that participants worked on in the virtual environments.

Mock OR

- Task: Update Hardware! Needed: a surgical robot, one or more tracking carts, a TV cart, and space for at least two surgeons. Goals: Remove the old tripods and place new tracking carts with a clear line of sight to the patient. Surgeons can easily manipulate the robot and see the monitor. The robot can reach the patient.

- Task: Prepare long procedure! Needed: a sterile cart, a trolley, two or more surgeons, chairs, and a place to lay down tools. Goal: Surgeons should be able to move freely while easily accessing tools in the trolley and laying them on the table. Chairs should be accessible as needed to sit down without obstructing paths.

Real OR

- Task: Plan Surgery! Needed: a surgical robot, a TV cart, a tracking trolley, a table, and space for at least two surgeons. Goal: Surgeons can easily manipulate the robot and reach the patient, table, and trolley. The TV should be clearly visible but not in the way.

- Task: Transfer Patient! Needed: a surgical robot, a tracking tripod, a monitor, and space for the patient table, the sterile material cart, and medical professionals. Goal: Make sure a patient bed can move right next to the operating table. Find the best layout to transfer the patient and then return to surgery easily, while keeping access to sterile material.

Appendix B: Perceived utility instrument

See Table 3.

Appendix C: Reproduction information

We used a workstation with the following hardware for Neuralangelo training:

- AMD Ryzen 9 3950X 16-Core Processor
- Two NVIDIA Quadro RTX 6000 graphics cards with 24 GB memory each
- 128 GB RAM
- Operating system: Ubuntu 20.04
- NVIDIA driver version 470.182.03
- CUDA version 11.4

The rooms were reconstructed using both GPUs. Objects were reconstructed using a single GPU and a reduced hash map size of \(2^{21}\). All other parameters were as described in [14]. While we used a custom implementation, open-source implementations of the Neuralangelo reconstruction approach are available from NVIDIA (https://github.com/NVlabs/neuralangelo) and as part of SDFStudio (https://github.com/autonomousvision/sdfstudio).
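To make the hash map size more concrete: Neuralangelo builds on the multiresolution hash encoding of Instant NGP, in which each resolution level stores feature vectors in a table of \(T\) entries and integer grid vertices are mapped to table slots by a spatial hash. The sketch below illustrates that hash with \(T = 2^{21}\) and computes an upper bound on the number of encoding parameters. It is not the custom implementation used in this work. The configuration in the example (16 levels, 8 features per level) is an assumption for illustration only; the actual values depend on the implementation's configuration.

```python
# Minimal sketch (not the authors' code) of the Instant NGP-style spatial hash
# behind the "hash map size" parameter, plus a parameter-count upper bound.

# Per-dimension primes from the Instant NGP multiresolution hash encoding.
PRIMES = (1, 2654435761, 805459861)


def spatial_hash(ix: int, iy: int, iz: int, table_size: int) -> int:
    """Map an integer grid vertex (ix, iy, iz) to a slot in [0, table_size)."""
    h = 0
    for coord, prime in zip((ix, iy, iz), PRIMES):
        h ^= coord * prime  # XOR of coordinate-prime products
    # For power-of-two table sizes up to 2**32, this equals applying mod T
    # to the 32-bit wrapped hash, because only the low bits matter.
    return h % table_size


def hash_grid_params_upper_bound(num_levels: int, features_per_level: int,
                                 log2_table_size: int) -> int:
    """Upper bound on encoding parameters, assuming every level uses a full
    table. Coarse levels with fewer grid vertices than T need fewer entries."""
    return num_levels * features_per_level * 2 ** log2_table_size


if __name__ == "__main__":
    T = 2 ** 21  # reduced hash map size reported for the objects
    print("slot for vertex (12, 34, 56):", spatial_hash(12, 34, 56, T))
    # Hypothetical, illustrative configuration: 16 levels, 8 features per level.
    n_params = hash_grid_params_upper_bound(16, 8, 21)
    print(n_params, "parameters,", n_params * 4 / 2**30, "GiB at fp32")
```

Each halving of \(T\) halves this bound, which is consistent with using a smaller table when an object is trained on a single GPU rather than two.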

The input data were collected with recent Apple iPhone models (11 and 14) using the built-in wide-angle lens and a high frame rate to reduce motion blur artifacts. The reconstruction pipeline is also shown in Fig. 4.
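Why a high frame rate helps can be seen from a simple bound: the exposure of a frame cannot last longer than the frame interval \(1/\mathrm{fps}\), so the blur streak caused by camera motion is at most the image-space speed of a scene point multiplied by \(1/\mathrm{fps}\). For a camera panning at angular speed \(\omega\) (rad/s) with focal length \(f\) (in pixels), points near the optical axis move at roughly \(f\omega\) pixels per second, giving a blur of at most \(f\omega/\mathrm{fps}\) pixels. The sketch below evaluates this bound; the focal length and panning speed in the example are hypothetical values, not measurements from the capture devices.

```python
def max_motion_blur_px(focal_px: float, angular_speed_rad_s: float,
                       fps: float) -> float:
    """Upper bound on motion blur (pixels) for a panning camera.

    Assumes a small rotation about the camera center, so image points near
    the optical axis move at about focal_px * angular_speed pixels per second,
    and that the exposure lasts at most one frame interval (1 / fps).
    """
    image_speed_px_s = focal_px * angular_speed_rad_s
    max_exposure_s = 1.0 / fps
    return image_speed_px_s * max_exposure_s


if __name__ == "__main__":
    # Hypothetical values: 1500 px focal length, 0.5 rad/s pan.
    for fps in (30, 60, 120, 240):
        print(f"{fps:>3} fps: at most {max_motion_blur_px(1500, 0.5, fps):.2f} px")
```

Under these assumed values, moving from 30 to 240 fps lowers the worst case from 25 px to about 3 px. Auto-exposure often chooses a shorter exposure than the frame interval, so the bound is loose; its point is that a higher frame rate caps the worst case.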

Fig. 4 Reconstruction pipeline. Data are acquired with a phone or camera; the pose of each image is estimated with a structure-from-motion algorithm; a reconstruction is trained on the posed images; a mesh is exported by running marching cubes on the signed distance field and is then decimated; finally, a texture is rendered and mapped onto the exported mesh.
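The mesh-export step runs marching cubes on the signed distance field (SDF). At its core, this step finds grid edges along which the SDF changes sign and places a mesh vertex at the linearly interpolated zero crossing. The sketch below shows only this vertex-placement step. An analytic sphere SDF stands in for the trained network, and triangulation, decimation, and texturing are omitted, so it is an illustration rather than the pipeline's actual code. Full implementations such as scikit-image's `skimage.measure.marching_cubes` also construct the triangles.

```python
import itertools
import math


def sphere_sdf(x: float, y: float, z: float, radius: float = 1.0) -> float:
    """Stand-in SDF (not a trained network): negative inside, positive outside."""
    return math.sqrt(x * x + y * y + z * z) - radius


def zero_crossings(sdf, lo: float, hi: float, n: int):
    """Sample `sdf` on an n x n x n grid over [lo, hi]^3 and return the points
    where the sign changes along grid edges, placed by linear interpolation.
    This is the vertex-placement step of marching cubes; no triangles are built."""
    h = (hi - lo) / (n - 1)  # grid spacing
    coord = [lo + i * h for i in range(n)]
    values = {(i, j, k): sdf(coord[i], coord[j], coord[k])
              for i, j, k in itertools.product(range(n), repeat=3)}
    vertices = []
    for (i, j, k), f0 in values.items():
        for di, dj, dk in ((1, 0, 0), (0, 1, 0), (0, 0, 1)):
            nb = (i + di, j + dj, k + dk)
            if nb not in values:  # edge would leave the grid
                continue
            f1 = values[nb]
            if (f0 < 0) != (f1 < 0):  # sign change: surface crosses this edge
                t = f0 / (f0 - f1)    # linear estimate of the zero level
                p0 = (coord[i], coord[j], coord[k])
                p1 = (coord[nb[0]], coord[nb[1]], coord[nb[2]])
                vertices.append(tuple(a + t * (b - a) for a, b in zip(p0, p1)))
    return vertices, h


if __name__ == "__main__":
    verts, spacing = zero_crossings(sphere_sdf, -1.5, 1.5, 31)
    print(len(verts), "surface vertices at grid spacing", spacing)
```

Because each vertex lies on an edge that also contains the true zero crossing, its distance from the surface is at most one grid spacing; finer grids yield more accurate but larger meshes, which is one reason a decimation step follows.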

The study supervisor monitored the experiment using a 15-inch Razer Blade laptop. Participants used the same machine to fill out the questionnaires after the VR exposure.

Appendix D: Reconstruction quality comparison

This section contains example comparison images for judging the neural reconstruction output before SDF-to-mesh conversion and texture mapping are applied. In each image, the raw camera input is on the left and the neural reconstruction output is on the right. Figure 5 shows reconstructions of the environments; Fig. 6 shows comparisons for the objects.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kleinbeck, C., Zhang, H., Killeen, B.D. et al. Neural digital twins: reconstructing complex medical environments for spatial planning in virtual reality. Int J CARS 19, 1301–1312 (2024). https://doi.org/10.1007/s11548-024-03143-w
