Abstract

Purpose

Recently, transformers have been adopted in computer vision applications and have achieved great success in image segmentation. However, simply applying transformers to medical segmentation tasks rarely yields much higher accuracy than traditional U-shaped network structures, which are based on CNNs and have been extensively researched. On the other hand, CNN structures focus on local information and largely ignore global information, which is very important for medical image segmentation datasets in which cells are scattered across the background. This motivates us to explore the feasibility of U-shaped network architectures that effectively fuse transformers for medical image segmentation tasks.

Methods

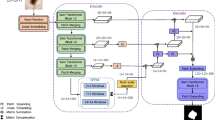

In this paper, we propose a multibranch U-shaped structure fusion transformer network (MBUTransNet), which consists of two distinct branches. In branch 1, a coordinate attention transformer is designed to extract long-range dependency information by weighting coordinates. In branch 2, small U-Net blocks and a multiscale feature fusion block are proposed: the former replace the convolution blocks of each layer, and the latter fuses the feature maps from different layers.
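
To make the idea of weighting coordinates concrete, the following is a minimal sketch of direction-wise pooling and gating, the core step of generic coordinate attention. It is not the authors' coordinate attention transformer: the function name `directional_gate` is hypothetical, the input is a single-channel map stored as nested lists, and the learned convolutions and normalization of a real module are deliberately omitted.

```python
import math


def sigmoid(value):
    """Logistic function mapping any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-value))


def directional_gate(feature_map):
    """Reweight a single-channel H x W map with row and column gates.

    Assumption: this only illustrates direction-wise pooling. A trained
    module would transform the pooled vectors with learned layers before
    applying the sigmoid; that part is left out here.
    """
    height = len(feature_map)
    width = len(feature_map[0])

    # Pool along the width: one summary value per row (vertical position).
    row_means = [sum(row) / width for row in feature_map]
    # Pool along the height: one summary value per column (horizontal position).
    col_means = [
        sum(feature_map[i][j] for i in range(height)) / height
        for j in range(width)
    ]

    gate_h = [sigmoid(m) for m in row_means]
    gate_w = [sigmoid(m) for m in col_means]

    # Each pixel is scaled by the gate of its row and the gate of its column,
    # so the weight at (i, j) depends on the whole row i and whole column j.
    return [
        [feature_map[i][j] * gate_h[i] * gate_w[j] for j in range(width)]
        for i in range(height)
    ]


if __name__ == "__main__":
    toy_map = [[2.0, 0.0], [0.0, 0.0]]
    for row in directional_gate(toy_map):
        print(row)
```

Because every gate summarizes an entire row or column, a single multiplication lets distant positions influence each other, which is the sense in which such a mechanism captures long-range context.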

Results

Our experiments demonstrate that the proposed MBUTransNet achieves improvements of 0.076 and 0.1269 in Dice over the previous best method on the MoNuSeg and Synapse multi-organ segmentation datasets, respectively, without a significant increase in model parameters.
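
For readers unfamiliar with the metric, the Dice coefficient between a predicted mask and a ground-truth mask can be computed as in the sketch below. The function name `dice_coefficient`, the flat binary-list input, and the convention of returning 1.0 when both masks are empty are assumptions made for illustration; the evaluation code behind the reported numbers is not described here.

```python
def dice_coefficient(pred, target):
    """Dice = 2 * |A ∩ B| / (|A| + |B|) for two flat binary masks.

    `pred` and `target` are equal-length sequences of 0/1 values.
    Assumption: two empty masks count as a perfect match (1.0).
    """
    if len(pred) != len(target):
        raise ValueError("masks must have the same number of pixels")

    # Pixels marked as foreground in both masks.
    intersection = sum(p * t for p, t in zip(pred, target))
    total = sum(pred) + sum(target)

    if total == 0:
        return 1.0
    return 2.0 * intersection / total


if __name__ == "__main__":
    predicted = [1, 1, 0, 0]
    reference = [1, 0, 0, 0]
    print(dice_coefficient(predicted, reference))
```

The score ranges from 0 (no overlap) to 1 (identical masks), so it rewards overlap relative to the combined size of the two regions.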

Conclusion

Without bells and whistles, MBUTransNet achieves better performance on medical image datasets, covering both cell segmentation and abdominal organ segmentation. Compared with transformer-based methods, our proposed model also has a competitive parameter count.

Funding

This study was funded by the National Natural Science Foundation of China (NSFC) (62006107, 61402212) and the Introduction and Cultivation Program for Young Innovative Talents of Universities in Shandong (2021QCYY003).

Ethics declarations

Conflict of interest

The authors JunBo Qiao, Xing Wang, Ji Chen and MingTao Liu declare that they have no conflict of interest.

Human and animal rights

This article does not contain any studies with human participants or animals performed by any of the authors.

About this article

Cite this article

Qiao, J., Wang, X., Chen, J. et al. MBUTransNet: multi-branch U-shaped network fusion transformer architecture for medical image segmentation. Int J CARS 18, 1895–1902 (2023). https://doi.org/10.1007/s11548-023-02879-1

DOI: https://doi.org/10.1007/s11548-023-02879-1