Abstract

Purpose

Automatic segmentation and classification of surgical activity is crucial for providing advanced support in computer-assisted interventions and autonomous functionalities in robot-assisted surgeries. Prior works have focused on recognizing either coarse activities, such as phases, or fine-grained activities, such as gestures. This work aims to jointly recognize two complementary levels of granularity directly from videos, namely phases and steps.

Methods

We introduce two correlated surgical activities, phases and steps, for the laparoscopic gastric bypass procedure. We propose a multi-task multi-stage temporal convolutional network (MTMS-TCN) along with a multi-task convolutional neural network (CNN) training setup to jointly predict the phases and steps and benefit from their complementarity to better evaluate the execution of the procedure. We evaluate the proposed method on a large video dataset consisting of 40 surgical procedures (Bypass40).

Results

We present experimental results from several baseline models for both phase and step recognition on the Bypass40 dataset. The proposed MTMS-TCN method outperforms single-task methods in both phase and step recognition by 1-2% in accuracy, precision, and recall. Furthermore, for step recognition, MTMS-TCN outperforms LSTM-based models by 3-6% on all metrics.

Conclusion

In this work, we present a multi-task multi-stage temporal convolutional network for surgical activity recognition, which shows improved results compared to single-task models on a gastric bypass dataset with multi-level annotations. The proposed method shows that the joint modeling of phases and steps is beneficial to improve the overall recognition of each type of activity.

Introduction

Recent works in computer-assisted interventions and robot-assisted minimally invasive surgery have seen significant progress in developing advanced support technologies for the demanding scenarios of a modern operating room (OR) [6, 21, 27]. Automatic surgical workflow analysis, i.e., reliable recognition of the current surgical activities, plays an important role in the OR by providing the semantic information needed to design assistance systems that can support clinical decision-making, report generation, and data annotation. This information is at the core of the cognitive understanding of the surgery and could help reduce surgical errors, increase patient safety, and establish efficient and effective communication protocols [5, 19, 21, 27].

A surgical procedure can be decomposed into activities at different levels of granularity, such as the whole procedure, phases, stages, steps, and actions [18]. Recent works have strongly focused on developing methods to recognize one specific level of granularity from video data. The visual detection of phases [7, 15, 16, 25, 30], robotic gestures [2, 10, 26, 29], and instruments [11, 14, 16, 22] has, for instance, seen a surge in research activities, due to their potential impact on developing intra- and postoperative tools for the purposes of monitoring safety, assessing skills, and reporting. Many of these previous works have focused on endoscopic cholecystectomy procedures, utilizing the publicly available large-scale Cholec80 dataset [25], and on cataract surgical procedures, utilizing the popular CATARACTS dataset [11, 30].

In this work, we target another type of high-volume procedure, namely the gastric bypass. This procedure is particularly interesting for activity analysis because it exhibits a very complex workflow. Gastric bypass is performed to treat obesity, which the World Health Organization considers a global health epidemic [1]; approximately 500,000 laparoscopic bariatric procedures are performed every year worldwide [3]. Laparoscopic Roux-en-Y gastric bypass (LRYGB), the most frequently performed bariatric procedure and the surgical gold standard [3], consists of reducing the stomach and bypassing part of the small bowel. Various clinical groups have worked toward a consensus on the best workflow for this technically demanding procedure in order to improve standardization and reproducibility [17]. However, a clear framework and shared nomenclature for segmenting surgical procedures are still missing.

Similar to [17], we introduce a hierarchical representation of the LRYGB procedure containing phases and steps that represent the workflow performed in our hospital, and we focus on the recognition of these two types of activities. To this end, we utilize a new large-scale dataset, called Bypass40, containing 40 endoscopic videos of gastric bypass procedures annotated with phases and steps. In total, 11 phases and 44 steps are annotated across the videos. This opens new possibilities for research in surgical knowledge modeling and recognition. To jointly learn the tasks of phase and step recognition, we introduce MTMS-TCN, a multi-task multi-stage temporal convolutional network that extends MS-TCN [9], originally proposed for action segmentation.

The contributions of this paper are threefold: (1) we introduce new multi-level surgical activity annotations for the LRYGB procedure and utilize a novel dataset; (2) we propose a multi-task recognition model utilizing only visual features from the endoscopic video; and (3) we benchmark the proposed method with other state-of-the-art deep learning models on the new Bypass40 dataset for surgical activity recognition, demonstrating the effectiveness of the joint modeling of phases and steps.

Fig. 1 Sample images from the dataset, with phase labels in the top-left corner and step labels in the top-right corner. The label definitions are given in Fig. 2.

Related work

EndoNet [25] and DeepPhase [30] are among the early works that employed deep learning for surgical workflow analysis, on cholecystectomy and cataract surgeries, respectively. EndoNet jointly performed phase recognition and tool detection with a CNN followed by a hierarchical hidden Markov model (HMM) for temporal modeling, while DeepPhase used a CNN followed by a recurrent neural network (RNN) for temporal modeling. EndoNet was later extended to EndoLSTM [24], which consists of a CNN for feature extraction and a long short-term memory (LSTM) network for temporal refinement. Similarly, SV-RCNet [15] trained a ResNet [12] and an LSTM end to end, incorporating a prior knowledge inference scheme for surgical phase recognition. MTRCNet-CL [16] proposed a multi-task model for tool presence detection and phase recognition: the CNN features were used to detect tool presence and also served as input to an LSTM for phase prediction. Additionally, a correlation loss was introduced to enhance the synergy between the two tasks. Most previous methods use LSTMs, which retain memory only over limited sequence lengths. Since surgery durations range from less than half an hour to many hours, it is challenging for LSTM-based models to leverage the full temporal information for surgical phase recognition.

Temporal convolutional networks (TCNs) [20] were introduced to hierarchically process videos for action segmentation. In contrast to RNNs, their encoder–decoder architecture encodes both high- and low-level features. Furthermore, dilated convolutions [23] were used in TCNs for action segmentation, improving performance thanks to a large receptive field combined with a higher temporal resolution. MS-TCN [9] is a multi-stage predictor architecture in which each stage, a multi-layer TCN, incrementally refines the prediction of the previous stage. Recently, TeCNO [7] adapted the MS-TCN architecture for online surgical phase prediction by using causal convolutions [23]. We build upon this architecture and confirm experimentally that it is superior to LSTMs for multi-level activity recognition.

Hierarchical surgical activities: phases & steps

We introduce two hierarchically defined surgical activities called phases and steps for the LRYGB procedure. These two elements define the workflow of the surgery at two levels of granularity, with the phases describing the surgical workflow at a coarser level than the steps. Phases describe a set of fundamental surgical aims to accomplish in order to successfully complete the surgical procedure, while steps describe a set of surgical actions to perform in order to accomplish a surgical phase. The surgical procedure is segmented into 44 fine-grained steps, along with 11 coarser phases. All the phases and steps are presented in Fig. 2. These two types of activities are interesting for their inherent hierarchical relationship, which is shown in the figure. Additionally, the figure highlights all the critical phases, and corresponding critical steps, that are clinically known to be important for surgical outcomes [4].

We make use of a new dataset, called Bypass40, consisting of 40 videos of LRYGB procedures with an average duration of 110 ± 30 minutes. This dataset is created from surgeries performed by 7 expert surgeons at IHU Strasbourg. The videos are captured at 25 frames per second (fps) with a resolution of \(854 \times 480\) or \(1920 \times 1080\) and annotated with phases and steps. Sample images with their phase and step labels are shown in Fig. 1. The distribution of phases and steps in the Bypass40 dataset is shown in Fig. 3. As can be seen, the class distributions of both phases and steps are highly imbalanced. This is expected, since not all steps occur in every surgery and the phases and steps differ considerably in duration.

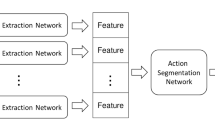

Methodology

With the aim of jointly recognizing phases and steps online, we propose an online surgical activity recognition pipeline consisting of two parts: (1) a multi-task ResNet-50 is employed as a visual feature extractor; (2) a multi-task multi-stage causal TCN model refines the features extracted for the current frame by encoding temporal information from the preceding frames. We adopt this two-step approach so that training the temporal model is independent of training the backbone CNN feature extractor. An overview of the model setup is depicted in Fig. 4.

Feature Extraction Architecture

ResNet-50 [13] has been successfully employed in many works on phase segmentation [7, 15, 16, 28]. In this work, we use the same architecture as our backbone visual feature extractor. The model maps \(224 \times 224 \times 3\) RGB images to a feature space of size \(N_f = 2048\). It is trained on individual frames extracted from the videos, without any temporal context, in a multi-task setup to predict both the phase and the step, as shown in Fig. 4 (a). Since both tasks are multi-class classification problems with imbalanced class distributions, softmax activations and a class-weighted cross-entropy loss are used. The class weights for both activities are computed using median frequency balancing [8]. The total loss, \(\mathcal {L}_{total} = \mathcal {L}_{phase} + \mathcal {L}_{step}\), is the equally weighted sum of the class-weighted cross-entropy losses for phases \((\mathcal {L}_{phase})\) and steps \((\mathcal {L}_{step})\).
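
The class weighting and the combined loss can be illustrated with a minimal, framework-free Python sketch. It is not the authors' code: the function names are hypothetical, each loss term is evaluated for a single frame on softmax probabilities, and the class frequency is simplified to each class's share of all training frames (the original definition in [8] counts pixels of a class over the images in which that class is present). Classes that never occur receive a weight of 0, which is also an assumption.

```python
import math
from collections import Counter
from statistics import median


def median_frequency_weights(labels, num_classes):
    """Median frequency balancing: w_c = median_freq / freq_c.

    Simplifying assumption: freq_c is the fraction of training frames labelled c.
    Classes that never occur get weight 0.0 (hypothetical convention).
    """
    counts = Counter(labels)
    total = len(labels)
    freqs = {c: counts[c] / total for c in range(num_classes) if counts[c] > 0}
    med = median(freqs.values())
    return [med / freqs[c] if c in freqs else 0.0 for c in range(num_classes)]


def weighted_cross_entropy(probs, target, weights):
    """Class-weighted cross-entropy of one frame, given softmax probabilities."""
    return -weights[target] * math.log(probs[target])


def total_loss(phase_probs, phase_target, phase_weights,
               step_probs, step_target, step_weights):
    """L_total = L_phase + L_step, with both tasks weighted equally."""
    return (weighted_cross_entropy(phase_probs, phase_target, phase_weights)
            + weighted_cross_entropy(step_probs, step_target, step_weights))


# Toy example: class 0 covers 75% of the frames, class 1 covers 25%.
weights = median_frequency_weights([0, 0, 0, 1], num_classes=2)
print(weights)  # the rare class 1 receives the larger weight
```

Weighting by inverse frequency keeps frequent phases and steps from dominating the frame-level CNN loss, which matters given the strong imbalance shown in Fig. 3.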

Temporal Modeling

For the joint temporal surgical activity recognition task, we propose MTMS-TCN, a multi-task extension of a multi-stage temporal convolutional network. The model takes as input a video consisting of frames \(x_{1:t}\), \(t \in [1, T]\), where T is the total number of frames, and predicts \(y_{1:t}\), where \(y_t\) is the class label for the current timestamp t. Following the design of MS-TCN, MTMS-TCN contains neither pooling layers nor fully connected layers; it is built only from temporal convolutional layers, specifically dilated residual layers performing dilated convolutions. Since our aim is to segment surgical activities online, similar to TeCNO [7], we use causal convolutions [23] at each layer, so that each output depends only on the current and n past frames and never on future frames. The dilation factor is doubled at each consecutive layer, which increases the temporal receptive field of the network exponentially without introducing any pooling layer. Additionally, each stage of the multi-stage model refines the output of the previous stage.
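
To make the causal, dilated structure concrete, the following single-channel Python sketch computes a causal dilated convolution, wraps it in a residual layer, stacks such layers with a dilation that doubles per layer, and reports the resulting receptive field. It is a hypothetical illustration with fixed toy kernels instead of learned multi-channel filters; a single scalar stands in for the 1×1 convolution. The kernel size of 3 and the 10 layers per stage used below are assumptions taken from the MS-TCN defaults, not values stated in this paper.

```python
def causal_dilated_conv1d(x, kernel, dilation):
    """y[t] = sum_i kernel[i] * x[t - i * dilation], zero-padded on the left.

    Only the current and past samples contribute, so y[t] never sees the future.
    """
    y = []
    for t in range(len(x)):
        acc = 0.0
        for i, w in enumerate(kernel):
            s = t - i * dilation
            if s >= 0:  # samples before the start of the video count as zero
                acc += w * x[s]
        y.append(acc)
    return y


def dilated_residual_layer(x, kernel, dilation, out_weight=1.0):
    """Single-channel analogue of a dilated residual layer:
    x + out_weight * ReLU(causal_dilated_conv1d(x)); out_weight stands in for the 1x1 conv."""
    h = causal_dilated_conv1d(x, kernel, dilation)
    return [xi + out_weight * max(0.0, hi) for xi, hi in zip(x, h)]


def causal_stage(x, kernel, num_layers):
    """Stack of dilated residual layers whose dilation doubles: 1, 2, 4, ..."""
    for layer in range(num_layers):
        x = dilated_residual_layer(x, kernel, dilation=2 ** layer)
    return x


def causal_receptive_field(num_layers, kernel_size=3):
    """Number of frames (current + past) visible to the last layer of one stage."""
    return 1 + sum((kernel_size - 1) * 2 ** layer for layer in range(num_layers))


print(causal_receptive_field(10))  # 2047 frames, i.e. about 34 minutes at 1 fps
```

Because every layer only looks backward and the residual sum is element-wise, changing a future frame can never alter an earlier output, which is the property required for online recognition.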

Similar to our setup for the CNN, we train MTMS-TCN in a multi-task fashion to jointly predict the two activities by attaching two heads at the end of each stage. Softmax activations with cross-entropy losses for phases and steps are applied, and the total loss is defined as for the backbone CNN (Eq. 3), i.e., \(\mathcal {L}_{total} = \mathcal {L}_{phase} + \mathcal {L}_{step}\). Note that, unlike for the CNN, the cross-entropy loss is not class-weighted. This allows the temporal model to implicitly learn the duration and occurrence of each phase and step class.
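
The multi-task objective of the temporal model can be sketched in plain Python as follows. Each stage is assumed to emit per-frame logits from two heads (11 phase classes and 44 step classes), and the cross-entropy is deliberately unweighted, as described above. Summing the losses of all stages follows the MS-TCN recipe and is an assumption here, as are the averaging over frames and the function names.

```python
import math


def log_softmax(logits):
    """Numerically stable log-softmax of one frame's logits."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - log_z for z in logits]


def frame_averaged_ce(logits_per_frame, labels):
    """Unweighted cross-entropy averaged over frames (no class weights)."""
    losses = [-log_softmax(lg)[y] for lg, y in zip(logits_per_frame, labels)]
    return sum(losses) / len(losses)


def mtms_tcn_loss(stage_outputs, phase_labels, step_labels):
    """stage_outputs: one (phase_logits, step_logits) pair per stage, each a list of
    per-frame logit vectors. Returns the sum over stages of L_phase + L_step."""
    total = 0.0
    for phase_logits, step_logits in stage_outputs:
        total += (frame_averaged_ce(phase_logits, phase_labels)
                  + frame_averaged_ce(step_logits, step_labels))
    return total


uniform = ([[0.0] * 11], [[0.0] * 44])  # one frame with uninformative phase/step logits
print(mtms_tcn_loss([uniform, uniform], [3], [10]))  # 2 * (ln 11 + ln 44) for two stages
```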

Experimental setup

Dataset

We evaluate our method on the Bypass40 dataset described in Section 3. We split the 40 videos into 4 subsets of 10 videos each to perform 4-fold cross-validation. Each subset is used once as the test set, while the remaining 30 videos are divided into training and validation sets of 24 and 6 videos, respectively. The videos are subsampled at 1 fps, yielding approximately 149,000 frames for training, 41,000 frames for validation, and 66,000 frames for testing in each fold. The frames are resized to ResNet-50's input dimension of \(224 \times 224 \times 3\), and the training set is augmented with horizontal flipping, saturation changes, and rotation.
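
The split and the subsampling can be expressed as a short Python sketch. The text does not specify which 6 of the 30 non-test videos form the validation set, so taking the last six is an arbitrary, hypothetical choice, as are the function names and video identifiers. As a sanity check on the frame counts: at 1 fps, a video of the average 110-minute duration yields 6,600 frames, so 10 test videos give roughly 66,000 frames and 6 validation videos roughly 40,000, in line with the figures above.

```python
def make_folds(video_ids, num_folds=4, num_val=6):
    """Split videos into `num_folds` equal subsets; each subset is the test set once.

    The remaining videos are split into training and validation sets; using the
    last `num_val` of them for validation is an arbitrary choice for illustration.
    """
    size = len(video_ids) // num_folds
    subsets = [video_ids[k * size:(k + 1) * size] for k in range(num_folds)]
    folds = []
    for k in range(num_folds):
        rest = [v for j, subset in enumerate(subsets) if j != k for v in subset]
        folds.append({"train": rest[:-num_val], "val": rest[-num_val:], "test": subsets[k]})
    return folds


def subsample_indices(num_frames, src_fps=25, dst_fps=1):
    """Indices of the frames kept when subsampling a 25 fps video to 1 fps."""
    step = src_fps // dst_fps
    return list(range(0, num_frames, step))


folds = make_folds([f"video{i:02d}" for i in range(1, 41)])
print([(len(f["train"]), len(f["val"]), len(f["test"])) for f in folds])
# [(24, 6, 10), (24, 6, 10), (24, 6, 10), (24, 6, 10)]
print(len(subsample_indices(110 * 60 * 25)))  # 6600 frames for a 110-minute video
```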

Model Training

The ResNet-50 model is initialized with weights pre-trained on ImageNet. It is then trained for phase and step recognition both in a single-task setup, called ResNet, and jointly in a multi-task setup, called MT-ResNet, described in Section 3. In all CNN experiments, the model is trained for 30 epochs with a learning rate of 1e-5, a weight regularization of 5e-5, and a batch size of 32. The reported test results are from the model that performs best on the validation set. The baseline TCN model is trained in a single-task setup using the features extracted by the backbone ResNet (Fig. 5); this amounts to training TeCNO separately for each of the two recognition tasks. The MTMS-TCN model is trained in a multi-task setup using the features of the backbone MT-ResNet, trained in a similar fashion. The TCN-based models are trained with different numbers of stages to identify the effect of the number of stages on long temporal associations. All temporal models are trained for 200 epochs with a learning rate of 3e-4. The feature representations of the augmented CNN training data are also used to train the TCN models (Fig. 5). Our CNN backbone was implemented in TensorFlow, while the temporal models (TCN and LSTM) were implemented in PyTorch. All models were trained on NVIDIA GeForce RTX 2080 Ti GPUs.
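
For reference, the reported hyperparameters can be collected in a small configuration object, together with validation-based model selection. This is a hypothetical Python sketch: the field names are illustrative, "weight regularization" is interpreted as L2 weight decay, values not reported for the temporal models (weight decay, batch size) are left unset rather than guessed, and the validation metric used for selection is not specified in the text.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class TrainConfig:
    epochs: int
    learning_rate: float
    weight_decay: Optional[float] = None  # "weight regularization", assumed to be L2 weight decay
    batch_size: Optional[int] = None       # None means "not reported"


CNN_CONFIG = TrainConfig(epochs=30, learning_rate=1e-5, weight_decay=5e-5, batch_size=32)
TEMPORAL_CONFIG = TrainConfig(epochs=200, learning_rate=3e-4)  # TCN and LSTM models


def select_best_epoch(val_scores):
    """Index of the epoch with the best validation score (earliest one on ties)."""
    return max(range(len(val_scores)), key=lambda epoch: val_scores[epoch])


print(select_best_epoch([0.61, 0.68, 0.72, 0.70]))  # 2
```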

Evaluation Metrics

We follow the evaluation metrics used in related publications [7, 15, 16], namely accuracy (ACC), precision (PR), recall (RE), and F1 score (F1). Accuracy is the fraction of frames in the whole video whose activity is correctly classified. PR, RE, and F1 are computed class-wise, defined as:

\[ PR = \frac{|GT \cap P|}{|P|}, \qquad RE = \frac{|GT \cap P|}{|GT|}, \qquad F1 = \frac{2 \cdot PR \cdot RE}{PR + RE}, \]

where GT and P represent the ground truth and the prediction for one class, respectively, i.e., the sets of frames assigned to that class. These values are averaged across all classes to obtain PR, RE, and F1 for the entire test set. We perform 4-fold cross-validation and report the results as mean and standard deviation across all folds.
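
A minimal Python sketch of these metrics for one sequence of frame labels (e.g., a video), written directly from the definitions above, is given below. How classes absent from both the ground truth and the prediction are treated is not stated in the text; skipping them in the class average, as done here, is an assumption, as is scoring an empty denominator as 0. The fold summary uses the sample standard deviation, which is likewise an assumption.

```python
from statistics import mean, stdev


def frame_metrics(gt, pred, num_classes):
    """Accuracy and class-averaged PR, RE, F1 for one sequence of frame labels."""
    acc = sum(g == p for g, p in zip(gt, pred)) / len(gt)
    prs, res, f1s = [], [], []
    for c in range(num_classes):
        gt_c = {i for i, g in enumerate(gt) if g == c}      # GT: frames of class c
        pred_c = {i for i, p in enumerate(pred) if p == c}  # P: frames predicted as c
        if not gt_c and not pred_c:
            continue  # class absent from this sequence (assumed to be skipped)
        inter = len(gt_c & pred_c)
        pr = inter / len(pred_c) if pred_c else 0.0
        re = inter / len(gt_c) if gt_c else 0.0
        f1 = 2 * pr * re / (pr + re) if pr + re > 0 else 0.0
        prs.append(pr)
        res.append(re)
        f1s.append(f1)
    return acc, mean(prs), mean(res), mean(f1s)


def summarize_folds(values):
    """Mean and (sample) standard deviation of one metric across folds."""
    return mean(values), stdev(values)


print(frame_metrics([0, 0, 1, 1], [0, 1, 1, 1], num_classes=2))
```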

An overview of all evaluated models is depicted in Fig. 5. MTMS-TCN is evaluated against two popular surgical phase recognition networks, ResNetLSTM [15] and TeCNO [7]. Both networks are trained with the two-step process, separately for phase and for step recognition (single-task setup). Furthermore, ResNetLSTM is extended to MT-ResNetLSTM by training it in a multi-task setup. Since our model uses causal convolutions for online activity recognition, a unidirectional LSTM is used for a fair comparison. The LSTM, with 64 hidden units, is trained on the video features extracted by the CNN backbone, with a sequence length equal to the video length, for 200 epochs with a learning rate of 3e-4.

Results and discussions

A comparison of MTMS-TCN (Stage I) with other state-of-the-art methods, based on both LSTMs and TCNs, is presented in Tables 1 and 2 for the phase and step recognition tasks. For phase recognition, TeCNO, which uses TCNs, outperforms ResNetLSTM and MT-ResNetLSTM by 1% and 3% in accuracy, respectively. MTMS-TCN outperforms the TeCNO, ResNetLSTM, and MT-ResNetLSTM models by 2% in phase recognition.

Similarly, for step recognition, TeCNO outperforms both LSTM-based models by 3-4% with respect to accuracy and 3-6% in terms of precision. MTMS-TCN improves over TeCNO by 1% in accuracy and outperforms it by 2% and 1.5% in terms of precision and recall, respectively. In turn, MTMS-TCN outperforms LSTM-based models by 4-5% in terms of accuracy and 3-8% in terms of precision and recall.

Table 3 presents the performance of all models on the joint recognition of phases and steps. We report the joint phase-step prediction accuracy, computed as the fraction of frames in which both the phase and the step are correctly recognized. All multi-task models outperform their single-task counterparts; in particular, MTMS-TCN outperforms TeCNO by 3%. Moreover, since a frame can be jointly correct only if its step is correct, joint accuracy is bounded by step accuracy. The joint-recognition accuracy of MTMS-TCN is very close to its step recognition accuracy, which suggests that the model has implicitly learned the hierarchical relationship between phases and steps and benefited from it.
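
The joint metric can be sketched in a few lines of Python; the function names and the toy labels below are hypothetical and serve only to show how joint accuracy relates to the two per-task accuracies.

```python
def accuracy(gt, pred):
    """Fraction of frames whose label is predicted correctly."""
    return sum(g == p for g, p in zip(gt, pred)) / len(gt)


def joint_accuracy(phase_gt, phase_pred, step_gt, step_pred):
    """Fraction of frames where both the phase and the step are predicted correctly."""
    hits = sum(pg == pp and sg == sp
               for pg, pp, sg, sp in zip(phase_gt, phase_pred, step_gt, step_pred))
    return hits / len(phase_gt)


phase_gt, phase_pred = [0, 0, 1, 1], [0, 1, 1, 1]
step_gt, step_pred = [0, 1, 2, 3], [0, 1, 2, 0]
print(accuracy(phase_gt, phase_pred), accuracy(step_gt, step_pred),
      joint_accuracy(phase_gt, phase_pred, step_gt, step_pred))  # 0.75 0.75 0.5
```

In this toy case the phase and step errors fall on different frames, so the joint accuracy drops well below either per-task accuracy; a model whose errors are hierarchically consistent would instead keep the joint accuracy close to the step accuracy.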

The improvement achieved by both MTMS-TCN and TeCNO in both recognition tasks over the LSTM-based models is attributed to the higher temporal resolution and large receptive field of the underlying TCN module. The improvement of MTMS-TCN over TeCNO, on the other hand, is attributed to the multi-task setup. Additionally, MT-ResNet, the backbone of MTMS-TCN, achieves better step recognition than ResNet, the backbone of TeCNO, with a small decrease in phase recognition performance.

Table 4 presents the average precision, recall, and F1 score for a set of surgically critical steps. MTMS-TCN performs better than TeCNO in recognizing many of these steps. Moreover, short steps such as S25, S30, and S39, which are harder to recognize, are recognized considerably better by MTMS-TCN than by TeCNO. Overall, these results support training the model in a multi-task setup for the joint recognition of phases and steps.

Fig. 6 visualizes phase predictions for the 3 videos with the best and the 3 videos with the worst MTMS-TCN phase recognition performance. In some cases, MTMS-TCN recognizes short phases, such as P5, P7, P9, and P10, better than TeCNO, and in some instances it also recognizes phase transitions better (e.g., P3, P4, and P9). Additionally, both methods outperform the ResNet and ResNetLSTM models.

Fig. 7 visualizes the complete step predictions for the single video with the best and the single video with the worst MTMS-TCN step recognition performance. Since there are 44 steps, visualizing all of them is challenging and clutters the plot; we therefore show one video per category instead of three. Furthermore, for better visualization, we use a 20-color categorical colormap onto which all 44 steps are mapped. The results show that MTMS-TCN captures short steps and step transitions better than TeCNO and ResNetLSTM.

Conclusion

In this paper, we introduce new multi-level surgical activity annotations for the LRYGB procedure, namely phases and steps. We propose MTMS-TCN, a multi-task multi-stage temporal convolutional network, and successfully apply it to joint online phase and step recognition. The model is evaluated on a new dataset and compared with state-of-the-art methods in both single-task and multi-task setups, demonstrating the benefits of jointly modeling phases and steps for surgical workflow recognition.

References

World Health Organization (2000) Obesity: preventing and managing the global epidemic. Report of a WHO consultation. World Health Organ Tech Rep Ser 894:1–253

Ahmidi N, Tao L, Sefati S, Gao Y, Lea C, Haro BB, Zappella L, Khudanpur S, Vidal R, Hager GD (2017) A dataset and benchmarks for segmentation and recognition of gestures in robotic surgery. IEEE Trans Biomed Eng 64(9):2025–2041

Angrisani L, Santonicola A, Iovino P, Formisano G, Buchwald H, Scopinaro N (2015) Bariatric surgery worldwide 2013. Obes Surg 25(10):1822–1832

Birkmeyer JD, Finks JF, O'Reilly A, Oerline M, Carlin AM, Nunn AR, Dimick J, Banerjee M, Birkmeyer NJ (2013) Surgical skill and complication rates after bariatric surgery. N Engl J Med 369(15):1434–1442. https://doi.org/10.1056/nejmsa1300625

Bricon-Souf N, Newman CR (2007) Context awareness in health care: A review. Int J Med Inf 76(1):2–12

Cleary K, Kinsella A (2005) OR 2020: The operating room of the future - workshop report. J Laparoendosc Adv Surg Tech - Part A 15(5):495–573

Czempiel T, Paschali M, Keicher M, Simson W, Feussner H, Kim ST, Navab N (2020) TeCNO: Surgical phase recognition with multi-stage temporal convolutional networks. In: MICCAI

Eigen D, Fergus R (2015) Predicting depth, surface normals and semantic labels with a common multi-scale convolutional architecture. In: 2015 IEEE International Conference on Computer Vision (ICCV), pp. 2650–2658. https://doi.org/10.1109/ICCV.2015.304

Farha YA, Gall J (2019) MS-TCN: Multi-stage temporal convolutional network for action segmentation. In: CVPR

Funke I, Bodenstedt S, Oehme F, von Bechtolsheim F, Weitz J, Speidel S (2019) Using 3D convolutional neural networks to learn spatiotemporal features for automatic surgical gesture recognition in video. In: MICCAI

Hajj HA, Lamard M, Conze PH, Cochener B, Quellec G (2018) Monitoring tool usage in surgery videos using boosted convolutional and recurrent neural networks. Med Image Anal 47:203–218

He K, Zhang X, Ren S, Sun J (2016) Deep residual learning for image recognition. In: CVPR

He K, Zhang X, Ren S, Sun J (2016) Identity mappings in deep residual networks. In: Computer Vision – ECCV 2016, pp. 630–645. Springer International Publishing

Jin A, Yeung S, Jopling J, Krause J, Azagury D, Milstein A, Fei-Fei L (2018) Tool detection and operative skill assessment in surgical videos using region-based convolutional neural networks. In: 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), pp. 691–699

Jin Y, Dou Q, Chen H, Yu L, Qin J, Fu CW, Heng PA (2018) SV-RCNet: Workflow recognition from surgical videos using recurrent convolutional network. IEEE Trans Med Imaging 37(5):1114–1126

Jin Y, Li H, Dou Q, Chen H, Qin J, Fu C, Heng P (2020) Multi-task recurrent convolutional network with correlation loss for surgical video analysis. Med Image Anal 59:

Kaijser MA, van Ramshorst GH, Emous M, Veeger NJGM, van Wagensveld BA, Pierie JPEN (2018) A Delphi consensus of the crucial steps in gastric bypass and sleeve gastrectomy procedures in the Netherlands. Obes Surg 28(9):2634–2643

Katić D, Julliard C, Wekerle AL, Kenngott H, Müller-Stich BP, Dillmann R, Speidel S, Jannin P, Gibaud B (2015) LapOntoSPM: an ontology for laparoscopic surgeries and its application to surgical phase recognition. Int J Comput Assisted Radiol Surg 10(9):1427–1434

Kranzfelder M, Staub C, Fiolka A, Schneider A, Gillen S, Wilhelm D, Friess H, Knoll A, Feussner H (2012) Toward increased autonomy in the surgical OR: needs, requests, and expectations. Surg Endoscopy 27(5):1681–1688

Lea C, Vidal R, Reiter A, Hager GD (2016) Temporal convolutional networks: A unified approach to action segmentation. In: Lecture Notes in Computer Science, pp. 47–54. Springer International Publishing

Maier-Hein L, Vedula SS, Speidel S, Navab N, Kikinis R, Park A, Eisenmann M, Feussner H, Forestier G, Giannarou S, Hashizume M, Katic D, Kenngott H, Kranzfelder M, Malpani A, März K, Neumuth T, Padoy N, Pugh C, Schoch N, Stoyanov D, Taylor R, Wagner M, Hager GD, Jannin P (2017) Surgical data science for next-generation interventions. Nat Biomed Eng 1(9):691–696. https://doi.org/10.1038/s41551-017-0132-7

Nwoye CI, Mutter D, Marescaux J, Padoy N (2019) Weakly supervised convolutional LSTM approach for tool tracking in laparoscopic videos. Int J Comput Assisted Radiol Surg 14:1059–1067

van den Oord A, Dieleman S, Zen H, Simonyan K, Vinyals O, Graves A, Kalchbrenner N, Senior A, Kavukcuoglu K (2016) WaveNet: A generative model for raw audio. arXiv preprint arXiv:1609.03499

Twinanda AP (2017) Vision-based approaches for surgical activity recognition using laparoscopic and RGBD videos. PhD thesis

Twinanda AP, Shehata S, Mutter D, Marescaux J, de Mathelin M, Padoy N (2017) EndoNet: A deep architecture for recognition tasks on laparoscopic videos. IEEE Trans Med Imaging 36(1):86–97

Varadarajan B, Reiley C, Lin H, Khudanpur S, Hager G (2009) Data-derived models for segmentation with application to surgical assessment and training. In: Yang GZ, Hawkes D, Rueckert D, Noble A, Taylor C (eds) MICCAI, pp. 426–434

Vercauteren T, Unberath M, Padoy N, Navab N (2020) CAI4CAI: The rise of contextual artificial intelligence in computer-assisted interventions. Proc IEEE 108(1):198–214

Yu T, Mutter D, Marescaux J, Padoy N (2019) Learning from a tiny dataset of manual annotations: a teacher/student approach for surgical phase recognition

Zappella L, Béjar B, Hager G, Vidal R (2013) Surgical gesture classification from video and kinematic data. Med Image Anal 17(7):732–745

Zisimopoulos O, Flouty E, Luengo I, Giataganas P, Nehme J, Chow A, Stoyanov D (2018) DeepPhase: Surgical phase recognition in cataracts videos. In: MICCAI

Acknowledgements

This work has received funding from the European Union’s Horizon 2020 research and innovation programme under the Marie Sklodowska-Curie grant agreement No. 813782 - project ATLAS. This work was also supported by French state funds managed within the Investissements d’Avenir program by BPI France (project CONDOR) and by the ANR (ANR-16-CE33-0009, ANR-10-IAHU-02). The authors would also like to thank the IHU and IRCAD research teams for their help with the data annotation during the CONDOR project.

Funding

Open access funding provided by Università degli Studi di Verona within the CRUI-CARE Agreement.

Ethics declarations

Conflict of Interest

The authors declare that they have no conflict of interest.

Ethical approval

The research was conducted in accordance with the 1964 Helsinki Declaration. The surgical videos were recorded and collected in an anonymized manner following informed consent of patients. The local medical research and ethical committee cleared the present study from the Research Involving Human Subjects Act since the study did not imply any deviation from standard of care.

Informed consent

The patients consented to data recording.

Code availability

The source code is publicly available at https://github.com/CAMMApublic/MTMS-TCN-Phase-Step-Bypass.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ramesh, S., Dall’Alba, D., Gonzalez, C. et al. Multi-task temporal convolutional networks for joint recognition of surgical phases and steps in gastric bypass procedures. Int J CARS 16, 1111–1119 (2021). https://doi.org/10.1007/s11548-021-02388-z
