Abstract

Purpose

Probe-based confocal laser endomicroscopy (pCLE) is a recent imaging modality that allows performing in vivo optical biopsies. The design of pCLE hardware, and its reliance on an optical fibre bundle, fundamentally limits the image quality with a few tens of thousands fibres, each acting as the equivalent of a single-pixel detector, assembled into a single fibre bundle. Video registration techniques can be used to estimate high-resolution (HR) images by exploiting the temporal information contained in a sequence of low-resolution (LR) images. However, the alignment of LR frames, required for the fusion, is computationally demanding and prone to artefacts.

Methods

In this work, we propose a novel synthetic data generation approach to train exemplar-based Deep Neural Networks (DNNs). HR pCLE images with enhanced quality are recovered by the models trained on pairs of estimated HR images (generated by the video registration algorithm) and realistic synthetic LR images. Performance of three different state-of-the-art DNNs techniques were analysed on a Smart Atlas database of 8806 images from 238 pCLE video sequences. The results were validated through an extensive image quality assessment that takes into account different quality scores, including a Mean Opinion Score (MOS).

Results

Results indicate that the proposed solution produces an effective improvement in the quality of the obtained reconstructed image.

Conclusion

The proposed training strategy and associated DNNs allows us to perform convincing super-resolution of pCLE images.

Similar content being viewed by others

Introduction

Probe-based confocal laser endomicroscopy (pCLE) is a state-of-the-art imaging system used in clinical practice for in situ and real time in vivo optical biopsy. In particular, recent works using Cellvizio (Mauna Kea Technologies, France) have demonstrated the impact of introducing pCLE as a new imaging modality for the diagnostics procedures of conditions such as pancreatic cystic tumours and the surveillance of Barrett’s oesophagus [4]. pCLE is a recent imaging modality in gastrointestinal and pancreaticobiliary diseases [4].

The authors of [4] have shown that despite clear clinical benefits of pCLE, improving its specificity and sensitivity would help it become a routine diagnostic tool. Specificity and sensitivity are directly dependent on the quality of the pCLE images. Therefore, increasing the resolution of these images might bring a more reliable source of information and improve pCLE diagnosis.

Certainly, the key point of pCLE is its suitability for real-time and intraoperative usage. Having high-quality images in real time potentially allows for better pCLE interpretability. Thus, offline processing would not fit in the standard clinical work-flow required in this context.

The trend for image sensor manufacturers is to increase the resolution, as apparent in the current move to high-definition endoscopic detectors. Recently introduced 4K endoscopes provide 8M pixels, a difference to pCLE of 2-to-3 orders of magnitude. In pCLE, reliance on an imaging guide—an optical fibre bundle, composed of a few tens of thousands of optical fibres, each acting as the equivalent of a single-pixel detector—fundamentally limits the image quality. These fibres are irregularly positioned in the bundle which implies that tissue signal is a collection of pixels sampled on an irregular grid. Hence, a reconstruction procedure is needed for mapping the irregular samples to a Cartesian image. Other factors that reduce pCLE image quality are cross-talk among neighbouring fibres and limited signal-to-noise ratio. All these factors lead to the generation of images with artefacts, noise, relatively low contrast and resolution. This work proposes a software-based resolution augmentation method which is more agile and simpler to implement than hardware engineering solutions.

Building on from the idea that high-resolution (HR) images are desired, this study explores advanced single-image super-resolution (SISR) techniques which can contribute to effective improvement in image quality. Although SISR for natural images is a relatively mature field, this work is the first attempt to translate these solutions into the pCLE context. Beyond SISR, video registration technique [13] have been proposed to increase the resolution of pCLE. Such methods provide a baseline super-resolution technique, but suffers from artefact and are computationally too expensive to be applied in real time. Because of the recent success of deep learning for SISR on natural images [1], this work focuses on exemplar-based super-resolution (EBSR) deep learning techniques. However, the translation of these methods to the pCLE domain is not straightforward, notably due to the lack of ground-truth HR images required for the training. There is indeed no equivalent imaging device capable of producing higher-resolution endomicroscopic imaging, nor any robust and highly accurate means of spatially matching microscopic images acquired across scales with different devices. Furthermore, in comparison with natural images, currently available pCLE images suffer from specific artefacts introduced by the reconstruction procedure that maps the tissue signal from the irregular fibre grid to the Cartesian grid.

The contribution of this work is threefold. First, three deep learning models for SISR are examined on the pCLE data. Second, to overcome the problem of the lack of ground-truth low-resolution (LR)/HR image pairs for training purposes, a novel pipeline to generate pseudo-ground-truth data by leveraging an existing video registration technique [13] is proposed. Third, in the absence of a reference HR ground truth, to assess the clinical validity of our approach, a Mean Opinion Score (MOS) study was conducted with nine experts (1–10 years of experience) each assessing 46 images according to three different criteria. To our knowledge, this is the first research work to address the challenge of SISR reconstruction for pCLE images based on deep learning, generate pCLE pseudo-ground-truth data for training of EBSR models and demonstrate that pseudo-ground-truth trained models provide convincing SR reconstruction.

The rest of the paper is organised as follows. “Related work” section presents the state of the art for SISR with natural images. “Materials and methods” section presents the proposed training methodology based on realistic pseudo-ground-truth generation and detail the implementation of the SISR models. “Results” section gives information on the evaluation of our approach using a quantitative image quality assessment (IQA) and a MOS study. “Discussion and conclusions” section summarises the contribution of this research to pCLE SISR.

Related work

Super-resolution (SR) has received a lot of interest from the computer vision community in the recent decades [10]. Initial SR approaches were based on single-image super-resolution (SISR) and exploited signal processing techniques applied to the input image. An alternative to SISR is multi-frame image super-resolution based on the idea that HR image can be reconstructed by fusing many LR images together. Ideally, the combination of several LR image sources enriches the information content of the reconstructed HR image and contributes to improving its quality. Registration can be used to merge LR images acquired at slightly shifted field-of-views into a unified HR image.

In the specific context of pCLE, the work proposed by Vercauteren et al. [13] presents a video registration algorithm that, in some cases, can improve spatial information of the reconstructed pCLE image, and reveals details which were not visible initially. The quality of the registration is a key step to the success of the SR reconstruction, but the alignment of images captured at different times is not trivial. Misalignment leads to incorrect fusion and generates artefacts such as ghosting. Moreover, registration is a computationally expensive technique, making this approach unsuitable for real-time purposes.

Another interesting approach to SISR is exemplar-based super-resolution (EBSR), which learns the correspondence between low- and the high-resolution images. Thanks to the recent success of deep learning and Convolutional Neural Networks (CNNs), EBSR methods currently represent the state-of-the-art for the SR task [1]. Although many research groups have worked on deep-learning-based SR for natural images, and although CNNs are currently widely used in various medical imaging problems [11], only recently have CNNs been used for SR in medical imaging. Noteworthy is the work proposed in [12] that attempt to improve the quality of magnetic resonance images.

The behaviour of CNNs, especially in the context of SR, is strongly driven by the choice of a loss function, and the most popular one is mean squared error (MSE) [16]. Although MSE as a loss function steers the SR models towards the reconstitution of HR images with high peak signal-to-noise ratios, this does not necessarily mean that the final images will provide a good quality perception. A model trained with a selective loss function involving a Generative Adversarial Network for Image Super-Resolution (SRGAN) was proposed by Ledig et al. [7]. The authors designed an adversarial loss to classify HR images into SR images and ground-truth HR images. Based on a MOS study, the authors showed that the participants perceived the quality of the restored HR images as higher compared to the image quality measured only by a PSNR.

Another critical issue with deep CNNs is the convergence speed. Several solutions, such as using a very high learning rate for network training [5], and removing batch-normalisation modules [8] were proposed to tackle this issue.

Materials and methods

The Smart Atlas database [2], a collection of 238 anonymised pCLE video sequences of the colon and oesophagus, is used in this study. The database was split into three subsets: training set (70%), validation set (15%), and test set (15%). Each subset was created ensuring that colon and oesophagus tissue were equally represented. Data were acquired with 23 unique probes of the same pCLE probe type. The SR models are specific to the type of the probe but generic to the exact probe being used. Thus, the models do not need to be retrained for probes of the same type. Another type of probe, such as needle-CLE (nCLE), would require a specifically trained model. nCLE and pCLE differ by the number of optical fibres and the design of the distal optics.

“Pseudo-ground-truth image estimation based on video registration” section explains how the pseudo-ground-truth HR images were generated. “Generation of realistic synthetic pCLE data” section describes our proposed simulation framework to generate synthetic LR (\(\hbox {LR}_{\mathrm{syn}}\)) images from original LR (\(\hbox {LR}_{\mathrm{org}}\)) images. “Implementation details” section presents the pre-processing steps needed for standardising the input images and details the implementation of the super-resolution CNNs used in this study.

Pseudo-ground-truth image estimation based on video registration

To compensate for the lack of ground-truth HR pCLE data, a registration-based mosaicking technique [13] was used to estimate HR images. Mosaicking acts as a classical SR technique and fuses several registered input frames by averaging the temporal information. The mosaics were generated for the entire Smart Atlas database and used as a source of HR frames.

Since mosaicking generates a single large field-of-view mosaic image from a collection of input LR images, it does not directly provide a matched HR image for each LR input. To circumvent this, we used the mosaic-to-image diffeomorphic spatial transformation resulting from the mosaicking process to propagate and crop the fused information from the mosaic back into each input LR image space. The image sequences resulting from this method are regarded as estimates of HR frames. These estimates will be referred to as \(\widehat{\hbox {HR}}\) in the text.

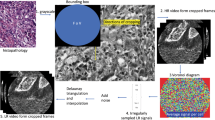

The image quality of the mosaic image heavily depends on the accuracy of the underpinning registration which is a difficult task. The corresponding pairs of LR and \(\widehat{\hbox {HR}}\) images generated by the proposed registration-based method suffer from artefacts, which can hinder the training of the EBSR models (Fig. 1).

Specifically, it can be observed that alignment inaccuracies occurring during mosaicking were a source of ghosting artefacts which in combination with residual misalignments between the LR and \(\widehat{\hbox {HR}}\) images, creates unsuitable data for the training. Sequences with obvious artefacts were manually discarded. However, even on this selected dataset, training issues were observed. To address these, we simulated LR-HR image pairs for training EBSR algorithms while leveraging the registration-based \(\widehat{\hbox {HR}}\) images as realistic HR images.

Generation of realistic synthetic pCLE data

Currently available pCLE images are reconstructed from scattered fibre signal. Every fibre in the bundle acts as a single-pixel detector. To reconstruct pCLE images on a Cartesian grid, Delaunay triangulation and piecewise linear interpolation are used. The simulation framework developed in this study mimics the standard pCLE reconstruction algorithm and starts by assigning to each fibre the average of the signal from seven neighbouring pixels [6]. In the standard reconstruction algorithm, the fibre signal, which includes noise, is then interpolated. Similarly, noise was added to the simulated data to produce realistic images and avoid creating a wide domain gap between real and simulated pCLE images.

Despite some misalignment artefacts, the registration-based generation of \(\widehat{\hbox {HR}}\) presented in “Pseudo-ground-truth image estimation based on video registration” section produces images with fine details and a high signal-to-noise ratio. Our simulation framework uses these \(\widehat{\hbox {HR}}\) and produces simulated LR images with a perfect alignment.

The proposed simulation framework relies on observed irregular fibre arrangements and corresponding Voronoi diagrams. Each fibre signal was extracted from an \(\widehat{\hbox {HR}}\) image, by averaging the \(\widehat{\hbox {HR}}\) pixel values within the corresponding Voronoi cell.

To replicate realistic noise patterns on the simulated LR images, additive and multiplicative Gaussian noise (a and m respectively) is added to the extracted fibre signals fs to obtain a noisy fibre signal nfs as: \({ nfs} =(1+m).*{ fs} + a \). The standard deviation of the noise distributions was tuned based on visual similarity between \(\hbox {LR}_{\mathrm{org}}\) and \(\hbox {LR}_{\mathrm{syn}}\) and between their histograms. Sigma values were 0.05 and 0.01 * \((\max \ { fs} -\min \ { fs})\) for multiplicative and additive Gaussian distribution, respectively. In the last step, Delaunay-based linear interpolation was performed thereby leading to our final simulated LR images.

LR and \(\widehat{\hbox {HR}}\) images were combined into two datasets: 1. Original pCLE (\(\hbox {pCLE}_{\mathrm{org}}\)) built with pairs of \(\hbox {LR}_{\mathrm{org}}\) taken from sequences of Smart Atlas database and \(\widehat{\hbox {HR}}\) images, and 2. synthetic pCLE (\(\hbox {pCLE}_{\mathrm{syn}}\)) built by replacing the \(\hbox {LR}_{\mathrm{org}}\) images with \(\hbox {LR}_{\mathrm{syn}}\) images.

Implementation details

The datasets were pre-processed in three steps. First, intensity values were normalised: \(\hbox {LR} = \hbox {LR} - \hbox {mean}_{\mathrm{lr}}/\hbox {std}_{\mathrm{lr}}\) and \(\hbox {HR} = \hbox {HR} - \hbox {mean}_{\mathrm{lr}}/\hbox {std}_{\mathrm{lr}}\). Second, pixels values were scaled of every frame individually in the range [0–1]. Third, non-overlapping patches of \(64 \times 64\) pixels were extracted for the training phase, considering only pixels in the pCLE Field of View (FoV). A stochastic patch-based training was used for training the networks, with a minibatch of size 54 patches to fit into the GPU memory (12 GB).

Models were trained with patches from the training set. The patches from the validation set were used to monitor the loss during training with the purpose to avoid overfitting. Since all the considered networks are fully convolutional, the test images were processed full size and no patch processing is required during the inference phase.

Three CNNs networks for SR were used: sparse-coding-based FSRCNN [3], residual-based EDSR [8], and generative adversarial network SRGAN [7]. Every model was trained with the two datasets presented in “Generation of realistic synthetic pCLE data” section.

MSE is the most commonly used loss function for SR. Zhao et al. [16] showed that MSE has two limitations: it does not converge to the global minimum and produces blocky artefacts. In addition to demonstrating that L1 loss outperforms L2, the authors also introduced a new loss function SSIM \(+\) L1 by incorporating the Structural Similarity (SSIM) [15]. FSRCNN and EDSR were trained considering independently both L1 and SSIM \(+\) L1 to investigate their applicability for our data based on a quantitative comparison.

Results

Acknowledging the lack of proper ground truth for super-resolution of pCLE and the ambiguous nature of established IQA metrics, a three-stage approach was designed for the evaluation of the proposed method using the three SR architectures considered in “Materials and methods” section.

The first stage, presented in “Experiments on synthetic data” section and relying on the quantitative assessment, demonstrates the applicability of EBSR for pCLE in the ideal synthetic case where ground-truth is available. In this quantitative stage, the inadequacy of the existing video-registration-based high-resolution images as a ground truth for EBSR training purpose is demonstrated.

The second stage, presented in “Experiments on original data” section, focuses on the quantitative assessment of our methods in the context of real input images and on the evaluation of our best model against other state-of-the-art SISR methods.

In the third stage, performed to overcome the limitations of the quantitative assessment, a MOS study was carried out by recruiting nine independent experts, having 1–10 years of experience working with pCLE images.

Quantitative analysis

For the quantitative analysis, the SR images were examined exploiting two complementary metrics: (i) SSIM to evaluate the similarity between the SR image and the \(\widehat{\hbox {HR}}\), and (ii) Global Contrast Factor (GCF) [9] as a reference-free metric for measuring image contrast which is one of the key characteristic of image quality in our context. Analysing both SSIM and GCF in combination leads to a more robust evaluation. SSIM alone cannot be depended on when the reference image is unreliable, while improvements in GCF alone can be achieved deceitfully for example by adding a large amount of noise.

Using these metrics, six scores for each SR method were extracted: mean and standard deviation of (i) SSIM between SR and \(\widehat{\hbox {HR}}\), (ii) GCF differences between SR and LR and (iii) GCF differences between SR and the \(\widehat{\hbox {HR}}\). Finally, to determine which approach performs better, a composite score \(\hbox {Tot}_{\mathrm{cs}}\) obtained by averaging the normalised value of SSIM with the normalised GCF difference between SR and LR was defined. Both factors are re-scaled to the range [0–1]. In our quantitative assessment, the score obtained by the initial \(\hbox {LR}_{\mathrm{org}}\) was considered as baseline reference.

Experiments on synthetic data

In the first experiment, synthetic data are used to demonstrate that our models work in the ideal situation where ground truth is available. The first section of Table 1 shows the scores obtained when the SR models are trained on \(\hbox {pCLE}_{\mathrm{syn}}\) and tested on \(\hbox {LR}_{\mathrm{syn}}\). Here, it is evident that the EDSR and FSRCNN trained with SSIM \(+\) L1 obtain a substantial improvement on the different quality factors with respect to the LR image. More specifically, in comparison with the initial LR image, the SSIM was increased by + 0.06 when EDSR is used and by + 0.05 when FSRCNN is used. These approaches also yield a GCF value that is very close to the GCF in \(\widehat{\hbox {HR}}\) and an improvement of + 0.32 and + 0.36 in the GCF with respect to LR images. Statistical significance of these improvements was assessed with a paired t test (p value less than 0.0001). From this experiment, it is possible to conclude that the proposed solution is capable of performing SR reconstruction when the models are trained on synthetic data with no domain gap at test time.

Example of SR images obtained when \(\hbox {pCLE}_{\mathrm{syn}}\) and \(\hbox {pCLE}_{\mathrm{org}}\) are used for train and test. From top to the bottom, the images in the middle represent the SR image obtained when: (i) \(\hbox {pCLE}_{\mathrm{syn}}\) are used for train and test, (ii) \(\hbox {pCLE}_{\mathrm{syn}}\) are used for train, and the \(\hbox {pCLE}_{\mathrm{org}}\) are used for test, and (iii) \(\hbox {pCLE}_{\mathrm{org}}\) are used for train and test

Experiments on original data

When real images are considered, the same conclusions cannot be reached. The results obtained by training on \(\hbox {pCLE}_{\mathrm{org}}\) and testing on \(\hbox {LR}_{\mathrm{org}}\) are reported in the second section of Table 1, and here it is evident that all the different quality factors decrease. The best approach is the FSRCNN trained using SSIM \(+\) L1 as loss function. With respect to the previous case this approach loses 0.04 on the SSIM, and 0.12 on the \(\Delta \) GCF with LR. This leads to a final reduction of 0.14 for the \(\hbox {Tot}_{\mathrm{cs}}\) score. In this scenario, the deterioration of SSIM and GCF compared to the previous synthetic case can be due to the use of inadequate \(\widehat{\hbox {HR}}\) images during the training (i.e. misalignment during the fusion, lack of compensation for motion deformations, etc.). Better results are instead obtained when the SR models performed on \(\hbox {LR}_{\mathrm{org}}\) images are trained using the \(\hbox {pCLE}_{\mathrm{syn}}\) (last section of Table 1). Here, the quality factors increased when compared to the previous case, although they do not overcome the results obtained when the approach is trained and tested on synthetic data. EDSR, in particular, has a \(\hbox {Tot}_{\mathrm{cs}}\) score of 0.65 that is 0.08 better than the best approach trained on \(\hbox {pCLE}_{\mathrm{org}}\) (the second section of Table 1) and 0.06 worse than the best approach trained and tested on \(\hbox {pCLE}_{\mathrm{syn}}\) (first section of Table 1). The GCF obtained here are in general much better when compared to the previous two cases. An example of the visual results from the different training modalities is shown in Fig. 2. In conclusion, our findings suggest that existing video-registration-based approaches are inadequate to serve as a ground truth for HR images, while EBSR approaches, such as the EDSR and FSRCNN, when trained on synthetic data, can produce SR images that enhance the quality of the LR images.

Due to our conclusions, the MOS study was performed using images obtained from the models trained only with synthetic data.

To further validate our methodology, in Table 2, the results obtained by the best model of our approach (EDSR trained on synthetic data with SSIM \(+\) L1 as loss function) were compared against other state-of-the-art SISR methodologies. Specifically, in this experiment a Wiener deconvolution, a variational Bayesian inference approach with sparse and non-sparse priors [14], the SRGAN and EDSR networks pretrained on natural images were considered. The Wiener deconvolution was assumed to have a Gaussian point-spread function with the parameter \(\sigma =2\) estimated experimentally from the training set. Finally, the last column of Table 2 includes the results of a contrast-enhancement approach obtained by sharpening the input with parameters similarly tuned on the trained set. Although our approach is not consistently outperforming the other on each individual quality score, when the combined score \(\hbox {Tot}_{\mathrm{cs}}\) is considered, our method outperforms the others by a large margin.

Semi-quantitative analysis (MOS)

To perform the MOS, nine independent experts were asked to evaluate 46 images each. Full-size \(\hbox {LR}_{\mathrm{org}}\) were selected randomly from test set of \(\hbox {pCLE}_{\mathrm{org}}\), and used to generate SR reconstructions. At each step, the SR images obtained by the three different methods (SRGAN, FSRCNN and EDSR) trained on synthetic data and a contrast-enhancement obtained by sharpening the input (used as a baseline) are shown to the user, in a randomly shuffled order. The input and the \(\widehat{\hbox {HR}}\) are also displayed on the screen as references for the participants. For each of the four images, the user assigns a score between 1 (strongly disagree) to 5 (strongly agree) on three different questions:

-

Q1: Are there any artefacts/noise in the image?

-

Q2: Can you see an improvement in contrast with respect to the input?

-

Q3: Can you see an improvement in the details with respect to the input?

To make sure that the questions were correctly interpreted, each participant received a short training before starting the study. The results on the MOS are shown in Fig. 3. EDSR is the approach that achieves the best performance on Q2 and Q3. Instead based on Q1, both FRSCNN and EDSR do not introduce a significant amount of artefact or noise. The results of the MOS give us one more indication, which our training methodology allows improvements on the quality of the pCLE images. In Fig. 4 is shown a few examples of the obtained SR images using our proposed methodology.

Discussion and conclusions

This work addresses the challenge of super-resolution for pCLE images. This is the first work to evaluate the potential of deep learning and exemplar-based super-resolution in pCLE context.

The main contribution of this work is to overcome the challenge of lack of ground-truth data. A novel methodology to produce pseudo-ground-truth exploiting an existing video registration method, and simulating realistic LR image based on physical model of pCLE acquisition is proposed.

The conclusions are that synthetic pCLE data can be used to train CNNs while applying them to real scenario data because of a physically inspired simulation process that reduces the domain gap between real and simulated images.

The robust IQA test based on the Structural Similarity (SSIM) and global contrast factor (GCF) score confirmed the improvement of obtained results in respects to the input image. An analysis of perceptual quality of images with a Mean Opinion Score (MOS) study recruiting nine independent pCLE experts showed that SR models give clinically interesting results. Experts perceived an improvement in the quality of the reconstructed images with respect to the input image without noting a significant increase in the amount of noise and artefacts. The quantitative and semi-quantitative user perception analysis provided consistent conclusions.

Providing a better quality of pCLE images might improve the decision process during the endoscopic examination. Further evaluation will focus on the temporal consistency of the super-resolution and will rely on histopathological confirmation to validate the authenticity of the generated details.

References

Agustsson E, Timofte R (2017) NTIRE 2017 challenge on single image super-resolution: dataset and study. In: 2017 IEEE conference on computer vision and pattern recognition workshops (CVPRW), pp 1122–1131. https://doi.org/10.1109/CVPRW.2017.150

André B, Vercauteren T, Buchner AM, Wallace MB, Ayache N (2011) A smart atlas for endomicroscopy using automated video retrieval. Med Image Anal 15(4):460–476

Dong C, Loy CC, Tang X (2016) Accelerating the super-resolution convolutional neural network. In: European conference on computer vision, Springer, pp 391–407

Fugazza A, Gaiani F, Carra MC, Brunetti F, Lévy M, Sobhani I, Azoulay D, Catena F, de’Angelis GL, de’Angelis N (2016) Confocal laser endomicroscopy in gastrointestinal and pancreatobiliary diseases: a systematic review and meta-analysis. BioMed Res Int 2016:1–31. https://doi.org/10.1155/2016/4638683

Kim J, Kwon Lee J, Mu Lee K (2016) Accurate image super-resolution using very deep convolutional networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 1646–1654

Le Goualher G, Perchant A, Genet M, Cavé C, Viellerobe B, Berier F, Abrat B, Ayache N (2004) Towards optical biopsies with an integrated fibered confocal fluorescence microscope. Med Image Comput Comput Assist Interv MICCAI 2004:761–768

Ledig C, Theis L, Huszar F, Caballero J, Cunningham A, Acosta A, Aitken A, Tejani A, Totz J, Wang Z et al. (2017) Photo-realistic single image super-resolution using a generative adversarial network. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 4681–4690

Lim B, Son S, Kim H, Nah S, Lee KM (2017) Enhanced deep residual networks for single image super-resolution. In: The IEEE conference on computer vision and pattern recognition (CVPR) workshops

Matkovic K, Neumann L, Neumann A, Psik T, Purgathofer W (2005) Global contrast factor—a new approach to image contrast. Comput Aesthet 2005:159–168

Park SC, Park MK, Kang MG (2003) Super-resolution image reconstruction: a technical overview. IEEE Signal Process Mag 20(3):21–36

Ravì D, Wong C, Deligianni F, Berthelot M, Andreu-Perez J, Lo B, Yang GZ (2017) Deep learning for health informatics. IEEE J Biomed Health Inf 21(1):4–21

Tanno R, Ghosh A, Grussu F, Kaden E, Criminisi A, Alexander DC (2017) Bayesian image quality transfer. In: International conference on medical image computing and computer-assisted intervention, Springer, pp 265–273

Vercauteren T, Perchant A, Malandain G, Pennec X, Ayache N (2006) Robust mosaicing with correction of motion distortions and tissue deformations for in vivo fibered microscopy. Med Image Anal 10(5):673–692

Villena S, Vega M, Babacan SD, Molina R, Katsaggelos AK (2013) Bayesian combination of sparse and non-sparse priors in image super resolution. Digit Signal Process 23(2):530–541

Wang Z, Bovik AC, Sheikh HR, Simoncelli EP (2004) Image quality assessment: from error visibility to structural similarity. IEEE Trans Image Process 13(4):600–612

Zhao H, Gallo O, Frosio I, Kautz J (2015) Loss functions for image restoration with neural networks. IEEE Trans Comput Imag 3(1):47–57. https://doi.org/10.1109/TCI.2016.2644865

Acknowledgements

The authors would like to thank the High Dimensional Neurology group, Institute of Neurology, UCL for provide computational support. The authors would like to thank the independent experts at Mauna Kea Technologies for participating to the MOS survey.

Funding This work was supported by Wellcome/EPSRC [203145Z/16/Z; NS/A000050/1; WT101957; NS/A000027/1; EP/N027078/1]. This work was undertaken at UCL and UCLH, which receive a proportion of funding from the DoH NIHR UCLH BRC funding scheme. The PhD studentship of Agnieszka Barbara Szczotka is funded by Mauna Kea Technologies, Paris, France.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The PhD studentship of Agnieszka Barbara Szczotka is funded by Mauna Kea Technologies, Paris, France. Tom Vercauteren owns stock in Mauna Kea Technologies, Paris, France. The other authors declare no conflict of interest.

Ethical approval

All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki declaration and its later amendments or comparable ethical standards.

Informed consent

For this type of study, formal consent is not required. This article does not contain patient data.

Electronic supplementary material

Below is the link to the electronic supplementary material.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Ravì, D., Szczotka, A.B., Shakir, D.I. et al. Effective deep learning training for single-image super-resolution in endomicroscopy exploiting video-registration-based reconstruction. Int J CARS 13, 917–924 (2018). https://doi.org/10.1007/s11548-018-1764-0

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11548-018-1764-0