Abstract

Purpose

To systematically review the use of artificial intelligence (AI) in musculoskeletal (MSK) ultrasound (US) with an emphasis on AI algorithm categories and validation strategies.

Material and Methods

An electronic literature search was conducted for articles published up to January 2024. Inclusion criteria were the use of AI in MSK US, involvement of humans, English language, and ethics committee approval.

Results

Out of 269 identified papers, 16 studies published between 2020 and 2023 were included. The research was aimed at predicting diagnosis and/or segmentation in a total of 11 (69%) out of 16 studies. A total of 11 (69%) studies used deep learning (DL)-based algorithms, three (19%) studies employed conventional machine learning (ML)-based algorithms, and two (12%) studies employed both conventional ML- and DL-based algorithms. Six (38%) studies used cross-validation techniques with K-fold cross-validation being the most frequently employed (n = 4, 25%). Clinical validation with separate internal test datasets was reported in nine (56%) papers. No external clinical validation was reported.

Conclusion

AI is a topic of increasing interest in MSK US research. In future studies, attention should be paid to the use of validation strategies, particularly regarding independent clinical validation performed on external datasets.

Introduction

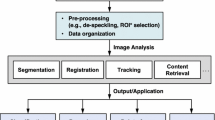

In recent years, medical imaging has been transformed by advances in artificial intelligence (AI) and machine learning (ML) technologies [1,2,3]. The term artificial intelligence refers to the scientific domain focused on enabling machines to execute tasks that typically rely on human intelligence [4]. Within AI, conventional machine learning is a discipline in which algorithms are trained on established datasets, allowing machines to learn. These trained algorithms subsequently apply the acquired knowledge to conduct diagnostic analyses on unfamiliar datasets [5]. Deep learning, another subset of AI, is loosely modeled on the neural structure of the human brain. It employs artificial neural networks comprising multiple hidden layers to tackle intricate problems. These hidden layers allow machines to continuously assimilate new information, enhancing their proficiency over time [6].

Among various medical imaging modalities, musculoskeletal (MSK) ultrasound (US) has gained increasing attention as a valuable diagnostic tool for assessing a wide range of disorders [7]. MSK US, with its non-invasive, radiation-free, and real-time imaging capabilities, is a valid solution for diagnosing and monitoring conditions such as tendon injuries, ligament tears, arthritis, and soft tissue abnormalities [8,9,10]. The application of AI in MSK US addresses some of the inherent challenges associated with conventional US imaging, including operator-dependent variability, image interpretation subjectivity, and time-consuming data analysis [11]. By leveraging AI algorithms, it is possible to automate the detection and characterization of MSK abnormalities, reducing the potential for human error and facilitating faster and more accurate diagnoses. Moreover, AI can aid in improving the standardization of image acquisition protocols and optimizing the overall workflow in MSK US examinations [12, 13].

To date, preliminary AI studies employing conventional ML or DL approaches have been applied to MSK US to improve diagnosis and outcome [14,15,16]. One issue is the validation of both conventional ML and DL approaches, which is crucial to ensure their generalizability [17]. The purpose of this systematic review was to assess the current state of research and development regarding AI integration in MSK US. We systematically reviewed and synthesized findings from a wide range of studies to evaluate the methodology, with emphasis on AI algorithm categories and validation strategies. We also discussed potential challenges, limitations, and future prospects of AI in MSK US, aiming to create awareness of important key topics when designing and executing future research related to AI in MSK US. Finally, this systematic review seeks to contribute to the growing body of evidence supporting the use of AI in MSK US, ultimately improving patient care, enhancing diagnostic capabilities, and advancing the field of MSK medicine.

Material and methods

Literature search

Local ethics committee approval was not needed because of the nature of the study, which was a systematic review.

An electronic literature search was conducted on the PubMed and Medline databases for articles published up to January 19, 2024. The search query was performed using the following keywords and their expansions: (“MSK” OR “musculoskeletal”) AND (“machine learning” OR “machine learning-based” OR “learning” OR “artificial intelligence” OR “artificial intelligence-based” OR “deep learning” OR “deep” OR “neural network”) AND (“ultrasound” OR “US”).
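The Boolean structure of this search string can be sketched in a few lines of Python. This is only an illustration of how the three keyword groups combine. The function and variable names are hypothetical, and any database-specific syntax (field tags, automatic term expansion) is not reproduced because it is not specified above.

```python
# Keyword groups copied from the search strategy described above.
ANATOMY = ["MSK", "musculoskeletal"]
AI_TERMS = [
    "machine learning", "machine learning-based", "learning",
    "artificial intelligence", "artificial intelligence-based",
    "deep learning", "deep", "neural network",
]
MODALITY = ["ultrasound", "US"]


def or_group(terms):
    """Join quoted terms with OR and wrap the group in parentheses."""
    return "(" + " OR ".join(f'"{t}"' for t in terms) + ")"


def build_query(*groups):
    """Combine the OR-groups with AND, mirroring the search string."""
    return " AND ".join(or_group(g) for g in groups)


query = build_query(ANATOMY, AI_TERMS, MODALITY)
print(query)
```

Keeping the groups as separate lists makes it easy to see that a record must match at least one term from each of the three groups.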

Studies were first screened by title and abstract, and then, the full text of eligible studies was retrieved for further review. The references of identified publications were checked for additional publications to include. The literature search and study selection were performed by one reviewer (blinded for review) and double-checked by a second reviewer (blinded for review). The Preferred Reporting Items for Systematic reviews and Meta-Analyses (PRISMA) guidelines [18] were followed.

Inclusion and exclusion criteria

The inclusion criteria were (i) the use of AI in MSK US; (ii) involvement of human participants; (iii) English language; (iv) statement that approval from the local ethics committee and informed consent from each patient or a waiver for it was obtained.

The exclusion criteria were (i) studies reporting insufficient data for outcomes (e.g., details on AI algorithm and/or validation strategies not described); (ii) case reports and series, narrative reviews, guidelines, consensus statements, editorials, letters, comments, or conference abstracts.

Data extraction

Data were extracted to a spreadsheet with a drop-down list for each item, grouped into three main categories, namely baseline study characteristics; AI algorithm categories; and validation strategies. Items regarding baseline study characteristics included first author’s last name, year of publication, study aim, evaluated structure, study design, sample size, and reference standard. Those concerning the AI algorithm included the use of conventional ML or DL-based algorithms. Data regarding validation strategies included the use of cross-validation techniques, clinical validation performed on a separate internal test dataset, and clinical validation performed on an external or independent test dataset.
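As an illustration, one row of such an extraction sheet could be modeled as follows. This is a minimal sketch, not the spreadsheet actually used: the class and field names are assumptions, the enumerations stand in for the drop-down lists, and the example row is a placeholder that does not correspond to any included study.

```python
from dataclasses import dataclass
from enum import Enum


class Algorithm(Enum):
    CONVENTIONAL_ML = "conventional ML"
    DL = "DL"
    BOTH = "conventional ML and DL"


class CrossValidation(Enum):
    NONE = "none reported"
    K_FOLD = "K-fold"
    LEAVE_ONE_OUT = "leave-one-out"


@dataclass
class ExtractionRecord:
    # Baseline study characteristics
    first_author: str
    year: int
    study_aim: str
    evaluated_structure: str
    study_design: str
    sample_size: int
    reference_standard: str
    # AI algorithm category
    algorithm: Algorithm
    # Validation strategies
    cross_validation: CrossValidation
    internal_test: bool
    external_test: bool


# Placeholder row for illustration only, not taken from any included study.
example = ExtractionRecord(
    first_author="Placeholder", year=2022, study_aim="segmentation",
    evaluated_structure="shoulder", study_design="prospective",
    sample_size=100, reference_standard="manual annotation",
    algorithm=Algorithm.DL, cross_validation=CrossValidation.K_FOLD,
    internal_test=True, external_test=False,
)
print(example.algorithm.value, example.cross_validation.value)
```

Restricting the algorithm and cross-validation fields to fixed options plays the same role as a drop-down list: it prevents free-text variants of the same category.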

Results

Baseline study characteristics

A flowchart illustrating the literature search process is presented in Fig. 1. After screening 269 papers and applying the eligibility criteria, 16 studies were included in this systematic review. Table 1 details the baseline study characteristics of the included studies.

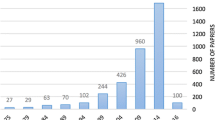

All studies were published between 2020 and 2023. Two (12%) out of 16 investigations were published in 2023, six (38%) in 2022, four (25%) in 2021, and four (25%) in 2020. The design was prospective in 10 (62%) studies and retrospective in the remaining six (38%) studies. The median sample size was 151 patients (range 6–3,801).

The research was focused on diagnosis or grading of pathologies in seven (44%) studies and segmentation of structures in four (25%) studies. Less frequently, research was aimed at the prediction of total knee replacement outcome [34], generation of synthetic US images [21], estimation of absolute states of skeletal muscle [22], assessment of muscle fascicle lengths [32], and measurement of muscle thickness [33], as detailed in Table 1. Regarding the anatomic structures investigated, eight (50%) papers focused on the upper extremity, with the shoulder being the most frequently investigated structure (n = 3, 19%). Seven (44%) papers focused on the lower extremity, with the lower leg being the most frequently investigated structure (n = 4, 25%).

The most frequently employed reference standard was manual annotation (n = 7, 44%), followed by expert opinion/consensus (n = 4, 25%). In the remaining studies, electromyography [22], nerve conduction studies [30], an automated muscle fascicle tracking software [32], and clinical follow-up information [34] were used as reference standards. In one study [35], the reference standard was not specified.

AI algorithms and validation strategies

A total of 11 (69%) studies used DL-based algorithms, three (19%) studies employed conventional ML-based algorithms, and two (12%) studies employed both DL and conventional ML algorithms, as detailed in Table 2.

Regarding validation strategies, a total of six (38%) studies provided details on cross-validation techniques, with K-fold cross-validation being the most frequently used (n = 4, 25%). Two (12%) studies used the leave-one-out cross-validation technique. A clinical validation was reported in nine (56%) papers. In all cases, the clinical validation was performed on a separate set of data from the primary institution, i.e., an internal test dataset. No cases of external or independent validation were reported in the papers included in this systematic review. Details on cross-validation and clinical validation strategies employed in the included studies are provided in Table 2.
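For readers less familiar with the two cross-validation techniques named above, the following sketch shows how the data are partitioned in each. It is a generic illustration and not the implementation used in any included study. The function names are hypothetical, and shuffling and patient-level grouping are omitted for brevity.

```python
def k_fold_indices(n_samples, k):
    """Yield (train, validation) index lists for K-fold cross-validation."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    # Distribute the remainder so that fold sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0)
                  for i in range(k)]
    start = 0
    for size in fold_sizes:
        validation = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, validation
        start += size


def leave_one_out_indices(n_samples):
    """Leave-one-out is K-fold with k equal to the number of samples."""
    return k_fold_indices(n_samples, n_samples)


folds = list(k_fold_indices(10, 5))
for train, validation in folds:
    print(len(train), validation)
```

Each sample serves as validation data exactly once. With leave-one-out, every fold holds out a single sample, which uses the data most fully but requires as many training runs as there are samples.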

Discussion

This systematic review focused on the use of AI in MSK US with emphasis on categories of AI algorithms and validation strategies. Most included studies employed DL-based algorithms, either alone or combined with conventional ML approaches. Clinical validation with internal test datasets was frequently used. However, no cases of external validation were reported.

AI has gained increasing attention in medicine, and particularly in radiology, over the past few years [1]. The same is true for MSK imaging as a radiological sub-specialty. The number of papers that focused on AI in MSK US has increased over the years, and half of those included in this review have been published since 2022. Compared to the total literature published on AI in MSK imaging, however, the papers focusing on US imaging are a minority. The authors believe that this might be explained by two reasons: (i) US imaging is less popular than alternative imaging methods for diagnosing MSK pathologies in many centers and (ii) US imaging is more operator dependent than other modalities, which makes it more difficult to standardize and successfully train AI models for the purpose of diagnosis and/or grading of pathologies [11].

Fields of application of AI in MSK US are diverse. Most studies in this systematic review focused on clinical questions related to diagnosis and/or grading of MSK pathologies. They used a prospective study design in the majority of cases, which offers advantages in controlling data gathering and matching patient and/or imaging characteristics. Retrospective study designs, which were used to a lesser extent, allowed inclusion of a larger number of patients with previously acquired imaging data. Public databases were not used in the papers included in this review and should be considered in future research studies to validate AI approaches against independent data.

The AI algorithms most frequently encountered were based on DL. Compared with conventional ML, DL can automatically filter features to improve recognition performance based on multi-layer models [36]. However, a large number of labeled training samples is required to achieve excellent learning performance [37]. This requirement can be difficult to meet in US imaging, where expert annotation is time-consuming and datasets often contain a limited number of cases.

Validation of AI performance is important. When dealing with limited datasets, resampling strategies such as cross-validation prove beneficial. They aim to curb overfitting and to give a more reliable estimate of the model's performance on new data [38]. Among the studies reviewed, K-fold cross-validation was the most frequently utilized technique for this purpose. At the same time, a clinical validation against a separate set of data is desirable to test the AI model and ensure its applicability to unseen cases. Clinical validation with internal test datasets was performed in the majority of papers. However, none of the studies conducted clinical validation using entirely separate datasets from different institutions. Hence, for future studies, it would be crucial to expand beyond a single institution and incorporate external testing of the model with substantial and independent datasets. This approach would greatly enhance the robustness and reliability of the findings.
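The distinction between internal and external test datasets can be made concrete with a short sketch. It assumes a hypothetical record layout of (patient ID, institution) pairs and toy site names "A" and "B". The function name, the 20% test fraction, and the fixed seed are illustrative choices, not recommendations drawn from the included studies.

```python
import random


def split_by_institution(records, primary, test_fraction=0.2, seed=0):
    """Split records into development, internal test, and external test sets.

    records: list of (patient_id, institution) pairs (hypothetical layout).
    primary: institution whose data are used for model development.
    """
    internal_patients = sorted({pid for pid, inst in records if inst == primary})
    rng = random.Random(seed)
    rng.shuffle(internal_patients)
    n_test = max(1, round(len(internal_patients) * test_fraction))
    internal_test_ids = set(internal_patients[:n_test])

    development, internal_test, external_test = [], [], []
    for pid, inst in records:
        if inst != primary:
            external_test.append((pid, inst))   # different institution
        elif pid in internal_test_ids:
            internal_test.append((pid, inst))   # same institution, held out
        else:
            development.append((pid, inst))     # training and cross-validation
    return development, internal_test, external_test


# Toy data: ten patients at site "A" and three at site "B".
records = [(f"A{i}", "A") for i in range(10)] + [(f"B{i}", "B") for i in range(3)]
dev, internal, external = split_by_institution(records, primary="A")
print(len(dev), len(internal), len(external))
```

Splitting at the patient level, rather than the image level, keeps all images from one patient on the same side of the split. Cross-validation would then be run within the development set only, leaving both test sets untouched until the final evaluation.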

Limitations

This study represents a systematic review of the literature. Due to the limited number of papers dealing with AI in MSK US published over the past few years and their heterogeneity with regard to different categories analyzed and metrics employed, it was not possible to perform a meta-analysis with more solid statistical tests. Furthermore, the review did not include a formal evaluation of the quality of each study that was included. Our emphasis was on presenting methodological data that serve as quality indicators on their own.

Conclusion

AI is a topic of increasing interest in MSK US research, as reflected by the growing number of publications each year. Regarding the methodology of such studies, attention should be paid to the use of accurate reproducibility and validation strategies in order to assure high-quality algorithms and outcomes.

Abbreviations

- AI: Artificial intelligence
- DL: Deep learning
- ML: Machine learning
- MSK: Musculoskeletal
- US: Ultrasound

References

Najjar R (2023) Redefining radiology: a review of artificial intelligence integration in medical imaging. Diagnostics (Basel) 13:2760. https://doi.org/10.3390/diagnostics13172760

Gitto S, Cuocolo R, Albano D, Morelli F, Pescatori LC, Messina C, Imbriaco M, Sconfienza LM (2021) CT and MRI radiomics of bone and soft-tissue sarcomas: a systematic review of reproducibility and validation strategies. Insights Imaging 12:68. https://doi.org/10.1186/s13244-021-01008-3

Gitto S, Cuocolo R, Huisman M, Messina C, Albano D, Omoumi P, Kotter E, Maas M, Van Ooijen P, Sconfienza LM (2024) CT and MRI radiomics of bone and soft-tissue sarcomas: an updated systematic review of reproducibility and validation strategies. Insights Imaging 15:54. https://doi.org/10.1186/s13244-024-01614-x

Wagner MW, Namdar K, Biswas A, Monah S, Khalvati F, Ertl-Wagner BB (2021) Radiomics, machine learning, and artificial intelligence-what the neuroradiologist needs to know. Neuroradiology 63:1957–1967. https://doi.org/10.1007/s00234-021-02813-9

Erickson BJ, Korfiatis P, Akkus Z, Kline TL (2017) Machine learning for medical imaging. Radiographics 37:505–515. https://doi.org/10.1148/rg.2017160130

McBee MP, Awan OA, Colucci AT, Ghobadi CW, Kadom N, Kansagra AP, Tridandapani S, Auffermann WF (2018) Deep learning in radiology. Acad Radiol 25:1472–1480. https://doi.org/10.1016/j.acra.2018.02.018

Patil P, Dasgupta B (2012) Role of diagnostic ultrasound in the assessment of musculoskeletal diseases. Ther Adv Musculoskelet Dis 4:341–355. https://doi.org/10.1177/1759720X12442112

Henderson REA, Walker BF, Young KJ (2015) The accuracy of diagnostic ultrasound imaging for musculoskeletal soft tissue pathology of the extremities: a comprehensive review of the literature. Chiropr Man Therap 23:31. https://doi.org/10.1186/s12998-015-0076-5

Albano D, Basile M, Gitto S, Messina C, Longo S, Fusco S, Snoj Z, Gianola S, Bargeri S, Castellini G, Sconfienza LM (2024) Shear-wave elastography for the evaluation of tendinopathies: a systematic review and meta-analysis. Radiol Med 129:107–117. https://doi.org/10.1007/s11547-023-01732-4

Gitto S, Albano D, Serpi F, Spadafora P, Colombo R, Messina C, Aliprandi A, Sconfienza LM (2024) Diagnostic performance of high-resolution ultrasound in the evaluation of intrinsic and extrinsic wrist ligaments after trauma. Ultraschall Med 45:54–60. https://doi.org/10.1055/a-2066-9230

Tenajas R, Miraut D, Illana CI, Alonso-Gonzalez R, Arias-Valcayo F, Herraiz JL (2023) Recent advances in artificial intelligence-assisted ultrasound scanning. Appl Sci (Basel) 13:3693. https://doi.org/10.3390/app13063693

Akkus Z, Cai J, Boonrod A, Zeinoddini A, Weston AD, Philbrick KA, Erickson BJ (2019) A survey of deep-learning applications in ultrasound: artificial intelligence-powered ultrasound for improving clinical workflow. J Am Coll Radiol 16:1318–1328. https://doi.org/10.1016/j.jacr.2019.06.004

Yi J, Kang HK, Kwon JH, Kim KS, Park MH, Seong YK, Kim DW, Ahn B, Ha K, Lee J, Hah Z, Bang WC (2021) Technology trends and applications of deep learning in ultrasonography: image quality enhancement, diagnostic support, and improving workflow efficiency. Ultrasonography 40:7–22. https://doi.org/10.14366/usg.20102

Shin Y, Yang J, Lee YH, Kim S (2021) Artificial intelligence in musculoskeletal ultrasound imaging. Ultrasonography 40:30–44. https://doi.org/10.14366/usg.20080

Gitto S, Serpi F, Albano D, Risoleo G, Fusco S, Messina C, Sconfienza LM (2024) AI applications in musculoskeletal imaging: a narrative review. Eur Radiol Exp 8:22. https://doi.org/10.1186/s41747-024-00422-8

Getzmann JM, Kaniewska M, Rothenfluh E, Borowka S, Guggenberger R (2021) Comparison of AI-powered 3D automated ultrasound tomography with standard handheld ultrasound for the visualization of the hands-clinical proof of concept. Skeletal Radiol 51:1415–1423. https://doi.org/10.1007/s00256-021-03984-5

Maleki F, Ovens K, Gupta R, Reinhold C, Spatz A, Forghani R (2022) Generalizability of machine learning models: quantitative evaluation of three methodological pitfalls. Radiol Artif Intell 5:e220028. https://doi.org/10.1148/ryai.220028

Page MJ, McKenzie JE, Bossuyt PM, Boutron I, Hoffmann TC, Mulrow CD, Shamseer L, Tetzlaff JM, Akl EA, Brennan SE, Chou R, Glanville J, Grimshaw JM, Hróbjartsson A, Lalu MM, Li T, Loder EW, Mayo-Wilson E, McDonald S, McGuinness LA, Stewart LA, Thomas J, Tricco AC, Welch VA, Whiting P, Moher D (2021) The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ 372:n71. https://doi.org/10.1136/bmj.n71

Cheng Y, Jin Z, Zhou X, Zhang W, Zhao D, Tao C, Yuan J (2022) Diagnosis of metacarpophalangeal synovitis with musculoskeletal ultrasound images. Ultrasound Med Biol 48:488–496. https://doi.org/10.1016/j.ultrasmedbio.2021.11.003

Chiu PH, Boudier-Revéret M, Chang SW, Wu CH, Chen WS, Özçakar L (2022) Deep learning for detecting supraspinatus calcific tendinopathy on ultrasound images. J Med Ultrasound 30:196–202. https://doi.org/10.4103/jmu.jmu_182_21

Cronin NJ, Finni T, Seynnes O (2020) Using deep learning to generate synthetic B-mode musculoskeletal ultrasound images. Comput Methods Programs Biomed 196:105583. https://doi.org/10.1016/j.cmpb.2020.105583

Cunningham RJ, Loram ID (2020) Estimation of absolute states of human skeletal muscle via standard B-mode ultrasound imaging and deep convolutional neural networks. J R Soc Interface 17:20190715. https://doi.org/10.1098/rsif.2019.0715

Di Cosmo M, Fiorentino MC, Villani FP, Frontoni E, Smerilli G, Filippucci E, Moccia S (2022) A deep learning approach to median nerve evaluation in ultrasound images of carpal tunnel inlet. Med Biol Eng Comput 60:3255–3264. https://doi.org/10.1007/s11517-022-02662-5

Smerilli G, Cipolletta E, Sartini G, Moscioni E, Di Cosmo M, Fiorentino MC, Moccia S, Frontoni E, Grassi W, Filippucci E (2022) Development of a convolutional neural network for the identification and the measurement of the median nerve on ultrasound images acquired at carpal tunnel level. Arthritis Res Ther 24:38. https://doi.org/10.1186/s13075-022-02729-6

Droppelmann G, Tello M, García N, Greene C, Jorquera C, Feijoo F (2022) Lateral elbow tendinopathy and artificial intelligence: binary and multilabel findings detection using machine learning algorithms. Front Med (Lausanne) 9:945698. https://doi.org/10.3389/fmed.2022.945698

Du Toit C, Orlando N, Papernick S, Dima R, Gyacskov I, Fenster A (2022) Automatic femoral articular cartilage segmentation using deep learning in three-dimensional ultrasound images of the knee. Osteoarthr Cartil Open 4:100290. https://doi.org/10.1016/j.ocarto.2022.100290

Lee SW, Ye HU, Lee KJ, Jang WY, Lee JH, Hwang SM, Heo YR (2021) Accuracy of new deep learning model-based segmentation and key-point multi-detection method for ultrasonographic developmental dysplasia of the hip (DDH) screening. Diagnostics (Basel) 11:1174. https://doi.org/10.3390/diagnostics11071174

Lin BS, Chen JL, Tu YH, Shih YX, Lin YC, Chi WL, Wu YC (2020) Using deep learning in ultrasound imaging of bicipital peritendinous effusion to grade inflammation severity. IEEE J Biomed Health Inform 24:1037–1045. https://doi.org/10.1109/JBHI.2020.2968815

Loram I, Siddique A, Sanchez MB, Harding P, Silverdale M, Kobylecki C, Cunningham R (2020) Objective analysis of neck muscle boundaries for cervical dystonia using ultrasound imaging and deep learning. IEEE J Biomed Health Inform 24:1016–1027. https://doi.org/10.1109/JBHI.2020.2964098

Lyu S, Zhang Y, Zhang M, Jiang M, Yu J, Zhu J, Zhang B (2023) Ultrasound-based radiomics in the diagnosis of carpal tunnel syndrome: The influence of regions of interest delineation method on mode. J Clin Ultrasound 51:498–506. https://doi.org/10.1002/jcu.23387

Marzola F, van Alfen N, Doorduin J, Meiburger KM (2021) Deep learning segmentation of transverse musculoskeletal ultrasound images for neuromuscular disease assessment. Comput Biol Med 135:104623. https://doi.org/10.1016/j.compbiomed.2021.104623

Rosa LG, Zia JS, Inan OT, Sawicki GS (2021) Machine learning to extract muscle fascicle length changes from dynamic ultrasound images in real-time. PLoS ONE 16:e0246611. https://doi.org/10.1371/journal.pone.0246611

Saleh A, Laradji IH, Lammie C, Vazquez D, Flavell CA, Azghadi MR (2021) A deep learning localization method for measuring abdominal muscle dimensions in ultrasound images. IEEE J Biomed Health Inform 25:3865–3873. https://doi.org/10.1109/JBHI.2021.3085019

Tiulpin A, Saarakkala S, Mathiessen A, Hammer HB, Furnes O, Nordsletten L, Englund M, Magnusson K (2022) Predicting total knee arthroplasty from ultrasonography using machine learning. Osteoarthr Cartil Open 4:100319. https://doi.org/10.1016/j.ocarto.2022.100319

Yu L, Li Y, Wang XF, Zhang ZQ (2023) Analysis of the value of artificial intelligence combined with musculoskeletal ultrasound in the differential diagnosis of pain rehabilitation of scapulohumeral periarthritis. Medicine (Baltimore) 102:e33125. https://doi.org/10.1097/MD.0000000000033125

Cai L, Gao J, Zhao D (2020) A review of the application of deep learning in medical image classification and segmentation. Ann Transl Med 8:713. https://doi.org/10.21037/atm.2020.02.44

Tajbakhsh N, Shin JY, Gurudu SR, Hurst RT, Kendall CB, Gotway MB, Liang J (2016) Convolutional neural networks for medical image analysis: full training or fine tuning? IEEE Trans Med Imaging 35:1299–1312. https://doi.org/10.1109/TMI.2016.2535302

Parmar C, Barry JD, Hosny A, Quackenbush J, Aerts HJWL (2018) Data analysis strategies in medical imaging. Clin Cancer Res 24:3492–3499. https://doi.org/10.1158/1078-0432.CCR-18-0385

Funding

Open access funding provided by Università degli Studi di Milano within the CRUI-CARE Agreement. This work was supported by the Gottfried und Julia Bangerter-Rhyner-Stiftung with a research grant obtained by J.M.G.

Author information

Contributions

All authors contributed to the study conception and design. Material preparation, data collection, and analysis were performed by J.M.G., G.Z., and S.G. The first draft of the manuscript was written by J.M.G. and G.Z. All authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Conflict of interests

C. Messina is a Junior Deputy Editor, D. Albano is a Scientific Editorial Board Member, and L.M. Sconfienza is a Consultant to the Editor of La Radiologia Medica. They have not taken part in the review or selection process of this article. The remaining authors declare that they have no competing interests related to this article.

Ethical approval

This is a systematic review of the literature. All studies included contain a statement that approval from the local ethics committee and informed consent from each patient or a waiver for it was obtained. The manuscript does not contain new clinical studies or patient data.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Getzmann, J.M., Zantonelli, G., Messina, C. et al. The use of artificial intelligence in musculoskeletal ultrasound: a systematic review of the literature. Radiol med (2024). https://doi.org/10.1007/s11547-024-01856-1

DOI: https://doi.org/10.1007/s11547-024-01856-1