Abstract

Maximum likelihood estimation is among the most widely-used methods for inferring phylogenetic trees from sequence data. This paper solves the problem of computing solutions to the maximum likelihood problem for 3-leaf trees under the 2-state symmetric mutation model (CFN model). Our main result is a closed-form solution to the maximum likelihood problem for unrooted 3-leaf trees, given generic data; this result characterizes all of the ways that a maximum likelihood estimate can fail to exist for generic data and provides theoretical validation for predictions made in Parks and Goldman (Syst Biol 63(5):798–811, 2014). Our proof makes use of both classical tools for studying group-based phylogenetic models such as Hadamard conjugation and reparameterization in terms of Fourier coordinates, as well as more recent results concerning the semi-algebraic constraints of the CFN model. To be able to put these into practice, we also give a complete characterization to test genericity.

1 Introduction and Preliminaries

This paper is concerned with inferring the evolutionary history of a set of species or other taxa from sequence data using maximum likelihood. In practice, maximum likelihood inference is among the most commonly used approaches in phylogenetic analyses. In contrast to the simple (but more analytically tractable) model considered in this paper, maximum likelihood estimation is typically undertaken with sophisticated models of site evolution, utilizing heuristic (e.g., hill-climbing) methods and multiple start points to explore the tree space in order to obtain parameters which maximize the likelihood. A variety of excellent and widely used implementations exist, many of which have been used in thousands of studies (e.g., Nguyen et al. 2015; Stamatakis 2014; Yang 2007; Price et al. 2010).

Nonetheless, there remains interest in computing analytic (i.e., closed-form) solutions in simpler cases (Chor and Snir 2004; Chor et al. 2003, 2000; Yang 2000; Chor et al. 2007; Hobolth and Wiuf 2024; Chor et al. 2005) as well as exact solutions via algebraic methods (Kosta and Kubjas 2019; Garcia Puente et al. 2022), with the goal of providing a more rigorous understanding of the properties of maximum likelihood estimation and the ways that it can fail. For example, it is well-known that the maximum likelihood tree need not be unique (Steel 1994), that for certain data there exists a continuum of trees which maximize the likelihood (Chor et al. 2000), and that there exist data for which the maximum likelihood estimate does not exist—or at least, is not a true tree with finite branch lengths (Kosta and Kubjas 2019). In the context of phylogenetic estimation, maximum likelihood exhibits complex behavior even in very simple cases; one important example of this is long-branch attraction, a form of estimation bias which has been the subject of much interest (see, e.g., Susko and Roger 2021; Parks and Goldman 2014; Bergsten 2005; Anderson and Swofford 2004), but is not yet fully understood.

The specific problem that we consider in this paper is that of using maximum likelihood to estimate the branch lengths of an unrooted 3-leaf tree from molecular data generated according to the Cavender-Farris-Neyman (CFN) model, a binary symmetric model of site substitution. A related problem of estimating rooted 3-leaf trees under the molecular clock assumption was considered by Yang (2000). The problem considered here differs from that in Yang (2000) in that we do not assume a molecular clock; instead, our problem involves maximizing the likelihood over three independently varying branch length parameters.

The 3-leaf MLE problem in this paper was considered, though not fully solved, in Kosta and Kubjas (2019). There, the authors introduced a general algorithm for computing numerically exact solutions using semi-algebraic constraints (i.e. polynomial inequalities) satisfied by phylogenetic models, along with methods from numerical algebraic geometry. Applying their method to the 3-leaf maximum likelihood problem under the CFN model, they discovered a nontrivial example where the maximum likelihood estimate does not exist. A similar problem was also considered in Parks and Goldman (2014), who obtained a partial solution (i.e., involving simulations) for the 4-state Jukes-Cantor model, allowing them to use distance estimates to predict with high accuracy certain features of the maximum likelihood estimate for specific data.

In this paper, we go one step further, presenting a full solution to the 3-leaf maximum likelihood problem under the CFN model, for generic data. Our proofs have a similar flavor to the model boundary decompositions technique in Allman et al. (2019, Section 4), but we consider a submodel that is not full dimensional. The main result of this paper provides a full characterization of the behavior of maximum likelihood estimation in this setting, up to and including necessary and sufficient conditions for the MLE to exist, as well as detailed analysis describing the ways in which an MLE may fail to exist. Further, our results validate the predictions given in Parks and Goldman (2014), providing theoretical underpinning to an interesting connection between maximum likelihood and distance-based estimates appearing in that paper.

While finalizing this manuscript, the recent work of Hobolth and Wiuf (2024) came to our attention. Building on a connection between the multinomial distribution and maximum likelihood estimation of 3-leaf trees under the CFN model, the authors of Hobolth and Wiuf (2024) make a surprising and interesting discovery about the likelihood geometry for 3-leaf models: in the case of three leaves, estimation by maximum likelihood is equivalent to estimation by pairwise distances, an equivalence which does not hold for trees with four or more leaves. In particular, the authors use this to obtain an analytic solution to the MLE problem for 3-leaf trees which is applicable whenever the data (regarded here as a vector representing the empirical site pattern frequencies) lies in the interior of the parameter space of the model. The present paper provides a natural extension of the 3-leaf result in Hobolth and Wiuf (2024) in two ways: first, by providing a simple characterization of when the data lies in the interior of the model, and second, by analyzing in detail the case when it does not; the analysis of this non-interior case is both substantial and technically non-trivial, and provides an improved understanding of the settings where maximum likelihood is prone to failure, such as in the case of trees with very long or short branches.

The remainder of this paper is structured as follows. In Sect. 1 we introduce the model, problem statement, and some of the key tools that will be employed. In Sect. 2, we present our main result, a closed-form “analytic” solution to the maximum-likelihood problem for 3-leaf trees under the CFN model. In Sect. 3, we discuss the significance and novel contribution of this result. A proof of the main result is then presented in Sect. 4.

1.1 Data and Model of Evolution

1.1.1 Tree Parameter

For any finite set \(\mathcal {X}\), an \(\mathcal {{ X}}\)-tree \(\mathcal {T}\) is an ordered pair \((T;\varphi )\) where T is a tree with vertex set V(T) and the labelling map \(\varphi :\mathcal {X}\rightarrow V\) is a map such that \(v\in \varphi (\mathcal {X})\) whenever \(v\in V(T)\) and \(\text {deg}(v) \le 2\). If \(\varphi \) is a bijection into the leaves of T, then \(\mathcal {T}\) is called a phylogenetic \(\mathcal {{ X}}\)-tree; in this case the elements of \(\mathcal {X}\) are identified with the leaves of the tree (for a standard reference, see Semple and Steel 2003). In addition, we associate with \(\mathcal {T}\) a vector of nonnegative branch lengths \(d:= (d_{e})_{e\in E(T)}\), where E(T) is the edge set of T.

We regard \(\mathcal {X}\) as a set of taxa, with T representing a hypothesis about their evolutionary or genealogical history; the branch lengths are regarded as representing a measure of evolutionary distance measured in expected number of mutations per site. In this paper we consider exclusively the case \(\mathcal {X}=[3]\).

Rather than using the evolutionary distances d as edge parameters of T, for our analyses it will be more convenient to use an alternative parameterization of the branch lengths as a vector \(\theta := (\theta _{e})_{e\in E(T)}\in [0,1]^{|E(T)|}\), where

$$\begin{aligned} \theta _{e}:= \exp (-2d_{e}) \end{aligned} \tag{1}$$

for all \(e\in E(T)\). The numerical edge parameters \((\theta _{e})_{e\in E(T)}\) have been referred to as “path-set variables” (Chor et al. 2003); we refer to them here as the Hadamard parameters.

1.1.2 Site Substitution Model

The site substitution model considered in this paper is the fully symmetric Cavender-Farris-Neyman (CFN) model. Also known as the \(N_{2}\) model (e.g., in Semple and Steel (2003)), the CFN model takes as input a tree parameter \(\mathcal {T}_{\theta } = (\mathcal {T},\theta )\), consisting of a phylogenetic [n]-tree \(\mathcal {T}=(T,\varphi )\) along with edge parameters \(\theta =(\theta _{e})_{e\in E(T)}\), and outputs a random vector \(X=(X_{1},\ldots ,X_{n})\), whose entries \(X_{1},\ldots ,X_{n}\in \left\{ -1,+1\right\} \) are associated with the n leaves of \(\mathcal {T}\).

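The CFN model, corresponding to a time-reversible Markov chain on a tree, is the simplest model of site substitution, possessing only two nucleotide states, which we denote by \(+1\) (pyrimidine) and \(-1\) (purine). Under this model, the probability of a nucleotide in state i transitioning to state j over an edge of length t can be shown to be

$$\begin{aligned} P_{ij}(t) = {\left\{ \begin{array}{ll} \frac{1}{2}\left( 1+e^{-2t}\right) &{} \text {if } i=j,\\ \frac{1}{2}\left( 1-e^{-2t}\right) &{} \text {if } i\ne j \end{array}\right. } \end{aligned}$$

for all \(i,j\in \left\{ -1,+1\right\} \) (Yang 2000, and for a more general reference, see Semple and Steel (2003), p. 197). In other words, the transition probability from state i to j along a given edge e, denoted \(P_{ij}(e)\), is

$$\begin{aligned} P_{ij}(e) = {\left\{ \begin{array}{ll} \frac{1}{2}\left( 1+\theta _{e}\right) &{} \text {if } i=j,\\ \frac{1}{2}\left( 1-\theta _{e}\right) &{} \text {if } i\ne j \end{array}\right. } \end{aligned} \tag{2}$$

for all \(i,j\in \left\{ +1,-1\right\} \).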
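The passage from branch lengths to Hadamard parameters and then to transition probabilities can be illustrated with a minimal sketch. It assumes the standard CFN relations \(\theta _{e}=\exp (-2d_{e})\) and \(P_{ij}(e)=\frac{1}{2}(1\pm \theta _{e})\); the function names `hadamard_parameter` and `cfn_transition_matrix` are hypothetical and chosen only for illustration.

```python
import math

def hadamard_parameter(d):
    """Map a branch length d (expected mutations per site) to theta = exp(-2 d).

    d = 0 gives theta = 1 and d = math.inf gives theta = 0.
    """
    return math.exp(-2.0 * d)

def cfn_transition_matrix(theta):
    """Return the 2x2 CFN transition matrix for an edge with Hadamard parameter theta.

    Rows and columns are indexed by the two states; the diagonal holds the
    probability of observing the same state at both endpoints of the edge.
    """
    same = 0.5 * (1.0 + theta)
    diff = 0.5 * (1.0 - theta)
    return [[same, diff], [diff, same]]
```

With `theta = 1` the matrix is the identity (no change along the edge), and with `theta = 0` every entry equals 1/2, so the state at one endpoint carries no information about the other.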

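Moreover, we assume a uniform root distribution, from which it follows that

$$\begin{aligned} \mathbb {P}\left[ X=\sigma \right] =\mathbb {P}\left[ X=-\sigma \right] \end{aligned} \tag{3}$$

for all \(\sigma \in \left\{ -1,1\right\} ^{n}\) (c.f. Lemma 8.6.1.(ii) in Semple and Steel (2003), see also Sturmfels and Sullivant (2004), p. 221).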

The distribution of X depends on both the topology and branch lengths of the tree parameter. For a phylogenetic [n]-tree \(\mathcal {T}\) with topology \(\tau \) and Hadamard parameters \(\theta \), we use the notation

where

and \(\mathbb {P}\) is the distribution of X under the CFN model with parameters \(\mathcal {T}\) and \(\theta \).

1.1.3 Identifiability of Model Parameters

Another way to understand the CFN model is as a parameterized statistical model with the tree topology \(\tau \) held fixed; in this case, the CFN model is regarded as the image of the map

where \(\Theta _{\tau }\subseteq [0,1]^{|E(T)|}\) is the set of possible Hadamard parameters, and where \(\Delta _{r-1}\subset \mathbb {R}^{r}\) is the probability simplex of dimension \(r-1\); i.e.,

The usual assumption prescribed for the CFN model (which is not made in this paper) is that \(\Theta _{\tau } = (0,1)^{|E(T)|}\) (so that \(0<\theta _{e}<1\) for all \(e\in E(T)\), or equivalently, that \(0<d_{e}<\infty \) for all \(e\in E(T)\)). Under that assumption, \(\Psi _{\tau }\) is injective (Hendy 1991), and hence the edge parameters \(\theta =(\theta _{e})_{e\in E(T)}\) are identifiable. This means that if \(\mathcal {T}_{\theta }:=(\mathcal {T},\theta )\) and \(\mathcal {T}_{\theta '}:=(\mathcal {T},\theta ')\) are two n-leaf trees with the same topology \(\tau \) but with edge parameters \(\theta \) and \(\theta '\) such that \(\theta \ne \theta '\), then the distributions of X will be different under \(\mathcal {T}_{\theta }\) and \(\mathcal {T}_{\theta '}\).

On the other hand in this paper, we consider an extension by allowing \(\theta _e\in [0,1]\) for each edge \(e\in E(T)\), rather than \(\theta _e\in (0,1)\). This extension allows for branch lengths which are infinite or zero (when measured in expected number of mutations per site), in order to better understand the behavior of maximum likelihood estimation in the limit as one or more branch lengths tend to zero or to infinity. This seemingly slight extension of the model substantially adds to the complexity of the analysis. In particular, as a consequence of this extension, it is no longer the case that the numerical parameters \(\theta \) are identifiable, which presents certain complications, described in detail later in the paper. On the other hand, it also has the effect of guaranteeing the existence of the maximum likelihood estimate.

1.1.4 Data

In practice, DNA sequence data is typically arranged as a multiple sequence alignment, an \(n\times N\) matrix, with each row corresponding to a leaf of \(\mathcal {T}\) and each column representing an aligned site position. It is standard (albeit unrealistic) to assume that sites evolved independently, and as such our data consists of \(N\) random column vectors

where X is a random variable taking values in \(\left\{ +1,-1\right\} ^{n}\) whose distribution will be described below, and which is regarded as a vector of nucleotides observed at the leaves of \(\mathcal {T}\) such that \(X_{i}\) is the nucleotide observed at the vertex with label i for each \(i\in [n]\). Under the CFN model, the distribution of X depends on the topology of \(\mathcal {T}\) as well as the edge parameters \(\theta \). Due to the exchangeability of \(X^{(1)},\ldots ,X^{(N)}\), the data can be summarized by a site frequency vector

where

1.1.5 \(\alpha \)-Split Patterns

In light of Eq. (3), it is possible using a change of coordinates to represent the distribution of X using a vector of \(2^{n-1}\) entries rather than \(2^{n}\) entries. For any vector \(\sigma \in \left\{ -1,+1\right\} ^{n}\) and any \(\alpha \subseteq [n-1]\), we say that \(\sigma \) has \(\alpha \)-split pattern if there exists \(k\in \left\{ -1,+1\right\} \) such that \(\sigma _{i}=k\) if and only if \(i\in \alpha \).

For example, if \(n=3\) then \((+1,-1,+1)\) and \((-1,+1,-1)\) both have \(\left\{ 2\right\} \)-split pattern; \((+1,+1,-1)\) and \((-1,-1,+1)\) both have \(\left\{ 1,2\right\} \)-split pattern, and so forth.
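The definition above can be made concrete with a short sketch. Since \(n\notin \alpha \), the value k in the definition must differ from \(\sigma _{n}\), so \(\alpha \) is exactly the set of positions that disagree with the last entry. The function name `split_pattern` is hypothetical.

```python
def split_pattern(sigma):
    """Return the subset alpha of [n-1] (1-based) such that sigma has alpha-split pattern.

    alpha collects the positions i < n whose state differs from the state at
    position n, so sigma and -sigma are mapped to the same alpha.
    """
    last = sigma[-1]
    return frozenset(i + 1 for i, s in enumerate(sigma[:-1]) if s != last)
```

Applied to the examples in the text, `split_pattern((+1, -1, +1))` and `split_pattern((-1, +1, -1))` both return `{2}`.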

Analogously to the notation in Eq. (4), define the expected site pattern spectrum to be

where

In other words, \(\bar{p}_{\alpha }\) is the probability that the entries of X correspond to the split \(\alpha |([n]\backslash \alpha )\). For this vector \(\bar{p}\), we follow Hendy and Penny (1993) in assuming that the subsets of \([n-1]\) are ordered lexicographically, e.g., so that for \(n=3\), we have the order \((\emptyset , \left\{ 1\right\} , \left\{ 2\right\} ,\left\{ 1,2\right\} )\).

By Eq. (3), the distribution vector \(p\in \Delta _{2^{n}-1}\) is fully specified without loss of information by the lower dimensional vector \(\bar{p}\in \Delta _{2^{n-1}-1}\) (c.f., Allman and Rhodes (2007)). The data may also be summarized by the lower-dimensional (but sufficient) statistic

where

1.2 The Maximum Likelihood Problem

Let \(\mathcal {T}=(T,\varphi )\) be a phylogenetic [n]-tree with unrooted tree topology \(\tau \) and Hadamard edge parameters \(\theta =(\theta _{e})_{e\in E(T)}\) for some \(n\ge 2\). Given data taking the form of Eq. (5), or equivalently Eq. (7), the log-likelihood function is the function

where \(p_{\sigma }\) and \(\bar{p}_{\alpha }\) are defined as in Eqs. (4) and (6).

The maximum likelihood problem is to find all parameters \(\tau \) and \(\theta \in [0,1]^{|E(T)|}\) which maximize Eq. (8).

When \(n=3\), there is only one unrooted tree topology, in which case this problem reduces to that of finding the numerical parameters \(\theta \in [0,1]^{3}\) which maximize Eq. (8).

1.3 Hadamard Conjugation

In this section we introduce an important reparametrization of the CFN model, as well as a central tool in our analyses: Hadamard conjugation.

For any even subset \(Y\subseteq [n]\), define the path set \(P(\mathcal {T},Y)\) induced by Y on \(\mathcal {T}\) to be the set of \(\frac{1}{2}|Y|\) edge-disjoint paths in \(\mathcal {T}\), each of which connects a pair of leaves labelled by elements from Y, taking \(P(\mathcal {T},\emptyset )=\emptyset \). This set is unique if \(\mathcal {T}\) is a binary tree (Semple and Steel 2003).

The edge spectrum is the vector

where

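Inductively define \(H_{0}:= [1]\), and for \(k \ge 0\) define

$$\begin{aligned} H_{k+1}:= \begin{bmatrix} H_{k} &{} H_{k}\\ H_{k} &{} -H_{k} \end{bmatrix}. \end{aligned}$$

Let \(H:=H_{n-1}\). Then H is a \(2^{n-1}\times 2^{n-1}\) matrix, and by our choice of ordering for \([n-1]\), we have \(H = (h_{\alpha ,\beta })_{\alpha ,\beta \subseteq [n-1]}\) where

$$\begin{aligned} h_{\alpha ,\beta }=(-1)^{|\alpha \cap \beta |}. \end{aligned}$$
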
In particular, H is a symmetric Hadamard matrix with \(H^{-1} = \frac{1}{2^{n-1}}H\). Since H is the character table of the group \(\mathbb {Z}_{2}^{n-1}\), multiplication by H can be regarded as a discrete Fourier transformation, a commonly-used tool in the study of the CFN model and other group-based substitution models [see Semple and Steel (2003, chp. 8) as well as Coons and Sullivant (2021), Sullivant (2018), Hendy et al. (1994)]. Closely related to the discrete Fourier transform is the next theorem, which allows us to translate between the edge spectrum and the expected site pattern spectrum.
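The properties of H stated above can be checked numerically for small n. The sketch below assumes the Sylvester block recursion \(H_{k+1}=\bigl [{\begin{matrix} H_{k} & H_{k}\\ H_{k} & -H_{k}\end{matrix}}\bigr ]\) and the entry formula \(h_{\alpha ,\beta }=(-1)^{|\alpha \cap \beta |}\), with subsets indexed by their bit patterns; the helper names are hypothetical.

```python
def sylvester_hadamard(k):
    """Build H_k by the block recursion H_{k+1} = [[H_k, H_k], [H_k, -H_k]]."""
    H = [[1]]
    for _ in range(k):
        top = [row + row for row in H]
        bottom = [row + [-x for x in row] for row in H]
        H = top + bottom
    return H

def subsets_in_order(m):
    """List the subsets of [m] so that index idx has element i+1 iff bit i of idx is set.

    For m = 2 this gives the order (emptyset, {1}, {2}, {1,2}) used in the text.
    """
    return [frozenset(i + 1 for i in range(m) if (idx >> i) & 1)
            for idx in range(2 ** m)]

def matmul(A, B):
    """Plain-Python matrix product of two square lists of lists."""
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]
```

For n = 3, `sylvester_hadamard(2)` is the 4 by 4 matrix used throughout the paper, and `matmul(H, H)` equals 4 times the identity, which is the statement \(H^{-1}=\frac{1}{2^{n-1}}H\).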

Theorem 1.1

[Hadamard conjugation (Hendy and Penny 1993; Evans and Speed 1993)] Let \(\gamma \in \mathbb {R}^{2^{n-1}}\) be the edge spectrum of a phylogenetic [n]-tree \(\mathcal {T}\), let \(H:=H_{n-1}\), and let \(\bar{p}\) be the expected site pattern spectrum as defined in Eq. (6). Then

$$\begin{aligned} \bar{p}=H^{-1}\exp (H\gamma ), \end{aligned} \tag{9}$$

where the exponential function \(\exp (\cdot )\) is applied component-wise.
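As an illustration of Theorem 1.1 in the 3-leaf case, the following sketch evaluates \(\bar{p}=H^{-1}\exp (H\gamma )\) for finite branch lengths. It assumes the edge spectrum \(\gamma =(-(d_{1}+d_{2}+d_{3}),d_{1},d_{2},d_{3})^{\top }\) and the ordering \((\emptyset ,\{1\},\{2\},\{1,2\})\); the function names are hypothetical, and infinite branch lengths are not handled.

```python
import math

# H_2 with rows and columns ordered as (emptyset, {1}, {2}, {1,2}).
H2 = [[1, 1, 1, 1],
      [1, -1, 1, -1],
      [1, 1, -1, -1],
      [1, -1, -1, 1]]

def matvec(M, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def expected_spectrum(d1, d2, d3):
    """Return (p_emptyset, p_{1}, p_{2}, p_{1,2}) for finite branch lengths d1, d2, d3."""
    gamma = [-(d1 + d2 + d3), d1, d2, d3]
    # Fourier coordinates q = exp(H gamma), applied component-wise.
    q = [math.exp(x) for x in matvec(H2, gamma)]
    # H^{-1} = H / 4 when n = 3.
    return [x / 4.0 for x in matvec(H2, q)]
```

The intermediate vector `q` equals \((1,\theta _{1}\theta _{3},\theta _{2}\theta _{3},\theta _{1}\theta _{2})\) with \(\theta _{i}=\exp (-2d_{i})\), so each site pattern probability is a signed sum of pairwise products of Hadamard parameters divided by 4.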

Hadamard conjugation, as formulated in this theorem, has proved to be an essential tool in a number of previous results and has analogues for substitution models other than the CFN [see, e.g., Chor et al. (2003, 2000)]. For a proof and a detailed discussion of this theorem, we refer the reader to Semple and Steel (2003). In particular, we will utilize the following proposition, itself a consequence of Theorem 1.1.

Proposition 1.2

[Corollary 8.6.6 in Semple and Steel (2003)] Let \(\theta _{e}\in [0,1]\) for all \(e\in E(T)\). Then for all subsets \(\alpha \) of \([n-1]\),

Note that Proposition 1.2 holds even if the root distribution is not taken to be uniform; however, we do not consider that case in this paper. When \(n=3\), Eq. (10) reduces to

and by a change of notation, this can be rewritten as

for all \(\sigma \in \left\{ -1,+1\right\} ^{3}\). Therefore by Eq. (3),

for all \(\sigma \in \left\{ +1,-1\right\} ^{3}\).

1.4 Interpretation of Hadamard Parameters

We regard a vector of Hadamard parameters \(\theta =(\theta _{e})_{e\in E(T)}\) as biologically plausible if \(\theta _{e}\in (0,1)\) for all \(e\in E(T)\). Since \(-\frac{1}{2}\log \theta _{e}\) is the expected number of mutations on edge e, it follows that \(\theta _{e}\in (0,1)\) if and only if \(d_{e}\in (0,\infty )\). In other words, biologically plausible Hadamard parameters correspond to trees with branch lengths having positive and finite expected number of mutations per site. In this work, we allow for \(\theta _{e}\in [0,1]\) in order to better study the ways that maximum likelihood can fail to return a tree with biologically plausible parameters; for example, trees with extremely short or long branches are of special interest, since it is in this setting that long-branch attraction is hypothesized to occur.

Observe that \(\theta _{e}\in [0,1]\) measures the correlation between the state of the Markov process at the endpoints of the edge e. Suppose \(e=(u,v)\in E(T)\) and let \(X_{u}\) and \(X_{v}\) denote the state of the Markov process at nodes u and v respectively. Equation (2) implies that if \(\theta _{e}=1\) then \(X_{u}=X_{v}\) with probability 1. On the other hand, if \(\theta _{e}=0\) then \(X_{u}\) and \(X_{v}\) are independent; to see why this is the case, observe that using Eq. (2), it holds for all \(i,j\in \left\{ -1,1\right\} \) that

The observation has an important consequence which is summarized in the next lemma. In particular, by conditioning on the state of the Markov process at the endpoints of e and using the Markov property, a straightforward calculation gives the following result:

Lemma 1.3

(Independence caused by “infinitely long” branches) Let \(\mathcal {T}=(T,\varphi )\) be a phylogenetic [n]-tree. Let \(e\in E(T)\) and let \(A_{e}|B_{e}\) denote the split induced by e on the leaf set [n]. If \(\theta _{e}=0\) then the random vectors \((X_{i}: i\in A_{e})\) and \((X_{i}: i\in B_{e})\) are independent.

A proof of Lemma 1.3 can be found in Appendix A.

1.5 Fourier Coordinates and Semi-algebraic Constraints

One key takeaway of Proposition 1.2 is that the probability of any site pattern can be computed as a polynomial function of the Hadamard parameters.

Hence the CFN model for a fixed tree topology \(\tau \) may be regarded as the image of the map

In our setting, we take \(\Theta _{\tau }=[0,1]^{|E(T)|}\) and regard the statistical model as the image

An important monomial parameterization of the CFN model is obtained by means of the discrete Fourier representation (see Coons and Sullivant 2021; Sullivant 2018; Sturmfels and Sullivant 2004), which here is given by the matrix H. If \(\textbf{q}= (q_{111},q_{101},q_{011},q_{110})^{\top }\) is a vector of Fourier coordinates, then \(\textbf{q}=H_{2}\bar{p}\). In the case with \(n=3\), we have \(\gamma =(-(d_{1}+d_{2}+d_{3}), d_{1},d_{2},d_{3})^{\top }\), so that Theorem 1.1 implies that

$$\begin{aligned} \textbf{q}=\exp (H\gamma )=(1,\theta _{1}\theta _{3},\theta _{2}\theta _{3},\theta _{1}\theta _{2})^{\top }. \end{aligned} \tag{15}$$

For group-based models (see, e.g., Sullivant 2018) like the CFN model, \(\overline{\Psi }_{\tau }\) is a polynomial map, and hence all points in \(\mathcal {M}_{\tau }\) satisfy certain polynomial equalities, called phylogenetic invariants, and polynomial inequalities, called semi-algebraic constraints, both of which are usually formulated in terms of Fourier coordinates.

In the 3-leaf case considered here, the only phylogenetic invariant is \(q_{111}=1\), which is equivalent to the stochastic invariant \(\bar{p}_{\emptyset }+\bar{p}_{\left\{ 1\right\} }+\bar{p}_{\left\{ 2\right\} }+\bar{p}_{\left\{ 1,2\right\} }=1\).

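The semi-algebraic constraints of the CFN model were studied more generally by Matsen (2008) and Kosta and Kubjas (2019). When the assumption is made that the tree parameter has biologically plausible parameters, the semi-algebraic constraints for 3-leaf trees consist of the following inequalities:

$$\begin{aligned} q_{110}>0,\quad q_{101}>0,\quad q_{011}>0 \end{aligned} \tag{16}$$

and

$$\begin{aligned} q_{101}q_{011}<q_{110},\quad q_{110}q_{011}<q_{101},\quad q_{110}q_{101}<q_{011}. \end{aligned} \tag{17}$$

The inequality Eq. (16) corresponds simply to the assumption that the evolutionary distance between each pair of leaves is finite.

The remaining semi-algebraic constraints in Eq. (17) have a straightforward interpretation in terms of the additive evolutionary distances on the tree induced by the branch lengths \(d_1, d_2,\) and \(d_3\). To see this, observe that by Eq. (15), the inequalities in Eq. (17) can be written as

$$\begin{aligned} \theta _{1}\theta _{2}\theta _{3}^{2}<\theta _{1}\theta _{2},\quad \theta _{1}\theta _{2}^{2}\theta _{3}<\theta _{1}\theta _{3},\quad \theta _{1}^{2}\theta _{2}\theta _{3}<\theta _{2}\theta _{3}, \end{aligned}$$

and by Eq. (1), these are equivalent to

$$\begin{aligned} (d_{1}+d_{3})+(d_{2}+d_{3})>d_{1}+d_{2},\quad (d_{1}+d_{2})+(d_{2}+d_{3})>d_{1}+d_{3},\quad (d_{1}+d_{2})+(d_{1}+d_{3})>d_{2}+d_{3}. \end{aligned}$$

In other words, the semi-algebraic constraints in Eq. (17) are nothing but the triangle inequality in disguise.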

Taken together, Eqs. (16) and (17) are equivalent to the tree having branch lengths in \(d_1,d_2,d_3\) which are both positive and finite. Note this assumption is not made in this paper, as we allow for branch lengths to also be either zero or infinite, a relaxation which corresponds to using non-strict inequalities in Eqs. (16) and (17). Nonetheless, the strict inequalities will play an important role in our main result.

Remark 1.4

The semi-algebraic constraints in (17) show up in other settings as well. For example, in Matsen (2008) they appear as embeddability conditions for the Kimura 3-parameter model; in that setting, the inequalities are equivalent to the nonnegativity of the off-diagonal entries of the mutation rate matrix, and therefore—just as in our setting—implicitly specify that the branch lengths be nonnegative. For a generalization of these inequalities as embeddability conditions see the main result of Ardiyansyah et al. (2021).

Because the Fourier coordinates factorize into Hadamard parameters, as shown in Eq. (15) (and more generally: see, e.g., Semple and Steel 2003), the nontrivial Fourier coordinates thus have a simple biological interpretation. For each distinct pair \(i,j\in [3]\), since \(\mathbb {E}\left[ X_{i} \right] =\mathbb {E}\left[ X_{j}\right] =0\), it follows that

In other words, the nontrivial Fourier coordinates are covariances of nucleotides observed at the leaves of the tree.

Both the monomial parameterization in Eq. (15) and the semi-algebraic constraints in Eqs. (16) and (17) will play an important role in the proof and interpretation of our main result for 3-leaf trees. In particular, our approach is to estimate the Fourier coordinates \(\theta _{1}\theta _{2},\theta _{1}\theta _{3},\theta _{2}\theta _{3}\) directly from the data, and it turns out that whether or not these estimates satisfy inequalities corresponding to Eqs. (16) and (17) completely determines the qualitative properties of the maximum likelihood estimate.

2 Main Result: An Analytic Solution to the 3-Leaf MLE Problem

2.1 The 3-Leaf Maximum Likelihood Problem

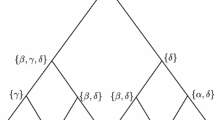

Let \(\mathcal {T}\) be an unrooted phylogenetic [3]-tree, with unknown numerical edge parameters \(\theta ^{\mathcal {T}}=(\theta ^{\mathcal {T}}_{1},\theta ^{\mathcal {T}}_{2},\theta ^{\mathcal {T}}_{3})\in [0,1]^{3}\), as shown in Fig. 1. Let \(\textbf{s}\) be a site frequency vector obtained from \(N\) independent samples \(X^{(1)},\ldots ,X^{(N)}\) generated according to the CFN process on \(\mathcal {T}\). The 3-leaf maximum likelihood problem is to find all numerical parameters \(\theta \in [0,1]^{3}\) which maximize Eq. (8) given the data \(\textbf{s}\).

Note that since there is only one possible unrooted topology for a 3-leaf tree, the topology parameter \(\tau \) does not play a role in this problem.

2.2 Key Definitions and Notation

The key statistics used are the following:

Definition 2.1

(The statistics \(M_{ij}^{+},M_{ij}^{-},B_{ij},\textbf{B}\)) For all \(i,j\in [n]\) such that \(i\ne j\), define

as well as

and

In words, \(M^{+}_{ij}\) is the number of samples for which leaves i and j share the same nucleotide state and \(M_{ij}^{-}\) is the number for which the nucleotides observed at leaves i and j differ. It follows by definition that \(M^{+}_{ij}+M^{-}_{ij}=N\) and that \(M^{+}_{ij}=M^{+}_{ji}\) and \(M^{-}_{ij}=M^{-}_{ji}\) for all distinct \(i,j\in [3]\).
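The pairwise counts described above are straightforward to compute from an alignment. The sketch below is illustrative only: it assumes that each sample is a length-3 tuple with entries in \(\{-1,+1\}\), and that \(B_{ij}=(M^{+}_{ij}-M^{-}_{ij})/N\), i.e. the sample average of \(X_{i}X_{j}\), which is an assumption about the normalization consistent with \(B_{ij}\) being an estimate of the covariance of \(X_{i}\) and \(X_{j}\). The function name `pairwise_statistics` is hypothetical.

```python
def pairwise_statistics(samples):
    """Compute M_plus, M_minus and B for each leaf pair (1,2), (1,3), (2,3).

    samples: non-empty list of length-3 tuples with entries +1 or -1.
    Returns a dict keyed by (i, j) with 1-based leaf labels.
    """
    N = len(samples)
    stats = {}
    for i, j in [(1, 2), (1, 3), (2, 3)]:
        # Number of samples in which leaves i and j show the same state.
        m_plus = sum(1 for x in samples if x[i - 1] == x[j - 1])
        m_minus = N - m_plus
        # Assumed normalization: B_ij is the average of X_i * X_j over samples.
        stats[(i, j)] = {"M_plus": m_plus,
                         "M_minus": m_minus,
                         "B": (m_plus - m_minus) / N}
    return stats
```

Because only agreement or disagreement between two leaves matters, the result is unchanged if every sample is replaced by its negation, in line with the symmetry in Eq. (3).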

The statistic \(B_{ij}\) measures the observed correlation of the observations at leaves i and j of the tree. By the law of large numbers,

almost surely as \(N\rightarrow \infty \). Therefore by Eq. (18), \(B_{ij}\) is a consistent estimator of the Fourier coordinate \(\theta _{i}\theta _{j}\), which is itself the covariance of \(X_{i}\) and \(X_{j}\). Moreover, it is easy to check that

Due to symmetries of the problem, it will be useful to index the statistics in Definition 2.1 using permutations.

Notation 2.2

(Indexing with permutations) Let \(\text {Alt}(3)\) denote the alternating group of degree 3, which can be expressed in cycle notation as

For each \(\pi \in \text {Alt}(3)\), we write

The use of the statistic \(\textbf{B}\) will permit us to obtain a simple criterion for when a maximum likelihood estimate corresponding to a 3-leaf tree with finite branch lengths exists. This criterion involves the following set:

Definition 2.3

(The set \(\mathcal {D}\)) Define

As we will show in the next theorem, given some fixed data \(\textbf{s}\), a maximum likelihood estimate with finite branch lengths exists if and only if \(\textbf{B}\in \mathcal {D}\). Importantly, since \(\textbf{B}\) is an estimate of the nontrivial Fourier coordinates \((q_{110},q_{101},q_{011})=(\theta _{1}\theta _{2},\theta _{1}\theta _{3},\theta _{2}\theta _{3})\), the inequalities which define \(\mathcal {D}\) correspond precisely to the semi-algebraic constraints in Eqs. (16) and (17).

2.3 Assumptions About the Data

We make two simplifying assumptions about the data \(\textbf{s}\):

- A.1: \(\bar{s}_{\alpha }>0\) for all \(\alpha \subseteq [2]\).
- A.2: \(B_{12},B_{13}\) and \(B_{23}\) are nonzero and distinct.

In words, assumption A.1 states that each site pattern aaa, aab, aba, abb is observed at least once in the data (where a and b represent different nucleotides). One consequence of this is that \(M^{+}_{ij},M^{-}_{ij}\in (0,N)\) whenever \(i\ne j\). Since the number of site patterns is \(2^{n-1}\), this assumption would be unrealistic for a tree with many more leaves (e.g., \(n>30\)) given the size of genomic datasets (Chor et al. 2000), but for our purposes (i.e., with \(n=3\)), this assumption is reasonable. Assumption A.2 is an assumption about the genericity of the data, in the sense that it is equivalent to assuming that

or equivalently,

These two assumptions considerably simplify the problem with very little loss of generality, as both assumptions are likely to be satisfied when \(N\) is large.

2.4 Statement of Main Result

Our main result is the following theorem, which solves the maximum likelihood problem for 3-leaf trees; further discussion of this result is given in Sect. 3.

Theorem 2.4

(Global MLE for the 3-leaf tree) Assume that A.1 and A.2 hold. Then \(\ell \) has a maximizer on the set \([0,1]^{3}\). Denote the set of all such maximizers as

and let \(\pi _{1},\pi _{2},\pi _{3}\in \text {Alt}(3)\) such that

If \(\textbf{B}\in \mathcal {D}\), then

On the other hand, if \(\textbf{B}\notin \mathcal {D}\), then the following trichotomy holds:

- (i) If \(0<B_{\pi _2}\) then

$$\begin{aligned} E_\textrm{MLE} = \left\{ \theta \in [0,1]^{3}: \theta _{\pi _{1}(1)}=B_{\pi _{1}(1),\pi _{1}(3)}, \, \theta _{\pi _{1}(2)}=B_{\pi _{1}(2),\pi _{1}(3)}, \, \text {and } \, \theta _{\pi _{1}(3)}=1\right\} . \end{aligned}$$

- (ii) If \(0\in (B_{\pi _2}, B_{\pi _3})\) then

$$\begin{aligned} E_\textrm{MLE} = \left\{ \theta \in [0,1]^{3}: \theta _{\pi _{3}(1)}\theta _{\pi _{3}(2)}=B_{\pi _{3}}, \, \text { and } \, \theta _{\pi _{3}(3)}=0\right\} . \end{aligned}$$

- (iii) If \(B_{\pi _{3}}<0\) then

$$\begin{aligned} E_\textrm{MLE} = \bigcup _{\overset{A\subseteq [3]:}{|A| \ge 2}} \left\{ \theta \in [0,1]^{3}: \theta _{i}=0 \text { for all }i\in A\right\} . \end{aligned}$$

Enumerating out the possible cases of Theorem 2.4 immediately yields the following corollary.

Corollary 2.5

Under the assumptions of Theorem 2.4, the MLE can be determined from the values of \(B_{12},B_{13},B_{23}\) using Table 1.

Before proving this theorem in Sect. 4, we first discuss its significance and some implications.
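The case analysis of Theorem 2.4 can be sketched in code. This is a hedged illustration and not a reproduction of the authors' statement: it assumes that \(\mathcal {D}\) is cut out by applying the inequalities described for Eqs. (16) and (17) to \(\textbf{B}\) (positivity of each \(B_{ij}\), and each \(B_{ij}\) exceeding the product of the other two), that \(\pi _{1},\pi _{2},\pi _{3}\) order the statistics increasingly (as the interval in case (ii) suggests), and that in the interior case the estimate is obtained by solving \(\theta _{i}\theta _{j}=B_{ij}\). All function names are hypothetical.

```python
import math

def in_D(b12, b13, b23):
    """Assumed membership test for D: positivity plus the three product inequalities."""
    positive = b12 > 0 and b13 > 0 and b23 > 0
    triangle = (b12 > b13 * b23) and (b13 > b12 * b23) and (b23 > b12 * b13)
    return positive and triangle

def classify(b12, b13, b23):
    """Return 'interior', 'i', 'ii' or 'iii' for data satisfying A.1 and A.2."""
    if in_D(b12, b13, b23):
        return "interior"
    low, mid, high = sorted((b12, b13, b23))  # assumed order B_pi1 < B_pi2 < B_pi3
    if mid > 0:
        return "i"    # 0 < B_pi2
    if high > 0:
        return "ii"   # B_pi2 < 0 < B_pi3
    return "iii"      # B_pi3 < 0

def interior_estimate(b12, b13, b23):
    """Solve theta_i * theta_j = B_ij; only meaningful when in_D(...) is True."""
    return (math.sqrt(b12 * b13 / b23),
            math.sqrt(b12 * b23 / b13),
            math.sqrt(b13 * b23 / b12))
```

For instance, under these assumptions `classify(0.9, 0.8, 0.1)` falls in case (i), because \(B_{23}=0.1\) is smaller than \(B_{12}B_{13}=0.72\), so the fitted tree places one leaf at the interior node.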

3 Discussion of Novel Contribution

In addition to providing necessary and sufficient conditions for the MLE to exist as a tree with finite branch lengths, Theorem 2.4 also characterizes the ways that this can fail to occur, and highlights a subtle connection between the semi-algebraic constraints given in Eqs. (16) and (17) and properties of the maximum likelihood estimate.

In an important paper on long-branch attraction (Parks and Goldman 2014), a compelling connection was drawn between maximum likelihood and distance estimates on a 3-leaf tree under the Jukes-Cantor model of site substitution. Through a combination of analytic boundary case analysis and simulations, the authors argued that the failure of distance-based branch-length estimates to satisfy the triangle inequality and nonnegativity constraints was a good predictor of maximum likelihood failing to return a tree with biologically plausible branch lengths.

Due to the use of different substitution models (i.e., the CFN model considered here versus the 4-state Jukes-Cantor model considered in Parks and Goldman (2014)), some translation is necessary to recognize the connection between our results and those of Parks and Goldman (2014).

The distance estimates used in Parks and Goldman (2014) are related to the standard Jukes-Cantor correction (Jukes and Cantor 1969; Yang 2006), and are given by the formula

which returns an estimate of evolutionary distance \(D_{ij}\) between taxa i and j, measured in expected number of mutations per site. The variable \(M_{ij}^{-}\) is the number of samples such that the nucleotide states observed at taxa i and j differ (i.e., the same as in this paper). The convention used in Parks and Goldman (2014) is to define \(D_{ij}=\infty \) if \(M_{ij}^{-}\ge 3/4\). Formulated in terms of these distances, the predictions made in Parks and Goldman (2014) are given in Table 2.

By comparison, for the CFN model considered in this paper, the analogous distance estimate (see, e.g., Yang 2000) is

with the convention that \(\widetilde{D}_{ij}=\infty \) if \(B_{ij}\le 0\).
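As a small illustration of this convention, the following sketch computes a CFN distance from a pairwise statistic \(B_{ij}\). It assumes the standard CFN correction \(\widetilde{D}_{ij}=-\tfrac{1}{2}\log B_{ij}\), which is our reading of the formula referred to above rather than a quotation of it; the function name `cfn_distance` and the sample values are hypothetical.

```python
import math

def cfn_distance(b_ij: float) -> float:
    """Assumed CFN distance: -0.5 * log(B_ij), set to infinity when B_ij <= 0."""
    if b_ij <= 0:
        return math.inf
    return -0.5 * math.log(b_ij)

# Hypothetical pairwise statistics, for illustration only.
for b in (0.8, 0.1, 0.0, -0.2):
    print(b, cfn_distance(b))
```

Under this convention a nonpositive \(B_{ij}\) is reported as an infinite distance, which mirrors the boundary behavior discussed in the text.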

An inspection of Tables 1 and 2 reveals that, modulo the aforementioned change to the distance estimates to account for the different substitution models, the results of Theorem 2.4 coincide precisely with the predictions made in Parks and Goldman (2014) for all data satisfying Assumptions A.1 and A.2. Thus, Theorem 2.4 puts the predictions in Parks and Goldman (2014) on a sound theoretical basis, as it proves that certain properties of the maximum likelihood estimate are fully determined by whether or not the data satisfies the semialgebraic constraints of the model (i.e., the inequalities of Eqs. (16) and (17), which are mirrored by the inequality conditions in Tables 1 and 2).

In addition, Theorem 2.4 provides a characterization and better understanding of the way that the maximum likelihood estimator can fail to return a tree with biologically plausible branch lengths, but instead returns an estimate with edge parameters \(\theta \notin (0,1)^{3}\). Consider the data point

In Kosta and Kubjas (2019), it was shown using algebraic methods that maximum likelihood fails to return an estimate with biologically plausible edge parameters for this data point. Instead, it was shown that for this data, the likelihood is maximized as the branch length of leaf 2 goes to infinity. It is easy to verify this conclusion with Theorem 2.4. Observe that

Since \(B_{12},B_{23}<0\), it follows by row 6 of Table 1 that the likelihood is maximized when

This agrees with the result in Kosta and Kubjas (2019), as \(\theta _{2}=0\) if and only if \(d_{2}=+\infty .\) Indeed, Theorem 2.4 characterizes all the ways that the likelihood estimate might be maximized when one or more branch lengths tend to infinity, up to our simplifying Assumptions A.1 and A.2.

Further, Theorem 2.4 provides a succinct explanation of why, for this data, it would be unreasonable to expect maximum likelihood to return a tree with biologically plausible edge parameters. For any tree with edge parameters \(\theta \in (0,1)^{3}\), the Fourier coordinates must satisfy the semi-algebraic constraint in Eq. (17), but for this data point, since \(B_{12},B_{23}<0\), the estimates of the Fourier coordinates fail to satisfy a corresponding positivity inequality.

The previous example elucidates one of the ways that long branches on a species tree can result in the MLE returning a boundary case: when data comes from a species tree with one or more very long branches relative to the size of \(N\), it is more likely that one or more components of \(\textbf{B}\) will be negative, so that \(\textbf{B}\notin \mathcal {D}\) and hence the MLE must be on the boundary.

Nonetheless, this is not the full story. The next example shows how the MLE can lie on the boundary of the model even if all of the distance estimates are finite and positive. Consider the data

so that

In this case \(B_{ij}>0\) for all \(i,j\in [3]\), so the analogous inequality to Eq. (16) is satisfied: based on the data, all of the pairs of nucleotides are positively correlated, so no infinitely-long branches are to be expected; the distance estimates \(\widetilde{D}_{12},\widetilde{D}_{13},\widetilde{D}_{23}\) are all finite and positive.

However, by Theorem 2.4, this is not sufficient to guarantee that maximum likelihood will return a tree with biologically plausible parameters, since there is another semi-algebraic constraint (i.e., Eq. (17)) which must be satisfied for such trees. Indeed, since

it follows that \(\textbf{B}\notin \mathcal {D}\), and hence the data falls into case (i) of Theorem 2.4. More specifically, since this data corresponds to row 2 of Table 1, it follows that the likelihood is maximized when

which does not correspond to a binary tree because the branch length of leaf 1 is zero.

One final and more general takeaway from Theorem 2.4 is that it highlights how the geometry of the statistical model, here determined by the semi-algebraic constraints Eqs. (16) and (17), influences the possible behavior of maximum likelihood estimation. Maximum likelihood returns a tree with biologically plausible branch lengths if and only if the data satisfies analogues of the polynomial inequalities Eqs. (16) and (17). In addition, when the data does not lie in the interior of the model, the question of which inequalities are not satisfied determines the different ways that maximum likelihood fails (i.e., which branches have lengths zero or infinity). This suggests that for phylogenetic trees with more than three leaves, a better understanding of the role that semialgebraic constraints play in maximum likelihood estimation may turn out to be useful in explaining some of the more complex behaviors of maximum likelihood estimation of phylogenetic trees. Of special interest to note are 4-leaf trees, due to the possibility of long-branch attraction. Indeed, recent work has shown that in the 4-leaf case, the study of semi-algebraic constraints for phylogenetic models involves surprising subtleties that may be important for inference (Casanellas et al. 2021).

To be sure, in the cases of trees with \(n \ge 4\) leaves, to say nothing of more realistic and complicated substitution models, the increased algebraic complexity of the likelihood equations presents formidable obstacles. First, as shown in Hobolth and Wiuf (2024), when \(n \ge 4\), the likelihood equations do not have solutions which can be expressed in terms of pairwise sequence comparisons (as was done here and in Hobolth and Wiuf (2024)). Moreover, in many cases, closed-form solutions are unlikely to exist at all; an example of this can be found in the analysis of the 4-leaf MC-comb in Chor et al. (2003), where it was shown that the critical points of the likelihood function correspond to zeros of a degree 9 polynomial which cannot be solved by radicals.

Despite these limitations, solutions can nonetheless be obtained using tools from numerical algebraic geometry which return theoretically correct solutions with probability one (see, e.g., Kosta and Kubjas 2019, Gross et al. 2016, Chor et al. 2005, Chor et al. 2003). Moreover, the number of solutions, called the maximum likelihood (ML) degree, can be computed using Gröbner basis techniques (Hoşten et al. 2005) and methods from singularity theory (Rodriguez and Wang 2017; Maxim et al. 2024).

4 Proof of the Main Result

Our proof of Theorem 2.4 considers the problem of maximizing the log-likelihood separately in two cases:

(1) the interior case, i.e., the problem of maximizing \(\ell \) over all \(\theta \in (0,1)^{3}\), and

(2) the boundary cases, corresponding to when \(\theta \in \partial (0,1)^{3}\).

For the boundary cases, we follow the approach taken by the authors of Parks and Goldman (2014), who analyzed the maximum likelihood problem in the context of the Jukes-Cantor model and obtained analytic solutions for boundary cases there by decomposing the boundary \(\partial (0,1)^{3}\) into 26 components, consisting of 8 vertices, 12 edges, and 6 faces, and then maximizing \(\ell \) on each of those individually. Our approach is similar, though we group certain edges and faces together in those cases in which the analysis is similar.

The approach taken to proving Theorem 2.4 is as follows. First, the problems of maximizing \(\ell \) in the interior case and boundary cases are considered separately in Sects. 4.1 and 4.2 respectively. Section 4.3 presents several lemmas which compute and compare log-likelihoods of local maxima in various cases, with results summarized in Table 3. Finally, in Sect. 4.4, we utilize these results to prove Theorem 2.4.

4.1 Maximizing the Log-Likelihood on \((0,1)^{3}\)

In this subsection we consider the problem of maximizing Eq. (8) on the set \((0,1)^{3}\). This set corresponds to those trees whose branches are of finite and nonzero length, when measured in expected number of mutations per site. Since \((0,1)^{3}\) is open, the existence of a local maximum is not guaranteed. The main result of this subsection gives, for generic positive data, necessary and sufficient conditions for \(\ell \) to have a local maximum in \((0,1)^{3}\), and a formula if it exists; it also shows \(\ell \) has at most one local maximum on \((0,1)^{3}\).

We begin with an important definition and a technical lemma.

Let \(\widehat{\phi }: \mathbb {R}^{3}\backslash \left\{ x:x_{1}x_{2}x_{3}=0\right\} \rightarrow \mathbb {C}^{3}\) be defined by

Further, let \(\phi \) be the restriction of \(\widehat{\phi }\) to \(\mathcal {D}\):

The next lemma summarizes some useful properties of \(\phi \).

Lemma 4.1

The function \(\phi :\mathcal {D} \rightarrow (0,1)^{3}\) is a continuous bijection, with inverse function \(\phi ^{-1}:(0,1)^{3}\rightarrow \mathcal {D}\) given by

$$\begin{aligned} \phi ^{-1}(y_{1},y_{2},y_{3}) = (y_{1}y_{2},\,y_{1}y_{3},\,y_{2}y_{3}). \end{aligned}$$ (23)

Proof of Lemma 4.1

First, note that if \(x=(x_{1},x_{2},x_{3})\in \mathcal {D}\) then \(\phi (x)\in (0,1)^{3}\) by the definition of \(\widehat{\phi }\) and \(\mathcal {D}\). Moreover, since \(x_{1},x_{2},x_{3}\ne 0\) whenever \(x=(x_{1},x_{2},x_{3})\in \mathcal {D}\), it follows that \(\phi \) is continuous on \(\mathcal {D}\).

Next, to show that \(\phi \) is injective, let \(x,\tilde{x}\in \mathcal {D}\) such that \(\phi (x)=\phi (\tilde{x})\). Let \(\phi _{1}\) and \(\phi _{2}\) denote the first and second components of \(\phi \) respectively. Then \(\phi _{1}(x)\phi _{2}(x)=\phi _{1}(\tilde{x})\phi _{2}(\tilde{x})\) or equivalently, \(x_{1}^{2}= \tilde{x}_{1}^{2}\). Since \(x,\tilde{x}\in (0,1)^{3}\), this implies \(x_{1}=\tilde{x}_{1}\). Similar arguments show that \(x_{2}=\tilde{x}_{2}\) and \(x_{3}=\tilde{x}_{3}\), and hence \(x=\tilde{x}\). Therefore \(\phi \) is injective.

Next we show that \(\phi \) is surjective. Let \(y\in (0,1)^{3}\) be arbitrary. Then it is easy to see that the point \(y'=(y'_{1},y'_{2},y'_{3}):=(y_{1}y_{2},y_{1}y_{3},y_{2}y_{3})\) satisfies

for all choices of distinct \(i,j,k\in [3]\), and hence \(y'\in \mathcal {D}\). Moreover, \(\phi (y')=y\) by definition of \(\widehat{\phi }\). Therefore \(\phi \) is surjective and has an inverse given by the formula \(\phi ^{-1}(y)=y'\), which is precisely the formula in Eq. (23). \(\square \)
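The bijection in Lemma 4.1 can be checked numerically on a sample point. The sketch below assumes that \(\phi \) takes the square-root form \(\phi (x)=\big (\sqrt{x_{1}x_{2}/x_{3}},\sqrt{x_{1}x_{3}/x_{2}},\sqrt{x_{2}x_{3}/x_{1}}\big )\), which is consistent with the identity \(\phi _{1}(x)\phi _{2}(x)=x_{1}\) used in the injectivity argument but is our assumption about the definition; the inverse is the product formula from the surjectivity argument. The function names and the sample point are hypothetical.

```python
import math

def phi(x):
    """Assumed form of phi: pairwise quantities (x1, x2, x3) -> edge parameters."""
    x1, x2, x3 = x
    return (math.sqrt(x1 * x2 / x3),
            math.sqrt(x1 * x3 / x2),
            math.sqrt(x2 * x3 / x1))

def phi_inv(y):
    """Inverse map from the proof: y -> (y1*y2, y1*y3, y2*y3)."""
    y1, y2, y3 = y
    return (y1 * y2, y1 * y3, y2 * y3)

y = (0.9, 0.5, 0.7)        # hypothetical point of (0,1)^3
x = phi_inv(y)             # candidate point of D
round_trip = phi(x)        # should recover y up to rounding
print(x, round_trip)
```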

We are now ready to state the main lemma of this subsection, which solves the problem of maximizing \(\ell \) on the set \((0,1)^{3}\), or in other words, solves the maximum likelihood problem for biologically plausible parameters.

Lemma 4.2

(MLE for 3 Leaf Tree—Interior Case) Assume that A.1 and A.2 hold, and let

If \({\textbf {B}}\in \mathcal {D}\) then \(\theta ^{*}\) is the unique local maximum of \(\ell \) in \((0,1)^{3}\) and has log-likelihood

On the other hand, if \({\textbf {B}}\notin \mathcal {D}\) then \(\ell \) has no local maximum on \((0,1)^{3}\).

Proof of Lemma 4.2

For ease of notation, we will write

It follows by Eqs. (8) and (11) that

An initial attempt to compute the critical points of \(\ell (\theta )\) directly by taking the gradient of \(\ell \) yields a polynomial system which is difficult to solve analytically, so instead we modify this approach by first considering a different function whose extrema are closely related to those of \(\ell \).

To define this function, first let \(\mathcal {D}_{F}\subseteq \mathbb {R}^{3}\) be the intersection of half-spaces defined by the following inequalities

and let \(F:\mathcal {D}_{F}\rightarrow \mathbb {R}\) be defined by

The significance of F is owed to the observation that \(\ell = F\circ \phi ^{-1}\), which is proved in the next claim.

Claim 1: For all \(\theta \in (0,1)^{3}\),

Proof of Claim 1

Since the domain of \(\ell \) is \((0,1)^{3}\), it follows from Lemma 4.1 and Eqs. (26) and (28) that Eq. (29) holds provided that F is defined on the image of \(\phi ^{-1}\). Therefore, since \(\text {im}(\phi ^{-1})=\mathcal {D}\) and \(\text {dom}(F)=\mathcal {D}_{F}\) it will suffice to show that \(\mathcal {D}\subseteq \mathcal {D}_{F}\).

Let \(u\in \mathcal {D}\). Then by Lemma 4.1 there exist \(w_{1},w_{2},w_{3}\in (0,1)\) such that

To show that \(u\in \mathcal {D}_{F}\), it suffices by Eq. (27) to show that

The first of these four inequalities holds trivially. As for the other three, we will only prove \(1+w_{1}w_{2}-w_{1}w_{3}-w_{2}w_{3}>0\), as the other two inequalities can be proved in the same manner. Let \(h(w_{1},w_{2}):=1+w_{1}w_{2} -w_{1}-w_{2}\). Since \(w_{1},w_{2}>0\) and \(w_{3}<1\), it holds that

Therefore it will suffice to show that \(h(w_{1},w_{2}) \ge 0\) for all \(w_{1},w_{2}\in [0,1]\). Indeed, using calculus it is easy to see that h is minimized on \([0,1]\times [0,1]\) when at least one of the arguments \(w_{1},w_{2}\) equals one, and that the minimum is zero. Therefore \(1+w_{1}w_{2}-w_{1}w_{3}-w_{2}w_{3}>0\). We conclude that \(\mathcal {D}\subseteq \mathcal {D}_{F}\), as required to prove the claim. \(\square \)
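The calculus step in the proof of Claim 1 can also be seen from the factorization \(h(w_{1},w_{2})=(1-w_{1})(1-w_{2})\), which is nonnegative on \([0,1]^{2}\) and vanishes exactly when one argument equals one. The following sketch checks this factorization and the resulting strict inequality on a grid; the grid resolution and the helper name `lhs` are arbitrary choices made for illustration.

```python
def h(w1, w2):
    """h(w1, w2) = 1 + w1*w2 - w1 - w2, which factors as (1 - w1)*(1 - w2)."""
    return 1 + w1 * w2 - w1 - w2

def lhs(w1, w2, w3):
    """Left-hand side of the inequality 1 + w1*w2 - w1*w3 - w2*w3 > 0."""
    return 1 + w1 * w2 - w1 * w3 - w2 * w3

closed = [i / 20 for i in range(21)]        # grid on [0, 1]
interior = [i / 20 for i in range(1, 20)]   # grid on (0, 1)

# Largest deviation between h and its factored form on the closed grid.
max_factor_gap = max(abs(h(a, b) - (1 - a) * (1 - b)) for a in closed for b in closed)
# Smallest value of h on the closed grid (zero up to rounding).
min_h = min(h(a, b) for a in closed for b in closed)
# Smallest value of the full expression on the open grid (strictly positive).
min_lhs = min(lhs(a, b, c) for a in interior for b in interior for c in interior)
print(max_factor_gap, min_h, min_lhs)
```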

The next two claims serve to characterize the extrema of F.

Claim 2. The point \({\textbf {B}}=(B_{12},B_{13},B_{23})\) is the unique critical point of F.

Proof of Claim 2

It is first necessary to verify that \({\textbf {B}}\) is in the domain of F. To do this, it will suffice to show that \({\textbf {B}}\) satisfies the inequalities in Eq. (27).

Using Eq. (20) and the observation \(\bar{s}_1+\bar{s}_2+\bar{s}_3+\bar{s}_4=N\), it is easy to check that

Therefore by A.1, it follows that \({\textbf {B}} = (B_{12},B_{13},B_{23})\) satisfies the inequalities in Eq. (27), as required. Therefore \({\textbf {B}}\) is in the domain of F.

We now proceed with a standard critical point calculation. Letting \(v_{1} = (1,1,1)^{\top }\), \(v_{2} = (-1,-1,1)^{\top }\), \(v_{3}= (-1,1,-1)^{\top }\), \(v_{4} =(1,-1,-1)^{\top }\), and taking partial derivatives of F in Eq. (28) with respect to the variables x, y and z, it follows that for all \(u=(x,y,z)^{\top }\in \mathcal {D}_{F}\),

where

Setting \(\nabla F = 0\) and using Eq. (31), we deduce that the following system of equations holds:

Substituting the formulas for the \(A_{i}\)’s from Eq. (32) and rearranging terms, we obtain

Writing this out, this is the matrix equation

Using the fact that \(\bar{s}_1+\bar{s}_2+\bar{s}_3+\bar{s}_4=N\), one can check that the \(3\times 3\) matrix in the above equation has inverse

Therefore

By Eq. (20), the right-hand side is precisely the vector \((B_{12},B_{13},B_{23})^{\top }\), and therefore we conclude that \({\textbf {B}}=(B_{12},B_{13},B_{23})\) is the unique critical point of F on its domain \(\mathcal {D}_{F}\). This completes the proof of the claim. \(\square \)
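The critical point calculation in Claim 2 can be reproduced numerically. The sketch below assumes that, up to an additive constant, \(F(u)=\sum _{i=1}^{4}\bar{s}_{i}\log (1+v_{i}^{\top }u)\) with the vectors \(v_{1},\ldots ,v_{4}\) given in the proof; this form is inferred from the gradient computation and is not quoted from Eq. (28). The counts are hypothetical, and the closed-form candidate comes from solving \(1+v_{i}^{\top }u=4\bar{s}_{i}/N\).

```python
V = [(1, 1, 1), (-1, -1, 1), (-1, 1, -1), (1, -1, -1)]  # v_1..v_4 from the proof

def grad_F(u, s):
    """Gradient of the assumed F(u) = sum_i s_i * log(1 + <v_i, u>)."""
    g = [0.0, 0.0, 0.0]
    for v, s_i in zip(V, s):
        t = 1 + sum(a * b for a, b in zip(v, u))
        for k in range(3):
            g[k] += s_i * v[k] / t
    return g

def critical_point(s):
    """Candidate critical point obtained from 1 + <v_i, u> = 4 * s_i / N."""
    n = sum(s)
    s1, s2, s3, s4 = s
    return ((s1 - s2 - s3 + s4) / n,
            (s1 - s2 + s3 - s4) / n,
            (s1 + s2 - s3 - s4) / n)

s = (50, 20, 10, 20)          # hypothetical counts with all entries positive
u = critical_point(s)
print(u, grad_F(u, s))        # the gradient should vanish up to rounding
```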

Let \(H_{F}\) denote the Hessian matrix of F; that is,

It is clear that F is twice-differentiable on its domain, so \(H_{F}(x)\) is defined for all \(x\in \mathcal {D}_{F}\).

Claim 3. \(H_{F}({\textbf {B}})\) is negative definite.

Proof of Claim 3

Since \(H_{F}({\textbf {B}})\) is a real symmetric matrix, it will suffice to show that its eigenvalues are all negative, as this will imply that \(H_{F}({\textbf {B}})\) is negative definite.

Using the code in Appendix B, we first compute the characteristic polynomial of \(H_{F}\):

where \(t_{1}=1+x+y+z\), \(t_{2}=1-x-y+z\), \(t_{3}=1-x+y-z\), \(t_{4}=1+x-y-z\), and \(\bar{s}_1,\bar{s}_2,\bar{s}_3,\bar{s}_4\) are defined in Eq. (25).

We need to show that \(P_\textrm{char}\) has only negative roots, as this will imply that all three eigenvalues of \(H_{F}\) are negative, and hence that \(H_{F}({\textbf {B}})\) is negative definite. Since \(H_F\) is a real symmetric matrix, the roots of \(P_\textrm{char}\) are all real numbers, and therefore it will be enough to show that \(P_\textrm{char}\) has no nonnegative roots. Indeed, since all the coefficients of \(P_\textrm{char}\) are positive, Descartes’ rule of signs implies that \(P_\textrm{char}\) has no positive roots. Moreover since \((\bar{s}_1,\bar{s}_2,\bar{s}_3,\bar{s}_4)\ne (0,0,0,0)\) by A.1, the constant term in \(P_\textrm{char}\) is nonzero, and hence \(P_\textrm{char}(0)\ne 0\) as well. We conclude that \(P_\textrm{char}\) has no nonnegative roots, as required to prove the claim. \(\square \)
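As an independent sanity check of Claim 3, negative definiteness can be tested on an example without the characteristic polynomial. The sketch below again assumes \(F(u)=\sum _{i}\bar{s}_{i}\log (1+v_{i}^{\top }u)\) up to a constant, so that \(H_{F}(u)=-\sum _{i}\bar{s}_{i}v_{i}v_{i}^{\top }/(1+v_{i}^{\top }u)^{2}\), and it uses Sylvester's criterion (leading principal minors with signs \(-,+,-\)) in place of the argument via Descartes' rule of signs. The counts and the evaluation point are hypothetical.

```python
V = [(1, 1, 1), (-1, -1, 1), (-1, 1, -1), (1, -1, -1)]  # v_1..v_4 from the proof

def hessian_F(u, s):
    """Hessian of the assumed F: -sum_i s_i * v_i v_i^T / (1 + <v_i, u>)^2."""
    H = [[0.0] * 3 for _ in range(3)]
    for v, s_i in zip(V, s):
        t = 1 + sum(a * b for a, b in zip(v, u))
        for r in range(3):
            for c in range(3):
                H[r][c] -= s_i * v[r] * v[c] / t ** 2
    return H

def det3(m):
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

def is_negative_definite(m):
    """Sylvester's criterion for a symmetric 3x3 matrix."""
    d1 = m[0][0]
    d2 = m[0][0] * m[1][1] - m[0][1] * m[1][0]
    return d1 < 0 and d2 > 0 and det3(m) < 0

s = (50, 20, 10, 20)       # hypothetical counts
u = (0.4, 0.2, 0.4)        # critical point of the assumed F for these counts
print(is_negative_definite(hessian_F(u, s)))
```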

Using the results of the previous three claims, the next two claims will together characterize the local maxima of \(\ell \) on \((0,1)^{3}\).

Claim 4: If \({\textbf {B}}\in \mathcal {D}\) then \(\theta ^{*}\in (0,1)^{3}\) and \(\theta ^{*}\) is a local maximum of \(\ell \).

Proof of Claim 4

Observe that F and \(\phi ^{-1}\) are both differentiable on their respective domains. Therefore if \(\theta \in (0,1)^{3}\) and \(x=\phi ^{-1}(\theta )\), then using the chain rule to differentiate Eq. (29) gives

Suppose \({\textbf {B}}\in \mathcal {D}\). Then by Lemma 4.1, \(\theta ^{*}=\phi ({\textbf {B}})\in (0,1)^{3}\) and \({\textbf {B}}=\phi ^{-1}(\theta ^{*})\). Therefore Eq. (33) implies

By Claim 2, \({\textbf {B}}\) is a critical point of F, i.e., \(\nabla F({\textbf {B}})=0\). Therefore

This shows that \(\theta ^{*}\) is a critical point of \(\ell \).

Next we show that \(\theta ^{*}\) is a local maximum of \(\ell \). Since \(\ell =F\circ \phi ^{-1}\) by Eq. (29), and since F and \(\phi ^{-1}\) are both twice differentiable on their respective domains, it follows by the chain rule for Hessian matrices (see, e.g., Magnus and Neudecker 2007, pp. 125–126) that \(\ell \) is also twice differentiable and has Hessian matrix

where

for all \(\theta \in (0,1)^{3}\). Since \({\textbf {B}}\) is a critical point of F due to Claim 2, we have \(\frac{\partial F}{\partial x_{i}}({\textbf {B}}) = 0\) for each \(i=1,2,3\). Therefore

Since \(H_{F}({\textbf {B}})\) is a negative definite matrix by Claim 3, and since \(\det J_{\phi ^{-1}}(\theta ^{*}) \ne 0\) by Eq. (34), we conclude that \(H_{\ell }(\theta ^{*})\) and \(H_{F}({\textbf {B}})\) are congruent matrices, and hence, by Sylvester's law of inertia, \(H_{\ell }(\theta ^{*})\) is negative definite as well. Therefore by the second derivative test (see, e.g., Magnus and Neudecker 2007, Theorem 4, p. 140), the point \(\theta ^{*}\) is a local maximum of \(\ell \). This completes the proof of the claim. \(\square \)

Claim 5: If \(\theta \in (0,1)^{3}\) is a local maximum of \(\ell \) then \({\textbf {B}}\in \mathcal {D}\) and \(\theta =\theta ^{*}\).

Proof of Claim 5

Let \(\theta \in (0,1)^{3}\) be a local maximum of \(\ell \) and let \(x=\phi ^{-1}(\theta )\). Since \(\theta \) is a critical point, Eq. (33) implies

Since

it follows that \(J_{\phi ^{-1}}(\theta )\) is non-singular, and hence

Therefore x is a critical point of F. Since the only critical point of F is \({\textbf {B}}\) by Claim 2, it follows that \(x = {\textbf {B}}\). Since \(x\in \mathcal {D}\) by Lemma 4.1, this implies that \({\textbf {B}}\in \mathcal {D}\). Therefore

This completes the proof of the claim. \(\square \)

We can now use Claims 4 and 5 to prove the lemma. If \({\textbf {B}}\in \mathcal {D}\) then Claims 4 and 5 imply that \(\theta ^{*}\) is the unique local maximum in \((0,1)^{3}\). On the other hand, if \({\textbf {B}}\notin \mathcal {D}\), then the contrapositive of Claim 5 implies \(\ell \) has no local maximum in \((0,1)^{3}\). This proves the first part of Lemma 4.2; it remains only to prove Eq. (24).

Indeed, if \({\textbf {B}}\in \mathcal {D}\) then since \(\theta ^{*} = \phi (B_{12},B_{13},B_{23})\), it follows by definition of \(\phi \) that

for all \(i,j\in [3]\) such that \(i<j\). Plugging Eq. (35) into Eq. (30),

Therefore by Eq. (26),

which is precisely Eq. (24). This completes the proof of Lemma 4.2. \(\square \)
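Lemma 4.2 translates into a short procedure: test whether \({\textbf {B}}\in \mathcal {D}\) and, if so, return \(\theta ^{*}=\phi ({\textbf {B}})\). The sketch below is illustrative only. It assumes that \(\mathcal {D}\) consists of the points with positive entries satisfying \(x_{i}x_{j}<x_{k}\) for distinct indices, and that \(\phi \) has the square-root form \(\theta _{1}=\sqrt{B_{12}B_{13}/B_{23}}\) and its analogues; both are inferred from the surrounding proofs rather than quoted. The names `in_D` and `interior_mle` and the sample inputs are hypothetical.

```python
import math

def in_D(b):
    """Assumed membership test for D: positive entries with b_i * b_j < b_k."""
    b12, b13, b23 = b
    return (min(b) > 0
            and b12 * b13 < b23
            and b12 * b23 < b13
            and b13 * b23 < b12)

def interior_mle(b):
    """Return theta* = phi(B) when B lies in D, and None otherwise."""
    if not in_D(b):
        return None
    b12, b13, b23 = b
    return (math.sqrt(b12 * b13 / b23),
            math.sqrt(b12 * b23 / b13),
            math.sqrt(b13 * b23 / b12))

print(interior_mle((0.4, 0.2, 0.4)))   # B in D: an interior estimate exists
print(interior_mle((0.9, 0.2, 0.9)))   # all entries positive, yet B not in D
```

The second call illustrates the situation discussed in Sect. 3, where every pairwise statistic is positive but the remaining constraint fails, so no interior local maximum exists.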

4.2 Maximizing the Log-Likelihood on \(\partial (0,1)^{3}\)

In this subsection we consider the problem of maximizing \(\ell \) on the boundary \(\partial (0,1)^{3}\). As discussed at the beginning of Sect. 4, this corresponds to the boundary of the unit cube, consisting of 6 faces, 12 edges, and 8 vertices. The lemmas in this section consider the problem of maximizing \(\ell \) on various groupings of these components.

The eight vertices of the unit cube are simply the elements of the set \(\left\{ 0,1\right\} ^{3}\). The twelve edges are the sets

defined for \(j,k\in \left\{ 0,1\right\} \). The 6 faces are the sets of the form

where \(\pi \in \text {Alt}(3)\) and \(i\in \left\{ 0,1\right\} \).
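The count of 26 boundary components can be reproduced by letting each coordinate be fixed at 0, fixed at 1, or free in \((0,1)\), and discarding the single combination in which all three coordinates are free. The sketch below does this enumeration; the labels and the function name are our own and are not notation from the paper.

```python
from itertools import product

# Each coordinate is fixed at 0, fixed at 1, or free in the open interval (0,1).
STATES = (0, 1, "free")

def boundary_components():
    """Group the pieces of the boundary of the unit cube by dimension."""
    pieces = {"vertices": [], "edges": [], "faces": []}
    for combo in product(STATES, repeat=3):
        n_free = sum(1 for c in combo if c == "free")
        if n_free == 0:
            pieces["vertices"].append(combo)
        elif n_free == 1:
            pieces["edges"].append(combo)
        elif n_free == 2:
            pieces["faces"].append(combo)
        # n_free == 3 is the open cube (0,1)^3 itself, not part of the boundary
    return pieces

pieces = boundary_components()
print({name: len(items) for name, items in pieces.items()})
```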

The task at hand is to maximize \(\ell \) on each of these boundary sets. We begin with the next lemma, which utilizes assumption A.1 to dispatch the edge and vertex boundary cases which are “trivial” in the sense of never containing the maximum.

Lemma 4.3

(Trivial cases: \(\mathcal {E}_\textrm{triv}\)) Assume that A.1 holds. Let

If \(\theta \in \mathcal {E}_\textrm{triv}\) then \(\ell (\theta \mid s) = -\infty \).

Proof

If \(\theta \in E_{(1,1,\cdot )}\cup E_{(1,\cdot ,1)}\cup E_{(\cdot ,1,1)}\cup \left\{ (0,1,1),(1,0,1),(1,1,0),(1,1,1)\right\} \) then there exist two leaves \(i,j\in [3]\) with \(i\ne j\) such that \(\theta _{i}=\theta _{j}=1\). By A.1, there exists a \(\sigma \in \left\{ 1,-1\right\} ^{3}\) with \(\sigma _{i}\ne \sigma _{j}\) and \(s_{\sigma }>0\). Moreover, by Eq. (2), the probability of a transition occurring along the path between leaves i and j is zero. In particular, since \(\mathbb {P}\left[ X=\sigma \right] \le \mathbb {P}\left[ X_{i}\ne X_{j} \right] \), this implies that \(\mathbb {P}\left[ X=\sigma \right] = 0\). Therefore

Therefore \(\ell (\theta )=-\infty \) by Eq. (8). \(\square \)

The next lemma considers the remaining 4 vertices of the unit cube (1, 0, 0), (0, 1, 0), (0, 0, 1), and (0, 0, 0), as well as the edges \(E_{(0,0,\cdot )}\), \(E_{(0,\cdot ,0)}\), and \(E_{(\cdot ,0,0)}\). The union of these boundaries is the following set:

The next lemma shows that \(\mathcal {E}_\textrm{ind}\) consists of all choices of numerical parameter values under which \(X_{1},X_{2},\) and \(X_{3}\) are independent.

Lemma 4.4

(Log-likelihood of \(\ell \) on \(\mathcal {E}_\textrm{ind}\)) If \(\theta \in \mathcal {E}_\textrm{ind}\) then

Proof

Suppose \(\theta \in \mathcal {E}_\textrm{ind}\), and let \(i,j\in [3]\) such that \(i\ne j\). Then the path between leaves i and j contains an edge e such that \(\theta _{e}=0\). Therefore by Lemma 1.3, the random variables \(X_{1},X_{2}\) and \(X_{3}\) are mutually independent. Therefore for all \(\sigma \in \left\{ -1,1\right\} ^{3}\),

Substituting this into Eq. (8) implies Eq. (36).

\(\square \)

An example of a tree with numerical parameters \(\theta \in F_{\pi ,1}= \left\{ \theta : \theta _{1},\theta _{2}\in (0,1),\theta _{3}=1\right\} \) with \(\pi =(1)\) the identity permutation. Since \(\theta _{3} = 1\), Eq. (2) implies that no transitions can occur on leaf 3, and hence vertex 3 is regarded as lying on the path between leaves 1 and 2.

The next lemma of this section considers the problem of maximizing \(\ell \) on the three faces \(F_{\pi ,1}\), \(\pi \in \text {Alt}(3)\). An example of the graphical model corresponding to these cases is shown in Fig. 3.

Lemma 4.5

(Maximizers of \(\ell \) on \(F_{\pi ,1}\)) Let \(\pi \in \text {Alt}(3)\), and let \(\theta \in F_{\pi ,1}\). Then the set of local maxima of \(\ell \) on \(F_{\pi ,1}\) is

Moreover, if \(\theta \in \mathcal {F}_{\pi }\) then

or equivalently

Proof

Let \(\pi \in \text {Alt}(3)\), and let \(i=\pi (1)\), \(j=\pi (2)\), and \(k=\pi (3)\). Let \(\theta _{i},\theta _{j}\in (0,1)\) be arbitrary. Since \(\theta _{k}=1\), it follows by Eq. (12) that

Hence Eq. (8) can be written as

Therefore

Differentiating with respect to \(\theta _{i}\) and \(\theta _{j}\), it follows that for each \(u\in \left\{ i,j\right\} \),

Solving the system \(\nabla \ell (\theta ) = 0\), we obtain the solution satisfying

and if \(B_{ik},B_{jk}\in (0,1)\) then Eq. (41) determines the unique critical point of \(\ell \) on the set \(F_{\pi ,1}\); on the other hand, if \(B_{ik}\notin (0,1)\) or \(B_{jk}\notin (0,1)\), then \(\ell \) has no critical points on \(F_{\pi ,1}\).

Moreover, this critical point on \(F_{\pi ,1}\) is a maximum by the second derivative test since for all \(\theta \in F_{\pi ,1}\), the Hessian matrix

is negative definite.

Finally, if Eq. (41) holds, plugging \(\theta _{i}=B_{ik}\) and \(\theta _{j}=B_{jk}\) into Eq. (40) gives

which is nothing but Eq. (38) written in a different notation. It remains to prove Eq. (39). Observe that \(1+B_{\pi } = \frac{2\,M^{+}_{\pi }}{N}\) and \(1-B_{\pi } = \frac{2\,M^{-}_{\pi }}{N}\) for all \(\pi \in \text {Alt}(3)\). Equation (39) can be obtained by making these substitutions in Eq. (38) and then simplifying using logarithm properties and \(M_{\pi }^{+}+M_{\pi }^{-}=N\). \(\square \)

The final lemma of this section considers the problem of maximizing \(\ell \) when exactly one parameter in \(\left\{ \theta _{1},\theta _{2},\theta _{3}\right\} \) is assumed to be zero. This pertains to each of the 3 remaining faces \(F_{\pi ,0}\), \(\pi \in \text {Alt}(3)\), as well as the remaining six edges \(E_{(\cdot ,0,1)}\), \(E_{(\cdot ,1,0)}\), \(E_{(0,\cdot ,1)}\), \(E_{(1,\cdot ,0)}\), \(E_{(0,1,\cdot )}\), and \(E_{(1,0,\cdot )}\). Such boundaries represent one of two graphical models, like those shown in Fig. 2. We group the boundary cases up into the following three sets, defined for each \(\pi \in \text {Alt}(3)\):

Interpreted geometrically, \(G_{\pi }\) consists of the union of one face and two of its adjacent edges:

Lemma 4.6

(Maximizers of \(\ell \) on \(G_{\pi }\))

Let \(\pi \in \text {Alt}(3)\). The local maxima of \(\ell \) on \(G_{\pi }\) are the points in the set

all of which have log-likelihood

or equivalently

In particular, this implies that if \(B_{\pi }\notin (0,1)\) then \(\ell \) has no local maxima on \(G_{\pi }\).

The proof of this lemma is similar to that of Lemma 4.5, and can be found in Appendix A.

The graphical model corresponding to a 3-leaf “tree” with edge parameters \(\theta \in E_{(1,\cdot ,0)}\) (left) or \(\theta \in F_{(\cdot ,\cdot ,0)}\) (right). In both cases, the biological meaning of \(\theta _{3}=0\) is that species 3 is “infinitely far away” from species 1 and 2, when distances are measured in expected number of mutations per site. By Lemma 1.3, \((X_{1},X_{2}) \perp \!\!\!\!\perp X_{3}\), and for this reason we depict vertex 3 as a disconnected vertex

The key results of Sects. 4.1 and 4.2 are summarized in Table 3. That table lists the maximizer(s) of \(\ell \) on the interior and boundary of the unit cube (namely, the sets \(E_\textrm{int},\mathcal {E}_\textrm{triv},\mathcal {E}_\textrm{ind},G_{\pi }\), and \(F_{\pi ,1},\pi \in \text {Alt}(3)\), which together partition the closed unit cube). The corresponding sets of maximizers on each of these sets (\(\mathcal {E}_\textrm{int},\mathcal {E}_\textrm{triv},\mathcal {E}_\textrm{ind},\mathcal {G}_{\pi }\), and \(\mathcal {F}_{\pi }\), \(\pi \in \text {Alt}(3)\), respectively) are level sets of \(\ell \), although some of them may be empty depending on the data. The necessary and sufficient conditions for each of them to be nonempty, as well as the value that the log-likelihood function takes on each of them, are shown in the second and third columns of the table.

4.3 Comparisons of Likelihoods

Proving Theorem 2.4 requires some comparisons of the log-likelihoods of elements of \(\mathcal {E}_\textrm{int},\mathcal {E}_\textrm{ind},\mathcal {G}_{\pi },\) and \(\mathcal {F}_{\pi }\), \((\pi \in \text {Alt}(3))\). In this subsection, we prove several lemmas toward this end.

We will make use of the information inequality, which we state next (for proof, see, e.g., Theorem 2.6.3 in Cover (2006)).

Theorem 4.7

(Information Inequality) Let \(k \ge 1\). If \(\tilde{p}=(\tilde{p}_{1},\ldots ,\tilde{p}_{k})\) and \(\tilde{q}=(\tilde{q}_{1},\ldots ,\tilde{q}_{k})\) satisfy \(\tilde{p},\tilde{q}\in \Delta _{k-1}\) then

with equality if and only if \(\tilde{p}=\tilde{q}\).
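To make the role of Theorem 4.7 concrete, the sketch below evaluates both sides of the inequality on a pair of probability vectors. It assumes the inequality is stated in the usual Gibbs form \(\sum _{i}\tilde{p}_{i}\log \tilde{q}_{i}\le \sum _{i}\tilde{p}_{i}\log \tilde{p}_{i}\), equivalently nonnegativity of relative entropy; the vectors `p` and `q` and the function name are hypothetical.

```python
import math

def cross_term(p, q):
    """Compute sum_i p_i * log(q_i), using the convention 0 * log(0) = 0."""
    total = 0.0
    for p_i, q_i in zip(p, q):
        if p_i == 0:
            continue
        if q_i == 0:
            return -math.inf
        total += p_i * math.log(q_i)
    return total

p = (0.5, 0.3, 0.2)   # hypothetical points of the probability simplex
q = (0.4, 0.4, 0.2)

# The gap equals the relative entropy D(p || q), which is nonnegative
# and vanishes only when p == q.
gap = cross_term(p, p) - cross_term(p, q)
print(gap)
```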

The next two lemmas utilize the information inequality to show that elements of \(\mathcal {E}_\textrm{int}\) have greater log-likelihood than elements of \(\mathcal {F}_{\pi }\) and \(\mathcal {G}_{\pi }\), for all \(\pi \in \text {Alt}(3)\).

Lemma 4.8

(\(\mathcal {E}_\textrm{int}\) vs \(\mathcal {F}_{\pi }\)) If \(\theta ^{*}\in \mathcal {E}_\textrm{int}\) then

for all \(\theta \in \mathcal {F}_{\pi }\) and all \(\pi \in \text {Alt}(3)\).

Proof

We prove the case with \(\pi =(1)\) as the proofs for the cases with \(\pi \in \left\{ (123),(132)\right\} \) are similar.

Let \(\theta \in \mathcal {F}_{\pi }\). By Eq. (39),

Observe that

Therefore we can rewrite Eq. (45) as

Regrouping terms gives

To apply Theorem 4.7, it is first necessary to verify that

Since the entries of this vector are clearly nonnegative, it suffices to show that they sum to 1. Indeed, using \(M_{13}^{+}+M_{13}^{-}=N\) and \(M_{23}^{+}+M_{23}^{-}=N\), we have

Therefore by applying Theorem 4.7 to the right hand side of Eq. (46),

where the last equality follows from Eq. (24). \(\square \)

A similar application of Theorem 4.7 can be used to prove the following lemma; the details of the proof can be found in Appendix A.

Lemma 4.9

(\(\mathcal {E}_\textrm{int}\) vs \(\mathcal {G}_{\pi }\)) Assume that A.1 holds. If \(\theta ^{*}\in \mathcal {E}_\textrm{int}\) then

for all \(\theta \in \mathcal {G}_{\pi }\) and all \(\pi \in \text {Alt}(3)\).

The next lemma compares the likelihoods of elements in \(\mathcal {F}_{\pi }\) and \(\mathcal {G}_{\tilde{\pi }}\) when \(\pi ,\tilde{\pi }\in \text {Alt}(3)\) are distinct.

Lemma 4.10

(\(\mathcal {F}_{\pi }\) vs \(\mathcal {G}_{\tilde{\pi }}\) for \(\pi \ne \tilde{\pi }\)) Assume that A.1 holds. Let \(\pi _{1},\pi _{2}\) and \(\pi _{3}\) denote the three distinct elements of \(\text {Alt}(3)\). If \(\theta \in \mathcal {F}_{\pi _{1}}\) then

for all \(\tilde{\theta }\in \mathcal {G}_{\pi _{2}}\cup \mathcal {G}_{\pi _{3}}\).

Proof

Suppose \(\theta \in \mathcal {F}_{\pi _{1}}\), and suppose that \(\tilde{\theta }\in \mathcal {G}_{\tilde{\pi }}\) for some \(\tilde{\pi }\in \text {Alt}(3)\backslash \left\{ \pi _{1}\right\} \). Without loss of generality, assume \(\tilde{\pi }=\pi _{3}\). Then by Eqs. (38) and (43)

Since \(M_{\pi _{2}}^{+} = \frac{N+NB_{\pi _{2}}}{2}\) and \(M_{\pi _{2}}^{-} = \frac{N-NB_{\pi _{2}}}{2}\), it follows that

As a function of \(B_{\pi _{2}}\), the right-hand side is strictly increasing on (0, 1), which can be seen by differentiating and observing that the derivative is positive on this interval. Moreover, we note that \(B_{\pi _{2}}\in (0,1)\), a fact which follows from the hypothesis that \(\mathcal {F}_{\pi _{1}}\ne \emptyset \) (see Table 3). Therefore, since the right-hand side of Eq. (48) is strictly increasing on (0, 1), and since \(B_{\pi _{2}}\in (0,1)\), it follows that

This completes the proof of the lemma. \(\square \)

The next lemma compares the likelihoods of elements in \(\mathcal {F}_{\pi }\) and \(\mathcal {F}_{\tilde{\pi }}\) when \(\pi ,\tilde{\pi }\in \text {Alt}(3)\) are distinct.

Lemma 4.11

(\(\mathcal {F}_{\pi }\) vs \(\mathcal {F}_{\tilde{\pi }}\), \(\pi \ne \tilde{\pi }\)) Let \(\pi ,\tilde{\pi }\in \text {Alt}(3)\) be distinct, and suppose that \(\theta \in \mathcal {F}_{\pi }\) and \(\tilde{\theta }\in \mathcal {F}_{\tilde{\pi }}\). Then

if and only if

Proof

By Eq. (38)

where \(f(x):=(1+x)\log \left( 1+x \right) +(1-x)\log \left( 1-x \right) \). Note that

which is positive for all \(x\in (0,1)\). Therefore f is increasing on (0, 1). Moreover, \(B_{\pi },B_{\tilde{\pi }}\in (0,1)\) since \(\mathcal {F}_{\pi }, \mathcal {F}_{\tilde{\pi }}\ne \emptyset \) (see Table 3). Taken together, these facts along with Eq. (49) imply that \(\ell (\theta )-\ell (\tilde{\theta })>0\) if and only if \(B_{\pi }>B_{\tilde{\pi }}\). \(\square \)
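The monotonicity of f used in this proof is easy to confirm numerically. The sketch below evaluates f and the derivative \(f'(x)=\log (1+x)-\log (1-x)\), obtained by differentiating the definition of f term by term, on a grid in \((0,1)\); the grid itself is an arbitrary choice.

```python
import math

def f(x):
    """f(x) = (1+x) log(1+x) + (1-x) log(1-x), as defined in the proof."""
    return (1 + x) * math.log(1 + x) + (1 - x) * math.log(1 - x)

def f_prime(x):
    """Derivative of f: log(1+x) - log(1-x)."""
    return math.log(1 + x) - math.log(1 - x)

xs = [i / 100 for i in range(1, 100)]     # grid on (0, 1)
values = [f(x) for x in xs]

increasing = all(a < b for a, b in zip(values, values[1:]))
positive_derivative = all(f_prime(x) > 0 for x in xs)
print(increasing, positive_derivative)
```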

The next lemma compares the log-likelihoods of elements in \(\mathcal {G}_{\pi }\) and \(\mathcal {G}_{\tilde{\pi }}\), for distinct elements \(\pi ,\tilde{\pi }\in \text {Alt}(3)\). The proof is similar to that of Lemma 4.11 and can be found in Appendix A.

Lemma 4.12

(\(\mathcal {G}_{\pi }\) vs \(\mathcal {G}_{\tilde{\pi }}\) for \(\pi \ne \tilde{\pi }\)) Let \(\pi ,\tilde{\pi }\in \text {Alt}(3)\) such that \(\pi \ne \tilde{\pi }\), and let \(\theta \in \mathcal {G}_{\pi }\) and \(\tilde{\theta }\in \mathcal {G}_{\tilde{\pi }}\). Assume that A.1 holds. If

then

The next lemma shows that elements of \(\mathcal {G}_{\pi }\), \(\pi \in \text {Alt}(3)\), have greater log-likelihood than elements in \(\mathcal {E}_\textrm{ind}\). The proof is straightforward and can be found in Appendix A.

Lemma 4.13

(\(\mathcal {G}_{\pi }\) vs \(\mathcal {E}_\textrm{ind}\)) If \(\theta \in \mathcal {G}_{\pi }\) for some \(\pi \in \text {Alt}(3)\) then

4.4 Final Analysis

Using the lemmas from Sects. 4.1, 4.2 and 4.3, we are now ready to prove Theorem 2.4.

Proof of Theorem 2.4

We claim that \(\theta \mapsto \ell (\theta )\) is upper semicontinuous on its domain \([0,1]^{3}\). To see this, first observe that the function \(L:[0,1]^{3}\rightarrow [0,\infty )\) defined by

is continuous since for all \(\alpha \subseteq [2]\), \(\theta \mapsto \bar{p}_{\alpha }(\theta )\) is a polynomial in the variables \(\theta _{1},\theta _{2},\theta _{3}\in [0,1]\) by Eq. (12). Therefore, since \(\ell = \log \circ L\) and since \(\log (\cdot )\) is increasing and upper semicontinuous on \([0,\infty )\), it follows that \(\ell \) is upper semicontinuous on \([0,1]^{3}\). This proves the claim.
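The last step can be spelled out as follows. This is a sketch under the assumption, not stated explicitly in this passage, that the convention \(\log 0=-\infty \) is in force, so that \(\ell \) takes values in \([-\infty ,\infty )\). Let \(\theta ^{(n)}\rightarrow \theta \) in \([0,1]^{3}\). Continuity of L gives \(L(\theta ^{(n)})\rightarrow L(\theta )\), and hence

$$\limsup _{n\rightarrow \infty }\ell (\theta ^{(n)})=\limsup _{n\rightarrow \infty }\log L(\theta ^{(n)})=\log L(\theta )=\ell (\theta ).$$

If \(L(\theta )>0\), the middle equality is continuity of \(\log \) at \(L(\theta )\). If \(L(\theta )=0\), both sides equal \(-\infty \), because \(\log t\rightarrow -\infty \) as \(t\downarrow 0\). In either case \(\limsup _{n}\ell (\theta ^{(n)})\le \ell (\theta )\), which is the defining inequality of upper semicontinuity.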

Since \(\ell \) is upper semicontinuous and \([0,1]^{3}\) is compact, \(\ell \) has at least one maximizer on \([0,1]^{3}\). In order to find the maximizer(s), observe that since

it suffices to consider the maximizers of \(\ell \) on each of these sets. By Lemma 4.3,

whenever \(\theta \in \mathcal {E}_\textrm{triv}\). Therefore if \(\widehat{\theta }\in [0,1]^{3}\) is a global maximum of \(\ell \), then

In Lemmas 4.2, 4.4, 4.5, and 4.6, we computed the log-likelihood of the points in each of the eight sets in this disjoint union (see Table 3 for a summary of these results), so the rest of the proof will simply be a comparison of the likelihoods of elements of these sets.

We start by proving that if \(\textbf{B}\in \mathcal {D}\), then the maximum is given by Eq. (21). Suppose \(\textbf{B}\in \mathcal {D}\). Then by Lemma 4.2, \(\mathcal {E}_\textrm{int}\) is nonempty and consists of a single element

Moreover, Lemmas 4.8, 4.9, 4.10, and 4.13 together imply that \(\theta ^{*}\) is the global maximizer of \(\ell \). This proves Eq. (21).

Henceforth assume \(\textbf{B}\notin \mathcal {D}\), so that \(\mathcal {E}_\textrm{int}=\emptyset \) by Lemma 4.2. Therefore

Next we will prove part (i) in the statement of the theorem. Suppose that \(B_{\pi _{3}},B_{\pi _{2}}>0\). It will suffice to show that \(\widehat{\theta }\in \mathcal {F}_{\pi _{1}}\).

By the criteria shown in Table 3, it holds that \(\mathcal {G}_{\pi _{3}}\ne \emptyset \) and \(\mathcal {F}_{\pi _{1}}\ne \emptyset \). Let \(\theta '\in \mathcal {G}_{\pi _{3}}\) and \(\theta ''\in \mathcal {F}_{\pi _{1}}\). By Lemmas 4.13 and 4.12,

whenever \(\theta \in \mathcal {E}_\textrm{ind}\cup \mathcal {G}_{\pi _{1}}\cup \mathcal {G}_{\pi _{2}}\). In addition, since \(\pi _{1}\ne \pi _{3}\), Lemma 4.10 implies

Therefore by Eqs. (52) to (54),

Finally, observe that by Lemma 4.11,

whenever \(\theta \in \mathcal {F}_{\pi _{1}}\sqcup \mathcal {F}_{\pi _{2}}\). By Eqs. (55) and (56), we conclude that \(\widehat{\theta }\in \mathcal {F}_{\pi _{1}}\). This proves part (i) of the theorem.

Next, suppose that \(B_{\pi _{3}}>0\) and \(B_{\pi _{2}}<0\). In order to prove part (ii) in the statement of the theorem, it will suffice to show that \(\widehat{\theta }\in \mathcal {G}_{\pi _{3}}\). By the criteria in Table 3, \(\mathcal {F}_{\pi }=\emptyset \) for all \(\pi \in \text {Alt}(3)\), and \(\mathcal {G}_{\pi }=\emptyset \) for all \(\pi \in \text {Alt}(3)\backslash \left\{ \pi _{3}\right\} \). Therefore by Eq. (52),

By Lemma 4.13, the elements of \(\mathcal {G}_{\pi _{3}}\) have strictly larger log-likelihood than the elements of \(\mathcal {E}_\textrm{ind}\). Therefore \(\widehat{\theta }\in \mathcal {G}_{\pi _{3}}\). This proves part (ii) of the theorem.

It remains to prove part (iii) in the statement of the theorem. Suppose \(B_{\pi _{3}}<0\). By the criteria in Table 3, \(\mathcal {F}_{\pi }=\emptyset \) and \(\mathcal {G}_{\pi }=\emptyset \) for all \(\pi \in \text {Alt}(3)\). Therefore by Eq. (52), \(\widehat{\theta }\in \mathcal {E}_\textrm{ind}\). This proves part (iii), which completes the proof of the theorem.
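The three cases treated above for \(\textbf{B}\notin \mathcal {D}\) can be summarized as a small decision procedure. The sketch below is illustrative only: the function name `mle_region` and the string labels are hypothetical, and the code takes for granted (without checking) that \(\textbf{B}\notin \mathcal {D}\), that the data are generic, and that \(\pi _{1},\pi _{2},\pi _{3}\) are labeled as in the statement of Theorem 2.4.

```python
def mle_region(b_pi2: float, b_pi3: float) -> str:
    """Name the set containing the maximizer in cases (i)-(iii) of the proof.

    Assumptions (not verified here): B is not in D, the data are generic,
    and pi_1, pi_2, pi_3 are labeled as in Theorem 2.4.
    """
    if b_pi3 < 0:
        # Case (iii): all F and G sets are empty, so the maximizer is in E_ind.
        return "E_ind"
    if b_pi3 > 0 and b_pi2 > 0:
        # Case (i): the maximizer lies in F_{pi_1}.
        return "F_pi1"
    if b_pi3 > 0 and b_pi2 < 0:
        # Case (ii): the maximizer lies in G_{pi_3}.
        return "G_pi3"
    # Boundary values (a coordinate equal to zero) are not covered by the
    # three cases above, so this sketch refuses to classify them.
    raise ValueError("input not covered by cases (i)-(iii)")


# Hypothetical inputs, one per case.
print(mle_region(0.2, 0.5))
print(mle_region(-0.1, 0.5))
print(mle_region(-0.3, -0.1))
```

The order of the tests mirrors the proof: the sign of \(B_{\pi _{3}}\) decides whether any of the sets \(\mathcal {F}_{\pi }\), \(\mathcal {G}_{\pi }\) is nonempty, and the sign of \(B_{\pi _{2}}\) then separates cases (i) and (ii).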

Notes

For trees with more than 3 leaves, a similar interpretation holds using the inequalities of the 4-point condition.

References

Allman ES, Rhodes JA (2007) Phylogenetic invariants. In: Gascuel O, Steel M (eds) Reconstructing evolution. Oxford University Press, Oxford, pp 108–146

Allman ES, Banos H, Evans R, Hosten S, Kubjas K, Lemke D, Rhodes JA, Zwiernik P (2019) Maximum likelihood estimation of the latent class model through model boundary decomposition. J Algebr Stat 10(1):51–84

Anderson FE, Swofford DL (2004) Should we be worried about long-branch attraction in real data sets? Investigations using metazoan 18S rDNA. Mol Phylogenet Evol 33(2):440–451

Ardiyansyah M, Kosta D, Kubjas K (2021) The model-specific Markov embedding problem for symmetric group-based models. J Math Biol. https://doi.org/10.1007/s00285-021-01656-5

Bergsten J (2005) A review of long-branch attraction. Cladistics 21(2):163–193

Casanellas M, Fernández-Sánchez J, Garrote-López M (2021) Distance to the stochastic part of phylogenetic varieties. J Symb Comput 104:653–682

Chor B, Snir S (2004) Molecular clock fork phylogenies: closed form analytic maximum likelihood solutions. Syst Biol 53(6):963–967

Chor B, Hendy MD, Holland BR, Penny D (2000) Multiple maxima of likelihood in phylogenetic trees: an analytic approach. Mol Biol Evol 17(10):1529–1541

Chor B, Hendy MD, Snir S (2005) Maximum likelihood Jukes-Cantor triplets: analytic solutions. Mol Biol Evol 23(3):626–632

Chor B, Hendy M, Penny D (2007) Analytic solutions for three taxon ML trees with variable rates across sites. Discret Appl Math 155(6–7):750–758

Chor B, Khetan A, Snir S (2003) Maximum likelihood on four taxa phylogenetic trees: analytic solutions. In: Proceedings of the seventh annual international conference on Research in computational molecular biology, pp 76–83

Coons JI, Sullivant S (2021) Toric geometry of the Cavender–Farris–Neyman model with a molecular clock. Adv Appl Math 123:102119

Cover TM (2006) Elements of information theory. Wiley, New York

Evans SN, Speed TP (1993) Invariants of some probability models used in phylogenetic inference. Ann Stat 21:355–377

Garcia Puente LD, Garrote-López M, Shehu E (2022) Computing algebraic degrees of phylogenetic varieties. arXiv:2210.02116

Gross E, Davis B, Ho KL, Bates DJ, Harrington HA (2016) Numerical algebraic geometry for model selection and its application to the life sciences. J R Soc Interface 13(123):20160256

Hendy MD (1991) A combinatorial description of the closest tree algorithm for finding evolutionary trees. Discret Math 96(1):51–58

Hendy MD, Penny D (1993) Spectral analysis of phylogenetic data. J Classif 10:5–24

Hendy MD, Penny D, Steel MA (1994) A discrete Fourier analysis for evolutionary trees. Proc Natl Acad Sci 91(8):3339–3343

Hobolth A, Wiuf C (2024) Maximum likelihood estimation and natural pairwise estimating equations are identical for three sequences and a symmetric 2-state substitution model. Theor Popul Biol 156:1–4

Hoşten S, Khetan A, Sturmfels B (2005) Solving the likelihood equations. Found Comput Math 5(4):389–407

Jukes TH, Cantor CR et al (1969) Evolution of protein molecules. Mamm Protein Metab 3:21–132

Kosta D, Kubjas K (2019) Maximum likelihood estimation of symmetric group-based models via numerical algebraic geometry. Bull Math Biol 81(2):337–360

Magnus JR, Neudecker H (2007) Matrix differential calculus with applications in statistics and econometrics, 3rd edn. Wiley, New York

Matsen FA (2008) Fourier transform inequalities for phylogenetic trees. IEEE/ACM Trans Comput Biol Bioinf 6(1):89–95

Maxim LG, Rodriguez JI, Wang B, Wu L (2024) Logarithmic cotangent bundles, Chern–Mather classes, and the Huh–Sturmfels involution conjecture. Commun Pure Appl Math 77(2):1486–1508

Nguyen L-T, Schmidt HA, Von Haeseler A, Minh BQ (2015) IQ-TREE: a fast and effective stochastic algorithm for estimating maximum-likelihood phylogenies. Mol Biol Evol 32(1):268–274

Parks SL, Goldman N (2014) Maximum likelihood inference of small trees in the presence of long branches. Syst Biol 63(5):798–811

Price MN, Dehal PS, Arkin AP (2010) FastTree 2 – approximately maximum-likelihood trees for large alignments. PLoS ONE 5(3):e9490

Rodriguez JI, Wang B (2017) The maximum likelihood degree of mixtures of independence models. SIAM J Appl Algebra Geom 1(1):484–506

Semple C, Steel M (2003) Phylogenetics, vol 24. Oxford University Press on Demand, Oxford

Stamatakis A (2014) RAxML version 8: a tool for phylogenetic analysis and post-analysis of large phylogenies. Bioinformatics 30(9):1312–1313

Steel M (1994) The maximum likelihood point for a phylogenetic tree is not unique. Syst Biol 43(4):560–564

Sturmfels B, Sullivant S (2004) Toric ideals of phylogenetic invariants. J Comput Biol 12(2):204–228

Sullivant S (2018) Algebraic statistics, vol 194. Graduate Studies in Mathematics. American Mathematical Society, Providence, RI

Susko E, Roger AJ (2021) Long branch attraction biases in phylogenetics. Syst Biol 70(4):838–843

Yang Z (2000) Complexity of the simplest phylogenetic estimation problem. Proc R Soc Lond Ser B: Biol Sci 267(1439):109–116

Yang Z (2006) Computational molecular evolution. Oxford University Press, Oxford

Yang Z (2007) PAML 4: phylogenetic analysis by maximum likelihood. Mol Biol Evol 24(8):1586–1591

Acknowledgements

Rodriguez is partially supported by the Alfred P. Sloan Fellowship and the Office of the Vice Chancellor for Research and Graduate Education at UW-Madison with funding from the Wisconsin Alumni Research Foundation. Additional parts of this research were performed while JIR and MH were visiting the Institute for Mathematical and Statistical Innovation (IMSI) for the semester-long program on “Algebraic Statistics and Our Changing World.” IMSI is supported by the National Science Foundation (Grant No. DMS-1929348). MH is grateful for helpful discussions and feedback from Cécile Ané and Kaie Kubjas. The authors are thankful for the valuable comments by the referees.


Appendices

Omitted Proofs

A.0.1 Proof of Lemma 4.6

Proof of Lemma 4.6

Let \(\theta \in \mathcal {G}_{\pi }\). For simplicity, write \(i=\pi (1)\), \(j=\pi (2)\), and \(k=\pi (3)\). Since \(\theta _{k}=0\), Eq. (12) implies that

Since the distribution depends only on the product \(\theta _{i}\theta _{j}\), and not on \(\theta _{i}\) or \(\theta _{j}\) individually, the log-likelihood restricted to the set \(\mathcal {G}_{\pi }\) may be regarded as a function of the single variable \(x:=\theta _{i}\theta _{j}\in (0,1)\). Plugging Eq. (57) into Eq. (8), we obtain

where the second equality follows from \(\sum _{\sigma \in \left\{ 1,-1\right\} }s_{\sigma }=N\). Regrouping terms,

Differentiating gives

Solving the equation \(\ell '(x)=0\), we find that \(\ell \) has at most one critical point on (0, 1), which is the point

provided that \(B_{ij}\in (0,1)\). If this is the case, then x is a local maximum by the second derivative test, since

Plugging \(x=B_{ij}\) into Eq. (58) implies Eq. (43), since \(M_{ij}^{\pm }=M_{\pi }^{\pm }\) and \(B_{ij}=B_{\pi }\).

Equation (44) then follows from Eq. (43) along with the observations that \(M^{+}_{\pi }+M^{-}_{\pi }=N\), \(1+ B_{\pi }=\frac{2\,M^{+}_{\pi }}{N}\), and \(1- B_{\pi }=\frac{2\,M^{-}_{\pi }}{N}\). \(\square \)
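The one-variable maximization in this proof can be illustrated numerically. The sketch below rests on an assumption: it takes the restricted log-likelihood to have the form \(M^{+}\log (1+x)+M^{-}\log (1-x)\) up to an additive constant. That form is inferred from the identities \(M^{+}_{\pi }+M^{-}_{\pi }=N\) and \(1\pm B_{\pi }=2M^{\pm }_{\pi }/N\) quoted above, and is not copied from Eq. (58). The counts `m_plus` and `m_minus` are hypothetical.

```python
import math


def restricted_loglik(x: float, m_plus: int, m_minus: int) -> float:
    """Assumed log-likelihood on G_pi as a function of x = theta_i * theta_j.

    Additive constants are omitted; they do not affect the maximizer.
    """
    return m_plus * math.log(1 + x) + m_minus * math.log(1 - x)


def critical_point(m_plus: int, m_minus: int) -> float:
    """Solve m_plus/(1+x) - m_minus/(1-x) = 0 for x."""
    return (m_plus - m_minus) / (m_plus + m_minus)


# Hypothetical counts with m_plus > m_minus, so the critical point is in (0, 1).
m_plus, m_minus = 70, 30
b = critical_point(m_plus, m_minus)

# Brute-force maximization over a grid of interior points of (0, 1).
grid = [k / 1000 for k in range(1, 1000)]
best = max(grid, key=lambda x: restricted_loglik(x, m_plus, m_minus))

# Second derivative at the critical point; a negative value confirms a local maximum.
second_derivative = -m_plus / (1 + b) ** 2 - m_minus / (1 - b) ** 2

print(b, best, second_derivative)
```

Under this assumed form the critical point equals \((M^{+}-M^{-})/N\), which agrees with the value \(B_{ij}\) identified in the proof, and the second derivative is negative for any positive counts.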

A.0.2 Proof of Lemma 4.9

Proof of Lemma 4.9

We prove the case with \(\pi =(1)\), as the proofs for the cases with \(\pi \in \left\{ (123),(132)\right\} \) are similar. If \(\mathcal {E}_\textrm{int}=\emptyset \) then there is nothing to show, so henceforth assume \(\mathcal {E}_\textrm{int}\ne \emptyset \). By Lemma 4.2, \(\mathcal {E}_\textrm{int}=\left\{ \theta ^{*}\right\} \) and

Also by Lemma 4.2 it must be the case that

Moreover since \(B_{12}>0\), it follows that \(\mathcal {G}_{(1)}\ne \emptyset \) by Lemma 4.6.

Let \(\theta \in \mathcal {G}_{\pi }\). By Eq. (44),

Therefore by Theorem 4.7 and by Eq. (59),

Suppose that equality holds in the above, i.e., that \(\ell (\theta )=\ell (\theta ^{*})\). Then by Theorem 4.7,

Therefore,

but this contradicts Eq. (60). We conclude that \(\ell (\theta )<\ell (\theta ^{*})\). \(\square \)

A.0.3 Proof of Lemma 4.12

Proof

Since \(NB_{\pi }=2M^{+}_{\pi }-N\) and \(NB_{\tilde{\pi }}=2M^{+}_{\tilde{\pi }}-N\), Eq. (50) can be rewritten

and therefore it holds that

where the last inequality holds by A.1.