Abstract

Instructional designers perform informal formative evaluation in design practice. An evaluation may be used to locate errors in alignment of instructional objectives or to increase the quality or effectiveness of a design. An instructional design review is similar to peer reviews in higher education which are often structured, and tools are provided to contribute to the review. A study was performed to identify the support structures and tools that contribute to building a community of feedback within the practice of instructional design reviews. Six instructional designers and design managers were interviewed to gather the processes they use in design reviews and to understand their perceptions of the practice. There was an alignment between manager support and an environment that promotes a supportive review. The designers described a “culture of feedback” when there was structure and there were supports provided for reviews.

When students have negative perceptions of feedback during peer assessments in higher education, goals of the assessment may not be met (Lowell & Ashby, 2018; McDonald et al., 2019; McMahon, 2010; Ozogul & Sullivan, 2009; Woolf & Quinn, 2001). This negativity could be due to a lack of a community feeling or unclear instructions for the feedback activity. Instructors put processes in place to minimize the issues students encounter when completing peer feedback using training, checklists, or forms. These measures aid in driving the activities for the classroom toward meeting the educational goals and creating higher satisfaction rates of the activity.

Similarly, feedback in instructional design practice is a part of the formative evaluation process where designers have others review their designs or development work before final sign-off (DeVaughn & Stefaniak, 2020b; Williams et al., 2011; Woolf & Quinn, 2001). When feedback is not sought or considered, an organization could incur undue costs through errors in the materials (Klein & Kelly, 2018). Designers may lack confidence or comfort with giving or receiving feedback during a design review, and it is important to understand the reasons behind it. The following study sought to understand the process of instructional designers practicing informal formative evaluation with a close examination into the support structures and tools that contribute to building a community of feedback within the practice of instructional design reviews.

Literature Review

The Role of Informal Formative Evaluation in Instructional Design

Evaluation is a part of many instructional design (ID) models and processes such as the Systems Approach model for designing instruction and the ADDIE (Analysis, Design, Development, Implementation, Evaluation) process (Dick et al., 2005; Guerra-Lopez, 2008; Morrison et al., 2013). Morrison et al. (2013) explained how evaluation is one of the “fundamental components of instructional design” (p. 14). Evaluation continues to be an important part of ID as it leads to creating higher quality design products that meet the educational goals for the product (Chen et al., 2012; Guerra-Lopez, 2008).

Evaluation can be informal or formal and can be categorized as formative, summative, and confirmative (DeVaughn & Stefaniak, 2020a, b; Guerra-Lopez, 2008; Morrison et al., 2013). Formative evaluation is typically implemented iteratively during the design and development phases for the purpose of improvement, which could be performed by internal colleagues or external stakeholders (Scriven, 1967, 1991). Formative evaluation purports to ensure the product is free of errors and meets the objectives of the evaluation (Chen et al., 2012; Guerra-Lopez, 2008). Summative evaluation generally takes place after the educational product is complete and is intended for the purpose of evaluating the effectiveness of the product, while confirmative evaluation serves to test the effectiveness of a product over time (Guerra-Lopez, 2008; Morrison et al., 2013; Scriven, 1967, 1991).

Informal formative evaluation is the everyday process of designers or subject matter experts reviewing the design and development of curricula toward improvement of a product, and it is such an informal part of designers’ work that designers may not consider the act an evaluation process (DeVaughn & Stefaniak, 2020b; Williams et al., 2011). Due to various reasons (such as employers not understanding the need for evaluation, lack of time for deploying a training product, or costs of conducting evaluation), informal formative evaluation is happening in practice more than formal formative, summative, or confirmative evaluation (DeVaughn & Stefaniak, 2020a).

Competencies Expected for ID Practitioners

There are many competencies cited in literature for IDs to have in practice. When ranked, evaluation as a general term is one of the more mentioned, standard competencies (Ritzhaupt et al., 2018; Sugar et al., 2011). A hurdle with researching competencies is that studies often do not delineate evaluation and other competencies or separate evaluation by formative, summative, and confirmative. As an example, within the Rabel and Stefaniak (2018) article, evaluation is grouped with implementation. In Ritzhaupt et al. (2018), project management and providing feedback are grouped as a highly ranked competency. Employers expect designers to have certain core ID competencies when they begin a position in an organization, and they do not believe they should need to train the IDs in core competencies (Rabel & Stefaniak, 2018).

The Klein and Kelly (2018) article compiled competencies into five categories: “(1) instructional design, (2) instructional technology, (3) communication, and interpersonal, (4) management, and (5) personal skills” (p. 238). These five categories should be present in entry-level and expert IDs, with a stronger emphasis on instructional technology for entry-level designers and a higher level of competency in instructional design for expert designers. Interviewees in Klein and Kelly (2018) indicated understanding evaluation is more of an expert skill than an entry-level skill; however, they also mentioned evaluation is not used often in their practices for measuring the effectiveness of products. In Rabel and Stefaniak’s (2018) findings, evaluation was cited as being taught to entry-level designers during onboarding. Rabel and Stefaniak (2018) also mentioned the aspect of IDs having more skills than employers engage them to use. Evaluation was one of the skills Rabel and Stefaniak (2018) indicated it would behoove organizations to use but it was not used as much in practice. A lack of IDs or employers understanding evaluation, and describing evaluation in different ways, contributes to difficulties in knowing where evaluation is ranked in ID competencies.

Even though evaluation may not be as requested by employers, the employers were requesting the skills and competencies aligned to informal formative evaluation or the act of providing design feedback (Klein & Kelly, 2018). Communication and collaboration skills were generally ranked as most mentioned competencies for designers (Klein & Kelly, 2018; Sugar et al., 2011; Wang et al., 2021). Since providing design feedback during formative evaluation requires working with others and providing clear instructions for improvement, communication and collaboration would be key skills used during the act of providing design feedback (Lowell & Ashby, 2018). Designers should be able to effectively communicate and collaborate with internal and external stakeholders and within their teams (Klein & Kelly, 2018; Scriven, 1967, 1991; Wang et al., 2021).

Challenges in Providing Design Feedback

There were studies of the challenges of evaluation in practice that laid the groundwork for how informal formative evaluation is happening more than summative and confirmative (DeVaughn & Stefaniak, 2020b; Williams et al., 2011). There was little research of evaluation in ID practice (DeVaughn & Stefaniak, 2020a). There was also discussion of how faculty and practitioners knew evaluation was important, but students and employers did not always share the understanding of importance. ID practitioners may be performing evaluation, but they may not realize their practices are defined as evaluation. Additional discussion indicated if students or practitioners understood the importance of evaluation, then they may be able to encourage employers to practice evaluation in the field. This mirrored information from Guerra-Lopez (2008) where there was an explanation of how evaluators must be able to articulate the purpose of an evaluation and keep stakeholders focused on the most effective evaluation strategies.

DeVaughn and Stefaniak (2020b) shared the challenge of how evaluators or reviewers of design may not give effective feedback or may veer from the intent of the review. There were similar discussions in Scriven (1967) where he noted there may be challenges when a reviewer is not an expert in the curriculum being reviewed. Some research provided insight into the differences between expert and novice designers and how they perform evaluation or action design feedback (Klein & Kelly, 2018; LeMaistre, 1998). Klein and Kelly (2018) provided an explanation of how the expert designers were more involved in evaluation processes in general. LeMaistre (1998) also conducted a study to help differentiate evaluation processes between expert and novice designers. The findings showed differences between the less experienced and more experienced designers for how the designers chose to action feedback, how much feedback was actioned, and how the designer articulated the feedback they were reviewing.

A recommendation from many studies was to have higher education students perform authentic assignments where evaluation processes are included from the beginning of product design to the end within the curriculum of a course (DeVaughn & Stefaniak, 2020a, b; Klein & Kelly, 2018; Woolf & Quinn, 2001). Sugar (2014) demonstrated the authentic teaching strategy through the creation and implementation of ID case studies that were used within assignments in the classroom. Another recommendation could be within the onboarding of an ID. Rabel and Stefaniak (2018) provided research findings related to onboarding IDs in practice, which included evaluation processes where more expert designers were paired with more novice designers to train them on core competencies needed for the job.

Types of Formative Evaluation Support Tools

Information about the types of tools used by ID practitioners during design reviews for formative evaluation seemed limited, but there were studies where tools were used within higher education during peer reviews or peer assessments (Lowell & Ashby, 2018; McDonald et al., 2019; McMahon, 2010; Woolf & Quinn, 2001). A process of formative evaluation includes using feedback to continuously improve the educational product (Scriven, 1967, 1991), so in higher education, instructors used this same feedback structure as a part of their curriculum and it was described as peer feedback or peer assessment. Furthermore, ID higher education programs incorporated peer feedback practices within the formative review of educational product design and development as a part of their curricula.

Within the studies, instructors used detailed instructions, checklists, forms, and training as formative evaluation support structures and tools (Lowell & Ashby, 2018; McDonald et al., 2019; McMahon, 2010; Woolf & Quinn, 2001). McDonald et al. (2019) studied ID studios for the ID program curriculum and they had more experienced students provide feedback to the less experienced students. Woolf and Quinn (2001) had the instructor provide effective feedback throughout the semester as a part of training students to perform feedback correctly. What was evident in many studies was support structures and tools were needed for formative evaluation to be successful, and even with the inclusion of tools, there were still some challenges with knowing whether to action the feedback (Lowell & Ashby, 2018; Woolf & Quinn, 2001).

Perceptions of the Peer Feedback Process

Lowell and Ashby (2018) shared that they redesigned a course formative evaluation activity after students reported negative perceptions of receiving feedback and expressed their lack of confidence in providing feedback. Students shared that the negative perceptions kept them from actioning the feedback they received from peers. In their redesign of the activity, additional training was provided to teach the students how to provide feedback and they used a form for guiding the feedback. Another aspect noted was that once the students understood the importance of providing feedback, they paid more attention to the feedback aspect of the assignment. The study pointed out the need for providing a space that felt safe for providing feedback and the need for a community feel within a course. McMahon (2010) and Woolf and Quinn (2001) had previously shared findings similar to those of Lowell and Ashby (2018) in both categories of creating a safe community space and in providing training for providing feedback. Prior to implementing training, the students in the McMahon (2010) study had shared similar negative feelings about receiving or providing feedback.

In the McDonald et al. (2019) article where the program implemented a design studio experience, the advanced students who provided the feedback expressed some lack of confidence in providing feedback. Both sets of students who received and gave the feedback shared they understood more about the importance of providing feedback after going through the design studio experience and both sets of students had an increase in confidence after completing the peer review process. Students in the Lowell and Ashby (2018) and McMahon (2010) studies felt the same increase in confidence once additional support structures and tools were added to the activity.

Purpose of Study

ID practitioners must undergo design reviews and receive feedback as a part of the informal formative evaluation process to improve the quality of educational products. It is unclear from ID practitioner research if IDs are experiencing similar negative perceptions to what students felt in higher education peer review processes (Lowell & Ashby, 2018; McDonald et al., 2019; McMahon, 2010; Woolf & Quinn, 2001). It is also unclear what support structures or tools ID practitioners are provided in their workplaces that support design review feedback processes and if the support structures and tools are similar to what higher education instructors are using in peer feedback activities. The following research question has been identified: What are the support structures and tools that contribute to building a community of feedback within the practice of instructional design reviews?

Methods

This qualitative study sought to explore if there were common themes with informal formative evaluation support structures and tools. There was also an effort to determine if there were common perceptions about informal formative evaluation amongst instructional design practitioners across various industries.

Participants

Six participants (three women and three men) were purposefully recruited via social media and personal networks (Creswell & Guetterman, 2019). One important criterion was that the participants were responsible for design and/or development of instructional products and they were working within the design and development processes a minimum of 50% of their role.

Two of the participants (see Table 1) were working in higher education, two were in corporate healthcare positions, one was from aviation, and one was from the beverage industry. One of the participants was a manager of an instructional design team. The participants ranged from one year to more than 30 years of instructional design experience.

Instruments

An interview protocol was developed to ensure questions were consistent and allowed for the interviewer to ask the participants to expand on their responses (Leedy & Ormrod, 2019). The beginning questions of the protocol asked the interviewee to talk about their own experiences openly. Next, the questions provided were aligned to the research questions, and finally, the end of the interview was where the interviewer expressed appreciation for the interviewees’ time and discussed next steps (Creswell & Poth, 2018).

Procedures

To recruit the participants, a social media post and an email template were created with inclusion-criteria questions. The social media post was shared with other instructional designers on LinkedIn and Facebook and included a call for instructional designers. The inclusion questions were:

- Are you currently working as an instructional designer or manager of an instructional design team?
- Does at least 50% of your current role include designing and developing instructional products?
- Are internal or external stakeholders reviewing the design and/or development of your work (this can include your own design team members)?

Participants who met the criteria reached out via email to begin their interview process.

Following guidelines for semi-structured interviews, the participants were asked the same questions, with additional questions used to query for details related to the main questions (Creswell & Guetterman, 2019; Creswell & Poth, 2018; Leedy & Ormrod, 2019). The interviews took place using web conference software (Zoom) and were recorded. Notes were taken during the interviews and the transcript feature was used within Zoom to capture and transcribe the interviews. Each interview lasted approximately 30–50 minutes.

Using Braun and Clarke’s (2006) phases of thematic analysis, the data underwent many steps to code and produce themes. Member checking was used (Creswell & Poth, 2018), and the participants were asked to review the themes to ensure the information was captured accurately. Last, the themes of the data were reported with the purpose of informing future research.

Data Analysis

The interviews were recorded and transcribed in Zoom and analyzed utilizing Microsoft Word and Excel to determine if there were common themes in the information provided about the formative evaluation support structures and tools or perceptions of the process. To attenuate researchers’ own bias, an analytic memo was kept and all previous experiences with the phenomena were written down to help in separating the researcher’s experiences from the experiences of the participants (Creswell & Poth, 2018; Moustakas, 1994; Saldana, 2021).

Following the six-phase process by Braun and Clarke (2006), the interview transcriptions were cleaned and compared to the interview recordings to ensure the text was accurately captured. Table 2 includes the themes identified and defined through the qualitative thematic analysis process. Each theme was aligned to the research question where support structures and tools that contribute to building a community of feedback within the practice of instructional design reviews were identified.

Results

Four themes emerged from the interviews that are aligned to the research question focusing on support structures and tools that contribute to providing feedback during the formative design review process: clarifying criteria defined for reviews, structures for review cycles, project management of reviews, and fostering a culture of feedback.

Theme 1: Review Criteria

Elizabeth shared, “challenges for external review are that people often don’t know what they want. And so you have to develop a really clear process on getting every step approved along the way…” The review criteria the reviewers would focus on during reviews were common amongst many of the designers interviewed. They usually had a review where they ensured alignment to goals, objectives, and audience needs. There was often a copyedit-type review for grammatical and spelling errors. There was also a review to ensure consistency with organizational brand standards.

The reviews then varied depending on higher education or corporate needs. The reviews also differed by the role of the reviewer. Reviewers might be peer reviewers within the same design team, the manager of the design team, subject-matter experts for the content, or stakeholders who would need to sign off on the final product. Review processes often began internal to the designer’s team and then moved external to the designer’s team but remained within the organization. The scope of the review also changed based on the role of the peer reviewer, subject matter expert, or stakeholder. Designers mentioned the seriousness of the content and how that aspect drove reviews throughout the organization. Other designers mentioned how understanding the larger organization initiatives would play into design reviews. There may be reviewers who were looking for how the course fit into the larger strategic plans and the reviewer ensured it met those criteria.

Review’s Focus

One of the corporate designers shared how their internal team used a collaborative checklist where reviewers could see each other’s feedback. The areas of the checklist included objective items such as correct formatting for the organization and grammatical or spelling errors. They also included a section for subjective feedback where the reviewers provided their opinions or suggestions for the design. The designer would action objective feedback, but they were given the option to choose to action the subjective feedback.

Another corporate designer described how they would keep stakeholders focused during reviews by limiting what they saw during a review. For example, during the content review, the content was placed in a cloud-based document and stakeholders were asked to review the content only. This process allowed for stakeholders to see each other’s reviews and debate amongst each other for what changes should happen. In a similar vein, when the designer needed stakeholders to review the look and formatting of the course, they added text and image placeholders asking stakeholders to concentrate on the formatting of the course and not be distracted by content. Other participants described adding focused questions to the review instructions. The questions would help designers guide stakeholders for the information needed from them for the course. Keeping reviewers focused was one challenge mentioned but mitigated by adding structure into the processes.

Theme 2: Review Structures

When asked to share their feedback processes, Ben, a higher education designer, described how “…back-and-forth e-mails [was] so unproductive, it gets lost.” Many of the participants described processes outside of exchanging emails to share feedback. All participants used meetings with team members and/or stakeholders with varying degrees of success.

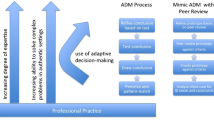

Most participants described iterative reviews that took place throughout the design and development phases. The reviews were iterative in that feedback was provided, the designer actioned the feedback, and then the changes were shared with the reviewers. They also described how reviews were structured differently if it was a peer review versus a review from a subject matter expert or stakeholder. All the reviews mentioned by participants were internal to the organization, but they labeled reviews within their team as “internal” and reviews external to their team as “external.” Callie, a manager of corporate designers, described their processes from beginning to end through the design and development phases (see Fig. 1). Within this process, each section of the design or development phase went through her (as the manager) and then stakeholder reviews and was later returned to the designer to action the feedback. Once the product went through many rounds of reviews for each stage, the product was ready for beta testing. At this point, the course was provided via a Learning Management System and shared with multiple reviewers. Each reviewer was assigned a specific role or focus in the review. The feedback was then actioned again by the designer toward finalizing the product.

A commonality between Frank (in higher education) and Callie (in corporate design) was to tailor the feedback delivery to the person. In higher education, Frank might interact with an instructor based on the instructor’s preferences. Callie explained how she changes the structure of feedback delivery for her team based on the individual designers’ preferences. Some of the tools and technologies used by participants are described in Table 3.

While many structures were shared, one designer explained how the process looks different with each team they have been on or with different stakeholders. The tools used also tended to align with the viewpoint or focus of the reviewer. Many of the designers shared that they had defined review processes for each phase of the design or development of the product. A challenge mentioned by most designers was the timing of reviews, which is highlighted in the next section.

Theme 3: Project Management

The designers found common ground in expressing a challenge with timeliness of review cycles. An interesting note Elizabeth brought up was that the size of the project did not necessarily equate to the ease of managing a project. When working with stakeholders external to the team, the nuances changed based on the person. Most designers mentioned the lack of time stakeholders had for reviews in their schedules. They also mentioned that a review of a product was not always considered a part of the stakeholders’ job roles, so there were competing priorities. It was often a stakeholder external to the team who caused issues with a design product being completed on time or not being completed at all. Similarly, another designer described how it was difficult to plan for stakeholder reviews without knowing how long a stakeholder may have to set aside for reviews. Table 4 compiles the designers’ advice for keeping projects on track.

Another challenge described was that stakeholders generally made more comments at the stage where they could see the whole course. This stage could take more time due to going back and forth to ensure the requested changes were captured accurately. Overall, many project management processes, and challenges associated with the processes, were described by the designers when providing their structure of formative evaluations.

Theme 4: Culture of Feedback during Evaluation

Several designers mentioned “culture” when describing their team relationships and their review processes. Adam stated providing feedback within a team was “a culture thing” and that it was something organizations could not train. In the same light, however, he spoke of shadowing his manager during review processes and seeing how his manager interacted with others while going through reviews.

Elizabeth mentioned new designers may come from other organizations where providing feedback was not a part of their “culture” and expressed how they had a “strong team culture.” It was the mentions of “culture” where an exploration began to understand what the underlying causes were to create a “culture of feedback.”

Collaboration and Perception of Feedback

The designers interviewed described the multiple ways their organizations would collaborate. Often there were defined project groups with various roles within the group and the team members might create different parts of the product. Most teams were given clear roles to perform for the team during a project. Adam described how each team member looked over each other’s shoulders “in the friendliest way.” The environments described were ones where the focus was on collaborating with each other to get to the best and highest quality product. Designers would comment that they did not take the feedback personally since they understood the goals of feedback. Ben, in higher education, described how a break-down in collaboration came when he did not share his product with other team members. The final product had flaws that were not caught until the end-users pointed out the issues. The higher education designers described a partnership with the instructors they worked with in projects. The end products were of higher quality when they could engage and brainstorm with the instructor.

Designers brought up how their positive or negative perception of feedback often depended on the person they were giving feedback to or receiving feedback from. For example, they felt benefits in internal reviews where the reviewers were designers or reviews where there was a subject matter expert. They mentioned how feedback from stakeholders may not always have the same depth or detail. Frank, in higher education, mentioned making sure to keep in mind how much work a person had put into the design of a course when approaching reviews, thereby making it a more positive experience.

A designer expressed how there were benefits in getting multiple peer reviews and peer perspectives. They also mentioned how team members may not always see it this way. If a team member is new, for example, they may feel intimidated by the review process and may not feel confident in the giving or receiving of feedback. This feeling leads into the next suggestions from designers.

Role of Leadership in Reviews and Contributions to Designer Professional Growth

Most designers mentioned their leader and how their leader helped build a comfortable space for reviews and how leaders often modeled giving and receiving feedback for teams. One designer’s team holds weekly design meetings where they share design ideas with each other. This is used to build confidence in the designers and team member relationships. When considering past experiences, a corporate designer suggested teams take the trends from reviews (where there were issues or where things went well) and share those as a means of professional development in team meetings. Most expressed tactics such as these led to team members being more comfortable with each other and more confident in review processes.

A corporate designer described how they would demonstrate feedback reviews with their manager by openly reviewing each other’s work and showing how the process made the work better. Callie brought up the point of building relationships with her design team and how she provided feedback to the designers based on the preferences of the designer. Callie attributed reviews going well to having “a rockstar team where ego does not exist.” The team members had a willingness to learn through their reviews toward becoming better designers. They would take what they learned in reviews and transfer the knowledge to future reviews.

Another aspect evident in the teams where review processes went well was leaders providing designers autonomy in their work. This might mean giving a designer a project to own from beginning to end, or giving the designer the support to accept or deny feedback from reviewers. The manager would “empower” the designer to understand the difference between what was a suggestion and what was a necessary change to the design. In cases where design feedback went well, there were clear guidelines for scope of reviews and the designers were encouraged to defend their design choices with stakeholders.

Discussion

As described by DeVaughn and Stefaniak (2020a, b), the designers who participated in this study were also using informal formative evaluation techniques as a part of their design and development processes. In line with studies of peer assessments in higher education (Lowell & Ashby, 2018; McDonald et al., 2019; McMahon, 2010; Ozogul & Sullivan, 2009; Woolf & Quinn, 2001), the participants shared their use of tools and support structures which aided in the completion of design reviews and kept design reviews within scope.

There were differences between the work of higher education and corporate instructional designers, and consequently their use of tools and structures also differed. In higher education, the professors with whom the instructional designers worked closely often served as both subject-matter expert and final product stakeholder. This dual role gave less flexibility to the instructional designer and more autonomy to the professors, who had final sign-off and ownership of the courses. Due to this dynamic, the structures and tools differed for the higher education designers. They used structures such as a template to gather the most important information from the professors and a formal checklist such as the Quality Matters © rubric to make suggestions for the course. In corporate settings, by contrast, the checklists were internal to the organization and often allowed for more flexibility in design choices. In higher education, there was less structure around the review processes and more structure in managing the relationship and expectations with the professor.

A common challenge among the participants was keeping the stakeholders within the timeline for course creation, so many of the structures and tools they used fell within the realm of project management. The corporate designers collaborated with many stakeholders throughout their design review iterations, while the higher education designers generally had only an individual professor as the stakeholder for each course. An interesting note from corporate designers was that review stakeholders did not necessarily have reviewing courses as a part of their defined job roles, whereas a professor was more likely to be allotted time for course creation and edits. The inclusion of more reviewers in corporate environments, and the lack of time allotted within the reviewers’ regular work duties, meant the project management tools and processes differed slightly between corporate and higher education designers. Both sectors generally had a kick-off meeting to orient the stakeholders to the project timeline and milestones. In higher education, a contract was used to define roles and responsibilities between the professor and designer and to provide the dates for milestones. The corporate designers incorporated templates for sharing instructions since they had multiple groups of stakeholders to engage. In software design, a tracking system was used to organize the hand-offs between the many stakeholders involved in the review processes. For both groups, there was some success in using structures and tools to help keep stakeholders informed and on track in their reviews. The use of tools and structures seemed to align with positive perceptions of evaluation processes, as described in previous studies, and the absence of tools or structures aligned with negative perceptions (Lowell & Ashby, 2018; McDonald et al., 2019; McMahon, 2010; Ozogul & Sullivan, 2009; Woolf & Quinn, 2001).

The designers described how confidence in design feedback processes stemmed from environments where there was a “culture” of feedback. This aligns with studies that identified a need for an environment where designers could feel safe sharing or receiving feedback (Lowell & Ashby, 2018; McMahon, 2010; Woolf & Quinn, 2001). Leadership support was key in modeling positive feedback processes and bore a similarity to Woolf and Quinn’s (2001) study, where the instructor first demonstrated feedback before the peers provided feedback to each other. In the corporate spaces, the role of the manager seemed more prominent than in the higher education environments. Corporate designers shared how there was a back-and-forth collaborative design process with their managers and team members. In higher education, the instructional designer seemed more isolated and worked directly with a professor, with less emphasis on iterations within a team atmosphere or checkpoints with a manager.

An interesting finding was that the designers stated there was no formal training in formative evaluation, which previous authors identified as a need in research on peer assessments (Lowell & Ashby, 2018; McMahon, 2010; Woolf & Quinn, 2001). However, while the corporate designers said there was no formal training, they described aspects of cognitive apprenticeships, such as shadowing their leaders or more expert designers as they went through the design processes (Brown et al., 1989). Mentoring aspects of apprenticeships were evident in the corporate environments when design team leaders or more expert designers would first provide design feedback to each other, showing more novice team members that it was a safe space to provide feedback and demonstrating the appropriate way to provide it. The designers did not specifically name the mentoring aspects “training,” but they were likely learning their formative evaluation processes by mirroring the actions of more senior designers, in line with the purpose of cognitive apprenticeships.

Participants ended their interviews by sharing their future evaluation plans and how they planned to improve their processes. In corporate design, the creation of design templates was one improvement designers suggested that may help in their feedback processes. Consistent with Morrison et al. (2013), the designers thought development time could decrease if there were templates orienting designers to use the style guides for their organizations. The creation of templates was not mentioned by the higher education designers since their designs were often confined to courses within a Learning Management System. For higher education designers, future plans centered on strengthening their relationships with professors. A designer described how they must approach a professor’s work with sensitivity and with the knowledge that the professor may have strong opinions about the work they have provided. Therefore, there is a need to have a strong partnership with the professor as a stakeholder. The higher education designers also shared how they would like to have more access to summative evaluation (e.g., end-of-course surveys and final assessments) so they could gauge how their courses were perceived by students and measure the effectiveness of the courses (Guerra-Lopez, 2008; Morrison et al., 2013; Scriven, 1967, 1991).

In the interviews, most participants had a structure for review processes. They also had strong support and mentorship from leadership to engage in reviews. Most expressed positive perceptions of their processes as they related to giving or receiving feedback. Where participants mentioned negative experiences with giving or receiving feedback, there was little to no structure in the processes and limited support from leadership. Most of the negativity expressed concerned stakeholder relations. One issue was that a stakeholder might give too much or too little feedback, and a common challenge was keeping stakeholder reviews timely within project plans. While project management may not fix all challenges with stakeholder feedback, it seemed to alleviate some issues with product timelines.

Overall, this study aligns with the peer assessment research in which tools and support structures contribute to successful reviews and perceptions of the processes are affected by the inclusion or exclusion of structure (Lowell & Ashby, 2018; McDonald et al., 2019; McMahon, 2010; Ozogul & Sullivan, 2009; Woolf & Quinn, 2001). Given the research and the information shared during the interviews, it would benefit instructional design practitioners in corporate environments to incorporate tools and structures into their review and project management processes, with the goals of improving perceptions of reviews and increasing the quality of training products. Higher education instructional design teams may want to consider adding peer or manager review cycles into their course creation work, in addition to their work with professors. The role of the higher education instructional design manager could also be evaluated to see if there are opportunities for mentoring and coaching during informal formative evaluation processes.

Limitations

A limitation of the study was the sample size and the inclusion of both higher education and corporate designers. It was evident in the interviews that higher education review processes differed greatly from corporate review processes. It would be helpful in the future to evaluate the two contexts separately to further explore the nuances of each sector.

Future Research

There are several areas to explore further from this study. Future studies should examine the project management aspects of design reviews in more depth. Project management is cited as one of the top competencies for designers (Ritzhaupt et al., 2018), and it would be valuable to investigate how higher education programs are helping designers develop these skills. If designers are not gaining these skills as a part of their programs, then organizations may need to provide them as a part of their professional development plans for designers.

A common thread in the themes was the role of leadership in review processes. A deeper dive into how to build a culture of feedback could help both higher education programs and practitioners with knowing how to develop this practice. It would behoove the instructional design industry to understand more about how instructional design leaders are oriented to managing design teams and understand the competencies needed in a leader to build the culture of feedback needed for teams.

References

Braun, V., & Clarke, V. (2006). Using thematic analysis in psychology. Qualitative Research in Psychology, 3(2), 77–101. https://doi.org/10.1191/1478088706QP063OA

Brown, J. S., Collins, A., & Duguid, P. (1989). Situated cognition and the culture of learning. Educational Researcher, 18(1), 32–42.

Chen, W., Moore, J. L., & Vo, N. (2012). Formative evaluation with novice designers: Two case studies within an online multimedia development course. International Journal of Instructional Media, 39(2), 95–111.

Creswell, J. W., & Guetterman, T. C. (2019). Educational research: Planning, conducting, and evaluating quantitative and qualitative research (6th ed.). Pearson Education Inc.

Creswell, J. W., & Poth, C. N. (2018). Qualitative inquiry and research design: Choosing among five approaches (4th ed.). Sage.

DeVaughn, P., & Stefaniak, J. (2020a). An exploration of how learning design and educational technology programs prepare instructional designers to evaluate in practice. Educational Technology Research and Development, 68(6), 3299–3326. https://doi.org/10.1007/s11423-020-09823-z

DeVaughn, P., & Stefaniak, J. (2020b). An exploration of the challenges instructional designers encounter while conducting evaluations. Performance Improvement Quarterly, 33(4), 443–470. https://doi.org/10.1002/piq.21332

Dick, W., Carey, L., & Carey, J. O. (2005). The systematic design of instruction (6th ed.). Pearson/Allyn and Bacon.

Guerra-Lopez, I. J. (2008). Performance evaluation: Proven approaches for improving program and organizational performance. Jossey-Bass.

Klein, J. D., & Kelly, W. Q. (2018). Competencies for instructional designers: A view from employers. Performance Improvement Quarterly, 31(3), 225–247. https://doi.org/10.1002/piq.21257

Leedy, P. D., & Ormrod, J. E. (2019). Practical research: Planning and design (12th ed.). Pearson Education.

LeMaistre, C. (1998). What is an expert instructional designer? Evidence of expert performance during formative evaluation. Educational Technology Research & Development, 46(3), 21–36.

Lowell, V. L., & Ashby, I. V. (2018). Supporting the development of collaboration and feedback skills in instructional designers. Journal of Computing in Higher Education, 30(1), 72–92. https://doi.org/10.1007/s12528-018-9170-8

McDonald, J. K., Rich, P. J., & Gubler, N. B. (2019). The perceived value of informal, peer critique in the instructional design studio. TechTrends, 63(2), 149–159. https://doi.org/10.1007/s11528-018-0302-9

McMahon, T. (2010). Peer feedback in an undergraduate programme: Using action research to overcome students’ reluctance to criticise. Educational Action Research, 18(2), 273–287.

Morrison, G. R., Ross, S. M., Kalman, H. K., & Kemp, J. E. (2013). Designing effective instruction (7th ed.). Wiley.

Moustakas, C. (1994). Phenomenological research methods. Sage.

Ozogul, G., & Sullivan, H. (2009). Student performance and attitudes under formative evaluation by teacher, self and peer evaluators. Educational Technology Research and Development, 57, 393–410.

Rabel, K., & Stefaniak, J. (2018). The onboarding of instructional designers in the workplace. Performance Improvement, 57(9), 48–60. https://doi.org/10.1002/pfi.21824

Ritzhaupt, A. D., Martin, F., Pastore, R., & Kang, Y. (2018). Development and validation of the educational technologist competencies survey (ETCS): Knowledge, skills, and abilities. Journal of Computing in Higher Education, 30(1), 3–33.

Saldana, J. (2021). The coding manual for qualitative researchers. Sage.

Scriven, M. (1967). The methodology of evaluation. In B. O. Smith (Ed.), Perspectives of curriculum evaluation (pp. 39–83). American Educational Research Association Monograph Series on Curriculum Evaluation. Rand McNally.

Scriven, M. (1991). Evaluation thesaurus (4th ed.). SAGE Publications.

Sugar, W. (2014). Development and formative evaluation of multimedia studies for instructional design and technology students. TechTrends, 58(5), 36–52.

Sugar, W., Hoard, B., Brown, A., & Daniels, L. (2011). Identifying multimedia production competencies and skills of instructional design and technology professionals: An analysis of recent job postings. Journal of Educational Technology Systems, 40(3), 227–249.

Wang, X., Chen, Y., Ritzhaupt, A., & Martin, F. (2021). Examining competencies for the instructional design professional: An exploratory job announcement analysis. International Journal of Training and Development, 25(2), 95–123.

Williams, D., South, J., Yanchar, S., Wilson, B., & Allen, S. (2011). How do instructional designers evaluate? A qualitative study of evaluation in practice. Educational Technology Research and Development, 59(6), 885–907. https://www.jstor.org/stable/41414978?seq=1&cid=pdf-reference#references_tab_contents. Accessed 7 Nov 2023

Woolf, N., & Quinn, J. (2001). Evaluating peer review in an introductory instructional design course. Performance Improvement Quarterly, 14(3), 20–42.

Funding

This study did not receive any funding.

Ethics declarations

Ethical Approval

This research project was reviewed by the Institutional Review Board at the university under study who then granted an approval.

Informed Consent

Informed consent was obtained from all individual participants included in the study.

Conflict of Interest

The authors declare that they have no conflict of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Smith, S., Luo, T. Identifying Support Structures Associated with Informal Formative Evaluation in Instructional Design. TechTrends 68, 485–495 (2024). https://doi.org/10.1007/s11528-024-00947-0

DOI: https://doi.org/10.1007/s11528-024-00947-0