Abstract

We present the results of a large-scale corpus-based comparison of two German event nominalization patterns: deverbal nouns in -ung (e.g., die Evaluierung, ‘the evaluation’) and nominal infinitives (e.g., das Evaluieren, ‘the evaluating’). Among the many available event nominalization patterns for German, we selected these two because they are both highly productive and challenging from a semantic point of view. Both patterns are known to keep a tight relation with the event denoted by the base verb, but with different nuances. Our study aims at a better understanding of the differences in their semantic import.

The key notion of our comparison is that of semantic transparency, and we propose a usage-based characterization of the relationship between derived nominals and their bases. Using methods from distributional semantics, we bring to bear two concrete measures of transparency which highlight different nuances: the first one, cosine, detects nominalizations which are semantically similar to their bases; the second one, distributional inclusion, detects nominalizations which are used in a subset of the contexts of the base verb. We find that only the inclusion measure helps in characterizing the difference between the two types of nominalizations, in relation to the traditionally considered variable of relative frequency (Hay, 2001). Finally, the distributional analysis allows us to frame our comparison within the broader context of the inflection vs. derivation cline.



1 Introduction

Nominalization is a word-formation process that is highly productive in many languages, and can refer to both the process and the result of “turning something into a noun” (Comrie & Thompson, 2007:334). In this paper, we focus on deverbal nominalizations, which turn verbs into nouns (e.g. English defer-ment, activa-tion), as opposed to de-adjectival (e.g., red-ness) or de-prepositional (e.g., up-ness) nominalizations. More specifically, we focus on event nominalizations, which denote the event itself (abandon-ment) or its result state/object (astonish-ment, contain-ment) – as opposed to, for example, participant nominalizations, which may denote the agent (smok-er) or the instrument (blend-er).

Event nominalizations are a subset of event-denoting nouns characterized by a specific derivational history, which distinguishes them from simple action nouns, such as trip or game. The term nominalization, indeed, points to the transpositional process that takes place when a verb is used as a base for a noun, and conveys the idea that we are talking about a complex word, not a simple one. From a semantic point of view, this class is different from other, more prototypical nouns: events are located in time and space, they are perceivable by the senses, but their perceptual properties are not constant and stable over time (Lyons, 1977:443).

Languages are usually equipped with multiple affixes which can be applied to verbal roots to produce event nominalizations (Koptjevskaja-Tamm, 1993), and often different patterns apply to different verbal bases. This is also the case for German, the language we focus on in this paper. The set of German event nominalizations includes the borrowed -ion (Spekulation, ‘speculate, speculation’, from the verb spekulieren), the native -t (Fahrt, ‘drive, ride’, from fahren), -e (Hilfe, ‘help’, from helfen) and -ung (Verteidigung, ‘defend, defense’, from verteidigen; see Footnote 1). In addition, stem-derived nominals like Fall (‘fall’, from fallen) and nominal infinitives (see Footnote 2), e.g. das Laufen, ‘walking’, can also be used to derive an event noun.

In this paper, we focus on the comparison between -ung deverbal nouns (henceforth UNGs, evaluieren, ‘to evaluate’ → die Evaluierung, ‘the evaluation’), and nominal infinitives (henceforth NIs, evaluieren, ‘to evaluate’ → das Evaluieren, ‘the evaluating’). We selected these two patterns because they are at the same time highly productive (they apply to a large set of verbal bases, making them good candidates for a corpus-based investigation) and rather challenging from a semantic point of view. Given that both UNGs and NIs keep a tight relation with the event denoted by the base verb, we ask what the difference in their semantic import is, and how it is reflected in usage.

This tight relation is demonstrated in the following examples, which show related usages of a base verb (1), a nominal infinitive (2), and an UNG nominalization (3): Footnote 3

(1) Die Erfolge der Therapiemaßnahmen können schnell evaluiert werden.
‘The successes of the therapeutic activities can be evaluated quickly.’

(2) Die oberste Doktrin ist dabei “fail fast”, also das schnelle Evaluieren von Ansätzen.
‘The highest doctrine is “fail fast”, which is the quick evaluation of the approaches.’

(3) Die Eingabe konzeptueller Entwurfsskizzen muss die schnelle Evaluierung mehrerer Alternativen ermöglichen […].
‘The input of conceptual drafts must enable the quick evaluation of multiple alternatives.’

These three examples have parallel argument structures: the evaluation event is realized with a Theme. The Agent is not present, but could be realized in all three sentences as a prepositional phrase headed by durch (‘by’). Their semantics is similar, and the choice between the base verb (evaluieren) and the nominalizations (Evaluierung, Evaluieren) may arguably be due to other syntactic, semantic or discourse factors.

That being said, base verbs and different nominalization patterns often differ clearly with respect to various factors. For example, nominalizations are subject to regularities regarding argument realization that differ from those of their base verbs, although there is a lot of debate on the topic (Grimshaw, 1990; Borer, 2005; Alexiadou, 2010). Some studies show an interaction between Aktionsart and type of nominalization. Borer (2005) finds that the English gerundive construction -ing of is acceptable with non-culminating events (the sinking of the ships, the falling of the stock prices) but not with achievements (*the arriving of the train). Other gerund formations and other derived nominals do not have these restrictions (the arrival of the train). Alexiadou (2010) finds the same for Spanish.

Bejan (2007) studies subclasses of German nominal infinitives, finding evidence for a distinction between more nominal and more verbal NIs. The first group can be modified by adjectives and can realize arguments with a post-head von-phrase, while the second, more restricted type only allows adverbs and a preceding bare accusative object. Bejan argues that the first type refers to specific events, and the second to generic ones. These conclusions are mirrored in work on Spanish (Schirakowski, 2017) and Romanian (Iordăchioaia & Soare, 2015). These studies, however, do not consider the comparison between NI and UNG nominalizations.

Our work (at least partially) fills these gaps by (a) establishing an empirical comparison between NIs and UNGs, and (b) contrasting the two nominalizations with regard to their relation to their respective base verbs.

The key theoretical notion of our comparison is semantic transparency with regard to the base verb, which we expect to differ between the two types of nominalizations. It has frequently been argued in the theoretical literature that whereas inflected forms have highly regular and predictable meanings, derived words often acquire meanings that are not purely compositional, i.e., that are not just a function of the meanings of their constituents (Booij, 2000; Laca, 2001). In our case, we expect NIs to have a more transparent meaning than UNGs: NIs are less subject to semantic shifts, and their semantics remains closer to that of the base verb, a property that can be directly linked to their inflectional origin (discussed in Sect. 2.1).

The predictability and regularity of the meaning of nominalizations has not been tested empirically. The major factor influencing transparency that has received empirical treatment is (relative) frequency (Hay, 2001). Hay observes that if the base word is less frequent than the derived word, then its meaning is less accessible to speakers during processing, and the output of the derivational process is likely to become less transparent with respect to the semantics of the base. Given her findings, in our experiments we consider relative frequency as a proxy for semantic transparency. However, even in Hay’s study, semantic transparency was not measured in a corpus-based fashion, but approximated as the presence of an explicit reference to the base word in the dictionary definition of the derived word. This method is limited, since it treats transparency as a binary feature, whereas transparency is theoretically assumed to be graded. A similar facet of semantic transparency, which the authors call stability of contrast, has been investigated by Bonami and Paperno (2018). Their study aimed at investigating the difference in “stability” between inflectional and derivational morphological processes. Given its topic and methodology, our work is directly linked to theirs, although there are some major differences: our focus is on derivational processes, specifically on nominalizations.

We propose to investigate the relationship between nominalizations and transparency with a methodology that builds directly on large corpora of naturalistic language and can integrate frequency considerations with more fine-grained semantic observations. Concretely, we employ distributional semantics (Harris, 1954; Firth, 1957), which builds on the assumption that the meaning of a word (e.g., dog) can be empirically approximated in terms of a list of words which frequently occur in its context (e.g., bark, bone, run). The most frequent context words can be interpreted as the most salient semantic features of a word (e.g., typical actions performed by the word referent, typical patients of such actions, locations in which the word referent is typically found, etc.): in this perspective, the list of most salient contexts can be considered a rich, usage-based counterpart of the lexical entries employed in formal semantics. The meanings of two words can be straightforwardly compared in terms of the extent to which their usage-based lexical entries overlap: similar words occur in similar contexts. This approach to the quantification of similarity can also be applied to words that are connected by a derivational history: this is exactly what we do in this paper.

The distributional semantics literature offers various strategies to capture the different nuances of the very broad notion of semantic similarity/relatedness. In this study, we test our predictions with two distributional semantics measures: cosine similarity, commonly employed to model synonymy, and distributional inclusion, commonly employed to model hypernymy/troponymy. Furthermore, given the correlation between frequency and transparency shown in previous work in the morphological literature, we integrate absolute and relative frequency into our analysis. Our results show that, despite the shared semantics and the comparable syntactic behavior, the distributional inclusion measure can capture fine-grained distinctions between UNG and NI nominalizations, over and above the effect of frequency, which still plays a major role in supporting the distinction between UNG and NI.

As a final contribution, we extend our comparison of UNGs vs. NIs in two directions. First, we explore the potential of our distributional measures for extending the comparison to agentive nominalizations in -er (der Evaluierer, ‘the evaluator’): in this case, the derivational process almost always erases the eventive reading (preserved in UNGs and NIs) and produces a noun which, in the vast majority of cases, denotes an external argument (an agent or an instrument), whereas UNGs can denote a result object. Second, we explore the inflectional nature of NIs by including a case of inflection in the comparison, namely the present participle in -end (evaluierend, ‘evaluating’).

The paper is structured as follows. In Sect. 2, we provide the theoretical background of our work: a description of the target phenomenon and of our research questions (Sect. 2.1), and a definition of the key notions we employ in our analysis, along with their empirical treatment in previous work (Sect. 2.2). In Sect. 3, we outline distributional semantics and conceptualize it in relation to our research questions. In Sect. 4, we present our experiments: we start by introducing our experimental setup and proceed to discuss our main results for the comparison between UNG vs. NI. After that, we incrementally enlarge our picture by introducing the comparison with -er derivatives and then the one with the present participles, followed by a comprehensive discussion of our experimental results and of their interpretation. Section 5 wraps up the paper by drawing general conclusions and discussing open questions and future work.

2 Background

In this section we introduce the word-formation processes that are the object of the study: nominal infinitives and derivatives formed with the -ung suffix. We compare UNG and NI synchronically in terms of their semantics, as well as productivity and syntactic behavior, and provide a diachronic account of their development. After that, in Sect. 2.2, we define the core theoretical notion of our work, namely semantic transparency.

2.1 Two patterns of German nominalization

In this study we focus on two specific patterns of German event nominalizations which can frequently be formed from the same base: deverbal nouns in -ung (UNG) and nominal infinitives (NI; see Footnote 4).

Deverbal nouns in -ung:

UNGs manifest a large range of meanings. To take the example of Absperrung from Rossdeutscher & Kamp (2010), the word can denote (see Footnote 5):

- An event (the event of cordoning off):

(4) Heerespioniere der 6. Armee besorgten die Absperrung der Schlucht
‘Pioneers of the 6th army carried out the cordoning off of the gorge’

- A result state (the state of an area having been cordoned off):

(5) Die europäische Kultur hat ihre Stärke unter anderem daraus gewonnen, dass sie keine Absperrungen auf Dauer zuließ [...]
‘European culture has drawn its strength, among other things, from the fact that it did not allow permanent states of cordoning off [...]’

- A result object (the barricade that was erected; see Footnote 6):

(6) [...] die Veranstalter nahmen an der Absperrung umgehend bauliche Verbesserungen vor
‘[...] the organizers promptly applied structural improvements to the barricade’

The contextual constraints that disambiguate individual occurrences with regard to the available readings have been the object of numerous studies (Ehrich & Rapp, 2000; Hamm & Kamp, 2009; Kountz et al., 2007; Spranger & Heid, 2007; Eberle et al., 2009), but it is sometimes difficult to clearly discern the different readings in a single token, since these meanings are closely interconnected.

As far as productivity is concerned, -ung is considered to be one of the most productive event-denoting suffixes (Eisenberg, 1994:364; Shin, 2001:297). Yet there are some restrictions on the formation of UNGs, which have been much debated in the literature. Esau (1973), Bartsch (1986), and Demske (2002), among others, show that verbs expressing states or verbs referring to the beginning or the repetition of a situation do not allow -ung nouns. However, some counterexamples are presented by Knobloch (2003:338; see Footnote 7), e.g. Erblindung, ‘loss of sight’, Erkaltung, ‘becoming cold’. Rossdeutscher & Kamp (2010) notice that most -ung nouns are derived from transitive verbs. Moreover, they argue that verbs that do not allow -ung nominals can generally be characterized as activity verbs, like arbeiten, ‘to work’, or wischen, ‘to wipe’.

The syntactic and morphological behavior of UNGs is typical of common nouns: they can be pluralized, their arguments can be realized either by a possessive pronoun or by a post-nominal genitive, they can be modified by adjectives and preceded by a definite or indefinite determiner (Demske, 2002; Scheffler, 2005).

Nominal infinitives:

NIs are truly transpositional, since they keep only the event reading from the base verb. In a few cases, a result state or result object reading is also possible (e.g. Verstehen, Ansehen and Schreiben), but these are usually lexicalized words that are far more frequent than other nominal infinitives.

There are practically no constraints on NI productivity, as they can be formed from every base verb.

From a syntactic point of view, NIs exhibit a clear nominal behavior. Like nouns, they are usually preceded by a definite or indefinite article and can be modified by adjectives (see Footnote 8):

(7) Das Laufen fiel ihm immer schwerer.
‘Walking was getting harder for him.’

(8) Es herrschte ein Laufen und Springen, ein Rennen und Hüpfen.
‘There was running and jumping, racing and hopping.’

(9) Das schnelle Zerstören der Stadt war notwendig.
‘The rapid destroying of the city was necessary.’

Arguments expressed by a genitive or a possessive pronoun can refer to either the subject or the object of the verb. A subject interpretation is preferred (Knobloch, 2003; Scheffler, 2005), although an object reading is possible:

(10) Dieser Raum enthält vertrauliches Material. Sein Betreten ist verboten.
‘[This room contains confidential material.] Its stepping-in is forbidden.’

They can be compounded to produce further nominals:

(11) Wir schreiben Briefe → Briefeschreiben
‘we write letters’ → ‘letter-writing’

Unlike common count nouns, they are non-countable and cannot be pluralized:

(12) *Die Zerstören der Stadt waren notwendig.
‘*The destroyings of the city were necessary.’

NIs can also be derived from the passive form of the base verb (see Footnote 9):

(13) Das Gesehen-werden ist die Hauptdimension der Kunst.
‘The being seen is the main dimension of art.’

The consistently nominal behavior of German NIs is of particular interest from a cross-linguistic perspective, as in other languages NIs exhibit mixed features. In Italian, for example, NIs can show both more verbal patterns (when they are modified by adverbs, ex. (14), or express their direct object as an NP, ex. (15)) and more nominal ones (where the subject is expressed by a PP and the NI is modified by an adjective, ex. (16); see Footnote 10).

(14) Il lavorare continuamente di Luigi
‘Luigi’s working continuously’

(15) Il degustare un buon bicchiere di vino
‘(The) tasting a good glass of wine’

(16) Il lavorare continuo di Luigi
‘Luigi’s continuous working’

A diachronic perspective on German event nominalizations:

The nominal behavior exhibited by NIs is the result of a change through history which is, in fact, parallel to the one experienced by UNGs (Werner, 2013).

In the Early New High German (ENHG) period (roughly the 16th and 17th centuries), UNGs showed the same argument structure and event interpretation as their corresponding base verbs (Demske, 2002:68, but also Göransson, 1911; Behaghel, 1923; see Footnote 11). This verbal behavior is, indeed, similar to that of more verbal infinitives. Only in recent times have UNGs evolved a more noun-like character, with increasing restrictions on their productivity. Nominals derived from stative verbs or from inchoative/ingressive verbs, which are not attested in Present Day German (PDG), are attested in ENHG, as Demske (2002:80) shows with a corpus study on newspapers of the 16th and 17th centuries. In PDG, these missing UNGs seem to have been replaced by NIs.

In a similar way, NIs went through a change from the verbal to the nominal pole (and also, as argued by Gaeta, 1998, from the inflectional to the derivational side). In Old and Middle High German, NIs had more mixed properties: they could be modified by adverbs and prepositional phrases and could take direct objects (Gaeta, 1998:6).

Summing up, NIs used to exhibit a mixed behavior which was lost in Present Day German, where only their nominal properties have been preserved. Thus, as discussed above, German NIs are closer to other event-denoting nominals than their counterparts in other languages are.

2.2 Semantic transparency in derivation

There is wide consensus in the morphological literature that transparency is the major semantic criterion for contrasting inflection and derivation (see Footnote 12). Definitions of transparency build on the notion of compositionality: “A lexeme is said to be transparent if it is clearly analysable into its constituent morphs and a knowledge of the morphs involved is sufficient to allow the speaker-listener to interpret the lexeme when it is encountered in context.” (Bauer, 1983:19). Dressler (2005:271) claims that inflection can be completely compositional, while word formation (including derivation) cannot (see Footnote 13). Plag (2003:15-16) exemplifies this with the word interview. He notes that its meaning “is not the sum of the meaning of its part. The meaning of inter- can be paraphrased as ‘between,’ that of (the verb) view as ‘look at something’ (definitions according to the Longman Dictionary of Contemporary English), whereas the meaning of (the composed verb) interview is ‘to ask someone questions, especially in a formal meeting.’ Thus the meaning of the derived word cannot be inferred on the basis of its constituent morphemes; it is to some extent opaque or non-transparent.”

If a derivational process is compositional in the sense defined above, then the meaning of the derived word should be predictable given the base word. Some authors focus on this property: Aronoff (1976:38) uses the term coherency, while Bauer et al. (2013:34) call it semantic regularity or semantic constancy. Bell and Schäfer (2016:158), who investigate transparency in nominal compounds, call it meaning predictability. Coherency and compositionality are arguably just two sides of the same coin. In the words of Bybee (1985:88), when the semantic relation between base and derived word is no longer transparent, we have a lexical split.

Previous work on the empirical characterization of transparency in derivation has highlighted its relation with frequency. Bybee (1985, also 1995) was the first to posit a connection with the frequency of the derived word: the more frequent the derivative, the more likely it is to undergo lexical split (see Footnote 14). However, as noted by Hay (2001, 2003), the semantic transparency of a given word is better explained by its relative frequency, defined as the ratio of the derivative’s frequency to the frequency of its base. Derived words with a low relative frequency (i.e., derived words that are less frequent than their bases) are more semantically transparent than words with a high relative frequency. Hay empirically quantifies lexical split (also called semantic drift) by looking at dictionary definitions: she considers a derived word to be transparent if the base word is cited in its dictionary entry. Thus, the word dishorn is considered transparent, since its definition in Webster’s 1913 Unabridged English Dictionary is “To deprive of horns”. Conversely, if the base word is not present in the dictionary entry, the derived word is considered opaque, i.e., an instance of lexical split. From her study, Hay concludes that “dictionary calculations reveal that derived forms that are more frequent than their bases are significantly more likely to display symptoms of semantic drift than derived forms containing higher-frequency bases.” (Hay, 2001:1041).
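Hay’s two quantities can be made concrete in a few lines. The sketch below is illustrative only: the function names are hypothetical, the counts in the usage lines are invented, and the definition check is a crude string heuristic (a definition token that starts with the base counts as a citation), not Hay’s actual annotation procedure.

```python
import re


def relative_frequency(freq_derived: int, freq_base: int) -> float:
    """Relative frequency in Hay's sense: derivative frequency / base frequency."""
    if freq_base <= 0:
        raise ValueError("base frequency must be positive")
    return freq_derived / freq_base


def base_cited_in_definition(base: str, definition: str) -> bool:
    """Binary transparency check in the spirit of Hay (2001).

    Hypothetical heuristic: the derived word counts as transparent if some
    token of its dictionary definition starts with the base, so that
    inflected forms such as "horns" still count as citing "horn".
    """
    tokens = re.findall(r"[a-zäöüß]+", definition.lower())
    return any(token.startswith(base.lower()) for token in tokens)


# Invented counts: a derivative three times as frequent as its base.
print(relative_frequency(300, 100))
print(base_cited_in_definition("horn", "To deprive of horns"))
```

The first call prints 3.0, a ratio above 1, i.e. the configuration for which Hay predicts a higher likelihood of semantic drift; the second prints True for the dishorn example. Note that the second function can only answer yes or no, which is precisely the limitation of the dictionary method.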

Hay’s work, however, does not take into consideration an important theoretical feature of transparency. Since Cruse (1986:39), semantic transparency has been described as a graded feature, not as a binary one: words may be more or less transparent with respect to their base. Hay’s binary methodology cannot capture such graded values. What is missing is an empirical way to compare the semantic representations of base and derived words on a large scale, based on naturalistic linguistic data (rather than dictionary definitions). In the following section, we outline a corpus-based methodology which allows us a) to gather usage-based representations for base and derived words from a large amount of natural data and b) to use these representations to get linguistically motivated insights into the semantic relations between derived words and their bases.

3 Methodology

In this section, we present our corpus-based methodology for the semantic characterization of the relation between base and derived words. In Sect. 3.1, we provide an introduction to distributional semantics which can be skipped by readers already familiar with this method. In Sect. 3.2, we discuss its potential for the investigation of morphological processes. Section 3.3 motivates the transparency measures adopted in our study and discusses our predictions on our target patterns.

3.1 Distributional semantics

Distributional semantics is a widely used method in computational linguistics which builds on the assumption that the meaning of a word (the so-called target, e.g., dog) can be successfully approximated in terms of its linguistic contexts (e.g., words that are used together with it in a sentence, such as bark, bone, run).

The foundations of distributional semantics go back to the structuralist take on meaning which characterized early corpus linguistics: in Distributional Structure, Harris (1954) stated that “difference of meaning correlates with difference of distribution”; in Firth’s much-cited words (1957:11), “You shall know a word by the company it keeps!”. Later on, in the 1990s, the Distributional Hypothesis elaborated by George Miller and Walter Charles provided the psychological arguments for a usage-based characterization of meaning on the basis of contextual information (Miller & Charles, 1991; see Footnote 15).

In practice, distributional approaches to word meaning extract co-occurrence information from large corpora. For each target word, a distributional representation is constructed in the form of a vector of its co-occurrence values with the contexts considered. For example, the target word dog can be represented with an ordered list of values of its co-occurrence with a set of context words: dog: {bark: 100, pet: 35, meow: 1}. Such a representation enables an empirical comparison of the meaning of dog with that of other target words, e.g., cat: {bark: 4, pet: 43, meow: 97}. Indeed, this differential approach to meaning is a signature feature of distributional semantics.
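The comparison of dog and cat can be sketched with the toy vectors just given. The snippet below stores each vector as a Python dict (an illustrative choice, not the paper’s data structure) and compares the two targets with cosine similarity, one of the two measures used in this paper; it is applied to raw counts here only for simplicity, whereas in practice weighted vectors are usually compared.

```python
import math

# Toy co-occurrence vectors from the text (raw counts).
dog = {"bark": 100, "pet": 35, "meow": 1}
cat = {"bark": 4, "pet": 43, "meow": 97}


def cosine(u: dict, v: dict) -> float:
    """Cosine of the angle between two sparse vectors stored as dicts."""
    dot = sum(weight * v.get(context, 0) for context, weight in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


print(round(cosine(dog, cat), 3))
```

This prints 0.178: the two targets share a context (pet), but concentrate their counts on different ones (bark vs. meow), so their vectors point in rather different directions.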

Parameters of distributional models.

Crucially, there is not just one method to build a distributional semantic model (i.e., a collection of context vectors for a set of targets). Indeed, the Distributional Hypothesis can be (and has been) implemented in many different ways, corresponding to different model architectures and parameters.

The most intuitive model architecture is the one based on co-occurrence counts, which gives rise to the so-called bag-of-words (or count) Distributional Semantic Models (DSMs), which we also employ in our work. The toy vectors for cat and bird reported below (Table 2) are an example of such an approach. The construction process of such DSMs involves a large number of design choices (parameters); for a comprehensive overview, see Turney and Pantel (2010). One such parameter is what counts as a linguistic context. Frequently, contexts correspond to the target’s collocates, which can be extracted from a specific window of text around the target word: a 5-word window around the target, the whole sentence, or even a whole document (see Footnote 16). Another option is to employ syntax to determine the most representative collocates (e.g., objects and subjects for verbs, adjectival modifiers and head verbs for nouns, etc.).

In this paper, we employ a bag-of-words DSM based on co-occurrence within a context window; let us demonstrate the extraction process on a concrete example. Table 1 shows five example sentences containing the word cat, extracted from UkWaC, a large web corpus of English (Baroni et al., 2009). We applied a 5-word window (i.e., 5 words to the left and to the right) to our example sentences to identify potential contexts. The extraction process stops at sentence boundaries and ignores punctuation. Closed-class words (also known as function words, e.g., a, its, our, etc.) are excluded from the potential contexts, and both target and context words are lemmatized. The extraction process outlined above results in the toy vector for the word cat displayed in Table 2, together with a putative vector for a second target, bird. Note that negative information is also relevant: for the difference between cats and birds, it is important that only the latter occur in the context of the verb fly.
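The extraction steps just described can be sketched as follows. This is a simplified illustration, not the pipeline used in the paper: it assumes sentences that are already lemmatized and stripped of punctuation, and the small stop list FUNCTION_WORDS is a hypothetical stand-in for a proper closed-class word list. In this sketch, function words still occupy positions in the window; they are simply not recorded as contexts, which is only one of several possible design choices.

```python
from collections import Counter, defaultdict

# Hypothetical stand-in for a real closed-class (function word) list.
FUNCTION_WORDS = {"a", "an", "the", "its", "our", "my", "of", "on", "in", "to", "and"}


def window_cooccurrences(sentences, targets, window=5):
    """Count content-word contexts within +/- `window` tokens of each target.

    `sentences` is a list of lemmatized token lists without punctuation.
    Windows are computed per sentence, so they never cross sentence boundaries.
    """
    counts = defaultdict(Counter)
    for tokens in sentences:
        for i, token in enumerate(tokens):
            if token not in targets:
                continue
            start, end = max(0, i - window), min(len(tokens), i + window + 1)
            for j in range(start, end):
                context = tokens[j]
                if j != i and context not in FUNCTION_WORDS:
                    counts[token][context] += 1
    return counts


sentences = [
    ["the", "cat", "chase", "a", "bird"],
    ["my", "cat", "sleep", "on", "the", "sofa"],
]
vectors = window_cooccurrences(sentences, targets={"cat"})
print(dict(vectors["cat"]))
```

On these two invented sentences, cat receives a count of 1 each for chase, bird, sleep and sofa, while the, a, my and on are skipped as function words.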

Co-occurrence counts between targets and contexts (e.g., 5 for the word bird occurring with fly) are the most straightforward way to quantify the salience of a certain context for a certain target: in our toy example, the context fly is, unsurprisingly, the most salient feature for bird. However, raw co-occurrence is not the most statistically robust option, due to the well-known Zipf’s law (a few very frequent words and a large number of rare ones; Zipf, 1949). To counteract frequency effects on collocations, weighting functions are usually applied to vectors in order to assign lower values to highly frequent contexts which are not informative about the target (i.e., because they are just very frequent in the entire corpus). One of the most common functions is PPMI (positive pointwise mutual information; Church & Hanks, 1990; Bullinaria & Levy, 2007), which computes the (logarithmic) ratio of the actual joint probability of two terms (i.e., their observed co-occurrence value) to their “expected” joint probability if the two were independent, setting negative values to zero (Evert, 2005:ch. 3).
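In formula terms, PPMI(t, c) = max(0, log P(t, c) / (P(t) P(c))), with all probabilities estimated from the co-occurrence counts. A minimal NumPy sketch over a target-by-context count matrix could look as follows; the function name is hypothetical, and base-2 logarithms are an arbitrary choice that only rescales the values.

```python
import numpy as np


def ppmi(counts) -> np.ndarray:
    """Positive pointwise mutual information for a targets x contexts count matrix."""
    counts = np.asarray(counts, dtype=float)
    total = counts.sum()
    p_joint = counts / total                               # P(t, c)
    p_target = counts.sum(axis=1, keepdims=True) / total   # P(t), one per row
    p_context = counts.sum(axis=0, keepdims=True) / total  # P(c), one per column
    with np.errstate(divide="ignore", invalid="ignore"):
        pmi = np.log2(p_joint / (p_target * p_context))
    pmi[~np.isfinite(pmi)] = 0.0  # zero counts give log(0) = -inf; treat as no association
    return np.maximum(pmi, 0.0)   # keep only positive associations


# Rows: dog, cat; columns: bark, pet, meow (the toy counts given earlier).
weighted = ppmi([[100, 35, 1], [4, 43, 97]])
print(weighted.round(2))
```

With these counts, bark keeps a positive weight for dog, whereas pet, which is proportionally more typical of cat, drops to zero for dog: the weighting rewards contexts that are more frequent with a target than chance would predict.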

Furthermore, collecting co-occurrences for a large number of targets necessarily leads to an extremely high-dimensional and sparse representation, i.e., vectors will have a large number of contexts (the so-called “curse of dimensionality”) but also a very high number of zeros (for context words not co-occurring with a given target). Moreover, despite feature weighting, many dimensions remain insufficiently informative. In this scenario, generalizations brought by similar dimensions can be missed. A common solution to this problem is to compress the original dimensions into fewer latent dimensions, which reveal abstract features and remove sparsity and noise (Deerwester et al., 1990; Landauer & Dumais, 1997). Frequently used algorithms are singular value decomposition (SVD), principal component analysis (PCA) and nonnegative matrix factorization (NMF). Such methods produce a “statistically grounded” summary of the context dimensions, that is to say, one which has a lower dimensionality (from hundreds of thousands to hundreds) and benefits from the latent relations between the original dimensions. The side effect is that the reduced dimensions can no longer be mapped to specific context words (e.g., fly for bird): the DSM representation becomes opaque, and specific measures (including the one we rely on in this paper) are no longer applicable.
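As an illustration of the dimensionality reduction step, the sketch below uses truncated SVD through NumPy’s numpy.linalg.svd (SVD being one of the algorithms named above; PCA or NMF would require different code). The helper name and the random stand-in matrix are hypothetical. Each output column is a latent dimension, which is exactly why it can no longer be read as a context word.

```python
import numpy as np


def svd_reduce(matrix: np.ndarray, k: int) -> np.ndarray:
    """Map each row (target) of `matrix` onto its top-k latent dimensions."""
    u, s, _vt = np.linalg.svd(matrix, full_matrices=False)
    return u[:, :k] * s[:k]  # rows of U scaled by the k largest singular values


rng = np.random.default_rng(0)
weighted = rng.random((5, 8))  # stand-in for a 5-target x 8-context PPMI matrix
reduced = svd_reduce(weighted, k=2)
print(reduced.shape)           # 2 latent columns, no longer tied to context words
```

When k equals the full rank, the dot products between rows are preserved exactly; smaller values of k keep only the dominant latent structure, which is where the smoothing over similar dimensions comes from.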

Whether to rely on syntactic or surface co-occurrences, the size of the context window, whether to apply feature weighting with PPMI (or other measures) and to resort to dimensionality reduction (and with which methods) are precisely the design choices hinted at before. Technically, they are referred to as the parameters of a DSM, and their manipulation obviously affects the contextual representation encoded in the distributional vectors.

Semantic similarity and relatedness.

Even though the interpretation of distributional vectors as direct lists of conceptual features for the target words is tempting, there is typically no direct correspondence, and extracting linguistically or cognitively plausible features requires additional steps (Baroni et al., 2010; Rubinstein et al., 2015). Therefore, distributional semantics typically sets itself the more modest goal of modeling the degree of semantic similarity or semantic relatedness among targets. As sketched above, this is a direct corollary of the distributional hypothesis: complementary occurrence provides negative evidence for semantic similarity.

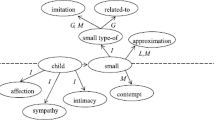

Besides the technicalities of the computation of similarity based on context overlap, which we will discuss in detail in Sect. 3.3, a natural question to ask concerns the semantic nature of DSM similarity. The answer is that there is not just one “similarity”. Indeed, one of the main criticisms against DSMs is that their implementation of semantic similarity may simply be too broad to be useful, as it encompasses a wide range of relations with different linguistic properties (Sahlgren, 2006; Lenci, 2008), so that a more neutral term such as “relatedness” should be used.Footnote 17

The refinement of the distributional notion of semantic similarity is an active topic of research (Turney, 2008; Baroni & Lenci, 2010): it has been shown, for example, that DSMs can learn relation-specific representations to discriminate between pairs of candidate relations, e.g. synonymy/antonymy (Scheible et al., 2013; Santus et al., 2014). Among previous work on tailoring DSM representations to specific semantic relations, research on the hypernymy/hyponymy relation in terms of distributional inclusion (Weeds et al., 2004; Clarke, 2009; Lenci & Benotto, 2012) is of particular relevance for this paper; see Sect. 3.3 for details.

Distributional semantics beyond co-occurrence counts

As sketched above, bag-of-words DSMs are only one of the possible model architectures. The models outlined above (and employed in this paper) have been labelled count models (Baroni et al., 2014), because they create a distributional representation by accumulating co-occurrence counts. In recent years, new distributional semantic models based on neural networks have become increasingly popular and are the state of the art in most tasks (Baroni et al., 2014; Mandera et al., 2017). They are referred to as predict models or word embeddings: instead of accumulating co-occurrence counts, these neural architectures are trained on the task of predicting the contexts given a target, or a target given its contexts. The low-dimensional, implicit representation built by the network to perform this language prediction task is then employed as a DSM representation for the target words. In this sense, the DSM vectors (now called word embeddings) come into being as a by-product of the task performed by the network.

Unfortunately, neural models tend to be even less interpretable than (dimensionality-reduced) count models (Lenci, 2018). Additionally, a substantial body of work explicitly comparing count and predict models has uncovered an underlying mathematical equivalence between the two architectures (Levy & Goldberg, 2014b), which has led to a more nuanced view of the scope and limits of the superiority of predict models over count models (Levy & Goldberg, 2014a; Levy et al., 2015; Sahlgren & Lenci, 2016). At any rate, the specific properties of the similarity measure we decided to employ (see Sect. 3.3 for more details) restricted our choice to models with interpretable dimensions, and thus to count models without dimensionality reduction.

3.2 Investigating morphology with distributional semantics

Distributional semantics approaches to model the meaning of morphological processes fall into two high-level categories. In the first group, we find those studies which aim at mining the semantic import of a derivation by comparing the distributional representation of the input (the base word) with that of the output (the derived word). In the second group, we find the approaches which aim at learning distributional representations for morphemes (instead of inferring them). The present work belongs to the first set of approaches, as we directly compare base and derived words to get more insight into the nature of the corresponding semantic shifts.

Let us then take a closer look at the relevant literature. As anticipated above, the starting point of a distributional investigation of the meaning shifts produced by a morphological process (e.g., the German suffix -in which derives a female noun from a male noun, Bäcker → Bäckerin) is the extraction of the distributional vectors for pairs of base and derived words (Bäcker, Bäckerin; Ingenieur, Ingenieurin, etc.). Once a representative sample of base/derived pairs for a specific derivation of interest has been collected, distributional semantics provides two alternative (but not mutually exclusive) ways to exploit these usage-based representations to characterize the underlying meaning shifts.

The first approach is based on the comparison between the vectors of base and derived words in terms of distributional properties tailored to the theoretical questions addressed. For example, distributional methods devised to detect the antonymy relation can be employed to model the meaning shifts produced by negating prefixes (e.g., happy stands to unhappy in the same relation in which it stands to sad). There is surprisingly little work which adopts such an “analytic” approach to target specific theoretical questions on the nature of derivational processes. Wauquier (2016) employs this approach in the comparison between French agent nouns in -eur, -euse and -rice (e.g., gagneur, ‘winner’) and event nominalizations (derived by means of different suffixes). By comparing the cosine distance between the base verb and the two derivatives, she found that -eur derivatives were further from the base than the corresponding event nouns. Our study goes in the same direction and contributes to this approach by extending the scope of the measures involved (cosine, distributional inclusion, as well as frequency effects).

The second approach frames derivation as a compositional process in the Fregean sense: the meaning of the derived word (e.g., Bäckerin, female ‘baker’) can be predicted as a function of the meanings of its parts (Bäcker, ‘baker’, plus -in, female suffix). In practical terms, this means learning a function for each derivational pattern (-er, -in, etc.) that takes the representation for the base word as input and returns a representation for the derived word. This approach addresses, from a practical point of view, the problem of data sparsity for rare (but productive) derived words. Very often, derived words are less frequent than their bases, and vectors for them can be of a lower quality or completely unavailable (in the case of low frequency and unattested words, respectively). Compositional models allow us to build a semantic representation also for missing complex words, given vectors of the base and of the affix involved (obtained for example by averaging those of the derived words available).
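The simplest version of this idea can be sketched as an additive model in which the affix is represented by the average offset between derived and base vectors over the attested pairs, and a missing derived word is predicted as its base vector plus that offset. This Python sketch is only an illustration under that assumption: it is not the specific function learned in any of the studies cited in this section, and the vectors and function names are hypothetical.

```python
def mean_offset(pairs):
    """Average (derived - base) offset over attested (base, derived) vector pairs."""
    dim = len(pairs[0][0])
    offset = [0.0] * dim
    for base, derived in pairs:
        for i in range(dim):
            offset[i] += derived[i] - base[i]
    return [x / len(pairs) for x in offset]


def predict_derived(base, affix_offset):
    """Additive composition: predicted derived vector = base vector + affix vector."""
    return [b + a for b, a in zip(base, affix_offset)]


# Hypothetical 3-dimensional vectors for attested base/derived pairs of one pattern.
train_pairs = [
    ([1.0, 0.0, 2.0], [1.0, 1.0, 2.5]),
    ([0.0, 2.0, 1.0], [0.0, 3.0, 1.5]),
]
affix = mean_offset(train_pairs)
# Representation for an unattested derivative of a new base word.
unseen = predict_derived([2.0, 1.0, 0.0], affix)
```

Here both training pairs share the same offset, so the learned affix vector is [0.0, 1.0, 0.5] and the predicted vector for the new base is [2.0, 2.0, 0.5].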

The compositional approach to derivation and compounding is very popular in distributional semantics and has been studied with some success for a number of languages and morphological processes (see Lazaridou et al., 2013, and Marelli & Baroni, 2015, for English; Padó et al., 2016, and Cotterell & Schütze, 2018, for German; Melymuka et al., 2017, for Ukrainian; and Günther & Marelli, 2018, on compounding). While it is less common in more traditional count DSMs (but see Keith et al., 2015, and the other works cited above), this approach has found plenty of applications in neural DSMs (Luong et al., 2013; Cotterell & Schütze, 2018), and it also characterizes the discriminative learning approach of Baayen et al. (2019) (who, however, do not focus on subword units such as -s, but on semantic units such as plural). The compositional approach does, however, build on the assumption that derivational shifts are fully learnable, in the sense that they are systematic and predictable from the meaning of the base word. While this transparency assumption is likely to be met at the phrase level (for which compositional distributional semantic approaches have been devised), it is much less straightforward below the word level: derived words may indeed show different degrees of compositionality.

In the studies by Bonami and Paperno (2018) and Huyghe and Wauquier (2020), the authors use an average of word vectors, or centroid, to represent a morphological process distributionally. Huyghe and Wauquier employ the centroid vector of prototypical agent nouns to represent and investigate this specific derivational pattern. Bonami and Paperno instead compare multiple morphological processes to test the difference between inflection and derivation distributionally. They show that the variance of vector offsets between derivational forms and their corresponding bases is greater than the variance of vector offsets between inflectional forms and their bases. The variance is a measure of what they call stability of morphosyntactic and semantic contrast, a facet of semantic regularity. Given their results, they conclude that inflectionally related words differ from each other in a more regular way than derivationally related ones.
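One simple way to operationalize the stability of a contrast in the spirit of Bonami and Paperno (2018) is the mean squared Euclidean distance of the individual offset vectors (derived minus base) from their centroid. The Python sketch below follows that assumption (it is not necessarily their exact computation) and uses hypothetical two-dimensional vectors: a perfectly regular contrast yields a variance of 0, a less regular one a positive value.

```python
def offset_variance(pairs):
    """Mean squared Euclidean distance of (derived - base) offsets from their centroid."""
    offsets = [[d - b for b, d in zip(base, derived)] for base, derived in pairs]
    n, dim = len(offsets), len(offsets[0])
    # The centroid is the average offset, i.e. the "typical" contrast.
    centroid = [sum(o[i] for o in offsets) / n for i in range(dim)]
    return sum(
        sum((o[i] - centroid[i]) ** 2 for i in range(dim)) for o in offsets
    ) / n


# Hypothetical (base, derived) pairs: every pair shifts in the same way...
regular = [([1.0, 0.0], [1.0, 1.0]), ([0.0, 2.0], [0.0, 3.0])]
# ...versus pairs whose shifts point in different directions.
irregular = [([1.0, 0.0], [1.0, 1.0]), ([0.0, 2.0], [2.0, 2.0])]
```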

Both the direct comparison of base/derived words and the compositional approach can be used to investigate morphological processes. Reddy et al. (2011) compared the two in modelling human transparency ratings for compound nouns and found only a small improvement with a compositional approach.

In our study, we opt for the direct comparison of nominalizations with their bases because our focus is on the transparency relation holding (or not holding) between the derivative and the base verb. We leave the investigation of the same processes from a compositional point of view as a matter of future work, since we consider this angle to primarily address a question of learnability and predictability, and not one of transparency.

3.3 Distributional measures of transparency

As discussed in Sect. 2, transparency is the core theoretical notion of our work: we are interested in comparing UNG and NI nominalizations in terms of their transparency to the base terms or, conversely, in quantifying how large the semantic drift introduced by the nominalization process is. In this section, we outline and motivate the two distributional measures of transparency employed in this study.

The first measure of transparency, which has already been employed in the literature, is cosine similarity. It is the standard measure in distributional semantics and has proven to be the most robust way to detect meaning relatedness in distributional vectors. Cosine similarity measures context overlap among vectors of words, and it is commonly assumed that higher values correspond to higher semantic similarity or relatedness. We expect cosine similarity to be a possible measure of semantic transparency, given that the notion of transparency is close to that of semantic similarity. Specifically, we measure the cosine similarity between the base verb and the corresponding derived term: higher cosine similarity values will indicate higher semantic transparency of the derived word, since its meaning will be shown to be similar to that of the base.

Cosine similarity quantifies the overlap between the contexts in which two words (in our case, the base verb and the nominalization) are used by measuring the cosine of the angle between the two vectors. Values range from 1 for very similar vectors, over 0 for orthogonal vectors, to -1 for opposite vectors. However, if a PPMI transformation has been applied, cosine values will range between 0 and 1, since the vectors contain no negative values.

Cosine is based on the dot product operator (also called inner product): the dot product of two vectors will be high when they have large values in the same dimensions; conversely, if the non-zero dimensions of one vector are always zero in the other, the two vectors have a dot product of 0, which represents their strong dissimilarity. The cosine similarity measure is the normalized version of the dot product, i.e., it normalizes the value by the vector length. More frequent words have longer vectors, i.e., fewer zeros and higher values, since they occur more often and therefore have a higher probability of occurring with a larger number of different contexts. The raw dot product will thus be higher for more frequent words. To overcome this problem, the cosine measure divides the dot product by the lengths of the two vectors. The length of a vector v with n dimensions is defined as the square root of the sum of the squared values of its dimensions:

$$\|\mathbf{v}\| = \sqrt{\sum_{i=1}^{n} v_i^2}$$

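Consequently, the cosine of vectors t and c is computed as follows:

$$\cos(\mathbf{t}, \mathbf{c}) = \frac{\mathbf{t} \cdot \mathbf{c}}{\|\mathbf{t}\|\,\|\mathbf{c}\|} = \frac{\sum_{i=1}^{n} t_i c_i}{\sqrt{\sum_{i=1}^{n} t_i^2}\,\sqrt{\sum_{i=1}^{n} c_i^2}}$$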

Cosine is a symmetric measure: cos(x, y) = cos(y, x) for all words x, y. However, a word x can often be similar to y while y has other, closer neighbours. This is typically the case with hypernym/hyponym pairs (Lenci & Benotto, 2012): a hyponym like lion occurs in many of the contexts of its hypernym animal; on the other hand, animal has a wider meaning and will have a number of contexts which are not shared by lion. The hyponym will thus be close to its hypernym, but the reverse will not hold to the same degree.
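The cosine computation described above fits in a few lines of Python. The vectors below are hypothetical; the example also illustrates the symmetry of the measure and its insensitivity to vector length (a vector scaled by a constant factor, as a crude stand-in for a more frequent word, keeps the same cosine).

```python
import math


def cosine(u, v):
    """Cosine similarity: dot product divided by the product of the Euclidean lengths."""
    dot = sum(a * b for a, b in zip(u, v))
    length_u = math.sqrt(sum(a * a for a in u))
    length_v = math.sqrt(sum(b * b for b in v))
    if length_u == 0 or length_v == 0:
        raise ValueError("cosine is undefined for an all-zero vector")
    return dot / (length_u * length_v)


# Hypothetical non-negative (e.g. PPMI-weighted) vectors over three contexts.
x = [2.0, 0.0, 1.0]
y = [1.0, 3.0, 0.0]
x_scaled = [4.0, 0.0, 2.0]  # same direction as x, twice as long
```

Here cosine(x, y) and cosine(y, x) are both 2/√50 (about 0.28), cosine(x_scaled, y) gives the same value, and two vectors with no shared non-zero dimension, such as [1.0, 0.0] and [0.0, 1.0], get a cosine of 0.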

Given these facts, we consider distributional inclusion (Geffet & Dagan, 2005; Kotlerman et al., 2010) as a second similarity measure. Originally devised to model the relation of nominal hypernymy, in our setting distributional inclusion quantifies the extent to which the derived word is used only in a subset of the contexts of the base word. Theoretically, it captures a more fine-grained, asymmetric notion of transparency, with an additional nuance of specificity that is completely lacking in the cosine measure. It measures how much the vector of a word u is included in the vector of its hypernym v, i.e., in what proportion its contexts are the same as those of v, but it then also takes into consideration how much of the vector of v is not included in u.Footnote 18 We expect a fully transparent derivation to behave like a hyponym: it should exhibit the same range (or a part) of the meanings of the base, without acquiring additional or idiosyncratic meanings. A less transparent derivation (i.e., one that acquires novel meanings with respect to the base) will be less included, because the values on some of its dimensions (those corresponding to the novel meaning) will be higher than those of the base.

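An entire family of hypernymy-tailored similarity measures is based on distributional inclusion (Weeds et al., 2004; Clarke, 2009; Lenci & Benotto, 2012). We conducted some preliminary experiments and selected the most promising one (both in the literature and in our own pilot study), InvCL (Lenci & Benotto, 2012). InvCL is derived from the ClarkeDE measureFootnote 19 (Clarke, 2009), which computes the degree of inclusion of word x into word y, where $F_x$ is the set of contexts with a non-zero weight for x and $w_x(f)$ is the weight of context f in the vector of x:

$$\mathrm{ClarkeDE}(x, y) = \frac{\sum_{f \in F_x \cap F_y} \min\big(w_x(f),\, w_y(f)\big)}{\sum_{f \in F_x} w_x(f)}$$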

Note that, where cosine has a dot product in the numerator, which mathematically implements context overlap by symmetric pairwise multiplication, here we have a minimum function, which is also symmetric (i.e., it returns the same value for animal/cat and cat/animal) and effectively defines a subset of the overlap. It is the denominator which introduces the asymmetry, taking the “perspective” of just one of the words, namely the candidate for being included, of which it calculates the L1 length (and not the Euclidean length, as in cosine).

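Given this formula, InvCL is computed as follows:

$$\mathrm{InvCL}(x, y) = \sqrt{\mathrm{ClarkeDE}(x, y) \cdot \big(1 - \mathrm{ClarkeDE}(y, x)\big)}$$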

In order to exemplify how this measure works, let’s consider again a toy model (Table 3) for the words cat and animal.

When computing the inclusion of the vector for cat into the vector for animal, we first take, for each dimension, the lower of the two values and sum these minima; we then divide this sum by the sum of the values for cat:

We do the same for the ClarkeDE measure of animal into cat:

Given these values, we can compute the distributional inclusion of cat into animal:

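Both ClarkeDE and InvCL values range between 0 and 1. A ClarkeDE value of 1 indicates that the first vector is perfectly included in the second, i.e., every context weight of the included word is matched or exceeded by the including word. InvCL approaches 1 only when, in addition, most of the weight of the including vector lies outside its overlap with the included one; identical vectors, in contrast, receive an InvCL of 0, since ClarkeDE is then 1 in both directions.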

InvCL can describe asymmetrical relations where r(u, v) holds but r(v, u) does not. In the original context of hypernym detection, the authors assumed that the features of a hyponym should be included in the features of the broader term, i.e., the hypernym. Thus, the distributional inclusion of the hyponym in the hypernym will be higher than the inclusion of the hypernym in the hyponym. This is confirmed by the toy example above: if we compute the inclusion of animal into cat, we see that it is lower than the inverse:Footnote 20
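The following Python sketch implements ClarkeDE (Clarke, 2009) and InvCL (Lenci & Benotto, 2012) following their published definitions and applies them to hypothetical cat and animal vectors over five invented context dimensions; these are not the values of Table 3. Because PPMI vectors are non-negative, summing the minima over all dimensions is equivalent to summing over the shared contexts only, which is why the code does not build the context sets explicitly.

```python
import math


def clarke_de(u, v):
    """ClarkeDE: sum of feature-wise minima divided by the L1 length of u."""
    overlap = sum(min(a, b) for a, b in zip(u, v))
    return overlap / sum(u)


def inv_cl(u, v):
    """InvCL: inclusion of u in v combined with non-inclusion of v in u."""
    return math.sqrt(clarke_de(u, v) * (1.0 - clarke_de(v, u)))


# Hypothetical non-negative weights over five invented contexts
# (fur, meow, eat, run, wild); these are NOT the values of Table 3.
cat = [3.0, 4.0, 2.0, 0.0, 0.0]
animal = [4.0, 1.0, 5.0, 3.0, 4.0]
```

With these vectors the summed minima are 6, so ClarkeDE(cat, animal) = 6/9 while ClarkeDE(animal, cat) = 6/17; InvCL(cat, animal) is therefore about 0.66, against about 0.34 for InvCL(animal, cat).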

Matching the semantic properties of our transparency measures with the theoretical considerations concerning UNG and NI discussed in Sect. 2 enables us to formulate the following experimental predictions and questions:

-

Cosine similarity quantifies to what extent the nominalization is a viable distributional substitute for the base verb, and their overall semantic relatedness. We can hypothesize that NIs will display a larger overlap with their corresponding base verbs since they keep the base event reading more consistently.

-

Distributional inclusion quantifies to what extent the nominalization behaves like a hyponym of the base verb. Again, we expect that NIs, which are often used in very specific contexts with respect to the base verb (Das Laufen über die Brücke ist verboten, ‘the crossing of the bridge is prohibited’) and which have a lower degree of polysemy than UNGs, will be more included in their bases than UNGs. We therefore expect the values of InvCL(NI, Base) to be higher than those of InvCL(UNG, Base).

Instead of establishing a competition between cosine and distributional inclusion as measures of semantic transparency, we will investigate their different import as if they were describing two aspects of the notion of transparency. InvCL tells us that Das Laufen is used in a very restricted subset of the contexts of laufen. Cosine similarity, instead, gives us a more general picture of the semantic relatedness of the two words, without further specification of the kind of relation.

4 Experiments

This section presents the results of our experiments. Section 4.1 spells out the experimental setup: we introduce the dataset, provide the details of our distributional semantic model and define our statistical methodology. Our main experiment, comparing NI and UNG, is presented in Sect. 4.2. Section 4.3 reports on auxiliary experiments that locate NI and UNG with respect to more general ‘milestones’ for inflection and derivation.

In the article text, we concentrate on our main findings. Additional analyses and details are made available in a series of online Appendices available at https://osf.io/x6ctj/?view_only=96578ccc91cb4f4087fb442790901347.

4.1 Experimental setup

Dataset

The experimental items for the dataset are sampled from DErivBase, a large-coverage database of German derivational morphology (Zeller et al., 2013). We extract verbs for which both an -ung nominalization and a nominal infinitive are attested.Footnote 21 This results in 770 tuples. We considered paired nominalizations in order to focus on cases in which no other factors block their formation. In cases where only one nominalization is formed, other features may play a role, and these could be linked mainly to the base verb rather than to the nominalization process. By contrast, when both are attested, we can reliably observe the distributional differences between them, independently of other semantic constraints which may have acted as a blocking factor when only one nominalization is attested.

We manually inspected the top 5% most frequent items in both categories to remove lexicalized derivatives, since in this study we were interested in modeling the prototypical items of the two derivational processes. Very frequent derivatives usually acquire more lexicalized meanings (Bybee, 1985; Boleda, 2020), which are not in line with the compositional meaning contributed by the suffix. For example, the word Speisen is mainly used in its lexicalized sense of ‘food’, rather than as a nominal infinitive meaning ‘dining’. We therefore removed 6 cases of NI and 15 cases of UNG.Footnote 22

We then applied frequency filtering, retaining only cases where all the forms have a frequency higher than 50, in order to ensure the reliability of the vectors. Indeed, it has been frequently stated (e.g. Bullinaria & Levy, 2007; Luong et al., 2013; Schnabel et al., 2015; Gong et al., 2018) that low frequency target words have less reliable vectors; notably, Drozd et al. (2015) showed that with frequency increasing from 5 to 100, accuracy in their task improved rapidly. Frequency information for the target words is taken from the same corpus used to build the distributional model, i.e. the 700 million word SdeWaC corpus (Faaß & Eckart, 2013), POS tagged and lemmatized using TreeTagger (Schmid, 1994). The frequency distributions of the three categories before filtering are reported in the online Appendix B, Table 9, available at the URL mentioned at the beginning of this Section. Half of the NIs (381) have a frequency lower than 102 (the median of the category); UNGs have instead a higher median value and only 77 items have a frequency lower than 102. This difference in frequency distribution of UNG vs. NI motivates our decision to establish a frequency filter, as the negative impact of low frequency on the quality of the vectors would have affected mainly the NI vectors.
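The frequency filter amounts to a simple condition on each triplet. In this Python sketch, the threshold of 50 comes from the text, while the lemma triplets and their frequencies are invented for the example (they are not SdeWaC counts); forms missing from the frequency table are treated as having frequency 0.

```python
MIN_FREQ = 50  # every form must occur more than 50 times (threshold from the text)


def filter_triplets(triplets, freq, min_freq=MIN_FREQ):
    """Keep (verb, NI, UNG) triplets in which all three forms exceed min_freq."""
    return [t for t in triplets if all(freq.get(form, 0) > min_freq for form in t)]


# Invented corpus frequencies, for illustration only.
toy_freq = {
    "abbilden": 900, "Abbilden": 60, "Abbildung": 5000,
    "einsparen": 700, "Einsparen": 40, "Einsparung": 3000,
}
toy_triplets = [
    ("abbilden", "Abbilden", "Abbildung"),
    ("einsparen", "Einsparen", "Einsparung"),
]
kept = filter_triplets(toy_triplets, toy_freq)
```

Only the first triplet survives, because the invented frequency of the NI Einsparen falls below the threshold; as the text notes, it is mostly the NIs that are affected by such a filter.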

The end result is a dataset containing 370 triplets, each consisting of a base verb together with its NI and its UNG. Table 10 in the online Appendix B reports the frequency distributions of the filtered dataset, whereas the complete list of items is provided in online Appendix A.

Distributional model

For each word in the dataset, we extracted distributional count vectors from the SdeWaC corpus (Faaß & Eckart, 2013), adopting default settings for this class of DSMs: a symmetric 5-word context window, PPMI as the scoring function, and the lemmas of the 10,000 most frequent content words (nouns, adjectives, verbs, and adverbs) as dimensions.Footnote 23
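The counting step behind such vectors can be sketched as follows. This Python sketch assumes an already lemmatized token sequence and reads the symmetric 5-word window as five tokens on each side of the target (our interpretation of the setting); in the actual model the context set would be the 10,000 most frequent content lemmas, whereas here it is a hypothetical two-word set, and the resulting counts would then be PPMI-weighted.

```python
from collections import Counter, defaultdict


def window_counts(lemmas, targets, contexts, window=5):
    """Count context lemmas within `window` positions left or right of each target."""
    counts = defaultdict(Counter)
    for i, lemma in enumerate(lemmas):
        if lemma not in targets:
            continue
        start, end = max(0, i - window), min(len(lemmas), i + window + 1)
        for j in range(start, end):
            # Skip the target itself and anything outside the context vocabulary.
            if j != i and lemmas[j] in contexts:
                counts[lemma][lemmas[j]] += 1
    return counts


# Hypothetical lemmatized sentence and a tiny context vocabulary.
sentence = ["der", "Vogel", "können", "fliegen", "und", "der", "Vogel", "singen"]
counts_w2 = window_counts(sentence, {"Vogel"}, {"fliegen", "singen"}, window=2)
counts_w5 = window_counts(sentence, {"Vogel"}, {"fliegen", "singen"})
```

With a window of 2, each occurrence of Vogel picks up one context (fliegen, then singen); with a window of 5, the second occurrence also reaches back to fliegen, which is therefore counted twice.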

Task and regression analysis

We investigate the usefulness of the distributional measures in distinguishing between NI and UNG by setting up a binary classification task: given a set of features that describe a pair of a base verb and a derived noun, we train a logistic regression model to predict whether the derived word is a nominal infinitive or an -ung nominalization of the base verb.Footnote 24 The rationale for this setup is that we can interpret the coefficient of each feature as a quantification of its importance for the NI-UNG distinction. We use the following features:

-

log-transformed relative frequency (ratio between the frequency of the derived word and the frequency of the base word);

-

log-transformed frequency of the derived word;

-

cosine similarity between the derived word and its base;

-

distributional inclusion between the derived word as included term and the base as including word.

Frequency distributions for our predictors are reported in the online Appendix B, Table 11. All the numerical variables are scaled and centered on their mean. Note that the identity of the base verb cannot be recovered from the features, so the model cannot succeed by learning base verb-specific lexicalization patterns.
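The two frequency predictors and the centering and scaling step can be sketched in Python as follows. The sketch assumes natural logarithms and z-scores computed with the sample standard deviation (as R's scale() does by default); the frequencies are invented and the function names are ours.

```python
import math
from statistics import mean, stdev


def raw_features(freq_derived, freq_base):
    """Log relative frequency (derived / base) and log frequency of the derived word."""
    return {
        "log_rel_freq": math.log(freq_derived / freq_base),
        "log_freq_derived": math.log(freq_derived),
    }


def scale(values):
    """Center on the mean and divide by the sample standard deviation."""
    mu, sd = mean(values), stdev(values)
    return [(v - mu) / sd for v in values]


# Invented (derived, base) frequency pairs.
rows = [raw_features(200, 100), raw_features(50, 100), raw_features(100, 100)]
scaled_rel_freq = scale([r["log_rel_freq"] for r in rows])
```

A log relative frequency of 0 corresponds to a derivative exactly as frequent as its base; after scaling, positive values mark derivatives that are relatively more frequent than average in the sample.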

We apply a standard feature selection procedure to construct our model, namely forward selection,Footnote 25 adopting a theory-motivated order. The method is summarized in Table 4.

We start by testing the significance of the frequency predictors (step 1), since they have previously been indicated in the literature as correlates of semantic transparency (Bybee, 1985; Hay, 2001). We first add them in separate models (1.a and 1.b), and we check whether the addition of the second frequency variable (model 2) significantly improves the model fit, based on the results of likelihood ratio tests performed with the Anova function. The more complex model (model 2 in this step) is retained only if it improves on both of the previous models. For example, if model 2 is significantly better than model 1.b but not better than model 1.a, the addition of the derivative frequency does not improve the fit, and model 1.a will be the selected model for this step.
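The likelihood ratio test underlying each selection step compares the log-likelihoods of two nested models. The following stdlib-only Python sketch computes the test statistic with its chi-square p-value (using closed-form survival functions for integer degrees of freedom) and encodes the rule that model 2 is kept only if it improves on both model 1.a and model 1.b. The log-likelihood values and the 0.05 threshold are assumptions for the example; in practice the log-likelihoods come from the fitted logistic regressions.

```python
import math


def chi2_sf(x, df):
    """Survival function P(X > x) of a chi-square distribution with integer df > 0."""
    if x <= 0:
        return 1.0
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum_{k < df/2} (x/2)^k / k!
        term, total = 1.0, 1.0
        for k in range(1, df // 2):
            term *= (x / 2) / k
            total += term
        return math.exp(-x / 2) * total
    # Odd df: erfc term plus a finite correction series (empty for df = 1).
    sf = math.erfc(math.sqrt(x / 2))
    term = math.sqrt(2 * x / math.pi) * math.exp(-x / 2)
    for k in range(1, (df - 1) // 2 + 1):
        sf += term
        term *= x / (2 * k + 1)
    return sf


def lr_test(loglik_reduced, loglik_full, df_diff):
    """Likelihood ratio test for nested models: returns (statistic, p-value)."""
    stat = 2.0 * (loglik_full - loglik_reduced)
    return stat, chi2_sf(stat, df_diff)


def keep_combined_model(ll_1a, ll_1b, ll_2, df_a, df_b, alpha=0.05):
    """Keep model 2 only if it significantly improves on both models 1.a and 1.b."""
    _, p_a = lr_test(ll_1a, ll_2, df_a)
    _, p_b = lr_test(ll_1b, ll_2, df_b)
    return p_a < alpha and p_b < alpha
```

For instance, with hypothetical log-likelihoods of -200 (model 1.a), -210 (model 1.b) and -190 (model 2), each comparison adding one parameter, both tests are highly significant and model 2 is kept; if model 2 only reached -199.5, the comparison with model 1.a would give p ≈ 0.32 and model 1.a would be selected instead.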

We then proceed with the two distributional measures: cosine, as it encodes a rather unmarked (and symmetric) notion of similarity, and lastly the (asymmetric) inclusion measure. We add them one at a time to the best model resulting from step 1, and (as done for frequency variables) if both are significant we test if the model is improved by adding both of them (model 4). Lastly, we test if any interactionsFootnote 26 among the significant frequency and distributional variables selected from previous steps improve the model fit (step 3, model 5).

Once the best model has been identified with forward selection, we will interpret its adjusted R² as an indication of how good a fit the regression model achieves with respect to the target classification, i.e., the NI vs. UNG distinction. In the model summary, the significance of a predictorFootnote 27 (its p-value) indicates whether it contributes to the targeted distinction, i.e., whether it shows a difference between the two morphological patterns that is not due to chance; the sign of the corresponding estimate indicates the direction of the effect. In the logistic regression, the category NI corresponds to 0 and UNG to 1. Therefore, for example, a significant positive estimate for relative frequency in the NI vs. UNG distinction indicates that UNGs have a higher relative frequency than NIs.

4.2 Main results: NI vs UNG

Before starting the regression analysis, we inspect the variables in order to detect possible problems of collinearity among them. Correlated variables may lead to severe problems in regression models (e.g., Baayen, 2008:6.2.2; Tomaschek et al., 2018), such as suppression effects or unexpected and theoretically uninterpretable parameter estimates. To assess collinearity, we use multiple means: we first inspect the correlation matrix of the variables considered (reported in the online Appendix C); second, we compute the condition number k;Footnote 28 lastly, we check the VIF (variance inflation factor) values for the regression models. We treat as warnings correlation values higher than 0.7, and k and VIF values higher than 6 and 3, respectively. In those cases in which values exceed these thresholds, signalling possible collinearity problems, we inspect our models and their results further.

With regard to the NI-UNG comparison, we observe a high correlation (r = 0.84) between the frequency of the derived term and the cosine value (despite the low k of 5.65). It has been previously observed that the cosine similarity measure is influenced by the frequency of the compared terms (e.g., Weeds et al., 2004). In our study, the two nominalization patterns differ considerably in terms of frequency (with UNG nouns much more frequent than NIs), and the cosine measure may be affected by this difference. This is an important drawback of this measure. The inclusion measure, on the other hand, does not suffer from this disadvantage and appears more stable and independent of frequency.Footnote 29
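To relate the reported correlation to the VIF threshold used above, consider the simplified case in which a predictor is regressed on a single other predictor: the R² of that regression is then r², and the VIF is 1 / (1 - r²). The Python sketch below only illustrates this two-predictor arithmetic; the VIFs of the fitted models involve all predictors and may differ.

```python
def vif_two_predictors(r):
    """VIF of a predictor explained by one other predictor: R^2 = r^2, so VIF = 1 / (1 - r^2)."""
    return 1.0 / (1.0 - r ** 2)


# Correlation between derived-word frequency and cosine reported in the text.
vif = vif_two_predictors(0.84)
```

For r = 0.84 this gives a VIF of about 3.40, already above the threshold of 3.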

We now illustrate the model selection procedure described above by constructing the best model for the NI vs. UNG distinction (process presented in Table 21, online Appendix D).

In the first step, we start by adding the frequency predictors (m1.a and m1.b). Both of them significantly improve the model, as shown by likelihood ratio tests (LRT) comparing m2 with both m1.a and m1.b. The significance levels of the comparison to previous models (reported in the last column)Footnote 30 indicate that the newly introduced predictors contribute to the distinction between the two target categories. Adding cosine as a predictor (m3.a) does not improve the model fit: the LRT reveals that the difference with m2 is not significant. This result may be due to the correlation between cosine and the frequency of the derived term highlighted above;Footnote 31 however, we cannot remove the derived term’s frequency from the predictors in favour of cosine, because of the very different frequencies of the patterns under investigation: frequency is a natural covariate on which we want our distributional variables to build. Moreover, we observed that the sign of the estimate for cosine turns from negative to positive if the derived term’s frequency is removed from the model. This is due to the above-mentioned correlation and makes us distrust a model in which cosine is kept instead of derived frequency.Footnote 32

Given this fact, we do not keep cosine as a variable in the following models. In contrast, adding the inclusion variable, InvCL, improved the fit (m3.b).

The interaction between relative frequency and the inclusion variable significantly improves the fit; model 4.a is our final model, and its adjusted R² value shows that this distinction can be predicted rather well from our corpus-based variables.Footnote 33 The summary of the model fit, reported in Table 5, confirms that the frequency predictors are highly significant, whereas our inclusion variable alone is not significant (0.05 < p < 0.1), but it is highly significant when moderated by relative frequency.

Let us look at the direction of the effect of each predictor. As expected, the model picks up the fact that UNG nouns are significantly more frequent than NIs. Similarly, their relative frequency is higher than that of NIs, i.e., UNGs are generally more frequent relative to their bases than NIs are. If we take relative frequency as a correlate of semantic transparency (following, for example, Hay, 2001), we would interpret UNGs as less transparent than NIs.

Distributional inclusion shows a significant effect only in interaction with relative frequency. Figure 1 shows that NI vectors are more included in the vectors of the base verbs than those of UNG nominalizations only when their relative frequency is low (long-dashed blue and dot-dashed purple lines in the plot). When the relative frequency is higher (solid orange line), we find the opposite trend: UNGs show higher inclusion values than NIs. The effect of InvCL is absent for derivatives with relative frequency around 0 and 1 (i.e., those with frequency equal to their bases; dotted green and dashed red lines).
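The crossover in Figure 1 is what an interaction term implies: the effect of InvCL on the log-odds of UNG is not constant, but shifts with relative frequency. The Python sketch below makes this explicit with hypothetical coefficients chosen only to reproduce the qualitative pattern described above (they are not the estimates reported in Table 5); the function names are ours.

```python
import math


def invcl_slope(b_invcl, b_interaction, rel_freq):
    """Change in the log-odds of UNG per unit of scaled InvCL at a given scaled relative frequency."""
    return b_invcl + b_interaction * rel_freq


def p_ung(intercept, b_relfreq, b_invcl, b_interaction, rel_freq, invcl):
    """Predicted probability of UNG (coded 1; NI coded 0) with an InvCL x relative frequency term."""
    eta = (intercept + b_relfreq * rel_freq + b_invcl * invcl
           + b_interaction * rel_freq * invcl)
    return 1.0 / (1.0 + math.exp(-eta))


# Hypothetical coefficients, NOT the estimates of Table 5.
B_INVCL, B_INTERACTION = 0.1, 0.8
low = invcl_slope(B_INVCL, B_INTERACTION, rel_freq=-1.5)   # negative slope
high = invcl_slope(B_INVCL, B_INTERACTION, rel_freq=1.5)   # positive slope
crossover = -B_INVCL / B_INTERACTION  # relative frequency at which InvCL has no effect
```

With these values, higher InvCL lowers the predicted probability of UNG (i.e., favours NI) when relative frequency is low and raises it when relative frequency is high; the effect vanishes at a scaled relative frequency of -0.125.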

4.2.1 Discussion

The interaction between relative frequency and the inclusion measure brings new insights and partially contradicts our expectations about frequency effects on transparency. Given these data, we can argue that higher relative frequency does not always imply a semantic shift and, vice versa, lower relative frequency is not always associated with semantic transparency. A more complex view emerges, in which morphological patterns show specific trends. In particular, the two nominalizations exhibit opposite behaviour with respect to their relative frequency. NIs with low relative frequency actually preserve the meaning of their corresponding base verb. When their relative frequency is higher, they undergo a meaning shift that moves their vectors away from those of their base verbs. Consider for example the NIs Verschulden (‘fault, blame’) and Bestreben (‘ambition, aspiration’), whose meanings are not fully transparent with respect to those of the base verbs verschulden (‘to get into debt, to cause something’) and bestreben (‘to endeavor’). The behavior of NIs thus matches previous observations concerning relative frequency and transparency.

UNG nouns behave in the opposite way: when their frequency is lower than that of their base verbs, they are less transparent; when they are more frequent than their bases, they are actually similar to them in meaning. This behaviour contrasts with previous observations concerning (relative) frequency and lexical split (a.o. Bybee, 1985; Hay, 2001).

To better understand the general results of the model, we manually inspect the items that it predicted correctly and wrongly. Starting from the most confidently predicted UNGs and NIs (i.e. those with a probability higher than 0.9 and lower than 0.1, respectively), we notice that in most cases there is a sortal distinction between the two nominalizations. The model performs better on cases in which the UNG derivative has a predominant result reading (either a result object or a resulting state reading), which clearly differs from the event reading of the NI. Some examples from the 20 best-predicted items are:

- Abbilden (‘the depicting, representing’) vs. Abbildung (‘reproduction, image’);
- Äußern (‘the uttering’) vs. Äußerung (‘statement, comment’);
- Beleuchten (‘the illuminating’) vs. Beleuchtung (‘light’);
- Beschreiben (‘the describing’) vs. Beschreibung (‘description, report’);
- Einsparen (‘the economizing’) vs. Einsparung (‘savings’);
- Absichern (‘the safeguarding’) vs. Absicherung (‘safeguard’);
- Abstimmen (‘the voting, tuning’) vs. Abstimmung (‘harmonization’);
- Bewundern (‘the admiring’) vs. Bewunderung (‘admiration’);
- Darstellen (‘the representing’) vs. Darstellung (‘representation’);
- Einschätzen (‘the estimating’) vs. Einschätzung (‘assessment’);
- Einschränken (‘the restricting’) vs. Einschränkung (‘limitation’).

In a few cases, the two nominalizations seem to disambiguate a polysemous base verb, each inheriting only some of its senses. Consider, for example, the verbs annähern and ausstrahlen. Annähern has two main senses, ‘to approach’ and ‘to approximate’, and each nominalization tends to express only one of them: the NI Annähern mostly means ‘approximating’, whereas the ‘approach’ meaning arguably dominates for the UNG Annäherung. Similarly, from the verb ausstrahlen (‘to emanate, to radiate’), the UNG noun Ausstrahlung predominantly inherits the metaphorical meaning of ‘charisma’, whereas the NI Ausstrahlen is usually used for ‘radiating’.

There are, however, cases correctly predicted by the model that do not show a clear semantic difference between UNG and NI. For example, the following pairs exhibit neither a sortal difference nor any other difference in meaning: Aktivieren vs. Aktivierung (‘activation’); Beschaffen vs. Beschaffung (‘acquisition’); Bombardieren vs. Bombardierung (‘bombing’); Durchsetzen vs. Durchsetzung (‘assertion, implementation’); Einbeziehen vs. Einbeziehung (‘inclusion’).

Patterns are harder to find among the model's errors. Among the wrongly predicted pairs (NIs with a probability higher than 0.8 and UNGs with a probability lower than 0.2), there are cases in which the UNG noun has acquired an idiosyncratic meaning that corresponds neither to the act nor to the result of the verb, as in the following cases:

- Heizen (‘the act of heating’) vs. Heizung (‘heater’);
- Lesen (‘the act of reading’) vs. Lesung (‘an author reading event’);
- Schreiben (‘the act of writing’) vs. Schreibung (‘orthography’).
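Both manual selections described in this section (confidently correct items, with thresholds 0.9 and 0.1, and confident errors, with thresholds 0.8 and 0.2) amount to simple filters over the model's predicted probabilities. A minimal sketch, assuming the probability is that of the UNG category (UNG coded 1); the `Item` type and the placeholder items and probabilities in the usage example are invented, not model output.

```python
from typing import NamedTuple

class Item(NamedTuple):
    lemma: str
    gold: str      # gold category: "UNG" or "NI"
    p_ung: float   # predicted probability of UNG (assumed coding: UNG = 1)

def confident_correct(items, hi=0.9, lo=0.1):
    """UNGs predicted with p > hi and NIs predicted with p < lo."""
    return [it for it in items
            if (it.gold == "UNG" and it.p_ung > hi) or (it.gold == "NI" and it.p_ung < lo)]

def confident_errors(items, hi=0.8, lo=0.2):
    """NIs the model took for UNGs (p > hi) and UNGs it took for NIs (p < lo)."""
    return [it for it in items
            if (it.gold == "NI" and it.p_ung > hi) or (it.gold == "UNG" and it.p_ung < lo)]

# Invented placeholders, not model output.
demo = [Item("ung_a", "UNG", 0.95), Item("ni_a", "NI", 0.04),
        Item("ung_b", "UNG", 0.15), Item("ni_b", "NI", 0.85),
        Item("ung_c", "UNG", 0.55)]
print([it.lemma for it in confident_correct(demo)])  # ['ung_a', 'ni_a']
print([it.lemma for it in confident_errors(demo)])   # ['ung_b', 'ni_b']
```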

4.3 Further comparison: NI vs. UNG in the inflection/derivation cline

The two nominalization patterns above show some peculiar characteristics: they have similar meanings, since both can be used to refer to events, but they differ widely in frequency. The previous experiment highlights these properties, but it also shows a fine-grained difference in semantic transparency between the two processes.

In this section, we use our distributional predictors to extend the comparison to two other morphological processes, namely -er deverbal nouns (ERs) and present participles in -end (ENDs).

Our aim is twofold. First, we want to verify the reliability of our transparency measures outside the domain of event nominalization. For this reason, we chose a derivational process (ER) that produces a type shift from events to agent or instrument nouns, and an inflectional process (END) that maintains only the event reading and does not involve conversion to nouns.Footnote 34 In this way, we can check that our transparency measures do not simply measure relatedness to the base verb (an agent noun is still related to the verb from which it is formed) and are not influenced by the syntactic status of nouns.

Second, we want to relate our results to the general distinction between inflection and derivation. In Sect. 2.1 we saw that NIs maintain some features of their inflectional origin, since they do not show all nominal properties. UNG nouns, by contrast, are a more typical case of derivation, since over time they have acquired all the syntactic and morphological features of typical common nouns. This difference in origin is mirrored in their different degrees of semantic transparency: as reported above, it has frequently been noted that derived words often acquire idiosyncratic meanings and non-unique form-meaning associations (Booij, 2000; Laca, 2001), whereas inflection can be completely compositional (Dressler, 2005:271). Given these premises, we expect our measures to capture the difference in semantic transparency between inflectional and derivational processes, showing a graded distinction between the two sides. We acknowledge that further processes would be needed to fully investigate transparency along the inflection-derivation continuum, but this is beyond the scope of our paper. We refer the reader to Bonami and Paperno (2018) for a similar analysis.

First, in Sect. 4.3.1, we investigate how our transparency measures are modulated by type shift, introducing -er deverbal nouns into the picture. Second, in Sect. 4.3.2, we introduce an inflectional comparison term, the present participles in -end.

4.3.1 A derivational landmark: ER deverbal nouns

In German, the suffix -er is used to create deverbal nouns that denote agents (Läufer, ‘runner’) or instruments (Öffner, ‘opener’) (Plag, 1998). In a few cases, they may also denote patients (e.g. Aufkleber ‘sticker’, from aufkleben ‘to stick’) or semelfactive events (Seufzer ‘sigh’) (Luschützky & Rainer, 2011; Scherer, 2011; Müller, 2011); however, the event reading is marginal for ER, particularly in comparison to UNG nominals. In the context of our experiments, we take ER as a derivational landmark in which the event reading of the base verb is commonly lost: we thus expect ERs to be less included in the base verb’s vector than UNGs and NIs.

Starting from the dataset used in Sect. 4.2, we extracted from DerivBase the corresponding -er nominal for each base verb (where available), which restricted the list to 76 quadruples. We report descriptive statistics for the variables considered in online Appendix B.

We then repeated the experimental setup of Sect. 4.2 twice, using first the NI/ER and then the UNG/ER distinction as the dependent variable (with ER coded as 0). The correlation matrices (online Appendix C, Tables 16 and 17) point to possible collinearity issues between Cosine and InvCL, whose correlation coefficient is equal to (NI-ER) or above (UNG-ER) the threshold of 0.7, and between InvCL and relative frequency (r=0.72) in the UNG-ER comparison.Footnote 35
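The collinearity diagnostics used in this section (pairwise correlations checked against a 0.7 threshold and variance inflation factors) can be computed as follows. This is a NumPy sketch on synthetic data, not the paper's analysis script; it relies on the identity that the VIFs are the diagonal of the inverse of the predictors' correlation matrix, which equals 1/(1 - R_j^2) for predictor j regressed on all the others. The function name is hypothetical.

```python
import numpy as np

def collinearity_report(X: np.ndarray, names: list, threshold: float = 0.7):
    """Return predictor pairs with |r| >= threshold and the VIF of each predictor.

    X has one row per item and one column per predictor.
    """
    R = np.corrcoef(X, rowvar=False)            # Pearson correlation matrix
    flagged = [(names[i], names[j], float(R[i, j]))
               for i in range(len(names)) for j in range(i + 1, len(names))
               if abs(R[i, j]) >= threshold]
    vif = np.diag(np.linalg.inv(R))             # VIF_j = 1 / (1 - R_j^2)
    return flagged, {n: float(v) for n, v in zip(names, vif)}

# Synthetic predictors: InvCL is built to correlate strongly with Cosine.
rng = np.random.default_rng(0)
n = 76
cosine = rng.normal(size=n)
invcl = 0.9 * cosine + 0.3 * rng.normal(size=n)
relfreq = rng.normal(size=n)
X = np.column_stack([cosine, invcl, relfreq])

flagged, vifs = collinearity_report(X, ["Cosine", "InvCL", "RelFreq"])
print(flagged)
print(vifs)
```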

Given these values, we proceed with caution in analyzing the results of our regression models. We refer the reader to online Appendix D (Tables 22 and 23) for the detailed model selection procedure for NI/ER and UNG/ER, respectively. For the NI-ER prediction task, the possible correlation between our distributional variables is not relevant, since adding Cosine (model m3.a, Table 22 in online Appendix D) yields only a marginally significant improvement (p > 0.01). The final model uses relative frequency and InvCL as predictors (model m3.b). In the UNG-ER comparison, the frequency variables are not significant in the final model, so their correlation with InvCL is not an issue. However, the correlation between our two distributional measures may make a joint model unreliable. We therefore analyze their contributions in two separate models rather than together in a single one.
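Model selection of this kind compares nested logistic regressions, for instance a model with and without Cosine. One standard way to do so is a likelihood-ratio test; the sketch below assumes this test (the exact procedure is documented in online Appendix D) and uses hypothetical log-likelihoods and a hypothetical function name. For a single added parameter, the chi-squared survival function has a closed form, so only the standard library is needed.

```python
import math

def lr_test_one_param(loglik_reduced: float, loglik_full: float):
    """Likelihood-ratio test between nested models differing by one parameter.

    LR = 2 * (ll_full - ll_reduced) follows a chi-squared distribution with
    1 df under H0; its survival function is erfc(sqrt(LR / 2)).
    """
    lr = 2.0 * (loglik_full - loglik_reduced)
    if lr < 0:
        raise ValueError("the full model cannot have a lower log-likelihood")
    return lr, math.erfc(math.sqrt(lr / 2.0))

# Hypothetical log-likelihoods for a reduced and an extended model.
lr, p = lr_test_one_param(-40.00, -38.08)
print(f"LR = {lr:.2f}, p = {p:.3f}")  # LR = 3.84, p = 0.050
```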

In both comparisons, the frequency of the derived term did not improve model accuracy, whereas relative frequency was significant for the ER-NI comparison. InvCL significantly improved the models, whereas Cosine played a role only in the ER-UNG comparison. Interactions among the variables were discarded because they did not improve the fit. We thus end up with relative frequency and InvCL as predictors for the ER-NI distinction (model m3.b), and two distinct models, one for each distributional variable, for the ER-UNG distinction (models m1.c and m1.d). A collinearity check confirms that the effects of the model can be trusted.Footnote 36 The estimates for the best models are reported in Table 6.

The adjusted R² values (0.44 and 0.38) are lower than in the NI vs. UNG experiment (R² = 0.63), showing that distinguishing ER from the two nominalizations at the core of this paper is less straightforward. This indicates that the shared event semantics of UNGs and NIs does not make their discrimination more difficult: our inclusion variable does indeed help to tell the two event nominalizations apart.
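This section does not specify which R² variant is reported for the logistic models. One widely used option is Nagelkerke's pseudo-R², the Cox and Snell R² rescaled so that its maximum is 1; the sketch below assumes this variant and uses invented sample sizes and log-likelihoods.

```python
import math

def nagelkerke_r2(ll_null: float, ll_model: float, n: int) -> float:
    """Nagelkerke pseudo-R^2: Cox and Snell R^2 divided by its maximum attainable value."""
    cox_snell = 1.0 - math.exp((2.0 / n) * (ll_null - ll_model))
    max_cox_snell = 1.0 - math.exp((2.0 / n) * ll_null)
    return cox_snell / max_cox_snell

n = 152                       # hypothetical: 76 items per category
ll_null = n * math.log(0.5)   # intercept-only model with balanced classes
print(round(nagelkerke_r2(ll_null, ll_null, n), 3))  # 0.0: no improvement over the null
print(round(nagelkerke_r2(ll_null, -70.0, n), 3))    # hypothetical fitted model
```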

In the comparisons with ER, relative frequency contributes less to model fit: the adjusted R² values of the models containing only frequency variables are much lower than those of the best models with distributional measures (0.13 vs 0.44 for the ER-NI comparison; see online Appendix D), and in the ER-UNG comparison relative frequency is not significant. This suggests that relative frequency is not sufficient to explain the differences among derivational patterns or to measure semantic transparency, whereas our distributional measures capture these differences better.

Even though the model fit is lower, our distributional variables are significant in distinguishing ERs from the two event nominalizations. Let us look at the estimates of the logistic regression models displayed in Table 6.

As for the distributional inclusion measure, ERs are significantly less included than NI and UNG nouns. Given their event semantics, we expected the latter to have a stronger semantic relationship with the base verb. This suggests that the InvCL measure captures the strong event semantics of this class of nominalizations, rather than a mere relation in meaning with the base verb (as would be the case for agent nouns).

The same is not true for the Cosine measure: it is not significant in the ER-NI task, despite the semantic difference between the two patterns. In the ER-UNG comparison, by contrast, cosine is significant and even explains more variance than the inclusion measure (R² = 0.38 vs 0.34). If we look at cosine as a measure of substitutability, UNG is confirmed as a better candidate substitute for the base verb than ER. However, the results of the ER-NI comparison do not allow us to fully trust a similar interpretation. We can therefore conclude that (a) cosine similarity is not a mere correlate of type shift (otherwise NI would have been significantly more similar to the base than ER), and (b) it is probably too dependent on frequency.
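The contrast between the two distributional measures can be made concrete on toy vectors. The sketch below assumes the standard invCL definition of Lenci and Benotto (2012), built on Clarke's degree-of-inclusion measure, applied to sparse, non-negative context weights (e.g. PPMI); the vectors are invented, and the paper's own vector space is described in an earlier section. A nominal whose contexts form a subset of the verb's contexts receives a high invCL in the noun-to-verb direction even when its cosine with the verb is only moderate, because cosine is symmetric and ignores the direction of inclusion.

```python
import math

Vector = dict  # sparse vector: context -> non-negative weight (e.g. PPMI)

def cosine(u: Vector, v: Vector) -> float:
    """Symmetric similarity: normalized dot product."""
    dot = sum(w * v.get(f, 0.0) for f, w in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0

def clarke_de(u: Vector, v: Vector) -> float:
    """Share of u's weight mass covered by v (degree to which u is included in v)."""
    total = sum(u.values())
    shared = sum(min(w, v[f]) for f, w in u.items() if f in v)
    return shared / total if total else 0.0

def inv_cl(u: Vector, v: Vector) -> float:
    """invCL: high when u's contexts are included in v's but not vice versa."""
    return math.sqrt(clarke_de(u, v) * (1.0 - clarke_de(v, u)))

# Invented toy vectors: the nominal occurs in a subset of the verb's contexts.
verb = {"c1": 1.0, "c2": 1.0, "c3": 1.0, "c4": 1.0, "c5": 1.0, "c6": 1.0}
noun = {"c1": 1.0, "c2": 1.0}
print(round(cosine(noun, verb), 3))  # 0.577
print(round(inv_cl(noun, verb), 3))  # 0.816
print(round(inv_cl(verb, noun), 3))  # 0.0
```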

As for relative frequency, its effect is significant only in the comparison between ERs and NIs. The relative frequency of NIs is significantly lower than that of ERs, whereas that of ERs does not differ significantly from that of UNGs. A one-unit increase in relative frequency is associated with a decrease of 1.859 in the log odds of the derivative being an NI rather than an ER nominal. Interestingly, ERs and UNGs, at the derivational extreme of our cline, show the same behaviour with respect to relative frequency.
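Because log odds are hard to read directly, the reported coefficient can be converted into an odds ratio by exponentiation. The sketch below uses only the estimate given in the text, with NI coded 1 and ER coded 0.

```python
import math

beta_relfreq = -1.859  # log-odds coefficient for relative frequency (NI = 1, ER = 0)
odds_ratio = math.exp(beta_relfreq)

print(f"odds ratio per unit of relative frequency: {odds_ratio:.3f}")  # 0.156
print(f"reduction in the odds of NI vs. ER: {(1 - odds_ratio):.0%}")   # 84%
```

Each one-unit rise in relative frequency thus multiplies the odds of NI (vs. ER) by about 0.16; the corresponding change in probability depends on the starting point, since the logistic link is non-linear.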

4.3.2 An inflectional landmark: Present participles

Let us now extend our study to our inflectional landmark by introducing present participles in -end (ENDs) into the comparison.

Present participles (inflectional verbal adjectives, Haspelmath, 1994) can be formed for every base verb and are mainly used as adjectives: das kochende Wasser (‘the boiling water’), das laufende Jahr (‘the current year’). Some of them (like spannend ‘gripping’, umfassend ‘comprehensive’, zwingend ‘mandatory’) have become true adjectives and can also occur after the auxiliary sein, ‘to be’ (Durrell, 2003). Given their inflectional nature and the fact that they only retain the event reading, we expect ENDs to pattern with NIs.

We extracted the corresponding END derivatives from DerivBase, obtaining 185 items matching NIs and UNGs and 59 matching the previously selected ERs. We report descriptive statistics for the two datasets in online Appendix B, Tables 13 and 14, respectively. In online Appendix D (Tables 24, 25 and 26), we report the results of model selection for the three comparisons, obtained with the same procedure described in the previous sections.

The low R² value for the comparison between NI and END (best model: R² = 0.04) shows that our predictors cannot tease these two categories apart: indeed, the inflectional extreme of our cline appears to be quite tight. By contrast, END can be better distinguished from UNG (R² = 0.62) and, to a lesser extent and somewhat surprisingly, from ER (R² = 0.48).

In Table 7, we report the effects of our predictors in the best model for each of the three comparisons.Footnote 37 ENDs always correspond to category 0, and ERs, NIs or UNGs to category 1. In the END-ER comparison, the two distributional variables seem correlated (r=0.73, VIF values > 3; see Table 20, online Appendix C). For this reason, we do not include the two predictors in the same model but consider them in two separate final models.