Abstract

Continuous electroencephalographic monitoring of critically ill patients is an established procedure in intensive care units. Seizure detection algorithms, such as support vector machines (SVM), play a prominent role in this procedure. To correct for inter-human differences in EEG characteristics, as well as for intra-human EEG variability over time, dynamic EEG feature normalization is essential. Recently, the median decaying memory (MDM) approach was determined to be the best method of normalization. MDM uses a sliding baseline buffer of EEG epochs to calculate feature normalization constants. However, while this buffer does include non-seizure EEG epochs, it also includes EEG activity that can have a detrimental effect on the normalization and subsequent seizure detection performance. In this study, the EEG data to be incorporated into the baseline buffer are selected automatically by a novelty detection algorithm (Novelty-MDM). Performance of an SVM-based seizure detection framework is evaluated in 17 long-term ICU registrations using the area under the sensitivity–specificity ROC curve. This evaluation compares three different EEG normalization methods, namely a fixed baseline buffer (FB), the median decaying memory (MDM) approach, and our novelty median decaying memory (Novelty-MDM) method. It is demonstrated that MDM did not improve overall performance compared to FB (p = 0.27), partly because seizure-like episodes were included in the baseline. More importantly, Novelty-MDM significantly outperforms both FB (p = 0.015) and MDM (p = 0.0065).


1 Introduction

Nowadays, continuous electroencephalographic monitoring (cEEG) of critically ill patients is an established procedure in intensive care units (ICU). Quantitative EEG (qEEG) allows many hours of EEG data to be compressed onto a graph, greatly reducing the amount of time necessary to detect seizures and transient EEG changes [18]. Up to 48 % of ICU patients experience non-convulsive seizures (NCS), much more than would be detected by clinical observation alone [5, 8, 23, 26, 32]. If those seizures are not detected, and treatment is thus not offered, those patients may suffer brain damage. Therefore, automated seizure detection methods based on qEEG would be an important addition to the cEEG procedure. In past years, numerous seizure detection methods have been developed [1, 2, 7, 12, 21, 24, 29]. Research has mainly focused on two aspects of automatic seizure detection: EEG feature computation and methodological aspects of classification [24]. Due to time-varying EEG dynamics and high variability in EEG characteristics between patients, reliable automated seizure detection is difficult [15]. Because of this, feature normalization is essential for patient-independent epileptic seizure detection. Some research has evaluated the influence of non-stationary EEG background activity on seizure detection [3, 20]. We recently introduced a feature baseline correction (FBC) procedure which reduces inter-patient variability by correcting for differences in background EEG characteristics [3]. FBC is a feature normalization method based on visual inspection wherein a seizure-free EEG segment is selected at the start of a monitoring session. However, during long-term cEEG, background EEG changes and differences between different patients can be of an equivalent magnitude. Therefore, feature normalization should be applied in a dynamic time-dependent manner [20]. 
EEG baseline variation may be due to circadian rhythm, changes in the state of the patient’s vigilance, a response to medication, or changes in EEG recording quality, e.g. changing electrode-tissue impedances [9, 15]. For optimal FBC functioning, the non-seizure EEG baseline segment needs to be updated to adapt to changes in the background EEG. Logesparan et al. [20] showed that a normalization procedure based on median decaying memory (MDM) might be a promising normalization method. It computes a feature normalization factor NF, based on an on-going unsupervised update of the baseline EEG buffer. However, during long-term cEEG, artefacts, short-duration epileptiform events, and multiple successive seizures can be numerous. Because an unsupervised baseline update might then corrupt the NF calculation and thereby hamper seizure detection performance, the question arises whether unsupervised MDM is robust enough when cEEG conditions are less than optimal. Our hypothesis is that a semi-supervised baseline update may significantly improve MDM performance. The improvement our MDM approach offers is based upon our method’s ability to automatically reject EEG epochs from the baseline buffer. To accomplish this, we implement a novelty detection algorithm. Novelty detection classifies test data that differ in some respect from data available during training [22]. A novelty detector trained on the current baseline segment is used to detect ‘Novel’ epochs. These epochs differ significantly from the current baseline epochs and thus most likely contain artefacts or EEG patterns not similar to actual baseline EEG activity. Consequently, only epochs classified as ‘Non-Novel’ are used to update the baseline buffer. With this updated baseline buffer, the feature normalization factor and novelty detector are updated.
Our major aim is to evaluate the effect of MDM feature normalization, with and without ‘Novelty Detection’, on support vector machine (SVM)-based seizure detection performance. Seizure detection performance is evaluated in terms of the area under the receiver operating characteristic (ROC) curve. To complete our study, the standard fixed baseline method [3] is compared to MDM and Novelty-MDM.

2 Materials and methods

2.1 EEG test dataset

The test dataset consists of 53 cEEG registrations recorded as part of an ongoing ICU monitoring study. At our hospital’s general ICU, patients in a comatose state due to central neurological damage (GCS ≤ 8) were prospectively enrolled in a non-blinded, non-randomized observational study between January 2011 and March 2014. This study was approved by our hospital’s ethical committee. With informed consent, and permission from each patient’s legal representatives, cEEG was performed. To be included in this study, patients had to have been admitted to the ICU, be 18 years of age or older, have central neurological damage and be in a coma (GCS ≤ 8), and EEG electrode placement had to be feasible. Patients were selected after consulting the neurologist or neurosurgeon responsible for their treatment. During their EEG registration, 17 out of the 53 patients experienced convulsive and/or non-convulsive seizures due to various aetiologies (Table 1). The total number of seizures in the dataset was 1362 and varied per patient from 1 to 384 with a median number of 49 seizures. The minimal duration of a seizure to be annotated as such was 10 s, as is recommended by the International Federation of Clinical Neurophysiology [6]. The total duration of EEG registration was 4018 h (median duration: 66 h, range 5–210 h). EEG registration was stopped as soon as the GCS rose above 8. Electrodes were placed according to the international 10–20 electrode configuration system, with 19 active electrodes. Signals were processed in the common average derivation. Seizure detection was performed retrospectively while simulating online detection.

2.2 Feature extraction

EEG signals were recorded at a sampling frequency of 250 Hz, band-pass filtered between 0.5 and 32 Hz and subsequently down-sampled to 25 Hz. Each of the 19 EEG channels was then partitioned into 10-s epochs with a 5-s (50 %) overlap between epochs. From each epoch in each common-average referenced EEG channel, 103 quantitative features were extracted [13, 30]. These features stem from different signal description domains such as time, frequency, and information theory and are listed in Table 2. Each EEG epoch is thus described by a 103-element feature vector per channel.
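The epoching step can be illustrated with a minimal sketch. The function name `split_into_epochs` and its parameters are hypothetical and not taken from the study; the defaults simply mirror the values stated above (25 Hz after down-sampling, 10-s epochs, 5-s step).

```python
def split_into_epochs(signal, fs_hz=25, epoch_s=10, step_s=5):
    """Return overlapping epochs from a single-channel signal (any sequence)."""
    epoch_len = int(epoch_s * fs_hz)   # samples per epoch
    step = int(step_s * fs_hz)         # hop between consecutive epoch starts
    last_start = len(signal) - epoch_len
    # An incomplete trailing epoch is dropped; a too-short signal gives [].
    return [signal[i:i + epoch_len] for i in range(0, last_start + 1, step)]


# One minute of dummy samples at 25 Hz: epochs start every 125 samples.
epochs = split_into_epochs(list(range(60 * 25)))
print(len(epochs), len(epochs[0]))
```

With a 50 % overlap, each sample (apart from those at the edges) contributes to two consecutive epochs, which is why one minute of signal yields more than six epochs.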

2.3 Baseline buffer selection

At the start of each monitoring session, an EEG expert selected 3 min of artefact- and seizure-free EEG. This EEG segment served as a baseline buffer and was used to calculate feature baseline values (F bsl). Due to various aetiologies, this baseline EEG was allowed to contain non-seizure abnormalities such as periodic discharges, sharp spikes, or a burst-suppression pattern. To restore EEG signal quality, electrode maintenance was often necessary. Because EEG signals registered before electrode restoration can differ markedly from those registered after electrode restoration, a new 3-min baseline was manually chosen each time electrode maintenance was performed.

2.4 MDM-based feature baseline update

The median decaying memory approach [20] is used to update a feature baseline value F bsl for each feature in each channel separately. Updating consists of a weighted average of the median feature value F of the K epochs currently in the buffer and the previous value of F bsl:

\(F_{\text{bsl}}(t) = (1 - \lambda)\,\text{median}\left(F_{t-K+1}, \ldots, F_{t}\right) + \lambda\,F_{\text{bsl}}(t-1) \quad (1)\)

Equation 1 has two free parameters: buffer size K and λ. Additional memory beyond the median calculation of the K epochs is provided by λ. Optimal results [20] were obtained using a buffer size of K = 236 and λ = 0.99 corresponding to a memory of several minutes (the effect of a single value decays to 1 % in about 15 min for λ = 0.99). In our study, features were calculated for 10-s epochs and baseline update was performed every minute. This approach differs from the approach used by Logesparan et al. [20] who used an epoch duration and update interval of both 1 s. To save computational time, a 1 min update interval was used since feature normalization has to be computed online for each of the 103 features and for each of the 19 channels. Because of the aforementioned difference in update interval and epoch duration, different values for λ and K had to be used. To match the same original decaying rate, a value of λ = 0.72 had to be used instead of 0.99. Similarly for a buffer size within the optimal range (236 s and above), a buffer size of K = 50 epochs (with 50 % overlap) was used, corresponding to the value 250 used by Logesparan.
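As a rough illustration of the update rule, the sketch below assumes that Eq. 1 has the usual weighted form, (1 − λ) times the buffer median plus λ times the previous baseline value. The function name `mdm_update` and the feature values are made up. For brevity the baseline is updated after every appended value, whereas the study updates it once per minute.

```python
from collections import deque
from statistics import median


def mdm_update(f_bsl_prev, buffer, lam=0.72):
    """One MDM step: blend the buffer median with the previous baseline value.

    Assumed form: F_bsl = (1 - lam) * median(buffer) + lam * F_bsl_prev.
    """
    return (1.0 - lam) * median(buffer) + lam * f_bsl_prev


buffer = deque(maxlen=50)   # K = 50: the oldest epoch is dropped once the buffer is full
f_bsl = 1.0                 # hypothetical initial value from the expert-selected baseline
for value in [1.0, 1.2, 0.9, 5.0, 1.1]:   # made-up feature values, one outlier
    buffer.append(value)
    f_bsl = mdm_update(f_bsl, buffer)
print(f_bsl)
```

Using the median rather than the mean keeps a single outlying epoch (5.0 here) from pulling the baseline far away, but it offers no protection once outlying epochs make up a large share of the buffer.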

2.5 Novelty detection using principal component analysis

In contrast to MDM, where F bsl is calculated using the 50 most recent epochs (Eq. 1), Novelty-MDM applies a procedure to select epochs for calculating F bsl. Instead of the most recent 50 epochs, the 50 most recently selected epochs were used to calculate F bsl. Epoch selection was performed by a novelty detector based on principal component analysis (PCA) [16]. This PCA model was trained on the epochs in the actual baseline buffer to detect non-similar epochs.

2.6 Principal component analysis

PCA is a statistical procedure that uses an orthogonal transformation \(T = XW\) to map a set of feature vectors X with correlated variables (EEG features) into a set of new uncorrelated variables called principal components [17]. These principal components are sorted so that the first component accounts for the greatest amount of variance, the second component for the second greatest amount, and so on. Dimensionality reduction is performed by retaining only the first L components: \(T_{\text{L}} = XW_{\text{L}}\). The original observations can now be reconstructed using only the first L components: \(X_{\text{L}} = T_{\text{L}} W_{\text{L}}^{T}\). The total squared reconstruction error \(E_{\text{rec}} = \left\| X - T_{\text{L}} W_{\text{L}}^{T} \right\|_{2}^{2}\) can be used as a novelty measure. The idea behind this novelty detector is that the reconstruction error will be small for observations that originate from the same distribution as the observations X used to identify the principal components W. Larger reconstruction errors are expected for observations from another distribution.
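The reconstruction error can be sketched with NumPy as follows. This is an illustrative example on synthetic data, not the authors' implementation; the helper names `fit_pca` and `reconstruction_error` are hypothetical, and the principal directions are obtained from a singular value decomposition of the mean-centred data.

```python
import numpy as np


def fit_pca(X, n_components):
    """Return (mean, W_L); W_L holds the first L principal directions as columns."""
    mean = X.mean(axis=0)
    # Rows of Vt are principal directions, ordered by decreasing variance.
    _, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, Vt[:n_components].T


def reconstruction_error(x, mean, W_L):
    """Squared error between x and its reconstruction from L components."""
    centred = x - mean
    reconstructed = centred @ W_L @ W_L.T
    return float(np.sum((centred - reconstructed) ** 2))


rng = np.random.default_rng(0)
# Synthetic observations lying close to a 2-D plane in a 6-D feature space.
X = rng.normal(size=(50, 2)) @ rng.normal(size=(2, 6)) + 0.01 * rng.normal(size=(50, 6))
mean, W_L = fit_pca(X, n_components=2)
err_similar = reconstruction_error(X[0], mean, W_L)
err_novel = reconstruction_error(X[0] + 5.0 * rng.normal(size=6), mean, W_L)
print(err_similar, err_novel)
```

An observation from the training distribution is reconstructed almost exactly, while a perturbed observation leaves a large residual outside the retained subspace.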

2.7 Novelty-MDM implementation

Novelty-MDM is performed per EEG channel separately, starting with the manually selected initial buffer of non-seizure EEG epochs. This buffer is represented by a matrix where each row represents an epoch and each column one of the 103 calculated EEG features. Due to differences in magnitude between different features, each feature is first normalized by subtracting its mean and dividing by its standard deviation. The normalized matrix is then used to calculate the PCA model. The ‘expected reconstruction error’ is calculated using a leave-one-out (LOO) procedure. Excluding one epoch at a time, a PCA model is trained on the remaining feature vectors, after which the reconstruction error is calculated for the left-out epoch. This is repeated for each epoch, and the average reconstruction error of this LOO procedure is used to determine a threshold to classify new epochs as similar or novel. If the reconstruction error of a new epoch exceeds Tr times the expected reconstruction error, the epoch is classified as novel. The values Tr = 2 and L = 5 were chosen heuristically. Regarding Tr, the reconstruction error usually ranged between 1 and 2 times the expected reconstruction error. For artifactual epochs, the reconstruction error was much larger (in the range of 20–200 or even above). Regarding the number of principal components, inclusion of more than 5 did not relevantly change the calculated reconstruction errors. This is to be expected because the amount of variance each component accounts for decays exponentially.
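The leave-one-out threshold described above could look roughly like the sketch below. It is a simplified illustration under stated assumptions: the function names are hypothetical, the data are random stand-ins (35 epochs with 10 features rather than 103), and z-scoring is redone for each training subset, which the text does not specify.

```python
import numpy as np


def pca_error(train, x, n_components):
    """Reconstruction error of x under a PCA model fitted to z-scored train data."""
    mu, sd = train.mean(axis=0), train.std(axis=0)
    sd[sd == 0] = 1.0                       # guard against constant features
    Z = (train - mu) / sd                   # z-scored, hence already centred
    z = (x - mu) / sd
    _, _, Vt = np.linalg.svd(Z, full_matrices=False)
    W = Vt[:n_components].T                 # first L principal directions
    return float(np.sum((z - z @ W @ W.T) ** 2))


def expected_error_loo(buffer, n_components=5):
    """Average leave-one-out reconstruction error over the baseline buffer."""
    errors = [pca_error(np.delete(buffer, i, axis=0), buffer[i], n_components)
              for i in range(len(buffer))]
    return float(np.mean(errors))


def is_novel(buffer, x, expected_error, tr=2.0, n_components=5):
    """Flag x as novel if its error exceeds Tr times the expected error."""
    return pca_error(buffer, x, n_components) > tr * expected_error


rng = np.random.default_rng(1)
buffer = rng.normal(size=(35, 10))          # stand-in for a baseline buffer
expected = expected_error_loo(buffer)
artefact = buffer[0] + 50.0                 # grossly shifted, artefact-like epoch
print(is_novel(buffer, artefact, expected))
```

Because the left-out epoch never contributes to its own PCA model, the averaged error estimates how well the model generalizes to unseen baseline epochs, which makes it a more honest reference level than the in-sample error.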

2.8 Feature baseline correction

Feature baseline correction (FBC) is an EEG feature normalization method introduced by our group [3] which is performed by subtracting a normalization factor N from a raw feature value F:

with

where A and B are parameters estimated from the training data set. A more detailed description of FBC can be found in our recent paper [3].

2.9 Seizure detection framework

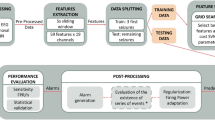

The different seizure detection frameworks evaluated in this study are illustrated in Fig. 1. For each single EEG channel epoch, a set of 103 features is computed. These features are then normalized according to Eq. 2, after which the normalized feature vector is classified by an SVM classifier as either seizure or non-seizure.

Figure 1a illustrates the use of a fixed baseline segment to calculate F bsl. Figure 1b illustrates MDM, with the trivial exception that feature vectors successfully classified as seizure are excluded from the baseline. The Novelty-MDM approach is illustrated in Fig. 1c. With Novelty-MDM, feature vectors classified as non-seizure are subsequently classified by the novelty detector as either ‘Novel’ or ‘Non-Novel’. Only feature vectors classified as ‘Non-Novel’ are incorporated into the baseline buffer. Using the median decaying memory approach, a new set of normalization factors is calculated based on the updated baseline buffer. Based on this updated baseline buffer, a new novelty detector is calculated. The updated normalization factors and novelty detector are then applied to subsequent epochs.
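The gating logic of the Novelty-MDM baseline update can be summarized in a few lines. This is only a sketch of the selection step; the function name and the scalar "feature" values are hypothetical, and the seizure and novelty decisions are passed in as precomputed flags.

```python
from collections import deque


def update_baseline_buffer(buffer, epoch_features, is_seizure, is_novel):
    """Append the epoch only if it is neither seizure nor novel.

    Returns True when the epoch was added to the buffer.
    """
    if is_seizure or is_novel:
        return False
    buffer.append(epoch_features)   # a full deque drops its oldest entry
    return True


buffer = deque([0.0] * 50, maxlen=50)   # the 50 most recently selected epochs
update_baseline_buffer(buffer, 1.0, is_seizure=False, is_novel=False)  # accepted
update_baseline_buffer(buffer, 9.9, is_seizure=False, is_novel=True)   # rejected
update_baseline_buffer(buffer, 8.8, is_seizure=True, is_novel=False)   # rejected
print(len(buffer), buffer[-1])
```

Dropping the `is_novel` check reduces this to the MDM variant of Fig. 1b, in which only epochs classified as seizure are kept out of the baseline.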

2.10 SVM seizure detection performance evaluation

The SVM seizure detector was trained on a randomly chosen subset of epochs from a set of routine EEG registrations from 39 neonatal patients. This classifier was readily at our disposal and, although it was trained on neonatal EEG, can also successfully be used for seizure detection in adults [10]. Because the random selection of the training data set may introduce variance in performance metrics, Monte-Carlo simulations were performed by training 25 classifiers on 25 random training subsets. Seizure detection performance was subsequently evaluated for these 25 classifiers using the test EEG dataset of 17 ICU patients. The average and standard deviation of the performance per patient allow for statistical testing to determine differences between the three seizure detection frameworks. From our ICU dataset, the 17 patients with seizures were used to evaluate seizure detection performance using the three feature normalization methods: fixed baseline (FB), MDM, and Novelty-MDM.

The seizure detection procedure was applied to each channel separately. If a seizure was detected in at least one channel, the complete epoch was classified as seizure.
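The channel-combination rule amounts to a logical OR over channels. In the sketch below, the function name is hypothetical and the default threshold of 0.5 is an assumption used for illustration only.

```python
def epoch_is_seizure(channel_scores, threshold=0.5):
    """True if the classifier output of at least one channel exceeds the threshold."""
    return any(score > threshold for score in channel_scores)


# Made-up classifier outputs for 19 channels; one channel crosses the threshold.
scores = [0.1] * 18 + [0.7]
print(epoch_is_seizure(scores))
```

This rule favours sensitivity: a single noisy channel is enough to flag the whole epoch, so false detections in any channel carry over to the epoch-level decision.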

To evaluate classifier performance, an ROC curve was obtained per EEG registration by plotting sensitivity versus specificity for all possible detection thresholds [11]. The area under this curve (AUC) was then used as a measure of the performance. AUC range is between 0.5 for random and 1 for perfect classification. AUC values were calculated per patient, and final performance measures were obtained by taking the average of the 25 AUC values. Group differences between the 3 seizure detection frameworks were tested for statistical significance using the nonparametric Wilcoxon signed rank test. Differences in performance per patient were tested for statistical significance using a paired t test. p values below 0.05 were considered statistically significant.
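For illustration, the AUC can be computed without explicitly tracing the ROC curve, since it equals the probability that a randomly chosen seizure epoch receives a higher score than a randomly chosen non-seizure epoch (ties counted as one half). The function below is a small stand-alone sketch with made-up scores, not the evaluation code used in the study.

```python
def roc_auc(scores, labels):
    """AUC via pairwise comparison of seizure (1) and non-seizure (0) scores."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one epoch of each class")
    # Count pairs where the seizure epoch outscores the non-seizure epoch.
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


print(roc_auc([0.9, 0.8, 0.3, 0.1], [1, 1, 0, 0]))   # perfectly separated scores
print(roc_auc([0.9, 0.2, 0.8, 0.1], [1, 1, 0, 0]))   # one seizure epoch scored low
```

The pairwise formulation is quadratic in the number of epochs, so for long registrations a rank-based or library implementation would be the more practical choice.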

3 Results

Looking at each individual patient, seizure detection performance was highest for Novelty-MDM in 8/17 patients, highest for MDM in 4/17 and highest for FB in 3/17 patients. For a single patient, all three methods resulted in the same performance and for another single patient either FB or Novelty-MDM performed best. The Bland–Altman plots in Fig. 2 show performance differences between all three methods by plotting method to method performance difference versus their average performance. Specific AUC value distributions for each of the 3 normalization procedures are shown in Fig. 3. Average AUC values per patient and per normalization method can be found in the appendix.

Fig. 2 Mean difference plots of the AUC values per patient. Mean AUC values of two methods are plotted against their difference. Each circle represents the median value of the 25 Monte-Carlo simulations, and the red bars indicate the corresponding 25th and 75th percentile values. Horizontal lines indicate the group median (broken line) and the 25th and 75th percentile values (solid lines). a Group difference MDM versus FB (p = 0.27). b Group difference Novelty-MDM versus FB (p = 0.015). c Group difference Novelty-MDM versus MDM (p = 0.0065). Non-significant differences are indicated with ns (colour figure online)

3.1 MDM versus FB

To compare MDM versus FB feature normalization, Fig. 2a shows MDM minus FB AUC values versus their mean ((MDM + FB)/2) per patient. Ten patients showed improved performance, 5 patients decreased performance and for the remaining 2 patients, no statistically significant difference was found. In the complete group, MDM performance was not significantly different from FB (p = 0.27, Wilcoxon signed rank test).

3.2 Novelty-MDM versus FB

Overall, Novelty-MDM was significantly better compared to FB (p = 0.015). Figure 2b shows that Novelty-MDM achieved higher performance for 11 patients, lower performance for 4 and equal performance for the remaining two patients.

3.3 Novelty-MDM versus MDM

The numbers presented so far showed that both MDM and Novelty-MDM outperformed FB in the majority of patients, although only Novelty-MDM did so significantly at the group level. Patient-specific Novelty-MDM versus MDM differences in classification performance are shown in Fig. 2c. Overall, Novelty-MDM performed better than MDM (p = 0.0065). Higher performance was found in 13 patients and lower performance in the remaining 4 patients. Detailed evaluation of the latter four cases revealed that this lowered performance was due to the presence of periodic epileptiform discharges (PED) and can be explained by the illustration in Fig. 4. It shows that a correctly detected seizure was followed by an episode with PED. Novelty-MDM classified more epochs as seizure compared to MDM. This is because at the start of the PED episode, MDM included these epochs in the baseline buffer, whereas Novelty-MDM did not. As a result, in the case of MDM, the subsequent epochs containing PED were more similar to the baseline and, consequently, more likely to be classified as non-seizure. Novelty-MDM, on the other hand, excluded these epochs with PED because they were marked as ‘novel’, as can be seen in the bottom plot. As a result, the subsequent PED epochs were less similar to the baseline and consequently became more likely to be classified as seizure.

Fig. 4 SVM classifier output for MDM (a) and Novelty-MDM (b). A detection threshold of 0.5 was used, as indicated by the horizontal lines. The novelty detection score (reconstruction error) is shown in subplot (c). The green rectangle indicates an episode with seizures; the red rectangle indicates an episode with PED. The yellow circle indicates an episode of epochs that are classified as novel. The first half of this episode contains numerous electrode artefacts and the second half contains PED. Consequently, Novelty-MDM does not update the baseline during this episode because the artifactual and PED epochs were classified as Novel (colour figure online)

4 Discussion

4.1 Dynamic feature normalization

To correct for changing EEG characteristics and differences between patients, various feature normalization methods have been evaluated by Logesparan et al. [20]. Best performance was achieved by feature normalization using the median decaying memory (MDM) method. MDM uses the median feature value of a sliding baseline buffer for normalization. However, during long-term EEG monitoring, especially in an intensive care unit, seizures, artefacts, epileptiform activity, and other abnormal EEG patterns can be numerous. These patterns might contaminate the baseline buffer and by that, reduce seizure detection performance. Our study provides evidence that MDM is not robust enough for reliable dynamic feature normalization during long-term ICU EEG monitoring. We conclude this from the observation that in our 17 patients, MDM did not result in overall better performance compared to feature normalization with a fixed baseline. However, application of our newly introduced Novelty-MDM approach did result in an overall increase in performance both with regard to FB and MDM normalization. Novelty-MDM is based on a selection procedure to prevent erroneous epochs being included into the baseline buffer thereby improving feature normalization and subsequent seizure detection.

Although Novelty-MDM resulted in better performance for the majority (13/17) of patients, in four patients performance was lower compared to MDM. In three of these four patients, the decrease was due to false detection of epochs containing periodic epileptiform discharges (PED). This finding can be explained by the different behaviour of MDM versus Novelty-MDM. In the presence of PED, whose feature values lie between those of background EEG and seizure EEG activity, MDM includes the PED epochs in the baseline buffer, making it more ‘seizure-like’ and thereby hampering seizure detection. Novelty-MDM, on the other hand, rejects these PED epochs and in this way prevents contamination of the ‘background EEG’ baseline buffer. As a result, MDM becomes less sensitive but more specific in the presence of PED, whereas Novelty-MDM becomes more sensitive but less specific. However, the presence of PED does not necessarily result in lower performance for Novelty-MDM. In fact, better performance was found in four other patients with PED (patients 6, 7, 10 and 17). Whether the presence of PED results in higher or lower AUC values might depend on the relative number of seizure/PED epochs, their occurrence in time relative to each other and the severity of the epileptiform activity, i.e. how closely it resembles true seizure activity. This illustrates that our current seizure detection algorithm is not always accurate enough to distinguish between epileptiform and seizure activity. To improve on this, more sophisticated algorithms are needed [28]. However, the underlying problem is that seizure detection is approached as a two-class problem instead of a multi-class problem (non-seizure, seizure, epileptiform activity, and possibly other EEG phenomena). A restriction in this regard is that even an EEG expert cannot always make a clear distinction between epileptiform and epileptic activity [25]. This fundamental issue will always remain and thereby limit reliable automated seizure detection.

4.2 Implications, limitations and future research

This paper focused on challenges met in robust dynamic feature normalization applied in automated seizure detection algorithms. So far, MDM feature normalization using a sliding baseline did not take into account the presence of EEG patterns that might corrupt feature normalization. Our results have shown that a non-selective baseline update can have a negative effect on classification performance. Baseline epoch selection using a PCA-based novelty detector is a candidate solution to this problem. In cases where Novelty-MDM resulted in lower performance, it became clear that this was due to epochs containing epileptiform activity. Future research should focus on distinguishing epileptiform EEG from epileptic EEG. In the setting of seizure versus non-seizure classification, detection of epileptiform activity, in particular PED, is considered a false detection. However, PED detection could also be considered useful because PED are associated with a higher risk of seizures [4, 19]. Other sources that may cause lower detection performance, apart from electrode failure and muscle and movement artefacts, are patterns that are rhythmic in nature but do not represent seizure activity. Two of our patients had long-lasting periods of frontal intermittent rhythmic delta activity (FIRDA) which were falsely detected as seizure. If such EEG patterns persistently trigger false detections, future improvements incorporating these patterns into the SVM seizure detection algorithm as non-seizure would be of great value. Moreover, incorporating patient-specific seizure information during online monitoring has been shown to improve seizure detection performance [14, 27, 31]. Further research could be of great value when focused on incorporating various types of patient-specific information, such as seizure and background EEG data, as well as EEG patterns that caused false detections.

References

Aarabi A, Wallois F, Grebe R (2006) Automated neonatal seizure detection: a multistage classification system through feature selection based on relevance and redundancy analysis. Clin Neurophysiol 117:328–340

Aarabi A, Grebe R, Wallois F (2007) A multistage knowledge-based system for EEG seizure detection in newborn infants. Clin Neurophysiol 118:2781–2797

Bogaarts JG, Gommer ED, Hilkman DMW, van Kranen-Mastenbroek VHJM, Reulen JPH (2014) EEG feature pre-processing for neonatal epileptic seizure detection. Ann Biomed Eng 42(11):2360–2368. doi:10.1007/s10439-014-1089-2

Chong DJ, Hirsch LJ (2005) Which EEG patterns warrant treatment in the critically ill? Reviewing the evidence for treatment of periodic epileptiform discharges and related patterns. J Clin Neurophysiol 22:79–91

Claassen J, Mayer SA, Kowalski RG, Emerson RG, Hirsch LJ (2004) Detection of electrographic seizures with continuous EEG monitoring in critically ill patients. Neurology 62:1743–1748

De Weerd AW, Despland PA, Plouin P (1999) Neonatal EEG. The International Federation of Clinical Neurophysiology. Electroencephalogr Clin Neurophysiol 52:149–157

Deburchgraeve W, Cherian PJ, De Vos M, Swarte RM, Blok JH, Visser GH, Govaert P, Van Huffel S (2008) Automated neonatal seizure detection mimicking a human observer reading EEG. Clin Neurophysiol 119:2447–2454

DeLorenzo RJ, Waterhouse EJ, Towne AR, Boggs JG, Ko D, DeLorenzo GA, Brown A, Garnett L (1998) Persistent nonconvulsive status epilepticus after the control of convulsive status epilepticus. Epilepsia 39:833–840

Duun-Henriksen J, Kjaer TW, Madsen RE, Remvig LS, Thomsen CE, Sorensen HBD (in press) Channel selection for automatic seizure detection. Clin Neurophysiol

Faul S, Temko A, Marnane W (2009) Age-independent seizure detection. Conference on Proceedings of IEEE Engineering in Medicine and Biology Society vol 5, pp 533–553

Fawcett T (2004) ROC graphs: notes and practical considerations for researchers. Technical report HPL-2003-4

Gotman J, Flanagan D, Zhang J, Rosenblatt B (1997) Automatic seizure detection in the newborn: methods and initial evaluation. Electroencephalogr Clin Neurophysiol 103:356–362

Greene BR, Faul S, Marnane WP, Lightbody G, Korotchikova I, Boylan GB (2008) A comparison of quantitative EEG features for neonatal seizure detection. Clin Neurophysiol 119:1248–1261

Haas SM, Frei MG, Osorio I (2007) Strategies for adapting automated seizure detection algorithms. Med Eng Phys 29:895–909

Hartmann MM, Furbass F, Perko H, Skupch A, Lackmayer K, Baumgartner C, Kluge T (2011) EpiScan: online seizure detection for epilepsy monitoring units. Engineering in Medicine and Biology Society, EMBC, 2011 annual international conference of the IEEE, pp 6096–6099

Hoffmann H (2007) Kernel PCA for novelty detection. Pattern Recognit 40:863–874

Jolliffe IT (1986) Principal component analysis. Springer, Berlin

Kennedy J, Gerard E (2012) Continuous EEG monitoring in the intensive care unit. Curr Neurol Neurosci Rep 1–10

Lawrence J, Hirsch RPB (2010) Atlas of EEG in Critical care

Logesparan L, Rodriguez-Villegas E, Casson AJ (2015) The impact of signal normalization on seizure detection using line length features. Med Biol Eng Comput 53:929–942. doi:10.1007/s11517-015-1303-x

Navakatikyan MA, Colditz PB, Burke CJ, Inder TE, Richmond J, Williams CE (2006) Seizure detection algorithm for neonates based on wave-sequence analysis. Clin Neurophysiol 117:1190–1203

Pimentel MAF, Clifton DA, Clifton L, Tarassenko L (2014) A review of novelty detection. Signal Process 99:215–249

Privitera M, Hoffman M, Moore JL, Jester D (1994) EEG detection of nontonic-clonic status epilepticus in patients with altered consciousness. Epilepsy Res 18:155–166

Ramgopal S, Thome-Souza S, Jackson M, Kadish NE, Sánchez Fernández I, Klehm J, Bosl W, Reinsberger C, Schachter S, Loddenkemper T (2014) Seizure detection, seizure prediction, and closed-loop warning systems in epilepsy. Epilepsy Behav 37:291–307

Ronner HE, Ponten SC, Stam CJ, Uitdehaag BMJ (2009) Inter-observer variability of the EEG diagnosis of seizures in comatose patients. Seizure. 18:257–263

Scheuer ML (2002) Continuous EEG monitoring in the intensive care unit. Epilepsia 3:114–127

Shoeb A, Bourgeois B, Treves S, Schachter SC, Guttag J (2007) Impact of patient-specificity on seizure onset detection performance. Conference on Proceedings of IEEE Conferences. Engineering in Medicine and Biology Society, 2007, p 4110-4114

Sierra-Marcos A, Scheuer ML, Rossetti AO (2015) Seizure detection with automated EEG analysis: a validation study focusing on periodic patterns. Clin Neurophysiol 126:456–462

Temko A, Thomas E, Marnane W, Lightbody G, Boylan G (2011) EEG-based neonatal seizure detection with support vector machines. Clin Neurophysiol 122:464–473

Temko A, Nadeu C, Marnane W, Boylan GB, Lightbody G (2011) EEG signal description with spectral-envelope-based speech recognition features for detection of neonatal seizures. IEEE Trans Inf Technol Biomed 15:839–847

Thomas EM, Greene BR, Lightbody G, Marnane WP, Boylan GB (2008) Seizure detection in neonates: improved classification through supervised adaptation. EMBS 2008, 30th annual international conference of the IEEE Engineering in Medicine and Biology Society, pp 903–906

Towne AR, Waterhouse EJ, Boggs JG, Garnett LK, Brown AJ, Smith JR Jr, DeLorenzo RJ (2000) Prevalence of nonconvulsive status epilepticus in comatose patients. Neurology 54:340–345

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Bogaarts, J.G., Hilkman, D.M.W., Gommer, E.D. et al. Improved epileptic seizure detection combining dynamic feature normalization with EEG novelty detection. Med Biol Eng Comput 54, 1883–1892 (2016). https://doi.org/10.1007/s11517-016-1479-8


DOI: https://doi.org/10.1007/s11517-016-1479-8