Abstract

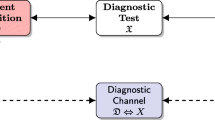

Diagnostic test accuracy, based on sensitivity, specificity, and positive/negative predictive values (dichotomous case) and on ROC analysis (continuous case), should be expressed with a single, coherent index. We propose to model the diagnostic test as a flow of information between the disease, that is, a hidden state of the patient, and the physician. We assume that (1) sensitivity, specificity, and false-positive/false-negative rates are the transition probabilities of a binary asymmetric channel; (2) the information carried by the diagnostic channel is measured by mutual information. We introduce two summary measures of accuracy, namely the information ratio (IR) for the dichotomous case and the global information ratio (GIR) for the continuous case. We apply our model to a study by Pisano et al. (N Engl J Med 353(17):1773–1783, 2005), who compared digital versus film mammography in diagnosing breast cancer in a screening population of 42,760 women. In film mammography, the maximum IR (0.178) corresponds to the standard cutoff of sensitivity and specificity provided by the ROC analysis (GIR 0.200). Maximum IR and GIR for digital mammography are higher (0.201 and 0.229, respectively), but the maximum IR corresponds to a cutoff with higher sensitivity and lower specificity, suggesting that the larger amount of information provided by digital mammography comes with a risk of more false-positive cases.

Notes

In the special case of communication channels, the matrix is usually symmetric (\(\alpha =\beta\)), since the system has a symmetric behavior with respect to \(0\) or \(1\).

From the mathematical point of view, \(I(D,R)\) is not a distance, because the symmetry and the triangle inequality are not satisfied.

Standard accuracy sums up the fraction of good reports with respect to the total number of reports. It corresponds to the probability of a correct (positive or negative) diagnosis, that is, \((\hbox {TP}+\hbox {TN})/(\hbox {TP}+\hbox {FP}+\hbox {TN}+\hbox {FN})\).

In the Shannon context, this is a special case of the so-called useless channel, characterized by the relation \(\beta = 1-\alpha\), which leads to a transition matrix with two identical rows.
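The useless-channel condition in the last note can be checked numerically. The sketch below is only illustrative: it assumes that the rows of the transition matrix are \([1-\alpha, \alpha]\) and \([\beta, 1-\beta]\) (the exact layout is defined in the main text, not here), and the function name and the numerical values are hypothetical.

```python
from math import log2

def channel_information(alpha, beta, p1):
    """Mutual information (bits) of a binary channel.

    Assumed layout: row for input 0 is [1 - alpha, alpha],
    row for input 1 is [beta, 1 - beta]; p1 is P(input = 1).
    """
    rows = {0: [1 - alpha, alpha], 1: [beta, 1 - beta]}
    p_in = {0: 1 - p1, 1: p1}
    # Marginal distribution of the output
    p_out = [sum(p_in[d] * rows[d][r] for d in (0, 1)) for r in (0, 1)]
    info = 0.0
    for d in (0, 1):
        for r in (0, 1):
            joint = p_in[d] * rows[d][r]
            if joint > 0:
                info += joint * log2(rows[d][r] / p_out[r])
    return info

# beta = 1 - alpha: the two rows coincide, so the output says nothing about the input
useless = channel_information(alpha=0.3, beta=0.7, p1=0.2)
# A generic asymmetric channel with distinct rows, for comparison
noisy = channel_information(alpha=0.1, beta=0.2, p1=0.2)
print(useless, noisy)
```

With identical rows, every term of the sum has a logarithm of (numerically) zero, so the mutual information vanishes whatever the input distribution.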

References

Aczél J, Daróczy Z (1975) On measures of information and their characterizations. Mathematics in science and engineering, vol 115. Academic Press, London

Amblard P, Michel OJJ, Morfu S (2005) Revisiting the asymmetric binary channel: joint noise-enhanced detection and information transmission through threshold devices. In: Kish LB, Lindenberg K, Zoltan G (eds) Proceedings of SPIE 5845, noise in complex systems and stochastic dynamics III

Berrar D, Flach P (2012) Caveats and pitfalls of ROC analysis in clinical microarray research (and how to avoid them). Brief Bioinform 13(1):83–97

Bewick V, Cheek L, Ball J (2004) Statistics review 13: receiver operating characteristic curves. Crit Care 8(6):508–512

Cover TM, Thomas JA (1991) Elements of information theory. Wiley, New York

El Khouli RH, Macura KJ, Kamel IR, Jacobs MA, Bluemke DA (2011) 3-T dynamic contrast-enhanced MRI of the breast: pharmacokinetic parameters versus conventional kinetic curve analysis. Am J Roentgenol 197(6):1498–1505

Fabris F (2002) Shannon information theory and molecular biology. J Interdisc Math 5:203–220

Flach P (2010) ROC analysis. In: Sammut C, Webb GI (eds) Encyclopedia of machine learning. Springer, Berlin, pp 869–874

Fluss R, Faraggi D, Reiser B (2005) Estimation of Youden index and its associated cutoff point. Biometrical J 47:458–472

Gehlbach SH (1993) Interpretation: sensitivity, specificity, and predictive value. In: Gehlbach SH (ed) Interpreting the medical literature. McGraw-Hill, New York, pp 129–139

Keyl M (2002) Fundamentals of quantum information theory. Phys Rep 369:431–548

Khinchin AI (1957) Mathematical foundations of information theory. Dover, New York

MacKay DJC (2003) Information theory, inference, and learning algorithms. Cambridge University Press, Cambridge

Moser SM (2009) Error probability analysis of binary asymmetric channels. Dept. El. & Comp. Eng., Nat. Chiao Tung Univ

Obuchowski NA (2005) ROC analysis. Am J Roentgenol 184(2):364–372

Obuchowski NA (2003) Receiver operating characteristic curves and their use in radiology. Radiology 229:3–8

Obuchowski NA, Beiden SV, Berbaum KS, Hillis SL, Ishwaran H, Song HH, Wagner RF (2004) Multireader multicase receiver operating characteristic analysis: an empirical comparison of five methods. Acad Radiol 11(9):980–995

Peacock J, Peacock P (2010) Oxford handbook of medical statistics. Oxford University Press, Oxford

Pisano ED, Gatsonis C, Hendrick E, Yaffe M, Baum JK, Acharyya S et al (2005) Diagnostic performance of digital versus film mammography for breast-cancer screening. N Engl J Med 353(17):1773–1783

Posada D, Buckley TR (2004) Model selection and model averaging in phylogenetics: advantages of Akaike information criterion and Bayesian approaches over likelihood ratio tests. Syst Biol 53(5):793–808

Shannon CE (1948) A mathematical theory of communication. Bell Syst Tech J 27:379–423, 623–656

Tononi G (2004) An information integration theory of consciousness. BMC Neurosci 5:42–64

Verdu S (1998) Fifty years of Shannon theory. IEEE Trans Inf Theory 44:2057–2078

Weinstein S, Obuchowski NA, Lieber ML (2005) Clinical evaluation of diagnostic tests. Am J Roentgenol 184(1):14–19

Zhou XH, Obuchowski NA, McClish DK (2002) Statistical methods in diagnostic medicine. Wiley series in probability and statistics. Wiley, New York


Appendix


Here, we discuss some technical points regarding the additional benefits of using the IRC analysis instead of (or in conjunction with) the classical ROC/AUC approach.

The cutoff problem

When dealing with multivalued diagnostic tests, we face the problem of choosing the best decision threshold. Although several approaches to this problem exist, the choice of the optimal cutoff is substantially arbitrary [15] and depends on the context. Here are some examples: (1) From the geometrical point of view, an obvious choice is the point \(P=(1-\hbox {SP},\hbox {SE})\) on the ROC curve that minimizes the Euclidean distance between \(P\) and the upper-left corner of the ROC space, whose coordinates are (0,1). This choice, however, is acceptable in clinical practice only when SE or SP has a high value; the informational approach and Fig. 4 explain why. (2) From the classifier point of view, a reasonable choice is the decision threshold that maximizes the sum \(\hbox {TP}+\hbox {TN}\) [3], or something more elaborate, such as any objective function that is a linear combination of the true- and false-positive rates, optimized via the convex hull [8]. (3) Another possible method is to maximize \(\hbox{SE}+\hbox{SP}\), which is equivalent to using the so-called Youden index [9], that is, the maximum vertical distance between the ROC curve and the diagonal (chance) line. The Youden index thus maximizes the difference between SE and \(1-\hbox {SP}\), that is, \(\hbox {SE}+\hbox {SP}-1\). (4) The clinical practice of the 7-point scale used in the example of Pisano et al. relies on a cutoff essentially based on the semantics of the graded scale.

If we applied all these different criteria to Table 1, we would obtain completely different cutoffs.
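To make the disagreement between criteria concrete, the following sketch applies three of them (distance from the upper-left corner, maximum \(\hbox {TP}+\hbox {TN}\), and Youden index) to a small set of operating points. The points, the prevalence, and the function names are hypothetical and chosen only for illustration; they are not the data of Table 1.

```python
from math import hypot

# Hypothetical operating points (cutoff label, SE, SP); NOT the data of Table 1
points = [("c1", 0.95, 0.60), ("c2", 0.85, 0.80),
          ("c3", 0.72, 0.94), ("c4", 0.40, 0.99)]
prevalence = 0.05  # assumed disease prevalence

def closest_to_corner(pts):
    """Cutoff whose ROC point (1 - SP, SE) is nearest to the corner (0, 1)."""
    return min(pts, key=lambda p: hypot(1 - p[2], 1 - p[1]))[0]

def max_accuracy(pts, prev):
    """Cutoff maximizing (TP + TN) / N = prev * SE + (1 - prev) * SP."""
    return max(pts, key=lambda p: prev * p[1] + (1 - prev) * p[2])[0]

def max_youden(pts):
    """Cutoff maximizing the Youden index SE + SP - 1."""
    return max(pts, key=lambda p: p[1] + p[2] - 1)[0]

chosen = {
    "corner": closest_to_corner(points),
    "accuracy": max_accuracy(points, prevalence),
    "youden": max_youden(points),
}
print(chosen)  # {'corner': 'c2', 'accuracy': 'c4', 'youden': 'c3'}
```

In this toy example the three criteria select three different cutoffs; with a low prevalence, maximizing \(\hbox {TP}+\hbox {TN}\) is dominated by specificity and therefore favors the most conservative threshold.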

Among all these reasonable but arbitrary choices of the optimal cutoff, IRC analysis is the only one that offers a criterion based on maximizing the informational flow between the patient and the clinician, which is the aim of any good diagnostic system. Since there is only one coherent measure of information (recall the uniqueness theorem of Khinchin [12]), this method is the only “objective” one when the same cost is assigned to FP and FN.
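The informational criterion can be sketched in the same spirit. The code below computes the mutual information \(I(D,R)\) of the binary asymmetric channel from SE, SP, and prevalence, and selects the cutoff that maximizes it. The operating points and the prevalence are hypothetical, and the normalization that turns \(I(D,R)\) into the IR is deliberately left out, on the assumption that it depends only on the prevalence and so does not change the maximizing cutoff.

```python
from math import log2

def mutual_information(se, sp, prev):
    """I(D,R) in bits for a dichotomous test with the given SE, SP, prevalence."""
    # Joint probabilities P(D = d, R = r); d, r = 1 mean diseased / positive report
    joint = {
        (1, 1): prev * se,
        (1, 0): prev * (1 - se),
        (0, 1): (1 - prev) * (1 - sp),
        (0, 0): (1 - prev) * sp,
    }
    p_d = {1: prev, 0: 1 - prev}
    p_r = {1: joint[(1, 1)] + joint[(0, 1)],
           0: joint[(1, 0)] + joint[(0, 0)]}
    return sum(p * log2(p / (p_d[d] * p_r[r]))
               for (d, r), p in joint.items() if p > 0)

# Hypothetical operating points (cutoff label, SE, SP) and prevalence
points = [("c1", 0.95, 0.60), ("c2", 0.85, 0.80),
          ("c3", 0.72, 0.94), ("c4", 0.40, 0.99)]
prevalence = 0.05

info = {label: mutual_information(se, sp, prevalence)
        for label, se, sp in points}
best = max(info, key=info.get)
print(best, round(info[best], 3))
```

For these illustrative numbers the information-maximizing cutoff is `c3`; the point of the sketch is only that the criterion is a single, well-defined maximization once SE, SP, and prevalence are given.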

Fallacy of the undistributed middle

ROC curves, although widely used, have at least one significant pitfall, the so-called fallacy of the undistributed middle [8]: all random models score an AUC of 0.5, but not every model that scores an AUC of 0.5 is random; in other words, \(\hbox {AUC}=0.5\) does not necessarily imply that the classifier is no better than random guessing. An interesting example of this situation is given in figure 4a of [3], where the “two-threshold classifier” assumes that a certain quantity \(q\) indicates disease when it is below a threshold \(t_1\) or above a threshold \(t_2>t_1\), while \(q \in \left[ t_1, t_2 \right]\) means normal. Systolic blood pressure or the expression of a gene could be an example of such a situation. If we use ROC analysis, the AUC equals \(0.5\) (figure 4b of [3]), so the AUC approach cannot distinguish this good classifier from a random one. By contrast, the IRC approach clearly distinguishes this classifier, characterized by \(\hbox {GIR}=0.342\), from the random one (\(\hbox {GIR}=0\)).
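A toy version of the two-threshold situation shows the effect. The values of \(q\), the thresholds, and the function names below are hypothetical; the sketch does not reproduce the example of [3] or its GIR value, and it only contrasts the empirical AUC of \(q\) with the mutual information of the two-threshold rule.

```python
from math import log2

# Hypothetical values of q: disease sits in both tails, normal in the middle
healthy = [4, 5, 6]
diseased = [1, 2, 3, 7, 8, 9]

def auc(pos, neg):
    """Empirical AUC: P(score_pos > score_neg), ties counted as one half."""
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def is_positive(q, t1=3.5, t2=6.5):
    """Two-threshold rule: report disease when q < t1 or q > t2."""
    return q < t1 or q > t2

def information_from_counts(tp, fn, fp, tn):
    """Mutual information (bits) between disease and report from a 2x2 table."""
    n = tp + fn + fp + tn
    cells = {(1, 1): tp, (1, 0): fn, (0, 1): fp, (0, 0): tn}
    by_disease = {1: tp + fn, 0: fp + tn}
    by_report = {1: tp + fp, 0: fn + tn}
    return sum((c / n) * log2(c * n / (by_disease[d] * by_report[r]))
               for (d, r), c in cells.items() if c > 0)

tp = sum(is_positive(q) for q in diseased)
fn = len(diseased) - tp
fp = sum(is_positive(q) for q in healthy)
tn = len(healthy) - fp

auc_q = auc(diseased, healthy)
info_bits = information_from_counts(tp, fn, fp, tn)
print(auc_q, round(info_bits, 4))  # 0.5 0.9183
```

Here the single-threshold ROC analysis of \(q\) gives an AUC of exactly 0.5, while the two-threshold rule classifies every subject correctly and therefore transmits the full entropy of the disease state.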

IRC analysis gives us a global evaluation of the diagnostic test performance (GIR) and a choice for the best threshold of specificity. As a consequence, it could be considered, at least in principle, a competitor of ROC curves. Unfortunately, the IRC tool is not yet complete, since it lacks a strategy for properly fitting the empirical GIR curve and a statistical rule for comparing different GIRs, that is, for assessing whether differences between GIR values are statistically significant.

Nevertheless, IRC analysis can strengthen ROC analysis, taking it to a deeper level. We suggest two points in this perspective:

Informational chart

The iso-informational curves of Fig. 4, drawn on the ROC plane, could be used as a sort of graph paper (informational chart) on which one can draw a ROC curve; this makes it possible to visualize directly the information associated with each point of the ROC curve, integrating the ROC and IRC approaches.

The worst ROC curves are good

Again from Fig. 4, we note that each iso-level curve in the upper-left part of the diagram has a same-level counterpart in the lower-right part. Although this situation is unusual in clinical practice, it means that, in an informational sense, a bad diagnostic test whose ROC curve lies entirely below the diagonal performs as well as the corresponding good one, whose ROC curve lies entirely above the diagonal. This is not surprising, since the complement of a systematically incorrect test result is systematically correct. This suggests that, when the ROC curve lies entirely above or entirely below the diagonal, a more appropriate ROC-based measure of diagnostic test performance is the area AUC\(^*\) between the ROC curve and the diagonal, that is, \(\hbox {AUC}^*=|\hbox {AUC}-0.5|\).
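The symmetry can be verified numerically. Complementing the report of a test replaces SE and SP with \(1-\hbox {SE}\) and \(1-\hbox {SP}\), which reflects the ROC point through the center of the ROC plane and turns AUC into \(1-\hbox {AUC}\). The sketch below, with hypothetical numbers and function names, checks that the mutual information and \(\hbox {AUC}^*\) are unchanged by this operation.

```python
from math import log2

def binary_entropy(p):
    """Entropy (bits) of a Bernoulli(p) variable."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * log2(p) - (1 - p) * log2(1 - p)

def mutual_information(se, sp, prev):
    """I(D,R) = H(R) - H(R|D) for a dichotomous test."""
    p_positive = prev * se + (1 - prev) * (1 - sp)
    return (binary_entropy(p_positive)
            - prev * binary_entropy(se)
            - (1 - prev) * binary_entropy(sp))

def auc_star(auc):
    """Area between the ROC curve and the diagonal, |AUC - 0.5|."""
    return abs(auc - 0.5)

se, sp, prev = 0.85, 0.80, 0.05            # hypothetical "good" test
good = mutual_information(se, sp, prev)
# Same test with every report complemented: a systematically wrong test
inverted = mutual_information(1 - se, 1 - sp, prev)

print(abs(good - inverted) < 1e-12)                  # True
print(abs(auc_star(0.8) - auc_star(0.2)) < 1e-12)    # True
```

The equality holds because swapping the two report labels only permutes the terms of the sum that defines the mutual information.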

Cite this article

Girometti, R., Fabris, F. Informational analysis: a Shannon theoretic approach to measure the performance of a diagnostic test. Med Biol Eng Comput 53, 899–910 (2015). https://doi.org/10.1007/s11517-015-1294-7

DOI: https://doi.org/10.1007/s11517-015-1294-7