Abstract

In this paper, field construction data from the Singapore Metro Line project were used to study the mapping relationship and establish the prediction model between TBM operation data and the ground condition ahead of the excavation face. The study presents a multi-classifier competition mechanism to construct ten separate classifiers, including logistic regression, support vector machine, random forest, extremely randomized trees, adaptive boosting machine, extreme gradient boosting (Xgboost), light gradient boosting (LightGBM), categorical boosting, long short-term memory and convolutional neural network. The acquired data were used to select 28 key TBM operating parameters by a correlation-based feature selection method, and the selected parameters in the stabilization phase after removing the outliers were calculated as the input to the classifier, and a relatively balanced training set was obtained by the synthetic minority oversampling technique. The hyperparameters of each classifier were optimized using tree Parzen estimator Bayesian optimization. The prediction results show that LightGBM presents the best results among ten different machine and deep learning algorithms with an accuracy of 96.22%, precision of 96.94%, recall of 97.33% and F1-score of 97.33%. In addition, the effect of the input parameters of the LightGBM model on the prediction accuracy of the model was analyzed using Shapley additive explanations, and the effect of sample imbalance on the prediction performance was discussed.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Tunnel boring machines (TBMs) are commonly used in tunnel construction as it merits from their security and environmental friendliness [56]. However, geological hazards, such as rock bursts, tunnel convergence and faults, may result in construction schedule delays or instrument damages. Moreover, the cutterhead is sensitive to geological conditions, and adjusting the TBM operational parameters in response to alterations in the surrounding rock mass can enhance the excavation efficiency[39, 54]. Therefore, precisely and promptly forecasting the geological conditions ahead of tunnel excavation face is crucial for ensuring safe and effective tunneling.

Forward geological prospecting methods, such as tunnel seismic prediction (TSP) and ground penetrating radar (GPR), can provide visualization of ahead geological structures ahead of the tunnel face, which can be utilized to predict geological conditions. However, those forward geological prospecting methods can provide relatively accurate results of actual geological conditions, which cannot provide a continuous and timely prediction [56].

Additionally, time-series data from partial tunnel boring machine (TBM) sensors have been proven to potentially correlate to the stratigraphic and geological conditions ahead of excavation [24, 36, 55]. Moreover, the relationship between the TBM operational data and the geological condition ahead of the excavation face can be captured by machine learning (ML) and deep learning (DL) models to achieve timely and continuous prediction [57]. In order to establish the prediction models, the classification standard of rock mass quality was developed [21, 37, 54] to quantify the complex geological conditions, such as rock mass rating (RMR), rock quality designation (RQD) and hydropower classification (HC). Numerous models demonstrate the effectiveness and applicability of ML and DL algorithms in forecasting geological conditions ahead of excavation face. Jung et al. [25] used artificial neural networks (ANNs) to predict rock mass one ring ahead of the TBM face, and overall prediction accuracy is higher than 90%. Kim et al. [30] established a random forest (RF) model to predict surface settlement levels. Sebbeh-Newton et al. [48] used RF and extremely randomized trees (ET) to classify geological conditions using TBM operational parameters. The accuracy of ET and RF reached 95% and 94%, respectively. Liu et al. [38] developed and compared five prediction models: the adaptive boosting classification and regression tree (AdaBoost-CART), classification and regression tree (CART), support vector machine (SVM), artificial neural network (ANN) and K nearest neighbors (KNN) models. It found that the accuracy of AdaBoost-CART was 0.865, which was higher than other classifiers. Liu et al. [36] used a long short-term memory (LSTM) network based on a global attention mechanism for geological condition prediction, which outperformed other models in terms of accuracy and F1-score. Wang et al. [50] compared shallow ML and DL algorithms to construct a prediction model of ground conditions ahead of the excavation face. [30] However, there are lack of the comprehensive comparison of the prediction performance among those ML and DL models.

Moreover, the applicability and the prediction performance of the data-driven models are affected not only by the explicit or implicit correlation between input data (TBM operational data) and predicted target [31], but also by the hyperparameters of the models [34]. Specifically, the hyperparameters of the model control the learning process of the model, as well as the mining and utilization of features from the input data, and the prediction performance. Usually, hyperparameters are set before the learning process, and different learning algorithms require different hyperparameters to minimize a predefined loss function of the model [6]. Therefore, it is essential to adjust the hyperparameters of the models correctly. Several hyperparameter optimization methods, such as grid search [23], random search [40] and particle swarm optimization (PSO) [26], have been applied to the ML and DL models to improve the prediction performance of the models [8, 39]. Grid search is searching and evaluating all potential hyperparameter combinations in a limited search boundary to obtain the optimal set of hyperparameters. Random search is randomly testing combinations of hyperparameters to obtain a suitable set of parameter configurations. For grid search as well as a random search, professional experience is required to set the search boundaries effectively, and those methods of search do not fully utilize the valuable guidance information between different hyperparameters, making it computationally expensive to identify the optimal set of hyperparameters in a wide search space [8]. The tree Parzen estimator (TPE) algorithm, which is a kind of Bayesian optimization, will optimize each set of hyperparameters by referring to the evaluation results of the previous hyperparameters optimization, which can effectively reduce the optimization search space. Correspondingly, Bergstra et al. [5] claimed that TPE has better performance than traditional Bayesian optimization for multiparameter optimization and is significantly faster [14]. Kim et al. [29] used a TPE-based Bayesian-optimized ML model to predict surface settlement and successfully achieve optimal performance of the optimized models.

Besides the drawbacks above, previous ML and DL models used to predict the ground condition ahead of the excavation face lack a sufficient model interpretation mechanism [8]. Researchers [4] have attempted to use direct input–output relationships to explain the model. This approach is most commonly used for simpler models, such as linear regression. Because as the complexity of ML and DL models increases, it becomes progressively more difficult to interpret them using global inputs and outputs. To enhance the understanding of the relationship between the inputs and outputs of ML and DL models, Shapley additive explanations (SHAP) models, which use the Shapley values from game theory to represent the relative importance of input variables (i.e., TBM operational data), are used to achieve local interpretability by focusing on explaining what the output of model depends in in a neighborhood around the instance rather than learning the entire input–output mapping of the model.

To address the shortcomings of those learning-based prediction methods and to construct an automatically optimized high-performance model for predicting the ground condition ahead of the excavation face, a dataset is established using the TBM operational data and daily construction report acquired from the construction of Singapore metro Line 2. Data preprocessing, including filtering the abnormal working state and selecting contributing features, is used to minimize the complexity of the model. Then, synthetic minority oversampling technique (SMOTE) is used to overcome the imbalance of various geological types of the dataset. Finally, ten typical ML and DL models (i.e., logistic regression (LR), SVM, RF, ET, adaptive boosting machine (AdaBoost), extreme gradient boosting (Xgboost), light gradient boosting (LightGBM), categorical boosting (CatBoost), LSTM and convolutional neural network (CNN)) with Bayesian TPE optimization are proposed. The SHAP interpretation method is used to reveal how the TBM operational parameters affect the prediction results. Due to those specific technical improvements, there are four aspects to this research: (1) TPE optimization minimized the effect of hyperparameters on the model and helped develop a better prediction performance model; (2) multiple models with hyperparameter optimization are concurrently working to discover the best model, and it also compared the performance of conventional ML and DL models; (3) SHAP interpretation method was utilized to explain the significance of features to the prediction model and highlight the particular effects of features on the prediction outcomes.

2 Dataset acquisition and preprocessing

2.1 Brief description of the tunneling site

The research tunnel was placed on Singapore's metro line 2 between the Beautiful World station and Hillview station (shown in Fig. 1a), with the TBM excavation section located between chainage no. 51174 and no. 53520 and measuring 2.3 km in length. A single-line parallel tunnel connected the construction with two TBM launch shafts and a cut-and-cover box (30 m wide and 164 m long). Furthermore, two shield tunnel boring machines excavated the parallel single-line tunnel from the cut-and-cover box entrance to Beauty World Station (TBM-1 and TBM-2). A second tunnel was initiated from the gate to Hillview Station (TBM-3). TBM-1, TBM-2, the southern section of TBM-3, and the northern half of TBM-3 had relative lengths of 1394 m, 1394 m, 764 m, and 777 m. Moreover, the diameter of the excavation was 6.72 m. Figure 1b–c shows the geological boundary based on borehole logs and daily tunnel progress reports. Briefly, between chainage 51300 m and chainage 51400 m, the rock mass was expected to have a strong transition between fresh rock and residual soil. Similarly, the cross-section from chainage no. 51400 to no. 52300 showed little (or no) distinction of the excavation line between soil and rock. In addition, the tunnel construction was located in the Bukit Timah Granite Zone and a typical tropical region, where soil layers coupled with mainly unweathered core rocks and heavy rainwater penetration generated an irregular bedrock line. Sediments and layers of irregular bedrock were regarded to be the greatest challenges for TBM tunneling. The slurry-shield TBM is utilized for tunnel excavation, and the main technical specifications of this study are provided in Table 1.

The geological-type categorization devised by the International Tunnelling Association (ITA) [52] was utilized to quantify the complex geological conditions. Table 2 displays the classification standards and designations for classes GI through GVI. The ITA classifies granite into six grades, from fresh rock (GI) to residual soil (GVI), wherein the geological condition profiles vary significantly in the vertical direction. Franklin and Chandra [18] raised a slaking test as a reference that graded residual soil (GVI) and completely weathered rock (GV) as soils, while lower weathering grades were graded as rocks. In this study, the moderately weathered rock (GIII) and highly weathered rock (GIV) were treated as gravel, while fresh rock (GI) and slightly weathered rock (GII) were treated as rocks. Finally, this study also considers mixed rock mass that tend to be a mixture of highly weathered rock (GIV) and completely weathered rock (GV). Under the mixed rock mass, soil and rock get presented simultaneously on the tunnel face.

2.2 Filtering processing of abnormal TBM operational data

The acquired dataset may include abnormal information due to sensor damage, equipment failures, or errors in data transmission. Figure 2 shows that the abnormal data were less coherent than the normal ones. The variation law of thrust, torque, and penetration may often characterize the operating situation [8]. In order to collect data during the stabilization phase, abnormal data were filtered out using the discriminant equation (i.e., Eqs. (1–3). Equation (1) is a binary discriminant function wherein the sensors work in a normal state when x > 0 and the sensors do not work properly when x ≤ 0. F, T and P in Eq. (2) refer to thrust force, cutter torque and propulsion, respectively.

The TBM tunneling process consists of multiple tunneling cycles, including three phases: start-up, stable working, and shutdown. The start-up and shutdown phases are not present in the stable tunneling stage. Figure 3a further demonstrates that the data are unreliable during TBM start-up and shutdown. To ensure the applicability of the model, data from the start-up and shutdown phases were filtered, as shown in Fig. 3. Figure 3b shows the change of thrust and torque in the excavation process. The stable excavation phase is extracted by filtering the high value of the changes in the thrust force and cutter torque.

2.3 TBM operational parameters selection by correlation analysis

The correlation between the input feature (i.e., TBM operational parameters) and prediction goal (i.e., ground condition ahead of the excavation face) has a direct effect on prediction accuracy [16]. Literature [22, 38, 39, 46, 53, 54, 57] indicates that the selected input features are highly diverse, with disc cutter thrust, cutter torque, and advancement rate being the most common parameters evaluated in the current research [51]. However, the relevance of each input data to the geological state must be determined for each situation.

Parameter selection methods by correlation are implemented to enhance the applicability of the model and reduce the dimensions of its features. It is important to exclude parameters with low variance or strongly rely on another parameter (i.e., highly correlated parameters). Before filtering the parameters, to eliminate the effect of different dimensions and orders of magnitude of the various input parameters, each TBM parameter is normalized using Eq. (4), where \({x}_{nor}\) denotes the normalized data, \(x\) denotes the raw input data, and \({x}_{\mathrm{max}}\) and \({x}_{min}\) are the maximum and minimum values of the raw input dataset. The normalized data range between 0 and 1. After that, Eq. (5) is taken to filter the parameters with low variance. The parameters with a variance of less than 0.05 were filtered.

Pearson's correlation coefficient is a common method for highly correlated parameter selection. The Pearson correlation coefficient is defined as Eq. (6), which is used to assess the correlation of the linear relationship between two variables, which takes values from − 1 to + 1, and the absolute value of \({r}_{xy}\) close to 1 refers to a stronger linear correlation [19]. The absolute values of correlation coefficients of highly correlated parameters are greater than 0.8 [28]. One significant feature can represent the characteristic of the value of one group selected. The final correlation of the final selected TBM operational data is shown in Fig. 4. There is no significant linear correlation between each type of data.

2.4 Balancing dataset by synthetic minority oversampling technique (SMOTE)

The engineering data collection process generates a dataset that is typically unbalanced due to the limited quantity of data. For unbalanced sample sets, the number of different classes can be made relatively balanced by increasing the number of minority samples (oversampling) or decreasing the number of majority samples (undersampling).

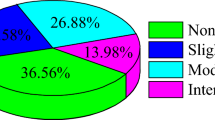

The SMOTE algorithm is an oversampling strategy for synthesizing a small number of samples. Specific SMOTE oversampling stages are as follows: First, each sample \({x}_{i}\) is selected from a small number of samples as the root sample for synthesizing new samples; second, according to the up-sampling multiplier n, a sample is randomly selected from k (normally, k = 5) nearest-neighbor samples of the same category of \({x}_{i}\) as the auxiliary sample for synthesizing new samples, and repeated n times; finally, a linear interpolation is performed as Eq. (7) between samples. Figure 5 shows the percentage distribution of rock classifications in the database before and after SMOTE.

Figure 6 depicts the frequency distribution of each input parameter after SMOTE processing. Table 3 summarizes the distribution of the input TBM operation parameters.

3 Methodology

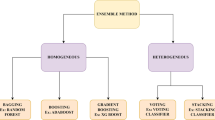

This study compared the prediction performance of ML algorithms. The prediction algorithm specifically contains the following different categories: typical ML algorithms [32, 43], i.e., LR and SVM; ensemble ML learning [1, 7], i.e., RF and ET; boosting-based ML algorithms [11, 17, 27, 47], i.e., AdaBoost, Xgboost, LightGBM and CatBoost. Simultaneously, this study investigated LSTM [36] and CNN [45] for DL algorithms. The specific algorithms will be described in the following. Also, the TPE hyperparameter optimization algorithm and the SHAP interpretation algorithm will be described in detail in the following.

3.1 Typical machine learning methods

3.1.1 Logistic regression (LR)

LR is a typical statistical analysis technique that performs regression analysis to determine the quantitative relationship between two or more variables. It often fits data with a straight line or a curve and then analyzes ways to minimize the distance between the line or curve and the data [15]. The logistic regression (Eq. (10)) is derived from the logistic function (Eq. (8)) (i.e., sigmoid function). Equation (9) is a weighted linear model, \({\beta }_{i}\) denotes the correlation coefficient of the function, \({\beta }_{0}\) indicates the intercept of the function.

3.1.2 Support vector machine (SVM)

SVM is a statistical approach to ML. It specializes in generalization ability and is effective with small sample problems. It simultaneously avoids the problem of easily falling into the local optimum and solves the problem of dimension disaster. The fundamental concept of SVM is to execute a nonlinear mapping of the data from the input space to the high-dimensional feature space, followed by nonlinear regression analysis in this feature space to discover the optimal classification hyperplane.

The primary objective of the SVM is to minimize the margin distance between the sample points and the hyperplane. Consequently, the objective function of the SVM is as Eqs. (11–12), where \(\omega\) represents the weight matrix, \(C\) is the penalty parameter that determines the trade-off between training error and model complexity, \({\xi }_{i}\) and \({\xi }_{i}^{*}\) are slack variables that measure the deviation of training samples, \(b\) denotes the bias and \(\varepsilon\) is the precision parameter, and \(\Phi\) is the kernel function for mapping to high-dimensional space. As the kernel function in this study, the radial basis function (RBF) (Eq. (13)) was used. The two most important parameters in the SVM model are the penalty coefficient \(C\) and the gamma, where gamma = 1/2 \(\sigma\).

3.2 Ensemble machine learning algorithms

3.2.1 Random forest (RF)

RF is a classifier that utilizes several decision trees to fit data. It randomly extracts data from the original samples using bootstrap re-sampling technology to generate multiple samples, builds multiple decision trees for each re-sampled sample using random splitting technology of nodes, and combines multiple decision trees and votes to produce the final prediction result. The prediction results of the random forest are shown in Eq., where \(n\) is the number of decision trees, \(y\) is the average output of \(n\) decision trees, and \({y}_{i}(x)\) is the prediction of tree \(i\).

3.2.2 Extremely randomized tree (ET)

ET utilizes the same concepts as RF, but it randomly selects the best features and the corresponding values used to split the nodes, resulting in a higher level of generalization than RF and less likely to overfit than RF. ET uses the whole training data set to train each decision tree and randomly selects K features and split nodes for each feature to generate K classification nodes. The node with the greatest score is selected as the split node after computing the scores of these K split nodes.

3.3 Boosting-based machine learning algorithms

3.3.1 AdaBoost classifier

AdaBoost is a fast-convergent machine learning algorithm. AdaBoost adjusts the errors of the weak learner. The sample weights change according to the results of the weak learner training errors in the previous iteration. The weights of the misclassified samples are increased so that the weak learner is forced to focus on the samples in the training set. The weighted samples are then used to train the weak learner in the next iteration.

3.3.2 Extreme gradient boosting (Xgboost)

Xgboost is considered to be a highly efficient multi-threaded implementation of the gradient boosting tree algorithm. The GBDT gradient boosting tree used by the Xgboost tool is one of the most widely used algorithms in the boosting family [35]. The basic principle encompasses an overlay strategy that fixes the learned part and adds new trees to compensate for errors, thereby improving accuracy [50].

In general, the Xgboost objective function usually consists of two parts: training loss and regularization. The learning stops when the objective function (Eq. (15)) becomes less than a threshold value, where \(l\) denotes the loss function (the error between the predicted and actual values) of the model, and \(\Omega\) denotes the regularization function (controls the complexity of the model and avoid the overfitting phenomenon).

In Xgboost, the regularization term \(\Omega \left({f}_{t}\right)\) determines the complexity of the model, where \(t\) denotes the number of leaf nodes in the spanning tree and \({\omega }_{\mathrm{j}}\) denotes the output value corresponding to each leaf node. \(\gamma and \lambda\), on the other hand, are the regularization parameters. When the parameters are larger, the greater the proportion of the regularization term in the objective function, the more obvious the regularization effect of the model. (The model becomes more generalized with a difficult overfitting phenomenon). Consequently, the larger parameters make the model simplistic and underfitting, resulting in inadequate model accuracy, thus requiring appropriate adjustment of the regularization term parameters to ensure that the model achieves optimal results.

The obtained objective function values are.

3.3.3 LightGBM

LightGBM is implemented based on the Xgboost and has a similar structure, such as second-order Taylor expansion of the objective function, tree leaf node values, and tree complexity expression. However, the training efficiency is improved, and the sample dimensionality is reduced by introducing gradient-based one-sided sampling (GOSS) and exclusive function bundling (EFB). GOSS samples data instances with larger gradients when calculating the information gain. EFB is used to solve the problem of a large number of features in the data, where proprietary features are bundled into one feature to reduce the number of features. LightGBM can significantly outperform Xgboost in terms of computational speed and memory usage.

3.3.4 CatBoost

CatBoost is also based on the gradient boosting decision tree (GBDT) algorithm framework, with the most important feature of direct support for category features, even for string-type features. CatBoost uses a greedy strategy for feature crossover to consider possible feature combinations. For the first split of the generated tree, CatBoost does not use any crossover features. In later splits, CatBoost uses all the original and crossover features to generate the tree to crossover with all the category features in the dataset.

3.4 Deep learning algorithms

3.4.1 CNN

A basic CNN architecture includes a convolutional layer, a pooling layer, and a fully connected layer [9, 10, 12, 13]. CNN performs as an encoder to capture significant characteristics, enabling it to discriminate without requiring complicated rules. The objective of the convolution process is to extract the distinguishing characteristics of the input data. Increasing convolutional layers allow for the iterative learning of more sophisticated representations from lower-level input. The 1D CNN used in this study (as shown in Fig. 7) is mostly used for time-series data classification. One direction is used to shift the convolutional kernel. Pooling is a sort of downsampling used to minimize the size of a feature map without compromising its depth. MaxPooling, the most prevalent operation among several pooling algorithms, keeps strong traits and removes weak characteristics to decrease complexity and avoid overfitting. The convolution layer's activation function is a linear exponential unit, which speeds convergence and improves the model's resilience.

3.4.2 LSTM

LSTM is a special type of RNN, which can avoid the vanishing gradient problem and accelerate convergence [3]. As for the basic structure of LSTM, there are three cells (input gate, forget gate and output gate) responsible for regulating in and out of the memory cell. The main function of the input gate is to add information to the memory unit. The forget gate controls whether to remember or delete the information from the previous step. The output gate is responsible for providing useful information to the subsequent memory block. The data processing flow in the LSTM unit is shown in Fig. 8. The implementation of the LSTM architecture is shown in Fig. 9.

3.5 Tree Parzen estimator (TPE) hyperparameter optimization

The hyperparameters of the ML and DL models are configured externally and cannot be estimated from the input data. The optimal hyperparameter combination determines the accuracy of the ML and DL model for a given set of input data. Unlike random or grid search for hyperparameter optimization, Bayesian optimization finds the value that minimizes the objective function by building an alternative function (probabilistic model) based on past evaluation results. The Bayesian approach refers to the previous evaluation results when trying the next set of hyperparameters. The evaluation of hyperparameters can be costly as it requires training the model post-evaluating the hyperparameters. Also, Bayesian conditioning uses a continuously updated probabilistic model to focus on promising hyperparameters by extrapolating past results. Hyperparametric optimization can be expressed as Eq. (19), where the function \(f(x)\) indicates the objective function to be minimized, \(\mathcal{X}\) indicates the search space, and \({x}^{\star }\) indicates the optimal hyperparameter setting.

TPE, as a variant of traditional Bayesian optimization, is one of the most popular algorithms for hyperparameter optimization. TPE focuses on low-dimensional continuous optimization problems based on Gaussian processes and transforms the configuration space (represented by uniform, discrete uniform, and logarithmic uniform distributions) into a nonparametric density distribution. Thus, TPE can be more flexible than traditional Bayesian optimization. In TPE, the expected improvement (EI) derived from the widely used Bayes theorem [29, 33, 42] is applied as the acquisition function as shown in Eq. (20), where \(x\) is the candidate hyperparameter, \(y\) is the output of the objective function using \(x\), \({y}^{*}\) is the objective function's threshold, and \(p(y|x)\) is an alternative model indicating the probability of y given x. Searching for the optimal combination of hyperparameters under the proxy function \(p(y|x)\) maximizes the EI with respect to \(x\). For our purposes, it is crucial to assess that this EI function utilizes information from prior trials and related hyperparameter sets to find potential hyperparameter sets with the highest probability of further reducing the loss term (i.e., accuracy). After each evaluation of the loss function, the TPE algorithm analyses the prior trials and provides an EI-updated response surface. New candidates for the solver hyperparameters will be derived from this response surface by picking the hyperparameters with the greatest predicted improvement. When the rate of optimization improvement slows, the TPE will explore other regions of the hyperparameter space, making it more resistant to suboptimal solutions resulting from local minima. As a consequence, TPE learns more efficiently and is less inhibited by local minima than random search approaches, enabling it to locate global minima more accurately.

3.6 Shapley additive exPlanations (SHAP)

The additive feature attribution approach [4] is a common model interpretation method. This interpretation method works by assigning attribution effect value (\({\phi }_{i}\)) to the input factors (i.e., \({x}_{i}\)) using a specific algorithm (e.g., SHAP) to reflect the importance of the feature for a particular prediction, as illustrated in Fig. 10. Specifically, the prediction result of the model \(f(x)\) is treated as the sum of the attribution effect \({\phi }_{i}\).

SHAP, proposed by Lundberg and Lee [41], provides a method to estimate the attribution effect (\({\phi }_{i}\)) of each feature. [49]. As shown in Eq. (21) the Shapley value of a feature \({x}_{i}\) is the gain of adding feature \({x}_{i}\), weighted and summed over all possible feature value combinations in which feature \({x}_{i}\) is absent. Briefly, the Shapley value can be treated as the contribution of the feature to the difference between the actual prediction (including \({x}_{i}\)) and the mean prediction (without \({x}_{i}\)). The \(f\left(S\cup \left\{{x}_{i}\right\}\right)-f\left(S\right)\) can present the marginal contribution of \({x}_{i}\).

where \(x\) is the input feature, \(N\) is the number of input features in \(x\), \(f\) is the original prediction model, \({\phi }_{i}\) is the attribution factor (Shapley value), \(P\) is the set with all the feature values used in the model, \(S\) is the subset of the features used in the model that does not include the feature value \({x}_{i}\), \(|S|\) is the number of nonzero entries in \(S\).

3.7 Evaluation metrics

In this paper, recall, accuracy, precision, and F1-score are used as evaluation metrics to evaluate the model's classification effectiveness. Understandably, accuracy shows the ability to accurately predict different categories of ground conditions, and the F1-score comprehensively indicates the prediction system (higher values of the F1-score indicate better prediction ability). These metrics are calculated from the confusion matrix as follows:

4 Experimental implementation details

Figure 11 illustrates the flowchart of the ML and DL model with hyperparameter optimization implementation procedure using the fivefold cross-validation. As described in Fig. 11, the collected TBM operation data and geological data in the site were manually labeled and then preprocessed in accordance with Sects. 2.2–2.4 (including abnormal TBM operational data filtering, TBM operational parameters selection and dataset balancing by SMOTE). The model then utilized 70 percent of the data for training the model and the remaining 30 percent to test the model. The fold cross-validation approach was employed to improve the reliability of the model with hyperparameter optimization. Finally, the optimized model with the highest prediction accuracy was chosen for model interpretation by SHAP.

The optimal hyperparameter combination for each ML and DL model was determined using the TPE approach with 100 trials. Hyperparameters of ML models are listed in Table 4. The meanings of hyperparameters and additional details of each ML model can be found in the Python ML package scikit-learn [44]. Consider the SVM model as an example: three kernel types, i.e., linear, RBF, and sigmoid, are available for the kernel hyperparameter. The penalty parameter \(C\), used to determine the trade-off between training error and model complexity, is used as the penalty factor hyperparameter. LR methods also share the same hyperparameters. For ensemble ML algorithms, i.e., RF and ET have the same hyperparameter, such as a number of estimators and max depth. Boosting-based ML models are evolved from ensemble ML algorithms. Its prediction performance is influenced by the similar hyperparameters from the ensemble ML model, and according to the previous literature [2, 5, 8, 14, 16, 20, 26, 33, 34, 39, 41, 42, 49, 57], the hyperparameters with the high influence are added to optimization task of the respective models. A detailed description of each hyperparameter and search space range of the ML model is shown in Table 4.

In contrast to ML models, DL models, such as CNN and LSTM, contain complicated architecture and a high number of hyperparameters. As seen in Fig. 7 and Fig. 9, this study employs a basic DL model. CNN models include hyperparameters such as a number of kernels, kernel size and dropout, learning rate, batch size, and activation function; LSTM models include a number of LSTM units, etc. Table 5 provides a comprehensive explanation of each hyperparameter and search space range of the DL model.

5 Result

5.1 Hyperparameter optimization of each ML and DL model

Figures 12, 13, 14 and 15 demonstrate the recording of the TPE hyperparameter tuning process for the typical ML models, ensemble ML models, boosting-based ML models and DL models, respectively, where the scatter points were the test accuracy with each different input hyperparameter, and the 'best accuracy' indicated the optimal accuracy of the current trial. As the trials updated, the color of the scattered points changed from gray to black. After 100 trials, the obtained hyperparameters correlated to optimal accuracy were treated as the optimal hyperparameters combination for each model. The optimization record (shown in Figs. 12, 13, 14 and 15), especially the tuning process of hyperparameter \(C\) of SVM (shown in Fig. 12), showed the different exploration processes of the TPE algorithm to find the globally optimal hyperparameters. The TPE algorithm will continue searching for the global optimum by gradually increasing the search space (including a few distant spikes) after the local optimum. After that, TPE checks a mixture of the globally optimal solution and locally optimal solution until a user-defined limit of the trials is reached.

In the optimization of the typical ML model (e.g., Fig. 12), the hyperparameter tuning process of the SVM can be observed to rapidly converge to optimal values in a wide search space defined as the number of trials increases, such that C is concentrated around 400, gamma is concentrated around 8, and RBF as a kernel function is able to obtain more consistently high prediction accuracy. In contrast, the hyperparameter optimization of LR did not improve the accuracy significantly due to the inability of LR to handle linearly inseparable data directly. Table 6 shows the optimal hyperparameters of typical ML models by TPE optimization.

Figure 13 illustrates the ensemble ML model optimization process. As the optimization process progressed, RF and ET had similar distributions of hyperparameters. The prediction accuracy increased with the increase of the \(\mathrm{max}\_depth\), and the \(n\_estimators\) are concentrated around 100. The optimal hyperparameters for the ensemble ML models that TPE improved are displayed in Table 7.

The optimization process of the boosting-based ML model is depicted in Fig. 14. As both ensemble ML models and these models are based on decision trees. Similar characteristics occurred to the distribution of hyperparameters as optimization proceeds; the value increase of \(n\_estimators\) and \(\mathrm{max}\_depth\) improve prediction accuracy. Adaboost utilizes a weak classifier, which results in lower final prediction accuracy. The boosting-based ML model that TPE improved has the optimum hyperparameters displayed in Table 8.

The DL model optimization process is shown in Fig. 15. It illustrates that a lower learning rate results in improved prediction accuracy for both CNN and LSTM. While LSTM utilizing relu can obtain higher prediction accuracy in this study, CNN employing the tanh activation function would consistently produce higher prediction accuracy. Other hyperparameters, like the number of LSTM units or convolution kernels, did not exhibit distinct trends because of interdependent behavior. The optimal hyperparameters for the DL model that TPE enhanced are shown in Table 9.

5.2 Performance comparisons of the hyperparameter-optimized models

The confusion matrix of hyperparameter-optimized models is depicted in Fig. 16. This matrix indicates real labels and predicted labels. As shown in Fig. 16a–j, with the exception of LR and Adaboost, all other models achieved a prediction accuracy of better than 90%. Specifically, in the instance of the LightGBM model, which had the best overall accuracy (shown in Fig. 16g), soils are partially misclassified as mixed, and rocks are partially misclassified as gravels and soils. This is also consistent with the actual situation that mixed ground conditions often consist of a combination of soil and rock.

Table 10 exhibits the evaluation metrics (i.e., model accuracy and recall, precision, and F1-score) for the hyperparameter-optimized models using the python-sklearn library statistics, which are arithmetically averaged using the metrics for each class separately, resulting in different accuracy in Fig. 16. According to Table 10, the improved LightGBM has the greatest overall prediction accuracy of 96.22%. In addition, SVM, Xgboost, and CNN exhibited more than 95% accuracy in prediction performance.

5.3 Importance analysis of input parameters for prediction using SHAP

SHAP can be utilized for the overall interpretation of the model wherein SHAP value can show the influence of the parameters in each sample, including whether it is positive or negative. The influence of input factors on the prediction of the ground condition ahead of the excavation face can be further explored by SHAP. The global relevance (calculated as the average of the absolute Shapley values for each input parameter in the data) factors of the input variables are displayed in Fig. 17. The input variables (i.e., TBM operation parameters) are ranked by the importance where in greater the average SHAP value corresponds to being more significant. Figure 18 further demonstrates the importance of each input variable for predicting the ground condition of rock, gravel, mixed and soil categories. From Fig. 17, it is obvious that the most relevant variables on the prediction accuracy are cutter output frequency, grease pressure, total cutter absolute torque, etc.

Figure 18 demonstrates the effect of different input variables on the prediction to different ground conditions (soil, mixed, gravel and rock). The y-axis of Fig. 18a–d represents the input variables in order of importance from top to bottom. Also, the color reflects the numerical value of the input variables (from low (blue) to high (red)). The horizontal coordinate represents the value of the SHAP.

Figure 18a is used to interpret the input variable's contribution to the prediction accuracy of soil by SHAP values. As shown in Fig. 18a, the greater the value (more red) of grease pressure(cutter Drum), the greater the SHAP value; in other words, the greater the value of grease pressure(cutter Drum), the greater the probability that the ahead ground condition is soil. It can be interpreted that a greater SHAP value results in better prediction accuracy for a certain target. The analysis of the remaining input variables works in a similar manner.

For prediction of the mixed ahead ground condition (shown in Fig. 18b), the Cutter output frequency has the highest correlation with the accuracy of prediction accuracy, with smaller values suggesting a larger positive correlation with prediction accuracy. Implied from this study, the value of Cutter output frequency is typically smaller when the prediction ahead ground condition is mixed, and the value of Gas detector (co) is also smaller.

Additionally, for the prediction of the gravel (shown in Fig. 18c), Grease pressure (Bulkhead) had the highest correlation to the accuracy of gravel prediction and the smaller this value signifies a stronger positive correlation to the prediction accuracy. In this paper, it can be inferred that the value of Grease pressure (Bulkhead) is usually smaller when the face is gravel, and smaller the value of Gas detector (\({o}^{2}\)) showing better correlation. From the distribution of the Gas detector (\({o}^{2}\)) in Fig. 18c, it can be found that some of the values do not exactly follow the larger values that contribute more to the model. This is why some linear prediction-based methods, especially logistic regression (LR), did not achieve good results in Table 10. However, the variables that obey a certain linear distribution trend are more obvious, such as Grease pressure (Bulkhead). The above data characteristics can also be found in Fig. 18a–d.

Figure 18d illustrates that for the prediction of rock ahead excavation face, the Cutter output frequency was found to correlate positively with prediction accuracy, with greater values indicating a stronger positive association with prediction accuracy. This study demonstrated that the value of the Gas detector (\({o}^{2}\)) decreases when rock conditions are predicted in advance of the excavation face. This distribution is different from that of gravel, mixed and soil.

6 Discussion

6.1 Influence of the unbalance dataset on the prediction performance

SMOTE was utilized to handle the imbalanced samples such that the proportion of samples in each ground type is nearly equal. As demonstrated in Table 11, the dataset samples were oversampled by SMOTE to obtain a relatively balanced training set. The statistical information for the train set from the original dataset, the train set from the balanced dataset by SMOTE, and the test set are presented in Table 11. It was worth mentioning that the test dataset is generated by randomly selecting 30% of the sample size from the original dataset. After SMOTE processing, the proportion of samples from distinct rock types is more evenly distributed.

In order to evaluate the utility of SMOTE, all built classifiers were trained and evaluated using the dataset provided in Table 11. Table 12 illustrates the prediction accuracy of the test set with different classifiers that were trained using the original train set and the relatively balanced train set by SMOTE, while calculating the variation in prediction accuracy between the different training sets. The bold font in Table 12 indicates the overall prediction accuracy for all ground types. Table 12 demonstrates that after SMOTE oversampling, the overall accuracy of each classifier's predictions was enhanced. Specifically, the ground-type samples with a smaller proportion before SMOTE oversampling were unable to achieve a high prediction accuracy. Furthermore, after SMOTE oversampling, each category can attain a more balanced level of accuracy in its predictions. However, for the high proportion of the original data like rock, the prediction accuracy on some classifiers would decline, such as a 5.39% decrease in the prediction accuracy of rocks for RF and a 6.28% decrease in the prediction accuracy of rocks for ET. In summary, the results implied that a more balanced training set facilitated the classifiers' learning process. In general, classifiers with more balanced training sets perform better in terms of training and prediction.

6.2 Limitation of our study

In this study, several prediction models for ground conditions ahead of the excavation face were constructed utilizing ML and DL models, although there were still some limitations as follows:

-

(1)

The ML and DL models require that the training and testing samples have an equal distribution, which restricts the generalizability of the model. Typically, models constructed from data collected under diverse projects, geological conditions, TBM types, and other data-gathering situations produce different outcomes. The models are not applicable generally.

-

(2)

The present ML and DL algorithms used to establish the prediction of ground conditions using TBM operation parameters are still in the development of a relatively straightforward mapping connection. Due to TBM operation data under various geological conditions are not widely available, the limitation of data acquisition restricts the establishment of a generalized model.

-

(3)

This study demonstrated that high prediction accuracy could be achieved using very rudimentary DL networks. The benefit of DL for big data processing provides a possibility for the aforementioned existing models that do not have generalizability. The subsequent research will aim to solve the multi-step prediction of the ground condition and also to build a generalized prediction model based on DL models.

7 Conclusion

In TBM project construction, the ground condition ahead of the excavation face can affect the construction progress as well as construction safety, such as digging vibration, jamming, and even collapse accidents. The establishment of a comprehensive ground condition prediction model needs to consider the selection of input variables, the hyperparameter optimization of the ML and DL model, the selection of the appropriate ML and DL model. However, the hyperparameter of the model directly affects the performance of the model, and it lacks of a simple hyperparameter optimization method to the ground condition prediction model. Also, there are not comprehensive evaluation of the performance of the existing ML and DL models for the ground condition prediction. The interpretation to the contribution of each input variables is also important for the built model. This paper utilizes the TBM operation data of the Singapore Subway Line 2 to build ground condition prediction model, discuss the performance of the existing ML and DL models and analyze the influence of input variables on the model. The detail contribution and findings are as follows.

-

(1)

Ten ML and DL classifiers, including LR, SVM, RF, ET, Adaboost, Xggboost, LightGBM, Catboost, CNN, and LSTM, were used to develop the ground condition ahead of the excavation face prediction model. TPE hyperparameters’ optimization techniques were utilized to determine the optimal hyperparameters for each classifier. Using a competitive approach, the models were evaluated based on metrics like accuracy, recall, precision and F1-score. The classifier with the best overall performance is ultimately chosen. LightGBM achieved the best results in the model competition with 96.22% accuracy, 97.72% precision, 96.94% recall and 97.33% F1-score.

-

(2)

Since the previous model did not inversely account for the rationality of the input data, the rationality of the input data was analyzed in this paper using the SHAP value. The distribution of the data and the output of the model appeared reasonable too. This also justifies the prediction of the ground condition ahead of the excavation face using the operational data of the TBM.

-

(3)

SMOTE oversampling for a few sample classes enhanced the overall prediction accuracy of each classifier. The results suggested that a more balanced training set helped the learning process of classifiers. In general, classifiers with more balanced training sets have superior training and prediction performance.

Data availability

Some or all data, models, or codes generated or used during the study are available from the corresponding author by request.

References

Ahmad MW, Reynolds J, Rezgui Y (2018) Predictive modelling for solar thermal energy systems: a comparison of support vector regression, random forest, extra trees and regression trees. J Clean Prod 203:810–821

Algamal ZY, Qasim MK, Lee MH, Ali HTM (2021) Improving grasshopper optimization algorithm for hyperparameters estimation and feature selection in support vector regression. Chemom Intell Lab Syst 208:104196

Bahad P, Saxena P, Kamal R (2019) Fake news detection using bi-directional LSTM-recurrent neural network. Procedia Comput Sci 165:74–82

Baptista ML, Goebel K, Henriques EMP (2022) Relation between prognostics predictor evaluation metrics and local interpretability SHAP values. Artif Intell 306:103667. https://doi.org/10.1016/j.artint.2022.103667

Bergstra J, Bardenet R, Bengio Y, Kégl B (2011) Algorithms for hyper-parameter optimization. Adv Neural Inf Process Syst 24

Bergstra J, Komer B, Eliasmith C, Yamins D, Cox DD (2015) Hyperopt: a python library for model selection and hyperparameter optimization. Comput Sci Discov 8(1):014008

Biau G, Scornet E (2016) A random forest guided tour. TEST 25(2):197–227

Bo Y, Liu Q, Huang X, Pan Y (2022) Real-time hard-rock tunnel prediction model for rock mass classification using CatBoost integrated with Sequential Model-Based Optimization. Tunn Undergr Space Technol 124:104448. https://doi.org/10.1016/j.tust.2022.104448

Chen C, Chandra S, Han Y, Seo H (2022) Deep learning-based thermal image analysis for pavement defect detection and classification considering complex pavement conditions. Remote Sens 14(1):106

Chen C, Chandra S, Seo H (2022) Automatic pavement defect detection and classification using RGB-thermal images based on hierarchical residual attention network. Sensors 22(15):5781

Chen T, He T, Benesty M, Khotilovich V, Tang Y, Cho H (2015) Xgboost: extreme gradient boosting. R package version 04–2. 1(4)

Chen C, Seo H, Jun CH, Zhao Y (2022) Pavement crack detection and classification based on fusion feature of LBP and PCA with SVM. Int J Pavement Eng 23(9):3274–3283

Chen C, Seo H, Zhao Y (2021) A novel pavement transverse cracks detection model using WT-CNN and STFT-CNN for smartphone data analysis. Int J Pavement Eng 1–13.

Chen B, Zheng H, Luo G, Chen C, Bao A, Liu T et al (2022) Adaptive estimation of multi-regional soil salinization using extreme gradient boosting with Bayesian TPE optimization. Int J Remote Sens 43(3):778–811

Colkesen I, Sahin EK, Kavzoglu T (2016) Susceptibility mapping of shallow landslides using kernel-based Gaussian process, support vector machines and logistic regression. J Afr Earth Sc 118:53–64. https://doi.org/10.1016/j.jafrearsci.2016.02.019

Diao Y, Yan L, Gao K (2021) Improvement of the machine learning-based corrosion rate prediction model through the optimization of input features. Mater Des 198:109326. https://doi.org/10.1016/j.matdes.2020.109326

Dorogush AV, Ershov V, Gulin A (2018) CatBoost: gradient boosting with categorical features support. arXiv preprint arXiv:181011363.

Franklin J, Chandra R (1972) The slake-durability test. Int J Rock Mech Min Sci Geomechan Abstr 325–8.

Fu T, Tang X, Cai Z, Zuo Y, Tang Y, Zhao X (2020) Correlation research of phase angle variation and coating performance by means of Pearson’s correlation coefficient. Prog Org Coat 139:105459

Hakim WL, Rezaie F, Nur AS, Panahi M, Khosravi K, Lee C-W et al (2022) Convolutional neural network (CNN) with metaheuristic optimization algorithms for landslide susceptibility mapping in Icheon South Korea. J Environ Manag 305:114367. https://doi.org/10.1016/j.jenvman.2021.114367

Hoek E, Marinos P, Benissi M (1998) Applicability of the Geological Strength Index (GSI) classification for very weak and sheared rock masses. The case of the Athens Schist Formation. Bull Eng Geol Environ 57(2):151–160

Hou S, Liu Y, Yang Q (2021) Real-time prediction of rock mass classification based on TBM operation big data and stacking technique of ensemble learning. J Rock Mech Geotech Eng 14(1):123–143

Hou S, Liu Y, Yang Q (2022) Real-time prediction of rock mass classification based on TBM operation big data and stacking technique of ensemble learning. J Rock Mech Geotech Eng 14(1):123–143

Jing L-J, Li J-B, Yang C, Chen S, Zhang N, Peng X-X (2019) A case study of TBM performance prediction using field tunnelling tests in limestone strata. Tunn Undergr Space Technol 83:364–372

Jung J-H, Chung H, Kwon Y-S, Lee I-MJKJOCE (2019) An ANN to predict ground condition ahead of tunnel face using TBM operational data. KSCE J Civ Eng 23(7):3200–3206

Kalita DJ, Singh S (2020) SVM hyper-parameters optimization using quantized multi-PSO in dynamic environment. Soft Comput 24(2):1225–1241

Ke G, Meng Q, Finley T, Wang T, Chen W, Ma W et al (2017) Lightgbm: a highly efficient gradient boosting decision tree. Adv Neural Inf Process Syst 30:3146–3154

Khammassi C, Krichen S (2017) A GA-LR wrapper approach for feature selection in network intrusion detection. Comput Secur 70:255–277

Kim D, Kwon K, Pham K, Oh J-Y, Choi H (2022) Surface settlement prediction for urban tunneling using machine learning algorithms with Bayesian optimization. Autom Constr 140:104331. https://doi.org/10.1016/j.autcon.2022.104331

Kim D, Pham K, Oh J-Y, Lee S-J, Choi H (2022) Classification of surface settlement levels induced by TBM driving in urban areas using random forest with data-driven feature selection. Autom Constr 135:104109

Kim1b D, Pham1a K, Park1b S, Oh2a J-Y, Choi H (2020) Determination of effective parameters on surface settlement during shield TBM.

Kleinbaum DG, Dietz K, Gail M, Klein M, Klein M (2002) Logistic regression. Springer

Koehrsen W (2018) A conceptual explanation of bayesian hyperparameter optimization for machine learning. Towards Data Sci.

Li A-D, Xue B, Zhang M (2021) Improved binary particle swarm optimization for feature selection with new initialization and search space reduction strategies. Appl Soft Comput 106:107302. https://doi.org/10.1016/j.asoc.2021.107302

Liang W, Luo S, Zhao G, Wu H (2020) Predicting hard rock pillar stability using GBDT, XGBoost, and LightGBM algorithms. Mathematics 8(5):765

Liu Z, Li L, Fang X, Qi W, Shen J, Zhou H et al (2021) Hard-rock tunnel lithology prediction with TBM construction big data using a global-attention-mechanism-based LSTM network. Autom Constr 125:103647

Liu Q, Liu J, Pan Y, Kong X, Hong K (2017) A case study of TBM performance prediction using a Chinese rock mass classification system–Hydropower Classification (HC) method. Tunn Undergr Space Technol 65:140–154. https://doi.org/10.1016/j.tust.2017.03.002

Liu Q, Wang X, Huang X, Yin X (2020) Prediction model of rock mass class using classification and regression tree integrated AdaBoost algorithm based on TBM driving data. Tunn Undergr Space Technol 106:103595

Liu B, Wang R, Zhao G, Guo X, Wang Y, Li J et al (2020) Prediction of rock mass parameters in the TBM tunnel based on BP neural network integrated simulated annealing algorithm. Tunn Undergr Space Technol 95:103103

Lujan-Moreno GA, Howard PR, Rojas OG, Montgomery DC (2018) Design of experiments and response surface methodology to tune machine learning hyperparameters, with a random forest case-study. Expert Syst Appl 109:195–205

Lundberg S, Lee S-I (2017) A unified approach to interpreting model predictions. arXiv preprint arXiv:170507874.

Newcomer MW, Hunt RJ (2022) NWTOPT–a hyperparameter optimization approach for selection of environmental model solver settings. Environ Model Softw 147:105250. https://doi.org/10.1016/j.envsoft.2021.105250

Noble WS (2006) What is a support vector machine? Nat Biotechnol 24(12):1565–1567

Pedregosa F, Varoquaux G, Gramfort A, Michel V, Thirion B, Grisel O et al (2011) Scikit-learn: machine learning in Python. J Mach Learn Res 12:2825–2830

Phan H, Andreotti F, Cooray N, Chén OY, De Vos M (2018) Joint classification and prediction CNN framework for automatic sleep stage classification. IEEE Trans Biomed Eng 66(5):1285–1296

Ribacchi R, Fazio ALJRM, Engineering R (2005) Influence of rock mass parameters on the performance of a TBM in a gneissic formation (Varzo Tunnel). 38(2):105-27.

Schapire RE (2013) Explaining adaboost. Empirical inference. Springer. pp 37–52.

Sebbeh-Newton S, Ayawah PEA, Azure JWA, Kaba AGA, Ahmad F, Zainol Z et al (2021) Towards TBM automation: on-the-fly characterization and classification of ground conditions ahead of a TBM using data-driven approach. Appl Sci 11(3):1060

Štrumbelj E, Kononenko I (2014) Explaining prediction models and individual predictions with feature contributions. Knowl Inf Syst 41(3):647–665

Wang Z, Hong T, Piette MA (2020) Building thermal load prediction through shallow machine learning and deep learning. Appl Energy 263:114683

Wang X, Zhu H, Zhu M, Zhang L, Ju JW (2021) An integrated parameter prediction framework for intelligent TBM excavation in hard rock. Tunn Undergr Space Technol 118:104196. https://doi.org/10.1016/j.tust.2021.104196

Wengang Z, Liang H, Zixu Z, Yanmei Z (2020) Digitalization of mechanical and physical properties of Singapore Bukit Timah granite rocks based on borehole data from four sites. Undergr Space

Yagiz S (2008) Utilizing rock mass properties for predicting TBM performance in hard rock condition. Tunn Undergr Space Technol 23(3):326–339

Yu H, Tao J, Qin C, Xiao D, Sun H, Liu C (2021) Rock mass type prediction for tunnel boring machine using a novel semi-supervised method. Measurement 179:109545. https://doi.org/10.1016/j.measurement.2021.109545

Zare Naghadehi M, Ramezanzadeh A (2017) Models for estimation of TBM performance in granitic and mica gneiss hard rocks in a hydropower tunnel. Bull Eng Geol Environ 76(4):1627–1641

Zhang Q, Liu Z, Tan J (2019) Prediction of geological conditions for a tunnel boring machine using big operational data. Autom Constr 100:73–83

Zhou J, Qiu Y, Armaghani DJ, Zhang W, Li C, Zhu S et al (2021) Predicting TBM penetration rate in hard rock condition: A comparative study among six XGB-based metaheuristic techniques. Geosci Front 12(3):101091. https://doi.org/10.1016/j.gsf.2020.09.020

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Chen, C., Seo, H. Prediction of rock mass class ahead of TBM excavation face by ML and DL algorithms with Bayesian TPE optimization and SHAP feature analysis. Acta Geotech. 18, 3825–3848 (2023). https://doi.org/10.1007/s11440-022-01779-z

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11440-022-01779-z