Abstract

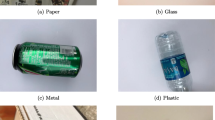

Automated waste sorting can dramatically increase sorting efficiency and reduce regulation costs. Most current methods use only a single modality, such as image data or acoustic data, for waste classification, which makes mixed and easily confused wastes difficult to classify. In these complex situations, multiple modalities become necessary to achieve high classification accuracy. Traditionally, the fusion of multiple modalities has been limited by fixed handcrafted features. In this study, deep learning was applied to feature-level multimodal fusion for municipal solid-waste sorting. Specifically, the pre-trained VGG16 and one-dimensional convolutional neural networks (1D CNNs) were used to extract features from visual data and acoustic data, respectively. These deeply learned features were then fused in the fully connected layers for classification. Comparative experiments showed that the proposed method outperformed the single-modality methods. Additionally, with deeply learned features, the feature-based fusion strategy performed better than the decision-based strategy.
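
The difference between the two fusion strategies compared in the abstract can be illustrated with a minimal, dependency-free sketch. It is not the authors' implementation. The feature dimensions, the class count, the random untrained weights, the use of plain concatenation for feature-level fusion, and the averaging rule for decision-level fusion are all assumptions made for illustration. The feature vectors stand in for the outputs of VGG16 (visual) and a 1D CNN (acoustic).

```python
import math
import random


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def dense(x, weights, bias):
    """Fully connected layer: one output per row of `weights`."""
    return [sum(w * v for w, v in zip(row, x)) + b
            for row, b in zip(weights, bias)]


def random_layer(n_out, n_in, rng):
    """Hypothetical untrained layer; real weights would come from training."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)]
               for _ in range(n_out)]
    return weights, [0.0] * n_out


def feature_level_fusion(visual_feat, acoustic_feat, layer):
    """Join the two feature vectors, then classify them together."""
    fused = list(visual_feat) + list(acoustic_feat)  # concatenation (assumed)
    return softmax(dense(fused, *layer))


def decision_level_fusion(visual_feat, acoustic_feat, visual_layer, acoustic_layer):
    """Classify each modality separately, then average the probabilities."""
    p_visual = softmax(dense(visual_feat, *visual_layer))
    p_acoustic = softmax(dense(acoustic_feat, *acoustic_layer))
    return [(a + b) / 2 for a, b in zip(p_visual, p_acoustic)]


# All sizes below are hypothetical placeholders, not values from the paper.
N_CLASSES, VISUAL_DIM, ACOUSTIC_DIM = 4, 8, 6
rng = random.Random(0)

# Stand-ins for features extracted by VGG16 and a 1D CNN.
visual_feat = [rng.random() for _ in range(VISUAL_DIM)]
acoustic_feat = [rng.random() for _ in range(ACOUSTIC_DIM)]

fused_layer = random_layer(N_CLASSES, VISUAL_DIM + ACOUSTIC_DIM, rng)
visual_layer = random_layer(N_CLASSES, VISUAL_DIM, rng)
acoustic_layer = random_layer(N_CLASSES, ACOUSTIC_DIM, rng)

p_feature = feature_level_fusion(visual_feat, acoustic_feat, fused_layer)
p_decision = decision_level_fusion(visual_feat, acoustic_feat,
                                   visual_layer, acoustic_layer)

print("feature-level prediction:", p_feature.index(max(p_feature)))
print("decision-level prediction:", p_decision.index(max(p_decision)))
```

In the feature-level variant, a single classifier sees both modalities at once and can learn interactions between visual and acoustic cues. In the decision-level variant, the modalities only meet after each one has already been reduced to class probabilities.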

Additional information

This work was supported by the National Natural Science Foundation of China (Grant Nos. 51875507, 52005439) and the Key Research and Development Program of Zhejiang Province (Grant No. 2021C01018).

About this article

Cite this article

Lu, G., Wang, Y., Xu, H. et al. Deep multimodal learning for municipal solid waste sorting. Sci. China Technol. Sci. 65, 324–335 (2022). https://doi.org/10.1007/s11431-021-1927-9

DOI: https://doi.org/10.1007/s11431-021-1927-9