Abstract

Data-based decision-making is a well-established field of research in education. In particular, the potential of data use for addressing heterogeneous learning needs is emphasized. With data collected during the learning process of students, teachers gain insight into the performance, strengths, and weaknesses of their students and are potentially able to adjust their teaching accordingly. Digital media are becoming increasingly important for the use of learning data. Students can use digital learning platforms to work on exercises and receive direct feedback, while teachers gain data on the students’ learning processes. Although both data-based decision-making and the use of digital media in schools are already widely studied, there is little evidence on the combination of the two issues. This systematic review aims to answer to what extent the connection between data-based decision-making and the use of digital learning platforms has already been researched in terms of using digital learning data for further instructional design. The analysis of n = 11 studies revealed that the use of data from digital learning platforms for instructional design has so far been researched exploratively. Nevertheless, we gained initial insights into which digital learning platforms teachers use, which data they can obtain from them, and how they further use these data.

Introduction

“The focus should be continuously adapting instruction in the classroom and beyond, to facilitate and optimize students’ learning processes, taking into account learners’ needs and individual characteristics” (Mandinach & Schildkamp, 2021, p. 7).

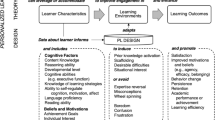

As student heterogeneity increases, so does the demand for adaptive instruction (Hardy et al., 2019; Kruse & Dedering, 2018). Adaptive instruction aims to fit the instructional design, such as learning material or tasks, to the individual needs of students. To do so, teachers require a comprehensive overview of students’ learning processes (Hardy et al., 2019; Kippers et al., 2018b; Plass & Pawar, 2020). Digital learning platforms have the potential to support teachers in obtaining data on their students’ learning progress as well as in making direct adjustments to the learning material (Altenrath et al., 2021; Holmes et al., 2018; Krein & Schiefner-Rohs, 2021). In the following, we will provide an overview of (1) data-based decision-making to improve instructional design and (2) the possibilities of digital learning platforms for teaching and learning. Finally, we will explore the potential of connecting the two areas in the systematic review of n = 11 studies from primary and secondary schools.

Data-based decision-making to improve instructional design

To address student heterogeneity, teachers need to improve their instructional design [Footnote 1] (Hebbecker et al., 2022). For this purpose, it can be helpful to take student data into account (Ansyari et al., 2020; Gelderblom et al., 2016; Lai & McNaughton, 2016; Van Geel et al., 2016). With student data, teachers have information about students’ progress and receive feedback on their teaching quality (Kippers et al., 2018b; Prenger & Schildkamp, 2018). The use of student data for decision-making for the purpose of instructional design [Footnote 2] can be defined “as the systematic collection and analysis of different kinds of data to inform educational decisions” (Mandinach & Schildkamp, 2021, p. 1). For this teacher action, different terms have developed over approximately two decades of research. In the literature, the terms ‘data use’, ‘data-based decision-making’ (Lai & Schildkamp, 2013; Mandinach & Schildkamp, 2021; Schildkamp et al., 2014), ‘data-driven decision-making’ (Mandinach, 2012; Sampson et al., 2022; Schildkamp & Kuiper, 2010), and ‘data-informed decision-making’ (Shen & Cooley, 2008) are most common. The complexity of data-based decision-making becomes evident in the process of data use (Mandinach & Schildkamp, 2021). The circular process of data use contains several steps (Keuning et al., 2019; Lai & Schildkamp, 2013; Mandinach & Gummer, 2016; Sampson et al., 2022). We follow the data use for teaching process, which consists of five steps: (1) the identification of problems and framing questions, (2) the use of data, (3) the transformation of data into information, (4) the transformation of information into decisions, and (5) the evaluation of outcomes (Mandinach & Gummer, 2016). Data should not be collected without a specific goal. Therefore, the process starts with identifying problems and formulating leading questions to solve the problems by using data (Schildkamp, 2019).
In the next step, for example, teachers need to think about which sources they want to use and collect the data (Mandinach & Gummer, 2016; Schildkamp, 2019). The transformation of data into information is part of the data analysis. In this step, the data need to be understood and imbued with meaning. Thus, new information arises, for example, that students misunderstood the topic (Hebbecker et al., 2022; Mandinach & Gummer, 2016; Schildkamp, 2019). Additionally, teachers must combine the data with contextual details, such as pedagogical information about students, to obtain added value from the data (Wilcox et al., 2021). Based on an informed understanding of the data, teachers derive suitable actions for students from the information (Mandinach & Gummer, 2016). One example is the provision of additional exercises for individual students. The last step of the circular process includes the evaluation of the outcomes (Mandinach & Gummer, 2016): Has the initial question been answered or has the identified problem been solved? Have other changes occurred as a result of the actions taken with individual students or within the whole class? The process of data-based decision-making can be challenging for teachers (Peters et al., 2021; Prenger & Schildkamp, 2018; Wilcox et al., 2021). Teachers often struggle with analyzing and interpreting the data (Hebbecker et al., 2022; Kippers et al., 2018a). In addition, transforming information into decisions seems to be difficult for teachers and is therefore often neglected (Keuning et al., 2017; Kippers et al., 2018a; Marsh, 2012; Visscher, 2021).

For data-based decision-making, different forms of data can be considered. Input data include data on teacher and student characteristics (e.g., student background data). Process data include data about the entire learning process. Context data might influence the learning process and contain data on school culture, school curriculum, and school and teaching equipment. Output data are data on student progress and other results of the learning process, such as students’ well-being (Blumenthal et al., 2021; Lai & Schildkamp, 2013). In addition to these forms of data, there are different levels of data-based decision-making: the school system level, the school level, the class level, and the student level (Blumenthal et al., 2021). This systematic review focuses solely on the class and student levels because those consider the instructional design and its effect on individual students. Data-based decision-making on these levels aims to adapt the instructional design to the identified needs of students (Visscher, 2021).

Studies on data-based decision-making are often based on data from different forms of assessments, so-called assessments for learning, such as paper-and-pencil tests, oral tests, and homework assignments (Kippers et al., 2018b). Assessment for learning “focuses on daily practice in which teachers, students, and peers continuously gather information about student learning processes” (Kippers et al., 2018b, p. 201) to understand current learning status and weigh possibilities for future learning paths (Van der Kleij et al., 2015). Assessments intend to involve students more in their learning process by using data to provide students with feedback and help them understand their performance (Hamilton et al., 2009; Mandinach & Schildkamp, 2021). Mandinach and Gummer (2016) stated that they focus not only on assessment data but on all education data. For example, in addition to assessment data, teachers may consider behavioral data to get a more comprehensive picture of their students.

Even if teachers collect a large amount of data, the explicit use of these data for improving individual students’ progress is still scarce (Wilcox et al., 2021). This situation raises the following question: What does a teacher’s use of data depend on? On the one hand, teachers’ positive attitudes towards data can influence their use of data (Blumenthal et al., 2021; Hase et al., 2022; Kippers et al., 2018b). On the other hand, prerequisites for the implementation of data-based decision-making are teachers’ competencies: knowledge of data and skills for using data (data literacy) as well as pedagogical, didactical, and content knowledge, which need to be combined (Blumenthal et al., 2021; Cui & Zhang, 2022; Mandinach & Gummer, 2016; Visscher, 2021). Data literacy is especially fundamental for data-based decision-making as it includes the ability to collect, organize, analyze, and interpret data to inform instruction (Kippers et al., 2018a; Mandinach & Gummer, 2016; Wilcox et al., 2021). A person who is able to follow all steps of the data use for teaching process can be considered data literate (Mandinach & Gummer, 2016).

Possibilities of digital learning platforms for teaching and learning

Within the research on data-based decision-making, the role of digital tools is increasing. Digital tools are mentioned with their possibilities to support teachers in collecting, storing, analyzing, and interpreting data (Mandinach & Gummer, 2016; Schildkamp, 2019; Wilcox et al., 2021). For example, when using digital learning platforms, multiple data are generated (Greller & Drachsler, 2012; Krein & Schiefner-Rohs, 2021; Schaumburg, 2021). In this context, the term ‘learning analytics’ arises, which is used to express the automatically collected, analyzed, and reported student data within digital learning processes (Van Leeuwen et al., 2021). Learning analytics aim to provide students with direct feedback, and, in some cases, direct adjustments to the learning path, as well as providing teachers with student data. Using the information gathered from the data, teachers can improve their teaching and enable individualized learning processes (Greller et al., 2014; Krein & Schiefner-Rohs, 2021; Van Leeuwen et al., 2021; Verbert et al., 2014). Therefore, the use of learning analytics can be seen in line with the fundamental concept of data-based decision-making. Verbert et al. (2013) developed the learning analytics process model consisting of four stages: (1) awareness, (2) reflection, (3) sensemaking, and (4) impact.

Within the process model, people need the following: (1) to be aware of the data; (2) to ask questions to reflect on the data; (3) to answer the questions and create new insights; and (4) to deduce consequences for their behavior (Verbert et al., 2013). Learning analytics data are provided on dashboards within digital tools. Using the dashboard, teachers get insights into students’ performance, progress, and misunderstandings via visual displays (Greller & Drachsler, 2012; Van Leeuwen et al., 2021). The stages of the learning analytics process model can be applied to teachers’ dashboard use:

[Dashboards] are deployed to support teachers to gain a better overview of course activity (Stage 1, awareness), to reflect on their teaching practice (Stage 2), and to find students at risk or isolated students (Stage 3). Few, if any, address Stage 4 of actual impact (Verbert et al., 2013, p. 1502).

Using the dashboard, teachers can monitor students’ learning processes either during the lesson, to intervene directly, or after the lesson, to reflect on and reconsider their future instructional design (Aleven et al., 2016; Van Leeuwen et al., 2021). Learning analytics also include log-in data and other data on students’ behavior when using the digital tool (Aleven et al., 2016; Verbert et al., 2014). This systematic review focuses on data about the learning process from exercises in digital learning platforms.

Digital learning platforms enable the individualization of teaching and learning with regard to several aspects, such as the learning goal, the learning content, the learning path, but also the learning time and the place to learn (Daniela & Rūdolfa, 2019; Schaumburg, 2021). The collection, storage, and analysis of student data, generated during their use, offer great potential for individualization. The available data is often addressed as the added value of learning platforms (Cui & Zhang, 2022; Schaumburg, 2021). Overall, digital learning platforms include a large number of exercises, provide students with direct feedback according to their performance on the exercises, display students’ learning data on dashboards for teachers, and allow adaptive learning (Daniela & Rūdolfa, 2019; Greller & Drachsler, 2012; Hase et al., 2022; Hillmayr et al., 2020). Examples of digital learning platforms include drill-and-practice programs or intelligent tutoring systems. Drill-and-practice programs offer the opportunity to practice existing knowledge in exercises and provide students and teachers with feedback (Hillmayr et al., 2020). In intelligent tutoring systems, in addition to consolidating existing knowledge, it is also possible to acquire new knowledge. Intelligent tutoring systems also offer the possibility of adaptive exercise design and thus enable individual learning paths (Hillmayr et al., 2020; Holmes et al., 2018). Even though learning management systems are often used more to organize the learning process, exercises can also be made available to and worked on by students. Thus, data on the learning process of the students are also collected here and become available to teachers for further use (Holmes et al., 2018). Since the aspect of data generation during the processing of exercises is the focus of this systematic review, different types of digital learning platforms can be considered (Reinhold et al., 2020).

Research questions

Data from digital learning platforms are often not explicitly mentioned in previous research on data-based decision-making (Gelderblom et al., 2016; Kippers et al., 2018b). Nevertheless, digital media are gaining importance in everyday instruction. A meta-analysis revealed that several studies already show a positive impact of digital learning platforms on students’ learning outcomes, resulting from individual feedback and the possibility of adaptive learning (Hillmayr et al., 2020). How teachers can derive added value from digital learning platforms, in particular how they interpret and use information gained from learning platforms for decision-making, needs to be further investigated (Krein & Schiefner-Rohs, 2021; Van Leeuwen et al., 2021). Similarly, the impact of using data from digital learning platforms on teaching quality and school development has not been sufficiently researched (Schildkamp, 2019).

To gain insight into existing studies on the use of data from digital learning platforms for data-based decision-making, especially the further (adaptive) instructional design of teachers, we conducted this systematic review addressing the overall research question: What is the current state of research on teachers’ use of data from digital learning platforms for further instructional design?

In order to address this overall research question, we defined additional subordinate research questions for the analysis of existing studies:

(1) Which digital learning platforms do teachers use?

(2) What kind of digital data do teachers use?

(3) For what purpose do teachers derive which instructional actions from the digital data?

Thus, this systematic review differs from other systematic reviews on data use, which focus, for example, on the use of data in school development contexts in general (Altenrath et al., 2021) or the use of data for instructional design as influenced by interventions for the development of professional competence (Ansyari et al., 2020).

Methods

With this systematic review, we aim to provide an overview of the existing research on teachers’ use of data from digital learning platforms for instructional design. The systematic review was prepared following the PRISMA-P scheme (Moher et al., 2009, 2015).

Literature search

Based on the literature review and the research questions, the terms ‘data use’ and ‘learning platform’ were combined to form parts of a search string. The term ‘teach’ was added to focus on the school context and, within it, on teaching (see Fig. 1). Additionally, we included synonyms of these terms for a more comprehensive search string and used asterisks as truncation symbols to capture other word forms and combinations. To explore our national research context as well, we translated the English search string into German so that German papers could be identified alongside international ones.
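The way such a search string is assembled can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the helper `build_search_string` and the synonym groups are hypothetical, and the actual terms used in the review are those shown in its Fig. 1, which is not reproduced here. The sketch joins synonyms with OR inside each concept group and joins the groups with AND, keeping the asterisk as a truncation symbol.

```python
def build_search_string(term_groups):
    """Join synonyms with OR inside each group and join the groups with AND."""
    clauses = []
    for synonyms in term_groups:
        # Quote multi-word terms so that databases treat them as phrases.
        quoted = [f'"{s}"' if " " in s else s for s in synonyms]
        clauses.append("(" + " OR ".join(quoted) + ")")
    return " AND ".join(clauses)


# Hypothetical synonym groups for illustration only (not the review's terms).
groups = [
    ["data use", "data-based decision*"],
    ["learning platform*", "dashboard*"],
    ["teach*"],
]

search_string = build_search_string(groups)
print(search_string)
```

Individual databases differ in how they handle phrase quoting and truncation, so a string built this way would normally need to be adapted to each database's syntax.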

The databases FIS Bildung, Psyndex, PsycInfo, Scopus, and Web of Science were searched using the search string to identify potentially relevant articles. The search was conducted in February 2022 and resulted in N = 2119 records (see Fig. 2).

Inclusion–exclusion criteria

For the inclusion and exclusion of studies within the review we developed the following criteria: First, studies needed to be empirical studies focusing on the use of digital data in schools. Second, digital data had to be derived from digital learning platforms. Thus, we did not consider studies with other digital data, for example, data from learning progress diagnostics programs (e.g., Hebbecker et al., 2022) or monitoring systems (e.g., Keuning et al., 2019). Third, the studies had to focus on the perspective of teachers and fourth, could contain only teachers from primary, secondary, and high school. Higher education teachers were not of interest in this review. Fifth, the studies had to be published in English or German; other languages could not be considered. The publication dates of the studies were not limited.

Search process

The search process is illustrated in Fig. 2 and is explained further as follows: After removing duplicates, n = 1880 records were left for the screening process. The screening of the titles and the abstracts followed the inclusion and exclusion criteria and was conducted by three independent raters to ensure the reliability of the screening process (investigator triangulation method; Denzin, 1978). All raters read every title and scored them with 1 = ‘relevant’ or 0 = ‘not relevant’. Titles that totaled two or three points across the three raters were selected for the next screening step, whereas titles with one or zero points were excluded from the further screening process. The screening of abstracts followed the same procedure. During the full-text screening, n = 128 studies were subsequently read and rated for eligibility by one rater. The full-text screening was also based on the established inclusion and exclusion criteria. After the full-text screening, n = 11 studies were included in the final qualitative synthesis.
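The rating rule and the record counts described above can be expressed as a minimal Python sketch. The function name `passes_screening` and the example titles are hypothetical. The derived counts simply subtract the figures reported in the text and assume that no records were removed for reasons other than those named.

```python
def passes_screening(ratings, threshold=2):
    """Return True when the summed 0/1 ratings of the raters reach the threshold."""
    if any(r not in (0, 1) for r in ratings):
        raise ValueError("each rating must be 0 (not relevant) or 1 (relevant)")
    return sum(ratings) >= threshold


# Hypothetical ratings by three raters for four made-up titles.
example_ratings = {
    "title_a": (1, 1, 1),
    "title_b": (1, 1, 0),
    "title_c": (1, 0, 0),
    "title_d": (0, 0, 0),
}
selected = [title for title, r in example_ratings.items() if passes_screening(r)]
print(selected)

# Record counts reported in the text.
records_identified = 2119
records_after_duplicates = 1880
full_texts_screened = 128
studies_included = 11

# Derived differences (assuming no other removal categories).
duplicates_removed = records_identified - records_after_duplicates
excluded_title_abstract = records_after_duplicates - full_texts_screened
excluded_full_text = full_texts_screened - studies_included
print(duplicates_removed, excluded_title_abstract, excluded_full_text)
```

With three raters and a threshold of two points, a record moves on whenever a majority of raters judges it relevant.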

Literature analysis

We extracted data and collected the following information from the included studies: Authors, year, country, language, type of school, school subject, sample size, participants, study design, methods, and research questions. After the first data extraction, we started to analyze the studies in more detail following the overall and subordinate research questions.

Results

To give an overview of the n = 11 included studies, we will first summarize the general study information. Afterwards, we will answer the subordinate research questions to present a qualitative analysis of the studies. In Table 1, we present general information and methodological details about the included studies.

Interestingly, even though the publication period was not limited, the studies were published only in the period from 2011 to 2021. Most of the studies originated from the US (n = 5), followed by n = 2 studies from the Netherlands. However, these two studies from the Netherlands were published by the same authors. The remaining studies all originated from different countries (Cyprus, England, Greece, Israel, and New Zealand). All studies were written in English and thus relate to international empirical research. Most of the studies were published as journal articles (n = 7). We also included empirical studies published as conference papers (n = 2) and dissertation papers (n = 2).

As specified in the inclusion criteria, the studies all contained teachers as participants. In addition, n = 5 studies also examined other school stakeholders such as students, school administrators, parents, and administrative staff. The studies took place in nine primary schools, six middle schools, and one high school. Some of the studies examined two types of schools. All but three studies reported not only the type of school but also the subject examined in the studies. In this regard, most studies focused on mathematics as a subject for data-based decision-making. While n = 3 studies only contained math lessons, n = 4 studies researched math and other lessons like reading, writing, and science. One study did not mention a focus on math but on STEM and humanities.

Since n = 9 studies had a qualitative study design, the sample sizes of these studies were not large. The sample sizes of all included studies ranged from N = 1 teacher to N = 277 participants overall. The qualitative studies included a mixture of research methods: observations (n = 9), interviews (n = 7), document analyses (n = 2), group discussions (n = 1), and think-aloud protocols (n = 1). The mixed-methods study used questionnaires and interviews; the quantitative study used only a questionnaire. The research questions were quite diverse and aimed at different aspects of using digital data (Table 1). Some research questions focused on the description of teachers’ dashboard usage and which actions teachers derived from the data. Others considered the impact that digital data can have on feedback and individualized learning. As part of the quantitative study, the researchers asked which factors influenced the use of digital data.

Which digital learning platforms do teachers use?

All studies involved the use of digital learning platforms, which in turn included various technologies. The different digital learning platforms will be described in more detail, with a focus on functionalities for teachers.

In n = 3 studies, the authors did not specify the learning platform used but only reported the use of learning platforms in general, learning analytics, or dashboards (Jewitt et al., 2011; Mavroudi et al., 2021; Michaeli et al., 2020). The remaining n = 8 studies all involved different kinds of digital learning platforms. Basham et al. (2016), Bonham (2018), and Edmunds and Hartnett (2014) specified the tool more explicitly, naming specific learning management systems (Buzz; KnowledgeNET; Schoology). Buzz is a learning management system that enables personalized, project-based, and mastery-based education (Agilix, 2023a; Basham et al., 2016). Teachers can track students’ usage and performance with reports. Students’ learning paths are displayed to teachers on a dashboard and are compared with national educational standards (Agilix, 2023b). Buzz also offers the option of assessing students’ emotional well-being (Agilix, 2023b). In the study of Basham et al. (2016), additional learning platforms that support students and teachers were used besides Buzz but were not named. Edmunds and Hartnett (2014) included only schools that used the learning management system KnowledgeNET. The structure of the KnowledgeNET learning management system is not described in more detail in their article. The learning management system also seems to no longer exist, so no further information on its functionalities can be provided. Schoology is a learning management system that enables personalized education, communication, and collaboration (PowerSchool, 2023). In addition, explicit attention is given to the potential of Schoology for data-based teaching and learning (PowerSchool, 2019). On a dashboard, teachers can see the progress of their students in real time as well as long-term trends in teaching and learning, such as students with low interaction.
As with Buzz, the mastery of national educational standards is shown (Bonham, 2018; PowerSchool, 2019). Focusing on the potential of formative assessment for teachers’ instructional design, Bonham (2018) also investigated the use of formative assessment without referring to the learning management system Schoology. In the studies of Knoop-van Campen and Molenaar (2020) as well as Molenaar and Knoop-van Campen (2018), teachers and students used Snappet, an adaptive learning platform. Snappet contains a comprehensive dashboard for teachers, which provides real-time data about the learning progress on individual tasks as well as the mastery of curriculum competencies (Knoop-van Campen & Molenaar, 2020; Snappet, 2023). Other specified learning platforms were (intelligent) tutoring systems like the integrated tutor system within the NumberShire learning game (Hinkle, 2021), Carnegie Learning Tutor (Xhakaj et al., 2016), and Luna, which is a dashboard prototype for an intelligent tutoring system (Xhakaj et al., 2017). The NumberShire Integrated Tutor System includes a dashboard where teachers can see students’ progress, including mastery of standards, after students have finished an assignment (Hinkle, 2021). The aim of the NumberShire Integrated Tutor System is not only to enable students to practice math skills but also to improve teachers’ data-based decision-making (University of Oregon, 2023). The Carnegie Learning Tutor provides teachers with real-time updates on student progress as well as detailed reports from the student level to the district level. The Learning Tutor can be used with different intelligent tutoring systems (Carnegie Learning, 2023; Xhakaj et al., 2016). Luna is likewise a dashboard that can be used with different intelligent tutoring systems. Luna includes information on mastery of skills, students’ learning progress, and misconceptions (Xhakaj et al., 2017).

A more detailed description of how the dashboards were designed and worked cannot be given as this information is often only available to full users of the respective digital learning platforms. The functionalities of the dashboards were also not described more precisely in the studies that are included in this systematic review. What kind of data teachers receive on the dashboards of the digital learning platforms is described in the following section.

What kind of digital data do teachers use?

The identified studies focused on student performance data at different levels: individual student level, classroom level, and school level. As in the description of the digital learning platform, the study by Mavroudi et al. (2021) did not describe in detail which data the teachers used. Data are mentioned as student data records, and any other data records. Student postings and student responses from the learning management system were used in the study of Edmunds and Hartnett (2014). Bonham (2018) and Michaeli et al. (2020) reported the use of student knowledge data, including student understanding and misconceptions. As Bonham (2018) focused on formative assessment data in general, she considered analog and other digital data besides data from a digital learning platform. More specifically, the data are identified in the study of Basham et al. (2016): Teachers in their study used analog and digital data, including classroom and system data, student voice recordings, student self-reported data, and data from pathways containing current learning and progress data. The use of progress data such as information on students’ mastery of skills, misconceptions, and time spent with the learning platform, was explicitly mentioned in the studies of Hinkle (2021), Jewitt et al. (2011), Molenaar and Knoop-van Campen (2018) and Xhakaj et al. (2016, 2017). In some cases, the visualization of the data was described in more detail (Hinkle, 2021; Knoop-van Campen & Molenaar, 2020; Molenaar & Knoop-van Campen, 2018): Colors were primarily used to classify student performance into different levels, for example green icons for students who are making progress, red icons for no progress, and grey icons for unknown progress status (Knoop-van Campen & Molenaar, 2020). The color coding is expected to help teachers to get a quick overview of the data within the dashboards.
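The color coding described above can be illustrated with a short Python sketch. It is not the implementation of any of the platforms named in the studies. The function `progress_color`, the three-valued progress flag, and the student labels are assumptions made purely for illustration.

```python
from collections import Counter
from typing import Optional


def progress_color(is_progressing: Optional[bool]) -> str:
    """Map a progress status to an icon color.

    True -> green (making progress), False -> red (no progress),
    None -> grey (progress status unknown).
    """
    if is_progressing is None:
        return "grey"
    return "green" if is_progressing else "red"


# Hypothetical class data: student label -> progress status.
class_status = {
    "student_1": True,
    "student_2": False,
    "student_3": None,
    "student_4": True,
}

# Count the icons per color to get a quick class-level overview.
overview = Counter(progress_color(status) for status in class_status.values())
print(dict(overview))
```

Aggregating the colors per class mirrors the idea that a teacher should be able to see at a glance how many students are progressing, stuck, or not yet assessed.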

For what purpose do teachers derive which instructional actions from the digital data?

After describing which digital learning platforms and which data are used, the reasons why teachers used the data and how they used it for further instructional actions are of particular interest. Within the identified studies, the purposes of using learning data can be divided into two areas: First, all n = 11 studies focused on the added value of the data for teachers and their teaching. Second, n = 6 studies also addressed the potential of data for students and, in some cases, for their parents.

Regarding the purposes of using data for teaching, all included studies mentioned understanding students’ learning process as one reason for data use. For example, teachers wanted to identify barriers to learning (Basham et al., 2016), aimed to identify students’ misconceptions (Xhakaj et al., 2016), and tried to get generally more knowledge about their students (Michaeli et al., 2020). The understanding of the learning process is also expressed when teachers asked what their students learned and what they should learn next (Edmunds & Hartnett, 2014). Teachers also saw an opportunity for data use in comparing individual student progress with progressions of other students in the same class or other comparable users of the learning platform (Molenaar & Knoop-van Campen, 2018).

Based on the purpose of supporting students’ learning, instructional actions for individualization and differentiation were reported in the studies. To this end, two studies reported digital learning platforms that automatically developed appropriate learning pathways and provided differentiated tasks based on students’ prior learning results (Bonham, 2018; Molenaar & Knoop-van Campen, 2018). In addition, teachers themselves took actions for differentiation as well as for individualization. In terms of differentiation, they divided students, based on their learning outcomes, into more homogeneous groups that received further learning materials according to their knowledge (Edmunds & Hartnett, 2014; Michaeli et al., 2020). Individualized actions were evident, for example, in teachers setting learning goals with each student to make students’ learning visible (Jewitt et al., 2011), allowing students to work in a self-regulated way at their own pace (Bonham, 2018), designing tasks specifically for individual students to encourage or challenge them (Michaeli et al., 2020), or interacting with students individually (Xhakaj et al., 2017). Teachers also made some instructional changes for the whole learning group in n = 7 studies (Bonham, 2018; Edmunds & Hartnett, 2014; Hinkle, 2021; Michaeli et al., 2020; Molenaar & Knoop-van Campen, 2018; Xhakaj et al., 2016, 2017). These included, for example, using information about class progress gained from digital data as a starting point for designing the introduction of the following lesson (Bonham, 2018), differentiating instructions, or spending more class time on tasks (Michaeli et al., 2020).

Additionally, teachers named the improvement of feedback for students (Bonham, 2018; Edmunds & Hartnett, 2014; Jewitt et al., 2011; Knoop-van Campen & Molenaar, 2020; Mavroudi et al., 2021; Molenaar & Knoop-van Campen, 2018) or the ability to evaluate students more precisely and fairly as a further reason for data use, and they also improved their feedback practices based on the data (Michaeli et al., 2020). The improvement in feedback quality could be attributed to the fact that digital learning platforms provided more types of feedback and that feedback was provided directly to students (Knoop-van Campen & Molenaar, 2020). Another study reported that feedback was also made available to parents (Jewitt et al., 2011).

Although indirect benefits for students were mentioned as advantages of data use for teachers, some studies also mentioned direct benefits for students. Teachers listed discussing learning data with students as a reason for data use (Basham et al., 2016; Bonham, 2018; Edmunds & Hartnett, 2014; Michaeli et al., 2020). This involved clarifying misconceptions with students (Bonham, 2018). In addition, discussing the data was intended to help students take more responsibility for their learning processes (Bonham, 2018; Edmunds & Hartnett, 2014; Jewitt et al., 2011; Michaeli et al., 2020). The data were also intended to provide parents with more information about their children’s learning (Jewitt et al., 2011; Mavroudi et al., 2021; Michaeli et al., 2020).

Finally, Molenaar and Knoop-van Campen (2018) reported that no explicit teacher actions followed from about a quarter of the dashboard consultations (i.e., looking at the digital learning data). However, across all studies, data use influenced the daily teaching practice of the majority of teachers.

Discussion

The analysis of the studies showed that the research field dealing with the use of data from digital learning platforms is still rather young. This was evident, on the one hand, from the fact that the publications were all published after 2011 and, on the other hand, from the predominance of qualitative studies that are still exploratory. It is also underlined by the need for research on how teachers use data from digital learning platforms in ways that add value (Krein & Schiefner-Rohs, 2021; Schildkamp, 2019; Van Leeuwen et al., 2021). In general, research was concentrated in the US and the Netherlands, and only a few studies from other countries could be included. In addition to the international research context, a German search string was used to cover the national research context. However, no studies from the German search string could be integrated into the systematic review.

Regarding the first sub-question, it was found that different digital learning platforms, such as intelligent tutoring systems or learning management systems, were used for practice in the studies. The specific type of digital learning platform appears to be of minor relevance, as different platforms had been used for data-based decision-making (Hillmayr et al., 2020; Holmes et al., 2018; Reinhold et al., 2020). However, the functionalities of the digital learning platforms are not known in detail, as they were neither specified in the studies nor described comprehensively for non-users by the producers of the digital learning platforms. Therefore, only limited conclusions can be drawn about differences in data use in relation to the functionalities of the digital learning platforms.

Teachers’ use of digital learning platforms involved various learning data, which addresses the second sub-question. In the studies, the data were limited to the student and class level (Blumenthal et al., 2021). For example, teachers considered how much time their students spent in the digital learning platform, how they solved the exercises, and which errors occurred most frequently when they completed the exercises. Accordingly, teachers used process and output data (Blumenthal et al., 2021; Lai & Schildkamp, 2013). Teachers gained insight into their students’ learning progress and processes through the learning data provided by the digital learning platform (Greller & Drachsler, 2012; Van Leeuwen et al., 2021).

This was also a determining reason for many teachers to use data from digital learning platforms for further instructional actions, which we addressed with the third sub-question. As reasons for using data from digital learning platforms, teachers indicated that they wanted to involve students and, in some cases, parents more closely in the learning process. This includes improving feedback for their students. The use of data to improve feedback has likewise been captured as a benefit in previous research on data-based decision-making and learning analytics (Hamilton et al., 2009; Mandinach & Schildkamp, 2021; Verbert et al., 2014). Actual positive changes in feedback to students were noted in the studies. Parents also gained greater insight into their children’s performance through data used by teachers. In addition, teachers wanted to use the data to get a better overview of their students’ performance and the quality of their teaching (Verbert et al., 2013). Teachers wanted to better understand which exercises students can solve well and which ones they cannot. With this knowledge, teachers aimed to adjust their teaching and enable individual learning paths. The fact that teachers derived actions in most cases indicates that they went through multiple steps of the data use cycle (Mandinach & Gummer, 2016) and presumably also had less difficulty with analyzing and interpreting data and with the resulting decision-making, which contrasts with other studies (e.g., Hebbecker et al., 2022; Kippers et al., 2018a; Visscher, 2021). Teachers implemented actions to differentiate and individualize in order to make their students’ learning more adaptive. Accordingly, they addressed the need for adaptive instruction and therefore exploited the potential of using learning data (e.g., Hardy et al., 2019; Mandinach & Schildkamp, 2021). Actions were also provided for the whole class, such as re-teaching a topic. Teachers used the data both in real time, deriving direct actions, and after instruction, so that long-term instructional changes were implemented (Aleven et al., 2016; Van Leeuwen et al., 2021). At least one study also found that no actions were taken even after the data had been examined. In general, not all studies specified how teachers’ use of digital data proceeded.

Strengths and limitations

This systematic review provided a comprehensive overview of the use of data from digital learning platforms for instructional design. In sum, our findings are not that different from common understandings of possible uses of digital learning platforms. This systematic review revealed a rather young research field that has been investigated in a predominantly exploratory fashion to date. The included studies had different research foci. For this systematic review, we established a specific search string based on prior research. Nevertheless, relevant search terms may have been missing from the search string. It is possible that studies that did not include the words of the search string in their titles, abstracts, or keywords, but that would still have been appropriate in terms of content, were not included in this systematic review. Furthermore, only English and German articles could be considered for inclusion. Nationally published studies on this topic may exist in other languages. Because the studies were predominantly qualitative, mainly exemplary results with limited generalizability were summarized. Finally, we included conference papers and dissertations in addition to journal articles. Therefore, it cannot be guaranteed that all publications have passed a peer review process.

Conclusion and implications for research and practice

This systematic review provided an overview of the use of data from digital learning platforms for further instructional design. We gained an initial understanding of which digital learning platforms teachers use to obtain data, which data they extract from these platforms, and why and how they use these data for instructional actions. This highlighted the added value of using data to address heterogeneous student groups and enable individualized learning pathways.

Based on this systematic review, research needs and implications for practice can be derived. There is a need for large-scale quantitative studies that systematically verify the initial findings. The extent to which the use of data from digital learning platforms for further instructional actions depends on the functionalities of the digital learning platform should also be considered in further studies. Quantitative as well as qualitative studies on the use of data from digital learning platforms should be conducted in countries where few contributions to knowledge have been achieved so far. Similarly, the use of data from digital learning platforms is still scarce and should become more common in schools. To this end, teachers must be equipped with the necessary technology, but also be trained in the required skills. If this can be accomplished, the use of data from digital learning platforms can contribute greatly to adaptive instructional design that considers learners’ needs and individual characteristics.

Data availability

The data supporting the conclusions of this article will be provided by the authors upon request.

Notes

“Instruction is defined as the goal-oriented actions of the teacher in a classroom that focus on explaining a concept or procedure, or on providing students with insights that will initiate or sustain their learning process” (Prenger & Schildkamp, 2018, p. 736; see also Gelderblom et al., 2016; Hattie, 2009).

References

Agilix. (2023a). Buzz. Retrieved November 9, 2023, from https://www.agilix.com/products/buzz

Agilix. (2023b). Teach better on buzz. Retrieved November 9, 2023, from https://assets.website-files.com/63e3df066454cf5d1a3762bf/64875f4038b327ed912e6e63_Teach%20Better%20on%20Buzz.pdf

Aleven, V., Xhakaj, F., Holstein, K., & McLaren, B. M. (2016). Developing a teacher dashboard for use with intelligent tutoring systems. In R. Vatrapu, M. Kickmeier-Rust, B. Ginon, & S. Bull (Eds.), Proceedings of the fourth international workshop on teaching analytics, in conjunction with EC-TEL 2016 (Vol. 1738, pp. 15–23). CEUR workshop proceedings. https://ceur-ws.org/Vol-1738/IWTA_2016_paper4.pdf

Altenrath, M., Hofhues, S., & Lange, J. (2021). Optimierung, Evidenzbasierung, Datafizierung: Systematisches Review zum Verhältnis von Daten und Schulentwicklung im internationalen Diskurs. MedienPädagogik, 44(Datengetriebene Schule), 92–116. https://doi.org/10.21240/mpaed/44/2021.10.30.X

Ansyari, M. F., Groot, W., & De Witte, K. (2020). Tracking the process of data use professional development interventions for instructional improvement: A systematic literature review. Educational Research Review, 31(100362), 1–18. https://doi.org/10.1016/j.edurev.2020.100362

Basham, J. D., Hall, T. E., Carter, R. A., Jr., & Stahl, W. M. (2016). An operationalized understanding of personalized learning. Journal of Special Education Technology, 31(3), 126–136. https://doi.org/10.1177/0162643416660835

Blumenthal, S., Blumenthal, Y., Lembke, E. S., Powell, S. R., Schultze-Petzold, P., & Thomas, E. R. (2021). Educator perspectives on data-based decision making in Germany and the United States. Journal of Learning Disabilities, 54(4), 284–299. https://doi.org/10.1177/0022219420986120

Bonham, J. (2018). A study of middle school mathematics teachers’ implementation of formative assessment [Dissertation, University of Delaware]. Ann Arbor: ProQuest.

Carnegie Learning. (2023). Middle school math solution. Retrieved November 13, 2023, from https://www.carnegielearning.com/solutions/math/middle-school-math-solution/

Cui, Y., & Zhang, H. (2022). Integrating teacher data literacy with TPACK: A self-report study based on a novel framework for teachers’ professional development. Frontiers in Psychology, 13(966575), 1–17. https://doi.org/10.3389/fpsyg.2022.966575

Daniela, L., & Rūdolfa, A. (2019). Learning platforms: How to make the right choice. In L. Daniela (Ed.), Didactics of smart pedagogy (pp. 191–209). Springer. https://doi.org/10.1007/978-3-030-01551-0_10

Denzin, N. K. (1978). The research act: A theoretical introduction to sociological methods (2nd ed.). McGraw Hill.

Edmunds, B., & Hartnett, M. (2014). Using a learning management system to personalize learning for primary school students. Journal of Open, Flexible and Distance Learning, 18(1), 11–29.

Gelderblom, G., Schildkamp, K., Pieters, J., & Ehren, M. (2016). Data-based decision making for instructional improvement in primary education. International Journal of Educational Research, 80, 1–14. https://doi.org/10.1016/j.ijer.2016.07.004

Greller, W., & Drachsler, H. (2012). Translating learning into numbers: A generic framework for learning analytics. Educational Technology and Society, 15(3), 42–57.

Greller, W., Ebner, M., & Schön, M. (2014). Learning analytics: From theory to practice—Data support for learning and teaching. In M. Kalz & E. Ras (Eds.), Computer assisted assessment. Research into E-assessment. Communications in computer and information science (Vol. 439, pp. 79–87). Springer.

Hamilton, L., Halverson, R., Jackson, S. S., Mandinach, E., Supovitz, J. A., & Wayman, J. C. (2009). Using student achievement data to support instructional decision making (NCEE 2009-4067). National Center for Education Evaluation and Regional Assistance, Institute of Education Sciences, United States Department of Education. https://ies.ed.gov/ncee/wwc/Docs/PracticeGuide/dddm_pg_092909.pdf

Hardy, I., Decristan, J., & Klieme, E. (2019). Adaptive teaching in research on learning and instruction. Journal for Educational Research Online, 11(2), 169–191. https://doi.org/10.25656/01:18004

Hase, A., Kahnbach, L., Kuhl, P., & Lehr, D. (2022). To use or not to use learning data: A survey study to explain German primary school teachers’ usage of data from digital learning platforms for purposes of individualization. Frontiers in Education. https://doi.org/10.3389/feduc.2022.920498

Hattie, J. A. C. (2009). Visible learning. A synthesis of over 800 meta-analyses relating to achievement. Routledge.

Hebbecker, K., Förster, N., Forthmann, B., & Souvignier, E. (2022). Data-based decision-making in schools: Examining the process and effects of teacher support. Journal of Educational Psychology, 114(7), 1695–1721. https://doi.org/10.1037/edu0000530

Hillmayr, D., Ziernwald, L., Reinhold, F., Hofer, S. I., & Reiss, K. M. (2020). The potential of digital tools to enhance mathematics and science learning in secondary schools: A context-specific meta-analysis. Computers and Education, 153, 1–25. https://doi.org/10.1016/j.compedu.2020.103897

Hinkle, H. (2021). Enhancing teacher instruction through evidence-based educational technology: Evaluating teacher’s use of differentiated instruction [Dissertation, University of Oregon].

Holmes, W., Anastopoulou, S., Schaumburg, H., & Mavrikis, M. (2018). Technology-enhanced personalised learning: Untangling the evidence. Robert Bosch Stiftung.

Jewitt, C., Clark, W., & Hadjithoma-Garstka, C. (2011). The use of learning platforms to organise learning in English primary and secondary schools. Learning, Media and Technology, 36(4), 335–348. https://doi.org/10.1080/17439884.2011.621955

Keuning, T., van Geel, M., & Visscher, A. (2017). Why a data-based decision-making intervention works in some schools and not in others. Learning Disabilities Research and Practice, 32(1), 32–45. https://doi.org/10.1111/ldrp.12124

Keuning, T., van Geel, M., Visscher, A., & Fox, J.-P. (2019). Assessing and validating effects of a data-based decision-making intervention on student growth for mathematics and spelling. Journal of Educational Measurement, 56(4), 757–792. https://doi.org/10.1111/jedm.12236

Kippers, W. B., Poortman, C. L., Schildkamp, K., & Visscher, A. J. (2018a). Data literacy: What do educators learn and struggle with during a data use intervention? Studies in Educational Evaluation, 56, 21–31. https://doi.org/10.1016/j.stueduc.2017.11.001

Kippers, W. B., Wolterinck, C. H. D., Schildkamp, K., Poortman, C. L., & Visscher, A. J. (2018b). Teachers’ views on the use of assessment for learning and data-based decision making in classroom practice. Teaching and Teacher Education, 75, 199–213. https://doi.org/10.1016/j.tate.2018.06.015

Knoop-van Campen, C., & Molenaar, I. (2020). How teachers integrate dashboards into their feedback practices. Frontline Learning Research, 8(4), 37–51. https://doi.org/10.14786/flr.v8i4.641

Krein, U., & Schiefner-Rohs, M. (2021). Data in schools: (Changing) practices and blind spots at a glance. Frontiers in Education, 6(672666), 1–13. https://doi.org/10.3389/feduc.2021.672666

Kruse, S., & Dedering, K. (2018). The idea of inclusion: Conceptual and empirical diversities in Germany. Improving Schools, 21(1), 19–31. https://doi.org/10.1177/1365480217707835

Lai, M. K., & McNaughton, S. (2016). The impact of data use professional development on student achievement. Teaching and Teacher Education, 60, 434–443. https://doi.org/10.1016/j.tate.2016.07.005

Lai, M. K., & Schildkamp, K. (2013). Data-based decision making: An overview. In K. Schildkamp, M. K. Lai, & L. Earl (Eds.), Data-based decision making in education: Challenges and opportunities (pp. 9–21). Springer.

Mandinach, E. B. (2012). A perfect time for data use: Using data-driven decision making to inform practice. Educational Psychologist, 47(2), 71–85. https://doi.org/10.1080/00461520.2012.667064

Mandinach, E. B., & Gummer, E. S. (2016). What does it mean for teachers to be data literate: Laying out the skills, knowledge, and dispositions. Teaching and Teacher Education, 60, 366–376. https://doi.org/10.1016/j.tate.2016.07.011

Mandinach, E. B., & Schildkamp, K. (2021). Misconceptions about data-based decision making in education: An exploration of the literature. Studies in Educational Evaluation, 69(100842), 1–10. https://doi.org/10.1016/j.stueduc.2020.100842

Marsh, J. A. (2012). Interventions promoting educators’ use of data: Research insights and gaps. Teachers College Record: The Voice of Scholarship in Education, 114(11), 1–48. https://doi.org/10.1177/016146811211401106

Mavroudi, A., Papadakis, S., & Ioannou, I. (2021). Teachers’ views regarding learning analytics usage based on the technology acceptance model. TechTrends, 65(3), 278–287. https://doi.org/10.1007/s11528-020-00580-7

Michaeli, S., Kroparo, D., & Hershkovitz, A. (2020). Teachers’ use of education dashboards and professional growth. International Review of Research in Open and Distributed Learning, 21(4), 61–78. https://doi.org/10.19173/irrodl.v21i4.4663

Moher, D., Liberati, A., Tetzlaff, J., Altman, D. G., & The PRISMA Group. (2009). Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. PLoS Medicine, 6(7), 1–6. https://doi.org/10.1371/journal.pmed.1000097

Moher, D., Shamseer, L., Clarke, M., Ghersi, D., Liberati, A., Petticrew, M., Shekelle, P., Stewart, L. A., & PRISMA-P Group. (2015). Preferred reporting items for systematic review and meta-analysis protocols (PRISMA-P) 2015 statement. Systematic Reviews, 4(1), 1–9. https://doi.org/10.1186/2046-4053-4-1

Molenaar, I., & Knoop-van Campen, C. A. N. (2018). How teachers make dashboard information actionable. IEEE Transactions on Learning Technologies, 12(3), 347–355. https://doi.org/10.1109/TLT.2018.2851585

Peters, M. T., Förster, N., Hebbecker, K., Forthmann, B., & Souvignier, E. (2021). Effects of data-based decision-making on low-performing readers in general education classrooms: Cumulative evidence from six intervention studies. Journal of Learning Disabilities, 54(5), 334–348. https://doi.org/10.1177/00222194211011580

Plass, J. L., & Pawar, S. (2020). Toward a taxonomy of adaptivity for learning. Journal of Research on Technology in Education, 52(3), 275–300. https://doi.org/10.1080/15391523.2020.1719943

PowerSchool. (2019). Empower data-driven teaching and learning throughout your organization with Powerschool unified classroom Schoology learning. Retrieved November 9, 2023, from http://go.powerschool.com/rs/861-RMI-846/images/schoology_product_flyer_april_2021.pdf?utm_campaign=CORE-AIRC-WC-2021-04-30_Schoology_Acceleration_Flyer&utm_content=ungated_sgy&utm_term=618172

PowerSchool. (2023). PowerSchool Schoology Learning. Retrieved November 9, 2023, from https://www.powerschool.com/classroom/schoology-learning/

Prenger, R., & Schildkamp, K. (2018). Data-based decision making for teacher and student learning: A psychological perspective on the role of the teacher. Educational Psychology, 38(6), 734–752. https://doi.org/10.1080/01443410.2018.1426834

Reinhold, F., Hoch, S., Werner, B., Richter-Gebert, J., & Reiss, K. (2020). Learning fractions with and without educational technology: What matters for high-achieving and low-achieving students? Learning and Instruction, 65, 1–19. https://doi.org/10.1016/j.learninstruc.2019.101264

Sampson, D., Papamitsiou, Z., Ifenthaler, D., Giannakos, M., Mougiakou, S., & Vinatsella, D. (2022). Educational data literacy. Springer. https://doi.org/10.1007/978-3-031-11705-3

Schaumburg, H. (2021). Personalisiertes Lernen mit Digitalen Medien als Herausforderung für die Schulentwicklung. Ein systematischer Forschungsüberblick. Medienpädagogik, 41(Themenheft Inklusive Digitale Bildung), 134–166. https://doi.org/10.21240/mpaed/41/2021.02.24.x

Schildkamp, K. (2019). Data-based decision-making for school improvement: Research insights and gaps. Educational Research, 61(3), 257–273. https://doi.org/10.1080/00131881.2019.1625716

Schildkamp, K., Karbautzki, L., & Vanhoof, J. (2014). Exploring data use practices around Europe: Identifying enablers and barriers. Studies in Educational Evaluation, 42, 15–24. https://doi.org/10.1016/j.stueduc.2013.10.007

Schildkamp, K., & Kuiper, W. (2010). Data-informed curriculum reform: Which data, what purposes, and promoting and hindering factors. Teaching and Teacher Education, 26, 482–496. https://doi.org/10.1016/j.tate.2009.06.007

Shen, J., & Cooley, V. E. (2008). Critical issues in using data for decision-making. International Journal of Leadership in Education, 11(3), 319–329. https://doi.org/10.1080/13603120701721839

Snappet. (2023). Solution. A view of all classes at a glance? Retrieved November 9, 2023, from https://snappet.org/solutions/a-view-of-all-classes-at-a-glance/

University of Oregon. (2023). NumberShire Integrated Tutor System: Supporting schools to scale up evidence-based education technology to improve math outcomes for students with disabilities. Retrieved November 13, 2023, from https://ctl.uoregon.edu/research/projects/numbershire-integrated-tutor-system-supporting-schools-scale-evidence-based

Van der Kleij, F. M., Vermeulen, J. A., Schildkamp, K., & Eggen, T. J. H. M. (2015). Integrating data-based decision making, assessment for learning and diagnostic testing in formative assessment. Assessment in Education: Principles, Policy and Practice, 22(3), 324–343. https://doi.org/10.1080/0969594X.2014.999024

Van Geel, M., Keuning, T., Visscher, A. J., & Fox, J. P. (2016). Assessing the effects of a school-wide data-based decision-making intervention on student achievement growth in primary schools. American Educational Research Journal, 53(2), 360–394. https://doi.org/10.3102/0002831216637346

Van Leeuwen, A., Knoop-van Campen, C. A. N., Molenaar, I., & Rummel, N. (2021). How teacher characteristics relate to how teachers use dashboards: Results from two case studies in K–12. Journal of Learning Analytics, 8(2), 6–21. https://doi.org/10.18608/jla.2021.7325

Verbert, K., Duval, E., Klerkx, J., Govaerts, S., & Santos, J. L. (2013). Learning analytics dashboard applications. American Behavioral Scientist, 57(10), 1500–1509. https://doi.org/10.1177/0002764213479363

Verbert, K., Govaerts, S., Duval, E., Santos, J. L., Van Assche, F., Parra, G., & Klerkx, J. (2014). Learning dashboards: An overview and future research opportunities. Personal and Ubiquitous Computing, 18, 1499–1514. https://doi.org/10.1007/s00779-013-0751-2

Visscher, A. J. (2021). On the value of data-based decision making in education: The evidence from six intervention studies. Studies in Educational Evaluation, 69, 1–9. https://doi.org/10.1016/j.stueduc.2020.100899

Wilcox, G., Fernandez Conde, C., & Kowbel, A. (2021). Using evidence-based practice and data-based decision making in inclusive education. Education Sciences, 11(3), 1–11. https://doi.org/10.3390/educsci11030129

Xhakaj, F., Aleven, V., & McLaren, B. M. (2016). How teachers use data to help students learn: Contextual inquiry for the design of a dashboard. In K. Verbert, M. Sharples, & T. Klobučar (Eds.), Adaptive and adaptable learning. EC-TEL 2016. Lecture notes in computer science (Vol. 9891, pp. 340–354). Cham: Springer. https://doi.org/10.1007/978-3-319-45153-4_26

Xhakaj, F., Aleven, V., & McLaren, B. M. (2017). Effects of a dashboard for an intelligent tutoring system on teacher knowledge, lesson plans and class sessions. In E. André, R. Baker, X. Hu, M. M. T. Rodrigo, & B. du Boulay (Eds.), Proceedings of the 18th international conference on artificial intelligence in education (AIED 2017). LNAI 10331 (pp. 582–585). Berlin: Springer. https://doi.org/10.1007/978-3-319-61425-0_69

Acknowledgements

We thank our student assistants for their support in searching and rating the articles.

Funding

Open Access funding enabled and organized by Projekt DEAL. This research article was funded by the Open Access Publication Fund of the Leuphana University Lüneburg. The systematic review was developed within the CODIP project, which is funded by the Quality Initiative Teacher Training (Qualitätsoffensive Lehrerbildung), a joint initiative of the Federal Government and the German states. The financial means are provided by the Federal Ministry of Education and Research (BMBF) under Grant 01JA2002.

Ethics declarations

Competing interests

The authors report there are no competing interests to declare.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Hase, A., Kuhl, P. Teachers’ use of data from digital learning platforms for instructional design: a systematic review. Education Tech Research Dev (2024). https://doi.org/10.1007/s11423-024-10356-y

DOI: https://doi.org/10.1007/s11423-024-10356-y