Abstract

We develop an agent-based simulation of the catastrophe insurance and reinsurance industry and use it to study the problem of risk model homogeneity. The model simulates the balance sheets of insurance firms, who collect premiums from clients in return for insuring them against intermittent, heavy-tailed risks. Firms manage their capital and pay dividends to their investors and use either reinsurance contracts or cat bonds to hedge their tail risk. The model generates plausible time series of profits and losses and recovers stylized facts, such as the insurance cycle and the emergence of asymmetric firm size distributions. We use the model to investigate the problem of risk model homogeneity. Under the European regulatory framework Solvency II, insurance companies are required to use only certified risk models. This has led to a situation in which only a few firms provide risk models, creating a systemic fragility to the errors in these models. We demonstrate that using too few models increases the risk of nonpayment and default while lowering profits for the industry as a whole. The presence of the reinsurance industry ameliorates the problem but does not remove it. Our results suggest that it would be valuable for regulators to incentivize model diversity. The framework we develop here provides a first step toward a simulation model of the insurance industry, which could be used to test policies and strategies for capital management.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The modern insurance systemFootnote 1 has its roots in the establishment of Lloyd’s of London in the 1680s, which was named for a coffee house that catered to marine insurance brokers. The first major crisis followed less than a decade later after the Battle of Lagos in 1693. During this battle a fleet of French privateers attacked an Anglo-Dutch merchant fleet causing estimated losses of around 1 million British poundsFootnote 2 (Leonard 2013a; Go 2009; Anderson 2000). Risk assessment was inadequate and underestimated several risk factors.Footnote 3 Worse, it was not only some few underwriters that took the risk of writing policies for this merchant fleet, it was a significant part of the industry. 33 underwriters went bankrupt. The English parliament considered legislation that would have resulted in a government bailout (House of Commons 1693), but the bill failed (Leonard 2013a, b).

Today’s insurance–reinsurance systems build on centuries of experience. Modern insurance companies have moved beyond the coffee house and are built on a more solid institutional foundation and are hopefully more prudent and more competent in assessing risks. Nonetheless, the example serves to illustrate two points. First, catastrophic damages are difficult to anticipate with any accuracy and unanticipated high losses remain a reality. More recent examples include the Asbestos case, the Piper Alpha disaster, the 2017 Caribbean hurricane season, and the fallout of the 2020 Covid-19 pandemic. Second, a lack of diversity in risk models can lead to problems at a systemic scale.

The insurance industry has made huge progress in its ability to estimate risks. But there is more to the insurance business than simply estimating individual risks. Companies need to make a variety of decisions, such as how much total risk to take, how much capital to hold in reserve, and how to set premiums. Insurance companies compete with each other and so they do not make these risks in isolation. This can lead to systemic effects that create systemic risks that are not visible to individual firms.

Our goal here is to create a model that makes it possible to study systemic effects at the level of the insurance industry as a whole. To do this we simulate individual firms and the perils they ensure using an agent-based approach. We also simulate how firms set premiums, how they manage their capital, and how these actions affect each other. Our model is the first to simulate the catastrophe insurance industry at this level. Here we use the model to address a specific problem, the dangers of consolidating the entire industry under a few risk models. This is relevant as regulatory frameworks, such as the European Solvency II, may cause such consolidation as a side effect. We also explore the role of the reinsurance industry in mitigating risks. However, the possible uses of this model go beyond those we explore here.

The paper is organized as follows: Section 2 provides a short description of the insurance industry for context. Section 3 gives an overview of previous work, and Sect. 4 introduces the model. The results are discussed in Sect. 5. Section 6 concludes.

2 Background: the insurance industry

The business of the retail insurer is to pool and hedge risks and to hold sufficient capital in sufficiently liquid form to compensate for damages when they happen. This works very well as long as damages are small and uncorrelated. In this case, providing all moments exist and the distribution of damages is known, the central limit theorem makes it possible to estimate damages accurately. For catastrophes, in contrast, the distributions are not always well understood, the tails are typically heavy, and events are not always independent.

Perils such as earthquakes, hurricanes, flooding, and other natural catastrophes occur rarely, but when they do the damages can be significant. Both the intervals between perils and the damage sizes follow long tailed distributions (Emanuel and Jagger 2010; Embrechts et al. 1999; Christensen et al. 2002). Insurers typically take out reinsurance to cover their tail risk; in present times this typically applies to damages beyond 50 million USD and up to 200 million USD. Each insurance or reinsurance firm typically enters into reinsurance contracts with a wide range of other firms, spreading the risk. Each firm attempts to estimate risks using models that are typically provided by third parties. Modern catastrophe risk models are sophisticated but are inevitably still inaccurate due to the difficulty of fitting heavy tailed, non-stationary distributions. Climate change, for instance, affects the frequency and severity of windstorms, while changing settlement patterns affect damage sizes (Grinsted et al. 2019).

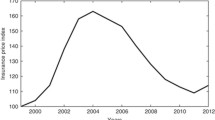

The insurance cycle is shown here in terms of the global reinsurance Rate-on-Line (ROL) index, which is plotted as a function of time. The ROL is the ratio of the average premium paid to recoverable losses (shown here in percent). The ROL index is computed from the ROLs of contracts and renewals in a number of markets across the globe and provides a measure of the quality of the market for the industry as a whole. The striking feature is that the ROL varies across a large range, from \(100\%\) to almost \(400\%\), and it is highly correlated from year to year. The data is from Guy Carpenter, who is a reinsurance broker; raw data and details of the computation are not public

The size of the combined insurance and reinsurance sector is trillions US Dollars, one order of magnitude below the total of the world’s capital markets. In turn, the reinsurance sector, making up about 10% of the insurance business, is an order of magnitude smaller than the insurance sector. For example, for the third quarter of 2015, the total global capital was reported (AON Benfield 2016) as \(\$4.2\) trillion for insurance and \(\$565\) billion for reinsurance.

The insurance industry has historically tended to oscillate between strongly competitive periods, called soft markets, and less competitive periods, called hard markets. This oscillation is usually referred to as the insurance cycle or underwriting cycle. As shown in Fig. 1, the insurance cycle is irregular in both period and amplitude. During soft markets competition increases, premiums are down, insurers are more willing to underwrite new business, underwriting criteria are relaxed, and the capacity of the industry increases. Conversely, during hard markets premiums are high, competition is low, underwriting criteria are tight, and the capacity of the industry contracts. For catastrophe insurance the cycle bears some relationship to the incidence of catastrophes, but it does not track this as closely as one might expect, and depends also on other factors, such as available capital. The insurance cycle occurs even in sectors such as life insurance and casualty insurance that do not suffer large fluctuations in claims. There is no consensus about the cause of the insurance cycle.Footnote 4 During recent years the amplitude of the insurance cycle has been reduced, which some attribute to an increased influx of capital.

This volatility and the potential damages in case of a systemic breakdown of the insurance industry make some degree of regulation necessary. In the European context, the Solvency II framework was adopted by the EU in 2009 and implemented after several delays in 2016. It includes requirements for capital, liquidity, and transparency on the part of the insurance companies. In addition it also includes standards for risk models, which must be certified. While the resulting quality control likely has substantial beneficial effects, there are concerns that the perspective of Solvency II is too strongly microprudential, and may ignore possible negative effects at the systemic level Gatzert and Wesker (2012).

There are concerns about systemic fragility that may be caused by using a small number of risk models. In part because of the strict requirements of Solvency II, insurance firms have been driven to outsource the problem of risk modeling. The provision of risk models has come to be dominated by three major competitors, RMS (Risk Management Solutions), AIR (Applied Insurance Research) and EQECAT. According to a survey carried out by the Bermuda Monetary Authority, these three vendors “practically control the entire catastrophe modelling sector” (BMA (Bermuda Monetary Authority) 2016). In Table 1 we show the relative overall usage of risk models by insurance firms from 2011–2017. A few overall trends are apparent: Many firms only use a single risk model. The dominant provider, RMS, is used exclusively by \(41\%\) of the firms in 2017, rising from \(33\%\) in 2011. The second largest provider, AIR, is used exclusively by \(19\%\) of firms, and the third largest provider, EQECAT, is not used exclusively by any firms. The remaining firms use a mixture of models by RMS and AIR. In 2001 \(21\%\) of the firms used a mixture of models by all three providers; there are now no firms that do this.

There are good reasons why using more than one risk model is desirable. Risk models are inevitably inaccurate, and using more than one makes it possible to average out inaccuracies and improve forecasts. This is a consequence of the general desirability of forecast combination.Footnote 5 Given a set of models, each of which makes useful forecasts that are not perfectly correlated, forecasts can be improved by taking weighted averages of the forecasts of each model. This provides an incentive for individual firms to use more than one model. From a systemic point of view, it is desirable for different insurance firms to use different models. This is because one firm goes bankrupt due to errors in its model, firms with different models (and different errors) may survive as a result of their diversity. Thus even if one provider’s risk models are superior to those of other providers, it may still be desirable for the industry as a whole if firms use a diversity of models from different providers.

3 Literature review

We will next discuss the state of the art of models of the insurance sector (Sect. 3.1) that our model could potentially build upon. Sections 3.2 and 3.3 review previous findings on two applications of our model, the modeling of the insurance cycle (Sect. 3.2) and the investigation of systemic risk in insurance systems (Sect. 3.3). We discuss empirical findings that may be used for calibration in Sect. 3.4. How these stylized facts are reflected in the model design is discussed in more detail in Sect. 4.

3.1 Modeling the insurance sector

Very few agent-based models of the insurance sector have been developed so far, although there are three notable exceptions: The London flood insurance model by Dubbelboer et al. (2017), the model of premium price setting in non-catastrophe retail insurance by Zhou (2013), further developed in Owadally et al. (2018), and the agent-based model extension to Maynard’s study on catastrophe risk (Maynard 2016).

Non-agent-based analytical contributions often take an equilibrium approach based on game-theory and common assumptions of frictionless markets and rational decision-making. While this can offer some basic guidance on modeling specific elements of insurance markets, their value for system-level analyses and for predictions is limited due to strong assumptions. An example is the hypothesis of the “square-root rule of reinsurance” (Powers and Shubik 2006), that derives the optimal relation of the number of reinsurers to that of insurers as following a square-root function of the size of the system. While the empirical relationship is indeed sub-linear, studies (Venezian et al. 2005; Du et al. 2015) cannot confirm the exact square-root nature. Other examples include Plantin’s (2006) model of the reinsurance market, which aims to prove that reinsurance is necessary for a functioning insurance sector and profitable as a business model under normal conditions. It proceeds to assume that under rationality assumptions, some insurance firms will become reinsurers if the reinsurance sector is not sufficiently large, while making no comment about the dynamics of and possible friction in this process. The limitations of models of this type are well known in the literature (see, e.g., Powers and Ren 2003). These limitations can potentially be overcome by agent-based models.

Zhou (2013) and Owadally et al. (2018) consider pricing in non-life insurance. Risk modeling, systemic effects, and catastrophes are side-aspects in this model. Data used to validate the model are taken from the motor insurance sector of the UK, where catastrophic damages at system-scale are unlikely. The study considers various pricing strategies and is able to recover a realistic insurance cycle with direct local interactions (as opposed to a centralized market) being a major factor. They conclude that the insurance cycle cannot be solely driven by repeated catastrophic shocks.

Maynard (2016) investigates whether the use of scientific models can improve insurance pricing. An agent-based approach is used to evaluate how useful those forecasts are in systems with competing insurance firms. To remove interference from other effects, the number of companies is limited to two and the forecasting strategies are fixed, which makes it possible to investigate survival time and commercial success in a controlled setting.

Dubbelboer et al. (2017) explores the dynamical evolution of flood risk and vulnerability in London. This agent-based model is used to study the vulnerability of homeowners to surface water flooding, a major source of catastrophe risks in the UK. The model focuses on the role of flood insurance, especially in the public–private partnership between the government and insurers in the UK, and the UK’s flood reinsurance scheme Flood Re, which has been introduced as a temporary measure for 25 years starting in 2014 to support the development of a functioning flood reinsurance sector in the country.

In contrast to these approaches, we aim to construct a more comprehensive, generic, and flexible agent-based model of the insurance sector, as introduced in Sect. 4.

3.2 The insurance cycle

There is no consensus in the literature on the causes of the insurance cycle. One major literature tradition believes that natural disasters and large catastrophes are the main driving force (cf. Lamm-Tennant and Weiss 1997). Such events are believed to trigger the transition from a soft market to a hard market. After a catastrophe, the insurance industry receives a large amount of claims that deplete the capital of most insurers while driving those that are less capitalized out of business. The ones surviving reconsider their underwriting criteria are more reluctant to take risks, and premiums start rising as a consequence. Mergers and acquisitions activity also increases during a hard market, especially after a catastrophe when the claims start depleting the capital of the industry. This may already start in the immediate aftermath of the event before any claims are filed, as market participants anticipate substantial damages. The mergers and acquisitions activity also contributes to the reduction of capital in the industry and the increase of prices, since the surviving firms have to absorb the losses of the firms that go out of business and possibly also since they enjoy more market power. In reality, very few firms in the sector file for bankruptcy since the ones in financial difficulties are absorbed by the better capitalized ones due to the value of their customers, branding, insurance information, and human capital.

This literature tradition is exemplified by Lamm-Tennant and Weiss (1997), who aim to identify the insurance cycle empirically by fitting an AR(2) process and to explain its existence and period by running regressions with incidence of catastrophe events and various other explaining variables. They find that catastrophe events are significant while many other variables are not. A drawback of this analysis is that the time series considered are only 20 years long, though they include data for a number of countries.

Other contributions, most notably in this context the ABM analysis by Zhou (2013) and Owadally et al. (2018), contradict this explanation, as they are able to model the emergence of an insurance cycle from price effects without any catastrophe events.

3.3 Systemic risk in insurance

The problem of systemic risk in insurance came into focus after the reinsurer AIG became illiquid and had to be bailed out by the US government during the financial crisis in 2008. Park and Xie (2014) conduct a stress test and find that the systemic damage resulting from one big reinsurer defaulting in the US market would be very limited. Cummins and Weiss (2014) are more cautious; they point out that there is significant counterparty exposure within the reinsurance market through retrocession,Footnote 6 and that this is exactly what led to the historic near-meltdown of the insurance system in the LMX spiral.Footnote 7

Cummins and Weiss (2014) further note possible challenges from other aspects of the system, such as the size distribution of the firms and interconnections with asset markets. This hints at other contagion channels of systemic risk besides counterparty exposure. As in banking, portfolio similarity may be a serious compounding factor in the case of sell-offs, and the interaction of multiple contagion channels may aggravate systemic risk disproportionately (Caccioli et al. 2015).

Solvency II has been hailed for its capacity to decrease capital and liquidity risk (Ronkainen et al. 2007; Gatzert and Wesker 2012). Even authors with a macroprudential focus (Gatzert and Wesker 2012; Kessler 2014) judge systemic risk in modern insurance systems with up-to-date regulation (and explicitly Solvency II) as unlikely. However, their assessment is limited to contagion channels present in banking systems. In this regard, insurance firms, which are not highly leveraged, appear quite safe. However, Eling and Pankoke (2014) voice concerns regarding a potential pro-cyclicality of the Solvency II regulation framework.

A final contagion channel, the one investigated in Sect. 5.2, may be caused by risk model homogeneity. This has been mentioned in passing remarks (Petratos et al. 2017; Tsanakas and Cabantous 2018), but the present study is to our knowledge the first to investigate this issue in a systematic way.

3.4 Empirical findings

Empirical research relevant to agent-based model development in the field of catastrophe insurance includes studies on the insurance–reinsurance system by Froot (2001) and Garven and Lamm-Tennant (2003) as well as Boyer and Dupont-Courtade’s (2015) analysis of reinsurance programs.Footnote 8 All three papers use proprietary data sets.

Traditional wisdom holds that the insurance cycle is mainly driven by the steady stream of catastrophe events. Froot (2001) reports extensive data on reinsurance pricing and shows that prices (in terms of the relation of premium to expected loss) have decreased in the second half of the 1990s, i.e., in recent years before his paper was published. He states that the absence of large catastrophic events during this time frame is the main reason for this decrease of premiums, but also mentions the alternative interpretation of an insurance cycle driven by a mechanism different from catastrophe events. Garven and Lamm-Tennant (2003) find, perhaps unsurprisingly, that demand for reinsurance decreases with the firm size of the insurance firm buying reinsurance (the ceding insurance firm) and its concentration in line-of-business and location and increases with leverage of the ceding firm and with the tail weight (thus, risk) of its written insurance. Boyer and Dupont-Courtade (2015) discuss the layered structure of reinsurance programs. Data reported in the paper show that treaties with one to five layers are common,Footnote 9 but much larger treaties with up to eleven layers occur. A temporary decrease of the number of contracts with the financial crisis in 2008 is evident in their data. They report that parameters of the contracts (premium of the accepted bid, dispersion of the received bids) vary widely across the lines of business. Higher layers tend to be cheaper in terms of rate-on-line (defined as premium divided by limit), as losses affecting these layers are less likely albeit potentially heavy.

The amount of capital used to support reinsurance worldwide has been growing quickly. Most of the growth continues to come from reinsurer and insurer profits and investments, but a substantial amount of capital has recently been injected from sources that did not exist 20 years ago. While these alternative capital sources have almost no impact on the typical policyholder, they have significantly affected the way reinsurance is currently written worldwide. Catastrophe bonds (also known as CAT bonds) (Cummins 2008) are securities that allow the transfer of risks from insurers and reinsurers to institutional investors like hedge funds, mutual funds and pension funds. CAT bonds are attractive to these investors since they have a relatively low correlation with the rest of the financial market and allow the investors to achieve higher diversification. The CAT bond market has been growing steadily over the last 20 years and may have contributed to dampen the insurance cycle. The analysis of CAT bonds by Lane and Mahul (2008) shows that the equivalent measure for CAT bonds to the premium, spread at issue over LIBOR, is explained quite well by a simple linear model (spread at issue as a function of expected loss) although there are other minor influences,Footnote 10 which make it possible to model the pricing of these instruments in a rather simple way.

4 Model description

Based on stylized facts from the literature in the previous section, we develop an agent-based model of insurance–reinsurance systems. To make it possible to study both systemic aspects and characteristics of individual elements, we choose a modular design, so that agents of different types can be switched on and off individually. We discuss a range of relevant applications in Sect. 5.

4.1 Agents

The model, illustrated in Fig. 2, includes five types of agents: insurance customers, insurers, reinsurers, shareholders, and catastrophe bonds. Customers buy insurance coverage and pay premiums. Insurance firms may obtain reinsurance from either traditional reinsurance companies or catastrophe bonds. Insurance and reinsurance contracts oblige the customer (or the insurer obtaining reinsurance) to make regular premium payments, but entitle them to claim reimbursements for covered damages under certain conditions.

Insurance and reinsurance firms (discussed in more detail below) are the core of the model. Most of the decision making capacity in the model lies with them. They consult risk models to support their decision making. They further pay dividends to shareholders.

4.2 Customer side

4.2.1 Insurance customers (households)

Customers are modeled in a very simple way. They own insurable risks which they attempt to insure. They approach one insurer per time step and accept the current market premium if the insurer offers to underwrite the contract. The value of the insurable risks is normalized to 1 monetary unit each and the total number of insurable risks is fixed. The risks are not destroyed but are assumed repaired to their previous value after each damage incident.Footnote 11

Peril regions and firms. A given insurance firm may issue insurance in multiple peril regions. When a damage occurs in a given peril region, we assume it affects all the policies issued in that region, leading to multiple claims with all the firms involved, but it does not affect policies issued in other peril regions. Claims in each peril region therefore occur in clusters

4.2.2 Perils and peril regions

It is convenient to distinguish catastrophic and non-catastrophic perils. Catastrophic perils are the ones affecting most of the risks of a particular peril region, e.g., resulting from a hurricane in Florida, an earthquake in Japan or a flood in Southern England. While perils are rare, they can lead to heavy losses and are thus a primary reason for reinsurance. Non-catastrophic perils, on the other hand, typically affect only individual risks and are more frequent and uncorrelated in time. Examples of this type of perils are car accidents, residential fires, or retail burglaries.

In our model we only consider catastrophic perils, assuming that the effect of the non-catastrophic ones is minor, sometimes covered by deductibles, and subject to averaging out across many risks as a result of the central limit theorem. Catastrophic perils are modeled as follows:

-

Catastrophe event times are determined by a Poisson process, i.e., event separation times are distributed exponentially with parameter \(\lambda \).

-

Total damage follows a power-law with exponent \(\sigma \) that is truncated at total exposure (since insurance payouts cannot be higher than the amount insured).

-

Total damage is assigned to individual risks following a beta distribution calibrated to add up to the total damage.

In the model each insurable risk belongs to one of n peril regions, see Fig. 3. For simplicity we assume that all the risks of the respective peril region are affected by every catastrophic peril hitting that region. In this study we typically consider \(n=4\).

4.2.3 Risk event and loss distributions

Time distribution of catastrophes We assume that the number of catastrophes in the different peril regions follow a Poisson distribution, which means that the separation time between them is exponentially distributed with density function

where \(\lambda \) is the parameter of the exponential distribution and the inverse of the average time between catastrophes. We generally set \(\lambda =3/100\), that is, a catastrophe should occur on average every 33 years. We draw all the random variables necessary to set the risk event profile (when a catastrophe occurs and of what size the damages are) at the beginning of the simulation. In order to compare the n different “worlds” with different risk model diversity settings, we set the same M risk event profiles for the M replications of all n risk model diversity settings. That is, we compare the same hypothetical “worlds” with different risk model settings, but with the same catastrophes happening at the same time and with the same magnitude.

Global loss distribution We use a Pareto probability distribution \(\varphi \) for the total damage inflicted by every catastrophe, since historically they follow a power law. The Pareto distribution is defined as

where \(D_x\) are the values of the damages caused by the catastrophes. We generally set the exponent \(\sigma =2\). The distribution is truncated with a minimum (below which the damage would be too small to be considered a catastrophic event) and a maximum. The maximum is given by the value of insured damages. The density function is therefore:

Like the separation times, the damages of the catastrophes are drawn at the beginning of the simulation and are the same for the different risk model settings. We denote the total normalized loses drawn from this truncated Pareto distribution as \(L_i\).

4.2.4 Individual loss distribution

For the sake of simplicity we assume all risks in the region to be affected by the catastrophe, albeit with a different intensity. To determine the specific distribution of the known total damage across individual risks we use a beta distribution, defined as

where \(\varGamma \) is the Gamma function and \(d_x\) is in this case the individual loss inflicted by the catastrophe to every individual risk. The two parameters g and h determine the shape of the distribution and define the expected value of the beta distribution which is,

Since the total loss inflicted by the catastrophe is \(L_x\) and this should match the expected value (for large numbers of risks), we use this fact to compute h for every catastrophe while always setting \(g=1\). That is,

Solving for b we get

The shape of the individual loss distribution depends on the total loss value and has to be adjusted for every catastrophe. We draw as many values from the distributions as we have risks in the peril region. Finally, the claims received by the insurer j from all risks i insured by j are computed as

where \(e_i\) is the excess of the insurance contract, \(d_{x,i}\) is the individual loss, \(v_i\) the total value of the risk and \(Q_i\) is the deductible. For convenience, we generally have \(v_i=1\).

4.3 Insurer side

4.3.1 Firms, capital, entry, exit

The number of firms in the model at time t is \(f_{t}=i_{t}+r_{t}\), of which \(i_{t}\) are insurance firms and \(r_{t}\) are reinsurance firms. The number of firms is dynamic and endogenous with initial values \(i_{0}\) and \(r_{0}\).

Market entry is stochastic with constant entry probabilities for insurers (\(\eta _i\)) and reinsurers (\(\eta _r\)). New insurance firms have a given initial capital \({\overline{k}}_i\) and new reinsurance firms have initial capital \({\overline{k}}_r\). These are both constants, chosen so that \({\overline{k}}_r\) is substantially larger than \({\overline{k}}_i\).

Market exit occurs with bankruptcy or when insurers or reinsurers are unable to find enough business to employ at least a minimum share \(\gamma \) of the cash that they hold for \(\tau \) time periods. (We calibrate the model so that one time period is roughly a month). Since the return on capital would be extremely low in that case insurers and reinsurers prefer to leave the market or focus on other lines of business. We typically set the parameters to \(\gamma _i=0.6\), \(\tau _i=24\) for insurance firms and to \(\gamma _r=0.4\), \(\tau _r=48\) for reinsurance firms. That is insurance firms exit if they employ less than 60% of their capital for 24 months, reinsurance firms when they employ less then 40% of their capital for 48 months.Footnote 12

Firms obtain income from premium payments and interest on capital \(k_{j,t}\) (of firm j at time t) at interest rate \(\xi \). Firms also cover claims and may attempt to increase capacity by either obtaining reinsurance or issuing CAT bonds. They pay dividends at a rate \(\varrho \) of positive profits. Firms decide whether or not to underwrite a contract based on whether their capital \(k_{j,t}\) can cover the combined value-at-risk (VaR) of the new and existing contracts in the peril region with an additional margin of safety corresponding to a multiplicative factor \(\mu \). They additionally try to maintain a diversified portfolio with approximately equal values at risk across all n peril regions.

Policyholders, shareholders, catastrophe bonds, and institutional investors that would buy catastrophe bonds (such as pension funds and mutual funds ) are not represented as sophisticated agents in this model. Shareholders receive dividend payments. Institutional investors buy catastrophe bonds at a time-dependent price that follows the premium price. They do not otherwise reinvest or have any impact on the companies’ policies.

CAT bonds pay claims as long as they are liquid and are dissolved at bankruptcy or otherwise at the scheduled end of life (at which point the remaining capital is paid out to the owners). The modular setup of the ABM allows us to run replications with and without reinsurance and CAT bonds.

4.3.2 Dividends

Firms in the simulation pay a fixed share of their profits as dividends in every iteration, provided there were positive profits. In time periods in which the firm writes losses no dividend is paid. That is,

where R are the dividends and \(\varrho \) is the share of the profits that is paid as dividends. For the results that we report in this paper we have fixed \(\varrho =0.4\).

4.3.3 Risk models

VaR Each insurance and reinsurance firm employs only one risk model. It uses this risk model to evaluate whether it can underwrite more risks (or not) at any given time. We assume that risk models are imperfect in order to allow investigation of effects of risk model homogeneity and diversity.

There is empirical evidence that risk models are inaccurate (see Sect. 2). In some peril regions they tend to underestimate risk while in others they overestimate it. In our model risk models are inaccurate in a controlled way: they are calibrated to underestimate risks in exactly one of the n peril regions and to overestimate the risks in all other peril regions by a given factor \(\zeta \) (see Table 2). Since the n peril regions are structurally identical, with about the same number of risks and with risk events governed by the same stochastic processes, this allows up to n different risk models of identical quality.Footnote 13

The risk models use the VaR in order to quantify the risk of the insurers in each one of the peril regions. The VaR is a statistic that measures the level of financial risk within an insurance or reinsurance firm over a specific time frame. It is employed in some regulation frameworks including Solvency II, where it is used to estimate the Solvency Capital Requirement. Under Solvency II, insurers are required to have \(99.5\%\) confidence they could cope with the worst expected losses over a year. That is, they should be able to survive any year-long interval of catastrophes with a recurrence frequency equal to or less than 200 years. The probability that catastrophes generating net losses exceeding the capital of the insurer in any given year is \(\alpha =\frac{1}{\mathrm {recurrence\, interval}}=\frac{1}{200}= 0.005\). For a random variable X that would represent the losses of the portfolio of risks of the insurer under study, the VaR with exceedance probability \(\alpha \in [0, 1]\) is the \(\alpha \)-quantile defined as

This means that, e.g., under Solvency II, the capital that the insurer is required to hold can be computed with the \(\mathrm{VaR}_{0.005}(X)\).

Computation of the firm’s capital requirement A firm’s VaR can be derived from the firm’s risk model as a margin of safety factor over the VaR of the entire portfolio: companies should hold capital \(k_{j,t}\) such that

where the \(X_i\) represent all sources of cash flow for the company (including investment returns, credit risk, insurance losses, premium income, expenses, operational failures, etc.) and \(\mu \ge 1\) is a factor for an additional margin of safety. In other words the firm’s whole balance sheet from \(t_0\) to \(t_0+1\) year must be modeled and capital must be sufficient for the firm to have a positive balance sheet \(99.5\%\) of the time as a minimum. Due to catastrophes, this condition can occasionally be violated, e.g., if the company takes a loss such that \(k_{j}\) is suddenly and severely reduced. In the present model, the companies will in such cases stop underwriting until enough capital is recovered.

Estimation of the VaR in the simulation We opt for a simplified approximation of the computation of the true VaR in the firm’s risk models. This simplification is necessary in order to save significant amounts of the otherwise prohibitively long computation time. This section elaborates on why this simplification is necessary and how we nevertheless ensure a largely accurate result.

Computing the VaR over the firm’s portfolio requires computation of the convolution of the distributions of damages and those of the frequency of catastrophes both over time and in all peril regions while also taking into account reinsurance contracts. Reinsurance contracts essentially remove part of the support of the damage distribution and make them non-continuous.Footnote 14 Estimating the non-continuous distribution of cash flows would require a Monte Carlo approach. Since this is necessary for every underwriting decision, it would increase the computation time required for the ABM by orders of magnitude.

We argue that to study the effects of systemic risk of risk model homogeneity, it is not necessary to compute the \(\mathrm{VaR}\) combined for all peril regions and over the entire year.Footnote 15 We will make two simplifications: (1) working with the values at risk due to individual catastrophes in the model and (2) considering the VaR separately by peril region and combining the peril regions with a maximum function.

(1) The focus on individual catastrophes instead of on 12-month periods transforms the timescale in the results of our simulations, but the type of dynamics and the shape of distributions obtained are the same. Evidently, bankruptcies should be more frequent in our approach since we are only holding capital to survive individual catastrophes with a returning period of 200 years, but not catastrophe recurrence in 12-month period intervals. However, bankruptcy frequency is the only aspect that is affected.Footnote 16

(2) Further, computationally expensive convolution of distributions across peril regions can be avoided, since a good approximation can be obtained with the maximum function over the VaRs in individual peril regions. To see this, consider two extreme cases. If, on the one hand, the separation times of catastrophes were perfectly correlated between all n peril regions and catastrophes would therefore always coincide, we would have \(\mathrm{VaR}^c=\mathrm{VaR}^1+\mathrm{VaR}^2+\cdots +\mathrm{VaR}^n\). If, on the other hand, catastrophes would never coincide, we would have \(\mathrm{VaR}^c=\max (\mathrm{VaR}^1,\mathrm{VaR}^2, \ldots , \mathrm{VaR}^n)\). The first scenario overestimates the VaR; the second underestimates it, by neglecting the probability of the coincidence of multiple catastrophes. In other words, there is a residual VaR term \(\mathrm{VaR}^{r}\) to account for this:

We choose our parameters such that the probability of such a coincidence happening is small,

Namely, we choose \(\lambda =100/3\), \(n=4\), hence \(P_\mathrm{coincidence}\approx 0.005\). Consequently, our \(\mathrm{VaR}^{r}\) is smaller than the Solvency II capital requirement threshold. We can therefore avoid performing the prohibitively resource-consuming exact computation of the VaR in the risk models and approximate

Balancing of portfolios based on VaR in the simulation In addition, and especially when getting close to the limit \(k_{j,t} \approx \mu \mathrm{VaR}^i\), firms will prefer to underwrite risks in different peril regions such that the portfolio is approximately balanced, keeping a similar amount of risk in every peril region. More specifically, they underwrite a new contract only if the new standard deviation of the \(\mathrm{VaR}^*\) in all peril regions is lower with than without this new contract. That is,

where \(\mathrm{VaR}^{n*}\) would be the estimated VaR in every peril region if the new contract is accepted. If the standard deviation is higher, firms will only be willing to accept a new contract if they are already balanced enough. In other words, the standard deviation computed with the new \(\mathrm{VaR}^{n*}\) must be small compared to the total cash held by the firm:

where \(\vartheta \in [0, 1]\) is a parameter that regulates how balanced a firm wants to be and n is the number of peril regions.

4.3.4 Premium prices

The insurance industry is highly competitive. This justifies the assumption that all agents are price takers. Insurance and reinsurance premiums depend on the total capital \(K^{T}_{t}=\sum _{j=1}^{f_t} k_{j,t}\) available in the insurance sector. For the sake of simplicity we assume that insurance premiums oscillate around the fairFootnote 17 premium \(p_f\). When the total capital of the industry increases, the premiums paid by a policyholder decrease, and conversely, they increase when the total capital decreases. To avoid unrealistically high volatility, we set hard upper and lower bounds to the premium proportional to \(p_f\), \(p_f \cdot Max_L\) and \(p_f \cdot MinL\). This gives us a development equation for the premium price:

where the slope \(\frac{s \times K^{T}_t}{K^{I}_0 \times {\widetilde{D}} \times H}\) depends on the available capital \(K^{T}_{t}\), the number of risks available in the market H, the expected damage by risk \({\widetilde{D}}\), and the initial capital held by insurers at the beginning of the simulation, \(K^{I}_0\). \(s=s_i\) is a sensitivity parameter.

The thresholds \(Max_L\) and MinL are implemented in the model as parameters and can be varied, although we have run most of the simulations with values of \(MinL=70\%\) of the fair premium as lower boundary and \(MaxL=135\%\) of the fair premium as upper boundary. The boundaries are rarely hit.

Reinsurance prices also follow Eq. 14. The thresholds \(Max_L\) and MinL are the same. The only differences are that

-

the reinsurance premium depends only on the capital available in the reinsurance market

$$\begin{aligned} K^{T}_{t}=\sum _{j=1}^{f_t} z_{j,t}k_{j,t} \end{aligned}$$where z is a vector of length \(f_t\) such that element \(z_{j,t}\) is 1 for reinsurers and 0 for insurers)

-

initial capital is also that of the reinsurers \(K^{R}_0\)

-

the sensitivity to capital changes, \(s=s_r\) is larger than for the insurance premium (steeper slope), \(s_r>s_i\).

The capital in the reinsurance market is usually an order of magnitude below the capital available in the insurance market. This is true for contemporary global insurance and reinsurance markets (see Sect. 2) and is reproduced by the model in the average steady-state values of the capital after careful calibration (see Sect. 5).

For the sake of simplicity premiums are the same for all peril regions. This is a base case that allows us to design risk models of identical quality.

4.4 Contracts

4.4.1 Insurance and traditional reinsurance contracts

Insurers provide standard insurance contracts lasting 12 iterations (months). At the end of a contract the parties try to renew the contract, which leads to a high retention rate.

Insurers may obtain excess-of-loss reinsuranceFootnote 18 for any given peril region. The standard reinsurance contract lasts 12 iterations (months). The insurer proposes a deductible for the reinsurance contract; the reinsurer will evaluate whether or not to underwrite the contract. Each reinsurance contract has a deductible (i.e., the maximum amount of damages the insurer has to pay before the contract kicks in). In our model, the deductible for each contract is drawn from a uniform distribution between [25%, 30%] of the total risk held per peril region by the insurer at the start of the contract.

4.4.2 Alternative reinsurance: CAT bonds

The model also includes a simplified alternative insurance capital market: Both insurers and reinsurers may issue catastrophe bonds (CAT bonds) by peril region. A CAT bond is a risk-linked security that allows institutional investors like mutual funds and pension funds to reinsure insurers and reinsurers. They are structured like a typical bond, where the investors transfer the principal to a third party at the beginning of the contract and they receive coupons (some points over LIBOR) every year for it. If during the validity of the contract no catastrophe occurs the principal is returned to the investor. If a catastrophe occurs the losses of the insurer and reinsurer are covered with the principal until it is exhausted. CAT bonds are attractive since they are uncorrelated with the other securities available in the financial market. Since institutional investors are risk averse only the high layers of the reinsurance programs with a low probability of loss are covered by CAT bonds.

If insurers cannot get reinsurance coverage over five or more iterations they issue a CAT bond. The premium of CAT bonds is a few points over the reinsurance premium of the traditional reinsurance capital market.

4.5 Model setup and design choices

4.5.1 Settings

Risk model homogeneity and diversity can be studied by comparing settings with different numbers of risk models used by different firms. In a one risk model case, all firms use the same (imperfect) risk model. In a two risk model case, firms are divided between two equally imperfect risk models, etc.

4.5.2 Experimental design

We compare n settings with different numbers of risk models \(\nu =1, 2, \ldots , n\). The up to \(\nu =n\) different risk models are of identical accuracy and distinguished by underestimating risks in different peril regions. As a consequence, we need to model n different peril regions; we retain this number of peril regions in all settings including the ones with \(\nu <n\) different risk models in order to allow for a more direct comparison.

Simulations are run as ensembles of M replications for each of the n settings considered. In every replication we run the model with identical parameters, changing only the original random seed. We typically set \(M=400\) and \(n=4\), which means we run \(4\times 400=1600\) replications of a simulation just varying the number of risk models (\(M=400\) each for \(\nu = 1, 2, 3, 4\)).

The catastrophes in the model are random, but occur at the same time steps for the different model diversity settings to provide a meaningful comparison. If a catastrophe x of size \(D_x\) happens in time step \(t_x\) in replication \(m_x\) for the one risk model case, then a catastrophe of the same size \(D_x\) will hit the simulation in the same time step (\(t_x\)) in the same replication \(m_x\) in the two risk model case, in the three risk model case, and in the four risk model case. This allows us to isolate the effects of risk model diversity as the four different settings are exposed to the same sequences of perils.

We run experiments for various parameter values, e.g., to consider the effects of different margins of safety \(\mu \) and the effect of the presence or absence of reinsurance.

We have designed the model so that after transients die out the behavior is stationary. This allows us to take long time averages of quantities such as the frequency of bankruptcies. Quantities such as the number of firms and number of reinsurance firms are set initially, but these change in time in response to the other parameters. After a long time the initial settings become irrelevant. To avoid biasing our results with data from the transient stage we remove the first 1200 periods (100 years) of the simulations.Footnote 19

4.5.3 Software

The model was written in Python. The source code is publicly available.Footnote 20 It is still under development, e.g., with extensions for validation, calibration, and visualization.

5 Results

We now demonstrate applications of the model. We aim to highlight its capabilities and demonstrate insights on the behavior of catastrophe insurance systems that can be gained from it.

Section 5.1 discusses the behavior of the model within single replications. Using the example of the insurance cycle, it will be highlighted that the model is able to reproduce realistic time series.

Section 5.2 compares ensemble simulations with four different risk model diversity settings. The four risk model diversity settings correspond to the one, two, three, and four risk models used by the firms in the respective simulation. It serves to demonstrate that the model can be used to investigate systemic risk of model homogeneity in insurance. We show time development patterns in the ensemble simulations (premiums, revenue, numbers of active firms), provide evidence of systematic differences between risk model diversity settings and discuss distributions of firm bankruptcy cascade sizes and of amounts of non-recovered claims.

Section 5.3 investigates the effect of reinsurance by comparing simulations in the base scenario with the normal complement of reinsurance firms (as discussed in previous subsection) on the one hand and counterfactual ones without a reinsurance sector on the other.

Section 5.4 discusses emerging distributions of firm sizes that reproduce asymmetric firm size distributions in reality nicely in spite of initially equal firm sizes in the model.

If not indicated otherwise, the parameter settings are as given in Table 8.

Insurance cycle for the model. a Time series of the premium in percent of the fair premium (expected loss) in a typical simulation run, where \(100\%\) means that premiums are on average equal to claims. b Time series for the same simulation run of the sum of profits and losses of all insurers in normalized monetary units. The insurance cycle emerging in the development of the premium (a) has realistic characteristics and is distinct from (albeit influenced by) the development of profits and losses (b)

a Real insurance cycle in terms of the Rate-on-Line index (same as Fig. 1). b Insurance cycle as generated by the ABM, rescaled to the same magnitude as in panel a and over a shorter span of time in comparison with the previous figure. We had to rescale because the algorithm used for computing the Rate-on-Line is not public, but the range of variation of the period and amplitude are similar

5.1 Reproducing the insurance cycle

The insurance model presented here is able to reproduce the most important stylized facts of the insurance cycle as discussed in Sects. 2 and 3.2. In panel (a) of Fig. 4, the time evolution of the premium in a single run of the model over a span of more than 80 years is shown. For the sake of simplicity we ran this simulation without reinsurance. The transitions from soft markets to hard markets can take several years. In line with real insurance cycles, fluctuations are irregular in both frequency and amplitude. The time series of profits and losses of the industry in the same simulation run is shown in panel (b) of Fig. 4. During most years, the industry is growing (profits are positive), but this growth is disrupted in years with catastrophes and the immediately following years. The industry as a whole experiences losses only in years with catastrophes.

In Fig. 5 we show a comparison between the real insurance cycle published by the reinsurance broker Guy Carpenter and a simulated insurance cycle generated by the model.Footnote 21 The cycle generated by the model is a 25-year sample in a single run of more than 200 years. The algorithm Guy Carpenter uses to generate the index is not public as it is commercially sensitive information. To obtain a comparable measure, we have rescaled the premium time series produced by the ABM using a linear transformation to obtain fluctuations of the same magnitude as in the real index. Although there are differences in detail, both time series share approximately the same range of variation in period and amplitude. The rescaled premium time series also have a very similar mean and standard deviation as shown in Table 3.

We also show the autocorrelation spectrum in Fig. 6. Here we can see that the real insurance cycle has a peak at 8 years, while the data generated artificially with the ABM has peaks at 8 and 11 years, which constitutes a fair approximation.

5.2 Systemic risk due to model homogeneity

We now study the characteristics of the insurance system as we vary the number of distinct risk models available to the insurance and reinsurance companies from absolute homogeneity (one risk model) to four risk models with intermediate cases of two and three alternatives.

We have chosen the models so their average accuracy is the same but they are inaccurate in different circumstances (see Sect. 4). Specifically, each model underestimates risks in a different peril region. A catastrophe in a particular peril region will therefore hit firms that employ the one risk model which underestimates this peril region particularly hard.

The results for any given simulation are very diverse, with large variations from run to run. By performing 400 simulations we reduce the variation sufficiently to make the differences clear. To reduce the variance we construct the \(M=400\) ensembles for each of the four risk model diversity settings so that they have identical risk event profiles.Footnote 22 The mean results corresponding to the four settings are shown in Figs. 78, 9 and 10: red (circles) for the setting where all firms use the same risk model, blue (squares) for the setting with two different risk models, green (crosses) for the setting with three risk models, and yellow (triangles) for the setting with four risk models. To convey an idea of the dispersion, the figures include interquartile ranges for the one- and two-riskmodel cases as shaded areas. We also report the mean differences between the settings for all variables visualized in these figures in Table 4. Two-sided t-tests were performed to make sure that the differences are significant.Footnote 23

Number of non-insured risks as a function of time. Ensemble simulation with 400 replications for each risk model diversity settings. Margin of safety is \(\mu =2\). Time steps 1200–4000 (months) are shown (transient in time steps 1–1199 removed). Ensemble means are shown as solid lines. The interquartile ranges of the settings with one (red) and two (blue) risk models are depicted as shaded areas. (The overlap of both areas is shaded in magenta.) (color figure online)

Number of operational insurance firms. See caption for Fig. 7

Amount of excess capital (beyond the capital required to cover currently underwritten contacts). This provides a measure of of the capacity to write additional business. See caption for Fig. 7

Insurance premiums. See caption for Fig. 7

As shown in Fig. 7, the setting with one risk model typically results in more risks left without insurance coverage. Since the number of insurable risks is held constant, if fewer contracts are issued, there are more risks that cannot be insured because no insurance firm is willing to insure them. Insurance firms find it more difficult to diversify their portfolio with only one risk model hence they are more reluctant to issue more contracts. For instance, diversity with four risk models decreases the number of non-insured risks by \(50\%\) on average compared to the homogeneity setting with only one risk model (Table 4).

The market is more competitive with a higher diversity of risk models: Fig. 8 shows that the number of insurance firms is increased from an average of 54 firms to around 66 when more models are used, an increase of more than \(20\%\) (Table 4). Surprisingly, there is very little change in the number of reinsurers. As shown in Fig. 9, this also results in a reduction in the amount of available capitalFootnote 24 for both insurance and reinsurance firms. Here the change is more dramatic: For insurance companies, the available capital when there are four risk models is about \(55\%\) higher than it is with one risk model. For reinsurance companies, available capital it is almost \(76\%\) higher (Table 4). This indicates that risk buffers are higher; companies are able to absorb more catastrophic loses with more model diversity.

Finally, Fig. 10 shows that for insurance firms the risk premiums are lower with one risk model than they are for four risk models, though here the difference is small (less than \(1\%\)). Surprisingly, this effect is reversed for reinsurance firms,Footnote 25 though again, the difference is similarly small. The premium for reinsurance firms in the case of the setting with four risk models is around \(1.6\%\) cheaper than the setting with only one risk model.

Figure 11 shows how the insurance cycle is affected by this in a simulation with the same schedule of catastrophes and random seed for all four risk model diversity settings. The premium tends to be lower when more risk models are available. We also find that the volatility/capital ratio is similar in all cases. The insurance cycle seems to be longer in the case of one risk model.

Bankruptcies are a key measure of systemic risk. To study how the number of risk models affects this, we compile statistics about the size of bankruptcy cascades, which we measure as the share of bankrupt firms \(B_{t}=b_{t}/f_{t}\). Here, \(b_{t}\) is the number of bankrupt firms at time t and \(f_{t}\) the total number of firms at time t). Both numbers include both insurance and reinsurance firms. We study the distribution of these variables across all replications and the entire history of each replication of every setting of the simulation. Figure 12 shows the distributions of sizes of bankruptcy cascades.Footnote 26

The total number of bankruptcy events shown in Fig. 12 does not differ very much between the four risk model diversity settings. However, the number of very large events is very different. For the one risk model case (uppermost panel, red), the body of the distribution extends continuously up to more than a third of the sector (0.35) while in the four risk model case (lowermost panel) only some scattered outliers beyond 0.27 are observed across all 160, 000 time steps from all 400 replications. As shown in Table 6, roughly 4200 firms default with one risk model, whereas there are only about 1500 firms defaulting with four risk models.

Histogram of the number of non-recovered claims \(C_t\) in each bankruptcy cascade, where \(C_t\) is defined on each time step t. See caption for Fig. 12

The linearity of these histograms on semi-log scale suggests that exponentials provide a crude fit to the body of these distributions. As an alternate measure of systemic risk we fit exponentials to each distributionFootnote 27 to measure the slope \({\widehat{\lambda }}\) with which the distribution decays in these semi-log plots. Lower values indicate higher risk of very large events. As shown in as shown in Table 6, we find that the slope for the distribution of sizes of bankruptcy cascades B is steeper for settings with more diversity, changing from \({\widehat{\lambda }}_{\nu =1} = 33.99 \pm 0.07\) with one risk model to \({\widehat{\lambda }}_{\nu =4} = 42.99 \pm 0.1\) with four risk models.Footnote 28 This finding is robust and holds throughout four different series of simulations (each with all four risk model diversity settings) reported in the table: (1) the standard case, (2) a comparative case without reinsurance but all other settings identical (discussed in Sect. 5.3), (3) a comparative case with lower margin of safety (\(\mu =1\)), and (4) a case with lower margin of safety and without reinsurance (discussed in Appendix B). More diversity thus leads to significantly fewer events in specific tail quantiles than comparative settings with less diversity. This is confirmed by the number of bankruptcy events affecting more than \(10\%\) of the insurance and reinsurance firms as reported in the lower part of Table 6. The difference between the four risk model diversity settings becomes larger in cases without reinsurance. This is discussed in more detail in Sect. 5.3 (compare Fig. 14).

We also study the number of non-recovered claims, which yields similar albeit less pronounced results shown in Fig. 13. This is the number of times that a policy is not paid due to the default of an insurance company. We measure this in terms of the number of unpaid claims \(C_{t}\) at each time step.

Histogram of the total sizes of bankruptcy events without reinsurance. See caption for Fig. 12

Histogram of the number of non-recovered claims \(C_t\) at each time step when reinsurance is used. See caption for Fig. 12

Number of operational firms and number of insured risk without reinsurance. See caption for Fig. 7

Insurance premiums without reinsurance. See caption for Fig. 7

5.3 Effect of reinsurance

The effect of reinsurance can be observed by running the simulation without reinsurance firms. The results are reported in Figs. 14 (histogram of sizes of bankruptcy cascades), 15 (histogram of amounts of non-recovered claims), as well as Figs. 16 and 17 (time series). Mean differences for all variables are reported in Table 5; two-sided t-tests show that all mean differences are highly significant.

It can be seen that the effect of risk model diversity or homogeneity on bankruptcy cascades is much stronger in this case. For example, the shapes of the distributions for the four risk model settings are more markedly different in the case without reinsurance (Fig. 14) with the tail becoming shorter for settings with more diversity. With only one risk model, there were 4385 events in the sample that affected more than \(10\%\) of the insurance firms. With four risk models, this reduces to 1229 events, a reduction of \(71\%\) (see Table 6, second column). In the equivalent settings with reinsurance (Fig. 12), this reduction is only \(62\%\) from 4212 to 1561 events. The difference is also clear from the slope of the fit line of the histograms of bankruptcy sizes in semi-log (that is, the parameter of the exponential distribution, \({\widehat{\lambda }}\)): Without reinsurance it changes from \({\widehat{\lambda }}_{\nu =1}=36.81\) to \({\widehat{\lambda }}_{\nu =4}=58.78\), a change of \(60\%\), with reinsurance only \(26\%\) from \({\widehat{\lambda }}_{\nu =1}=33.99\) to \({\widehat{\lambda }}_{\nu =4}=42.99\) (see Table 6, first and second column).

While reinsurance adds a second contagion channel to systemic risk due to the counterparty exposure from reinsurance contracts, it partially alleviates the systemic effects of risk model homogeneity. Therefore, the number of large bankruptcy events (more than \(10\%\) of firms affected) is up to \(20\%\) higher with reinsurance. This can be seen in the first and second column in Table 6. It holds for all settings with at least two risk models. In the setting with risk model homogeneity (only one risk model) this is reversed and the number of large bankruptcy events is larger without than with reinsurance. The increased effect of risk model homogeneity with reinsurance discussed in the previous paragraph is in this setting stronger than the counterparty exposure effect.

The stronger effects of risk model homogeneity are also visible in the time series shown in Figs. 16 and 17 (compared to Figs. 8, 7, and 10): The ensemble means for the different risk model diversity settings lie further apart: With reinsurance, risk model diversity can increase the number of active insurers from about 50 to about 65 (Fig. 8) and reduce the number of uninsured risks from about 6000 to half that (Fig. 7). Without reinsurance, risk model diversity can increase the number of active insurers from about 70 to about 120 and reduce the number of uninsured risks from about 8000 to about 2000 (Fig. 16). With reinsurance, risk model diversity seemed to lead to higher insurance premiums but lower reinsurance premiums. Without reinsurance, the insurance premiums tend to decrease with risk model diversity and thus follow the behavior shown by reinsurance premiums above. Moreover, while the ensemble interquartile ranges overlap for each of these variables with reinsurance, this overlap is not present without reinsurance, except in the premium prices, and there the overlap is small.

It should be noted, however, that the numbers of uninsured risks are higher without than with reinsurance for almost all risk model diversity settings. This emphasizes that reinsurance does have a productive and important role in the insurance system beyond rearranging the patterns of systemic risk of modeling.

Ensemble of empirical complementary cumulative distribution functions (cCDFs) of the distribution of insurance firm sizes in terms of capital after 1000 time steps in an ensemble of 70 replications of simulations with margin of safety \(\mu =2\). The median is shown as solid line, the mean as dashed line, the interquartile range as shaded area. Mean, median, and interquartile range are with respect to the ensemble of cCDFs, i.e., evaluated in x-axis direction

5.4 Reproducing the emergence of asymmetric firm-size distributions

Figure 18 shows the complementary cumulative distribution function of the distribution of insurance firm sizes in terms of capital after 1000 time steps with dispersion in an ensemble run for one parameter setting. Table 7 shows the mean skew and associated confidence measures. The number of firms ranges up to several hundred per replication. Distributions with a long tail emerge consistently across all risk model diversity settings. The skew is always positive and greater than one, indicating highly right-skewed distributions. This is insensitive to parameters (e.g., \(\mu \), presence or absence or reinsurance, etc.) and may also appear for reinsurance firms, although that is not significant because of the smaller number of reinsurance firms (see Appendix B). This corresponds nicely with established empirical facts about firm size distributions, which are found to be long tailed although the findings on the concrete functional form diverge;Footnote 29 lognormal, exponential, or power law shapes have been proposed. This fact can be confirmed for the insurance sector, but the number of firms, both in the simulation replications in our study and in empirical data sets on insurance firms, is not large enough to fit concrete functional forms with sufficient confidence.

6 Conclusion

Solvency II, the EU insurance regulation framework, came into effect in January 2016. It constitutes a major step for insurance regulation in terms of liquidity, capital, and transparency requirements, making it possible to address microprudential aspects as well as potentially systemic risk from counterparty exposure. It also includes provisions for usage and design of risk models. But whether we understand the systemic level of the insurance and reinsurance business sufficiently well to confidently design regulatory measures for a resilient insurance sectors is still an open question. No scholarly consensus has as yet emerged about what drives the insurance cycle. It remains unclear what effect new financial market vehicles like CAT bonds will have on the industry compared to traditional reinsurance. The investigation of systemic risk in insurance is a new and unexplored field.

In the present paper we present an agent-based model of the insurance sector to help address these questions. The model includes reinsurance and a number of other aspects. It reproduces a variety of stylized facts, ranging from the insurance cycle to the firm size distribution to the importance of reinsurance. It also allows investigating the roles of various elements of the insurance system and the mechanisms behind some of its characteristics.

We have demonstrated the capabilities of the model to reproduce said stylized facts and used it to show the existence and the properties of systemic risk of modeling in insurance systems. To do so, we considered ensemble runs with the same environment, the same parameters, and the same profile of risk events but different numbers of (one, two, three, and four) risk models of identical quality employed by the insurance and reinsurance firms in the simulations. We found that settings with greater diversity tend to experience less severe bankruptcy cascades, especially in cases with a low margin of safety and in counterfactual cases without reinsurance.

We found that settings with risk model diversity not only succeeded in partially offsetting the risk of large bankruptcy cascades, but also tended to lead to an insurance–reinsurance sector with greater penetration (higher share of risks underwritten), more active firms, and more available capital for additional endeavors on the part of the insurance firms. However, we found that the benefits differed between the insurance and the reinsurance part of the business; doubtlessly, different parameters can lead to a reallocation of assets between these sectors. Reinsurance tends to mitigate the strength of the systemic risk effect of risk model homogeneity but can exacerbate it in some cases by adding an additional contagion channel (reinsurance counterparty exposure).

It should be noted that the results reported here represent an entirely hypothetical world that was only calibrated in terms of accurate functioning of the interaction mechanisms and credible settings of the environmental parameters, such as the distributions of perils, the interest rate, and the rate of market entry. Running simulations that are calibrated to empirical data of real insurance–reinsurance markets are highly desirable, but will require high-quality data as well as significant efforts in model calibration,Footnote 30 and are left for future research.

Notes

The first insurance contracts emerged much earlier, but the related practices bore little resemblance of the modern insurance system.

This event is also known as the Smyrna fleet disaster and was national tragedy for England. The value of the English GDP in the year 1693 is estimated to be around 59 million pounds (Campbell et al. 2015).

A large number of merchant ships (around 400) with extraordinarily valuable cargo traveled together and were therefore vulnerable to the same risks. In addition, the measures taken to protect the fleet (22 escorting warships) were inadequate.

Reinsurance of reinsurance companies is called retrocession.

Reinsurers at the Lloyd’s of London were present in multiple layers of retrocession without realizing it. When the disaster at the Piper Alpha oil rig in 1988 caused unanticipated high losses, these retrocession layers were triggered, hitting already cash-strapped firms again and at the same time denying lower layers a speedy recovery of claim payments. The retrocession branch and the insurance business as a whole have become more prudent in this regard (Cummins and Weiss 2014).

Insurers tend to slice all the risks in a peril region or the whole firm in various layers of reinsurance by damage size. Each layer is ceded in different contracts and likely to different reinsurance firms.

They do, however, report a slightly decreasing frequency with the number of layers in their data set: 277 one-layered treaties, 201 two-, 235 three-, 129 four-, 79 five-layered treaties.

Data from Lane Financial LLC. seen by the authors of the present paper confirm this.

Identical or similar values for all risks is fairly realistic for property insurance, which is a large part of of the catastrophe insurance business. Other types of insurance (ships, airplanes, satellites, etc.) are subject to size effects on the part of the individual risk and may therefore show a different behavior, but may have similar characteristics to the excess-of-loss reinsurance business included in our model, which also involves individual contracts of large value.

While underwriting decisions are not discussed publicly, it is reasonable to assume that investors would pull their capital if the firm is unable to write enough business. The different values for insurance and reinsurance companies reflect the longer time horizon and the larger scope reinsurance should have compared to insurance.

To investigate the effects of risk model diversity, it is important that the risk models should be of identical quality in order to avoid interference from effects based on quality differences.

For reinsurance firms, reinsurance contracts add parts of other companies’ risk distributions between the contract’s limit and deductible, also making the resulting distribution non-continuous.

We aim to assess the effect of risk model diversity. As explained below, we consider risk models that underestimate the risk in exactly one peril region, while overestimating that in others. The model setup for this approach is correct as long as the true VaR is between the underestimating and overestimating values returned by the various risk models. In fact, we can expect our estimate to be much closer to the true VaR.

Changing the interpretation of the model’s time steps from months to years would correct this aspect exactly. We opt not to do this, as it entails other problems such as the companies’ reaction times, as well as loss of the model’s present level of detail.

By fair premium we mean in this context a premium that would on average offset the damages and thus lead to zero profits and zero losses.

The ABM also allows the possibility of using only proportional reinsurance, although we this is not explored in the present study.

We have observed that the model settles after about 800 time periods. We remove 1.5 times that, i.e., 1200 time periods, to be absolutely sure the transient is not included in the data.

Source: http://www.guycarp.com/. We only have ca. 25 years of data since time series are only available starting in 1990.

That is, we consider 400 different realizations of the stochastic processes governing when and where catastrophic perils of what size occur and run these realizations for each of the risk model settings. Initializing the simulation with the same emsemble of random seeds would not give similar risk event profiles and would not be a sufficiently meaningful comparison. As many aspects of the system are subject to heavy-tailed distributions, individual realizations might dominate the ensemble and bias the comparison if not present in the other three ensembles for the other risk model diversity settings.

It should be noted that the large sample size of 1.12 million observations per setting causes even small differences to be highly significant.

Available capital is the capital that is not tied up covering existing contracts, essentially the capacity to write additional business.

This is influenced by the amount of capital available in these sectors and ultimately by risk models and by firm entry and exit as defined in Sect. 4.3

To account for indirect effects, a bankruptcy cascade is here defined as series of defaulting firms in successive time steps without intermediating time steps in which no bankruptcies occur.

The effect is less clear-cut for the amount of unrecovered claims C. For instance, C is lower for the setting with just one risk model, since the size of the insurance business is smaller, but the expected shortfall is larger.

The empirical distribution is even more heavy-tailed than the ones typically emerging in the model, perhaps because of additional effects from the behavior of financial markets, etc. in reality.

References

Anderson S (2000) The Anglo-Dutch ’Smyrna fleet’ of 1693. In: Hamilton A, Hendrik de Groot A, Van Den Boogert M (eds) Friends and rivals in the east: studies in Anglo-Dutch relations in the Levant from the seventeenth to the early nineteenth century. Publications of the Sir Thomas Browne Institute Leiden, The Netherlands; Brill, Leiden, pp 95–116

Benfield AON (2016) Reinsurance market outlook January 2016. http://thoughtleadership.aonbenfield.com/Documents/20160101-ab-analytics-reinsurance-market-outlook-january-2016.pdf

Barde S, van der Hoog S (2017) An empirical validation protocol for large-scale agent-based models. University of Kent School of Economics Discussion Papers: KPDE 1712. https://EconPapers.repec.org/RePEc:ukc:ukcedp:1712

Bates JM, Granger CWJ (1969) The combination of forecasts. J Oper Res Soc 20(4):451–468. https://doi.org/10.1057/jors.1969.103

BMA (Bermuda Monetary Authority) (2016) Catastrophe risk in Bermuda: BSCR stress testing and modeling practice analysis (2015 report). https://www.bma.bm/viewPDF/documents/2018-12-28-07-39-44-BMA-Catastrophe-Risk-in-Bermuda-Report-2015.pdf

BMA (Bermuda Monetary Authority) (2018) Catastrophe risk in Bermuda: BSCR stress testing and modeling practice analysis (2017 report). http://cloudfront.bernews.com/wp-content/uploads/2018/12/Catastrophe-Risk-in-Bermuda-2017-Report.pdf

Boyer MM, Dupont-Courtade T (2015) The structure of reinsurance contracts. Geneva Papers Risk Insur Issues Pract 40(3):474–492

Caccioli F, Farmer JD, Foti N, Rockmore D (2015) Overlapping portfolios, contagion, and financial stability. J Econ Dyn Control 51:50–63. https://doi.org/10.1016/j.jedc.2014.09.041

Campbell B, Klein A, Overton M, van Leeuwen B (2015) British economic growth, 1270–1870. Cambridge University Press, Cambridge

Christensen K, Danon L, Scanlon T, Bak P (2002) Unified scaling law for earthquakes. Proc Natl Acad Sci USA 99(1):2509–2513. https://doi.org/10.1073/pnas.012581099

Cummins J (2008) Cat bonds and other risk-linked securities: State of the market and recent developments. Risk Manag Insur Rev 11(1):23–47

Cummins JD, Weiss MA (2014) Systemic risk and the U.S. insurance sector. J Risk Insur 81(3):489–528. https://doi.org/10.1111/jori.12039

Deutsch M, Granger CW, Teräsvirta T (1994) The combination of forecasts using changing weights. Int J Forecast 10(1):47–57. https://doi.org/10.1016/0169-2070(94)90049-3

Du N, Zeng Z, Viswanathan K (2015) The square-root rule for reinsurance: further analysis and consideration of China’s market. Asia-Pac J Risk Insur 9(1):125–148

Dubbelboer J, Nikolic I, Jenkins K, Hall J (2017) An agent-based model of flood risk and insurance. J Artif Soc Soc Simul 20(1):1–23