Abstract

A multiple-camera system (more than two cameras) has been developed to measure the shape variations and the 3D displacement field of a sheet metal part during a Single Point Incremental Forming (SPIF) operation. The modeling of the multiple-camera system and the calibration procedure used to determine its parameters are described. The sequence of images taken during the forming operation is processed using a multiple-view Digital Image Correlation (DIC) method, and the 3D reconstruction of the part shape is obtained using a Sparse Bundle Adjustment (SBA) method. Two experiments that demonstrate the potential of the method are described.


Notes

Twenty parameters for k, 12 parameters for d, 9 parameters for \(R_s\), 9 parameters for \(t_s\), 3 parameters for each \(R_i\), 3 parameters for each \(t_i\) and 3 parameters for each 3D point M.

\(4 \times 2np\) is a maximum, corresponding to the situation where all the p points of the calibration target are seen by all 4 cameras for all n positions of the target. In practice, the number of equations \(N_e\) is slightly smaller since, in equation (3), the \(d_{ijk}\) terms are only computed for the image-points that are seen in at least two cameras.

Typically, 15 to 25 images of the target are taken during the calibration step. The calibration parameters are estimated using the “best” target positions, i.e. those leading to the lowest reprojection error (19 target positions in the experiment considered here).

In both experiments, the skew factor is set to 0 and we only estimate the 1st order radial distortion parameter. Thus, there are only 20 intrinsic parameters: 16 parameters for k and 4 parameters for d.
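The parameter bookkeeping in the notes above can be checked with a short Python sketch. It assumes, as the 9 + 9 parameters for \(R_s\) and \(t_s\) suggest, that one camera serves as the reference and each of the 3 other cameras carries 3 rotation and 3 translation parameters. The function name `multicam_counts` is hypothetical, and `n_points = 100` in the example is a made-up value, since the number of target points is not given here.

```python
def multicam_counts(n_views, n_points, n_cams=4, n_k=5, n_d=3):
    """Return (unknowns, maximum number of scalar equations) for the
    multi-camera calibration: hypothetical helper, one reference camera assumed."""
    intrinsic = n_cams * (n_k + n_d)       # k and d for every camera
    relative = 2 * 3 * (n_cams - 1)        # R_s and t_s: 3 + 3 per non-reference camera
    per_view = 6 * n_views                 # R_i and t_i for each target position
    per_point = 3 * n_points               # 3D coordinates of each target point M
    unknowns = intrinsic + relative + per_view + per_point
    # Upper bound: every point seen by every camera in every view (2 equations each).
    max_equations = n_cams * 2 * n_views * n_points
    return unknowns, max_equations


# Full model (5 + 3 parameters per camera) vs. the reduced model of the
# experiments (skew fixed to 0, one radial coefficient): 4 + 1 per camera.
print(multicam_counts(19, 100))              # (464, 15200)
print(multicam_counts(19, 100, n_k=4, n_d=1))  # (452, 15200)
```

With the reduced model, the intrinsic part is \(4 \times (4 + 1) = 20\) parameters, matching the 16 + 4 stated above.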

The mesh size is smaller than the one shown in Fig. 7. Typically, the grid size is 5 × 5 pixels.

\(t_0 + \Delta t - 1\) and \(t_0 + \Delta t\) correspond to two consecutive acquisition instants.

Note that the distortion parameters are also intrinsic to the camera and they could be added to the intrinsic parameters vector.

References

Jeswiet J, Micari F, Hirt G, Bramley A, Duflou J, Allwood J (2005) Asymmetric single point incremental forming of sheet metal. CIRP Ann Manuf Technol 54(2):88–114

Jeswiet J, Young D, Ham M (2005) Non-traditional forming limit diagrams for incremental forming. Adv Mat Res 6–8:409–416

Shim MS, Park JJ (2001) The formability of aluminium sheet in incremental forming. J Mater Process Technol 113:654–658

Micari F, Ambrogio G, Filice L (2007) Shape and dimensional accuracy in single point incremental forming: state of the art and future trends. J Mater Process Technol 191:390–395

Kim TJ, Yang DY (2000) Improvement of formability for the incremental sheet metal forming process. Int J Mech Sci 42:1271–1286

He S, Van Bael A, Van Houtte P, Tunckol Y, Duflou J, Henrard C, Bouffioux C, Habraken AM (2005) Effect of FEM choices in the modelling of incremental forming of aluminium sheets. In: Proceedings of the 8th ESAFORM conference. Cluj-Napoca, Romania

Vic-Snap® and Vic-3D® (2008) Correlated Solutions Inc. http://www.correlatedsolutions.com/

Bugarin F, Decultot N, Devy M, Harvent J, Orteu J-J, Robert L, Velay V (2008) Multi-camera computer vision for large volume inspection: (a) aeronautical parts inspection and (b) incremental forming. In: Large Volume Metrology Conference 2008 (LVMC’2008), Liverpool, UK, 4–5 November 2008. Available from http://www.lvmc.org.uk/2008_presentations.shtml

Robert L, Decultot N, Velay V, Bernhart G (2008) Behaviour identification of aluminium sheet in single point incremental forming by finite element method updated. In: Photomechanics’2008, Loughborough, UK, 7–9 July 2008

Sutton MA, Orteu J-J, Schreier HW (2009) Image correlation for shape, motion and deformation measurements: basic concepts, theory and applications. Springer, 364 p. ISBN:978-0-387-78746-6

Sutton MA, Wolters WJ, Peters WH, McNeill SR (1983) Determination of displacements using an improved digital correlation method. Image Vis Comput 1:133–139

Harvent J, Bugarin F, Orteu J-J, Devy M, Barbeau P, Marin G (2008) Inspection of aeronautics parts for shape defect detection using a multi-camera system. In: Proceedings of SEM XI International Congress on Experimental and Applied Mechanics. Orlando, FL, USA, 2–5 June 2008

Harvent J (2010) Mesure de formes par corrélation multi-images: application à l’inspection de pièces aéronautiques à l’aide d’un système multi-caméras. Ph.D. thesis, Université de Toulouse, France

Vasilakos I, Gu J, Belkassem B, Sol H, Verbert J, Duflou JR (2009) Investigation of deformation phenomena in SPIF using an in-process DIC technique. Key Eng Mater 410–411:401–409

Sasso M, Callegari M, Amodio D (2008) Incremental forming: an integrated robotized cell for production and quality control. Meccanica 43:153–163

Faugeras O (1993) Three-dimensional computer vision: a geometric viewpoint. The MIT Press. ISBN:0-262-06158-9

Hartley RI, Zisserman A (2004) Multiple view geometry in computer vision, 2nd edn. Cambridge University Press. ISBN:0521540518

Garcia D, Orteu J-J, Devy M (2000) Accurate calibration of a stereovision sensor: comparison of different approaches. In: Proceedings of 5th workshop on Vision, Modeling, and Visualization (VMV’2000), Saarbrücken, Germany, 22–24 November 2000, pp 25–32

Garcia D (2001) Mesure de formes et de champs de déplacements tridimensionnels par stéréo-corrélation d’images. Ph.D. thesis, Institut National Polytechnique de Toulouse, France

Lourakis MIA, Argyros AA (2004) The design and implementation of a generic sparse bundle adjustment software package based on the Levenberg-Marquardt algorithm. Tech. Rep. 340, Institute of Computer Science, FORTH, Heraklion, Crete, Greece. Available from http://www.ics.forth.gr/~lourakis/sba

Ayache N (1991) Artificial vision for mobile robots—stereo-vision and multisensory perception. The MIT Press. ISBN:978-0-262-01124-2

Garcia D, Orteu J-J, Penazzi L (2002) A combined temporal tracking and stereo-correlation technique for accurate measurement of 3D displacements: application to sheet metal forming. J Mater Process Technol 125–126:736–742

Orteu J-J, Cutard T, Garcia D, Cailleux E, Robert L (2007) Application of stereovision to the mechanical characterisation of ceramic refractories reinforced with metallic fibres. Strain 43(2):96–108

Orteu J-J (2009) 3-D Computer vision in experimental mechanics. Opt Lasers Eng 47(3–4):282–291

Triggs B, McLauchlan PF, Hartley RI, Fitzgibbon AW (2000) Bundle Adjustment—A Modern Synthesis. In: ICCV’99: proceedings of the international workshop on vision algorithms. Springer, London, UK, pp 298–372

Weng J, Cohen P, Herniou M (1992) Camera calibration with distortion models and accuracy evaluation. IEEE Trans Pattern Anal Mach Intell 14(10):965–980

Zhang Z (2004) Camera calibration with one-dimensional objects. IEEE Trans Pattern Anal Mach Intell 26(7):892–899

Pribanić T, Sturm P, Peharec S (2009) Wand-based calibration of 3D kinematic system. IET Comput Vis 3:124–129

Lavest J-M, Viala M, Dhome M (1998) Do we really need an accurate calibration pattern to achieve a reliable camera calibration? In: Proceedings of the 5th European Conference on Computer Vision (ECCV’98), vol I. Springer, London, UK, pp 158–174

Ravn O, Andersen NA, Sorensen AT (1993) Auto-calibration in automation systems using vision. In: 3rd International Symposium on Experimental Robotics (ISER’93), Japan, pp 206–218

Zhang Z (2000) A flexible new technique for camera calibration. IEEE Trans Pattern Anal Mach Intell 22(11):1330–1334

Acknowledgement

We thank Didier Adé for his help in carrying out the experiments.

Appendix: Modeling and Calibration of a Single Camera

In this Appendix, the modeling and calibration of a single camera are presented in order to introduce the general methodology of our approach, which has been extended to the modeling and calibration of a multiple-camera system (see Section “Modeling and Calibration of the Multiple-Camera System”).

A classical pinhole camera model (linear model) is used. A set of distortion parameters is added to this model to account for the optical distortion of the lens (the model then becomes non-linear) [26].

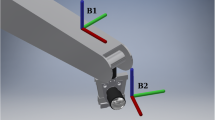

As shown in Fig. 16, we denote \(\mathcal{R}_\mathsf{w}\) the world coordinate system, \(\mathcal{R}_\mathsf{c}\) the camera coordinate system (which has its origin at the optical center of the lens), \(\mathcal{R}_\mathsf{r}\) the retinal coordinate system associated with the sensor plane and \(\mathcal{R}_\mathsf{s}\) the image coordinate system (in pixel units).

Fig. 16 The three elementary transformations of the pinhole camera model and the associated coordinate systems. The first transformation expresses the coordinates of a scene point in the camera coordinate system. The second one is the projection of this point onto the retinal plane. The third one transforms the point into the sensor coordinate system (undistorted pixel).

The transformation that maps any 3D point M in \(\mathcal{R}_\mathsf{w}\) into a 2D image-point m (in pixel units) consists of four consecutive transformations:

1. a transformation between \(\mathcal{R}_\mathsf{w}\) and \(\mathcal{R}_\mathsf{c}\). This is a rigid-body transformation \(L = (R, t)\) (rotation R + translation t) that transforms a 3D point M in \(\mathcal{R}_\mathsf{w}\) into a 3D point \(M_c\) in \(\mathcal{R}_\mathsf{c}\): \(M_c = L(M)\).

2. a transformation between \(\mathcal{R}_\mathsf{c}\) and \(\mathcal{R}_\mathsf{r}\). This is a projective transformation P (imaging process) that transforms the 3D point \(M_c\) into a 2D point \(m_r\) in the retinal plane: \(m_r = P(M_c)\).

3. a transformation between \(\mathcal{R}_\mathsf{r}\) and \(\mathcal{R}_\mathsf{s}\). This transformation describes the sampling of the intensity field incident on the sensor array. A 2D point \(m_r\) in the retinal plane (in metric units) is transformed into a 2D undistorted point \(\tilde{m}\) in the sensor image plane (in pixel units): \(\tilde{m}=\mathbf{K}_\mathbf{k}(m_r)\).

   This transformation depends on the five camera intrinsic parameters \(k = (c_x, c_y, f_x, f_y, s)\), where \(c_x\) and \(c_y\) are the coordinates of the principal point (in pixel units), i.e. the intersection of the optical axis with the retinal plane, \(f_x\) and \(f_y\) are the focal lengths expressed in pixels along the x and y directions, and s is the skew factor.

4. a transformation \(\mathrm{D}_\mathbf{d}\) that transforms an ideal undistorted pixel into a distorted pixel: \(m=\mathrm{D}_\mathbf{d}(\tilde{m})\).

   This transformation depends on several distortion parameters (Footnote 8). The number of distortion parameters (typically from 1 to 7) depends on the distortion model used [26]. In what follows, we assume that a 3-parameter distortion model is used (3rd-order radial distortion model).
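The four steps above can be sketched in Python, one function per transformation. This is a minimal illustration, not the authors' implementation. It assumes that P projects onto a retinal plane placed at unit distance, so that the metric focal length is absorbed into \(f_x\) and \(f_y\), and it assumes a 3-coefficient radial polynomial centred on the principal point for \(\mathrm{D}_\mathbf{d}\); the exact model of [26] may differ. All function names are hypothetical.

```python
import numpy as np


def to_camera(R, t, M):
    """Step 1, L = (R, t): world point M -> camera-frame point M_c = R M + t."""
    return R @ M + t


def to_retina(M_c):
    """Step 2, P: perspective division onto the normalized retinal plane (Z = 1)."""
    return M_c[:2] / M_c[2]


def to_pixels(k, m_r):
    """Step 3, K_k: retinal point -> undistorted pixel, k = (cx, cy, fx, fy, s)."""
    cx, cy, fx, fy, s = k
    x, y = m_r
    return np.array([fx * x + s * y + cx, fy * y + cy])


def distort(k, d, m_tilde):
    """Step 4, D_d (assumed form): radial polynomial in r^2 about (cx, cy)."""
    c = np.asarray(k[:2], dtype=float)
    delta = m_tilde - c
    r2 = delta @ delta
    return c + (1.0 + d[0] * r2 + d[1] * r2**2 + d[2] * r2**3) * delta


def project(k, d, R, t, M):
    """Full camera model m = D_d(K_k(P(L(M))))."""
    return distort(k, d, to_pixels(k, to_retina(to_camera(R, t, M))))


# A point 1000 units in front of an ideal camera (no rotation, no skew, no distortion).
m = project([320.0, 240.0, 800.0, 800.0, 0.0], [0.0, 0.0, 0.0],
            np.eye(3), np.array([0.0, 0.0, 1000.0]), np.array([10.0, -5.0, 0.0]))
print(m)  # [328. 236.]
```

Because r is measured in pixels in this sketch, realistic distortion coefficients are very small numbers; working in normalized retinal coordinates is a common alternative.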

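The composition of these four transformations, \(\mathrm{D}_\mathbf{d} \circ \mathbf{K}_\mathbf{k} \circ P \circ L\), leads to the following equation that relates a 3D point M in space to its 2D projection m onto the camera sensor array (pixel coordinates):

\[ m = \mathrm{D}_\mathbf{d}\Big(\mathbf{K}_\mathbf{k}\big(P(L(M))\big)\Big) \qquad (5) \]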
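Leaving distortion aside (d = 0, so that \(m = \tilde{m}\)), steps 1 to 3 reduce to the standard pinhole relation, which is linear in homogeneous coordinates. It is shown here under the assumption that P projects onto a retinal plane at unit distance, so that \(f_x\) and \(f_y\) carry the focal length in pixels; \(\lambda\) is a scale factor equal to the depth of \(M_c\):

\[
\lambda \begin{pmatrix} \tilde{u} \\ \tilde{v} \\ 1 \end{pmatrix}
= \begin{pmatrix} f_x & s & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{pmatrix}
\left[\, R \mid t \,\right]
\begin{pmatrix} M \\ 1 \end{pmatrix},
\qquad \tilde{m} = (\tilde{u}, \tilde{v})^\top
\]

The upper-triangular matrix is the matrix form of \(\mathbf{K}_\mathbf{k}\); it is the distortion \(\mathrm{D}_\mathbf{d}\) that makes the full model non-linear.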

Calibrating the camera consists of determining its parameters k, d, R and t. The experimental calibration procedure generally uses a calibration target that provides known 3D points. In our work, the calibration is performed by acquiring a series of n images of a planar calibration target made up of p circular points, observed at different positions and orientations (see Fig. 17). Thus, the calibration target provides a set of p known 3D points.

We call \(m_i^j\) the 2D projection onto the camera image plane of the jth 3D point \(M_j\) (j = 1…p) in the ith view (i = 1…n). We define a set of localization matrices \(L_i = (R_i, t_i)\) (i = 1…n) that represent the ith position of the calibration target with respect to the camera reference frame (see Fig. 17).

The parameters are estimated in the least-squares sense, which leads to a non-linear optimization problem: the sum of the squared 2D Euclidean distances between the estimated projection of the jth point of the ith view onto the camera (computed with equation (5)) and the actual image-point \(m_i^j\) extracted in the ith image is minimized:

\[ \min_{\theta} \sum_{i=1}^{n} \sum_{j=1}^{p} \left\| m_i^j - \mathrm{D}_\mathbf{d}\Big(\mathbf{K}_\mathbf{k}\big(P(L_i(M_j))\big)\Big) \right\|^2 \]

where \(\theta = (k, d, R_1 \ldots R_n, t_1 \ldots t_n, M_1 \ldots M_p)\) is the vector of the \(8 + 6n + 3p\) parameters estimated during the minimization (5 intrinsic parameters, 3 distortion parameters, 6 pose parameters per view and 3 coordinates per target point). Note that there are \(2np\) equations (each 3D point projection gives two relations). With a judicious choice of n and p, there are more equations than unknowns, i.e. \(2np > 8 + 6n + 3p\) (over-determined system).
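The least-squares criterion above can be made concrete with a Python sketch that unpacks θ (size \(8 + 6n + 3p\)) and stacks the \(2np\) residuals between the observed image-points \(m_i^j\) and their predicted projections. Assumptions, all labeled here rather than taken from the paper: each \(R_i\) is parameterized by a 3-component rotation vector converted with Rodrigues' formula, P projects onto a retinal plane at unit distance, and \(\mathrm{D}_\mathbf{d}\) is a 3-coefficient radial polynomial centred on the principal point. The function names are hypothetical.

```python
import numpy as np


def rodrigues(rvec):
    """Rotation matrix from a rotation vector (axis * angle), Rodrigues' formula."""
    angle = np.linalg.norm(rvec)
    if angle < 1e-12:
        return np.eye(3)
    kx, ky, kz = rvec / angle
    S = np.array([[0.0, -kz, ky], [kz, 0.0, -kx], [-ky, kx, 0.0]])  # skew matrix of the axis
    return np.eye(3) + np.sin(angle) * S + (1.0 - np.cos(angle)) * (S @ S)


def project_point(k, d, R, t, M):
    """m = D_d(K_k(P(L(M)))) with an assumed radial distortion about (cx, cy)."""
    cx, cy, fx, fy, s = k
    X, Y, Z = R @ M + t                      # L: world -> camera frame
    x, y = X / Z, Y / Z                      # P: normalized retinal plane
    u, v = fx * x + s * y + cx, fy * y + cy  # K_k: undistorted pixel
    du, dv = u - cx, v - cy
    r2 = du * du + dv * dv
    factor = 1.0 + d[0] * r2 + d[1] * r2**2 + d[2] * r2**3  # D_d (assumed form)
    return np.array([cx + factor * du, cy + factor * dv])


def unpack(theta, n, p):
    """Split theta = (k, d, r_1..r_n, t_1..t_n, M_1..M_p), of size 8 + 6n + 3p."""
    k, d = theta[0:5], theta[5:8]
    rvecs = theta[8:8 + 3 * n].reshape(n, 3)
    tvecs = theta[8 + 3 * n:8 + 6 * n].reshape(n, 3)
    points = theta[8 + 6 * n:8 + 6 * n + 3 * p].reshape(p, 3)
    return k, d, rvecs, tvecs, points


def reprojection_residuals(theta, observed, n, p):
    """Stack m_i^j minus its predicted projection for all views i and points j.

    observed has shape (n, p, 2); the result has length 2np and its squared
    norm is the least-squares criterion minimized over theta.
    """
    k, d, rvecs, tvecs, points = unpack(np.asarray(theta, dtype=float), n, p)
    res = []
    for i in range(n):
        R = rodrigues(rvecs[i])
        for j in range(p):
            res.append(observed[i, j] - project_point(k, d, R, tvecs[i], points[j]))
    return np.concatenate(res)
```

When θ holds the true parameters of noise-free synthetic data, every residual is zero; with real images, the residual norm after minimization is the reprojection error used to judge the calibration.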

The estimation of the camera intrinsic parameters k and d, the extrinsic parameters \(R_i\) and \(t_i\) of each view, and the 3D points \(M_j\) of the calibration target is a well-known problem referred to as Bundle Adjustment [25, 29]. It is solved using the Levenberg-Marquardt algorithm. For the algorithm to converge, an initial guess of the parameters is required. An initial guess of the 5 intrinsic parameters (vector k) and of the extrinsic parameters of each view (\(R_i\) and \(t_i\)) is computed using the closed-form solution described in [30] or [31]. The distortion parameters (vector d) are simply initialized to 0. An initial guess of the 3D points \(M_j\) is given by the user-supplied model of the target. As the points of the target are re-estimated, this initial model need not be accurate, which is a great advantage of this calibration method.
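The Levenberg-Marquardt iteration mentioned above can be sketched as a small, dense Python loop. This is a textbook variant with identity damping and a forward-difference Jacobian, not the solver used by the authors; the function names are hypothetical, and it is demonstrated on a toy exponential fit rather than on calibration data.

```python
import numpy as np


def numerical_jacobian(fun, theta, eps=1e-7):
    """Forward-difference Jacobian of the residual vector fun(theta)."""
    r0 = fun(theta)
    J = np.empty((r0.size, theta.size))
    for col in range(theta.size):
        h = eps * max(1.0, abs(theta[col]))
        step = np.zeros_like(theta)
        step[col] = h
        J[:, col] = (fun(theta + step) - r0) / h
    return J


def levenberg_marquardt(fun, theta0, mu=1e-3, max_iter=200, xtol=1e-12):
    """Minimize ||fun(theta)||^2 starting from the initial guess theta0."""
    theta = np.asarray(theta0, dtype=float).copy()
    r = fun(theta)
    cost = r @ r
    for _ in range(max_iter):
        J = numerical_jacobian(fun, theta)
        # Damped normal equations: (J^T J + mu I) delta = -J^T r
        delta = np.linalg.solve(J.T @ J + mu * np.eye(theta.size), -J.T @ r)
        trial = theta + delta
        r_trial = fun(trial)
        cost_trial = r_trial @ r_trial
        if cost_trial < cost:
            # Accept the step and move towards Gauss-Newton behaviour.
            theta, r, cost = trial, r_trial, cost_trial
            mu = max(mu * 0.1, 1e-12)
            if np.linalg.norm(delta) <= xtol * (np.linalg.norm(theta) + xtol):
                break
        else:
            # Reject the step and move towards (short) gradient-descent steps.
            mu *= 10.0
    return theta, cost


# Toy problem: recover (a, b) in y = a * exp(b * x) from the guess (1, 0).
x = np.linspace(0.0, 1.0, 20)
y = 2.0 * np.exp(-1.5 * x)
theta, cost = levenberg_marquardt(lambda th: th[0] * np.exp(th[1] * x) - y, [1.0, 0.0])
```

In a calibration setting, `fun` would return the stacked reprojection residuals of the criterion above as a function of θ, started from the closed-form initial guess with d = 0. A dense solver like this one scales poorly with n and p; a sparse implementation such as the SBA package [20] exploits the block structure of the Jacobian instead.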

About this article

Cite this article

Orteu, JJ., Bugarin, F., Harvent, J. et al. Multiple-Camera Instrumentation of a Single Point Incremental Forming Process Pilot for Shape and 3D Displacement Measurements: Methodology and Results. Exp Mech 51, 625–639 (2011). https://doi.org/10.1007/s11340-010-9436-1

DOI: https://doi.org/10.1007/s11340-010-9436-1