Abstract

The key assumption of conditional independence of item responses given latent ability in item response theory (IRT) models is addressed for multistage adaptive testing (MST) designs. Routing decisions in MST designs can cause patterns in the data that are not accounted for by the IRT model. This phenomenon relates to quasi-independence in log-linear models for incomplete contingency tables and impacts certain types of statistical inference based on assumptions on observed and missing data. We demonstrate that generalized residuals for item pair frequencies under IRT models as discussed by Haberman and Sinharay (J Am Stat Assoc 108:1435–1444, 2013. https://doi.org/10.1080/01621459.2013.835660) are inappropriate for MST data without adjustments. The adjustments are dependent on the MST design, and can quickly become nontrivial as the complexity of the routing increases. However, the adjusted residuals are found to have satisfactory Type I errors in a simulation and illustrated by an application to real MST data from the Programme for International Student Assessment (PISA). Implications and suggestions for statistical inference with MST designs are discussed.

Similar content being viewed by others

Notes

Bayesian inference is not considered in this paper.

For example, averaged bias for the item intercepts is computed as \(R^{-1}\sum _{r=1}^R J^{-1}\sum _{j=1}^J {\hat{\beta }}_{jr} - \beta _{jr}\).

Although we distinguish between low- and high-difficulty modules, there generally is considerable overlap due to the variation of item difficulties within units.

Note that we are not at liberty to share the content of the units.

References

Ali, U. S., Shin, H. J., & van Rijn, P. W. (in press). Applicability of traditional statistical methods to multistage test data. In D. Yan & A. von Davier (Eds.), Research for practical issues and solutions in computerized multistage testing. Taylor and Francis.

Berger, M. P. (1992). Sequential sampling designs for the two-parameter item response theory model. Psychometrika, 57, 521–538.

Bishop, Y. M., Fienberg, S. E., & Holland, P. W. (2007). Discrete multivariate analysis: Theory and practice. Springer.

Bock, R. D. (1972). Estimating item parameters and latent ability when responses are scored in two or more nominal categories. Psychometrika, 37, 29–51.

Bock, R. D., & Aitkin, M. (1981). Marginal maximum likelihood estimation of item parameters: Application of an EM algorithm. Psychometrika, 46, 443–459.

Cai, L., & Hansen, M. (2013). Limited-information goodness-of-fit testing of hierarchical item factor models. British Journal of Mathematical and Statistical Psychology, 66, 245–276.

Chalmers, R. (2012). mirt: A multidimensional item response theory package for the R environment. Journal of Statistical Software, 48(6), 1–29.

Chen, W.-H., & Thissen, D. (1997). Local dependence indexes for item pairs using item response theory. Journal of Educational and Behavioral Statistics, 22, 265–289.

Christensen, K. B., Makransky, G., & Horton, M. (2017). Critical values for Yen’s Q3: Identification of local dependence in the Rasch model using residual correlations. Applied Psychological Measurement, 41(3), 178–194.

Eggen, T. J. H. M., & Verhelst, N. D. (2011). Item calibration in incomplete testing designs. Psicológica, 32(1), 107–132.

Gibbons, R. D., & Hedeker, D. (1992). Full-information item bi-factor analysis. Psychometrika, 57, 423–436. https://doi.org/10.1007/BF02295430

Glas, C. A. W. (1988). The Rasch model and multistage testing. Journal of Educational Statistics, 13, 45–52.

Glas, C. A. W. (1989). Contributions to estimating and testing rasch models (Unpublished doctoral dissertation). University of Twente.

Goodman, L. A. (1968). The analysis of cross-classified data: Independence, quasi-independence, and interactions in contingency tables with or without missing entries. Journal of the American Statistical Association, 63, 1091–1131.

Haberman, S. J. (2007). The interaction model. In M. von Davier & C. H. Carstensen (Eds.), Multivariate and mixture distribution Rasch models (pp. 201–216). Springer.

Haberman, S. J. (2013). A general program for item-response analysis that employs the stabilized Newton–Raphson algorithm (ETS Research Report RR-13-32). https://doi.org/10.1002/j.2333-8504.2013.tb02339.x

Haberman, S. J., & Sinharay, S. (2013). Generalized residuals for general models for contingency tables with application to item response theory. Journal of the American Statistical Association, 108, 1435–1444. https://doi.org/10.1080/01621459.2013.835660

Haberman, S. J., Sinharay, S., & Chon, K. H. (2013). Assessing item fit for unidimensional item response theory models using residuals from estimated item response functions. Psychometrika, 78, 417–440. https://doi.org/10.1007/s11336-012-9305-1

Haberman, S. J., & von Davier, A. A. (2014). Considerations on parameter estimation, scoring, and linking in multistage testing. In D. Yan, A. A. von Davier, & C. Lewis (Eds.), Computerized multistage testing: Theory and applications (pp. 229–248). CRC Press.

Houts, C. R., & Cai, L. (2016). flexMIRT: user manual version 3.5: Flexible multilevel multidimensional item analysis and test scoring. Vector Psychometric Group.

Ip, E. H. (2002). Locally dependent latent trait model and the Dutch identity revisited. Psychometrika, 67, 367–386.

Jewsbury, P. A., & van Rijn, P. W. (2020). IRT and MIRT models for item parameter estimation with multidimensional multistage tests. Journal of Educational and Behavioral Statistics, 45, 383–402.

Joe, H., & Maydeu-Olivares, A. (2010). A general family of limited information goodnessof- fit statistics for multinomial data. Psychometrika, 75, 393–419.

Johnson, E. G. (1992). The design of the national assessment of educational progress. Journal of Educational Measurement, 29(2), 95–110.

Kelderman, H., & Rijkes, C. P. M. (1994). Loglinear multidimensional IRT models for polytomously scored items. Psychometrika, 59(2), 149–176.

Kolen, M., & Brennan, R. (2004). Test equating, scaling, and linking: Methods and practices. Springer.

Liu, Y., & Maydeu-Olivares, A. (2013). Local dependence diagnostics in IRT modeling of binary data. Educational and Psychological Measurement, 73, 254–274.

Lord, F. M., & Novick, M. R. (1968). Statistical theories of mental test scores. Addison- Wesley.

Lord, F. M., & Wingersky, M. S. (1984). Comparison of IRT true-score and equipercentile observed-score “equatings’’. Applied Psychological Measurement, 8, 453–461.

Louis, T. (1982). Finding the observed information matrix when using the EM algorithm. Journal of the Royal Statistical Society Series B (Methodological), 44, 226–233. https://doi.org/10.2307/2345828

Maydeu-Olivares, A., & Joe, H. (2005). Limited- and full-information estimation and goodness-of-fit testing in 2n contingency tables: A unified framework. Journal of the American Statistical Association, 100, 1009–1020.

McDonald, R. P. (1999). Test theory: A unified treatment. Lawrence Erlbaum.

Messick, S., Beaton, A., & Lord, F. (1983). National assessment of educational progress reconsidered: A new design for a new era (Tech. Rep.).

Mislevy, R. J., & Chang, H. H. (2000). Does adaptive testing violate local independence? Psychometrika, 65(2), 149–156.

Mislevy, R. J., & Wu, P.-K. (1996). Missing responses and IRT ability estimation: Omits, choice, time limits, and adaptive testing (ETS Research Report RR-96-30). Educational Testing Service.

Monseur, C., Baye, A., Lafontaine, D., & Quittre, V. (2011). PISA test format assessment and the local independence assumption. IERI Monographs Series: Issues and Methodologies in Large-Scale Assessments, 4.

Naylor, J. C., & Smith, A. F. M. (1982). Applications of a method for efficient computation of posterior distributions. Applied Statistics, 31, 214–225. https://doi.org/10.2307/2347995

Nikoloulopoulos, A. K., & Joe, H. (2015). Factor copula models for item response data. Psychometrika, 80(1), 126–150.

Pommerich, M., & Segall, D. O. (2008). Local dependence in an operational CAT: Diagnosis and implications. Journal of Educational Measurement, 45(3), 201–223.

R Core Team. (2019). R: A language and environment for statistical computing [Computer software manual]. Retrieved from https://www.R-project.org/

Reckase, M. D. (2009). Multidimensional item response theory. Springer.

Reiser, M. (1996). Analysis of residuals for the multinomial item response model. Psychometrika, 61, 509–528.

Robin, F., Steffen, M., & Liang, L. (2014). The multistage test implementation of the GRE revised general test. In D. Yan, A. A. von Davier, & C. Lewis (Eds.), Computerized multistage testing: Theory and applications (pp. 325–341). CRC Press.

Rubin, D. B. (1976). Inference and missing data. Biometrika, 63(3), 581–592.

Tjur, T. (1982). A connection between Rasch’s item analysis model and a multiplicative poisson model. Scandinavian Journal of Statistics, 9, 23–30.

van Rijn, P. W., Sinharay, S., Haberman, S. J., & Johnson, M. S. (2016). Assessment of fit of item response theory models used in large-Scale educational survey assessments. Large-scale Assessments in Education. https://doi.org/10.1186/s40536-016-0025-3

Verhelst, N. D., & Verstralen, H. H. F. M. (2008). Some considerations on the partial credit model. Psicologica, 29, 229–254.

von Davier, M., Yamamoto, K., Shin, H. J., Chen, H., Khorramdel, L., Weeks, J., & Kandathil, M. (2019). Evaluating item response theory linking and model fit for data from PISA 2000–2012. Assessment in Education: Principles, Policy & Practice, 26(4), 466–488.

Wainer, H., Bradlow, E., & Wang, X. (2007). Testlet response theory and its applications. Cambridge University Press.

Wainer, H., & Thissen, D. (1996). How is reliability related to the quality of test scores? What is the effect of local dependence on reliability? Educational Measurement: Issues and Practice, 15(1), 22–29.

Warm, T. A. (1989). Weighted likelihood estimation of ability in item response theory. Psychometrika, 54, 427–450. https://doi.org/10.1007/BF02294627

Woods, C. M. (2015). Estimating the latent density in unidimensional IRT to permit nonnormality. In S. P. Reise & D. A. Revicki (Eds.), Handbook of item response theory modeling: Applications to typical performance assessment (pp. 60–84). Routledge.

Yamamoto, K., Shin, H. J., & Khorramdel, L. (2019). Introduction of multistage adaptive testing design in PISA 2018 (OECD Education Working Papers No. 209). https://doi.org/10.1787/b9435d4b-en

Yen, W. M. (1984). Effect of local item dependence on the fit and equating performance of the three-parameter logistic model. Applied Psychological Measurement, 8(2), 125–145.

Yen, W. M. (1993). Scaling performance assessments: Strategies for managing local item dependence. Journal of Educational Measurement, 30, 187–213.

Zenisky, A. L., Hambleton, R. K., & Sireci, S. G. (2001). Effects of local item dependence on the validity of IRT item, test, and ability statistics. (MCAT-5). https://doi.org/10.1002/j.2333-8504.2006.tb02009.x

Zhang, J. (2013). A procedure for dimensionality analyses of response data from various test designs. Psychometrika, 78(1), 37–58.

Zwitser, R. J., & Maris, G. (2015). Conditional statistical inference with multistage testing designs. Psychometrika, 80(1), 65–84.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A: R Code

In this appendix, R code to compute the adjusted residuals is presented. First, we need the item-response functions for the 2PL:

Next, the Lord-Wingersky algorithm is needed (code works for dichotomous items only):

Then, we can create a function for the adjusted residuals for the basic MST design, which is shown below. To compute the adjusted residuals, several pieces of output from the MIRT program (Haberman, 2013) are needed. These are the estimated item parameters, the individual gradients, the individual posterior distributions of \(\theta \), and the estimated asymptotic covariance matrix of item parameters. Since the MIRT program can write each piece of output to a separate csv-file, it is straightforward to obtain them and read them into R (see the manual on https://github.com/EducationalTestingService/MIRT).

Appendix B: Additional Simulation Results

Table 3 shows the mean results with respect to recovery of item parameters over 200 replications. The table displays the mean and standard deviation (SD) of average bias and RMSE of intercept and slope parameters for each simulation condition. The means were computed by, for each replication, averaging the bias or RMSE across the parameters, and then averaging across replications. The SDs were computed by taking the standard deviation across replications of these means for each replication. In addition, the mean and SD of bias, RMSE, and reliability are shown for EAP ability estimates. In the last column, the logarithm of the determinant of the item parameter information matrix is shown.

Apart from the results for the complete design, which serve as a reference, the results with respect to item parameter recovery are best for the random design. For \(N=600\) and \(J=9\), there are substantial differences in bias in slope between the designs with the basic MST producing the largest bias. However, the differences in bias between the designs for the other conditions are relatively small. This could be due to the dependencies being relatively larger for smaller J in the basic MST design. For the RMSE, the random design produces the smallest values, although the results for the balanced MST design are generally close. The observation that the random design and the balanced MST design produce similar item parameter recovery is supported by the similar values for the log determinant of the item parameter information matrix. For the basic MST, the results for the intercept seem somewhat surprising, but the intercept parameter should not be confused with the difficulty parameter. That is, the adaptivity targets the difficulty, not the intercept.

With respect to ability estimation, differences in bias between the designs are negligible. For the RMSE and reliability, apart from the complete design, the basic and balanced MST designs produce the best values. In these simulations, the balanced MST design seems to have the best of both worlds in terms item and ability parameter recovery.

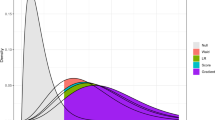

Figure 7 shows the distribution of the \(Q_3\) statistic across all item pairs under the different designs with \(N=6000\) and \(J=45\) using either the posterior or the WLE (Warm, 1989). For the posterior-based \(Q_3\), there appears to be a negative bias, which is claimed to be approximately \(-1/(J-1)\) (Yen, 1993) but appears to be slightly closer to zero here. For the WLE-based \(Q_3\), this bias does not seem to appear for complete and random designs. The basic MST design does seem to result in a small negative bias.

Although the above \(Q_3\) distributions are not that strange, there are interactions between how the \(Q_3\) is computed and which design is used. These can be revealed using heatmaps for the average \(Q_3\) across replications for item pairs, which are shown in Fig. 8. The top row shows the heatmap when sorted by item difficulty and the bottom row shows the heatmap when sorted by module in the basic MST. Clearly, the \(Q_3\) computation and the design interact, although the range of these average \(Q_3\) statistics is actually not that large (see the legend).

Table 4 shows summary statistics of the distribution of the \(M_2\) statistic in the conditions with \(N=6000\) and \(J=45\) as computed by the R package mirt (Chalmers, 2012). Since the mirt package can only compute the statistic for complete data, we only show results based on items in the routing and easy modules for the complete design and the basic MST design (so that \(J=30\)).

Figures 9 and 10 show QQ plots of the generalized residuals for item pair frequencies under the different designs for the two conditions with \(N=600\) and \(N=6000\) with \(J=9\). The QQ plots for the other two conditions with \(J=45\) are not shown as the images are very large.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

van Rijn, P.W., Ali, U.S., Shin, H.J. et al. Adjusted Residuals for Evaluating Conditional Independence in IRT Models for Multistage Adaptive Testing. Psychometrika (2023). https://doi.org/10.1007/s11336-023-09935-4

Received:

Published:

DOI: https://doi.org/10.1007/s11336-023-09935-4