Abstract

Graph-based causal models are a flexible tool for causal inference from observational data. In this paper, we develop a comprehensive framework to define, identify, and estimate a broad class of causal quantities in linearly parametrized graph-based models. The proposed method extends the literature, which mainly focuses on causal effects on the mean level and the variance of an outcome variable. For example, we show how to compute the probability that an outcome variable realizes within a target range of values given an intervention, a causal quantity we refer to as the probability of treatment success. We link graph-based causal quantities defined via the do-operator to parameters of the model implied distribution of the observed variables using so-called causal effect functions. Based on these causal effect functions, we propose estimators for causal quantities and show that these estimators are consistent and converge at a rate of \(N^{-1/2}\) under standard assumptions. Thus, causal quantities can be estimated based on sample sizes that are typically available in the social and behavioral sciences. In case of maximum likelihood estimation, the estimators are asymptotically efficient. We illustrate the proposed method with an example based on empirical data, placing special emphasis on the difference between the interventional and conditional distribution.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Graph-Based Models for Causal Inference

The graph-based approach to causal inference was primarily formalized by Judea Pearl (1988, 1995, 2009) and Spirtes, Glymour, and Scheines (2001). A causal graph represents a researcher’s theory about the causal structure of the data-generating mechanism. Based on a causal graph, causal inference can be conducted using the interventional distribution, from which standard causal quantities such as average treatment effects (ATEs) can be derived. In the most general formulation, a causal graph is accompanied by a set of nonparametric structural equations. Thus, a common acronym for Pearl’s general nonparametric model is NPSEM, which stands for non-parametric structural equation model (Pearl, 2009; Shpitser, Richardson, & Robins, 2020).

Graph-based causal models share many common characteristics with the traditional literature on structural equation models (SEM) prevalent in the social and behavioral sciences and economics (Bollen & Pearl, 2013; Heckman & Pinto, 2015; Pearl, 2009, 2012). However, these two approaches also differ in several aspects including the underlying assumptions (e.g., graph-based models assume modularity), notational conventions (e.g., the meaning of bidirected edges in graphical representations), research focus (e.g., nonparametric identification in graph-based models vs. parametric estimation in traditional SEM), and standard procedures.

Graph-based procedures often focus on a single causal quantity of interest (e.g., ATE) and establishing its causal identification based on a minimal set of assumptions (e.g., without making parametric assumptions). Causal quantities are well defined via the do-operator and the resulting interventional distribution and causal identification can be established based on graphical tools such as the back-door criterion (Pearl, 1995) or a set of algebraic rules called do-calculus (Shpitser & Pearl, 2006; Tian & Pearl, 2002a). The central insights developed within the graph-based approach relate to causal identification, whereas less attention has been devoted to the estimation of causal quantities.Footnote 1

On the other hand, the traditional literature on SEM frequently assumes parametrized (often linear) models and usually focuses on identification and estimation of the entire model.Footnote 2 Causal quantities such as direct, indirect and total effects can be defined based on reduced-form equations and partial derivatives (Alwin & Hauser, 1975; Bollen, 1987; Stolzenberg, 1980). A main focus within the traditional SEM literature lies on the model implied joint distribution of observed variables and its statistical modeling. A considerable body of literature is available on model identification (Bekker, Merckens, & Wansbeek, 1994; Bollen, 1989; Fisher, 1966; Wiley, 1973) and estimation (Browne, 1984; Jöreskog, 1967; Satorra & Bentler, 1994) for parametrized SEM.

In this paper, we combine causal quantities from graph-based models with identification and estimation results from the traditional literature on linear SEM. For this purpose, we formalize the do-operator using matrix algebra in the section on “Graph-Based Causal Models with Linear Equations.” Based on this matrix representation, we derive a closed-form parametric expression of the interventional distribution and several causal quantities in the section entitled “Interventional Distribution.” Linear graph-based models imply a parametrized joint distribution of the observed variables. We define causal effect functions as a mapping from the parameters of the joint distribution of observed variables onto the causal quantities defined via the do-operator in the section entitled “Causal Effect Functions.” Methods for identifying parametrized causal quantities are discussed in the section entitled “Identification of Parametrized Causal Quantities”. Estimators of causal quantities that are consistent and converge at a rate of \(N^{-1/2}\) are proposed in the section on "Estimation of Causal Quantities." We show that the proposed estimators are asymptotically efficient in case of maximum likelihood estimation.

Our work extends the literature on traditional SEM by providing closed-form expressions of graph-based causal quantities in terms of model parameters of linear SEM. Furthermore, we extend the literature on linear graph-based models by providing a unifying estimation framework for (multivariate) causal quantities that also allows estimation of causal quantities beyond the mean and the variance. We illustrate the method using simulated data based on an empirical application and provide a thorough discussion of the differences between conditional and interventional distributions in the illustration section.

Throughout this paper, we focus on situations in which direct causal effects are functionally independent of the values of variables in the system. In other words, direct causal effects are constant. In such situations, the data-generating mechanisms can be adequately represented by linear structural equations and the use of linear graph-based causal models is justified. A priori knowledge that suggests constant direct causal effects sometimes allows identifying causal quantities that would not be identified under the more flexible assumptions of the NPSEM (see illustration section for an example). However, scientific theories that suggest constant direct causal effects might be incorrect and consequently, linear models might be misspecified. We will discuss issues related to model misspecification in the discussion section, where we will also point to future research directions.

2 Graph-Based Causal Models with Linear Equations

Linear graph-based causal models are an appropriate tool in situations in which a priori scientific knowledge suggests that each of the following statements is true:Footnote 3

-

1.

The causal ordering of observed variables and unobserved confounders is known.

-

2.

Interventions only alter the mechanisms that are directly targeted (modularity).Footnote 4

-

3.

The treatment status of a unit (e.g., person) does not affect the treatment status or the outcome of other units (no interference).

-

4.

Direct causal effects are constant across units (homogeneity).

-

5.

Direct causal effects are constant across value combinations of observed variables and unobserved error terms (no effect modification).

-

6.

Omitted direct causes as comprised in the error terms follow a multivariate normal distribution.Footnote 5

The first three assumptions listed above are generic to the graph-based approach to causal inference and need to hold in its most general nonparametric formulation. Assumptions 4 and 5 justify the use of linear structural equations. Assumptions 6 justifies the use of multivariate normally distributed error terms. We further assume that variables are measured on a continuous scale and are observed without measurement error. Throughout this paper, we assume that the model is correctly specified. In the discussion section, we briefly point to the literature on statistical tests of model assumptions and methods for analyzing the sensitivity of causal conclusions with respect to violations of untestable assumptions. Furthermore, we briefly discuss possible ways to relax the model assumptions (e.g., measurement errors, unobserved heterogeneity, effect modification, excess kurtosis in the error terms).

A linear graph-based causal models over the set \({\mathcal {V}}=\{V_1,...,V_n\}\) of observed variables are defined by the following set of equations (Brito & Pearl, 2006, p.2):Footnote 6

We assume that all variables are deviations from their means and no intercepts are included in Eq. (1). A nonzero structural coefficient (\(c_{ji}\ne 0\)) expresses the assumption that \(V_i\) has a direct causal influence on \(V_j\). Restricting a structural coefficient to zero (\(c_{ji}=0\)) indicates the assumption that \(V_i\) has no direct causal effect on \(V_j\). The parameter \(c_{ji}\) quantifies the magnitude of a direct effect. The \(q \times 1\) parameter vector \({\varvec{\theta }}_{{\mathcal {F}}}\in {\varvec{\Theta }}_{{\mathcal {F}}}\subseteq {\mathbb {R}}^q\) contains all distinct, functionally unrelated and unknown structural coefficients \(c_{ji}\). \({\varvec{\Theta }}_{{\mathcal {F}}}\) denotes the parameter space, and it is a subspace of the q-dimensional Euclidean space. Restating Eqs. (1) in matrix notation yields:

The \(n \times n\) identity matrix is denoted as \({\mathbf{I}}_n\). The \(n \times n\) matrix of structural coefficients is denoted as \({\mathbf {C}}\), and we sometimes use the notation \({\mathbf {C}}({\varvec{\theta }}_{{\mathcal {F}}})\) to emphasize that \({\mathbf {C}}\) is a function of \({\varvec{\theta }}_{{\mathcal {F}}}\). We restrict our attention to recursive systems for which the variables \({\mathbf {V}}\) can be ordered in such a way that the matrix \({\mathbf {C}}\) is strictly lower triangular (which ensures the existence of the inverse in Eq. (2); Bollen, 1989). The set of error terms is denoted by \({\mathcal {E}}=\{\varepsilon _1,...,\varepsilon _n\}\). Each error term \(\varepsilon _i\), \(i=1,...,n\), comprises variables that determine the level of \(V_i\) but are not explicitly included in the model. Typically the following assumptions (or a subset thereof) are made (Brito & Pearl, 2002; Kang & Tian, 2009; Koster, 1999):

-

(a)

\(\mathrm {E}({\varvec{\varepsilon }})={\mathbf {0}}_n\), where \({\mathbf {0}}_n\) is an \(n\times 1\) vector that contains only zeros.

-

(b)

\(\mathrm {E}({\varvec{\varepsilon }}{\varvec{\varepsilon }}^\intercal )={\varvec{\Psi }}\), where the \(n\times n\) matrix \({\varvec{\Psi }}\) is finite, symmetric and positive definite.

-

(c)

\({\varvec{\varepsilon }}\sim {{N}}_n({\mathbf {0}}_n,{\varvec{\Psi }})\), where \({{N}}_n\) denotes the n-dimensional normal distribution.

A nonzero covariance \(\psi _{ij}\) indicates the existence of an unobserved common cause of the variables \(V_i\) and \(V_j\). The \(p \times 1\) parameter vector \({\varvec{\theta }}_{{\mathcal {P}}}\in {\varvec{\Theta }}_{{\mathcal {P}}}\subseteq {\mathbb {R}}^p\) contains all distinct, functionally unrelated and unknown parameters from the error term distribution. \({\varvec{\Theta }}_{{\mathcal {P}}}\) denotes the parameter space, and it is a subspace of the p-dimensional Euclidean space. We sometimes use the notation \({\varvec{\Psi }}({\varvec{\theta }}_{{\mathcal {P}}})\) to emphasize that \({\varvec{\Psi }}\) is a function of \({\varvec{\theta }}_{{\mathcal {P}}}\). The resulting model implied joint distribution of the observed variables is denoted by \(\{P({\mathbf {v}},{\varvec{\theta }})\mid {\varvec{\theta }} \in {\varvec{\Theta }}\}\), where \({\varvec{\Theta }}={\varvec{\Theta }}_{{\mathcal {F}}}\times {\varvec{\Theta }}_{{\mathcal {P}}}\), and P is the family of n-dimensional multivariate normal distributions.

The graph \({\mathcal {G}}\) is constructed by drawing a directed edge from \(V_i\) pointing to \(V_j\) if and only if the corresponding coefficient is not restricted to zero (i.e., \(c_{ji}\ne 0\)). A bidirected edge between vertices \(V_i\) and \(V_j\) is drawn if and only if \(\psi _{ij}\ne 0\) (bidirected edges are often drawn using dashed lines). The absence of a bidirected edge between \(V_i\) and \(V_j\) reflects the assumption that there is no unobserved variable that has a direct causal effect on both \(V_i\) and \(V_j\) (no unobserved confounding).Footnote 7 For recursive systems, the resulting graph belongs to the class of acyclical directed mixed graphs (ADMG), whereas mixed refers to the fact that graphs in this class contain directed edges as well as bidirected edges (Richardson, 2003; Shpitser, 2018). An example model with \(n=6\) variables and the corresponding causal graph is introduced in the illustration section.

At the heart of the graph-based approach to causal inference lies a hypothetical experiment in which the values of a subset of observed variables are controlled by an intervention. This exogenous intervention is formally denoted via the do-operator, namely \(do({\mathbf {x}})\), where \({\mathbf {x}}\) denotes the interventional levels and \({\mathcal {X}}\subseteq {\mathcal {V}}\) denotes the subset of variables that are controlled by the experimenter. The system of equation under the intervention \(do({\mathbf {x}})\) is obtained from the original system by replacing the equation for each variable \(V_i\in {\mathcal {X}}\) (i.e., for each variable that is subject to the \(do({\mathbf {x}})\)-intervention) with the equation  , where

, where  is a constant interventional level (Pearl, 2009; Spirtes et al., 2001). Note that the \(do({\mathbf {x}})\)-intervention does not alter the equations for variables that are not subject to intervention, an assumption known as autonomy or modularity (Pearl, 2009; Peters, Janzing, & Schölkopf, 2017; Spirtes et al., 2001).

is a constant interventional level (Pearl, 2009; Spirtes et al., 2001). Note that the \(do({\mathbf {x}})\)-intervention does not alter the equations for variables that are not subject to intervention, an assumption known as autonomy or modularity (Pearl, 2009; Peters, Janzing, & Schölkopf, 2017; Spirtes et al., 2001).

The probability distribution of the variables \({\mathbf {V}}\) one would observe had the intervention \(do({\mathbf {x}})\) been uniformly applied to the entire population is called the interventional distribution, and it is denoted as \(P({\mathbf {V}}\mid do({\mathbf {x}}))\).Footnote 8 The interventional distribution differs formally and conceptually from the conditional distribution \(P({\mathbf {V}}\mid {\mathbf {X}}={\mathbf {x}})\). The former describes a situation where the data-generating mechanism has been altered by an external \(do({\mathbf {x}})\)-type intervention in an (hypothetical) experiment. The latter describes a situation where the data-generating mechanism of \({\mathbf {V}}\) has not been altered, but the evidence \({\mathbf {X}}={\mathbf {x}}\) about the values of a subset of variables \({\mathcal {X}}\subseteq {\mathcal {V}}\) is available. These differences will be further discussed in the illustration section (see also, e.g., Gische, West, & Voelkle, 2021; Pearl, 2009).

In the remainder of this section, we translate the changes in the data-generating mechanism induced by the \(do({\mathbf {x}})\)-intervention into matrix notation (see Hauser and Bühlmann (2015) for a similar approach). The following definition introduces the required notation.

Definition 1

(interventions in linear graph-based models)

-

1.

Variables \({\mathcal {X}}\subseteq {\mathcal {V}}\) are subject to an external intervention, where \(|{\mathcal {X}}|=K_x \le n\) denotes the set size. The \(K_x\times 1\) vector of interventional levels is denoted by \({\mathbf {x}}\). The external intervention is denoted by \(do({\mathbf {x}})\).

-

2.

Let \({\mathcal {I}} \subseteq \{1,2,...,n\},\, |{\mathcal {I}}|=K_x\) denote the index set of variables that are subject to intervention. The index set of all variables that are not subject to intervention is denoted by \({\mathcal {N}}\), namely \({\mathcal {N}}:=\{1,2,...,n\}\setminus {\mathcal {I}},\, |{\mathcal {N}}|=n-K_x\), where the operator \(\setminus \) denotes the set complement.

-

3.

Let \(\varvec{{{\imath }}}_i \in {\mathbb {R}}^n\) be the i-th unit vector, namely a (column) vector with entry 1 on the i-th component and zeros elsewhere. The \(n \times K_x\) matrix \({\mathbf {1}}_{{\mathcal {I}}}:=(\varvec{{{\imath }}}_i)_{i\in {\mathcal {I}}}\) contains all unit vectors with an interventional index. The \(n \times (n-K_x)\) matrix \({\varvec{1}}_{{\mathcal {N}}}\) is defined analogously, namely \({\mathbf {1}}_{{\mathcal {N}}}:=(\varvec{{{\imath }}}_i)_{i\in {\mathcal {N}}}\). The matrices \({\mathbf {1}}_{{\mathcal {I}}}\) and \({\mathbf {1}}_{{\mathcal {N}}}\) are called selection matrices.

-

4.

Let \({\mathbf{I}}_ {{\mathcal {N}}}\) be an \(n \times n\) diagonal matrix with zeros and ones as diagonal values. The i-th diagonal value is equal to one if \(i \in {\mathcal {N}}\) and zero otherwise.

Note that all of the elements of the matrices \({\mathbf {1}}_{{\mathcal {I}}},{\mathbf {1}}_{{\mathcal {N}}},\) and \({\mathbf{I}}_ {{\mathcal {N}}}\) are either zero or unity. The variables \({\mathbf {V}}\) in a linear graph-based model under the intervention \(do({\mathbf {x}})\) are determined by the following set of structural equations:Footnote 9

The corresponding interventional reduced form equation is given by:

The matrix \({\mathbf{I}}_ {{\mathcal {N}}}{\mathbf {C}}\) is obtained from \({\mathbf {C}}\) by replacing its rows with interventional indexes by rows of zeros, and consequently \(({\mathbf{I}}_ {n}-{\mathbf{I}}_ {{\mathcal {N}}}{\mathbf {C}})\) is non-singular. Equation (4) states that \({\mathbf {V}}\mid do({\mathbf {x}})\) is a linear transformation of the random vector \({\varvec{\varepsilon }}\). The corresponding transformation matrix is labeled as \({\mathbf {T}}_1\), and the additive constant is \({\mathbf {a}}_1{\mathbf {x}}\).

The target quantity of interest is the interventional distribution of those variables that are not subject to intervention, denoted by \({\mathbf {V}}_{{\mathcal {N}}}\). The reduced form equation of all non-interventional variables is given by:

Important characteristics of the distribution of a linear transformation of a random vector depend on the rank of the transformation matrix.

Lemma 2

(rank of transformation matrices) The \(n \times n\) transformation matrix \({\mathbf {T}}_1:=({\mathbf{I}}_n-{\mathbf{I}}_ {{\mathcal {N}}}{\mathbf {C}})^{-1}{\mathbf{I}}_ {{\mathcal {N}}}\) has reduced rank \(n-K_x\). The \((n-K_x)\times n\) transformation matrix \({\mathbf {T}}_2:={\mathbf {1}}_{{\mathcal {N}}}^{\intercal }({\mathbf{I}}_n-{\mathbf{I}}_ {{\mathcal {N}}}{\mathbf {C}})^{-1}{\mathbf{I}}_ {{\mathcal {N}}}\) has full row rank \(n-K_x\).

Proof

See Appendix. \(\square \)

Based on the reduced form equations, we derive the interventional distribution and its features in the following section.

3 Interventional Distribution

Combining the reduced form stated in Eq. (4) with the assumptions on the first- and second-order moments of the error term distribution yields the following moments of the interventional distribution:

The results are obtained via a direct application of the rules for the computation of moments of linear transformations of random variables. Note that these results do not require multivariate normality of the error terms. The interventional mean vector is functionally dependent on the vector of interventional levels \({\mathbf {x}}\), whereas the interventional covariance matrix is functionally independent of \({\mathbf {x}}\). The interventional distribution in linear graph-based models with multivariate normal error terms is given as:

Result 3

(interventional distribution for Gaussian linear graph-based models)

Proof

Both results follow from the fact that linear transformations of multivariate normal vectors are also multivariate normal (Rao, 1973). Results on the rank of the transformation matrices \({\mathbf {T}}_1\) and \({\mathbf {T}}_2\) can be found in Lemma 2. \(\square \)

Equation (7a) states that the interventional distribution of all variables is a singular normal distribution in \({\mathbb {R}}^n\) with reduced rank \(n-K_x\) as denoted by the superscript \(n-K_x\). Singularity follows from the fact that the \(K_x\) interventional variables are no longer random given the \(do({\mathbf {x}})\)-intervention, but are fixed to the constant interventional levels \({\mathbf {x}}\). Therefore, the random vector \({\mathbf {V}}\mid do({\mathbf {x}})\) satisfies the restriction \({\mathbf {1}}_{{\mathcal {I}}}^{\intercal }({\mathbf {V}}\mid do({\mathbf {x}}))={\mathbf {x}}\) with a probability of one. Equation (7b) states that the vector of all non-interventional variables follows a \((n-K_x)\)-dimensional normal distribution.

Typically, one is interested in a subset \({\mathcal {Y}}\subseteq {\mathcal {V}}_{{\mathcal {N}}}\) of outcome variables. The marginal interventional distribution \(P({\mathbf {y}}\mid do({\mathbf {x}}))\) can be obtained as follows:

Result 4

(marginal interventional distribution for Gaussian linear graph-based models) Let the outcome variables \({\mathcal {Y}}\) be a subset of the non-interventional variables (i.e., \({\mathcal {Y}}\subseteq {\mathcal {V}}_{{\mathcal {N}}}, \, |{\mathcal {Y}}|=K_y\)). The index set of the outcome variables is denoted as \({\mathcal {I}}_y\). Then, the following result holds:

The result follows from the fact that the family of multivariate normal distributions is closed with respect to marginalization (Rao, 1973). An important special case of Result 4 is the ATE of a single variable \(V_i\) on another variable \(V_j\), which is obtained by the setting \({\mathcal {Y}}=\{V_j\}\) and \({\mathcal {X}}=\{V_i\}\) (and consequently \({\mathcal {I}}_x=\{i\}\), \({\mathcal {I}}_y=\{j\}\), \(K_y=K_x=1\)). The ATE of the intervention do(x) relative to the intervention \(do(x')\) (where x and \(x'\) are distinct treatment levels) on Y is defined as the mean difference \(\mathrm {E}(y\mid do(x))-\mathrm {E}(y\mid do(x'))\). For a single outcome variable \(\{V_j\}\), the selection matrix \({\mathbf {1}}_{{\mathcal {I}}_y}\) simplifies to the unit vector \(\varvec{{{\imath }}}_j\) and \(\mathrm {E}(y\mid do(x))-\mathrm {E}(y\mid do(x'))\) can be expressed as \(\varvec{{{\imath }}}_j^\intercal {\mathbf {a}}_1(x-x')\) (using the mean expression from the normal distribution in Eq. [8]).

The probability density function (pdf) of the interventional distribution of all non-interventional variables is given as follows:

Many features of the interventional distribution that hold substantive interest in applied research (e.g., probabilities of interventional events, quantiles of the interventional distribution) can be calculated from the pdf via integration. For example, a physician would like a patient’s blood glucose level (outcome) to fall into a predefined range of values (e.g., to avoid hypo- or hyperglycemia) given an injection of insulin (intervention). More formally, let \([{\mathbf {y}}^{\textit{low}},{\mathbf {y}}^{\textit{up}}]\) denote a predefined range of values of a set of outcome variables \({\mathcal {Y}}\subseteq {\mathcal {V}}_{{\mathcal {N}}}\). The interventional probability \(P({\mathbf {y}}^{{low}}\le {\mathbf {y}} \le {\mathbf {y}}^{{up}} \mid do({\mathbf {x}}))\) is given by:

The interventional distribution and its features will be used to formally define parametric causal quantities in the following section.

4 Causal Effect Functions

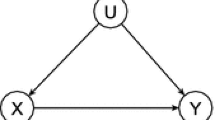

In this section, we formally define terms containing the do-operator as causal quantities denoted by \({\varvec{\gamma }}\). According to this definition, any feature of the interventional distribution that can be expressed using the do-operator is a causal quantity. Let the space of causal quantities be denoted as \({\varvec{\Gamma }}\). As discussed in earlier in the section on “Graph-Based Causal Models with Linear Equations,” linear causal models imply a joint distribution of observed variables that is parametrized by \({\varvec{\theta }}\in {\varvec{\Theta }}\subseteq {\mathbb {R}}^{q+p}\) and denoted by \(\{P({\mathbf {v}},{\varvec{\theta }})\mid {\varvec{\theta }} \in {\varvec{\Theta }}\}\). A function \({\mathbf {g}}\) that maps the parameters \({\varvec{\theta }}\) of the model implied joint distribution onto a causal quantity \({\varvec{\gamma }}\) is called causal effect function. This idea is illustrated in Fig. 1 and stated in Definition 5.

Causal Effect Functions. Figure 1 displays the mapping \({\mathbf {g}}:{\varvec{\Theta }}\mapsto {\varvec{\Gamma }}\) that corresponds to a causal effect function \({\varvec{\gamma }}={\mathbf {g}}({\varvec{\theta }})\). The domain \({\varvec{\Theta }}\subseteq {\mathbb {R}}^{q+p}\) (left-hand side) contains the parameters of the model implied joint distribution of observed variables (no do-operator). The co-domain \({\varvec{\Gamma }}\subseteq {\mathbb {R}}^{r}\) (right-hand side) contains causal quantities \({\varvec{\gamma }}\) that are defined via the do-operator.

Definition 5

(causal quantity and causal effect function) Let \({\varvec{\gamma }}\) be an r-dimensional feature of the interventional distribution. Let \({\varvec{\Theta }}_{\varvec{\gamma }}\subseteq {\varvec{\Theta }}\) be an s-dimensional subspace of the parameter space of the model implied joint distribution of observed variables. A mapping \({\mathbf {g}}\)

is called a causal effect function. The image \({\varvec{\gamma }}\) of a causal effect function is called a causal quantity which is parametrized by \({\varvec{\theta }}_{\varvec{\gamma }}\). If the value of a causal quantity depends on other variables (e.g., the interventional level \({\mathbf {x}}\in {\mathbb {R}}^{K_x}\), the values \({\mathbf {v}}_{{\mathcal {N}}}\in {\mathbb {R}}^{n-K_x}\) of non-interventional variables), we include these variables as auxiliary arguments in the causal effect function separated by a semicolon (e.g., \({\mathbf {g}}({\varvec{\theta }}_{\varvec{\gamma }};{\mathbf {x}}, {\mathbf {v}}_{{\mathcal {N}}})\)).

This idea can be applied to the interventional mean from Eq. (6a) by defining it as a causal quantity \({\varvec{\gamma }}_1\) as follows:

The right-hand side of Eq. (12a) is free of the do-operator and contains the parameter vector \({\varvec{\theta }}_{{\mathcal {F}}}\) (structural coefficients ) as a main argument and the interventional level \({\mathbf {x}}\) as an auxiliary argument. Thus, the causal effect function \({\mathbf {g}}_1\) maps the parameter vector \({\varvec{\theta }}_{{\mathcal {F}}}\) onto the interventional mean. The interventional mean is an \(n\times 1\) vector and therefore the co-domain of \({\mathbf {g}}_1\) is \({\mathbb {R}}^{n}\) (i.e., \(r=n\)), as stated in Eq. (12b). Note that the causal effect function \({\mathbf {g}}_1\) depends on the distinct and functionally unrelated structural coefficients \({\varvec{\theta }}_{{\mathcal {F}}}\) but is independent of the parameters from the error term distribution \({\varvec{\theta }}_{{\mathcal {P}}}\). Therefore, the domain of \({\mathbf {g}}_1\) is \({\varvec{\Theta }}_{{\mathcal {F}}}\) and \(s=q\).

The interventional covariance matrix from Eq. (6b) can be expressed using the notation from Definition 5 as follows:

To avoid matrix valued causal effect functions, we defined \({\varvec{\gamma }}_2\) as the half-vectorized interventional covariance matrix, which is of dimension \(r=n(n+1)/2\) (the operator \({{{\,\mathrm{\textsf {vech}}\,}}}\) stands for half-vectorization). The interventional covariance matrix is a function of both the structural coefficients \({\varvec{\theta }}_{{\mathcal {F}}}\) and the entries of the covariance matrix \({\varvec{\theta }}_{{\mathcal {P}}}\). Thus, \({\varvec{\theta }}_{{{\varvec{\gamma }}}_2}={\varvec{\theta }}\) and \(s=q+p\). No auxiliary arguments are included in the causal effect function \({\mathbf {g}}_2\), since the value of \({\varvec{\gamma }}_2\) only depends on the values of \({\varvec{\theta }}\) (recall that \({\mathbf{I}}_n,{\mathbf{I}}_{{\mathcal {N}}},{\mathbf {1}}_{{\mathcal {I}}}\) are constant zero-one matrices).

The interventional pdf \(f({\mathbf {v}}_{{\mathcal {N}}}\mid do({\mathbf {x}}))\) from Eq. (9) can be formally defined as a causal effect function as follows:

The interventional density depends on both the structural coefficients and the parameters of the error term distribution, yielding \({\varvec{\theta }}_{\gamma _3}={\varvec{\theta }}\), \({\varvec{\Theta }}_{\gamma _3}={\varvec{\Theta }}\) and \(s=q+p\). The interventional density is scalar-valued and thus \(r=1\). Since the value of the interventional pdf depends on \({\mathbf {x}}\) and \({\mathbf {v}}_{{\mathcal {N}}}\), both are included as auxiliary arguments in the causal effect function \(g_3\), namely \(g_3({\varvec{\theta }};{\mathbf {x}},{\mathbf {v}}_{{\mathcal {N}}})\).

Probabilities of interventional events can be understood as a causal quantity in the following way:

Where \({\varvec{\theta }}_{\gamma _4}\) is the subset of parameters that appear in the marginal interventional pdf \(f({\mathbf {y}}\mid do({\mathbf {x}}))\). The causal effect function \(g_4\) is a scalar-valued and thus \(r=1\). The value of the interventional probability depends on \({\mathbf {x}}\), \({\mathbf {y}}^{{low}}\), and \({\mathbf {y}}^{{up}}\) (\({\mathbf {y}}\) integrates out), which are included as auxiliary arguments in the causal effect function \(g_4\).

5 Identification of Parametrized Causal Quantities

The meaning of the term “identification” as used in the nonparametric graph-based approach slightly differs from the meaning in the field of traditional SEM. A graph-based causal quantity is said to be identified if it can be expressed as a functional of joint, marginal, or conditional distributions of observed variables (Pearl, 2009). The latter distributions can in principle be estimated based on observational data using nonparametric statistical models. In other words, an identified nonparametric causal quantity could in theory be computed from an infinitely large sample without further limitations.Footnote 10 Graph-based tools for identification exploit the causal structure depicted in the causal graph and are independent of the functional form of the structural equations. Thus, causal identification is established in the absence of the risk of misspecification of the functional form.

By contrast, model identification in traditional parametric SEM relies on the solvability of a system of nonlinear equations in terms of a finite number of model parameters. A single parameter \(\theta \in {\varvec{\Theta }}\) is identified if it can be expressed as a function of moments of the the joint distribution of observed variables in a unique way (Bekker et al., 1994; Bollen & Bauldry, 2010). If all parameters in \({\varvec{\theta }}\) are identified, then the model is identified. Definition 6 uses causal effect functions to combine the above ideas.

Definition 6

(causal identification of parametrized causal quantities) Let \({\varvec{\gamma }}\) be a parametrized causal quantity in a linear graph-based model. \({\varvec{\gamma }}\) is said to be causally identified if (i) it can be expressed in a unique way as a function of the parameter vector \({\varvec{\theta }}_{\varvec{\gamma }}\) via a causal effect function, namely \({\varvec{\gamma }}={\mathbf {g}}({\varvec{\theta }}_{\varvec{\gamma }})\), and (ii) the value of \({\varvec{\theta }}_{\varvec{\gamma }}\) can be uniquely computed from the joint distribution of the observed variables.

Based on this insight, graph-based techniques for causal identification in linear models have been derived, for example by Brito and Pearl (2006); Drton, Foygel, and Sullivant (2011); Kuroki and Cai (2007). Furthermore, part (ii) of the above definition has been dealt with extensively in the literature on traditional linear SEM (see, e.g., Bekker et al., 1994; Bollen, 1989; Fisher, 1966; Wiley, 1973).

We now illustrate Definition 6 for the causal quantities defined in Eqs. (22a) and (22b) from the illustration section. For the interventional mean stated in Eq. (22a), part (i) of the definition is satisfied, since the causal quantity \(\gamma _1\) can be expressed as a function of the parameter \({\theta }_{\gamma _1}={c_{yx}}\) in a unique way as follows: \(\gamma _{1}:=\mathrm{E}(Y_3 \mid do(x_2))=g_1({\theta }_{\gamma _1};x_2)=c_{yx}x_2\). Part (ii) of the above definition requires that the single structural coefficient \(c_{yx}\) can be uniquely computed from the joint distribution of the observed variables.

Similarly, part (i) of the definition is satisfied for the the causal quantity \(\gamma _{2}:=\mathrm{V}(Y_3 \mid do(x_2))=g_2({\varvec{\theta }}_{\gamma _2})\) in Eq. (22b). Part (ii) of the above definition requires that each of the structural coefficients and (co)variances on the right-hand side of Eq. (22b), namely \({\varvec{\theta }}_{\gamma _2}=(c_{yx},c_{yy},\psi _{x_1x_1},\psi _{x_1y_1},\psi _{y_1y_1},\psi _{y_1y_2},\psi _{y_2y_3},\psi _{yy})^\intercal \), can be uniquely computed from the joint distribution of the observed variables.

Note that both of the causal quantities discussed above require only a subset of parameters to be identified (i.e., it is not required to identify the entire model \({\varvec{\theta }}\)). After causal identification of a parametrized causal quantity has been established, it can be estimated from a sample using the techniques described in the following section.

6 Estimation of Causal Quantities

Estimators of causal quantities as defined in Eq. (11) are constructed by replacing the parameters in the causal effect function with a corresponding estimator, namely \(\widehat{{\varvec{\gamma }}}={\mathbf {g}}(\widehat{{\varvec{\theta }}}_{\varvec{\gamma }})\). This plug-in procedure is summarized in the following definition.

Definition 7

(estimation of parametrized causal quantities) Let \({\varvec{\gamma }}\) be an identified causal quantity in a linear graph-based models and \({\mathbf {g}}({\varvec{\theta }}_{\varvec{\gamma }})\) the corresponding causal effect function. Let \(\widehat{{\varvec{\theta }}}_{\varvec{\gamma }}\) denote an estimator of \({\varvec{\theta }}_{\varvec{\gamma }}\), then \(\widehat{{\varvec{\gamma }}}:={\mathbf {g}}(\widehat{{\varvec{\theta }}}_{\varvec{\gamma }})\) is an estimator of the causal quantity \({\varvec{\gamma }}\).

A main strength of the traditional SEM literature is that a variety of estimation procedures have been developed. Common estimation techniques include maximum likelihood (ML; Jöreskog, 1967; Jöreskog & Lawley, 1968), generalized least squares (GLS; Browne, 1974), and asymptotically distribution free (ADF; Browne, 1984).Footnote 11 Note that some estimation techniques do not rely on the assumption of multivariate normal error terms and for others robust versions have been proposed that allow for certain types of deviations from multivariate normality (Satorra & Bentler, 1994; Yuan & Bentler, 1998).

In the following, we assume that causal effect functions \({\mathbf {g}}\) and estimators \(\widehat{{\varvec{\theta }}}_{\varvec{\gamma }}\) satisfy certain regularity conditions stated as Properties A.1 and A.2 in the Appendix. The following theorem establishes the asymptotic properties of estimators of causal quantities \(\widehat{{\varvec{\gamma }}}={\mathbf {g}}(\widehat{{\varvec{\theta }}}_{\varvec{\gamma }})\).

Theorem 8

(asymptotic properties of estimators of causal quantities) Let \({\varvec{\gamma }}\) be a causal quantity and \({\mathbf {g}}({\varvec{\theta }}_{\varvec{\gamma }})\) the corresponding causal effect function. Let \(\widehat{{\varvec{\theta }}}_{\varvec{\gamma }}\) be an estimator of \({\varvec{\theta }}_{\varvec{\gamma }}\). Assume that \({\mathbf {g}}\) and \(\widehat{{\varvec{\theta }}}_{\varvec{\gamma }}\) satisfy Property A.1 and Property A.2, respectively.

Where \({\varvec{\theta }}_{{\varvec{\gamma }}}^*\) denotes the true population value and \(\xrightarrow { \ p \ }\) (\(\xrightarrow { \ d \ }\)) refers to convergence in probability (distribution) as the sample size N tends to infinity. \(\text {AV}(\sqrt{N}\widehat{{\varvec{\theta }}}_{\gamma })\) denotes the covariance matrix of the limiting distribution.

Proof

The results are obtained via a straightforward application of standard results on transformations of convergent sequences of random variables (Mann & Wald, 1943; Serfling, 1980, Chapter 1.7), one of which is known as the multivariate delta method (Cramér, 1946; Serfling, 1980, Chapter 3.3). \(\square \)

Theorem 8 establishes that the estimator \(\widehat{{\varvec{\gamma }}}={\mathbf {g}}(\widehat{{\varvec{\theta }}}_{\varvec{\gamma }})\) is consistent and converges at a rate of \(N^{-\frac{1}{2}}\) to the true population value \({\varvec{\gamma }}^*\!\!={\mathbf {g}}({\varvec{\theta }}_{\varvec{\gamma }}^*)\). The rate of convergence is independent of the finite number of parameters and variables in the model. If the causal effect function contains auxiliary variables, then the results in Theorem 8 hold pointwise for any fixed value combination of the auxiliary variable.

Note that the results in Theorem 8 hold whenever an estimator satisfies Property A.2 and they do not depend on a particular estimation method. However, if \({\varvec{\theta }}_{\varvec{\gamma }}\) is estimated via maximum likelihood, the proposed estimator \(\widehat{{\varvec{\gamma }}}\) of the causal quantity has the following property:

Theorem 9

(asymptotic efficiency of \(\widehat{{\varvec{\gamma }}}={\mathbf {g}}(\widehat{{\varvec{\theta }}}^{ML}_{\varvec{\gamma }})\)) Let the situation be as in Theorem 8 and \(\widehat{{\varvec{\theta }}}^{ML}_{\varvec{\gamma }}\) denote the maximum likelihood estimator of \({\varvec{\theta }}_{\varvec{\gamma }}\). Then, the estimator \(\widehat{{\varvec{\gamma }}}={\mathbf {g}}(\widehat{{\varvec{\theta }}}^{ML}_{\varvec{\gamma }})\)

-

(i)

is the maximum likelihood estimator \(\widehat{{\varvec{\gamma }}}^{ML}\) of the causal quantity \({\varvec{\gamma }}\);

-

(ii)

is asymptotically efficient, namely the asymptotic covariance matrix AV\((\sqrt{N}\widehat{{\varvec{\gamma }}})\) reaches the Cramér–Rao lower bound.

Proof

Result (i) is a direct consequence of the functional invariance of the ML-estimator (Zehna, 1966; see, for example, Casella & Berger, 2002, Chapter 7.2) and result (ii) was established by Cramér (1946) and Rao (1945). \(\square \)

To make inference feasible in practical applications, a consistent estimator of \(\mathrm{AV}(\sqrt{N}\widehat{{\varvec{\gamma }}})\) is required.

Corollary 10

(consistent estimator of \(\mathrm{AV}(\sqrt{N}\widehat{{\varvec{\gamma }}})\)) Let the situation be as in Theorem 8 and let the estimator of AV\((\sqrt{N}\widehat{{\varvec{\gamma }}})\) be defined as:

Then, \({\widehat{\mathrm{AV}}}(\sqrt{N}\widehat{{\varvec{\gamma }}})\) is a consistent estimator of AV\((\sqrt{N}\widehat{{\varvec{\gamma }}})\) if \({\widehat{\mathrm{AV}}}(\sqrt{N}\widehat{{\varvec{\theta }}}_{\varvec{\gamma }}) \xrightarrow { \ p \ } {\mathrm{AV}}(\sqrt{N}\widehat{{\varvec{\theta }}}_{\varvec{\gamma }})\).

Proof

Note that the partial derivatives \(\frac{\partial {\mathbf {g}}({\varvec{\theta }}_{\varvec{\gamma }})}{\partial {\varvec{\theta }}_{\varvec{\gamma }}^\intercal }\) are continuous (see Property A.1) and that \(\widehat{{\varvec{\theta }}}_{\varvec{\gamma }}\xrightarrow { \ p \ }{\varvec{\theta }}^*_{\varvec{\gamma }}\) holds (see Property A.2). Thus, the result is a direct consequence of standard results on transformations of convergent sequences of random variables (Mann & Wald, 1943; Serfling, 1980, Chapter 1.7). \(\square \)

Equation (17) states that estimates of the asymptotic covariance matrix of a causal quantity \(\widehat{{\varvec{\gamma }}}\) can be computed based on (i) the estimate of the asymptotic covariance matrix \({\widehat{\text{ AV }}}(\sqrt{N}\widehat{{\varvec{\theta }}}_{\varvec{\gamma }})\), and (ii) the Jacobian matrix \(\frac{\partial {\mathbf {g}}({\varvec{\theta }}_{\varvec{\gamma }}\!)}{\partial {\varvec{\theta }}^{\intercal }_{\varvec{\gamma }}}\) (evaluated at \(\widehat{{\varvec{\theta }}}_{\varvec{\gamma }}\)). Estimation results for (i) the asymptotic covariance matrix depend on the estimation method that is used to obtain \(\widehat{{\varvec{\theta }}}_{\varvec{\gamma }}\). For many standard procedures (e.g., 3SLS, ADF, GLS, GMM, ML, IV), theoretical results on the asymptotic covariance matrix are available in the corresponding literature and estimators are implemented in various software packages (e.g., see Muthén & Muthén, 1998-2017; Rosseel, 2012). Explicit expressions of (ii) the Jacobian matrices for the causal effect functions \(\mathbf {g_1}\), \(\mathbf {g_2}\), \(g_3\), and \(g_4\) are provided in the following corollary.

Corollary 11

(Jacobian matrices of basic causal effect functions) Let the causal effect functions \({\mathbf {g}}_1\), \({\mathbf {g}}_2\), \(g_3\), and \(g_4\) be defined as in Eqs. (12a), (13), (14), and (15), respectively. Then, the Jacobian matrices with respect to \({\varvec{\theta }}\) are given by:

Where the unit vector in the upper entry of the vector in Eq. (18d) is of dimension \((n \times 1)\) and the unit vector in the lower entry is of dimension \((n^2 \times 1)\). The matrices denoted by \(\mathbf {G}\) and a subscript are defined as follows:

Where \({\mathbf {L}}_n\), \({\mathbf {D}}_n\), and \({\mathbf {K}}_n\) denote the elimination matrix, duplication matrix, and commutation matrix for \(n \times n\)-matrices, respectively (Magnus & Neudecker, 1979, 1980). \(\mu _y\) and \(\sigma _y\) denote univariate inerventional moments.

Proof

See Appendix. \(\square \)

Note that the Jacobian matrix for interventional probabilities stated in Eq. (18d) is given for a single outcome variable \(Y=V_j\) (i.e., \(|{\mathcal {Y}}|=K_y=1\)). For simplicity of notation, the derivatives in Corollary 11 are taken with respect to the entire parameter vector \({\varvec{\theta }}\). Recall that a causal quantity is a function of the \(s\times 1\) subvector \({\varvec{\theta }}_{\varvec{\gamma }}\). Consequently, the \(r \times (q+p)\) Jacobian matrix \(\frac{\partial {\mathbf {g}}({\varvec{\theta }}_{\varvec{\gamma }})}{\partial {\varvec{\theta }}^\intercal }\) will contain \((q+p-s)\) columns with zero entries that can be eliminated by pre-multiplication with an appropriate selection matrix.

These asymptotic results can be used for approximate causal inference based on finite samples, as will be illustrated in the following section.

7 Illustration

We illustrate the method proposed in the previous paragraphs using simulated data. In this way, the data-generating process is known and we know with certainty that the model is correctly specified. For didactic purposes, we link the simulated data to a real-world example: The data are simulated according to a modified version of the model used in a study by Ito et al. (1998).Footnote 12

Our simulation mimics an observational study where \(N=100\) persons are randomly drawn from a target population of homogeneous individuals and measured at three successive (\(\Delta t=6\) min) occasions. Variables \(X_1,X_2,X_3\) represent mean-centered blood insulin levels at three successive measurement occasions measured in micro international units per milliliter (mcIU/ml). Variables \(Y_1,Y_2,Y_3\) represent mean-centered blood glucose levels measured in milligrams per deciliter (mg/dl). Mean-centered blood glucose levels below \(-40 \ \text {mg/dl}\) or above \(80 \ \text {mg/dl}\) indicate hypo- or hyperglycemia, respectively. Both hypo- and hyperglycemia should be avoided, yielding an acceptable range for blood glucose levels of \([y^{low},y^{up}]=[-40,80]\). The graph of the assumed linear graph-based models is depicted in Fig. 2.

Causal Graph (ADMG) in the Absence of Interventions. Figure 2 displays the ADMG corresponding to the linear graph-based model. The dashed bidirected edge drawn between \(X_1\) and \(Y_1\) represents a correlation due to an unobserved common cause. Directed edges are labeled with the corresponding path coefficients that quantify direct causal effects. For example, the direct causal effect of \(X_2\) on \(Y_{3}\) is quantified as \(c_{yx}\). Traditionally, disturbances (residuals, error terms), denoted by \({\varvec{\varepsilon }}\) in Eq. (19), are not explicitly drawn in an ADMG.

Each directed edge corresponds to a direct causal effect and is quantified by a nonzero structural coefficient. We assume that direct causal effects are identical (stable) over time. For example, we assign the same parameter \(c_{yx}\) to the directed edges \(X_1 {\mathop {\rightarrow }\limits ^{c_{yx}}} Y_2\) and \(X_2 {\mathop {\rightarrow }\limits ^{c_{yx}}} Y_3\) to indicate that we assume time-stable direct effects of \(X_{t-1}\) on \(Y_t\). The absence of a directed edge from, say, \(X_1\) to \(Y_3\) in the ADMG encodes the assumption that there is no direct effect of insulin levels at \(t=1\) on glucose levels at \(t=3\). In other words, we assume that \(X_1\) only indirectly affects \(Y_3\) via \(X_2\) or via \(Y_2\). Furthermore, we assume the absence of effect modification which justifies the use of the following system of linear structural equations:

Each bidirected edge in the ADMG indicates the existence of an unobserved confounder. In linear graph-based models, unobserved confounders are formalized as covariances between error terms. The covariance matrix of the error terms implied by the graph is given by:

The entries \(\psi _{x_1x_1}\), \(\psi _{y_1y_1}\) and \(\psi _{x_1y_1}\) describe the (co-)variances of the initial values of blood insulin and blood glucose. (Co-)Variances of error terms at time 2 and time 3 are assumed to be constant and are denoted as \(\psi _{xx}\), \(\psi _{yy}\), and \(\psi _{xy}\). Serial correlations in the X-series (Y-series) are denoted by \(\psi _{x_1x_2}\), \(\psi _{x_2x_3}\) (\(\psi _{y_1y_2}\), \(\psi _{y_2y_3}\)). The covariances \(\mathrm {COV}(X_t,Y_t)\), \(t=1,2,3\), encode the assumption that the contemporaneous relationship of blood insulin and blood glucose is confounded. The absence of a bidirected edge between \(X_t\) and \(Y_{t+1}\) encodes the assumption that there are no unobserved confounders that affect the lagged relationship of blood insulin and blood glucose.

Further, we assume that the error terms follow a multivariate normal distribution. Thus, the linear graph-based model is parametrized by the following vector of distinct, functionally unrelated and unknown parameters: \({\varvec{\theta }}^\intercal =({\varvec{\theta }}^\intercal _{{\mathcal {F}}},{\varvec{\theta }}^\intercal _{{\mathcal {P}}})\) with \({\varvec{\theta }}^\intercal _{{\mathcal {F}}}=(c_{xx},c_{xy},c_{yx},c_{yy})\) and \({\varvec{\theta }}^\intercal _{{\mathcal {P}}}=(\psi _{x_1x_1},\psi _{y_1y_1},\psi _{x_1y_1},\psi _{xx},\psi _{yy},\psi _{xy},\psi _{x_1x_2},\psi _{x_2x_3},\psi _{y_1y_2},\psi _{y_2y_3})\).

We are interested in the effect of an intervention on blood insulin at the second measurement occasion (i.e., \(X_2\)) on blood glucose levels at the third measurement occasion (i.e., \(Y_3\)). We set the interventional level of blood insulin to one standard deviation, namely \(x_2=\sqrt{\text {V}(X_2)}=11.54\). The graph of the causal model under the intervention \(do(x_2)\) is depicted in Fig. 3.

Causal Graph (ADMG) Under the Intervention \(do(x_2)\). Figure 3 displays the ADMG of the graph-based model under the intervention \(do(x_2)\). Edges that enter node \(X_2\) (i.e., that have an arrowhead pointing at node \(X_2\)) are removed since the value of \(X_2\) is now set by the experimenter via the intervention \(do(x_2)\). The interventional value \(x_2\) is neither determined by the values of the causal predecessors of \(X_2\) nor by unobserved confounding variables. All other causal relations are unaffected by the intervention reflecting the assumption of modularity.

Based on the above description of the research situation and the hypothetical experiment, all terms in Definition 1 are uniquely determined and given by:

The target quantity of causal inference in this example is the interventional distribution \(P(Y_3 \mid do(x_2))\), which can be characterized, for example, by the following causal quantities:Footnote 13

Where \(\Phi \) denotes the cumulative distribution function (cdf) of the standard normal distribution. A central goal of a treatment at time 2 (i.e., \(do(x_2)\)) is to avoid hypo- or hyperglycemia at time 3. We therefore refer to the event \(\{y^{low} \le Y_3 \le y^{up} \mid do(x_2)\}\) as treatment success. Using this terminology, the causal quantity \(\gamma _{4}\) from Eq. (22d) is called the probability of treatment success.

The causal effect functions corresponding to these causal quantities are stated below and satisfy Property A.1:

Figure 4 displays the pdfs of interventional distributions that result from three distinct (hypothetical) experiments where different interventional levels are chosen, namely \(-11.54\), 0, and 11.54. Note that the interventional mean \(\gamma _{1}=g_1(\theta _{\gamma _1};x_2)\) is functionally dependent on the interventional level \(x_2\) (see also Eq. [22a]). Thus, the location of the interventional distributions in Fig. 4 depends on the interventional level \(x_2\). By contrast, the interventional variance \(\gamma _{2}=g_2({\varvec{\theta }}_{\gamma _2})\) is functionally independent of \(x_2\) (see also Eq. [22b]). Consequently, the scale of the interventional distributions in Fig. 4 is the same for all interventional levels.

Interventional Distributions for Three Distinct Treatment Levels. Figure 4 displays several features of the interventional distribution for three distinct interventional levels \(x_2=11.54\) (solid), \(x_2'=0\) (dashed), and \(x_2''=-11.54\) (dotted). The pdfs of the interventional distributions are represented by the bell-shaped curves. The interventional means are represented by vertical line segments. The interventional variances correspond to the width of the bell-shaped curves and are equal across the different interventional levels. The probabilities of treatment success are represented by the shaded areas below the curves in the interval \([-40,80]\).

Equations 23(a-d) display the causal effect functions corresponding to the causal quantities \(\gamma _{1},\dots ,\gamma _{4}\). Definition 6 states that the parametrized causal quantities \(\gamma _{1},\dots ,\gamma _{4}\) are identified if the corresponding parameters \(\theta _{\gamma _1},{\varvec{\theta }}_{\gamma _2},{\varvec{\theta }}_{\gamma _3}, \mathrm{and}\, {\varvec{\theta }}_{\gamma _4}\) can be uniquely computed from the joint distribution of the observed variables. We show in the Appendix that the values of the entire parameter vector \({\varvec{\theta }}\) can be uniquely computed from the joint distribution of the observed variables. In fact, the values of \({\varvec{\theta }}\) can be uniquely computed from the covariance matrix of the observed variables.Footnote 14

The joint distribution of the observed variables is given by \(\{P({\mathbf {v}},{\varvec{\theta }})\mid {\varvec{\theta }} \in {\varvec{\Theta }}\}\), where P is the family of 6-dimensional multivariate normal distributions. We estimated all parameters simultaneously by minimizing the maximum likelihood discrepancy function of the model implied covariance matrix and the sample covariance matrix. The ML-estimator \(\widehat{{\varvec{\theta }}}^{ML}\) is consistent, asymptotically efficient, and asymptotically normally distributed (Bollen, 1989) and therefore satisfies Property A.2. Additionally, the asymptotic covariance matrix of the ML-estimator is known (e.g., see Bollen, 1989) and consistent estimates thereof are implemented in many statistical software packages (e.g., in the R package lavaan; Rosseel, 2012). The corresponding estimation results for \({\varvec{\theta }}\) are displayed in Table 1.

Since Property A.1 and Property A.2 are satisfied, the asymptotic properties of the estimators \(\widehat{{\gamma }}_1,\widehat{{\gamma }}_2,\widehat{{\gamma }}_3\) and \(\widehat{{\gamma }}_4\) can be established via Theorem 8. The Jacobian matrices of the causal effect functions in Eq. (23) can be calculated according to Corollary 11. Estimates of the causal quantities are reported in Table 2 together with estimates of the asymptotic standard errors and approximate z-values.

Estimate of the Probability Density Function of the Interventional Distribution. Figure 5 displays the estimated interventional pdf \({\widehat{f}}(y_3 \mid do(x_2=11.54))\) (black solid line) with pointwise \(95\%\) confidence intervals, that is, \(\pm 1.96\cdot {\widehat{\mathrm{ASE}}}[{\widehat{f}}(y_3 \mid do(x_2=11.54))]\) (gray shaded area). The true population interventional pdf \(f(y_3 \mid do(x_2=11.54))\) is displayed by the gray dashed line.

From Theorem 8, we know that \(\widehat{\gamma }_3=g_3(\widehat{{\varvec{\theta }}}_{\gamma _3};x_2, y_3)=\widehat{f}(y_3 \mid do(x_2))\xrightarrow { \ p \ } f(y_3 \mid do(x_2))\) holds pointwise for any \((y_3,x_2) \in {\mathbb {R}}^2\). Figure 5 displays the estimated interventional pdf together with its population counterpart as well as pointwise asymptotic confidence intervals for the fixed interventional level \(x_2=11.54\) over the range \(y_3\in [-100,100]\).

Figure 5 shows that a sample size of \(N=100\) yields very precise estimates of the interventional pdf over the whole range of values \(y_3\in [-100,100]\), which is a consequence of the rate of convergence \(N^{-\frac{1}{2}}\) established in Theorem 8.

Figure 6 displays the estimated probability that the blood glucose level falls into the acceptable range (i.e., hypo- and hyperglycemia are avoided) at \(t=3\), given an intervention \(do(x_2)\) on blood insulin at \(t=2\), as a function of the interventional level \(x_2\). Just like in the case of the interventional pdf, Fig. 6 shows that a sample size of \(N=100\) yields very precise estimates of interventional probabilities over the whole range of values \(x_2\in [-50,50]\). Given the intervention \(do(x_2=11.54)\), the probability of treatment success (i.e, blood glucose level within the acceptable range at \(t=3\)) equals .85, as depicted in Fig. 6. Since the curve in Fig. 6 displays a unique (local and global) maximum, the interventional level can be chosen such that the probability of treatment success is maximized. The maximal probability of treatment success is equal to .94 and can be obtained by administering intervention \(do(x_2^*=-38.3)\). Note that the curve is relatively flat around its maximum, meaning that slight deviations from the optimal treatment level will result in a small decrease in the probability of treatment success.

Estimated Probability of Treatment Success. Figure 6 displays the estimated probability of treatment success (i.e., \({\widehat{\gamma }}_4={\widehat{P}}(-40 \le Y_3 \le 80\mid do(x_2))\); black solid line) as a function of the interventional level \(x_2\). The pointwise confidence intervals \(\pm 1.96\cdot {\widehat{\mathrm{ASE}}}[{\widehat{P}}(-40 \le Y_3 \le 80\mid do(x_2))]\) are displayed by the (very narrow) gray shaded area around the solid black line (see electronic version for high resolution). The vertical dashed lines are drawn at the interventional levels \(x_2=11.54\) and \(x_2=-38.3\). The horizontal dashed lines correspond to the probabilities of treatment success for the treatments \(do(X_2=11.54)\) and \(do(X_2=-33.3)\).

7.1 Interventional Distribution vs. Conditional Distribution

To illustrate the conceptual differences between the interventional and conditional distribution, we use the numeric population values from the first row of Table 1 and Table 2, respectively. The interventional distribution is given by \(P(Y_3 \mid do(x_2))={{N}}_{1}(-0.6x_2 \, , \, 1096.39)\) and it differs from both the conditional distribution, \(P(Y_3 \mid X_2=x_2)={{N}}_{1}(1.76x_2 \, , \, 353.99)\), and the unconditional distribution, \(P(Y_3)={{N}}_{1}(0 \, , \,766.91)\), as depicted in Fig. 7.Footnote 15

Marginal, Conditional, and Interventional Distribution. The panels depict (i) the pdf of the unconditional distribution \(P(Y_3)\) (top panel), (ii) the conditional distribution \(P(Y_3 \mid X_2=x_2)\) (middle panel), and (iii) the interventional distribution \(P(Y_3 \mid do(x_2))\) (bottom panel). In (ii) the level \(x_2=11.54 \ \text {mg/dl}\) was passively measured whereas in (iii) the intervention \(do(X_2=11.54)\) was performed. The central vertical black solid lines are drawn at the mean and shaded areas cover \(95\%\) of the probability mass.

The unconditional distribution (upper panel) corresponds to a situation where no prior observation is available and no intervention is performed. Note that the conditional distribution (middle panel) is shifted to the right (for \(X_2=11.54\)), whereas the interventional distribution (bottom panel) is shifted to the left for \(do(X_2=11.54)\), as displayed in Fig. 7. The differences displayed between \(P(Y_3 \mid X_2=11.54)\) and \(P(Y_3 \mid do(X_2=11.54))\) reflect the fundamental difference between the mode of seeing, namely passive observation, and the mode of doing, namely active intervention (Pearl, 2009).

On the one hand, observing a blood insulin level of \(X_2=11.54\) at the second measurement occasion leads to an expected value of \(20.31 \ \text {mg/dl}\) for blood glucose at the third measurement occasion (i.e., \(\mathrm{E}(Y_3 \mid X_2=11.54)=1.76\cdot 11.54=20.31\)). Using the conditional variance \(\mathrm{V}(Y_3 \mid X_2=11.54)=353.99\) to compute a \(95\%\) forecast interval yields \(P(Y_3 \in [-16.56,57.19] \mid X_2=11.54)=.95\), as indicated by the shaded area under the curve in the middle panel of Fig. 7.

On the other hand, setting the level of blood insulin to \(do(X_2=11.54)\) at the second occasion by an active intervention leads to an expected value of \(-6.92 \ \text {mg/dl}\) for blood glucose at the third measurement occasion (i.e., \(\mathrm{E}(Y_3 \mid do(x_2=11.54))=-0.60\cdot 11.54=-6.92\)). Using the interventional variance \(\mathrm{V}(Y_3 \mid do(11.54))=1096.39\) to compute a \(95\%\) forecast interval yields \(P(Y_3 \in [-71.82,57.97] \mid do(x_2=11.54))=.95\), as indicated by the shaded area under the curve in the bottom panel of Fig. 7.

Based on both the conditional and interventional distribution, valid statements about values of blood glucose can be made. A patient who measures a high level of insulin at time 2 in the absence of an intervention (e.g., self-measured monitoring of blood insulin; mode of seeing) will predict a high level of blood glucose at time 3 based on the conditional distribution. A physician who actively administers a high dose of insulin at time 2 (e.g., via an insulin injection; mode of doing) will forecast a low value of blood glucose at time 3 based on the interventional distribution.

Incorrect conclusions arise if the conditional distribution is used to forecast effects of interventions, or the other way around, the interventional distribution is used to predict future values of blood glucose in the absence of interventions. For example, a physician who correctly uses the interventional distribution to choose the optimal treatment level would administer \(do(X_2=-38.3)\), resulting in a \(94\%\) probability of treatment success (see Fig. 6). A physician who erroneously uses the conditional distribution to specify the optimal treatment level would administer \(do(X_2=11.4)\). Such a non-optimal intervention would result in a \(85\%\) probability of treatment success. Thus, an incorrect decision results in an absolute decrease of \(9\%\) in the probability of treatment success (Gische et al., 2021).

8 Discussion

Graph-based causal models combine a priori assumptions about the causal structure of the data-generating mechanism (e.g., encoded in a ADMG) and observational data to make inference about the effects of (hypothetical) interventions. Causal quantities are defined via the do-operator and may comprise any feature of the interventional distribution (e.g., the mean vector, the covariance matrix, the pdf). This flexibility allows researchers to analyze effects of interventions beyond changes in the mean level. Causal effect functions map the parameters of the model implied joint distribution of observed variables onto causal quantities and therefore enable analyzing causal quantities using tools from the literature on traditional SEM. We propose an estimator for causal quantities and show that it is consistent and converges at a rate of \(N^{-\frac{1}{2}}\). In case of maximum likelihood estimation, the proposed estimator is asymptotically efficient.

In the remainder of the paper, we discuss several situations in which linear graph-based models are misspecified and how the proposed procedure can be extended to be applicable in such situations.

8.1 Causal Structure, Modularity, and Conditional Interventions

A researcher’s beliefs about the causal structure are encoded in the graph. Based on the concept of d-separation, every ADMG implies a set of (conditional) independence relations between observable variables that can be tested parametrically (Chen, Tian, & Pearl, 2014; Shipley, 2003; Thoemmes, Rosseel, & Textor, 2018) or nonparametrically (Richardson, 2003; Tian & Pearl, 2002b). One drawback of these tests is that they only distinguish between equivalence classes of ADMGs and do not evaluate the validity of a single graph.

One way of dealing with this situation is to further analyze the equivalence class to which a specified model belongs (Richardson & Spirtes, 2002). Some authors have proposed methods to draw causal conclusions based on common features of an entire equivalence class instead of using a single model (Hauser & Bühlmann, 2015; Maathuis, Kalisch, & Bühlmann, 2009; Perkovic, 2020; Zhang, 2008). However, equivalence classes can be large and its members might not overlap with respect to the causal effects of interest (He & Jia, 2015).

Another approach discussed in the literature is to complement the available observational data with experimental data. If these experiments are optimally chosen, the size of an equivalence class can be substantially reduced (Eberhardt, Glymour, & Scheines, 2005; Hyttinen, Eberhardt, & Hoyer, 2013). The idea of combining observational data and experimental data is theoretically appealing for many reasons, and it has stimulated the development of a variety of techniques (He & Geng, 2008; Peters, Bühlmann, & Meinshausen, 2016; Sontakke, Mehrjou, Itti, & Schölkopf, 2020). Most importantly, the combination of observational and interventional data allows differentiating causal models that cannot be distinguished solely based on observational data.

Furthermore, the availability of experimental evidence enables (partly) testing further causal assumptions such as the assumption of modularity, which cannot be tested solely based on observational data. While modularity seems rather plausible if the mechanisms correspond to natural laws (e.g., chemical or biological processes, genetic laws, laws of physics), it needs additional reflection if the mechanisms describe human behavior. For example, humans might respond to an intervention by adjusting behavioral mechanisms different from the one that is intervened on. The proposed method can readily be adjusted to capture such violations of the modularity assumption if an intervention changes other mechanisms in a known way. However, if the ways in which humans adjust their behavior in response to an intervention are unknown, they need to be learned. Well-designed experiments may be particularly useful for this purpose.

Throughout the manuscript, we focus on specific do-type interventions that assign fixed values to the interventional variables according to an exogenous rule. However, in practical applications interventional values are often chosen conditionally on the values of other observed variables. In our illustrative example, the interventional insulin level at \(t=2\) might be chosen in response to the glucose level observed at \(t=1\). Such situations are discussed in the literature on conditional interventions (Pearl, 2009) and dynamic treatment plans (Pearl & Robins, 1995; Robins, Hernán, & Brumback, 2000). In principle, the proposed method can be extended to evaluate conditional interventions and effects of dynamic treatment plans. However, the derivation of the closed-form representations of parametrized causal quantities and the corresponding causal effect functions in these settings require further research.

Finally, consequences of specific violations of non-testable causal assumptions can be gauged via sensitivity analyses and robustness checks (Ding & VanderWeele, 2016; Dorie, Harada, Carnegie, & Hill, 2016; Franks, D’Amour, & Feller, 2020; Rosenbaum, 2002).

8.2 Effect Modification and Heterogeneity

In this article, we have focused on situations in which direct causal effects are constant across value combinations of observed variables and error terms. In such situations, the use of linear models is justified. Statistical tests for linearity of the functional relations exist for both nested and non-nested models (Amemiya, 1985; Lee, 2007; Schumacker & Marcoulides, 1998). If these tests provide evidence against linearity, the assumption of constant direct effects is likely to be violated.

Theoretical considerations often suggest the existence of so-called effect modifiers (moderators), which can be modeled in parametrized graph-based models via nonlinear structural equations (Amemiya, 1985; Klein & Muthén, 2007). However, a closed-form representation of the entire interventional distribution in case of nonlinear structural relations cannot be derived via a direct application of the method proposed in this paper. The extent to which the proposed parametric method can be generalized to capture common types of nonlinearity (e.g., simple product terms that capture certain types of effect modification) is a focus of ongoing research. Preliminary results suggest that parametrized closed-form expressions of certain features of the interventional distribution (e.g., its moments) can be obtained (Kan, 2008; Wall & Amemiya, 2003), which in turns enables analyzing ATEs and other causal quantities.

Furthermore, we assumed that direct causal effects quantified by structural coefficients are equal across individuals in the population. However, (unobserved) heterogeneity in mean levels or direct effects might be present in many applied situations. A common procedure to capture specific types of unobserved heterogeneity is to include random intercepts or random coefficients in panel data models (Hamaker, Kuiper, & Grasman, 2015; Usami, Murayama, & Hamaker, 2019; Zyphur et al. 2019). Gische et al. (2021) apply the method proposed in this paper to linear cross-lagged panel models with additive person-specific random intercepts and show how absolute values of optimal treatment levels differ across individuals.

Even though additive random intercepts capture unobserved person-specific differences in the mean levels of the variables, these models still imply constant effects of changes in treatment level across persons. The latter implication might be overly restrictive in many applied situations in which treatment effects vary across individuals (e.g., different patients respond differently to variations in treatment level). An extension of the proposed methods to more complex dynamic panel data models (e.g., models including random slopes) requires further research. Several alternative approaches to model effect heterogeneity have been proposed for example within the social and behavioral sciences (Xie, Brand, & Jann, 2012), economics (Athey & Imbens, 2016), the political sciences (Imai & Ratkovic, 2013), and the computer sciences (Nie & Wager, 2020; Wager & Athey, 2018).

8.3 Measurement Error and Non-Normality

We assumed that variables are observed without measurement error. The proposed method can be extended to define, identify, and estimate causal effects among latent variables. In other words, measurement errors and measurement models can be included. The model implied joint distribution of observed variables in latent variable SEM is known (Bollen, 1989), and the derivation of the parametric expressions for causal quantities and causal effect functions in such models is subject to ongoing research.

However, measurement models for latent variables often can only mitigate measurement error issues (unless the true measurement model is known and everything is correctly specified). Furthermore, the degree to which interventions on certain types of latent constructs is feasible in practice needs further discussion (e.g., see Bollen, 2002; Borsboom, Mellenbergh, & van Heerden, 2003; van Bork, Rhemtulla, Sijtsma, & Borsboom, 2020).

Some population results derived in this paper rely on multivariate normally distributed error terms (e.g., Result 3), while others do not (e.g., the moments of the interventional distribution in Eqs. (6a) and (6b) or Theorem 8). For the former results, a systematic analytic inquiry of the consequences of incorrectly assuming multivariate normal error terms requires specific knowledge about the type of misspecification. If such knowledge is not available, one could attempt to assess the sensitivity of, for example, the interventional pdf, to misspecifications in the error term distribution via simulation studies.

Some estimation results derived in this paper rely on a known parametric distributional family of the error terms (e.g., Theorem 9 requires maximum-likelihood estimation), while others do not (e.g., Theorem 8 ensures consistency of the estimators of causal quantities for a broad class of estimators including ADF or WLS estimation of \({\varvec{\theta }}\)). Thus, inference about the interventional moments can be conducted in the absence of parametric assumptions on the error term distribution. Furthermore, it has been shown that ML-estimators in linear SEM are robust to certain types of distributional misspecification but sensitive to others (West, Finch, & Curran, 1995) and robust estimators have been developed for several types of distributional misspecifications (Satorra & Bentler, 1994; Yuan & Bentler, 1998).

8.4 Conclusion

Causal graphs (e.g., ADMGs) allow researchers to express their causal beliefs in a transparent way and provide a sound basis for the definition of causal effects using the do-operator. Causal effect functions enable analyzing causal quantities in parametrized models. They are a flexible tool that allow researchers to model causal effects beyond the mean and covariance structure and can thus be applied in a large variety of research situations. Consistent and asymptotically efficient estimators of parametric causal quantities are provided that yield precise estimates based on sample sizes commonly available in the social and behavioral sciences.

Change history

08 February 2022

An Erratum to this paper has been published: https://doi.org/10.1007/s11336-021-09836-4

Notes

Exceptions from this statement include, for example Ernest and Bühlmann (2015) and Bhattacharya, Nabi, and Shpitser (2020). Furthermore, statistical procedures from related fields such as the potential outcome framework (Robins, 1986, 1987; Robins, Rotnitzky, & Zhao, 1994; Rosenbaum & Rubin, 1983; van der Laan & Rubin, 2006) or econometrics (Chernozhukov, Fernández-Val, Newey, Stouli, & Vella, 2020; Matzkin, 2015) could be adjusted such that they can be used to estimate causal quantities in the NPSEM framework.

If the listed statements are indeed true, the causal Markov assumption is implied. For a detailed discussion of the logical relation of causal assumptions encoded in graph-based models and causal assumptions from the Neyman–Rubin potential outcome framework (e.g., ignorability, SUTVA), see, for example, Holland (1988); Pearl (2009); Shpitser et al. (2020).

Similar concepts such as autonomy (Aldrich, 1989), exogeneity (Mouchart, Russo, & Wunsch, 2009), and invariance (Cartwright, 2009) have been discussed in the econometric literature. However, we believe that these concepts are not part of the canonical assumptions of traditional SEM as used in the social and behavioral sciences.

Throughout this article, we use the following conventions: Sets of random variables are denoted by calligraphic letters (e.g., \({\mathcal {V}}=\{V_1,...,V_n\}\)). Single random variables from a set are denoted by corresponding upper-case Latin letters (e.g., \(V_i\)). The column vector containing all random variables in a set is denoted by the corresponding bold Latin letter (e.g., \({\mathbf {V}}=(V_1,...,V_n)^\intercal \)). Realizations of a random vector \({\mathbf {V}}\) are denoted by lower-case Latin letters (e.g., \({\mathbf {v}}\)).

Note that bidirected edges in a causal graph (see Fig. 2 in the illustration section) represent a nonzero covariance between error terms that is due to an unobserved common cause. This convention for causal graphs is different for path diagrams from the traditional SEM literature where bidirected edges simply indicate a correlation without being specific about its origin.

A detailed justification that the matrix expressions in Eq. (3) adequately represent the changes to the linear system imposed by the do-operator is provided in the online supplementary material.

In practice, nonparametric estimation of multivariate distributions requires certain regularity conditions and large sample sizes due to reduced rates of convergence (as compared to parametric estimation procedures). This practical limitation will be particularly pronounced in high dimensional systems with continuous variables, a phenomenon known as the curse of dimensionality.

A more detailed description of the data simulation is provided in the online supplementary material.

For the detailed derivation of analytic expressions and computational details, we refer the reader to the online supplementary material.

Put more technically, we show that the model is locally identified using a generalized version of Wald’s (1950) rank rule (Bekker et al., 1994). Given the triangular structure of the matrix of structural coefficients and the special structure of the covariance restrictions, we believe that the model is also globally identified (Hausman & Taylor, 1983; Hsiao, 1983).

For the detailed derivation of analytic expressions and computational details, we refer the reader to the online supplementary material.

Note that the functions \({\mathbf {g}}_1\), \({\mathbf {g}}_2\), \(g_3\) and \(g_4\) introduced in Eqs. (12a), (13), (14) and (15) satisfy Property A.1 at every point in the interior of \({\varvec{\Theta }}\) for any fixed \(({\mathbf {x}},{\mathbf {v}}_{{\mathcal {N}}})\in {\mathbb {R}}^{K_x}\times {\mathbb {R}}^{n-K_x}\).

Note that many standard estimators from the field of linear SEM (e.g., 3SLS, ADF, GLS, GMM, ML, IV) satisfy Property A.2 under fairly general conditions.

References

Abadir, K. M., & Magnus, J. R. (2005). Matrix algebra. Cambridge University Press. https://doi.org/10.1017/CBO9780511810800

Aldrich, J. (1989). Autonomy. Oxford Economic Papers, 4–1(1), 15–34. https://doi.org/10.1093/oxfordjournals.oep.a041889

Alwin, D. F., & Hauser, R. M. (1975). The decomposition of effects in path analysis. American Sociological Review, 40(1), 37–47. https://doi.org/10.2307/2094445

Amemiya, T. (1985). Advanced econometrics (1st ed.). Harvard University Press.

Athey, S., & Imbens, G. (2016). Recursive partitioning for heterogeneous causal effects. Proceedings of the National Academy of Sciences, 113(27), 7353–7360. https://doi.org/10.1073/pnas.1510489113

Bekker, P. A., Merckens, A., & Wansbeek, T. J. (1994). Identification, equivalent models, and computer algebra. Academic Press.

Bhattacharya, R., Nabi, R., & Shpitser, I. (2020). Semiparametric inference for causal effects in graphical models with hidden variables. Retrieved fromhttps://arxiv.org/abs/2003.12659

Bollen, K. A. (1987). Total, direct, and indirect effects in structural equation models. Sociological Methodology, 17, 37–69. https://doi.org/10.2307/271028

Bollen, K. A. (1989). Structural equations with latent variables. John Wiley & Sons. https://doi.org/10.1002/9781118619179

Bollen, K. A. (1996). An alternative two-stage least squares (2SLS) estimator for latent variable equations. Psychometrika, 61(1), 109–121. https://doi.org/10.1007/BF02296961

Bollen, K. A. (2002). Latent variables in psychology and the social sciences. Annual Review of Psychology, 53, 605–634. https://doi.org/10.1146/annurev.psych.53.100901.135239

Bollen, K. A., & Bauldry, S. (2010). A note on algebraic solutions to identification. The Journal ofMathematical Sociology, 34(2), 136–145. https://doi.org/10.1080/00222500903221571

Bollen, K. A., Kolenikov, S., & Bauldry, S. (2014). Model-implied instrumental variable-generalized method of moments (MIIV-GMM) estimators for latent variable models. Psychometrika, 79(1), 20–50. https://doi.org/10.1007/s11336-013-9335-3

Bollen, K. A., & Pearl, J. (2013). Eight myths about causality and structural equation models. In S. L. Morgan (Ed.), Handbook of causal analysis for social research (pp. 301–328). Springer. https://doi.org/10.1007/978-94-007-6094-3_15

Borsboom, D., Mellenbergh, G. J., & van Heerden, J. (2003). The theoretical status of latent variables. Psychological Review, 110(2), 203–19. https://doi.org/10.1037/0033-295X.110.2.203

Bowden, R. J., & Turkington, D. A. (1985). Instrumental variables. Cambridge University Press. https://doi.org/10.1017/CCOL0521262410