Abstract

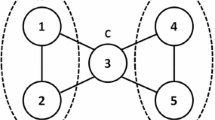

When latent variables are used as outcomes in regression analysis, a common way to address the otherwise ignored measurement error is to take a multilevel perspective on item response theory (IRT) modeling. Recent computational advances allow efficient and accurate estimation of multilevel IRT models. Even so, we argue that a two-stage divide-and-conquer strategy still has unique advantages. Within the two-stage framework, we introduce three methods that account for heteroscedastic measurement error in the dependent variable in the stage II analysis: the closed-form marginal MLE, the expectation maximization (EM) algorithm, and the moment estimation method. We compare them with naïve two-stage estimation and with one-stage MCMC estimation. A simulation study compares the five methods in terms of model parameter recovery and standard error estimation. We also discuss the pros and cons of each method to provide guidelines for practitioners. Finally, a real data example using the National Education Longitudinal Study of 1988 (NELS:88) illustrates how the various methods are applied.


Notes

The T-score is a standardized score that is in fact a transformation of an IRT \(\theta \) score.

In principle, \({\varvec{\Sigma }}_{u}\) must be constrained to be nonnegative definite. However, this is not a box constraint, and box constraints are the only kind the R function “optim” can handle. We therefore impose box constraints on the variance and correlation terms instead.
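The note above refers to R's optim. Its L-BFGS-B method (Byrd et al., 1995) accepts only a lower and an upper bound for each parameter. The Python sketch below illustrates the same idea with SciPy's `minimize(method="L-BFGS-B")`. It parameterizes a 2 × 2 \({\varvec{\Sigma }}_{u}\) by two variances and one correlation and fits it to simulated mean-zero bivariate normal data. The function names, bounds, starting values, and likelihood are illustrative assumptions, not the authors' code. For a 2 × 2 matrix, positive variances and a correlation in (-1, 1) are sufficient for positive definiteness. For larger matrices, bounds on the pairwise correlations alone do not guarantee it.

```python
import numpy as np
from scipy.optimize import minimize


def build_cov(params):
    """Map box-constrained (var0, var1, rho) to a 2 x 2 covariance matrix."""
    var0, var1, rho = params
    cov01 = rho * np.sqrt(var0 * var1)
    return np.array([[var0, cov01], [cov01, var1]])


def neg_loglik(params, u):
    """Negative log-likelihood of mean-zero bivariate normal rows u (N x 2)."""
    sigma = build_cov(params)
    n, d = u.shape
    _, logdet = np.linalg.slogdet(sigma)
    # Sum over rows of u_i^t Sigma^{-1} u_i
    quad = np.sum(u * np.linalg.solve(sigma, u.T).T)
    return 0.5 * (n * logdet + quad + n * d * np.log(2.0 * np.pi))


def fit_cov(u):
    """Maximize the likelihood subject to simple bounds only (hypothetical helper)."""
    bounds = [(1e-6, None), (1e-6, None), (-0.999, 0.999)]  # variances > 0, |rho| < 1
    start = np.array([1.0, 1.0, 0.0])
    res = minimize(neg_loglik, start, args=(u,), method="L-BFGS-B", bounds=bounds)
    return build_cov(res.x), res


rng = np.random.default_rng(0)
true_cov = np.array([[1.0, 0.3], [0.3, 0.5]])
u = rng.multivariate_normal(np.zeros(2), true_cov, size=5000)
est_cov, res = fit_cov(u)
print(est_cov)
```

Because the mean is fixed at zero, the unconstrained MLE is \(\sum _i u_i u_i^t / N\). The box-constrained fit should land on it whenever the MLE is interior.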

We used listwise deletion because we wanted a complete data set for illustration. Our intention was to evaluate the performance of the different methods without possible interference from missing data. Because we used the item parameters provided by NELS and because our structural model is simple, any bias introduced by listwise deletion can likely be ignored.

References

Adams, R. J., Wilson, M., & Wu, M. (1997). Multilevel item response models: An approach to errors in variables regression. Journal of Educational and Behavioral Statistics, 22(1), 47–76.

Anderson, J. C., & Gerbing, D. W. (1984). The effect of sampling error on convergence, improper solutions, and goodness-of-fit indices for maximum likelihood confirmatory factor analysis. Psychometrika, 49(2), 155–173.

Anderson, J. C., & Gerbing, D. W. (1988). Structural equation modeling in practice: A review and recommended two-step approach. Psychological Bulletin, 103, 411–423.

Bacharach, V. R., Baumeister, A. A., & Furr, R. M. (2003). Racial and gender science achievement gaps in secondary education. The Journal of Genetic Psychology, 164(1), 115–126.

Baker, F. B., & Kim, S.-H. (2004). Item response theory: Parameter estimation techniques. New York: Dekker.

Bianconcini, S., & Cagnone, S. (2012). A general multivariate latent growth model with applications to student achievement. Journal of Educational and Behavioral Statistics, 37, 339–364.

Bollen, K. A. (1989). Structural equations with latent variables. New York: Wiley.

Broyden, C. G. (1970). The convergence of a class of double-rank minimization algorithms 1. General considerations. IMA Journal of Applied Mathematics, 6, 76.

Buonaccorsi, J. P. (1996). Measurement error in the response in the general linear model. Journal of the American Statistical Association, 91(434), 633–642.

Burt, R. S. (1973). Confirmatory factor-analytic structures and the theory construction process. Sociological Methods and Research, 2(2), 131–190.

Burt, R. S. (1976). Interpretational confounding of unobserved variables in structural equation models. Sociological Methods and Research, 5(1), 3–52.

Byrd, R. H., Lu, P., Nocedal, J., & Zhu, C. (1995). A limited memory algorithm for bound constrained optimization. SIAM Journal on Scientific Computing, 16, 1190–1208.

Cai, L. (2008). SEM of another flavour: Two new applications of the supplemented EM algorithm. British Journal of Mathematical and Statistical Psychology, 61(2), 309–329.

Carroll, R., Ruppert, D., Stefanski, L., & Crainiceanu, C. (2006). Measurement error in nonlinear models: A modern perspective (2nd ed.). London: Chapman and Hall.

Chang, H., & Stout, W. (1993). The asymptotic posterior normality of the latent trait in an IRT model. Psychometrika, 58, 37–52.

Cohen, A. S., Bottge, B. A., & Wells, C. S. (2001). Using item response theory to assess effects of mathematics instruction in special populations. Exceptional Children, 68(1), 23–44. https://doi.org/10.1177/001440290106800102.

Congdon, P. (2001). Bayesian statistical modeling. Chichester: Wiley.

De Boeck, P., & Wilson, M. (2004). A framework for item response models. New York: Springer.

De Fraine, B., Van Damme, J., & Onghena, P. (2007). A longitudinal analysis of gender differences in academic self-concept and language achievement: A multivariate multilevel latent growth approach. Contemporary Educational Psychology, 32(1), 132–150.

Dempster, A. P., Laird, N. M., & Rubin, D. B. (1977). Maximum likelihood from incomplete data via the EM algorithm. Journal of the Royal Statistical Society Series B (Methodological), 39, 1–38.

Devanarayan, V., & Stefanski, L. (2002). Empirical simulation extrapolation for measurement error models with replicate measurements. Statistics and Probability Letters, 59, 219–225.

Diakow, R. (2010). The use of plausible values in multilevel modeling. Unpublished master's thesis. Berkeley: University of California.

Diakow, R. P. (2013). Improving explanatory inferences from assessments. Unpublished doctoral dissertation. University of California, Berkeley.

Drechsler, J. (2015). Multiple imputation of multilevel missing data—Rigor versus simplicity. Journal of Educational and Behavioral Statistics, 40(1), 69–95.

Fan, X., Chen, M., & Matsumoto, A. R. (1997). Gender differences in mathematics achievement: Findings from the National Education Longitudinal Study of 1988. Journal of Experimental Education, 65(3), 229–242.

Fletcher, R. (1970). A new approach to variable metric algorithms. The Computer Journal, 13, 317.

Fox, J.-P. (2010). Bayesian item response theory modeling: Theory and applications. New York: Springer.

Fox, J.-P., & Glas, C. A. (2001). Bayesian estimation of a multilevel IRT model using Gibbs sampling. Psychometrika, 66(2), 271–288.

Fox, J.-P., & Glas, C. A. (2003). Bayesian modeling of measurement error in predictor variables using item response theory. Psychometrika, 68(2), 169–191.

Fuller, W. (2006). Measurement error models (2nd ed.). New York, NY: Wiley.

Goldfarb, D. (1970). A family of variable metric updates derived by variational means. Mathematics of Computation, 24, 23–26.

Goldhaber, D. D., & Brewer, D. J. (1997). Why don’t schools and teachers seem to matter? Assessing the impact of unobservables on educational productivity. The Journal of Human Resources, 32(3), 505–523.

Hill, H. C., Rowan, B., & Ball, D. L. (2005). Effects of teachers’ mathematical knowledge for teaching on student achievement. American Educational Research Journal, 42(2), 371–406.

Hong, G., & Yu, B. (2007). Early-grade retention and children’s reading and math learning in elementary years. Educational Evaluation and Policy Analysis, 29, 239–261.

Hsiao, Y., Kwok, O., & Lai, M. (2018). Evaluation of two methods for modeling measurement errors when testing interaction effects with observed composite scores. Educational and Psychological Measurement, 78, 181–202.

Jeynes, W. H. (1999). Effects of remarriage following divorce on the academic achievement of children. Journal of Youth and Adolescence, 28(3), 385–393. https://doi.org/10.1023/A:1021641112640.

Kamata, A. (2001). Item analysis by the hierarchical generalized linear model. Journal of Educational Measurement, 38, 79–93.

Khoo, S., West, S., Wu, W., & Kwok, O. (2006). Longitudinal methods. In M. Eid & E. Diener (Eds.), Handbook of psychological measurement: A multimethod perspective (pp. 301–317). Washington, DC: APA.

Koedel, C., Leatherman, R., & Parsons, E. (2012). Test measurement error and inference from value-added models. The B. E. Journal of Economic Analysis and Policy, 12, 1–37.

Kohli, N., Hughes, J., Wang, C., Zopluoglu, C., & Davison, M. L. (2015). Fitting a linear–linear piecewise growth mixture model with unknown knots: A comparison of two common approaches to inference. Psychological Methods, 20(2), 259.

Kolen, M. J., Hanson, B. A., & Brennan, R. L. (1992). Conditional standard errors of measurement for scale scores. Journal of Educational Measurement, 29, 285–307.

Lee, S., & Song, X. (2003). Bayesian analysis of structural equation models with dichotomous variables. Statistics in Medicine, 22, 3073–3088.

Lindstrom, M. J., & Bates, D. (1988). Newton–Raphson and EM algorithms for linear mixed-effects models for repeated measure data. Journal of the American Statistical Association, 83, 1014–1022.

Liu, Y., & Yang, J. (2018). Bootstrap-calibrated interval estimates for latent variable scores in item response theory. Psychometrika, 83, 333–354.

Lockwood, J. R., & McCaffrey, D. F. (2014). Correcting for test score measurement error in ANCOVA models for estimating treatment effects. Journal of Educational and Behavioral Statistics, 39, 22–52.

Lu, I. R., Thomas, D. R., & Zumbo, B. D. (2005). Embedding IRT in structural equation models: A comparison with regression based on IRT scores. Structural Equation Modeling, 12(2), 263–277.

Magis, D., & Raiche, G. (2012). On the relationships between Jeffreys modal and weighted likelihood estimation of ability under logistic IRT models. Psychometrika, 77, 163–169.

Meng, X. (1994). Multiple-imputation inferences with uncongenial sources of input. Statistical Science, 10, 538–573.

Meng, X., & Rubin, D. (1993). Maximum likelihood estimation via the ECM algorithm: A general framework. Biometrika, 80, 267–278.

Mislevy, R. J., Beaton, A. E., Kaplan, B., & Sheehan, K. M. (1992). Estimating population characteristics from sparse matrix samples of item responses. Journal of Educational Measurement, 29(2), 133–161.

Monseur, C., & Adams, R. J. (2009). Plausible values: How to deal with their limitations. Journal of Applied Measurement, 10(3), 320–334.

Murphy, K. (2007). Conjugate Bayesian analysis of the Gaussian distribution. Online file at https://www.cs.ubc.ca/~murphyk/Papers/bayesGauss.pdf

Nelder, J. A., & Mead, R. (1965). A simplex algorithm for function minimization. Computer Journal, 7, 308–313.

Nussbaum, E., Hamilton, L., & Snow, R. (1997). Enhancing the validity and usefulness of large-scale educational assessment: IV. NELS:88 Science achievement to 12th grade. American Educational Research Journal, 34, 151–173.

Pastor, D. A., & Beretvas, N. S. (2006). Longitudinal Rasch modeling in the context of psychotherapy outcomes assessment. Applied Psychological Measurement, 30, 100–120.

Pinheiro, J. C., & Bates, D. M. (1995). Approximations to the log-likelihood function in the nonlinear mixed-effects model. Journal of Computational and Graphical Statistics, 4(1), 12–35.

Rabe-Hesketh, S., & Skrondal, A. (2008). Multilevel and longitudinal modeling using Stata. College Station, TX: Stata Press.

Rabe-Hesketh, S., Skrondal, A., & Pickles, A. (2004). GLLAMM manual. Oakland/Berkeley: University of California/Berkeley Electronic Press.

Raudenbush, S. W., & Bryk, A. S. (1985). Empirical Bayes meta-analysis. Journal of Educational and Behavioral Statistics, 10, 75–98.

Raudenbush, S. W., & Bryk, A. S. (2002). Hierarchical linear models: Applications and data analysis methods. Thousand Oaks, CA: Sage.

Raudenbush, S. W., Bryk, A. S., & Congdon, R. (2004). HLM 6 for windows (computer software). Lincolnwood, IL: Scientific Software International.

Raudenbush, S. W., & Liu, X. (2000). Statistical power and optimal design for multisite randomized trials. Psychological Methods, 5(2), 199.

Rijmen, F., Vansteelandt, K., & De Boeck, P. (2008). Latent class models for diary method data: Parameter estimation by local computations. Psychometrika, 73(2), 167–182.

Rosseel, Y. (2012). Lavaan: An R package for structural equation modeling. Journal of Statistical Software, 48(2), 1–36. https://doi.org/10.18637/jss.v048.i02.

Shang, Y. (2012). Measurement error adjustment using the SIMEX method: An application to student growth percentiles. Journal of Educational Measurement, 49, 446–465.

Shanno, D. F. (1970). Conditioning of quasi-Newton methods for function minimization. Mathematics of Computation, 24, 647–656.

Sirotnik, K., & Wellington, R. (1977). Incidence sampling: An integrated theory for “matrix sampling”. Journal of Educational Measurement, 14, 343–399.

Skrondal, A., & Kuha, J. (2012). Improved regression calibration. Psychometrika, 77, 649–669.

Skrondal, A., & Laake, P. (2001). Regression among factor scores. Psychometrika, 66(4), 563–575.

Skrondal, A., & Rabe-Hesketh, S. (2004). Generalized latent variable modeling: Multilevel, longitudinal, and structural equation models. Boca Raton: CRC Press.

StataCorp. (2011). Stata statistical software: Release 12. College Station, TX: StataCorp LP.

Stoel, R. D., Garre, F. G., Dolan, C., & Van Den Wittenboer, G. (2006). On the likelihood ratio test in structural equation modeling when parameters are subject to boundary constraints. Psychological Methods, 11(4), 439.

Thompson, N., & Weiss, D. (2011). A framework for the development of computerized adaptive tests. Practical Assessment, Research and Evaluation, 16(1). http://pareonline.net/getvn.asp?v=16&n=1.

Tian, W., Cai, L., Thissen, D., & Xin, T. (2013). Numerical differentiation methods for computing error covariance matrices in item response theory modeling: An evaluation and a new proposal. Educational and Psychological Measurement, 73(3), 412–439.

van der Linden, W. J., & Glas, C. A. W. (Eds.). (2010). Elements of adaptive testing (Statistics for social and behavioral sciences series). New York: Springer.

Verhelst, N. (2010). IRT models: Parameter estimation, statistical testing and application in EER. In B. P. Creemers, L. Kyriakides, & P. Sammons (Eds.), Methodological advances in educational effectiveness research (pp. 183–218). New York: Routledge.

von Davier, M., & Sinharay, S. (2007). An importance sampling EM algorithm for latent regression models. Journal of Educational and Behavioral Statistics, 32(3), 233–251.

Wang, C. (2015). On latent trait estimation in multidimensional compensatory item response models. Psychometrika, 80, 428–449.

Wang, C., Kohli, N., & Henn, L. (2016). A second-order longitudinal model for binary outcomes: Item response theory versus structural equation modeling. Structural Equation Modeling: A Multidisciplinary Journal, 23, 455–465.

Wang, C., & Nydick, S. (2015). Comparing two algorithms for calibrating the restricted non-compensatory multidimensional IRT model. Applied Psychological Measurement, 39, 119–134.

Warm, T. A. (1989). Weighted likelihood estimation of ability in item response theory. Psychometrika, 54, 427–450.

Ye, F. (2016). Latent growth curve analysis with dichotomous items: Comparing four approaches. British Journal of Mathematical and Statistical Psychology, 69, 43–61.

Zwinderman, A. H. (1991). A generalized Rasch model for manifest predictors. Psychometrika, 56(4), 589–600.

Acknowledgements

This project is supported by IES R305D160010 and NSF SES-1659328.


Appendices

Appendix A: Closed-form Marginal Likelihood

In this appendix, we provide detailed derivations of the closed-form marginal likelihood for a general model in which the latent growth curve model uses different design matrices for the fixed and random effects. That is, Eq. (3) in the paper is updated as

\[\varvec{\theta }_i = \varvec{X}_i\varvec{\beta } + \varvec{Z}_i\varvec{u}_i + \varvec{e}_i.\]

The subscript i on \(\varvec{X}_i\) and \(\varvec{Z}_i\) indicates that the model allows for an unbalanced design.

Given (1) and the measurement error model, the marginal likelihood of the structural parameters, \(L(\beta , \varvec{\Sigma }_u, \sigma ^2)\), is proportional to

where \(|\cdot |\) denotes the determinant of a matrix and \(||\theta ||^2 = \theta ^t\theta \).
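The sketch below is a numerical companion to this derivation. It evaluates a marginal log-likelihood of the same type under stated assumptions:

- The stage I estimate is \(\hat{\varvec{\theta }}_i = \varvec{\theta }_i + \varvec{\epsilon }_i\) with \(\varvec{\epsilon }_i \sim N(\varvec{0}, \varvec{V}_i)\), where \(\varvec{V}_i\) is a known diagonal matrix of squared standard errors that may differ across persons and waves.
- \(\varvec{u}_i \sim N(\varvec{0}, \varvec{\Sigma }_u)\).
- \(\varvec{e}_i \sim N(\varvec{0}, \sigma ^2\varvec{I})\).

Integrating out \(\varvec{u}_i\) and \(\varvec{\theta }_i\) then gives \(\hat{\varvec{\theta }}_i \sim N(\varvec{X}_i\varvec{\beta }, \varvec{Z}_i\varvec{\Sigma }_u\varvec{Z}_i^t + \sigma ^2\varvec{I} + \varvec{V}_i)\). The function names and the simulated design are hypothetical, and the code is not the authors' implementation. The Monte Carlo part only checks that this implied covariance is correct.

```python
import numpy as np
from scipy.stats import multivariate_normal


def marginal_cov(Z_i, sigma_u, sigma2, V_i):
    """Marginal covariance of theta_hat_i: Z_i Sigma_u Z_i^t + sigma^2 I + V_i."""
    n_i = Z_i.shape[0]
    return Z_i @ sigma_u @ Z_i.T + sigma2 * np.eye(n_i) + V_i


def marginal_loglik(beta, sigma_u, sigma2, theta_hat, X, Z, V):
    """Sum of per-person marginal log-likelihoods; lists allow unbalanced designs."""
    total = 0.0
    for th_i, X_i, Z_i, V_i in zip(theta_hat, X, Z, V):
        cov_i = marginal_cov(Z_i, sigma_u, sigma2, V_i)
        total += multivariate_normal.logpdf(th_i, mean=X_i @ beta, cov=cov_i)
    return total


# Monte Carlo check of the implied covariance for one hypothetical design
rng = np.random.default_rng(1)
Z = np.column_stack([np.ones(4), np.arange(4.0)])  # linear growth, 4 waves
beta = np.array([0.0, 0.5])
sigma_u = np.array([[0.5, 0.1], [0.1, 0.2]])
sigma2 = 0.3
V = np.diag([0.10, 0.20, 0.15, 0.25])  # heteroscedastic squared standard errors
N = 200_000
u = rng.multivariate_normal(np.zeros(2), sigma_u, size=N)
e = rng.normal(0.0, np.sqrt(sigma2), size=(N, 4))
eps = rng.normal(0.0, np.sqrt(np.diag(V)), size=(N, 4))
theta_hat = Z @ beta + u @ Z.T + e + eps
emp_cov = np.cov(theta_hat, rowvar=False)

# Unbalanced usage: the second person has only three waves
ll = marginal_loglik(beta, sigma_u, sigma2, [theta_hat[0], theta_hat[1, :3]],
                     [Z, Z[:3]], [Z, Z[:3]], [V, V[:3, :3]])
```

Maximizing such a function over \((\varvec{\beta }, \varvec{\Sigma }_u, \sigma ^2)\) would give a marginal MLE under these assumptions.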

The coefficient of the quadratic term in \(\varvec{u}_i\) in the exponent is

and the coefficient of the linear term in \(u_i\) in the exponent is

Thus,

where

For multivariate models, let \(\varvec{\theta }_i=(\theta _{i11},\ldots ,\theta _{i1T},\ldots ,\theta _{iD1},\ldots ,\theta _{iDT})^t\) be an \(n_iD \times 1\) vector, where \(n_i\) denotes the number of measurement waves for person i. In general form, the associative latent growth curve model still takes the same form as in (1). However, \(\varvec{X}_i\) becomes an \(n_iD \times Dp\) design matrix, and \(\varvec{\beta } = (\beta _{01}, \beta _{02},\ldots , \beta _{0D}, \beta _{11}, \beta _{12},\ldots , \beta _{1D},\ldots , \beta _{(p-1)1},\ldots , \beta _{(p-1)D})^t\) is a \(Dp \times 1\) vector. Similarly, \(\varvec{Z}_i\) is an \(n_iD \times Dk\) design matrix, assuming there are k random effects for each dimension. In our simulation setting, \(n_i=4\) and \(p=k\); hence, \(\varvec{Z}\) takes the same form as \(\varvec{X}\).
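The sketch below takes the orderings of \(\varvec{\theta }_i\) and \(\varvec{\beta }\) stated above literally. It shows how a per-wave design matrix (\(n_i \times p\)) can be expanded into \(\varvec{X}_i\); with \(p=k\), \(\varvec{Z}_i\) would be built the same way. The function name and the linear growth example (time scores 0 to 3, D = 2) are hypothetical.

```python
import numpy as np


def multivariate_design(x, D):
    """Expand a per-wave design x (n_i x p) into the (n_i*D) x (D*p) matrix X_i.

    Rows follow theta_i = (theta_i11, ..., theta_i1T, ..., theta_iD1, ..., theta_iDT),
    i.e., all waves of dimension 1 first. Columns follow
    beta = (beta_01, ..., beta_0D, beta_11, ..., beta_1D, ...).
    """
    n, p = x.shape
    X = np.zeros((n * D, D * p))
    for d in range(D):          # dimension
        for t in range(n):      # measurement wave
            for j in range(p):  # growth coefficient
                X[d * n + t, j * D + d] = x[t, j]
    return X


x = np.column_stack([np.ones(4), np.arange(4.0)])  # intercept and linear slope
X = multivariate_design(x, D=2)
beta = np.array([1.0, -1.0, 0.5, 0.2])  # (beta_01, beta_02, beta_11, beta_12)
print(X @ beta)
```

Here \(\varvec{X}_i\varvec{\beta }\) gives \(1 + 0.5t\) for the first dimension and \(-1 + 0.2t\) for the second, for t = 0, ..., 3.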

\(\varvec{u}_i\) is a \(Dk \times 1\) vector with covariance matrix \(\varvec{\Sigma }_u\). \(e_i = (e_{i11},\ldots ,e_{i1T},\ldots ,e_{iD1},\ldots ,e_{iDT})^t\) is an \(n_iD \times 1\) vector of residuals. The covariance matrix of \(e_i\), \(\varvec{\Sigma }\), is block diagonal with the structure \(\begin{pmatrix} \varvec{\Sigma } &{}\quad 0 &{}\quad \cdots &{}\quad 0 \\ 0 &{}\quad \varvec{\Sigma } &{}\quad \cdots &{}\quad 0 \\ \vdots &{}\quad \vdots &{}\quad \ddots &{}\quad \vdots \\ 0 &{}\quad 0 &{}\quad \cdots &{}\quad \varvec{\Sigma }\\ \end{pmatrix}_{n_iD \times n_iD}\). Here each of the \(n_i\) diagonal blocks is \(\Sigma _{D\times D} = diag((\sigma _1)^2, (\sigma _2)^2,\ldots , (\sigma _D)^2)\). Then the marginal likelihood of the model parameters is:

where

Appendix B: Computational Details of the EM Standard Error (MIRT)

The key component in computing the standard error is the complete-data Fisher information matrix \({\varvec{I}}_{c} ({\hat{\varvec{\psi }}})\). Below, we present the specific forms of its components for the MIRT models; the UIRT models can be treated as a special case. Assuming that a Monte Carlo sampling version of the EM algorithm is used, i.e., Eq. (19), we have

To obtain the second derivatives with respect to the elements in the covariance matrix

where \(x_{p}\) and \(x_{q}\) are any two elements of the covariance matrix \(\hat{\Sigma }_{u}\). For instance, in the UIRT setup, to take the second derivative of the log-likelihood with respect to \(\tau _{00}\), we set \(x_{p}=x_{q}=\tau _{00}\) in (B4), so that \(\frac{\partial \hat{\Sigma }_{u}}{\partial \tau _{00}}=\left[ {\begin{array}{c@{\quad }c} 1 &{} 0\\ 0 &{} 0\\ \end{array} } \right] \). The parameters in (B1)–(B4) are the final estimates upon convergence. Hence, the Fisher information matrix for the complete data has the following form:

The information matrix can also be obtained similarly if a closed-form conditional expectation is obtained.
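The sketch below illustrates the kind of second derivative with respect to covariance elements that (B4) involves. It assumes complete-data residuals \(\varvec{r}_i\) (e.g., \(\varvec{\pi }_i - \varvec{\beta }\)) distributed as \(N(\varvec{0}, \Sigma _u)\). The covariance part of the complete-data log-likelihood is then \(\ell = -\frac{N}{2}\log |\Sigma _u| - \frac{1}{2}\mathrm {tr}(\Sigma _u^{-1}S)\) with \(S = \sum _i \varvec{r}_i\varvec{r}_i^t\). Write \(A_p = \partial \Sigma _u/\partial x_p\). Because \(\Sigma _u\) is linear in \(\tau _{00}, \tau _{01}, \tau _{11}\), the Hessian is

\[\frac{\partial ^2 \ell }{\partial x_p \partial x_q} = \frac{N}{2}\mathrm {tr}(\Sigma _u^{-1}A_q\Sigma _u^{-1}A_p) - \frac{1}{2}\mathrm {tr}(\Sigma _u^{-1}A_q\Sigma _u^{-1}A_p\Sigma _u^{-1}S) - \frac{1}{2}\mathrm {tr}(\Sigma _u^{-1}A_p\Sigma _u^{-1}A_q\Sigma _u^{-1}S).\]

In a Monte Carlo EM, S would be replaced by its average over the Monte Carlo draws. The function names are hypothetical, and the formula is not claimed to match (B4) term for term. The test compares it with central finite differences.

```python
import numpy as np


def cov_hessian(sigma_u, S, N, dirs):
    """Hessian of l = -N/2 log|Sigma_u| - 1/2 tr(Sigma_u^{-1} S) with respect to
    linear covariance parameters x_p, where dirs[p] = dSigma_u/dx_p."""
    inv = np.linalg.inv(sigma_u)
    k = len(dirs)
    H = np.zeros((k, k))
    for p in range(k):
        for q in range(k):
            Ap, Aq = dirs[p], dirs[q]
            H[p, q] = (0.5 * N * np.trace(inv @ Aq @ inv @ Ap)
                       - 0.5 * np.trace(inv @ Aq @ inv @ Ap @ inv @ S)
                       - 0.5 * np.trace(inv @ Ap @ inv @ Aq @ inv @ S))
    return H


def loglik(tau, S, N):
    """Covariance part of the complete-data log-likelihood, tau = (tau00, tau01, tau11)."""
    sigma_u = np.array([[tau[0], tau[1]], [tau[1], tau[2]]])
    _, logdet = np.linalg.slogdet(sigma_u)
    return -0.5 * N * logdet - 0.5 * np.trace(np.linalg.solve(sigma_u, S))


# dSigma_u/dtau00, dSigma_u/dtau01 (tau01 appears in two cells), dSigma_u/dtau11
dirs = [np.array([[1.0, 0.0], [0.0, 0.0]]),
        np.array([[0.0, 1.0], [1.0, 0.0]]),
        np.array([[0.0, 0.0], [0.0, 1.0]])]

rng = np.random.default_rng(2)
tau = np.array([0.6, 0.1, 0.3])
sigma_u = np.array([[tau[0], tau[1]], [tau[1], tau[2]]])
r = rng.multivariate_normal(np.zeros(2), sigma_u, size=50)  # hypothetical residuals
S, N = r.T @ r, r.shape[0]
H = cov_hessian(sigma_u, S, N, dirs)
info = -H  # complete-data information for (tau00, tau01, tau11)
```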

Appendix C: The MCMC Algorithm

A Metropolis–Hastings within Gibbs sampler is used. For ease of exposition, we rewrite the linear mixed model in (3) as follows:

Conjugate priors are selected whenever available. Below is an outline of the sampling scheme. At the \((m+1)^{\mathrm {th}}\) iteration, we have

Step 1: Sample \({{{\varvec{\uptheta }}}}_{i}^{*} \sim N({{{\varvec{\uptheta }} }}_{i}^{(m)},\sigma _{\theta }^{2} )\) and \(\mathrm{\mathbf{u}}_{i} \sim U( {0,1} )\), and set \(\theta _{it}^{(m+1)} =\theta _{it}^{*} \) when

where \(\varvec{\pi }_{i}={(\pi _{0i},\pi _{1i})}^{t}\), \(L({\varvec{Y}}_{i} \vert \theta _{it}^{*} )\) is the likelihood obtained from the item responses, and \(P({{{\varvec{\uptheta }} }}_{i}^{*} \vert {\mathbf{X}{\varvec{\uppi }} }_{i}^{(m)},\sigma ^{2(m)})\) is the normal density with mean \({\varvec{X}}_{i} {{{\varvec{\uppi }} }}_{i}^{(m)} \) and variance \(\sigma ^{2(m)}\).
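Step 1 is a random-walk Metropolis–Hastings update. The sketch below shows one such update for a single \(\theta _{it}\). For illustration only, it assumes a two-parameter logistic (2PL) likelihood for the item responses; the function names, item parameters, and proposal standard deviation are hypothetical. The prior mean and variance play the roles of the t-th element of \({\varvec{X}}_{i}{\varvec{\pi }}_{i}^{(m)}\) and \(\sigma ^{2(m)}\). Because the proposal is symmetric, the acceptance probability reduces to the ratio of (likelihood × normal density) at the proposed and current values.

```python
import numpy as np


def log_lik_2pl(theta, y, a, b):
    """Log-likelihood of binary responses y under an assumed 2PL model."""
    z = a * (theta - b)
    # log P(y=1) = -log(1 + e^{-z}),  log P(y=0) = -log(1 + e^{z})
    return np.sum(-y * np.logaddexp(0.0, -z) - (1 - y) * np.logaddexp(0.0, z))


def mh_theta_step(theta, y, a, b, prior_mean, prior_var, step_sd, rng):
    """One random-walk Metropolis-Hastings update of a single theta_it."""
    proposal = rng.normal(theta, step_sd)

    def log_target(t):
        return log_lik_2pl(t, y, a, b) - 0.5 * (t - prior_mean) ** 2 / prior_var

    # Symmetric proposal: accept with probability min(1, target ratio)
    if np.log(rng.uniform()) < log_target(proposal) - log_target(theta):
        return proposal, True
    return theta, False


# Sanity check: with no item responses the chain should sample the N(0.5, 1) prior
rng = np.random.default_rng(3)
empty = np.array([])
theta, draws = 0.0, []
for _ in range(20_000):
    theta, _ = mh_theta_step(theta, empty, empty, empty, 0.5, 1.0, 1.0, rng)
    draws.append(theta)
draws = np.array(draws[1_000:])  # discard burn-in
```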

Step 2: Sample \(\pi _{i}^{(m+1)} \) from the multivariate normal distribution with covariance

and mean

Step 3: Sample \(\sigma ^{2(m+1)}\) from the full conditional distribution

where the prior distribution of \(\sigma ^{2}\) is Inv-Gamma(\(\alpha _{0},\alpha _{1}\)). We selected \(\alpha _{0}=.0001\) and \(\alpha _{1}=1\) (Congdon, 2001) as the hyper-parameters of a noninformative prior distribution.
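Steps 2 and 3 are standard conjugate Gibbs draws. The sketch below assumes that the rewritten model has \(\varvec{\theta }_i \mid \varvec{\pi }_i \sim N(\varvec{X}_i\varvec{\pi }_i, \sigma ^2\varvec{I})\) and \(\varvec{\pi }_i \sim N(\varvec{\beta }, \varvec{\Sigma }_u)\). Under that assumption, the full conditional of \(\varvec{\pi }_i\) is normal:

- Its covariance is \((\varvec{X}_i^t\varvec{X}_i/\sigma ^2 + \varvec{\Sigma }_u^{-1})^{-1}\).
- Its mean is that covariance times \((\varvec{X}_i^t\varvec{\theta }_i/\sigma ^2 + \varvec{\Sigma }_u^{-1}\varvec{\beta })\).

For Step 3, we also assume that \(\alpha _0\) is the shape and \(\alpha _1\) the scale of the inverse-gamma prior, with density proportional to \(x^{-\alpha _0-1}e^{-\alpha _1/x}\). This reading of the hyper-parameters is our assumption. The posterior is then Inv-Gamma(\(\alpha _0 + n/2, \alpha _1 + \frac{1}{2}\sum r^2\)), where the n residuals \(r = \theta _{it} - (\varvec{X}_i\varvec{\pi }_i)_t\) run over all persons and waves. Function names and data are hypothetical.

```python
import numpy as np


def pi_full_conditional(theta_i, X_i, beta, sigma_u, sigma2):
    """Step 2: mean and covariance of pi_i given theta_i, beta, Sigma_u, sigma^2."""
    prec_u = np.linalg.inv(sigma_u)
    cov = np.linalg.inv(X_i.T @ X_i / sigma2 + prec_u)
    mean = cov @ (X_i.T @ theta_i / sigma2 + prec_u @ beta)
    return mean, cov


def sample_sigma2(resid, alpha0, alpha1, rng):
    """Step 3: draw sigma^2 from Inv-Gamma(alpha0 + n/2, alpha1 + SSR/2),
    treating alpha0 as the shape and alpha1 as the scale (an assumption)."""
    shape_n = alpha0 + resid.size / 2.0
    scale_n = alpha1 + 0.5 * np.sum(resid ** 2)
    # If G ~ Gamma(shape_n, 1), then scale_n / G ~ Inv-Gamma(shape_n, scale_n)
    return scale_n / rng.gamma(shape_n)


rng = np.random.default_rng(4)
X_i = np.column_stack([np.ones(4), np.arange(4.0)])  # hypothetical linear growth design
theta_i = np.array([0.1, 0.7, 0.9, 1.6])             # hypothetical current theta draws
mean, cov = pi_full_conditional(theta_i, X_i, np.zeros(2),
                                np.array([[0.5, 0.1], [0.1, 0.2]]), 0.3)
pi_draw = rng.multivariate_normal(mean, cov)

resid = rng.normal(0.0, np.sqrt(0.4), size=20_000)   # hypothetical residuals
sigma2_draws = np.array([sample_sigma2(resid, 0.0001, 1.0, rng) for _ in range(2_000)])
```

With a very diffuse \(\varvec{\Sigma }_u\), the Step 2 mean approaches the per-person least-squares fit of \(\varvec{\theta }_i\) on \(\varvec{X}_i\).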

Step 4: Sample \({\varvec{\upbeta }}^{(m+1)}\) and \({{\varvec{\Sigma }}}_{u}^{(m+1)} \) from the normal inverse Wishart distribution with parameters \(( {{{\varvec{\upmu } }}_{{\varvec{\upbeta }}| {{\varvec{\uppi }}} }^{(m+1)},\kappa _{n},{{\varvec{\Sigma }}}_{{\mathbf {u}}| {{\varvec{\uppi }}} }^{(m+1)},\nu _{n} } )\). Here

where \({{\bar{\varvec{\uppi }}}}^{(m+1)}=( {\sum \nolimits _i {{\pi _{0i}^{(m+1)} } / {N},} \sum \nolimits _i {{\pi _{1i}^{(m+1)} } / N} })^{t}\). The prior distribution is a normal inverse Wishart distribution with parameters \(({{{\varvec{\upmu } }}_{{\varvec{\upbeta }},0},\kappa _{0},{{\varvec{\Sigma }}}_{u,0},\nu _{0} })\). For the hyper-parameters, \({\varvec{\upmu }}_{{\beta ,0}}\) is a 2-by-1 zero vector, \(\kappa _{{0}}=0\), \({{\varvec{\Sigma }}}_{u,0} \) is a 2-by-2 identity matrix, and \(\nu _{{0}}=-1\), which yields a noninformative prior (Murphy, 2007).
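Step 4 can be sketched with the standard normal inverse Wishart update described by Murphy (2007). With N draws \(\varvec{\pi }_i\), the posterior parameters are:

- \(\kappa _n = \kappa _0 + N\) and \(\nu _n = \nu _0 + N\);
- \(\varvec{\mu }_n = (\kappa _0\varvec{\mu }_0 + N\bar{\varvec{\pi }})/\kappa _n\);
- scale matrix \(\varvec{\Sigma }_{u,0} + S + \frac{\kappa _0 N}{\kappa _n}(\bar{\varvec{\pi }} - \varvec{\mu }_0)(\bar{\varvec{\pi }} - \varvec{\mu }_0)^t\), where S is the centered scatter matrix of the \(\varvec{\pi }_i\).

\(\varvec{\Sigma }_u\) is drawn from the inverse Wishart, and then \(\varvec{\beta } \mid \varvec{\Sigma }_u \sim N(\varvec{\mu }_n, \varvec{\Sigma }_u/\kappa _n)\). We assume this is the intended parameterization. SciPy's `invwishart` uses the same scale convention, with density proportional to \(\exp (-\mathrm {tr}(\text {scale}\,\Sigma ^{-1})/2)\). The function names and simulated data are hypothetical. With \(\kappa _0 = 0\) and \(\nu _0 = -1\) as above, \(\varvec{\mu }_n\) equals \(\bar{\varvec{\pi }}\).

```python
import numpy as np
from scipy.stats import invwishart


def niw_posterior(pi, mu0, kappa0, scale0, nu0):
    """Normal inverse Wishart posterior parameters given rows pi (N x d)."""
    N = pi.shape[0]
    pibar = pi.mean(axis=0)
    centered = pi - pibar
    S = centered.T @ centered  # centered scatter matrix
    kappa_n = kappa0 + N
    nu_n = nu0 + N
    mu_n = (kappa0 * mu0 + N * pibar) / kappa_n
    diff = (pibar - mu0)[:, None]
    scale_n = scale0 + S + (kappa0 * N / kappa_n) * (diff @ diff.T)
    return mu_n, kappa_n, scale_n, nu_n


def sample_beta_sigma_u(pi, rng, mu0=None, kappa0=0.0, scale0=None, nu0=-1.0):
    """Joint draw of (beta, Sigma_u); defaults mirror the hyper-parameters listed above."""
    d = pi.shape[1]
    mu0 = np.zeros(d) if mu0 is None else mu0
    scale0 = np.eye(d) if scale0 is None else scale0
    mu_n, kappa_n, scale_n, nu_n = niw_posterior(pi, mu0, kappa0, scale0, nu0)
    sigma_u = invwishart.rvs(df=nu_n, scale=scale_n, random_state=rng)
    beta = rng.multivariate_normal(mu_n, sigma_u / kappa_n)
    return beta, sigma_u


rng = np.random.default_rng(6)
pi = rng.multivariate_normal([0.2, 0.5], [[0.5, 0.1], [0.1, 0.2]], size=2_000)
beta, sigma_u = sample_beta_sigma_u(pi, rng)
```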


Cite this article

Wang, C., Xu, G. & Zhang, X. Correction for Item Response Theory Latent Trait Measurement Error in Linear Mixed Effects Models. Psychometrika 84, 673–700 (2019). https://doi.org/10.1007/s11336-019-09672-7
