Abstract

Identifying cases of intellectual property violation in multimedia files poses significant challenges for the Internet infrastructure, especially when dealing with extensive document collections. Typically, techniques used to tackle such issues can be categorized into either of two groups: proactive and reactive approaches. This article introduces an approach combining both proactive and reactive solutions to remove illegal uploads on a platform while preventing legal uploads or modified versions of audio tracks, such as parodies, remixes or further types of edits. To achieve this, we have developed a rule-based focused crawler specifically designed to detect copyright infringement on audio files coupled with a visualization environment that maps the retrieved data on a knowledge graph to represent information extracted from audio files. Our system automatically scans multimedia files that are uploaded to a public collection when a user submits a search query, performing an audio information retrieval task only on files deemed legal. We present experimental results obtained from tests conducted by performing user queries on a large music collection, a subset of 25,000 songs and audio snippets obtained from the Free Music Archive library. The returned audio tracks have an associated Similarity Score, a metric we use to determine the quality of the adversarial searches executed by the system. We then proceed with discussing the effectiveness and efficiency of different settings of our proposed system.

Graphical abstract

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The internet has greatly improved the effortless sharing of multimedia content across devices, leading to enhanced efficiency. However, it frequently neglects to prioritize certain fundamental aspects of data. This encompasses the sharing of copyright-protected files, which is challenging to monitor due to the absence of comprehensive and collectively followed Intellectual Property protection laws [1], as well as the complexity of overseeing vast amounts of data transmitted in a decentralized manner. One effective strategy to consider Intellectual Property infringement is to incorporate a digital signature or watermark [2,3,4] into multimedia files using unique encryption keys. Incorporating a digital signature into multimedia files presents a set of challenges that span both methodological and technical considerations. A pivotal obstacle lies in the development of robust algorithms capable of securely embedding watermarks without compromising the integrity of multimedia content. Crucially, visible watermarks should minimally impact user experience, whereas invisible digital signatures necessitate resilience against diverse forms of manipulation. Moreover, compatibility poses another hurdle, as different file formats and compression methods may react differently to watermark embedding techniques. Practical challenges involve the delicate balance between copyright protection and user experience. Intrusive watermarks might deter users from engaging with the content, while insufficiently secure signatures may fail to safeguard intellectual property. Our approach involves utilizing the original data of protected multimedia files while preserving the integrity of the original content. It selectively stores information related to indexing audio files, leaving the core data untouched. To achieve this, we employ a focused Web crawler to gather data pertaining to multimedia files hosted on the internet. Focused crawlers [5] are specialized Web crawlers developed to monitor specific topics or segments of the Web. During their crawling process, they have the capability to selectively filter pages by identifying content relevant to predefined topics in accordance with their On-Line Selection Policy. This enables them to meticulously analyze particular segments of the Web. Our version of the crawler operates in the multimodal content based information retrieval domain, which encompasses searching for information in multimedia data formats such as audio files, typed text or the metadata contained within a file [6]. The primary objective of our crawler is to ascertain whether the retrieved set of documents are relevant to the end user’s objectives while ensuring that the obtained data does not contain any potentially illegal document. Because we’re focused on detecting illegal content, our crawler needs a way to distinguish between legal and illegal uploads. To achieve this, it utilizes deep neural networks (DNNs) to filter out audio tracks that do not comply with copyright laws, referencing a collection of copyrighted tracks as a benchmark. Intellectual property laws present numerous challenges due to their typically vague legal languageFootnote 1 [1], necessitating the exploration of less stringent methods for verifying online data integrity. This task can be likened to a recommendation task [7] where illegal content is “recommended" to a legality verification bot by finding similarity with a reference collection of illicit documents. Additionally, the focused crawler implemented for this paper engages in adversarial information retrieval, meaning that only a portion of the retrieved data is shown to the user as a response to their query, hiding part of the result set. This is beneficial both to the system administrators, as the automatic detection of potentially compromising content frees them from the obligation of manually checking every IP violation, and to the rights holders, as they only need to provide their legitimate copy of the audio track to the repository to prevent illegal uploads from showing up in the result set. Another crucial facet of our approach pertains to its capacity for results obfuscation: rather than simply removing illegal files, our system employs a mechanism to retain an abstract representation of the pertinent subset of files that necessitate concealment from the user [8]. All of the retrieved files violating copyright law are then utilized to enhance the detection capabilities of the DNNs, allowing us to identify more sophisticated modifications of legal audio data. Consequently, server administrators overseeing extensive collections of audio files and website owners can automate the manual monitoring and removal of illicit content on their platforms. This eliminates the need to invest in costly DRM (Digital Rights Management) software [9] or manually analyze and respond to legally gray uses of copyrighted content as they get reported [10]. The retrieved data is not kept in its original form. Instead, It is analyzed to extract the intrinsic information of the audio signal and the textual metadata contained within each audio file. We employ a different methodology for mapping similarities among the abstract representations of audio tracks, leveraging the strengths of deep neural networks. These networks present several advantages in comparison to conventional machine learning techniques [11], including the ability to solve problems of a completely different field than the ones they were originally designed to tackle. The neural networks considered for this study include convolutional neural networks (CNNs) and recurrent neural networks (RNNs). While CNNs must recalculate input data at each execution cycle, RNNs can maintain information from prior cycles, utilizing it to obtain new insights from the input data in subsequent cycles. The similarity assessment in our work relies on a vector of features [12] extracted from the original audio tracks through segmentation and frequency filters. These feature vectors, also known as Mel-filtered Cepstral Coefficients (MFCCs) [13], provide a visual representation of the audio tracks. Further elaboration on MFCCs is provided in Section 3.4. The system makes use of neural networks and metadata for similarity checks. Finally, we use a music knowledge graph to graphically represent the relationship between different audio tracks, their metadata, the intrinsic properties extracted by our system, and concept such as ownership and file legality. This allows us to connect relevant information to groups of retrieved audio files and to search for the correct audio files more efficiently. Our contributions to the field include utilizing Deep Neural Networks to extract descriptive feature vectors from audio tracks, then incorporating the obtained knowledge with node graphs during Adversarial Information Retrieval tasks.

The rest of the paper is structured as follows: In Section 2, we provide an overview of the literature pertaining to the treatment of audio information. This includes discussions on focused crawlers, digital watermarking systems, and machine learning and DNNs-based approaches to Audio Retrieval. Section 3 presents our proposed system, outlining its composing modules and operational behavior. We also present the technical implementation of the system in Section 3.7. In Section 4, we present various experiments and the criteria used to assess the quality and effectiveness of the system. Finally, Section 5 concludes our work, offering insights into the results obtained and potential future enhancements for our model.

2 Related work

While focused Web crawling has been a subject of study for many years, audio recognition has only recently garnered attention, partly due to advancements in the field of DNNs. These advancements have led to the development of several techniques for Web data retrieval and the analysis and recognition of audio tracks. Bifulco et al. [14] analyze the problem of focused crawling for Big Data sources. They divide the problem into two macro-areas: crawling artifacts from the Web with high degrees of significance compared to the driving topic or query of the crawler and matching the properties of crawled artifacts against data stored within local sources of pre-obtained files. Their solution combines machine learning and natural language processing techniques in an intelligent system named Crawling Artefacts of Interest and Matching them Against eNterprise Sources (CAIMANS), which extracts data from the Web, pre-processes it and formats it to enable further analyses while also providing data visualization for the crawled artifacts. Liu et al. [15] discuss the significance of capturing relevant Web resources, particularly through focused crawling methods and especially on more niche parts of the Web. The challenges with traditional approaches the authors have found are related to the difficulty in determining weighted coefficients for evaluating hyperlinks, which can lead to crawler divergence from the intended topic. To address this, they propose a novel framework called Web Space Evolution (WSE), which incorporates multi-objective optimization and a domain ontology to enhance crawling effectiveness. The resulting focused crawler, “FCWSEO", demonstrates high performances in experiments dealing with the retrieval of topic-relevant webpages related to the rainstorm disaster domain. Capuano et al. [16] introduced an ontology-based approach for developing a focused crawler in the cultural heritage domain. They demonstrated how Linked Open Data and Semantic Web concepts are capable of enhancing information retrieval, particularly in terms of semantics. In a related context, Knees, Pohle et al. [17] invented a technique for constructing a search engine optimized for very large audio collections. The focused crawler they realized utilizes metadata from downloaded multimedia files to categorize the host Web pages, filtering out potentially irrelevant information, like publication years or sources. Addressing intellectual property violations performed on multimedia files is a multifaceted challenge. Various techniques have been proposed throughout the years. Some popular methods include those based on digital watermarks: Kim [18] proposed a technique for detecting illegal copies of images protected by copyright by reducing them to 8x8 pixel representations, applying discrete cosine transforms, then using AC coefficients for similarity detection. Dahr and Kim [19] introduced a system to introduce digital signatures in the domain of audio information retrieval, applying watermarks to the content of audio tracks on amplitude peaks using Fast Fourier Transforms. The method ensures watermark integrity even when pitch or tempo is modified, and it can be extracted with the right secure encrypted key. Audio files are much more than just their audio signal. Thanks to the rich metadata content, they can contain many distinguishing information that can be extracted, analyzed, and employed for file legality checks. The .mp3 file format is of particular interest to us. The MP3 (MPEG Audio Layer III) file format is a widely employed standard for audio compression that efficiently stores and transmits digital audio data. Developed by the Moving Picture Experts GroupFootnote 2 (MPEG), the format employs lossy compression, meaning that it reduces file sizes by discarding some of the audio data that the human ear is incapable of picking up, due to lower sensibility to a certain frequency range. Despite this data loss, MP3 files generally maintain high audio quality and have become the de facto standard for digital audio distribution.

The innovation in this paper is mapping information related to .mp3 files to a knowledge graph-based database, which allows us to exploit the rich information and compact file sizes of .mp3 files. The MPEG standard describes several fields that can be attributed to an audio file, such as Label, CD, Track, and many more. A central aspect of our method is using DNNs to derive feature vectors from audio tracks. This technique has proven to be effective not only for audio but also for other domains [20, 21].

Plenty of methods exist to monitor the similarity of features, manipulate them, and extract them from the original audio data using machine learning methods. A few of these approaches employ non-supervised learning approaches to tackle audio similarity issues. For example, Henaff and Jarrett [22] encoded audio tracks using base functions and used support vector machines for classification. Guo and Li [23] also utilized binary SVM techniques, introducing a metric they dubbed Distance-From-Boundary to ascertain the similarity between files. In audio classification, DNNs predominantly adopt supervised learning approaches, where deep learning techniques extract feature vectors from original audio files for tasks like classification and similarity Checking. Rajanna et al. [24] compared classical machine learning techniques in audio classification tasks with neural networks operating on the same data, finding that, on average, DNNs yield the best results. They employed manifold learning to diminish dimensionality after extracting Mel Frequency Cepstral Components [13, 25] from a set of audio tracks. Feature vectors are handy for characterizing audio files, whether for similarity checking, classification, or dimensionality reduction in DNN-based tasks. The choice of features depends heavily on the specific model employed for feature extraction, and various techniques have been described in the literature, as evidenced by studies such as those by Safadi et al. [26]. Deep neural networks are well-suited for extracting feature vectors, offering a way to uniquely visualize intrinsic information of audio data. Becker et al. [27] explored feature extraction using deep neural networks in the audio domain, employing an approach named Layer-Wise Relevance Propagation to identify relevant characteristics. We attempt to distinguish our approach from those in the literature by employing transfer learning from the visual to the audio domain. While traditional transfer learning associates trained weights with related fields, we extend it to address tasks across entirely different domains, such as utilizing visual domain training to tackle audio domain tasks, as demonstrated by Mun and Shon [28].

Our method uses DNNs trained on standard visual classification problems as feature extraction tools in the visual domain, operating on audio files which have been turned into their own unique visual representations.

Another important aspect of our system is the use of knowledge graphs to represent audio files through a graph database in place of classic relational databases. Visualizing audio data through graphs can be immensely helpful when dealing with the commonalities and similarities between them and lets us understand common traits of different pairs of .mp3 files. Cui et al. [29] use a multi-modal Knowledge Graph Convolutional Network (MKGCN) to determine the similarity of different audio tracks and create a recommendation system that takes advantage of the multi-modal knowledge of audio data and the high-order semantic and structural information contained in each item.

Our approach maps the retrieved crawling artifacts to nodes of a graph database to find their common elements. We summarize in Table 1 all the approaches used in this paper.

3 The proposed approach

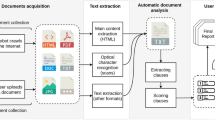

A rule-based focused crawler capable of detecting Copyright infringement (Figure 1) has been implemented to address the previously described issues. The crawler is capable of performing adversarial information retrieval tasks in the audio domain by taking into account the legality of the files. Additionally, the system is highly scalable, considers more abstract notions such as fair use and remixes when dealing with potential copies of legal files, and is capable of analyzing complex relationships between stored representations of the audio files thanks to its knowledge graph database.

We define the focused crawler as “Rule-based". The crawler must follow a set of rules related to the type of audio-files to be retrieved while following different hyperlinks. Unlike its on-line selection policy, these rules are related to the properties of the audio files, such as the file extension, length, date of upload and other information obtained from the metadata of each file. The actual content of the audio track, that is, the audio signal itself, will be later analyzed to extract an abstract representation of the file. However, the rules used to initiate the crawling operations deal solely with the information immediately determined from looking up the audio file. Adversarial information retrieval tasks are then performed on the retrieved audio data. Adversarial information retrieval refers to the study and practice of retrieving relevant information while contending with deliberate attempts to manipulate the retrieved data or mislead in any way the retrieval process. In this context, adversaries may employ various tactics, such as modifying the audio data to mask illegal content, abuse the tagging system using techniques like keyword stuffing or deliberately inject illegal content in the audio collection. In order to maintain the integrity and reliability of search engine results despite adversarial actions, our Focused Crawler interacts with modules that allow it to determine whether the obtained files resemble any of the illegal or otherwise copyright-protected audio files stored in a reference collection. We will discuss the methods employed to determine file illegality in the following paragraphs. The system is divided into the following components:

-

Graphic User Interface (GUI): the system interface that allows users and administrators to send queries to the focused crawler and database;

-

Focused Web crawler: performs audio retrieval tasks on very large repositories (either locally or online) given an initial seed or starting query. The crawler itself has different submodules used to schedule its next visited pages, filter pages according to its selection policy, and download the content of the pages to a local database. This particular instance of the crawler works in the audio retrieval domain and hence returns .mp3 files;

-

Feature vector extractor: part of the pre-processing that deals with extracting information from the retrieved audio signals. The Mel Frequency Cepstral Components obtained from an audio file are visual representations of the intrinsic properties of the track;

-

Metadata extractor: part of the pre-processing that deals with extracting the metadata of each retrieved audio file, which consists of tags written by the uploader of the track to distinguish it from other ones;

-

Knowledge base: all the information retrieved by the crawler (Web pages, .mp3 files, repositories, etc.) as well as all the data pre-processed by the system (extracted Mel Frequency Cepstral Components, metadata, legality flags, etc.) are stored in a graph database. The nodes and relationships between them form a large knowledge graph, which allows us to obtain information on how each track is related to other tracks, as well as possible elements to determine file similarity;

-

Combined similarity scorer: the system combines the data obtained by the metadata and feature vector extractor modules to determine file similarity between newly retrieved audio files and those present in a reference collection, flagging the files that most closely match the references.

The main issue with implementing a focused crawler performing an Adversarial Retrieval task in the audio domain stems from the larger size and wealth of information of the files contained in the browsed repositories. These files are defined by both their content (the audio signal and its characteristics) and the metadata related to the various aspects of their creation (including information on title, authorship, audio codecs, bitrate, and so on). While metadata can be considered a structured text document and thus handled as one, the actual content of the audio file hides an extra layer of complexity. Two audio files with wildly different metadata and different audio signals may still legally refer to the same audio track, even though the two files have very little data in common. While classic Adversarial Retrieval tasks mainly deal with textual documents, blocking certain results is as trivial as checking for specific keywords and their alterations; audio data present a more nuanced problem. Audio files can be modified in several ways, which prevents us from discarding some and saving others using simple rules. To circumvent this issue, the proposed approach uses the intrinsic information of legal audio data to determine whether newer files scanned by the focused crawler must be discarded. The system we have proposed has a highly scalable architecture. This is thanks to the individual crawling processes acting completely independent from each other, retrieving data from disparate parts of the Web and storing their partial results before they are collectively compared to the reference collection. This can potentially allow us to create multiple instances of the same focused crawler on different servers, using sharding and duplication techniques to store an ever increasing amount of audio files which can then be transformed in its abstract, much less space-consuming formats. This paper however will not discuss any distributed architecture, leaving the possibility of creating a multi-server instance of the rule-based focused crawler to future studies.

3.1 System knowledge representation

Our focused crawler analyses the textual metadata and the extracted features for each audio track. However, there is still the need to better contextualize this data within the larger environment that is our music repository. However, we can take advantage of the fact that we are indexing audio files with a .mp3 file extension and use the specific knowledge contained in such files to create a knowledge graph representation of the structure of all repositories involved in the crawling phase. We have used the MPEG-21 audio standard to represent all metadata information pertaining to .mp3 files formally. We have picked .mp3 as our preferred item to query through the focused crawler thanks to the trade-off between the wealth of information stored in a single audio file and its relatively compact size. .mp3 audio files contain extensive information in the form of metadata coupled with the audio signal. This also lets us use a music ontologyFootnote 3 to express general concepts for audio file relationships and metadata through the use of the RDF/XMLFootnote 4 language. The ontology allows the creation of semantic artefacts that let us discern information from raw data, like what a generic audio signal can provide. To formalize the different aspects of the audio files, we can use an ontology-based knowledge graph, which lets us better express the difficulty in dealing with both legal and illegal audio files and show the relationship between them. A knowledge graph specifically built to handle information in the music domain contains the data, sources, and relationships needed to determine file legality once retrieved by the implemented focused crawler. This knowledge graph has been implemented using the NoSQL graph database ArangoDBFootnote 5, which lets us index large amounts of data and their relationship within a moderate amount of disk space, speeding up retrieval, comparison, and feature extraction operations on the audio files. The Knowledge Graph has been implemented using RDF (Resource Description Framework), which allowed us to create rich relationship between the data elements. RDF is a general, standardized framework utilized for representing connected concepts, especially when dealing with Web data. The data of our Knowledge Graph has been represented using RDF Triples, semantic blocks that contain atomic information concepts in the form of !‘Subject?‘ !‘Relationship?‘ Object. For example, the relationship between an author and their song is mapped as !‘Author?‘ !‘Has created?‘ Song. This system is incredibly expressive and allows for quick searches and clustering of the available data. Our focused crawler writes information discovered on the Web or local repositories to the graph-DB instance, mapping metadata and relationships to individual nodes of the graph. Each audio file the crawler finds is divided into its metadata and the corresponding audio data, which is saved as a document and managed by the database in a multi-model manner.

A simplified version of the knowledge graph discussed in this section is shown in Figure 2. A knowledge graph is a structured representation of knowledge that incorporates entities, relationships, and attributes, providing a comprehensive and interconnected view of information related to our problem. The graph represents the elements we are interested in indexing, analyzing, and potentially hiding from the end user. It also shows the relationship between individual elements composing the environment the focused crawler explores. The graph also illustrates a breakdown of .mp3 audio files as defined by the MPEG-21 standard. The elements are divided as such:

-

Audio Tracks: audio signals and the corresponding metadata, they represent tracks a user wishes to listen to;

-

Illegal audio Tracks: audio tracks illegally uploaded to a repository. They can be an exact copy of a legal audio file or a modified version of the original audio track to elude recognition systems;

-

Repositories: containers of audio files that can be accessed either locally or through connecting to the internet;

-

Reference audio Tracks: audio tracks that have been designated as protected by either copyright law or due to a conscious choice by the system administrators;

-

Reference collections: repositories of Reference audio Tracks decoupled from other Repositories;

-

Albums: a group of audio tracks released by the same artists.

The relationships between said elements can be defined depending on which elements are connected to each other. They can be described as:

-

collection-to-audio Track (C-AT): describes the ownership of a certain audio Track to a specific Repository;

-

Reference collection-to-Reference Track (RC-RT): describes the ownership of a certain Reference audio Track to a specific Reference collection, as these files are not visible to the end user;

-

Illegal audio Track-to-Reference Track (IAT-RT): describes the relationship between a legal file and its illegal copy. As this relationship is non-trivial, due to possible modification of the illegal file metadata and properties of its signal, the pair of files must be analyzed first in order to determine possible violations of the repository rules;

-

collection-to-Album (C-AL): describes the presence of a set of audio tracks linked together by a set of similar tags and their relationship to the repository it has been uploaded onto;

-

Audio Track-to-Album (AT-AL): describes the audio tracks contained in a single album, sharing part of its metadata.

Virtually all audio Tracks contained in any Repository can potentially be illegal, but for the sake of clarity, only the IAT-RT relationship has been highlighted. The audio data cannot be directly compared to other reference audio files, as copies may contain alterations that can bypass simple checks on file integrity. However, audio files maintain their intrinsic properties even after being modified, which can be fully exploited to determine file similarity. We will discuss in more depth how we reach a method to assess file similarity in the following subsections.

3.2 Crawler-based architecture

A rule-based focused crawler with obfuscating capabilities has been developed as a software system comprising various interconnected modules designed to provide both versatility and extensibility. This system incorporates a user-friendly graphical interface, a crawling component responsible for file downloads, an indexing component for metadata handling, a reference collection of audio data housing illegal tracks, a neural network employed for extracting feature vectors from the reference tracks, as well as a scoring module responsible for calculating a combined similarity score by computing scores from metrics obtained from other modules. The various components of the system, including the focused crawler itself, have all been implemented using Python modules, which lends itself perfectly to both Web crawling and feature extraction operations thanks to its vast assortment of problem-specific libraries, whereas the indexing component has been developed using Apache SolrFootnote 6. We provide a representation of the system architecture in Figure 3.

Our system waits for user queries to begin operations during idle. These queries can be submitted through the graphical user interface and serve as the trigger for initializing the process of crawling through a document collection, which can either be hosted on a local machine or obtained from a webpage. The Web crawler is an Apache NutchFootnote 7 instance, and its crawling and indexing modules can be invoked by different programs by employing a Python API. It is essential that data requests do not overwhelm the target website. Thus, the Nutch crawler adheres to principles of politeness, ensuring it does not overwhelm the website with an excessive number of requests. Moreover, it respects the crawling protocols stipulated by the owner of the target website, which is outlined in the robots.txt file on the Web server, and explicitly marks itself as a Web crawler to declare its intentions to the website. The Nutch crawler initiates a preliminary filtering process on the acquired data, analyzing the downloaded pages to identify all .mp3 files obtained during the crawling phase, which are the only ones we are interested in maintaining on local storage. We can also specify a depth level for Nutch, so that it may explore further through the directories of a local repository or follow the hyperlinks present in the target Web page. Subsequently, the system proceeds to analyze the pertinent audio files retrieved. These files are initially indexed in an Apache Solr instance, organized based on the metadata that the Nutch Crawler has extracted from each retrieved audio file. This preliminary indexing operation is performed as handling metadata is much less resource-intensive than handling large feature vectors. Thus, the crawler can temporarily store information to aid in its scheduled searches without violating its own selection policy. All the metadata retrieved and stored in the Solr instance is transferred to ArangoDB once the crawling phase is complete. This is done to form relationships between files only once all the files have been successfully retrieved. To determine the similarity between files, the system compares the metadata contained in the files retrieved by the crawler with that found in the reference audio files, deriving textual similarity scores for each pair of files. This scoring process is based on the semantic distance between metadata tags of each pair of files, utilizing Cosine similarity as the underlying metric. If any of the tags of the audio file are missing, our system disregards the contribution of that particular tag to the Cosine similarity calculation. Following this textual analysis, the audio files are converted to .wav format, filtered to determine their visual representation using Mel Frequency Cepstral Coefficients (MFCCs), and finally passed to a module that extracts their feature vectors and compares it to other reference feature vectors. This module contains a trained neural network designed to obtain feature vectors from the tracks. We employ cosine similarity as the technique for comparing these feature vectors to those extracted from the tracks in the reference collection. The system offers flexibility in its execution, allowing it to operate either sequentially or in parallel. In the parallel execution mode, the two scores (textual and feature similarity scores) are computed simultaneously. The scores for each of the pairs of audio files and the ones in the reference collection are then submitted to the scorer module. Within the scorer module, the system computes a combined similarity score for each pair of files using one method chosen among Weighted Sum, an OWA operator, or a textual-feature sequential score. These combined similarity scores are then compared against a predetermined threshold derived from previous results on a partial set of the reference collection. If the score for any pair surpasses the threshold, the analyzed file is marked as potentially illegal. Instead of outright deletion, the system employs obfuscation for the raw data of the flagged file. This way, potential disputes on file legality or erroneous decisions can be solved in the future without having to remove data from the repository. After every combined similarity score is determined, the system selects which files to display based on user queries, concealing flagged illegal files from public view. Every file in the reference collection is already designated as “illegal” to simplify our system, and is thus excluded from user query results to prevent any sensitive data from leaking to malicious users.

3.3 Crawling phase

Figure 4 represents the operations performed during the entire crawling phase.

The Graphic User Interface (GUI) allows users to input queries. System administrators can also access tools to modify the parameters used for the crawling phase and the weights for different scoring systems. These parameters encompass the initial Seed, which can be either the URL of a Web page that must be crawled, or the full path of the directory that the crawler will explore. Additionally, users can provide query keywords to search for within the tags of files slated for indexing. The keywords must be separated by spaces. There is also an option to use a Blacklist, housing Keywords that must be excluded from the results, with the ability to specify its file path. Upon clicking the “Begin Crawl" button, Apache Nutch starts several crawling threads. The GUI communicates with Apache Nutch and Apache Solr using some Python libraries, nutch-api and subprocess. The former interacts with the Apache Nutch Server operating in the background, providing details about the instance of the Solr Server accepting data for indexing and various parameters to configure the crawler. These parameters may include specifications like the level of depth or stopping criteria. The default level of depth of crawling searches is one, meaning that the crawler exclusively preserves files from the main webpage or chosen directory and from the initial pages or subdirectories available. Within the crawling phase, the GUI interacts with a module called crawlerlogic. This module evaluates and assigns legality Flags and legality scores to discovered audio files. The crawling phase results in .mp3 audio files enriched with metadata. Tags contained within the metadata can pertain to the properties of the audio file (bitrate, length, codecs) or can be related to the origin of the file (author, year of release, album), as well as external information (album cover art, spot on a playlist), and so on so forth. This metadata is extracted using Apache Tika. As previously mentioned, the textual similarity scores of each pair of audio file under analysis and the tracks sourced from a reference collection are computed using Cosine similarity. The formula for Cosine similarity is:

where vectors \(\overrightarrow{a}\) and \(\overrightarrow{b}\) must contain elements of a matching data type. Textual similarity scores are computed using two vectors consisting of string arrays correlating with metadata tags. The maximum score occurs when both files share identical metadata, whereas the minimum score occurs when they lack common keywords in their tags. Every textual similarity score generated between pairs of audio files is stored locally on the reference repository and as part of the info saved in the knowledge graph. The scores are then transmitted to the combined similarity scorer module. The acquired audio tracks cannot be directly inserted in the deep neural networks used in a specific crawling phase, as these networks are trained to function within the image processing domain. Consequently, a visual representation of the tracks is imperative to extract their feature vectors. Initially, audio data must be converted from the .mp3 to the .wav file extension. Additionally, we reduce the input dimensionality for the deep neural networks by cropping only 30 seconds’ worth of data out of the modified audio files. For shorter files, an appropriate padding length is appended at the end. The modified files undergo processing to derive their visual representations, characterized by their individual Mel Frequency Cepstral Coefficients.

3.4 Mel frequency cepstral components

Davis and Mermelstein introduced a significant contribution to the field of audio recognition through their development of Mel Frequency Cepstral Coefficients (MFCCs) [13]. These numeric values are distinctive of every audio signal, collectively forming what is known as their Mel Frequency Cepstrum. The term “Cepstrum" [30] denotes the outcome of a mathematical transformation within Fourier Analysis, enabling the study of phenomena related to periodic signals by examining their spectral components. A “mel" denotes a measurement unit for the perceived acoustic intonation of an individual tone, appropriately adjusted to accommodate the sensitivity of the human ear. An MFC is a particular representation of the frequency spectrum of a signal that emerges after applying a logarithmic transformation to the spectrum. We start with the original audio data, as shown in Figure 5. To derive its Mel components, an audio track undergoes a transformation process involving a sequence of triangular filters, as depicted in Figure 6. The filters are spaced so that each preceding filter central frequency aligns with the subsequent filter source frequency. The response of each filter progressively amplifies from its source frequency to its central frequency before gradually diminishing to zero at its end frequency. After this stage is complete, a Discrete Cosine Transform is performed on the newly filtered signal. The objective of this phase is the decorrelation of the coefficients originating from the series of filters. These decorrelated coefficients constitute the mel components of the audio signal. An example of the extraction of MFCCs from an audio signal is illustrated in the following figures. The original audio data is depicted in Figure 5, while 6 shows the triangular filters employed. The formula devised by Davis and Mermelstein for calculating MFCCs considers a sequence of 20 of these triangular filters, and can be described as:

Where M is the number of coefficients that must be retrieved from the input signal, while \(X_{k}\) is the logarithm of the output of the k-th filter.

The output of this process can be seen in Figures 7 and 8. The spectrogram of the modified audio file is analyzed to derive its graphical representation thanks to Mel filtering.

3.5 Feature extraction phase

Following the transformation of the audio tracks in their visual representation, the system proceeds with the extraction of the feature vector of each file. The visual representations serve as inputs to one of the selected neural networks, which have all been pre-trained on a music genre prediction task. The pre-training phase for the selected network is performed on a annotated reference collection which has been annotated with the specific music genre of each track. The central focus of this paper lies on the quality of the features that can be extracted from the audio files, as they can significantly influence the ability of the system to match tracks to similar ones. We extract the hidden layer from the network immediately preceding the classification output layer. The matrix obtainable from the final hidden layer is notably larger than all the preceding ones, encompassing a large amount of information obtained from the low-level features of the input data in order to define the higher-level features. The output of this hidden layer constitutes a unique feature vector for an individual audio file, enabling a comparison with other reference files to assess its legality. The comparison of similar feature vectors across a very large set of audio tracks is essential for correctly identifying illegal copies of reference audio files, even if they underwent various modifications. In our work, we employed various neural networks, including VGG-16 [31], ResNet [32], a Monodimensional VGG-16, and a custom Small Convolutional Network (Small Convnet) containing a reduced amount of internal layers than the reference networks. As for recurrent neural networks, we have chosen to use a Long-Short Term Memory network (LSTM) [33]. An internal batch normalization layer has been added to all the networks in order to accelerate convergence times during their execution of classification tasks.

The VGG-16 and ResNet networks are of particular interest to us. These two networks have been improved thanks to the use of predefined weights obtained from a pre-training on the very popular Imagenet dataset [34]. Transfer learning plays a pivotal role in this context, allowing us to leverage knowledge acquired for one problem to address a completely different one. The weights derived from Imagenet data, used initially to discern object classes in images, are repurposed to identify discontinuities in the visual representation of the power spectrum of the audio tracks. Figure 8 depicts the obtained MFCCs for an audio track. To accommodate our networks minimum input matrix size requirement of 32x32x3, audio signal representations have been expanded through sampling at mel frequencies significantly higher than the ones contained in the original audio data. The training dataset has been extracted from the GTZAN libraryFootnote 8 and is made of 1,000 audio files. The tracks last 30 seconds each are and distributed across 10 different music genres. These files originally had the .au file extension, requiring a conversion in .wav files in order to make them compatible with the deep neural networks. These networks are defined through the use of Python libraries provided by Keras and SciPy. The cosine similarity function has also been implemented through SciPy. The MFCC coefficients are obtained using the features of the python_speech_featuresFootnote 9 library. The neural networks described earlier in the section require visual representations of the audio files as their inputs. The output of this module does not come from the actual output of the neural networks, but rather from the activation from their final hidden layer. The resulting feature vector is distinctive for each pair of analyzed-reference audio files. However, when the file under analysis shows substantial similarity to another reference audio file, despite potential variations in any of its properties, like tone, duration, pitch, volume, and other attributes, their respective feature vectors will exhibit high similarity. A quantification of how closely a pair of files align regarding their features is given by their feature similarity score.

3.6 Scoring and evaluation phase

The scorer module is responsible for calculating textual and feature similarity scores and subsequently transforms them into a combined similarity score. To achieve this, we have defined three different combinations of scores:

i) Sum of the scores: In this approach, both the textual and the feature similarity scores are subject to multiplication by predefined weights, and the results are summed together. The reference file associated with the highest combined similarity score is identified for each of the analyzed files, by applying the following formula:

This method effectively evaluates the similarity between files by considering both textual and feature-based aspects, with customizable weights offering flexibility in the scoring process.

ii) Ordered Weighted Average operator over feature similarity scores: In this approach, the textual similarity scores of an analyzed file are first normalized by dividing them by the number of all reference files, resulting in a sum equal to one. Subsequently, this normalized score is employed as a multiplier for the feature similarity score of each reference file. The reference file with the highest cumulative score is recognized as the one most similar to the track under being analyzed. The formula for this combination of scores can be expressed as follows:

This method lets us evaluate the similarity between files by considering the normalization of textual similarity scores and leveraging them in combination with feature similarity scores to determine the most similar reference file to the analyzed one.

iii) Sequential textual-feature score: This method involves a two-step process. Initially, only the textual similarity score is considered for each pair of analyzed file and reference file, eliminating from any future checks all the reference files with textual similarity scores that fall below a certain threshold set by experiments. Only those files that surpass this threshold are retained, forming a new filtered set of reference audio tracks. Subsequently, the system computes the feature similarity score between the remaining group of reference audio files and the audio files under analysis. We pick the reference file with the largest feature similarity score among the processed ones as the matching track. We can summarize the steps taken in this mode as follows:

Each of these scores is stored in dedicated variables. System administrators can extract and analyze these scores to determine which combination yields the best results for the collection. The systems defaults to the “Sum of the scores" method, but it can be changed in the system settings. It is worth noting that different thresholds may need to be considered depending on the scoring function used to determine the legality of the audio tracks. As an example, the OWA operator typically generates the lowest output scores when compared to the other methods, necessitating more precise thresholding. The sum of the scores is strongly dependent on the selection of its weights f e t. The sequential textual-feature score discard all files that have had all their metadata stripped. Tuning of the threshold values is achieved through various tests on subsets of the original data and must align with the scoring system employed for a subset of the reference collection.

3.7 The implemented system

As previously stated, our system has been implemented via the interaction of Python modules, the Apache Solr and Apache Nutch environments, and a repository of audio files. At the end of the crawling phase, the Apache Solr instance communicates all retrieved elements to an ArangoDB database instance.

Figure 9 contains the GUI of the system. The GUI has been implemented using the Python tkinter library, allowing developers to create custom graphical interfaces quickly. Each field in the GUI sends a signal to intermediate functions that work on the user-entered information. The user specifies the base domain from which to start crawling or the file path of a folder, along with a list of resources they are interested in or not, respectively, in the Query Keywords and Blacklist Location options. The second option must be confirmed by checking a box on the GUI. If separate words are used within the blacklist, and its option is checked, then all files containing those words will be excluded from the final search. Alternatively, the user can specify only the keywords without a domain name. Doing so will result in a direct search of the indexed files to check their legality against a set of keywords. Finally, the system will not perform any operations if no option is entered.

When the user presses the “Start Crawl" button, the program interprets the domain name and keywords, optionally normalizing and formatting the URL or folder name and using the keywords to search the indexed data. If no keyword is entered, the program assumes that any audio file found during crawling should be preserved and indexed. Afterwards, the GUI communicates the query to both Apache Nutch and Apache Solr by the use of two Python libraries, nutch-api and subprocess, respectively. The first interacts with the background Nutch Server, which contains information about the instantiated Solr Server to send the indexing information and the depth of the crawl operations, defined as the number of rounds or page-to-page jumps that the crawler must perform. The crawler browses only the first encountered Web page and its immediate subpages, and only saves the audio data found within them. The module responsible for controlling and assigning values for the legality Flag and legality score for a given track file when a search is started from the GUI is called crawlerlogic. A special option, stored in a dropdown menu, allows the selection of the neural network selected for feature the extraction of feature vectors from the retrieved audio files and similarity score calculation with the reference collection. This option is only available to system administrators to test the various combinations of neural networks; thus, it can only be selected during testing phases and is otherwise hidden from the end user.

An instance of an Apache Nutch is executed in parallel with the main program. The Apache Nutch server must be manually initialized from the command line to perform crawling or indexing operations. After clicking the “Start Crawl" button, the program interacts with the Nutch Server using the nutch-calls module. This module facilitates the communication between Python and a Nutch Server by instantiating a JobClient object. The JobClient object contains all the logic related to the basic operations executed by Nutch and the Solr Server. It also includes the starting and destination folders or sites and optional steps for crawling and indexing. The JobClient instance is used to create objects of the Crawl class responsible for initiating the actual crawling through the folder or domain. Moreover, these objects are responsible for sending a signal to Solr for indexing. They send basic commands to the Nutch Server, which can run locally or remotely. The data retrieved by Nutch is saved locally and later analyzed during the indexing steps to send the appropriate information to Solr. An Apache Solr Server runs in parallel with the main program. The Apache Solr server must be manually started from the command line to perform indexing and search operations. The main Python module for interaction with Solr is solr_calls, which contains various functions used to create queries to the Solr index instance, send update requests, and set and check the legality Flag and legality scores of documents sent to Solr. It also includes some support functions for translating the content and metadata retrieved from Nutch into a set of tags and features better defined than those viewable through Solr for each audio file. The need to modify the content downloaded from Nutch is due to how Nutch manages metadata for non-textual files or Web pages. Instead of identifying associated tags for each metadata field, Nutch collects various tags into a single field called “content". Thus, to compare two different audio files, the implemented Python modules decompose the “content" field of each file into its own fields and subfields, then compare the list of tags for each file to return a list of tags shared by each audio file pair. Additionally, solr_calls can perform the following actions: it can update the collection after a search; save the list of retrieved files returned from a query, and their corresponding scores; gain control over the whole reference collection and calculate scores for the found files in comparison to all reference files; select the top K number of results to return.

The module operates as follows: it establishes a connection with the active Solr Server instance, performs an edismax query (Extended DisMax query parser) using the user-provided keywords as input, and specifies the number of elements requested for TOP K. Subsequently, the server provides a JSON response, which separates the documents into an array of retrieved documents and a reference document array (used in similarity testing phases), along with the total number of documents found during the search, skipping reference files. Optionally, the reference document array can also include all documents in the collection, not just those that match the search parameters. The array of retrieved documents is then analyzed to obtain information about the metadata and feature vectors of each file, passing them through the chosen neural network. Once all relevant information is extracted, the program iterates through all the retrieved files and, for each reference file, calculates similarity scores defined by the scoring module, obtaining five scores (textual, feature, combined sum, combined with OWA operator, and combined sequentially), as well as the most similar reference file for the analyzed file. The resulting list of values, containing documents and scores, is then sent to the crawlerlogic module and presented to the user on the screen. The module responsible for evaluating the various scores is the scorer. It takes two objects of the same type as input and, based on their data type, attempts to make the necessary transformations and then applies the Cosine similarity formula as defined in (1). Furthermore, the module also contains functionality to compute the combined textual and feature similarity scores discussed in Section 3.6. Both textual and feature similarity scores can be calculated by passing the JSON representation of the metadata of the audio files under analysis and reference, as well as the extractions (in the form of a matrix of floating-point values) of the layers of the neural network under examination. The output of the crawling session is displayed on the Python console (Figure 10) but can also be saved to disk. The resulting indexed data can be analyzed by connecting to the Apache Solr server (Figure 11).

Once all the documents have been retrieved from a repository, the newly found elements are sent to ArangoDB to improve the knowledge base. The python-arango library links up elements from Apache Solr to their representations as nodes in the graph database.

4 Results

The system described in this paper aims to use its knowledge base and a document collection containing protected audio files as the reference to determine the legality of crawled data and to return only the most relevant legal file to its users. The metric employed in the evaluation of the performance of the system is a modified version of the F1-Measure. As we are dealing with the problem of adversarial retrieval, the F1-Measure used in this test is computed as:

Where j denotes the rank of the j-th document contained in the result set, r(j) is the value of its Recall, and P(j) is the value of its Precision. All the illegal documents present in the dataset have been searched through the use of a wildcard query, using the “*" character. This equation lets us determine the likelihood that the system can obfuscate any of the illegal files that would have been otherwise returned in the result set, regardless of whether these illegal files could have been altered in some capacity. The tests were conducted using a reference dataset containing audio tracks sourced from the Free Music Archive (FMA) library. The files were obtained from the “medium" version of the archiveFootnote 10, which encompasses 25,000 audio tracks, each lasting 30 seconds and distributed across 16 different music genres, with a total weight of 22 gigabytes. The collection was locally stored to avoid any issues with sending multiple requests to the FMA Web Server, although the tests can be run on any website containing audio data.

4.1 Evaluation strategy

The evaluation process encompasses the following steps:

-

1.

The implemented neural networks were trained on a set of audio files from the GTZAN collection, divided by genre. A preliminary conversion from .au to .wav was performed on all the audio tracks in order to optimize the way the underlying neural network processes them.

-

2.

Several “illegal" audio tracks were generated by randomly altering the properties of volume, tone and pitch of some of the reference tracks. These modified tracks were then integrated into the dataset to provide a real use case of illegal tracks contained within a legal track collection.

-

3.

We extract the activations from the MaxPooling layer on the tail end of each of the deep neural networks used in the feature vector extraction phase. These will allow us to check for file similarity when comparing the representation of potentially illegal files to the ones obtained from reference files. Similarly, we extract the equivalent final layer of the long-short term memory network to perform file similarity checks.

-

4.

Each audio file is computed by the chosen neural networks, obtaining a feature vector descriptive of the properties of the signals. Cosine similarity is then applied to the descriptors, returning the actual similarity between analyzed files.

The assessment of experimental results presents several challenges that need to be addressed. One of these challenges is the granularity of the solution, as the system may flag a track as “illegal" if it bears a high degree of similarity to any of the files within the reference collection. This approach can potentially result in several false positives, where multiple files are incorrectly flagged as illegal, despite the fact that there might not exist an identical copy of them in the reference collection. Two testing approaches were employed to tackle this issue. The first test focused on identifying any potentially illegal audio track contained within the dataset that the system recognizes without considering the presence of any matching reference track. The second test aimed to identify an exact match between any illegal file in the dataset and any element of the reference collection, serving as a metric for evaluating whether our system is capable of performing adversarial retrieval tasks correctly. Selecting appropriate threshold values and weights for the scorer module presents another set of challenges. In fact, each mode of operation of the similarity scorer module (as described in Section 3.6) necessitates precise tuning of its parameters to solve the problem of flagging files as illegal when their legality may be ambiguous. The choice of weight combinations can significantly impact the importance assigned to either textual or feature scores, potentially resulting in false positives or false negatives. Thresholding values and the parameters of each combination of combined similarity scores and correlated deep neural network have been determined following a series of tests on the dataset.

4.2 Experimental tests

The first test evaluates the ability of the system to handle illegal tracks. The primary objective is to assess how well the system can detect illegal audio tracks hidden in repositories containing legitimate files without the system knowing of their intrinsic properties, and still correctly classify them when compared to files in the reference repository. This test was conducted on a collection of 7,049 files from a subset of the FMA medium archive. The system tries to identify rule violations while searching for files using keywords from user queries, employing Cosine similarity to analyze dictionaries of metadata. Notably, users of our system can browse only a subset of the files from the dataset, the ones that the system found different enough from the protected uploads at query-time; omitted (illegal) results remain hidden. Our approach is mainly focused on the task of Adversarial Retrieval (obfsucation) of potentially illegal files. For this reason, the metrics used for the evaluation of our results can not be mapped to other approaches that lack the goal of hiding query results according to some precedently specified rule. It is important to note that all documents belong to the same class (illegal files) in this particular test. The goal is to obfuscate any document that could be returned in the result set for the query, yet that could also be an illegal upload if it is too similar to any of the files in the reference collection. Consequently, the system calculates the F1-Measure by identifying every illicit document and categorizing retrieved, non-illicit documents as irrelevant for the scopes of the test. On the other hand, all the documents in the result set that are flagged as illegal are considered relevant for the test if the system has correctly identifying the corresponding track in the reference collection.

In this experimental assessment, both textual and feature evaluations are accorded equal significance, meaning that the values for each weight were assigned as \(t=0:5\) and \(f=0:5\). The total set of indexed files encompasses 7,094 unaltered files, 1,137 files subjected to modifications in their characteristics and metadata, and 855 protected reference files. Specifically, the metadata fields modified for each of the illegal files, among others, were the author, title and album fields. The characteristics that were modified for each of the illegal files are pitch, tone and volume adjustments. Focusing exclusively on the illicitly modified files, the ground truth vector for this examination exclusively incorporates positive values, denoted as 1s. This approach is adopted because each scrutinized document in this test inherently pertains to the obfuscating search, rendering them relevant to the scope of the test. The prediction vector is derived by amalgamating searches for all conceivable combinations of modified files. Post-test execution, the vector contains 1s for each correctly identified illegal file and 0s for instances where an illegal file is erroneously identified as legal, or when the most analogous reference file does not match the original reference file from which the modified version was derived. The results of this test, displayed in Figure 12 and Table 2, include evaluations based on file illegality detection and reference file matching. The two calculation methods involve identifying file illegality and then evaluating similarity scores against a single predefined threshold. The thresholds have been averaged from the results of several preliminary experiments.

The total count of accurately identified tracks has been assessed through two distinct methodologies for each similarity score method: firstly, by determining the illegality of files while disregarding the correspondence between the illegal file and its reference file, and subsequently evaluating the similarity score against a single threshold. The threshold values for each combined similarity score have been averaged based on preliminary tests. The selection of these thresholds is a critical and discriminatory factor in ascertaining the illegality of the analyzed files. Although opting for a uniform threshold value for all aggregated similarity scores facilitates simultaneous comparison of all methods, it may not be the optimal approach to discern the strengths of each combination of neural network and scoring method. Given that different neural networks yield distinct feature vectors, the similarity scores for feature extraction may be completely different values depending on the methodology. For instance, despite LSTM appearing to be the least effective among the implemented networks, it could potentially achieve superior results by adjusting threshold values in accordance with the lower overall scores obtained from feature extraction. Following these results, we pondered whether the system could match illegal tracks with the actual reference files from which they were modified. A second test has been performed by changing the file name of both the modified track and its original reference track, where the system must check whether the most similar file to the modified, illegal track has the same file name as the illegal file itself. The testing module computed the micro average F1 measure assigning the same degree of importance to each of the files in our dataset. Our first test contains only one class for both the Precision and the Recall metrics: the class of illegal files. Tracks contained in the class of legal files are excluded from the test result. Thus, the value of the Precision for the documents that have been correctly classified by the system is 0, whereas the Precision for incorrectly classifying audio tracks is 1. Our second test does take into account the legal files class. In this case, we obtain a Recall value for the class of illegal files that have been correctly assigned an “illegal” flag that is identical to the value of the Micro Average F1-Measure.

This experiment demonstrates that, assuming a fixed value for the thresholds and ignoring certain aspects like the total number of modified and protected files, the VGG-16 neural network yielded the best performance for both tests when the goal is to identify illegal files. We excluded the time needed to pre train the models, as they are not part of the scope of this paper. However, this does not necessarily imply that VGG-16 can be considered the ideal network for the system, but rather that, out of all the networks employed, VGG-16 excels at extracting unique information from the input tracks. VGG16-1D has a much worse performance in terms of the obtained values, likely because the smaller dimensions of the neural network return much fewer parameters to compare audio tracks. Meanwhile, the Small Convnet, despite yielding middling results, may be a suitable choice if computational resources and time efficiency are more important than overall accuracy. ResNet returns outstanding results but also demands greater execution time and computational resources, which may make it less viable for a potential system deployment when compared to VGG-16.

5 Conclusions and discussion

This paper proposes a rule-based focused crawler system designed to obtain high-level feature vectors from audio files using deep neural networks and to search for information on the Web or in local repositories. The system integrates obfuscation methods for data deemed illegal, while also enriching its reference audio repository through the inclusion of data sourced from illicit files, guided by user input and feedback. This system demonstrates scalability and efficiency in distinguishing legal files from illegal copies. Our results present a technique suitable for search engines to present exclusively legal documents to their users while retaining information about potentially illegal documents as visual representations of their intrinsic properties. Our system leverages VGG-16 and ResNet-50 neural networks, incorporating transfer learning to generate representative feature vectors. Possible improvements to our approach include increased computational resources, extended pre-training and training epochs, distributed architectures, and the utilization of enhanced GPUs. Additionally, as Neural Networks are the subject of much study, especially due to the increased interest in AI technology, different architectures for the feature extraction phases could be considered to improve the approach, according to the overall complexity of newer networks in comparison to the scope of our technique. Furthermore, the system addresses copyright violation concerns by allowing content uploads while simultaneously performing automated checks on potentially harmful uploads. The system aims to identify highly descriptive feature vectors for all audio tracks in accordance with the computational resources and rules provided by system administrators, and to facilitate other forms of information visualization [35, 36]. It also addresses the issue of copyright violation by allowing content upload while automatically checking potentially harmful uploads. However, the system itself does not consider the changing nature of copyright law. In fact, several geographical areas may deal with illegal copies of audio track differently, and the lack of a coherent international legislation on the matter means that the system must first be properly fine tuned and its reference collections updated to reflect the individual rules and regulations of each area. A possible future development could be the creation of a centralized, on-line hub to use as a global reference collection for the most egregious cases, which can be accessed by individual instances of our system to create its own specific reference collection.

Availability of data and materials

Data will be made available upon reasonable request.

Notes

References

Oppenheim, C.: Copyright in the electronic age. Office for humanities communication publications-oxford university computing services, 97–112 (1997)

Sumanth, T., Harisudan, V., Kumar, T., Geetha, K.: A new audio watermarking algorithm with dna sequenced image embedded in spatial domain using pseudo-random locations. In: 2018 Second International Conference on Electronics, Communication and Aerospace Technology (ICECA), IEEE, pp. 1813–1817 (2018)

Kadian, A.S., N, A.: Robust digital watermarking techniques for copyright protection of digital data: a survey. Wireless Pers. Commun. 118, 3225–3249 (2021). https://doi.org/10.1007/s11277-021-08177-w

Nair, U.R., Birajdar, G.K.: Audio watermarking in wavelet domain using fibonacci numbers. In: 2016 International Conference on Signal and Information Processing (IConSIP), IEEE, pp. 1–5 (2016)

Kumar, M., Bhatia, R., Rattan, D.: A survey of Web crawlers for information retrieval. Wiley Interdiscip. Rev. Data Mining Knowl. Discov. 7(6), 1218 (2017)

Bokhari, M.U., Hasan, F.: Multimodal information retrieval: challenges and future trends. Int. J. Comput. Appl. 74(14) (2013)

Moscato, V., Picariello, A., Rinaldi, A.M.: A recommendation strategy based on user behavior in digital ecosystems. In: Proceedings of the International Conference on Management of Emergent Digital Ecosystems, pp. 25–32 (2010)

Montanaro, M., Rinaldi, A.M., Russo, C., Tommasino, C.: A rule-based obfuscating focused crawler in the audio retrieval domain. Multimed. Tools Appl. pp. 1–30 (2023)

Ende, M., Poort, J., Haffner, R., Bas, P., Yagafarova, A., Rohlfs, S., Til, H.: Estimating displacement rates of copyrighted content in the eu (2014)

Sturm, B.L., Iglesias, M., Ben-Tal, O., Miron, M., Gómez, E.: Artificial intelligence and music: open questions of copyright law and engineering praxis. In: Arts, vol. 8, p. 115 (2019). Multidisciplinary digital publishing institute

Liu, W., Wang, Z., Liu, X., Zeng, N., Liu, Y., Alsaadi, F.E.: A survey of deep neural network architectures and their applications. Neurocomputing 234, 11–26 (2017)

Wold, E., Blum, T., Keislar, D., Wheaten, J.: Content-based classification, search, and retrieval of audio. IEEE Multimed. 3(3), 27–36 (1996)

Davis, S., Mermelstein, P.: Comparison of parametric representations for monosyllabic word recognition in continuously spoken sentences. IEEE Trans. Acoust. Speech Signal Process. 28(4), 357–366 (1980)

Bifulco, I., Cirillo, S., Esposito, C., Guadagni, R., Polese, G.: An intelligent system for focused crawling from big data sources. Expert Syst. Appl. 184, 115560 (2021)

Liu, J., Li, X., Zhang, Q., Zhong, G.: A novel focused crawler combining Web space evolution and domain ontology. Knowl.-Based Syst. 243, 108495 (2022). https://doi.org/10.1016/j.knosys.2022.108495

Capuano, A., Rinaldi, A.M., Russo, C.: An ontology-driven multimedia focused crawler based on linked open data and deep learning techniques. Multimed. Tools Appl. 79, 7577–7598 (2020)

Knees, P., Pohle, T., Schedl, M., Widmer, G.: A music search engine built upon audio-based and Web-based similarity measures. In: Proceedings of the 30th Annual International ACM Sigir Conference on Research and Development in Information Retrieval, pp. 447–454 (2007)

Kim, C.: Content-based image copy detection. Signal Processing: Image Communication 18(3), 169–184 (2003)

Dhar, P.K., Kim, J.-M.: Digital watermarking scheme based on fast fourier transformation for audio copyright protection. Int. J. Sec. Its Appl. 5(2), 33–48 (2011)

Donahue, J., Jia, Y., Vinyals, O., Hoffman, J., Zhang, N., Tzeng, E., Darrell, T.: Decaf: a deep convolutional activation feature for generic visual recognition. In: International Conference on Machine Learning, PMLR, pp. 647–655 (2014)

Rinaldi, A.M., Russo, C., Tommasino, C.: Automatic image captioning combining natural language processing and deep neural networks. Results Eng. 18, 101107 (2023)

Henaff, M., Jarrett, K., Kavukcuoglu, K., LeCun, Y.: Unsupervised learning of sparse features for scalable audio classification. In: ISMIR, Citeseer, vol. 11, p. 2011 (2011)

Guo, G., Li, S.Z.: Content-based audio classification and retrieval by support vector machines. IEEE Trans. Neural Netw. 14(1), 209–215 (2003)

Rajanna, A.R., Aryafar, K., Shokoufandeh, A., Ptucha, R.: Deep neural networks: a case study for music genre classification. In: 2015 IEEE 14th International Conference on Machine Learning and Applications (ICMLA), IEEE, pp. 655–660 (2015)

Abdul, Z.K., Al-Talabani, A.K.: Mel frequency cepstral coefficient and its applications: a review. IEEE Access 10, 122136–122158 (2022). https://doi.org/10.1109/ACCESS.2022.3223444

Safadi, B., Derbas, N., Quénot, G.: Descriptor optimization for multimedia indexing and retrieval. Multimed. Tools Appl. 74(4), 1267–1290 (2015)

Becker, S., Ackermann, M., Lapuschkin, S., Müller, K.-R., Samek, W.: Interpreting and explaining deep neural networks for classification of audio signals (2018). arXiv:1807.03418

Mun, S., Shon, S., Kim, W., Han, D.K., Ko, H.: Deep neural network based learning and transferring mid-level audio features for acoustic scene classification. In: 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), IEEE, pp. 796–800 (2017)

Cui, X., Qu, X., Li, D., Yang, Y., Li, Y., Zhang, X.: Mkgcn: multi-modal knowledge graph convolutional network for music recommender systems. Electronics 12(12), 2688 (2023)

Bogert, B.P.: The quefrency alanysis of time series for echoes; cepstrum, pseudo-autocovariance, cross-cepstrum and saphe cracking. Time Series Analysis, 209–243 (1963)

Simonyan, K., Zisserman, A.: Very deep convolutional networks for large-scale image recognition (2014). arXiv:1409.1556

He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp 770–778 (2016)

Sak, H., Senior, A.W., Beaufays, F.: Long short-term memory recurrent neural network architectures for large scale acoustic modeling (2014)

Deng, J., Dong, W., Socher, R., Li, L.-J., Li, K., Fei-Fei, L.: Imagenet: a large-scale hierarchical image database. In: 2009 IEEE Conference on Computer Vision and Pattern Recognition, Ieee, pp. 248–255 (2009)

Caldarola, E.G., Picariello, A., Rinaldi, A.M.: Big graph-based data visualization experiences: the wordnet case study. In: 2015 7th International joint Conference on Knowledge Discovery, Knowledge Engineering and Knowledge Management (IC3K), IEEE, vol. 1, pp. 104–115 (2015)

Caldarola, E.G., Picariello, A., Rinaldi, A.M.: Experiences in wordnet visualization with labeled graph databases. Commun. Comput. Inform. Sci. 631, 80–99 (2016)

Acknowledgements

We acknowledge financial support from the project PNRR MUR project PE0000013-FAIR.

Funding

Open access funding provided by Università degli Studi di Napoli Federico II within the CRUI-CARE Agreement. The authors did not receive support from any organization for the submitted work.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflicts of interest

The authors have no conflicts of interest to declare that are relevant to the content of this article.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions