Abstract

RoleSim and SimRank are among the most popular graph-theoretic similarity measures, with many applications in, e.g., web search, collaborative filtering, and sociometry. While RoleSim captures the automorphic (role) equivalence of pairwise similarity, which SimRank lacks, it ignores the neighboring similarity information outside the automorphically equivalent set. Consequently, RoleSim cannot properly distinguish two pairs of nodes that are not automorphically equivalent by nature if the averages of their neighboring similarities over the automorphically equivalent set are the same. To alleviate this problem: 1) We propose a novel similarity model, namely RoleSim*, which evaluates pairwise role similarities more accurately and comprehensively. RoleSim* not only guarantees the automorphic equivalence that SimRank lacks, but also takes into account the neighboring similarity information outside the automorphically equivalent sets, which RoleSim overlooks. 2) We prove the existence and uniqueness of the RoleSim* solution, and show three of its axiomatic properties (i.e., symmetry, boundedness, and non-increasing monotonicity). 3) We provide a concise error bound for iteratively computing the RoleSim* formula, and estimate the number of iterations required to attain a desired accuracy. 4) We induce a distance metric from RoleSim* similarity, and show that the RoleSim* metric satisfies the triangle inequality, which implies the sum-transitivity of its similarity scores. 5) We present a threshold-based RoleSim* model that further reduces the computational time with a provable accuracy guarantee. 6) We propose a single-source RoleSim* model, which scales well to sizable graphs. 7) We also devise methods to scale RoleSim*-based search by combining its triangle inequality property with partitioning techniques.
Our experimental results on real datasets demonstrate that RoleSim* achieves higher accuracy than its competitors while scaling well on sizable graphs with billions of edges.



1 Introduction

RoleSim, conceived by Jin et al. [9], is a promising role-oriented graph-theoretic measure that quantifies the similarity between two objects based on graph automorphism, with a proliferation of real-life applications [9, 10, 25], such as link prediction (social networks), co-citation analysis (bibliometrics), motif discovery (bioinformatics), and collaborative filtering (information retrieval). It recursively follows a SimRank-like reasoning that “two nodes are assessed as role similar if they interact with automorphically equivalent sets of in-neighbors”. Intuitively, automorphically equivalent nodes in a graph are objects with similar roles that can be exchanged with minimal effect on the graph structure. Similar to the well-known SimRank measure [7], the recursive nature of RoleSim allows it to capture the multi-hop neighboring structures that are automorphically equivalent in a network. Unlike SimRank, which measures the similarity of two nodes from the paths connecting them, RoleSim quantifies similarities through the paths connecting their different roles. As a result, RoleSim does not regard two nodes as dissimilar merely because they are disconnected from each other, provided that they have similar roles. To evaluate the similarity score s(a,b) between nodes a and b, SimRank takes the average similarity of all the neighboring pairs of (a,b), whereas RoleSim averages only the similarities over the maximum bipartite matching of all the neighboring pairs of (a,b). This subtle difference enables RoleSim to guarantee automorphic equivalence in its final scores, which SimRank lacks. Consequently, RoleSim has proven to be an effective similarity measure in a wide range of real applications, two of which are summarized below.

Application 1 (Similarity Search on the Web)

Discovering web pages similar to a query page is an important task in information retrieval. In a Web graph, each node represents a web page, and an edge denotes a hyperlink from one page to another. RoleSim can be applied to measure the similarity of two web pages, based on the intuition that “two web pages are role-similar if they are pointed to by the automorphically equivalent sets of their in-neighboring pages”. This similarity measure produces more reliable similarity results than the SimRank model [10].

Application 2 (Social Network De-anonymization)

Social network de-anonymization is a method to validate the strength of anonymization algorithms that protect a user’s privacy. RoleSim has been applied to de-anonymise node mappings based on the similarity information between a crawled network and an anonymised one. Based on the observation that “correct mappings tend to have higher similarity scores”, RoleSim iteratively evaluates pairwise node similarities between two networks, and captures the reasoning that “a pair of nodes between two networks is more likely to be a correct mapping if their neighbors are correct mappings”. RoleSim has demonstrated superior performance as compared with other existing de-anonymization algorithms [25].

Despite its popularity in real-world applications, RoleSim has a major limitation. To achieve automorphic equivalence, its similarity score s(a,b) considers only the average similarity over the automorphically equivalent set (i.e., the maximum bipartite matching) of a’s and b’s in-neighboring pairs. It neglects the remaining pairwise in-neighboring similarity information outside that set. Consequently, RoleSim does not always produce comprehensive similarity results. Two pairs of nodes that are not automorphically equivalent by nature should be distinguishable from each other. Yet RoleSim assigns them the same score whenever the averages of their in-neighboring similarities over the maximum bipartite matching are the same, as illustrated in Example 1.

Example 1 (Limitation of RoleSim)

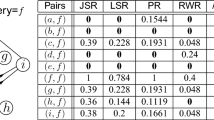

Consider the web graph G in Figure 1, where each node denotes a web page, and each edge depicts a hyperlink from one page to another. Using RoleSim, we evaluate pairwise similarities between nodes, as partially shown in the ‘RS’ column of the right table. Node-pairs (1,2) and (1,3) receive the same RoleSim similarity, which is counter-intuitive. Since node 2 and node 3 are not strictly automorphically equivalent by nature, their similarities with respect to the same query node 1, i.e., s(1,2) and s(1,3), should differ.

Limitation of RoleSim (RS) on a web graph: node-pairs (1,2) and (1,3) have the same RoleSim score (0.426). RS aggregates only the automorphically equivalent in-neighboring pairs (colored in green), whose similarity sums are the same (0.488 + 0.360 = 0.848), and ignores the remaining pairs.

RoleSim assesses s(1,2) and s(1,3) as equal mainly because its similarity s(a,b) considers only the average similarity over the maximum bipartite matching of (a,b)’s in-neighboring pairs Ia × Ib. We denote this matching by Ma,b; Ia denotes the in-neighbor set of node a, and × is the Cartesian product of two sets. Thus, the similarity information in the remaining in-neighboring pairs of (a,b), i.e., Ia × Ib − Ma,b, is totally ignored. For example, unfolding the in-neighboring pairs of (1,2) and (1,3), the gray cells show that M1,2 = {(4,6),(5,7)} (resp. M1,3 = {(4,9),(5,10)}) is the maximum bipartite matching of (1,2)’s (resp. (1,3)’s) in-neighboring pairs I1 × I2 (resp. I1 × I3). The sum of the similarity values over M1,2 is 0.488 + 0.360 = 0.848, which is the same as that over M1,3. Thus, RoleSim cannot distinguish s(1,2) from s(1,3).
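The snippet below is an arithmetic check of the Figure 1 numbers. It evaluates the RoleSim equation (2) from the matched sum alone, and it relies on three assumptions:

- β = 0.8. Example 1 does not state β; 0.8 is one of the typical values mentioned in Section 3.1.
- |I1| = 2, taken from Example 2.
- |I2| = |I3| = 3, taken from Example 2 and Section 3.1.

The function name is illustrative only.

```python
def rolesim_from_matched_sum(matched_sum, deg_a, deg_b, beta):
    """RoleSim score (2): (1 - beta) + beta * (sum over M_{a,b}) / max(|I_a|, |I_b|)."""
    if deg_a == 0 or deg_b == 0:
        return 1.0 - beta  # no in-neighbors on one side: only the damping term remains
    return (1.0 - beta) + beta * matched_sum / max(deg_a, deg_b)


BETA = 0.8                   # assumed damping factor for Example 1
matched_sum = 0.488 + 0.360  # the same sum over M_{1,2} and over M_{1,3}
s_12 = rolesim_from_matched_sum(matched_sum, 2, 3, BETA)  # |I_1| = 2, |I_2| = 3
s_13 = rolesim_from_matched_sum(matched_sum, 2, 3, BETA)  # |I_1| = 2, |I_3| = 3
print(round(s_12, 3), round(s_13, 3))  # 0.426 0.426
```

Only the matched sum and the two in-degrees enter the score, so any difference among the unmatched pairs is invisible to RoleSim.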

Example 1 illustrates that relying only on the in-neighboring-pair similarities in the maximum bipartite matching Ma,b (as RoleSim does) is not enough to evaluate s(a,b) effectively. RoleSim uses the intuitively most influential pairs Ma,b among all the in-neighboring pairs Ia × Ib, which lets it achieve automorphic equivalence. However, it completely ignores the similarity information outside Ma,b. In Example 1, for instance, the similarity values in the regions I1 × I2 − M1,2 and I1 × I3 − M1,3 could be exploited to further distinguish s(1,2) from s(1,3) when the average similarities over M1,2 and M1,3 are the same.

Contributions

Motivated by this, our main contributions are as follows:

1) We first propose a novel similarity model, RoleSim*, which evaluates pairwise role similarities more accurately and comprehensively. Compared with existing well-known similarity models (e.g., SimRank and RoleSim), RoleSim* not only guarantees the automorphic equivalence that SimRank lacks, but also takes into consideration the pairwise similarities outside the automorphically equivalent sets, which RoleSim overlooks. (Section 3.1)

2) We show three key properties of RoleSim*, i.e., symmetry, boundedness, and non-increasing monotonicity of its iterative similarity scores. On top of that, we prove the existence and uniqueness of the RoleSim* solution. (Section 3.2)

3) We derive an iterative formula for computing RoleSim* similarities, and establish a concise upper bound on the iteration error, which estimates the total number of iterations required to attain a desired accuracy. (Section 3.3)

4) To substantially accelerate RoleSim* computation, we devise a threshold-based RoleSim* model based on two pruning strategies. Its accuracy guarantees are provable and controlled by a user-specified threshold parameter δ, which trades off speed against accuracy. (Section 4)

5) To scale RoleSim* similarity search to large graphs with billions of edges, we propose a scalable algorithm for single-source RoleSim* retrieval, which avoids unnecessary repeated RoleSim* computations by caching important pairs in an unordered hash map. (Section 5)

6) We induce a distance metric from our RoleSim* measure, and rigorously show that the RoleSim* distance metric satisfies the triangle inequality, which other measures (e.g., cosine distance) lack. This implies the sum-transitivity of the RoleSim* measure. (Section 6)

7) We discuss approaches to scale RoleSim*-based search using the triangle inequality property and partitioning techniques. (Section 7)

8) We conduct an experimental study to validate the effectiveness of our RoleSim* model. Our empirical results show that RoleSim* achieves higher accuracy than existing competitors (e.g., RoleSim and SimRank) while retaining computational complexity bounds comparable to those of RoleSim. We also devise an unsupervised experimental setting that quantifies the effectiveness of similarity measures, in which RoleSim* outperforms the alternatives. (Section 8)

2 Related work

Graph-based similarity models have been popular since the SimRank measure was proposed by Jeh and Widom [7]. SimRank is a node-pair similarity measure that follows the recursive idea that “two nodes are considered similar if they are pointed to by similar nodes”. The naive SimRank computing method entails time quadratic in the number of nodes, and there has since been a surge of studies on accelerating SimRank computation. According to their assumptions on data updates, recent results can be divided into static algorithms [1, 4, 5, 12, 16, 22, 26, 32, 34, 37, 42] and dynamic algorithms on evolving graphs [8, 13, 19, 24, 28, 36, 40]. According to query types, these results are classified into single-source SimRank [8, 12, 19, 26, 40], single-pair SimRank [6, 15], all-pairs SimRank [1, 20, 34, 35], and partial-pairs SimRank [22, 39].

Recent years have witnessed an upsurge of interest in the semantic aspects of pairwise similarity measures, and various SimRank and SimRank-like models have come into play. Representative examples include C-Rank [31], SimFusion [38], Penetrating-Rank [41], RoleSim++ [25], RoleSim [9], MatchSim [18], SimRank* [37], ASCOS [3], CoSimRank [23], and SemSim [32]. In what follows, we elaborate on the pros and cons of these similarity measures and discuss their relations to this work.

C-Rank [31]

C-Rank is a contribution-based ranking algorithm that integrates both content and link information of web pages through the concept of contribution. This concept expresses that a page may enhance the content quality of adjacent pages that point to it. The C-Rank score of each page on a term is defined as a linear combination of two scores. The first is the page's relevance score to the term. The second is its contribution score, which quantifies the degree of its overall contributions to other pages on the term. However, unlike similarity scores from the RoleSim family, C-Rank does not take into account the automorphic equivalence property of node-pairs. Our experimental evaluation demonstrates that RoleSim* is more accurate than C-Rank at a slight cost in computational time.

Penetrating-Rank [41]

Zhao et al. [41] proposed Penetrating-Rank, a SimRank-based similarity measure that comprehensively considers both incoming and outgoing neighbouring information for similarity assessment. However, Penetrating-Rank is not an automorphic equivalence-based measure, as role discovery is not the primary task of this model. The idea of Penetrating-Rank as applied to SimRank bears some resemblance to that of RoleSim++. RoleSim++ is a generalisation of RoleSim that exploits both in- and out-links of the graph structure.

RoleSim [10]

RoleSim has been accepted as a promising role-based similarity model, due to its elegant intuition that “if two nodes are automorphically equivalent, they should share the same role and their role similarity should be maximal”. To speed up the RoleSim computation, an approximate heuristic named Iceberg RoleSim was devised to prune small similarity values below a threshold. SimRank takes the average similarity of all the neighboring pairs of (a,b), whereas RoleSim computes s(a,b) by averaging only the similarities over the maximum bipartite matching Ma,b. However, RoleSim completely ignores all the similarity information not included in the matching Ma,b. In contrast, our RoleSim* model effectively captures this information while guaranteeing automorphic equivalence.

RoleSim++ [25]

RoleSim++, proposed by Shao et al. [25], takes good advantage of the direction information of both in- and out-links to model pairwise similarities. It has been successfully used in a real-world de-anonymization application. It employs a novel matching algorithm, NeighborMatch, to find matchings for inner and outer neighbors, respectively. Moreover, a threshold-based model, α-RoleSim++, is proposed to prune tiny scores for further speedup. Our RoleSim* techniques can also be slightly modified to tailor RoleSim++, so that it accommodates similarity contributions from non-automorphically equivalent pairs of in- and out-neighbours for semantic enhancement.

SimFusion [30]

SimFusion exploits a unified relationship matrix (URM) to capture the inter- and intra-relationships among a set of heterogeneous data objects. A unified similarity matrix (USM), which is evaluated iteratively from the URM, characterises the latent relationships among heterogeneous data objects. However, as opposed to RoleSim*, SimFusion fails to capture automorphically equivalent relationships among the heterogeneous data objects.

MatchSim [18]

Lin et al. [18] introduced the MatchSim similarity model, which computes the similarity between two objects as the average similarity of their maximum matched neighbours. The key difference between MatchSim and RoleSim lies in the initialisation step. MatchSim starts with an identity matrix as its initial similarity, i.e., s0(a,b) = 1 if a = b and 0 otherwise, whereas RoleSim starts from the all-ones matrix, i.e., s0(∗,∗) = 1. As a result, RoleSim exhibits the automorphism property that MatchSim lacks. However, like RoleSim, MatchSim completely neglects the neighboring similarity values outside the automorphically equivalent sets. The idea of RoleSim* can be applied to MatchSim in a similar way to resolve this problem.

SimRank* [37] & ASCOS [3]

SimRank* and ASCOS are two variants of the SimRank model that address the zero-similarity problem of the SimRank measure. Nevertheless, these methods do not take into account the automorphically equivalent structure of nodes. The key idea of RoleSim* can be applied in a similar manner to SimRank* and ASCOS. This would enrich the semantics of similarity assessment while effectively circumventing the zero-SimRank problem.

CentSim [14]

Li et al. [14] proposed CentSim, a centrality-based role similarity measure, which compares the centrality values of two nodes to evaluate their similarity. This measure employs several types of centrality including PageRank, Degree and Closeness for each node, and considers the weighted average of them for evaluating CentSim scores.

SemSim [32]

Milo et al. [32] proposed a semantic-aware random walk-based model namely, SemSim, which is an extension of SimRank applied to heterogeneous information networks. SemSim aims to boost the quality of SimRank similarity scores by exploiting its node semantics and edge weights. Nonetheless, SemSim inherits the limitation of SimRank whose similarity values ignore the role-equivalent relationship between nodes.

Co-SimRank [23]

Rothe and Schütze [23] presented Co-SimRank, a SimRank-like measure of pairwise similarity based on graph structure. The Co-SimRank score s(a,b) of each pair (a,b) is computed from the inner product of two Personalised PageRank vectors with seed nodes a and b, respectively. Consider two random surfers starting at nodes a and b. The SimRank value s(a,b) counts only their first hitting time, whereas CoSimRank tallies all of their hitting times. As a result, CoSimRank produces more complete similarity scores than SimRank. Unlike RoleSim*, however, CoSimRank does not consider the automorphically equivalent patterns of the graph. The intuition of RoleSim* can nonetheless be extended to Co-SimRank for semantic enhancement.

SimRank

There has also been a variety of recent studies on SimRank algorithms (e.g., [8, 21, 24, 27, 29]). Wang et al. [27] present ExactSim, a fast probabilistic Monte-Carlo algorithm that effectively evaluates single-source and top-k SimRank results on large-scale graphs with over \(10^{6}\) nodes. ExactSim provides high-probability guarantees to yield ground truths with provable accuracy. Lu et al. [21] proposed a matrix sampling approach combined with the steepest descent technique for single-pair SimRank retrieval. The approach not only guarantees the sparsity of the involved matrix, but also speeds up convergence. Wei et al. [29] propose PRSim, which exploits the distribution of the reverse PageRank to accelerate single-source SimRank queries, achieving sublinear query time on power-law graphs with a small index size. READS [8] precomputes \(\sqrt {c}\)-walks and compresses them into compact trees. During query processing, READS searches the walks starting at the query node u, and retrieves all the \(\sqrt {c}\)-random walks that hit the \(\sqrt {c}\)-walks of u. TSF [24] constructs one-way graphs for indexing by sampling one in-neighbour from the in-links of each node. During query processing, the one-way graphs are used to retrieve random walks for SimRank evaluation.

3 RoleSim*

3.1 RoleSim* formulation

The central intuition underpinning RoleSim* follows a recursive concept that two distinct nodes are assessed to be similar if they

1. interact with automorphically equivalent sets of in-neighbors, and

2. are in-linked by similar nodes outside the automorphically equivalent sets.

The starting point for this recursion is to assign each pair of nodes a similarity score of 1, meaning that initially no pair of nodes is considered more (or less) similar than any other.

Notations

Before illustrating the mathematical definition to reify the RoleSim* intuition, we introduce the following notations.

Let G = (V,E) be a directed graph with a set of nodes V and a set of edges E. Let Ia be the set of in-neighbors of node a, and |Ia| the cardinality of Ia. For a pair of nodes (a,b) in G, we denote by Ia × Ib = {(x,y) | x ∈ Ia and y ∈ Ib} the set of all in-neighboring pairs of (a,b), and by s(a,b) the RoleSim* similarity score between nodes a and b. Using Ia × Ib and s(∗,∗), we define a weighted complete bipartite graph, denoted by \({\mathcal K}_{|I_{a}|, |I_{b}|}= (I_{a} \cup I_{b}, I_{a} \times I_{b})\), in which each edge (x,y) ∈ Ia × Ib carries the weight s(x,y). We denote by \(M_{a,b} \ (\subseteq I_{a} \times I_{b})\) the maximum weighted matching in the bipartite graph \({\mathcal K}_{|I_{a}|, |I_{b}|}\).

Example 2

Recall graph G in Figure 1. For nodes 1 and 2, the in-neighbor sets are I1 = {4,5} and I2 = {6,7,8}, respectively. The set of all in-neighboring pairs of (1,2) is I1 × I2 = {(4,6),(4,7),(4,8),(5,6),(5,7),(5,8)}. The maximum weighted matching of the bipartite graph (I1 ∪ I2, I1 × I2) is M1,2 = {(4,6),(5,7)}, i.e., the highlighted cells of I1 × I2 in Figure 1.
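The sketch below computes a maximum weighted matching like M1,2 on small inputs by brute force. It tries every injective assignment of the smaller in-neighbor set into the larger one. This is a swapped-in technique for illustration: Theorem 3 cites the Jonker-Volgenant algorithm [2] for this step. In practice, an assignment solver such as SciPy's `scipy.optimize.linear_sum_assignment` (with `maximize=True`) would be the usual choice. The function name is hypothetical, and all weights below are made up.

```python
from itertools import permutations


def max_weight_matching(I_a, I_b, sim):
    """Maximum weighted matching in the complete bipartite graph (I_a ∪ I_b, I_a × I_b).

    sim[(x, y)] is the weight s(x, y) of edge (x, y), with x in I_a and y in I_b.
    Brute force over injective assignments of the smaller side into the larger one,
    so this only suits small in-neighbor sets. Returns a set of (x, y) pairs.
    """
    I_a, I_b = list(I_a), list(I_b)
    if not I_a or not I_b:
        return set()  # an empty in-neighbor set gives an empty matching
    if len(I_a) <= len(I_b):
        candidates = (list(zip(I_a, ys)) for ys in permutations(I_b, len(I_a)))
    else:
        candidates = (list(zip(xs, I_b)) for xs in permutations(I_a, len(I_b)))
    return set(max(candidates, key=lambda pairs: sum(sim[p] for p in pairs)))


# Example 2: I_1 = {4, 5}, I_2 = {6, 7, 8}; all weights below are hypothetical
sim = {(4, 6): 0.488, (4, 7): 0.30, (4, 8): 0.25,
       (5, 6): 0.28, (5, 7): 0.360, (5, 8): 0.20}
print(sorted(max_weight_matching([4, 5], [6, 7, 8], sim)))  # [(4, 6), (5, 7)]
```

Since every weight is positive, the returned matching always saturates the smaller side, so |M1,2| = min{|I1|, |I2|} = 2.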

Other notations frequently used throughout this paper are listed in Table 1.

RoleSim* Formula.

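Based on the aforementioned intuition, we formally define the RoleSim* model as follows:

\[ s(a,b) = (1-\beta) + \beta \Bigg( \lambda \cdot \underbrace{\frac{\sum_{(x,y) \in M_{a,b}} s(x,y)}{|I_a| + |I_b| - |M_{a,b}|}}_{\text{Part 1}} + (1-\lambda) \cdot \underbrace{\frac{\sum_{(x,y) \in (I_a \times I_b) - M_{a,b}} s(x,y)}{|I_a|\,|I_b| - |M_{a,b}|}}_{\text{Part 2}} \Bigg) \tag{1} \]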

In (1), for every pair of nodes (a,b), the set of their in-neighboring pairs, Ia × Ib, is split into two subsets: Ia × Ib = Ma,b ∪ (Ia × Ib − Ma,b). Accordingly, the definition of RoleSim* consists of two parts. Part 1 is the average similarity over the maximum matching Ma,b. It represents the contribution from (a,b) interacting with the automorphically equivalent set Ma,b of their in-neighboring pairs. Part 2 is the average similarity over (Ia × Ib) − Ma,b. It represents the contribution from (a,b) being pointed to by the remaining in-neighboring pairs outside the automorphically equivalent set Ma,b.

It is worth highlighting why (1) uses the denominator \(\left | {{I}_{a}} \right |+\left | {{I}_{b}} \right |-\left | {{M}_{a,b}} \right |\) instead of \(\left | {{M}_{a,b}} \right |\): it guarantees that RoleSim* covers the traditional RoleSim model as a special case when λ = 1. Since all edge weights are positive, the maximum weighted matching in the complete bipartite graph saturates the smaller side. Hence \(\left | {{M}_{a,b}} \right |={\min \limits } \{\left | {{I}_{a}} \right |,\left | {{I}_{b}} \right |\}\), and it follows that \(\left | {{I}_{a}} \right |+\left | {{I}_{b}} \right |-\left | {{M}_{a,b}} \right |={\max \limits } \{\left | {{I}_{a}} \right |,\left | {{I}_{b}} \right |\}\). Applying this to (1) and setting λ = 1 eliminates Part 2, and (1) reduces to the traditional RoleSim equation:

\[ s(a,b) = (1-\beta) + \beta \cdot \frac{\sum_{(x,y) \in M_{a,b}} s(x,y)}{\max\{|I_a|, |I_b|\}} \tag{2} \]

RoleSim in (2) uses \({\max \limits } \{\left | {{I}_{a}} \right |,\left | {{I}_{b}} \right |\} \ \left (= \left | {{I}_{a}} \right |+\left | {{I}_{b}} \right |-\left | {{M}_{a,b}} \right | \right )\) as the denominator instead of \(\left | {{M}_{a,b}} \right |\ \left (={\min \limits } \{\left | {{I}_{a}} \right |,\left | {{I}_{b}} \right |\} \right )\) for a reason. This choice differentiates the similarity values s(a,b) and s(a,c) when \(\left | {{I}_{b}} \right |\ne \left | {{I}_{c}} \right |\): the larger the difference between \(\left | {{I}_{b}} \right |\) and \(\left | {{I}_{c}} \right |\), the more s(a,b) and s(a,c) should differ. For example, consider s(8,3) and s(8,1) in Figure 1, whose in-neighbouring grids are shown in Figure 2. Note that \(\left | {{I}_{3}} \right |=3\) and \(\left | {{I}_{1}} \right |=2\), which implies that s(8,3) and s(8,1) should be different. However, \(\left | {{M}_{8,3}} \right |=\left | {{M}_{8,1}} \right |=2\). Suppose we replaced the denominator \({\max \limits } \{\left | {{I}_{a}} \right |,\left | {{I}_{b}} \right |\}\) with \(\left | {{M}_{a,b}} \right |\) in (2), or equivalently in Part 1 of (1) with λ = 1. Then s(8,3) and s(8,1) would be normalized by the same denominator:

\[ s(8,3) = (1-\beta) + \beta \cdot \frac{\sum_{(x,y)\in M_{8,3}} s(x,y)}{|M_{8,3}|}, \qquad s(8,1) = (1-\beta) + \beta \cdot \frac{\sum_{(x,y)\in M_{8,1}} s(x,y)}{|M_{8,1}|}, \qquad |M_{8,3}| = |M_{8,1}| = 2. \]

The in-degree difference |I1| ≠ |I3| would then have no effect on the scores, which is counter-intuitive. In contrast, using (2), the two scores become

\[ s(8,3) = (1-\beta) + \beta \cdot \frac{\sum_{(x,y)\in M_{8,3}} s(x,y)}{\max\{|I_8|, |I_3|\}}, \qquad s(8,1) = (1-\beta) + \beta \cdot \frac{\sum_{(x,y)\in M_{8,1}} s(x,y)}{\max\{|I_8|, |I_1|\}}. \]

Here |I8| = 2, since |M8,3| = min{|I8|, |I3|} = 2 and |I3| = 3. The denominators are therefore max{|I8|, |I3|} = 3 and max{|I8|, |I1|} = 2. The larger the difference between \(\left | {{I}_{3}} \right |\) and \(\left | {{I}_{1}} \right |\), the more dissimilar the scores s(8,3) and s(8,1), which agrees with our intuition.

The relative weight of Parts 1 and 2 is balanced by a user-controlled parameter λ ∈ [0,1]. β is a damping factor between 0 and 1, often set to 0.6 or 0.8. It attenuates similarity propagated from distant in-neighbors by a factor of β per edge. When Ia (or Ib) = ∅, which implies Ma,b = ∅, we define Parts 1 and 2 to be 0 to avoid zero denominators, so that s(a,b) = 1 − β in this case.
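To see how Part 2 breaks the tie in Example 1, the sketch below evaluates the right-hand side of (1) for one pair, given the current scores and a precomputed matching Ma,b. It makes several assumptions, none of which comes from the paper:

- All scores are hypothetical. The two pairs share the matched values of Example 1 but differ in their unmatched pairs.
- Node 11 is a placeholder for the third in-neighbor of node 3, which the text does not name.
- β = 0.8 and λ = 0.7 are illustrative choices.
- Part 2 is treated as 0 when (Ia × Ib) − Ma,b is empty, because the text defines only the case Ia = ∅ or Ib = ∅.

```python
def rolesim_star_pair(I_a, I_b, sim, matching, beta=0.8, lam=0.7):
    """Right-hand side of (1) for one node pair (a, b).

    I_a, I_b : in-neighbor lists of a and b
    sim      : dict mapping each pair (x, y) to its current score s(x, y)
    matching : set of pairs forming the maximum weighted matching M_{a,b}
    """
    if not I_a or not I_b:
        return 1.0 - beta  # Parts 1 and 2 are defined as 0
    m = len(matching)
    # Part 1: matched pairs, normalised by |I_a| + |I_b| - |M_{a,b}| (= max(|I_a|, |I_b|))
    part1 = sum(sim[p] for p in matching) / (len(I_a) + len(I_b) - m)
    # Part 2: plain average over the unmatched pairs (I_a x I_b) - M_{a,b}
    rest = [(x, y) for x in I_a for y in I_b if (x, y) not in matching]
    part2 = sum(sim[p] for p in rest) / len(rest) if rest else 0.0  # assumed 0 if empty
    return (1.0 - beta) + beta * (lam * part1 + (1.0 - lam) * part2)


# Hypothetical scores: identical matched values, different unmatched values
sim = {(4, 6): 0.488, (5, 7): 0.360, (4, 7): 0.30, (4, 8): 0.25, (5, 6): 0.28, (5, 8): 0.20,
       (4, 9): 0.488, (5, 10): 0.360, (4, 10): 0.40, (4, 11): 0.35, (5, 9): 0.34, (5, 11): 0.33}
I1, I2, I3 = [4, 5], [6, 7, 8], [9, 10, 11]  # node 11 is a placeholder
M12, M13 = {(4, 6), (5, 7)}, {(4, 9), (5, 10)}

for lam in (1.0, 0.7):  # lam = 1 reduces (1) to RoleSim (2)
    s12 = rolesim_star_pair(I1, I2, sim, M12, lam=lam)
    s13 = rolesim_star_pair(I1, I3, sim, M13, lam=lam)
    print(lam, round(s12, 4), round(s13, 4))
```

With λ = 1, both pairs get the same score (about 0.426, matching Figure 1 under the β = 0.8 assumption). With λ = 0.7, the pair whose unmatched in-neighbors are more similar, (1,3) in this toy data, scores higher.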

Fixed-Point Iteration

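To solve for the RoleSim* similarity s(a,b) in (1), we adopt the following fixed-point iterative scheme:

\[ s_0(a,b) = 1, \tag{3} \]

\[ s_{k+1}(a,b) = (1-\beta) + \beta \left( \lambda \cdot \frac{\sum_{(x,y) \in M_{a,b}} s_k(x,y)}{|I_a| + |I_b| - |M_{a,b}|} + (1-\lambda) \cdot \frac{\sum_{(x,y) \in (I_a \times I_b) - M_{a,b}} s_k(x,y)}{|I_a|\,|I_b| - |M_{a,b}|} \right), \tag{4} \]

In (4), Ma,b is the maximum weighted matching with respect to the current weights s_k(x,y), and s_{k+1}(a,b) = 1 − β whenever Ia or Ib is empty.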

where sk(a,b) denotes the RoleSim* score between nodes a and b at iteration k. Based on (3) and (4), we can iteratively compute all pairs of similarity scores sk+1(∗,∗) from those at the previous iteration, sk(∗,∗).

3.2 Axiomatic properties for RoleSim*

Symmetry, Boundedness, & Monotonicity

Based on the definition of the iterative similarity sk(a,b) in (3) and (4), we next show three axiomatic properties of RoleSim*, i.e., symmetry, boundedness, and non-increasing monotonicity, in the following theorem.

Theorem 1

The iterative RoleSim* scores {sk(a,b)} defined by (3) and (4) have the following key properties: for any node-pair (a,b) and each iteration k = 0,1,⋯,

1. (Symmetry) sk(a,b) = sk(b,a);

2. (Boundedness) 1 − β ≤ sk(a,b) ≤ 1;

3. (Monotonicity) sk+1(a,b) ≤ sk(a,b).

Proof

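1. (Symmetry) By virtue of (3) and (4), sk(a,b) = sk(b,a) follows immediately.

2. (Boundedness) We prove this by induction on k. For k = 0, it is apparent that s0(a,b) = 1 ∈ [1 − β,1]. Assume that 1 − β ≤ sk(x,y) ≤ 1 holds for all node-pairs (x,y); we prove that 1 − β ≤ sk+1(a,b) ≤ 1. If Ia or Ib is empty, sk+1(a,b) = 1 − β by definition. Otherwise, we have 0 ≤ sk(x,y) ≤ 1 and |Ma,b| = min{|Ia|,|Ib|} ≤ max{|Ia|,|Ib|} = |Ia| + |Ib| − |Ma,b|. Hence

\[ 0 \le \frac{\sum_{(x,y) \in M_{a,b}} s_k(x,y)}{|I_a| + |I_b| - |M_{a,b}|} \le \frac{|M_{a,b}|}{|I_a| + |I_b| - |M_{a,b}|} \le 1, \qquad 0 \le \frac{\sum_{(x,y) \in (I_a \times I_b) - M_{a,b}} s_k(x,y)}{|I_a|\,|I_b| - |M_{a,b}|} \le 1. \]

Thus, (4) yields

\[ s_{k+1}(a,b) \le (1-\beta) + \beta \big( \lambda + (1-\lambda) \big) = 1. \]

On the other hand, since both averages in (4) are nonnegative, sk+1(a,b) ≥ 1 − β.

3. (Monotonicity) We prove this by induction on k. For k = 0, s0(a,b) = 1. According to (4) and the boundedness just shown, it follows that

\[ s_1(a,b) \le 1 = s_0(a,b). \]

For k > 0, we assume that sk+1(a,b) ≤ sk(a,b) holds, and will prove that sk+2(a,b) ≤ sk+1(a,b) holds. According to (4), it follows that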

□

Theorem 1 indicates that, for every iteration k = 0,1,2,⋯, {sk(a,b)} is a bounded symmetric scoring function. Moreover, letting \(k \to \infty \), it can be readily verified that the exact solution s(a,b) is also a bounded symmetric measure, like the SimRank and RoleSim measures. In contrast, other measures (e.g., Hitting Time and Random Walk with Restart) are asymmetric.

The SimRank iterative similarity values {sk(a,b)} are non-decreasing in the number of iterations k, starting from 0 for any two distinct nodes a and b. In contrast, the RoleSim* iterative scores {sk(a,b)} are non-increasing in k, starting from 1 for any two distinct nodes a and b. This subtle difference makes many existing optimization techniques for SimRank not directly applicable to RoleSim*.

Existence & Uniqueness

The boundedness and non-increasing monotonicity of the RoleSim* iterative similarity values {sk(a,b)} w.r.t. k guarantee the existence and uniqueness of the exact RoleSim* solution s(a,b) to (3) and (4), as stated below:

Theorem 2 (Existence and Uniqueness)

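There exists a unique solution s(a,b) (i.e., the exact RoleSim* score) to (3) and (4) such that the iterative RoleSim* similarity {sk(a,b)} converges non-increasingly to it as the number of iterations k increases, i.e.,

\[ s_0(a,b) \ge s_1(a,b) \ge s_2(a,b) \ge \cdots \ge s(a,b), \qquad \lim_{k \to \infty} s_k(a,b) = s(a,b). \]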

Proof

(Existence) For each pair of nodes (a,b), since the sequence {sk(a,b)}k is lower-bounded by (1 − β) (Property 2) and non-increasing (Property 3), by Monotone Convergence Theorem, {sk(a,b)} will converge to its infimum, denoted as s(a,b), which is the exact RoleSim* solution, i.e., \(\lim _{k \to \infty } s_{k}(a,b) = s(a,b)\).

(Uniqueness) For each pair of nodes (a,b), suppose there exist two solutions, s(a,b) and \(\tilde {s}(a,b)\), that satisfy (4). We will prove that \(s(a,b)=\tilde {s}(a,b)\). Let \(\delta (a,b) := s(a,b)-\tilde {s}(a,b)\) and \({\varDelta } := \max \limits _{(a,b)} \{|\delta (a,b)|\}\). Then,

Therefore, taking the absolute value of both sides and applying triangle inequality |x + y|≤|x| + |y| produces

Thus, \({\varDelta }= \underset {(a,b) }{{\max \limits }} \{|\delta (a,b)|\} \le \beta \times {\varDelta } \), implying Δ = 0, i.e., \(s(a,b)=\tilde {s}(a,b)\). □

3.3 Iterative RoleSim* algorithm with guaranteed accuracy

In this section, we provide an iterative algorithm for retrieving RoleSim* similarity values, and give a concise error bound for the difference between iterative similarity scores as provided by our algorithm and actual (exact) scores.

Iterative Algorithm

The fixed-point scheme in (3) and (4) yields an iterative algorithm for RoleSim* computation, as illustrated in Algorithm 1. It starts by initialising all pairs of similarities to 1 (line 1), and then iteratively computes the similarity of each pair of nodes (lines 3–15). If node a or b has no in-neighbors, s(a,b) is set to 1 − β (lines 4–6). Otherwise, it finds the maximum weighted matching Ma,b in the bipartite graph (Ia ∪ Ib, Ia × Ib) (line 8). It then averages the (k − 1)-th iterative similarities over Ma,b (resp. (Ia × Ib) − Ma,b) to get w1 (resp. w2) (lines 9–14). Finally, w1 and w2 are combined with weights λ and 1 − λ and damped by β as in (4), yielding the score sk(a,b) at the k-th iteration. This process repeats for all node-pairs in each iteration.
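The loop just described can be sketched in Python as follows. This is a minimal, unoptimised sketch for small graphs, not the paper's implementation. It departs from or extends Algorithm 1 in several ways:

- It finds Ma,b by brute force instead of with the Jonker-Volgenant algorithm [2].
- It keeps all |V|^2 scores in a dictionary.
- It treats Part 2 as 0 when (Ia × Ib) − Ma,b is empty, a case the text does not specify.

The function names and the toy graph are illustrative.

```python
from itertools import permutations


def in_neighbors(nodes, edges):
    """Map every node to the list of its in-neighbors (edge (u, v) makes u an in-neighbor of v)."""
    preds = {v: [] for v in nodes}
    for u, v in edges:
        preds[v].append(u)
    return preds


def best_matching(I_a, I_b, s):
    """Brute-force maximum weighted matching of (I_a ∪ I_b, I_a × I_b) under weights s."""
    if len(I_a) <= len(I_b):
        candidates = (list(zip(I_a, ys)) for ys in permutations(I_b, len(I_a)))
    else:
        candidates = (list(zip(xs, I_b)) for xs in permutations(I_a, len(I_b)))
    return set(max(candidates, key=lambda pairs: sum(s[p] for p in pairs)))


def rolesim_star_all_pairs(nodes, edges, beta=0.8, lam=0.7, iterations=10):
    """All-pairs RoleSim* by the fixed-point iteration (3)-(4)."""
    I = in_neighbors(nodes, edges)
    s = {(a, b): 1.0 for a in nodes for b in nodes}  # (3): s_0(*, *) = 1
    for _ in range(iterations):
        nxt = {}
        for a in nodes:
            for b in nodes:
                I_a, I_b = I[a], I[b]
                if not I_a or not I_b:
                    nxt[(a, b)] = 1.0 - beta  # no in-neighbors on one side
                    continue
                M = best_matching(I_a, I_b, s)  # matching w.r.t. the previous scores
                w1 = sum(s[p] for p in M) / (len(I_a) + len(I_b) - len(M))
                rest = [(x, y) for x in I_a for y in I_b if (x, y) not in M]
                w2 = sum(s[p] for p in rest) / len(rest) if rest else 0.0  # assumed 0 if empty
                nxt[(a, b)] = (1.0 - beta) + beta * (lam * w1 + (1.0 - lam) * w2)
        s = nxt  # only the previous iteration is kept, as in Algorithm 1
    return s


nodes = [0, 1, 2, 3, 4]
edges = [(0, 1), (0, 2), (1, 3), (2, 3), (1, 4), (2, 4), (0, 4)]
scores = rolesim_star_all_pairs(nodes, edges)
print(round(scores[(3, 4)], 4), round(scores[(4, 3)], 4))
```

Swapping nodes 1 and 2 is an automorphism of this toy graph, so s(3,1) and s(3,2) come out equal. Every score also stays within [1 − β, 1], as Theorem 1 states.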

Complexity. The computational cost of Algorithm 1 is given in Theorem 3.

Theorem 3

Algorithm 1 requires \(O(K|E|^{2})\) time and \(O(|V|^{2})\) memory to retrieve RoleSim* similarity scores for all \(|V|^{2}\) node-pairs over K iterations, on a graph G = (V,E) with |V| nodes and |E| edges.

Proof

For each iteration k and each pair of nodes (a,b), the computational time and memory required in each loop iteration of Algorithm 1 (lines 4–15) are described as follows:

| Line | Time | Memory | Description |
|---|---|---|---|
| 4 | O(\|Ia\| + \|Ib\|) | O(\|Ia\| + \|Ib\|) | get the in-neighbors of nodes a and b |
| 6 | O(1) | O(1) | set sk(a,b) to 1 − β if a or b has no in-neighbors |
| 8 | O(\|Ia\| + \|Ib\|) | O(\|Ia\| × \|Ib\|) | find the maximum matching in the weighted bipartite graph using the Jonker-Volgenant algorithm [2] |
| 9 | O(1) | O(1) | initialise t1 and t2 |
| 10–11 | O(\|Ma,b\|) | O(1) | accumulate t1 over Ma,b |
| 12–13 | O(\|Ia\| × \|Ib\| − \|Ma,b\|) | O(1) | accumulate t2 over (Ia × Ib) − Ma,b |
| 14–15 | O(1) | O(1) | compute sk(a,b) |

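Thus, for K iterations and \(|V|^{2}\) node-pairs, the total time of Algorithm 1 is bounded by

\[ \sum_{k=1}^{K} \sum_{a \in V} \sum_{b \in V} O\big(|I_a|\,|I_b|\big) = O\Big(K \cdot \textstyle\sum_{a \in V} |I_a| \cdot \sum_{b \in V} |I_b|\Big) = O(K|E|^{2}), \]

since the per-pair cost is dominated by lines 12–13 and \(\sum_{a \in V} |I_a| = |E|\).

Therefore, it entails \(O(K|E|^{2})\) time to retrieve all \(|V|^{2}\) pairs of RoleSim* scores.

All \(|V|^{2}\) pairs of similarities sk−1(∗,∗) at iteration (k − 1) must be kept to retrieve sk(a,b) at the next iteration k. Hence the memory consumption of Algorithm 1 is bounded by \(O(|V|^{2})\). □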
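The \(|E|^{2}\) term arises from summing the per-pair cost |Ia|·|Ib| over all node-pairs. This sum factorizes as \((\sum_a |I_a|)^{2} = |E|^{2}\), because every edge contributes exactly one in-neighbor. The helper below, a hypothetical name, checks this identity on a small toy graph.

```python
def total_pair_work(nodes, edges):
    """Sum of |I_a| * |I_b| over all |V|^2 node-pairs (the dominant cost of lines 12-13)."""
    indeg = {v: 0 for v in nodes}
    for _, v in edges:
        indeg[v] += 1  # edge (u, v) adds u to I_v
    return sum(indeg[a] * indeg[b] for a in nodes for b in nodes)


nodes = [0, 1, 2, 3, 4]
edges = [(0, 1), (0, 2), (1, 3), (2, 3), (1, 4), (2, 4), (0, 4)]
print(total_pair_work(nodes, edges), len(edges) ** 2)  # 49 49
```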

It is important to note that the \(O(|V|^{2})\) memory of Algorithm 1 hinders the scalability of RoleSim* computation on large graphs with millions of nodes. Therefore, in Section 5, we build on Algorithm 1 to propose a scalable algorithm for efficient RoleSim* similarity search on sizable graphs without loss of accuracy.

Error Bound

We are now ready to investigate the error bound on the difference between the k-th iterative similarity sk(a,b) and the exact one s(a,b).

By virtue of the non-increasing monotonicity of {sk(a,b)}, one can readily show that the exact s(a,b) is a lower bound of all the iterative similarities {sk(a,b)}, i.e., sk(a,b) ≥ s(a,b) (∀k). The following theorem further provides a concise upper bound on the gap between sk(a,b) and s(a,b).

Theorem 4 (Error Bound for Iterative RoleSim*)

For every iteration k = 0,1,2,⋯, the difference between sk(a,b) and s(a,b) is bounded by

\( s_{k}(a,b) - s(a,b) \le \beta^{k+1}. \qquad (5) \)

Proof

We prove this by induction on k. For k = 0, s0(a,b) = 1. According to Property 2 of Theorem 1, 1 − β ≤ sk(a,b) ≤ 1, implying that 1 − β ≤ s(a,b) ≤ 1. Thus, s0(a,b) − s(a,b) ≤ β holds.

For the inductive step, we assume that \(s_{k}(a,b) - s(a,b) \le \beta^{k+1}\) holds for some k ≥ 0, and will prove that \(s_{k+1}(a,b) - s(a,b) \le \beta^{k+2}\) holds. Subtracting (4) from (1) produces

□

Theorem 4 derives a concise exponential upper bound on the difference between the k-th iterative similarity sk(a,b) and the exact s(a,b). Combining this bound with sk(a,b) ≥ s(a,b), which follows from the non-increasing monotonicity, we obtain that the k-th iterative error sk(a,b) − s(a,b) lies between 0 and \(\beta^{k+1}\). Moreover, Theorem 4 implies that, given a desired accuracy 𝜖 > 0, it suffices to perform \(k = \lceil \log _{\beta } \epsilon \rceil \) iterations to compute RoleSim* similarities, since then \(\beta^{k+1} < \beta^{k} \le \epsilon\).
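The iteration count above is easy to evaluate numerically. The Python sketch below (function names are illustrative) computes k = ⌈log_β 𝜖⌉ and the corresponding bound β^{k+1} from Theorem 4. Note that, because β^k ≤ 𝜖 already holds for this k, running k − 1 iterations also meets the target; ⌈log_β 𝜖⌉ is a sufficient, slightly conservative choice.

```python
import math


def error_bound(beta, k):
    """Upper bound of Theorem 4 on s_k(a,b) - s(a,b)."""
    return beta ** (k + 1)


def iterations_for_accuracy(beta, eps):
    """k = ceil(log_beta(eps)); guarantees beta**(k+1) < beta**k <= eps."""
    if not (0 < beta < 1 and 0 < eps < 1):
        raise ValueError("need 0 < beta < 1 and 0 < eps < 1")
    return math.ceil(math.log(eps) / math.log(beta))


k = iterations_for_accuracy(0.8, 1e-3)   # log_0.8(0.001) is about 30.96
print(k, error_bound(0.8, k))
```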

It is worth noting that equality in our error estimate (5) is attainable, which highlights the tightness of the bound, as illustrated in Example 3.

Example 3

Consider the graph G in Figure 3. Given β = 0.8 and λ = 0.7, let us evaluate the RoleSim* similarity s(c,d) iteratively via (3) and (4). For iteration k = 0, it is apparent that s0(∗,∗) = 1. When k = 1, since |Ic| = |Id| = |Mc,d| = 2 and all the similarities s0(∗,∗) of the in-neighbouring pairs of (c,d) equal 1, it follows from (4) that s1(c,d) = 1.

Since the exact solution is s(c,d) = 0.36, when k = 1 we have

\( s_{1}(c,d) - s(c,d) = 1 - 0.36 = 0.64 = 0.8^{1+1} = \beta^{k+1}. \)

Therefore, the equality in (5) is attainable on G when (k,β) = (1,0.8).

The equality sign in our estimated bound (5) for iterative RoleSim* computation is attainable: e.g., on graph G, for (k,β) = (1,0.8), \(s_{1}(c,d) - s(c,d) = 1 - 0.36 = 0.8^{1+1} = \beta^{k+1}\) holds.

4 Threshold-based RoleSim*

In this section, we propose a threshold-based RoleSim* model that substantially speeds up the computation of RoleSim* similarities at a small cost in accuracy. We establish provable error bounds for this model in terms of a user-specified threshold parameter δ, which controls the trade-off between speed and accuracy.

Through the iterative computation of RoleSim* via (3) and (4), we notice that there are a significant number of node-pairs whose iterative similarity scores sk(∗,∗) are very close to their convergent values s(∗,∗) and thus will not change much in subsequent iterations as k grows. To accelerate RoleSim* computation, we have the following two observations for eliminating such pairs from the unnecessary RoleSim* computations, with guaranteed accuracy.

Observation 1

If the RoleSim* similarity scores of two adjacent iterations, \(s_{k-1}(*,*)\) and \(s_{k}(*,*)\), become quite close to each other after some iterations, then the RoleSim* iterative sequence {sk(∗,∗)} from some iteration k0 onwards is very close to the exact solution s(∗,∗) as well.

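This observation is based on the Cauchy convergence criterion, which tests whether a sequence has a limit. Precisely, since {sk(∗,∗)} converges, for any small user-specified threshold δ > 0 this criterion implies that there exists an integer k0 such that

\( |s_{k-1}(*,*) - s_{k}(*,*)| < \delta \quad (\forall k > k_{0}). \)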

We apply this criterion to skip unnecessary iterative computations for node-pairs whose RoleSim* scores change very little between two consecutive iterations. More specifically, after some iterations, once the gap between \(s_{k-1}(*,*)\) and \(s_{k}(*,*)\) falls below the threshold δ, instead of employing (4) to compute \(s_{k+1}(*,*)\) from \(s_{k}(*,*)\), we simply replace \(s_{k+1}(*,*)\) by the value of \(s_{k}(*,*)\). Therefore, we define the following threshold-based RoleSim* similarity \({\overline {s}}_{k}^{\delta }(*,*)\) based on Observation 1:

\( {\overline {s}}_{0}^{\delta }(a,b) = 1 \)
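The pruning rule of Observation 1 can be sketched per pair as follows. This Python sketch is an illustration, not the authors' implementation: `update` is a hypothetical callback that evaluates (4) for one pair from the current scores. A pair whose score dropped by less than δ between the last two iterations keeps its current value instead of being recomputed, and it stays frozen afterwards because its gap then becomes zero.

```python
def threshold_step_obs1(prev, curr, update, delta):
    """One threshold-based iteration under Observation 1 (sketch).

    prev, curr: dicts mapping node pairs to scores at iterations k-1 and k.
    update(pair, curr): hypothetical callback returning s_{k+1}(pair) via (4).
    Returns the scores for iteration k+1 and the number of skipped pairs."""
    nxt, skipped = {}, 0
    for pair, value in curr.items():
        if prev[pair] - value < delta:      # consecutive scores within delta
            nxt[pair] = value               # reuse s_k instead of evaluating (4)
            skipped += 1
        else:
            nxt[pair] = update(pair, curr)  # ordinary RoleSim* update
    return nxt, skipped
```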

To quantify the difference between the threshold-based RoleSim* similarity \({\overline {s}}_{k}^{\delta }(*,*)\) in (6) and the conventional one sk(∗,∗) in (4) at each iteration k, we show the following theorem.

Theorem 5

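Given a threshold δ, there exists a positive integer k0 such that, for any k > k0 and any two nodes (a,b),

\( 0 \le {\overline {s}}_{k}^{\delta }(a,b) - s_{k}(a,b) \le \tfrac {\beta (1-{{\beta }^{k-{{k}_{0}}}})}{1-\beta }\delta , \qquad (7) \)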

where k0 is the minimum integer that guarantees \({\overline {s}}_{{{k}_{0}}-1}^{\delta }(a,b)-{\overline {s}}_{{{k}_{0}}}^{\delta }(a,b)<\delta \).

Proof

When k = 0, it is apparent that \({{s}_{0}}(a,b)={\overline {s}}_{0}^{\delta }(a,b)=1\).

Let k0 be the minimum integer that guarantees \({\overline {s}}_{{{k}_{0}}-1}^{\delta }(a,b)-{\overline {s}}_{{{k}_{0}}}^{\delta }(a,b)<\delta \) for any two nodes a and b. When 0 < k < k0, we have \({\overline {s}}_{k-1}^{\delta }(a,b)-{\overline {s}}_{k}^{\delta }(a,b)\ge \delta \), so \({\overline {s}}_{k}^{\delta }(a,b)\) is iteratively computed from (6a). Hence,

\( {\overline {s}}_{k}^{\delta }(a,b) = s_{k}(a,b) \quad (0 < k < k_{0}). \)

When k ≥ k0, we have \({\overline {s}}_{k-1}^{\delta }(a,b)-{\overline {s}}_{k}^{\delta }(a,b)<\delta \) for any nodes a and b, so \({\overline {s}}_{k+1}^{\delta }(a,b)\) is directly obtained by (6b), which makes it deviate from \(s_{k+1}(a,b)\) from the k0-th iteration onwards. To quantify the gap between \({\overline {s}}_{k+1}^{\delta }(a,b)\) and \(s_{k+1}(a,b)\) in this case, we notice that

Similarly,

Iteratively, we can obtain that

Since \({\overline {s}}_{k}^{\delta }(a,b)={\overline {s}}_{{{k}_{0}}}^{\delta }(a,b)={{s}_{{{k}_{0}}}}(a,b)\quad (\forall k\ge {{k}_{0}})\), it follows that

□

Theorem 5 indicates that the threshold δ is a user-controlled parameter that trades speed for accuracy. A small δ ensures high accuracy of \({\overline {s}}_{k}^{\delta }(*,*)\), but at the cost of more iteration time, since only a small number of node-pairs can be pruned. Conversely, a larger δ discards more node-pairs from the iterative computation, but yields a larger error bound in (7) between \({\overline {s}}_{k}^{\delta }(*,*)\) and sk(∗,∗).

Example 4

Consider the graph G in Figure 4a. Given threshold δ = 0.01, decay factor β = 0.6, and relative weight λ = 0.8, for pair (a,b) = (2,3), in Figure 4b, we see that

Thus, there exists an integer k0 = 4, such that the error bound in (7) holds for all k > k0, as depicted in Figure 4c. For example, when k = 10, we have


On top of Observation 1, to further speed up the computation of RoleSim*, our second observation for discarding unnecessary RoleSim* iterations is the following:

Observation 2

For a given threshold δ, after some iterations, if the RoleSim* similarity score sk(∗,∗) is within a small δ-neighbourhood of (1 − β), then the RoleSim* iterative sequence {sk(∗,∗)} from some iteration k1 onwards is also within the δ-neighbourhood of (1 − β).

This observation follows from the non-increasing monotonicity and the lower bound of the RoleSim* iterative sequence \(\{s_{k}(*,*)\}_{k=1}^{\infty }\) derived in Theorem 1. We notice that a number of pairs have iterative RoleSim* scores that are very close to the lower bound (1 − β), but have not yet converged to the exact value (1 − β). Iteratively computing such pairs via (4) until convergence is cost-prohibitive. We observe that, once \(s_{k}(*,*)\) becomes close to (1 − β), the value of \(s_{k+1}(*,*)\) in the next iteration is even closer to (1 − β). Hence, we can terminate the iterative computation of \(s_{k+i}(*,*)\) early by simply setting \(s_{k+i}(*,*)\) to (1 − β) for all i = 1,2,⋯, once \(s_{k}(*,*)\) falls into the δ-neighborhood of (1 − β), as defined below:

\( {\underline {s}}_{0}^{\delta }(a,b) = 1 \)
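Observation 2 amounts to a second per-pair test, sketched below in Python. As before, `update` is a hypothetical callback standing in for (4). A score that drops below (1 − β) + δ is snapped to the lower bound (1 − β) and, since it then stays below the threshold, it is never recomputed. The test values mirror Example 5 (β = 0.2, δ = 0.1, a score of 0.899).

```python
def threshold_step_obs2(curr, update, beta, delta):
    """One threshold-based iteration under Observation 2 (sketch).

    curr: dict mapping node pairs to scores at iteration k.
    update(pair, curr): hypothetical callback returning s_{k+1}(pair) via (4)."""
    floor = 1.0 - beta                      # lower bound of RoleSim* scores
    nxt = {}
    for pair, value in curr.items():
        if value < floor + delta:           # inside the delta-neighbourhood of 1 - beta
            nxt[pair] = floor               # snap to the lower bound for good
        else:
            nxt[pair] = update(pair, curr)  # ordinary RoleSim* update
    return nxt
```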

To distinguish it from \({\overline {s}}_{k}^{\delta }(*,*)\) in (6), we denote by \({\underline {s}}_{k}^{\delta }(*,*)\) in (8) the threshold-based RoleSim* similarity based on Observation 2. By definition, \({\underline {s}}_{k}^{\delta }(*,*) \le s_{k}(*,*) \le {\overline {s}}_{k}^{\delta }(*,*)\). The following theorem bounds the difference between sk(∗,∗) in (4) and \({\underline {s}}_{k}^{\delta }(*,*)\) in (8).

Theorem 6

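Given a threshold δ, there exists a positive integer k1 such that, for any k ≥ k1 and any two nodes (a,b),

\( 0 \le s_{k}(a,b) - {\underline {s}}_{k}^{\delta }(a,b) \le \delta -\frac {{{\rho }^{{{k}_{1}}}}-{{\rho }^{k}}}{1-\rho } \xi , \qquad (9) \)

with ρ = β(1 − λ) and \( \xi =1-\underset {a,b}{{{\max \limits } }} \{{s_{1}(a,b)}\}\),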

where k1 is the minimum integer that guarantees \({\underline {s}}_{{{k}_{1}}}^{\delta }(a,b) < 1-\beta + \delta \).

Proof

We first find the lower bound on the gap between sk(a,b) and sk+i(a,b). By definition of (4), when k = 0, it follows from s0(∗,∗) = 1 that

Plugging \(|{{M}_{a,b}}|={\min \limits } (|{{I}_{a}}|,|{{I}_{b}}|)\) and \(|{{I}_{a}}|+|{{I}_{b}}|-|{{M}_{a,b}}|={\max \limits } (|{{I}_{a}}|,|{{I}_{b}}|)\) into the above equation yields

Letting \(\xi =\underset {a,b}{{{\min \limits } }} \{{{\xi }_{a,b}}\} =1-\underset {a,b}{{{\max \limits } }} \{{s_{1}(a,b)}\}\), we have

When k = 1, it follows that

Similarly, when k = 2, we have

Iteratively, we have

Therefore,

Next, capitalising on the lower bound for sk(a,b) − sk+i(a,b), we are going to find the upper bound for \({{s}_{{{k}_{1}}+i}}(a,b)-{\underline {s}}_{{{k}_{1}}+i}^{\delta }(a,b)\). Let k1 be the minimum integer that guarantees \({\underline {s}}_{{{k}_{1}}}^{\delta }(a,b)\le (1-\beta )+\delta \) for any two nodes a and b. Then, when k = k1, we have

Iteratively, when k = k1 + i (i ≥ 1), it follows from \({\underline {s}}_{{{k}_{1}}+i}^{\delta }(a,b)=1-\beta \) that

Thus,

□

Example 5

Consider the digraph G in Figure 5a. Given a threshold δ = 0.1, decay factor β = 0.2, and relative weight λ = 0.55, for node-pair (a,b) = (2,3), we observe that, when k grows to 3, the value of \(\underline {s}_{k}^{\delta }(2,3)\) falls into the δ-neighborhood of (1 − β), i.e., \(\underline {s}_{3}^{0.1}(2,3)=0.899<1-\beta +\delta =1-0.2+0.1=0.9\). Thus, there exists an integer k1 = 3 such that the error bound in (9) holds for all k > k1, as shown in Figure 5c. Then, e.g., when k = 4 (> k1), we have

Putting Them All Together

Combining Observations 1 and 2, we next propose the following complete scheme for threshold-based RoleSim* retrieval. To differentiate the notation from \({\overline {s}}_{k}^{\delta }(*,*)\) in (6) and \({\underline {s}}_{k}^{\delta }(*,*)\) in (8), we denote by \({{s}}_{k}^{\delta }(*,*)\) the threshold-based RoleSim* similarity for our complete scheme combining both Observations 1 and 2, which is defined as follows:

\( s_{0}^{\delta }(a,b) = 1 \)

By virtue of Theorems 5 and 6, the following upper bound on the difference between \(s_{k}^{\delta }(*,*)\) and sk(∗,∗) is immediate.

Corollary 1 (Error Bound for Threshold-Based RoleSim* Iteration)

Given a threshold δ, for any number of iterations k = 0,1,2,⋯, there exist two positive integers k0 and k1 such that for any two nodes (a,b),

where \( {{\epsilon }_{0}}=\tfrac {\beta (1-{{\beta }^{k-{{k}_{0}}}})}{1-\beta }\delta \), and \({{\epsilon }_{1}}= \delta -\frac {{{\rho }^{{{k}_{1}}}}-{{\rho }^{k}}}{1-\rho } \xi \) with ρ = β(1 − λ) and \( \xi =1-\underset {a,b}{{{\max \limits } }} \{{s_{1}(a,b)}\}\); k0 (resp. k1) is the minimum positive integer that ensures \(s_{{{k}_{0}}-1}^{\delta }(a,b)-s_{{{k}_{0}}}^{\delta }(a,b)<\delta \) (resp. \(s_{{{k}_{1}}}^{\delta }(a,b) < 1-\beta + \delta \)).
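Both error terms of Corollary 1 are cheap to evaluate once k0 and k1 are known. The helper functions below (names are illustrative) transcribe 𝜖0 and 𝜖1 directly. For instance, with the parameters of Example 4 (β = 0.6, δ = 0.01, k0 = 4) and k = 10, 𝜖0 = 1.5 × (1 − 0.6⁶) × 0.01 ≈ 0.0143. The value ξ = 0.1 used in the check of 𝜖1 is an arbitrary assumption, since ξ depends on the graph.

```python
def eps0(beta, delta, k, k0):
    """Observation 1 term: beta * (1 - beta**(k - k0)) / (1 - beta) * delta."""
    return beta * (1 - beta ** (k - k0)) / (1 - beta) * delta


def eps1(beta, lam, delta, xi, k, k1):
    """Observation 2 term: delta - (rho**k1 - rho**k) / (1 - rho) * xi, rho = beta(1 - lam)."""
    rho = beta * (1 - lam)
    return delta - (rho ** k1 - rho ** k) / (1 - rho) * xi


print(eps0(0.6, 0.01, 10, 4))                 # parameters of Example 4
print(eps1(0.2, 0.55, 0.1, 0.1, 4, 3))        # xi = 0.1 is an assumed value
```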

5 Scaling RoleSim* search on large graphs

In this section, we propose efficient techniques that enable RoleSim* similarity search to scale well on sizable graphs with billions of edges. Our iterative method (Algorithm 1) needs to memoise all \(|V|^{2}\) pairwise similarities {sk(∗,∗)} at iteration k to compute any similarity at iteration (k + 1). On small graphs, this algorithm runs very fast for all-pairs search. However, real graphs are often large, with millions of nodes, and the \(O(|V|^{2})\) memory required by Algorithm 1 would jeopardise its scalability on such massive graphs. Moreover, in many real-world applications, users are interested only in partial-pairs similarity search. For instance, in a DBLP collaboration network, one may want to find Prof. Jennifer Widom’s close collaborators. In a social graph, one may want to know who Thomas’s close friends on Instagram are. In a web graph, one may wish to identify which web pages are relevant to a given query page. These applications call for a scalable method that retrieves partial-pairs RoleSim* similarities using a small amount of memory. Formally, we address the following RoleSim* search problem:

Problem (Single-source RoleSim* Similarity Search).

Given: a graph G = (V,E), a query node q ∈ V, and a desired depth K (Footnote 1).

Retrieve: the |V| RoleSim* similarities {sK(∗,q)} between all nodes in G and the query q, in a scalable manner.

To avoid using \(O(|V|^{2})\) memory, the central idea underpinning our method is to judiciously cache only a small portion of node-pairs that are involved in heavily repeated similarity computations. More specifically, to evaluate the similarity of each pair (u,q) for single-source {s(∗,q)} retrieval, we start at the root pair (u,q) and employ a depth-first search (DFS) that recursively traverses all the in-neighboring pairs within K hops of the root, following the in-coming edges of the graph, and backtracks when the desired depth K is reached or a “dead end” (i.e., a pair (x,y) in which node x or y has no in-neighbours) is encountered. The recurrence for retrieving each root pair (u,q) can be depicted as a recursion tree. For example, given the graph G in Figure 6a with query node q = 7 and desired depth K = 4, the recursion trees for retrieving each s(x,7) (∀x ∈ V) through DFS are depicted in Figure 6c. We have the following two observations:

(1) There are a number of repeated computations among these recursion trees. For instance, s(4,7) is evaluated three times (circled in red). If the result of s(4,7) is cached and reused in subsequent recurrences, a number of unnecessary RoleSim* computations can be avoided.

(2) When breaking down the traversals of s(3,7) and s(4,7), we notice that their unfolded recurrence structures (circled in blue) are exactly the same, because nodes 3 and 4 have the same in-neighbors, i.e., I(3) = I(4). If the cached result of s(4,7) is reused for evaluating any other s(x,7) with x ∈ V − {4} whose in-neighbors coincide with those of node 4, many more duplicate computations can be skipped.

It is worth mentioning that the parameter K here is the desired search depth, which controls the height of the traversed recursion tree and thus lets users trade speed for accuracy in computing RoleSim* similarity. For example, when K is small, sK(v,q) is fast to compute, but its error with respect to the exact solution s(v,q) increases. When K is large, sK(v,q) approaches s(v,q) and achieves high accuracy, but takes more time, as more steps are needed to traverse the recursion tree. Moreover, bounding the depth by K also prevents the traversal from looping indefinitely around cycles in the graph during RoleSim* retrieval.

Based on these observations, we devise a caching approach in the backtracking of DFS to minimise duplicate RoleSim* similarity computations. Unlike Algorithm 1, which requires \(O(|V|^{2})\) memory to cache all-pairs similarities, we select only the “important” pairs for memoization. We first define the “importance” of a node-pair as follows.

Definition 1

Let (x,y) be a pair of nodes, and |Ox| be the out-degree of node x. The importance of the pair (x,y), denoted as ρ(x,y), is defined as

\( \rho(x,y) := |O_{x}| \times |O_{y}|. \)

Intuitively, Definition 1 uses degree centrality to evaluate the “importance” of a pair since a pair (x,y) is likely to be “important” if nodes x and y are linked to a large number of nodes.

According to Definition 1, during DFS backtracking, when a pair (x,y) is visited, we first check whether ρ(x,y) ≥ 𝜃 to decide whether this pair is worth caching, where 𝜃 is a user-specified threshold between 0 and \(d_{\max \limits }^{2}\) (Footnote 2) that controls the space-speed trade-off. When 𝜃 is set to 0, all pairs in the recursion tree are memoised, which in the worst case reduces to Algorithm 1. When \(\theta > d_{\max \limits }^{2}\), no pair is cached, and the method degrades to the naive recursive retrieval of RoleSim* similarities, which is rather costly. In other words, the choice of 𝜃 trades memory for execution time: the smaller the 𝜃, the more pairs are memoised in memory and, consequently, the lower the execution time. Therefore, an appropriate choice of 𝜃 is important for balancing computational time and memory space (i.e., the number of memoised pairs to be retrieved).

To avoid caching insignificant pairs, we often set 𝜃 to the first quartile of the pairwise out-degree set \(\{\rho (x,y)\}_{(x,y) \in V^{2}}\) of the graph, so that all but the least important pairs are eligible for caching while a moderate amount of memory is used. This choice is motivated by the close relationship between 𝜃 and the number of retrieved pairs N. As demonstrated by our extensive experiments in Figure 7, when 𝜃 is below the first quartile of \(\{\rho (x,y)\}_{(x,y) \in V^{2}}\) on each dataset (Footnote 3) (e.g., DBLP, Bitcoin-α and P2P), a large number of pairs are memoised, with a huge space requirement, but the computation is very fast. When 𝜃 is above the first quartile of \(\{\rho (x,y)\}_{(x,y) \in V^{2}}\), the execution time increases significantly, whereas the number of retrieved pairs decreases sharply. Only when 𝜃 is around the first quartile of \(\{\rho (x,y)\}_{(x,y) \in V^{2}}\) (e.g., 𝜃 ≈ 10 on DBLP, 5 on Bitcoin-α, 60 on P2P) is there a good balance between computational time and memory space (the balancing point is circled in red). Thus, we empirically set 𝜃 to the first quartile of the pairwise out-degree set \(\{\rho (x,y)\}_{(x,y) \in V^{2}}\) of a graph.
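The caching threshold can be prototyped in a few lines. The Python sketch below assumes that the importance of Definition 1 is the out-degree product ρ(x,y) = |Ox| · |Oy|, and takes 𝜃 as the first quartile of all pairwise values using `statistics.quantiles` with its "inclusive" method; other quartile conventions give slightly different thresholds. The edge list is a made-up example.

```python
from collections import Counter
from statistics import quantiles


def out_degrees(nodes, edges):
    """|O_x| for every node x, from a list of directed edges (x, y)."""
    deg = Counter(x for x, _ in edges)
    return {v: deg[v] for v in nodes}


def importance(deg, x, y):
    """Assumed importance of pair (x, y): product of out-degrees."""
    return deg[x] * deg[y]


def first_quartile_threshold(nodes, deg):
    """theta := first quartile of {rho(x, y) : (x, y) in V^2}."""
    values = [importance(deg, x, y) for x in nodes for y in nodes]
    return quantiles(values, n=4, method="inclusive")[0]


nodes = [1, 2, 3, 4]
edges = [(1, 2), (1, 3), (1, 4), (2, 3), (2, 4), (3, 4), (4, 1)]
deg = out_degrees(nodes, edges)
theta = first_quartile_threshold(nodes, deg)
# pairs that would be memoised when visited during DFS backtracking
cached = [(x, y) for x in nodes for y in nodes if importance(deg, x, y) >= theta]
```

Here 𝜃 comes out as 1.75, so only the four pairs among nodes 3 and 4 (importance 1) are excluded from caching.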

Once we decide that a visited pair (x,y) deserves to be cached, we next employ an unordered hash table \({\mathcal T}\) for memoising. Precisely, we first check whether the key (i.e., node-pair (x,y)) exists in the hash table. If not, we compute the RoleSim* similarity s(x,y) once, and add < key,value >:=< (x,y),s(x,y) > to the hash table. Otherwise, we just retrieve the cached similarity value s(x,y) corresponding to the key (x,y) from the hash table instead of computing the similarity s(x,y) again, thus significantly boosting the performance for single-source RoleSim* search. Note that, due to the symmetry of RoleSim* similarity s(x,y) = s(y,x), when hashing the pair (x,y), we will swap x and y beforehand if x > y, to avoid both (x,y) and (y,x) being hashed.

Single-Source Algorithm

The single-source RoleSim* algorithm, referred to as SSRS*, is shown in Algorithm 2. It works as follows. First, it builds a hash table \({\mathcal T}\) (line 1). Next, it invokes a Single-Pair function to evaluate the RoleSim* similarity between each node u ∈ G and the query q, depending on whether the similarity of pair (u,q) is already memoised in \({\mathcal T}\) (lines 2–6). If pair (u,q) is at the last level or has no in-neighbours, its similarity is set to (1 − β) (line 8). Otherwise, the function enumerates all the in-neighboring pairs (a,b) in Iu × Iq (line 11). If (a,b) exists in \({\mathcal T}\), its similarity is retrieved directly from \({\mathcal T}\) without recomputation (line 13); otherwise, the similarity of (a,b) is computed recursively (line 15). Using the similarities of all the in-neighboring pairs of (u,q), the function then computes s(u,q) according to (4) (lines 16–18), and memoises the resulting score if (u,q) is an important pair (line 19). Finally, it returns s(u,q) to the main function (line 20).
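The pseudocode of Algorithm 2 is not reproduced in this section, so the following Python sketch follows only the description above, under stated assumptions. It runs a DFS over in-neighbouring pairs down to depth K, keeps a hash table keyed by the ordered pair (min, max) so that (x,y) and (y,x) share one entry, and caches a score only if the out-degree product of the pair reaches 𝜃 (an assumed form of Definition 1). Formula (4) is assumed to be (1 − β) + β[λ·w1 + (1 − λ)·w2], with the matched similarities normalised by |Iu| + |Iq| − |Mu,q| and the remaining pairs averaged, and a brute-force matcher replaces Jonker–Volgenant. Like the description, it returns (1 − β) at the last level or at a dead end, and it reuses a cached score regardless of the level at which it was computed.

```python
from itertools import permutations


def ssrs_star(in_nbrs, out_deg, q, K, beta=0.8, lam=0.7, theta=0.0):
    """Sketch of single-source RoleSim* (SSRS*) with importance-based caching.

    in_nbrs: node -> list of in-neighbours; out_deg: node -> |O_x|.
    Nodes must be mutually comparable (e.g. ints) for the canonical key."""
    cache = {}                                   # hash table T (line 1)
    floor = 1.0 - beta

    def key(x, y):                               # s(x,y) = s(y,x): one entry per pair
        return (x, y) if x <= y else (y, x)

    def match(Ia, Ib, W):                        # brute-force max weighted matching
        if len(Ia) <= len(Ib):
            return max(sum(W[(x, y)] for x, y in zip(Ia, p))
                       for p in permutations(Ib, len(Ia)))
        return max(sum(W[(x, y)] for y, x in zip(Ib, p))
                   for p in permutations(Ia, len(Ib)))

    def single_pair(u, v, depth):
        if key(u, v) in cache:                   # memoised: reuse, whatever its level
            return cache[key(u, v)]
        Iu, Iv = in_nbrs[u], in_nbrs[v]
        if depth == 0 or not Iu or not Iv:       # last level or dead end
            return floor
        # enumerate in-neighbouring pairs, recursing one level down the DFS
        W = {(a, b): single_pair(a, b, depth - 1) for a in Iu for b in Iv}
        m = min(len(Iu), len(Iv))                # |M_{u,v}|
        w_match = match(Iu, Iv, W)
        w1 = w_match / (len(Iu) + len(Iv) - m)
        rest = len(Iu) * len(Iv) - m
        inner = w1 if rest == 0 else (
            lam * w1 + (1 - lam) * (sum(W.values()) - w_match) / rest)
        score = floor + beta * inner
        if out_deg[u] * out_deg[v] >= theta:     # cache only "important" pairs
            cache[key(u, v)] = score
        return score

    return {u: single_pair(u, q, K) for u in in_nbrs}
```

For example, with in-neighbour lists `{1: [], 2: [1], 3: [1], 4: [2, 3], 5: [2, 3]}`, out-degrees `{1: 2, 2: 2, 3: 2, 4: 0, 5: 0}`, q = 5 and K = 3, the sketch returns 0.2, 0.304, 0.304, 0.488 and 0.488 for nodes 1 to 5 (up to rounding), with or without caching; on this graph every reuse happens at a consistent depth, so caching only saves work.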

Computational Complexity

To analyse the computational time of a single-source RoleSim* query, we show the following theorem:

Theorem 7

Let N be the number of pairs whose RoleSim* similarity scores are retrieved from the hash table in the traversal of the recursion tree with K levels. Assume that the network G is scale-free and follows power-law degree distribution. We denote by pin(d) and pout(d) the fraction of nodes in G having in-degree and out-degree d, respectively, which satisfy \({{p}_{\text {in}}}(d)\propto {{d}^{-{{\gamma }_{\text {in}}}}}\) and \({{p}_{\text {out}}}(d)\propto {{d}^{-{{\gamma }_{\text {out}}}}}\), where γin and γout are the power-law exponents whose values are typically \(2\sim 3\). Let din and dout be the maximum in-degree and out-degree of G, respectively. Then, the average computational time for retrieving RoleSim* similarities between all nodes and a query for K levels is bounded by \(O\big ({{\rho }_{\text {out}}^{2(K-1)}} \left (|V|{{\rho }_{\text {out}}^{2}}-\frac {N}{K} \right ) \big )\) with \({{\rho }_{\text {out}}}:=O\left (\frac {{{({{d}_{\text {out}}})}^{2-{{\gamma }_{\text {out}}}}}-1}{2-{{\gamma }_{\text {out}}}} \right )\).

Proof

We first analyse the computational cost for a single-pair RoleSim* query without using any memoisation optimisation. Since the graph G follows power-law degree distribution, the expected value of the number of in-neighbours of each node is bounded by

where Cin is a constant. Similarly, the expected value of the number of out-neighbouring pairs of any pair is bounded by \({{\rho }_{\text {out}}}:=\tfrac {{{C}_{\text {out}}}}{2-{{\gamma }_{\text {out}}}}\big ({{({{d}_{\text {out}}})}^{2-{{\gamma }_{\text {out}}}}}-1 \big )\), where Cout is a constant. We notice that, to compute each pair of similarity at any level i, the expected value of the number of in-neighbouring pairs that we need to retrieve at level (i − 1) is \(O({{\rho }_{\text {in}}}^{2})\). Since the expected value of the number of node-pairs at level i is bounded by \(O({{\rho }_{\text {out}}}^{2(i-1)} )\) in the average case, the total computational time for evaluating any single-pair similarity at the top level in the average case is bounded by

which implies that \(O(|V|{{\rho }_{\text {out}}}^{2K})\) time is required for single-source retrieval of |V | nodes w.r.t. a query.

However, after using memoisation, this computational cost is significantly reduced. Generally, the amount of computational cost reduction depends on the number of memoised pairs and the position at which the memoised pairs appear. For ease of our analysis, we denote by C(i) the computational cost that can be saved by a pair at level i whose similarity value is obtainable directly from the hash table. Since the expected value of the number of out-neighboring pairs of similarities that need to be retrieved at level (i + 1) is bounded by \(O({{\rho }_{\text {out}}}^{2})\) recursively till level K, the total computational cost of C(i) in the worst case is:

Then, the average computational cost, denoted as \(\bar {C}\), which can be saved by a pair through retrieving its similarity score from the hash table, is as follows:

It follows that the average computational cost for a single-source query is

□
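To get a feel for the bound in Theorem 7, the sketch below evaluates ρ_out and the average-cost expression with the hidden constant C_out set to 1; this is an assumption, since the theorem only states the order of growth. For γ_out between 2 and 3, the exponent 2 − γ_out is negative, so ρ_out stays below 1/(γ_out − 2) however large d_out becomes, and the cost grows with K only through powers of this bounded quantity. The γ_out = 2 case uses the limit ln d_out.

```python
import math


def rho_out(d_out, gamma_out, c_out=1.0):
    """C_out / (2 - gamma_out) * (d_out**(2 - gamma_out) - 1), with C_out assumed 1."""
    g = 2.0 - gamma_out
    if g == 0.0:                      # limit g -> 0 of (d**g - 1) / g is ln d
        return c_out * math.log(d_out)
    return c_out * (d_out ** g - 1.0) / g


def avg_single_source_cost(n_nodes, K, N, d_out, gamma_out):
    """rho_out^(2(K-1)) * (|V| * rho_out^2 - N / K), hidden constants dropped."""
    r = rho_out(d_out, gamma_out)
    return r ** (2 * (K - 1)) * (n_nodes * r ** 2 - N / K)


for gamma in (2.1, 2.5, 3.0):
    print(gamma, rho_out(10**6, gamma))
```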

6 “Sum-transitivity” of RoleSim* similarity

In this section, we investigate the transitive property of the proposed RoleSim* similarity measure. Intuitively, when a similarity measure s(∗,∗) fulfils the transitive property, it means that, for any three nodes a,b,c in the graph, if a is similar to b and b is similar to c, it implies that a is likely to be similar to c. The transitivity feature is useful in many real applications, e.g., for predicting and recommending links in a graph.

Before showing the transitive property of RoleSim*, let us derive a distance d(a,b) := 1 − s(a,b) from the RoleSim* measure. Since s(∗,∗) ∈ [1 − β,1], the distance d(∗,∗) lies between 0 and β. In what follows, we show that d(∗,∗) satisfies the triangle inequality, which implies the transitivity of s(∗,∗).

We first provide the following two lemmas, which will lay the foundation for our proof of the RoleSim* triangular inequality.

Lemma 1

Let sk(∗,∗) be the k-th iterative RoleSim* similarity via (3) and (4). For any 3 nodes (a,b,c) in a graph, if sk(a,b) + sk(b,c) − sk(a,c) ≤ 1 holds at iteration k, the following inequality holds:

Proof

Without loss of generality, we only consider the case of \(\left | {{I}_{a}} \right |\le \left | {{I}_{b}} \right |\le \left | {{I}_{c}} \right |\). The proofs for other cases are similar, and omitted here due to space limitation. In this case, we have

Hence, the left-hand side (LHS) of (12) can be rewritten as

We first find an upper bound on Part 1. Since \( \sum \limits _{(x,y)\in {{M}_{a,b}}}\!\!\!\!\!\!\! {{{s}_{k}}(x,y)} \le \!\!\!\!\!\!\!\!\! \sum \limits _{(x,y)\in {{M}_{a,b}}} \!\!\!\!\!{1} =|{{M}_{a,b}}|\), it follows that

To get an upper bound for Part 2, let

Then, Mb,c can be partitioned into two parts: \({{M}_{b,c}}=M_{b,c}^{(1)}\cup M_{b,c}^{(2)}\) where

Therefore,

Substituting (14) and (15) into (13) produces

□

Lemma 2

For any 3 nodes (a,b,c) in a graph, if sk(a,b) + sk(b,c) − sk(a,c) ≤ 1 holds at iteration k, then it follows that

Proof

For each x ∈ Ia, there exist yx ∈ Ib and zx ∈ Ic such that (x,yx) ∈ Ma,b and (x,zx) ∈ Ma,c. Then, for each z ∈ Ic −{zx}, there exists y ∈ Ib such that

Summing both sides of the inequality over all z ∈ Ic −{zx} and all y ∈ Ib yields

where

Therefore, it follows that

Summing both sides of the inequality over all x ∈ Ia produces

Since \(\bigcup \limits _{x\in {{I}_{a}}}{\{(x,{{y}_{x}})\}}={{M}_{a,b}}\) and \(\bigcup \limits _{x\in {{I}_{a}}}{\{(x,{{z}_{x}})\}}={{M}_{a,c}}\), we divide both sides of the inequality by \((|{{I}_{a}}|\times \left (|{{I}_{c}}|-1 \right )\times |{{I}_{b}}|)\) to get LHS of (16) ≤ 1. □

Example 6

Recall the graph G in Figure 1 and the three node-pairs (1,2), (2,3), (3,1) in G. For each pair (e.g., (1,2)), the RoleSim* similarities of all its in-neighboring pairs are arranged in a grid (e.g., I1 × I2) in Figure 8. The green cells in each grid (e.g., I1 × I2) correspond to the similarities over the maximum bipartite matching (e.g., M1,2), and the remaining orange cells denote the similarities outside the bipartite matching (i.e., in I1 × I2 − M1,2). Lemma 1 indicates that the similarity values in the green cells satisfy

An illustrative example of Lemmas 1 and 2. The similarity grids for the in-neighbouring pairs of the three node-pairs (1,2), (1,3), (2,3) in Figure 1 are visualised to illustrate the RoleSim* triangle inequality

Similarly, Lemma 2 implies that the similarity values in the orange cells satisfy

Leveraging Lemmas 1 and 2, we are now ready to show the main result of this section: the RoleSim* distance satisfies the triangle inequality, which yields the sum-transitivity of RoleSim* similarity:

Theorem 8 (RoleSim* Triangle Inequality)

We denote by d(a,b) := 1 − s(a,b) the distance between nodes a and b. Then, for any three nodes a, b, c in a graph, the following triangle inequality holds:

\( d(a,c) \le d(a,b) + d(b,c). \qquad (18) \)

Proof

By the definition of d(a,b) := 1 − s(a,b), based on the fact that

in what follows we will prove (18) holds by induction on k. For k = 0, by virtue of (3), it is apparent that

For the inductive step, we assume that \(s_{k}(a,b) + s_{k}(b,c) - s_{k}(a,c) \le 1\) holds for some k ≥ 0, and will prove that \(s_{k+1}(a,b) + s_{k+1}(b,c) - s_{k+1}(a,c) \le 1\) holds.

Let P1 and P2 be left-hand side (LHS) of (12) and (16), respectively. According to Lemmas 1 and 2, it follows from P1 ≤ 1 and P2 ≤ 1 that

□
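Theorem 8 gives a cheap sanity check for any computed similarity table: every triple must satisfy s(a,b) + s(b,c) − s(a,c) ≤ 1, which is (18) rewritten in terms of s. The Python sketch below (the function name is illustrative) lists the violating triples for a dictionary holding s over all ordered pairs; a small tolerance absorbs floating-point rounding from the iterations.

```python
from itertools import product


def triangle_violations(sim, nodes, tol=1e-12):
    """Triples (a, b, c) with d(a, c) > d(a, b) + d(b, c), where d = 1 - s.

    sim: dict mapping every ordered pair (x, y) to s(x, y)."""
    def d(x, y):
        return 1.0 - sim[(x, y)]

    return [(a, b, c) for a, b, c in product(nodes, repeat=3)
            if d(a, c) > d(a, b) + d(b, c) + tol]
```

An empty result is what Theorem 8 predicts for exact RoleSim* scores; a non-empty one points to a bug or to an approximation that broke the property.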

7 Scaling RoleSim* search using triangle inequality and partitioning

RoleSim*-based similarity search can also be scaled via pruning based on the triangle inequality. With some pre-computation, one can prune node-pairs that need not be evaluated and eliminate nodes from the candidate list, producing a ranked list of the top-k similar nodes with less computation. We first discuss a strategy for retrieving approximate node-pair results based on graph partitioning. We then discuss exact single-source computation of the most similar nodes to a query q, by indexing based on the distance to some chosen keys.

First, we consider a simple graph partitioning-based strategy, where graph G is partitioned using a vertex separator method into parts of roughly equal size. We refer to the vertex separator (also called vertex cut or separating set) as VS: the nodes in VS are those whose removal from G separates it into its partitions. For our purposes, we include the nodes in VS, with their corresponding edges, in each of the partitions. That is, the nodes in VS are the only nodes present in every subgraph constructed by this partitioning approach.

Example 7

Consider the digraph G in Figure 9a, where a 2-way partitioning has been performed, resulting in the vertex separator VS = {3,7}. Subgraphs G1 and G2 are constructed such that \(V_{G_1} = \{1,2,3,7\}\) and \(V_{G_2} = \{3,4,5,6,7\}\). Using our single-source approach, the similarities from the nodes in VS to all nodes in G can be pre-computed. Given a decay factor β = 0.8 and relative weight λ = 0.7, the exact RoleSim* similarities are computed (after k iterations) and cached for all s(v,∗) with v ∈ VS. Now, for node-pair (2,1), the exact similarity s(2,1) may be approximated by sP(2,1), which considers only the pruned graph G1 and uses the pre-computed value of s(3,7) in every iteration. The similarity score sPA(2,1) also uses the pre-computed s(3,7), but additionally allows access to neighboring nodes that are present in G2. As seen from Figure 9b and c, sPA(2,1) is more accurate but less efficient, as it accesses a larger graph.

Approximate RoleSim* node-pair similarity retrieval that caches the similarities between vertex separators to all nodes and uses triangle inequality to compute approximate results for node-pairs (2,3) (case 1), (2,1) (case 2), (2,4) (case 3), where two partitions are encircled in red and blue respectively

Consider a query node q. To compute its similarity to any node n in G, there are three different cases:

1. One or both nodes are vertex separators, i.e., q ∈ VS or n ∈ VS: an exact value of s(q,n) has already been pre-computed.
2. Both nodes are in the same partition, say q,n ∈ G1: an approximate value of s(q,n) can be computed by taking G1 as the input graph and discarding all nodes/edges of G2.
3. The nodes are in different partitions, say q ∈ G1 and n ∈ G2: an approximate (lower-bound) value of s(q,n) can be directly computed from the pre-computed exact values of s(v,∗) by using the triangle inequality of RoleSim*.

While case 1 returns the exact value, cases 2 and 3 lead to approximate results. This is depicted in Figure 9d, where numerous additional similarity computations (Figure 9e) are avoided by retrieving the values stored for nodes in VS and using them to compute approximate results. In case 2, when G is pruned into G1, the discarded node/edge connections no longer contribute to the similarity computation during RoleSim* traversals, leading to inexact results. Case 3, with q ∈ G1, n ∈ G2 and pre-computed exact values of s(v,∗), is explained in further detail below:

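Using the triangle inequality (18), we know that, for all v ∈ VS,

\( d(q,n) \le d(q,v) + d(v,n), \quad \text{i.e.,} \quad s(q,n) \ge s(q,v) + s(v,n) - 1. \)

Hence, using the pre-computed values s(v,∗), the largest of these values is a lower bound on s(q,n):

\( s(q,n) \ge \max_{v \in V_S} \{ s(q,v) + s(v,n) - 1 \}. \)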
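Case 3 therefore costs a single pass over the separators. The Python sketch below (function name and scores are hypothetical) stores the pre-computed scores per separator and reads s(q,v) as s(v,q) by symmetry; the test uses q = 2 ∈ G1 and n = 5 ∈ G2 with VS = {3,7} as in Example 7, but the scores themselves are made up.

```python
def cross_partition_lower_bound(s_sep, q, n):
    """Case 3: lower bound on s(q, n) for q and n in different partitions.

    s_sep: dict v -> {node: s(v, node)} for every vertex separator v, so that
    s(q, v) is read as s(v, q) by symmetry. Each separator gives
    s(q, n) >= s(q, v) + s(v, n) - 1; the largest bound is kept."""
    return max(row[q] + row[n] - 1.0 for row in s_sep.values())
```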

This can be extended to k-way partitioning: the vertex separator set is formed by taking one end node of each cut edge in any k-way partitioning scheme, and it is included in each of the subgraphs.

Next, we discuss the challenge of returning an exact solution for the most similar nodes to a query q. The vertex separator set VS is taken as a set of keys whose similarities to all nodes are pre-computed. From the triangle inequality, we know that:

Hence, a lower bound on d(q,n) can be obtained by computing:

\[ d(q,n) \ge \max_{v \in VS} \left| d(q,v) - d(v,n) \right| \tag{20} \]

Given a threshold \(s_{\min}\), we want to find all nodes \(n_i\) such that \(s(q,n_i) \geq s_{\min}\), that is, \(d(q,n_i) \leq \xi\) where \(\xi = 1 - s_{\min}\). The lower bounds on \(d(q,n_i)\) for all nodes \(n_i\) are given by (20). Any node \(n_i\) whose lower bound exceeds \(\xi\) can be pruned from the candidate list, and only the remaining candidates, denoted \(V_k\), require exact distance computations. In large graphs, careful partitioning keeps the number of vertex separators small, and when \(|V_k| \ll |V|\), the number of distance computations is substantially reduced.
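The pruning step can be sketched as a filter over the cached separator distances. This is an illustrative sketch only: `threshold_candidates` and the cache layout `sep_dist[v][x] = d(v, x) = 1 − s(v, x)` are hypothetical, and the surviving candidates would still need an exact RoleSim* check against \(\xi\).

```python
from typing import Dict, Hashable, Iterable, List

Node = Hashable


def threshold_candidates(
    q: Node,
    nodes: Iterable[Node],
    sep_dist: Dict[Node, Dict[Node, float]],  # hypothetical cache: d(v, x) = 1 - s(v, x)
    s_min: float,
) -> List[Node]:
    """Keep nodes whose lower bound max_v |d(q,v) - d(v,n)| does not exceed xi."""
    xi = 1.0 - s_min
    candidates = []
    for n in nodes:
        # d(q, v) = d(v, q) by symmetry, so each separator row gives both terms
        lower = max((abs(row[q] - row[n]) for row in sep_dist.values()), default=0.0)
        if lower <= xi:  # cannot be ruled out; still needs an exact RoleSim* check
            candidates.append(n)
    return candidates


sep_dist = {"v": {"q": 0.25, "a": 0.5, "b": 1.0, "c": 0.25}}
print(threshold_candidates("q", ["a", "b", "c"], sep_dist, s_min=0.5))  # ['a', 'c']
```

Node b is pruned because its lower bound |0.25 − 1.0| = 0.75 already exceeds \(\xi = 0.5\); the bound never discards a node that truly satisfies the threshold.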

One can use this approach to prune partitions while identifying the top-k nodes most similar to query q. Consider subgraphs \(G_j\) obtained by partitioning G, each containing a single vertex separator \(v_j\) (\(j = 1,\cdots,N_P\) for the \(N_P\) partitions). Using the pre-computed values \(d(v_j,\ast)\), (19) gives a lower bound on \(d(q,n_i)\) for every \(n_i \in V_{G_j}\). The minimum of these lower bounds within a partition is a lower bound on the distance from q to any node of that partition, which helps to index the partitions:

\[ \min_{n_i \in V_{G_j}} \left| d(q,v_j) - d(v_j,n_i) \right| \]

Let us denote these lower bounds as \(\xi_{G_j}\) for each subgraph \(G_j\). Without loss of generality, suppose \(\xi_{G_1} > \xi_{G_2}\) for a 2-way partitioning of G. Thus, every node in \(G_1\) is necessarily at least \(\xi_{G_1}\) away from q. We first compute exact distances for all nodes in \(G_2\). If at least k of these nodes have a distance to q of at most \(\xi_{G_1}\), the nodes of \(G_1\) need not be considered, and the resulting top-k can be returned directly. Otherwise (fewer than k nodes of \(G_2\) lie within \(\xi_{G_1}\) of q), the nodes of \(G_1\) must be processed and inserted into the top-k ranking based on their computed distances. The same process continues through the ordered set of \(\xi_{G_j}\) values for multi-way partitioned data.
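The partition-ordered search can be sketched with a bounded max-heap. This is a minimal sketch under stated assumptions, not the paper's code: `topk_partition_pruning`, the cache `sep_dist`, and the callable `exact_distance` (a stand-in for an exact RoleSim* distance computation) are all hypothetical names.

```python
import heapq
from itertools import count
from typing import Callable, Dict, Hashable, List, Tuple

Node = Hashable


def topk_partition_pruning(
    q: Node,
    k: int,
    partitions: Dict[int, List[Node]],               # node list of each subgraph G_j
    sep_of: Dict[int, Node],                         # the single separator v_j of G_j
    sep_dist: Dict[Node, Dict[Node, float]],         # cached d(v_j, x) for all x
    exact_distance: Callable[[Node, Node], float],   # stand-in for exact RoleSim* distance
) -> List[Tuple[float, Node]]:
    if k <= 0:
        return []
    # xi_{G_j}: smallest per-node lower bound |d(q,v_j) - d(v_j,n)| inside G_j.
    order = []
    for pid, nodes in partitions.items():
        row = sep_dist[sep_of[pid]]
        xi = min((abs(row[q] - row[n]) for n in nodes), default=float("inf"))
        order.append((xi, pid))
    order.sort()  # visit the partition with the smallest bound first

    heap: list = []  # max-heap of the current top-k as (-distance, tie_breaker, node)
    tie = count()
    seen = {q}
    for xi, pid in order:
        if len(heap) == k and -heap[0][0] <= xi:
            break  # every remaining node is at least xi away: the top-k is final
        for n in partitions[pid]:
            if n in seen:
                continue  # skip q itself and separators shared by several subgraphs
            seen.add(n)
            entry = (-exact_distance(q, n), next(tie), n)
            if len(heap) < k:
                heapq.heappush(heap, entry)
            elif entry[0] > heap[0][0]:  # strictly closer than the current k-th node
                heapq.heapreplace(heap, entry)
    return sorted(((-neg_d, n) for neg_d, _, n in heap), key=lambda t: t[0])


partitions = {0: ["q", "a", "b", "v"], 1: ["v", "c", "d"]}
sep_of = {0: "v", 1: "v"}
sep_dist = {"v": {"q": 0.25, "a": 0.25, "b": 0.5, "v": 0.0, "c": 1.0, "d": 0.875}}
true_dist = {"a": 0.125, "b": 0.375, "v": 0.25, "c": 0.875, "d": 0.75}
calls = []


def exact(q, n):
    calls.append(n)
    return true_dist[n]


print(topk_partition_pruning("q", 2, partitions, sep_of, sep_dist, exact))
# [(0.125, 'a'), (0.25, 'v')]
print(calls)  # ['a', 'b', 'v']: nodes c and d of the second partition are never evaluated
```

The tie-breaking counter keeps heap entries comparable even when two nodes have equal distances, so node identifiers never need to be orderable.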

8 Experimental evaluation

8.1 Experimental settings

Datasets.

We use eight real datasets of different scales, summarized below:

| Scale | Dataset | Abbr. | \|V\| | \|E\| | \|E\|/\|V\| | Type |
|---|---|---|---|---|---|---|
| small | DBLP | DBLP | 2,372 | 7,106 | 2.99 | Undirected |
| small | Amazon | AMZ | 5,086 | 8,970 | 1.76 | Directed |
| medium | HEP-Citation | CIT | 34,546 | 421,578 | 12.20 | Directed |
| medium | P2P-Gnutella | P2P | 62,586 | 147,892 | 2.36 | Directed |
| medium | Email-EuAll | EML | 265,214 | 420,045 | 1.58 | Directed |
| large | Web-Google | WEB | 875,713 | 5,105,039 | 5.82 | Directed |
| large | YouTube | YOU | 1,134,890 | 2,987,624 | 2.63 | Undirected |
| large | LiveJournal | LJ | 4,847,571 | 68,993,773 | 14.23 | Directed |

- DBLP. A collaboration (undirected) graph taken from the DBLP bibliography. We extract a co-authorship subgraph from six top conferences in computer science (SIGMOD, VLDB, PODS, KDD, SIGIR, ICDE) during 2018–2020. There is an edge between two authors (nodes) if they co-authored a paper.

- Amazon. A co-purchasing graph crawled from the “Customers Who Bought This Item Also Bought” feature of Amazon. Each node is a product, and an edge i → j means that product j appears in the frequent co-purchasing list of i.

- HEP-Citation. A citation digraph of arXiv physics articles. Nodes represent papers, and there is a directed edge from paper u to paper v if u cites v.

- P2P-Gnutella. A file-sharing graph from the Gnutella peer-to-peer network. Nodes represent hosts, and each edge denotes a connection from one host to another in the Gnutella network.

- Email-EuAll. A digraph constructed from the emails of a research institute. Each node represents an email address, and there is a link from node u to v if at least one email was sent from u to v.

- Web-Google. A Google web digraph from the SNAP network repository. Nodes represent web pages, and directed edges represent hyperlinks from one web page to another.

- YouTube. A friendship (undirected) graph from the YouTube video-sharing website, an online social network. Nodes denote users, and edges are the friendship relations between them.

- LiveJournal. A large friendship graph from the LiveJournal online community. Nodes are users, and each edge i → j is a recommendation of user j by user i.

All experiments are conducted on a PC with an Intel Core i7-10510U 2.30 GHz CPU and 16 GB RAM, running Windows 10. Each experiment is repeated 5 times, and the average is reported.

Compared Algorithms

We implement the following algorithms in VC++:

| Model | Abbr. | Description |
|---|---|---|
| RoleSim* | RS* | Our proposed RoleSim* model (Algorithm 1). |
| Single-Src RS* | SSRS* | Our proposed single-source RoleSim* model (Algorithm 2). |
| SimRank | SR | A pairwise similarity model proposed by Jeh and Widom [7]. |
| MatchSim | MS | A model relying on the matched neighbors of node-pairs [17]. |
| RoleSim | RS | A model that ensures the automorphic equivalence of nodes [9]. |
| RoleSim++ | RS++ | An enhanced RoleSim that considers in- and out-neighbors [25]. |
| CentSim | CS | A model that compares the centrality of node-pairs [14]. |

Parameters

We use the following default parameters: (a) damping factor β = 0.8, (b) relative weight λ = 0.7, and (c) total number of iterations K = 5.

Unsupervised Semantic Evaluation

We design an unsupervised evaluation setting to quantify the effectiveness of the similarity measures. We use self-similarity as the ground truth and study the effect of sampling the immediate neighborhood of a query node on similarity scores in RoleSim* compared with SimRank and RoleSim.

Our evaluation is inspired by the problem of detecting duplicate nodes in a network simply by examining their neighborhoods for similar patterns. In many applications, the underlying network contains duplicate entities with noisy, incomplete, and partially overlapping information, such as a social network that has been scraped from multiple sources. The similarity of duplicate nodes is expected to be high. We treat duplicate entities as separate nodes, where each duplicate has some sample of the total set of neighboring edges available to the entity. For example, in a co-purchasing product graph (AMZ), duplicates may arise when merging multiple e-commerce sources, or when identical products are sold by different sellers. Each such duplicate is frequently co-purchased with some of the same products, so the duplicates share common neighbors. Similarly, misspelled author names or multiple sources for a paper can lead to duplicates in co-authorship and citation networks (DBLP, CIT).

Consider a single query node q. In our experiments, we create a node \(q'\) and add it to the graph. We connect \(q'\) to a proportion (η) of the neighbors of q, and hereafter refer to \(q'\) as the “sampled clone”. The similarity scores of q to all other nodes in the graph are computed using SimRank, RoleSim, and RoleSim*, and we evaluate how well the relative similarities are preserved under each measure. First, we vary η for \(q'\) with step size 0.25 (ensuring no orphaned nodes) while varying λ ∈ {0.0, 0.3, 0.5, 0.7, 1.0} for RoleSim*, and compare the resulting similarity scores. In a similar experiment, we vary both η (for q) and \(\eta'\) (for \(q'\)), each with step size 0.25, which results in some overlap of the two neighborhoods as η and \(\eta'\) grow towards 1. These results are aggregated over 20 queries on the DBLP and AMZ graphs respectively, where the query nodes are chosen among high-degree nodes.
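A minimal sketch of how a sampled clone might be added, assuming an undirected graph (as for DBLP) stored as an adjacency dict of sets with orderable node ids. The function name `add_sampled_clone` and the rounding rule for the number of sampled edges are our own assumptions, not details given in the text; the `max(1, …)` guard mirrors the "no orphaned nodes" condition.

```python
import random
from typing import Dict, Hashable, List, Set

Node = Hashable


def add_sampled_clone(
    adj: Dict[Node, Set[Node]], q: Node, eta: float, clone: Node, rng: random.Random
) -> List[Node]:
    """Add node `clone` linked to a random fraction eta of q's neighbours (undirected)."""
    neighbours = sorted(adj[q])  # sorted so that a seeded rng gives reproducible samples
    m = max(1, round(eta * len(neighbours)))  # at least one edge: no orphaned clone
    sample = rng.sample(neighbours, m)
    adj[clone] = set(sample)
    for n in sample:
        adj[n].add(clone)  # keep the adjacency symmetric
    return sample


adj = {0: {1, 2, 3, 4}, 1: {0}, 2: {0}, 3: {0}, 4: {0}}
sample = add_sampled_clone(adj, q=0, eta=0.5, clone=99, rng=random.Random(7))
print(len(sample), adj[99] == set(sample))  # 2 True
```

The clone is deliberately not linked to q itself, so any similarity between q and the clone has to come from their shared (sampled) neighborhood.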

8.2 Experimental results

Semantic Accuracy

We first count the number of queries for which the sampled clone \(q'\) appears among the top-k (k = 1, 5, 10) nodes most similar to query q under RoleSim*. Intuitively, this measures how much structural information about a query node is captured. Figure 10a presents the number of such queries out of 20 on the undirected DBLP graph, considering the top-5 similarity scores. The other top-k plots are omitted, but they show that, for a given sampling proportion, the number of such queries grows as k increases, even at lower λ.
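The hit count described above can be sketched as follows, assuming the full score row s(q, ·) of each query is available as a dict. The names `clone_hit_count`, `scores` and `clone_of` are hypothetical; ties among equal scores are broken by iteration order.

```python
import heapq
from typing import Dict, Hashable

Node = Hashable


def clone_hit_count(
    scores: Dict[Node, Dict[Node, float]],  # scores[q][x] = s(q, x) for every node x
    clone_of: Dict[Node, Node],             # clone_of[q] = the sampled clone q'
    k: int,
) -> int:
    """Number of queries whose sampled clone is among the top-k nodes most similar to q."""
    hits = 0
    for q, row in scores.items():
        top = heapq.nlargest(k, (x for x in row if x != q), key=row.__getitem__)
        if clone_of[q] in top:
            hits += 1
    return hits


scores = {
    "q1": {"q1": 1.0, "c1": 0.9, "x": 0.5, "y": 0.4},
    "q2": {"q2": 1.0, "c2": 0.1, "x": 0.5, "y": 0.4},
}
clone_of = {"q1": "c1", "q2": "c2"}
print([clone_hit_count(scores, clone_of, k) for k in (1, 3)])  # [1, 2]
```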

Next, we test the impact of the sampling proportion η and of λ on the ranking quality of RoleSim*. We plot the average ranking quality, measured by normalized discounted cumulative gain (nDCG), over the top-100 nodes most similar to the sampled clone, compared against the baseline ranking of the original query. We observe that the trend with respect to η seen in Figures 10b and 11b for λ = 1 resembles that of RoleSim, and the trend for λ = 0.5 is close to that of SimRank.
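The text does not spell out how relevance is assigned when the clone's top-100 list is compared with the original query's ranking. The sketch below uses the standard nDCG@k definition, DCG = Σ rel_i / log2(i + 1) over ranks i = 1..k, normalized by the DCG of the ideal ordering. Taking a node's relevance to be its similarity to q in the baseline list is our assumption, and `ndcg_at_k` is a hypothetical name.

```python
import math
from typing import Dict, Hashable, List

Node = Hashable


def ndcg_at_k(ranked: List[Node], relevance: Dict[Node, float], k: int) -> float:
    """nDCG@k of `ranked` against graded relevance labels (missing nodes count as 0)."""
    # enumerate starts at 0, so rank i + 1 is discounted by log2((i + 1) + 1)
    dcg = sum(relevance.get(n, 0.0) / math.log2(i + 2) for i, n in enumerate(ranked[:k]))
    ideal = sorted(relevance.values(), reverse=True)[:k]
    idcg = sum(r / math.log2(i + 2) for i, r in enumerate(ideal))
    return dcg / idcg if idcg > 0 else 0.0


# Assumed relevance: baseline similarities of nodes to the original query q.
baseline = {"a": 0.9, "b": 0.6, "c": 0.3}
print(ndcg_at_k(["a", "b", "c"], baseline, k=3))  # 1.0
print(ndcg_at_k(["c", "b", "a"], baseline, k=3) < 1.0)  # True
```

Graded relevance rewards a clone ranking that places the query's most similar nodes near the top, whereas a binary hit/miss relevance would ignore how well the order is preserved.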

We further fix λ = 0.7 and confirm that RoleSim* achieves higher ranking quality, in terms of average nDCG, than SimRank and RoleSim. Figure 10c, for the undirected DBLP graph, shows that RoleSim* produces a more consistent nDCG even with small η. For the directed AMZ graph (Figure 11c), RoleSim also shows significant improvement at lower sampling rates, and the performance of SimRank is negatively affected throughout, while RoleSim* remains stable.

Finally, we examine the results of sampling q and \(q'\) together (with proportions η and \(\eta'\) of their neighboring edges, respectively). For the resulting top-k similarity lists, we count the number of queries (out of 100) for which the clone \(q'\) appears in the top-10 results for q (plots are omitted here). This provides an estimate of the number of duplicate entities that can be correctly identified. We note that RoleSim and RoleSim* are both heavily affected when there is a large mismatch in the sampled neighborhood sizes. This suggests that the exact identity of the neighboring nodes matters less than the relative structure of connectivity patterns within the neighborhood: although neighborhoods are sampled at random, the results peak only when the two neighborhood sizes are close to each other (i.e., when η and \(\eta'\) are equal).

Overall, the nDCG scores of RoleSim* are superior to those of RoleSim, while SimRank performs poorly when sampling rates are low. Together, these results indicate that, for the task of identifying duplicate entities, RoleSim* is best suited to correctly identifying a match when presented with a noisy sample of edge connections from the duplicate node. In particular, taking only a small sample of edges from both the duplicate and the original nodes produces the best matching results.

Qualitative Case Study

Table 2 compares the similarity rankings produced by three algorithms (SR, RS and RS*) when retrieving the top-10 authors most similar to the query “Philip S. Yu” on DBLP. The top rankings of RS* are similar to those of RS, highlighting its ability to effectively capture automorphically equivalent neighboring information. For instance, “Jure Leskovec” ranks near the top of the RS* list. This is reasonable because he and “Philip S. Yu” have similar roles: both are professors in Computer Science with closely related research expertise (e.g., knowledge discovery, recommender systems, commonsense reasoning). However, the rankings of RS* differ from those of SR. For example, “Jure Leskovec” is ranked 350th by SR, but 4th by RS* and RS. This is because SimRank only captures connecting paths between two authors and ignores their automorphically equivalent structure. “Jure Leskovec” has few collaborations with “Philip S. Yu”, either direct or indirect, which leads to a low SimRank score.

To evaluate RS* further, we choose two values λ ∈ {0.6, 0.8} to show how the RS* ranking results are perturbed w.r.t. λ. When λ is varied from 0.6 to 0.8, nodes with small SR scores (e.g., “Jure Leskovec”) keep a stable position in the RS* ranking, whereas nodes with higher SR scores (e.g., “Huan Liu”) change substantially. This conforms to our intuition because “Huan Liu” collaborates more closely with “Philip S. Yu” than “Jure Leskovec” does, and RS* captures both the connectivity and the automorphic equivalence of two authors using a balancing weight λ. Thus, compared with “Jure Leskovec”, “Huan Liu”, who has a higher SimRank score with “Philip S. Yu”, is more sensitive to changes in λ, as expected.

Computational Time

The second set of experiments evaluates the computational time of seven algorithms (SSRS*, RS*, RS, MS, RS++, CS, SR) on various real-life datasets, including both medium graphs (e.g., CIT, P2P, EML) and large graphs (e.g., WEB, YOU, LJ).