Abstract

Runoff forecasting is one of the important non-engineering measures for flood prevention and disaster reduction. Accurate and reliable runoff forecasting depends largely on advances in science and technology, and many machine learning models have been proposed for runoff forecasting in recent years. Considering the nonlinearity and real-time nature of hourly rainfall and runoff data, this study proposes two runoff forecasting models: the combination of the bidirectional gated recurrent unit and backpropagation (BGRU-BP) neural network, and the combination of the bidirectional long short-term memory and backpropagation (BLSTM-BP) neural network. The two models were compared with the gated recurrent unit (GRU), long short-term memory (LSTM), bidirectional gated recurrent unit (BGRU), and bidirectional long short-term memory (BLSTM) models. The methods were applied to simulate runoff at the Yanglou hydrological station, Northern Anhui Province, China. The results show that the bidirectional models were superior to the unidirectional models, and the backpropagation (BP) based bidirectional models were superior to the plain bidirectional models. Bidirectional propagation helped improve the generalization ability of the model, and the BP neural network better guided the model toward the optimal nonlinear relationship. The results also show that the BGRU-BP model performs as well as the BLSTM-BP model. Because the BGRU-BP model has fewer parameters and a shorter training time, it may be the preferred method for short-term runoff forecasting.

1 Introduction

Accurate simulation of the rainfall-runoff process is important for water resources and water environment quality management. Over the last few decades, climate and land use change have increased the frequency of extreme precipitation and flood disasters (Rajib and Merwade 2017), making runoff prediction complex and difficult. The formation and development of runoff is highly nonlinear because of the uncertainty and randomness of natural geographical and human factors. Moreover, runoff prediction is one of the important non-engineering measures for flood prevention and disaster reduction. A high-precision runoff prediction model can provide technical guidance and theoretical support for flood forecasting and early warning (Amengual et al. 2017). Therefore, how to obtain a high-precision runoff prediction model and effectively predict the runoff time series is a great challenge for hydrologists (Yuan et al. 2018). Faced with this challenge, various methods have been proposed for predicting runoff.

Existing runoff prediction models can be divided into two categories: physics-based models and empirical models (Young and Liu 2015; Kratzert et al. 2018). Physics-based models, such as TOPMODEL (Beven et al. 1984), Sacramento (Anderson et al. 2006), Xin’anjiang (Bao et al. 2010), SWAT (Spruill et al. 2000), and SWMM (De Paola et al. 2018), simulate hydrological processes based on the physical characteristics of the watershed. These models can simulate the hydrological generation process more accurately but require substantial parameter calibration, which limits their adaptability to other watersheds. Empirical models are mainly represented by data-driven models, such as statistical models, machine learning models, and deep learning models. Statistical models, such as autoregressive (AR) (Salas et al. 1985), autoregressive integrated moving average (ARIMA) (Montanari et al. 1997; Phan and Nguyen 2020), and seasonal autoregressive integrated moving average (SARIMA) (Valipour 2015; Xu et al. 2019) models, use statistical correlation methods to predict future runoff from historical runoff observations. These models can accurately describe linear correlation but have difficulty capturing nonlinear relationships. Machine learning models include wavelet neural networks (Londhe and Charhate 2010; Makwana and Tiwari 2014), support vector machines (SVM) (Liong and Sivapragasam 2002), neuro-fuzzy models (Lohani et al. 2014; Adnan et al. 2020), and artificial neural networks (ANN) (Kumar et al. 2016; Bomers et al. 2019). These models solve the problem of fitting nonlinear sequences (Mosavi et al. 2018), but they have limited ability to extract deeper information.

With the improvement of computer performance, the above problems have been solved by deep learning models. The deep learning models, such as long short-term memory (LSTM) network (Qi et al. 2019; Xiang et al. 2020) and gated recurrent unit (GRU) network (Gao et al. 2020; Liu et al. 2022), have been widely used in runoff prediction. The advantage of deep learning models is that they will not be affected by the external physical environment. They can learn the deep correlation relationship between data to establish the quantitative relationship (Fu et al. 2019) between input and output, and effectively capture the nonlinearity of rainfall-runoff relationships.

However, as the time step of the series gets shorter, the generalization ability of data-driven models may decrease (Liu et al. 2021a). According to a large number of experiments and studies, the non-stationarity of time series (Wang et al. 2019) has a great influence on prediction accuracy. The rainfall-runoff process is a complex time series governed by multi-scale laws, and hourly precipitation and runoff data are poorly stationary. Among conventional machine learning methods, the backpropagation (BP) neural network has strong nonlinear mapping ability and self-learning adaptability, but it has no concept of time series, converges slowly, and is prone to vanishing gradients. Although GRU and LSTM models can effectively deal with sequence data for time series prediction (Rangapuram et al. 2018), their ability to mine complex data information is weak. The bidirectional gated recurrent unit (BGRU) and bidirectional long short-term memory (BLSTM) models employ two hidden layers to extract information from the past and the future. The bidirectional structure therefore helps the GRU and LSTM networks extract more information (Zhang et al. 2018; Li et al. 2021). By combining the strengths of BGRU and BLSTM in processing time series data with the ability of the BP neural network to mine complex data information, the combined models can improve generalization ability.

In this study, the bidirectional gated recurrent unit and backpropagation (BGRU-BP) neural network and the bidirectional long short-term memory and backpropagation (BLSTM-BP) neural network are combined to predict short-term runoff. The main contributions of this study are summarized as follows: First, we propose two new deep learning predictive models named BGRU-BP and BLSTM-BP to deal with the short-term runoff prediction problem. The combined approach significantly improves the generalization ability of the prediction model and better guides the model to find the optimal nonlinear relationship. Second, we test our models by comparing them with four deep learning models on six evaluation metrics. The results show that our models have promising performance in general. The remainder of the paper is organized as follows. Section 2 describes the study area, the data pre-processing method, and sample generation. Section 3 introduces the BP, GRU, LSTM, BGRU, BLSTM, BGRU-BP, and BLSTM-BP methods, the model structures and development, followed by model evaluation standard. Section 4 presents the results and discussions. Finally, Section 5 concludes the paper and gives further research direction.

2 Overview of the Study Area and Data Pre-processing Steps

2.1 Study Area

Yanglou hydrological station, an experimental station on the Limin River in Suzhou city, Northern Anhui Province, China, is shown in Fig. 1. The hydrological station is located at longitude 116°48′54″E, latitude 34°19′47″N. The Huangyang River is a tributary of the Limin River and joins it in the town of Yanglou. There are eight rainfall gauging stations within the Yanglou hydrological station watershed: Tangzhai, Uotao, Xinzhuangzhai, Shaozhuang, Huzhai, Huangkou, Zhengji, and Yanglou. The Yanglou gauge station is located at the outlet of the Yanglou hydrological station watershed.

The Yanglou hydrological station watershed is located in the north–south climate transition zone, with complex meteorological conditions. The annual distribution of precipitation is uneven, the river runoff varies greatly from year to year, and droughts occur frequently, which is very unfavorable to industrial and agricultural production and the exploitation of water resources. There are five local towns in the Yanglou hydrological station watershed. The villages in the watershed are concentrated and the population is dense. It is the main production area of grain, cotton, vegetables, and fruits, and plays an important role in the development of the local economy. The main properties of the Yanglou hydrological station watershed are summarized in Table 1.

2.2 Data Pre-processing

In this study, hourly flow discharge data from the Yanglou gauge station and hourly precipitation data from eight rainfall gauging stations were collected from 2000 to 2020. The flow discharge data and precipitation data were obtained from the Bureau of Hydrology and Water Resources in Suzhou city, Anhui Province. Where the measured records are not strictly hourly, the flow discharge data are converted into hourly records by spline interpolation and the precipitation data are converted into hourly records by averaging. In total, 12136 hourly rainfall and runoff records from 40 rainfall-runoff events are selected. The 40 rainfall-runoff events are divided into the training set, validation set, and testing set at a ratio of 6:2:2. To improve the accuracy and calculation speed of the model, normalization (Eq. (1)) is applied to rescale the precipitation and runoff data. The normalized data fall in the range of [0, 1].

where \({x}_{n}\) is the normalized value; \({x}_{i}\) is the measured value; \({x}_{min}\) and \({x}_{max}\) are the minimum value and maximum measured value, respectively.
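As an illustration of the normalization step, the following minimal sketch assumes that Eq. (1) takes the standard min-max form \({x}_{n}=({x}_{i}-{x}_{min})/({x}_{max}-{x}_{min})\), which is consistent with the symbols defined above and with the stated [0, 1] range. The function names and the sample values are hypothetical and are not taken from the study's code.

```python
def min_max_normalize(values):
    """Scale a sequence of measurements to [0, 1] using its own min and max."""
    x_min, x_max = min(values), max(values)
    span = x_max - x_min
    if span == 0:
        # A constant series has no spread; map everything to 0.0 by convention.
        return [0.0 for _ in values]
    return [(x - x_min) / span for x in values]


def min_max_denormalize(normalized, x_min, x_max):
    """Invert the scaling so predictions can be read in the original units."""
    return [x_n * (x_max - x_min) + x_min for x_n in normalized]


runoff = [2.0, 4.0, 10.0, 6.0]  # hypothetical discharge values
scaled = min_max_normalize(runoff)
restored = min_max_denormalize(scaled, min(runoff), max(runoff))
```

In a forecasting setting the minimum and maximum would normally be taken from the training set and reused for the validation and testing sets; whether the study did so is not stated.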

2.3 Sample Generation

To determine the optimal model input variables, this research first carried out the exploratory data analysis (EDA). EDA is an effective method to understand the stationarity of time series, which is helpful for mining the rules of the precipitation data and runoff data.

Figure 2 shows the autocorrelation and partial autocorrelation analysis of the runoff time series. The shaded region is the 95% confidence interval. As can be seen from Fig. 2, the autocorrelation remains outside the confidence interval even after 50 lags, indicating that the stationarity of this series is poor. However, the partial autocorrelation falls into the confidence interval after 4 lags. In addition, Fig. 3 illustrates the lag plots of the runoff time series, in which most of the points are distributed along the diagonal. In the plot with a lag of 4, the distribution of some points begins to deteriorate significantly. Therefore, the models developed in this study were constructed with 3 lags. The model input variables include the previously measured (1 h-lag, 2 h-lag, and 3 h-lag) precipitation at each rainfall gauging station and the previously measured (1 h-lag, 2 h-lag, and 3 h-lag) runoff at the outlet.
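The autocorrelation check described above can be sketched as follows. This is only an illustration: it computes the sample autocorrelation and uses the white-noise approximation ±1.96/√N for the 95% band, which is an assumption and may differ from how the band in Fig. 2 was produced. The partial autocorrelation is not covered, and the synthetic series stands in for the runoff data.

```python
import math


def autocorrelation(series, lag):
    """Sample autocorrelation of a series at the given non-negative lag."""
    n = len(series)
    mean = sum(series) / n
    denom = sum((x - mean) ** 2 for x in series)
    num = sum((series[t] - mean) * (series[t + lag] - mean)
              for t in range(n - lag))
    return num / denom


def confidence_bound(n, z=1.96):
    """Approximate 95% bound for the autocorrelation of white noise."""
    return z / math.sqrt(n)


# Synthetic, slowly varying series used only to exercise the functions.
series = [math.sin(0.3 * t) for t in range(200)]
acf = [autocorrelation(series, k) for k in range(6)]
bound = confidence_bound(len(series))
# Lags whose autocorrelation lies outside the band are treated as significant.
significant = [k for k, r in enumerate(acf) if abs(r) > bound]
```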

In this study, the lead time is set to 1, 2, 3, 6, 9, and 12 h. The data from the 40 rainfall-runoff events are restructured into a supervised learning dataset by a sliding window method. The sample sizes of the training set, validation set, and testing set for the 1 h lead time are 9539 (24 rainfall-runoff events), 1597 (8 rainfall-runoff events), and 994 (8 rainfall-runoff events), respectively. The sample size varies slightly with the lead time.
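A sliding-window restructuring of this kind can be sketched as below. With eight rainfall gauges plus the outlet runoff (nine variables) and three lags, each sample has 9 × 3 = 27 inputs. The function name, the row layout, and the choice to build windows within a single event are assumptions made for illustration; the text does not specify these details.

```python
def make_samples(event, n_lags=3, lead=1):
    """Turn one event into (inputs, targets) pairs.

    event: list of hourly rows, each row = [p1, ..., p8, q] (assumed layout),
           where q is the runoff at the outlet.
    lead:  number of hours between the last observed row and the target.
    """
    inputs, targets = [], []
    for last in range(n_lags - 1, len(event) - lead):
        window = event[last - n_lags + 1: last + 1]      # the n_lags latest rows
        inputs.append([value for row in window for value in row])
        targets.append(event[last + lead][-1])           # runoff `lead` hours ahead
    return inputs, targets


# Hypothetical 10-hour event: 8 precipitation values and 1 runoff value per hour.
event = [[float(t)] * 8 + [100.0 + t] for t in range(10)]
inputs, targets = make_samples(event, n_lags=3, lead=1)
```

Increasing `lead` shortens the usable part of each event, which is one way the sample size can vary slightly with the lead time.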

3 Methodologies

3.1 BGRU-BP and BLSTM-BP Combination Models

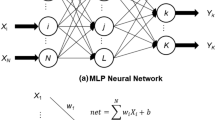

3.1.1 BP Neural Network

The BP neural network is a multi-layer feedforward neural network trained with the error backpropagation algorithm. It consists of an input layer, a hidden layer, and an output layer. The signals are processed by the hidden layer and output layer neurons (Eq. (2)), and the final result is output by neurons in the output layer (Eq. (3)). The process of weight adjustment is the process of network learning (Tang et al. 2020). The BP neural network has strong self-learning and self-adapting abilities (Peng et al. 2020), and a three-layer BP neural network can approximate complex nonlinear relationships.

where \({P}_{i}\) and \({Q}_{i}\) are the input of the hidden layer; \({w}_{ij}\), \({b}_{j}\), \(\mathrm{m}\), \(f\), \({h}_{j}\), \({w}_{jk}\), \({b}_{jp}\), \({Q}_{p}\) and \(n\) are the weight of the hidden layer, the bias of the hidden layer, the total number of the input layer, the activation function, the output of the hidden layer, the weight of the output layer, the bias of the output layer, the output of the output layer, and the number of neurons in the hidden layer, respectively.
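The forward pass of a three-layer network of this kind can be sketched as follows, assuming the usual form in which each hidden neuron applies an activation to a weighted sum of the inputs plus a bias, and the output neuron forms a weighted sum of the hidden outputs plus a bias. ReLU is used here as the activation; the weights, sizes, and function names are hypothetical, and the backpropagation update itself is not shown.

```python
def relu(x):
    """Rectified linear unit."""
    return x if x > 0.0 else 0.0


def bp_forward(inputs, w_hidden, b_hidden, w_out, b_out):
    """Forward pass: input layer -> one hidden layer -> single output neuron."""
    hidden = [
        relu(sum(w * p for w, p in zip(w_row, inputs)) + b)
        for w_row, b in zip(w_hidden, b_hidden)
    ]
    output = sum(w * h for w, h in zip(w_out, hidden)) + b_out
    return hidden, output


# Hypothetical network with 2 inputs and 2 hidden neurons.
inputs = [1.0, 2.0]
w_hidden = [[0.5, 0.25], [-1.0, 0.25]]
b_hidden = [0.0, 0.0]
w_out = [2.0, 3.0]
b_out = 0.5
hidden, output = bp_forward(inputs, w_hidden, b_hidden, w_out, b_out)
```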

3.1.2 GRU and LSTM Neural Networks

The BP neural network has strong nonlinear mapping ability, but it has no concept of time series and is prone to vanishing gradients. In contrast, the recurrent neural network (RNN) depends not only on the input of the current time step but also on the hidden-layer information calculated in the previous time step (Zhao et al. 2017). Therefore, the RNN can effectively handle sequence data. At present, GRU and LSTM are among the better-performing RNN variants. In comparison with the traditional RNN, the GRU (Cho et al. 2014) and LSTM (Hochreiter and Schmidhuber 1997) models can store information and learn the patterns of long time series data. The basic structure of GRU and LSTM is shown in Fig. 4.

The GRU model is a variant of the LSTM model. The main difference between them is that LSTM has an independent memory unit, while GRU transmits the hidden layer output as memory. As it is shown in Fig. 4a, the GRU cell has only two control gates: the reset gate and the update gate. The reset gate determines how much information needs to be forgotten from the previous state. When the value of the reset gate is close to 0, the state information at the previous state will be forgotten. On the contrary, the state information from the previous state is retained in the current input information when the value of the reset gate is 1. The update gate controls whether to update the hidden state to the new state. The larger the value of the update gate, the more information about the hidden state is updated to the new state. The internal calculation process of the GRU cell is shown in Eqs. (4)–(7).

where \({r}_{t}\), \({u}_{t}\), \({\widetilde{z}}_{t}\), \({w}_{r}\), \({w}_{u}\), \({h}_{t-1}\), \({x}_{t}\), \({h}_{tGRU}\), \(tanh\) and \(\sigma\) are the GRU’s output value of the reset gate, output value of the update gate, candidate cell state, connection weight of the reset gate, connection weight of the update gate, output of the previous state, input of the current state, output of the current state, hyperbolic tangent activation function and sigmoid activation function, respectively.
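A single GRU time step can be sketched as below. The sketch assumes the standard GRU form with weights acting on the concatenation of the previous output and the current input, no bias terms (none are listed in the symbol definitions), and an update in which a larger update gate moves the state further toward the candidate, as described in the text. The candidate weight matrix `w_z` and all sizes are hypothetical.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def gru_step(x_t, h_prev, w_r, w_u, w_z):
    """One GRU time step; each weight matrix acts on [h_prev, x_t]."""
    concat = h_prev + x_t
    r_t = [sigmoid(v) for v in matvec(w_r, concat)]   # reset gate
    u_t = [sigmoid(v) for v in matvec(w_u, concat)]   # update gate
    # The reset gate scales how much of the previous state enters the candidate.
    gated = [r * h for r, h in zip(r_t, h_prev)] + x_t
    z_t = [math.tanh(v) for v in matvec(w_z, gated)]  # candidate state
    # A larger update gate moves the new state further toward the candidate.
    return [(1.0 - u) * h + u * z for u, h, z in zip(u_t, h_prev, z_t)]


# Hypothetical sizes: 1 input feature, 2 hidden units, so each matrix is 2 x 3.
zero = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
h_next = gru_step([1.0], [0.4, -0.2], zero, zero, zero)
```

With all-zero weights both gates equal 0.5 and the candidate is 0, so the new state is half the previous state. Some libraries write the final interpolation with the roles of the update gate and its complement swapped, so the convention should be checked before comparing gate values across implementations.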

As shown in Fig. 4b, the LSTM cell has three control gates: the forget gate, the input gate, and the output gate. The forget gate determines how much information will be removed from the cell state \({C}_{t}\). The input gate determines how much information of the input \({x}_{t}\) is going to be saved in the cell state \({C}_{t}\). The output gate controls how much of the cell state \({C}_{t}\) is passed to the current output value \({\mathrm{h}}_{\mathrm{t}}\). The internal calculation process of the LSTM cell is shown in Eqs. (8)–(13).

where \({f}_{t}\), \({n}_{t}\), \({o}_{t}\), \({\widetilde{m}}_{t}\), \({m}_{t}\), \({w}_{f}\), \({w}_{n}\), \({w}_{o}\), \({w}_{m}\), \({b}_{f}\), \({b}_{n}\), \({b}_{o}\), \({b}_{m}\) and \({h}_{tLSTM}\) are the LSTM’s output value of the forget gate, output value of the input gate, output value of the output gate, candidate cell state, current cell state, connection weight of the forget gate, connection weight of the input gate, connection weight of the output gate, weight of the candidate input gate, bias of the forget gate, bias of the input gate, bias of the output gate, bias of the candidate input gate and output of the current state, respectively.
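One LSTM time step can be sketched in the same spirit, assuming the standard LSTM form: three sigmoid gates and a tanh candidate computed from the concatenation of the previous output and the current input, a cell state updated as forget gate times old state plus input gate times candidate, and an output equal to the output gate times tanh of the cell state. The dictionary keys follow the subscripts f, n, o, and m used above; the sizes and values are hypothetical.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def lstm_step(x_t, h_prev, m_prev, w, b):
    """One LSTM time step; w and b are dicts keyed by 'f', 'n', 'o', 'm'."""
    concat = h_prev + x_t

    def layer(name, activation):
        pre = matvec(w[name], concat)
        return [activation(p + bias) for p, bias in zip(pre, b[name])]

    f_t = layer("f", sigmoid)        # forget gate
    n_t = layer("n", sigmoid)        # input gate
    o_t = layer("o", sigmoid)        # output gate
    m_cand = layer("m", math.tanh)   # candidate cell state
    # Keep part of the old cell state and add part of the candidate.
    m_t = [f * m + n * c for f, m, n, c in zip(f_t, m_prev, n_t, m_cand)]
    h_t = [o * math.tanh(m) for o, m in zip(o_t, m_t)]
    return h_t, m_t


# Hypothetical sizes: 1 input feature and 1 hidden unit, all parameters zero.
w = {k: [[0.0, 0.0]] for k in "fnom"}
b = {k: [0.0] for k in "fnom"}
h_t, m_t = lstm_step([1.0], [0.0], [1.0], w, b)
```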

3.1.3 BGRU and BLSTM Neural Networks

The GRU and LSTM neural networks adopt the recurrent structure to store information for long periods, but they may not perform satisfactorily in practice because the networks only access past information (Deng et al. 2019). To solve this problem, the BGRU and BLSTM networks add a future layer in which the data sequence is processed in the opposite direction. A schematic of the BGRU network is shown in Fig. 5. The networks thus employ two hidden layers to extract information from the past and the future, and both are connected to the same output layer (Eqs. (14)–(16)). These features enable the bidirectional structure to help the GRU and LSTM networks extract more information, thereby improving their learning ability (Zhang et al. 2018; Li et al. 2021).

where \({\overrightarrow{h}}_{t}\), \({\overleftarrow{h}}_{t}\), \({\overrightarrow{h}}_{t-1}\), \({\overleftarrow{h}}_{t-1}\), \({w}_{\overrightarrow{xh}}\), \({w}_{\overleftarrow{xh}}\), \({w}_{\overrightarrow{yh}}\), \({w}_{\overleftarrow{yh}}\), \({b}_{\overrightarrow{h}}\), \({b}_{\overleftarrow{h}}\), \({b}_{y}\) and \({y}_{t}\) are the output value of the forward hidden layer at the current moment, the output value of the backward hidden layer at the current moment, the output value of the forward hidden layer at the previous moment, the output value of the backward hidden layer at the previous moment, the weight of the input from the forward hidden layer at the current moment, the weight of the input from the backward hidden layer at the current moment, the weight of the output from the forward hidden layer at the current moment, the weight of the output from the backward hidden layer at the current moment, the bias of the input from the forward hidden layer at the current moment, the bias of the input from the backward hidden layer at the current moment, the bias of the output layer at the current moment and the output value at the current moment, respectively.
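The bidirectional idea can be illustrated with a deliberately simplified sketch. A scalar tanh recurrent cell stands in for the GRU or LSTM cell (an assumption made to keep the example short): one pass reads the sequence forward, a second pass reads it in reverse, and the two hidden states at each time step are combined by a shared output layer. All parameter values are hypothetical.

```python
import math


def run_direction(sequence, w_x, w_h, b):
    """Run a scalar tanh recurrent cell over the sequence, returning all states."""
    h, states = 0.0, []
    for x in sequence:
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states


def bidirectional(sequence, fwd, bwd, w_y_fwd, w_y_bwd, b_y):
    """Combine forward and backward hidden states in one output layer."""
    h_fwd = run_direction(sequence, *fwd)
    # The backward layer reads the reversed sequence; its states are flipped
    # back so that index t of both lists refers to the same time step.
    h_bwd = run_direction(sequence[::-1], *bwd)[::-1]
    return [w_y_fwd * hf + w_y_bwd * hb + b_y for hf, hb in zip(h_fwd, h_bwd)]


sequence = [0.0, 0.0, 1.0]
params = (1.0, 1.0, 0.0)  # (w_x, w_h, b), hypothetical
both = bidirectional(sequence, params, params, 1.0, 1.0, 0.0)
forward_only = bidirectional(sequence, params, params, 1.0, 0.0, 0.0)
```

In this toy sequence the only non-zero input arrives at the last step. The forward-only output at the first step is therefore zero, while the bidirectional output is not, because the backward layer has already seen the later input.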

3.1.4 BGRU-BP and BLSTM-BP Neural Networks

At present, data-driven models are usually single models, which limits further improvement of generalization ability (Liu et al. 2021b). Therefore, the combined BGRU-BP and BLSTM-BP models are introduced to improve on the generalization ability of the single model. The basic layer structure of the BGRU-BP model is shown in Fig. 6. In this design, the BGRU and BLSTM networks are used to address the vanishing gradient problem, and the BP network is used for nonlinear mapping and generalization. The specific processing procedure can be divided into the following steps: (1) The input data are normalized and restructured into a supervised learning dataset by a sliding window method. The processed input data are \({X}_{0}=\left\{Var1\left(t-n\right), Var2\left(t-n\right), \cdots , Var27\left(t-n\right), Var\left(t\right)\right\}\). (2) The sequence is divided into the training set, validation set, and testing set. (3) The model hyper-parameters are set and tuned to obtain the optimal model. (4) The testing set is input into the trained model, and the prediction results are compared with known samples. (5) The evaluation indices of the model are calculated, and the prediction results of the combined model and the single model are compared.

3.2 Model Development

The GRU, LSTM, BGRU, BLSTM, BGRU-BP, and BLSTM-BP models were developed in this study. All of the models are implemented in Python 3.8 with PyTorch. The data processing is conducted with the Pandas (McKinney 2010), NumPy (Van et al. 2011), and scikit-learn packages.

To compare the performance of the six models in runoff prediction, the same hyper-parameters were used to train the six models at each lead time. The optimal hyper-parameters were determined by trial and error. Based on a large number of experiments, the applied GRU and LSTM networks consist of an input layer with 27 neurons, a hidden layer with 30 neurons, and an output layer with 1 neuron. The applied BGRU and BLSTM networks consist of an input layer with 27 neurons, a hidden layer with 60 neurons, and an output layer with 1 neuron. The proposed BGRU-BP and BLSTM-BP networks consist of an input layer with 27 neurons, a BGRU or BLSTM hidden layer with 60 neurons, a BP hidden layer with 30 neurons, and an output layer with 1 neuron. The maximum number of iterations is 1000. The learning rate and weight decay are 0.0001 and 0.001, respectively. The optimization process for the number of iterations, learning rate, and weight decay is presented in the Appendix. Adaptive moment estimation (Adam) is chosen as the optimizer. Mean squared error (MSE) is used as the loss function. The rectified linear unit (ReLU) is used as the activation function of the hidden layer of the BP network.
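To give a rough sense of the relative model sizes, the trainable parameters of the two combined models can be counted under stated assumptions. The sketch assumes that the 60-neuron bidirectional layer is made of 30 units per direction, that the recurrent layers follow the parameter layout of PyTorch's `nn.GRU` and `nn.LSTM` (input and recurrent weight matrices plus two bias vectors for each of 3 or 4 gate blocks), and that the BP part is a 60-to-30 dense layer followed by a 30-to-1 output layer. None of these details are confirmed by the text, so the totals are indicative only.

```python
def recurrent_params(input_size, hidden_size, gate_blocks, bidirectional=True):
    """Parameter count for one recurrent layer in the assumed PyTorch layout."""
    weights = gate_blocks * hidden_size * (input_size + hidden_size)
    biases = 2 * gate_blocks * hidden_size  # separate input and recurrent biases
    per_direction = weights + biases
    return per_direction * (2 if bidirectional else 1)


def dense_params(n_in, n_out):
    """Parameter count for a fully connected layer with bias."""
    return n_in * n_out + n_out


N_INPUTS, UNITS_PER_DIRECTION, BP_HIDDEN = 27, 30, 30  # 2 x 30 = 60 (assumed)

bp_head = dense_params(2 * UNITS_PER_DIRECTION, BP_HIDDEN) + dense_params(BP_HIDDEN, 1)
bgru_bp = recurrent_params(N_INPUTS, UNITS_PER_DIRECTION, gate_blocks=3) + bp_head
blstm_bp = recurrent_params(N_INPUTS, UNITS_PER_DIRECTION, gate_blocks=4) + bp_head
ratio = bgru_bp / blstm_bp
```

Under these assumptions the BGRU-BP model has 12481 parameters and the BLSTM-BP model has 16021, roughly a 22% reduction, since the GRU uses three gate blocks where the LSTM uses four.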

3.3 Model Evaluation Standard and Optimization

In this study, the Nash–Sutcliffe efficiency coefficient (NSE), mean relative error (MRE), mean absolute error (MAE), relative error (RE), the error of peak discharge (EQ), and the error of peak discharge occurrence time (ΔT) (Eqs. (17)–(22)) are used as the evaluation standards. They represent the fitting degree, relative deviation degree, absolute deviation degree, stability of models, and accuracy degree, respectively.

where \({Q}_{m}(i)\) and \({Q}_{p}(i)\) are the measured discharge values and the predicted discharge values in time step \(i\), respectively; \({Q}_{f}\) and \({Q}_{f}^{^{\prime}}\) are the measured peak discharge values and the predicted peak discharge values, respectively; \({T}_{f}\) and \({T}_{f}^{^{\prime}}\) are the measured peak discharge occurrence time and the predicted peak discharge occurrence time, respectively; \({\overline{Q} }_{m}(i)\) is the average value of \({Q}_{m}(i)\); n is the length of data.
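The six evaluation standards can be sketched as follows, assuming their commonly used definitions: NSE as one minus the ratio of squared prediction error to the variance of the measured series, RE as the absolute error relative to the measured value at each step, MRE and MAE as the means of the relative and absolute errors, EQ as the predicted minus the measured peak relative to the measured peak (in percent), and ΔT as the predicted minus the measured peak time. The sign conventions for EQ and ΔT are chosen to match the later discussion, where a negative EQ means an underestimated peak and a positive ΔT means a delayed peak. The function names and data are hypothetical.

```python
def nse(measured, predicted):
    """Nash-Sutcliffe efficiency coefficient."""
    mean_m = sum(measured) / len(measured)
    num = sum((m - p) ** 2 for m, p in zip(measured, predicted))
    den = sum((m - mean_m) ** 2 for m in measured)
    return 1.0 - num / den


def relative_errors(measured, predicted):
    """Per-step relative error; assumes every measured value is positive."""
    return [abs(p - m) / m for m, p in zip(measured, predicted)]


def mre(measured, predicted):
    errors = relative_errors(measured, predicted)
    return sum(errors) / len(errors)


def mae(measured, predicted):
    return sum(abs(p - m) for m, p in zip(measured, predicted)) / len(measured)


def peak_errors(measured, predicted):
    """Return (EQ in percent, delta T in time steps) for one event."""
    q_f, q_f_pred = max(measured), max(predicted)
    eq = (q_f_pred - q_f) / q_f * 100.0
    delta_t = predicted.index(q_f_pred) - measured.index(q_f)
    return eq, delta_t


measured = [1.0, 2.0, 4.0, 2.0, 1.0]   # hypothetical hydrograph
predicted = [1.0, 2.0, 3.0, 3.5, 1.0]  # peak is lower and one step late
eq, delta_t = peak_errors(measured, predicted)
```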

4 Results and Discussion

4.1 Performance Comparison Among GRU, LSTM, BGRU, BLSTM, BGRU-BP, and BLSTM-BP Models

Figure 7 shows the training results of six models. The MAE values of the six models in the training set are all small, and the NSE values are high, which reflects that all the models have good convergence. According to the three evaluation standards, the NSE, MRE, and MAE values of the BGRU and BLSTM models are superior to those of the GRU and LSTM models. The NSE, MRE, and MAE values of the BGRU-BP and BLSTM-BP models are superior to those of the BGRU and BLSTM models. The NSE, MRE, and MAE values of the BGRU-BP model are similar to those of the BLSTM-BP model. This demonstrates that the BGRU-BP model performs equally well as the BLSTM-BP model. It can be seen that the ranking order of the three types of models based on the fitting ability from strong to weak is the combined models, the bidirectional models, and the unidirectional models. Among them, the BGRU-BP and BLSTM-BP models have the strongest fitting ability. Figure 8 shows the validation results of six models. Compared with Fig. 7, the results in the validation are similar. The three evaluation standards of the combined models are optimal. The BGRU-BP and BLSTM-BP models still have the highest accuracy.

Figure 9 presents the variation of NSE, MRE, and MAE for the prediction results of the six models with different prediction lead times. For all six models, the NSE value decreases and both the MRE and MAE values increase as the prediction lead time increases. When the lead time increases from 1 to 3 h, the NSE values of the six models decrease by 0.057, 0.051, 0.059, 0.049, 0.032, and 0.032, respectively. The MRE and MAE values of the six models increase by 0.086 and 0.005, 0.072 and 0.004, 0.121 and 0.006, 0.122 and 0.006, 0.109 and 0.006, and 0.104 and 0.005, respectively. This shows that the prediction accuracy of runoff decreases slightly, but a good prediction effect can still be achieved. With a short lead time, the correlation of the input data is strong, and it is easy for the model to learn the data characteristics. However, when the lead time increases from 3 to 12 h, the NSE values of the six models decrease by 0.28, 0.305, 0.324, 0.346, 0.303, and 0.307, respectively. The MRE and MAE values of the six models increase by 0.415 and 0.016, 0.347 and 0.017, 0.416 and 0.020, 0.430 and 0.022, 0.473 and 0.023, and 0.457 and 0.022, respectively. This indicates that the prediction of runoff becomes less accurate. This is expected because the time interval between the previously measured rainfall and runoff used as input and the predicted runoff is much longer when the lead time is longer. As a result, the correlation of the input data is reduced, and it is difficult for the model to learn the characteristics of the time series data and the relationships within the data. In addition, prediction accuracy decreases as the lead time, the number of influencing factors, and the role of chance increase.

For a lead time of 1 h (Fig. 9), the prediction results of the six models are as follows: GRU (NSE = 0.890, MRE = 0.383, and MAE = 0.021), LSTM (NSE = 0.887, MRE = 0.398, and MAE = 0.0215), BGRU (NSE = 0.948, MRE = 0.268, and MAE = 0.0143), BLSTM (NSE = 0.962, MRE = 0.226, and MAE = 0.0123), BGRU-BP (NSE = 0.986, MRE = 0.136, and MAE = 0.0073), and BLSTM-BP (NSE = 0.985, MRE = 0.149, and MAE = 0.008). The mean NSE of the BGRU and BLSTM models is 7.48% higher than that of the GRU and LSTM models, and the mean NSE of the BGRU-BP and BLSTM-BP models is 3.19% higher than that of the BGRU and BLSTM models. This shows that the fitting degree of the BGRU-BP and BLSTM-BP models is high. The mean MRE of the BGRU and BLSTM models is 36.75% lower than that of the GRU and LSTM models, and the mean MRE of the BGRU-BP and BLSTM-BP models is 42.31% lower than that of the BGRU and BLSTM models. Moreover, the mean MAE of the BGRU and BLSTM models is 37.41% lower than that of the GRU and LSTM models, and the mean MAE of the BGRU-BP and BLSTM-BP models is 42.48% lower than that of the BGRU and BLSTM models. This reveals that the BGRU-BP and BLSTM-BP models have strong stability. Therefore, the BGRU-BP and BLSTM-BP models have strong learning ability, and their prediction accuracy is better than that of the other models.
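The percentage changes quoted above follow from the reported 1 h lead-time scores by averaging each pair of models and taking the relative change between pair means. The short sketch below reproduces that arithmetic; the dictionary layout and function names are illustrative only.

```python
# Reported scores at a 1 h lead time: (NSE, MRE, MAE).
scores = {
    "GRU": (0.890, 0.383, 0.021),
    "LSTM": (0.887, 0.398, 0.0215),
    "BGRU": (0.948, 0.268, 0.0143),
    "BLSTM": (0.962, 0.226, 0.0123),
    "BGRU-BP": (0.986, 0.136, 0.0073),
    "BLSTM-BP": (0.985, 0.149, 0.008),
}
UNIDIRECTIONAL = ("GRU", "LSTM")
BIDIRECTIONAL = ("BGRU", "BLSTM")
COMBINED = ("BGRU-BP", "BLSTM-BP")


def group_mean(names, metric_index):
    """Mean of one metric over a group of models."""
    return sum(scores[name][metric_index] for name in names) / len(names)


def percent_change(new, old):
    """Relative change from old to new, in percent."""
    return (new - old) / old * 100.0


nse_gain_bi = percent_change(group_mean(BIDIRECTIONAL, 0), group_mean(UNIDIRECTIONAL, 0))
nse_gain_comb = percent_change(group_mean(COMBINED, 0), group_mean(BIDIRECTIONAL, 0))
# Error metrics decrease, so the reduction is the negated relative change.
mre_drop_bi = -percent_change(group_mean(BIDIRECTIONAL, 1), group_mean(UNIDIRECTIONAL, 1))
mre_drop_comb = -percent_change(group_mean(COMBINED, 1), group_mean(BIDIRECTIONAL, 1))
mae_drop_bi = -percent_change(group_mean(BIDIRECTIONAL, 2), group_mean(UNIDIRECTIONAL, 2))
mae_drop_comb = -percent_change(group_mean(COMBINED, 2), group_mean(BIDIRECTIONAL, 2))
```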

To validate the stability of different models, the violin plots of the six models with a lead time of 1 h are shown to compare the RE distribution (Fig. 10), and the RE distribution parameters of the six models are shown in Table 2. The top horizontal line segment represents the confidence interval upper limit (CIUL) of the RE distribution, and the bottom horizontal line segment represents the confidence interval lower limit (CILL) of the RE distribution. The upper, middle, and lower line segments of the box represent the upper quartile (UQ), median, and lower quartile (LQ) of the RE distribution, respectively. The external curve is the probability density curve (PDC), and the black dots are outliers of the RE distribution. The size of the confidence interval (CI) is equal to CIUL minus CILL.

As detailed in Fig. 10 and Table 2, the RE distribution can be divided from good to poor into combined models, bidirectional models, and unidirectional models. Compared with the violin parameters of the unidirectional models, although the CILL of the bidirectional models and the unidirectional models are both 0, the violin parameters of the bidirectional models have smaller CIUL, UQ, median, and LQ. The CI and the interquartile range (IQR) of the bidirectional models are smaller than that of the unidirectional models. This shows that the RE distribution of the bidirectional models is denser, so the stability of the bidirectional models is superior to that of the unidirectional models. Compared with the violin parameters of the bidirectional models, the violin parameters of the combined models have smaller CIUL, UQ, median, and LQ. The CI and the interquartile range (IQR) of the combined models are smaller than that of the bidirectional models. Therefore, the stability of the combined models is superior to that of the bidirectional models.

4.2 Prediction of Individual Rainfall-runoff Events

The testing set consists of 8 individual rainfall-runoff events. Considering the representativeness of the rainfall-runoff events, a single-peak rainfall-runoff event and a multi-peak rainfall-runoff event are selected for discussion. Measured and modeled hydrographs of six models in the single-peak rainfall-runoff event with 1 h, 3 h, and 9 h prediction lead times are shown in Fig. 11, and the EQ and ΔT values of six models are shown in Table 3. According to Fig. 11 and Table 3, we find that the EQ and ΔT values for all six models increased as the prediction lead time increased. These results reveal that the prediction accuracy of runoff is poor when the prediction lead time is large. The EQ values of the six models are negative, and the ΔT values are positive. This indicates that the predicted peak discharge is low and there is a lag in the predicted peak discharge. Based on the EQ and ΔT values, the accuracy of BGRU and BLSTM models is superior to that of GRU and LSTM models. The accuracy of BGRU-BP and BLSTM-BP models is superior to that of BGRU and BLSTM models. The accuracy of the BGRU-BP model is as good as that of the BLSTM-BP model.

Measured and modeled hydrographs of six models in the multi-peak rainfall-runoff event with 1 h, 3 h, and 9 h prediction lead times are shown in Fig. 12, and the EQ and ΔT values of six models are shown in Table 4. As detailed in Fig. 12 and Table 4, the results in the multi-peak rainfall-runoff event are similar. The accuracy of the BGRU-BP and BLSTM-BP models is still higher than other models, and the BGRU-BP model performs equally well as the BLSTM-BP model.

5 Conclusions

In this study, the BGRU-BP and BLSTM-BP deep learning models were proposed for the runoff prediction. EDA is used to provide a reference for modeling. In addition, the combined models are introduced to improve the generalization ability of models. The GRU, LSTM, BGRU, BLSTM, BGRU-BP, and BLSTM-BP models were applied to event-based short-term rainfall-runoff prediction in the control watershed of Yanglou hydrological station. The following can be concluded from this study:

-

1.

EDA can understand the stationarity of time series and help to mine the rules of the precipitation data and runoff data. The correlation analysis of the dataset can determine the optimal model input variables, and provide a reference for the construction of data-driven models.

-

2.

The accuracy of BGRU and BLSTM models is superior to those of GRU and LSTM models. The results show that the bidirectional propagation is conducive to improve the learning ability of the model. The accuracy of BGRU-BP and BLSTM-BP models is superior to those of BGRU and BLSTM models. The RE distribution parameter and violin plot of the BGRU-BP and BLSTM-BP models are the best. This demonstrates that BGRU-BP and BLSTM-BP models have the strongest stability and generalization ability.

-

3.

Compared with the unidirectional models and bidirectional models, the combined models have higher accuracy and better stability, which indicates that the combined models can express more complex nonlinear transformations accurately and effectively.

4. The BGRU-BP model performs as well as the BLSTM-BP model. Given that the BGRU-BP model has fewer parameters and a shorter training time, it may be the preferred method for short-term runoff prediction.
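The parameter argument in the last conclusion can be illustrated with a rough count for the recurrent layer alone. The sketch below uses the textbook formulation with one bias vector per gate (some frameworks store two, which changes the totals but not the ratio). The input size of 2 and hidden size of 64 are hypothetical values, not the settings used in this study, and the BP layers shared by both combined models are not counted.

```python
def recurrent_params(input_size: int, hidden_size: int,
                     n_gates: int, bidirectional: bool = True) -> int:
    """Count trainable parameters of one recurrent layer.

    Each gate block holds an input weight matrix (hidden x input),
    a recurrent weight matrix (hidden x hidden) and one bias vector.
    """
    per_gate = hidden_size * (input_size + hidden_size) + hidden_size
    per_direction = n_gates * per_gate
    return per_direction * (2 if bidirectional else 1)


def bgru_params(input_size: int, hidden_size: int) -> int:
    # GRU: reset gate, update gate, candidate state -> 3 blocks
    return recurrent_params(input_size, hidden_size, n_gates=3)


def blstm_params(input_size: int, hidden_size: int) -> int:
    # LSTM: input, forget and output gates plus cell candidate -> 4 blocks
    return recurrent_params(input_size, hidden_size, n_gates=4)


# Hypothetical sizes: 2 input features (e.g. rainfall and runoff), 64 hidden units.
n_gru = bgru_params(2, 64)
n_lstm = blstm_params(2, 64)
print(n_gru, n_lstm, n_gru / n_lstm)  # 25728 34304 0.75
```

Whatever sizes are chosen, the bidirectional GRU layer carries three quarters of the parameters of the bidirectional LSTM layer under this count, which is consistent with the smaller model and shorter training time noted above.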

Like most studies, this work has limitations. The results show that the prediction accuracy of the BGRU-BP and BLSTM-BP models decreases as the lead time increases. Therefore, further research should focus on how to improve the correlation of the input data, and on how the model can better learn the characteristics of time series data and the relationships within the data. In addition, the influence of dataset imbalance on short-term runoff forecasting results will be examined in follow-up research. In short, the proposed BGRU-BP and BLSTM-BP models can predict runoff in the short term and provide a reference for solving the runoff forecasting problem.

Data Availability

Data cannot be made publicly available; readers should contact the corresponding author for details.

Code Availability

Custom code written in Python 3.8 was developed for this study.

References

Adnan RM, Petroselli A, Heddam S, Santos CAG, Kisi O (2020) Short term rainfall-runoff modelling using several machine learning methods and a conceptual event-based model. Stoch Environ Res Risk Assess 35(14). https://doi.org/10.1007/s00477-020-01910-0

Amengual A, Carrió DS, Ravazzani G, Homar V (2017) A comparison of ensemble strategies for flash flood forecasting: The 12 October 2007 case study in Valencia, Spain. J Hydrometeorol 18(4):1143–1166. https://doi.org/10.1175/JHM-D-16-0281.1

Anderson RM, Koren VI, Reed SM (2006) Using SSURGO data to improve Sacramento Model a priori parameter estimates. J Hydrol 320(1–2):103–116. https://doi.org/10.1016/j.jhydrol.2005.07.020

Bao HJ, Wang LL, Li ZJ, Zhao LN, Zhang GP (2010) Hydrological daily rainfall-runoff simulation with BTOPMC model and comparison with Xin’anjiang model. Water Sci Eng 3(2):121–131. https://doi.org/10.3882/j.issn.1674-2370.2010.02.001

Beven KJ, Kirkby MJ, Schofield N, Tagg AF (1984) Testing a physically-based flood forecasting model (TOPMODEL) for three U.K. catchments. J Hydrol 69(1–4):119–143. https://doi.org/10.1016/0022-1694(84)90159-8

Bomers A, Meulen B, Schielen RMJ, Hulscher SJMH (2019) Historic flood reconstruction with the use of an artificial neural network. Water Resour Res 55(11):9673–9688. https://doi.org/10.1029/2019WR025656

Cho K, van Merrienboer B, Gulcehre C, Bougares F, Schwenk H, Bengio Y (2014) Learning phrase representations using RNN encoder-decoder for statistical machine translation. In Conference on Empirical Methods in Natural Language Processing (EMNLP 2014). https://doi.org/10.48550/arXiv.1406.1078

De Paola F, Giugni M, Pugliese F (2018) A harmony-based calibration tool for urban drainage systems. Proc Inst Civil Eng-Water Manag 171(1):30–41. https://doi.org/10.1680/jwama.16.00057

Deng Y, Jia H, Li P, Tong X, Qiu X, Li F (2019) A deep learning methodology based on bidirectional gated recurrent unit for wind power prediction. Proc IEEE Conf Ind Electron Appl (ICIEA) 591–595. https://doi.org/10.1109/iciea.2019.8834205

Fu J, Zhong PA, Chen J, Xu B, Zhu F, Zhang Y (2019) Water resources allocation in transboundary river basins based on a game model considering inflow forecasting errors. Water Resour Manag 33:2809–2825. https://doi.org/10.1007/s11269-019-02259-y

Gao S, Huang Y, Zhang S, Han J, Wang G, Zhang M, Lin Q (2020) Short-term runoff prediction with GRU and LSTM networks without requiring time step optimization during sample generation. J Hydrol 589:125188. https://doi.org/10.1016/j.jhydrol.2020.125188

Hochreiter S, Schmidhuber J (1997) Long short-term memory. Neural Comput 9(8):1735–1780. https://doi.org/10.1162/neco.1997.9.8.1735

Kumar PS, Praveen TV, Prasad MA (2016) Artificial neural network model for rain-runoff-a case study. Int J Hybrid Inf Technol 9(3):263–272. https://doi.org/10.14257/ijhit.2016.9.3.24

Kratzert F, Klotz D, Brenner C, Schulz K, Herrnegger M (2018) Rainfall-runoff modelling using Long Short-Term Memory (LSTM) networks. Hydrol Earth Syst Sci 22(11):6005–6022. https://doi.org/10.5194/hess-22-6005-2018

Li F, Ma G, Chen S, Huang W (2021) An ensemble modeling approach to forecast daily reservoir inflow using bidirectional long- and short-term memory (Bi-LSTM), variational mode decomposition (VMD), and energy entropy method. Water Resour Manag 35(9):2941–2963. https://doi.org/10.1007/s11269-021-02879-3

Liu G, Tang Z, Qin H, Liu S, Shen Q, Qu Y, Zhou J (2022) Short-term runoff prediction using deep learning multi-dimensional ensemble method. J Hydrol. https://doi.org/10.1016/j.jhydrol.2022.127762

Liu X, Sang X, Chang J, Zheng Y (2021a) Multi-model coupling water demand prediction optimization method for megacities based on time series decomposition. Water Resour Manag 35:4021–4041. https://doi.org/10.1007/s11269-021-02927-y

Liu X, Sang X, Chang J, Zheng Y, Han Y (2021b) Water demand prediction optimization method in Shenzhen based on the zero-sum game model and rolling revisions. Water Policy 23(6):1506–1529. https://doi.org/10.2166/wp.2021.046

Liong SY, Sivapragasam C (2002) Flood stage forecasting with support vector machines. J Am Water Resour Assoc 38(1):173–186. https://doi.org/10.1111/j.1752-1688.2002.tb01544.x

Londhe S, Charhate S (2010) Comparison of data-driven modelling techniques for river flow forecasting. Hydrol Sci J 55(7):1163–1174. https://doi.org/10.1080/02626667.2010.512867

Lohani AK, Goel NK, Bhatia KKS (2014) Improving real time flood forecasting using fuzzy inference system. J Hydrol 509:25–41. https://doi.org/10.1016/j.jhydrol.2013.11.021

Makwana JJ, Tiwari MK (2014) Intermittent streamflow forecasting and extreme event modelling using wavelet based artificial neural networks. Water Resour Manag 28(13):4857–4873. https://doi.org/10.1007/s11269-014-0781-1

McKinney W (2010) Data structures for statistical computing in python. Proc Python Sci Conf 1697900(Scipy):51–56. https://doi.org/10.25080/Majora-92bf1922-00a

Montanari A, Rosso R, Taqqu MS (1997) Fractionally differenced ARIMA models applied to hydrologic time series: Identification, estimation, and simulation. Water Resour Res 33(5):1035–1044. https://doi.org/10.1029/97wr00043

Mosavi A, Ozturk P, Chau KW (2018) Flood prediction using machine learning models: Literature review. Water 10(11):1536. https://doi.org/10.3390/w10111536

Peng H, Wu H, Wang J (2020) Research on the prediction of the water demand of construction engineering based on the BP neural network. Adv Civil Eng 2020:8868817. https://doi.org/10.1155/2020/8868817

Phan TTH, Nguyen XH (2020) Combining statistical machine learning models with ARIMA for water level forecasting: The case of the red river. Adv Water Resour 142:103656. https://doi.org/10.1016/j.advwatres.2020.103656

Qi Y, Zhou Z, Yang L, Quan Y, Miao Q (2019) A decomposition-ensemble learning model based on LSTM neural network for daily reservoir inflow forecasting. Water Resour Manag 33(12):4123–4139. https://doi.org/10.1007/s11269-019-02345-1

Rajib A, Merwade V (2017) Hydrologic response to future land use change in the Upper Mississippi River Basin by the end of 21st century. Hydrol Process 31(21):3645–3661. https://doi.org/10.1002/hyp.11282

Rangapuram SS, Seeger MW, Gasthaus J, Stella L, Wang Y, Januschowski T (2018) Deep state space models for time series forecasting. Adv Neural Inf Process Syst (NeurIPS) 7796–7805

Salas JD, Tabios GQ, Bartolini P (1985) Approaches to multivariate modeling of water resources time series. J Am Water Resour Assoc 21(4):683–708. https://doi.org/10.1111/j.1752-1688.1985.tb05383.x

Spruill CA, Workman SR, Taraba JL (2000) Simulation of daily and monthly stream discharge from small watersheds using the SWAT model. Trans ASAE 43(6):1431–1439. https://doi.org/10.13031/2013.3041

Tang Y, Su J, Khan MA (2020) Research on sentiment analysis of network forum based on BP neural network. Mob Netw Appl 26:174–183. https://doi.org/10.1007/s11036-020-01697-y

van der Walt S, Colbert SC, Varoquaux G (2011) The NumPy array: A structure for efficient numerical computation. Comput Sci Eng 13(2):22–30. https://doi.org/10.1109/MCSE.2011.37

Valipour M (2015) Long-term runoff study using SARIMA and ARIMA models in the United States: Runoff forecasting using SARIMA. Meteorol Appl 22(3):592–598. https://doi.org/10.1002/met.1491

Wang Y, Zhang J, Zhu H, Long M, Wang J, Yu PS (2019) Memory in memory: A predictive neural network for learning higher-order non-stationarity from spatiotemporal dynamics. Proc IEEE/CVF Conf Comput Vis Pattern Recognit (CVPR) 9146–9154. https://doi.org/10.1109/CVPR.2019.00937

Xiang Z, Yan J, Demir I (2020) A rainfall-runoff model with lstm-based sequence-to-sequence learning. Water Resour Res 56(1):e2019WR025326. https://doi.org/10.1029/2019WR025326

Xu S, Chan HK, Zhang T (2019) Forecasting the demand of the aviation industry using hybrid time series SARIMA-SVR approach. Transp Res Part E Logist Transp Rev 122:169–180. https://doi.org/10.1016/j.tre.2018.12.005

Yuan X, Chen C, Lei X, Yuan Y, Adnan RM (2018) Monthly runoff forecasting based on LSTM-ALO model. Stoch Environ Res Risk Assess 32(8):2199–2212. https://doi.org/10.1007/s00477-018-1560-y

Young CC, Liu WC (2015) Prediction and modelling of rainfall-runoff during typhoon events using a physically-based and artificial neural network hybrid model. Hydrol Sci J 60(12):2102–2116. https://doi.org/10.1080/02626667.2014.959446

Zhang D, Tian L, Hong M, Han F, Ren Y, Chen Y (2018) Combining convolution neural network and bidirectional gated recurrent unit for sentence semantic classification. IEEE Access 6:73750–73759. https://doi.org/10.1109/ACCESS.2018.2882878

Zhao Z, Chen W, Wu X, Chen PCY, Liu J (2017) LSTM network: a deep learning approach for short-term traffic forecast. IET Intell Transp Syst 11(2):68–75. https://doi.org/10.1049/iet-its.2016.0208

Acknowledgements

The authors would particularly like to thank Suzhou Hydrology and Water Resources Bureau of Anhui Province for providing the dataset needed to carry out this study.

Funding

This research was supported by the National Key Research and Development Program of China (2021YFC3200202), and the Scientific Research Projects of IWHR (01882104, 01882106).

Author information

Contributions

S.H. designed and developed the models and methods, analyzed the data, and drafted the manuscript; X.S. guided and supervised the whole process; X.S., J.Y., Y.Z., and H.C. revised the manuscript; and all authors read and approved the final manuscript.

Ethics declarations

Ethical Approval

Not applicable.

Consent to Participate

Not applicable.

Consent to Publish

Not applicable.

Competing Interests

None.

Highlights

• Exploratory data analysis can reveal patterns in the dataset, and this analysis provides a reference for modeling.

• BGRU-BP and BLSTM-BP models are used for short-term runoff forecasting.

• The combined models are introduced to improve the generalization ability of models.

• The BGRU-BP model has a simpler structure and performs as well as the BLSTM-BP model.

Appendix

Fig. 13 Hyper-parameter loss function curves of the BGRU-BP and BLSTM-BP models in the training set: (a) number of iterations of the BGRU-BP model, (b) number of iterations of the BLSTM-BP model, (c) weight decay of the BGRU-BP model, (d) weight decay of the BLSTM-BP model, (e) learning rate of the BGRU-BP model, and (f) learning rate of the BLSTM-BP model

The ultimate goal of model hyper-parameter optimization is to make the loss function as small as possible and thereby achieve high prediction accuracy. In this study, the optimal hyper-parameters were determined by trial and error, with the mean squared error (MSE) as the loss function. Figure 13 presents the loss function curves of three hyper-parameters for the BGRU-BP and BLSTM-BP models in the training set. As can be seen from Fig. 13a, b, the MSE decreases rapidly as the number of iterations goes from 0 to 1000 and stabilizes beyond 1000 iterations. Therefore, the maximum number of iterations is set to 1000. According to Fig. 13e, f, the learning rate loss function curves behave similarly, and the optimal learning rate is 0.0001. In Fig. 13c, d, the MSE increases slowly as the weight decay goes from 0 to 0.001. When the weight decay exceeds 0.001, the MSE first increases rapidly and then tends to be stable. Therefore, the optimal weight decay is taken as 0.001.
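A trial-and-error sweep of this kind can be sketched as follows: one hyper-parameter is varied while the others stay fixed, and the final training MSE is recorded for each candidate so that a curve like those in Fig. 13 can be inspected. The sketch is only an illustration. It replaces the neural networks with a toy linear model trained by plain gradient descent with L2 weight decay, and the data, function names, and candidate values are hypothetical rather than taken from the study.

```python
from typing import Dict, List, Tuple

# Toy data y = 2x + 1, a hypothetical stand-in for the rainfall-runoff samples.
XS = [i / 10 for i in range(20)]
YS = [2.0 * x + 1.0 for x in XS]


def train_mse(learning_rate: float, weight_decay: float, n_iter: int) -> float:
    """Fit y = w*x + b by gradient descent and return the final training MSE."""
    w, b = 0.0, 0.0
    n = len(XS)
    for _ in range(n_iter):
        errs = [w * x + b - y for x, y in zip(XS, YS)]
        # Gradient of the MSE, plus the weight-decay term on the weight only
        grad_w = 2.0 * sum(e * x for e, x in zip(errs, XS)) / n + weight_decay * w
        grad_b = 2.0 * sum(errs) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return sum((w * x + b - y) ** 2 for x, y in zip(XS, YS)) / n


def sweep(name: str, candidates: List[float],
          base: Dict[str, float]) -> List[Tuple[float, float]]:
    """Vary one hyper-parameter, keep the rest at `base`, return (value, MSE) pairs."""
    curve = []
    for value in candidates:
        params = dict(base, **{name: value})
        mse = train_mse(params["learning_rate"],
                        params["weight_decay"],
                        int(params["n_iter"]))
        curve.append((value, mse))
    return curve


# Toy settings, not the values reported for the BGRU-BP and BLSTM-BP models.
base = {"learning_rate": 0.05, "weight_decay": 0.0, "n_iter": 200}
for value, mse in sweep("n_iter", [10, 100, 1000], base):
    print(f"n_iter={value:>6}: MSE={mse:.6f}")
```

The sweep only produces the curve; picking the final value remains a judgment made from its shape, as in the discussion of Fig. 13, where the chosen weight decay is the point before the MSE starts to rise quickly rather than the point of minimum training MSE.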

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

He, S., Sang, X., Yin, J. et al. Short-term Runoff Prediction Optimization Method Based on BGRU-BP and BLSTM-BP Neural Networks. Water Resour Manage 37, 747–768 (2023). https://doi.org/10.1007/s11269-022-03401-z

DOI: https://doi.org/10.1007/s11269-022-03401-z