Abstract

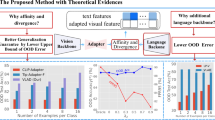

Recent large vision-language models such as CLIP have shown remarkable out-of-distribution (OOD) detection and generalization performance. However, their zero-shot in-distribution (ID) accuracy is often limited on downstream datasets. Recent CLIP-based fine-tuning methods such as prompt learning have demonstrated significant improvements in ID classification and OOD generalization where OOD labels are available. Nonetheless, it remains unclear whether the model is reliable under semantic shifts without OOD labels. In this paper, we aim to bridge this gap and present a comprehensive study of how fine-tuning impacts OOD detection for few-shot downstream tasks. By framing OOD detection as multi-modal concept matching, we establish a connection between fine-tuning methods and various OOD scores. Our results suggest that a proper choice of OOD score is essential for CLIP-based fine-tuning. In particular, the maximum concept matching (MCM) score consistently provides a promising solution. We also show that prompt learning achieves state-of-the-art OOD detection performance, improving over the zero-shot counterpart.

Notes

Similar trends also hold for ImageNet-1k as ID.

Similar observations can also be verified for adaptor-based methods.

References

Bahng, H., Jahanian, A., Sankaranarayanan, S., & Isola, P. (2022). Exploring visual prompts for adapting large-scale models. arXiv:2203.17274.

Bossard, L., Guillaumin, M., & Van Gool, L. (2014). Food-101—Mining discriminative components with random forests. In The European conference on computer vision (ECCV).

Cimpoi, M., Maji, S., Kokkinos, I., Mohamed, S., & Vedaldi, A. (2014). Describing textures in the wild. In The IEEE/CVF computer vision and pattern recognition conference (CVPR).

Deng, J., Dong, W., Socher, R., Li, L.J., Li, K., & Fei-Fei, L. (2009). Imagenet: A large-scale hierarchical image database. In The IEEE/CVF computer vision and pattern recognition conference (CVPR).

Dosovitskiy, A., Beyer, L., Kolesnikov, A., Weissenborn, D., Zhai, X., Unterthiner, T., Dehghani, M., Minderer, M., Heigold, G., Gelly, S., Uszkoreit, J., & Houlsby, N. (2021). An image is worth \(16\times 16\) words: Transformers for image recognition at scale. In International conference on learning representations (ICLR).

Esmaeilpour, S., Liu, B., Robertson, E., & Shu, L. (2022). Zero-shot open set detection by extending clip. In The AAAI conference on artificial intelligence (AAAI).

Fort, S., Ren, J., & Lakshminarayanan, B. (2021). Exploring the limits of out-of-distribution detection. In Conference on neural information processing systems (NeurIPS).

Gao, P., Geng, S., Zhang, R., Ma, T., Fang, R., Zhang, Y., Li, H., & Qiao, Y. (2021). Clip-adapter: Better vision-language models with feature adapters. arXiv:2110.04544.

Hendrycks, D., & Gimpel, K. (2017). A baseline for detecting misclassified and out-of-distribution examples in neural networks. In International conference on learning representations (ICLR).

Hendrycks, D., Mazeika, M., Kadavath, S., & Song, D. (2022). Scaling out-of-distribution detection for real-world settings. In International conference on machine learning (ICML).

Hendrycks, D., Zhao, K., Basart, S., Steinhardt, J., & Song, D. (2021). Natural adversarial examples. In The IEEE/CVF conference on computer vision and pattern recognition (CVPR).

Huang, R., & Li, Y. (2021). Mos: Towards scaling out-of-distribution detection for large semantic space. In The IEEE/CVF computer vision and pattern recognition conference (CVPR).

Huang, T., Chu, J., & Wei, F. (2022). Unsupervised prompt learning for vision-language models. arXiv:2204.03649.

Jia, C., Yang, Y., Xia, Y., Chen, Y.T., Parekh, Z., Pham, H., Le, Q., Sung, Y.H., Li, Z., & Duerig, T. (2021). Scaling up visual and vision-language representation learning with noisy text supervision. In International conference on machine learning (ICML).

Krasin, I., Duerig, T., Alldrin, N., Ferrari, V., Abu-El-Haija, S., Kuznetsova, A., Rom, H., Uijlings, J., Popov, S., Veit, A., Belongie, S., Gomes, V., Gupta, A., Sun, C., Chechik, G., Cai, D., Feng, Z., Narayanan, D., & Murphy, K. (2017). Openimages: A public dataset for large-scale multi-label and multi-class image classification. Dataset available from https://github.com/openimages.

Krause, J., Stark, M., Deng, J., & Fei-Fei, L. (2013). 3D object representations for fine-grained categorization. In 4th international IEEE workshop on 3d representation and recognition (3dRR-13), Sydney, Australia.

Lee, K., Lee, K., Lee, H., & Shin, J. (2018). A simple unified framework for detecting out-of-distribution samples and adversarial attacks. In Conference on neural information processing systems (NeurIPS).

Lester, B., Al-Rfou, R., & Constant, N. (2021). The power of scale for parameter-efficient prompt tuning. In Proceedings of the empirical methods in natural language processing (EMNLP), pp. 3045–3059.

Li, X.L., & Liang, P. (2021). Prefix-tuning: Optimizing continuous prompts for generation. In 59th annual meeting of the association for computational linguistics and the 11th international joint conference on natural language processing, pp. 4582–4597.

Li, Y., Liang, F., Zhao, L., Cui, Y., Ouyang, W., Shao, J., Yu, F., & Yan, J. (2022). Supervision exists everywhere: A data efficient contrastive language-image pre-training paradigm. In International conference on learning representations (ICLR).

Liu, W., Wang, X., Owens, J., & Li, Y. (2020). Energy-based out-of-distribution detection. In Conference on neural information processing systems (NeurIPS).

Loshchilov, I., & Hutter, F. (2019). Decoupled weight decay regularization. In International conference on learning representations (ICLR).

Manli, S., Weili, N., De-An, H., Zhiding, Y., Tom, G., Anima, A., & Chaowei, X. (2022). Test-time prompt tuning for zero-shot generalization in vision-language models. In Advances in neural information processing systems (NeurIPS).

Ming, Y., Cai, Z., Gu, J., Sun, Y., Li, W., & Li, Y. (2022). Delving into out-of-distribution detection with vision-language representations. In Advances in neural information processing systems (NeurIPS).

Parkhi, O.M., Vedaldi, A., Zisserman, A., & Jawahar, C.V. (2012). Cats and dogs. In The IEEE/CVF computer vision and pattern recognition conference (CVPR).

Radford, A., Kim, J.W., Hallacy, C., Ramesh, A., Goh, G., Agarwal, S., Sastry, G., Askell, A., Mishkin, P., Clark, J., et al. (2021). Learning transferable visual models from natural language supervision. In International conference on machine learning (ICML).

Van Horn, G., Mac Aodha, O., Song, Y., Cui, Y., Sun, C., Shepard, A., Adam, H., Perona, P., & Belongie, S. (2018). The inaturalist species classification and detection dataset. In The IEEE/CVF computer vision and pattern recognition conference (CVPR).

Wang, H., Li, Z., Feng, L., & Zhang, W. (2022). Vim: Out-of-distribution with virtual-logit matching. In The IEEE/CVF conference on computer vision and pattern recognition (CVPR), pp. 4921–4930.

Xiao, J., Hays, J., Ehinger, K.A., Oliva, A., & Torralba, A. (2010). Sun database: Large-scale scene recognition from abbey to zoo. In The IEEE/CVF computer vision and pattern recognition conference (CVPR).

Yang, J., Zhou, K., Li, Y., & Liu, Z. (2021). Generalized out-of-distribution detection: A survey. arXiv:2110.11334.

Yao, L., Huang, R., Hou, L., Lu, G., Niu, M., Xu, H., Liang, X., Li, Z., Jiang, X., & Xu, C. (2021). Filip: Fine-grained interactive language-image pre-training. In International conference on learning representations (ICLR).

Zhang, R., Zhang, W., Fang, R., Gao, P., Li, K., Dai, J., Qiao, Y., & Li, H. (2022). Tip-adapter: Training-free adaption of clip for few-shot classification. In 17th European conference on computer vision (ECCV), pp. 493–510.

Zhou, B., Lapedriza, A., Khosla, A., Oliva, A., & Torralba, A. (2017). Places: A 10 million image database for scene recognition. IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), 40(6), 1452–1464.

Zhou, K., Yang, J., Loy, C. C., & Liu, Z. (2022a). Conditional prompt learning for vision-language models. In IEEE/CVF conference on computer vision and pattern recognition (CVPR).

Zhou, K., Yang, J., Loy, C. C., & Liu, Z. (2022b). Learning to prompt for vision-language models. International Journal of Computer Vision (IJCV), 130(9), 2337–2348.

Acknowledgements

Support for this research was provided by American Family Insurance through a research partnership with the University of Wisconsin-Madison’s Data Science Institute, Office of Naval Research under award number N00014-23-1-2643, AFOSR Young Investigator Program under award number FA9550-23-1-0184, and National Science Foundation (NSF) CAREER Award No. IIS-2237037.

Additional information

Communicated by Kaiyang Zhou.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A: Dataset Details

A.1 Details on ID and OOD Dataset Construction

For ID datasets, we follow the same construction as in previous works (Zhang et al., 2022; Zhou et al., 2022a, b). Detailed instructions on dataset installation can be found at https://github.com/KaiyangZhou/CoOp/blob/main/DATASETS.md. For OOD datasets, Huang and Li (2021) curate a collection of subsets from iNaturalist (Van Horn et al., 2018), SUN (Xiao et al., 2010), Places (Zhou et al., 2017), and Texture (Cimpoi et al., 2014) as large-scale OOD datasets for ImageNet-1k, where the classes of the test sets do not overlap with ImageNet-1k. Detailed instructions can be found at https://github.com/deeplearning-wisc/large_scale_ood.

Appendix B: Additional Results

B.1 ID Accuracy

While we primarily focus on the OOD detection performance of CLIP-based fine-tuning methods, we report the ID accuracy for each dataset based on CLIP-B/16 in Table 6 for completeness. Further results on ID accuracy with various datasets and architectures can be found in Zhou et al. (2022a), Zhou et al. (2022b), and Zhang et al. (2022).

B.2 OOD Detection Performance Based on Visual Features Alone

In this section, we explore several commonly used OOD detection scores based solely on the visual branch of CLIP models. Specifically, we consider the Mahalanobis score (Lee et al., 2018) on the penultimate layer of the visual encoder, and the MSP (Hendrycks & Gimpel, 2017), Energy (Liu et al., 2020), and KL Matching (Hendrycks et al., 2022) scores on the logit layer after linear probing the visual encoder. The results, based on 16-shot Caltech-101 (ID), are summarized in Table 7. The Mahalanobis score does not yield promising performance because 1) the feature embeddings from the visual encoder of CLIP may not follow class-conditional Gaussian distributions, and 2) it is challenging to estimate the mean, and especially the covariance matrix, when the number of samples is much smaller than the feature dimension, as in the few-shot setting. The OOD scores based on the fine-tuned logit layer also perform worse than the MCM score. One major reason is that fine-tuning CLIP in the few-shot setting is prone to overfitting the downstream ID dataset, making the model less reliable. This further highlights the importance of choosing OOD detection scores suited to parameter-efficient fine-tuning methods.
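The covariance-estimation issue can be made concrete with a small sketch. The code below is an illustration only, not the authors' implementation: it fits class means and a shared covariance in the style of Lee et al. (2018) on random stand-in features, and the feature dimension, shot count, and ridge term are all assumptions chosen for the example. With far fewer samples than dimensions, the estimated covariance is rank-deficient, so it cannot be inverted without some form of regularization.

```python
import numpy as np

def fit_class_gaussians(features, labels):
    """Estimate per-class means and one shared (tied) covariance matrix."""
    classes = np.unique(labels)
    means = np.stack([features[labels == c].mean(axis=0) for c in classes])
    # Center every sample by the mean of its own class.
    centered = features - means[np.searchsorted(classes, labels)]
    cov = centered.T @ centered / len(features)
    return means, cov

def mahalanobis_score(x, means, cov, ridge=1e-3):
    """Negative minimum squared Mahalanobis distance (higher = more ID-like).

    The ridge term is an assumption of this sketch; it only keeps the
    inverse defined when the covariance estimate is singular.
    """
    precision = np.linalg.inv(cov + ridge * np.eye(cov.shape[0]))
    diffs = means - x
    d2 = np.einsum("kd,de,ke->k", diffs, precision, diffs)
    return -d2.min()

# Hypothetical few-shot setup: 4 classes, 2 shots each, 64-dim features.
rng = np.random.default_rng(0)
num_classes, shots, dim = 4, 2, 64
labels = np.repeat(np.arange(num_classes), shots)
features = rng.normal(size=(num_classes * shots, dim))  # stand-in for CLIP features

means, cov = fit_class_gaussians(features, labels)
rank = np.linalg.matrix_rank(cov)
score = mahalanobis_score(rng.normal(size=dim), means, cov)
print(f"covariance rank {rank} of {dim}; example score {score:.2f}")
```

The rank of the shared covariance is bounded by the number of samples minus the number of classes, which is far below the feature dimension here. Any score built on its inverse therefore depends heavily on the chosen regularizer rather than on the data.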

B.3 Additional Results on ImageNet-1k

In this section, we consider two additional OOD test sets, ImageNet-O (Hendrycks et al., 2021) and OpenImage-O (Wang et al., 2022), for ImageNet-1k (ID). OpenImage-O is a subset curated from the test set of OpenImage-V3 (Krasin et al., 2017) containing a diverse set of categories. ImageNet-O is a challenging OOD dataset that contains naturally adversarial examples for ImageNet-1k. The results are shown in Table 8. The model (CLIP-B/16) is trained with CoOp. We can see that: 1) The performance on ImageNet-O is generally worse than on the other OOD test sets (iNaturalist, Textures, SUN, Places) in Sect. 4.3, suggesting that this task remains challenging in the context of few-shot prompt learning. 2) The MCM score still performs best compared to MS and MSP on both OOD test sets, consistent with our previous observations. This further highlights the importance of softmax and temperature scaling for OOD detection with fine-tuning.
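To illustrate why softmax scaling can matter, the following minimal Python sketch contrasts a maximum-similarity score with MCM, taken here as the maximum softmax probability over temperature-scaled cosine similarities (Ming et al., 2022). Reading MS as the maximum raw similarity is an assumption of this sketch, since the abbreviation is not defined in this appendix. The similarity values and the temperature are invented for illustration and are not taken from the experiments.

```python
import math

def max_similarity(sims):
    """Assumed MS score: the largest raw cosine similarity."""
    return max(sims)

def mcm_score(sims, temperature=1.0):
    """MCM score: max softmax probability over temperature-scaled similarities."""
    scaled = [s / temperature for s in sims]
    shift = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(v - shift) for v in scaled]
    return max(exps) / sum(exps)

# Hypothetical cosine similarities to three class prompts.
id_like = [0.30, 0.10, 0.10]   # one concept clearly matches
ood_like = [0.30, 0.29, 0.28]  # no concept stands out

tau = 0.01  # illustrative temperature, not a value from the paper
print(max_similarity(id_like), max_similarity(ood_like))
print(mcm_score(id_like, tau), mcm_score(ood_like, tau))
```

Both inputs share the same top similarity, so the maximum-similarity score cannot separate them. The softmax normalization also accounts for how close the competing concepts are, which gives the ID-like input the higher MCM score.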

B.4 Alternative OOD Scores

In this section, we investigate the performance of several alternative OOD scoring functions based on the cosine similarity \(s_k(\boldsymbol{x})\) between the input \(\boldsymbol{x}\) and the k-th label, \(k\in \{1,2,\dots,K\}\) (defined in Sect. 3.2). Specifically, we consider the energy score and the KL Matching score for each adaptation method and summarize the results based on Caltech-101 (ID) in Table 10. We observe that 1) with the energy score, all adaptation methods significantly improve over the zero-shot baseline (ZOCLIP), and 2) performance improves substantially overall when using the KL Matching score. However, even the best performance achieved (FPR95 of 7.91 with CoCoOp) falls short of the MCM score (FPR95 of 5.02 with CoCoOp).
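As a rough sketch of how these two scores can be computed from the similarities, the Python below follows the general forms in Liu et al. (2020) and Hendrycks et al. (2022). The exact variants used for Table 10 are not spelled out in this appendix, so the temperature, the helper names, and the toy similarity values are all assumptions made for illustration.

```python
import math

def softmax(values, temperature=1.0):
    scaled = [v / temperature for v in values]
    shift = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(v - shift) for v in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def energy_score(sims, temperature=1.0):
    """Temperature-scaled log-sum-exp of the similarities (higher = more ID-like)."""
    scaled = [s / temperature for s in sims]
    shift = max(scaled)
    return temperature * (shift + math.log(sum(math.exp(v - shift) for v in scaled)))

def class_templates(id_sims, temperature=1.0):
    """Average posterior of held-out ID samples, grouped by predicted class."""
    groups = {}
    for sims in id_sims:
        probs = softmax(sims, temperature)
        groups.setdefault(probs.index(max(probs)), []).append(probs)
    return [[sum(col) / len(rows) for col in zip(*rows)] for rows in groups.values()]

def kl_matching_score(sims, templates, temperature=1.0):
    """Negative minimum KL divergence to any class template (higher = more ID-like)."""
    probs = softmax(sims, temperature)
    def kl(p, q):
        return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return -min(kl(probs, template) for template in templates)

# Hypothetical similarities; tau is an illustrative temperature.
tau = 0.1
held_out_id = [[0.30, 0.10, 0.12], [0.11, 0.31, 0.10],
               [0.09, 0.12, 0.29], [0.28, 0.11, 0.10]]
templates = class_templates(held_out_id, tau)

id_like, ood_like = [0.29, 0.10, 0.11], [0.20, 0.19, 0.21]
print(energy_score(id_like, tau), energy_score(ood_like, tau))
print(kl_matching_score(id_like, templates, tau),
      kl_matching_score(ood_like, templates, tau))
```

KL Matching needs held-out ID samples to build the class templates, whereas the energy score and MCM are computed from a single input's similarities.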

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Ming, Y., Li, Y. How Does Fine-Tuning Impact Out-of-Distribution Detection for Vision-Language Models?. Int J Comput Vis 132, 596–609 (2024). https://doi.org/10.1007/s11263-023-01895-7

DOI: https://doi.org/10.1007/s11263-023-01895-7