Abstract

A complete representation of 3D objects requires characterizing the space of deformations in an interpretable manner, from articulations of a single instance to changes in shape across categories. In this work, we improve on a prior generative model of geometric disentanglement for 3D shapes, wherein the space of object geometry is factorized into rigid orientation, non-rigid pose, and intrinsic shape. The resulting model can be trained from raw 3D shapes, without correspondences, labels, or even rigid alignment, using a combination of classical spectral geometry and probabilistic disentanglement of a structured latent representation space. Our improvements include more sophisticated handling of rotational invariance and the use of a diffeomorphic flow network to bridge latent and spectral space. The geometric structuring of the latent space imparts an interpretable characterization of the deformation space of an object. Furthermore, it enables tasks like pose transfer and pose-aware retrieval without requiring supervision. We evaluate our model on its generative modelling, representation learning, and disentanglement performance, showing improved rotation invariance and intrinsic-extrinsic factorization quality over the prior model.

Similar content being viewed by others

Data Availability

All datasets are from publicly available sources, detailed below.

Notes

However, we note that, by default, we use spectra derived from meshes, unless otherwise specified (but see Sect. 5.3.3).

We remark that these results utilize single-axis (planar) rotations; we refer the reader to Appendix G for tests with full rotations, which results in reduced rotational robustness.

Except for the flow network and altered AEs.

References

Achlioptas, P., Diamanti, O., Mitliagkas, I., & Guibas, L. (2017). Learning representations and generative models for 3d point clouds. arXiv preprint arXiv:1707.02392

Andreux, M., Rodola, E., Aubry, M., & Cremers, D. (2014). Anisotropic Laplace–Beltrami operators for shape analysis. In European conference on computer vision (pp. 299–312). Springer.

Aubry, M., Schlickewei, U., & Cremers, D. (2011). The wave kernel signature: A quantum mechanical approach to shape analysis. In 2011 IEEE international conference on computer vision workshops (ICCV workshops) (pp. 1626–1633). IEEE.

Aumentado-Armstrong, T., Tsogkas, S., Jepson, A., & Dickinson, S. (2019). Geometric disentanglement for generative latent shape models. In Proceedings of the IEEE international conference on computer vision (pp. 8181–8190)

Ba, J. L., Kiros, J. R., & Hinton, G. E. (2016). Layer normalization. arXiv preprint arXiv:1607.06450

Baek, S. Y., Lim, J., & Lee, K. (2015). Isometric shape interpolation. Computers & Graphics, 46, 257–263.

Basset, J., Wuhrer, S., Boyer, E., & Multon, F. (2020). Contact preserving shape transfer: Retargeting motion from one shape to another. Computers & Graphics.

Bengio, Y., Courville, A., & Vincent, P. (2013). Representation learning: A review and new perspectives. IEEE Transactions on Pattern Analysis and Machine Intelligence, 35(8), 1798–1828.

Berkiten, S., Halber, M., Solomon, J., Ma, C., Li, H., & Rusinkiewicz, S. (2017). Learning detail transfer based on geometric features. Computer Graphics Forum, Wiley Online Library, 36, 361–373.

Boscaini, D., Eynard, D., Kourounis, D., & Bronstein, M. M. (2015). Shape-from-operator: Recovering shapes from intrinsic operators. Computer Graphics Forum, Wiley Online Library, 34, 265–274.

Boscaini, D., Masci, J., Melzi, S., Bronstein, M. M., Castellani, U., & Vandergheynst, P. (2015). Learning class-specific descriptors for deformable shapes using localized spectral convolutional networks. Computer Graphics Forum, Wiley Online Library, 34, 13–23.

Bronstein, A. M., Bronstein, M. M., Guibas, L. J., & Ovsjanikov, M. (2011). Shape google: Geometric words and expressions for invariant shape retrieval. ACM Transactions on Graphics (TOG), 30(1), 1.

Chen, C., Li, G., Xu, R., Chen, T., Wang, M., & Lin, L. (2019a). Clusternet: Deep hierarchical cluster network with rigorously rotation-invariant representation for point cloud analysis. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 4994–5002).

Chen, X., Chen, B., & Mitra, N. J. (2019b). Unpaired point cloud completion on real scans using adversarial training. arXiv preprint arXiv:1904.00069

Chen, X., Lin, K. Y., Liu, W., Qian, C., & Lin, L. (2019c). Weakly-supervised discovery of geometry-aware representation for 3D human pose estimation. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 10895–10904).

Chen, X., Song, J., & Hilliges, O. (2019d). Monocular neural image based rendering with continuous view control. In Proceedings of the IEEE/CVF international conference on computer vision (pp. 4090–4100).

Chern, A., Knöppel, F., Pinkall, U., & Schröder, P. (2018). Shape from metric. ACM Transactions on Graphics (TOG), 37(4), 1–17.

Choukroun, Y., Shtern, A., Bronstein, A. M., & Kimmel, R. (2018). Hamiltonian operator for spectral shape analysis. IEEE Transactions on Visualization and Computer Graphics.

Chu, M., & Golub, G. (2005). Inverse eigenvalue problems: Theory, algorithms, and applications. OUP Oxford

Chua, C. S., & Jarvis, R. (1997). Point signatures: A new representation for 3D object recognition. International Journal of Computer Vision, 25(1), 63–85.

Cohen, T. S., Geiger, M., Köhler, J., & Welling, M. (2018). Spherical CNNs. arXiv preprint arXiv:1801.10130

Corman, E., Solomon, J., Ben-Chen, M., Guibas, L., & Ovsjanikov, M. (2017). Functional characterization of intrinsic and extrinsic geometry. ACM Transactions on Graphics (TOG), 36(2), 14.

Cosmo, L., Norelli, A., Halimi, O., Kimmel, R., & Rodolà, E. (2020). Limp: Learning latent shape representations with metric preservation priors. arXiv preprint arXiv:2003.12283

Cosmo, L., Panine, M., Rampini, A., Ovsjanikov, M., Bronstein, M. M., & Rodolà, E. (2019). Isospectralization, or how to hear shape, style, and correspondence. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 7529–7538).

Dinh, L., Krueger, D., & Bengio, Y. (2014). Nice: Non-linear independent components estimation. arXiv preprint arXiv:1410.8516

Dinh, L., Sohl-Dickstein, J., & Bengio, S. (2016). Density estimation using real NVP. arXiv preprint arXiv:1605.08803

Durkan, C., Bekasov, A., Murray, I., & Papamakarios, G. (2020). nflows: Normalizing flows in PyTorch. https://doi.org/10.5281/zenodo.4296287

Dym, N., & Maron, H. (2020). On the universality of rotation equivariant point cloud networks. arXiv preprint arXiv:2010.02449

Esmaeili, B., Wu, H., Jain, S., Bozkurt, A., Siddharth, N., Paige, B., Brooks, D. H., Dy, J., & van de Meent, J. W. (2018). Structured disentangled representations. arXiv preprint arXiv:1804.02086

Fuchs, F. B., Worrall, D. E., Fischer, V., & Welling, M. (2020). Se (3)-transformers: 3D roto-translation equivariant attention networks. arXiv preprint arXiv:2006.10503

Fumero, M., Cosmo, L., Melzi, S., & Rodolà, E. (2021). Learning disentangled representations via product manifold projection. In International conference on machine learning (pp. 3530–3540). PMLR.

Gao, L., Yang, J., Qiao, Y. L., Lai, Y. K., Rosin, P. L., Xu, W., & Xia, S. (2018). Automatic unpaired shape deformation transfer. ACM Transactions on Graphics (TOG), 37(6), 1–15.

Gebal, K., Bærentzen, J. A., Aanæs, H., & Larsen, R. (2009). Shape analysis using the auto diffusion function. Computer Graphics Forum, Wiley Online Library, 28, 1405–1413.

Ghosh, P., Sajjadi, M. S., Vergari, A., Black, M., & Schölkopf, B. (2019). From variational to deterministic autoencoders. arXiv preprint arXiv:1903.12436

Gordon, C., Webb, D. L., & Wolpert, S. (1992). One cannot hear the shape of a drum. Bulletin of the American Mathematical Society, 27(1), 134–138.

Groueix, T., Fisher, M., Kim, V. G., Russell, B. C., & Aubry, M. (2018). 3D-coded: 3D correspondences by deep deformation. In Proceedings of the European conference on computer vision (ECCV) (pp. 230–246).

Guo, Y., Bennamoun, M., Sohel, F., Lu, M., & Wan, J. (2014). 3D object recognition in cluttered scenes with local surface features: A survey. IEEE Transactions on Pattern Analysis and Machine Intelligence, 36(11), 2270–2287.

Higgins, I., Matthey, L., Pal, A., Burgess, C., Glorot, X., Botvinick, M., Mohamed, S., & Lerchner, A. (2017). \(\beta \)-vae: Learning basic visual concepts with a constrained variational framework. In International conference on learning representations.

Huang, R., Rakotosaona, M. J., Achlioptas, P., Guibas, L. J., & Ovsjanikov, M. (2019). Operatornet: Recovering 3D shapes from difference operators. In International conference on computer vision (ICCV).

Huynh, D. Q. (2009). Metrics for 3D rotations: Comparison and analysis. Journal of Mathematical Imaging and Vision, 35(2), 155–164.

Johnson, A. E., & Hebert, M. (1999). Using spin images for efficient object recognition in cluttered 3d scenes. IEEE Transactions on Pattern Analysis and Machine Intelligence, 21(5), 433–449.

Kac, M. (1966). Can one hear the shape of a drum? The American Mathematical Monthly,73(4P2), 1–23

Kingma, D. P., & Ba, J. (2014). Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980

Kingma, D. P., & Dhariwal, P. (2018). Glow: Generative flow with invertible \(1\times 1\) convolutions. In Advances in neural information processing systems (pp. 10215–10224).

Kingma, D. P., Salimans, T., Jozefowicz, R., Chen, X., Sutskever, I., & Welling, M. (2016). Improved variational inference with inverse autoregressive flow. Advances in Neural Information Processing Systems, 29, 4743–4751.

Klambauer, G., Unterthiner, T., Mayr, A., & Hochreiter, S. (2017). Self-normalizing neural networks. Advances in Neural Information Processing Systems,30.

Kobyzev, I., Prince, S., & Brubaker, M. (2020). Normalizing flows: An introduction and review of current methods. IEEE Transactions on Pattern Analysis and Machine Intelligence.

Kondor, R., Son, H. T., Pan, H., Anderson, B., & Trivedi, S. (2018). Covariant compositional networks for learning graphs. arXiv preprint arXiv:1801.02144

Kovnatsky, A., Bronstein, M. M., Bronstein, A. M., Glashoff, K., & Kimmel, R. (2013). Coupled quasi-harmonic bases. Computer Graphics Forum, Wiley Online Library, 32, 439–448.

Kumar, A., Sattigeri, P., & Balakrishnan, A. (2017). Variational inference of disentangled latent concepts from unlabeled observations. arXiv preprint arXiv:1711.00848

LeCun, Y., Bottou, L., Bengio, Y., & Haffner, P. (1998). Gradient-based learning applied to document recognition. Proceedings of the IEEE, 86(11), 2278–2324.

Levinson, J., Sud, A., & Makadia, A. (2019). Latent feature disentanglement for 3D meshes. arXiv preprint arXiv:1906.03281

Lévy, B. (2006). Laplace–Beltrami eigenfunctions towards an algorithm that understands geometry. In IEEE international conference on shape modeling and applications, 2006. SMI 2006 (pp 13–13). IEEE.

Li, J., Bi, Y., & Lee, G. H. (2019). Discrete rotation equivariance for point cloud recognition. In 2019 International conference on robotics and automation (ICRA) (pp. 7269–7275). IEEE.

Liu, H. T. D., Jacobson, A., & Crane, K. (2017). A Dirac operator for extrinsic shape analysis. Computer Graphics Forum, Wiley Online Library, 36, 139–149.

Loper, M., Mahmood, N., Romero, J., Pons-Moll, G., & Black, M. J. (2015). SMPL: A skinned multi-person linear model. ACM Trans Graphics (Proc SIGGRAPH Asia),34(6), 248:1–248:16.

Loshchilov, I., & Hutter, F. (2017). Decoupled weight decay regularization. arXiv preprint arXiv:1711.05101

MacQueen, J., et al. (1967). Some methods for classification and analysis of multivariate observations. In Proceedings of the fifth Berkeley symposium on mathematical statistics and probability, Oakland, CA, USA (Vol. 1, pp. 281–297).

Mahmood, N., Ghorbani, N., Troje, N. F., Pons-Moll, G., & Black, M. J. (2019). AMASS: Archive of motion capture as surface shapes. In International conference on computer vision (pp. 5442–5451).

Marin, R., Rampini, A., Castellani, U., Rodola, E., Ovsjanikov, M., & Melzi, S. (2020). Instant recovery of shape from spectrum via latent space connections. In 2020 International conference on 3D vision (3DV) (pp. 120–129). IEEE.

Marin, R., Rampini, A., Castellani, U., Rodolà, E., Ovsjanikov, M., & Melzi, S. (2021). Spectral shape recovery and analysis via data-driven connections. International Journal of Computer Vision, 1–16.

Masoumi, M., & Hamza, A. B. (2017). Spectral shape classification: A deep learning approach. Journal of Visual Communication and Image Representation, 43, 198–211.

Melzi, S., Rodolà, E., Castellani, U., & Bronstein, M. M. (2018). Localized manifold harmonics for spectral shape analysis. Computer Graphics Forum, Wiley Online Library, 37, 20–34.

Meyer, M., Desbrun, M., Schröder, P., & Barr, A. H. (2003). Discrete differential-geometry operators for triangulated 2-manifolds. In Visualization and mathematics III (pp. 35–57). Springer.

Moschella, L., Melzi, S., Cosmo, L., Maggioli, F., Litany, O., Ovsjanikov, M., et al. (2022). Learning spectral unions of partial deformable 3D shapes. Computer Graphics Forum, Wiley Online Library, 41, 407–417.

Narayanaswamy, S., Paige, B., Van de Meent, J. W., Desmaison, A., Goodman, N., Kohli, P., Wood, F., & Torr, P. (2017). Learning disentangled representations with semi-supervised deep generative models. In Advances in neural information processing systems (pp. 5925–5935).

Neumann, T., Varanasi, K., Theobalt, C., Magnor, M., & Wacker, M. (2014). Compressed manifold modes for mesh processing. Computer Graphics Forum, Wiley Online Library, 33, 35–44.

Ovsjanikov, M., Ben-Chen, M., Solomon, J., Butscher, A., & Guibas, L. (2012). Functional maps: A flexible representation of maps between shapes. ACM Transactions on Graphics (TOG), 31(4), 1–11.

Panine, M., & Kempf, A. (2016). Towards spectral geometric methods for Euclidean quantum gravity. Physical Review D, 93(8), 084033.

Papamakarios, G., Nalisnick, E., Rezende, D. J., Mohamed, S., & Lakshminarayanan, B. (2019). Normalizing flows for probabilistic modeling and inference. arXiv preprint arXiv:1912.02762

Paszke, A., Gross, S., Massa, F., Lerer, A., Bradbury, J., Chanan, G., Killeen, T., Lin, Z., Gimelshein, N., Antiga, L., Desmaison, A., Kopf, A., Yang, E., DeVito, Z., Raison, M., Tejani, A., Chilamkurthy, S., Steiner, B., Fang, L., Bai, J., & Chintala, S. (2019). Pytorch: An imperative style, highperformance deep learning library. Advances in Neural Information Processing Systems,32, 8024–8035.

Patané, G. (2016). Star-Laplacian spectral kernels and distances for geometry processing and shape analysis. Computer Graphics Forum, Wiley Online Library, 35, 599–624.

Pedregosa, F., Varoquaux, G., Gramfort, A., Michel, V., Thirion, B., Grisel, O., Blondel, M., Prettenhofer, P., Weiss, R., Dubourg, V., VanderPlas, J., Passos, A., Cournapeau, D., Brucher, M., Perrot, M., & Duchesnay, E. (2012). Scikit-learn: Machine learning in python. CoRR. arxiv:1201.0490

Pons-Moll, G., Romero, J., Mahmood, N., & Black, M. J. (2015). Dyna: A model of dynamic human shape in motion. ACM Transactions on Graphics, (Proc SIGGRAPH),34(4), 120:1–120:14.

Poulenard, A., Rakotosaona, M. J., Ponty, Y., & Ovsjanikov, M. (2019). Effective rotation-invariant point CNN with spherical harmonics kernels. arXiv preprint arXiv:1906.11555

Qi, C. R., Su, H., Mo, K., & Guibas, L. J. (2017). Pointnet: Deep learning on point sets for 3D classification and segmentation. In Proc computer vision and pattern recognition (CVPR) (Vol. 1, issue 2, p. 4). IEEE.

Rampini, A., Pestarini, F., Cosmo, L., Melzi, S., & Rodola, E. (2021). Universal spectral adversarial attacks for deformable shapes. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 3216–3226).

Ranjan, A., Bolkart, T., Sanyal, S., & Black, M. J. (2018). Generating 3D faces using convolutional mesh autoencoders. In European conference on computer vision (ECCV) (pp. 725–741).

Remelli, E., Han, S., Honari, S., Fua, P., & Wang, R. (2020). Lightweight multi-view 3D pose estimation through camera-disentangled representation. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 6040–6049).

Reuter, M. (2010). Hierarchical shape segmentation and registration via topological features of Laplace–Beltrami eigenfunctions. International Journal of Computer Vision, 89(2–3), 287–308.

Reuter, M., Wolter, F. E., & Peinecke, N. (2006). Laplace–Beltrami spectra as shape-DNA of surfaces and solids. Computer-Aided Design, 38(4), 342–366.

Rhodin, H., Constantin, V., Katircioglu, I., Salzmann, M., & Fua, P. (2019). Neural scene decomposition for multi-person motion capture. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 7703–7713).

Rhodin, H., Salzmann, M., & Fua, P. (2018). Unsupervised geometry-aware representation for 3D human pose estimation. In Proceedings of the European conference on computer vision (ECCV) (pp. 750–767).

Roberts, R. A., dos Anjos, R. K., Maejima, A., & Anjyo, K. (2020). Deformation transfer survey. Computers & Graphics.

Rodolà, E., Cosmo, L., Bronstein, M. M., Torsello, A., & Cremers, D. (2017). Partial functional correspondence. Computer Graphics Forum, Wiley Online Library, 36, 222–236.

Rustamov, R. M. (2007). Laplace–Beltrami eigenfunctions for deformation invariant shape representation. In Proceedings of the fifth Eurographics symposium on Geometry processing (pp. 225–233). Eurographics Association.

Sanghi, A. (2020). Info3D: Representation learning on 3D objects using mutual information maximization and contrastive learning. arXiv preprint arXiv:2006.02598

Sanghi, A., & Danielyan, A. (2019). Towards 3D rotation invariant embeddings. In CVPR 2019 workshop on 3D scene understanding for vision, graphics, and robotics.

Sharp, N., Gillespie, M., & Crane, K. (2021). Geometry processing with intrinsic triangulations. In SIGGRAPH’21: ACM SIGGRAPH 2021 courses.

Sharp, N., & Crane, K. (2020). A Laplacian for nonmanifold triangle meshes. Computer Graphics Forum, Wiley Online Library, 39, 69–80.

Shoemake, K. (1985). Animating rotation with quaternion curves. In Proceedings of the 12th annual conference on computer graphics and interactive techniques (pp. 245–254).

Stein, F., & Medioni, G., et al. (1992). Structural indexing: Efficient 3-D object recognition. IEEE Transactions on Pattern Analysis and Machine Intelligence,14(2), 125–145

Su, F. G., Lin, C. S., & Wang, Y. C. F. (2021). Learning interpretable representation for 3d point clouds. In 2020 25th International conference on pattern recognition (ICPR) (pp. 7470–7477). IEEE.

Sumner, R. W., & Popović, J. (2004). Deformation transfer for triangle meshes. ACM Transactions on Graphics (TOG), 23(3), 399–405.

Sun, X., Lian, Z., & Xiao, J. (2019). Srinet: Learning strictly rotation-invariant representations for point cloud classification and segmentation. In Proceedings of the 27th ACM international conference on multimedia (pp. 980–988).

Sun, J., Ovsjanikov, M., & Guibas, L. (2009). A concise and provably informative multi-scale signature based on heat diffusion. Computer Graphics Forum, Wiley Online Library, 28, 1383–1392.

Tan, Q., Gao, L., Lai, Y. K., & Xia, S. (2018). Variational autoencoders for deforming 3D mesh models. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 5841–5850).

Taubin, G. (1995). A signal processing approach to fair surface design. In Proceedings of the 22nd annual conference on computer graphics and interactive techniques (pp. 351–358).

Thomas, N., Smidt, T., Kearnes, S., Yang, L., Li, L., Kohlhoff, K., & Riley, P. (2018). Tensor field networks: Rotation-and translation-equivariant neural networks for 3D point clouds. arXiv preprint arXiv:1802.08219

Tombari, F., Salti, S., & Di Stefano, L. (2010). Unique signatures of histograms for local surface description. In European conference on computer vision (pp. 356–369). Springer.

Vallet, B., & Lévy, B. (2008). Spectral geometry processing with manifold harmonics. Computer Graphics Forum, Wiley Online Library, 27, 251–260.

van der Maaten, L., & Hinton, G. (2008). Visualizing data using t-sne. Journal of Machine Learning Research,9, 2579–2605.

Varol, G., Romero, J., Martin, X., Mahmood, N., Black, M. J., Laptev, I., & Schmid, C. (2017). Learning from synthetic humans. In CVPR.

Vinh, N. X., Epps, J., & Bailey, J. (2010). Information theoretic measures for clusterings comparison: Variants, properties, normalization and correction for chance. The Journal of Machine Learning Research, 11, 2837–2854.

Wang, Y., Ben-Chen, M., Polterovich, I., & Solomon, J. (2017). Steklov spectral geometry for extrinsic shape analysis. arXiv preprint arXiv:1707.07070

Watanabe, S. (1960). Information theoretical analysis of multivariate correlation. IBM Journal of Research and Development, 4(1), 66–82.

Weyl, H. (1911). Über die asymptotische verteilung der eigenwerte. Nachrichten von der Gesellschaft der Wissenschaften zu Göttingen, Mathematisch-Physikalische Klasse, 1911, 110–117.

Worrall, D. E., Garbin, S. J., Turmukhambetov, D., & Brostow, G. J. (2017). Interpretable transformations with encoder-decoder networks. In Proceedings of the IEEE international conference on computer vision (pp. 5726–5735).

Worrall, D., & Brostow, G. (2018). Cubenet: Equivariance to 3D rotation and translation. In Proceedings of the European conference on computer vision (ECCV) (pp. 567–584).

Xiao, Z., Lin, H., Li, R., Geng, L., Chao, H., & Ding, S. (2020). Endowing deep 3D models with rotation invariance based on principal component analysis. In 2020 IEEE international conference on multimedia and expo (ICME) (pp. 1–6). IEEE.

Yang, G., Huang, X., Hao, Z., Liu, M. Y., Belongie, S., & Hariharan, B. (2019). Pointflow: 3D point cloud generation with continuous normalizing flows. arXiv preprint arXiv:1906.12320

Ye, Z., Diamanti, O., Tang, C., Guibas, L., & Hoffmann, T. (2018). A unified discrete framework for intrinsic and extrinsic Dirac operators for geometry processing. Computer Graphics Forum, Wiley Online Library, 37, 93–106.

Yin, M., Li, G., Lu, H., Ouyang, Y., Zhang, Z., & Xian, C. (2015). Spectral pose transfer. Computer Aided Geometric Design, 35, 82–94.

You, Y., Lou, Y., Liu, Q., Tai, Y. W., Ma, L., Lu, C., & Wang, W. (2018). Pointwise rotation-invariant network with adaptive sampling and 3D spherical voxel convolution. arXiv preprint arXiv:1811.09361

Zhang, Z., Hua, B. S., Rosen, D. W., & Yeung, S. K. (2019). Rotation invariant convolutions for 3D point clouds deep learning. In International conference on 3D vision (3DV).

Zhang, X., Qin, S., Xu, Y., & Xu, H. (2020). Quaternion product units for deep learning on 3D rotation groups. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 7304–7313).

Zhao, Y., Birdal, T., Lenssen, J. E., Menegatti, E., Guibas, L., & Tombari, F. (2020). Quaternion equivariant capsule networks for 3D point clouds. In European conference on computer vision (pp. 1–19). Springer.

Zhou, K., Bhatnagarm, B. L., & Pons-Moll, G. (2020). Unsupervised shape and pose disentanglement for 3D meshes. In European conference on computer vision (pp. 341–357). Springer.

Zuffi, S., Kanazawa, A., Jacobs, D., & Black, M. J. (2017). 3D menagerie: Modeling the 3D shape and pose of animals. In IEEE Conf. on computer vision and pattern recognition (CVPR).

Acknowledgements

We are grateful for support from NSERC (CGSD3-534955-2019) and Samsung Research.

Author information

Authors and Affiliations

Corresponding author

Additional information

Communicated by Edmond Boyer.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Disclaimer: Tristan Aumentado-Armstrong and Stavros Tsogkas contributed to this article in their personal capacity as PhD student and Adjunct Professor at the University of Toronto, respectively. Sven Dickinson and Allan Jepson contributed to this article in their personal capacity as Professors at the University of Toronto. The views expressed (or the conclusions reached) by the authors are their own and do not necessarily represent the views of Samsung Research America, Inc.

Appendices

Glossary of Notation

Symbol | Sec/Eq | Definition |

|---|---|---|

P | Section 3 | Shape |

\(x_c\) | Section 3 | Canonical AE encoding |

\(\widetilde{x}\) | Section 3.1.2 | Non-canon FTL-AE encoding |

q | Section 3 | Quaternion |

\(D_P\) | Equation 2 | Distance between PCs |

\(z_I\) | Section 4.2.1 | Latent intrinsics |

\(z_E\) | Section 4.2.1 | Latent extrinsics |

\(z_R\) | Section 4.2.1 | Latent rigid pose |

\(\lambda \) | Section 2.2 | LBO Spectrum |

\(\widetilde{z}_I\) | Section 4.2.2 | Latent intrinsics (from \(\lambda \)) |

\(f_\lambda \) | Section 4.2.2 | Spectral flow network |

\(g_\lambda \) | Section 4.2.2 | Inverse of \(f_\lambda \) |

\({\mathcal {S}}\) | Equation 18 | Disentanglement score |

\(E_\beta \) | Section 5.3.2 | Retrieval error wrt SMPL shape |

\(E_\theta \) | Section 5.3.2 | Retrieval error wrt SMPL pose |

\(P_\lambda (\lambda )\) | Equation 12 | Spectral likelihood |

\(d_\lambda \) | Equation 14 | Distance between spectra |

\(d_R\) | Equation 4 | Distance between rotations |

\(\mathcal {L}_{AE}\) | Section 3.2 | AE total loss |

\(\mathcal {L}_c\) | Section 3.2.1 | AE x-consistency |

\(\mathcal {L}_R\) | Section 3.2.1 | AE rotation prediction |

\(\mathcal {L}_P\) | AE shape prediction | |

\(\mathcal {L}_{VAE}\) | Section 4.3 | GDVAE total loss |

\(\mathcal {L}_{HF}\) | Section 4.3.1 | HFVAE loss |

\(L_R\) | Section 4.3.1 | VAE reconstruction loss |

\(\mathcal {L}_\lambda \) | Section 4.3.2 | Spectral log-likelihood loss |

\(\mathcal {L}_D\) | Section 4.3.4 | Additional disentanglement loss |

\(\mathcal {L}_F\) | Section 4.3.4 | Intrinsics-Spectrum consistency |

Invariant FTL-Based Mapping

As an aside, in an FTL-based model, we remark that it is possible to transform \({x} \in {\mathcal {X}}\), in a way that is invariant to latent-space rotation operators. Let \({\mathcal {I}}[x] = (U(x)_i^T U(x)_j)_{i,j\in [1,N_s]; i \le j}\) be the collection of inner products of the subvectors of x. Then \({\mathcal {I}}[x]\) is rotation invariant; i.e., \({\mathcal {I}}[x] = {\mathcal {I}}[ F(R,x) ]\), for any \(R\in SO(3)\). This idea is noted by Worrall et al. (2017).

However, we found that using \({\mathcal {I}}[x]\) only slightly improved rotation invariance, yet slightly decreased reconstruction performance, and further was computationally expensive, due to the quadratic dependence of \(\dim ({\mathcal {I}}[x])\) on \(N_s\). Nevertheless, this may be specific to our particular architectural setup, and could still be an interesting direction for future work.

Evaluation Metric Details

1.1 Latent Rotation Invariance Measure

We provide a more detailed description of the latent clustering metric \({\mathcal {C}}_X\) here. Recall that our goal is to take a set of random shapes (potentially differing in intrinsics, non-rigid extrinsics, and orientation) and duplicate each shape, before randomly rotating the copies. We then encode each shape into \(x_c\)-space and cluster them. We use K-means clustering (MacQueen et al., 1967) to obtain the labeling. We expect our canonicalization method to bring the latent representations close in latent space, such that rotated copies should cluster together. We can therefore measure rotational invariance by supervised clustering quality metrics, in which the instance identity (i.e., which shape a vector originated from) is a ground truth cluster label. We use Adjusted Mutual Information (AMI) for this (Vinh et al., 2010), which returns 1 for a perfect partitioning (as compared to the ground truth) and a 0 for a random clustering.

While this captures the representational invariance to rotation in the embedding space, the number of disparate sample shapes to use is unclear. We therefore average over a sequentially larger set of samples, thus giving an “area-under-the-curve”-like measure of quality across sample sizes.

More formally, let \(\varGamma = \{P_1, \ldots , P_{N_I}\}\) be a set of \(|\varGamma |=N_I\) PC shape instances. Now consider a set that includes \(N_{rc}\) rotated copies of each PC: \( \widetilde{\varGamma } = \bigcup _{k=1}^{N_I} \{ \widetilde{P}_{k,1}, \ldots , \widetilde{P}_{k,N_{rc}} \} \), where \(\widetilde{P}_{k,j} = P_k \widetilde{R}_j\) for a randomly sampled \(\widetilde{R}_j\) and \(|\widetilde{\varGamma }| = N_I N_{rc}\). We then encode the set into canonical representations \(\widetilde{\varGamma }_E = \{ E_x(\widetilde{P}_{k,j})\;\forall \;\widetilde{P}_{k,j} \in \widetilde{\varGamma } \}\) and run our clustering algorithm on \(\widetilde{\varGamma }_E\) to get \(\text {AMI}(N_I)\) for a given instance set size \(N_I\). Let \({\mathcal {N}}_S\) be a set of sample sizes (we chose eight sizes, linearly spaced from 20 to \(10^3\)). Finally, the \(x_c\)-space rotational consistency metric is given by

Note that for each size we always run two clusterings with different randomly chosen sample shapes, and use their average AMI in the above equation. For implementations, we use scikit-learn (Pedregosa et al., 2012).

Dataset Details

Except for HA, all our datasets are identical to those in (Aumentado-Armstrong et al., 2019). We denote \(N_p\) as the size of the input point cloud (PC) and \(N_\lambda \) as the dimensionality of the spectrum used. In all cases, we output the same number of points as we input.

We also perform a scalar rescaling of the dataset such that the largest bounding box length is scaled down to unit length. This scale is the same across PCs in a given dataset (otherwise the change in scale would affect the spectrum for each shape differently). For augmentation and rigid orientation learning, we apply random rotations about the gravity axis (SMAL and SMPL) or the out-of-image axis (MNIST). For rotation supervision, the orientation of the raw data is treated as canonical.

1.1 MNIST

Meshes are extracted from the greyscale MNIST images, followed by area-weighted point cloud (PC) sampling. See Aumentado-Armstrong et al. (2019) for extraction details. We set \(N_p = 512\) and \(N_\lambda = 20\). The dataset has 59483 training examples and 9914 testing examples.

1.2 SMAL

Using the SMAL model (Zuffi et al., 2017), we generate set of 3D animal shapes with varying shape and pose. Using densities provided by authors, we generate 3200 shapes per animal category, Following 3D-CODED (Groueix et al., 2018), we sample poses by taking a Gaussian about the joint angles with a standard deviation of 0.2. We use 15,000 shapes for training and 1000 for testing, and set \(N_p = 1600\) and \(N_\lambda = 24\).

1.3 SMPL

Based on the SMPL model (Loper et al., 2015), we again follow the procedure in 3D-CODED (Groueix et al., 2018) to assemble a dataset of human models. This results in 20500 meshes per gender, using random samples from the SURREAL dataset (Varol et al., 2017), plus an additional 3100 meshes of “bent” people per gender, following Groueix et al. (2018). Ultimately, we get 45,992 training and 1199 testing meshes, equally divided by gender, after spectral calculations. We used \(N_p = 1600\) and \(N_\lambda = 20\).

1.4 Human-Animal (HA)

Since our model uses only geometry, we are able to simply mix SMAL and SMPL data together. The testing sets are left alone, and used separately during evaluation (for comparison to the unmixed models). For training, we use the entire SMAL training set, plus 9000 unbent and 1500 bent samples from the SMPL training set, per gender. We set \(N_p = 1600\) and \(N_\lambda = 22\).

Note that the use of a single scalar scaling factor (setting the maximum bounding box length to 1) means that SMPL models are smaller in the HA data than in the isolated SMPL dataset. We correct for this in the evaluation tables so that they are comparable (e.g., for Chamfer distances).

Results Tables

In this section, we provide the detailed results tables for the experiments discussed in Sect. 5.3. See Table 5 for measurements of VAE quality, including reconstruction, generative modelling, and disentanglement metrics. See Table 6 for pose-aware retrieval scores, with various choices of latent vector, and Fig. 22 for plots of those scores for the STD AE (as well as Fig. 19 for the FTL AE case).

Implementation Details

All models were implemented in Pytorch (Paszke et al., 2019). Notationally, let \(n = \text {dim}(x_c)\), \(n_E = \text {dim}(z_E)\), \(n_I = \text {dim}(z_I)\), and \(n_R = \text {dim}(z_R)\). For this section, we assume that the number of points \(N_p\) in a point cloud (PC) is the same for inputs and outputs (though the architectures themselves do not require this). Validation sets of size 40 (or 250 for MNIST) were set aside from the training set to observe generalization error estimates. Hyper-parameters were largely set based on qualitative examination of training outputs.

1.1 Autoencoder Details

1.1.1 AE Network Architectures

Both the STD and FTL architectures used the same network components, with slight hyper-parameter alterations. Our encoders \(E_r : {\mathbb {R}}^{3N_p} \rightarrow {\mathbb {R}}^{4}\) and \(E_x : {\mathbb {R}}^{3N_p} \rightarrow {\mathbb {R}}^{n}\) were implemented as PointNets (Qi et al., 2017) without spatial transformers, with hidden channel sizes (64, 128, 256, 512, 128) and (128, 256, 512, 836, 1024). The inputs are only the point coordinates (i.e., three channels) and the output is a four-dimensional quaternion for \(E_r\) and an n-dimensional vector for \(E_x\). The decoder \(D : {\mathbb {R}}^n \rightarrow {\mathbb {R}}^{3N_p}\) is implemented as a fully connected network, with hidden layer sizes (K, 2K, 4K), where \(K = 1200\) for STD and \(K = 1250\) for FTL. Within D, each layer consisted of a linear layer, layer normalization (Ba et al., 2016), and ReLU (except for the last, which had only a linear layer). For the MNIST dataset only, we changed the hidden layer sizes of the decoder D to be (512, 1024, 1536).

1.1.2 AE Hyper-Parameters and Loss Weights

For architectural parameters, in the FTL case, we set \(N_s = 333\), and hence \(n = 999\). For STD, we let \(n = 600\) and did not notice improvements when increasing it. For MNIST, we set \(N_s = 32\) and, for the STD case, \(n = 150\). Regarding loss parameters, we set the reconstruction loss weights to \(\alpha _C = 200\), \(\alpha _H = 1\), \(\widetilde{\gamma }_P = 100\), and \(\gamma _P = 20\), in the FTL case, altering only \(\gamma _P = 250\) in the STD case. Rotational consistency and prediction loss weights were set to \(\gamma _c = 1\) and \(\gamma _r = 10\). Regularization loss weights were \(\gamma _w = \gamma _d = 2\times 10^{-5}\). For MNIST, we altered \(\gamma _c = 50\) for STD, while we let \(\gamma _d = 10^{-6}\), \(\gamma _w = 5\times 10^{-5}\), and \(\gamma _c = 100\) for FTL.

1.1.3 AE Training Details

We train all AEs with Adam (Kingma & Ba, 2014), using an initial learning rate of 0.0005. For supervised AEs, we pretrain the rotation predictor for 2000 iterations before the rest of the network. We use a scheduler that decreases the learning rate by 5% upon hitting a loss plateau, until it reaches 0.0001. We trained MNIST, SMAL, SMPL, and HA for 200, 1250, 350, and 400 epochs, respectively, and batch sizes of 64/100 (FTL/STD) and 36/40 for MNIST and non-MNIST datasets. We set the number of rotated copies (which expands the batch sizes above) to \(N_R = 3\), except in the case of MNIST (for which we used \(N_R = 6\) in the FTL case and \(N_R = 4\) in the STD case). Finally, note that, during training, for the supervised case only, we replace the predicted rotation \(\widehat{R}\) with the real one R in all operations.

1.2 Variational Autoencoder Details

For implementation of the HFVAE, we use ProbTorch (Narayanaswamy et al., 2017). Our normalizing flow subnetwork used nflows (Durkan et al., 2020).

1.2.1 VAE Network Architectures

For the VAE, all networks except for the flow mapping \(f_\lambda \) are implemented as fully connected networks (linear-layernorm-ReLU, as above). Approximate variational posteriors have diagonal covariances. Thus, we have the following mappings with their hidden sizes:

-

The rotation distribution parameter encoders, \(\mu _R : {\mathbb {R}}^4 \rightarrow {\mathbb {R}}^{n_R}\) and \(\varSigma _R : {\mathbb {R}}^4 \rightarrow {\mathbb {R}}^{n_R}\), are implemented with an initial shared network, with hidden sizes (256, 128) into an intermediate dimensionality of 64, followed by single linear layer each.

-

The quaternion decoder \(D_q : {\mathbb {R}}^{n_R} \rightarrow {\mathbb {R}}^4\) is structured as (64, 128, 256).

-

The intrinsic and extrinsic parameter encoders, \(\mu _\xi : {\mathbb {R}}^n \rightarrow {\mathbb {R}}^{n_\xi }\) and \(\varSigma _\xi : {\mathbb {R}}^n \rightarrow {\mathbb {R}}^{n_\xi }\), for \(\xi \in \{E, I\}\), have identical network architectures across latent group types: (2000, 1600, 1200, 400) and (2000, 1200, 400), for \(\mu _\xi \) and \(\varSigma _\xi \), respectively.

-

The only mapping that is not a fully connected network is the bijective flow \(f_\lambda \) (and its inverse, \(g_\lambda \)). Recall that we use \(f_\lambda \) as \(\widetilde{\mu }\). Hence, \(f_\lambda : {\mathbb {R}}^{N_\lambda } \rightarrow {\mathbb {R}}^{n_I} \) and \(N_\lambda = n_I\). This is implemented as a normalizing flow with nine layers, where each layer consists of an affine coupling transform (Dinh et al., 2016), an activation normalization (actnorm) (Kingma & Dhariwal, 2018), and a random feature ordering permutation. The last layer does not have normalization or permutation. Each affine coupling uses an internal FC network with one hidden layer of size 400.

-

For the GDVAE++ training regime, we require a covariance parameter estimator for inference during training: \(\varSigma _\xi : {\mathbb {R}}^{N_\lambda } \rightarrow {\mathbb {R}}^{n_I}\). This is implemented via hidden layers \({\mathtt{(2n_I, 2n_I)}}\).

-

The shape decoder \(D_x : {\mathbb {R}}^{n_I + n_E} \rightarrow {\mathbb {R}}^{n} \) is an FC network with hidden layers (600, 1200, 1600, 2000).

1.2.2 VAE Hyper-Parameters and Loss Weights

Recall that the loss hyper-parameters control the following terms: the intra-group total correlation (TC) \(\beta _1\), dimension-wise KL divergence \(\beta _2\), mutual information \(\beta _3\), inter-group TC \(\beta _4\), log-likelihood reconstruction \(\omega _R\), relative quaternion reconstruction \(\omega _q\), flow likelihood \(\omega _p\), intrinsics consistency in \(z_I\)-space \(\omega _I\), intrinsics consistency in \(\lambda \)-space \(\omega _\lambda \), covariance disentanglement \(\omega _\varSigma \), and Jacobian disentanglement \(\omega _{\mathcal {J}}\).

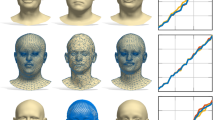

Pose-aware retrieval scores with the STD AE model. Model notation refers to the GDVAE++ model with (S) or without (U) rotation supervision, use of the PC-derived LBOS (PC; see Sect. 5.3.3), and the partial disentanglement loss ablation (NCNJ; see Sect. 5.3.4). The lighter (partially transparent) counterparts of each point corresponds to using \(\widetilde{z}_I\) instead of \(z_I\) for retrieval. We reproduce the FTL AE plots (from Fig. 19) to aid in comparison. See also Appendix Table 6 for detailed values. Compared to the FTL case, for SMPL, U performs relatively better on intrinsic scores, while S and U are relatively similar for extrinsic scores. For SMAL, we see that the extrinsic scores are generally better with the STD AE, compared to the FTL one. We also see that, in the STD case, the PCLBO scenario performs relatively better on SMAL than its S/U counterparts. Finally, we note that using the spectrum-derived latents \(\widetilde{z}_I\) are generally better, but not always (e.g., on SMPL-STD)

In all cases, we set \(n_R = 3\), \(\beta _1 = 1\), \(\beta _3 = 1\), \(\omega _p = 1\), \(\omega _{\mathcal {J}} = 200\), and \(\omega _q = 10\). See Table 7 for dataset-dependent parameters. An additional \(L_2\) weight decay was applied to all networks, with a strength of \(10^{-4}\). For the flow-only (GDVAE-FO) approach, the parameters are the same per dataset, except for \(\omega _I\) and \(\omega _\lambda \) (which were tuned more in line with original GDVAE model, in an effort to improve disentanglement). For the FO case, we set \(\omega _\lambda = 800\) and \(\omega _I\) to 0, 250, and 0, for SMAL, SMPL and HA, respectively.

1.2.3 VAE Training Details

As in the AE case, optimization is done with Adam, using a reduce-on-plateau scheduler. The initial learning rate was set to 0.0001, with a minimum of 0.00001. A batch size of 264 was used, except for MNIST, for which we used 512. The networks were trained for 25000 iterations for MNIST and 40000 iterations for all other datasets. We note that for the GDVAE++ mode only, we also cut the gradient of the \(\omega _I\) loss term from flowing through \(\widetilde{\mu }_I\) (preventing extrinsic information in \(\mu _I\) from contaminating \(\widetilde{\mu }_I\)).

Full Rotation-Space Experiments

We also provide some limited tests our method on full 3D rotations, rather than single-axis rotations. We find that the invariance properties are severely reduced in this more difficult scenario. In particular, we train two AEs on SMAL, both using the FTL architecture and allowing arbitrary rotations. We try both the supervised (S) and unsupervised (U) cases.

Results are shown in Table 8. We see that both reconstruction and rotation invariance are worsened; however, note that (1) \({\mathcal {C}}_{\text {3D}}\) is of a smaller magnitude than \(d_C(P,\widehat{P})\), suggesting the presence of some rotation invariance, and (2) \({\mathcal {C}}_X\) are larger than zero (the expected value if there were no latent structure in the space). Corroborating this latter point, in Fig. 23, we can qualitatively see that the tight latent clustering of rotated objects (as in Fig. 11) is no longer present, but that there is still some structure in the space, by which same-identity objects stay nearby under rotation.

We utilized slightly different hyper-parameters compared to the standard AE case. In particular, for S, we set the batch size to \(B=3\) and \(N_R = 48\) trained for 24 epochs; for U, we set \(B=2\) and \(N_R = 60\). Decoder layers were set via \(K=1400\) (see Sect. F.1). Loss weights were modified to \(\gamma _c = 8000\), \(\widetilde{\gamma }_P = 50/7\), \(\gamma _P = 10/7\) (S case) and \(\gamma _c = 500\), \(\widetilde{\gamma }_P = 400/7\), \(\gamma _P = 80/7\) (U case), to compensate for the larger rotational space.

Mesh Experiments

1.1 AMASS Experiments

1.1.1 AE Settings

Following other works (e.g., Marin et al., 2021; Tan et al., 2018), we use a fully connected AE for the AMASS meshes. In particular, the encoder and decoder have hidden layers (1024, 512) and (512, 1024). A latent dimension of \(\dim (x) = 128\) was used. Both networks use the SELU activation (Klambauer et al., 2017) and no normalization. Note that AMASS shapes use the SMPL mesh, with \(N_{\text {SMPL}} = 6890\) nodes.

Qualitative visualization of latent AE space with respect to full 3D rotation. Similar to Fig. 11, we show a t-SNE of the latent embeddings of random shapes under random rotations (we show more rotations as the set of rotations is now much larger). Heuristically, we can see there is some clustering structure in the space, but it does not have the tight invariance of the single-axis case. We show the supervised (S) case on the left and unsupervised (U) case on the right. See Sect. G for additional details

The loss utilized for training modifies only \({\mathcal {L}}_P\), which (in the FTL case) is given by

where \(D_V(V_1,V_2)\) is the vertex-to-vertex mean squared error between the input nodal coordinate sets. Other loss terms remain the same as in the PC case. We set \(\gamma _P = 5\) and use weight decay with \(\gamma _w = 10^{-3}\). For simplicity, following Zhou et al. (2020), we include the global rotation in \(z_E\) rather than using a separate latent variable. Note that the input and output size is much larger for AMASS than for the PC case (6890 vs. 1600 points). The same learning setup was used as in the PC case, except we apply AdamW (Loshchilov & Hutter, 2017) with a learning rate of \(10^{-4}\) and batch size of 100. We run for 250 epochs, using the same train, validation, and test splits as USPD. Notice that, while the AE uses the identical meshing of the input for the reconstruction loss, it does not perform any disentanglement. The VAE, which does perform disentanglement, uses only the raw x values (and does not update the AE), without correspondence or label information.

1.1.2 VAE Settings

We slightly modify the architecture of the VAE, removing batch normalization and replacing ReLU with SELU (as in the AE). We also increase the layer sizes: the flow network is given 10 layers, the encoders that predict \(\mu _E\) and \(\mu _I\) use hidden layers (2400, 2000, 1600, 800), and the decoder uses (2000, 1800, 1600, 800) for hidden layers; other networks are unchanged. We then use following hyper-parameters, with the SMPL (PC) settings as the default unless otherwise mentioned \( \omega _R = 750 \), \( \beta _2 = 5 \), \( \beta _4 = 500 \), \( \omega _\varSigma = 5 \) \( \omega _{\mathcal {J}} = 50 \), \( \omega _I = \omega _\lambda = 1000\), \( n_E = 18 \), and \( n_I = 9 \). No weight decay was used. We trained with a batch size of 2200 for 40K iterations, starting from initial learning rate \(5\times 10^{-5}\).

1.1.3 Empirical Variation

We also compute variabilities on our mesh experiments (see Sect. 5.4.1), to give an indication of the variability in the results for our method. For pose transfers, we obtain a standard deviation of 8.08. Table 9 shows the standard errors of the mean for the pose-aware retrieval task. In general, the standard deviations are fairly high. However, following USPD, the held-out data sets from AMASS are of size 10,733 for pose transfer and 11,738 for retrieval, meaning the standard error of the mean is relatively small.

1.2 CoMA Experiments

1.2.1 Dataset

We use the same data as in Marin et al. (2021), namely 1853 training meshes with 100 faces from an unseen subject for the shape-from-spectrum recovery test set. We also use their data and dimensionality for the LBOS eigenvalues, so we set \(\dim (\lambda ) = 30\), and treat the meshes at full resolution (3931 vertices and 7800 faces).

1.2.2 AE Settings

Following Marin et al. (2021), we use the same fully connected AE to derive the initial latent representation x: tanh was used as the non-linearity, no normalization was applied, and the hidden layers were given by (300, 200, 30, 200) (with input and output in \({\mathbb {R}}^{3|V|}\)), with \(\dim (x) = 30\). The reconstruction loss was the vertex-to-vertex MSE, with weight \(\gamma _P = 5\). We set the weight decay to \( \gamma _w = 0.01 \), the radial regularization to \( \gamma _d = 0 \), and the batch size to 16. Since this dataset has no orientation changes, we fix our rotation prediction to be identity.

1.2.3 VAE Settings

We use the same VAE architecture as the PC experiments. Only the hyper-parameters and training settings are altered, which we leave at the SMPL settings by default, except for the following changes (see also Sect. F.2.2 for details): \( \omega _R = 250 \), \( \beta _2 = 5 \), \( \beta _4 = 250 \), \( \omega _\varSigma = 100 \) \( \omega _{\mathcal {J}} = 250 \), \( \omega _I = \omega _\lambda = 1000\), \( n_E = 1 \), and \( n_I = 30 \). A lighter weight decay of \(10^{-6}\) was used. We trained with a batch size of 720 for 30K iterations, starting from initial learning rate \(5\times 10^{-5}\). While this setup works well for the shape-from-spectrum task, and it mimics the \(\dim (\lambda ) = 30\) setting from Marin et al. (2021), we found qualitatively that disentangled interpolations could be improved by altering these settings to \(n_E = 4\), \(n_I = 12\), \(\omega _R = 50\), and \(\beta _4 = \omega _J = 500\), which we use for Fig. 21. This is likely due to facial deformations not being exactly isometric; hence, using too high LBOS dimensionality (and too low \(\dim (z_E)\)) leads to \(z_I\) capturing information we might not expect to be intrinsic (but improving shape-from-spectrum performance).

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Aumentado-Armstrong, T., Tsogkas, S., Dickinson, S. et al. Disentangling Geometric Deformation Spaces in Generative Latent Shape Models. Int J Comput Vis 131, 1611–1641 (2023). https://doi.org/10.1007/s11263-023-01750-9

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11263-023-01750-9