Abstract

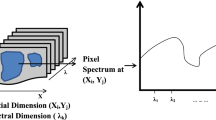

Hyperspectral images (HSIs) have significant advantages over more traditional image types for a variety of computer vision applications due to the extra information available. The practical reality of capturing and transmitting HSIs, however, means that they often exhibit large amounts of noise or are undersampled to reduce the data volume. Methods for combating such image corruption are thus critical to many HSI applications. Here we devise a novel cluster sparsity field (CSF) based HSI reconstruction framework which explicitly models both the intrinsic correlation between measurements within the spectrum of a particular pixel and the similarity between pixels due to the spatial structure of the HSI. These two priors have previously been shown to be effective, but have always been considered separately. By dividing the pixels of the HSI into a group of spatial clusters on the basis of their spectral characteristics, we define CSF, a Markov random field based prior. In CSF, a structured sparsity potential models the correlation between measurements within each spectrum, and a graph structure potential models the similarity between pixels in each spatial cluster. We then integrate CSF prior learning and image reconstruction into a unified variational framework for optimization, which makes the CSF prior image-specific and robust to noise. It also yields more accurate image reconstruction than existing HSI reconstruction methods, thus combating the effects of noise corruption or undersampling. Extensive experiments on HSI denoising and HSI compressive sensing demonstrate the effectiveness of the proposed method.

Notes

It should be noted that other clustering methods could also be used instead of the K-means.

The derivation can be found in the Appendix.
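The clustering step mentioned in the first note can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `kmeans_spectra`, the toy pixel values, the iteration count, and the random initialization are all assumptions made for illustration. Each pixel is represented by its spectrum (one value per band), and plain K-means groups pixels whose spectra are close in Euclidean distance.

```python
import random


def kmeans_spectra(spectra, k, iters=20, seed=0):
    """Group pixel spectra (lists of floats) into k clusters with plain K-means.

    Hypothetical helper for illustration only; initialization and stopping
    rule are assumptions, not taken from the paper.
    """
    rng = random.Random(seed)
    # Initialize the centers with k distinct pixels chosen at random.
    centers = [list(s) for s in rng.sample(spectra, k)]
    labels = [0] * len(spectra)
    for _ in range(iters):
        # Assignment step: each pixel joins the nearest center
        # (squared Euclidean distance between spectra).
        for i, s in enumerate(spectra):
            labels[i] = min(
                range(k),
                key=lambda c: sum((a - b) ** 2 for a, b in zip(s, centers[c])),
            )
        # Update step: each center becomes the mean spectrum of its members.
        for c in range(k):
            members = [spectra[i] for i in range(len(spectra)) if labels[i] == c]
            if members:
                centers[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels, centers


# Toy data: six pixels with three spectral bands each (made-up values),
# three "dark" spectra and three "bright" spectra.
pixels = [
    [0.10, 0.20, 0.10],
    [0.12, 0.19, 0.11],
    [0.90, 0.80, 0.85],
    [0.88, 0.82, 0.90],
    [0.11, 0.21, 0.09],
    [0.91, 0.79, 0.86],
]
labels, centers = kmeans_spectra(pixels, k=2)
```

In this toy case the dark and bright spectra end up in separate clusters. As the note says, any other clustering method that groups pixels by spectral similarity could be substituted.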


Acknowledgements

This work is supported in part by the National Natural Science Foundation of China (Nos. 61671385, 61231016, 61571354), Natural Science Basis Research Plan in Shaanxi Province of China (No. 2017JM6021), Innovation Foundation for Doctoral Dissertation of Northwestern Polytechnical University (No. CX201521) and Australian Research Council Grant (No. FT120100969). Lei Zhang’s contribution was made when he was a visiting student at the University of Adelaide.

Additional information

Communicated by Srinivasa Narasimhan.

Lei Zhang and Wei Wei have contributed equally to this work.

Appendices

Appendix A \({\varvec{\Theta }}\)-subproblem

In this appendix, we give the detailed derivation for solving the \({\varvec{\Theta }}\)-subproblem with an alternating minimization scheme, which reduces the \({\varvec{\Theta }}\)-subproblem to four simpler subproblems over \({\varvec{\gamma }}_k\), \({\varvec{\varpi }}_k\), \({\varvec{\eta }}_k\), and \({\varvec{\nu }}_k\), respectively.

A.1 Optimization for \({\varvec{\gamma }}_k\)

Removing the irrelevant variables, we can obtain the subproblem over \({\varvec{\gamma }}_k\) as

Since the term \(f({\varvec{\gamma }}^{-1}_k) = \log |{\varvec{\Gamma }}^{-1}_k + {{\varvec{\Sigma }}}^{-1}_k + {\varvec{\Phi }}^T\mathbf{{A}}^T{{\varvec{\Sigma }}}^{-1}_n{\mathbf{A}{\varvec{\Phi }}}|\), viewed as a function of \({\varvec{\gamma }}^{-1}_k = [\gamma ^{-1}_{1k},\ldots ,\gamma ^{-1}_{n_dk}]^T\), makes Eq. (28) nonconvex, we instead seek a tight upper bound of \(f({\varvec{\gamma }}^{-1}_k)\) as

where \(f^*(\mathbf{z})\) is the concave conjugate function of \(f({\varvec{\gamma }}^{-1}_k)\) and \(\mathbf{z} = [z_1,\ldots ,z_{n_d}]^T\). Equality in Eq. (29) holds iff

Substituting the upper bound in Eq. (29) into Eq. (28) and removing the irrelevant variables, the subproblem over \({\varvec{\gamma }}_k\) can be simplified as

where \(\overline{y}_i\) is the i-th entry of \(\overline{\mathbf{y}} = \mathbf {diag}(\mathbf{{Y}}_k\mathbf{{Y}}^T_k) = [\overline{y}_1,\ldots ,\overline{y}_{n_d}]^T\). Since the variance \({\varvec{\gamma }}_k \ge 0\), this convex optimization over \({\varvec{\gamma }}_k\) gives a closed form solution over \(\gamma _{jk}\) as
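For reference, the bounding step used in this subsection follows the standard concave-duality construction. The displayed equations are not reproduced in this text, so the following is only a sketch of the generic form and not a restatement of the paper's Eq. (29). It assumes that \({\varvec{\Gamma }}_k = \mathbf {diag}({\varvec{\gamma }}_k)\) and writes \(\mathbf{B}_k = {{\varvec{\Sigma }}}^{-1}_k + {\varvec{\Phi }}^T\mathbf{{A}}^T{{\varvec{\Sigma }}}^{-1}_n{\mathbf{A}{\varvec{\Phi }}}\) for the terms that do not depend on \({\varvec{\gamma }}_k\) (\(\mathbf{B}_k\) is notation introduced here for illustration). Because the log-determinant of a positive definite matrix that is affine in \({\varvec{\gamma }}^{-1}_k\) is concave in \({\varvec{\gamma }}^{-1}_k\), it is bounded above by any of its tangent planes:

\[
f({\varvec{\gamma }}^{-1}_k) \le \mathbf{z}^T{\varvec{\gamma }}^{-1}_k - f^*(\mathbf{z}), \qquad
f^*(\mathbf{z}) = \min _{{\varvec{\gamma }}^{-1}_k} \left[ \mathbf{z}^T{\varvec{\gamma }}^{-1}_k - f({\varvec{\gamma }}^{-1}_k) \right] ,
\]

\[
\text{with equality iff}\quad z_j = \frac{\partial f}{\partial \gamma ^{-1}_{jk}} = \left[ \left( {\varvec{\Gamma }}^{-1}_k + \mathbf{B}_k \right) ^{-1} \right] _{jj}, \quad j = 1,\ldots ,n_d .
\]

Under these assumptions the bound is linear in \({\varvec{\gamma }}^{-1}_k\) for fixed \(\mathbf{z}\), which is what allows the subproblem to separate across the entries \(\gamma _{jk}\).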

A.2 Optimization for \({\varvec{\varpi }}_k\)

Given \({\varvec{\gamma }}_k\), \({\varvec{\varpi }}_k\) can be estimated by solving the problem

This problem admits a closed-form solution for \(\varpi _{jk}\) as

A.3 Optimization for \({\varvec{\eta }}_k\)

Similar to the optimization of \({\varvec{\gamma }}_k\), the subproblem over \({\varvec{\eta }}_k\) can be formulated as

Let \(\phi ({\varvec{\eta }}^{-1}_k) = \log | {\varvec{\Gamma }}^{-1}_k + {{\varvec{\Sigma }}}^{-1}_k + {\varvec{\Phi }}^T\mathbf{{A}}^T{{\varvec{\Sigma }}}^{-1}_n{\mathbf{A}{\varvec{\Phi }}}|\) with \({\varvec{\eta }}^{-1}_k = [\eta ^{-1}_{1k},\ldots ,\eta ^{-1}_{n_dk}]^T\). We have the upper bound of \(\phi ({\varvec{\eta }}^{-1}_k)\)

where \(\phi ^*({\varvec{\alpha }})\) is the concave conjugate function with \({\varvec{\alpha }} = [\alpha _1,\ldots ,\alpha _{n_d}]^T\). The equality of this upper bound holds iff

Substituting this upper bound into Eq. (35), we have a simpler subproblem over \({\varvec{\eta }}_k\) as

where \(\widehat{y}_j\) is the j-th entry of \(\widehat{\mathbf{y}} =\mathbf {diag}[(\mathbf{{Y}}_k - \mathbf{{M}}_k)(\mathbf{{Y}}_k - \mathbf{{M}}_k)^T] = [\widehat{y}_1,\ldots ,\widehat{y}_{n_d}]^T\). This convex optimization problem gives a closed form solution over \(\eta _{jk}\) as

A.4 Optimization for \({\varvec{\nu }}_k\)

Given \({\varvec{\eta }}_k\), we can estimate \({\varvec{\nu }}_k\) by solving the following problem

This convex optimization problem yields a closed form solution as

Appendix B \({\varvec{\lambda }}\)-subproblem

Finally, the \({\varvec{\lambda }}\)-subproblem can be formulated as

According to the following algebraic identity

the subproblem in Eq. (42) simplifies to

Letting \(g({\varvec{\lambda }}) = \log |{{\varvec{\Sigma }}}_n + {\mathbf{A}{\varvec{\Phi }}}({\varvec{\Gamma }}^{-1}_k + {{\varvec{\Sigma }}}^{-1}_k)^{-1}{\varvec{\Phi }}^T\mathbf{{A}}^T|\), we obtain its upper bound as

where \(g^*({\varvec{\beta }}_k)\) is the corresponding concave conjugate function with \({\varvec{\beta }}_k = [\beta _{1k},\ldots ,\beta _{n_bk}]^T\). Equality in Eq. (45) holds only when

Substituting this upper bound into Eq. (44), the subproblem over \({\varvec{\lambda }}\) further simplifies to

This amounts to the following optimization over each component \(\lambda _j\) of \({\varvec{\lambda }}\) as

where \(\overline{q}_j\) is the jth component of \({\overline{\mathbf{q}}} = \sum \nolimits _k \mathbf {diag}[({\mathbf{A}{\varvec{\Phi }}}{} \mathbf{{Y}}_k - \mathbf{{F}}_k)({\mathbf{A}{\varvec{\Phi }}}{} \mathbf{{Y}}_k - \mathbf{{F}}_k)^T] = [\overline{q}_1,\ldots ,\overline{q}_{n_b}]^T\). This convex optimization gives a closed form solution as
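The algebraic identity invoked earlier in this appendix is not reproduced in this text. One standard identity that relates the log-determinant in \(g({\varvec{\lambda }})\) to the log-determinant used in Appendix A is the matrix determinant lemma, \(|{{\varvec{\Sigma }}}_n + \mathbf{B}\mathbf{C}\mathbf{B}^T| = |\mathbf{C}^{-1} + \mathbf{B}^T{{\varvec{\Sigma }}}^{-1}_n\mathbf{B}|\,|\mathbf{C}|\,|{{\varvec{\Sigma }}}_n|\). Whether this is exactly the identity the authors use is an assumption. The sketch below checks the lemma numerically on made-up \(2\times 2\) matrices, where `B` stands in for \({\mathbf{A}{\varvec{\Phi }}}\) and `C` for \(({\varvec{\Gamma }}^{-1}_k + {{\varvec{\Sigma }}}^{-1}_k)^{-1}\); all names and values are illustrative.

```python
import math


def matmul(X, Y):
    """Product of two matrices stored as lists of rows."""
    return [
        [sum(X[i][t] * Y[t][j] for t in range(len(Y))) for j in range(len(Y[0]))]
        for i in range(len(X))
    ]


def transpose(X):
    return [list(row) for row in zip(*X)]


def add(X, Y):
    return [[a + b for a, b in zip(rx, ry)] for rx, ry in zip(X, Y)]


def det2(X):
    """Determinant of a 2x2 matrix."""
    return X[0][0] * X[1][1] - X[0][1] * X[1][0]


def inv2(X):
    """Inverse of a 2x2 matrix with nonzero determinant."""
    d = det2(X)
    return [[X[1][1] / d, -X[0][1] / d], [-X[1][0] / d, X[0][0] / d]]


# Made-up values: a diagonal noise covariance, a mixing matrix,
# and a symmetric positive definite prior covariance.
sigma_n = [[0.5, 0.0], [0.0, 2.0]]
B = [[1.0, 2.0], [0.5, -1.0]]
C = [[2.0, 0.3], [0.3, 1.0]]

# Left-hand side: log|Sigma_n + B C B^T|
lhs = math.log(det2(add(sigma_n, matmul(matmul(B, C), transpose(B)))))

# Right-hand side: log|C^{-1} + B^T Sigma_n^{-1} B| + log|C| + log|Sigma_n|
inner = add(inv2(C), matmul(matmul(transpose(B), inv2(sigma_n)), B))
rhs = math.log(det2(inner)) + math.log(det2(C)) + math.log(det2(sigma_n))

print(abs(lhs - rhs) < 1e-9)
```

The lemma holds for non-square `B` as well; the \(2\times 2\) case is used here only to keep the helper functions short.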

Cite this article

Zhang, L., Wei, W., Zhang, Y. et al. Cluster Sparsity Field: An Internal Hyperspectral Imagery Prior for Reconstruction. Int J Comput Vis 126, 797–821 (2018). https://doi.org/10.1007/s11263-018-1080-8

DOI: https://doi.org/10.1007/s11263-018-1080-8