Abstract

Helmholtz Stereopsis is a powerful technique for reconstruction of scenes with arbitrary reflectance properties. However, previous formulations have been limited to static objects due to the requirement to sequentially capture reciprocal image pairs (i.e. two images with the camera and light source positions mutually interchanged). In this paper, we propose colour Helmholtz Stereopsis—a novel framework for Helmholtz Stereopsis based on wavelength multiplexing. To address the new set of challenges introduced by multispectral data acquisition, the proposed Colour Helmholtz Stereopsis pipeline uniquely combines a tailored photometric calibration for multiple camera/light source pairs, a novel procedure for spatio-temporal surface chromaticity calibration and a state-of-the-art Bayesian formulation necessary for accurate reconstruction from a minimal number of reciprocal pairs. In this framework, reflectance is spatially unconstrained both in terms of its chromaticity and the directional component dependent on the illumination incidence and viewing angles. The proposed approach for the first time enables modelling of dynamic scenes with arbitrary unknown and spatially varying reflectance using a practical acquisition set-up consisting of a small number of cameras and light sources. Experimental results demonstrate the accuracy and flexibility of the technique on a variety of static and dynamic scenes with arbitrary unknown BRDF and chromaticity ranging from uniform to arbitrary and spatially varying.


1 Introduction

3D reconstruction has been an active research area in computer vision over the past decades owing to high demand in numerous industrial applications. For example, modern heritage preservation projects set high standards for geometric accuracy on challenging data, striving for sub-millimetre accuracy and impeccable global shape. Real objects often have unknown, complex surface reflectance that is non-Lambertian and possibly spatially varying. There is also much interest in capturing dynamic, often non-rigid, deformation. This paper tackles the combined challenge of dynamic scene reconstruction with complex, arbitrary, spatially varying reflectance properties.

Shape-from-Silhouette (Baumgard 1974; Matusik et al. 2000; Lazebnik et al. 2007; Liang and Wong 2010) is a classical geometric technique that is independent of surface reflectance. However, the resolution of structural concavities in the visual hulls (Laurentini 1994) is poor compared to intensity-based methods. The well-established intensity-based methods for 3D geometry reconstruction, such as binocular (Scharstein and Szeliski 2002) and multi-view (Seitz et al. 2006) conventional stereo as well as photometric stereo (Woodham 1989; Basri et al. 2007; Higo et al. 2010), have demonstrated remarkable sub-millimetre geometric accuracies on tailored data. The known limitation of both families of methods, however, is their inherent inability to deal with unknown surface reflectance. Conventional stereo requires a Lambertian (purely diffuse) bi-directional reflectance distribution function (BRDF) and is unable to establish feature matches where surface specularities occur. Photometric stereo, on the other hand, requires a priori knowledge of the BRDF, which must be acquired in a pre-processing step by a cumbersome and often insufficiently accurate method. There has been work on combining the global accuracy of conventional stereo with the high-frequency detail obtained from shading cues (Ahmed et al. 2008; Wu et al. 2011) or photometric stereo (Vlasic et al. 2009; Anderson et al. 2011) to increase modelling accuracy. However, the inherent limitations due to complex reflectance remain. To our knowledge, Helmholtz Stereopsis (HS) is the only technique in existence capable of accurately modelling surfaces with an arbitrary BRDF. The technique’s acquisition set-up, proposed in the seminal paper by Zickler et al. (2002), features reciprocal image pairs characterised by the mutually interchanged camera and light source. The reciprocity at acquisition allows a depth constraint to be formulated with the dependence on the BRDF factored out, and hence expands the range of applicability.

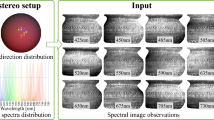

Standard HS has been shown to achieve excellent results for rigid scenes with complex a priori unknown reflectance. However, standard HS does not scale to dynamic scenes because the required swap of the camera and light source prevents simultaneous acquisition of the minimum of three reciprocal pairs. In this paper, we propose Colour Helmholtz Stereopsis (CL HS), where wavelength multiplexing is used to enable simultaneous capture of reciprocal pairs. Signal separation is achieved by using three cameras and three coloured light sources and treating each camera channel as a separate image (Fig. 5). The novel approach permits instantaneous capture of three reciprocal pairs, but it also introduces a new set of challenges: the acute need for photometric calibration of the capturing equipment, signal dependence on surface chromaticity and the ambiguity introduced by the drastically reduced number of reciprocal pairs per point.

We address all these challenges by developing a complete practical pipeline for CL HS. The pipeline includes a generalisation of the white light photometric calibration procedure from Jankó et al. (2004) to accommodate the chromatic characteristics of the cameras and multispectral light sources. As surface colour affects inter-channel compatibility, we propose a novel method for surface chromaticity estimation. Spatially varying chromaticity in dynamic scenes is addressed by a procedure that propagates statically estimated characteristics throughout the sequence. Together, the processes of chromaticity estimation and propagation define a procedure for spatio-temporal chromatic calibration of the surface and permit chromatically unconstrained dynamic scene reconstruction. The freedom of the directional behaviour of the surface BRDF (i.e. unconstrained surface material) is inherently given, as the reflectance-independent HS constraint is used for reconstruction. Further, to cope with the reduced number of reciprocal pairs, we incorporate the state-of-the-art Bayesian HS formulation from Roubtsova and Guillemaut (2014a) into the pipeline. To the best of our knowledge, our CL HS pipeline is the first approach capable of reconstructing dynamic scenes with arbitrary spatially varying reflectance.

2 Related Work

The dominance of intensity-based reconstruction methods in terms of accuracy and practicality is evident from their ubiquitous use. Their conventional weakness is the reliance on the knowledge of surface-specific BRDF to separate geometry from reflectance in the sampled intensity response. The original formulations of both conventional and photometric stereo assume Lambertian reflectance. There has been noteworthy work aiming to generalise both techniques to more realistic cases.

Jin and colleagues (Jin et al. 2003, 2005) explicitly model non-Lambertian behaviour of scenes for conventional stereo at the expense of increased computational complexity. They propose a novel model-to-image discrepancy measure for non-Lambertian surfaces based on the more generic Ward’s reflectance model (Ward 1992) and formulated via the radiance tensor containing multiview reflectance observations per surface patch. In Oxholm and Nishino (2014), instead of explicit reflectance modelling, the approach aims for joint global estimation of shape and reflectance properties from a set of multiview images. Although the idea of joint inference of unknown parameters (i.e. shape and reflectance) based on observable characteristics (i.e. intensity and the illumination conditions) is plausible, the two estimates will remain inherently linked and the shape cannot be expected to be more accurate than the reflectance estimate whose full complexity is difficult to model with a finite number of images. Although conceptually interesting, non-Lambertian multiview conventional stereo methods comparatively do not deliver particularly accurate or high resolution results.

The topic of unconstrained reflectance has attracted even more attention in photometric stereo as unlike conventional stereo the technique is not fundamentally limited by the Lambertian assumption. One early attempt was the so-called photometric stereo by example (Hertzmann and Seitz 2005). The idea is to find relationships between surface normals and observed reflectance behaviour by sampling reference objects of known geometry under different illumination. The test object’s material is considered a linear combination of reference materials. With the assumption not being fundamental, the work cannot claim to have universally solved reflectance model dependence. In a more principled manner, Vogiatzis and Hernández (2012) address the difficulty of non-Lambertian reflectance in photometric stereo by fitting a Phong model (Phong 1975) based on the outliers from the Lambertian model estimation procedure. For problem tractability, the assumptions of monochromaticity and (spatially and spectrally) constant specular model parameters are made. In the formulation with the more complex Phong reflectance model, the model’s invertibility issues are tackled using the mentioned assumptions. The formulation is innovatively non-Lambertian, but the scene reflectance model allowed by the method is far from arbitrary but in fact limited by the many assumptions made (Phong model, monochromaticity, constant specular components parameters etc.) in pursuit of tractability and well-posedness of the mathematical formulation of the photometric stereo problem.

The aforementioned generalisations of conventional and photometric stereo to specific more complex models (Ward, Phong etc.) are not as fundamentally principled as the techniques based on generic, or at the very least very common, properties of the entire BRDF class. Specifically, the latter methods exploit various symmetries in the BRDF behaviour. In the photometric stereo approach of Holroyd et al. (2008), common half-vector symmetries are utilised. The symmetries extend to some anisotropic microfacet-based models (Cook and Torrance 1982; Ashikmin et al. 2000; Ngan et al. 2005) but do not cover the entire class of physically valid BRDFs: the method has been found to fail in the face of retro-reflection and on surfaces with asymmetric micro-geometries. Further, there has also been work (Alldrin and Zickler 2008; Zhou et al. 2013) on exploiting isotropy (i.e. rotation invariance about the normal), a symmetry which, although not generic, is common to the majority of real-life reflectance models. The assumption of isotropy in photometric stereo allows the construction of the so-called iso-depth contours where all points are equidistant from the image plane. In Zhou et al. (2013), impressively accurate geometries of non-Lambertian surfaces are reconstructed by propagation of sparse surface points obtained by structure-from-motion along the constructed iso-depth contours. Relying on isotropic reflectance to build continuous iso-depth contours, these methods will also be sensitive to cast shadows and inter-reflections, not to mention the obvious requirement of an isotropic, although otherwise arbitrary, BRDF. In Tan et al. (2011), in addition to the wide-spread isotropy property of the BRDF, its generic symmetry of reciprocity is employed in the context of calibrated and uncalibrated photometric stereo. Although clearly a step towards generalisation to a wider class of BRDFs, the reliance of the proposed system on non-generic isotropy through its joint constraints with reciprocity still imposes limitations on the type of reflectance. To the best of our knowledge, no photometric stereo algorithm based solely on generic symmetries of the BRDF has ever been proposed.

In contrast, the generic reciprocity is the sole core underlying symmetry in an independent reconstruction technique of Helmholtz Stereopsis (HS). The use of the reciprocity symmetry exclusively makes HS the only intensity-based technique fundamentally independent of the BRDF. The subsequent development of HS, after its introduction by Zickler et al. (2002), included work on extensions for wider applicability and increased geometric accuracy. Guillemaut et al. (2004) propose modifications for accurate geometric reconstruction of highly textured surfaces by HS. In Guillemaut et al. (2008), a more physically meaningful HS constraint resulting in a Maximum Likelihood (ML) surface is formulated. In our recent work (Roubtsova and Guillemaut 2014a, 2015) Bayesian formulation of HS with a tailored prior jointly optimising depth and normal information is shown to produce superior results to the original ML formulation in Zickler et al. (2002). Variational approaches optimising over the entire surface (Delaunoy et al. 2010; Weinmann et al. 2012) have been proposed in order to extend HS to full 3D reconstruction. In Weinmann et al. (2012) HS is aided by a structured light technique to identify a consistent point set defining the reconstruction volume.

A major limitation of HS has always been its controlled set-up and the slow acquisition speed. Some of the impracticalities preventing the reconstruction technique from gaining wider popularity are addressed by the HS inventors in the follow-up papers. Firstly, Zickler et al. (2003) propose a binocular variant of HS where geometry is reconstructed from a single reciprocal pair by a differential approach. A partial differential equation of depth as a function of surface coordinates with prior initialisation produces a family of solutions, the ambiguity of which is resolved through a multi-pass optimisation. Although an excellent example of applied optimisation, binocular HS simplifies HS acquisition at the cost of vastly increased computational complexity and introduced reconstruction ambiguities. Another paper by Zickler (2006) addresses automatic online geometric calibration of a HS set-up using stable regions of interest: the texture-based and the inherent to HS specularity-based features. The paper proposes a method to avoid pre-calibration of the set-up but does not deal with the bottleneck issue of tedious sequential image acquisition limiting the scope of the technique to static scenes.

The same paper also touches upon automatic radiometric calibration of HS set-up using the inherent specularity-based features. In Zickler (2006), the definition of radiometric calibration is limited to measuring relative intensities of isotropic light sources. Provided the assumptions of equal camera responses and no spatial source intensity variation hold, such limited calibration is sufficient. A more general radiometric set-up calibration for HS, which does not rely on these assumptions, was proposed by Jankó et al. (2004). Using a sequence of localised calibration planes Jankó et al. calibrate for a spatially varying joint parameter describing sensitivity and radiance of a collocated camera and light source pair.

Unlike Zickler et al. (2003), who modify only the reconstruction algorithm to simplify HS, we propose a complete novel pipeline tailored for processing HS input that is, for the first time, acquired using wavelength multiplexing. Simultaneous multi-channel acquisition for dynamic scene reconstruction is known from the well-established Colour Photometric Stereo (CL PS). In Hernández et al. (2007) and its later extension Brostow et al. (2011), CL PS is shown to produce impressive reconstructions of dynamic scenes with untextured (uniform albedo) objects, specifically cloth deformation and facial expression sequences. By enforcing spatio-temporal smoothness, Jankó et al. (2010) extend the technique to textured surfaces, hence allowing spatial reflectance non-uniformity due to its chromaticity component. Both works, however, are limited to Lambertian reflectance at each surface point, regardless of whether chromaticity is uniform or allowed to vary spatially across the surface. The applicability of the extension of CL PS to the non-Lambertian case from Vogiatzis and Hernández (2012) is limited because it relies on the accuracy of the data-driven fitting of a specular model whose variability is heavily constrained for mathematical tractability.

In contrast to the discussed state-of-the-art Colour Photometric Stereo techniques, the proposed Colour Helmholtz Stereopsis (CL HS) is valid for any arbitrary spatially varying BRDF. In this paper, we decouple the chromaticity component of the BRDF from the component dependent only on the illumination incidence and viewing angles (henceforth referred to as the directional component). As in standard HS, the directional component of BRDF in CL HS is made irrelevant by virtue of reciprocity at acquisition. In order to deal with the non-uniformity of the chromaticity component of the BRDF, we estimate chromaticity by integrating a novel calibration procedure into the pipeline. This builds upon our previous work (Roubtsova and Guillemaut 2014b, 2015) where static per-pixel surface chromaticity calibration permitted spatially varying chromaticity in static single-shot reconstructions only and dynamic scene reconstruction was limited to datasets with spatially uniform known chromaticity. The current work generalises the scope to dynamic scenes with spatially varying chromaticity by introducing an additional procedure for temporal propagation of surface chromaticity estimated in a reference frame. Spatio-temporal chromaticity calibration allows any arbitrary a priori unknown chromatic characteristics of the surface. Furthermore, likewise for inter-channel signal consistency at acquisition, in the proposed pipeline we generalise the previous work in photometric calibration of Jankó et al. (2004) to multiple multi-chromatic cameras and light sources. Hence by combining the unique properties of HS with a novel multi-spectral acquisition set-up and calibration procedures we obtain a method uniquely permitting dynamic scene reconstruction with fully arbitrary spatially varying BRDFs i.e. unconstrained in terms of both the directional and chromaticity components.

3 White Light Helmholtz Stereopsis

Since this paper proposes a multi-spectral variant of Helmholtz Stereopsis (HS), as a reference we first of all introduce and formalise the theory of traditional White Light Helmholtz Stereopsis (WL HS) together with its calibration procedure from Jankó et al. (2004). Subsequently, we shall use the same formalisation framework to present our novel Colour Helmholtz Stereopsis (CL HS) formulation.

To introduce HS, let us define a perspective camera \(\mathcal {C}\) and a light source \(\mathcal {S}\) centred at \(\mathbf {c_{1}}\) and \(\mathbf {c_{2}}\) respectively. In standard HS reciprocal image pairs are acquired with any \(\mathcal {C}\) and \(\mathcal {S}\) respectively first at locations \(\mathbf {c_{1}}\) and \(\mathbf {c_{2}}\) and then at \(\mathbf {c_{2}}\) and \(\mathbf {c_{1}}\) i.e. with the camera and light source mutually interchanged (Fig. 1). As in Jankó et al. (2004) we define the concept of Helmholtz camera \(\mathcal {R}\) as a collocated camera and light source at some position \(\mathbf {c}\). Traditionally, the collocation is virtual by either the camera/light source swap or by using a turntable to move the scene relative to the set-up (Fig. 1). Let us define virtually collocated \((\mathcal {C},\mathcal {S})\) pairs, \(\mathcal {R}_{1} = (\mathcal {C}_{1},\mathcal {S}_{1})\) and \(\mathcal {R}_{2}= (\mathcal {C}_{2},\mathcal {S}_{2})\) located at \(\mathbf {c_{1}}\) and \(\mathbf {c_{2}}\) respectively. The arrangement facilitates Helmholtz reciprocity as one image of the reciprocal pair is obtained with \(\mathcal {C}_{1}\) and \(\mathcal {S}_{2}\) at \(\mathbf {c_{1}}\) and \(\mathbf {c_{2}}\) and the other with \(\mathcal {C}_{2}\) and \(\mathcal {S}_{1}\) at \(\mathbf {c_{2}}\) and \(\mathbf {c_{1}}\) respectively. Note, in contrast to the state-of-the-art, the proposed method of CL HS presented in the next section will be based on physical collocation of \(\mathcal {C}\) and \(\mathcal {S}\) which means two distinct cameras and two light sources per pair of Helmholtz cameras (Fig. 4).

Regardless of whether it is realised through physical or virtual collocation, Helmholtz camera \(\mathcal {R}\) is photometrically characterised by its radiance and sensitivity functions, \(\rho \) and \(\sigma \) respectively. With virtual collocation (i.e. a single pair of \(\mathcal {C}\) and \(\mathcal {S}\)) the sensitivity distributions of \(\mathcal {R}_1\) and \(\mathcal {R}_2\) will be the same but the radiances may vary due to different light source orientations. With physical collocation the photometric distributions of Helmholtz cameras are uncorrelated. Both \(\rho \) and \(\sigma \) vary as a function of ray \(\mathbf {v}\) from the surface point \(\mathbf {x}\) to \(\mathcal {R}\) (Fig. 2).

Hence, intensity \(i_{1}\) at surface point \(\mathbf {x}\) in the reciprocal pair image \(I_{1}\) acquired with \(\mathcal {R}_{2}\) as the light source and \(\mathcal {R}_{1}\) as the camera can be expressed (Jankó et al. 2004) as:
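
Taking \(\mathbf {n}\) as the unit surface normal at \(\mathbf {x}\) and \(\mathbf {v_1}\), \(\mathbf {v_2}\) as unit vectors, and assuming the usual HS image formation model with foreshortening and inverse-square fall-off of the illumination (these geometric factors are an assumption, as they are not spelled out in the surrounding text), (1) can be written as:

\[
i_{1} = f_{r}(\mathbf {v_2},\mathbf {v_1})\,\frac{\mathbf {n}\cdot \mathbf {v_2}}{\Vert \mathbf {c_2}-\mathbf {x}\Vert ^{2}}\,\rho _{2}(\mathbf {v_2})\,\sigma _{1}(\mathbf {v_1}) \tag{1}
\]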

where \(\rho _{2}(\mathbf {v_{2}})\) is the radiance of \(\mathcal {R}_{2}\) along \(\mathbf {v_{2}}\) and \(\sigma _{1}(\mathbf {v_{1}})\) is the sensor sensitivity of \(\mathcal {R}_{1}\) along \(\mathbf {v_{1}}\). Intensity \(i_{2}\), which is the projection of \(\mathbf {x}\) in the other reciprocal pair image, is obtained by interchanging the vector indices 1 and 2 in (1). Jankó et al. (2004) propose a method for photometric calibration of \(\mathcal {R}\) in traditional WL HS. Specifically, for \(\mathcal {R}_{1}\) and \(\mathcal {R}_{2}\) as in Fig. 2 Jankó et al. (2004) calibrate for the radiance to sensitivity ratios:
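
Reading "radiance to sensitivity ratio" literally, and assuming each ratio is taken along the ray to the respective Helmholtz camera, the calibrated quantities in (2) would be:

\[
\mu _{1}(\mathbf {v_1}) = \frac{\rho _{1}(\mathbf {v_1})}{\sigma _{1}(\mathbf {v_1})}, \qquad \mu _{2}(\mathbf {v_2}) = \frac{\rho _{2}(\mathbf {v_2})}{\sigma _{2}(\mathbf {v_2})} \tag{2}
\]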

In WL HS the wavelength variable \(\omega \) can be omitted from the BRDF expression \(f_{r}(\mathbf {v_2},\mathbf {v_1})\) at a given surface point because of the constant spectral characteristics of the sampling illumination and the camera sensor. Since the sampling and sampled frequencies are known to be constant, the reflection/absorption behaviour due to the chromaticity of the sampled point is consistent and the BRDF varies only with the local directional variables \(\mathbf {v_1}\) and \(\mathbf {v_2}\). The whiteness of the sampling spectral characteristics ensures a sufficient response for the widest range of surface colours. Reciprocal intensity measurements \(i_{1}\) and \(i_{2}\) can be combined into a single surface normal constraint eliminating the dependence on the BRDF \(f_{r}(\mathbf {v_2},\mathbf {v_1})\) at that point. The elimination is based on Helmholtz reciprocity (Helmholtz 1925), i.e. the invariance of the optical behaviour of a medium to the reversal of a light ray. For the BRDF, the implication first observed by Zickler et al. (2002) is that \(f_{r}(\mathbf{{v_1}},\mathbf{{v_2}}) = {f_{r}}(\mathbf {v_2},\mathbf {v_1})\). Via this equality, reciprocal intensities \(i_{1}\) and \(i_{2}\) expressed as in (1) are linked, incorporating photometric calibration \(\mu \), to give the normal constraint:
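
Assuming the image formation model \(i_{1} = f_{r}(\mathbf {v_2},\mathbf {v_1})\,\frac{\mathbf {n}\cdot \mathbf {v_2}}{\Vert \mathbf {c_2}-\mathbf {x}\Vert ^{2}}\,\rho _{2}(\mathbf {v_2})\,\sigma _{1}(\mathbf {v_1})\) with its index-swapped counterpart for \(i_{2}\), and \(\mu = \rho /\sigma \) (both are assumptions consistent with the definitions in this section), equating the two reciprocal BRDF values and multiplying through by \(\rho _{1}\rho _{2}\) gives a constraint of the form:

\[
\left( \mu _{1}(\mathbf {v_1})\, i_{1}\, \frac{\mathbf {v_1}}{\Vert \mathbf {c_1}-\mathbf {x}\Vert ^{2}} - \mu _{2}(\mathbf {v_2})\, i_{2}\, \frac{\mathbf {v_2}}{\Vert \mathbf {c_2}-\mathbf {x}\Vert ^{2}} \right) \cdot \mathbf {n} = \mathbf {w}\cdot \mathbf {n} = 0 \tag{3}
\]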

The constraint is instrumental in the process of geometric reconstruction whereby for each surface point the most plausible depth is selected from a set of hypotheses. The principle of depth selection is illustrated in Fig. 3. Depth hypotheses \(d_p\) are sampled along the projection ray \(r_{p}\) to pixel p of the virtual camera (an orthographic one is shown for simplicity). For each \(d_p\) constraints \(\mathbf {w}\cdot \mathbf {n} = 0\) as in (3) are acquired in different reciprocal camera-light source configurations. As originally described in Zickler et al. (2002), in standard HS at least three constraints in the form \(\mathbf {w}\cdot \mathbf {n} = 0\) are required in order to solve \(W\mathbf {n} =0\) where W is the constraint matrix with \(\mathbf {w}\) as rows. Singular value decomposition of W, i.e. \(SVD(W) = U \Sigma V^{\top }\), gives a normal estimate \(\mathbf {n}\) (the last column of V) and the confidence value for the estimate: \(\frac{\sigma _2}{\sigma _3}\) where \(\sigma _2\) and \(\sigma _3\) are the second and third diagonal values of \(\Sigma \) respectively. A high \(\frac{\sigma _2}{\sigma _3}\) means that all constraints \(\mathbf {w}\) are confined to two dimensions (i.e. co-planar), as at hypothesis \(d_{p,A}\) in Fig. 3, with \(\sigma _3\) tending to zero. The normal to the constraint plane in this case is well-defined. A low \(\frac{\sigma _2}{\sigma _3}\), as at hypothesis \(d_{p,B}\) in Fig. 3, would indicate a lack of constraint coplanarity due to the arbitrary \(\mathbf {w}\) orientations. In reconstruction by HS, the depth hypothesis \(d_p\) of the highest confidence value with its corresponding normal \(\mathbf {n}\) is assigned to the surface location projecting to pixel p. In the standard formulation of HS the depth assignment is performed in a maximum likelihood (ML) manner i.e. optimising the depth at each surface location independently.
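
The normal estimation and depth selection described above can be sketched as follows. This is a minimal illustration using NumPy, not the authors' implementation: the function names, the `eps` floor and the toy constraint matrices are hypothetical, and the per-pixel ML selection shown here ignores the Bayesian prior used later in the pipeline.

```python
import numpy as np

def normal_and_confidence(W):
    """Return the normal estimate and the sigma_2/sigma_3 confidence for W n = 0."""
    W = np.asarray(W, dtype=float)
    # Singular values are returned sorted in descending order.
    _, s, vt = np.linalg.svd(W)
    normal = vt[-1]  # last row of V^T, i.e. last column of V
    eps = 1e-12  # hypothetical floor so that sigma_3 = 0 does not divide by zero
    return normal, s[1] / max(s[2], eps)

def select_depth(depths, constraint_matrices):
    """ML selection: keep the hypothesis with the highest confidence."""
    best = None
    for d, W in zip(depths, constraint_matrices):
        n, conf = normal_and_confidence(W)
        if best is None or conf > best[2]:
            best = (d, n, conf)
    return best

# Toy data: at hypothesis A the three w vectors are co-planar (all orthogonal
# to the z axis); at hypothesis B they point in unrelated directions.
W_a = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [1.0, 1.0, 0.0]]
W_b = np.eye(3)
depth, normal, conf = select_depth([0.9, 1.1], [W_a, W_b])
```

In this toy case the co-planar hypothesis is selected and the recovered normal is the z axis up to sign; the sign ambiguity of the singular vector would in practice be resolved by requiring the normal to face the cameras.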

4 Colour Helmholtz Stereopsis

We propose a novel approach that generalises Helmholtz Stereopsis (HS) to dynamic scenes—Colour HS (CL HS). In this section, the complete pipeline for CL HS featuring tailored calibration procedures and data processing algorithms is presented and formalised building on the notation presented in Sect. 3.

We expand the theory of WL HS to formalise CL HS. In CL HS, the \((\mathcal {C},\mathcal {S})\) pairs are realised by physical collocation (Fig. 4). The light sources such as \(\mathcal {S}_{1}\) and \(\mathcal {S}_{2}\) in Fig. 4 are characterised by different frequency spectra (Fig. 5). For consistent frequency-independent response, chromaticity of the reconstructed surface must be factored into the intensity equation. Unlike WL HS, in CL HS the illumination frequency \(\omega \) cannot be omitted from the expression for the BRDF at \(\mathbf {x}\): \(f_{r}(\mathbf {v_2},\mathbf {v_1}, \omega )\). We propose to decompose BRDF \(f_r\) into its directional component \(f_{d}(\mathbf {v_2},\mathbf {v_1})\), dependent only on \(\mathbf {v_{1}}\) and \(\mathbf {v_{2}}\) (i.e. the viewing and illumination incidence vectors respectively) and the component related to the surface point chromaticity \(p(\omega )\). We define the local chromatic constant \(p_{1,2}\) as the reflectance coefficient due to the inherent colour of a point when seen by camera \(\mathcal {C}_1\) and lit by light source \(\mathcal {S}_2\). The camera is of importance due to possible differences in spectral sensor characteristics. For the coefficient to be 0, the illumination spectrum must exactly match the point’s chromatic absorption spectrum. This is unlikely to happen exactly, although the signal quality on non-dominant channels will degrade for points of purer (R, G or B) colours. Incorporating chromaticity \(p_{1,2}\), we can re-write intensity equation in (1) for CL HS as:
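
Assuming the WL HS model \(i_{1} = f_{r}(\mathbf {v_2},\mathbf {v_1})\,\frac{\mathbf {n}\cdot \mathbf {v_2}}{\Vert \mathbf {c_2}-\mathbf {x}\Vert ^{2}}\,\rho _{2}(\mathbf {v_2})\,\sigma _{1}(\mathbf {v_1})\) for (1), with unit \(\mathbf {n}\), \(\mathbf {v_1}\) and \(\mathbf {v_2}\), and a multiplicative decomposition \(f_{r} = p_{1,2}\, f_{d}\) (both assumptions, in line with the definitions above), (4) takes the form:

\[
i_{1} = p_{1,2}\, f_{d}(\mathbf {v_2},\mathbf {v_1})\,\frac{\mathbf {n}\cdot \mathbf {v_2}}{\Vert \mathbf {c_2}-\mathbf {x}\Vert ^{2}}\,\rho _{2}(\mathbf {v_2})\,\sigma _{1}(\mathbf {v_1}) \tag{4}
\]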

For CL HS the normal constraint from (3) becomes:
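
Assuming \(\mu = \rho /\sigma \) and an intensity model in which \(i_{1}\) is scaled by \(p_{1,2}\) and \(i_{2}\) by \(p_{2,1}\) (assumptions consistent with the definitions of \(\mu '_{1}\) and \(\mu '_{2}\) in Sect. 5), each intensity is normalised by its own chromatic constant and (5) reads:

\[
\left( \frac{\mu _{1}(\mathbf {v_1})}{p_{1,2}}\, i_{1}\, \frac{\mathbf {v_1}}{\Vert \mathbf {c_1}-\mathbf {x}\Vert ^{2}} - \frac{\mu _{2}(\mathbf {v_2})}{p_{2,1}}\, i_{2}\, \frac{\mathbf {v_2}}{\Vert \mathbf {c_2}-\mathbf {x}\Vert ^{2}} \right) \cdot \mathbf {n} = \mathbf {w}\cdot \mathbf {n} = 0 \tag{5}
\]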

Note that no assumptions are made about the directional component of the BRDF \(f_d\) - it can be arbitrary and unconstrainedly spatially-varying because due to the sampling configuration in HS consistency of the directional component within each reciprocal pair is guaranteed: \(f_{d}(\mathbf{{v_2}},\mathbf{{v_1}}) = f_{d}(\mathbf {v_1},\mathbf {v_2})\). The constraint in (5) is formulated by making use of this \(f_d\) equality, the knowledge of which stems from reciprocity, a fundamental property of the BRDF, and not from any surface homogeneity assumption.
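
The cancellation of \(f_d\) can be checked numerically. The sketch below assumes an image formation model of the form chromaticity × \(f_d\) × foreshortening / squared distance × radiance × sensitivity, and a constraint vector \(\mathbf {w}\) built from \(\mu /p\)-weighted intensities with \(\mu = \rho /\sigma \); the function names and all numerical values are hypothetical and chosen only for illustration.

```python
import numpy as np

def unit_ray(x, c):
    """Unit ray from surface point x towards centre c, and the distance."""
    diff = np.asarray(c, dtype=float) - np.asarray(x, dtype=float)
    dist = np.linalg.norm(diff)
    return diff / dist, dist

def render(x, n, c_src, f_d, p, rho_src, sigma_cam):
    """Assumed intensity model for one image of a reciprocal pair."""
    v_src, d_src = unit_ray(x, c_src)
    return p * f_d * np.dot(n, v_src) / d_src**2 * rho_src * sigma_cam

def clhs_constraint(x, c1, c2, i1, i2, mu1, mu2, p12, p21):
    """Constraint vector w such that w . n = 0 at the true surface point."""
    v1, d1 = unit_ray(x, c1)
    v2, d2 = unit_ray(x, c2)
    return (mu1 / p12) * i1 * v1 / d1**2 - (mu2 / p21) * i2 * v2 / d2**2

# Hypothetical scene: one surface point, two Helmholtz cameras.
x = np.zeros(3)
n = np.array([0.0, 0.0, 1.0])
c1 = np.array([1.0, 0.0, 2.0])
c2 = np.array([-0.5, 0.3, 1.5])
rho1, sigma1, rho2, sigma2 = 2.0, 0.5, 1.5, 0.9
p12, p21 = 0.8, 0.3
f_d = 0.37  # any value works, provided it is the same for both directions

i1 = render(x, n, c2, f_d, p12, rho2, sigma1)  # camera 1, light 2
i2 = render(x, n, c1, f_d, p21, rho1, sigma2)  # camera 2, light 1
w = clhs_constraint(x, c1, c2, i1, i2, rho1 / sigma1, rho2 / sigma2, p12, p21)
residual = float(np.dot(w, n))  # vanishes up to rounding, whatever f_d is
```

Changing `f_d` rescales `w` but leaves the residual at zero, which is the sense in which the directional component is unconstrained; changing `p12` or `p21` without updating the calibration breaks the constraint, which is why chromaticity calibration is needed.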

Fig. 6 Overview of the pipeline for Colour Helmholtz Stereopsis (CL HS). The core part in the middle computes the point cloud by MRF optimisation based on the data term from SVD decomposition of CL HS constraints and a tailored prior. Depending on its resolution, the generated point cloud is integrated into a mesh either by Poisson surface reconstruction (low resolution point cloud) or, without explicit integration, by direct meshing of vertices by proximity, based on the known geometric relationships between them in the reconstruction volume (high resolution point cloud). The top and bottom branches of the pipeline are respectively the photometric and chromaticity calibration procedures essential for consistency in CL HS constraint formulation.

Traditional HS set-ups feature just one camera-light source pair, where either the equipment moves relative to the scene or the static scene is moved relative to the set-up for reciprocal pair acquisition. The CL HS set-up we propose is a static configuration consisting of three pairs of collocated cameras and light sources. The cameras are each equipped with an RGB sensor, while the light sources all have different RGB characteristics. Each collocated pair of an RGB camera and a single-frequency-spectrum light source can be viewed as a multi-spectral Helmholtz camera (or, alternatively, as three single-frequency Helmholtz cameras of which only two are used in the set-up, as the camera will never receive the same frequency it transmits). The three light sources in the set-up must have minimal frequency overlap to ensure signal separation. Signal separation allows simultaneous acquisition of the three reciprocal pairs required for normal estimation and enables generalisation to dynamic scenes.
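
To make the channel bookkeeping concrete, the sketch below enumerates which camera channels form the three reciprocal pairs of a single frame. The assignment of red, green and blue lights to Helmholtz cameras 1, 2 and 3 is a hypothetical choice made for illustration, and ideal signal separation (no spectral overlap) is assumed.

```python
from itertools import combinations

# Hypothetical assignment: Helmholtz camera k carries a light of colour LIGHT[k].
LIGHT = {1: "R", 2: "G", 3: "B"}

def reciprocal_pairs(light=LIGHT):
    """List reciprocal pairs as ((camera, channel), (camera, channel)).

    For Helmholtz cameras a and b, one image of the pair is the channel of
    camera a matching the light of b, the other is the channel of camera b
    matching the light of a.
    """
    pairs = []
    for a, b in combinations(sorted(light), 2):
        pairs.append(((a, light[b]), (b, light[a])))
    return pairs

pairs = reciprocal_pairs()
```

Each camera contributes two of its three channels, and the channel matching its own collocated light is never used, in line with the observation that a Helmholtz camera does not receive the frequency it transmits.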

An overview of the reconstruction pipeline for CL HS is given in Fig. 6. Bayesian HS from Roubtsova and Guillemaut (2014a) is the reconstruction core where depth labels are assigned in a global optimisation based on the residual of SVD decomposition of sampled HS constraints and a tailored prior. As well as the experimental set-up, Sect. 7 discusses Bayesian HS in more detail as a means of enabling high reconstruction accuracy under the restriction of three reciprocal pairs per frame. In contrast to WL HS, in CL HS spatially distributed and camera dependent photometric and chromaticity calibration parameters are essential to compute HS constraints. Photometric calibration is particularly important for CL HS due to the physically different cameras and multi-spectral light sources of the approach. Note that Helmholtz cameras in CL HS are essentially characterised as a sensor of one light frequency spectrum and a transmitter of another in different reciprocal pairs. Section 5 details how we generalise the algorithm from Jankó et al. (2004) for photometric calibration in CL HS and provides insights into its application in practice. Section 6 introduces the spatio-temporal procedure we devised for chromaticity calibration applicable to both static and dynamic scenes. The procedure eliminates intensity inconsistencies within the same reciprocal pair by a priori estimating surface chromaticity observed by each camera for the spectrum of each light source and, if necessary, propagating the parameters to any unseen frame.

5 Helmholtz Camera Photometric Calibration

Jankó et al. (2004) photometrically calibrate each Helmholtz camera \(\mathcal {R}_{1}\) using another Helmholtz camera \(\mathcal {R}_{2}\) by linking HS constraints, obtained by gradual displacement of a calibration plane, via the ray of incident illumination \(\mathbf {v_{2}}\). In the original paper, the calibration was performed in a highly controlled environment: the plane was translated in fixed vertical increments, with a single camera and a light source suspended overhead and manually centred over the turntable carrying the plane (Fig. 2). For photometric calibration in the CL HS pipeline we adopt a more freehand approach featuring a hand-held calibration board that is moved randomly within the reconstruction volume (Fig. 7). The calibration board bears four black markers (Fig. 8) for 3D plane localisation. Specifically, detection, either manual or automatic, of at least three of those markers in the calibration images allows one to determine both the position and orientation of the calibration plane in 3D, which defines the plane’s surface points \(\mathbf {x}\) and normal \(\mathbf {n}\) in the calibration equations in this section. Our entire configuration consisting of three multi-spectral Helmholtz cameras is calibrated simultaneously.

As in Jankó et al. (2004) for every position j of the calibration plane \(\varPi _{j}\) we establish a ratio of parameters \(\mu _{1}\) and \(\mu _{2}\) corresponding to the Helmholtz cameras \(\mathcal {R}_{1}\) and \(\mathcal {R}_{2}\) sampled at a surface point \(\mathbf {x}\) where rays \(\mathbf {v_{1}}\) and \(\mathbf {v_{2}}\) intersect (Fig. 7). However, in CL HS, the resultant ratio \(\kappa \) is not the same as in WL HS as it is derived from (5) rather than (3) incorporating chromaticity:

Effectively, in CL HS, we need to introduce the notion of relative photometric parameter distributions \(\mu '_{1}=\frac{\mu _1}{p_{1,2}}\) and \(\mu '_{2}=\frac{\mu _2}{p_{2,1}}\) capturing the radiometric properties of the acquisition equipment as sampled on the calibration object of a given reference chromaticity: \(p_{1,2} = p^{ref}_{1,2}\) and \(p_{2,1}=p^{ref}_{2,1}\). Thus \(\kappa (\mathbf {v_1}, \mathbf {v_2}\mid \varPi _j)\) is a ratio of relative photometric distributions \(\frac{\mu '_{1}}{\mu '_{2}}\). For simplicity, the chosen calibration object is of spatially uniform chromaticity (except the masked out black markers) i.e. \(p^{ref}_{2,1}\) and \(p^{ref}_{1,2}\) are constant for all surface points.

Subsequently, point \(\mathbf {x}\) on plane \(\varPi _{j}\) is transferred onto the plane in the new position \(\varPi _{j+1}\) by finding the intersection \(\mathbf {x}'\) of ray \(\mathbf {v_{2}}\) with \(\varPi _{j+1}\). Hence for plane \(\varPi _{j+1}\) the ratio \(\kappa ({\mathbf {v^{2}_{1}}, \mathbf {v_{2}}|\varPi _{j+1}}) =\frac{p_{2,1}\mu _{1}(\mathbf {v^{2}_{1}})}{p_{1,2}\mu _{2}(\mathbf {v_{2}})} \) is established, sharing the denominator with the corresponding relationship of plane \(\varPi _{j}\). The shared denominator, together with chromaticity constancy, allows one to obtain a photometric parameter relationship \(r_{1}(\mathbf {v_1}, \mathbf {v^{2}_{1}})\) between two pixel locations \((u_{1}, v_{1})\) and \((u^2_{1}, v^2_{1})\) corresponding to rays \(\mathbf {v_1}\) and \(\mathbf {v^{2}_{1}}\) in the spatial photometric distribution of \(\mathcal {R}_{1}\):

Fig. 9 Estimation of the continuous spatially-varying photometric parameter distribution based on values \(\mathbf {m_1}=[\mu _1( \mathbf { v_{1} } ),\mu _1( \mathbf { v_{2} } ), \ldots , \mu _1( \mathbf {v_{N}} )]\) of a regular grid of control points defined in the image domain. For example, the sample point at (u, v) corresponding to the sampling vector \(\mathbf {v^{2}_x}\) will be expressed based on the \(\mu _1\) distribution values at the four corners (top-left, top-right, bottom-left and bottom-right at respectively \((u_{tl},v_{tl})\), \((u_{tr},v_{tr})\), \((u_{bl},v_{bl})\) and \((u_{br},v_{br})\)) of its control point grid square weighted by the bilinear interpolation coefficients. Vectors \(\mathbf {v_x}\) and \(\mathbf {v^{2}_x}\) define two linked samples in the photometric parameter distribution.

Let us consider a uniformly sampled grid of control points \(\mathbf {m_1}=[\mu _1( \mathbf { v_{1} } ),\mu _1( \mathbf { v_{2} } ), ... , \mu _1( \mathbf {v_{N}} )]\) sampled in the spatial photometric parameter distribution \(\mu _1\) (see Fig. 9). Equation (7) provides constraints on the set of control points of the photometric map via bilinear interpolation in a linear system of equations. We chose to use a simpler regularisation kernel than Jankó et al. (2004) who perform bicubic interpolation between control points. Specifically, the natural logarithm of (7) is taken resulting in a single constraint on the control points of the form:

where \(\lambda _{1} = \ln (\mu _{1}(\mathbf {v_x}))\) and \(\lambda _{2} = \ln (\mu _{1}(\mathbf {v^2_x}))\) are the sample point values (see Fig. 9), \(\varvec{\lambda } =[ \ln ( \mu _1( \mathbf { v_{1} } ) ) ,\ln ({ \mu _1( \mathbf { v_{2} } ) } ), ... ,\ln ({ \mu _1( \mathbf {v_{N} }) })]^\top \) is the vector of variables (control points), \(\mathbf {a_i}\) and \(\mathbf {b_i}\) are the bilinear interpolation coefficients from control points to sample points and lastly \(\delta _i = \ln (r_{1}(\mathbf {v_x}, \mathbf {v^{2}_{x}}))\). Per sample point only four coefficients in interpolation vector \(\mathbf {a_i}\) (or \(\mathbf {b_i}\)), specifically the ones corresponding to the four closest control points (Fig. 9), will be non-zero. Each point in the distribution is localised in the image domain by its (u, v) pixel coordinates - hence the four grid control points and the sample point coordinates are, respectively, \((u_{tl}, v_{tl})\), \((u_{tr}, v_{tr})\), \((u_{bl}, v_{bl})\), \((u_{br}, v_{br})\) and (u, v) where due to the grid sampling symmetry \(u_{tl} = u_{bl}=u_{1}; u_{tr} = u_{br}=u_{2}\) and \(v_{tl} = v_{tr}=v_{1}; v_{bl} = v_{br}=v_{2}\). With these definitions in mind, the corresponding four non-zero bilinear interpolation coefficients per sample point are defined as:

\({a_{tl}=\left( \frac{v_{2} - v}{v_{2} - v_{1}}\right) \left( \frac{u_{2} - u}{u_{2} - u_{1}}\right) }\),

\({a_{tr}=\left( \frac{v_{2} - v}{v_{2} - v_{1}}\right) \left( \frac{u - u_{1}}{u_{2} - u_{1}}\right) }\),

\({a_{bl}=\left( \frac{v - v_{1}}{v_{2} - v_{1}}\right) \left( \frac{u_{2} - u}{u_{2} - u_{1}}\right) }\),

\({a_{br}=\left( \frac{v - v_{1} }{v_{2} - v_{1}}\right) \left( \frac{u - u_{1}}{u_{2} - u_{1}}\right) }\).
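As a minimal sketch, the four coefficients above can be computed as follows. The function name `bilinear_coefficients` is hypothetical; the argument names follow the notation of the text, with \((u_1, v_1)\) the top-left and \((u_2, v_2)\) the bottom-right corner of the grid square.

```python
def bilinear_coefficients(u, v, u1, u2, v1, v2):
    """Bilinear weights of the four control points enclosing sample (u, v).

    Returns (a_tl, a_tr, a_bl, a_br) for the corners (u1, v1), (u2, v1),
    (u1, v2) and (u2, v2) respectively.
    """
    du = u2 - u1
    dv = v2 - v1
    a_tl = ((v2 - v) / dv) * ((u2 - u) / du)
    a_tr = ((v2 - v) / dv) * ((u - u1) / du)
    a_bl = ((v - v1) / dv) * ((u2 - u) / du)
    a_br = ((v - v1) / dv) * ((u - u1) / du)
    return a_tl, a_tr, a_bl, a_br

# A sample in the centre of the grid square weights all corners equally.
centre = bilinear_coefficients(5.0, 5.0, 0.0, 10.0, 0.0, 10.0)
```

The weights are non-negative inside the grid square and sum to one, so a sample coinciding with a control point takes exactly that control point's value.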

From a set of constraints as in (8) the resultant linear system is:

where \(A =[\mathbf {a_0}, \mathbf {a_1},...,\mathbf {a_M}]^\top \), \(B =[\mathbf {b_0}, \mathbf {b_1},...,\mathbf {b_M}]^\top \) and \(\varDelta =[\delta _0, \delta _1 ..., \delta _{M}]^\top \). Having thus estimated all constrained control points of the grid, a continuous photometric distribution can be obtained by bilinear interpolation between them. In order to maximise the spatial coverage of the calibration, interpolation does not require all four neighbouring control points to be defined i.e. interpolation in the vertical or horizontal direction only is also permitted.
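The estimation of the log-domain control points can be sketched on synthetic data. The sketch assumes that each constraint takes the form \(\mathbf {a_i}^\top \varvec{\lambda } - \mathbf {b_i}^\top \varvec{\lambda } = \delta _i\), so that the stacked system reads \((A - B)\varvec{\lambda } = \varDelta \); this form is inferred from the definitions above and is an assumption, as are the helper names and the use of a least-squares solver. Because only ratios are constrained, \(\varvec{\lambda }\) is recoverable up to an additive constant (a global scale of \(\mu _1\)); the minimum-norm solution returned here fixes that constant by making \(\varvec{\lambda }\) zero-mean.

```python
import numpy as np

def interpolation_row(u, v, grid_u, grid_v):
    """N-vector of bilinear weights of sample (u, v); at most four non-zero."""
    nu, nv = len(grid_u), len(grid_v)
    # Index of the grid square containing the sample.
    iu = int(np.clip(np.searchsorted(grid_u, u, side="right") - 1, 0, nu - 2))
    iv = int(np.clip(np.searchsorted(grid_v, v, side="right") - 1, 0, nv - 2))
    wu = (u - grid_u[iu]) / (grid_u[iu + 1] - grid_u[iu])
    wv = (v - grid_v[iv]) / (grid_v[iv + 1] - grid_v[iv])
    row = np.zeros(nu * nv)
    row[iv * nu + iu] = (1 - wv) * (1 - wu)        # top-left
    row[iv * nu + iu + 1] = (1 - wv) * wu          # top-right
    row[(iv + 1) * nu + iu] = wv * (1 - wu)        # bottom-left
    row[(iv + 1) * nu + iu + 1] = wv * wu          # bottom-right
    return row

def solve_log_control_points(samples_a, samples_b, deltas, grid_u, grid_v):
    """Least-squares solution of (A - B) lambda = Delta (assumed form)."""
    A = np.array([interpolation_row(u, v, grid_u, grid_v) for u, v in samples_a])
    B = np.array([interpolation_row(u, v, grid_u, grid_v) for u, v in samples_b])
    lam, *_ = np.linalg.lstsq(A - B, np.asarray(deltas, dtype=float), rcond=None)
    return lam

# Synthetic check: 3 x 3 control grid, 200 random linked sample pairs.
rng = np.random.default_rng(0)
grid_u = np.array([0.0, 1.0, 2.0])
grid_v = np.array([0.0, 1.0, 2.0])
lam_true = rng.normal(size=9)
pa = rng.uniform(0.0, 2.0, size=(200, 2))
pb = rng.uniform(0.0, 2.0, size=(200, 2))
deltas = [
    (interpolation_row(*a, grid_u, grid_v) - interpolation_row(*b, grid_u, grid_v)) @ lam_true
    for a, b in zip(pa, pb)
]
lam_est = solve_log_control_points(pa, pb, deltas, grid_u, grid_v)
mu_est = np.exp(lam_est)  # control point values, up to a global scale
```

In real calibration data the linked samples lie along epipolar lines rather than at random positions, so the conditioning of the system would differ from this synthetic case.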

It has perhaps not been made explicit in Jankó et al. (2004) that Helmholtz cameras involved in a single reciprocal pair must be calibrated as a couple and not individually. Also, in CL HS, any pair of photometric distributions obtained will be relative to the calibration object (reference) chromaticity coefficients \(p^{ref}_{1,2}\) and \(p^{ref}_{2,1}\) as expressed in the ratio of (6). Specifically, control point values \(\mathbf {m_1} = [\mu _1(\mathbf {v_1}),\mu _1(\mathbf {v_2}), ... , \mu _1(\mathbf {v_N})]\) on the spatial photometric parameter distribution of Helmholtz camera \(\mathcal {R}_{1}\) are first obtained by exponentiating \(\varvec{\lambda }\) from (9) and are subsequently bilinearly interpolated to form a continuous photometric distribution \(\mu _1\). Then the photometric distribution \(\mu _{1,2}\) (i.e. distribution 2 derived from distribution 1) for \(\mathcal {R}_2\) is expressed by transfer of \(\mu _1\) via (6):

We can define the relative transferred photometric distribution \(\mu ''_{1,2} =\frac{p^{ref}_{1,2}}{p^{ref}_{2,1}}\mu _{1,2}\) where the transfer is from \(\mathcal {R}_{1}\) to \(\mathcal {R}_{2}\) and the relative aspect refers to the dependence of \(\mu ''_{1,2}\) on the reference chromaticity. Hence, the calibrated pair of photometric distributions \((\mu _{1},\mu ''_{1,2})\) as a whole retains the dependence on the reference chromaticity of the calibration object. The dependence is equivalent to calibrating ratios \(\mu '_1 =\frac{\mu _1}{p_{1,2}}\) and \(\mu '_2 =\frac{\mu _2}{p_{2,1}}\) from Eq. (5) with \(p_{1,2}= p^{ref}_{1,2}\) and \(p_{2,1}= p^{ref}_{2,1}\) to result in:

The equivalence of \((\mu _{1},\mu ''_{1,2})\) to \((\mu '_{1},\mu '_{2})\) can be observed by multiplying the homogeneous constraint in (11) through by \(p^{ref}_{1,2}\). The relative photometric calibration parameters \(\mu '_1\) and \(\mu '_2\) fully calibrate the set-up for any surface of reference chromaticity. In Sect. 6.1, it will be shown how the undesirable dependence on the reference is neutralised in the HS normal constraint by a corresponding relative chromaticity estimation procedure removing all surface chromaticity limitations.

Fig. 10 Spatio-temporal chromaticity calibration pipeline. Chromaticity in the reference frame is estimated per camera. The resultant chromaticity maps (i.e. spatial chromaticity distributions) are aligned with the reconstructed view. If a dynamic sequence is being reconstructed, the aligned chromaticity maps are temporally propagated throughout the dynamic sequence using dense point tracking by optical flow.

Let us describe the procedure for distribution transfer from \(\mu _1\) to \(\mu ''_{1,2}\). Transferred control points \(\mathbf {m''_{1,2}} = [\mu ''_{1,2}(\mathbf {v^*_1}),\mu ''_{1,2}(\mathbf {v^*_2}),...,\mu ''_{1,2}(\mathbf {v^*_N}) ]\) of the photometric calibration of \(\mathcal {R}_2\) are defined by a set of sampling vectors \([\mathbf {v^*_1}, \mathbf {v^*_2} ... \mathbf {v^*_N}]\) (the asterisk is added to differentiate the vectors from those sampling the directly calibrated distribution). The expression relating a point from \(\mathbf {m''_{1,2}}\) to the interpolated photometric map \(\mu _1\) of \(\mathcal {R}_{1}\) is:

where \(\kappa (\mathbf {v_1}, \mathbf {v^*_1}\mid \varPi _j)\) is a single constraint for parameter transfer from \(\mathcal {R}_{1}\) to \(\mathcal {R}_2\). The constraint derived from sampling geometry and observed intensities of a point defined by the intersection of vectors \(\mathbf {v_1}\) and \(\mathbf {v^*_1}\) on a given calibration plane \(\varPi _j\) is:

Note that \(\mathbf {v_1}\) does not have to sample one of the control points of the spatial photometric parameter distribution \(\mu _{1}\): any interpolated value of the distribution is a valid sample for the parameter transfer onto \(\mathcal {R}_2\). Just as in the direct calibration process, to maximise support in the transfer linear system solved, multiple calibration planes \(\varPi _j\) are used in the transfer and non-control points \(\mu ''_{1,2}(\mathbf {v^*}) \notin \mathbf {m''_{1,2}}\) of the distribution \(\mu ''_{1,2}\) also give rise to constraints (13). For every transfer sample, the computed \(\kappa \) constrains up to four control points from \(\mathbf {m''_{1,2}}\) that are related to the sample point through bilinear interpolation in the way defined in the N-dimensional constraint vector \(\mathbf {k_i}\) (along with \(\kappa \)). The transfer linear system solved is:

where \(K = [\mathbf {k_1},\mathbf {k_2},...,\mathbf {k_M}]^\top \) and \(\mathcal {M}_1 =[\mu ^1_{1}, \mu ^2_{1},..., \mu ^M_{1}]\) is the set of M corresponding known samples from the previously directly calibrated distribution \(\mu _1\). Values \(\mathbf {m''_{1,2}}\) obtained through the transfer procedure described are different from the values \(\mathbf {m_{2}}\) that could have been computed by direct calibration of \(\mathcal {R}_2\). The transfer procedure ensures mutual consistency of \(\mathbf {m''_{1,2}}\) and the directly calibrated \(\mathbf {m_{1}}\). Just as with the directly calibrated camera, bilinear interpolation between the transferred control points produces a continuous spatial distribution.

In contrast to Jankó et al. (2004), we have found that, for accurate calibration, a (multi-spectral) Helmholtz camera must be observed in at least two (multi-spectral) Helmholtz camera pairs as in Fig. 7. Jankó et al. (2004) mention ill-posedness of the calibration problem when a single Helmholtz camera pair is used due to constraints being sampled along the projection ray and the linked samples being along the same epipolar line. They do not, however, deem multiple Helmholtz camera pairs essential, instead using a strong bicubic regulariser to address the ill-posedness. In our case, we make the problem better posed by making use of multiple pairs per multi-spectral Helmholtz camera, which are readily available in the set-up. The better posedness allows us to work with a weaker bilinear regulariser, thus avoiding potential artefacts due to over-regularisation. Calibration of a multi-spectral Helmholtz camera rather than a single-channel-sensitivity one operates under the reasonable assumption of the shared spatial sensitivity distribution of the RGB sensors of a single physical camera \(\mathcal {C}\) and simplifies the problem from six to just three directly calibrated unknown photometric distributions in the set-up (subsequently transferred for consistency onto their reciprocal pair partners as discussed).

6 Surface Chromaticity Calibration

In this section, we propose a spatio-temporal chromaticity calibration procedure applicable to dynamic as well as static scenes. The overview of the procedure’s pipeline is presented in Fig. 10. Its two main stages are the initial per camera chromaticity estimation in the reference frame (Sect. 6.1) and its subsequent temporal propagation (Sect. 6.2) to any new frame provided sufficient overlap with the reference. Both single-shot unseen (non-reference) static scenes as well as entire sequences in dynamic scene reconstruction can be served by the spatio-temporal chromaticity calibration approach. The method’s effectiveness in dynamic scene reconstruction is demonstrated in Sects. 8.2.2 and 8.2.3 of the evaluation.

6.1 Initial Spatial Estimation

In this section, a procedure for per-pixel chromaticity estimation of the reconstructed surface is proposed. Since the three light sources in CL HS are red (R), green (G) and blue (B), the goal of the chromaticity calibration procedure is to compute the triplet \((p'_{c,R},p'_{c,G}, p'_{c,B})\) consisting of three chromaticity coefficients relative to the reference \((p^{ref}_{c,R},p^{ref}_{c,G},p^{ref}_{c,B})\). The triplet \((p'_{c,R},p'_{c,G}, p'_{c,B})\) describes the relative reflectance behaviour in response to red, green and blue illumination spectra for a visible surface point \(\mathbf {x}\) viewed by camera \(\mathcal {C}_c\) where \(c=\{1,2,3\}\). It is assumed that the illumination spectra relate to the spectral sensor characteristics of the three cameras in the same way.

The calibration method is based on sampling the chromatic response of first a planar object with the chosen reference chromaticity \((p^{ref}_{c,R},p^{ref}_{c,G},p^{ref}_{c,B})\) and then that of the arbitrarily coloured object to be calibrated. Both the reference and the calibrated objects remain static during sampling to facilitate per-pixel estimation. For sampling of chromatic response, both objects are sequentially exposed to red, green and blue illumination from the same direction by changing colour filters of a single static light source. The colour filters used to sample chromatic response are subsequently intended for data acquisition at reconstruction. The sum of the RGB colour filter spectra defines white illumination in this context. Let us formalise chromaticity estimation per pixel of any \(\mathcal {C}_c\) where \(c=\{1,2,3\}\) since each pixel in the procedure is calibrated independently.

Consider the planar reference surface in Fig. 11a being sampled by camera \(\mathcal {C}_c\) for any \(c=\{1,2,3\}\) in the configuration of CL HS. A surface point on the reference object \(\mathbf {x_{ref}}\) with the orientation \(\mathbf {n_{ref}}\) projects onto the camera sensor pixel defined by \(\mathbf {v^{ref}_1}\). The point is illuminated by a single light source \(S_{l}\) in the fixed position whose inherent radiance distribution is sequentially coloured red (\(\rho _{r}\)), green (\(\rho _{g}\)) and blue (\(\rho _{b}\)) using filters for the purpose of chromatic response sampling of \(\mathbf {x_{ref}}\). From (4) the image formation equations for the per-channel intensity responses \((i^{ref}_{c(r),r}, i^{ref}_{c(g),g}, i^{ref}_{c(b),b})\) at \(\mathbf {x_{ref}}\) corresponding to the spectrum of illumination in each case are:

Note that triplet \((p^{ref}_{c,R},p^{ref}_{c,G}, p^{ref}_{c,B})\) is the chromaticity of the reference surface. This reference chromaticity must be the same as the chromaticity of the calibration plane in the photometric calibration procedure from Sect. 5 in order to ensure the reference independence of the CL HS pipeline as a whole. Typically, the reference tends to be chosen in the white spectrum of colours to maximise the channel response, although theoretically it does not have to be. In addition, the chromaticity of the reference object must be uniform since otherwise there will be per-pixel variations in the reference chromaticity, which would be impossible to reconcile with the reference in the photometric calibration.

Now consider the calibrated surface point \(\mathbf {x}\) with orientation \(\mathbf {n}\) in Fig. 11b sampled with the same three coloured radiance distributions \(\rho _{r}\), \(\rho _{g}\) and \(\rho _{b}\) in an identical configuration of camera \(C_{c}\) and light source \(S_{l}\). The point-to-sensor projection is defined by vector \(\mathbf {v_1}\) and the direction of illumination by \(\mathbf {v_2}\). Each such point \(\mathbf {x}\) on the calibrated object is also characterised by a triplet of image formation equations \((i_{c(r),r},i_{c(g),g}, i_{c(b),b})\) defined by its chromaticity \((p_{c,R},p_{c,G},p_{c,B})\) in response to the same stimuli:

Note that \((p_{c,R},p_{c,G}, p_{c,B})\) in (16) is an absolute chromaticity triplet independent of any reference.

The camera-to-light source geometry is identical within each triplet of expressions in Eqs. (15) and (16) because both the set-up and the scene are static during sampling of each surface. This means that the ratio of any two expressions from (15) or (16) depends only on the relative \(\rho \),\(\sigma \) products and the corresponding per channel chromaticities. For example, the red-to-green response ratio for the reference surface point \(\mathbf {x_{ref}}\) is:

The equivalent ratio for the calibrated surface point \(\mathbf {x}\) is:

Chromaticity is estimated per-pixel in the image domain of \(\mathcal {C}_c\). Hence the idea is to link the reference and the calibrated surface points projecting onto the same pixel as shown in Fig. 11c. In other words, in the sampling configuration employed the projection vectors are the same: \(\mathbf {v^{ref}_{1}} = \mathbf {v_1}\), and Eq. (17) simplifies to:

meaning that the same point in the sensitivity distributions \(\sigma _r\) and \(\sigma _g\) applies to the corresponding reference and calibrated surface points, \(\mathbf {x_{ref}}\) and \(\mathbf {x}\) respectively. However, Fig. 11c also shows that the illumination vectors \(\mathbf {v^{ref}_{2}}\) and \(\mathbf {v_{2}}\) are clearly not the same because the sampled 3D points \(\mathbf {x_{ref}}\) and \(\mathbf {x}\) are not identical.

An important simplification can be made to Eqs. (18) and (19) given that the coloured radiance distributions \(\rho _{r}\), \(\rho _{g}\) and \(\rho _{b}\) are realised by applying colour filters to a radiance distribution of a single light source \(S_{l}\). Colour filters can be assumed spatially uniform which means that the radiance distributions \(\rho _{r}\), \(\rho _{g}\) and \(\rho _{b}\) differ from each other by a constant scale factor. For example, for one pair of distributions one can write: \(\rho _{r} = k\rho _{g}\) where k is a constant meaning that the same relationship holds for any two spatially corresponding samples of the distributions. With this in mind, the ratio in (19) can be re-written as:

Equivalently, (18) becomes:

As a result of the simplification, Eqs. (20) and (21) can be combined by substitution for \(k\frac{\sigma _r(\mathbf {v_1})}{\sigma _g(\mathbf {v_1})}\) and one obtains an expression for the ratio of two relative chromaticity components \(p'_{c,R}\) and \(p'_{c,G}\), defined against the reference chromaticity components \(p^{ref}_{c,R}\) and \(p^{ref}_{c,G}\), as a function of directly measurable intensities:

Three such ratios, e.g. \(\frac{ p'_{c,R} }{ p'_{c,G} } \), \(\frac{ p'_{c,R} }{ p'_{c,B} }\) and \( \frac{ p'_{c,G} }{ p'_{c,B} }\):

result in three homogeneous constraints constituting a homogeneous linear system of equations:

The system is solved by singular value decomposition (SVD) of the constraint matrix; the solution is a normalised vector, i.e. the chromaticity coefficient triplet \([p'_{c,R}, p'_{c,G}, p'_{c,B}]^\top \).
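The per-pixel estimation can be sketched as follows. The sketch assumes that, under the stated assumptions (shared projection vector and filter spectra related by a constant factor), each pairwise ratio reduces to a quotient of reference-normalised intensities, e.g. \(\frac{p'_{c,R}}{p'_{c,G}} = \frac{i_{c(r),r}/i^{ref}_{c(r),r}}{i_{c(g),g}/i^{ref}_{c(g),g}}\); this form, the constraint matrix layout and the function name `relative_chromaticity` are our illustration rather than a reproduction of the original equations.

```python
import numpy as np

def relative_chromaticity(i_obj, i_ref):
    """Relative chromaticity triplet (p'_R, p'_G, p'_B) at one pixel.

    i_obj, i_ref: intensities of the calibrated and the reference surface
    observed at the same pixel under red, green and blue illumination.
    """
    qr, qg, qb = np.asarray(i_obj, dtype=float) / np.asarray(i_ref, dtype=float)
    # Each row is one homogeneous constraint of the form
    # p'_X * q_Y - p'_Y * q_X = 0 (assumed form of the pairwise ratios).
    C = np.array([
        [qg, -qr, 0.0],   # red-to-green ratio
        [qb, 0.0, -qr],   # red-to-blue ratio
        [0.0, qb, -qg],   # green-to-blue ratio
    ])
    # The solution is the right singular vector of the smallest singular value.
    _, _, vt = np.linalg.svd(C)
    p = vt[-1]
    if p.sum() < 0:       # chromaticity coefficients are non-negative
        p = -p
    return p / np.linalg.norm(p)

# A surface that is a uniformly darker version of the reference (grey).
grey = relative_chromaticity([0.2, 0.4, 0.1], [0.4, 0.8, 0.2])
```

For noise-free input the result is simply the normalised vector of reference-normalised intensities; the SVD form mirrors the homogeneous system described in the text.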

Substitution of the relative chromaticity coefficients \(p'_{1,2} = \frac{p_{1,2}}{p^{ref}_{1,2}}\) and \(p'_{2,1} = \frac{p_{2,1}}{p^{ref}_{2,1}}\), where \(p_{c,l}\) is defined by the camera \(c=\{1,2,3\}\) and light source \(l=\{R,G,B\}\), together with the relative photometric parameter distributions \(\mu '_1 = \frac{\mu _1}{p^{ref}_{1,2}}\) and \(\mu '_2=\frac{\mu _2}{p^{ref}_{2,1}}\) from Sect. 5 into Eq. (5) instead of the absolute values:

results in the cancellation of the reference chromaticity from the normal constraint equation. Hence a constraint where both photometric and chromaticity calibration are relative to the same reference chromaticity:

is equivalent to the fundamental normal constraint of HS in Eq. (5), which is formulated in terms of absolute values that are not directly accessible. To take advantage of this equivalence, the same reference chromaticity must be used in both the photometric calibration of Sect. 5 and the chromaticity estimation described in this section.

Chromaticity describes only the relative inter-channel relationship, not the absolute intensities, which means that multiple colours map onto the same chromaticity (e.g. all greyscale values are the same in terms of chromaticity). The estimation procedure describes each set of colours with the same inter-channel relationship by a single colour from the set, specifically the one corresponding to the normalised vector \([p'_{c,R}, p'_{c,G}, p'_{c,B}]^\top \). For example, due to this intensity ambiguity, all greyscale colours map onto the RGB triplet \([\frac{1}{\sqrt{3}}, \frac{1}{\sqrt{3}}, \frac{1}{\sqrt{3}}]\), which is the normalised vector expressing inter-channel equality. It should be stressed that disambiguation of colours with the same chromaticity is irrelevant for reconstruction by CL HS. The normal constraint of CL HS in Eq. (26) is homogeneous meaning that any consistent scaling of chromaticity coefficients cancels out.

6.2 Temporal Propagation

The chromaticity calibration procedure described in the previous section is static with non-instantaneous acquisition, mapping chromaticities to pixel locations in one particular frame. For reconstruction of dynamic sequences with spatially varying chromaticity per-pixel colour information is needed for every frame. We propose to infer chromaticity in each frame of the dynamic sequence from the original single-frame calibration result using a chromaticity propagation procedure.

6.2.1 Optical Flow Based Procedure

As pre-processing, the chromaticity map of the reference frame must be aligned with the first frame of the dynamic sequence in order to form the starting point for chromaticity propagation. In the simplest case, the calibration would immediately precede the dynamic capture and the calibration shot would be roughly the same as the first frame of the sequence. Unaided perfect alignment occurs only in the case of static reconstruction of the reference frame used for chromaticity estimation. Any minor misalignment can be corrected using optical flow techniques. However, one would not wish to be limited to reconstruction of just the tailored dynamic sequence, and hence re-use of chromaticity data for dynamic sequences featuring the same object but different initial positions is desirable. The problem of larger viewpoint variation with such untailored dynamic sequences is solved by warping the calibration shot (the source) to the initial frame of the dynamic sequence (the target), provided there is a sufficient degree of overlap between the two. The warping procedure involves initialisation of corresponding feature points in both the source and target and a transformation to align the features. The nature of the transform used depends on the surface being aligned. For (near-)planar surfaces, a global homography may be sufficient, whereas surfaces with local curvatures require more sophisticated forms of warping. For alignment of non-planar surfaces, the user is required to define a coarse grid whose vertices correspond to scene features. Warping is then performed by local (piecewise) homography with bicubic interpolation. Non-planar alignment is illustrated in Fig. 12, which shows the source and target intensity images with the manually initialised feature grids superimposed and the resultant warping of the source onto the target. The approach has been found capable of coping with a visually substantial difference between the source and target. The alignment stage of the propagation process is the only part of the pipeline requiring manual interaction for feature matching. This requires minimal user effort and provides the ability to process multiple dynamic sequences with substantially varying initial poses from a single calibration result.
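For the (near-)planar case, the global homography can be estimated from the matched feature points. The sketch below uses the standard direct linear transform (DLT), which is our choice for illustration; the text does not state which estimator was used, and the function names are hypothetical. At least four correspondences with no three collinear are assumed, and coordinate normalisation (advisable for noisy data) is omitted for brevity.

```python
import numpy as np

def estimate_homography(src, dst):
    """DLT estimate of the 3 x 3 homography mapping src points to dst points."""
    rows = []
    for (x, y), (xp, yp) in zip(np.asarray(src, float), np.asarray(dst, float)):
        # Two linear constraints per correspondence on the 9 entries of H.
        rows.append([-x, -y, -1, 0, 0, 0, xp * x, xp * y, xp])
        rows.append([0, 0, 0, -x, -y, -1, yp * x, yp * y, yp])
    # The solution is the null vector of the stacked constraint matrix.
    _, _, vt = np.linalg.svd(np.asarray(rows))
    H = vt[-1].reshape(3, 3)
    return H / H[2, 2]

def apply_homography(H, pts):
    """Map 2D points through H using homogeneous coordinates."""
    pts = np.asarray(pts, dtype=float)
    hom = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return hom[:, :2] / hom[:, 2:3]

# Hypothetical feature points in the source (calibration shot).
H_true = np.array([[1.1, 0.05, 3.0], [-0.02, 0.95, -2.0], [1e-4, 2e-4, 1.0]])
src = np.array([[0, 0], [100, 0], [100, 80], [0, 80], [50, 40]], dtype=float)
dst = apply_homography(H_true, src)
H_est = estimate_homography(src, dst)
```

For non-planar surfaces, the same estimator could be applied per cell of the user-defined feature grid to obtain a piecewise warp.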

As the first stage of propagation, dense optical flow tracking is performed on the intensity images of the dynamic sequence for each camera separately. We use the efficient GPU implementation of optical flow from Sundaram et al. (2010), which has the large displacement optical flow (LDOF) (Brox et al. 2009) in its core. LDOF is a variational technique with a continuous energy functional whose optimisation is embedded into a coarse-to-fine framework allowing one to estimate large displacements even for smaller scene components (Brox et al. 2009). The ability of the algorithm to cover a wide range of displacement amplitudes permits freedom in the choice of motion speed and frame rates of the tracked dynamic scenes.

Tracking produces per-pixel flow maps (Baker et al. 2011) that are used in our work to propagate the aligned chromaticity calibration throughout the dynamic sequence, effectively establishing the mapping of the calibrated chromaticities to each frame. To ensure completeness of mapping coverage, backward flows are utilised rather than forward flows, meaning that each pixel of the current frame is assigned the chromaticity triplet (if defined) of its back-projection in the previous frame, quantised to the nearest pixel. The nearest neighbour approach is chosen over higher order interpolation in order to avoid colour blurring at region boundaries of the chromaticity map. To illustrate the process, Fig. 13 shows, for one particular camera, two adjacent intensity frames with a substantial relative motion, propagation of chromaticity between the two and the corresponding backward and forward flow maps. The process of propagation hence provides a spatially varying chromaticity estimate for each frame of the dynamic sequence.
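One propagation step can be sketched as follows, assuming the backward flow is stored as a per-pixel displacement \((\Delta u, \Delta v)\) from the current frame to the previous frame and that undefined chromaticities are marked with NaN. Both conventions and the function name `propagate_chromaticity` are assumptions made for illustration.

```python
import numpy as np

def propagate_chromaticity(prev_chroma, backward_flow):
    """Propagate a chromaticity map by one frame using backward flow.

    prev_chroma:   (H, W, 3) chromaticity triplets, NaN where undefined.
    backward_flow: (H, W, 2) displacement (du, dv) from each current-frame
                   pixel to its location in the previous frame.
    """
    h, w, _ = prev_chroma.shape
    vv, uu = np.mgrid[0:h, 0:w]
    # Nearest-neighbour quantisation of the back-projected location.
    src_u = np.rint(uu + backward_flow[..., 0]).astype(int)
    src_v = np.rint(vv + backward_flow[..., 1]).astype(int)
    inside = (src_u >= 0) & (src_u < w) & (src_v >= 0) & (src_v < h)
    out = np.full(prev_chroma.shape, np.nan, dtype=float)
    # Pixels back-projecting outside the image stay undefined (NaN).
    out[inside] = prev_chroma[src_v[inside], src_u[inside]]
    return out

# Toy example: the scene moved one pixel to the right between frames.
prev = np.arange(18, dtype=float).reshape(2, 3, 3)
flow = np.zeros((2, 3, 2))
flow[..., 0] = -1.0
curr = propagate_chromaticity(prev, flow)
```

Because each current-frame pixel looks up its own source, no holes arise from pixels that forward flow would leave unassigned, which is the coverage argument made in the text.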

6.2.2 Propagation Error

Optical flow computation is a difficult problem for real dynamic scenes and will inevitably introduce errors into chromaticity propagation. The errors have not been found prohibitive for the performance of the proposed system for several reasons. Firstly, optical flow is known to err mainly in the uniform intensity regions due to the inherent ambiguity. Since uniform intensity generally correlates well with uniform chromaticity, a frame-to-frame misprojection within a single constant intensity region may not be noticeable in the propagated chromaticity map as the chromaticity is also the same throughout the region. Secondly, the Bayesian reconstruction core of the proposed system, with its tailored depth-normal consistency prior (see Sect. 7.2 for details), offers a significant degree of robustness to various signal perturbations such as chromaticity propagation errors and cross-talk (see Sect. 7.3).

Several measures could be implemented to reduce the optical flow error, especially the drift problem for longer dynamic sequences. The most obvious would be re-initialisation of the chromaticity map at regular intervals in the sequence, particularly when the subject pose changes significantly. Further, non-sequential tracking methods can be used that exploit similarity of pose within groups of not necessarily consecutive frames in a sequence by constructing a minimum spanning tree. The technique has been successfully utilised for registration of mesh sequences involving non-rigid surface deformation (Klaudiny et al. 2012; Budd et al. 2013) but is equally applicable to 2D tracking. Finally, the propagation of chromaticity to a given frame can be made more robust by making use of the reconstructed geometry from the preceding frames. Although propagation cannot be heavily based on prior geometry projection due to the possibility of abrupt pose variation, the error relative to the chromaticity prediction by projection can certainly act as an extra term, in addition to the optical flow cost, in the energy optimisation of the per-frame chromaticity estimate.

7 Implementation

In this section, we discuss the details of CL HS implementation at various stages of the pipeline from acquisition to reconstruction. Further, cross-talk in the multi-spectral acquisition system is discussed as a source of error with a corresponding estimate of signal corruption and practical tips to minimise its influence.

7.1 Acquisition

Figure 5 shows our acquisition set-up consisting of three pairs of collocated RGB cameras \(\mathcal {C}_{c}\) where \(c=\{1,2,3\}\) and light sources \(\mathcal {S}_l\) where \(l =\{r,g,b\}\). Due to the use of RGB cameras, each collocated pair is a multi-spectral Helmholtz camera or a triplet of single-sensor-frequency Helmholtz cameras \(\mathcal {R}_{c,l}\) defined by the light spectrum transmitter collocated with the RGB camera at position c and the camera sensor of frequency channel l. Only two Helmholtz cameras of each collocated pair are used at acquisition, resulting in a set-up consisting of a total of six Helmholtz cameras: \(( \mathcal {R}_{1,r}, \mathcal {R}_{1,g}, \mathcal {R}_{2,b}, \mathcal {R}_{2,g}, \mathcal {R}_{3,r}, \mathcal {R}_{3,b} )\). Sources \(\mathcal {S}_l\) are given different frequency characteristics by using red, green and blue colour filters for maximal spectral separation. The filters were chosen to match the RGB channel spectra of the cameras as closely as possible, and no ambient light is allowed. With the set-up we simultaneously acquire three reciprocal image pairs, each characterised by two Helmholtz cameras and two RGB signal channels.

7.2 Reconstruction

Using these reciprocal pairs, constraints as in (5) are formulated. The constraints can be directly integrated into the original reconstruction pipeline proposed by Zickler et al. (2002) in the seminal paper introducing HS. However, standard ML HS is known to be prone to noise due to the lack of regional support in depth assignment. In the proposed CL HS we are inherently limited to just three reciprocal pairs, leaving room for reconstruction ambiguity. Additional intensity error may occur through channel cross-talk, which we do not explicitly compensate for in this work. Consequently, standard ML HS is inadequate in this case. We therefore adopt the Bayesian formulation of HS from our previous publications (Roubtsova and Guillemaut 2014a, 2015) where depth assignment is performed by minimising the joint sum of data (\(E_{data}\)) and prior (\(E_{prior}\)) costs over all surface points. To define a set of reconstruction variables, the surface is sampled spatially with a grid of pixels p of an orthographic virtual camera which determines the reconstruction view. The virtual camera pixel grid defines spatial neighbourhoods \(\mathcal {N}(p)\) where continuity is enforced by a prior in the optimisation process. Depth hypotheses \(d_p\) for each virtual camera pixel p are sampled along its orthographic projection ray through the scene. Bayesian HS seeks the optimum depth labelling configuration \(f_{MAP}\) in the set S:

where \(\alpha \) is a weighting factor controlling the relative contributions of the two terms. The data term is computed via singular value decomposition (SVD) of the matrix formed by the three CL HS constraints (5) acquired instantaneously with our set-up. Specifically, the term is defined as the exponential decay, with factor \(\mu = 0.2\ln (2)\), of the SVD residual quotient:

The chosen prior builds on the idea from Roubtsova and Guillemaut (2014a) of enforcing consistency between the depth d and normal \(\mathbf {n}\) estimates of neighbouring points (see that publication for details). The prior is uniquely tailored to HS, as this method inherently associates a normal \(\mathbf {n}\) with every depth hypothesis \(d_{p}\) of virtual camera pixel p via SVD. The prior minimises the consistency error between the geometric local surface curvature and the photometric normal estimates:

The depth-normal consistency prior has been shown in Roubtsova and Guillemaut (2014a) to result in the most accurate depth maps compared to one-sided (depth-based or normal-based) priors.
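For intuition about the data term, a decay factor of \(\mu = 0.2\ln (2)\) means that the cost halves each time the SVD residual quotient grows by 5. The sketch below assumes that the quotient is a non-negative number already computed from the singular values of the constraint matrix (its exact definition is not reproduced here) and that a larger quotient indicates a better-supported depth hypothesis. The function name `data_cost` is hypothetical.

```python
import math

MU = 0.2 * math.log(2)  # decay factor quoted in the text


def data_cost(quotient):
    """Exponential decay of a non-negative SVD residual quotient.

    Assumption: the cost falls from 1 towards 0 as the quotient grows,
    so well-supported depth hypotheses are cheaper.
    """
    if quotient < 0:
        raise ValueError("quotient must be non-negative")
    return math.exp(-MU * quotient)


# The cost halves for every increase of 5 in the quotient.
for q in (0, 5, 10, 20):
    print(q, round(data_cost(q), 4))
```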

The optimisation process results in a point cloud of oriented vertices whose resolution can be controlled by embedding the reconstruction core in a coarse-to-fine framework with both spatial and depthwise subdivision at each iteration. By combining the tailored depth-normal consistency prior with the coarse-to-fine approach, one can generate point clouds that are accurate and dense enough to be meshed directly, without explicit integration as post-processing. Direct meshing relies on the known proximity relationships between vertices in the reconstruction volume. If the resolution of the point cloud is insufficient for direct meshing, integration can be performed by Poisson surface reconstruction (Kazhdan et al. 2006). Note that the generated point cloud is only a 2.5D sampling of the object, with its back side occluded. Poisson surface reconstruction requires a full 3D point cloud; otherwise it is unable to close the surface, which distorts the reconstructed view. Inspired by the approach in Vlasic et al. (2009), for integration with Poisson surface reconstruction the 2.5D point cloud with the occluded back side is extended to a watertight full 3D cloud, taking normal orientation cues from the contour of the object's orthographic projection onto the virtual camera. This extension to full 3D is implemented merely to make the integration problem solvable by Poisson surface reconstruction and is not representative of the true geometry of the occluded back side. The need for such speculative point cloud completion, together with the risk that integration as a post-processing step introduces artefacts (e.g. point cloud over-smoothing), is why explicit surface integration is best avoided where possible. The relative performance of Poisson surface reconstruction and the advocated approach without explicit integration is compared on static scenes in Sect. 8.1.1 to support the statements made on the applicability of each.

7.3 Cross-Talk

Cross-talk occurs when a fraction of a signal intended for one channel is received on another channel. In the presented wavelength-multiplexed system, cross-talk arises when the RGB illumination signals excite the wrong camera sensors. Energy may thus be lost to other channel sensors or received from an unintended stimulus. Either way, the signal received on each individual channel can be distorted by cross-talk.

One way to estimate cross-talk spatially is to measure the response of the three multi-spectral Helmholtz cameras, in the reconstruction configuration, to red, green and blue light reflected from a white surface. Assuming a perfectly white surface, a signal of a given colour should in theory excite a response only in the corresponding channel. The sum of the responses on the other two channels is cross-talk. Measuring the response in the reconstruction configuration gives a spatial distribution of cross-talk, expressed as a percentage of the total signal strength, for a real-life scenario. Such a map cannot be used to correct for cross-talk at reconstruction, because the chromatic properties of the reconstructed surface will change the distribution. However, the distribution is a good indicator of the quality of the Helmholtz camera configuration.
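The per-pixel measurement described above can be sketched as follows. This is a minimal illustration under stated assumptions: images are plain nested lists of (r, g, b) tuples, pixels with no signal are reported as undefined, and the names `crosstalk_percentage` and `crosstalk_map` are hypothetical.

```python
def crosstalk_percentage(rgb, lit_channel):
    """Percentage of the total response that falls outside the lit channel.

    rgb is the (r, g, b) response of one pixel to single-colour light
    reflected from a white surface; lit_channel is 0 (r), 1 (g) or 2 (b).
    Returns None where there is no signal, since the percentage is undefined.
    """
    total = sum(rgb)
    if total == 0:
        return None
    leaked = total - rgb[lit_channel]  # sum of the other two channels
    return 100.0 * leaked / total


def crosstalk_map(image, lit_channel):
    """Apply crosstalk_percentage to every pixel of a nested-list image."""
    return [[crosstalk_percentage(px, lit_channel) for px in row] for row in image]


# Tiny made-up 1x3 image under red light (values are illustrative only).
demo = [[(200, 2, 2), (50, 1, 0), (0, 0, 0)]]
print(crosstalk_map(demo, lit_channel=0))
```

Note how the second demo pixel leaks only one intensity level yet has the same percentage as the first: a weak total signal inflates the relative cross-talk.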

Let us consider an example configuration of three multi-spectral Helmholtz cameras (one less advantageous than the actual reconstruction configuration used) that helps provide practical suggestions for a better set-up in terms of cross-talk. For each camera the cross-talk percentage is measured under two signal wavelengths (the third wavelength is the one the Helmholtz camera emits itself and is hence irrelevant). Cross-talk is represented as a spatial distribution of the leaked signal as a percentage of the total signal strength. Fig. 14 shows the six distributions in the three-camera reconstruction configuration, with the corresponding statistical metrics given in Table 1. Generally speaking, the rms cross-talk observed in this configuration is about \(1-2\,\%\) (which, with an 8-bit camera sensor, amounts to about 5 intensity levels with a single light source in the test configuration). As a general trend, the measured cross-talk percentage increases towards the edges of the frame, where the total signal strength tends to be substantially weaker and the signal-to-(cross-talk) noise ratio is therefore low. The extreme cross-talk percentage maxima given in Table 1 are not reliable measurements, as they occur at near-zero signal intensity levels.
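The arithmetic behind these figures can be checked with a short sketch: 2 % of the 8-bit range of 255 levels is roughly 5 levels. The rms helper also shows one possible way to exclude the unreliable near-zero-signal pixels; the `min_signal` threshold is a hypothetical parameter, not a value from the text, and all function names are illustrative.

```python
import math


def rms(values):
    """Root mean square of a non-empty sequence of numbers."""
    values = list(values)
    if not values:
        raise ValueError("rms of an empty sequence is undefined")
    return math.sqrt(sum(v * v for v in values) / len(values))


def rms_crosstalk(percentages, signals, min_signal=0.0):
    """RMS cross-talk percentage over pixels with total signal >= min_signal.

    min_signal is a hypothetical threshold used to drop pixels whose
    percentage is unreliable because the signal is close to zero.
    """
    kept = [p for p, s in zip(percentages, signals) if s >= min_signal]
    return rms(kept)


def percent_to_levels(percent, bit_depth=8):
    """Convert a percentage of full scale to sensor intensity levels."""
    return percent / 100.0 * (2 ** bit_depth - 1)


print(percent_to_levels(2.0))  # 2 % of the 8-bit range, in intensity levels
```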

The cross-talk percentage expresses the relative significance of signal leakage in perturbing reconstruction and is determined jointly by the local signal strength and the overall compatibility of the camera and light source RGB spectra. To minimise the effect of cross-talk at reconstruction, the signal-to-noise ratio must be kept high in the region of interest. In other words, the scene should be optimally illuminated. From the distributions presented in Fig. 14 it is clear that the blue light in the example configuration is oriented most advantageously, with the scene well lit in a clearly defined spotlight where the locally observed cross-talk percentage is under \(0.5\,\%\). The orientations of the green and red light sources are inferior.

With proper light source positioning resembling that of the blue light in the example configuration, cross-talk is unlikely to pose reconstruction challenges. In setting up the reconstruction configuration, care must be taken to ensure that the scene receives the maximum amount of light from all three light sources in order to minimise the cross-talk percentage (or, equivalently, to maximise the signal-to-noise ratio) in the reconstruction volume. Such a configuration can easily be achieved during scene framing by aligning the spatial centre of the reconstruction volume with the optical spotlight axes of all the projectors. Given such a configuration, the system can cope well with any residual cross-talk (e.g. the rms value of \(1-2\,\%\) in Table 1) by virtue of the Bayesian HS reconstruction core with its effective depth-normal consistency prior.

8 Evaluation

The methodology of CL HS is validated on real datasets, with the evaluation comprising static and dynamic scenes that present a variety of challenges.

In Sect. 8.1, the range of static objects was selected to demonstrate the accuracy of the algorithm and its ability to cope with cases of different complexity. Shown in Fig. 18, the objects are: (1) a reference chromaticity plane (“Plane”) defined by the calibration board; (2) a plaster statue of a monster head (“Monster”) of not strictly uniform chromaticity; (3) a highly specular mug (“Mug”) of reference chromaticity; and (4) a toy dog (“Slinky”), which is highly heterogeneous in terms of material, chromaticity and reflectance and shows geometric and radiometric complexity due to the fine structure, transparency, specular reflectance and pure colours of its various materials.

Having shown the correctness of geometric reconstruction for the more controlled static scenes, we subsequently validate our claim that CL HS is suitable for dynamic scene reconstruction. We are particularly interested in reconstructing such scenes with complex reflectance properties, as these are inherently challenging for conventional and photometric stereo methods. In the dynamic scene evaluation in Sect. 8.2, a range of temporal object deformations was reconstructed, featuring objects that pose distinctive geometric and photometric challenges. The objects are a highly specular laminated white sheet (“WLS”), a white glossy blouse (“Blouse”), a woollen jumper with structural detail in its approximately uniformly coloured knitwear (“Jumper”) and a face showing reflectance complexity with its spatially varying chromaticity and non-Lambertian directional component (“Face”). As will be explained in Sect. 8.2, the selection of objects covers the full spectrum of possible surface chromaticity variation, from uniform reference chromaticity to freely spatially varying.

For both static and dynamic scene reconstruction, each Helmholtz camera was radiometrically calibrated as described in Sect. 5 using a calibration board with markers to define the position of the plane in each calibration shot. Position triangulation of any three of the four markers gives an anchor point and a normal to the calibration plane defined by the board. The black localisation markers are masked out of the images together with the background outside the calibration board. Furthermore, data from more than just one pair of planes \(\varPi _j\) and \(\varPi _{j+1}\) is needed for continuous calibration coverage of the reconstruction frame. Using about 10 plane positions, we directly calibrate for the \(\mu \) distributions of three multi-spectral Helmholtz cameras \((\mathcal {R}_{1}, \mathcal {R}_{2},\mathcal {R}_{3})\) (a simplification of the more difficult problem of calibrating six single-frequency-spectrum Helmholtz cameras \((\mathcal {R}_{1,r}, \mathcal {R}_{1,g},\mathcal {R}_{2,b}, \mathcal {R}_{2,g}, \mathcal {R}_{3,r}, \mathcal {R}_{3,b})\), made possible by the assumption of identical R, G and B sensors in a single camera \(\mathcal {C}\)). Figure 15 shows the photometric parameter distributions in the region of interest for Monster used in the reciprocal constraint computation: the first column contains the directly calibrated distributions of \((\mathcal {R}_{1}, \mathcal {R}_{2},\mathcal {R}_{3})\), while the second column contains the partner Helmholtz camera distributions obtained by transfer (\(\mathcal {R}_{1}\rightarrow \mathcal {R}_{2}\), \(\mathcal {R}_{2}\rightarrow \mathcal {R}_{3}\) and \(\mathcal {R}_{3}\rightarrow \mathcal {R}_{1}\)) from the directly calibrated ones (the transfer is essential for photometric parameter consistency within a reciprocal pair, as described in Sect. 5). The distributions are shown as heat maps to illustrate the regional variation of \(\mu \) within the reconstruction frame. The obtained photometric maps are invariant for all datasets acquired in the same capture session (static datasets in the case of Fig. 15). An equivalent photometric calibration was performed for the dynamic scene capture session.
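The step of recovering the calibration plane from three triangulated markers is standard geometry and can be sketched as below. The marker coordinates are assumed to be already triangulated 3D points; the function name `plane_from_markers` is hypothetical, and the sign of the returned normal depends on the order in which the markers are given.

```python
import math


def sub(a, b):
    """Component-wise difference of two 3D points."""
    return tuple(x - y for x, y in zip(a, b))


def cross(a, b):
    """Cross product of two 3D vectors."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def plane_from_markers(p0, p1, p2):
    """Return (anchor point, unit normal) of the plane through three markers."""
    n = cross(sub(p1, p0), sub(p2, p0))
    length = math.sqrt(sum(c * c for c in n))
    if length == 0:
        raise ValueError("markers are collinear; the plane is undefined")
    return p0, tuple(c / length for c in n)


# Three illustrative markers lying in the plane z = 1.
anchor, normal = plane_from_markers((0, 0, 1), (1, 0, 1), (0, 1, 1))
print(anchor, normal)
```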

Further, reconstruction of each shot is performed from three RGB images (three reciprocal pairs) instantaneously acquired by cameras \(\mathcal {C}_1\), \(\mathcal {C}_2\) and \(\mathcal {C}_3\) under concurrent multispectral (RGB) illumination of \(\mathcal {S}_1\), \(\mathcal {S}_2\) and \(\mathcal {S}_3\) as described in Sect. 7. Throughout the evaluation we, as appropriate, compare the following reconstruction methods:

1. VH: visual hull (i.e. shape-from-silhouette);

2. ML_HS_wPhCalib: standard (maximum likelihood) Helmholtz Stereopsis with photometric calibration only;

3. ML_HS_wPh&ChromCalib: standard (maximum likelihood) Helmholtz Stereopsis with photometric and chromaticity calibration;

4. BayesianHS_w/oCalib: uncalibrated Bayesian HS;

5. BayesianHS_wPhCalib: Bayesian HS with photometric calibration only;

6. BayesianHS_wPh&ChromCalib: Bayesian HS with photometric and chromaticity calibration.

We compare integration by Poisson surface reconstruction against the proposed final mesh assembly from the cloud of oriented vertices without explicit integration on static scenes. Subsequently, the explicit-integration-free pipeline is used throughout for all dynamic scenes.

Static scene reconstruction with the fully calibrated ML HS and Bayesian HS at different levels of calibration, using Poisson surface reconstruction for final surface assembly and without explicit surface integration. Resolutions (spatial/depthwise): Plane: 3/0.5 mm; Monster: initial 1/0.5 mm, final 0.25/0.03125 mm; Mug: initial 1/0.25 mm, final 0.5/0.015625 mm; Slinky: initial 1/1 mm, final 0.25/0.0625 mm. Visual hulls are also included for reference. All meshes are rendered in flat shading (without the use of per-vertex photometric normals).

8.1 Static Scenes

This section demonstrates, both quantitatively and qualitatively, the reconstruction accuracy of the proposed framework on static scenes of varying photometric complexity.

8.1.1 Qualitative Evaluation

In Fig. 16 we qualitatively compare reconstructions of Plane, Monster, Mug and Slinky using

1. VH (all);

2. fully calibrated ML_HS, i.e. ML_HS_wPhCalib (Plane, Mug) or ML_HS_wPh&ChromCalib (Monster, Slinky);

3. BayesianHS_w/oCalib (all);

4. BayesianHS_wPhCalib (all);

5. BayesianHS_wPh&ChromCalib (Monster, Slinky).