Abstract

Cross-domain recommendations (CDR) present a viable solution and are increasingly used to address the cold-start problem. Recently, CDR methods are utilizing deep models to generate latent preferences from context vectors or rating matrices and transfer these preferences between domains. However, many of these models focus on learning latent preferences using domain-related information and often disregard preference patterns from the contrary domain. Incorporating the contrary domain preference patterns into deep models can improve the generation of more effective latent representations. Moreover, existing CDR models face challenges in effectively transferring mapped preferences between domains due to the large features disparity between them. In this study, we tackle these problems and present a novel Deep Shared Learning and Attentive Domain Mapping (DSAM) approach for CDR. Specifically, we propose a variant of Long Short-Term Memory (LSTM) called shared learning LSTM, which incorporates the learning of cross-domain preference patterns alongside domain-specific user/item embeddings derived from textual reviews to dynamically generate shared contextual representations in each domain. We further exploit a multi-head self-attentive network to match item-specific knowledge from the source and target domains into different subspaces. We aggregate this learned knowledge to predict rating scores for cold-start users in the target domain. We efficiently optimize this framework in an end-to-end fashion. Experimental results on five real-world datasets demonstrate the effectiveness of our proposed approach against various groups of recommendation models. Additionally, we provide insights to help understand the model architecture and its robustness in handling cold-start users.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Recommender systems (RSs) have grown in prominence over the last few decades with the expansion of internet services. Recommender systems are effective tools of information filtering that are prevalent due to increasing access to the Internet, personalization trends, and changing habits of computer users (Batmaz et al. 2019). Collaborative filtering (CF) methods have proven to be effective and commonly employed in the recommender system (Liu et al. 2022a; Wang et al. 2015). The fundamental premise of collaborative filtering techniques is that they use past user history and behavior to determine which products the user may like or find interesting. However, these methods are significantly less effective to recommend products for new users (the “cold start” problem) or when making recommendations in a domain where there is insufficient user behavior data, which limits the performance of recommendation systems. One potential solution to address the cold-start problem is cross-domain recommendation (CDR). CDR is an effective method for handling cold-start problems by transferring knowledge from a source domain with sufficient data for modeling to facilitate prediction in the target domain (Chang et al. 2021). For instance, an earlier research study introduced a novel collective matrix factorization technique that enables knowledge transfer from a dense auxiliary domain to a sparse target domain (Singh & Gordon 2008). This method jointly learns relation matrices and shares user latent factors across domains to improve predictive accuracy.

In recent years, the influence of deep learning is increasing in the recommendation systems research area. The hidden layer architecture of deep learning models can extract latent features, capture nonlinear user–item interactions or be able to make nonlinear mappings and infer relationships among features (Khan et al. 2021). Because of these attractive features, deep learning has become a superlative choice for researchers’ interest in using it for cross-domain recommender systems. Several existing works (Elkahky et al. 2015; Lian et al. 2017) indicate the potency of deep learning in catching generalizations and generating quality recommendations on cross-domain platforms. Amidst advancements in the field, there has been a notable research effort focused on increasing the understanding of users’ preferences. Customer reviews, which are now commonplace on many websites, have the valuable potential to understand consumer decisions or preferences. These reviews carry sentiment information and characterize users and products at a fine-grained level (Guan et al. 2019; Ni et al. 2019). Thus, exploiting user-generated reviews provides an explanation in refining the recommender system decision-making processes. For example, a study introduced a deep fusion model that integrates review texts, item contents, and rating matrices to learn latent semantic representations across two domains (Fu et al. 2019). This approach effectively addresses challenges related to the cold start and data sparsity in the target domain. Another research introduced a framework for cross-domain recommendation involving an aspect transfer network to model user preferences at the aspect-level derived from reviews (Zhao et al. 2020a). Additionally, the authors leveraged auxiliary reviews from similar neighbors to enhance users’ aspect representation.

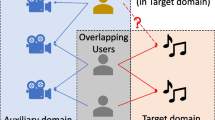

There are two core mechanisms of a cross-domain recommendation system. One involves generating the latent preferences using context vectors, rating matrices or both that identify as mapping representations of users and items in their respective domains. The other mechanism is transfer learning, which emphasizes transferring the learned users’ preferences from one domain to the other. Existing approaches, proposed by (Fu et al. 2019; Man et al. 2017; Yuan et al. 2019), first learn the user–item latent factors in the source domain and then transfer these factors to the target domain to match the latent spaces and learn user preferences in the target domain. On the other hand, models introduced by (Li & Tuzhilin 2020; Liu et al. 2022a; Zhao et al. 2020a) learn the user–item latent factors from domain associated features and then map this knowledge between domains via shared network weights. During the latent preferences learning phase, these methods primarily rely on domain-related information to capture user–item latent preferences. However, we believe that incorporating cross-domain preference patterns with the domain specific embeddings during the learning phase can capture shared latent preferences of different users of different domains. For example, by leveraging user preferences from the target domain and integrating them with the source domain context embeddings during the source domain learning phase (or vice versa, i.e., leveraging user preferences from the source domain during the target domain learning phase), we have the potential to enhance the generation of more effective latent representations. Furthermore, it is important to note that most existing CDR methods focus on using mapping weights (Li & Tuzhilin 2020; Zhao et al. 2020) or functions (Fu et al. 2019; Man et al. 2017) to transfer learned preferences between domains. We argue that these direct approaches may lead to a drop in performance due to variations in feature alignments across domains (Zhang et al. 2021; Zhang et al. 2022; S. Zhao et al. 2020b). To illustrate this point, consider Fig. 1, where reviewers express a positive sentiment “intriguing” (highlighted in orange) in both domains when referring to the "plot" feature in the source domain and the "track" feature in the target domain. It can be observed that the feature spaces of these two domains are dissimilar. Particularly in cases involving large datasets, deriving deep representations without explicitly matching domain feature space may not be good enough for domain adaptation (Long et al. 2016). When it comes to implementing the cross-domain recommendation scenario, both mechanisms—learning latent preferences and transferring them between domains—are highly sensitive, and their effective application ensures optimal recommendation performance.

An Illustration of Feature Alignment Variations Across Domains. Reviewers express positive sentiment using the term "intriguing" to describe the feature (movie plot) in the source domain and feature (music track) in the target domain. This highlights the disparity between features (plot and track) across different domains

To address the aforementioned CDR problems, we propose a novel cross-domain recommendation framework named Deep Shared Learning and Attentive Domain Mapping (DSAM). DSAM aims to dynamically generate shared contextual representations within each domain while leveraging an attentive domain feature mapping strategy. This approach is designed to enhance the cross-domain recommendations accuracy and mitigate the challenges associated with the cold-start problem. Our proposed solution consists of two main steps. In the first step, DSAM employs a novel shared learning Long Short-Term Memory (LSTM) deep network to obtain the underlying shared user–item mapping representations. This network incorporates cross-domain preference patterns alongside domain specific user–item embeddings into the process of modeling reviews context. In the second step, DSAM takes into account the item feature contexts from both the source and target domains. It then employs a multi-head self-attentive network, which serves the purpose of reducing variations in features alignments and allowing their mapping into different subspaces. This ultimately helps to improve the transferability of mapped knowledge between the two domains. Following these two steps, DSAM implements a simple predication layer to estimate rating accuracy in the target domain. We conducted extensive experiments on five benchmark datasets and compared our approach with several state-of-the-art methods. The results demonstrate that DSAM achieves the best performance in both rating prediction and top-n ranking tasks. Our contributions can be summarized as follows:

-

We propose a shared learning LSTM network that incorporates user–item embeddings and cross-domain preference patterns using textual reviews within the context learning process to generate underlying shared user–item mapping representations in each domain.

-

We introduce a novel attentive domain feature mapping layer that leverages the domain knowledge (item features) and effectively maps features into different subspaces to reduce variations in features alignment.

-

We conduct extensive experiments on five real-world datasets. We demonstrate our proposed method’s capability to handle cold-start users, and we provide insights into the various architectural components employed.

2 Related work

We briefly discuss the three recommender system research areas related to our work—traditional cross-domain recommendations, deep learning-based cross-domain recommendations and review-aware recommendations.

2.1 Traditional cross-domain recommendations

Cross-domain recommendation emerges as an approach helpful for mitigating the long-standing cold-start problem (Berkovsky et al. 2008; Bi et al. 2020; Fu et al. 2019). Researchers primarily focus on three fundamental aspects (domain analysis, overlapping scenarios and recommendation task) when building cross-domain recommender systems. Domain analysis involves inter-level system recommendations (Li et al. 2009), for example, recommending movies on Netflix based on a user’s interest on Amazon e-books and intra-level recommendations (Salah et al. 2021) that relate to recommendations made within the same system. Existing studies assume different scenarios in which user/item sets fully overlap, partially overlap, or not overlap across domains (Liu et al. 2022a; Liu et al. 2022; Pan & Yang 2013). In the realm of cross-domain recommendation tasks, the approaches either focus on leveraging data from two or multiple domains (Hu et al. 2018a; Wang et al. 2017) or transferring knowledge from a rich domain to a sparse domain (Lu et al. 2013; Zhang et al. 2018). An earlier cross-domain study proposed a collective matrix factorization (CMF) model that collectively factorizes rating matrices of two domains and shares the embeddings of users across domains (Singh & Gordon 2008). Another model CDTF improved the cross-domain recommendation task by learning triadic interactions between users, item and domains to provide domain-specific user preferences for items (Hu et al. 2013). Another example, where the authors enhanced the matrix factorization by embedding the users’ rating behaviors and then transferred neighborhood users’ features between domains in the mapping process (Wang et al. 2018). A classic method Codebook Transfer emphasized on abstracting user–item rating patterns in the form of a codebook from a dense auxiliary domain and transferring them to a sparse target rating matrix using a constrained tri-matrix factorization (Li et al. 2009). A different approach takes into account data heterogeneity and integrates both user and item knowledge in auxiliary data sources using a principled matrix-based transfer learning framework (Pan et al. 2010). However, these conventional approaches are capable of extracting linear mapping patterns based on known features and tend to be less effective when dealing with unseen or new user/item features.

2.2 Deep learning-based cross-domain recommendations

The majority of existing research concentrates on conventional algorithms like matrix factorization and memory-based collaborative filtering, which can only uncover linear relationships. But gradually, the focus of research is changing toward using deep learning methods to build cross-domain recommender systems. EMCDR, an embedding and mapping framework, is designed to learn users and/or items latent factors, respectively, using matrix factorization and Bayesian Personalized Ranking (Man et al. 2017). Unlike EMCDR that maps common users’ preferences across domains, the PTUPCDR method learns meta-generated personalized preferences to achieve personalized transfer of preferences for each user (Zhu et al. 2022). It subsequently utilizes a Multi-Layer Perceptron-based mapping function to capture the coordinate nonlinear relations across domains. A hybrid CCCFNet model employed factorization method to tie collaborative and content-based filtering together and further unified it into a multi-view neural network (Lian et al. 2017). A method called collaborative cross-networks (CoNet) implemented multi-layer feedforward networks to transfer dual knowledge across domains by introducing cross-connections from one base network to another and vice versa (Hu et al. 2018a). Later, the authors designed a novel method MTNET with unstructured text for cross-domain recommendation (Hu et al. 2018b). The proposed model can attentively extract useful content via a memory network (MNet) and can selectively transfer knowledge from across domains by a transfer network (TNET) (Hu et al. 2018b). Another methodology DARec leveraged the domain adaptation concept and transferred the learnt shared rating patterns of the same user in different domains using adversarial training (Yuan et al. 2019). Among the studies of dual transfer learning, DDTCDR model adopted novel latent orthogonal mapping to extract user preferences over multiple domains while preserving relations between users across different latent spaces (Li & Tuzhilin 2020). The model utilized autoencoder network to capture the latent essence of feature information. In most of these studies, recommender systems relied on implicit or explicit data as the primary source to predict users’ preferable rating scores. However, due to spareness in data, these systems are inadequate to gain achievable accuracy. Additionally, these data cannot reflect fine-grained users’ opinions. As a result, these methods fall short to provide explanations for their recommendations (Guan et al. 2019).

2.3 Review-aware single domain and cross-domain recommendations

Textual reviews have proven to be a notably crucial data source in a manner of abstracting detailed user opinions, demonstrating their effectiveness in review-aware recommender systems for modeling fine-grained user–item interactions (Liu et al. 2021b). Capturing fine-grained information improves the understanding of user preferences for items and offers explainable recommendations. One of the pioneer works employed a topic modeling technique Hidden Factors Topics (HFT) to learn latent aspects from either user or item reviews (McAuley & Leskovec 2013). Other studies (Diao et al. 2014; Ling et al. 2014) concentrated on the joint learning of reviews and ratings to improve recommendation performance. A different technique adopted an initialization strategy that involved developing a topic model from review data and then initializing the user–item latent factors within the topic space of the topic model (Peña et al. 2020). Further the methodology optimized these initializations with factorization machine algorithm, which resulted in faster convergence of the algorithm. All these studies are based on lexical similarity. However, the users have different tendencies in writing a product review meaning the words contain inherent ambiguity (Hyun et al. 2018). Consequently, such models encounter difficulty in completely understanding the unstructured review data.

With the help of deep learning technology, not only the content (words) but also the associated rich elements can be learned from the textual reviews. There is significant ongoing research in the direction of deep learning methods applied to learning textual reviews for recommendation systems. A Deep Cooperative Neural Networks (DeepCoNN) model jointly modeled user behaviors and item properties from text reviews using Convolutional Neural Networks (CNNs) (Zheng et al. 2017). On top of the networks, the authors framed a factorization machine shared layer to connect user–item representations and obtain final ratings. In subsequent research, the DeepCoNN model was expanded by incorporating a transform layer into the source network, which approximates the user and item review texts (Catherine & Cohen 2017). Unlike DeepCoNN, this model did not utilize reviews during validation or testing phase and still maintained reasonable accuracy. A new approach proposed a deep hybrid model to provide interpretable recommendation via fusing rating embeddings with textual features (Wang et al. 2021b). Other research studies have demonstrated the usefulness of review by providing explanations at the review level (Chen et al. 2018) or by taking into account various properties of reviews (Wang et al. 2021a) to facilitate effective decisions about recommending the items. Next research methodology gained interpretability of captured semantic representations from review text using attention mechanism with CNN (Seo et al. 2017). However, these efforts have concentrated solely on single-domain recommendations, disregarding user preferences across other domains. Moreover, single-domain recommendation systems encounter challenges such as scalability and the ability to recommend items to new users due to limited data availability.

Leveraging reviews in cross-domain recommender systems has attracted increasing research interests due to their tendency to reflect diverse user opinions on item attributes and establish accurate recommendation systems. RC-DFM, a review and content-based deep fusion model, integrated novel Stacked Denoising AutoEncoder (SDAE) to effectively fuse review text, item content and rating matrix in both auxiliary and target domains (Fu et al. 2019). RC-DFM matches latent space vectors across domains to predict preferences for cold-start users in the target domain. Likewise, another study unified ratings and reviews across multiple domains and was able to automatically generate cross-domain reviews for cross-domain recommendations (Doan & Sahebi 2019). While CD-DNN model jointly learns reviews and item metadata in both domains using a parallel deep neural network (Hong et al. 2020). Extending the research, a novel CATN model leveraged aspect level preference matching by correlating learnt aspects across domains with an attention mechanism (Zhao et al. 2020a). This cross-domain mapping is learned considering there are overlapping users between source and target domain. While DSLN focused on scenario when domains have minimum or no overlapping users (Liu et al. 2022a). The model is designed to extract and select useful embedding learnt from user reviews in the auxiliary domain to transfer to the target domain using CNN-based text processor and denoising autoencoder. A recent work proposed collaborative filtering with attribution alignment model for solving review-based non-overlapped cross-domain recommendation (Liu et al. 2022b). The proposed model aimed to reduce domain discrepancy by adopting horizontal and vertical alignment of embeddings obtained from text review and historical rating. However, these review-based cross-domain methods fall short in fully capitalizing on the potential to capture shared latent preferences of different users between domains. Additionally, these models often overlook the variations in feature spaces between domains when transferring mapped knowledge from the source to the target domains.

Different from these methods, our proposed DSAM solution focuses on exploiting the cross-domain preference patterns from textual review embeddings. This information is then incorporated into a novel shared learning LSTM network alongside domain specific user–item embeddings to extract shared representations. Ultimately, this approach helps in learning the underlying preferences of different users across the two domains. Moreover, DSAM leverages domain knowledge (item features) from both the source and target domains and uses an attention mechanism to learn these features, reducing variations in domain feature spaces and enhancing the cross-domain transferability process.

3 Proposed method

In this section, we formulate the cross-domain recommendation (CDR) task, provide an overview of proposed DSAM model, and then discuss the specifics of the DSAM components in detail.

3.1 Problem formulation

The notations used in our model are listed in Table 1. In CDR, there are two distinct domains: the source domain (denoted as \(d_{s}\)) and the target domain (denoted as \(d_{t}\)). Each of these domains has a set of users \(U = \left\{ {u_{1} , u_{2} , u_{3} , \ldots ., u_{m} } \right\}\), and a set of items \(V = \left\{ {v_{1} , v_{2} , v_{3} , \ldots ., v_{n} } \right\}\), where \(m\) represents the total number of users, and \(n\) represents the total numbers of items in each domain. Both source and target domains are associated with user–item interaction information, which includes ratings \(\left( {y_{u,v} } \right)\), user reviews \(\left( {rev_{u} } \right),\) and item reviews (\(rev_{v}\)). Each item in these domains is associated with a set of item content/features (e.g., title and description) represented as \(IC = \left\{ {ic_{1} , ic_{2} , ic_{3} , \ldots ., ic_{k} } \right\}\). The overlapping users between two domains can be given as \(U^{o} = U^{s} \cup U^{t}\). The users that do not have any interactions (ratings and reviews) with items, we denote as a cold-start users \(U^{cs}\). The goal of the DSAM is to (a) capture underlying shared representations of two domains using a novel LSTM network that introduces the learning of cross-domain preference patterns into the process of modeling domain specific user–item embeddings (b) map and project all the item-specific features from source and target domains into different subspaces through attention mechanism (c) enhance transferability and recommendation accuracy in the target domain by aggregating hidden shared representations from (a) and domain interaction attentive features from (b). To formalize the cross-domain recommendation problem, we define it as follows:

-

Input: The input in each domain consists of domain-specific information with (\(U, V, rev_{u} , rev_{v} , y_{u,v} ,{ }IC\))Footnote 1 and the preferences patterns \(I_{uv}\) learned from cross-domain data.

-

Output: A prediction function that estimates the likelihood \(\hat{y}_{u,i}\) that a user \(u\) will interact with an item \(v\). This prediction is particularly important for findings ratings for the cold-start users \(U^{cs}\), who do not have any interaction history in the target domain.

3.2 Deep learning-based cross-domain recommendations

The complete architecture of DSAM is shown in Fig. 2, which includes two domains: the source domain and the target domain. In the pre-step, we combine all the reviews written by the user \(rev_{\left( u \right)}^{*}\) into a single document \(D^{u*}\) and all the reviews written for an item \(rev_{\left( v \right)}^{*}\) into a single document \(D^{v*}\). Similarly, we combine the item features and represent with a unified piece of text into a single document \(DC^{v*}\). \(\left( * \right)\) indicates the source or the target domain. Each domain processes these user–item review documents in context modeling to generate latent representations of users and items. Furthermore, within each domain, we utilize the item feature documents to establish strong connections across domains. This is achieved by mapping domain-specific knowledge through different subspaces, enabling the efficient transfer of mapped representations from one domain to the other.

Each domain structure consists of three major components: Embeddings and interaction learning layer, Shared learning LSTM layer, and Attentive domain feature mapping component. Within the embeddings and interaction learning layer, we first adopt word embedding method to generate corresponding embedding representations of each group of reviews. \(e_{u}^{s}\) represents user-embeddings and \(e_{v}^{s}\) represents item-embeddings in the source domain. Correspondingly, \(e_{u}^{t}\) and \(e_{v}^{t}\) represent user and item embeddings in the target domain. Likewise, we apply word embedding to generate embedding representations from item feature documents with \(ce_{v}^{s}\) representing item feature embeddings in the source domain and \(ce_{v}^{t}\) representing item feature embeddings in the target domain. Next, in the interaction learning phase, we generate interaction matrix \(I_{uv}^{s}\) from source domain embeddings \(\{ e_{u}^{s}\) and \(e_{v}^{s}\)} and \(I_{uv}^{t}\) from target domain embeddings \(\{ e_{u}^{t}\) and \(e_{v}^{t}\)}. These domain-specific review embeddings, along with the cross-domain interaction matrix, are then input into a shared learning LSTM layer. To elaborate, in the source domain, the LSTM network is employed for both user and item modeling. For the user model, the LSTM network takes user embeddings \(e_{u}^{s}\) and target domain matrix \(I_{uv}^{t}\) as inputs to learn user latent representations. Conversely, for the item model, the LSTM network takes the item embeddings \(e_{v}^{s}\) and the same target domain matrix \(I_{uv}^{t}\) as inputs to learn item latent representations. The vector formed interaction matrix can influence the process of learning review embeddings within the LSTM network. It has potential to select the shared key information to extract the useful user latent representations \(h_{u}^{*}\) and item latent representations \(h_{v}^{*}\) in both the source and target domains. We obtain the final latent representations \(Z^{s}\) in the source domain and \(Z^{t}\) in the target domain by concatenating user and item representations into a single feature vector. Within the attentive domain feature mapping component, we input the item feature embeddings \(\{ ce_{v}^{s}\) and \(ce_{v}^{t}\)} of both the domains and implement a multi-head self-attention mechanism to map these features. In each network layer, the attention mechanism learns the domain features, and various combinations can be explored using the multi-head, effectively mapping the features into different subspaces. With this approach, a different order of combinatorial attentive features can be modeled say \(Z_{attn}\). The output of this component is further used with the user–item representation vector \(Z^{*}\) to make final prediction of the rating scores \(\hat{y}_{u,v}^{*}\). In the following sections, we discuss each of these components in detail.

3.3 Embedding and interaction learning layer

In the initial step of our text analysis pipeline, we handle the text corpus by converting into a representable vector form. Each text corpus contains a sequence of words \(\left( {w_{1} , w_{2} , w_{3} , \ldots ..w_{K} } \right)\), and the word embedding layer maps this sequence of words to their respective \(d\)-dimensional distributed vectors. This mapping allows us to encode the text in a way that captures meaningful representations and relations. In our methodology, we use word2vec (Mikolov et al. 2013), a distributed representative word embedding method to capture semantic encodings of reviews text and domain features (item content). Following the approach outlined in the paper by (Zheng et al. 2017), we gather all reviews \(rev_{u}^{s}\) written by a user \(u\) in the source domain \(s\) and combine them into a single document \(D_{1:K}^{us}\), consisting of \(K\) words. Similarly, we aggregate all reviews \(rev_{v}^{s}\) written for an item \(v\) in the source domain \(s\) into a single document \(D_{1:L}^{vs}\). Likewise, we follow a parallel procedure for the target domain. We create user and item documents from reviews \(rev_{u}^{t}\) and \(rev_{v}^{t}\) in the target domain, resulting in \(D_{{1:K^{\prime}}}^{ut}\) and \(D_{{1:L^{\prime}}}^{vt}\), respectively. These documents are then subjected to a pretrained word2vec algorithm, which takes the document let say \(D_{1:K}^{u}\) as input and generates corresponding vector representations for each word \(w_{{j \in \left\{ {1:K} \right\}}}^{u}\) in the document. By concatenating these word vectors, we construct an embedding matrix \(EM_{1:K}^{u}\) for the user \(u\) as follows:

A similar process is applied to item features text corpora, where we combine all item features \(IC\) of an item \(v\) in the source domain \(s\) into a single document \(DC_{1:P}^{vs}\) and all item features of an item \(v\) in the target domain \(t\) into a single document \(DC_{1:Q}^{vt}\). These documents are then processed by the word embedding algorithm to produce vector representations for each word, e.g., \(w_{{j \in \left\{ {1:P} \right\}}}^{v}\) in the document. Again, we concatenate these word vectors to create an embedding matrix \(EM_{1:P}^{v}\) for further analysis.

Review Embedding Layer. We simply perform a look-up operation in an embedding layer that projects each word from the review documents \(D_{1:K}^{us}\), to its embedding matrix \(EM_{1:K}^{u}\) to obtain embedding representations \(e\). This is defined as \(e_{u}^{s} = \left\{ {e_{1, } e_{2, } e_{3, } \ldots ..e_{l } } \right\}\) user embeddings in the source domain, where \(e_{i, }\) \(\in\) \({\mathbb{R}}^{d}\), \(l\) is the document length and \(d\). is the embedding dimension. We perform a similar process to obtain item embeddings \(e_{v}^{s}\) in the source domain, as well as user embeddings \(e_{u}^{t}\) and item embeddings \(e_{v}^{t}\) in the target domain.

Interaction Learning Layer. We apply a technique that involves learning the relationships between users and items separately within each domain to capture shared representations of these domains. This lay performs a simple inner product to capture user–item interactions. In the source domain, the layer takes user embeddings \(e_{u}^{s}\) and item embeddings \(e_{v}^{s}\) and computes their inner product, resulting in an interaction matrix denoted as \(I_{uv}^{s}\). Likewise, in the target domain, the layer uses user embeddings \(e_{u}^{t}\) and item embeddings \(e_{v}^{t}\) to calculate interaction matrix as \(I_{uv}^{t}\)\(.\) The equations for these computations are as follows:

Item Feature Embedding Layer. We use an embedding layer to retrieve the representation of each word from the item content documents \(DC_{1:P}^{v}\). We perform a look-up operation to project each word onto its corresponding embedding matrix \(EM_{1:P}^{v}\) to obtain item feature embedding representations, denoted as \(ce\). This is defined as \(ce_{v}^{s} = \left\{ {ce_{1, } ce_{2, } ce_{3, } \ldots ..ce_{p} } \right\}\) embeddings in the source domain and \(ce_{v}^{t}\) item feature embeddings in the target domain.

3.4 Shared learning LSTM

LSTM networks are widely employed in text modeling because of its advantages for extracting effective serialization semantic information (Xu et al. 2017; Yang et al. 2017). Several exiting works have introduced an LSTM layer to handle contextual modeling of review text, but may be restricted by (1) these methods (Da’u and Salim 2019; Li et al. 2016, 2018; Mohd Aboobaider 2021) are mainly designed for single-domain recommendation task, meaning they may not fully leverage user preferences learned from rich and diverse domains (2) While some methods (Anwar et al. 2023; Doan & Sahebi 2019; Wang et al. 2019) do employ LSTM for CDR, they tend to rely solely on domain-related information to capture latent user–item preferences from text reviews. Different from these methods, we propose a novel technique called “shared learning LSTM (SL-LSTM)”, which introduces the cross-domain preference patterns (interaction matrix) into the process of modeling contextual review embeddings. Given our understanding, reviews encapsulate rich semantic information such as the possible explanation of the users’ preferences (Wang et al. 2021a). Thus, by leveraging user preferences from the target domain and integrating them with the source domain embeddings during the source domain learning phase (or vice versa) can generate more effective user preferences. The objective of this layer is to enhance user–item representations by taking advantage of the information available across domains, resulting in improved recommendations.

Our proposed design, the Shared Learning LSTM (SL-LSTM), differs from the standard LSTM network. While the standard LSTM has three gates (input gate, forget gate, output gate) to regulate information flow, the SL-LSTM, as depicted in Fig. 3, is designed with six gates. The SL-LSTM takes two inputs at each timestep \(\left( T \right)\). The first input is the domain respective embeddings, where \(x^{(T)} = e_{\alpha }^{s}\) in the source domain and \(x^{\left( T \right)} = e_{\alpha }^{t}\) in the target domain, where \(\alpha\) represents entity (user or item). The second input is the cross-domain interaction matrix, where \(p^{\left( T \right)} = I_{uv}^{t}\) in the source domain and \(p^{\left( T \right)} = I_{uv}^{s}\) in the target domain. In essence, the extent to which cross-domain interaction matrix are learned at each time step directly impacts the vectors representing the review words. This matrix into the LSTM cell controls the key information in reflection with the user choices in the other domain, subsequently, leads to the generation of shared hidden user–item representations. SL-LSTM has six gates—two input gates \(i_{x}^{\left( T \right)}\) and \(i_{p}^{\left( T \right)}\), two forget gates \(f_{x}^{\left( T \right)}\) and \(f_{p}^{\left( T \right)}\), and two output gates \(o_{x}^{\left( T \right)}\) and \(o_{p}^{\left( T \right)}\). The additional input, forget and output gates control the flow of information of the cross-domain interaction matrix. For each sequence at the timestep \(T\), the input gate \(i_{p}^{\left( T \right)}\), the forget gate \(f_{p}^{\left( T \right)}\) and the output gate \(o_{p}^{\left( T \right)}\) adopt functionality as standard LSTM and control the flow of these interaction matrix, while the input gate \(i_{x}^{\left( T \right)}\), the forget gate \(f_{x}^{\left( T \right)}\), and the output gate \(o_{x}^{\left( T \right)}\) attempt to balance the input information by determining the extent of learning interaction matrix with the embedding sequences. The workflow of the proposed can be given as follows:

where \(\sigma \left( . \right)\) is sigmoid activation function formulated as \(\sigma \left( x \right) = \frac{1}{{\left( {1 + e^{ - x} } \right)}}\), \(\odot\) denotes element-wise multiplication, and \(W_{*}\) and \(U_{*}\) represents weights matrices learned with the gates and memory states.

The cell gates and states regulate the cross-domain interaction matrix \(p^{\left( T \right)}\) and the current sequence input \(x^{\left( T \right)}\) by adding, updating, or removing the information. They produce an output \(h^{\left( T \right)}\) that represents a sequence of hidden representations \(\left\{ {h^{1} , h^{2} , \ldots . h^{\left( T \right)} } \right\}\) at the current timestep \(\left( T \right)\).

During the input gate phase, the model quantifies what new information to store in the cell state. The interaction input gate \({i}_{p}^{(T)}\) decides how much the interaction pattern to transfer to the cell state as given by (4). This sigmoid layer is computed with \({p}^{(T)}\) and \({h}^{(T-1)}\), where \({h}^{(T-1)}\) can be regarded as previous state information. Another input gate \({i}_{x}^{(T)}\) determines how much new sequence information \({x}^{(T)}\) to add the cell state and also controls the extent of interaction pattern learning \({i}_{p}^{(T)}\) with the current sequence input using sigmoid function (5). Next the tanh layer (10) creates a vector of new candidate values \(c^{\prime (T)}\) to add to the cell state. These two layers (5) and (10) are combined to update the cell state as shown with the second term in (11).

In the forget gate phase, the model decides whether to retain or forget the information from the previous timestep. The interaction forget gate \(f_{p}^{\left( T \right)}\) decides which interaction pattern to transfer to forget gate (6). The learning factor of \(f_{p}^{\left( T \right)}\) is integrated into another forget gate \(f_{x}^{\left( T \right)}\) to balance the trade-off between previous state information and the current sequence input (7). This sigmoid regulation is combined with previous state information to update the cell state as shown with the first term in (11).

The model output gate computes the hidden state from the input sequences to obtain cell state as the output. The interaction output gate \(o_{p}^{\left( T \right)}\) controls the information flow of patterns to transfer to output gate (8). This information is used into model’s another output gate \(o_{x}^{\left( T \right)} { }\) to select the degree of interaction pattern learning and the aspect of current sequence input should flow from the current cell state to output (9). Finally, we input the cell state in the tanh layer and multiply it with output of the sigmoid gate \(o_{x}^{\left( T \right)}\) to generate the contextual hidden representations \(h^{\left( T \right)}\) as in (12). To sum up, this novel SL-LSTM layer models domain-specific embeddings with the cross-domain interaction matrix within the LSTM cell to dynamically learn shared hidden representations of users and items in both the source domain as shown with (13) and the target domain as shown with (14).

Given the user latent representations and item latent representations, we calculate the pairs differently in the source domain as in (15) and in the target domain as in (16).

3.5 Attentive domain feature mapping

The key issue in transferring knowledge across domains lies in the dissimilarity of feature spaces. This dissimilarity adds complexity when attempting to map the feature spaces of two distinct domains. When we perform direct mapping or apply mapping weights in such dissimilar feature spaces, the likelihood of introducing transfer errors significantly increases. In short, the larger the variations in feature spaces between domains, the wider the gap in transferability. Reducing these variations becomes important to enable effective domain adaptation when transferring knowledge between domains. We handle this problem by employing a new multi-head self-attention mechanism to learn the item content features. Item content has also been proven to be an aid to improve the performance of recommender systems (Fu et al. 2019; Wang et al. 2015). The multi-head self-attention network (Vaswani et al. 2017) has demonstrated remarkable performance and shown superiority in extracting multiple relations in context word analysis. More recently, it has been successfully applied to various NLP tasks, including sentiment analysis (Xi et al. 2020), text summarization (Li et al. 2019), and relation extraction (Liu et al. 2021a). Here, we extend this technique to improve domains knowledge by mapping different feature fields between domains. As discussed above we selected features \(IC\) say title and description for each item \(v\) in the source and target domain. We combine all the features for item to prepare the item content document \(DC\) and then obtained the embedding vectors \({ce}_{v}^{*}\) and \({ce}_{v}^{\sim *}\) to represent these documents using the word2vec embedding method. * represents one domain (either source or target) and \(\sim *\) represents opposite domain. To enable these embedding features alignments across domains, we represent feature as

where \(q\) and \(r\) represent item features from source and target domain, respectively. For the feature context mapping, we adopt a key-value attention mechanism to extract feature combinations that are meaningful. Then, we find the relation between features \(a\) and \(b,\) using a specific attention head \(h\) as follows:

In above equations, \(\gamma\) is an attention function used to measure the similarity between the item features \(a\) and \(b\). \({W}_{Q}\) represent the Query transformation matrix and \({W}_{K}\) represent the Key transformation matrix. Our approach involves a straightforward inner product on these features to transform the initial embedding space into an attentive new space. Consequently, the representation of feature \(a\) is then updated within the subspace \(h\) by combining all relevant features based on coefficients \({\eta }_{a,b}^{h}\):

In (20), \({W}_{V }^{h}\) denotes the value transformation matrix. \({z}_{va}^{h}\) is the result of the combination of the feature m and its associated features under head \(h\), that also signifies a new combinatorial feature learned through this approach. Furthermore, a feature is likely to participate in different combinatorial features and can be achieved using multiple heads. Such that each head creates different subspaces and learns distinct feature interactions separately. We aggregate all the distinct features learned in different subspaces as shown in (21):

In above equation, \(\oplus\) symbol denote the concatenation operator, and \(H\) as the total number of heads. We incorporate standard residual connections in the attention network as given by (22) to retain previously learned distinct features within different subspaces (that also includes 1st order features).

In the above equation, \(ReLU\) is a nonlinear activation function and \({W}_{R}\) is the residual projection matrix for mismatch dimension. This interaction layer enables the transformation of each feature \(({ce}_{va})\) into a new attentive high-order feature representation, denoted as \({Z}_{attn}\). As a result, we can create feature distribution between the source domain and the target domain similarly under each attention head of this network.

3.6 Prediction layer

Here, we make the predictions by leveraging the mapped domain features \({Z}_{attn}\) through multi-head attention layer and hidden representations \({Z}^{*}\) obtained through shared learning LSTM layer as follows:

where * represents source or target domain and \({\widehat{w}}_{g}^{*}\) represents the global bias.

4 Experiments

We conduct the empirical study to answer the following research questions: RQ1 How our proposed DSAM approach performs compared to state-of-the-art recommender systems? RQ2 How do different hyperparameter settings affect the performance of DSAM? RQ3 What are the effects of the different components of DSAM on its performance? RQ4 Can DSAM handle the cold-start problem in the target domain?

4.1 Experimental settings

Datasets. We evaluate our DSAM model on multiple categories on the publicly available AmazonFootnote 2 dataset (Ni et al. 2019), widely used for evaluating cross-domain recommendation systems. We select eight categories to construct five (source \(\to\) domain) pairs: D1: Books \(\to\) Movies (B \(\to\) M), D2: Musical Instruments \(\to\) Digital Music (MI \(\to\) DM), D3: Movies \(\to\) Musical Instruments (M \(\to\) MI), D4: Home & Kitchen \(\to\) Office Products (HK \(\to\) OP), and D5: Toys Games \(\to\)-Video Games (TG \(\to\) VG). These sets contain multiple attributes including ratings, review text, and item meta-data. For our experiments, we used attributes—reviewer ID, product ID, review texts, ratings, and titles and descriptions from meta-data.

The dataset statistics are detailed in Table 2, revealing that D1 and D4 are the larger pairs among the categories. To assess our model’s performance on smaller datasets with a very few target data, we include the D2 set. For each pair, we select source domain with a higher number of users and the other as target domain. This approach replicates real-world scenarios where the source domain typically contains a significantly larger number of users interactions than the target domain. Furthermore, the data in the target domain are often sparser compared to the source domain, with all datasets displaying a high degree of sparsity. This observation emphasizes the importance of leveraging text content to mitigate data sparsity and enhance recommendation performance.

In the data preprocessing step, we apply a few filtering tasks across the cross-domain data pair and then carry out the required pre-processing steps to handle the review texts. Except for the D2 target data, the number of reviews per user and per item exceeds 5, as illustrated in Table 2. We apply filtering to users and items with total ratings and reviews less than 5 in all cross-domain scenarios except for the D2 case. We randomly sample 50% of the overlapping users as the cold-start users and remove their interactions (ratings and reviews) from the target domain. From these cold-start users set, we randomly sample 20% for the validation set, 30% for the testing set. We build a training set by randomly including a fraction of \(\mu =\left\{0\%, 5\%, 50\%, 80\%\right\}\) of the remaining 50% of the overlapping users and rest \(\left\{100\%, 95\%, 50\%, 20\%\right\}\) as non-overlapping users. The reviews associated with a user/item are combined into a single document, and the reviews associated with an item are also combined into a single document, following previous work (Zheng et al. 2017). The content in the textual reviews is important for understanding user behavior. As the reviews contain user emotions, we carefully pre-process them and avoid using stemming and lemmatization approaches to preserve the text semantics. However, the review text needs some correction to improve recommendation quality. For that purpose, we eliminate punctuations, numbers, and newlines from the texts. We also apply text normalization techniques to replace some shorthand notations with appropriate ones. To keep uniform input text length, we apply a padding strategy, setting the maximum sequence length of the review text equal to 250.

Evaluation metrics. We evaluate all the models on the rating prediction task, which estimates actual ratings (\({y}_{u,v}\)) and predicted ratings (\({\widehat{y}}_{u,v}\)) and on the top-n ranking task. We use the following metrics:

Baseline methods. We compare the performance of our proposed DSAM model with nine baselines, which we categorize into four groups:

Type A. Traditional CDR Methods.

-

CMF (Singh & Gordon 2008) Collective Matrix Factorization is a MF-based model that simultaneously factorizes several matrices from related domains. In this model, latent factors of entities are shared when those entities participate in multiple relations.

-

CDCF (Loni et al. 2014) Factorization Machine-based CDCF learns domain-specific user interaction patterns from the source domain and encodes them as a feature vector, which is then used to enhance recommendations in the target domain. CDCF utilizes factorization machines to enable this modeling process.

Type B. Deep Learning-Based CDR Methods – These models do not take into account textual reviews.

-

EMCDR (Man et al. 2017) An Embedding and Mapping framework first uncovers user and item factors in both domains through matrix factorization and then transfers these factors across domains using a nonlinear MLP mapping function.

-

DARec (Yuan et al. 2019) A deep domain adaptation model that relies solely on rating matrices to transfer knowledge. The model has two variants: U-DARec, which learns and transfers abstract shared rating patterns between two domains via Deep Neural Networks (DNN). In contrast, I-DARec focuses on extracting distinct rating patterns within each domain. We considered both variants in our experiments.

-

DDTCDR (Li & Tuzhilin 2020) A novel approach uses dual transfer learning mechanism to transfer latent knowledge from one domain to other through embeddings. It iteratively repeats this process until the models stabilize for both domains.

Type C. Review-Based Single Domain Methods.

-

DeepCoNN (Zheng et al. 2017) A Deep Cooperative Neural Networks uses CNNs to jointly model user and item representations from reviews. It concatenates these representations into a factorization machines layer to predict ratings.

-

NARRE (Chen et al. 2018) A state-of-the-art method employs a parallel neural network architecture similar as DeepCoNN + + . It builds an additional attention-based review pooling layer to capture usefulness of reviews for modeling user–item representations.

Type D. Review-Based Cross-Domain Methods.

-

RC-DFM (Fu et al. 2019) It integrates review texts and side information along with rating matrix in both the source and target domains using SDAE. Subsequently, it maps the user latent factors between domains using MLP.

-

CATN (Zhao et al. 2020a) The model learns multiple aspects of user and item from their reviews and employs an attention mechanism to transfer user preferences at aspect level. This model adopts an end-to-end training optimization approach.

Implementation details. We implement DSAM and baseline models in Python using TensorFlow libraries. We apply the squared error loss between the predicted rating (\({\widehat{y}}_{u,v}\)) and the actual rating (\({y}_{u,v}\)) as \(\sum_{\left(u,v\right)\in N }{\left({y}_{u,v}- {\widehat{y}}_{u,v}\right)}^{2}\). We conduct experimental investigations to determine the optimal training and model parameters across all datasets and list the parameter settings of our model in Table 3. We train DSAM model with five epochs and used a batch size of 64. We optimize the model with Adam (Kingma & Ba 2014), setting the initial learning rate set to \(2{e}^{-3}\) and the epsilon value to \(1{e}^{-8}\). The embedding dimension is set to 300, and the number of hidden units for the LSTM network is also set to 300. The size of the latent factors is set to 32. We parameterize the number of attention heads as 1 for the multi-head mapping layer. We assign dropout ratio equal to 0.5 and L2 regularization to \(1{e}^{-5}\).

We implement the baseline models EMCDRFootnote 3, DARecFootnote 4, DDTCDRFootnote 5, DeepCoNNFootnote 6, NARREFootnote 7, CATNFootnote 8 using their available source codes and CMF using the original codeFootnote 9 in python (TensorFlow) version. To code CDCF, we use the libFM library. For RC-DFM, we develop our own python (TensorFlow) versions based on the original papers. For fair comparison, we apply the same pre-processing steps, set the review length to 250 and train under identical conditions (batch size, epochs, learning rate, dropout ratio and L2 regularization). In the required baseline models, we parameterize the text embedding dimension and the number of hidden units/hidden neurons to 300. The number of latent factors for the hidden layer or matrix factorization layer is set to 32. For models with CNN components such as DeepCoNN, NARRE and CATN, we assign the convolution kernel sizes equal to 3 and filters equal to 100. The ID embeddings size and the attention size in the NARRE model are set to 32. Similarly, the ID embeddings size is set to 32 for CMF, CDCF, and EMCDR models. In the CATN model, we configure the number of aspects to 5. For RC-DFM, we set the number of layers in the SDAE network to 5 and the output dimensions of the MLP network to 32. As for the coefficients and regularization parameters, we follow the same values as provided in their respective papers.

4.2 Results and discussion

To base the idea on taxonomies and methods discussed in (Zang et al. 2022), this study examines two scenarios: first, where there is partial overlap among users and non-overlap among items, and second, where there is non-overlap among both users and items. The experimental results are based on an intra-domain recommendation task, focusing on recommending items within the same domain, wherein the DSAM model predicts how a user say \({U}^{t}\) of a domain \({d}_{t}\) rates an item \({V}^{t}\) of the same domain \({d}_{t}\). Further, our approach involves multi-target recommendation, aiming to enhance the performance of both domains by leveraging knowledge from each other. Methodologically, it conducts latent factor modeling from domain-specific user–item embeddings and cross-domain preference patterns. It then performs items feature space mapping from source and target domains and leverages the resulting latent and mapping factors to make final predictions. Following in this section, we report the experimental results and conduct a detailed comparative analysis of different methods.

We evaluate the performance of DSAM and groups of baseline methods on five cross-domain pairs from Amazon datasets, under different overlapping user scenarios 5%, 50%, 80%, and non-overlapping users. The results of these evaluations are shown in (Tables 4–11), with Tables 4, 6, 8, and 10 presenting the rating prediction results and in Tables 5, 7, 9, and 11 presenting the top-n ranking results. Each table presents the best scores in bold; these illustrate DSAM achieves state-of-the-art performance, surpassing all existing methods. The best results from baselines are underlined. The last row in each table represents the relative improvement of DSAM over the best baseline. Our model demonstrates significant improvement against the best baseline, with most cases achieving statistical significance at the 0.01 level based on a paired t test. Further analysis of these experimental results reveals the following insights:

-

We observe that the differences between DSAM and baseline methods are pronounced under all overlapping/non-overlapping user settings across both regression and ranking metrics. Specifically, we observe the highest RMSE and MAE improvements under non-overlapping users (which represents a more realistic scenario), showing enhancements of about 11.2% and 22.2%, respectively. Even in the relatively sparser D2 dataset, DSAM demonstrates considerable average RMSE and MAE improvements of 6.6% and 12.8% on D2. Moreover, these improvements remain significant across different datasets, yielding average RMSE and MAE improvements of 5.7% and 9.8% on D1, 7.7% and 13.5% on D3, 6.7% and 14.3% on D4, and 9% and 13% on D5, respectively. For ranking metrics, DSAM achieves the highest improvements in precision (6.2%) and NDCG (2.1%) under the 5% overlapping scenario, and recall (12.7%) under the 50% overlapping scenario. Under the non-overlapping scenario, the improvements remain significant with 4.5% in precision, 10.4% in recall, and 1.4% in NDCG. Overall, DSAM shows substantial average gain in ranking metrics across all datasets, particularly in recall on D1 (7.3%), D3 (8.8%), and D5 (11.7%); and in precision on D2 (3.6%) and D3 (5.0%), with statistical significance observed at a 5% threshold. Other gains are also significant, with a threshold of nearly at a 1%.

-

Next, among the baseline methods, CATN, NARRE, and DeepCoNN deliver the best performances across both regression and ranking measures in the majority of cases. Except for the 80% overlapping user scenarios, U-DARec performs best across the D5 dataset on RMSE and MAE metrics. Additionally, linear CDCF and RC-DFM outperform other baselines in ranking measures in some instances. CATN’s approach involves learning global sharing aspect representation and implementing a cross-domain aspect-based transfer approach, while leveraging auxiliary reviews from like-minded and non-overlapping users to improve performance. This is true with the ranking metrics, where CATN shows an average improvement of up to 4% against NARRE and DeepCoNN in non-overlapping scenarios. However, our RMSE and MAE results indicate that CATN performs less effectively compared to NARRE and DeepCoNN in scenarios with non-overlapping users. Conversely, with a higher number of overlapping users, CATN demonstrates significantly better performance than NARRE and DeepCoNN. For instance, considering the average performance across all datasets with an 80% overlapping user scenario, CATN achieves an average of 10.69% RMSE and 12% MAE over NARRE and 11.44% RMSE and 11.50% MAE over DeepCoNN. CATN also outperforms NARRE in scenarios with higher numbers of overlapping users, such as 80% overlap, with improvements in precision, recall, and NDCG ranging from 0.22 to 6.55%, and under 50% overlap with improvements ranging from 0.42 to 6.42%. In comparisons between DeepCoNN and CATN under similar scenarios, CATN generally shows better performance in precision, recall, and NDCG. Moreover, we observe that NARRE performs exceptionally well on large dataset like D1, except in one case where the MAE of the best baseline (CATN) is 0.857, slightly lower than NARRE’s MAE, which is 0.861, as shown in Table 8. However, NARRE does not fare well in cases with few data instances like D2, showing the highest RMSE of 1.65 and MAE of 1.33, which is significantly higher than all methods, as evident from Table 4. Across both D1 and D2 datasets, NARRE shows subpar performance compared to CATN under top-n metrics. In fact, NARRE performs poorest among baseline methods with P@5 of 0.619 and R@5 of 0.566 in a 50% overlap scenario (Table 7), and R@5 of 0.594 in an 80% overlap scenario (Table 9).

-

Furthermore, upon overall analysis of the models with the least effective performance, Tables 4, 6, 8, and 10 indicate that EMCDR ranks as the least performing model in rating measures. EMCDR experiences a decline in performance as the number of overlapping users increases. For example, with 5% overlapping users on D5 data, it records 1.692 RMSE and 1.421 MAE, performance drops to 1.818 RMSE and 1.541 MAE at 50% overlap, and further deteriorates to 1.829 RMSE and 1.535 MAE at 80% overlap. And when there is no user overlap scenario, EMCDR’s performance improves to 1.705 RMSE and 1.425 MAE. Additionally, in terms of ranking measures as shown in Tables 5, 7, 9, and 11, CMF also exhibits poor performance alongside EMCDR. EMCDR shows particularly poor results on D4 and D5, whereas CMF underperforms on D1, D2, and D3 datasets.

-

Performance across different overlapping user ratios. Analyzing the performance results across various overlapping user ratios provides valuable insights into the consistent performance of DSAM. We observe marginal fluctuations with measured metrics results of our model across different overlapping user ratios of 0%, 5%, 50%, and 80%. To illustrate, on the D1 dataset, the RMSE ranges from 1.026 to 1.034, and the MAE results vary from 0.775 to 0.788. Under top-n metrics, P@5 ranges from 0.883 to 0.887, R@5 ranges from 0.565 to 0.575 and N@5 ranges from 0.924 to 0.966. DSAM demonstrates sensitivity to changes in the degree of overlapping users, with subtle variations in performance. Undoubtedly, the optimal performance occurs at the 80% overlapping user ratio, suggesting that our model performance tends to improve with higher overlapping ratios. Importantly, it also signifies that there is not a major drop in the model’s performance when there are very few or no overlapping users. However, comparing 80% overlap to no overlap scenario reveals noticeable differences in top-n performance: for example, a 4.3% drop on D1, 0.7% on D2, 0.5% on D3, and 1.2% on both D4 and D5. Further, Figs. 4 and 5 provide visual insights into the significance of these differences, with connecting lines (depicted in blue) between the bars indicating negligible variations. For instance, in the D3 dataset under the RMSE results chart in Fig. 4, the connecting line appears notably straight, and a similar observation can be made in the D1 dataset under the MAE results chart. Likewise, in Fig. 5, the Precision results for the D1 dataset and the NDCG results for the D2 dataset also display straight connecting lines. This visual representation highlights the model’s stability, with minor variations in the performance under different overlapping user conditions. In summary, DSAM exhibits robust behavior across this setting, ensuring its reliability and performance consistency.

-

CDR models. Figures 6 and 7 display the performance of baseline cross-domain recommendation models for all overlapping user settings across five datasets. The Type D group, i.e., review-based deep learning CDR models (represented by yellow lines) achieves performance superiority over other CDR models. Within these Type D group models, our proposed model DSAM (square marker, yellow line) outperforms both RC-DFM (triangle right marker, yellow line) and CATN (triangle left marker, yellow line) across all datasets. Additionally, CATN excels over RC-DFM on the D2, D3, D4, and D5 cross-domain sets, while RC-DFM demonstrates superior results on the larger D1 cross-domain set in terms of RMSE and MAE performance. Regarding ranking performance, we observe that only a few times RC-DFM results are better than CATN, but the differences are marginal. For instance, under the 50% overlapping scenario, RC-DFM achieves an R@5 of 0.352, compared to CATN’s R@5 of 0.35. The results of Type A traditional CDR models (green lines) indicate that both CMF and CDCF exhibit inferior performance compared to Type D CDR models. This can be attributed to their utilization of a linear factorization method for learning user representation, which proves ineffective in capturing complex interactions between users and items (Fu et al. 2018; Liu et al. 2022a). Comparatively, CMF (circle marker, green line) performs more poorly than CDCF (hexagon marker, green line) and even compared to Type B deep learning models (orange lines). With RMSE and MAE evaluation, the linear CDCF model surpasses Type B CDR (EMCDR, U-DARec, I-DARec, and DDTCDR) models when there are a large number of overlapping users. Conversely, group B models (U-DARec, I-DARec, and DDTCDR) demonstrate significantly lower RMSE and MAE values compared to CDCF in scenarios with few or no overlapping users, highlighting the effectiveness of deep learning techniques in providing accurate recommendations in such settings. For ranking evaluation, CDCF generally performs better than Type B models (EMCDR, U-DARec, and I-DARec), except in some cases where DDTCDR performs better than CDCF. The variability in the results of deep learning models highlights the effectiveness of the deep learning models depends on how the methodologies are employed. Also, our findings draw attention to the considerable performance gap in EMCDR (represented by the ‘x’ marker orange line) compared to other models. Particularly, EMCDR exhibits the highest RMSE and MAE results when dealing with large datasets as well as the poorest precision, recall, and NDCG results on the D5 dataset across the tested overlapping scenarios. Despite its implementation of a nonlinear MLP-based mapping function for learning user and item factors. The direct transfer of all latent factors from the source to the target domain in EMCDR might introduce a potential risk of transferring noisy information (Hu et al. 2019), contributing to its underperformance. Overall, EMCDR demonstrates lower performance in prediction metrics, while in ranking metrics, it performs sub-optimally in some cases, and CMF exhibits weaker performance in others. In terms of the optimal outcome, Type D CDR models demonstrate superior performance compared to Type A and Type B CDR models. This analysis generalizes the findings, emphasizing the effectiveness of reviews in CDR models in understanding user preferences. Further below, we demonstrate statistical improvements in recommendation performance using models that utilize text reviews.

-

Single domain models vs cross-domain models. Type A, Type B and Type D models are cross-domain models that exploit data from the source domain, while Type C represents a single-domain model that only uses data from the target domain. Initially, in this analysis, we observe that both DeepCoNN and NARRE, which belong to Type C, outperform Type A and Type B models for all the overlapping user scenarios in most cases. For example, when analyzing non-overlapping user scenarios, the performance of DeepCoNN is significantly superior to models CMF, CDCF, EMCDR, DARec, and DDTCDR. Referring to Table 10, we find that DeepCoNN achieves maximum improvements in RMSE and MAE ranging from approximately 17% to 56% and 25% to 61%, respectively. Similarly, NARRE shows substantial improvements in RMSE and MAE, ranging approximately from 20 to 58% and 21 to 60%, respectively, when compared to the same set of models across different datasets. Additionally, according to Table 11, DeepCoNN achieves maximum improvements in Precision, Recall, and NDCG of approximately up to 27%, 21%, and 17%, respectively, against Type A and Type B models. It is noteworthy that DeepCoNN shows a slight decline in Precision and Recall compared to DDTCDR, with maximum negative improvements of − 0.78 and − 0.91, respectively. On the other hand, NARRE demonstrates significant improvements in Precision, Recall, and NDCG, reaching up to approximately 27%, 22%, and 17%, respectively, compared to same models across different datasets.

To demonstrate, the average percentage difference in performance between single-domain models (Type C) and non-review-based cross-domain models (Type A and Type B) for all the overlapping user scenarios is illustrated in Figs. 8 and 9. Considering the non-overlapping user scenario from Fig. 8, the RMSE and MAE of best single domain models surpass the best results from the non-review-based cross-domain models by 5.8% and 6.3% on D1, 20.8% and 21.2% on D3, 14.5% and 17.3% on D4, and 9% and 11.4% on D5, respectively. Conversely, on D2, non-review-based cross-domain models outperform single-domain models, showing relative percentage decreases of − 12.4% in RMSE and − 16.4% in MAE. Similar patterns are observed for other user overlapping ratio scenarios. Let’s take look at 80% user-overlapping scenario and under top-n metrics, as shown in Fig. 9, the best performance of single-domain models surpasses the best performance of non-review-based cross-domain models by 0.3% P@5, 1.7% R@5, and 3.4% N@5 on D1; 0.2% N@5 on D2; 3.8% P@5, 4.6% R@5, and 0.5% N@5 on D3; 3.4% P@5, 2.8% R@5, and 0.6% N@5 on D4; and 1.3% P@5, 1.5% R@5, and 2.8% N@5 on D5. In two instances, single-domain models perform slightly worse than non-review-based cross-domain models, with relative percentage decreases of -1.1% P@5 and − 2.3% R@5 on D2. The average improvement figures indicate that the performance differences between single-domain and non-review-based cross-domain models are more pronounced in prediction metrics than in ranking metrics. This suggests that while single-domain models typically perform better, the extent of improvement varies depending on the evaluation measures used.

In a subsequent analysis, the above observation deviates, indicating that, in most instances, DeepCoNN and NARRE demonstrate suboptimal performance relative to review-based cross-domain models (RC-DFM and CATN). The average percentage difference in performance between single-domain models (Type C) and review-based cross-domain models (Type D) for all the overlapping user scenarios is shown in Figs. 10 and 11. In scenarios involving overlapping users, the review-based cross-domain models outperform the best-performing models among DeepCoNN and NARRE. For instance, under the 50% overlapping user scenario from Fig. 10, single-domain models exhibit poorer performance compared to Type D models, resulting in a relative percentage decrease of − 27% RMSE and − 37.2% MAE on D2, − 1.2% RMSE on D3, − 1.2% RMSE and − 0.8% MAE on D4, and − 8.1% RMSE on D5. Meanwhile, the percentage improvement that single-domain models exhibit over review-based cross-domain models is less significant, with a 0.3% RMSE and 0.1% MAE improvement on D1, 4.6% MAE improvement on D3, and 2.7% MAE improvement on D5 in the same scenario. In the same 50% overlapping scenario from Fig. 11, single-domain models show relative percentage decreases compared to review-based cross-domain models of − 0.8% P@5, − 0.6% R@5, and − 0.3% N@5 on D1; − 1.9% P@5, − 3.2% R@5, and − 0.2% N@5 on D2; − 0.2% N@5 on D3; − 1.0% P@5 and − 0.2% N@5 on D4; and − 1.2% R@5 and − 0.1% N@5 on D5. Exceptions include a 0.7% P@5 and 2.4% R@5 improvement on D3, a 1.4% R@5 improvement on D4, and a 1.0% P@5 improvement on D5.

However, the results differ in the case of the non-overlapping scenario, where the top-performing models from DeepCoNN and NARRE generally outperform those from Type D, particularly in terms of RMSE, MAE, and NDCG metrics. This observation also draws attention to the fact that existing review-based cross-domain models perform more effectively when there are overlapping users during training, and their performance drops when there is a small proportion of overlapping users or no overlapping users at all. In comparison, the performance of our model shows subtle variations in effectiveness, indicating stability in performance across different ratios of overlapping users.

Parameter sensitivity (RQ2). We conduct several experiments of DSAM by setting different parameters such as the number of attention heads, latent factors, text sequence lengths, learning rates, and dropout ratios. Given the breadth of these experiments, we focus our analysis on RMSE and MAE performances across three datasets D2: Musical Instruments-Digital Music, D4: Home Kitchen-Office Products, and D5: Toys Games-Video Games. We have chosen these datasets to provide insights into how parameter settings affect model performance across datasets of varying sizes, from small to large.

Number of attention heads. We investigate the performance of DSAM with respect to the number of attention heads assigned for the attentive domain features mapping layer. This parameter has a significant impact on preserving the learned combinatorial features of the two domains. As illustrated in Fig. 12, the RMSE and MAE performance exhibit lower values when the number of heads is set to 1. However, they increase as the number of heads is set to 2. Subsequently, the RMSE and MAE values start to stabilize and decrease again after reaching a value of 4 heads.

Number of latent factors. In this study, we vary the number of latent factors within the range {8, 16, 32, 50, 60} and evaluate our model’s performance. As depicted in Fig. 13, increasing the number of latent factors initially leads to a gradual decrease in both RMSE and MAE, indicating improved model performance. The optimal point, where the model achieves its best results, is found at 32 latent factors. Beyond this point, as the number of latent factors further increases to 60, we observe that the RMSE and MAE values remain relatively stable. While there are minor variations, they do not significantly impact on the overall performance.

Text sequence length. This analysis explores how different text sequence lengths impact our model’s performance. We assess the model’s performance across different text sequence lengths that fall into the ranges {0–100, 100–200, 200–300, and > 300}. The findings as shown in Fig. 14 reveal an interesting pattern where increasing the length of text sequences initially leads to improved performance, resulting in lower RMSE and MAE values. However, we observe a trend where the RMSE and MAE values start to rise when text sequence length greater than 300, indicating potential overfitting. The drop in model performance could be due to the level of complexity exceeding the model’s capacity to generalize effectively. This implies that an increase in text sequence length initially improves accuracy, but beyond 300, excessive length may lead to overfitting and a subsequent decline in performance.

Influence of learning rates. We train our model to assess its performance under various learning rates \(\left(lr\right)\). We select \(lr= \left\{1{e}^{-4}, 2{e}^{-4}, 1{e}^{-3}, 2{e}^{-3}, 1{e}^{-2}, 2{e}^{-2}\right\}\) and conducted a comparative analysis of our model’s performance. As depicted in Fig. 15, for all the datasets, the RMSE and MAE values initially decrease gradually, reaching their optimal performance at \(lr=2{e}^{-3}\). However, beyond this point, the values start to increase as the learning rates further increase.

Effect of dropout ratios. The performance metrics curve presented in Fig. 16 indicates that initially a progressive shift in model performance corresponding to initial dropout values, but the model performance declines when the dropout value is greater than 0.5. Notably, the model exhibits great performance in terms of RMSE and MAE values when dropout ratio is set to 0.4 and 0.5. A careful examination of the experimental results further reveals that, among the datasets considered (D2, D4, and D5), particularly for the relatively large dataset D5, the optimal parameter configuration corresponds to a dropout rate of 0.5. Hence, a dropout value of 0.5 in the rest of the experiments.

Model ablation study (RQ3). To understand the contribution of each component of DSAM to the final performance, we conduct experiments with several alternatives of DSAM. These variants include DAM (no cross-domain preference patterns), DSM (no attentive features mapping), and DM (no cross-domain preference patterns and no attentive features mapping). DAM employs a standard LSTM and integrates domain-specific user–item embeddings into the context learning process to generate underlying user and item representations in each domain. This allows us to investigate the impact of excluding cross-domain preference patterns on model performance. DSM does not involve attentive domain features mapping and directly evaluates predictions based on the learned user–item representations from the SL-LSTM layer. DM is a simple deep model that excludes learning of cross-domain preference patterns and domain attentive mapping.

The results of DSAM and its alternatives are presented in Table 12. Our observations indicate that DSAM significantly outperforms its alternatives across all cross-domain recommendation scenarios, particularly on the smaller dataset D2. The relative RMSE and MAE improvement on D2 is maximum, with approximately 34.04% and 39.48% over DAM, 19.20% and 27.90% over DSM, and 16.20% and 24.84% over DM. Overall, across all datasets, DSAM achieves improvements of between 4 and 28% over DAM. (no cross-domain preference patterns). Relying solely on domain-specific preference patterns for representation learning can introduce bias in recommendation decisions in the target domain, resulting in poor prediction performance. Additionally, the results demonstrate that DSAM achieves the marginal improvement of about 3% to 26% over DSM (no attentive feature mapping). This highlights the importance of considering the feature disparities between domains for effective cross-domain transferability. The most significant improvement is observed over DM, with a 5% to 39% relative increase. This substantial enhancement underscores the importance of the proposed SL-LSTM and Domain Attentive Feature Mapping modules. In summary, our ablation study demonstrates that the proposed modules within the model effectively enhance the performance of cross-domain recommendation tasks.

Cold-start recommendation (RQ4). For the cold-start recommendation analysis, we conduct two experiments on D2, D4, and D5 sets. In the first experiment, we randomly select samples from the target test set to predict ratings for cold-start users. In comparison with most existing methods (Wang et al. 2019; Zhao et al. 2020a; Zheng et al. 2017), which include either reviews or ratings in the testing set, our method differs. During testing, we provide a more realistic scenario and neither include ratings nor reviews for making prediction for the cold-start users. The prediction is purely based on transferring the knowledge from source domain. The results of these experiments are presented in Table 13. For instance, in the case of D2, our model made predictions based on the knowledge from the source domain (user reviews, item reviews, and item content), resulting in a prediction of 5.94, close to the actual rating of 5. These results demonstrate the capability of our method to accurately predict ratings for cold-start users, even when no reviews or ratings are available.