Abstract

Producing and consuming live-streamed content is a growing trend that attracts many people today. While the streamed content itself is diverse, one especially popular context is games: streamers of gaming content broadcast how they play digital or analog games, attracting several thousand viewers at once. Previous scientific work has revealed that different motivations drive people to become viewers, which apparently affects how they interact with the offered features and which streamers’ behaviors they appreciate. In this paper, we wanted to understand whether viewers’ motivations can be formulated as viewer types and systematically measured. We present an exploratory factor analysis (followed by a validation study) through which we developed a 25-item questionnaire assessing five different viewer types. In addition, we analyzed the predictive validity of the viewer types for existing and potential live-stream features. We show that the assessed viewer type is related to preferences for streamers’ behaviors and stream features, which can guide fellow researchers and streamers in understanding viewers better and potentially providing more suitable experiences.

1 Introduction

In recent years, people have become interested in creating user-generated video content and uploading it to platforms such as YouTube or Vimeo, making it accessible to the world (Van Dijck 2009). This has allowed others to consume user-generated video content whenever they want, but interaction options were initially limited to asynchronous forms (e.g., posting comments below a video). As faster Internet connections with higher bandwidth became more widely available, more and more user-generated video platforms began to allow live-streaming of user-generated content and, in parallel, provided synchronous forms of interaction between content creators (“streamers”) and viewers, such as a live chat or interactive polls (Lessel et al. 2017a).

Today, the range of live-streamed content is broad: streamers show how they cook, dance or mix music (Shamma et al. 2009), or how they experience events (Haimson and Tang 2017); they talk about their personal lives (Ko and Wu 2017), or show themselves programming (Haaranen 2017) or playing analog or digital games (Hamilton et al. 2014; Smith et al. 2013). The latter is a particularly successful form of live-streaming, with a large streamer and viewer base today and streams attracting 10,000 or more viewers in parallel (Deng et al. 2015). Twitch.tv is one of the major platforms for game live-streams. According to the Twitch Tracker page, in 2021 Twitch had 1460 billion minutes of watched content, 8.5 million monthly streamers and more than 2.7 million average concurrent viewers.

A common characteristic of live-streams is that viewers usually have access to a real-time chat in which they can communicate with each other and which also serves as a direct communication channel to the streamer (Smith et al. 2013). Streamers are usually open to acknowledging their audience and can respond either in the chat or, more commonly, directly through the video stream. This is a form of audience participation and audience integration, as viewers can thereby influence how the stream proceeds (Lessel et al. 2017a). Through built-in interaction features of the streaming platforms, third-party options and the creativity of streamers, the range of integrative and interactive options that can be and are offered to viewers is large (Lessel et al. 2018): from simple overlays showing the name of the background music currently playing or who has subscribed to the channel, to polls that allow viewers to decide on the course of the stream (e.g., which game should be played next), to participation options in which viewers can directly affect the mechanics of the game the streamer is playing.

Various works, as we will detail in the related work section, have started to investigate individual motivations for viewers to watch or participate in such experiences and for streamers to stream. The results indicate that there are individual differences on both sides, and for viewers, these differences also appear to affect whether and how they use the offered features and whether they like the behaviors streamers exhibit.

In this work, we focused on the viewers’ side only, aiming to gain more insight into whether viewers could be partitioned into classes and how these classes could be assessed. This was inspired by current efforts in the games and gamefulness domain, in which user and player types are derived to better understand individual preferences and behaviors, as a complement or alternative to personality traits such as the Big Five (Raad and Perugini 2002). Besides establishing which classifications are possible, these efforts also create assessment instruments such as questionnaires, e.g., Tondello et al. (2018a). If viewer types could be assessed in a similar fashion, these classes would help streamers understand their audience better and provide a more suitable experience (e.g., they could answer questions such as “would this new feature be accepted by the majority of my viewers?” a priori), and would also allow researchers to understand specific effects in their experiments in cases where most participants fall into one class. In addition, similar to efforts in, for example, the gamefulness domain, viewer types would allow for conceptualizing, designing and investigating individualized experiences (e.g., Altmeyer et al. 2019; Orji et al. 2018): if an individual’s viewer type is known, novel streaming platforms could, for example, restrict the video overlays shown to those that appear reasonable for this viewer type, thereby opening up a large new design space.

Based on this motivation, we established three goals that build on each other and that we have pursued in this paper:

- G1: Assessing whether viewer types exist.
- G2: Developing a questionnaire to assess viewer types, if they exist.
- G3: Relating viewer types to features used and streamers’ behaviors exhibited in live-streams.

While these goals could apply to all live-streaming contexts, we focused on game streaming in particular, as its popularity and the range of features and behaviors used (Lessel et al. 2018) offer a rich set of options for potential follow-up personalization. In the next section, we present related research to motivate our investigation and discuss existing indications that viewer types likely exist (G1). This is followed by a description of how an initial set of questionnaire items was derived and the results of an Exploratory Factor Analysis (EFA). We were able to derive five classes (G1), with five items per class that can be used to assess them (G2). Subsequently, we present the results of a Confirmatory Factor Analysis (CFA) that validated the questionnaire (G2). Finally, we present results showing how the established viewer types relate to behaviors and features in game live-streams (G3). We end the paper with a discussion of how our contributions (i.e., the identified viewer types and the 25-item questionnaire) can be used in practice.
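
A questionnaire with five items per type is often scored by averaging each type’s items into one subscale score. The sketch below assumes this averaging approach, a hypothetical item order (items 1 to 5 belong to the first type, items 6 to 10 to the second, and so on) and placeholder type names, since the actual items and type labels are not given in this section; it is an illustration, not the authors’ scoring procedure.

```python
from statistics import mean

# Placeholder labels: the real viewer-type names and the real item-to-type
# assignment come from the questionnaire itself, not from this sketch.
VIEWER_TYPES = ["type_1", "type_2", "type_3", "type_4", "type_5"]
ITEMS_PER_TYPE = 5


def score_viewer_types(responses: list[int]) -> dict[str, float]:
    """Average the Likert responses belonging to each viewer type.

    Assumes `responses` holds 25 answers ordered so that answers 0-4 belong
    to the first type, answers 5-9 to the second type, and so on.
    """
    expected = len(VIEWER_TYPES) * ITEMS_PER_TYPE
    if len(responses) != expected:
        raise ValueError(f"expected {expected} responses, got {len(responses)}")
    scores = {}
    for i, viewer_type in enumerate(VIEWER_TYPES):
        block = responses[i * ITEMS_PER_TYPE:(i + 1) * ITEMS_PER_TYPE]
        scores[viewer_type] = mean(block)
    return scores
```

Returning one score per type, rather than a single label, keeps open the possibility that a viewer expresses several types at once.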

2 Related work

While most work in this section comes from the game live-streaming domain, we do not restrict ourselves to this context, in order to present a more holistic view. First, we present research that highlights the importance of interactivity and its forms. Afterward, we present work on individual differences and on the motivations for why streamers stream and viewers consume this content. Finally, we discuss other approaches, not restricted to the domain of live-streams, that aim at classifying users, as well as the advantages of doing so.

2.1 The role of interactivity

Offering interactivity has been shown to be important across live-streaming contexts. Tang et al. (2016) investigated mobile streaming apps and found that many of the activities happening there were interactive exchanges between streamer and viewers. Hu et al. (2017) also highlighted the importance of such interactivity, as audience participation options help to enhance the feeling of belonging to the group. Haimson and Tang (2017) focused on live-streamed events broadcast on Facebook, Periscope and Snapchat and also found that interaction is one of the key components that make remote viewing engaging. Even there, streamers are inclined not only to respond to chat comments but also to let their viewers alter how the stream proceeds, aspects that we also see in game streams (Lessel et al. 2018). As Li et al. (2020) found, interaction in live-streams is also a social activity. Watching others play is, in general, a key component of play, be it co-located or distributed (Smith et al. 2013), and Tekin and Reeves (2017) also point out (in the context of co-located play) that spectating is more than watching others play games, as spectators start to interact, for example by reflecting on past play or wanting to coach the player, which again can be seen as a form of interaction.

Overall, these aspects highlight that interactivity is important and inherent to the live-streaming experience. Works such as Lessel et al. (2018) show that forms of interactivity are broad and already begin with streamers simply answering viewer questions or acknowledging viewers’ presence. Given the importance of interactivity, the broad range of options for realizing interactive features and integrative behavior, and works such as Flores-Saviaga et al. (2019) and Deng et al. (2015) showing that there are highly frequented streams with thousands or tens of thousands of simultaneous viewers, it seems essential that individual differences in preferences for, and perceptions of, the available options are understood and potentially accounted for. Investigating viewer types can contribute to this.

2.2 Understanding and enhancing interactivity in live-streams

For this section, we cluster research on interactivity into “understanding (and improving) individual interactive features,” “investigating new streaming platforms,” and “investigating new interactive content.”

Research in the first cluster either analyzes features currently in use, investigates how these can be improved or altered with new content, or provides and studies novel elements. We give an example for each aspect.

Much work has been done to understand how the text chat, the main communication channel between viewers and streamers in live-streams (Lessel et al. 2017b), is used. Works such as Hamilton et al. (2014), Olejniczak (2015), Musabirov et al. (2018a, 2018b) and Ford et al. (2017) investigated how it is used and how it changes with the number of viewers. Hamilton et al. (2014) found that chats with more than 150 viewers are hard to maintain and compared this to the roar of a crowd in a stadium, which dramatically changes the interaction options as well as the possible communication between streamer and viewers. Follow-up work (Musabirov et al. 2018a, b) compared the situation to a sports bar, where communication is still possible and viewers can switch between roaring and talking. Ford et al. (2017) call this communication “crowdspeak”: communication that seems chaotic and meaningless, but still makes sense to participants and makes massive chats legible and compelling. Olejniczak (2015) analyzed chat messages of channels of different sizes (1k, 10k, 50k, 150k viewers) and found that the nature of the chat changes; for example, messages remain visible longest in the 1k case, and emoticons are used most in the 150k case. These examples show that contextual factors (here, the number of viewers in a channel) have a significant impact on how an offered option (here, the primary communication channel) is used. Similar findings were reported by Flores-Saviaga et al. (2019).

As an example of how an identified drawback can be targeted, we want to highlight the work of Miller et al. (2017). They suggested an approach to overcome the information overload of the chat through the usage of conversational circles, in which viewers of a live-stream are dynamically partitioned and only see messages of other viewers in the same partition. Through a message upvoting mechanism, it is possible that messages from one partition are shown in others or even to all viewers. In a user study, the authors found that viewers appreciate such an approach and that more messages can be handled.
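
The partitioning idea can be sketched as follows. This is a hypothetical illustration, not Miller et al.’s implementation: the fixed circle size, the arrival-order assignment and the single promotion threshold (after which a message becomes visible to all viewers) are assumptions chosen to keep the example small.

```python
from dataclasses import dataclass, field


@dataclass
class Message:
    author: str
    text: str
    circle: int
    upvoters: set = field(default_factory=set)


class ConversationalCircles:
    """Hypothetical sketch of a partitioned chat.

    Viewers are grouped into circles of at most `circle_size` members in
    arrival order. A message is shown only in its author's circle until it
    collects `promote_at` distinct upvotes; then it is shown to everyone.
    """

    def __init__(self, circle_size: int = 3, promote_at: int = 2) -> None:
        self.circle_size = circle_size
        self.promote_at = promote_at
        self.circle_of: dict[str, int] = {}
        self.messages: list[Message] = []

    def join(self, viewer: str) -> int:
        # Re-joining keeps the existing assignment.
        if viewer not in self.circle_of:
            # Fill circle 0 first, then circle 1, and so on.
            self.circle_of[viewer] = len(self.circle_of) // self.circle_size
        return self.circle_of[viewer]

    def post(self, viewer: str, text: str) -> Message:
        msg = Message(viewer, text, self.circle_of[viewer])
        self.messages.append(msg)
        return msg

    def upvote(self, viewer: str, msg: Message) -> None:
        msg.upvoters.add(viewer)  # a set, so repeated upvotes count once

    def visible_to(self, viewer: str) -> list[str]:
        circle = self.circle_of[viewer]
        return [m.text for m in self.messages
                if m.circle == circle or len(m.upvoters) >= self.promote_at]
```

Each viewer thus only has to follow the messages of a small circle plus the few messages the crowd has promoted, which is the overload reduction the approach aims for.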

Another commonly used type of feature in streams is the overlay, which shows, for example, the latest follower or the background music currently playing directly in the video stream. Robinson et al. (2017) investigated how viewers perceive receiving “internal” information about the streamer, i.e., their heart rate, skin conductivity and emotions, presented as such a video overlay. In their study, this information positively affected viewer engagement, enjoyment and connection to the streamer, but was also distracting to a certain extent. While the context (e.g., the game situation being streamed) might again have influenced this perception, it might also be the case that such information is problematic for certain viewer types, something that could be better understood if a classification tool, as aimed for in this paper, were available.

An example of a newly introduced feature is the prototype Helpstone (Lessel et al. 2017b). It focused on a specific round-based trading card game (Hearthstone by Blizzard Entertainment), and we wanted to investigate how communication channels could be improved. The streaming situation of this game is often characterized by viewers giving suggestions or hints for the current game state; these become harder to process in larger channels (see above). To mitigate this, we proposed, among other features, a direct interaction option in which viewers can interact with the streamed video and give move suggestions much as they would carry out moves in the game. These suggestions are automatically aggregated by the system and shown to the streamer directly in the game, as arrows with a number indicating how many viewers suggested each move. In a small user study, we found that this direct interaction was appreciated by the streamer and viewers. An interesting finding was that while every viewer appeared to like this feature, not everyone would actively use it: viewers can apparently be partitioned into active and passive viewers, suggesting that at least two coarse-grained classes exist. Similarly, in a large-scale survey, Gros et al. (2018) found that not all viewers want to be involved in a stream, further supporting these two classes. They also investigated factors influencing viewers’ desire for involvement and identified factors such as age. This further supports the supposition that a viewer-type instrument could uncover other differences and could subsequently serve as an additional factor to consider in such studies.
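
The aggregation step (many suggestions in, a few arrows with counts out) can be sketched as a simple frequency count. The (card, target) move representation and the rule that only each viewer’s latest suggestion counts are assumptions for illustration, not details taken from Helpstone.

```python
from collections import Counter


def aggregate_suggestions(suggestions, top_n=3):
    """Count move suggestions and return the most frequent ones.

    `suggestions` is an iterable of (viewer, move) pairs in arrival order,
    where a move is a hypothetical (card, target) tuple. Only each viewer's
    latest suggestion is counted (an assumption of this sketch).
    """
    latest = {}
    for viewer, move in suggestions:
        latest[viewer] = move  # a newer suggestion replaces the older one
    # Each result entry is (move, number of viewers), i.e., what one arrow
    # and its number would display to the streamer.
    return Counter(latest.values()).most_common(top_n)
```

Counting one vote per viewer keeps a single very active viewer from dominating the displayed numbers.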

Works in the second cluster add fundamentally new options to the streaming platform itself or investigate new platforms. Already in 2017, Twitch released a functionality called “Twitch Extensions” that allowed streamers to build custom features that could be integrated directly into their channel and that viewers could interact with. Besides building such extensions from scratch by coding them, streamers could also integrate extensions made by others into their own channel through a marketplace. This option increased the possible interaction and integration space, but at the same time calls for systematic guidelines on which possibilities are reasonable for viewers, something viewer types could help with. With Rivulet, Hamilton et al. (2016) investigated how multiple streams from different streamers, for example at the same event, could be combined to give viewers a holistic view. Their platform prominently showcased one of the streams, with the other streams shown in parallel in smaller windows, and viewers could select which stream should be showcased. A main chat combined the individual streams’ chats, and as additional features, push-to-talk voice messages and heart emoticons (as a quick form of feedback) were available. A follow-up work by Tang et al. (2017) found that, when it comes to viewer interactions, a multi-streaming approach is highly dependent on its context as well as on the viewers themselves (voluntary viewers varied more widely in, for example, how long they watched, and interacted less through the text chat than viewers recruited via Amazon Mechanical Turk). Again, this indicates that different motivations exist and that these can directly affect how viewers interact with the streams and the offered interaction options.
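
Combining several chats into one main chat, as Rivulet does, amounts to merging timestamp-ordered message lists. The sketch below assumes each stream’s chat is already sorted by timestamp and tags every message with its source stream; the data layout is hypothetical and not taken from Rivulet.

```python
import heapq


def combine_chats(chats):
    """Merge per-stream chats into one main chat ordered by timestamp.

    `chats` maps a stream name to a list of (timestamp, text) messages that
    is already sorted by timestamp. Each merged entry keeps its source
    stream so that viewers can tell where a message came from.
    """
    tagged = [
        [(ts, stream, text) for ts, text in messages]
        for stream, messages in chats.items()
    ]
    # heapq.merge lazily interleaves already-sorted inputs.
    return list(heapq.merge(*tagged, key=lambda entry: entry[0]))
```

Keeping the stream tag lets an interface still mark or filter messages by origin, which matters when one stream is showcased and the others are shown in smaller windows.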

Research in the third cluster focuses on allowing viewers to directly influence the underlying content that is streamed. In the context of games, this means allowing the audience to influence how the streamer’s game proceeds, or giving (at least some) viewers the option to participate in the game directly. Both aspects are not only considered in research but also found in typical streams today. For example, streamers use polls to let viewers decide what they should play or do next, or offer to play against some of them in multiplayer games (Lessel et al. 2018). Commercial games such as Choice Chamber even allow the audience to directly affect, through periodic polls, what happens to the streamer in the game (e.g., which enemies appear).

A special form of stream without a streamer has also appeared, in which the audience alone controls the game being broadcast. “Twitch Plays Pokémon” is a prominent example of this experience: the game Pokémon Red was streamed, and the game’s avatar could be controlled by viewers entering special commands into the chat, without any form of moderation. At its peak, 121,000 people played the game simultaneously. In the beginning, every command entered was executed automatically, leading to game situations that delayed progress for hours (e.g., because the avatar moved one step left and then right for hours). Nonetheless, the audience completed the game in under 17 days. During the run, a plurality voting mechanism was introduced, in which commands were aggregated and only the command submitted most often within a given time frame was executed. This voting mode was not always active: the viewers themselves decided (again through voting) which command mode was active. Scientific work such as that of Ramirez et al. (2014) analyzed the experience and found that viewers were not uniform and perceived it differently: for example, some liked the chaos associated with executing all entered commands, while others wanted the game to proceed faster. As we illustrate below, these differences might be explainable by player types in games, but viewer types, if they exist, might also have played a role. We conducted two case studies in this third cluster (Lessel et al. 2017a). In the first study, we investigated a popular tabletop roleplaying stream and analyzed which options the streamers provide to let their audience influence what happens. In the second study, we investigated a “Twitch Plays Pokémon” setting in a laboratory study with more features than the original run (e.g., more voting modes). In both studies, the results highlight individual differences between viewers: for example, not every viewer participated in the polls (first study), and some viewers focused on social actions instead of game-related actions (second study).
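
The two command modes described above can be contrasted in a short sketch: executing every command versus executing only the plurality winner of a time frame. The command set, the mode labels “anarchy” and “democracy” and the tie-breaking rule are assumptions made for this illustration.

```python
from collections import Counter

# Hypothetical command set (the Game Boy buttons); anything else is chat noise.
VALID_COMMANDS = {"up", "down", "left", "right", "a", "b", "start", "select"}


def commands_to_execute(window, mode):
    """Return the commands to run for one time frame of chat input.

    In "anarchy" mode every valid command is executed in arrival order; in
    "democracy" mode only the plurality winner is executed.
    """
    valid = [c.strip().lower() for c in window]
    valid = [c for c in valid if c in VALID_COMMANDS]
    if mode == "anarchy":
        return valid
    if mode == "democracy":
        if not valid:
            return []
        counts = Counter(valid)
        # max() keeps the first maximal key, and Counter preserves insertion
        # order, so ties go to the command that was submitted first.
        return [max(counts, key=counts.get)]
    raise ValueError(f"unknown mode: {mode!r}")
```

The same plurality rule could also decide which mode is active, mirroring how viewers voted on the command mode itself.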

In sum, although it was not its actual research focus, research on interactivity has already provided indications that viewers behave differently, making it reasonable to assume that viewer types could be identified and measured and further supporting this line of research.

2.3 Motivation and individual differences in live-streams

In this section, we focus on research that aims to understand viewers’ motivations in the context of game streams.

Wohn and Freeman (2020) conducted qualitative interviews with streamers (with varying channel sizes) to learn how they perceive their audience. It became clear that streamers can identify different classes of viewers, such as viewers who feel they are part of the family, fans, trolls or lurkers. To identify their audience, streamers actively probed by asking questions and remembering certain viewers over the course of the stream. Cheung and Huang (2011) focused on the popular real-time strategy game Starcraft (by Blizzard Entertainment). By analyzing online sources in which viewers shared their stories of spectating the game, they identified nine different personas, ranging from The Uninformed Bystander (a person who is merely watching by coincidence and does not know anything about the game he or she watches), to The Pupil (a person who watches to understand and learn the game better), to The Assistant (a person who wants to help the player). The authors highlighted that a spectator can have multiple personas at once. While this investigation was not strictly bound to interactive live-streaming, its nine personas already indicate that different preferences and motivations apparently exist, although it did not provide a way to measure them. We conducted an online survey among viewers of game live-streams and let them rate a broad range of features and streamers’ behaviors in the context of these streams (Lessel et al. 2018). It became obvious that many elements were perceived well only by subsets of participants, again indicating individual differences. Based on the personas identified by Cheung and Huang, we presented statements (one per persona) through which participants indicated their motivations for watching live-streams. The answers to these statements were related to the element ratings, and we found that the absence or presence of a certain motivation affects the ratings. We stated that more work should be invested in deriving viewer types, as our approach only revealed that there are individual differences that affect the perception of elements. Another classification was presented by Seering et al. (2017). The authors investigated their own audience participation games (games like the aforementioned Choice Chamber) and derived archetypes from post-session survey data: Helpers (who want to help the streamer reach his/her outcome), Power Seekers (who want to have an impact on the game, regardless of whether it helps or hinders the streamer), Collaborators (who want to collaborate with other viewers and with the streamer, regardless of the outcome), Solipsists (who focus on obtaining personal benefits, e.g., learning or meeting new people) and Trolls (who want to hinder the streamer). While it is again interesting to see different behaviors, no direct options to assess these archetypes were provided. Yu et al. (2020), on the other hand, investigated whether there are relationships or trends between the perception of features enabling interaction with streamers and Hexad user types (which were originally developed by Tondello et al. (2016) to explain user behavior in gamified settings). The authors conducted an online survey with 50 participants in which the Hexad user type was assessed, as well as preferences regarding eight different interactive features frequently used in game live-streams. Their results show certain relationships, such as Socializers preferring affiliation features and chat input. While this is valuable work that identifies individual differences and provides a way to assess them, in contrast to Yu et al. (2020), we argue that the experience of consuming game live-streams is very different from directly interacting with a gameful system (which is the scope of the Hexad model). Consequently, to explain preferences for game live-stream features, a specialized viewer-type questionnaire seems worthwhile, instead of a model developed for gamified applications.
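
Relating a motivation statement to a feature rating, as in the survey described above, can start with a simple descriptive split: respondents who agree with the statement versus those who do not. The 1 to 5 scale and the agreement threshold are assumptions, and this sketch only computes group means; it is not the statistical analysis used in that work, which would require a proper significance test.

```python
from statistics import mean


def mean_rating_by_motivation(rows, agree_threshold=4):
    """Compare how a feature is rated depending on a motivation.

    `rows` holds (motivation_agreement, feature_rating) pairs, both on a
    hypothetical 1-5 scale. Respondents with agreement >= agree_threshold
    count as having the motivation. Returns (mean rating with motivation,
    mean rating without it); None marks an empty group.
    """
    present = [rating for agreement, rating in rows if agreement >= agree_threshold]
    absent = [rating for agreement, rating in rows if agreement < agree_threshold]
    return (mean(present) if present else None,
            mean(absent) if absent else None)
```

A large gap between the two means would be a first hint that the motivation matters for that feature, which a viewer-type instrument could then capture more systematically.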

In another line of work, viewers’ motivations were the direct focus. Wohn et al. (2018), for example, aimed to understand why viewers donate money to a streamer and found different motivations for this, such as the goal of improving the content or paying for the entertainment value. Sjöblom and Hamari (2017) wanted to understand why people become viewers of game streams and whether it could be predicted how much people will watch and how many streamers they will follow and subscribe to. They found positive relationships between motivations from the uses and gratifications perspective and the aforementioned variables. Kordyaka et al. (2020) were also interested in finding the technological and social variables that best describe users’ motivations for consuming game live-streams, drawing on Affective Disposition Theory and Uses and Gratifications Theory; in their online questionnaire, participants answered questions about, for example, their consumption behavior. They propose a unified model in which perceived identification with and liking of a streamer, as well as interactivity, predict how much a stream is consumed, with interactivity as the most important predictor. While the latter finding is in line with the work presented above, the paper does not discuss individual differences, which we aim to address with viewer types. Gros et al. (2017) also used a questionnaire to find out why Twitch is consumed (in the main categories entertainment, socialization and information), with statements relating to entertainment receiving the highest ratings. The authors also found differences in answers to statements relating to socialization, depending on whether users had already donated to the streamer: those with a stronger social motivation are more likely to donate, further highlighting individual differences and a relationship between motivation and actual viewer actions. Sjöblom et al. (2017) also investigated contextual factors (e.g., which games are played and which presentation form is used) and found a strong impact here as well, in that “particular stream types and game genres serve to gratify specific needs of users”, as well as individual differences depending on the form of gratification sought (e.g., social and personal integration vs. seeking tension release). Hilvert-Bruce et al. (2018) also wanted to understand why viewers engage in live-streams (e.g., by subscribing or donating). They found that six of eight motivations (such as a sense of community or the desire to meet new people) influence the willingness to engage, and that channel size (as a contextual factor) also has an impact. Seering et al. (2020) analyzed 183 million messages from Twitch streams and found differences in behavior between first-time participants and regulars (e.g., the former write shorter messages, ask more questions and engage less broadly with others in the community). In addition, they found some indications that the context also affects the subsequent behavior of first-time participants (e.g., whether moderators had interacted before). Besides giving further examples of contextual differences, this paper shows that less persistent individual factors (here, the amount of stream content consumed in the past) might also affect how viewers behave. Such factors change faster than viewer types or personality, which is assumed to change only slowly over time (Roberts et al. 2006), and are thus another aspect to consider when trying to understand viewer behavior.

All these works show relationships between motivational structures and viewers’ behavior at the level of, for example, how long they watch a stream. Additionally, several classifications were suggested, derived through various means. However, to our knowledge, a systematic way to assess viewer classes specifically has not yet been provided. In this work, we want to shed light not only on which viewer types exist, but also on how to measure them and whether we can use them to explain differences in the perception of features or streamers’ behaviors.

2.4 User-type classifications in game-related contexts

Understanding how and why users interact with games and gameful systems is considered fundamental to improving the users’ experience (Tondello et al. 2019; Hamari and Tuunanen 2014). Consequently, substantial efforts have been made to categorize users into types, based either on their motivations and needs or on how they behave in games and gameful systems (Hamari and Tuunanen 2014). We briefly elaborate on these approaches to show how the classifications were derived. One of the first attempts to classify players was proposed by Bartle (1996) for Multi-User Dungeons (“MUDs”). The typology was established by analyzing bulletin-board postings in response to a question asking what players want out of a MUD. As a result, two dimensions arose along which playing can be categorized: action vs. interaction, and player orientation vs. world orientation. Within these dimensions, four player types were established: Achievers, Explorers, Killers and Socializers. Numerous issues have been identified when it comes to assessing and using these player types in practice (Bateman et al. 2011). One criticism is that the typology is based on the motivations and preferences of MUD players, which limits its generalizability to other games or gameful settings (Bateman et al. 2011). Also, the player typology has never been empirically validated, which poses a severe threat to using it for scientific purposes (Bateman et al. 2011; Busch et al. 2016). To tackle this issue, Yee (2002) conducted empirical studies of player motivations. These were based on Bartle’s player types: Yee brainstormed potential player motivations guided by Bartle’s work and formulated statements to rate them. In this first article, Yee used factor analysis to validate five motivational factors (Yee 2002). In follow-up work, Yee (2007) empirically derived three main factors that motivate players of online games, namely Achievement, Social Factors and Immersion. Although these empirical studies allow the motivations of online players to be assessed reliably, the limited focus on MUDs, and thus the lack of generalizability, persists (Busch et al. 2016). Instead of relying on player motivations, the BrainHex model (Nacke et al. 2014) is based on a series of demographic game design studies and neurobiological research. It presents seven player types, such as the Seeker (motivated by curiosity) or the Daredevil (motivated by excitement). In a survey with more than 50,000 participants, BrainHex archetypes were assessed using textual descriptions that the authors created. However, an instrument to assess BrainHex archetypes has never been validated, and other researchers have demonstrated substantial flaws in the validity of the model (Busch et al. 2016; Tondello et al. 2019). Therefore, the BrainHex scale cannot reliably be used to classify player preferences.
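
Bartle’s two dimensions form a two-by-two grid, with each player type occupying one quadrant: Achievers act on the world, Explorers interact with the world, Socializers interact with players, and Killers act on players. The sketch below only encodes this quadrant assignment; how a player would be placed on each axis is left open, since, as noted above, the typology was never turned into a validated instrument. The boolean inputs are hypothetical.

```python
def bartle_type(acting: bool, world_oriented: bool) -> str:
    """Place a player in one of Bartle's four quadrants.

    `acting` is True for the action end of the action/interaction axis;
    `world_oriented` is True for world orientation and False for player
    orientation.
    """
    if acting:
        return "Achiever" if world_oriented else "Killer"
    return "Explorer" if world_oriented else "Socializer"
```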

In more recent work, Tondello et al. (2018b) analyzed the dataset from the BrainHex survey and found support for only three factors underlying the seven BrainHex archetypes: action orientation (represented by the Conqueror and Daredevil archetypes), aesthetic orientation (represented by the Socializer and Seeker archetypes) and goal orientation (represented by the Mastermind, Achiever, and Survivor archetypes). Based on the work by Yee (2002, 2007) and on the literature review by Hamari and Tuunanen (2014), the authors suggested adding social orientation and immersion orientation as additional factors. In follow-up work, Tondello et al. (2019) empirically validated this five-factor model together with a scale to assess the five factors. To generate items for each of the five proposed player traits, the researchers used a brainstorming approach in which each member of the research team wrote several candidate items that could be used to score someone on that trait. Afterward, the suggested items were discussed and the best ones were selected.

Instead of focusing on games, the Hexad user-types model, initially proposed by Marczewski (2015), targets gamification (as stated above). In contrast to previous models, which are mostly based on empirical studies and observations, the Hexad model is based on Self-Determination Theory (“SDT”) (Ryan and Deci 2000). The Hexad model consists of six user types, which differ in the degree to which they are driven by their needs for autonomy, competence and relatedness, as defined by SDT, and by purpose. For example, Philanthropists are socially minded; they share knowledge with others and are driven by purpose. Tondello et al. (2016) created a survey to assess Hexad user types. As a first step, a workshop with six experts was held to generate a pool of items for each user type, which were agreed upon through discussion (Tondello et al. 2016; Diamond et al. 2015). Next, the first version of the Hexad scale was introduced, and its ability to explain user preferences for gameful design elements was demonstrated (Tondello et al. 2016). More recently, the scale was slightly adapted and its validity was empirically demonstrated (Tondello et al. 2018a). Högberg et al. (2019) also contributed to user modeling in gamified systems by proposing and validating a questionnaire to assess the gameful experience of a gamified system. Like us, they followed an empirical approach: a pre-study to inform item generation, the creation and refinement of questionnaire items, and a validation study.

To sum up, previous work shows that player and user typologies in the context of games and gameful settings have been under investigation for more than two decades and that this is still an active research field. While the aforementioned studies focused on players or users actively engaging and interacting with a game or gameful system, we shift our focus to viewers, or spectators, of games to account for the increasing popularity of game live-streams. We believe that, although the content of such live-streams is also games, spectating someone else playing a game is a different experience from interacting with it directly. Therefore, we contribute a typology of game live-stream viewers based on an empirical study of their motivations and preferences. Beyond shedding light on viewers’ needs and motivations, we contribute an instrument to assess viewer types and investigate which features in game live-streams are particularly relevant for each viewer type, mirroring to a certain extent the approach of Tondello et al. (2016).

3 Development of a viewer-type questionnaire

The previous section has already shown that classifications of viewers seem possible and that different approaches could be used (e.g., providing features and observing their use (Lessel et al. 2017b), using theories to create a model and relate that to behaviors (Sjöblom et al. 2017; Tondello et al. 2016) or collecting statements and analyzing them (Cheung and Huang 2011)). Similar to Högberg et al. (2019), we decided to use an empirical approach, in our case by focusing on the reported interests of game live-stream consumers. In the following, we present our item development process, the study procedure, and the exploratory analysis results, as well as a discussion of these.

3.1 Item development

For the item development, we started by reviewing existing data we had collected from game live-stream audiences, in which more than 400 participants rated 58 features, concepts and streamers’ behaviors in terms of whether these would be interesting to them (see Lessel et al. (2018) for details on this past study). In accordance with the terminology of that work, we refer to these features, concepts and streamers’ behaviors as “elements” from here on. The idea was that if these elements could be grouped statistically through a principal components Exploratory Factor Analysis (EFA), the resulting clusters might help distinguish conceptual directions of live-stream interest. While these clusters are unlikely to match specific viewer types, we argue that they provide a good basis for conceptual item generation (i.e., potential questions for a viewer-type questionnaire). According to Fabrigar and Wegener (2011), four prerequisites need to be fulfilled before the results of an EFA can be reported and interpreted: (1) every element should correlate by at least 0.3 with at least one other element, and by no more than 0.9 with any other element; (2) the number of participants (N) should exceed the number of elements (x) by at least a factor of three, i.e., \(N > x \times 3\); (3) the Kaiser–Meyer–Olkin (KMO) measure of sampling adequacy, which ranges from 0 to 1, should indicate adequate sampling (as a rule of thumb, values between 0.8 and 1 indicate adequate sampling, whereas values below 0.6 indicate inadequate sampling (Kaiser and Rice 1974)); and (4) Bartlett’s test of sphericity should reach significance. Seven elements had to be eliminated because they did not correlate by at least 0.3 with any other element (1). Since data from 400 participants were available for the remaining 51 elements, the minimum of 153 participants was exceeded, i.e., \(400 > 153 = 51 \times 3\) (2). The KMO value reached .886, indicating adequate sampling (3).
Lastly, the Bartlett test reached significance (\(\chi ^2_\mathrm{Bartlett}(1275) = 10049.65\), \(\textit{p} < .001\)) (4). Thus, all prerequisites of the EFA were met and the analysis could be conducted.
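The four prerequisite checks can be illustrated with a short Python sketch. It assumes a complete numeric response matrix (participants in rows, elements or items in columns) and uses NumPy and SciPy. The function name `efa_prerequisites` and the simulated data are hypothetical and are not the software used for the reported analyses. The KMO value is computed from the correlation and partial (anti-image) correlation matrices. Bartlett’s statistic uses the usual approximation \(\chi^2 = -(N - 1 - (2x + 5)/6)\ln|R|\) with \(x(x-1)/2\) degrees of freedom.

```python
import numpy as np
from scipy import stats


def efa_prerequisites(data: np.ndarray) -> dict:
    """Check four EFA prerequisites on an (n_participants x n_items) response matrix."""
    n, p = data.shape
    r = np.corrcoef(data, rowvar=False)

    # (1) every item needs some |r| >= .3 and no |r| > .9 with another item
    off_diag = np.abs(r - np.eye(p))  # the diagonal of r is 1, so this zeroes it
    max_corr = off_diag.max(axis=0)
    weak_items = np.flatnonzero(max_corr < 0.3).tolist()
    redundant_items = np.flatnonzero(max_corr > 0.9).tolist()

    # (2) more than three participants per item
    enough_participants = n > 3 * p

    # (3) overall Kaiser-Meyer-Olkin measure: correlations vs. partial correlations
    inv_r = np.linalg.inv(r)
    scale = np.sqrt(np.outer(np.diag(inv_r), np.diag(inv_r)))
    partial = -inv_r / scale
    off = ~np.eye(p, dtype=bool)
    r2 = np.sum(r[off] ** 2)
    q2 = np.sum(partial[off] ** 2)
    kmo = r2 / (r2 + q2)

    # (4) Bartlett's test of sphericity (H0: correlation matrix is an identity)
    _, logdet = np.linalg.slogdet(r)
    chi2 = -(n - 1 - (2 * p + 5) / 6) * logdet
    df = p * (p - 1) // 2
    p_value = stats.chi2.sf(chi2, df)

    return {
        "weak_items": weak_items,
        "redundant_items": redundant_items,
        "enough_participants": enough_participants,
        "kmo": kmo,
        "bartlett_chi2": chi2,
        "bartlett_df": df,
        "bartlett_p": p_value,
    }


# Hypothetical data: 400 participants, 51 items sharing one latent factor
rng = np.random.default_rng(42)
latent = rng.normal(size=(400, 1))
responses = latent + rng.normal(size=(400, 51))
result = efa_prerequisites(responses)
print(result["bartlett_df"], round(result["kmo"], 3))
```

With 51 items, the degrees of freedom are \(51 \times 50 / 2 = 1275\), which matches the Bartlett test reported above. In practice, flagged items would be removed and the checks rerun on the reduced set.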

Eight clusters with loadings of > .4 (substantial according to Stevens (1996)) resulted from this EFA on the elements (see “A.1” for the clusters, elements and their loadings), explaining 54% of the variance in the element ratings. This falls short of the common criterion of 60% explained variance for an EFA (Hinkin 1998). However, since these clusters only served to inspire item generation and the structure of the elements themselves was not of interest, this was not an issue at this point. Next, three researchers individually developed items for each of these clusters, and in a first discussion round these were merged, discussed and refined. In this process, special attention was paid to potential similarities between the individual elements from the previous study (Lessel et al. 2018) and the newly generated items. The goal was to ensure that the new items focused on viewers’ impressions of and attitudes toward elements, rather than describing the elements themselves and merely rephrasing them. After this first round, another individual round of item generation and a second discussion round followed. The resulting set consisted of 68 items (see Table 1).

3.2 Procedure

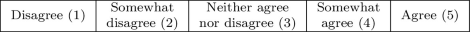

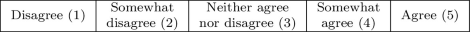

We intended to distribute the derived items via a crowdsourcing platform, so we set up an online questionnaire. Besides the items, it contained questions on the participants’ demographics and their live-stream consumption behavior. The item order was randomized for every participant, and the items were split across several pages so as not to overwhelm or demotivate participants with one long list. Every item was answered on a 5-point scale with the labels Disagree, Somewhat disagree, Neither agree nor disagree, Somewhat agree and Agree. Participants could also select a sixth option to indicate that they had an issue with the question, for example if they did not understand it. If this option was selected, a free text field appeared in which they could describe the issue. To identify careless responses, we included four attention check questions in which participants were asked to select a specific answer. These four questions were randomly interspersed among the 68 items. Additionally, at the end of the questionnaire, we asked whether participants, in their honest opinion, thought that their data should be used, emphasizing that the answer had no impact on payment. Both this question and attention checks have been shown to help detect careless responses (Meade and Craig 2012). Finally, the questionnaire closed with a free text field for comments.

Participants were recruited using Amazon Mechanical Turk and compensated with $1.50 for the study, which took 11 min on average, to meet the minimum wage suggestion of Salehi et al. (2015). Before this main study, we ran a recruiting study to ensure that the main study would be distributed only to US-based participants who actually consume game live-streams. The study was approved by the Ethical Review Board of the Department of Computer Science at Saarland University (No. 19-2-5).

3.3 Participants

Thirty-two of the initial 248 participants were removed for failing at least one attention check, and five participants indicated that we should not use their data. The data of the remaining 211 US participants (gender: 66 female, 138 male, 2 gender variant, 5 preferred not to report; age: 18–24: 30x; 25–31: 85x; 32–38: 58x; 39–45: 19x; >45: 12x; 7 preferred not to report) were used for the analysis. The age distribution roughly resembles that of US video game players in 2021 (as of February 2021). Due to our recruiting study, all participants reported consuming game live-streams, albeit to varying degrees: 9 indicated that they watch less than 1 h of game live-stream content per week, 73 watch 2 to 3 h, 76 watch 4 to 9 h, 36 watch 10 to 18 h, and 17 watch more than 18 h. Most participants reported regularly watching 1 or 2 streamers (68x) or 3 to 4 streamers (97x). Forty participants also indicated that they stream content themselves; 29 of them have an average audience of fewer than 80 viewers, and only three have an average audience of at least 250 viewers.

3.4 Exploratory factor analysis

We first report the results of the prerequisite checks for the principal components Exploratory Factor Analysis (EFA), followed by the analysis. All analyses in this first study were conducted using IBM SPSS Statistics, Version 25.0.

3.4.1 Prerequisites

Before looking into the viewer-type items, we removed the only item reported as unclear (“I like the fact that there are other viewers in the streams”, No. 56; flagged by one participant, see Sect. 3.2). All other prerequisites are identical to the ones in Sect. 3.1, so only the results are reported here. There were no item correlations higher than 0.9. However, we had to remove one item (“I don’t necessarily have to watch the stream live”, No. 16) because it did not correlate by at least 0.3 with any other item (1). Data from 211 participants were available for the remaining 66 items, exceeding the minimum of 198 participants, i.e., \(211 > 198 = 66 \times 3\) (2). The KMO value reached .899, indicating adequate sampling (3). Lastly, the Bartlett test reached significance (\(\chi ^2_\mathrm{Bartlett}(2145) = 8625.01\), \(\textit{p} < .001\)) (4). Thus, all criteria were met and the analysis could be conducted.

3.4.2 Analysis

We used Horn’s parallel analysis (Horn 1965) to determine the number of factors to extract. It is considered one of the most accurate extraction methods. It compares the eigenvalue of each extracted factor with the eigenvalue in the same sequential position obtained from simulated random data, thereby reducing the impact of random data variation on factor extraction (Braeken and Van Assen 2017).
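A minimal Python sketch of Horn’s parallel analysis illustrates the comparison of observed and simulated eigenvalues (reported below as E and SE). It uses eigenvalues of the item correlation matrix, as in a principal components solution. The source does not state whether the simulated reference is the mean or a percentile of the random eigenvalues. The sketch therefore assumes the 95th percentile, normally distributed random data and 100 simulations. The function names are hypothetical.

```python
import numpy as np


def sorted_eigenvalues(x: np.ndarray) -> np.ndarray:
    """Eigenvalues of the item correlation matrix, largest first."""
    return np.linalg.eigvalsh(np.corrcoef(x, rowvar=False))[::-1]


def parallel_analysis(data: np.ndarray, n_sims: int = 100,
                      percentile: float = 95, seed: int = 0):
    """Horn's parallel analysis: retain factors whose observed eigenvalue exceeds
    the eigenvalue of random data of the same shape in the same position."""
    n, p = data.shape
    observed = sorted_eigenvalues(data)
    rng = np.random.default_rng(seed)
    simulated = np.array([sorted_eigenvalues(rng.normal(size=(n, p)))
                          for _ in range(n_sims)])
    reference = np.percentile(simulated, percentile, axis=0)
    n_factors = 0
    for obs, ref in zip(observed, reference):
        if obs <= ref:  # stop at the first position where random data does as well
            break
        n_factors += 1
    return n_factors, observed, reference


# Hypothetical data: 300 participants, 15 items, 3 factors with 5 items each
rng = np.random.default_rng(1)
factors = rng.normal(size=(300, 3))
loadings = np.kron(np.eye(3), np.full((1, 5), 0.8))  # 3 x 15 block structure
items = factors @ loadings + rng.normal(scale=0.6, size=(300, 15))
n_factors, observed, reference = parallel_analysis(items)
print(n_factors)  # 3
```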

Furthermore, we chose an oblimin rotation, since we assumed that the resulting viewer types would not be mutually exclusive, similar to other user classification questionnaires (e.g., the User Type Hexad (Tondello et al. 2018a)). Using the parallel analysis described above, we found stable viewer-type structures for game live-stream viewers in our data, resulting in five factors. Since every item has some loading on every factor, each item was first assigned to the factor on which it loaded highest. This results in one list per factor, which is then sorted by loading in descending order. All items with factor loadings greater than .4 (Stevens 1996) can be used for interpreting the factors, and items with higher loadings carry more interpretive weight. Only the item “I am more interested in the streamer than in the other viewers” loaded substantially on several factors, namely on the resulting viewer types Content Observer and Streamer-focused Observer. As described below, this item was not strong enough to be included in either scale, so none of the remaining items loads on more than one viewer type. Naturally, these lists also differ in length. To turn these lists into scales, internal reliability, as indicated by Cronbach’s \(\alpha \), should be maximized by removing items that lower it. At the same time, excessively long scales, which cause response biases through fatigue and boredom (Schmitt and Stuits 1985), and excessively short scales, which raise validity concerns (Hughes and Cairns 2020), should both be avoided.
Thus, the ideal scale should be short enough to maintain attention and interest but long enough to be a valid instrument, while reaching good reliability with a Cronbach’s \(\alpha \) between .7 and .9 (Nunnally 1994). Prior research has shown that reliable scales can be achieved with as few as four items per scale (Hinkin and Schriesheim 1989), and a scale length of four to six items is recommended (Hinkin 1998). We therefore aimed for four to six items for each of our five scales.
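The assignment rule described above can be sketched in Python: each item goes to the factor on which it loads highest, only loadings above .4 are kept, and each list is sorted in descending order. The loading matrix below is hypothetical; in practice it would be the pattern matrix of the oblimin-rotated solution. Sorting by absolute loading, so that negatively keyed items are ranked by strength, is our assumption. The function names are illustrative.

```python
import numpy as np


def assign_items(loadings, item_names, threshold=0.4):
    """Assign each item to the factor with its highest absolute loading and
    return one list per factor, sorted by absolute loading (descending)."""
    n_items, n_factors = loadings.shape
    lists = {factor: [] for factor in range(n_factors)}
    for i in range(n_items):
        best = int(np.argmax(np.abs(loadings[i])))
        value = float(loadings[i, best])
        if abs(value) > threshold:  # keep only substantial loadings (> .4)
            lists[best].append((item_names[i], value))
    for factor in lists:
        lists[factor].sort(key=lambda pair: abs(pair[1]), reverse=True)
    return lists


def cross_loading_items(loadings, threshold=0.4):
    """Indices of items that load above the threshold on more than one factor."""
    return [i for i in range(loadings.shape[0])
            if int(np.sum(np.abs(loadings[i]) > threshold)) > 1]


# Hypothetical pattern matrix: 5 items x 2 factors
loadings = np.array([
    [0.70, 0.10],
    [0.30, 0.60],
    [0.50, 0.45],
    [0.20, 0.10],
    [0.80, 0.00],
])
names = ["item_a", "item_b", "item_c", "item_d", "item_e"]
print(assign_items(loadings, names))
print(cross_loading_items(loadings))
```

Here `item_d` is dropped because no loading exceeds .4. `item_c` is assigned to the first factor but is also flagged as cross-loading, similar to the streamer-vs-viewers item discussed above.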

Following this procedure, after sorting the lists we determined which of the five factors had the shortest list. That factor still ended up with five items, well within the desired scale length of four to six items, and formed a scale with a high \(\alpha \) of .904. We therefore decided to generate five-item scales from the lists of the other four factors as well. The Cronbach’s \(\alpha \) values for the remaining factors ranged between .805 and .869. The resulting five-factor, five-item questionnaire can be found in Table 2, including the individual factor loadings. Three researchers then examined the items of each factor again to understand the factors and derive definitions for them. The individual results were discussed and consolidated, resulting in the definitions below. Finally, the five viewer types were named based on these definitions. For each viewer type, the abbreviation (e.g., SA), the eigenvalue (E) and its simulated counterpart (SE), as well as the reliability coefficient (i.e., Cronbach’s \(\alpha \)) are given in parentheses:
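Cronbach’s \(\alpha \) for a scale of \(k\) items is \(\alpha = \frac{k}{k-1}\left(1 - \frac{\sum_i \sigma_i^2}{\sigma_X^2}\right)\), where \(\sigma_i^2\) is the variance of item \(i\) and \(\sigma_X^2\) the variance of the summed scale score. The following Python sketch computes it and shows how a five-item scale could be formed from the top of a sorted item list. The data and the helper `alpha_of_top_items` are hypothetical; the reported values come from the authors’ own analysis.

```python
import numpy as np


def cronbach_alpha(scores) -> float:
    """Cronbach's alpha for an (n_participants x k_items) score matrix."""
    scores = np.asarray(scores, dtype=float)
    k = scores.shape[1]
    item_variances = scores.var(axis=0, ddof=1).sum()
    total_variance = scores.sum(axis=1).var(ddof=1)
    return k / (k - 1) * (1 - item_variances / total_variance)


def alpha_of_top_items(scores, ranked_columns, k=5) -> float:
    """Alpha of a scale built from the k highest-loading items
    (ranked_columns lists column indices sorted by loading)."""
    scores = np.asarray(scores, dtype=float)
    return cronbach_alpha(scores[:, list(ranked_columns[:k])])


# Hypothetical data: 8 items driven by one latent trait with decreasing strength
rng = np.random.default_rng(7)
trait = rng.normal(size=(200, 1))
weights = np.linspace(1.0, 0.3, 8)  # columns are already ranked by strength
responses = trait * weights + rng.normal(size=(200, 8))
print(round(alpha_of_top_items(responses, list(range(8)), k=5), 3))
```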

System Alterer (SA; E = 19.67; SE = 2.44; Cronbach’s \(\alpha = .869\) )

This viewer type is characterized by a preference for exerting influence on the streamed game and the stream itself. Opportunities to alter game contents or aspects of the stream, such as context information shown in the corners of the stream, are welcomed and actively used. The interaction with both the streamer and other viewers is aimed at fulfilling the SA’s interests.

Financial Sponsor (FS; E = 6.01; SE = 2.29; Cronbach’s \(\alpha = .904\) )

This viewer type is characterized by a strong willingness to donate to, and thereby financially support, their favorite streamer(s). Their aim is to create or maintain good stream quality. This is accompanied by the normative stance that it is appropriate to support streamers who produce good-quality content.

Content Observer (CO; E = 3.76; SE = 2.18; Cronbach’s \(\alpha = .805\) )

This viewer type is characterized by a desire for an undisturbed stream viewing experience. Interaction and communication with the streamer and other viewers within the live-stream, e.g., via live chat, are of no interest to the CO. Instead, these viewers focus on following the in-game events.

Streamer-focused Observer (SO; E = 2.42; SE = 2.10; Cronbach’s \(\alpha = .822\) )

This viewer type is characterized by an interest in the streamer, who is the central part of the stream-watching experience for the SO. This type shows little interest in following the in-game events and is instead most interested in the streamer’s game experience.

Social Player (SP; E = 2.16; SE = 2.03; Cronbach’s \(\alpha = .858\) )

This viewer type is characterized by a playful orientation, which is reflected in an interest in gamification elements, such as unlockable achievements and collecting points, and in interaction with the streamer through, for example, user-created submissions or discussions between streamers and viewers.

As calculated in a re-run of the analysis on the resulting questionnaire (KMO = .843, \(\chi ^2_\mathrm{Bartlett}(300) = 2682.58\), \(\textit{p} < .001\)), the 25-item solution was able to explain 65.02% of the total item variance, i.e., about two-thirds of the entire variability in the answers of the participants could be explained solely through the viewer-type model, in line with the criterion of 60% (Hinkin 1998).
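For a principal components solution, the share of total item variance explained by the retained components equals the sum of their eigenvalues divided by the number of items, because the eigenvalues of a correlation matrix sum to the number of items. The text does not spell out how the 65.02% figure was computed. The following Python sketch shows this standard computation under that assumption, using a toy correlation matrix; the function name is hypothetical.

```python
import numpy as np


def explained_variance(corr: np.ndarray, n_components: int) -> float:
    """Share of total standardized item variance captured by the first components.

    The eigenvalues of a correlation matrix sum to the number of items, so the
    share is the sum of the retained eigenvalues divided by that number.
    """
    eigenvalues = np.linalg.eigvalsh(corr)[::-1]  # largest first
    return float(eigenvalues[:n_components].sum() / corr.shape[0])


# Toy example: two items correlating at .5; the first component explains 75%
corr = np.array([[1.0, 0.5], [0.5, 1.0]])
print(f"{explained_variance(corr, 1):.0%}")
```

Applied to the 25 × 25 item correlation matrix with five components, the same function would yield the proportion compared against the 60% criterion.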

3.5 Summary

Based on the related work, we expected to find viewer types in game live-streams. Through the EFA on the initial items, we were indeed able to find five reliable viewer-type scales, with five items each (see Table 2), leading to a 25-item questionnaire overall. This addresses our goals G1 and G2. However, all the above results are based on a single sample and could be biased by sampling errors. Similarly, the viewer-type model could be over-fitted to our sample and not applicable to other samples. Furthermore, as expressed with G3, it would be valuable if the viewer-type model could also show predictive validity to underline its practical usefulness, e.g., by allowing prediction of feature preferences in game live-streams. However, we could not investigate this in the first study. Thus, in the following second study we wanted to confirm that our viewer-type model holds with a second sample and that feature preferences could be predicted (thus contributing to G2 and G3).

4 Validating the viewer-type questionnaire and advanced analyses

The second study consists of two parts: In part A, we validate the five-type, five-item questionnaire through a Confirmatory Factor Analysis (CFA). In part B, we investigate whether the viewer types have predictive validity for live-stream features. In the next section, we describe the overall study procedure, followed by sections for the two parts and a summary of the results.

4.1 General procedure

The second study was also set up as an online questionnaire, consisting of the same demographic questions and questions on the live-streaming consumption behavior as in the first study, the 25 items of the viewer-type questionnaire in the first block (part A) and 38 features in the second block (part B) of the experiment. Within each block, the questions were again presented in a random order and across several pages, so as not to overwhelm a participant with one long list. The exact procedures will be described in the respective parts. As for attention checks, seven were included (4 in part A, 3 in part B). Again, a final question asking whether or not the participants thought that we should use their data was included, as well as a free text field for final comments. This study was also approved by the Ethical Review Board of the Department of Computer Science at Saarland University (No. 19-2-5).

4.2 Participants

Participants were again recruited using Amazon Mechanical Turk and compensated with $1.50 for the study, which took 12 min on average, to meet the minimum wage suggestion of Salehi et al. (2015). No participant of the first study could take part in the second study, and again, we ensured that participants were consumers of game live-streams. To achieve sufficient statistical power for our confirmatory factor analysis, which we calculated with structural equation modeling, we targeted a recommended minimum sample size of 100 participants (Soper 2015). We based this estimate on the following parameters: a desired statistical power of .8, an expected effect size of .4, a significance level of \(\alpha = 5\%\), five latent variables (i.e., the viewer types), and 25 observed variables. Rather than relying on the absolute minimum number of participants, we recruited 177 participants. Of these, 24 were removed for failing at least one attention check, and five indicated that we should not use their data. One hundred and forty-eight US participants (gender: 51 female, 95 male, 1 gender variant, 1 preferred not to report; age: 18–24: 20x; 25–31: 51x; 32–38: 43x; 39–45: 23x; >45: 9x; 2 preferred not to report) remained after these exclusions, surpassing the minimum sample size of 100 (Soper 2015). All reported consuming game live-streams, albeit to varying degrees: six indicated that they watched less than 1 h of game live-stream content per week, 52 watched 2 to 3 h, 60 watched 4 to 9 h, 21 watched 10 to 18 h, and 9 watched more than 18 h. Most participants reported regularly watching 1 or 2 streamers (46x) or 3 to 4 streamers (68x). Twenty-four participants also indicated that they streamed content themselves; 19 of them had an average audience of fewer than 60 viewers, and only two had an average audience of at least 200 viewers.
The participants regularly used the following live-stream platforms for consuming gaming content: Twitch (117x), YouTube Gaming/Gaming Live-Streams on YouTube (108x), Mixer (27x), Smashcast (formerly Hitbox/Azubu) (3x) and Facebook/Facebook Gaming (3x).

4.3 Part A: confirmatory factor analysis of the viewer-type questionnaire

In this part, we present confirmation and validation of the questionnaire.

4.3.1 Part A: procedure

We used the same 5-point scale for the 25 viewer-type items as in the first study, but without the option to indicate issues with a question. Given the results of the first study, we assumed that all items were understandable.

4.3.2 Part A: analysis

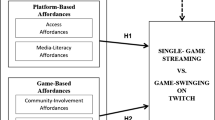

We conducted a Confirmatory Factor Analysis (CFA) to validate the viewer-type model through structural equation modeling (SEM) in R, using the lavaan package with maximum likelihood estimation. The measurement model of the CFA, presented in Fig. 1, shows the viewer types in the large ovals, with their relations (i.e., covariances) next to the lines between them. It also shows the five items per viewer type in squares, with their errors in the small circles. For instance, the bold red line (a covariance of −.63) between CO and SO may reflect their opposing interests, while the bold black line (a covariance of .67) between SA and SP may reflect their shared interactive orientation. The full list of factor loadings, as well as the factor variances and covariances, is given in Table 3. Descriptive statistics of the questionnaire items, i.e., means and standard deviations as well as skewness and kurtosis of the answer distributions, can be found in “A.2.”

When evaluating Confirmatory Factor Analyses, the usual procedure is to first investigate the Chi-squared index and to follow up thereafter with the consideration of so-called “model fit indices,” which are meant to facilitate a better model quality review than the Chi-squared index. Finally, a third step to refine validity considerations is the calculation of composite reliability (CR) and average variance extracted (AVE) estimates, which allow drawing conclusions on convergent and discriminant validity of the viewer types.

Measurement model for the Confirmatory Factor Analysis of the viewer-type questionnaire (\(N = 148\)). Loadings for the items are all significant with \(p < .001\). Note that seven out of ten covariances between viewer types reach significance. Line weight indicates the significance level, with dotted lines indicating non-significant covariances, while color indicates the polarity of the relationship. The arrows between viewer types and items indicate the standardized factor loadings. See also Table 3 for an overview

Overall and Specific Model Fit As mentioned above, one of the first indices to investigate is the Chi-squared index (\(\chi ^2\)). The Chi-squared test statistic easily reaches significance for larger samples, which, in turn, are necessary for model validation. It has therefore been proposed to relate the Chi-squared estimate to its degrees of freedom (Ullman and Bentler 2012), which equal the expected value of the statistic under a correctly specified model. Comparing these two values can thus reduce the impact of sample size differences on the assessment of model validity (Ullman and Bentler 2012). While different cutoff values for this ratio have been proposed (McIver and Carmines 1981; Marsh and Hocevar 1985), a cutoff value of two (i.e., \(\frac{\chi ^2}{\mathrm{d}f} \le 2\)) has been established (Ullman and Bentler 2012). Our CFA reaches significance (\(\chi ^2(265) = 498.11, \textit{p} < .001\)), but the refined criterion is fulfilled (\(\frac{\chi ^2}{\mathrm{d}f} = \frac{498.11}{265} = 1.88 < 2\)), indicating an acceptable overall fit of our viewer-type model. The model fit indices that follow the Chi-squared index were originally developed to bypass this sample size issue inherent in the \(\chi ^2\) test. Among these indices, the root-mean-squared error of approximation (RMSEA) and the Tucker–Lewis Index (TLI) are prominent. Both have recommended cutoff values: RMSEA \(\le \) .08 (Bollen and Long 1993) and TLI \(\ge \) .90 (Sharma et al. 2005). In our data, the RMSEA value of .077 met the cutoff, while the TLI value of .855 fell short. Despite being standard indices for model evaluation, these indices have been questioned regarding their assumed higher reliability (Bentler 1990; Sharma et al. 2005). For example, it has been argued that TLI estimates scatter strongly under certain conditions (Bentler 1990).
Sharma et al. (2005) conducted simulation research to investigate whether the model fit indices are indeed more reliable than the Chi-squared index, i.e., less prone to disruptive factors such as sample size differences. While the RMSEA proved to be insensitive to sample size, the TLI was strongly affected by it, with wide spread for sample sizes below 200. Thus, the conventional TLI cutoff may be overly conservative for such sample sizes and should be applied with care (Sharma et al. 2005). To provide less distorted validity data in light of these limitations, we continued with the convergent and discriminant validity assessment planned as the third step of the CFA.
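The Chi-squared ratio and the RMSEA can be recomputed from the reported \(\chi^2\), degrees of freedom and sample size with the common formula \(\mathrm{RMSEA} = \sqrt{\max(\chi^2 - \mathrm{d}f, 0) / (\mathrm{d}f\,(N - 1))}\). The following Python sketch reproduces the ratio of 1.88 and the RMSEA of .077. Some programs use \(N\) instead of \(N - 1\); with these inputs, both variants round to .077. The TLI function is included for completeness only. It needs the Chi-squared value of the baseline (independence) model, which is not reported here, so the TLI cannot be recomputed from the text. All function names are illustrative.

```python
import math


def chi2_df_ratio(chi2: float, df: int) -> float:
    """Chi-squared divided by its degrees of freedom."""
    return chi2 / df


def rmsea(chi2: float, df: int, n: int) -> float:
    """Root-mean-squared error of approximation (N - 1 in the denominator)."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


def tli(chi2: float, df: int, chi2_null: float, df_null: int) -> float:
    """Tucker-Lewis index; needs the baseline (independence) model's chi-squared."""
    null_ratio = chi2_null / df_null
    return (null_ratio - chi2 / df) / (null_ratio - 1)


# Values reported above for the viewer-type CFA (N = 148)
ratio = chi2_df_ratio(498.11, 265)
approx_error = rmsea(498.11, 265, 148)
print(f"chi2/df = {ratio:.2f}, meets <= 2: {ratio <= 2}")
print(f"RMSEA = {approx_error:.3f}, meets <= .08: {approx_error <= 0.08}")
```

This prints `chi2/df = 1.88, meets <= 2: True` and `RMSEA = 0.077, meets <= .08: True`.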

Convergent and discriminant validity Both composite reliability (CR), with a recommended criterion of .7 (Zaiţ and Bertea 2011), and the average variance extracted (AVE), with a recommended criterion of .5 (Fornell and Larcker 1981), are helpful tools for establishing convergent validity (Fornell and Larcker 1981; Farrell 2010). We first calculated the CR estimates for the viewer types, which take the varying factor loadings (as depicted in Table 3) into consideration. The CR estimates were .88 for SA, .90 for FS, .75 for CO, .83 for SO and .76 for SP, and thus all above the recommended criterion of .7 (Zaiţ and Bertea 2011). The AVE estimates, in turn, indicate the average share of variation in participants’ answers to the items of a viewer type that can be explained by that viewer type alone (Zaiţ and Bertea 2011; Farrell 2010). If, for example, the AVE estimate of SA were larger than .5, more than half (\(50\%\)) of the variation in the answers to the five SA items (1.1 to 1.5 in Table 2) could be traced back to the viewer-type SA. In our data, the overall AVE estimate meets the criterion (AVE\(_\mathrm{overall} = .523\)). However, it has been pointed out that the AVE estimate of each latent variable (here, each viewer type) should also be examined separately (Farrell 2010). While the AVE estimates for SA (.595), FS (.689) and SO (.522) meet the .5 criterion, those of CO (.373) and SP (.431) do not, implying reduced convergent validity for CO and SP. Thus, all CR estimates were adequate, while two out of five AVE estimates (two out of six, if the overall average is included) were not. This discrepancy was, however, already discussed by the developers of the AVE (Fornell and Larcker 1981). They stated that the AVE is a rather conservative measure for establishing validity and that a good CR suffices to conclude that construct validity is adequate.
Following this recommendation and considering the CR estimates (CO: .75, SP: .76) for the two viewer types with weak AVEs (CO: .37, SP: .43), we conclude that convergent validity is adequate.
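Assuming standardized loadings \(\lambda_i\) and uncorrelated measurement errors, the two estimates are \(\mathrm{CR} = (\sum_i \lambda_i)^2 / ((\sum_i \lambda_i)^2 + \sum_i (1 - \lambda_i^2))\) and \(\mathrm{AVE} = \sum_i \lambda_i^2 / k\). The following Python sketch uses hypothetical loadings, not the values from Table 3. It illustrates how a five-item scale can meet the CR criterion while missing the AVE criterion, the pattern observed for CO and SP.

```python
def composite_reliability(loadings) -> float:
    """CR from standardized loadings, assuming uncorrelated measurement errors."""
    total = sum(loadings)
    error_variance = sum(1 - lam ** 2 for lam in loadings)
    return total ** 2 / (total ** 2 + error_variance)


def average_variance_extracted(loadings) -> float:
    """AVE: mean squared standardized loading."""
    return sum(lam ** 2 for lam in loadings) / len(loadings)


# Hypothetical standardized loadings for a five-item viewer type (not from Table 3)
example = [0.8, 0.7, 0.6, 0.5, 0.4]
print(f"CR = {composite_reliability(example):.2f}")        # 0.74, meets .7
print(f"AVE = {average_variance_extracted(example):.2f}")  # 0.38, misses .5
```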

While the CR alone seems to suffice for establishing convergent validity (Fornell and Larcker 1981), a different picture emerges for discriminant validity. To assess the divergence between the viewer types, the AVE should be compared with the so-called “shared variance” (Farrell 2010), i.e., the squared correlation between two viewer types. In substantive terms, this means that, for each set of items “P.1” to “P.5”, the explanatory power of viewer type P must always be higher than that of any other viewer type Q. If this does not hold for a pair, these two viewer types lack discriminant validity. We therefore compared the AVE of each viewer type with its shared variances with the remaining types. Our data show discriminant validity for all viewer types (see Table 4).
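The comparison described above (often called the Fornell–Larcker criterion) can be sketched in Python: a pair of latent variables is flagged when their shared variance \(r^2\) is not below both of their AVEs. The AVEs and correlations below are hypothetical. The correlations must be standardized latent correlations, not unstandardized covariances. The function name is illustrative.

```python
import numpy as np


def fornell_larcker_violations(ave, corr, names):
    """Pairs of latent variables whose shared variance (r^2) is not below both AVEs."""
    shared = np.asarray(corr, dtype=float) ** 2
    violations = []
    for i in range(len(names)):
        for j in range(i + 1, len(names)):
            if shared[i, j] >= min(ave[i], ave[j]):
                violations.append((names[i], names[j]))
    return violations


# Hypothetical AVEs and latent correlations for three constructs
names = ["A", "B", "C"]
ave = [0.60, 0.50, 0.40]
corr = [[1.0, 0.5, 0.2],
        [0.5, 1.0, 0.7],
        [0.2, 0.7, 1.0]]
print(fornell_larcker_violations(ave, corr, names))  # [('B', 'C')]
```

Here B and C share \(0.7^2 = .49\) of their variance, which exceeds the AVE of C (.40), so this pair would lack discriminant validity.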

The squared correlations in Table 4 reveal another interesting result: despite being discriminant, several viewer types are significantly correlated. A similar result can be found for the viewer-type data of the first study. The correlations between viewer types (see Table 5 for the first study and Table 6 for the second study) differ in magnitude and significance between the two studies, but their direction is the same in both. Because non-significant correlations do not, by definition, differ significantly from zero, we only display the significant correlations in Tables 5 and 6. These correlation patterns might point toward underlying higher-order variables, which, in turn, might provide a classification system for viewer types. We aim to investigate these patterns in future work; we could not do so in this study because we had no a priori hypotheses concerning correlations between viewer types.
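Showing only significant correlations, as in Tables 5 and 6, can be sketched in Python with `scipy.stats.pearsonr`. This sketch makes two assumptions the text does not state. First, each participant’s viewer-type score is a single number per type, for example the mean of its five items. Second, no correction for multiple comparisons is applied. The scores below are simulated and the function name is hypothetical.

```python
import numpy as np
from scipy import stats


def significant_correlations(scores, names, alpha=0.05):
    """Pearson correlations between viewer-type scores, keeping only p < alpha."""
    scores = np.asarray(scores, dtype=float)
    kept = {}
    for i in range(len(names)):
        for j in range(i + 1, len(names)):
            r, p = stats.pearsonr(scores[:, i], scores[:, j])
            if p < alpha:
                kept[(names[i], names[j])] = round(float(r), 2)
    return kept


# Hypothetical scale scores: the second type partly mirrors the first
rng = np.random.default_rng(3)
first = rng.normal(size=150)
second = 0.6 * first + rng.normal(scale=0.8, size=150)
third = rng.normal(size=150)
print(significant_correlations(np.column_stack([first, second, third]),
                               ["T1", "T2", "T3"]))
```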

4.3.3 Part A: CFA summary

We described the interpretation of the CFA results as a three-step process. In the first step, the overall model test with the Chi-squared index showed that the model as a whole is suitable for explaining our data. In the second step, we investigated the two fit indices RMSEA and TLI. The RMSEA met the quality criterion, but the TLI was below the adequacy threshold (.855 < .90). This could have been the result of a data mismatch, of an insufficient sample size (albeit larger than the minimum recommended sample size), or of unreliability of the index itself with fewer than 200 participants. However, since both the RMSEA and the sample size were adequate, we considered the second step successful and the specific model fit adequate. In the third and last step, we investigated CR and AVE. We found discriminant validity for all viewer types and convergent validity for all but two (CO and SP), for which convergent validity was reduced (weak AVE, adequate CR). With an adequate model fit and valid viewer types, we conclude that the viewer-type questionnaire is valid as a whole and applicable, which supports G2.
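For reference, the two fit indices of the second step can be computed from chi-square statistics as sketched below. The sketch uses the common textbook formulas. The RMSEA uses an N − 1 denominator, although some SEM software uses N. The TLI compares the fitted model with the independence (null) model. The chi-square values in the example are hypothetical and are not the values obtained in this study.

```python
import math


def rmsea(chi2, df, n):
    """RMSEA point estimate; uses the common N - 1 denominator (some software uses N)."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


def tli(chi2_model, df_model, chi2_null, df_null):
    """Tucker-Lewis index of the model relative to the independence (null) model."""
    ratio_null = chi2_null / df_null
    ratio_model = chi2_model / df_model
    return (ratio_null - ratio_model) / (ratio_null - 1.0)


# Hypothetical chi-square results (not the values obtained in this study).
print(round(rmsea(chi2=150.0, df=100, n=101), 4))     # 0.0707
print(round(tli(200.0, 100, 1000.0, 100), 3) >= 0.90)  # False: 0.889 < .90
```

Because the TLI depends on the chi-square of both the model and the null model, it reacts more strongly to sample size than the RMSEA point estimate. This is consistent with the caution expressed above about interpreting the TLI with fewer than 200 participants.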

In Part B, we further examined the feature preferences of game live-stream viewers by attempting to predict them from viewer-type scores.

4.4 Part B: relationship between features and viewer types

In this part, we present our investigation of the relationships between viewer types and preferences for features and streamers’ behaviors.

4.4.1 Part B: procedure

After Part A, participants were presented with a second block of questions describing 38 features, again in random order and across several pages. We describe how these features were derived in the next section. As an introduction, participants were asked: “For each of these features, we would like you to tell us the likelihood that you would personally prefer watching a live-stream with the respective feature compared to a live-stream without it, all other things being the same. While considering the features, please try not to focus on a specific streamer and instead refer to a hypothetical average streamer. Please do not take into consideration whether or not a feature is already available or feasible given today’s level of technology.” This was done to account for potential contextual effects. Every feature was described in one sentence. Participants rated each feature on a 5-point scale labeled Very unlikely, Rather unlikely, Neither likely nor unlikely, Rather likely, and Very likely, in response to the question “How likely is it that you would personally prefer watching a live-stream with the respective feature compared to a live-stream without it, all other things being the same?”. This abstract wording was chosen so that all features could be rated with the same question. For example, a more concrete question such as “How likely is it that you would use the respective feature?” would suit “Option to change the game’s rules while the streamer is playing it (e.g., as done in Choice Chamber)” but not “Streamer provides regular VLOGs about private life (i.e., without gaming context)”. The following text, including two examples, was shown to the participants at the start of Part B: “In the following, we present to you seven pages with six features each.
Examples: If you are asked to rate the feature “Facebook Messenger integration for communication”, provided that you do not have and do not plan to have a Facebook account, you might rate “Very unlikely” to indicate that there is absolutely no chance that you would prefer a live-stream with this feature since you would never use it. If you are asked to rate the feature “Emoticons in the live-chat of the stream,” provided that you enjoy actively expressing your emotions in text messages, you might rate “Very likely” to indicate that you are very interested in using an array of emoticons and would thus prefer a live-stream with this feature.”
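To analyze the ratings, the five response labels have to be converted into numbers. The sketch below assumes a 1-to-5 coding (Very unlikely = 1 ... Very likely = 5), which the text does not state explicitly. It then computes the mean and sample standard deviation of one feature. The function name and the four responses are hypothetical.

```python
from statistics import mean, stdev

# Assumed numeric coding of the five response labels (1 = Very unlikely ... 5 = Very likely).
LIKERT = {
    "Very unlikely": 1,
    "Rather unlikely": 2,
    "Neither likely nor unlikely": 3,
    "Rather likely": 4,
    "Very likely": 5,
}


def describe_feature(responses):
    """Mean and sample standard deviation of one feature's coded preference ratings."""
    scores = [LIKERT[label] for label in responses]
    return mean(scores), stdev(scores)


# Hypothetical responses of four participants to one feature.
m, sd = describe_feature(["Very likely", "Rather likely", "Very likely", "Neither likely nor unlikely"])
print(f"M={m:.2f}, SD={sd:.2f}")  # M=4.25, SD=0.96
```

Under this coding, a feature with a mean close to 5 and a small standard deviation leaves little variance to be explained by any predictor.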

4.4.2 Part B: feature selection

The features and streamers’ behaviors were chosen based on a review of existing and potential live-stream features. For example, from our experience, we know that many streamers provide video-based blog entries on their lives, i.e., VLOGs. We therefore added this as a potential feature to the list, worded as “Streamer provides regular VLOGs about private life (i.e., without gaming context).” We again examined the elements of Lessel et al. (2018) for their relevance. For example, “Multiple camera perspectives; every viewer can change the perspective for him/herself” was considered interesting by 73.5% of the participants in that study and was reworded here to “Option to change the webcam perspective.” Another inclusion criterion was sufficient appeal. For example, only 22% of the respective participants had classified the element “Availability of chat rooms” of Lessel et al. (2018) as interesting. Including it could therefore have led to a floor effect, i.e., many participants might have disliked the feature so strongly that no substantial differences in their ratings could be found and analyzed. Importantly, in the first study we focused on abstracting away from elements and generating novel items based on element clusters, whereas here we focused on the individual elements themselves. Again, three researchers carried out this process independently and refined the result through discussion.

Distinction of elements and items/features

The elements of Lessel et al. (2018) were already used to a certain extent in the item generation of the first study and again in the feature generation for this part of the study. At first glance, one might therefore assume that associations between the resulting viewer types and the elements would be unsurprising. To mitigate this, it was important to have a reasonable mix of two kinds of features: features that were not part of the work of Lessel et al. (2018), to see whether associations with new elements can be found, and features that were related to the elements and thus potentially to the viewer types. The latter are also important because, although the element clusters served as inspiration for the initial item generation, no element was used 1:1 as a viewer-type item (cf. Sect. 4.4.2). To quantify this, we compared the semantic content of every item and feature with every element. For example, the element “Access to more features for subscribers of the channel” was directly related to the item “I think that viewers who watch the stream a lot should have access to more features in the stream” as well as to the feature “Rewards (e.g., additional features or points) for loyal viewing (e.g., long subscription, frequent commenting, ...)”. In contrast, the element “Availability of channel-specific emotes” was semantically related to neither items nor features, since none of them mention emotes. We found that only nine of the 25 items (36%) of the final viewer-type questionnaire were semantically related to elements of Lessel et al. (2018). Of the features in Part B, 37% were semantically related (R) and 63% semantically unrelated (U) to the elements, which we deemed a good fit for our goal. Table 7 shows the resulting set of features and the viewer types with which we found associations.
Importantly, we do not assume that this set of 38 features captures all relevant or possible live-stream features. Instead, we aim to show that feature preferences in game live-streams can, in principle, be predicted from the audience’s average viewer-type scores.

4.4.3 Part B: analysis

For each of the 38 features and behaviors, we calculated hierarchical regressions of the preference for the respective feature on the five viewer-type mean scores. Predictors should be entered stepwise in order of their importance (Field 2013), and the magnitude of a correlation indicates the strength of the relation. We therefore entered the viewer type with the largest correlation as the predictor in the first step, the viewer type with the second-largest correlation in the second step, and so forth, until no further variance could be explained, i.e., no further incremental validity could be achieved. However, a large explained variance based on a combination of multiple individually non-significant viewer types would be of little use, as it would hinder a practical prediction of features from individual viewer-type scores. Thus, in addition to the aforementioned stop criterion, and for the sake of interpretability and the intended simplified feature prediction, we accepted a lower maximum of explained variance rather than adding non-significant viewer types to the prediction.
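The entry procedure described above can be sketched in Python as follows. This is a simplified illustration rather than the exact analysis pipeline. It assumes NumPy and SciPy, a significance level of α = .05, and the hypothetical helper names `ols_fit` and `hierarchical_entry`. The data in the example are synthetic, and "A", "B", and "C" stand in for viewer types.

```python
import numpy as np
from scipy import stats


def ols_fit(X, y):
    """OLS with intercept; returns R^2 and two-sided p-values of the slope coefficients."""
    X1 = np.column_stack([np.ones(len(y)), X])
    beta, *_ = np.linalg.lstsq(X1, y, rcond=None)
    resid = y - X1 @ beta
    df = len(y) - X1.shape[1]
    sigma2 = resid @ resid / df
    se = np.sqrt(np.diag(sigma2 * np.linalg.inv(X1.T @ X1)))
    p = 2 * stats.t.sf(np.abs(beta / se), df)
    r2 = 1.0 - (resid @ resid) / np.sum((y - y.mean()) ** 2)
    return r2, p[1:]


def hierarchical_entry(scores, y, alpha=0.05):
    """Enter viewer types in order of |r| with y; stop before the first step that yields
    a non-significant predictor. Returns the entered types and the final R^2."""
    order = sorted(scores, key=lambda k: abs(np.corrcoef(scores[k], y)[0, 1]), reverse=True)
    entered, r2_final = [], 0.0
    for name in order:
        candidate = entered + [name]
        r2, p = ols_fit(np.column_stack([scores[k] for k in candidate]), y)
        # Adding one predictor per step, the F test of the R^2 change equals the squared
        # t test of the new slope, so checking every slope covers both stop criteria.
        if np.any(p >= alpha):
            break
        entered, r2_final = candidate, r2
    return entered, r2_final


# Synthetic example: the preference depends on A (positively) and B (negatively), not on C.
rng = np.random.default_rng(0)
n = 200
scores = {name: rng.normal(size=n) for name in ("A", "B", "C")}
y = 0.8 * scores["A"] - 0.5 * scores["B"] + rng.normal(scale=0.5, size=n)
print(hierarchical_entry(scores, y))
```

An empty list of entered predictors corresponds to a feature that cannot be predicted by any viewer type. A negative slope for an entered predictor corresponds to a predictor marked with a minus sign.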

Only one feature (“Option to disable stream pop-ups”, No. 38) could not be predicted by any viewer type. Since this feature has the largest mean score and the lowest standard deviation of all 38 features (M = 4.23, SD = .87), a ceiling effect is likely. The remaining 37 predictable features are listed in Table 8 with the viewer type (“VT”) combination of highest explained variance according to the aforementioned criteria. Note that the shared explained variances between predictors in the regressions do not result from collinearity issues (all VIF < 10 and all tolerances > .10; see footnote 8). For the full statistics of the regressions as per APA standards (Field 2013), please refer to Appendix A.3. Importantly, we argued in Sect. 4.4.2 that a prediction of only semantically related (R) but not of semantically unrelated (U) features would indicate that the viewer-type questionnaire was a mere transformation of the elements used in the preliminary conceptualization process. As shown in Table 8, all features except the aforementioned one could be predicted by the viewer-type questionnaire, irrespective of their semantic relation to the elements. This provides additional evidence for the construct validity of the viewer-type questionnaire. To ease the reading of Table 8, three examples are explained in the following:
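The collinearity check mentioned above can be sketched as follows. The tolerance of predictor j is 1 − R²ⱼ, where R²ⱼ comes from regressing predictor j on the remaining predictors. The VIF is the reciprocal of the tolerance, so the two thresholds (VIF < 10, tolerance > .10) express the same condition. NumPy is assumed, the function name is hypothetical, and the example matrix is synthetic.

```python
import numpy as np


def vif_and_tolerance(X):
    """VIF and tolerance per column of an (n, k) predictor matrix, k >= 2.

    Tolerance_j = 1 - R^2_j from regressing predictor j on the other predictors
    (with intercept); VIF_j = 1 / tolerance_j.
    """
    n, k = X.shape
    vifs, tolerances = [], []
    for j in range(k):
        target = X[:, j]
        others = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])
        beta, *_ = np.linalg.lstsq(others, target, rcond=None)
        resid = target - others @ beta
        r2 = 1.0 - (resid @ resid) / np.sum((target - target.mean()) ** 2)
        tolerances.append(1.0 - r2)
        vifs.append(1.0 / (1.0 - r2))
    return vifs, tolerances


# Synthetic predictor matrix: columns 0 and 1 are nearly collinear, column 2 is independent.
rng = np.random.default_rng(1)
a = rng.normal(size=100)
X = np.column_stack([a, a + rng.normal(scale=0.1, size=100), rng.normal(size=100)])
vifs, tols = vif_and_tolerance(X)
print(all(v < 10 for v in vifs), all(t > 0.10 for t in tols))  # False False
```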

1. Option to hide the live-chat in the streaming platform (Feature 15): The CO score is the strongest predictor and is entered in the first step. It explains about 10% of the variance in the preferences of all viewers. No other score improves this prediction, so the total explained variance results from this one VT alone. For this feature, this means that a live-stream audience of gaming content will be more likely to prefer an option to hide the live-chat in the streaming platform the higher the overall (i.e., averaged across all viewers) mean score of CO is.

2. Option to start individual polls in the stream (Feature 07): The SA score is the strongest predictor and is entered in the first step. Adding the CO score in step 2 increases the total explained variance to 13%. However, higher SA scores are associated with a stronger preference for the feature, whereas higher CO scores are associated with a weaker one. The CO predictor is therefore marked with a minus sign. Thus, a game live-stream audience will be more likely to prefer an option to start individual polls in the stream the higher the overall mean score of SA is, and even more likely the lower the overall mean score of CO is.

3. Automatic read-out of donator’s text message upon donation receipt (Feature 13): The FS score is the strongest predictor and is entered in the first step. Adding the SA score in step 2 and the SP score in step 3 increases the total explained variance to 27%. Thus, a game live-stream audience will be more likely to prefer the automatic read-out of donors’ text messages upon receipt of a donation the higher the overall mean score of FS is, and even more likely the higher the overall mean scores of SA and SP in the streamer’s audience are.
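The audience-level reading used in these examples can be illustrated with a small sketch. First, each viewer-type score is averaged across all viewers of a stream. Then, a linear prediction is applied with signed weights, where a negative weight corresponds to a predictor marked with a minus sign. The function names, the two-viewer audience, the intercept, and the weights are all hypothetical and are not the coefficients reported in Table 8.

```python
from statistics import mean


def audience_profile(viewers):
    """Average each viewer-type score across all viewers of one stream."""
    types = viewers[0].keys()
    return {t: mean(v[t] for v in viewers) for t in types}


def predicted_preference(profile, intercept, weights):
    """Linear prediction from signed weights (a negative weight plays the role of a minus-marked VT)."""
    return intercept + sum(w * profile[t] for t, w in weights.items())


# Hypothetical audience of two viewers and hypothetical weights (not the coefficients from Table 8).
viewers = [{"SA": 4.0, "CO": 2.0}, {"SA": 3.0, "CO": 4.0}]
profile = audience_profile(viewers)  # {'SA': 3.5, 'CO': 3.0}
print(predicted_preference(profile, intercept=1.0, weights={"SA": 0.4, "CO": -0.2}))
```

Because the prediction is linear, evaluating it at the averaged profile gives the same result as averaging the individual viewers' predictions. This is why the interpretations above can be phrased in terms of the audience's overall mean scores.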

4.4.4 Part B: viewer-type predictability summary

The goal of this part of the study was to find connections between individual features and viewer types. Such connections are typically investigated in work on player/user types (e.g., Tondello et al. 2018a) and personality traits (e.g., Orji et al. 2017), as discussed in the related work section. We were able to show that viewer types can be used to predict preferences for features. This not only addresses our goal G3 but also underlines the usefulness of the constructs, especially from a practical perspective (as we discuss below).

5 General discussion

Related work indicates that there seem to be measurable and observable differences between viewers of game live-streams. Based on these indications, we set out not only to identify these differences (G1) but also to make them assessable through a questionnaire that could serve as a standard tool for future use (G2). In two studies, we identified five viewer types that can be assessed with a 25-item questionnaire, which we validated in this paper.

Given our chosen approach (i.e., creating an initial exploratory data set of prototype items), we do not claim that it is impossible to identify further viewer types or alternative classification models (as can also be seen in the game and gamefulness domain; see related work). Rather, our contribution is that, to our knowledge, we are the first to investigate this specifically in the live-streaming domain and to provide an assessment tool. Furthermore, we were able to show relationships between the resulting viewer types and preferences for features and streamers’ behaviors (G3). Of the 38 features, 37 could be significantly predicted by a viewer-type score, and for almost half of the features (18), the prediction could be improved by including additional viewer-type scores. The only exception, “Option to disable stream pop-ups”, reached the highest average preference with the lowest deviation, that is, the highest agreement between participants, and could not be predicted by any individual viewer type. We conclude that all of our goals have been addressed: the viewer-type questionnaire can be applied, the viewer types can be theoretically interpreted, and the scores can be used to predict actual preferences of viewers. As stated before, the 37 features are only examples showing that such a connection exists, and future work can investigate further connections in more depth. In the meantime, the viewer-type definitions provide a structure for classifying features beyond the 37 investigated here.