Abstract

Self-explanation prompts in example-based learning are usually directed backwards: Learners are required to self-explain problem-solving steps just presented (retrospective prompts). However, it might also help to self-explain upcoming steps (anticipatory prompts). The effects of the prompt type may differ for learners with different levels of expertise, with anticipatory prompts being better for learners with more expertise. In an experiment, we employed extensive modelling examples and different types of self-explanation prompts to teach 78 automotive apprentices a complex and job-relevant problem-solving strategy, namely the diagnosis of car malfunctions. We tested the effects of these modelling examples and self-explanation prompts on problem-solving strategy knowledge and skill, self-efficacy, and cognitive load while learning. In two conditions, the apprentices learned with modelling examples and received either retrospective or anticipatory prompts. The third condition was a control condition that received no modelling examples but worked on the corresponding open problems instead. In comparison with the control condition, modelling examples did not promote learning. However, we observed differential effects of the self-explanation prompts depending on the learner’s prior knowledge level. Apprentices with higher prior knowledge learned more when learning with anticipatory prompts. Apprentices with less prior knowledge experienced a greater increase in self-efficacy and a higher germane cognitive load when learning with retrospective prompts. These findings suggest using different self-explanation prompts for learners with different levels of expertise.

Introduction

When learning a problem-solving strategy, learners are often first instructed about the strategy and then study worked-out solutions of problems that have been solved with the instructed strategy (VanLehn, 1996). Such solved example problems can be text-based worked examples (e.g., Najar & Mitrovic, 2013) or video-based modelling examples (e.g., screencasts showing a model’s actions on a computer; van Gog & Rummel, 2010). Studying worked or modelling examples frees up cognitive capacities and is thus more beneficial for learning than independently practising the application of the instructed strategy to solve problems (worked or modelling example effect; McLaren & Isotani, 2011; Renkl, 2014; Sweller, 2006; van Gog et al., 2019; van Gog & Rummel, 2010). Examples are especially beneficial for novices (see expertise-reversal effect; Kalyuga & Renkl, 2010). Regarding (video-based) modelling examples, research has focused on examples illustrating rather brief and simple problem-solving strategies (e.g., Fiorella et al., 2017: assembling an electrical circuit, 90 s; Hoogerheide, 2016; Hoogerheide et al., 2018: calculating current, voltage, and resistance, 240 s). However, learners often also need to acquire longer and more complex problem-solving strategies. For example, automotive apprentices need to learn how to diagnose car malfunctions (Abele, 2018; Abele & von Davier, 2019). Teaching such complex strategies requires more extensive modelling examples, which have rarely been studied. Consequently, the first aim of the paper was to investigate the effectiveness of more complex and, therefore, longer video-based modelling examples for teaching a complex problem-solving strategy.

It is assumed that examples are conducive to learning because they free up cognitive capacities (van Gog et al., 2019). Generative learning activities, stimulated, for example, by self-explanation prompts, ensure that these capacities are used for learning (Renkl & Eitel, 2019). To date, prompts have usually asked learners to explain previous examples or previous steps of an example (i.e., retrospective self-explanation prompts). Prompts targeting the upcoming contents of an example have hardly been investigated (Bisra et al., 2018). Such anticipatory self-explanation prompts are probably more cognitively demanding, but potentially more conducive to learning. Presumably, the learners’ prior knowledge is crucial in determining whether they can manage the more demanding anticipatory prompts. Hence, the second aim of the paper was to compare the effects of retrospective and anticipatory self-explanation prompts for learners with different levels of prior knowledge.

Cognitive load theory and example-based learning

The effects of worked examples and self-explanation prompts can be explained via Cognitive Load Theory (CLT; Sweller et al., 1998, 2011). In this paper, we refer to the still widely used 1998 conception of CLT (see Footnote 1). CLT assumes that working memory capacity is limited and that learning induces three distinct types of cognitive load on working memory: germane cognitive load (GCL), intrinsic cognitive load (ICL), and extraneous cognitive load (ECL; Sweller et al., 1998). If the sum of these three load types exceeds the available working memory capacity, learning fails. GCL describes the working memory load resulting from learning-related activities. Such activities include, for example, organizing and integrating new information with existing prior knowledge (see SOI model; Fiorella & Mayer, 2016). ICL is determined by the learning material’s complexity and the learner’s (prior) knowledge. That is, more complex learning materials (i.e., learning materials with higher element interactivity) induce higher ICL. However, the more prior knowledge learners have about a learning topic, the lower the ICL they experience: Learners with prior knowledge of a topic already have cognitive schemas that enable them to combine multiple elements from the learning material and handle them as a single element in working memory. Element interactivity, and thus ICL, decreases. The third type of cognitive load is ECL, which is unproductive and unrelated to learning. Learning materials containing irrelevant information, redundant repetition, or numerous references induce higher ECL. Given the same task (i.e., same element interactivity) and the same learners (i.e., same prior knowledge), ICL is considered fixed. Therefore, to ensure that sufficient working memory resources are available for GCL, ECL should be minimized (e.g., Mayer & Moreno, 2003).
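The additive assumption in this paragraph can be written schematically as follows. The symbol C_WM for the available working memory capacity is our own shorthand, and the inequality is a conceptual summary rather than a measurement model: CLT does not assume that the three load types can be quantified on a common ratio scale.

```latex
\mathrm{ICL} + \mathrm{ECL} + \mathrm{GCL} \le C_{\mathrm{WM}}
\quad\Longrightarrow\quad
\mathrm{GCL} \le C_{\mathrm{WM}} - \mathrm{ICL} - \mathrm{ECL}
```

With task and learner held constant, ICL is fixed, so in this schema lowering ECL is what raises the upper bound on GCL.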

A well-studied method to minimize ECL is the use of worked examples or modelling examples (see worked example effect; Renkl, 2014; Sweller, 2006; Sweller et al., 1998): Learning with examples usually comprises an introduction of the problem-solving strategy, followed by examples demonstrating how to solve example problems (Renkl, 2014; VanLehn, 1996). Learners who have to solve the corresponding problems themselves instead of studying examples usually apply general problem-solving strategies that are not conducive to learning (e.g., trial-and-error, means-ends analysis); these general strategies may sometimes lead to a correct solution but not to an understanding of the specific strategy to be learned. Hence, such general (weak) strategies can also be considered a learning-irrelevant activity inducing ECL (Renkl, 2014; Sweller et al., 1998; van Gog et al., 2019). Learners studying examples, on the other hand, can devote enough of their cognitive capacities to understanding how the problem-solving strategy is applied to the example problem(s). ECL is thus reduced and GCL increases, effects that should lead to better learning outcomes (Renkl et al., 2009). Much recent research has focused on video-based modelling examples, such as screencasts showing a model’s actions on a computer (van Gog & Rummel, 2010). In the present paper, we also focused on such video-based modelling examples.

Besides beneficial effects on cognitive load and learning, studying (modelling) examples (in comparison to more open learning formats like inventing or independent problem solving) is also known to promote self-efficacy (Glogger-Frey et al., 2015; Hoogerheide et al., 2014, 2018; van Harsel et al., 2019). Self-efficacy describes how confident learners are in performing a specific task (Bandura, 1997). Observing how a model successfully solves a task can strengthen learners’ confidence that they can perform the task as well (Bandura, 1997; Schunk, 1995). For example, van Harsel et al. (2019) investigated how different sequences of studying examples and problem solving affect various motivational aspects, including self-efficacy. They found that studying only examples resulted in greater self-efficacy than only solving problems (van Harsel et al., 2019). Finally, self-efficacy exerts a strong influence on learning outcomes, as it positively affects academic motivation and learning behaviour, such as learning perseverance (Bandura, 1997; Multon et al., 1991; Schunk, 1995).

Modelling examples are known to benefit learning in various domains and settings. However, in most studies, the problem-solving strategies taught were comparatively simple and could be conveyed with short modelling examples (e.g., Fiorella et al., 2017: assembling an electrical circuit, 90 s; Hoogerheide, 2016; Hoogerheide et al., 2018: calculating current, voltage, and resistance, 240 s). We can assume that substantially longer examples than those used in earlier studies, namely examples illustrating more complex problem-solving strategies, also have beneficial effects on learners’ cognitive load, learning outcomes, and self-efficacy. However, to our knowledge, this assumption has hardly been investigated so far. We therefore aimed to replicate the worked or modelling example effect with video-based modelling examples for more complex problem-solving strategies.

Self-explanation prompts

By reducing ECL, examples free up working memory capacity. To ensure that this capacity is used for learning (i.e., that GCL increases), learners should engage in self-explanations (Hilbert & Renkl, 2009), which can be elicited with self-explanation prompts (Atkinson et al., 2003; Renkl et al., 1998). With such prompts, learners are explicitly asked to relate the content of the illustrative example to the problem-solving strategy explained in an earlier instruction. For example, Hilbert and Renkl (2009) used two paper-based worked examples to teach students a circular three-step process of concept mapping that had already been introduced (Hilbert & Renkl, 2008). While worked examples alone failed to promote learning (Hilbert & Renkl, 2009; experiment 1), the combination of worked examples and self-explanation prompts proved beneficial for learning (Hilbert & Renkl, 2009; experiment 2). The self-explanation prompts used in experiment 2 asked students: ‘To which phase of the concept mapping process can you assign what Carolin/Karsten just did? Why?’ (Hilbert & Renkl, 2009, p. 271), with Carolin and Karsten being fictitious students in the examples.

In this case and in most studies, self-explanation prompts refer to aspects already shown in the corresponding examples (e.g., Berthold et al., 2009; Hilbert et al., 2008; Klein et al., 2019). We refer to such backwards-directed prompts as retrospective self-explanation prompts. Another potentially effective type of self-explanation prompt is directed forward: In Renkl (1997), successful learners were, inter alia, those who thought about a problem’s upcoming solution steps (anticipative reasoning). Consequently, anticipatory self-explanation prompts, that is, prompts referring to upcoming problem-solving steps, could also be useful. Referring to the study by Hilbert and Renkl (2009), such an anticipatory prompt could be ‘Which step of concept mapping comes next and what will Carolin/Karsten have to do?’

Anticipatory and retrospective prompts presumably induce different cognitive processes: When answering retrospective self-explanation prompts, learners have to consider only previous steps in the illustrated problem-solving strategy. Conversely, when answering anticipatory prompts, learners have to mentally represent the strategy’s next step. However, they can only do so by building on the problem-solving steps already completed. Consequently, when learning with anticipatory prompts, more elements overall (i.e., the previous and the subsequent step) must be considered, but more relevant information also has to be organized and integrated (Fiorella & Mayer, 2016).

In CLT terms, this could mean two things for learners’ cognitive load: First, anticipatory prompts might induce higher GCL than regular retrospective prompts, as learners are prompted to organize and integrate more information. Second, as more information to be considered results in greater element interactivity, anticipatory prompts will likely also induce higher ICL and might therefore be more demanding. Presumably, only learners with greater prior knowledge will manage the increased demands of such anticipatory prompts while remaining able to invest considerable amounts of GCL. Learners with lower prior knowledge, on the other hand, might be overwhelmed by these increased demands and will thus experience higher ICL (Gerjets et al., 2006; van Merriënboer et al., 2006). Consequently, in terms of learning outcomes, only learners with higher prior-knowledge levels can be expected to benefit from anticipatory prompts.

Self-explanation prompts affect not only learners’ cognitive processes, and thus their cognitive load and learning outcomes, but also their self-efficacy regarding the learning topic. For example, Crippen and Earl (2007) developed a web-based learning tool that used quizzes to teach undergraduate students problem-solving skills in chemistry. Students were allocated to one of three experimental conditions: In the control condition, students learned with the quizzes only. In the other two conditions, students were also provided with worked examples for each quiz item. Additionally, in one of these conditions, students were prompted to self-explain the worked examples. Regarding self-efficacy, the authors found that worked examples alone had no effect, whereas worked examples combined with self-explanation prompts had a positive effect on students’ self-efficacy (Crippen & Earl, 2007). Whether retrospective and anticipatory prompts have different effects on learners’ self-efficacy cannot be answered on the basis of existing research evidence.

Taken together, the potential positive effects of anticipatory prompts supposedly depend on whether learners can cope with the increased demands. Hence, the learners’ prior knowledge likely plays an important role in the relationship between prompt type and cognitive load, learning outcomes, and self-efficacy. However, these theoretical considerations cannot be substantiated with empirical evidence, as anticipatory prompts have seldom been investigated (Bisra et al., 2018).

Present study and research questions

The present study was conducted with automotive apprentices who were taught a strategy for diagnosing complex automotive malfunctions (Abele, 2018; Abele & von Davier, 2019). Although diagnosing malfunctions is a crucial part of automotive technicians’ day-to-day work (Spöttl et al., 2011), only 15% of apprentices master the strategies required to diagnose complex malfunctions at the end of their 3-year apprenticeship; they can thus be considered novices (Abele & von Davier, 2019). We pursued two goals: First, we investigated the use and possible limitations of longer and more comprehensive modelling examples in a screencast video format for teaching a job-relevant and complex problem-solving strategy, namely diagnosing car malfunctions. Second, we compared the effects of anticipatory and retrospective self-explanation prompts for these modelling examples, taking the apprentices’ general prior knowledge of car diagnoses into account. We examined the effects of modelling examples and self-explanation prompts on apprentices’ diagnostic strategy knowledge and skills (i.e., knowledge about and application of the instructed strategy), self-efficacy, and cognitive load. Diagnostic strategy knowledge and skills and self-efficacy were measured before and after the intervention; cognitive load was measured only after the intervention.

We investigated the following hypotheses regarding modelling examples:

- H1: Following the worked or modelling example effect (Renkl, 2014; Sweller, 2006), we expected a greater increase in diagnostic strategy knowledge and skills from a pretest to a posttest when the apprentices learned with modelling examples than when apprentices practised applying the diagnostic strategy by solving open problems.

- H2: We expected a greater increase in self-efficacy among apprentices learning with modelling examples than among those practising applying the strategy (Crippen & Earl, 2007; Schunk, 1995).

- H3: Following the example-based learning literature (e.g., Renkl et al., 2009), we expected apprentices in the modelling example condition to perceive lower extraneous and higher germane cognitive load while learning than apprentices practising to apply the strategy.

Moreover, we were interested in whether the effects of different self-explanation prompts depend on prior knowledge. However, since these effects have hardly been researched so far, we formulated no specific hypotheses. Instead, we posed these three open research questions:

- RQ1: Do anticipatory and retrospective self-explanation prompts reveal differential effects on the development of apprentices’ diagnostic strategy knowledge and skills, and does their prior knowledge moderate these effects?

- RQ2: Do anticipatory and retrospective prompts exert differential effects on the development of apprentices’ self-efficacy, and does their prior knowledge moderate these effects?

- RQ3: Do anticipatory and retrospective prompts demonstrate differential effects on apprentices’ extraneous, intrinsic, and germane cognitive load while learning, and does their prior knowledge moderate these effects?

Methods

Participants

Originally, 78 apprentices participated in our experiment. Because of technical problems with the survey software, only 67 complete data sets could be analysed. On average, apprentices were 20.85 years old (SD = 2.74); 65 were male and two were female. German was the first language of 57 apprentices, and 10 reported an additional first language. Seven apprentices had a university entrance qualification (Abitur), 55 apprentices had a secondary school leaving certificate (Mittlere Reife), and five apprentices had a lower secondary school leaving certificate (Hauptschulabschluss).

To determine the required sample sizes, we conducted two a-priori power analyses with G*Power 3.1 (Faul et al., 2007), aiming for a power of 0.80. Based on previous studies on the worked example effect (e.g., Nievelstein et al., 2013; Schwonke et al., 2009; van Gog et al., 2011) and self-explanation prompts (e.g., Atkinson et al., 2003; Hilbert & Renkl, 2009), we expected medium effect sizes (Cohen’s f > 0.25 or η² > 0.06; Cohen, 1988). For the analyses regarding hypotheses H1 and H2 and research questions RQ1 and RQ2 (i.e., repeated measures analyses of variance, RM-ANOVAs), the required sample size was N = 34 (about half of the collected sample). For the analyses regarding hypothesis H3 and research question RQ3 (i.e., analyses of variance, ANOVAs), the required sample size was N = 128. As we had to stop collecting data early because of school closures during the COVID-19 pandemic, the required sample size for the ANOVAs could not be reached. A larger sample might have enabled us to detect additional effects. However, the effects we did find can still be interpreted.
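The ANOVA figure and the link between the two effect-size benchmarks can be checked with a short script. This is a sketch, not the G*Power computation itself: it assumes a fixed-effects one-way ANOVA with two groups (as in the between-subjects comparisons for H3 and RQ3), α = .05, and the noncentrality parameter λ = f²·N that is standard for this test. The RM-ANOVA estimate (N = 34) additionally depends on assumed correlations between the repeated measures, which are not reported here, so it is not reproduced.

```python
from scipy import stats


def cohens_f_from_eta_sq(eta_sq: float) -> float:
    """Convert eta squared to Cohen's f: f = sqrt(eta^2 / (1 - eta^2))."""
    return (eta_sq / (1.0 - eta_sq)) ** 0.5


def anova_power(f: float, n_total: int, k_groups: int, alpha: float = 0.05) -> float:
    """Power of the omnibus F test in a fixed-effects one-way ANOVA.

    Uses the noncentral F distribution with noncentrality lambda = f**2 * N.
    """
    df1 = k_groups - 1
    df2 = n_total - k_groups
    f_crit = stats.f.ppf(1.0 - alpha, df1, df2)   # critical F under H0
    nc = f ** 2 * n_total                         # noncentrality under H1
    return stats.ncf.sf(f_crit, df1, df2, nc)


def required_n(f: float, k_groups: int, alpha: float = 0.05, power: float = 0.80) -> int:
    """Smallest total N (searched upwards) whose power reaches the target."""
    n = k_groups + 2
    while anova_power(f, n, k_groups, alpha) < power:
        n += 1
    return n


print(round(cohens_f_from_eta_sq(0.06), 3))  # 0.253
print(required_n(0.25, k_groups=2))
```

The conversion shows that η² = 0.06 and f = 0.25 describe essentially the same medium effect. The second line should land at or very close to the N = 128 reported above.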

Design and procedure

The experiment comprised two sessions separated by approximately 10 days. Table 1 shows the detailed procedure. Session one included the pretests; session two comprised the intervention and the posttests. In the intervention in session two, apprentices in all conditions first learned about the diagnostic strategy with instructional videos and organizational prompts. Then, they learned according to their randomly assigned experimental condition: Two groups received modelling examples, one (n = 21) with retrospective self-explanation prompts and the other (n = 25) with anticipatory self-explanation prompts. The third group (control, n = 21) received no modelling examples and no self-explanation prompts.

The entire study took place on computers in the apprentices’ schools. All learning and testing materials, which can be requested from the first author, were presented digitally via the page-based online survey tool LimeSurvey. Once apprentices had left a page, they could not go back. We told participants when we expected them to have completed a phase and to proceed with the next phase. In this way, we ensured equal time on task within and between conditions (see maximum durations in Table 1).

Learning materials

In the experimentally varied intervention, apprentices learned a complex diagnostic strategy intended to help them diagnose car malfunctions in a structured way. Both the development of this strategy and the intervention are described in detail by Meier et al. (2022). The cyclical diagnostic strategy comprises four steps: (1) formulating a hypothesis about possible causes of a malfunction, (2) planning a measurement to test this hypothesis, (3) carrying out the measurement, and (4) evaluating the measurement results and the hypothesis. Steps one and two include additional sub-principles. Consequently, this diagnostic strategy can be considered a complex problem-solving strategy (Abele, 2018; Abele & von Davier, 2019; Meier et al., 2022).

The intervention comprised two learning phases (see Table 1). In the first learning phase, apprentices in all conditions watched five animated instructional videos explaining the strategy (16:33 min, Fig. 1). All participants also completed four practice tasks during this phase, which served as organizational prompts (Roelle et al., 2017), and received the correct solution. Learning phase one took 35 min.

Fig. 1 Screenshots of the instructional videos (in German). Note: The top left picture shows the introduction to the diagnostic strategy; the top right picture explains a sub-principle in step one; the bottom left picture explains how to plan a measurement with an electrical circuit diagram in step two; the bottom right picture gives an overview of the complete diagnostic cycle in step four.

In learning phase two, we implemented both experimental variations. The first experimental variation concerned the modelling examples: Participants in the two modelling example conditions (i.e., both in the retrospective and in the anticipatory self-explanation prompt condition) received two video-based modelling examples showing an expert applying the diagnostic strategy in a computer simulation (Gschwendtner et al., 2009; Meier et al., 2022). Each diagnostic step was illustrated in a separate video. Hence, both modelling examples consisted of several videos (first example: 12 videos, 25:50 min; second example: 10 videos, 19:37 min). Figure 2 shows how the expert uses the electrical circuit diagram in the computer simulation to plan a measurement in step two. Figure 3 illustrates how the expert then executes the corresponding measurement. Participants in the control condition did not receive the modelling examples but tried to diagnose the same problems themselves in the computer simulation (Gschwendtner et al., 2009; Meier et al., 2022). Hence, instead of studying the worked-out solutions to the two diagnostic problems in the modelling examples, participants in the control condition were required to solve the problems independently, that is, to practise applying the diagnostic strategy on their own.

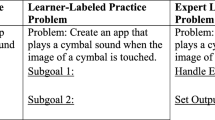

After each video of the modelling examples, participants answered the same self-explanation prompt in writing. These prompts implemented the second experimental variation, as their wording depended on the condition: In the retrospective self-explanation prompt condition, the prompt read as “Which troubleshooting step was just completed? Explain how you will proceed with this step and why it is important for troubleshooting (in general)”. In the anticipatory self-explanation prompt condition, the prompt was “Which troubleshooting step comes next? Explain how you will proceed with this step and why it is important for troubleshooting (in general)”. For the first four prompts, participants were supported by fill-in-the-blank self-explanation prompts (i.e., assisting self-explanation prompts; Berthold et al., 2009). For all subsequent prompts, participants received suggestions for how to begin the first sentence of their answer. Participants did not receive individual feedback; instead, after answering each prompt, they received the correct answer, that is, an example of how the respective prompt could have been answered correctly.

As participants in the control condition did not receive the modelling examples, they did not receive any self-explanation prompts.

Testing materials

To investigate the effects of modelling examples and of the different self-explanation prompts (the latter depending on the learners’ general prior knowledge) on diagnostic strategy knowledge and skills, self-efficacy, and cognitive load, we used several tests (see Table 1): To assess general prior knowledge about car diagnoses, we used two different tests in session one. For diagnostic strategy knowledge and skills (i.e., knowledge about and application of the instructed diagnostic strategy), three tests were given in both session one (i.e., before the intervention) and session two (i.e., after the intervention). Likewise, a questionnaire assessing the apprentices’ self-efficacy in performing diagnoses was used in sessions one and two. Finally, a questionnaire assessing the apprentices’ cognitive load was given after the intervention in session two. All these tests are described below. Most of them contained both closed and open items. Closed items were scored automatically. For all open items, the first author and a subject matter expert (i.e., the second author) developed a coding scheme based on ideal responses to the different tests, that is, responses perfectly in line with the taught diagnostic strategy. In addition, we looked for alternative solutions in all participants’ responses that could be assessed as similarly good from a subject matter perspective. A student assistant and the first author then scored 25% of all answers and adjusted the coding schemes until reaching an interrater reliability of Cohen’s kappa > 0.8. The student assistant then independently scored the remaining answers.
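Cohen’s kappa, the agreement criterion used here, corrects the raw proportion of matching codes for the agreement expected by chance from each rater’s marginal code frequencies. A minimal sketch in plain Python follows; the rater codes in the usage lines are invented for illustration and are not data from this study.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who coded the same answers (nominal codes)."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must code the same, non-empty set of answers")
    n = len(rater_a)
    # Observed agreement: share of answers that received the same code from both raters.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: sum over codes of the product of both raters' marginal proportions.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    codes = freq_a.keys() | freq_b.keys()
    p_chance = sum(freq_a[c] * freq_b[c] for c in codes) / n ** 2
    if p_chance == 1.0:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (p_observed - p_chance) / (1.0 - p_chance)


# Hypothetical 0/1 codes for ten open answers (not study data).
a = [1, 1, 0, 0, 1, 0, 1, 1, 0, 1]
b = [1, 1, 0, 1, 1, 0, 1, 0, 0, 1]
print(round(cohens_kappa(a, b), 3))  # 0.583
```

Although the raters agree on 8 of 10 answers here, kappa stays well below the 0.8 criterion, which is the situation in which the coding scheme would be revised and the sample re-scored.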

General Prior Knowledge tests

As a first measure of general prior knowledge about car diagnoses, we selected five out of 24 items in the diagnosis-relevant reception competence (DRC) test by Norwig et al. (2021). This competence describes the ability to read various documents relevant to the diagnosis (e.g., electrical circuit diagrams) and can thus be seen as prerequisite knowledge for car diagnoses. For example, we gave participants a schematic diagram and a photo of an engine compartment and asked them to use the schematic diagram to locate a particular component in the realistic photo. We selected items with a midrange solution rate (ranging from 32 to 71% in Norwig et al., 2021) to prevent floor and ceiling effects and with the highest item-total correlation (> 0.43 for all 5 items).

Second, we selected three of seven items of a partial skills test by Abele (2014) with a high item-total correlation (between 0.48 and 0.60 in Abele, 2014). In these items, participants were instructed to perform specific measurements in the simulation and to evaluate whether the measurement results indicated a malfunction or not.
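The two item-selection criteria described above (a midrange solution rate and a high item-total correlation) can be illustrated with a small NumPy sketch. The persons × items layout of 0/1 scores, the cut-off values, and the use of the corrected item-total correlation (each item correlated with the sum of the remaining items) are assumptions for illustration; the text does not state which variant Norwig et al. (2021) or Abele (2014) reported.

```python
import numpy as np


def item_statistics(scores):
    """Solution rate and corrected item-total correlation for each item.

    scores: persons x items matrix of 0/1 item scores (hypothetical layout).
    Each item is correlated with the sum of the *other* items, so it is not
    correlated with itself. Items without variance yield NaN correlations.
    """
    x = np.asarray(scores, dtype=float)
    solution_rate = x.mean(axis=0)
    total = x.sum(axis=1)
    item_total_r = np.array([
        np.corrcoef(x[:, j], total - x[:, j])[0, 1] for j in range(x.shape[1])
    ])
    return solution_rate, item_total_r


def select_items(scores, rate_range=(0.30, 0.75), min_r=0.40, k=None):
    """Indices of items with a midrange solution rate and r >= min_r,
    ranked by item-total correlation (highest first); cut-offs are illustrative."""
    rate, r = item_statistics(scores)
    ok = (rate >= rate_range[0]) & (rate <= rate_range[1]) & (r >= min_r)
    candidates = np.flatnonzero(ok)
    ranked = candidates[np.argsort(-r[candidates])]
    return ranked if k is None else ranked[:k]
```

Setting `k=5` or `k=3` would mirror the number of items retained from each test; the ranking step simply prefers the items that discriminate best among those with a usable difficulty.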

Diagnostic strategy knowledge and skills tests

We administered three different tests to measure the apprentices’ diagnostic strategy knowledge and skills in the pretest (i.e., in session one) as well as in the posttest (i.e., after the intervention in session two). First, the strategy description test measured conceptual knowledge and comprised two questions asking participants (1) to describe their troubleshooting procedure in a situation where they receive little assistance from a computer-based expert system (i.e., complex diagnostic problems) and (2) to explain how they would narrow down which components might be responsible for a malfunction.

Second, in the strategy completion test, apprentices carried out or described (parts of) steps of the diagnostic strategy in four different scenarios. Within these scenarios, closed and open questions were used. The former dealt, for example, with which diagnostic step should be taken next in the current scenario. In the open-ended questions, the apprentices, for example, studied a circuit diagram and described an appropriate measurement.

Third, to test diagnostic skills, participants performed diagnoses in the computer simulation. They were given a description of the malfunction and then diagnosed it. Finally, participants described the cause of the malfunction and how it could be repaired. Participants performed the first diagnosis in both the pretest in session one and the posttest in session two, and an additional second diagnosis in the posttest only.

Self-efficacy and cognitive load

In both the pretest and the posttest, before performing the first diagnosis in the computer simulation, participants rated their self-efficacy regarding this diagnosis with five items on a seven-point Likert scale (Cronbach’s α = 0.89). These items were developed based on Bandura’s (2006) guide for constructing self-efficacy scales (Table 2). After the intervention, participants rated their intrinsic (two items), germane (two items), and extraneous cognitive load (three items) on a seven-point Likert scale (Table 2). These items were developed and validated by Klepsch and colleagues (Klepsch et al., 2017; Klepsch & Seufert, 2020, 2021). Reliability was acceptable (intrinsic load: Cronbach’s α = 0.74; germane load: Cronbach’s α = 0.84; extraneous load: Cronbach’s α = 0.60).
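For reference, Cronbach’s α as reported above compares the sum of the item variances with the variance of the scale sum score. The sketch below is a minimal NumPy version, assuming a persons × items matrix of ratings without missing values; it is not the SPSS routine used for the reported values.

```python
import numpy as np


def cronbach_alpha(items) -> float:
    """Cronbach's alpha for a persons x items matrix of ratings.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of the sum score)
    """
    x = np.asarray(items, dtype=float)
    k = x.shape[1]
    if k < 2:
        raise ValueError("alpha needs at least two items")
    item_var = x.var(axis=0, ddof=1)           # sample variance of each item
    total_var = x.sum(axis=1).var(ddof=1)      # sample variance of the sum score
    return k / (k - 1) * (1.0 - item_var.sum() / total_var)
```

For example, with three respondents rating two items as (1, 1), (2, 3), and (3, 2), each item has variance 1 and the sum score has variance 3, giving α = 2 × (1 − 2/3) ≈ 0.67.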

Data analyses

To test the effects of modelling examples and self-explanation prompts on variables measured in both the pretest (i.e., session 1) and the posttest (i.e., session 2), we ran repeated measures analyses of variance (RM-ANOVAs) with timepoint (pretest versus posttest) as within-subjects variable and either example condition (modelling examples yes versus no; for H1 and H2) or self-explanation prompt condition (retrospective versus anticipatory prompts; for RQ1 and RQ2) as between-subjects variable. For the latter, the mean-centered scores on both prior knowledge tests were included as additional continuous factors (Schneider et al., 2015). Significant three-way interactions of timepoint, prompt condition, and a moderating prior knowledge test score were further explored using the Johnson-Neyman procedure, which identifies boundaries of significance along the continuous moderator (i.e., prior knowledge test scores). In other words, we identified the (mean-centered) prior knowledge test scores at which the difference in the corresponding dependent variable between the retrospective and the anticipatory prompt condition reached significance at α = 0.05. For participants with (mean-centered) prior knowledge test scores beyond such a boundary, the difference in the corresponding dependent variable between the retrospective and anticipatory prompt conditions was thus significant (Hayes & Matthes, 2009; Montoya, 2019).
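The Johnson-Neyman boundaries can be sketched as follows, under stated assumptions. With only two timepoints, the timepoint × condition × prior knowledge interaction can equivalently be analysed as a condition × prior knowledge interaction on posttest-minus-pretest difference scores (the two-instance repeated-measures case discussed by Montoya, 2019). In a regression of the difference score on condition (coded 0/1), a mean-centered prior knowledge score m, and their product, the conditional condition effect is b_cond + b_inter·m, and the boundaries are the values of m at which its t statistic equals the critical value. The function names, the 0/1 coding, and the single moderator are illustrative assumptions; the model reported here included both prior knowledge scores and was run in SPSS.

```python
import numpy as np
from scipy import stats


def ols_with_cov(X, y):
    """Ordinary least squares: coefficients, their covariance matrix, residual df."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n, p = X.shape
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    sigma2 = resid @ resid / (n - p)          # residual variance estimate
    cov = sigma2 * np.linalg.inv(X.T @ X)     # classical (non-robust) covariance
    return beta, cov, n - p


def johnson_neyman(b_cond, b_inter, var_cond, var_inter, cov_ci, df,
                   alpha=0.05, t_crit=None):
    """Moderator values m at which b_cond + b_inter * m is exactly significant.

    Solves (b_cond + b_inter*m)**2 == t_crit**2 * Var(b_cond + b_inter*m),
    with Var = var_cond + 2*m*cov_ci + m**2 * var_inter (two-sided test).
    Returns the real boundaries in ascending order (zero, one or two values).
    """
    if t_crit is None:
        t_crit = stats.t.ppf(1 - alpha / 2, df)
    t2 = t_crit ** 2
    # Coefficients of the quadratic a*m**2 + b*m + c = 0.
    a = b_inter ** 2 - t2 * var_inter
    b = 2 * (b_cond * b_inter - t2 * cov_ci)
    c = b_cond ** 2 - t2 * var_cond
    if np.isclose(a, 0.0):
        return [] if np.isclose(b, 0.0) else [-c / b]
    disc = b * b - 4 * a * c
    if disc < 0:
        return []
    root = np.sqrt(disc)
    return sorted([(-b - root) / (2 * a), (-b + root) / (2 * a)])
```

Given hypothetical arrays `gain` (posttest minus pretest), `cond` (0/1), and `pk_c` (mean-centered prior knowledge), one would build `X = np.column_stack([np.ones_like(pk_c), cond, pk_c, cond * pk_c])`, call `beta, cov, df = ols_with_cov(X, gain)`, and pass `beta[1], beta[3], cov[1, 1], cov[3, 3], cov[1, 3], df` to `johnson_neyman`. Whether the condition effect is significant inside or outside two boundaries depends on the sign of the leading coefficient, so checking the conditional effect at one value on each side settles the interpretation.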

For variables measured only once (session 2), we conducted analyses of variance (ANOVAs) with either example condition (modelling examples yes versus no; H1 and H3) or self-explanation prompt condition (retrospective versus anticipatory prompts; RQ1 and RQ3) as the between-subjects factor.
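For a single between-subjects factor, the ANOVA F statistic is the ratio of the between-group to the within-group mean square. The stdlib sketch below assumes one plain list of scores per condition; the function name and data are hypothetical, and it covers only the single-factor case (it omits, for example, the continuous prior knowledge scores included in the prompt analyses). With only one factor, η²partial equals SS_between / (SS_between + SS_within).

```python
from statistics import mean


def one_way_anova(*groups):
    """Between-subjects one-way ANOVA on lists of scores, one list per condition.

    Returns (F, df_between, df_within, ss_between, ss_within).
    """
    if len(groups) < 2:
        raise ValueError("need at least two groups")
    scores = [x for g in groups for x in g]
    grand_mean = mean(scores)
    group_means = [mean(g) for g in groups]
    # Spread of the group means around the grand mean, weighted by group size.
    ss_between = sum(len(g) * (m - grand_mean) ** 2
                     for g, m in zip(groups, group_means))
    # Spread of individual scores around their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    df_between = len(groups) - 1
    df_within = len(scores) - len(groups)
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within, ss_between, ss_within


# Hypothetical scores for two conditions (not study data).
f, df1, df2, ssb, ssw = one_way_anova([1, 2, 3], [4, 5, 6])
print(f"F({df1}, {df2}) = {f:.2f}, partial eta^2 = {ssb / (ssb + ssw):.3f}")
```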

We used a significance level of α = 0.05 for all analyses. As the effect size, we report partial eta squared (η²partial), with values of 0.01, 0.06, and 0.14 indicating small, medium, and large effects, respectively (Cohen, 1988; Lakens, 2013). Analyses were conducted with IBM SPSS Statistics 27.
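When only an F statistic and its degrees of freedom are available, η²partial can be recovered as F·df_effect / (F·df_effect + df_error) (Lakens, 2013). The sketch below applies this to the two F(1, 65) values reported at the start of the Results section and labels them with the benchmarks above. The function names and the "below small" label for values under 0.01 are our own assumptions, and these effect sizes are illustrative computations, not values reported by the authors.

```python
def partial_eta_squared(f, df_effect, df_error):
    """Partial eta squared from an F test: F*df_effect / (F*df_effect + df_error)."""
    return (f * df_effect) / (f * df_effect + df_error)


def cohen_label(eta_p2):
    """Label using the 0.01 / 0.06 / 0.14 benchmarks (Cohen, 1988)."""
    if eta_p2 >= 0.14:
        return "large"
    if eta_p2 >= 0.06:
        return "medium"
    if eta_p2 >= 0.01:
        return "small"
    return "below small"  # Hypothetical label; the benchmarks define no category here.


for f in (4.227, 7.727):
    es = partial_eta_squared(f, 1, 65)
    print(f"F(1, 65) = {f}: partial eta^2 = {es:.3f} ({cohen_label(es)})")
```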

Results

Apart from two exceptions, there were no differences in demographic variables, prior knowledge tests, or the first measurements of the repeated measures between the two example conditions or between the two prompt conditions (all p > .05). First, the two example conditions differed in age, F(1, 65) = 4.227, p = .044. However, as age did not correlate with any of the dependent variables (or with the change in the dependent variables with repeated measures), we consider this difference negligible. Second, in the pretest, apprentices in the modelling example condition scored significantly higher in the first diagnosis in the simulation than apprentices in the control condition (see Table 3), F(1, 65) = 7.727, p = .007. This difference must be taken into account when interpreting our results. Below, we first report the effects of modelling examples and then the effects of retrospective versus anticipatory self-explanation prompts.

Effects of modelling examples

Table 3 presents the descriptive data, and Table 4 shows the results of the statistical tests.

Tables 3 and 4 indicate significant main effects of timepoint, with large effect sizes, on the strategy description and strategy completion test scores: In both tests, participants in both conditions scored significantly higher in the posttest than in the pretest. However, there were no interaction effects of example condition and timepoint on these scores; hence, this improvement was not larger in the modelling example condition. Self-efficacy showed neither main effects of timepoint or example condition nor an interaction effect.

We observed a significant main effect of timepoint and an interaction effect of example condition and timepoint on the score in the first diagnosis. However, both effects arise from the pretest difference in this score that we pointed out at the beginning of the Results section. Thus, these effects should be disregarded.

Regarding the variables measured only once, we detected no effects of modelling examples on participants’ score in the second diagnosis or on their ICL, GCL, or ECL.

Taken together, we could not confirm hypotheses H1 to H3: Modelling examples did not lead to a greater increase in diagnostic strategy knowledge and skills (H1) or in self-efficacy (H2), and participants in the modelling example condition did not perceive a lower ECL or a higher GCL (H3).

Effects of retrospective versus anticipatory self-explanation prompts

Table 5 shows the descriptive data of the dependent variables for the two self-explanation prompt conditions. Table 6 shows the results of the statistical tests of the effects of the different self-explanation prompts.

As in the comparison of the two example conditions, we found significant main effects of timepoint, with large effect sizes, on the strategy description and strategy completion test scores.

Regarding research questions RQ1 and RQ2, we detected no interaction effects of timepoint and prompt condition on any of the dependent variables. When participants’ prior knowledge was also considered, however, two significant three-way interactions of timepoint, prompt condition, and DRC test score emerged: one on the strategy description test score (RQ1) and one on self-efficacy (RQ2). Concerning strategy description test scores (see Fig. 4), the Johnson-Neyman procedure indicated that for learners with mean-centered DRC test scores above 1.68, that is, participants with higher prior knowledge, retrospective prompts had detrimental effects and anticipatory prompts had beneficial effects on the change in strategy description test scores, t(42) = 2.02, p = .05.

Fig. 4 Scatter plot of grand mean-centered DRC test scores against the difference in strategy description test scores for the retrospective and the anticipatory prompt condition. Note. The differential effect of retrospective and anticipatory prompts on the difference in strategy description test scores is significant to the right of the long-dashed vertical line (DRC test scores > 1.68).

Regarding self-efficacy (RQ2; see Fig. 5), the Johnson-Neyman procedure indicated that for participants with mean-centered DRC test scores below −0.85, that is, participants with lower prior knowledge, retrospective prompts had beneficial effects and anticipatory prompts had detrimental effects on the change in self-efficacy, t(42) = −2.02, p = .05.

Fig. 5 Scatter plot of grand mean-centered DRC test scores against the difference in self-efficacy for the retrospective and the anticipatory prompt condition. Note. The differential effect of retrospective and anticipatory prompts on the difference in self-efficacy is significant to the left of the long-dashed vertical line (DRC test scores < −0.85).

Finally, regarding RQ3, ECL was lower in the anticipatory prompt group. Moreover, we identified a significant two-way interaction of prompt condition and DRC test score on GCL (see Fig. 6). The Johnson-Neyman procedure showed that for participants with lower prior knowledge (mean-centered DRC test scores < −0.98), retrospective prompts induced a higher GCL than anticipatory prompts, t(42) = −2.02, p = .05.

Fig. 6 Scatter plot of grand mean-centered DRC test scores against germane cognitive load (GCL) for the retrospective and the anticipatory prompt condition. Note. The differential effect of retrospective and anticipatory prompts on GCL is significant to the left of the long-dashed vertical line (DRC test scores < −0.98).
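Each Johnson-Neyman boundary above is reported with |t(42)| = 2.02 and p = .05 because, by construction, a boundary is the moderator value at which the conditional t statistic equals the two-tailed critical value. As a plausibility check, the stdlib sketch below integrates the Student t density with Simpson’s rule to obtain a two-tailed p value. The function names and the default step count are our own assumptions; in practice, a statistics package would be used instead.

```python
import math


def t_density(x, df):
    """Probability density of Student's t distribution with df degrees of freedom."""
    log_norm = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
                - 0.5 * math.log(df * math.pi))
    return math.exp(log_norm - (df + 1) / 2 * math.log1p(x * x / df))


def two_tailed_p(t, df, steps=10_000):
    """Two-tailed p value: 1 - 2 * P(0 <= T <= |t|), integrated with Simpson's rule."""
    upper = abs(t)
    if upper == 0:
        return 1.0
    if steps % 2:
        steps += 1  # Simpson's rule needs an even number of intervals.
    h = upper / steps
    total = t_density(0.0, df) + t_density(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_density(i * h, df)
    central = total * h / 3  # Probability mass between 0 and |t|.
    return max(0.0, 1.0 - 2.0 * central)


# The boundary statistic reported in the Results section.
print(round(two_tailed_p(2.02, 42), 4))
```

The symmetric density makes the sign of t irrelevant, which is why the negative boundary statistics (t(42) = −2.02) yield the same p value as the positive one.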

Taken together, the results for RQ1 to RQ3 are not unambiguous, but they indicate a tendency for apprentices with more prior knowledge to learn more with anticipatory prompts, whereas apprentices with less prior knowledge experienced a greater increase in self-efficacy and a higher GCL with retrospective prompts.

Discussion

So far, research on modelling examples has tended to focus on brief examples that teach fairly simple problem-solving strategies. Moreover, this research has mainly used self-explanation prompts asking learners to explain problem-solving steps already illustrated in an example. The present study therefore had two objectives: First, we investigated the effects of modelling examples for teaching longer problem-solving strategies, such as diagnosing car malfunctions, on diagnostic strategy knowledge and skills (H1), self-efficacy (H2), and extraneous and germane cognitive load during learning (H3). Second, taking the apprentices’ prior knowledge into account, we compared the effects of retrospective and anticipatory self-explanation prompts on the development of diagnostic strategy knowledge and skills (RQ1), self-efficacy (RQ2), and cognitive load during learning (RQ3).

Effects of modelling examples

Contrary to H3, the modelling examples had no effect on the apprentices’ extraneous (ECL) or germane cognitive load (GCL). Since the positive effect of example-based learning on learning outcomes relies on reducing ECL and increasing GCL (Sweller, 2006), we would then not expect the modelling examples to improve learning outcomes either. Accordingly, and contrary to H1, we detected no such effect. One interpretation of this finding is that longer modelling examples are less suitable for teaching complex problem-solving strategies. However, both text-based worked examples (e.g., Heitzmann et al., 2015; Schalk et al., 2020) and video-based modelling examples (Fiorella et al., 2017; Hoogerheide, 2016; Hoogerheide et al., 2014; Schmitz et al., 2017; van Harsel et al., 2019) have proven conducive to learning. We assume that we did not detect beneficial effects of the modelling examples because of the long instruction phase in which the diagnostic strategy was initially explained to all apprentices, including those in the control condition. Learning phase one took 35 min and comprised five instructional videos and four practice tasks that presumably supported knowledge organisation well. This 35-minute instruction phase is substantially longer than in other studies: Schmitz et al. (2017) used an instruction lasting only 17 min, and some studies used no instruction at all (e.g., Hoogerheide et al., 2014). Our extensive instruction may therefore have given all apprentices, including those in the control condition, sufficient knowledge to begin independent problem solving (i.e., independent diagnosis in the simulation).

Regarding self-efficacy, we expected the modelling examples to promote self-efficacy, because learners would be able to see how the diagnostic process is completed (H2; Glogger-Frey et al., 2015; Schunk, 1995). However, this is not what we observed. One reason might be that the inexperienced apprentices could not identify with the model, who was an experienced expert. According to the model-observer similarity principle (Renkl, 2014; van Gog et al., 2019), the model should also have been an apprentice, so that the learners could have identified with the model more easily.

We therefore recommend that future studies investigating longer modelling examples for complex problem-solving strategies use a shorter instruction phase and a model with whom learners can identify more easily.

Effects of retrospective and anticipatory self-explanation prompts

Depending on the apprentices’ prior knowledge, the two prompt conditions had different effects on learning outcomes (RQ1), self-efficacy (RQ2), and cognitive load (RQ3): Among the stronger apprentices, declarative knowledge (i.e., the strategy description test score) increased more when they learned with anticipatory prompts. The weaker apprentices’ self-efficacy, in contrast, was better supported by retrospective prompts. Similarly, in terms of cognitive load, apprentices with less prior knowledge reported a higher GCL when learning with retrospective prompts. Overall, these effects suggest that anticipatory prompts are more beneficial for learners with more prior knowledge, whereas learners with less prior knowledge profit more from retrospective prompts. However, before we can recommend that practitioners choose anticipatory or retrospective prompts according to their learners’ prior knowledge when designing example-based learning scenarios, replication studies are needed to ensure that these findings are reliable. Unfortunately, we could not conduct a direct replication of our study, as the study was very extensive and was conducted in the field, that is, in school classrooms during regular school hours; the participating schools had to adapt their curricula to give us access. Nevertheless, a conceptual replication or an extension study (Zwaan et al., 2018) investigating the effectiveness of similarly designed anticipatory prompts for teaching a cyclical problem-solving strategy would help to examine the specific mechanisms of anticipatory prompts for learners with different levels of prior knowledge. Such an extension study could also use think-aloud protocols.

Besides these interactions, we also found that the anticipatory prompts induced a lower ECL, that is, a lower learning-irrelevant load. This finding is rather surprising, as we had expected anticipatory prompts to mainly influence element interactivity and thus ICL. The ECL items mainly addressed the (visual) appearance of the learning material, that is, whether the content was easy to process, yet the two prompt conditions did not differ in the (visual) design of their learning materials. Klepsch and Seufert (2020, Study 1), who developed the cognitive load instruments used in the present study, found differences in learners’ ECL ratings when element interactivity had been manipulated and argued that participants sometimes struggle to distinguish between ICL and ECL, which resulted in effects on both scales. However, this argument could only account for our finding if the anticipatory prompts had increased rather than decreased ECL.

Limitations and implications for future research

We have already made several recommendations for future research above. First, future studies on longer modelling examples should use a shorter instruction phase and a model with whom learners can identify more easily. Second, the recurring pattern of anticipatory prompts being more beneficial for learners with higher prior knowledge and retrospective prompts being more beneficial for learners with lower prior knowledge needs further investigation, possibly in a laboratory setting with think-aloud protocols.

One limitation of the current study, which future research should address, is that the self-explanation prompts were answered suboptimally: Participants in both prompt conditions answered only about 50% of the prompts meaningfully. For the other half, participants typically entered only single letters or blanks, or made entries without any reference to car diagnosis. If learners do not answer prompts properly, the expected effects of these prompts are likely to be smaller. Table 7 gives exemplary responses to the self-explanation prompts.

We see two potential reasons for these inadequate answers. First, the self-explanation prompts were not very specific, as they simply asked learners to name and explain the previous or subsequent diagnostic step; accordingly, they provided little guidance. Glogger et al. (2009), for example, showed that for ninth graders prompted to apply learning strategies, specific prompts were superior to general prompts. Second, in the present study, the apprentices answered exactly the same self-explanation prompt after each diagnostic step in the modelling example, so the prompts may have been perceived as too repetitive. The differential effects of retrospective and anticipatory prompts depending on prior knowledge may be even stronger with more specific and more engaging prompts, a possibility that future research should investigate.

Conclusion

Although modelling examples did not yield the desired effects in the present study, anticipatory self-explanation prompts seem to function differently from retrospective self-explanation prompts and could be a promising alternative for stronger learners. However, before educational practitioners apply such anticipatory self-explanation prompts, these prompts should be investigated further.

Data Availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Notes

Sweller et al. (2019) recently presented new conceptions of GCL. However, we refer to the original 1998 conception (Sweller et al., 1998) in this paper, as it underlies most of the research we cite and because we had this conception in mind when developing the learning materials and the experimental design.

References

Abele, S. (2018). Diagnostic problem-solving process in professional contexts: Theory and empirical investigation in the context of car mechatronics using computer-generated log-files. Vocations and Learning, 11(1), 133–159. https://doi.org/10.1007/s12186-017-9183-x.

Abele, S., & von Davier, M. (2019). CDMs in vocational education: Assessment and usage of diagnostic problem-solving strategies in car mechatronics. In M. von Davier, & Y. S. Lee (Eds.), Handbook of diagnostic classification models. Springer. https://doi.org/10.1007/978-3-030-05584-4_22.

Abele, S., Walker, F., & Nickolaus, R. (2014). Zeitökonomische und reliable Diagnostik beruflicher Problemlösekompetenzen bei Auszubildenden zum Kfz-Mechatroniker [Time-saving and reliable diagnostics in measuring professional problem-solving competence in the domain of car mechatronics]. Zeitschrift für Pädagogische Psychologie, 28(4), 167–179. https://doi.org/10.1024/1010-0652/a000138.

Atkinson, R. K., Renkl, A., & Merrill, M. M. (2003). Transitioning from studying examples to solving problems: Effects of self-explanation prompts and fading worked-out steps. Journal of Educational Psychology, 95(4), 774–783. https://doi.org/10.1037/0022-0663.95.4.774.

Bandura, A. (1997). Self-efficacy: The exercise of control. W.H. Freeman.

Bandura, A. (2006). Guide for constructing self-efficacy scales. In F. Pajares, & T. C. Urdan (Eds.), Self-efficacy beliefs of adolescents (pp. 307–337). Information Age Publishing.

Berthold, K., Eysink, T. H. S., & Renkl, A. (2009). Assisting self-explanation prompts are more effective than open prompts when learning with multiple representations. Instructional Science, 37(4), 345–363. https://doi.org/10.1007/s11251-008-9051-z.

Bisra, K., Liu, Q., Nesbit, J. C., Salimi, F., & Winne, P. H. (2018). Inducing self-explanation: A meta-analysis. Educational Psychology Review, 30(3), 703–725. https://doi.org/10.1007/s10648-018-9434-x.

Cohen, J. (1988). Statistical power analysis for the behavioral sciences (2nd ed.). L. Erlbaum Associates.

Crippen, K. J., & Earl, B. L. (2007). The impact of web-based worked examples and self-explanation on performance, problem solving, and self-efficacy. Computers & Education, 49(3), 809–821. https://doi.org/10.1016/j.compedu.2005.11.018.

Faul, F., Erdfelder, E., Lang, A. G., & Buchner, A. (2007). G*Power 3: A flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behavior Research Methods, 39(2), 175–191. https://doi.org/10.3758/BF03193146.

Fiorella, L., & Mayer, R. E. (2016). Eight ways to promote generative learning. Educational Psychology Review, 28(4), 717–741. https://doi.org/10.1007/s10648-015-9348-9.

Fiorella, L., van Gog, T., Hoogerheide, V., & Mayer, R. E. (2017). It’s all a matter of perspective: Viewing first-person video modeling examples promotes learning of an assembly task. Journal of Educational Psychology, 109(5), 653–665. https://doi.org/10.1037/edu0000161.

Gerjets, P., Scheiter, K., & Catrambone, R. (2006). Can learning from molar and modular worked examples be enhanced by providing instructional explanations and prompting self-explanations? Learning and Instruction, 16(2), 104–121. https://doi.org/10.1016/j.learninstruc.2006.02.007.

Glogger-Frey, I., Fleischer, C., Grüny, L., Kappich, J., & Renkl, A. (2015). Inventing a solution and studying a worked solution prepare differently for learning from direct instruction. Learning and Instruction, 39, 72–87. https://doi.org/10.1016/j.learninstruc.2015.05.001.

Gschwendtner, T., Abele, S., & Nickolaus, R. (2009). Computersimulierte Arbeitsproben: Eine Validierungsstudie am Beispiel der Fehlerdiagnoseleistungen von Kfz-Mechatronikern [Can troubleshooting skills of car mechatronic technicians validly be assessed using computer-based simulations of real work sample?]. Zeitschrift für Berufs- und Wirtschaftspädagogik, 105(4), 557–578.

Hayes, A. F., & Matthes, J. (2009). Computational procedures for probing interactions in OLS and logistic regression: SPSS and SAS implementations. Behavior Research Methods, 41(3), 924–936. https://doi.org/10.3758/BRM.41.3.924.

Heitzmann, N., Fischer, F., Kühne-Eversmann, L., & Fischer, M. R. (2015). Enhancing diagnostic competence with self-explanation prompts and adaptable feedback. Medical Education, 49(10), 993–1003. https://doi.org/10.1111/medu.12778.

Hilbert, T. S., & Renkl, A. (2008). Concept mapping as a follow-up strategy to learning from texts: What characterizes good and poor mappers? Instructional Science, 36(1), 53–73. https://doi.org/10.1007/s11251-007-9022-9.

Hilbert, T. S., & Renkl, A. (2009). Learning how to use a computer-based concept-mapping tool: Self-explaining examples helps. Computers in Human Behavior, 25(2), 267–274. https://doi.org/10.1016/j.chb.2008.12.006.

Hilbert, T. S., Renkl, A., Kessler, S., & Reiss, K. (2008). Learning to prove in geometry: Learning from heuristic examples and how it can be supported. Learning and Instruction, 18(1), 54–65. https://doi.org/10.1016/j.learninstruc.2006.10.008.

Hoogerheide, V. (2016). Effects of observing and creating video modeling examples on cognitive and motivational aspects of learning.

Hoogerheide, V., Loyens, S. M. M., & van Gog, T. (2014). Comparing the effects of worked examples and modeling examples on learning. Computers in Human Behavior, 41, 80–91. https://doi.org/10.1016/j.chb.2014.09.013.

Hoogerheide, V., van Wermeskerken, M., van Nassau, H., & van Gog, T. (2018). Model-observer similarity and task-appropriateness in learning from video modeling examples: Do model and student gender affect test performance, self-efficacy, and perceived competence? Computers in Human Behavior, 89, 457–464. https://doi.org/10.1016/j.chb.2017.11.012.

Kalyuga, S., & Renkl, A. (2010). Expertise reversal effect and its instructional implications: Introduction to the special issue. Instructional Science, 38(3), 209–215. https://doi.org/10.1007/s11251-009-9102-0.

Klein, M., Otto, B., Fischer, M. R., & Stark, R. (2019). Fostering medical students’ clinical reasoning by learning from errors in clinical case vignettes: Effects and conditions of additional prompting procedures to foster self-explanations. Advances in Health Sciences Education, 24(2), 331–351. https://doi.org/10.1007/s10459-018-09870-5.

Klepsch, M., & Seufert, T. (2020). Understanding instructional design effects by differentiated measurement of intrinsic, extraneous, and germane cognitive load. Instructional Science, 48(1), 45–77. https://doi.org/10.1007/s11251-020-09502-9.

Klepsch, M., & Seufert, T. (2021). Making an effort versus experiencing load. Frontiers in Education, 6, 645284. https://doi.org/10.3389/feduc.2021.645284.

Klepsch, M., Schmitz, F., & Seufert, T. (2017). Development and validation of two instruments measuring intrinsic, extraneous, and germane cognitive load. Frontiers in Psychology, 8, 1997. https://doi.org/10.3389/fpsyg.2017.01997.

Lakens, D. (2013). Calculating and reporting effect sizes to facilitate cumulative science: A practical primer for t-tests and ANOVAs. Frontiers in Psychology, 4, 863. https://doi.org/10.3389/fpsyg.2013.00863.

Mayer, R. E., & Moreno, R. (2003). Nine ways to reduce cognitive load in multimedia learning. Educational Psychologist, 38(1), 43–52. https://doi.org/10.1207/S15326985EP3801_6.

McLaren, B. M., & Isotani, S. (2011). When is it best to learn with all worked examples? In G. Biswas, S. Bull, J. Kay, & A. Mitrovic (Eds.), Artificial Intelligence in Education (Vol. 6738, pp. 222–229). Springer. https://doi.org/10.1007/978-3-642-21869-9_30.

Meier, J., Spliethoff, L., Hesse, P., Abele, S., Renkl, A., & Glogger-Frey, I. (2022). Promoting car mechatronics apprentices’ diagnostic strategy with modeling examples: Development and evaluation of a simulation-based learning environment. Studies in Educational Evaluation, 72, 101117. https://doi.org/10.1016/j.stueduc.2021.101117.

Montoya, A. K. (2019). Moderation analysis in two-instance repeated measures designs: Probing methods and multiple moderator models. Behavior Research Methods, 51(1), 61–82. https://doi.org/10.3758/s13428-018-1088-6.

Multon, K. D., Brown, S. D., & Lent, R. W. (1991). Relation of self-efficacy beliefs to academic outcomes: A meta-analytic investigation. Journal of Counseling Psychology, 38(1), 30–38. https://doi.org/10.1037/0022-0167.38.1.30.

Najar, A. S., & Mitrovic, A. (2013). Examples and tutored problems: How can self-explanation make a difference to learning? In H. C. Lane, K. Yacef, J. Mostow, & P. Pavlik (Eds.), Artificial Intelligence in Education: 16th International Conference, AIED 2013, Memphis, TN, USA, July 9–13, 2013. Proceedings (Vol. 7926, pp. 339–348). Springer Berlin Heidelberg. https://doi.org/10.1007/978-3-642-39112-5.

Nievelstein, F., van Gog, T., van Dijck, G., & Boshuizen, H. P. A. (2013). The worked example and expertise reversal effect in less structured tasks: Learning to reason about legal cases. Contemporary Educational Psychology, 38(2), 118–125. https://doi.org/10.1016/j.cedpsych.2012.12.004.

Norwig, K., Güzel, E., Hartmann, S., & Gschwendtner, T. (2021). Tools to tap into the content of human minds – think-aloud-interviews und cognitive labs als zentrale Bausteine zur Identifikation von Barrieren in Fehlerdiagnoseprozessen bei Auszubildenden des Kfz-Handwerks und zur Entwicklung addressantespezifischer Lehr-/Lernarrangements [Tools to tap into the content of human minds – the use of think-aloud and cognitive laboratory interviews for identifying cognitive barriers of Car Mechatronics Apprentices Diagnosing Electronic Car Systems and for developing Educational Interventions tailored to the Apprentices’ needs]. Zeitschrift für Berufs- und Wirtschaftspädagogik, 117, 658–693. https://doi.org/10.25162/zbw-2021-0025.

Renkl, A. (1997). Learning from worked-out examples: A study on individual differences. Cognitive Science, 21(1), 1–29. https://doi.org/10.1207/s15516709cog2101_1.

Renkl, A. (2014). Toward an instructionally oriented theory of example-based learning. Cognitive Science, 38(1), 1–37. https://doi.org/10.1111/cogs.12086.

Renkl, A., & Eitel, A. (2019). Self-explaining: Learning about principles and their application. In J. Dunlosky & K. A. Rawson (Eds.), The Cambridge Handbook of Cognition and Education (1st ed., pp. 528–549). Cambridge University Press. https://doi.org/10.1017/9781108235631.022.

Renkl, A., Stark, R., Gruber, H., & Mandl, H. (1998). Learning from worked-out examples: The effects of example variability and elicited self-explanations. Contemporary Educational Psychology, 23(1), 90–108. https://doi.org/10.1006/ceps.1997.0959.

Renkl, A., Hilbert, T., & Schworm, S. (2009). Example-based learning in heuristic domains: A cognitive load theory account. Educational Psychology Review, 21(1), 67–78. https://doi.org/10.1007/s10648-008-9093-4.

Roelle, J., Hiller, S., Berthold, K., & Rumann, S. (2017). Example-based learning: The benefits of prompting organization before providing examples. Learning and Instruction, 49, 1–12. https://doi.org/10.1016/j.learninstruc.2016.11.012.

Schalk, L., Roelle, J., Saalbach, H., Berthold, K., Stern, E., & Renkl, A. (2020). Providing worked examples for learning multiple principles. Applied Cognitive Psychology, 34(4), 813–824. https://doi.org/10.1002/acp.3653.

Schmitz, F. M., Schnabel, K. P., Stricker, D., Fischer, M. R., & Guttormsen, S. (2017). Learning communication from erroneous video-based examples: A double-blind randomised controlled trial. Patient Education and Counseling, 100(6), 1203–1212. https://doi.org/10.1016/j.pec.2017.01.016.

Schneider, B. A., Avivi-Reich, M., & Mozuraitis, M. (2015). A cautionary note on the use of the Analysis of Covariance (ANCOVA) in classification designs with and without within-subject factors. Frontiers in Psychology, 6, 474. https://doi.org/10.3389/fpsyg.2015.00474.

Schunk, D. H. (1995). Self-efficacy, motivation, and performance. Journal of Applied Sport Psychology, 7(2), 112–137. https://doi.org/10.1080/10413209508406961.

Schwonke, R., Renkl, A., Krieg, C., Wittwer, J., Aleven, V., & Salden, R. (2009). The worked-example effect: Not an artefact of lousy control conditions. Computers in Human Behavior, 25(2), 258–266. https://doi.org/10.1016/j.chb.2008.12.011.

Spöttl, G., Becker, M., & Musekamp, F. (2011). Anforderungen an Kfz-Mechatroniker und Implikationen für die Kompetenzerfassung [Requirements for automotive mechatronics technicians and implications for the assessment of competencies]. In R. Nickolaus (Ed.), Lehr-Lernforschung in der gewerblich-technischen Berufsbildung (Zeitschrift für Berufs- und Wirtschaftspädagogik: Sonderbd. 25) (pp. 37–53). Steiner.

Sweller, J. (2006). The worked example effect and human cognition. Learning and Instruction, 16(2), 165–169. https://doi.org/10.1016/j.learninstruc.2006.02.005.

Sweller, J., van Merriënboer, J. J. G., & Paas, F. G. W. C. (1998). Cognitive architecture and instructional design. Educational Psychology Review, 10(3), 251–296. https://doi.org/10.1023/A:1022193728205.

Sweller, J., Ayres, P. L., & Kalyuga, S. (2011). Cognitive load theory. Springer. https://doi.org/10.1007/978-1-4419-8126-4.

Sweller, J., van Merriënboer, J. J. G., & Paas, F. (2019). Cognitive architecture and instructional design: 20 years later. Educational Psychology Review, 31(2), 261–292. https://doi.org/10.1007/s10648-019-09465-5.

van Gog, T., & Rummel, N. (2010). Example-based learning: Integrating cognitive and social-cognitive research perspectives. Educational Psychology Review, 22(2), 155–174. https://doi.org/10.1007/s10648-010-9134-7.

van Gog, T., Kester, L., & Paas, F. (2011). Effects of worked examples, example-problem, and problem-example pairs on novices’ learning. Contemporary Educational Psychology, 36(3), 212–218. https://doi.org/10.1016/j.cedpsych.2010.10.004.

van Gog, T., Rummel, N., & Renkl, A. (2019). Learning how to solve problems by studying examples. In J. Dunlosky & K. A. Rawson (Eds.), The Cambridge Handbook of Cognition and Education (1st ed., pp. 183–208). Cambridge University Press. https://doi.org/10.1017/9781108235631.009.

van Harsel, M., Hoogerheide, V., Verkoeijen, P., & van Gog, T. (2019). Effects of different sequences of examples and problems on motivation and learning. Contemporary Educational Psychology, 58, 260–275. https://doi.org/10.1016/j.cedpsych.2019.03.005.

van Merriënboer, J. J. G., Kester, L., & Paas, F. (2006). Teaching complex rather than simple tasks: Balancing intrinsic and germane load to enhance transfer of learning. Applied Cognitive Psychology, 20(3), 343–352. https://doi.org/10.1002/acp.1250.

VanLehn, K. (1996). Cognitive skill acquisition. Annual Review of Psychology, 47(1), 513–539. https://doi.org/10.1146/annurev.psych.47.1.513.

Zwaan, R. A., Etz, A., Lucas, R. E., & Donnellan, M. B. (2018). Making replication mainstream. Behavioral and Brain Sciences, 41, E120. https://doi.org/10.1017/S0140525X17001972.

Acknowledgements

This work was supported by the Federal Ministry of Education and Research, Germany, and the Federal Institute for Vocational Education and Training, Germany, within the project “DigiDIn-Kfz – Digitale Diagnostik und Intervention im Kfz-Wesen” [Digital diagnostics and intervention in the automotive sector], which is a subproject of the research and transfer initiative “ASCOT+ - Technology-based Assessment of Skills and Competences in Vocational Education and Training” [grant number 21AP004C].

Funding

Open Access funding enabled and organized by Projekt DEAL.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix A

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Meier, J., Hesse, P., Abele, S. et al. Better self-explaining backwards or forwards? Prompting self-explanation in video-based modelling examples for learning a diagnostic strategy. Instr Sci 52, 613–638 (2024). https://doi.org/10.1007/s11251-023-09651-7

DOI: https://doi.org/10.1007/s11251-023-09651-7