Abstract

While game theory has been transformative for decision-making, its assumptions can be overly restrictive in certain instances. In this work, we investigate some of the underlying assumptions of rationality, such as mutual consistency and best response, and consider ways to relax these assumptions using concepts from level-k reasoning and quantal response equilibrium (QRE), respectively. Specifically, we propose an information-theoretic two-parameter model called the quantal hierarchy model, which can relax both mutual consistency and best response while still approximating level-k, QRE, or typical Nash equilibrium behaviour in the limiting cases. The model is based on a recursive form of the variational free energy principle, representing higher-order reasoning as (pseudo) sequential decision-making in an extensive-form game tree. This representation enables us to treat simultaneous games in a similar manner to sequential games, where reasoning resources deplete throughout the game tree. Bounds on players' processing abilities are captured as information costs, where future branches of reasoning are discounted, implying a hierarchy of players where lower-level players have fewer processing resources. We demonstrate the effectiveness of the quantal hierarchy model in several canonical economic games, both simultaneous and sequential, using out-of-sample modelling.

1 Introduction

A crucial assumption made in game theory is that all players behave perfectly rationally. Nash equilibrium (Nash, 1951) is a key concept that arises from all players being rational and assuming that the other players are also rational, which requires correct and consistent beliefs amongst players. However, despite being the traditional economic assumption, perfect rationality is incompatible with many human processing tasks, and models of limited rationality match human behaviour better than fully rational models (Camerer, 2010; Gächter, 2004; Gabaix et al., 2006). Further, if an opponent is irrational, then it would be rational for the subject to play “irrationally” (Raiffa & Luce, 1957). That is, “[Nash equilibrium] is consistent with perfect foresight and perfect rationality only if both players play along with it. But there are no rational grounds for supposing they will” (Koppl et al., 2002). These assumptions of perfect foresight and rationality often lead to contradictions and paradoxes (Cournot et al., 1838; Glasner, 2022; Hoppe, 1997; Morgenstern, 1928).

Alternative formulations have been proposed that relax some of these assumptions and model boundedly rational players, better approximating actual human behaviour and avoiding some of these paradoxes (Simon, 1976). For example, relaxing mutual consistency allows players to form different beliefs about other players (Camerer et al., 2004; Stahl & Wilson, 1995). This avoids both the infinite self-referential higher-order reasoning that emerges from the interaction between rational players (Knudsen, 1993) [I have a model of my opponent who has a model of me \(\dots\) ad infinitum (Morgenstern, 1935)] and the non-computability of best response functions (Koppl et al., 2002; Rabin, 1957). The ability to break at various points in the higher-order reasoning chain can be considered “partial self-reference” (Löfgren, 1990; Mackie, 1971). Importantly, rather than implicating negation (Prokopenko et al., 2019), this type of self-reference represents higher-order reasoning as a logically non-contradictory chain of recursion (reasoning about reasoning...). Bounded rationality then arises from breaking the chain at some point and discarding further branches. “Breaking” an otherwise potentially infinite regress of reasoning about reasoning can be seen as a consequence of the player's limited information processing, which determines when the recursion ends.

Another example of bounded rationality based on information processing constraints is the relaxation of the best response assumption, which allows for erroneous play, with deviations from the best response governed by a resource parameter (Goeree et al., 2005; Haile et al., 2008).

In this work, we adopt an information-theoretic perspective on reasoning (decision-making). By imposing constraints on information processing, we are able to relax both mutual consistency and best response, and hence, players do not necessarily act perfectly rationally. The proposed approach provides an information-theoretic foundation for level-k reasoning and a generalised extension in which players can make errors at each of the k levels.

Specifically, in this paper, we focus on three main aspects:

- Players' reasoning abilities decrease throughout recursion in a wide variety of games, motivating an increasing error rate at deeper levels of recursion. That is, it becomes more and more difficult to reason about reasoning about reasoning \(\dots\) (necessitating a relaxation in best response decisions).

- Finite higher-order reasoning can be captured by discounting future chains of recursion and ultimately discarding branches once resources run out. This representation introduces an implicit hierarchy of players, where a player assumes they have a higher level of processing abilities than other players, motivating relaxation of mutual consistency.

- Existing game-theoretic models can be explained and recovered in limiting cases of the proposed approach. This fills an important gap between methods relaxing best response and methods relaxing mutual consistency.

The proposed approach features only two parameters, \(\beta\) and \(\gamma\), where \(\beta\) quantifies relaxation of players’ best response, and \(\gamma\) governs relaxation of mutual consistency between players. Best response is recovered in the limit \(\beta \rightarrow \infty\), and mutual consistency is recovered with \(\gamma =1\). Equilibrium behaviour is recovered when both hold, i.e., \(\beta \rightarrow \infty , \gamma =1\). For other values of \(\beta\) and \(\gamma\), the model captures out-of-equilibrium behaviour that concurs with experimental data; furthermore, in repeated games that converge to Nash equilibrium, player learning can be captured in the model by increases in \(\beta\) and \(\gamma\). Importantly, we also show that fitted values of \(\beta\) and \(\gamma\) generalise well to out-of-sample data.

The remainder of the paper is organised as follows. Section 2 analyses bounded rationality in the context of decision-making and game theory. Section 3 introduces information-theoretic bounded rational decision-making, and Sect. 4 extends the idea to capture higher-order reasoning in game theory. In Sect. 5, we use canonical examples to highlight how information-constrained players account for boundedly rational behaviour in games. We draw conclusions and outline future work in Sect. 6.

2 Background and motivation

While perfect rationality is a widespread assumption in economics, it is incompatible with the observed behaviour in many experimental settings, motivating the use of bounded rationality (Camerer, 2011). Bounded rationality offers an alternative perspective by acknowledging that players may not have a perfect model of each other or may not play perfectly rationally. In this section, we explore some common approaches to modelling boundedly rational decision-making.

2.1 Mutual consistency

Equilibrium models assume mutual consistency of beliefs and choices (Camerer et al., 2003; Camerer, 2003); however, this assumption is often violated in experimental settings (Polonio & Coricelli, 2019), where “differences in belief arise from the different iterations of strategic thinking that players perform” (Chong et al., 2005).

Level-k reasoning (Stahl & Wilson, 1995) is one attempt at incorporating bounded rationality by relaxing mutual consistency, where players are bound to some level k of reasoning. A player assumes that other players are reasoning at a lower level than themselves, for example, due to over-confidence. This relaxes the mutual consistency assumption, as it implicitly assumes other players are not as advanced as themselves. Players at level 0 are not assumed to perform any information processing and simply choose uniformly over actions (i.e., a Laplacian assumption due to the principle of insufficient reason), although alternative level-0 configurations can be considered (Wright & Leyton-Brown, 2019). Level-1 players then best respond to these level-0 players. Likewise, level-2 players act as if the other players are level-1, and so on, with level-k players acting as if the other players are at level-\((k-1)\). Various extensions have also been proposed (Levin & Zhang, 2022).

A similar approach to level-k is that of cognitive hierarchies (CH) (Camerer et al., 2004), where again it is assumed that other players have lower reasoning abilities. However, rather than assuming that the other players are at level \(k-1\), players are distributed across the levels of cognition according to a Poisson distribution with mean and variance \(\tau\). Chong et al. (2005) provided support for the Poisson assumption, finding that an unconstrained general distribution offered only marginal improvement. Again, various extensions have been proposed (Chong et al., 2016; Koriyama & Ozkes, 2021), and there are many examples of successful applications of such depth-limited reasoning in the literature, for example, Goldfarb and Xiao (2011).
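
The CH belief structure can be made concrete with a short Python sketch. Following the usual CH specification (Camerer et al., 2004), a level-k player (k ≥ 1) is assumed to believe that opponents are spread over levels 0 to k − 1 in proportion to the Poisson frequencies, renormalised over those lower levels. The function names and the value \(\tau = 1.5\) are illustrative choices, not taken from this paper.

```python
import math


def poisson_pmf(h: int, tau: float) -> float:
    """Poisson probability of reasoning level h, with mean and variance tau."""
    return math.exp(-tau) * tau**h / math.factorial(h)


def ch_beliefs(k: int, tau: float) -> list[float]:
    """Beliefs of a level-k player (k >= 1) about opponents' levels 0..k-1.

    The Poisson frequencies are truncated to the levels below k and renormalised.
    """
    if k < 1:
        raise ValueError("level-0 players do not form beliefs about others")
    weights = [poisson_pmf(h, tau) for h in range(k)]
    total = sum(weights)
    return [w / total for w in weights]


if __name__ == "__main__":
    tau = 1.5  # illustrative value of the Poisson mean
    for k in (1, 2, 3):
        print(k, [round(b, 3) for b in ch_beliefs(k, tau)])
```

A level-1 player places all weight on level 0, exactly as in level-k, whereas a level-2 player with \(\tau = 1.5\) believes opponents are level 0 or level 1 with probabilities 0.4 and 0.6. This is the main point of departure from level-k, where the level-2 player would assume level 1 with certainty.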

Endogenous depth of reasoning (EDR) is a similar approach to level-k and CH, but it separates the player’s cognitive bounds from their beliefs of their opponent’s reasoning (Alaoui & Penta, 2016). EDR captures player reasoning as if they are following a cost-benefit analysis (Alaoui & Penta, 2022), with cognitive abilities (costs) and payoffs (benefits).

One fundamental similarity across these methods is that they all maintain best response. That is, players best respond to their assumptions about lower-level play. The following section introduces methods that instead maintain mutual consistency but relax the best response assumption.

2.2 Best response

Alternative approaches assume that a player may make errors when deciding which strategy to play, rather than playing perfectly rationally. That is, they relax the best response assumption. Quantal response equilibrium (QRE) (McKelvey & Palfrey, 1995, 1998) is a well-known example, where rather than choosing the best response with certainty, players choose noisily based on the payoffs of the strategies and a resource parameter controlling this sensitivity. Another method of capturing this erroneous play is Noisy Introspection (Goeree & Holt, 2004). Utility proportional beliefs (Bach & Perea, 2014) is another method that relaxes the best response assumption, where the authors note that “possibly, the requirement that only rational choices are considered and zero probability is assigned to any irrational choice is too strong and does not reflect how real world [players] reason”, giving merit to the relaxation of best response. By allowing for errors in decision-making, these methods offer a more realistic perspective on how individuals make choices.
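
As an illustration of the QRE idea, the Python sketch below computes a logit QRE of a 2 × 2 bimatrix game, in which each player's mixed strategy is a logit (softmax) response, with precision `lam`, to the other player's strategy. The damped fixed-point iteration is our choice of solver for small games rather than part of the QRE definition, and it is not guaranteed to converge for large `lam`. Matching pennies is used as an illustrative game.

```python
import math


def logit_response(payoffs: list[float], lam: float) -> list[float]:
    """Choice probabilities proportional to exp(lam * payoff), computed stably."""
    z = [lam * u for u in payoffs]
    m = max(z)
    w = [math.exp(t - m) for t in z]
    s = sum(w)
    return [x / s for x in w]


def logit_qre_2x2(A, B, lam, damping=0.5, iters=5000, tol=1e-12):
    """Damped fixed-point iteration for a logit QRE of a 2x2 bimatrix game.

    A[i][j] and B[i][j] are the row and column players' payoffs when the row
    player chooses i and the column player chooses j. Returns (x, y), the row
    and column mixed strategies.
    """
    x, y = [0.9, 0.1], [0.2, 0.8]  # arbitrary interior starting point
    for _ in range(iters):
        row_u = [sum(A[i][j] * y[j] for j in range(2)) for i in range(2)]
        col_u = [sum(B[i][j] * x[i] for i in range(2)) for j in range(2)]
        bx, by = logit_response(row_u, lam), logit_response(col_u, lam)
        # Move part of the way towards the logit responses to damp oscillations.
        nx = [(1 - damping) * a + damping * b for a, b in zip(x, bx)]
        ny = [(1 - damping) * a + damping * b for a, b in zip(y, by)]
        converged = max(abs(a - b) for a, b in zip(nx + ny, x + y)) < tol
        x, y = nx, ny
        if converged:
            break
    return x, y


if __name__ == "__main__":
    # Matching pennies: the row player wins on a match, the column player on a mismatch.
    A = [[1, -1], [-1, 1]]
    B = [[-1, 1], [1, -1]]
    print(logit_qre_2x2(A, B, lam=1.0))
```

In matching pennies the iteration settles at (0.5, 0.5) for both players. In a game with a dominant strategy, such as a prisoner's dilemma, a large `lam` drives play towards the dominant action while still leaving some probability on the dominated one.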

2.3 Infinite-regress

When considering reasoning about reasoning, infinite regress can emerge (Knudsen, 1993). The problem of infinite regress can be formulated as a sequence of choices in which the player is first confronted with an initial choice between an action a from the set of actions A and a computation f(A). If the action is chosen, the decision process is complete. If, instead, the computation is chosen, the outcome of f(A) must be calculated, and the player is then faced with another choice between the obtained result and yet another computation. This process is repeated until the player chooses the result rather than performing an additional computation. Lipman (1991) investigates whether such a sequence converges to a fixed point. A similar approach by Mertens and Zamir (1985) formally approximates Harsanyi’s infinite hierarchies of beliefs (Harsanyi, 1967, 1968) with finite states.

2.4 Contributions of our work

In contrast to existing works, we propose an information-theoretic approach to higher-order reasoning, where each level or hierarchy corresponds to additional information processing for the player. Alaoui and Penta (2016) capture the trade-off between additional reasoning and payoff by finding the first intersection of the player’s payoff improvement (from k to \(k+1\)) and the cost \(c(k+1)\) of performing this additional reasoning. This cost function c has to be determined for every level, i.e., for all c(k), for example, with maximum likelihood estimation of the average cost of performing each extra level of reasoning (Alaoui & Penta, 2022). In contrast, we propose capturing this trade-off with information processing costs, using the Kullback–Leibler divergence to constrain the overall change in action probabilities at each stage of reasoning.

Our approach constrains the overall amount of information processing available to the players, leading to potential errors at each stage of reasoning, a feature absent from existing level-k type approaches. By doing so, we establish a foundation for “breaking” the chain of higher-order reasoning based on the depletion of players’ information processing resources.

This results in a principled information-theoretic explanation for decision-making in games involving higher-order reasoning. Best response is relaxed with \(\beta < \infty\) (linking to quantal response equilibrium), and mutual consistency is relaxed with \(\gamma < 1\) (linking to level-k type models). Best response and mutual consistency are recovered with \(\beta \rightarrow \infty\) and \(\gamma =1\). This contributes to the existing literature on game theory and decision-making by adopting an information-theoretic perspective on bounded rationality, quantified by information processing abilities. A key benefit of the proposed approach is that, whereas level-k models relax mutual consistency but retain best response, and QRE models relax best response but retain mutual consistency (Chong et al., 2005), the proposed approach can relax either or both assumptions through two tunable parameters. We apply this model to various games, demonstrating its usefulness for capturing human behaviour when compared to these existing approaches.

3 Technical preliminaries: information-theoretic bounded rationality

Information theory provides a natural way to reason about limitations in player cognition, as it abstracts away specific types of costs (Wolpert, 2006; Harre, 2021). This means that we can assume the existence of cognitive limitations without speculating about the underlying behavioural foundations. As pointed out by Sims (2003), an information-theoretic treatment may not be desirable for a psychologist, as it gives no insight into where the costs arise from; for an economist, however, reasoning about optimisation rather than specific psychological details may be preferable, for example, in the Shannon model (Caplin et al., 2019). Such models have seen considerable success in a variety of areas of information processing, for example, embodied intelligence (Polani et al., 2007), self-organisation (Ay et al., 2012) and adaptive decision-making of agents (Tishby & Polani, 2011) based on the information bottleneck formalism (Tishby et al., 1999).

In this work, we adopt the information-theoretic representation of bounded rational decision-making proposed by Ortega and Braun (2013), which has been further developed in Ortega and Braun (2011), Braun and Ortega (2014), Ortega and Stocker (2016), Gottwald and Braun (2019). This approach has a solid theoretical foundation based on the (negative) free energy principle and has been successfully applied to several tasks (Evans & Prokopenko, 2021). We begin by providing an overview of single-step decisions and then sequential decisions, before discussing extensions for capturing the relationship between processing limitations and higher-order reasoning.

3.1 Single-step decisions

A boundedly rational decision-maker who is choosing an action \(a \in A\) with payoff U[a] is assumed to maximise the following negative free energy difference when moving from a prior belief p[a] (e.g., a default action) to a (posterior) choice f[a]:

\[ -\Delta F[f] = \sum _{a \in A} f[a] \, U[a] - \frac{1}{\beta } \sum _{a \in A} f[a] \log \frac{f[a]}{p[a]} \qquad (1) \]

The first term represents the expected payoff, while the second term represents the cost of information acquisition, regularised by the parameter \(\beta\). Formally, the second term quantifies information acquisition as the Kullback–Leibler (KL) divergence of the choice f[a] from the prior belief p[a].

By taking the first-order conditions of Eq. (1) and solving for the decision function f[a], we obtain the equilibrium distribution:

\[ f[a] = \frac{1}{Z} \, p[a] \, e^{\beta U[a]} \qquad (2) \]

where \(Z=\sum _{a' \in A}p[a'] e^{\beta U[a']}\) is the partition function. This representation is equivalent to the logit (softmax) function commonly used in QRE models and relates to control costs derived in the economic literature (Mattsson & Weibull, 2002; Stahl, 1990).

The parameter \(\beta\) serves as a resource allowance for the decision-maker, modulating the cost of information acquisition from the prior belief. Low values of \(\beta\) correspond to high costs of information acquisition (and hence error-prone play), while as \(\beta \rightarrow \infty\), information becomes essentially free to acquire, and the perfectly rational homo economicus player is recovered.
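
The following minimal Python sketch evaluates the choice rule of Eq. (2) and the objective of Eq. (1) for a hypothetical three-action problem, assuming a strictly positive prior. The payoffs, the prior, and the function names are illustrative, not taken from the paper.

```python
import math
from typing import Sequence


def bounded_rational_choice(utilities: Sequence[float], prior: Sequence[float], beta: float) -> list[float]:
    """f[a] = p[a] * exp(beta * U[a]) / Z, computed in log space for stability.

    Assumes every prior probability is strictly positive.
    """
    logits = [math.log(p) + beta * u for u, p in zip(utilities, prior)]
    m = max(logits)  # subtract the maximum before exponentiating to avoid overflow
    weights = [math.exp(t - m) for t in logits]
    z = sum(weights)
    return [w / z for w in weights]


def neg_free_energy(f: Sequence[float], utilities: Sequence[float], prior: Sequence[float], beta: float) -> float:
    """Expected payoff minus (1/beta) * KL(f || p); assumes beta > 0."""
    expected = sum(fa * u for fa, u in zip(f, utilities))
    kl = sum(fa * math.log(fa / pa) for fa, pa in zip(f, prior) if fa > 0)
    return expected - kl / beta


if __name__ == "__main__":
    U = [1.0, 2.0, 4.0]        # hypothetical payoffs
    p = [1 / 3, 1 / 3, 1 / 3]  # uniform prior (default behaviour)
    for beta in (0.0, 1.0, 50.0):
        print(beta, [round(x, 3) for x in bounded_rational_choice(U, p, beta)])
```

With \(\beta = 0\) the choice reproduces the prior, with \(\beta = 1\) it shifts towards the highest-payoff action, and with \(\beta = 50\) it is essentially the best response. For any \(\beta > 0\), no other distribution scores higher on `neg_free_energy` than the returned one, which gives a quick numerical check that Eq. (2) solves Eq. (1).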

3.2 Sequential decisions

This free energy definition can be extended to sequential decision-making by considering a recursive negative free energy difference, as described in Ortega and Braun (2013, 2014). This corresponds to a nested variational problem and involves introducing inverse temperature parameters \(\beta _k\), one for each of the K sequential decisions, which allows the degree of bounded rationality to differ across depths of recursion when choosing a sequence of K actions \(a_{\le K}\).

Therefore, for sequential decision-making, Eq. (1) can be represented as:

where \(a_{<k}\) abbreviates the history \(a_0, \dots , a_{k-1}\) of decisions. We can expand the sum:

which can be read as first choosing an action \(a_1\) at \(k=1\), where choosing this action requires considering the future stages, i.e., analysing the result at \(k=2\) given the choice \(a_1\), and so forth. To compute this, we can solve the innermost sum first:

which recovers Eq. (2) with the introduction of conditioning on decision histories. This represents the base-case for recursion. For steps where \(k < K\), we get the following equilibrium solution for sequential decisions:

where decisions are now dependent on the history of decisions as well as on a recursive component based on the future contribution of each decision. Here

where \(Z_{K}=1\), i.e., the base-case for recursion at the final level.
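
A minimal Python sketch of this backward recursion follows. It assumes the standard form of the recursion in Ortega and Braun (2013), in which the exponent at step k is \(\beta _k U[a_k \mid a_{<k}] + \frac{\beta _k}{\beta _{k+1}} \log Z_{k+1}\), and rewrites it with the soft value \(V_k = \frac{1}{\beta _k} \log Z_k\), so that the exponent becomes \(\beta _k (U[a_k \mid a_{<k}] + V_{k+1})\) and no division by a vanishing \(\beta _{k+1}\) is needed. Steps are indexed from 0 in the code, the prior is the same at every history, and the two-step payoff function `toy_utility` is hypothetical.

```python
import math
from typing import Callable, Dict, Sequence, Tuple

History = Tuple[int, ...]
Utility = Callable[[History, int], float]


def continuation(history: History, k: int, betas: Sequence[float], actions: Sequence[int],
                 prior: Dict[int, float], utility: Utility) -> Dict[int, float]:
    """q_k(a) = U[a | history] + V_{k+1}(history + (a,)) for every action a."""
    return {a: utility(history, a) + soft_value(history + (a,), k + 1, betas, actions, prior, utility)
            for a in actions}


def soft_value(history, k, betas, actions, prior, utility) -> float:
    """V_k = (1/beta_k) log Z_k; the base case V = 0 corresponds to Z = 1."""
    if k == len(betas):
        return 0.0
    q = continuation(history, k, betas, actions, prior, utility)
    beta = betas[k]
    if beta == 0.0:
        # Limit beta -> 0: the log-partition term reduces to the prior expectation of q.
        return sum(prior[a] * q[a] for a in actions)
    logits = [math.log(prior[a]) + beta * q[a] for a in actions]
    m = max(logits)
    return (m + math.log(sum(math.exp(t - m) for t in logits))) / beta


def policy(history, k, betas, actions, prior, utility) -> Dict[int, float]:
    """f[a_k | a_<k] proportional to p[a_k] * exp(beta_k * (U + V_{k+1}))."""
    q = continuation(history, k, betas, actions, prior, utility)
    logits = {a: math.log(prior[a]) + betas[k] * q[a] for a in actions}
    m = max(logits.values())
    weights = {a: math.exp(t - m) for a, t in logits.items()}
    z = sum(weights.values())
    return {a: w / z for a, w in weights.items()}


def toy_utility(history: History, a: int) -> float:
    """Hypothetical payoffs: action 1 pays 1 now, but only a first action 0 unlocks 3 later."""
    if history == ():
        return 1.0 if a == 1 else 0.0
    if history == (0,):
        return 3.0 if a == 1 else 0.0
    return 0.0


if __name__ == "__main__":
    actions, prior = (0, 1), {0: 0.5, 1: 0.5}
    print(policy((), 0, [20.0, 20.0], actions, prior, toy_utility))  # two-step look-ahead
    print(policy((), 0, [20.0], actions, prior, toy_utility))        # first step only
```

With two steps of look-ahead, the player picks action 0 with probability close to 1, whereas truncating `betas` to a single entry makes the immediately rewarding action 1 preferable. The depth of reasoning is therefore set by the length of `betas`, not by the size of its entries.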

3.3 Extension

With this formal and generic treatment of information-processing costs for sequential decision-making, it is natural to use it to capture the boundedly rational reasoning of an agent making sequential or simultaneous decisions in games. By representing reasoning as an extensive-form game tree, both kinds of decision can be captured in a similar manner: simultaneous decisions are treated as if they were pseudo-sequential, with the player considering the possible repercussions of various choices.

To model higher-order reasoning, we extend the information-theoretic formulation for sequential decisions discussed in Sect. 3.2. This involves representing reasoning as a (pseudo) sequence of decisions, where each decision corresponds to a “level” of reasoning. At each level, players may play incorrectly, producing level-k play that is modulated by \(\beta _k\). A high \(\beta _k\) corresponds to an exact level-k thinker, while a low \(\beta _k\) corresponds to an error-prone level-k thinker. We can formalise this chain of reasoning as an extensive-form game tree, where at the root node, a player is faced with a decision to choose from a set of available options A or perform additional processing f(A) to acquire new information on the beliefs and repercussions of each choice. If the player chooses not to process additional information, each branch is terminated early, and the player makes a decision based solely on the immediately available information. However, if the player chooses to process additional information, each action branch is (potentially) extended to analyse the possible repercussions of taking an action, and a higher-level thinker can examine these repercussions. This process continues until the player runs out of processing resources or, in a finite problem, converges to a solution. For example, in the p-beauty contest analysed in later sections, convergence occurs once the guess reaches 0.
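
As a rough illustration of a reasoning chain that stops either at a fixed point or when a processing budget runs out, the Python sketch below iterates deterministic level-k best responses in a p-beauty contest. It assumes p = 2/3, a level-0 anchor guess of 50, that each level guesses p times the guess of the level below (ignoring its own effect on the average), integer guesses rounded down, and a hypothetical cap `max_levels` standing in for exhausted resources. None of these choices is taken from the later analysis, and the errors that \(\beta _k\) would introduce are left out.

```python
import math
from fractions import Fraction


def level_k_chain(p: Fraction = Fraction(2, 3), anchor: int = 50, max_levels: int = 100) -> list[int]:
    """Guesses of levels 0, 1, 2, ... until a fixed point is reached or the level cap is hit.

    Each level best responds to the level below by guessing p times its guess
    (ignoring its own effect on the average), rounded down to an integer.
    """
    guesses = [anchor]
    while len(guesses) <= max_levels:
        nxt = math.floor(p * guesses[-1])  # exact rational arithmetic, then round down
        if nxt == guesses[-1]:
            break  # fixed point: a further level of reasoning changes nothing
        guesses.append(nxt)
    return guesses


if __name__ == "__main__":
    print(level_k_chain())              # reasoning runs until the guess reaches 0
    print(level_k_chain(max_levels=3))  # resources run out after three levels
```

Without a binding cap, the chain is [50, 33, 22, 14, 9, 6, 4, 2, 1, 0] and stops at the fixed point 0. With `max_levels=3`, reasoning is cut off at [50, 33, 22, 14].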

This extensive-form game tree representation means that reasoning about simultaneous decisions can be treated in the same manner as decisions in sequential games. However, a key issue is that Eq. (6) requires K information processing parameters, one for each step or level of reasoning. To analyse the purpose of these parameters, we consider a simple case, setting \(\beta _k=\beta\) for all k, which gives discrete control (Braun et al., 2011):

\[ f[a_{\le K}] = \frac{1}{Z} \, p[a_{\le K}] \exp \left( \beta \sum _{k=1}^{K} U[a_k \mid a_{<k}] \right), \qquad p[a_{\le K}] = \prod _{k=1}^{K} p[a_k \mid a_{<k}] \qquad (8) \]

which clarifies that the boundedness applies to the computation of payoff U, but the depth of reasoning (or recursion-depth) is dependent on the length of the sequence (or level) K, not on \(\beta\). To represent higher-order reasoning more succinctly, it would be desirable to implicitly base the sequence length K on the resource parameter \(\beta\) rather than keeping them separate. This would allow us to treat recursive reasoning in information-theoretic terms. For instance, when \(\beta \rightarrow \infty\), \(K \rightarrow \infty\), which captures (potential) infinite-regress, while \(\beta =0\) would imply \(K=0\), leaving the player with no information processing abilities.

In order to achieve this, \(\beta\) must be reduced at each level k. This reduction in \(\beta\) at each level is related to the relaxation of mutual consistency, reflecting how a player perceives other (lower-level) players’ reasoning about the problem. In the next section, we outline our proposed approach for modelling this. This extension allows us to reason directly about resource constraints instead of sequential level-k thinking.

4 Proposed approach

The proposed approach implicitly enforces the assumption that, in sequential games, it becomes more difficult to reason about later stages in the game (e.g., in chess, it is difficult to reason five steps ahead), and likewise in simultaneous games, it is difficult to perform the level of higher-order reasoning required to arrive at the equilibrium solution. The further a player mentally tries to reason, the more likely an error is to occur, as the processing resources deplete. This assumption is captured under the proposed model in a generic information-theoretic sense.

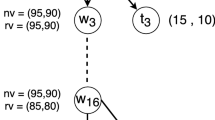

The key concept is that reasoning about reasoning becomes more difficult the further one tries to explore the extensive-form tree. This overall process is visualised in Fig. 1, where the player’s reasoning error increases with each step of reasoning. This representation can be thought of as a hierarchy of players noisily responding to lower-level players, or as a sequential decision with increasing noise at each step. Once a player’s resources are depleted, the deeper levels simply echo the prior beliefs, as the noise obscures the payoffs.

Fig. 1 The effect of discounting resources over time. In the beginning, the player has \(\beta\) resources and may make some error (red bars). Parameter \(\gamma\) controls how this error grows over time and, implicitly, how the player believes lower-level players will respond. A low \(\gamma\) means the errors increase drastically at each level, assuming opponents with much lower reasoning abilities. As \(\beta \gamma ^{k} \rightarrow 0\), the noise increases, and the recursion eventually stops once the utilities become indistinguishable (i.e., the player cannot reason any deeper).

4.1 Quantal hierarchy model

To model higher-order reasoning with processing costs, we propose a more flexible and succinct approach than simply setting a maximum depth K and corresponding parameters \(\beta _k\) in a pseudo-sequential decision-making task. Instead, we introduce an overall information processing parameter \(\beta\), which captures the available information processing resources for a player, and a discount parameter \(\gamma \in [0,1]\), which controls the reduction in player rationality throughout the reasoning chain. This approach allows for heterogeneous bounds on player reasoning, relaxing the assumptions of best response and mutual consistency. We refer to this approach as the quantal hierarchy (QH) model, as it shares formal similarities with quantal response equilibrium and cognitive hierarchy models, as discussed in previous sections.

4.1.1 Formulation

We represent reasoning as information-constrained sequential decision-making. The proposed formulation features only two parameters, \(\beta\) and \(\gamma \in [0,1]\) (as opposed to the vector \(\beta _k\) and number of levels K). Reasoning resources are then set as

\[ \beta _k = \beta \gamma ^{k} \qquad (9) \]

i.e., \(\beta _k\) is \(\beta\) discounted based on \(\gamma\) and the current depth of reasoning. This can be represented by the following recursive free energy difference:

where we have represented the sequence as an infinite sum. The sum converges due to the discount parameter, as the later (innermost) sums simply echo the prior beliefs once the player’s computational resources are exhausted. This formalisation draws parallels with reinforcement learning (RL) methods, where such representations are common for reasoning about future states. In RL, \(\gamma\) is used to discount future timesteps. Here, \(\gamma\) is used to discount future chains of reasoning about reasoning (i.e., the depth of recursion is governed by \(\beta\) discounted by \(\gamma\)) and to represent the limited resources that we believe other players have. We have represented this as an infinite sum with \(k \rightarrow \infty\); however, in various problems (such as sequential games), K can be assumed to be finite. The solution for the decision function \(f[a_k \mid a_{<k}]\) with the discounted \(\beta\) becomes:

which aims to capture decisions based on beliefs about other players reasoning at later stages. With the assumption of a discount rate \(\gamma\), the recursion is now depth-bound by the (discounted) resource parameter. Once \(\beta \gamma ^{k} \rightarrow 0\) (Footnote 1), the recursion stops, since the result simply echoes the prior belief as no weight is placed on the payoff; this becomes the base case for recursion (the “naive” player). This means that at the new implicit limit K (Footnote 2), denoted by the case where \(\beta _k \approx 0\), reasoning about the future provides no new information, which recovers the original form of Eq. (5) (when \(\gamma =1\)) with the introduction of conditioning on histories.

With the proposed representation, what was previously thought of in the context of sequential decisions can be extended and modified to think about hierarchies of beliefs. The informationally constrained players “break” the chain of reasoning as they deplete their cognitive or computational capabilities, as bounded by \(\beta\) and \(\gamma\). Formally, this is represented as a (potentially) infinite sum that converges based on \(\gamma\). By discounting future computation in a chain of recursion, we can approximate higher-order reasoning, where players become increasingly limited as the reasoning chain progresses, making it more challenging to reason about reasoning.
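
Under the discounted resources \(\beta \gamma ^{k}\), the implicit depth K can be approximated as the number of levels whose resources stay above a small threshold \(\varepsilon\); for \(0< \gamma < 1\) and \(\beta \ge \varepsilon\) this is \(\lfloor \log (\beta /\varepsilon ) / \log (1/\gamma ) \rfloor + 1\). The Python sketch below counts the levels directly. The threshold `eps` is our assumption, since the text only requires \(\beta _k \approx 0\), and the function name is hypothetical.

```python
from typing import Optional


def implicit_depth(beta: float, gamma: float, eps: float = 1e-3, max_depth: int = 10_000) -> Optional[int]:
    """Count the levels k = 0, 1, 2, ... whose resources beta * gamma**k are at least eps.

    Returns None when the depth is unbounded (gamma >= 1 with beta >= eps), mirroring
    potential infinite regress. Assumes beta >= 0 and 0 <= gamma <= 1.
    """
    if beta < eps:
        return 0  # no usable resources: the player simply echoes the prior
    if gamma >= 1.0:
        return None  # no discounting, so resources never deplete
    depth, b = 0, beta
    while b >= eps and depth < max_depth:
        depth += 1
        b *= gamma
    return depth


if __name__ == "__main__":
    for beta, gamma in [(0.0, 0.9), (10.0, 0.0), (10.0, 0.5), (100.0, 0.5), (10.0, 0.9), (10.0, 1.0)]:
        print(beta, gamma, implicit_depth(beta, gamma))
```

For example, \(\beta = 10\) and \(\gamma = 0.5\) give 14 levels and \(\beta = 100\) gives 17. \(\beta = 0\) gives no reasoning at all, \(\gamma = 0\) leaves only the top-level decision, and \(\gamma = 1\) gives unbounded depth. This is in line with the desired behaviour that larger \(\beta\) implies deeper recursion and \(\beta = 0\) implies \(K = 0\).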

4.1.2 Parameters

Resource parameter The resource parameter \(\beta\) quantifies the amount of processing a player can perform. Perfect utility maximisation behaviour is recovered with \(\beta \rightarrow \infty\). With \(\beta <\infty\), players are assumed to be limited in computational resources and must now balance the trade-off between their computation cost and payoff. With \(\beta \rightarrow 0\), players have no processing resources, and choose based on their prior beliefs (default actions). Anti-rational (or adversarial) play can be modelled with \(\beta \rightarrow -\infty\).

Discount parameter The discount parameter \(\gamma\) quantifies other players’ mental processing abilities in terms of level-k thinking. A high \(\gamma\) assumes other players play at a relatively similar cognitive level, whereas a low \(\gamma\) assumes other players have less playing ability. With \(\gamma < 1\), Eq. (10) is guaranteed to converge to a finite sequence of decisions, where the ability to process information decreases the further we get through the sequence. This captures the belief about play at later stages of reasoning, where other players are assumed to be less rational (and thus noisier), as governed by \(\gamma\). The case \(\gamma < 1\) implicitly relaxes mutual consistency, as lower-level thinkers are then governed by a lower resource parameter, and allows players to believe that players at later nodes behave more noisily. In the case of otherwise infinite regress (where backward induction cannot be used), the proposed limited-foresight approach converges to a finite approximation of the sequence by relaxing the assumption of mutual consistency. In tractable problems, we recover an approximation of backward induction where the player performing such induction may make errors at each step due to limited computational processing abilities. With \(\gamma =1\), we recover mutual consistency, as we assume other players are just as rational as ourselves, and in the special case with uniform prior beliefs and \(\gamma =1\), we collapse to a logit form of agent QRE (McKelvey & Palfrey, 1998; Turocy, 2010). The case \(\gamma =0\) is also a valid special case of Eq. (10), in which no future processing is performed.

In the limit, with \(\beta \rightarrow \infty , \gamma =1\), perfect backward induction is recovered (see Sect. B.1). Crucially, the proposed QH model allows for a general representation, relaxing the perfect rationality assumption (with \(\beta< \infty , \gamma < 1\)), which can model out-of-equilibrium behaviour compatible with observed experimental data. We explore the role of these parameter values in more detail in the following section.

Parameter interactions In Fig. 2 we visualise how \(\beta\) and \(\gamma\) interact in a general setting. For \(\beta \rightarrow \infty\) and \(\gamma =1\), we approach payoff maximisation behaviour, i.e., the perfectly rational (Nash Equilibrium) player is recovered. For \(\beta \rightarrow -\infty\) and \(\gamma =1\), payoff minimisation behaviour (an adversarial player) is recovered. In between, we can see how \(\gamma\) adjusts \(\beta\). It is these values in between random play (\(\beta =0\)) and perfect payoff maximisation behaviour which are particularly interesting, as they give rise to out-of-equilibrium behaviour not predicted by traditional methods.

Fig. 2 A heatmap visualising the player’s resulting expected payoff for different values of \(\beta\) and \(\gamma\). Yellow represents the maximal expected payoff, and purple represents the minimum expected payoff. When either parameter is 0, the result is a random choice amongst the actions.

4.2 Explanation

We work through a generic example of the QH model on an extensive-form game tree. A player is given decision-making resources, governed by \(\beta\), to make a decision f. At each stage of reasoning k, the player’s resources are discounted by \(\gamma\). Ultimately, the player’s resources become depleted (at K, i.e., once \(\beta \gamma ^k \approx 0\)); the game tree is then considered terminated, and the naive player chooses based on their prior belief (which we assume to be uniform). This decision is then propagated backwards and becomes the prior belief at the higher stage of reasoning \(p[a_{K-1}]\), where the player has processing resources \(\beta \gamma ^{K-1}\) and hence noisily responds to the lower-level play based on these resources. This process continues, with noisy responses from each lower-level thinker captured by the resource constraint. Finally, once all results have been recursively propagated, the highest-level player’s decision is made (which may still be noisy, as captured by \(\beta\)). That is, the decision is made recursively, starting from the most basic level of player reasoning (the naive player) and reasoning upwards.
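
The bottom-up propagation just described can be sketched in Python for a symmetric two-player normal-form game under several simplifying assumptions of ours. The level at which \(\beta \gamma ^k\) falls below a threshold `eps` is the naive player with a uniform strategy. The player at depth k treats the mixed strategy computed at depth k + 1 as its belief about the opponent and gives a logit response with resources \(\beta \gamma ^k\) and a uniform prior. This is an illustrative quantal level-k style reading of the explanation, not the paper's implementation, and the "undercutting" payoff matrix `UNDERCUT` is hypothetical.

```python
import math
from typing import List, Sequence


def logit_response(expected: Sequence[float], beta: float) -> List[float]:
    """Uniform-prior logit response: probabilities proportional to exp(beta * payoff)."""
    z = [beta * u for u in expected]
    m = max(z)
    w = [math.exp(t - m) for t in z]
    s = sum(w)
    return [x / s for x in w]


def quantal_hierarchy_strategy(U: Sequence[Sequence[float]], beta: float, gamma: float,
                               eps: float = 1e-3, max_depth: int = 10_000) -> List[float]:
    """Top-level mixed strategy, built from the naive player upwards.

    U[i][j] is the payoff for choosing i against an opponent choosing j (symmetric game).
    Level k has resources beta * gamma**k; levels with resources below eps are
    treated as the naive player, who plays uniformly.
    """
    n = len(U)
    resources = []
    b = beta
    while b >= eps and len(resources) < max_depth:
        resources.append(b)
        b *= gamma
    strategy = [1.0 / n] * n  # naive player: echoes the uniform prior
    for b_k in reversed(resources):  # deepest level first, top level (beta) last
        expected = [sum(U[i][j] * strategy[j] for j in range(n)) for i in range(n)]
        strategy = logit_response(expected, b_k)
    return strategy


# Hypothetical "undercutting" game: the ideal choice is one below the opponent's (floored at 0).
UNDERCUT = [[-(i - max(j - 1, 0)) ** 2 for j in range(4)] for i in range(4)]

if __name__ == "__main__":
    for gamma in (0.0, 0.5, 0.9):
        s = quantal_hierarchy_strategy(UNDERCUT, beta=50.0, gamma=gamma)
        print(gamma, [round(x, 3) for x in s])
```

With \(\gamma = 0\), the top-level player responds only to the naive uniform player and mostly picks action 1. With \(\gamma = 0.9\), the longer chain of noisy responses leads it to action 0, the Nash equilibrium of this hypothetical game.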

4.2.1 Basic example

To help illustrate the proposed approach, we will use a simplified version of the Ultimatum game (see Sect. C.4) as a specific example. In this game, a player must decide what percentage of a pie to take. We assume that there are uniform priors among the available options at each stage.

At the first stage, Player 1 must request the percentage of the pie they want to take, denoted by \(a_1 \in [0, 100]\), with 100 giving the highest payoff (i.e., they receive the entire pie). However, at the second stage, Player 1 encounters a fairness calculator (Player 2). Player 2 decides whether or not to approve Player 1’s request, denoted by \(A_2=\{\text {accept}, \text {reject}\}\). The decisions are based on the following utilities:

where \(U_n\) corresponds to Player n’s payoff, and \(f_n\) the probability with which Player n chooses the action. A player who has no look-ahead, i.e., one who assigns zero weight to future decisions (or assumes that their opponent has zero processing abilities), can be represented with \(\beta \rightarrow \infty\) and \(\gamma =0\). Such a player simply looks at the first stage and sees that it is in their best interest to request 100% of the pie. However, this player fails to take into account the repercussions of their chosen action, as they did not consider the future decisions. They did not compute \(f_2[a_2 \mid a_1]\), and thus assumed that \(f_2[a_2 \mid a_1]\) is uniform and that their opponent would be indifferent to accepting or rejecting their request regardless of the value of \(a_1\).

A perfectly rational player with unlimited computational resources, i.e., \(\beta =\infty\) and \(\gamma =1\), would request 49 (assuming integer requests). They assign weight to the future consequences of their actions, and can see that for any \(a_1 > 50\), the fairness calculator will deny their request, leaving them with nothing (at \(a_1=50\), the calculator is indifferent between accepting and rejecting their request). This corresponds to the subgame perfect equilibrium, obtained by backward induction: the player examines the future until reaching the end of the game and then reasons backwards to request the optimal choice.

A player with limited computational resources, i.e., \(\beta < \infty\), requests the best action they can subject to their resource constraint. For example, they may only request \(a_1=40\), as they are unable to complete the search for \(a_1=49\). A player with no information processing abilities, i.e., \(\beta =0\), cannot search for any optimal choices and therefore chooses based on the prior distribution, which we assumed to be uniform. Therefore, the player is equally likely to choose any \(a_1 \in [0, 100]\).

An interesting question is what happens if the “fairness” calculator may make errors, i.e., it is not necessarily a step function that rejects all requests above 50 and accepts all requests below. For example, there may be a range where a request of 100 is rejected but a request of 75 is not. This can be captured with \(\beta \gamma < \infty\), where the calculator makes more errors for lower values of \(\beta \gamma\), and is perfectly rational as \(\beta \gamma \rightarrow \infty\). If the fairness calculator is broken and indifferent between accepting and rejecting, this can be represented with \(\gamma =0\), which gives the calculator zero processing ability. This means \(f_2[\text {accept}_2 \mid a_1] = f_2[\text {reject}_2 \mid a_1] = 0.5\), and therefore, a rational Player 1 should request \(a_1=100\).
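The cases above can be reproduced with a small Python sketch of the two-stage example. Player 1 decides with resources \(\beta\) and the fairness calculator, one stage deeper, with \(\beta\gamma\); both respond in logit form with uniform priors. The calculator's utilities (accept gives \(100 - a_1\), reject gives 50) are a hypothetical choice that reproduces the described behaviour (reject above 50, accept below, indifferent at 50) and are not necessarily those used in the text; all function names are illustrative.

```python
import numpy as np

def softmax(logits):
    """Logit choice with a uniform prior, computed stably along the last axis."""
    z = logits - logits.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

REQUESTS = np.arange(101)  # integer requests a_1 = 0, 1, ..., 100

def accept_probability(a1, resources):
    """Hypothetical fairness calculator (Player 2): accept -> 100 - a1, reject -> 50,
    so it prefers rejecting above 50, accepting below 50, and is indifferent at 50."""
    u = np.stack([100.0 - a1, np.full(a1.shape, 50.0)], axis=-1)  # columns: [accept, reject]
    return softmax(resources * u)[..., 0]

def player1_policy(beta, gamma):
    """Player 1 decides with resources beta; Player 2, one stage deeper, with beta * gamma."""
    p_accept = accept_probability(REQUESTS, beta * gamma)
    expected = REQUESTS * p_accept  # Player 1 receives a_1 if accepted and 0 if rejected
    return softmax(beta * expected)

print(np.argmax(player1_policy(1e3, 1.0)))  # 49: near-perfect rationality, backward induction
print(np.argmax(player1_policy(1e3, 0.0)))  # 100: the calculator has no resources (coin flip)
```

Setting \(\beta = 0\) returns the uniform distribution over requests, while \(0 < \gamma < 1\) leaves Player 1 comparatively sharp but makes the calculator noisier, which is how the sketch expresses heterogeneous resources between the two players.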

This example shows the usefulness of the proposed approach, and how modifying \(\beta\) and \(\gamma\) can capture a variety of heterogeneous behaviours between the two players.

5 Results

In this section, we perform out-of-sample comparisons across various canonical economic games, including both sequential and simultaneous games. We compare the proposed quantal hierarchy model against well-known approaches to capturing boundedly rational reasoning, including QRE, level-k and cognitive hierarchy, as well as the Nash equilibrium predicted solutions. To assess the performance of each method, we fit the corresponding parameter values to experimental data and then evaluate the performance on hold-out data. For the quantal hierarchy method, these parameter values are \(\beta\) and \(\gamma\). For QRE, the parameter value is \(\lambda\), which serves a similar purpose to \(\beta\) in our approach, i.e., relaxing best response. For level-k, the parameter value is the number of reasoning steps k. For cognitive hierarchy, the parameter value is \(\tau\), the mean of the Poisson distribution of level-k thinkers. Further information on model fitting is given in Sect. A.

We show that the proposed approach captures human behaviour well and generalises beyond the training examples, achieving the best overall rank across a wide range of games.

5.1 Performance on canonical games

For this work, we use experimental data from various canonical economic sequential and simultaneous games. Specifically, for simultaneous games, we analyse market entrance and beauty contest games, and for sequential games, centipede and bargaining games.

For market entrance games, we use the data of Camerer (2011), originally presented in Sundali et al. (1995). For the beauty contest game, we use p-beauty contest results from Bosch-Domenech et al. (2002). For the centipede games, we use the four and six-level data from McKelvey and Palfrey (1992). For the sequential bargaining games, we use the Ultimatum game and two-stage game from Binmore et al. (2002) (Games 1 and 3 in their paper). Further discussion on game specifics, utilities, and experimental analysis is given in Sect. C.

For each game, we perform 5 \(\times\) 2 cross-validation (Dietterich, 1998), analysing the out-of-sample performance. This analysis guards against the additional parameter overfitting the training data and checks that the approach generalises to unseen data. We present the average RMSE on the unseen data and the resulting rankings (Demšar, 2006) of each method in Table 1. Ranks are computed within each game and then averaged, which accounts for the independence of the games and for the fact that errors cannot be compared directly across game classes. The resulting ranks are visualised in Fig. 3. These evaluation metrics allow us to compare the proposed method with existing approaches for predicting (out-of-sample) human behaviour on a range of canonical games.
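The evaluation protocol (5 \(\times\) 2 cross-validation, RMSE on the held-out half, and ranks averaged over games) can be sketched in Python. This is an illustrative sketch, not the fitting code used here: it assumes observations can be split at random into halves (what counts as an observation is an assumption), that lower RMSE is better, and that ties share the mean rank; the function names are hypothetical, and the error table in the usage lines is made up rather than taken from Table 1.

```python
import numpy as np

def five_by_two_splits(n_samples, seed=0):
    """5 x 2 cross-validation (Dietterich, 1998): five random halvings of the data,
    each half used once for fitting and once for evaluation (10 train/test pairs)."""
    rng = np.random.default_rng(seed)
    for _ in range(5):
        perm = rng.permutation(n_samples)
        half_a, half_b = perm[: n_samples // 2], perm[n_samples // 2 :]
        yield half_a, half_b
        yield half_b, half_a

def rmse(predicted, observed):
    """Root-mean-square error between predicted and observed choice frequencies."""
    diff = np.asarray(predicted, dtype=float) - np.asarray(observed, dtype=float)
    return float(np.sqrt(np.mean(diff ** 2)))

def average_ranks(errors):
    """errors[g, m] = mean hold-out RMSE of method m on game g. Methods are ranked within
    each game (1 = lowest error; ties share the mean rank), then ranks are averaged."""
    errors = np.asarray(errors, dtype=float)
    ranks = np.empty_like(errors)
    for g, row in enumerate(errors):
        lower = (row[None, :] < row[:, None]).sum(axis=1)   # methods with strictly lower error
        equal = (row[None, :] == row[:, None]).sum(axis=1)  # ties, including the method itself
        ranks[g] = lower + (equal + 1) / 2.0
    return ranks.mean(axis=0)

# Made-up errors for three games (rows) and three methods (columns), for illustration only
toy = [[0.10, 0.12, 0.30],
       [0.20, 0.15, 0.40],
       [0.05, 0.05, 0.09]]
print(average_ranks(toy))  # [1.5 1.5 3. ]
```

Averaging ranks rather than raw errors is what makes games with very different error scales comparable.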

The proposed quantal hierarchy method performs consistently well across the games trialled, achieving the best (lowest) overall rank (Table 1) as well as the most consistent results (narrowest distribution of ranks, Fig. 3), always placing in the top 2. These results support the modelling assumption that it becomes more difficult to reason at deeper levels of reasoning, and thus the reasoning process becomes more erroneous. This motivates the use of the quantal hierarchy model for capturing human decision-making in a wide range of settings.

In the following subsections, we analyse the game results in more detail.

5.2 Simultaneous games

Market entrance

Market entrance game. The darker lines indicate the mean result from the 5 \(\times\) 2 cross-validation. The shaded regions indicate ± one standard deviation. The out-of-sample data are shown as the black circles. The proposed quantal hierarchy model is the purple line. Quantal response equilibrium is the orange line, level-k is the blue line, and cognitive hierarchy is the green line. The Nash equilibrium solution is indicated as the diagonal dashed grey line

In the market entrance game, players must simultaneously decide whether to enter or stay out of a market, where the payoff depends on the decisions of the other players and market capacity c (see Sect. C.1). Experimental data show that player behaviour in market games is inconsistent with either mixed or pure Nash equilibria, although, with repeated play, players begin to approach the mixed strategy equilibrium (Duffy & Hopkins, 2005).

These deviations from equilibrium are captured well by the proposed quantal hierarchy model (Fig. 4). We see that in the beginning (before learning, Fig. 4a), the players overestimate for low c and underestimate for high c. Towards the final rounds (after learning, e.g. Fig. 4b), the behaviour approaches equilibrium, and the proposed QH model approximates this well using an increase in processing resources \(\beta\) and/or \(\gamma\) (see Table 2) to capture this player “learning”. These changes highlight an important property of the QH model. If a player is learning, i.e., becoming closer to rational, this should correspond to an increase in \(\beta\) (and/or an increase in \(\gamma\)).

The level-k model fails to capture the overall trend, and is best fitted with \(k=0\), performing worse than the mixed strategy equilibrium (and all other alternatives). The reason for this is simple. Level-k (\(k\ge 1\)) implies a step function: for \(c > T\), where T is some threshold, the player enters with certainty, and for \(c \le T\), the player stays out with certainty. This step function is further from the experimental data than the uniform case (\(k=0\)), so the uniform case is chosen. The cognitive hierarchy model improves upon level-k by fitting a distribution of level-k thinkers, which can “smooth” out this step function (Fig. 4). While this captures the qualitative trend (over-entry for low c, under-entry for high c, near equilibrium for mid c), quantitatively the approach is not as strong as QRE, quantal hierarchy, or even the mixed-strategy equilibrium in most cases.

The QRE model is also a good fit here; however, its representation is constrained to linear lines, whereas the QH representation can capture “S”-shaped curves, better approximating the experimental data in 3 out of the 5 blocks. QRE and QH significantly outperform the approaches that relax only mutual consistency (level-k and CH), motivating the relaxation of best response in addition to mutual consistency.

p-Beauty contest

Beauty contest games. The darker lines indicate the mean result from the 5 \(\times\) 2 cross-validation. The shaded regions indicate ± one standard deviation. The out-of-sample data are shown as the black line. The proposed quantal hierarchy model is the purple line. Quantal response equilibrium is the orange line. The level-k and cognitive hierarchy plots are shown in Fig. 12, as the large difference in scales would otherwise distort the figure. The Nash equilibrium solution is indicated as the diagonal dashed grey line

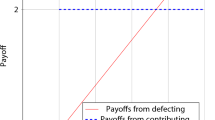

In the p-beauty contest (Moulin, 1986), players must try to guess p times the average guess (in the range [0, 100]) of the other competitors (see Sect. C.2). The Nash equilibrium is for all players to guess 0; experimentally, however, we see large deviations from this behaviour.

Analysing the experimental results (Fig. 5), we see very strong performance for the QH model when modelling the less experienced players, e.g., in the lab experiments, where it captures the overall distribution and achieves the lowest error rate. However, for the other experiments, composed of more experienced players or players with more time (take-home, newspaper), the distribution is better approximated by QRE.

This is because, under the proposed approach, the distribution of choices narrows around the optimal choice as a player becomes more rational (see Fig. 6). However, under these experimental settings, even in the theorist case, there is boundedly rational (and anti-rational) behaviour. These deviations are captured well by QRE, whereas it is difficult for the QH model to capture both the wide distribution of choices and the bulk of probability mass around the optimal choice. For example, Fig. 6 shows the proposed approach approximating level-k: as k increases, the distribution narrows. Here, this narrowing makes it difficult to capture the entire prediction range for the more advanced subjects, because many sub-rational choices are mixed in. As a result, we see similar fitted models for each case, despite the theorists clearly having a higher level of reasoning. If, instead, we tried to approximate the average player for each case, we could capture this increase in reasoning effort, and it would be reflected in an increase in \(\beta\) and/or \(\gamma\), as expected.

Nevertheless, in all cases, the model still significantly outperforms level-k, cognitive hierarchy, and the mixed strategy equilibrium. The level-k model predicts some of the representative spikes in the experimental data (e.g., guesses of 33 for \(k=1\), 22 for \(k=2\), etc.). However, players do not necessarily choose according to level-k, and may make errors around the best response suggested by level-k reasoning. Level-k thinking presupposes that guesses lie on the values \(p^k \times 50\), i.e., with \(p=\frac{2}{3}\), we get \(p \times 50, p^2 \times 50, \dots , p^k \times 50\), as the players at each level best respond to lower-level players. In the proposed QH model, level-k reasoning can be recovered if the resources at each level t satisfy \(\beta _t=\infty\) for \(t \le k\) and \(\beta _t=0\) for \(t > k\). However, with \(\beta _t < \infty\), the proposed QH model produces a distribution around these best-responding values, anticipating potential errors in player reasoning, with these errors growing throughout the chain of reasoning.

The cognitive hierarchy model can improve upon the level-k approach here by weighting the “spikes” of the level-k model differently; however, it still fails to capture the underlying distribution. A large reason for this is that certain predictions in the p-beauty contest are considered irrational, for example, any prediction over 67. However, such predictions do occur experimentally (Fig. 5). If a player believes that other players will choose the maximal guess of 100, then the player should choose \(\frac{2}{3} \times 100 \approx 67\). Level-k and cognitive hierarchy models cannot capture such irrational behaviour where players choose more than 67. That is, there is no distribution of level-k thinkers that would predict 100. However, this feature can be captured directly under the proposed QH and QRE models due to the errors in play, again motivating the usefulness of relaxing best response in addition to mutual consistency.
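The level-k spikes, and the way cognitive hierarchy moves and reweights them, can be sketched in Python. This is a hedged sketch rather than the fitting code used here: it assumes a uniform level-0 player with mean guess 50, the large-population approximation (a player's own guess does not move the average), and a Poisson(\(\tau\)) distribution of levels truncated at the highest level considered and renormalised; the function names are hypothetical.

```python
import math

def level_k_guesses(p, max_level, level0_mean=50.0):
    """Level-k guesses p**k * 50: each level best responds to the level below,
    starting from a uniform level-0 player whose mean guess is 50."""
    return [level0_mean * p ** k for k in range(1, max_level + 1)]

def cognitive_hierarchy_guesses(p, tau, max_level, level0_mean=50.0):
    """Cognitive-hierarchy guesses for levels 1..max_level. A level-k player believes the
    others are levels 0..k-1 in proportion to a Poisson(tau) distribution, renormalised,
    and guesses p times the mean guess they expect (own influence on the average ignored).
    Also returns the (unnormalised) Poisson shares of levels 0..max_level."""
    poisson = [math.exp(-tau) * tau ** h / math.factorial(h) for h in range(max_level + 1)]
    means = [level0_mean]  # mean choice of each level, starting with level 0
    for k in range(1, max_level + 1):
        weights = poisson[:k]
        believed_mean = sum(w * m for w, m in zip(weights, means)) / sum(weights)
        means.append(p * believed_mean)
    return means[1:], poisson

p = 2 / 3
print([round(g, 1) for g in level_k_guesses(p, 3)])  # [33.3, 22.2, 14.8]
guesses, shares = cognitive_hierarchy_guesses(p, tau=1.5, max_level=3)
print([round(g, 1) for g in guesses])                # [33.3, 26.7, 23.9]
```

Under cognitive hierarchy the spikes shift (26.7 rather than 22.2 at level 2) and are weighted by the Poisson shares, but the prediction is still a set of spikes rather than a smooth distribution, and no best-responding level (\(k \ge 1\)) guesses more than \(p \times 50\).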

In summary, we see that under the lab experiment, the QH approach is the best fit. However, QRE is a better fit in other cases of the beauty contest game.

5.2.1 Sequential games

Centipede games

In the centipede game (see Sect. C.3), “two players alternately get a chance to take the larger portion of a continually escalating pile of money. As soon as one person takes, the game ends with that player getting the larger portion of the pile, and the other player getting the smaller portion” (McKelvey & Palfrey, 1992).

The subgame perfect equilibrium of the centipede game is for each player to take the pot immediately without proceeding to any further rounds; experimentally, however, players behave far from the subgame perfect equilibrium (Fig. 14). In general, many players take towards the middle of the game. The proposed QH model captures this trend well, whereas QRE and cognitive hierarchy generally over-weight the earlier nodes and under-weight the later nodes (Fig. 7).

The quantal hierarchy model provides the best fit for both the four and six-level centipede games, capturing realistic beliefs. In this reasoning process, the player believes they are reasoning at a higher level than their opponent, and, in addition, it is as if the player overestimates how noisy their own play will be when faced with a decision at later nodes. This overestimation arises because, once actually faced with the decision, the player has a smaller game tree to consider. This reasoning process approximates the experimental results well, motivating the discounting of information processing resources for capturing future beliefs. Comparing the fitted parameters (\(\beta\) and \(\gamma\)) for the four and six-level variants (Table 2), the six-level variant yields larger \(\beta\) and \(\gamma\), i.e., greater information processing resources for the player, which we attribute to the longer chain of reasoning.
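The re-planning described above can be sketched in Python for a four-node centipede game. This is a hedged illustration, not the fitted model: the payoffs below are hypothetical (a doubling pile, not the values of Sect. C.3), each response is a logit choice between take and pass with a uniform prior, and the mover at node j re-plans over the remaining tree with resources \(\beta\) at their own node and \(\beta\gamma^d\) at the node d steps further on. Setting \(\gamma < 1\) makes later nodes look noisier from the vantage point of an earlier decision than they will be once reached, which is the overestimation described in the text. The function names are illustrative.

```python
import numpy as np

# Hypothetical payoffs (P1, P2) for taking at each of four nodes, with a doubling pile;
# END holds the payoffs if nobody takes. Player 1 moves at even nodes, Player 2 at odd.
TAKE = [(4, 1), (2, 8), (16, 4), (8, 32)]
END = (64, 16)

def take_probability(j, take, end, beta, gamma):
    """Probability that the mover at node j takes, re-planning over the remaining tree:
    node j + d is evaluated with resources beta * gamma**d and a uniform take/pass prior."""
    value = np.asarray(end, dtype=float)                # continuation payoffs (P1, P2)
    for k in range(len(take) - 1, j - 1, -1):           # backward induction from the last node
        mover = k % 2
        u = np.array([take[k][mover], value[mover]], dtype=float)  # [take, pass] for the mover
        logits = beta * gamma ** (k - j) * u
        weights = np.exp(logits - logits.max())
        p_take = weights[0] / weights.sum()
        value = p_take * np.asarray(take[k], dtype=float) + (1 - p_take) * value
    return p_take

def stopping_distribution(take, end, beta, gamma):
    """Probability that the game ends at each node; the last entry is 'nobody takes'."""
    reach, dist = 1.0, []
    for j in range(len(take)):
        q = take_probability(j, take, end, beta, gamma)
        dist.append(reach * q)
        reach *= 1 - q
    dist.append(reach)
    return np.array(dist)

print(stopping_distribution(TAKE, END, beta=0.5, gamma=0.5).round(3))
```

With \(\beta = 0\) every node is a coin flip, and with large \(\beta\) and \(\gamma = 1\) the sketch recovers the subgame perfect prediction of taking at the first node.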

The significantly improved performance over quantal response equilibrium on both games motivates the usefulness of relaxing mutual consistency in addition to best response. By relaxing mutual consistency, we captured the perceived “lapse” in reasoning when considering the full extensive-form game tree by reducing the information processing resources the further the player tries to reason through the tree.

Four and six-level centipede games. The darker lines indicate the mean result from the 5 \(\times\) 2 cross-validation. The shaded regions indicate ± one standard deviation. The out-of-sample data are shown in the dark grey bars. The proposed quantal hierarchy model is the purple line. Quantal response equilibrium is the orange line, level-k is the blue line, and cognitive hierarchy is the green line. The Nash equilibrium solution is indicated as the light grey bar at the first move

Bargaining games

In bargaining games, players alternately bargain over how to divide a sum (see Sect. C.4). We examine two types, single-stage (Ultimatum) and two-stage bargaining games. These are extensions of the example game considered in Sect. 4.2.1.

Ultimatum The results for the ultimatum game are presented in Fig. 8. The QH model explains important features of the observed experimental behaviour. For example, with higher \(V_1\), Player 1 is likely to make a larger initial request (Fig. 8a vs 8c). Perfect rationality does not capture this (the Nash equilibrium remains unchanged), whereas the QH model predicts higher initial requests (due to the higher \(V_1\) if the request is rejected). The QRE and QH models behave similarly here because the ultimatum game is nearly a single-stage decision, so the QH model “collapses” to QRE. This is also confirmed by the fitted parameters (Table 2): the (10, 10) and (70, 10) cases have almost identical values for \(\beta\) and \(\lambda\), and QH has relatively high values of \(\gamma\), meaning the differences between Player 1 and Player 2 processing resources are small. However, something interesting happens in the (10, 60) case, where QRE cannot capture the distribution. If we look to the right of the rational rejection region for Player 2 (Fig. 16), we do not see any acceptances in (10, 10) or (70, 10), whereas in the rational rejection region of (10, 60) we see several acceptances. This behaviour is irrational, because had the player rejected the request, they would have received a higher payoff. In contrast, Player 1's initial requests are relatively rational, with the peak occurring around the rational request of 40. The proposed model captures this mismatch in player rationality with a small \(\gamma\), i.e., a large discount in processing resources. Standard QRE cannot capture this mismatch, as it assumes a single rationality parameter \(\lambda\) for both players. These results motivate the discounting of player resources, which can capture heterogeneous information processing resources between the two players.
Alternative forms of QRE, such as heterogeneous QRE (Rogers et al., 2009), have also been proposed to deal with such dilemmas; however, this heterogeneity is captured natively by the QH model.

The level-k model fails to capture any of the trends, with the uniform level-0 case being the best fit. The cognitive hierarchy model predicts a representative spike at the rational request in the (70, 10) and (10, 60) cases, but it is still clearly outperformed by both QRE and QH.

These results confirm the usefulness of QH. When both players behave with similar levels of rationality, this can be captured with \(\gamma \rightarrow 1\), and the model acts the same as QRE. However, when there is a mismatch in player rationality, this heterogeneity can be captured directly with \(\gamma < 1\), which became most pronounced in the (10, 60) case.

Ultimatum game. The darker lines indicate the mean result from the 5 \(\times\) 2 cross-validation. The shaded regions indicate ± one standard deviation. The out-of-sample data are shown as the black line. The proposed quantal hierarchy model is the purple line. Quantal response equilibrium is the orange line, level-k is the blue line, and cognitive hierarchy is the green line. The Nash equilibrium solution is indicated as the vertical dashed grey line

Two-stage bargaining game. The darker lines indicate the mean result from the 5 \(\times\) 2 cross-validation. The shaded regions indicate ± one standard deviation. The out-of-sample data are shown as the black line. The proposed quantal hierarchy model is the purple line. Quantal response equilibrium is the orange line, level-k is the blue line, and cognitive hierarchy is the green line. The Nash equilibrium solution is indicated as the vertical dashed grey line

Two-stage While we saw similar behaviour between QRE and QH in the ultimatum game, under the two-stage game, the differences between the approaches become more pronounced due to the longer game tree. Under such conditions, the usefulness of discounting future paths (and relaxing mutual consistency) becomes more noticeable. The QH model convincingly outperforms QRE across the experimental results with the small disagreement penalties (i.e., \(D>0.5\)), and still generally outperforms QRE for the larger disagreement penalties (i.e., \(D \le 0.5\)), although the two methods become closer.

With the larger disagreement penalties (i.e. smaller D), the experimental data are closer to the perfectly rational case, as indicated with the peaks corresponding roughly to the rational request in Fig. 9. This distribution around the rational request is precisely the premise QRE is founded on, so QRE achieves adequate performance. However, with the smaller disagreement penalties (larger D, top row of Fig. 9), the distribution is not centred around the rational request, meaning QRE struggles to capture such phenomena. In contrast, the QH approach is robust to this shift due to the relaxation of mutual consistency, and is able to capture the varying distributions regardless of whether they are approximating the best-response case.

5.3 Results summary

The quantal hierarchy method consistently performed well out-of-sample in all games, ranking best overall and placing first or second in every game. The analysis covered two game types, sequential and simultaneous, with reasoning in both represented as an extensive-form game tree with depleting information-processing resources. Although the representation worked well in both game types, it showed more improvement over alternative methods in sequential games. We attribute this to the discount parameter, which captures the heterogeneity of players by allowing different information processing resources at each stage, relaxing mutual consistency; this was crucial in bargaining games.

In simultaneous games, on the other hand, the approach fits a single representative distribution for the entire group, and it can struggle to capture the entire distribution of players when they exhibit widely varying levels of rationality, as in certain versions of the beauty-contest game. This highlights a potential limitation when capturing multimodal distributions with varying levels of rationality, such as a bimodal distribution of beginners and experts. To address this limitation, multiple versions of the model may need to be fitted, such as one for beginners and one for experts: modelling more rational play narrows the distribution around the rational prediction, while modelling less rational play widens it to account for larger errors, as demonstrated in Fig. 6. Despite this potential limitation, the method still achieved the best overall rank.

6 Discussion and conclusions

The assumption of perfect rationality amongst players is violated in numerous experimental settings, particularly in non-repeated games. In this work, we utilised experimental datasets for several games, showing that the equilibrium behaviour is often a poor predictor of the observed actions. The proposed quantal hierarchy model offers a concise alternative representation, relaxing some traditional game-theoretic assumptions underlying rationality. The model is a good fit for experimentally observed behaviour on a range of canonical economic games, outperforming existing bounded rationality approaches on out-of-sample validation.

In the QH model, we represent higher-order reasoning as pseudo-sequential decision-making. At each level, players may reason erroneously, and this error grows the deeper one reasons (i.e., it becomes more difficult to reason about reasoning). The magnitude of the errors is governed by \(\beta\): with \(\beta =0\), players do not perform any reasoning, and with \(\beta \rightarrow \infty\), players reason perfectly. Parameter \(\beta\), therefore, relaxes the best-response assumption of players at each level of reasoning.

Decreasing \(\beta\) at each level of reasoning was shown to work well on a wide variety of games, reinforcing the assumption that players' cognitive abilities decrease with the depth of reasoning. This reduction in player cognition is captured with \(\gamma\), introducing an implicit hierarchy of players and relaxing the mutual consistency assumption. Representing this hierarchy of players as extensive-form game trees allowed for an information-theoretic representation, where lower-level players are assumed to make more significant playing errors (constrained by lower information processing resources). With a single-step decision, this recovers the quantal response equilibrium model. With multi-stage decisions, we recover an approximation of a generalised level-k formulation, where at each step, players are assumed to have higher resources and reasoning ability than the players below them, but may still play erroneously.

Similar to QRE, the resource parameter \(\beta\) is problem dependent and depends on the payoff magnitude (McKelvey et al., 2000). This opens an area of research analysing whether a normalised \(\beta\) can be used to measure problem difficulty, or whether some relationship holds between the experimentally fitted \(\beta\) and the \(\beta\) that corresponds to the Nash solution. For example, can a normalised \(\beta\) provide insights across games, and if so, is the average distance to the Nash solution generally useful across games? Similar questions have been considered for whether payoff perturbations in QRE can be related across different games (Haile et al., 2008), and whether the boundedness parameter can be endogenised (Friedman, 2020).
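The dependence on payoff magnitude follows directly if each response takes the logit (free-energy) form, an assumption of this illustration: writing \(U(a_k)\) schematically for the expected payoff at stage k, multiplying every payoff by a constant \(c > 0\) is indistinguishable from multiplying the resources at every stage by \(c\),

\[
f[a_k] \propto p[a_k]\, \exp\!\big(\beta \gamma^{k}\, c\, U(a_k)\big) = p[a_k]\, \exp\!\big((c\beta)\, \gamma^{k}\, U(a_k)\big).
\]

Hence \(\gamma\) is unaffected by the rescaling, while payoffs expressed in cents rather than dollars (\(c = 100\)) would yield a fitted \(\beta\) that is 100 times smaller for the same behaviour.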

There is a clear relationship between the decision-making components proposed in this work and the decision-making in multi-agent systems, such as agent-based models (ABMs) and multi-agent reinforcement learning (RL) approaches. For example, Wen et al. (2020) outline a novel framework for hierarchical reasoning RL agents, which allows agents to best respond to other, less sophisticated agents based upon level-k type models. Likewise, Łatek et al. (2009) propose a recursion-based bounded rationality approach for ABMs. Replacing the agents in these multi-agent approaches with the informationally constrained agents presented in our work provides a distinct area of future research, where we could examine the resulting dynamics and out-of-equilibrium behaviour from heterogeneous QH agents.

In summary, we proposed an information-theoretic model for capturing higher-order reasoning for boundedly rational players. Bounded rationality is achieved in the model by the relaxation of two central assumptions underlying rationality, namely, mutual consistency between players and best response decisions. Through relaxing these assumptions, we showed how the predictions from the proposed quantal hierarchy model align well with the experimentally observed human behaviour in a variety of canonical economic games.

Data availability

The experimental data analysed during the current study are publicly available from the original studies referenced. Specifically, data for bargaining games are presented in Binmore et al. (2002). Data for the beauty contest games are presented in Bosch-Domenech et al. (2002). Data for the centipede games are presented in McKelvey and Palfrey (1992). Data for the market entrance games are presented in Sundali et al. (1995) and Camerer (2011). All data are subject to the original availability statements from the original authors.

Notes

e.g., when \(\beta \gamma ^{k} < \epsilon\), where \(\epsilon\) is small enough that the payoffs become indistinguishable; here, \(\epsilon =10^{-8}\).

In contrast to level-k, here K indicates the level with the lowest resources.

These are referred to as “discount” rates in Binmore et al. (2002). We have used the term disagreement penalties to avoid confusion with the information processing “discount” parameter \(\gamma\).

References

Alaoui, L., & Penta, A. (2016). Endogenous depth of reasoning. The Review of Economic Studies, 83(4), 1297–1333.

Alaoui, L., & Penta, A. (2022). Cost-benefit analysis in reasoning. Journal of Political Economy, 130(4), 881–925.

Anufriev, M., Duffy, J., & Panchenko, V. (2022). Learning in two-dimensional beauty contest games: Theory and experimental evidence. Journal of Economic Theory, 201, 105417.

Arthur, W. B. (1994). Inductive reasoning and bounded rationality. The American Economic Review, 84(2), 406–411.

Aumann, R. J. (1992). Irrationality in game theory. Economic Analysis of Markets and Games, 214–227.

Ay, N., Bernigau, H., Der, R., & Prokopenko, M. (2012). Information-driven self-organization: The dynamical system approach to autonomous robot behavior. Theory in Biosciences, 131(3), 161–179.

Bach, C. W., & Perea, A. (2014). Utility proportional beliefs. International Journal of Game Theory, 43(4), 881–902.

Bergstra, J., Yamins, D., & Cox, D. (2013). Making a science of model search: Hyperparameter optimization in hundreds of dimensions for vision architectures. In International Conference on Machine Learning, pp. 115–123. PMLR.

Binmore, K. (1987). Modeling rational players: Part I. Economics & Philosophy, 3(2), 179–214.

Binmore, K. (1988). Modeling rational players: Part II. Economics & Philosophy, 4(1), 9–55.

Binmore, K., McCarthy, J., Ponti, G., Samuelson, L., & Shaked, A. (2002). A backward induction experiment. Journal of Economic Theory, 104(1), 48–88.

Bosch-Domenech, A., Montalvo, J. G., Nagel, R., & Satorra, A. (2002). One, two, (three), infinity, ...: Newspaper and lab beauty-contest experiments. American Economic Review, 92(5), 1687–1701.

Botev, Z. I., Grotowski, J. F., & Kroese, D. P. (2010). Kernel density estimation via diffusion. The Annals of Statistics, 38(5), 2916–2957.

Braun, D. A., & Ortega, P. A. (2014). Information-theoretic bounded rationality and \(\varepsilon\)-optimality. Entropy, 16(8), 4662–4676.

Braun, D. A., Ortega, P. A., Theodorou, E., & Schaal, S. (2011). Path integral control and bounded rationality. In 2011 IEEE Symposium on Adaptive Dynamic Programming and Reinforcement Learning (ADPRL), pp. 202–209. IEEE.

Breitmoser, Y. (2012). Strategic reasoning in p-beauty contests. Games and Economic Behavior, 75(2), 555–569.

Camerer, C. F., Ho, T.-H., & Chong, J.-K. (2003). Models of thinking, learning, and teaching in games. American Economic Review, 93(2), 192–195.

Camerer, C. F. (2003). Behavioral game theory: Plausible formal models that predict accurately. Behavioral and Brain Sciences, 26(2), 157–158.

Camerer, C. F. (2010). Behavioural game theory. In Behavioural and experimental economics (pp. 42–50). Springer.

Camerer, C. F. (2011). Behavioral game theory: Experiments in strategic interaction. Princeton University Press.

Camerer, C. F., Ho, T.-H., & Chong, J.-K. (2004). A cognitive hierarchy model of games. The Quarterly Journal of Economics, 119(3), 861–898.

Caplin, A., Dean, M., & Leahy, J. (2019). Rational inattention, optimal consideration sets, and stochastic choice. The Review of Economic Studies, 86(3), 1061–1094.

Challet, D., Marsili, M., & Zhang, Y.-C. (2013). Minority games: Interacting agents in financial markets. Oxford University Press.

Chong, J.-K., Camerer, C. F., & Ho, T.-H. (2005). Cognitive hierarchy: A limited thinking theory in games. In R. Zwick & A. Rapoport (Eds.), Experimental business research (pp. 203–228). Springer US.

Chong, J.-K., Ho, T.-H., & Camerer, C. (2016). A generalized cognitive hierarchy model of games. Games and Economic Behavior, 99, 257–274.

Cournot, A. A. (1838). Recherches sur les principes mathématiques de la théorie des richesses. L. Hachette.

Demšar, J. (2006). Statistical comparisons of classifiers over multiple data sets. The Journal of Machine Learning Research, 7, 1–30.

Dietterich, T. G. (1998). Approximate statistical tests for comparing supervised classification learning algorithms. Neural Computation, 10(7), 1895–1923.

Duffy, J., & Hopkins, E. (2005). Learning, information, and sorting in market entry games: Theory and evidence. Games and Economic Behavior, 51(1), 31–62.

Evans, B. P., & Prokopenko, M. (2021). A maximum entropy model of bounded rational decision-making with prior beliefs and market feedback. Entropy, 23(6), 669.

Friedman, E. (2020). Endogenous quantal response equilibrium. Games and Economic Behavior, 124, 620–643.

Gabaix, X., Laibson, D., Moloche, G., & Weinberg, S. (2006). Costly information acquisition: Experimental analysis of a boundedly rational model. American Economic Review, 96(4), 1043–1068.

Gächter, S. (2004). Behavioral game theory. In Blackwell handbook of judgment and decision making, pp. 485–503.

Georgalos, K. (2020). Comparing behavioral models using data from experimental centipede games. Economic Inquiry, 58(1), 34–48.

Glasner, D. (2022). Hayek, Hicks, Radner and four equilibrium concepts: Perfect foresight, sequential, temporary, and rational expectations. The Review of Austrian Economics, 35(1), 39–61.

Goeree, J. K., & Holt, C. A. (2004). A model of noisy introspection. Games and Economic Behavior, 46(2), 365–382.

Goeree, J. K., & Holt, C. A. (2005). An explanation of anomalous behavior in models of political participation. American Political Science Review, 99(2), 201–213.

Goeree, J. K., Holt, C. A., & Palfrey, T. R. (2005). Regular quantal response equilibrium. Experimental Economics, 8(4), 347–367.

Goeree, J. K., Holt, C. A., & Palfrey, T. R. (2016). Quantal response equilibrium. Princeton University Press.

Goldfarb, A., & Xiao, M. (2011). Who thinks about the competition? Managerial ability and strategic entry in US local telephone markets. American Economic Review, 101(7), 3130–3161.

Gottwald, S., & Braun, D. A. (2019). Bounded rational decision-making from elementary computations that reduce uncertainty. Entropy, 21(4), 375.

Haile, P. A., Hortaçsu, A., & Kosenok, G. (2008). On the empirical content of quantal response equilibrium. American Economic Review, 98(1), 180–200.

Harré, M. S. (2021). Information theory for agents in artificial intelligence, psychology, and economics. Entropy, 23(3), 310.

Harsanyi, J. C. (1967). Games with incomplete information played by “Bayesian” players. Part I. The basic model. Management Science, 14(3), 159–182.

Harsanyi, J. C. (1968). Games with incomplete information played by “Bayesian” players. Part II. Bayesian equilibrium points. Management Science, 14(5), 320–334.

Ho, T.-H., & Su, X. (2013). A dynamic level-k model in sequential games. Management Science, 59(2), 452–469.

Hoppe, H.-H. (1997). On certainty and uncertainty, or: How rational can our expectations be? The Review of Austrian Economics, 10(1), 49–78.

Johnson, E. J., Camerer, C., Sen, S., & Rymon, T. (2002). Detecting failures of backward induction: Monitoring information search in sequential bargaining. Journal of Economic Theory, 104(1), 16–47.

Kawagoe, T., & Takizawa, H. (2012). Level-k analysis of experimental centipede games. Journal of Economic Behavior & Organization, 82(2–3), 548–566.

Ke, S. (2019). Boundedly rational backward induction. Theoretical Economics, 14(1), 103–134.

Keynes, J. M. (1937). The general theory of employment. The Quarterly Journal of Economics, 51(2), 209–223.

Keynes, J. M. (2018). The general theory of employment, interest, and money. Springer.

Knudsen, C. (1993). Rationality and the problem of self-reference in economics. Rationality, Institutions, and Economic Methodology, 2, 133.

Koppl, R., & Rosser, J. B., Jr. (2002). All that I have to say has already crossed your mind. Metroeconomica, 53(4), 339–360.

Koriyama, Y., & Ozkes, A. I. (2021). Inclusive cognitive hierarchy. Journal of Economic Behavior & Organization, 186, 458–480.

Krockow, E. M., Pulford, B. D., & Colman, A. M. (2018). Far but finite horizons promote cooperation in the centipede game. Journal of Economic Psychology, 67, 191–199.

Łatek, M., Axtell, R., & Kaminski, B. (2009). Bounded rationality via recursion. In Proceedings of Eighth International Conference on Autonomous Agents and Multi-Agent Systems (AAMAS 2009), pp. 457–464.

Levin, D., & Zhang, L. (2022). Bridging level-k to Nash equilibrium. Review of Economics and Statistics, 104(6), 1329–1340. https://doi.org/10.1162/rest_a_00990

Lipman, B. L. (1991). How to decide how to decide how to...: Modeling limited rationality. Econometrica: Journal of the Econometric Society, 1105–1125.

Löfgren, L. (1990). On the partiality of self-reference (pp. 47–64). Gordon and Breach Science Publishers.

Mackie, J. (1971). What can we learn from the paradoxes? Part II. In Crítica: Revista Hispanoamericana de Filosofía, pp. 35–54.

Mattsson, L.-G., & Weibull, J. W. (2002). Probabilistic choice and procedurally bounded rationality. Games and Economic Behavior, 41(1), 61–78.

McKelvey, R. D., & Palfrey, T. R. (1992). An experimental study of the centipede game. Econometrica: Journal of the Econometric Society, 803–836.

McKelvey, R. D., & Palfrey, T. R. (1995). Quantal response equilibria for normal form games. Games and Economic Behavior, 10(1), 6–38.

McKelvey, R. D., & Palfrey, T. R. (1998). Quantal response equilibria for extensive form games. Experimental Economics, 1(1), 9–41.

McKelvey, R. D., Palfrey, T. R., & Weber, R. A. (2000). The effects of payoff magnitude and heterogeneity on behavior in 2\(\times\)2 games with unique mixed strategy equilibria. Journal of Economic Behavior & Organization, 42(4), 523–548.

Mertens, J.-F., & Zamir, S. (1985). Formulation of Bayesian analysis for games with incomplete information. International Journal of Game Theory, 14(1), 1–29.

Morgenstern, O. (1928). Wirtschaftsprognose: Eine Untersuchung ihrer Voraussetzungen und Möglichkeiten. Springer.

Morgenstern, O. (1935). Vollkommene Voraussicht und wirtschaftliches Gleichgewicht. Zeitschrift für Nationalökonomie/Journal of Economics, 6, 337–357. https://doi.org/10.1007/BF01311642

Moulin, H. (1986). Game theory for the social sciences. NYU Press.

Nagel, R. (1995). Unraveling in guessing games: An experimental study. The American Economic Review, 85(5), 1313–1326.

Nash, J. (1951). Non-cooperative games. Annals of Mathematics, 286–295.

Ortega, P. A., & Braun, D. A. (2011). Information, utility and bounded rationality. In International Conference on Artificial General Intelligence, pp. 269–274. Springer.

Ortega, P. A., & Braun, D. A. (2013). Thermodynamics as a theory of decision-making with information-processing costs. Proceedings of the Royal Society A: Mathematical, Physical and Engineering Sciences, 469(2153), 20120683.

Ortega, P. A., & Braun, D. A. (2014). Generalized Thompson sampling for sequential decision-making and causal inference. Complex Adaptive Systems Modeling, 2(1), 1–23.

Ortega, P. A., & Stocker, A. A. (2016). Human decision-making under limited time. In D. Lee, M. Sugiyama, U. Luxburg, I. Guyon, & R. Garnett (Eds.), Advances in neural information processing systems, pp. 100–108. Curran Associates, Inc.

Polani, D., Sporns, O., & Lungarella, M. (2007). How information and embodiment shape intelligent information processing. In 50 years of artificial intelligence (pp. 99–111). Berlin: Springer.

Polonio, L., & Coricelli, G. (2019). Testing the level of consistency between choices and beliefs in games using eye-tracking. Games and Economic Behavior, 113, 566–586.

Prokopenko, M., Harré, M., Lizier, J., Boschetti, F., Peppas, P., & Kauffman, S. (2019). Self-referential basis of undecidable dynamics: From the liar paradox and the halting problem to the edge of chaos. Physics of Life Reviews, 31, 134–156.

Rabin, M. O. (1957). Effective computability of winning strategies. Contributions to the Theory of Games, 3(39), 147–157.

Raiffa, H., & Luce, R. D. (1957). Games and decisions: introduction and critical survey. Wiley.

Rapoport, A., Seale, D. A., Erev, I., & Sundali, J. A. (1998). Equilibrium play in large group market entry games. Management Science, 44(1), 119–141.

Rogers, B. W., Palfrey, T. R., & Camerer, C. F. (2009). Heterogeneous quantal response equilibrium and cognitive hierarchies. Journal of Economic Theory, 144(4), 1440–1467.

Scott, D. W. (2015). Multivariate density estimation: Theory, practice, and visualization. Wiley.

Silverman, B. W. (2018). Density estimation for statistics and data analysis. Routledge.

Simon, H. A. (1976). From substantive to procedural rationality. In 25 years of economic theory (pp. 65–86). Springer.

Sims, C. A. (2003). Implications of rational inattention. Journal of Monetary Economics, 50(3), 665–690.

Stahl, D. O. (1990). Entropy control costs and entropic equilibria. International Journal of Game Theory, 19(2), 129–138.

Stahl, D. O. (1993). Evolution of smart players. Games and Economic Behavior, 5(4), 604–617.

Stahl, D. O., & Wilson, P. W. (1995). On players’ models of other players: Theory and experimental evidence. Games and Economic Behavior, 10(1), 218–254.

Sundali, J. A., Rapoport, A., & Seale, D. A. (1995). Coordination in market entry games with symmetric players. Organizational Behavior and Human Decision Processes, 64(2), 203–218.

Tishby, N., Pereira, F. C., & Bialek, W. (1999). The information bottleneck method. In Proc. of the 37th Annual Allerton Conference on Communication, Control and Computing, pp. 368–377.

Tishby, N., & Polani, D. (2011). Information theory of decisions and actions. In Perception-action cycle (pp. 601–636). Berlin: Springer.

Turocy, T. L. (2010). Computing sequential equilibria using agent quantal response equilibria. Economic Theory, 42(1), 255–269.

Webster, T. J. (2013). A note on the ultimatum paradox, bounded rationality, and uncertainty. International Advances in Economic Research, 19(1), 1–10.

Wen, Y., Yang, Y., & Wang, J. (2020). Modelling bounded rationality in multi-agent interactions by generalized recursive reasoning. In C. Bessiere (Ed.), Proceedings of the Twenty-Ninth International Joint Conference on Artificial Intelligence, IJCAI-20, pp. 414–421. International Joint Conferences on Artificial Intelligence Organization.

Wolpert, D. H. (2006). Information theory – the bridge connecting bounded rational game theory and statistical physics. In Complex Engineered Systems (pp. 262–290). Springer.

Wright, J. R., & Leyton-Brown, K. (2019). Level-0 models for predicting human behavior in games. Journal of Artificial Intelligence Research, 64, 357–383.

Funding

Open Access funding enabled and organized by CAUL and its Member Institutions. This work was supported by the Australian Research Council Discovery Project [Grant number DP170102927].

Ethics declarations

Conflict of interest

No competing interests to declare. The authors have no relevant financial or non-financial interests to disclose.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A Model fitting

Each model is fit to the training portion of each split from 5\(\times\)2 cross-validation, using Bayesian hyperparameter optimisation (Bergstra et al., 2013). For a fair comparison, every model is given 1000 evaluations. The exception is level-k: because its parameter is an integer, we use an exhaustive search over \(k \in \{0, 1, \dots, 100\}\) instead of Bayesian optimisation. This range comfortably covers the values of k typically reported in the literature. For each model, the parameter values with the lowest mean squared error between the predictions and the training data are selected. The out-of-sample (testing) portion is never seen by the optimisation process and is used only for evaluation once the parameter optimisation is complete.
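The fitting protocol for level-k can be sketched as follows. This is a minimal, hypothetical illustration, not the code used in this work: the names `five_by_two_splits`, `fit_integer_param`, `evaluate` and `toy_level_k` are introduced here for illustration, the data are synthetic and noise-free, and the toy rule (level-0 guesses 50, level-k guesses \(50p^k\) in a p-beauty contest) is a simplified stand-in rather than any model fitted in the paper. The Bayesian optimisation step used for the continuous-parameter models is not reproduced; the sketch shows only the 5\(\times\)2 splitting, the exhaustive search over \(k \in \{0, \dots, 100\}\) by training MSE, and the rule that the held-out half is used only after k has been chosen.

```python
import random
from statistics import mean


def five_by_two_splits(n, seed=0):
    """Yield (train, test) index lists for 5x2 cross-validation:
    five random 50/50 splits, each half used once for training and once for testing."""
    rng = random.Random(seed)
    indices = list(range(n))
    half = n // 2
    for _ in range(5):
        rng.shuffle(indices)
        first, second = indices[:half], indices[half:]  # slices are copies
        yield first, second
        yield second, first


def mse(preds, targets):
    """Mean squared error between predictions and observed values."""
    return mean((p - t) ** 2 for p, t in zip(preds, targets))


def fit_integer_param(predict, xs, ys, candidates):
    """Exhaustive search: the candidate k with the lowest training MSE."""
    return min(candidates, key=lambda k: mse([predict(x, k) for x in xs], ys))


def evaluate(predict, xs, ys, candidates=range(101), seed=0):
    """Fit k on each training half, then score it on the held-out half.

    The held-out half is touched only after k has been chosen."""
    test_errors, fitted = [], []
    for train, test in five_by_two_splits(len(xs), seed):
        k = fit_integer_param(predict, [xs[i] for i in train],
                              [ys[i] for i in train], candidates)
        fitted.append(k)
        test_errors.append(mse([predict(xs[i], k) for i in test],
                               [ys[i] for i in test]))
    return mean(test_errors), fitted


# Hypothetical toy level-k rule for a p-beauty contest: level-0 guesses 50
# and each higher level best-responds by scaling the previous guess by p.
def toy_level_k(p, k):
    return 50 * p ** k


# Synthetic, noise-free data generated with k = 2 (illustration only).
ps = [2 / 3, 1 / 2, 0.9, 0.8] * 5
guesses = [toy_level_k(p, 2) for p in ps]
test_mse, fitted_ks = evaluate(toy_level_k, ps, guesses)
print(test_mse, fitted_ks)  # prints: 0.0 [2, 2, 2, 2, 2, 2, 2, 2, 2, 2]
```

Because the synthetic data contain no noise, every split recovers k = 2 and the held-out error is zero. With experimental data the fitted k may differ across the ten splits, and in this sketch the mean held-out MSE is the quantity one would compare across models.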