Abstract

Most laboratory experiments studying Tullock contest games find that bids significantly exceed the risk-neutral equilibrium predictions. We test the generalisability of these results by comparing a typical experimental implementation of a contest against the familiar institution of a ticket-based raffle. We find that in the raffle (1) initial bid levels are significantly lower and (2) bids adjust more rapidly towards expected-earnings best responses. We demonstrate the robustness of our results by replicating them across two continents at two university labs with contrasting student profiles.

1 Introduction

“The hero ... is condemned because he doesn’t play the game. [...] But to get a more accurate picture of his character, [...] you must ask yourself in what way (the hero) doesn’t play the game”.

— Albert Camus, in the afterword of The Outsider (Camus 1982, p. 118)

There is an active literature studying contest games using laboratory experiments (Dechenaux et al. 2015). The workhorse game in these experiments is based on the model of Tullock (1980). Under the Tullock contest success function (CSF), the ratio of the chances of victory of any two players is given by the ratio of their bids, raised to a given exponent. Most experimental studies using the Tullock CSF set the exponent to one, and find bids in the contests exceed the levels predicted by the Nash equilibrium for risk-neutral contestants, both initially and after repeated experience with the game.Footnote 1

In this paper, we explore how bid levels and dynamics depend on implementation details of the contest. The most common situation in which a typical person encounters an institution described by the Tullock CSF is in the raffle. Raffles are common as fund-raisers for schools, clubs and charities. In the typical implementation of a raffle, people purchase individually numbered tickets, which are collected in a container of some sort.Footnote 2 A ticket is drawn and the number announced or publicised, which determines the winner of the prize.Footnote 3 We design a treatment in which we describe the game in the instructions, and carry it out in the implementation, using the common terminology associated with the raffle. Participants purchase individually numbered (virtual) tickets, and one ticket number is drawn and quoted to determine the winner. We compare the performance of this contest to a baseline implementation using a protocol we designed to be typical of previous studies. We find that in the raffle, initial bids are significantly lower, and adjustments towards (risk-neutral) best responses are faster, with median group bids approaching the risk-neutral prediction. We replicate our results in two participant pools in two countries, at two universities with student bodies with contrasting profiles.

As a starting point, we updated the list of Tullock contest studies initiated by Sheremeta (2013). The 37 papers are listed in Table 1. Since the value of the contest prize differs across studies, we follow Sheremeta and report a normalised “overbidding rate,” computed as (actual bid − Nash bid)/Nash bid.

We augmented the survey by collecting information on the experimental protocols used for presenting the game.Footnote 4

1. Whether the instructions refer to a ratio of bids mapping into probabilities of winning the prize. In expressing theoretical models, the Tullock CSF is commonly written in ratio form. This custom carries over to the writing of experimental instructions. Experiments which follow this custom, by making explicit mention that the probability of winning is given by the ratio of the player’s own bid to the total bids of all players, are indicated in the “Ratio rule” column in Table 1. A majority of studies do present this ratio, with many, including Fallucchi et al. (2013), Ke et al. (2013), and Lim et al. (2014), explicitly using a displayed mathematical formula similar to (1).Footnote 5

2. What concrete randomisation mechanism, if any, is mentioned in the instructions. Many experiments supplement the mention of a ratio with an example mechanism capable of generating the probability. For example, instructions may state that it is “as if” bids translate into lottery tickets, or other objects such as balls or tokens (Potters et al. 1998; Fonseca 2009; Masiliunas et al. 2014; Godoy et al. 2015), which are then placed together in a container, with one drawn at random to determine the winner. The “Example” column of Table 1 lists the example given to participants in each study.

3. How the realisation of the random outcome is communicated to participants. In representing the randomisation itself, experimenters rarely use a pseudo-physical representation of the randomisation process. Among the few that provide a representation, several do so using a spinning lottery wheel, on which bids are mapped proportionally onto wedges of a circle, which is a ratio-format representation. Among this limited sample, there is no difference in reported bidding relative to Nash compared to the body of the literature as a whole. Herrmann and Orzen (2008) report bids more than double the equilibrium prediction; Morgan et al. (2012) and Ke et al. (2013) around 1.5 times the equilibrium prediction; and Shupp et al. (2013) find bids below the equilibrium prediction.

Based on the survey, the emerging standard of recent years, which we refer to as the conventional approach, is for a Tullock contest experiment to

1. introduce the success function in terms of a probability or ratio;

2. give a mechanism such as a raffle as an example of how the success function works; but

3. not represent the randomisation itself, beyond identifying the winner.

The standardisation is reflective of the maturity of the literature on experimental contests. A design with features parallel to other recent papers is more readily comparable to existing results. However, by not exploring the design space of other implementations, this standardisation leaves the literature unable to distinguish whether common results, such as bids in excess of the risk-neutral Nash prediction, are behaviourally inherent to all Tullock contests, or depend on features of the environment which are not accounted for in standard models.

Understanding how behaviour might be a function of implementation features is particularly relevant for Tullock CSFs because of their wide applicability, which includes not only raffles but rent-seeking, electoral competition, research and development, and sports. In the latter applications, the Tullock CSF is used as a theoretical device to represent an environment in which success in the contest depends significantly both on the bids of the players and on luck, and is attractive for such applications because it is analytically tractable. For example, in the symmetric setting with risk-neutral players and no spillovers, there is a unique Nash equilibrium in which the players play pure strategies (Szidarovszky and Okuguchi 1997; Chowdhury and Sheremeta 2011).

We chose the raffle implementation not only because of its ubiquity in common experience, but also because existing results suggest consistently presenting and implementing the contest as a raffle would maximise the differentiation from the conventional design. Raffle tickets are inherently count-based representations. Differences in processing probabilistic (ratio) versus frequency (count) information have been studied extensively in psychology. For example, Gigerenzer and Hoffrage (1995) proposed that humans are well adapted to manipulating frequency-based information as this is the format in which information arises in nature. There is, therefore, an unresolved expositional tension in the instructions of many experiments, mixing ratio-based formats in expressing probabilities of winning and/or fortune wheels, and count-based formats of as-if raffles.

We present the formal description of the Tullock contest game and our experimental design in Sect. 2. The summary of the data and the results are included in Sect. 3. We conclude in Sect. 4 with further discussion.

2 Experimental design

The Tullock contest game we study is formally an n-player simultaneous-move game. There is one indivisible prize, which each player values at \(v>0\). Each player i has an endowment \(\omega \ge v\), and chooses a bid \(b_i\in [0, \omega ]\). Given a vector of bids \(b=(b_1,\ldots ,b_n)\), the probability player i receives the prize is given by

\[
p_i(b) = \begin{cases} \dfrac{b_i}{\sum _{j=1}^{n} b_j} &amp; \text {if } \sum _{j=1}^{n} b_j > 0, \\ \dfrac{1}{n} &amp; \text {otherwise.} \end{cases} \tag{1}
\]

If players are risk-neutral, they maximise their expected payoff, written as \(\pi _i(b) = vp_i(b) + (\omega - b_i)\). The unique Nash equilibrium is in pure strategies, with \(b_i^{{\text {NE}}}=\min \left\{ \frac{n-1}{n^2}v,\omega \right\} \) for all players i.

In our experiment, we choose \(n=4\) and \(\omega =v=160\). We restrict the bids to be drawn from the discrete set of integers, \(\{0, 1, \ldots , 159, 160\}\). With these parameters, the unique Nash equilibrium has \(b_i^{{\text {NE}}}=30\).
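As a numerical check on this parameterisation, the following minimal sketch computes the risk-neutral equilibrium bid and confirms by enumeration over the integer bid grid that 30 is the unique best response when the other three players each bid 30. The function names are illustrative only, and the equal-split rule applied when all bids are zero is an assumption of the sketch.

```python
from fractions import Fraction


def win_probability(own_bid, other_bids):
    """Tullock CSF with exponent one: own bid divided by total bids."""
    total = own_bid + sum(other_bids)
    if total == 0:
        # Assumption of this sketch: equal chances when nobody bids.
        return Fraction(1, len(other_bids) + 1)
    return Fraction(own_bid, total)


def expected_payoff(own_bid, other_bids, v=160, omega=160):
    """Risk-neutral expected payoff: v * p_i(b) + (omega - b_i)."""
    return v * win_probability(own_bid, other_bids) + (omega - own_bid)


def nash_bid(n, v, omega):
    """Symmetric equilibrium bid min{(n - 1) / n^2 * v, omega}."""
    return min(Fraction(n - 1, n * n) * v, omega)


# Enumerate every permitted integer bid against three rivals bidding 30.
others = [30, 30, 30]
payoffs = {b: expected_payoff(b, others) for b in range(0, 161)}
best = max(payoffs, key=payoffs.get)
print(nash_bid(4, 160, 160), best, payoffs[best])
```

Exact rational arithmetic is used so that the comparison of neighbouring bids (29, 30, 31) is not affected by floating-point rounding.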

Participants played 30 contest periods, with the number of periods announced in the instructions. The groups of \(n=4\) participants were fixed throughout the session. Within a group, members were referred to anonymously by ID numbers 1, 2, 3, and 4; these ID numbers were randomised after each period. All interactions were mediated through computer terminals, using zTree (Fischbacher 2007). A participant’s complete history of their own bids and their earnings in each period was provided throughout the experiment. Formally, therefore, the 4 participants in a group play a repeated game of 30 periods, with a common public history.Footnote 6 By standard arguments, the unique subgame-perfect equilibrium of this supergame interaction is to play \(b_i^{{\text {NE}}}=30\) in all periods irrespective of the history of play.Footnote 7

We contrast two treatments, the conventional treatment and the ticket treatment, in a between-sessions design. The instructions for both treatments introduce the game as “bidding for a reward.”Footnote 8 In the conventional treatment, the instructions explain the relationship between bids and chances of receiving the reward using the mathematical formula first, pointing out that the chances of winning are increasing in one’s own bid, with a subsequent sentence mentioning that bids could be thought of as lottery tickets. Our explanation follows the most common pattern found across the studies surveyed in Table 1. In the ticket treatment, each penny bid purchases an individually numbered lottery ticket, one of which would be selected at random to determine the winner.

The randomisation in each period was presented to participants in line with the explanations in the instructions. In conventional treatment sessions, after bids were made but before realising the outcome of the lottery, participants saw a summary screen (Fig. 1a), detailing the bids of each of the participants in the group. After a pause, this was followed by a screen announcing the identity of the winner. In sessions using the ticket treatment, the raffle was played out by providing the range of identifying numbers of the tickets purchased by each participant (Fig. 1b). This screen was augmented, after a pause, by an indicator showing the number of the selected winning ticket, and the identity of its owner.
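The ticket-treatment feedback can be sketched in code as follows. This is an illustration only, not the zTree program used in the sessions: it assumes tickets are numbered consecutively in order of participant ID, and the function names are hypothetical. Drawing one ticket uniformly at random gives each participant a chance of winning equal to their share of total bids, so the draw implements the same success function as the conventional treatment.

```python
import random


def ticket_ranges(bids):
    """Map participant ID -> (first, last) ticket number purchased.

    Assumes consecutive numbering by ID; participants who bid zero
    hold no tickets and are omitted.
    """
    ranges = {}
    next_ticket = 1
    for pid, bid in enumerate(bids, start=1):
        if bid > 0:
            ranges[pid] = (next_ticket, next_ticket + bid - 1)
            next_ticket += bid
    return ranges


def owner_of(ticket, ranges):
    """Return the ID of the participant holding the given ticket."""
    for pid, (first, last) in ranges.items():
        if first <= ticket <= last:
            return pid
    raise ValueError("ticket number was not sold")


def draw_raffle(bids, rng=random):
    """Draw one ticket uniformly; return (ticket number, winner ID)."""
    total = sum(bids)
    if total == 0:
        # Assumption of this sketch: pick a winner uniformly if no tickets sold.
        return None, rng.randint(1, len(bids))
    ticket = rng.randint(1, total)
    return ticket, owner_of(ticket, ticket_ranges(bids))


example_ranges = ticket_ranges([30, 0, 50, 80])
print(example_ranges)
print(draw_raffle([30, 0, 50, 80], random.Random(1)))
```

A conventional-treatment screen would report only the second element of the returned pair, the winner's ID.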

The ticket treatment differs from the conventional treatment in its consistent use of counts of pseudo-physical objects to express the game, in both instructions and feedback. Moving to this internally consistent expression of the environment admits two channels through which behaviour in the ticket treatment could differ from that in the conventional treatment.

1. Description. While both mention lottery tickets as a metaphor, the conventional treatment instructions discuss the chance of receiving the reward as a proportion, whereas the ticket treatment uses counts of tickets purchased. Effects due to the description of the mechanism would be identifiable in the first-period bids, which are taken when participants have not had any experience with the mechanism or information about the behaviour of others.

2. Experience. The outcome in the ticket treatment is attributed to an identifiable ticket owned by a participant, while the conventional treatment identifies only the winner. However, the number of the winning ticket conveys no additional payoff-relevant information beyond the identity of its owner. An effect due to feedback structure will be identifiable by looking at the evolution of play within each fixed group over the course of the 30 periods of the session.

Our design and the analysis of the data permit us to look for evidence of effects from each channel. These two channels roughly follow the distinction drawn by, e.g. Hertwig et al. (2004), between choices taken based on the description of a risk, versus those taken based on experience.

We conducted a total of 14 experimental sessions. Eight of the sessions took place at the Centre for Behavioural and Experimental Social Science at University of East Anglia in the United Kingdom, using the hRoot recruitment system (Bock et al. 2014), and six at the Vernon Smith Experimental Economics Laboratory at Purdue University in the United States, using ORSEE (Greiner 2015). We refer to the samples as UK and US, respectively. In the UK, there were four sessions of each treatment with 12 participants (3 fixed groups) per session; in the US, there were three sessions of each treatment with 16 participants (4 fixed groups) per session. We, therefore, have data on a total of 48 participants (12 fixed groups) in each treatment at each site.

The units of currency in the experiment were pence. In the UK sessions, these are UK pence. In the US sessions, earnings were converted at an exchange rate, announced prior to the session, of 1.5 US cents per penny. We selected this as being close to the average exchange rate between the currencies in the year prior to the experiment, rounded to 1.5 for simplicity.

Participants received payment for 5 of the 30 periods. The five periods which were paid were selected publicly at random at the end of the experiment, and were the same for all participants in a session.Footnote 9 Sessions lasted about an hour, and average payments were approximately £10 in the UK and $15 in the US.
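The payment rule can be illustrated with a short sketch. It assumes earnings are recorded in pence per period and ignores any show-up fee, which the text does not specify; the function name and the example earnings are hypothetical.

```python
import random


def session_payment(period_earnings, paid_periods, cents_per_penny=None):
    """Convert earnings in the paid periods into a cash payment.

    period_earnings: earnings in pence for periods 1..30, in order.
    paid_periods: the period numbers (1-based) selected for payment.
    cents_per_penny: None for UK sessions (result in pounds),
        or the announced exchange rate for US sessions (result in dollars).
    """
    total_pence = sum(period_earnings[t - 1] for t in paid_periods)
    if cents_per_penny is None:
        return total_pence / 100
    return total_pence * cents_per_penny / 100


# One public draw of 5 distinct periods, shared by everyone in the session.
rng = random.Random(0)
paid_periods = sorted(rng.sample(range(1, 31), 5))

# Hypothetical participant earning 170 pence in every period.
earnings = [170] * 30
print(paid_periods)
print(session_payment(earnings, paid_periods))
print(session_payment(earnings, paid_periods, cents_per_penny=1.5))
```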

3 Results

We begin with an overview of all 5760 bids in our sample. Figure 2 displays dotplots for the bids made in each period, broken out by subject pool and treatment. Overlaid on the dotplots are solid lines indicating the mean bid in the period, and a shaded area which covers the interquartile range of bids. Table 2 provides summary statistics on the individual bids for each treatment and subject pool. Both the figure and table suggest a treatment difference, with bids in the conventional treatment having a higher mean and larger variance. Aggressive bids at or near the maximum of 160 are infrequent in the ticket treatment after the first few periods, but persist in the conventional treatment. Figure 3 summarises the distribution of mean bids by group over time. The aggregate patterns of behaviour are similar in the UK and US.

Result 1

There are no significant differences between the distributions of bids in the UK versus in the US in either treatment.

Support We use the group as the unit of independent observation, and compute, for each group, the average bid over the course of the experiment. The Mann–Whitney–Wilcoxon (MWW) rank-sum test does not reject the null hypothesis of equal distributions of these group means between the UK and US pools (\(p=0.86\), \(r=0.479\) for the conventional and \(p=0.91\), \(r=0.486\) for the ticket treatment).Footnote 10 Similarly, the MWW test does not reject the null hypothesis if the group means are computed based only on periods 1–10, 11–20, or 21–30. \(\square \)
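For readers who wish to reproduce this style of comparison, a minimal standard-library sketch of the rank-sum calculation is given below. It reads the effect size r as the share of cross-sample pairs in which the first sample's value is larger (ties counting one half), so that r = 0.5 indicates no tendency either way; this reading is an assumption, since the footnote defining r is not reproduced here. The p-value uses the normal approximation without a tie or continuity correction, and the group means in the example are invented for illustration.

```python
import math


def mww(x, y):
    """Return (U, r, p) for two independent samples.

    U counts pairs (a, b) with a from x, b from y and a > b; ties add 0.5.
    r = U / (len(x) * len(y)).
    p is a two-sided p-value from the normal approximation (no tie or
    continuity correction), which is rough for small samples.
    """
    n1, n2 = len(x), len(y)
    u = sum(1.0 if a > b else 0.5 if a == b else 0.0 for a in x for b in y)
    r = u / (n1 * n2)
    mean = n1 * n2 / 2
    sd = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (u - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2))
    return u, r, p


# Hypothetical group mean bids for two subject pools (not the paper's data).
pool_a = [38.2, 45.0, 51.3, 60.1, 47.7, 55.4]
pool_b = [40.5, 44.1, 52.8, 58.9, 49.0, 53.6]
print(mww(pool_a, pool_b))
```

An exact test, or a library routine such as one from a statistics package, would be preferable for samples as small as 12 groups per cell.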

This result speaks against a hypothesis that a significant amount of the variability observed in Table 1 is attributable to differences across subject pools. In view of the similarities between the data from our two subject pools, we continue using the combined sample for our subsequent analysis. Our next result treats the full 30-period supergame as a single unit for each group, and compares behaviour to the benchmark of the unique subgame-perfect Nash equilibrium in which the stage game equilibrium is played in each period.

Result 2

Mean bids by group are lower in the ticket treatment.

Support For each group, we compute the mean bid over the course of the experiment. Descriptively, the mean over groups is 51.7 in conventional (standard deviation 14.8) and 40.7 in ticket (standard deviation 9.1). Figure 4 plots the full distribution of these group means. The boxes indicate the locations of the median and upper and lower quartiles of the distributions, all three of which are lower in ticket. Using the MWW rank-sum test, we reject the hypothesis that the distribution in ticket is the same as that in conventional (\(p=0.0036\), \(r=0.255\)). \(\square \)

We note further from Fig. 4 that in each treatment exactly 2 of the 24 groups have mean group bids below the equilibrium prediction. In ticket, group mean bids are less heterogeneous and generally closer to the equilibrium prediction, but ticket does not lead to groups over-correcting and bidding less than the Nash prediction.

The difference between the treatments could be attributable to some difference in how experiential learning takes place because of the feedback mechanism in playing out the raffle, or simply because participants process the explanation of the game differently. We can look for evidence of the latter by considering only the first-period bids.

Result 3

The distributions of first-period bids are different between the treatments. Mean and median bids in the ticket treatment are lower, and closer to the Nash prediction.

Support Figure 5 displays the distribution of first-period bids for all 192 bidders (96 in each treatment), with the boxes indicating the median and interquartile range. Since at the time of the first-period bids participants have had no interaction, we can treat these as independent observations. The mean first-period bid in the conventional treatment is 71.1, versus 56.8 in the ticket treatment. Bids in the conventional treatment exceed the Nash prediction by 41.1 pence, compared to 26.8 pence for the ticket treatment, a decrease of about 35%. Using the MWW rank-sum test, we reject the hypothesis that the distribution under the ticket treatment is the same as that under the conventional treatment (\(p=0.020\), \(r=0.403\)). The lower quartile initial bid for both treatments is 10; the median and upper quartiles are lower in ticket than in conventional. \(\square \)

First-period bids on average are above the equilibrium prediction in both treatments. We, therefore, turn to the dynamics of bidding over the course of the session. Figure 6 displays boxplots of the group average bids period-by-period for each treatment. Average bids decrease over time in both treatments, and are generally closer to the Nash level in later periods. The rate of change of average bids over time is larger in the conventional treatment, especially in earlier periods. This is to be expected if we believe that participants are interested in the earnings consequences of their decisions. In the first period, the level and heterogeneity of bids in the conventional treatment suggest participants are further from empirical best response, in earnings terms, than in the ticket treatment.Footnote 11 The larger movements in bids in the conventional treatment may then be a direct corollary of the differences in initial bids between the treatments. There is simply more scope for participants to improve on their earnings by changing their bids, and participants are presumably more likely to recognise earnings-enhancing adjustments when changes in bids would lead to larger gains in anticipated earnings. To investigate whether the within-group dynamics differ between the two treatments, we develop an analysis of how ex-post foregone payoffs evolve over time within each group.

Consider a group g in session s of treatment \(c\in \{\text {conventional}, \text {ticket}\}\). We construct for this group, for each period t, a measure of disequilibrium as follows. In each period t, each bidder i in the group submitted a bid \(b_{it}\). Given these bids, bid \(b_{it}\) had an expected payoff to i of

\[
\pi _{it} = v\,\frac{b_{it}}{\sum _{j\in g} b_{jt}} + (\omega - b_{it}).
\]

For comparison, we can consider bidder i’s best response to the other bids of his group. Letting \(B_{it}=\sum _{j\in g:j\not = i} b_{jt}\), the best response, if bids were permitted to be continuous, would be given by

\[
{\tilde{b}}^\star _{it} = \max \left\{ 0, \sqrt{v B_{it}} - B_{it}\right\} .
\]

Bids are required to be discrete in our experiment; the quasiconcavity of the expected payoff function ensures that the discretised best response \(b^\star _{it}\in \{\lceil {\tilde{b}}^\star _{it}\rceil , \lfloor {\tilde{b}}^\star _{it}\rfloor \}\). This discretised best response then generates an expected payoff to i of

\[
\pi ^\star _{it} = v\,\frac{b^\star _{it}}{b^\star _{it} + B_{it}} + (\omega - b^\star _{it}).
\]

We then writeFootnote 12

By construction, \(\varepsilon _{csgt}\ge 0\), with \(\varepsilon _{csgt}=0\) only at the Nash equilibrium.Footnote 13
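The construction can be sketched numerically as follows. The per-bidder quantity is the expected payoff at the discretised best response minus the expected payoff at the bid actually made. How these foregone payoffs are aggregated into a single group-level number is not recoverable from the text here, so the sketch takes the mean over the four bidders as an explicit assumption. The handling of the corner case in which all rivals bid zero (best response of one penny, equal split if nobody bids) is likewise an assumption, and all function names are hypothetical.

```python
import math

V = 160      # prize value in pence
OMEGA = 160  # endowment in pence
N = 4        # group size


def expected_payoff(own_bid, others_total):
    """Expected payoff of own_bid against rivals' total bid others_total."""
    total = own_bid + others_total
    # Assumption: equal chances if nobody bids.
    p = own_bid / total if total > 0 else 1 / N
    return V * p + (OMEGA - own_bid)


def best_response(others_total):
    """Integer best response: compare floor and ceiling of sqrt(v*B) - B."""
    if others_total == 0:
        # Assumption: the smallest positive bid wins for sure.
        return 1
    cont = math.sqrt(V * others_total) - others_total
    cont = min(float(OMEGA), max(0.0, cont))
    candidates = sorted({math.floor(cont), math.ceil(cont)})
    return max(candidates, key=lambda b: expected_payoff(b, others_total))


def foregone_payoffs(bids):
    """Per-bidder gap between best-response payoff and realised expected payoff."""
    total = sum(bids)
    gaps = []
    for b in bids:
        rivals = total - b
        gaps.append(expected_payoff(best_response(rivals), rivals)
                    - expected_payoff(b, rivals))
    return gaps


def disequilibrium(bids):
    """Group-level measure; taking the mean over bidders is an assumption."""
    gaps = foregone_payoffs(bids)
    return sum(gaps) / len(gaps)


print(disequilibrium([30, 30, 30, 30]))
print(disequilibrium([160, 0, 0, 0]))
```

At the equilibrium profile every bidder is already best-responding, so the measure is zero; any other profile leaves at least one bidder with a strictly positive gap.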

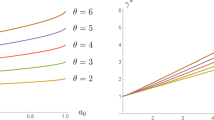

Figure 7 shows the evolution of the disequilibrium measure \(\varepsilon \) over the experiment. Values of \(\varepsilon \) in the first period are generally lower in ticket (mean 41.2, median 33.0) than in conventional (mean 53.0, median 51.2). This follows on from first-period bids being lower in ticket but still generally above Nash (Result 3). While suggestive, these dot plots alone are not enough to establish whether the evolution of play differs between the treatments, because they do not take into account the dynamics of each individual group.

We control for the difference in initial conditions by focusing on the evolution of \(\varepsilon \) period-by-period within-group over the experiment. We plot the average value of \(\varepsilon _{csg(t+1)}\) as a function of \(\varepsilon _{csgt}\) for both treatments in Fig. 8.Footnote 14 Pick a group, session, and period (g, s, t) in the conventional treatment and a group, session, and period \((g',s',t')\) in the ticket treatment, such that \(\varepsilon _{\text {conventional}\;gst}=\varepsilon _{\text {ticket}\;g's't'}\). Figure 8 suggests that in the subsequent period, on average, the group \(g'\) will have a smaller value of \(\varepsilon \) in period \(t'+1\) than the group g in period \(t+1\). This like-for-like comparison in terms of initial conditions allows us to distinguish the evolution of a group’s bidding over time from the fact that groups in the two treatments start in systematically different initial conditions from their period 1 bids.

Result 4

From the same initial conditions, the disequilibrium measure of a group in the ticket treatment will, on average, be lower than that of a group in the conventional treatment over the course of the experiment.

Support To formalise the intuition suggested by Fig. 8, for each treatment c we estimate the dynamic panel model

using the method of Arellano and Bond (1991), with robust standard errors (reported in parentheses below). We obtain for the ticket treatment

and for the conventional treatment

We reject the null hypothesis that \(\alpha _{\text {ticket}}=\alpha _{\text {conventional}}\) (\(p=0.0024\) against the two-sided alternative).Footnote 15 The slopes are not significantly different. The parameters of these lines approximate the plots in Fig. 8.

The estimates of the intercepts are positive. Therefore, groups which have very small values of \(\varepsilon \) in one period tend to see an increase in \(\varepsilon \) in the subsequent period; that is, these groups, on average, move away from mutual best response. The fixed point for \(\varepsilon \) using the point estimates is about 18.7 for the conventional treatment, and 10.6 for the ticket treatment. These fixed points can be interpreted as a projection of the long-run value of the disequilibrium measure in the two treatments, and indicate a persistent difference in behaviour between the treatments; in the long run, groups in the ticket treatment would be closer to mutual best response measured in ex-post payoff terms. \(\square \)
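The fixed-point interpretation can be illustrated under the assumption that the estimated relationship is a first-order linear map, in which next period's disequilibrium measure equals an intercept plus a slope times the current value. With slope strictly between 0 and 1, repeated application converges to intercept / (1 - slope) from any starting point. The coefficient values below are placeholders chosen for illustration; they are not the paper's estimates.

```python
def fixed_point(alpha, beta):
    """Long-run value of eps under eps_next = alpha + beta * eps."""
    if not abs(beta) < 1:
        raise ValueError("the map converges only if |beta| < 1")
    return alpha / (1 - beta)


def iterate(eps0, alpha, beta, periods):
    """Path of eps over the given number of periods, starting from eps0."""
    path = [eps0]
    for _ in range(periods):
        path.append(alpha + beta * path[-1])
    return path


# Placeholder coefficients (hypothetical, not estimated from any data).
alpha, beta = 6.0, 0.5
print(fixed_point(alpha, beta))
print(iterate(50.0, alpha, beta, 5))
print(iterate(0.0, alpha, beta, 5))
```

Starting below the fixed point, the path rises towards it, which matches the observation that groups with very small values of the measure tend to move away from mutual best response in the following period.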

Although variability around the equilibrium is smaller in the ticket treatment, the data from our 30-period experiment do not provide evidence that all variability would be eliminated in the long run. The ticket treatment provides a different way of communicating the random aspect of the outcome, but it does not eliminate the fact that the outcome is random. Evidence from previous studies (e.g. Lim et al. 2014) indicates at least some participants react to the noisy sample provided by the outcome of the contest, even though the draw itself does not provide any information about the distribution of payoffs that is not in principle inferrable from knowing the bids. Chowdhury et al. (2014) compare a contest with a Tullock success function and random outcome with one in which the prize is shared deterministically among the bidders in proportion to their bids, and find tighter convergence to the equilibrium in the deterministic version. The presence of some bidders who react to the noisy sampling created by the randomness in the draw, or who adopt other heuristic approaches to their bidding, would result in persistent deviations from mutual best response.

4 Discussion

We provide a first investigation into bidding in implementations of a Tullock contest which are equivalent under standard theoretical assumptions. This literature commonly takes the risk-neutral Nash equilibrium as a benchmark and defines “overbidding” to be bids in excess of this amount. We find that a design in which the contest is expressed as a raffle lowers the ratio of bids to the Nash benchmark. The overbidding rate in the initial period is 1.36 in the conventional treatment and 0.89 in the ticket treatment; over the course of the experiment the overbidding rates are 0.72 and 0.36, respectively. Although both experimental environments implement a Tullock CSF and are payoff equivalent, the prediction that the environments are behaviourally equivalent is not supported by our data.
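The overbidding rates can be recomputed from the mean bids reported in Sect. 3 using the normalisation (actual bid − Nash bid)/Nash bid with a Nash bid of 30. The sketch below does this; because the inputs are means already rounded to one decimal place, a recomputed rate may differ from the reported one in the last digit.

```python
def overbidding_rate(actual_bid, nash_bid=30):
    """Normalised overbidding: (actual - Nash) / Nash."""
    return (actual_bid - nash_bid) / nash_bid


# Mean bids as reported in the Results section (pence).
reported_means = {
    ("conventional", "first period"): 71.1,
    ("ticket", "first period"): 56.8,
    ("conventional", "all periods"): 51.7,
    ("ticket", "all periods"): 40.7,
}

for (treatment, window), mean_bid in reported_means.items():
    print(treatment, window, round(overbidding_rate(mean_bid), 2))
```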

Our literature survey summarised in Table 1 illustrates that while greater-than-Nash average bids are the norm, there is substantial variation reported across studies. These studies have been conducted over years, with different implementation details as well as in different participant pools. Our design makes a direct comparison of behaviour across participant pools drawn from two universities in two countries. Usefully, these universities have distinct academic profiles and reputations. Purdue University is noted in particular for its strengths in engineering and other quantitative disciplines, whereas University of East Anglia has no engineering programme but is strong in areas such as creative writing. Behaviour of participants from these two participant pools is not substantially different.

Our results provide an assessment of how much of the variation observed in Table 1 may be attributable to these features of experimental implementation. This complements a strand of the contests literature which has to date looked at the effects of endowments (Price and Sheremeta 2015), feedback (Fallucchi et al. 2013), and framing (Chowdhury et al. 2018; Rockenbach et al. 2018).

We observe differences both in initial bids and in the dynamics of adjustment over the course of the experiment. Participants make their initial bids after reading the instructions and completing the comprehension control questions, but prior to having any experience with the mechanism. This implicates the description of the mechanism, especially the use of counts (frequencies) rather than ratios (probabilities).

Gigerenzer (1996) and others suggest presenting uncertainty in terms of counts leads to systematically different judgments relative to ratio-based representations. Additionally, the mere introduction of a mathematical representation such as (1) may affect decisions. Kapeller and Steinerberger (2013) conduct an experiment in which they present the solution to the Monty Hall problem to post-graduate students in economics and social sciences using one of two approaches, a version of the solution written verbally based on logical arguments, and one written in mathematical terms. They document differences in perception of the difficulty of the problem and the perception of the necessity of “mathematical knowledge” to understand and solve the problem. Xue et al. (2017) show that participants drawn from the same participant pool as our UK sample who self-report that they are not “good at math” make earnings-maximising choices substantially less often in a riskless decision task. We noted that many experimental instructions hedge their bets on understanding of the implications of the probability of winning formula by pointing out, as our conventional instructions do, that the chances of winning are increasing in the amount one bids, other things equal. Although the use of mathematical formalisms is valuable to us as quantitative researchers in expressing and analysing models, the presence of a “precise” mathematical display may not improve comprehension or experimental control.

We also find faster adaptation in the direction of approximate mutual best responses when using the raffle implementation. Our raffle differs from most experiments in our survey in that it delivers a specific number as the winning ticket. Under standard assumptions, the number of the winning ticket is irrelevant information. However, it does close the loop by carrying out the raffle in a concrete way, even if the realisation of the outcome is still accomplished by the draw of a pseudo-random number by the computer.

Both of our treatments implement the same Tullock CSF; the strategy sets, distributions over outcomes, and financial incentives are identical. Both are, therefore, representable by the same noncooperative game form; in game theory terms, this makes them both “lottery” or “raffle” contests. In contrast, in common usage a “raffle” is defined not by the CSF or the game form, but is based on how the interaction is played out. Wikipedia’s entry for “raffle”Footnote 16 begins, “A raffle is a gambling competition in which people obtain numbered tickets, each ticket having the chance of winning a prize. At a set time, the winners are drawn at random from a container holding a copy of every number.” Alekseev et al. (2017) distinguish between abstract and context-rich language in experiments. In the case of raffles, this contrast manifests itself, at least in part, as a contrast between the language of economists and game theorists, who often define an environment primarily in terms of the consequences of actions, and non-specialists, who more commonly describe an environment in terms of the way the game is implemented.

When thinking about how to convey a Tullock contest game to participants in the laboratory, we can look to the raffle as one way to explain the CSF. Nevertheless, there are many situations in which we might apply the Tullock contest model which do not have some of the features present in a raffle implementation. Suppose we contemplate applying the Tullock contest model to a sporting contest, in which the strategic choice is an amount of effort expended. In such games, it is generally not possible to identify a given bit of effort as having been the pivotal one which made the difference between victory and defeat. However, a raffle identifies one discrete unit of investment (the winning ticket) as having determined the outcome. This is a relatively straightforward design decision for an experimenter, as a winner can simply be announced without recourse to naming a specific ticket or using another similar device; our results provide one measure of the relevance of this type of feedback for the dynamics of play.

In addition, in sporting contests, the relative odds of winning are not literally given by the ratio of effort investments; in these applications, the Tullock contest game form is a metaphor, a useful and tractable theoretical abstraction. Players in those games are not given a description, either mathematical or verbal, describing the relationship between their effort choices and the chances of winning. Our results suggest that how the relationship is initially explained does affect initial play. This is a tension for which the resolution is less clear. In most cases, experimenters do want participants to know and understand the relationship between investment and probability of winning, so some way to explain the relationship is required. The raffle is probably the only situation people would typically encounter outside the laboratory, in which a method for implementing the Tullock CSF is described to them. However, those people would probably not consider a raffle and a sporting contest to be similar games, because the means by which they play out are very different, even if theorists argue that they present similar strategic incentives.

Many papers, including some of our own previous papers, mix raffle applications and other applications in their exposition and the implementation of their experiment. In view of the diverse set of settings to which contest games are applied, we propose that a more careful consideration of how people process, understand, and react to different descriptions of contest mechanisms would be a useful direction for future work.

Notes

We focus throughout on the case in which the exponent is equal to one.

Tickets are most commonly used for their convenience; occasionally other objects such as balls or tokens may be used. In the annual Great Norwich Duck Race, individually numbered rubber ducks are dumped en masse into the River Wensum; the first to float past the designated finish line determines the winner.

The term “lottery” is often used in experimental instructions, as the meanings of “lottery” and “raffle” are not always distinguished in common usage. Since “lottery” also has a specific technical meaning in economics and decision theory, we use “raffle” in this paper to avoid confusion.

We were unable to obtain instructions for a few of the studies listed. Cells with entries marked — indicate studies we were not able to classify.

Another alternative, giving a full expected payoff table as used by Shogren and Baik (1991), is a rather rare device.

That is, all players in the group share the same history of which bids were submitted in each period. Due to the re-assignment of IDs in each period, the private histories differ, because each participant only knows which of the four bids in each period was the one they submitted, and whether or not they were the winner in each period.

Table 1 in Fallucchi et al. (2013) shows that both fixed-groups and random-groups designs are common in the literature. We choose a fixed groups design to facilitate the focus in our analysis on the dynamics of behaviour as the supergame is played out.

We provide full text of the instructions in Appendix A.

The US participants also received a $5 participation payment on top of their contingent payment, to be consistent with the conventions at Purdue.

The MWW test statistic between samples A and B is equivalent to the probability that a randomly selected value from sample A exceeds a randomly selected value from sample B. We report this effect size probability as r, with the convention that the sample called A is the one mentioned first in the description of the hypothesis in the text. Under the MWW null hypothesis of the same distribution, \(r=0.5\).

This assertion is supported by the disequilibrium measures as plotted in Fig. 7.

If we instead defined \(\varepsilon _{csgt}\) by taking the maximum we would parallel the definition of \(\varepsilon \) from Radner (1980). Our results about the treatment effect on dynamics are unchanged if the maximum is chosen instead.

Conducting the analysis in the payoff space measures behaviour in terms of potential earnings. The marginal earnings consequences of an incremental change in bid depend on both \(b_{it}\) and \(B_{it}\), so a solely bid-based analysis would not adequately capture incentives. In addition, although in general bids are high enough that the best response in most groups in most periods is to bid low, there are many instances in which the best response for a bidder would have been to bid higher than they actually did. A focus on payoffs allows us to track the dynamics without having to account for directional learning in the bid space.

In Fig. 8, we bin observations by rounding \(\varepsilon _{csgt}\), and taking the average over all observations with the same rounded value.

In an augmented model adding a second lag on the right side, the coefficient on the second lag is not significant in either treatment.

As at 1 October 2019. Wikipedia’s mixed record for being a reliable factual source is well analysed. In our case, however, Wikipedia is a useful source to quote precisely because its openly editable approach tends to result in definitions being phrased in common language.
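
The effect size r described in the note on the MWW test statistic can be illustrated with a minimal sketch. It computes the share of cross-sample pairs in which the value from sample A exceeds the value from sample B. Counting ties as one half is an assumption made here, and the function name and the two samples are hypothetical, invented purely for illustration; they are not data from the experiment.

```python
from itertools import product


def probability_of_superiority(sample_a, sample_b):
    """Share of (a, b) pairs with a > b; ties count one half (an assumption)."""
    if not sample_a or not sample_b:
        raise ValueError("both samples must be non-empty")
    wins = 0.0
    for a, b in product(sample_a, sample_b):
        if a > b:
            wins += 1.0
        elif a == b:
            wins += 0.5
    return wins / (len(sample_a) * len(sample_b))


# Hypothetical group-average bids, invented for illustration only.
sample_a = [52.0, 61.5, 48.0, 70.0]
sample_b = [40.0, 45.5, 52.0, 38.0]

r = probability_of_superiority(sample_a, sample_b)
print(r)  # 0.90625
```

Two samples with the same values give r = 0.5, in line with the null hypothesis stated in the note.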

References

Abbink, K., Brandts, J., Herrmann, B., & Orzen, H. (2010). Intergroup conflict and intra-group punishment in an experimental contest game. The American Economic Review, 100, 420–447.

Ahn, T. K., Isaac, R. M., & Salmon, T. C. (2011). Rent seeking in groups. International Journal of Industrial Organization, 29(1), 116–125.

Alekseev, A., Charness, G., & Gneezy, U. (2017). Experimental methods: When and why contextual instructions are important. Journal of Economic Behavior & Organization, 134, 48–59.

Anderson, L. R., & Stafford, S. L. (2003). An experimental analysis of rent seeking under varying competitive conditions. Public Choice, 115(1–2), 199–216.

Arellano, M., & Bond, S. (1991). Some tests of specification for panel data: Monte Carlo evidence and an application to employment equations. Review of Economic Studies, 58, 277–297.

Baik, K. H., Chowdhury, S. M., & Ramalingam, A. (2016). Group size and matching protocol in contests. Working paper.

Baik, K. H., Chowdhury, S. M., & Ramalingam, A. (2019). The effects of conflict budget on the intensity of conflict: An experimental investigation. Experimental Economics (in press).

Bock, O., Baetge, I., & Nicklisch, A. (2014). hRoot: Hamburg registration and organization online tool. European Economic Review, 71, 117–120.

Brookins, P., & Ryvkin, D. (2014). An experimental study of bidding in contests of incomplete information. Experimental Economics, 17(2), 245–261.

Camus, A. (1982). The outsider, translated by Joseph Laredo. London: Hamish Hamilton.

Cason, T. N., Sheremeta, R. M., & Zhang, J. (2012). Communication and efficiency in competitive coordination games. Games and Economic Behavior, 76(1), 26–43.

Chowdhury, S. M., & Sheremeta, R. M. (2011). Multiple equilibria in Tullock contests. Economics Letters, 112(2), 216–219.

Chowdhury, S. M., Sheremeta, R. M., & Turocy, T. L. (2014). Overbidding and overspreading in rent-seeking experiments: Cost structure and prize allocation rules. Games and Economic Behavior, 87, 224–238.

Chowdhury, S. M., Jeon, J. Y., & Ramalingam, A. (2018). Property rights and loss aversion in contests. Economic Inquiry, 56(3), 1492–1511.

Cohen, C., & Shavit, T. (2012). Experimental tests of Tullock’s contest with and without winner refunds. Research in Economics, 66(3), 263–272.

Davis, D. D., & Reilly, R. J. (1998). Do too many cooks always spoil the stew? An experimental analysis of rent-seeking and the role of a strategic buyer. Public Choice, 95(1–2), 89–115.

Dechenaux, E., Kovenock, D., & Sheremeta, R. M. (2015). A survey of experimental research on contests, all-pay auctions and tournaments. Experimental Economics, 18(4), 609–669.

Deck, C., & Jahedi, S. (2015). Time discounting in strategic contests. Journal of Economics & Management Strategy, 24(1), 151–164.

Fallucchi, F., Renner, E., & Sefton, M. (2013). Information feedback and contest structure in rent-seeking games. European Economic Review, 64, 223–240.

Faravelli, M., & Stanca, L. (2012). When less is more: Rationing and rent dissipation in stochastic contests. Games and Economic Behavior, 74(1), 170–183.

Fischbacher, U. (2007). z-Tree: Zurich toolbox for ready-made economic experiments. Experimental Economics, 10, 171–178.

Fonseca, M. A. (2009). An experimental investigation of asymmetric contests. International Journal of Industrial Organization, 27(5), 582–591.

Gigerenzer, G. (1996). The psychology of good judgment. Frequency formats and simple algorithms. Medical Decision Making, 16, 273–280.

Gigerenzer, G., & Hoffrage, U. (1995). How to improve Bayesian reasoning without instruction: Frequency formats. Psychological Review, 102, 684–704.

Godoy, S., Melendez-Jimenez, M. A., & Morales, A. J. (2015). No fight, no loss: Underinvestment in experimental contest games. Economics of Governance, 16(1), 53–72.

Greiner, B. (2015). Subject pool recruitment procedures: Organizing experiments with ORSEE. Journal of the Economic Science Association, 1, 114–125.

Herrmann, B., & Orzen, H. (2008). The appearance of homo rivalis: Social preferences and the nature of rent seeking. CeDEx discussion paper series.

Hertwig, R., Barron, G., Weber, E., & Erev, I. (2004). Decisions from experience and the effect of rare events in risky choice. Psychological Science, 15, 534–539.

Kapeller, J., & Steinerberger, S. (2013). How formalism shapes perception: An experiment on mathematics as a language. International Journal of Pluralism and Economics Education, 4(2), 138–156.

Ke, C., Konrad, K. A., & Morath, F. (2013). Brothers in arms: An experiment on the alliance puzzle. Games and Economic Behavior, 77(1), 61–76.

Kimbrough, E. O., & Sheremeta, R. M. (2013). Side-payments and the costs of conflict. International Journal of Industrial Organization, 31(3), 278–286.

Kimbrough, E. O., Sheremeta, R. M., & Shields, T. W. (2014). When parity promotes peace: Resolving conflict between asymmetric agents. Journal of Economic Behavior & Organization, 99, 96–108.

Kong, X. (2008). Loss aversion and rent-seeking: An experimental study. CeDEx discussion paper series.

Lim, W., Matros, A., & Turocy, T. L. (2014). Bounded rationality and group size in Tullock contests: Experimental evidence. Journal of Economic Behavior & Organization, 99, 155–167.

Mago, S. D., Sheremeta, R. M., & Yates, A. (2013). Best-of-three contest experiments: Strategic versus psychological momentum. International Journal of Industrial Organization, 31(3), 287–296.

Mago, S. D., Samak, A. C., & Sheremeta, R. M. (2016). Facing your opponents: Social identification and information feedback in contests. Journal of Conflict Resolution, 60, 459–481.

Masiliunas, A., Mengel, F., & Reiss, J. P. (2014). Behavioral variation in Tullock contests. Working Paper Series in Economics, Karlsruher Institut für Technologie (KIT).

Millner, E. L., & Pratt, M. D. (1989). An experimental investigation of efficient rent-seeking. Public Choice, 62, 139–151.

Millner, E. L., & Pratt, M. D. (1991). Risk aversion and rent-seeking: An extension and some experimental evidence. Public Choice, 69, 81–92.

Morgan, J., Orzen, H., & Sefton, M. (2012). Endogenous entry in contests. Economic Theory, 51(2), 435–463.

Potters, J., De Vries, C. G., & Van Winden, F. (1998). An experimental examination of rational rent-seeking. European Journal of Political Economy, 14(4), 783–800.

Price, C. R., & Sheremeta, R. M. (2011). Endowment effects in contests. Economics Letters, 111(3), 217–219.

Price, C. R., & Sheremeta, R. M. (2015). Endowment origin, demographic effects, and individual preferences in contests. Journal of Economics & Management Strategy, 24(3), 597–619.

Radner, R. (1980). Collusive behavior in noncooperative epsilon-equilibria of oligopolies with long but finite lives. Journal of Economic Theory, 22, 136–154.

Rockenbach, B., Schneiders, S., & Waligora, M. (2018). Pushing the bad away: Reverse Tullock contests. Journal of the Economic Science Association, 4(1), 73–85.

Schmitt, P., Shupp, R., Swope, K., & Cadigan, J. (2004). Multi-period rent-seeking contests with carryover: Theory and experimental evidence. Economics of Governance, 5(3), 187–211.

Sheremeta, R. M. (2010). Experimental comparison of multi-stage and one-stage contests. Games and Economic Behavior, 68(2), 731–747.

Sheremeta, R. M. (2011). Contest design: An experimental investigation. Economic Inquiry, 49(2), 573–590.

Sheremeta, R. M. (2013). Overbidding and heterogeneous behavior in contest experiments. Journal of Economic Surveys, 27(3), 491–514.

Sheremeta, R. M. (2018). Impulsive behavior in competition: Testing theories of overbidding in rent-seeking contests. Economic Science Institute working paper.

Sheremeta, R. M., & Zhang, J. (2010). Can groups solve the problem of over-bidding in contests? Social Choice and Welfare, 35(2), 175–197.

Shogren, J. F., & Baik, K. H. (1991). Reexamining efficient rent-seeking in laboratory markets. Public Choice, 69(1), 69–79.

Shupp, R., Sheremeta, R. M., Schmidt, D., & Walker, J. (2013). Resource allocation contests: Experimental evidence. Journal of Economic Psychology, 39, 257–267.

Szidarovszky, F., & Okuguchi, K. (1997). On the existence and uniqueness of pure Nash equilibrium in rent-seeking games. Games and Economic Behavior, 18, 135–140.

Tullock, G. (1980). Efficient rent seeking. In J. M. Buchanan, R. D. Tollison, & G. Tullock (Eds.), Toward a theory of the rent-seeking society (pp. 97–112). College Station: Texas A&M University Press.

Xue, L., Sitzia, S., & Turocy, T. L. (2017). Mathematics self-confidence and the “prepayment effect” in riskless choices. Journal of Economic Behavior & Organization, 135, 239–250.

Acknowledgements

This project was supported by the Network for Integrated Behavioural Science (Economic and Social Research Council Grant ES/K002201/1). We thank Catherine Eckel, Michael Kurschilgen, Aidas Masiliunas, Peter Moffatt, and Henrik Orzen, the editor and two anonymous referees, as well as participants at the Contests: Theory and Evidence Conference, Eastern ARC Workshop, Jadavpur University Annual Conference 2016, and the Visions in Methodology Conference for helpful comments. We thank Tim Cason and the Vernon Smith Experimental Economics Laboratory at Purdue University for allowing us to use their facilities. Any errors are the sole responsibility of the authors.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

A Instructions

The session consists of 30 decision-making periods. In the conclusion, any 5 of the 30 periods will be chosen at random, and your earnings from this part of the experiment will be calculated as the sum of your earnings from those 5 selected periods.

You will be randomly and anonymously placed into a group of 4 participants. Within each group, one participant will have ID number 1, one ID number 2, one ID number 3, and one ID number 4. The composition of your group remains the same for all 30 periods but the individual ID numbers within a group are randomly reassigned in every period.

In each period, you may bid for a reward worth 160 pence. In your group, one of the four participants will receive a reward. You begin each period with an endowment of 160 pence. You may bid any whole number of pence from 0 to 160; fractions or decimals may not be used.

If you receive a reward in a period, your earnings will be calculated as:

That is, your payoff in pence = 160 − your bid + 160.

If you do not receive a reward in a period, your earnings will be calculated as:

That is, your payoff in pence = 160 − your bid.
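
The two payoff cases above can be written as a single rule. The following is a minimal sketch; the function and constant names are hypothetical and are not taken from the experimental software.

```python
ENDOWMENT = 160  # pence available at the start of each period
REWARD = 160     # pence received by the participant who gets the reward


def period_payoff(bid, receives_reward):
    """Earnings in pence for one period, following the two cases in the text."""
    if not isinstance(bid, int) or not 0 <= bid <= ENDOWMENT:
        raise ValueError("bid must be a whole number of pence from 0 to 160")
    return ENDOWMENT - bid + (REWARD if receives_reward else 0)


print(period_payoff(45, True))   # 275
print(period_payoff(45, False))  # 115
```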

1.1 Portion for conventional treatment only

The more you bid, the more likely you are to receive the reward. The more the other participants in your group bid, the less likely you are to receive the reward. Specifically, your chance of receiving the reward is given by your bid divided by the sum of all 4 bids in your group:

You can consider the amounts of the bids to be equivalent to numbers of lottery tickets. The computer will draw one ticket from those entered by you and the other participants, and assign the reward to one of the participants through a random draw.

An example Suppose participant 1 bids 80 pence, participant 2 bids 6 pence, participant 3 bids 124 pence, and participant 4 bids 45 pence. Therefore, the computer assigns 80 lottery tickets to participant 1, 6 lottery tickets to participant 2, 124 lottery tickets to participant 3, and 45 lottery tickets to participant 4. Then the computer randomly draws one lottery ticket out of 255 (80 + 6 + 124 + 45). As you can see, participant 3 has the highest chance of receiving the reward: 0.49 = 124/255. Participant 1 has a 0.31 = 80/255 chance, participant 4 has a 0.18 = 45/255 chance and participant 2 has the lowest, 0.02 = 6/255 chance of receiving the reward.
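
The arithmetic in this example can be checked with a short sketch that divides each bid by the group total, as the instructions describe. The function name is hypothetical. The instructions reproduced here do not say what happens when every bid is zero, so the sketch simply raises an error in that case.

```python
def win_chances(bids):
    """Map participant ID -> bid divided by the sum of all bids in the group."""
    total = sum(bids.values())
    if total == 0:
        # Not covered by the instructions reproduced here; flagged, not guessed.
        raise ValueError("chances are undefined when all bids are zero")
    return {pid: bid / total for pid, bid in bids.items()}


bids = {1: 80, 2: 6, 3: 124, 4: 45}
chances = win_chances(bids)
rounded = {pid: round(p, 2) for pid, p in chances.items()}
print(rounded)  # {1: 0.31, 2: 0.02, 3: 0.49, 4: 0.18}
```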

After all participants have made their decisions, all four bids in your group as well as the total of those bids will be shown on your screen.

Interpretation of the table The horizontal rows in the left column of the above table contain the ID numbers of the four participants in every period. The right column lists their corresponding bids. The last row shows the total of the four bids. The summary of the bids, the outcome of the draw and your earnings will be reported at the end of each period.

At the end of 30 periods, the experimenter will approach a random participant and will ask him/her to pick up five balls from a sack containing 30 balls numbered from 1 to 30. The numbers on those five balls will indicate the 5 periods for which you will be paid in Part 2. Your earnings from all the preceding periods will remain visible on your screen throughout.

1.2 Portion for ticket treatment only

The chance that you receive a reward in a period depends on how much you bid, and also how much the other participants in your group bid. At the start of each period, all four participants of each group will decide how much to bid. Once the bids are determined, a computerised lottery will be conducted to determine which participant in the group will receive the reward. In this lottery draw, there are four types of tickets: Type 1, Type 2, Type 3 and Type 4. Each type of ticket corresponds to the participant who will receive the reward if a ticket of that type is drawn. So, if a Type 1 ticket is drawn, then participant 1 will receive the reward; if a Type 2 ticket is drawn, then participant 2 will receive the reward; and so on.

The number of tickets of each type depends on the bids of the corresponding participant:

Number of Type 1 tickets = Bid of participant 1

Number of Type 2 tickets = Bid of participant 2

Number of Type 3 tickets = Bid of participant 3

Number of Type 4 tickets = Bid of participant 4

Each ticket is equally likely to be drawn by the computer. If the ticket type that is drawn has your ID number, then you will receive a reward for that period.

An example Suppose participant 1 bids 80 pence, participant 2 bids 6 pence, participant 3 bids 124 pence, and participant 4 bids 45 pence. Then:

Number of Type 1 tickets = Bid of participant 1 = 80

Number of Type 2 tickets = Bid of participant 2 = 6

Number of Type 3 tickets = Bid of participant 3 = 124

Number of Type 4 tickets = Bid of participant 4 = 45

There will, therefore, be a total of 80 + 6 + 124 + 45 = 255 tickets in the lottery. Each ticket is equally likely to be selected.

In each period, the calculations above will be summarised for you on your screen, using a table like the one in this screenshot:

Interpretation of the table The horizontal rows in the above table contain the ID numbers of the four participants in every period. The vertical columns list the participants’ bids, the corresponding ticket types, total number of each type of ticket (second column from right) and the range of ticket numbers for each type of ticket (last column). Note that the total number of each ticket type is exactly the same as the corresponding participant’s bid. For example, the total number of Type 1 tickets is equal to Participant 1’s bid.

The last column gives the range of ticket numbers for each ticket type. Any ticket number that lies within that range is a ticket of the corresponding type. That is, all the ticket numbers from 81 to 86 are tickets of Type 2, which implies a total of 6 tickets of Type 2, as appears from the ‘Total Tickets’ column. In case a participant bids zero, there will be no ticket that contains his or her ID number. In such a case, the last column will show ‘No tickets’ for that particular ticket type.

The computer then selects one ticket at random. The number and the type of the drawn ticket will appear below the table. The ID number on the ticket type indicates the participant receiving the reward.
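
The ticket numbering and the draw described above can be sketched as follows. This is an illustration only, not the software used in the sessions. It assumes that tickets are numbered consecutively from 1 in order of participant ID, which is consistent with the range 81 to 86 quoted for Type 2; the function names are hypothetical.

```python
import random


def ticket_ranges(bids):
    """Map participant ID -> (first, last) ticket number, or None for a zero bid."""
    ranges = {}
    next_ticket = 1
    for pid in sorted(bids):
        bid = bids[pid]
        if bid == 0:
            ranges[pid] = None  # shown as 'No tickets' in the table
        else:
            ranges[pid] = (next_ticket, next_ticket + bid - 1)
            next_ticket += bid
    return ranges


def draw_winner(bids, rng):
    """Draw one ticket uniformly at random; return (ticket number, winner ID)."""
    total = sum(bids.values())
    if total == 0:
        raise ValueError("no tickets to draw when all bids are zero")
    ticket = rng.randint(1, total)  # inclusive on both ends
    for pid, span in ticket_ranges(bids).items():
        if span is not None and span[0] <= ticket <= span[1]:
            return ticket, pid
    raise AssertionError("every ticket number falls in exactly one range")


bids = {1: 80, 2: 6, 3: 124, 4: 45}
print(ticket_ranges(bids))
# {1: (1, 80), 2: (81, 86), 3: (87, 210), 4: (211, 255)}

ticket, winner = draw_winner(bids, random.Random())
print(f"Ticket {ticket} drawn: Type {winner}")
```

Because each ticket number is equally likely, a participant's chance of holding the drawn ticket equals their bid divided by the total number of tickets.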

At the end of 30 periods, the experimenter will approach a random participant and will ask him/her to pick up five balls from a sack containing 30 balls numbered from 1 to 30. The numbers on those five balls will indicate the 5 periods for which you will be paid in Part 2. Your earnings from all the preceding periods will remain visible on your screen throughout.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Chowdhury, S.M., Mukherjee, A. & Turocy, T.L. That’s the ticket: explicit lottery randomisation and learning in Tullock contests. Theory Decis 88, 405–429 (2020). https://doi.org/10.1007/s11238-019-09731-6

DOI: https://doi.org/10.1007/s11238-019-09731-6