Abstract

The standard principle of expert deference says that conditional on the expert’s credence in a proposition A being x, your credence in A ought to be x. The so-called Adams conditionalization is an attractive update rule in situations where a learning experience prompts a shift in your conditional credences. In this paper, I show that, except in some trivial situations, when your prior conditional credence in A obeys the standard principle of expert deference and then is revised by Adams conditionalization in response to a shift in your conditional credence for a proposition B given A, your posterior conditional credence in A cannot continue to obey that principle, on pain of inconsistency. I explain why this tension between Adams conditionalization and the standard principle of expert deference is puzzling and why the option of rejecting the update rule appears problematic. Finally, I suggest that in order to avoid this inconsistency, we should abandon the standard principle of expert deference and think of an expert’s probabilistic opinion as a constraint on your posterior credence distribution rather than your prior one.

1 Introduction

Chloe is an Ashkenazi Jew and has just been told that women of Ashkenazi Jewish ancestry have a greater chance of carrying a mutated BRCA1 gene than other women. More precisely, she has consulted Bert, a reliable doctor, who said that he believes to degree 0.025 that she has a mutated BRCA1 gene. Since she is certain that Bert has much more information about this subject matter than she does and is better at evaluating such information, she regards Bert as an expert when it comes to the proposition that she has a mutated BRCA1 gene.Footnote 1 That is, she obeys the standard principle of expert deference, and so she sets her credence in that proposition conditional on Bert’s reported credence in that proposition equal to Bert’s reported credence.

Before Chloe gets tested for the mutated gene, she is aware that this gene mutation causes uncontrollable cell growth and is associated with a non-negligible risk of hereditary breast cancer. Let us assume that her initial conditional credence for the proposition that she will develop breast cancer given that she has a mutated BRCA1 gene is 0.3.

Today Chloe is reading a reputable journal in genetics in which the following well-justified statement is given: ‘The odds are 3:1 that patients with a mutated BRCA1 gene will develop breast cancer’. Importantly, she is certain that this is news for her, but not for Bert, who had gathered this information long before she consulted him and took it into account when forming his credence that she has the mutated gene. In light of what she has just read, Chloe changes her conditional credence that she will develop breast cancer given that she has a mutated BRCA1 gene from 0.3 to 0.75 (odds of 3:1 correspond to a probability of 3/4), and also updates her remaining credences in response to this change. Should Chloe still defer to Bert’s credence that she has a mutated BRCA1 gene? In other words, should Chloe’s posterior credence that she has the mutated gene conditional on Bert’s probabilistic opinion about this subject matter still be equal to Bert’s opinion?

In this paper, I show that if in response to the journal’s news Chloe changes her conditional credence that she will develop breast cancer given that she has a mutated BRCA1 gene, and then revises her remaining credences by an update rule called Adams conditionalization, devised by Richard Bradley (2005; 2017, ch. 10.5), then her posterior credence in the proposition that she has the mutated gene conditional on Bert’s credence for that proposition can no longer line up with Bert’s credence, on pain of inconsistency.

This follows from a more general result that I prove in this paper. It says that, except in some trivial situations, the following three claims are jointly inconsistent:

1. Your prior and posterior credence in a proposition A conditional on the expert’s credence in A being x are equal to x.

2. You change your prior conditional credence in a proposition B given the proposition A.

3. You revise your remaining prior credences by Adams conditionalization.

However, as I will argue, this inconsistency is puzzling. To preview: when you revise your credences by Adams conditionalization in response to a change in your conditional credence for B given A, you always leave your credence for the conditioning proposition A unchanged. And since, by doing so, you learn nothing that could either raise or lower your credence in A, it is at least permissible to continue to defer to the expert’s credence for A. Yet, as the main result of this paper shows, this cannot be so, on pain of inconsistency.

In order to avoid this inconsistency, I consider two possible ways out: the first is to reject Adams conditionalization, and the second one is to drop the standard principle of expert deference. I will argue that while dropping Adams conditionalization appears problematic, we should rather give up the standard principle of expert deference and think of the expert’s reported credence as a constraint on an agent’s posterior credence distribution rather than her prior one.

Here is the plan. In Sect. 2, I will spell out in detail the standard principle of expert deference and its recent rival, i.e., the idea of expert deference as a belief revision schema. In Sect. 3, I will show that when your prior conditional credence in A abides by the standard principle of expert deference and then is revised by Adams conditionalization in response to a shift in your conditional credence for B given A, your posterior conditional credence in A can no longer obey this standard principle of expert deference, on pain of inconsistency. There, I will also provide reasons for thinking that this finding is puzzling. In Sect. 4, I will show why the option of rejecting Adams conditionalization in order to avoid this inconsistency appears problematic. In Sect. 5, I will argue that to avoid this inconsistency, we should rather abandon the standard principle of expert deference and think of expert deference as a belief revision schema. Section 6 concludes.

2 Two approaches to expert deference

In this section, I introduce both the orthodox account of expert deference in Bayesian epistemology and a recent alternative account—expert deference as a belief revision schema—developed by Roussos (2021).

Let me first state some background assumptions. Suppose that \(\left( {\mathcal {W}}, {\mathcal {F}}, c \right) \) is your subjective probability space (or your credal state), where \({\mathcal {W}}\) represents the worlds that you consider possible, \({\mathcal {F}}\) is an algebra of subsets of \({\mathcal {W}}\) that can be understood as the propositions you can express, and c is your credence function which assigns numbers from \(\left[ 0, 1 \right] \), called credences, to propositions in \({\mathcal {F}}\). I assume that, at any given time, your credence function is constrained by the norm called probabilism—that is, it is required to be a probability function over \({\mathcal {F}}\).

Since credal states frequently change in response to learning experiences, I assume that a learning experience \({\mathcal {E}}\) may prompt a revision of your credence in any \(A \in {\mathcal {F}}\), c(A), resulting in your new credence in A, \(c_{{\mathcal {E}}}(A)\). Given this dynamic aspect of our credal states, I will call \(c(\cdot )\) your prior and \(c_{{\mathcal {E}}}(\cdot )\) your posterior credence function. Note also that in a sequence of learning experiences \({\mathcal {E}}\) and \(\mathcal {E'}\) that you undergo, \(c_{{\mathcal {E}}}(\cdot )\) is posterior relative to \(c(\cdot )\), yet it is prior relative to \(c_{\mathcal {EE'}}(\cdot )\).

A widespread position in Bayesian epistemology is that there are many things that you ought to defer to in forming your credences. Let’s call them experts. They include, for example, objective chances (Lewis, 1980), your future, better informed credences (van Fraassen, 1984), or the credences of other people who you believe are better informed than you or have skills which make them better at evaluating evidence (Elga, 2007; Joyce, 2007).

According to the orthodox account of expert deference in Bayesian epistemology (Gaifman, 1988; Elga, 2007; Joyce, 2007), the expert’s credence in a proposition A is understood as a constraint on an agent’s conditional prior credence in A in her prior credence distribution over \({\mathcal {F}}\). That is, many believe that in forming your prior credence in A, you should obey the following principle (call it the standard principle of expert deference, or simply SED):

SED: Let \(E_{x}^{A} \in {\mathcal {F}}\) be the proposition that the expert’s credence for A is x, where \(0 \le x \le 1\). Then, for all \(A \in {\mathcal {F}}\) and all x,

$$\begin{aligned} c(A\vert E_{x}^{A}) = x, \end{aligned}$$

provided that \(c\,(E_{x}^{A}) > 0\).

Thus, SED says that your prior credence in a proposition A conditional on the proposition which says that the expert’s credence in A is x ought to be equal to x, providing that you assign a positive credence to \(E_{x}^{A}\).

Note that SED is a synchronic constraint on your prior credence distribution over \({\mathcal {F}}\)—it fixes your prior credence for A conditional on the proposition \(E_{x}^{A}\). Thus, SED should not be read as a way of transforming your prior credence in A into a posterior one in response to the expert’s reported credence for A, i.e., as a diachronic constraint on how you should revise your prior credences.
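To make the synchronic reading concrete, here is a minimal Python sketch that checks SED on a finite credal state. The four-world model, its numbers, and the helper names `prob`, `cond` and `obeys_sed` are assumptions introduced only for illustration; they are not part of the formal framework above.

```python
from fractions import Fraction as F

# Hypothetical model: each world records whether A holds and which credence
# in A the expert has (1/5 or 7/10). All numbers are illustrative.
prior = {
    ("A", F(1, 5)): F(1, 10),
    ("not-A", F(1, 5)): F(4, 10),
    ("A", F(7, 10)): F(7, 20),
    ("not-A", F(7, 10)): F(3, 20),
}

def prob(c, event):
    """Credence in an event, given as a set of worlds."""
    return sum(c[w] for w in event)

def cond(c, x, y):
    """Conditional credence c(x | y); only defined when c(y) > 0."""
    return prob(c, x & y) / prob(c, y)

def obeys_sed(c):
    """Check that c(A | E_x) == x for every expert value x with c(E_x) > 0."""
    a = {w for w in c if w[0] == "A"}
    for x in {w[1] for w in c}:
        e_x = {w for w in c if w[1] == x}
        if prob(c, e_x) > 0 and cond(c, a, e_x) != x:
            return False
    return True

print(obeys_sed(prior))
```

The check inspects a single credence function at a single time, which is all that SED constrains; nothing in it describes how the function should change.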

Why should your prior credences obey SED? It turns out that many instances of SED can be justified by appealing to some fundamental norms of both epistemic and practical rationality.Footnote 2 For example, there are arguments that vindicate the claim that deferring to objective chances in the manner prescribed by SED makes your credences epistemically better (or less inaccurate), in expectation (see, e.g., Pettigrew 2013; 2016, ch. 9). Or, one can show that deferring to your future credences in this way minimizes inaccuracy (see, e.g., Huttegger 2013), and it cannot lead you to expect to make worse practical decisions (see Huttegger 2014).

But although many instances of SED are well justified, SED itself faces considerable conceptual problems. Here, I will mention two.Footnote 3 Firstly, we should naturally expect that real agents might have no attitudes to the proposition concerning the expert’s credence. For example, suppose that Chloe has just been introduced to Sam, a professor of genetics. She regards him as an expert with respect to the proposition that she has a mutated BRCA1 gene. But because she has just met Sam, it is unrealistic to demand that previously she had a credence in his probabilistic opinion concerning this subject matter. Yet SED is a constraint on your prior conditional credence, and thus requires that Chloe had formed an attitude to Sam’s probabilistic opinion even before she met him. Secondly, SED is too restrictive, for it focuses only on one type of expert’s reports—reports of unconditional credence. But experts can report various other types of probabilistic opinion, including conditional credences, likelihood ratios or even expected values of random variables. These types of probabilistic opinion might also be worthy of deference, and hence a plausible theory of expert deference should take them into account.

The above-mentioned problems motivate an alternative approach to expert deference which models an expert’s report as a constraint on your posterior credence distribution, \(c_{{\mathcal {E}}}\left( \cdot \right) \). For example, when the expert reports that her unconditional credence for proposition A is x, you should set your posterior credence for A equal to x. This approach has been recently called expert deference as a belief revision schema (EDasBR, for short). Its crucial feature is that the expert’s report is no longer modelled as a proposition in \({\mathcal {F}}\), and hence you are not required to have priors for this proposition.

Moreover, according to EDasBR, once the expert’s report acts like a certain type of constraint on your posterior credences, you can propagate it over the remainder of your posterior credences over \({\mathcal {F}}\) by using an update rule which is associated with this type of constraint. For example, when Bert reports that his unconditional credence that Chloe has a mutated BRCA1 gene is 0.025, and then Chloe sets her posterior credence for that proposition equal to this value, we should reasonably expect that this would also affect her prior credence that she will develop breast cancer. And, by applying an update rule that is appropriate for this type of expert’s report, we can determine how exactly that credence would be affected.

EDasBR, so understood, is part of the Bayesian theory of updating, which provides various update rules that tell us how we ought to change our prior credences in response to a learning input. A well-entrenched view within this theory is to model various types of learning input as a constraint on the set of possible credence functions \({\mathcal {C}}\) which restricts the candidates for your posterior credence function (see, e.g., van Fraassen 1989, ch. 13; Uffink 1996; Joyce 2010; Dietrich et al. 2016; van Fraassen and Halpern 2017). More formally, a learning input can be understood extensionally as a set of posterior credence functions over \({\mathcal {F}}\), \({\mathcal {C}}_{{\mathcal {E}}}\), which contains those possible posterior credence functions that are consistent with this input, that is, \({\mathcal {C}}_{{\mathcal {E}}}\subseteq {\mathcal {C}}\). Once we specify a learning input, we can use a suitable update rule as a way of revising the rest of your credences in response to that input. Below, I will focus on two types of learning input that are matched with two different update rules. Both will play a crucial role in the coming sections.Footnote 4

When experience rationalizes a shift in your credences over some partition \({\mathcal {A}}\) of \({\mathcal {W}}\), the learning input gleaned from this experience can be understood as the constraint \({\mathcal {C}}_{{\mathcal {E}}} = \left\{ c_{{\mathcal {E}}}: c_{{\mathcal {E}}}\left( A\right) \ge 0 \ \text {for all} \ A \in {\mathcal {A}}\right\} \), for some set of ordered pairs \(\left\{ \left\langle A, c_{{\mathcal {E}}}(A)\right\rangle \right\} \) such that \(A \in {\mathcal {A}}\) and \(\sum _{A \in {\mathcal {A}}}c_{{\mathcal {E}}}\left( A\right) = 1 \). Call this constraint a Jeffrey input and denote it by \({\mathcal {C}}_{{\mathcal {E}}}^{J}\). An update rule that is typically matched with a Jeffrey input, and allows you to propagate it over your remaining credences, is Jeffrey conditionalization (JCondi):

JCondi: Given \({\mathcal {C}}_{{\mathcal {E}}}^{J}\), your posterior credence function, \(c_{{\mathcal {E}}}\), ought to be such that, for every \(X \in {\mathcal {F}}\),

$$\begin{aligned} c_{{\mathcal {E}}}\left( X \right) = \sum _{A \in {\mathcal {A}}} c\left( X\vert A \right) \cdot c_{{\mathcal {E}}}\left( A \right) . \end{aligned}$$

The idea behind JCondi is that your posterior credence in X should be a weighted sum of your prior conditional credences in X given each of the A, with the weights given by your posterior credences in the A. Observe that when you become certain that one of the propositions in \({\mathcal {A}}\) is true and all of the others are false, JCondi reduces to the orthodox Bayesian conditionalization (BCondi). It states that for all \(X \in {\mathcal {F}}\), \(c_{{\mathcal {E}}}(X) = c\left( X\vert A\right) \), provided that \(c(A) > 0\).
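The weighted-sum reading of JCondi can be sketched in a few lines of Python on a finite set of worlds. The function name `jeffrey`, the four-world prior and the chosen posterior weights are hypothetical and serve only as an illustration.

```python
from fractions import Fraction as F

def prob(c, event):
    """Credence in an event, given as a set of worlds."""
    return sum(c[w] for w in event)

def jeffrey(c, new_weights):
    """JCondi: new_weights maps each cell of a partition (a frozenset of
    worlds with positive prior credence) to its posterior credence."""
    post = {}
    for cell, weight in new_weights.items():
        cell_prior = prob(c, cell)
        for w in cell:
            # c_E({w}) = c({w} | cell) * c_E(cell)
            post[w] = c[w] / cell_prior * weight
    return post

# Hypothetical prior over worlds (a, x): does A hold, does X hold.
prior = {(1, 1): F(1, 10), (1, 0): F(3, 10), (0, 1): F(3, 10), (0, 0): F(3, 10)}
A = frozenset(w for w in prior if w[0] == 1)
not_A = frozenset(w for w in prior if w[0] == 0)
X = {w for w in prior if w[1] == 1}

shifted = jeffrey(prior, {A: F(4, 5), not_A: F(1, 5)})  # uncertain shift
certain = jeffrey(prior, {A: F(1), not_A: F(0)})        # A learned for certain
print(prob(shifted, X), prob(certain, X))
```

With these numbers the shifted posterior in X is 3/10, and in the certainty case the posterior in X equals the prior conditional credence c(X | A) = 1/4, which is the BCondi special case.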

Now, according to EDasBR, the expert’s reported unconditional credence for A is a Jeffrey input, and hence constrains a redistribution of your credences over a partition \(\left\{ A, \lnot A \right\} \) in such a way that your posterior credence in A should be the expert’s unconditional credence in A. For example, Bert’s credence in G (where G stands for the proposition that Chloe has a mutated BRCA1 gene) provides a constraint for Chloe’s posterior credences over \(\left\{ G, \lnot G \right\} \) such that \(c_{{\mathcal {E}}}\left( G \right) = 0.025\) and \(c_{{\mathcal {E}}}\left( \lnot G \right) = 0.975\). And in response to this constraint, JCondi allows us to determine the remainder of Chloe’s posterior credences. Hence, we have that for any \(X \in {\mathcal {F}}\):

$$\begin{aligned} c_{{\mathcal {E}}}\left( X \right) = c\left( X\vert G \right) \cdot 0.025 + c\left( X\vert \lnot G \right) \cdot 0.975. \end{aligned}$$

In many cases experience rationalizes a change in your conditional credence for a proposition B given a proposition A. The learning input can then be understood as the constraint \({\mathcal {C}}_{{\mathcal {E}}} = \left\{ c_{{\mathcal {E}}}: c_{{\mathcal {E}}}\left( B\vert A\right) \ge 0 \ \text {for some} \ A \in {\mathcal {A}} \ \text {and for some} \ B \in {\mathcal {B}}\right\} \), where \({\mathcal {A}}\) and \({\mathcal {B}}\) are partitions of \({\mathcal {W}}\). Call this constraint an Adams input and denote it by \({\mathcal {C}}_{{\mathcal {E}}}^{A}\). A plausible update rule that is suitable for this kind of learning input is Adams conditionalization (ACondi):Footnote 5

ACondi: Given \({\mathcal {C}}_{{\mathcal {E}}}^{A}\), your posterior credence function, \(c_{{\mathcal {E}}}\), ought to be such that for all \(X \in {\mathcal {F}}\),

$$\begin{aligned} c_{{\mathcal {E}}}(X) ={}&c\left( X\vert A \wedge B \right) \cdot c_{{\mathcal {E}}}\left( B\vert A \right) \cdot c\left( A \right) \\&+ c\left( X\vert A \wedge \lnot B \right) \cdot c_{{\mathcal {E}}}\left( \lnot B\vert A \right) \cdot c\left( A \right) + c\left( X\vert \lnot A \right) \cdot c\left( \lnot A\right) . \end{aligned}$$

That is, when you revise your credence in X by ACondi upon a change in your conditional credence \(c\left( B\vert A \right) \), you (i) calculate your posterior conditional credences in X given the elements of the partition \(\left\{ \lnot A, A \wedge B, A \wedge \lnot B\right\} \) of \({\mathcal {W}}\) by using your posterior conditional credences \(c_{{\mathcal {E}}}\left( B\vert A \right) \) and \(c_{{\mathcal {E}}}\left( \lnot B\vert A \right) \) given by the learning input and your prior conditional credences for X given the elements of this partition, and (ii) average the conditional posteriors \(c_{{\mathcal {E}}}\left( X\vert A \wedge B \right) \), \(c_{{\mathcal {E}}}\left( X\vert A \wedge \lnot B \right) \), and \(c_{{\mathcal {E}}}\left( X\vert \lnot A \right) \) so calculated with your prior credences for A and \(\lnot A\), respectively.
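As an illustration, ACondi can be sketched in Python by applying the rule to each single world and then summing over the worlds in X. The function name `adams` and the four-world prior are assumptions made for this example; in particular, the prior value c(G) = 1/25 is invented, while c(D | G) = 3/10 and the new value 3/4 are the figures from Chloe’s case.

```python
from fractions import Fraction as F

def prob(c, event):
    """Credence in an event, given as a set of worlds."""
    return sum(c[w] for w in event)

def adams(c, a, b, q):
    """ACondi for the input c_E(B | A) = q, assuming 0 < c(B | A) < 1."""
    old = prob(c, a & b) / prob(c, a)  # prior c(B | A)
    post = {}
    for w, p in c.items():
        if w in a and w in b:
            post[w] = p * q / old              # c({w} | A & B) * q * c(A)
        elif w in a:
            post[w] = p * (1 - q) / (1 - old)  # c({w} | A & not-B) * (1 - q) * c(A)
        else:
            post[w] = p                        # c({w} | not-A) * c(not-A)
    return post

# Hypothetical prior over worlds (g, d): mutated gene, breast cancer.
prior = {(1, 1): F(3, 250), (1, 0): F(7, 250), (0, 1): F(48, 250), (0, 0): F(192, 250)}
G = {w for w in prior if w[0] == 1}
D = {w for w in prior if w[1] == 1}

post = adams(prior, G, D, F(3, 4))
print(prob(post, G), prob(post, G & D) / prob(post, G), prob(post, D))
```

In this sketch the credence in G stays at 1/25, the conditional credence in D given G moves to 3/4, and the unconditional credence in D rises from 51/250 to 111/500.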

Now, suppose that a reliable expert informs Chloe that patients with a mutated BRCA1 gene have a much higher risk of developing breast cancer, around \(75\%\). It seems reasonable to treat this statement as the expert’s reported conditional credence for the proposition that Chloe will develop breast cancer (D) given the proposition G. And according to EDasBR, we can understand this report as an Adams input which constrains Chloe’s posterior credence distribution so that \(c_{{\mathcal {E}}}\left( D\vert G \right) = 0.75\) and \(c_{{\mathcal {E}}}\left( \lnot D\vert G \right) = 0.25\). If so, then ACondi allows Chloe to propagate this constraint over her remaining credences, so that for every \(X \in {\mathcal {F}}\):

$$\begin{aligned} c_{{\mathcal {E}}}(X) = c\left( X\vert G \wedge D \right) \cdot 0.75 \cdot c\left( G \right) + c\left( X\vert G \wedge \lnot D \right) \cdot 0.25 \cdot c\left( G \right) + c\left( X\vert \lnot G \right) \cdot c\left( \lnot G\right) . \end{aligned}$$

Which of the above approaches to expert deference should be preferred? Both have able defenders and many plausible arguments have been proposed in favour of each. My goal is not to decide which one is the correct approach to expert deference. Rather, I will formulate a conflict between SED and ACondi, and will argue that so long as we want to avoid it, we should prefer EDasBR over SED.

3 The problem

In this section, I will present a conflict between SED and ACondi: it turns out that, except in some trivial situations, SED is not preserved under ACondi in response to a certain kind of Adams input. I will also argue that this conflict is puzzling.

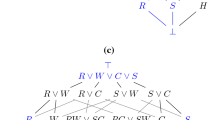

The relevant result is the following (the proof is given in the Appendix):

Proposition 1

Let A and B be propositions in \({\mathcal {F}}\) such that \(0< c\left( B\vert A \right) < 1\). Suppose that \(E_{x}^{A} \in {\mathcal {F}}\) is the proposition that the expert’s credence for A is x, where \(0<x<1\). Then, the following conditions are jointly inconsistent:

- (\(C_{1}\)): \(c_{{\mathcal {E}}}\left( A\vert E_{x}^{A}\right) = x = c\left( A\vert E_{x}^{A}\right) \).

- (\(C_{2}\)): \(c_{{\mathcal {E}}}\left( B\vert A\right) \ne c\left( B\vert A\right) \).

- (\(C_{3}\)): \( c_{{\mathcal {E}}}(X) = c\left( X\vert A \wedge B \right) \cdot c_{{\mathcal {E}}}\left( B\vert A \right) \cdot c\left( A \right) + c\left( X\vert A \wedge \lnot B \right) \cdot c_{{\mathcal {E}}}\left( \lnot B\vert A \right) \cdot c\left( A \right) + c\left( X\vert \lnot A \right) \cdot c\left( \lnot A\right) \), for every \(X \in {\mathcal {F}}\).

More informally, \(C_{1}\) states that your prior and posterior conditional credence in a proposition A obey SED: they both line up with the expert’s credence for A. \(C_{2}\) says that you change your conditional credence in some proposition B given A, or that you are constrained by an Adams input. And \(C_{3}\) states that, in response to this learning input, you update your credences over the entire algebra \({\mathcal {F}}\) by using ACondi. Thus, this result says that if you defer to the expert’s credence for a proposition A in the manner prescribed by SED, and then update your credences by ACondi in response to a change in your conditional credence for B given A, you cannot continue to defer to that expert as prescribed by SED, on pain of inconsistency.

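Observe that the inconsistency stated in Proposition 1 disappears once the expert’s credence for A is trivial, i.e., when the value of x is 0 or 1. In particular, suppose that \(x = 1\). Then, one can show that if \(c\left( A\vert E_{1}^{A}\right) = 1\) and you satisfy \(C_{2}\) and \(C_{3}\), then we have that \(c_{{\mathcal {E}}}\left( A\vert E_{1}^{A}\right) = 1\), and hence you also satisfy \(C_{1}\). So conditions \(C_{1}, C_{2}\) and \(C_{3}\) are jointly compatible.Footnote 6 Formally, since \(c\left( A\vert E_{1}^{A}\right) = 1\), we have \(c\left( E_{1}^{A}\vert \lnot A \right) \cdot c\left( \lnot A\right) = c\left( \lnot A \wedge E_{1}^{A}\right) = 0\). Applying \(C_{3}\) to \(A \wedge E_{1}^{A}\) and to \(E_{1}^{A}\) then gives, provided that \(c_{{\mathcal {E}}}\left( E_{1}^{A}\right) > 0\):

$$\begin{aligned} c_{{\mathcal {E}}}\left( A \wedge E_{1}^{A}\right)&= c\left( E_{1}^{A}\vert A \wedge B \right) \cdot c_{{\mathcal {E}}}\left( B\vert A \right) \cdot c\left( A \right) + c\left( E_{1}^{A}\vert A \wedge \lnot B \right) \cdot c_{{\mathcal {E}}}\left( \lnot B\vert A \right) \cdot c\left( A \right) , \\ c_{{\mathcal {E}}}\left( E_{1}^{A}\right)&= c_{{\mathcal {E}}}\left( A \wedge E_{1}^{A}\right) + c\left( E_{1}^{A}\vert \lnot A \right) \cdot c\left( \lnot A\right) = c_{{\mathcal {E}}}\left( A \wedge E_{1}^{A}\right) . \end{aligned}$$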

Hence, \(c_{{\mathcal {E}}}\left( A\vert E_{1}^{A}\right) = 1 = c\left( A\vert E_{1}^{A}\right) \), as required.

Chloe’s case illustrates Proposition 1. It follows from this result that once her posterior credence distribution is recommended by ACondi in response to the Adams input \({\mathcal {C}}_{{\mathcal {E}}} = \left\{ c_{{\mathcal {E}}}: c_{{\mathcal {E}}}\left( D\vert G\right) = 0.75\right\} \), her posterior conditional credence in G given that Bert’s credence for G equals 0.025 can no longer be equal to 0.025, on pain of inconsistency.Footnote 7 That is, in her situation, the following conditions are jointly inconsistent:

- (\(M_{1}\)): \(c_{{\mathcal {E}}}\left( G\vert E_{0.025}^{G}\right) = 0.025 = c\left( G\vert E_{0.025}^{G}\right) \).

- (\(M_{2}\)): \(c_{{\mathcal {E}}}\left( D\vert G\right) = 0.75 \ne 0.3 = c\left( D\vert G\right) \).

- (\(M_{3}\)): \( c_{{\mathcal {E}}}(X) = c\left( X\vert G \wedge D \right) \cdot c_{{\mathcal {E}}}\left( D\vert G \right) \cdot c\left( G \right) + c\left( X\vert G \wedge \lnot D \right) \cdot c_{{\mathcal {E}}}\left( \lnot D\vert G \right) \cdot c\left( G \right) + c\left( X\vert \lnot G \right) \cdot c\left( \lnot G\right) \), for every \(X \in {\mathcal {F}}\).
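A numerical sketch may help to see how the three conditions pull apart in Chloe’s case. The eight-world prior below is hypothetical: it is built so that c(G | E) = 0.025 and c(D | G) = 0.3, where E stands for the proposition that Bert’s credence for G is 0.025, and so that E and D are probabilistically dependent given G. The remaining numbers (for instance c(G) = 0.04 and c(E) = 0.8) are assumptions, and `adams` is an illustrative helper, not notation from the text.

```python
from fractions import Fraction as F

def prob(c, event):
    """Credence in an event, given as a set of worlds."""
    return sum(c[w] for w in event)

def adams(c, a, b, q):
    """ACondi for the input c_E(B | A) = q, assuming 0 < c(B | A) < 1."""
    old = prob(c, a & b) / prob(c, a)
    post = {}
    for w, p in c.items():
        if w in a and w in b:
            post[w] = p * q / old
        elif w in a:
            post[w] = p * (1 - q) / (1 - old)
        else:
            post[w] = p
    return post

# Hypothetical prior over worlds (g, d, e), in thousandths.
weights = {(1, 1, 1): 4, (1, 1, 0): 8, (1, 0, 1): 16, (1, 0, 0): 12,
           (0, 1, 1): 78, (0, 0, 1): 702, (0, 1, 0): 18, (0, 0, 0): 162}
prior = {w: F(n, 1000) for w, n in weights.items()}
G = {w for w in prior if w[0] == 1}
D = {w for w in prior if w[1] == 1}
E = {w for w in prior if w[2] == 1}

post = adams(prior, G, D, F(3, 4))              # M2 and M3
prior_sed = prob(prior, G & E) / prob(prior, E)  # c(G | E)
post_sed = prob(post, G & E) / prob(post, E)     # c_E(G | E)
print(prior_sed, post_sed, prob(post, G) == prob(prior, G))
```

With this prior, the credence in G is untouched by the update, yet the credence in G conditional on E moves from 1/40 to 11/557 (roughly 0.0197), so \(M_{1}\) fails once \(M_{2}\) and \(M_{3}\) hold.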

Should Chloe continue to defer to Bert’s credence after updating by ACondi? My view is that the inconsistency given in Proposition 1, and hence in Chloe’s case, is puzzling, for we should reasonably expect the conditions \(C_{1}, C_{2}\) and \(C_{3}\) to be jointly satisfied.

To see why, observe first that, as shown in Bradley (2005, p. 352), one changes one’s credences by ACondi in response to a shift in one’s conditional credence for B given A iff for all \(X \in {\mathcal {F}}\):

- (\(AC_1\)): \(c_{{\mathcal {E}}}(A) = c( A)\).

- (\(AC_2\)): \(c_{{\mathcal {E}}}(X\vert \lnot A) = c( X\vert \lnot A)\).

- (\(AC_3\)): \(c_{{\mathcal {E}}}(X\vert A \wedge B ) = c(X\vert A \wedge B)\).

- (\(AC_4\)): \(c_{{\mathcal {E}}}(X\vert A \wedge \lnot B ) = c(X\vert A \wedge \lnot B)\).

That is, updating by ACondi leaves your prior credence in the conditioning proposition A unaltered and keeps your prior conditional credences given the cells of partition \(\left\{ \lnot A, A \wedge B, A \wedge \lnot B\right\} \) fixed.

Now, if you change your credence for B given A (or you satisfy \(C_{2}\)) and update your remaining credences by ACondi (or you satisfy \(C_{3}\)), you learn no information evidentially relevant to how you ought to grade the possible worlds in A, for ACondi requires setting \(c_{{\mathcal {E}}}(A) = c(A)\), and hence \(c_{{\mathcal {E}}}(\lnot A) = c(\lnot A)\). And it is reasonable to think that if you learn nothing that could be evidentially relevant to A, then it is at least permissible to continue to defer to the expert’s credence for A. After all, it is reasonable to think that the expert’s credence for A trumps any information which is evidentially irrelevant for A. Hence, it is at least permissible to satisfy \(C_{1}\). Yet ACondi yields an inferential change over your remaining credences so that your posterior credence for A conditional on the expert’s credence for A being x cannot be equal to x, on pain of inconsistency.

Thus, insofar as Chloe updates by ACondi in response to a change in her credence for D given G, she cannot continue to defer to Bert’s credence for G, even though her learning experience says nothing that could be evidentially relevant to whether G is true. This seems hardly acceptable, for it is at least permissible to continue to defer to Bert’s credence for G under these circumstances.

But it might be claimed that even though the Adams input under consideration, i.e., a shift in your credence for B given A, has no evidential value with respect to A, it can be understood as a shift in your credences for some other propositions that could nevertheless be regarded as supporting evidence for A. And, this argument continues, so long as no such proposition counts as supporting evidence for A, we are entitled to say that it is at least permissible to continue to defer to the expert’s credence for A after undergoing a shift in your credences for these propositions.

To explore this possibility, let us first note that ACondi in response to a shift in your credence for B given A can be represented as a special case of JCondi in response to a shift in your credences over the partition \(\left\{ \lnot A, A \wedge B, A \wedge \lnot B \right\} \). And we can obtain this representation by setting the posterior credences over this partition as follows: (i) \(c_{{\mathcal {E}}}(\lnot A) = c(\lnot A)\) and (ii) \(c_{{\mathcal {E}}}(A \wedge B) = c_{{\mathcal {E}}}\left( B\vert A \right) \cdot c(A)\) and \(c_{{\mathcal {E}}}(A \wedge \lnot B) = c_{{\mathcal {E}}}\left( \lnot B\vert A \right) \cdot c(A)\), which, by the law of total probability, ensures that \(c_{{\mathcal {E}}}(A \wedge B) + c_{{\mathcal {E}}}(A \wedge \lnot B) = c(A)\).Footnote 8
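The representation of ACondi as JCondi on the three-cell partition can be checked on a small example. The sketch below uses hypothetical helper functions `jeffrey` and `adams` and an invented four-world prior; it sets the Jeffrey weights exactly as in (i) and (ii) and compares the two posteriors world by world.

```python
from fractions import Fraction as F

def prob(c, event):
    """Credence in an event, given as a set of worlds."""
    return sum(c[w] for w in event)

def jeffrey(c, new_weights):
    """JCondi on a partition whose cells (frozensets) have positive prior."""
    post = {}
    for cell, weight in new_weights.items():
        cell_prior = prob(c, cell)
        for w in cell:
            post[w] = c[w] / cell_prior * weight
    return post

def adams(c, a, b, q):
    """ACondi for the input c_E(B | A) = q, assuming 0 < c(B | A) < 1."""
    old = prob(c, a & b) / prob(c, a)
    post = {}
    for w, p in c.items():
        if w in a and w in b:
            post[w] = p * q / old
        elif w in a:
            post[w] = p * (1 - q) / (1 - old)
        else:
            post[w] = p
    return post

# Hypothetical prior over worlds (a, b).
prior = {(1, 1): F(3, 250), (1, 0): F(7, 250), (0, 1): F(48, 250), (0, 0): F(192, 250)}
A = frozenset(w for w in prior if w[0] == 1)
B = frozenset(w for w in prior if w[1] == 1)
not_A = frozenset(prior) - A
q = F(3, 4)  # the new value of c_E(B | A)

via_adams = adams(prior, A, B, q)
via_jeffrey = jeffrey(prior, {
    not_A: prob(prior, not_A),        # (i)  c_E(not-A) = c(not-A)
    A & B: q * prob(prior, A),        # (ii) c_E(A & B) = c_E(B | A) * c(A)
    A - B: (1 - q) * prob(prior, A),  #      c_E(A & not-B) = c_E(not-B | A) * c(A)
})
print(via_adams == via_jeffrey)
```

Exact fractions are used so that the comparison is not affected by floating-point rounding.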

With this in mind, let us now ask whether any of the propositions in \(\left\{ \lnot A, A \wedge B, A \wedge \lnot B \right\} \) could count as supporting evidence for A. To answer this question, observe first that none of the propositions in this partition is learned for certain. After all, we use, as our update rule, JCondi in response to a learning experience by which we become more confident in some propositions without becoming entirely certain of any of them. But if this is so, we need to go beyond the orthodox Bayesian account of confirmation which states that:

Confi: Y is supporting evidence for X iff

$$\begin{aligned} c(X\vert Y) > c\left( X\right) . \end{aligned}$$

Clearly, Confi applies to cases in which we learn a proposition Y for certain, and hence we can use BCondi as our update rule. But to answer our question, we need a generalization of Confi that would cover cases to which JCondi is applicable. Here is a plausible generalizationFootnote 9:

GConfi: Y is supporting evidence for X iff (a) \(c(X\vert Y) > c\left( X\right) \) and (b) \(c_{{\mathcal {E}}}(X) > c(X)\) for all posterior credence functions \(c_{{\mathcal {E}}}\) that are recommended by JCondi on a partition \({\mathcal {Y}}\) of which Y is a member and for which \(c_{{\mathcal {E}}}(Y) > c(Y)\).

The idea behind GConfi is that just in case clauses (a) and (b) are satisfied, a proposition Y can be regarded as unambiguously confirmatory for X.

GConfi seems intuitively right. First, if you conditionalized on Y and this made you less confident that X is true or had no effect at all on your credence in X, then it would be suspicious to regard Y as supporting evidence for X. And, second, if you became more confident in some member Y of a partition \({\mathcal {Y}}\) and yet lowered your posterior credence in X or kept it unchanged by using JCondi in response to a shift over \({\mathcal {Y}}\), then Y’s status as supporting evidence for X would also be ambiguous.

Now, if GConfi is correct, none of the propositions in the partition \(\left\{ \lnot A, A \wedge B, A \wedge \lnot B \right\} \) counts as unambiguously confirmatory for A. Clearly, assuming \(c\left( \lnot A \right) > 0 \), we have that \(0 = c(A\vert \lnot A) < c\left( A\right) \) and so clause (a) of GConfi is not satisfied in the case of proposition \(\lnot A\). And when it comes to either proposition \(A \wedge B\) or \(A \wedge \lnot B\), it turns out that clause (b) of GConfi cannot be satisfied. For example, suppose that you have raised your credence for \(A \wedge B\) and so \(c_{{\mathcal {E}}}(A \wedge B) > c(A \wedge B)\). Then, assuming that \(0<c(A)<1\), \(A \wedge B\) satisfies clause (a), for \(1 = c(A\vert A \wedge B) > c(A)\), but it fails to satisfy clause (b), since, by the special case of JCondi, we get:

$$\begin{aligned} c_{{\mathcal {E}}}(A)&= c(A\vert \lnot A) \cdot c_{{\mathcal {E}}}(\lnot A) + c(A\vert A \wedge B) \cdot c_{{\mathcal {E}}}(A \wedge B) + c(A\vert A \wedge \lnot B) \cdot c_{{\mathcal {E}}}(A \wedge \lnot B) \\&= c_{{\mathcal {E}}}(A \wedge B) + c_{{\mathcal {E}}}(A \wedge \lnot B) = c(A). \end{aligned}$$

Thus, the proposition \(A \wedge B\) (and similarly, \(A \wedge \lnot B\)) is not confirmatory for A, according to GConfi. And since none of the propositions in \(\left\{ \lnot A, A \wedge B, A \wedge \lnot B \right\} \) counts as confirmatory for A, it is at least permissible to continue to defer to the expert’s credence for A.

Before closing this section, let us note a related result in the literature. There is a similar conflict between SED and JCondi. That is, as was first noticed by Levi (1967), proved by Harper and Kyburg (1968, pp. 250–251), and recently reproduced by Nissan-Rozen (2013, pp. 843–845), SED is not preserved under JCondi, except in some trivial cases.Footnote 10 More formally:

Proposition 2

(Levi, 1967; Harper and Kyburg, 1968; Nissan-Rozen, 2013) Let A be a proposition in \({\mathcal {F}}\) and let \(\left\{ A, \lnot A\right\} \) be a partition of \({\mathcal {W}}\). Suppose that \(E_{x}^{A} \in {\mathcal {F}}\) is the proposition that the expert’s credence for A is x, where \(0<x<1\). Then, the following conditions are jointly inconsistent:

- (\(D_1\)): \(c_{{\mathcal {E}}}\left( A\vert E_{x}^{A}\right) = x = c\left( A\vert E_{x}^{A}\right) \).

- (\(D_2\)): \(c_{{\mathcal {E}}}(A) \ne c(A)\).

- (\(D_3\)): \(c_{{\mathcal {E}}}\left( X\vert A\right) = c\left( X\vert A\right) \), for every \(X \in {\mathcal {F}}\).

- (\(D_4\)): \(c_{{\mathcal {E}}}\left( X\vert \lnot A\right) = c\left( X\vert \lnot A\right) \), for every \(X \in {\mathcal {F}}\).

Clearly, the structure of Proposition 2 resembles that of Proposition 1. \(D_{1}\) says that your prior and posterior conditional credences in A satisfy SED. \(D_{2}\) says that you undergo a Jeffrey shift over \(\left\{ A, \lnot A\right\} \), so that your prior and posterior credence in A disagree.Footnote 11 Finally, \(D_{3}\) and \(D_{4}\) say that, in response to that Jeffrey shift, updating your credence in any proposition X is rigid, and hence is governed by JCondi.Footnote 12

Given Proposition 2, it might be claimed that Proposition 1 is just a simple consequence of Proposition 2. For, as I have already indicated, ACondi can be represented as a special case of JCondi on the partition \(\left\{ \lnot A, A \wedge B, A \wedge \lnot B \right\} \).

But this view is misguided. Observe that Proposition 2 does not say that SED is not preserved under JCondi on any partition of propositions you like. To show this, suppose that you update your credence distribution by JCondi on the partition consisting of two propositions, \(\left\{ E_{0.5}^{A}, E_{0.8}^{A}\right\} \), assumed to be mutually exclusive and jointly exhaustive. Then, as the following simple reasoning shows, your posterior credence in A conditional on \(E_{0.5}^{A}\) would still satisfy SED:
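Spelled out, and assuming that JCondi on \(\left\{ E_{0.5}^{A}, E_{0.8}^{A}\right\} \) takes its usual form, that your posterior credence in \(E_{0.5}^{A}\) is positive, and that your prior satisfies SED so that \(c\left( A\vert E_{0.5}^{A}\right) = 0.5\), the reasoning can be sketched as follows:

\[ c_{{\mathcal {E}}}\left( A\vert E_{0.5}^{A}\right) = \frac{c_{{\mathcal {E}}}\left( A \wedge E_{0.5}^{A}\right) }{c_{{\mathcal {E}}}\left( E_{0.5}^{A}\right) } = \frac{c\left( A\vert E_{0.5}^{A}\right) \cdot c_{{\mathcal {E}}}\left( E_{0.5}^{A}\right) }{c_{{\mathcal {E}}}\left( E_{0.5}^{A}\right) } = c\left( A\vert E_{0.5}^{A}\right) = 0.5 \]

The second equality uses the fact that \(A \wedge E_{0.5}^{A}\) is incompatible with \(E_{0.8}^{A}\), so that only the \(E_{0.5}^{A}\) cell contributes to the Jeffrey sum.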

So, what cases of JCondi does Proposition 2 concern? It is easy to observe that it concerns JCondi on those partitions that contain a proposition A for which the expert’s credence is known and constrains your conditional credences \(c\left( A\vert E_{x}^{A}\right) \) and \(c_{{\mathcal {E}}}\left( A\vert E_{x}^{A}\right) \). But when we represent ACondi as a special case of JCondi, then Proposition 1 concerns JCondi on the partition that, instead of A, contains the more fine-grained propositions \(A \wedge B\) and \(A \wedge \lnot B\) for which the expert’s credences are not known, since your conditional credences \(c\left( A\vert E_{x}^{A}\right) \) and \(c_{{\mathcal {E}}}\left( A\vert E_{x}^{A}\right) \) are still constrained by the expert’s credence for A. Hence, even if ACondi can be represented as a special case of JCondi on the partition \(\left\{ \lnot A, A \wedge B, A \wedge \lnot B \right\} \), it still remains to be checked whether JCondi on this partition conflicts with the condition that \(c\left( A\vert E_{x}^{A}\right) = x = c_{{\mathcal {E}}}\left( A\vert E_{x}^{A}\right) \). And Proposition 1 does exactly this.
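The conflict can also be illustrated numerically. The following Python sketch is only an illustration under stated assumptions: the worlds are triples over A, B and \(E_{x}^{A}\), and the values of `X`, `a`, `p`, `u`, `v`, `w` and `q` are hypothetical numbers chosen so that the prior satisfies SED with x = 0.5. The function `adams` implements ACondi as a rescaling of the cells \(A \wedge B\) and \(A \wedge \lnot B\) that leaves the \(\lnot A\) cell untouched.

```python
from itertools import product

X = 0.5  # hypothetical expert credence for A


def build_prior(a=0.5, p=0.5, u=0.8, v=0.4, w=0.6):
    """Joint prior over worlds (A, B, E); all parameter values are hypothetical.

    a = c(A), p = c(B|A) = c(B|not-A), u = c(E|A&B), v = c(E|A&not-B), w = c(E|not-A).
    """
    c = {}
    for in_a, in_b, in_e in product([True, False], repeat=3):
        weight = a if in_a else 1 - a
        weight *= p if in_b else 1 - p
        likelihood = (u if in_b else v) if in_a else w
        weight *= likelihood if in_e else 1 - likelihood
        c[(in_a, in_b, in_e)] = weight
    return c


def prob(c, event):
    return sum(p for world, p in c.items() if event(world))


def cond(c, event, given):
    return prob(c, lambda w: event(w) and given(w)) / prob(c, given)


def is_a(w):
    return w[0]


def is_b(w):
    return w[1]


def is_e(w):
    return w[2]


def adams(c, q):
    """ACondi: move c(B|A) to q, keeping c(A) and all credences given the cells fixed."""
    p = cond(c, is_b, is_a)
    out = {}
    for world, weight in c.items():
        if world[0]:  # only worlds inside A are rescaled
            weight *= q / p if world[1] else (1 - q) / (1 - p)
        out[world] = weight
    return out


prior = build_prior()
posterior = adams(prior, q=0.9)

print("prior     c(A|E) =", cond(prior, is_a, is_e))
print("posterior c(A)   =", prob(posterior, is_a))
print("posterior c(A|E) =", cond(posterior, is_a, is_e))
```

With these hypothetical numbers the prior satisfies SED and the update leaves the credence in A untouched, yet the posterior credence in A given \(E_{x}^{A}\) moves away from x. In this toy model the deviation vanishes when `u` and `v` are set equal, which is one way in which a case can be trivial.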

Having stated a conflict between SED and ACondi and having explained its puzzling nature, below I consider two possible escape routes from our problem.

4 Rejecting ACondi

It is reasonable to think that whenever a learning experience rationalizes a shift in one’s prior conditional credence \(c\left( B\vert A\right) \) in a way ensuring that conditions (\(AC_{1}\))–(\(AC_{4}\)) are satisfied, ACondi steps in as a plausible update rule. But, of course, one might reject ACondi and follow the dictates of some alternative update rule in learning situations like this, hoping that this would avoid the inconsistency with SED. Below, I give two reasons why this strategy appears problematic, whether or not one could capitalize on it to avoid the inconsistency.

Firstly, if we understand ACondi in response to a change in your credence for B given A as a special case of JCondi on the partition \(\left\{ \lnot A, A \wedge B, A \wedge \lnot B \right\} \), then failure to follow this rule will lead you into diachronic practical irrationality. That is, when you learn a change in your credences over that partition, and you revise your credences in any way other than the way recommended by JCondi, then a clever bookie could use a strategy which exposes you to the risk of losing money with no hope of winning any money.Footnote 13 Hence, if we replace ACondi with some other update rule in cases where we learn a change in the conditional credence for B given A, we will be diachronically Dutch book-able.

Secondly, ACondi has recently received additional support in Dietrich et al. (2016) who have proved that, for learning experiences that prompt a shift in one’s conditional credences, this is the unique update rule that satisfies two plausible axioms that any update rule may be expected to satisfy: responsiveness and conservativeness. While the former axiom requires that posterior credences be consistent with the learnt input, the latter ensures that the update rule changes only what is required by the learnt input and leaves unaltered those parts of your credal state that are not affected by that input.

More specifically, ACondi is responsive just in case it respects the constraint given by some Adams input. And it is conservative in response to a change in one’s conditional credence for B given A just in case it affects one’s credences over the partition \(\left\{ \lnot A, A \wedge B, A \wedge \lnot B \right\} \), but leaves unaltered all conditional credences given the elements of that partition, and keeps one’s credence for A fixed.

Thus, if we dropped ACondi and used some different update rule in learning situations like Chloe’s, we would have to violate either responsiveness or conservativeness, or both. But unless a learning experience rationalizes rejecting either the former or the latter, this move seems unjustified.

Now, there is no reason to think that Chloe’s learning experience justifies violations of these two axioms. If Chloe violated responsiveness, she would change her conditional credence for D given G to some probability value other than 0.75. But this would be unjustified. After all, the learning input says precisely that this posterior conditional credence should be 0.75. And if she violated conservativeness, then, by using an update rule other than ACondi, she would change her conditional credence for some proposition in her algebra given an element of \(\left\{ \lnot G, G \wedge D, G \wedge \lnot D \right\} \), or alter her credence in G. For example, she might be tempted to change her credence that she will lose her job given that she has the mutated gene and will develop breast cancer. But her learning experience is silent on this credence: it justifies only a change in her credence for D given G, and hence, by probabilism, a change in her credence for \(\lnot D\) given G. All changes in her conditional credences given the elements of the partition \(\left\{ \lnot G, G \wedge D, G \wedge \lnot D \right\} \) are unjustified by that learning experience.

5 Dropping SED

A different strategy is to replace SED by some alternative account of expert deference. My suggestion is that to avoid the inconsistency given in Proposition 1, a promising move is to replace SED by EDasBR.

To make this point more concrete, let us get back to Chloe’s case. Suppose that instead of taking Bert’s credence for G as a constraint on her prior conditional credence for G, she takes it as a constraint on her posterior credence for G, so that \(c_{{\mathcal {E}}}\left( G \right) = 0.025 \), in accordance with EDasBR. Recall that by employing this approach to expert deference, we are able to propagate this constraint through the remainder of her credences over \({\mathcal {F}}\). Thus, assuming that Chloe is mostly interested in setting her new credence for the proposition that she will develop breast cancer, she can use JCondi for this purpose. In doing so, we also stipulate that her prior conditional credence that she will develop breast cancer given that she does not have a mutated BRCA1 gene is relatively low, around 0.1. Hence:
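With the values stipulated in the text, and assuming that JCondi is applied on the partition \(\left\{ G, \lnot G\right\} \), the calculation (presumably the one later cited as equation (6)) can be written out as:

\[ c_{{\mathcal {E}}}\left( D \right) = c\left( D\vert G \right) \cdot c_{{\mathcal {E}}}\left( G \right) + c\left( D\vert \lnot G \right) \cdot c_{{\mathcal {E}}}\left( \lnot G \right) = 0.3 \cdot 0.025 + 0.1 \cdot 0.975 = 0.105 \]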

Observe also that, as intuitively expected, using JCondi would have no effect on her prior conditional credence that she will develop breast cancer given that she has the mutated gene, which initially was equal to 0.3. This is so because:
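A sketch of the reasoning, again assuming JCondi on \(\left\{ G, \lnot G\right\} \) (and presumably corresponding to what is later cited as equation (7)):

\[ c_{{\mathcal {E}}}\left( D\vert G \right) = \frac{c_{{\mathcal {E}}}\left( D \wedge G \right) }{c_{{\mathcal {E}}}\left( G \right) } = \frac{c\left( D\vert G \right) \cdot c_{{\mathcal {E}}}\left( G \right) }{c_{{\mathcal {E}}}\left( G \right) } = c\left( D\vert G \right) = 0.3 \]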

And similarly, updating by JCondi would not budge \(c\left( D\vert \lnot G \right) \).

Now, when Chloe reads the statement ‘The odds are 3:1 that patients with a BRCA1 gene mutation will develop a breast cancer’, she undergoes a new learning experience \({\mathcal {E}}'\) and changes her conditional credence from \(c_{{\mathcal {E}}}(D\vert G) = 0.3\) to \(c_{\mathcal {EE'}}(D\vert G) = 0.75\). Then, if she uses ACondi in response to this learning input, she raises slightly her posterior credence for D, since:
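Assuming the simple form of ACondi in which only the credence for D given G shifts, while \(c_{{\mathcal {E}}}\left( G \right) \) and \(c_{{\mathcal {E}}}\left( D\vert \lnot G \right) \) stay fixed, the calculation can be sketched as:

\[ c_{\mathcal {EE'}}\left( D \right) = c_{\mathcal {EE'}}\left( D\vert G \right) \cdot c_{{\mathcal {E}}}\left( G \right) + c_{{\mathcal {E}}}\left( D\vert \lnot G \right) \cdot c_{{\mathcal {E}}}\left( \lnot G \right) = 0.75 \cdot 0.025 + 0.1 \cdot 0.975 = 0.11625 \approx 0.12 \]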

This seems intuitively correct, for given that the probability that she has a mutated BRCA1 gene is quite low, \(c_{{\mathcal {E}}}\left( G \right) = 0.025\), her posterior credence that she will develop breast cancer, \(c_{\mathcal {EE'}}(D) \approx 0.12\), should not depart much from her prior conditional credence that she will develop breast cancer given that she does not have the mutated gene, \(c_{{\mathcal {E}}}\left( D\vert \lnot G \right) \approx 0.1\).

But, most importantly, ACondi in response to a change in Chloe’s credence for D given G does not change her prior credence for G. More precisely:
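Under the same assumptions about ACondi, the point can be reconstructed as follows:

\[ c_{\mathcal {EE'}}\left( G \right) = c_{\mathcal {EE'}}\left( G \wedge D \right) + c_{\mathcal {EE'}}\left( G \wedge \lnot D \right) = \left[ c_{\mathcal {EE'}}\left( D\vert G \right) + c_{\mathcal {EE'}}\left( \lnot D\vert G \right) \right] \cdot c_{{\mathcal {E}}}\left( G \right) = \left( 0.75 + 0.25 \right) \cdot 0.025 = 0.025 \]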

Hence, when we employ EDasBR as a model of expert deference, we can show in a consistent way that, after reading the journal’s news, Chloe will continue to defer to Bert’s credence that she has a mutated BRCA1 gene. This is reflected in the fact that Chloe’s posterior credence for G, \(c_{\mathcal {EE'}}(G)\), retains the constraint imposed by Bert’s probabilistic opinion on her prior credence for G, \(c_{{\mathcal {E}}}(G)\), which is also a posterior credence relative to c(G).
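The two-step update in Chloe’s case can be checked with a short Python sketch. It is an illustration only: the algebra is restricted to worlds over G and D, the function names are introduced here, and the value `PRIOR_G` is a hypothetical prior credence for G that the text does not specify (the results do not depend on it, as long as it lies strictly between 0 and 1).

```python
# Worlds are pairs (G, D): G = mutated BRCA1 gene, D = develops breast cancer.
PRIOR_G = 0.01  # hypothetical prior credence in G; not given in the text

# Prior built from c(D|G) = 0.3 and c(D|not-G) = 0.1, as stipulated in the text.
prior = {
    (True, True): PRIOR_G * 0.3,
    (True, False): PRIOR_G * 0.7,
    (False, True): (1 - PRIOR_G) * 0.1,
    (False, False): (1 - PRIOR_G) * 0.9,
}


def prob(c, event):
    return sum(p for world, p in c.items() if event(world))


def cond(c, event, given):
    return prob(c, lambda w: event(w) and given(w)) / prob(c, given)


def is_g(w):
    return w[0]


def is_d(w):
    return w[1]


def jeffrey_on_g(c, new_g):
    """JCondi on {G, not-G}: rescale each cell so that G receives credence new_g."""
    old_g = prob(c, is_g)
    return {
        w: p * (new_g / old_g if w[0] else (1 - new_g) / (1 - old_g))
        for w, p in c.items()
    }


def adams_d_given_g(c, new_d_given_g):
    """ACondi: set c(D|G) to the new value; c(G) and the not-G worlds stay fixed."""
    g_mass = prob(c, is_g)
    out = dict(c)
    out[(True, True)] = new_d_given_g * g_mass
    out[(True, False)] = (1 - new_d_given_g) * g_mass
    return out


after_bert = jeffrey_on_g(prior, 0.025)             # Bert's report on G
after_journal = adams_d_given_g(after_bert, 0.75)   # the journal's 3:1 odds

print("c_E(D)     =", prob(after_bert, is_d))
print("c_E(D|G)   =", cond(after_bert, is_d, is_g))
print("c_EE'(D)   =", prob(after_journal, is_d))
print("c_EE'(G)   =", prob(after_journal, is_g))
```

Up to floating-point rounding, the printed values should agree with those reported in the text: 0.105, 0.3, roughly 0.12, and 0.025.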

At this point, I would like to consider one important objection to this treatment of the problem stated in Proposition 1.Footnote 14 One might worry that the current proposal tackles a different problem than the one given in Proposition 1. Here’s why. It is often claimed that we should understand SED as telling us how the agent is disposed to respond to expert reports they may learn, rather than how they actually update their credences when they learn such reports.Footnote 15 If so, then Proposition 1 in fact says that if you are disposed to respond to the expert report on proposition A in the manner prescribed by SED, and then you actually update your credences by ACondi in response to a change in your conditional credence for B given A, you cannot continue to be disposed to respond to that expert as prescribed by SED, on pain of inconsistency. And again this seems puzzling, for when you actually update by ACondi, you learn no information evidentially relevant to A, and so it should be at least permissible to still be disposed to adopt the expert’s credence for A after this update. Thus, under this interpretation, the tension between SED and ACondi stands. But EDasBR does not concern how you are disposed to respond when you learn expert reports, but rather it says how you actually update your credences when you learn such reports. So EDasBR cannot count as a resolution to the inconsistency problem stated in Proposition 1, for the latter concerns your disposition to respond to the expert testimony rather than your actual update in the light of such testimony.

One plausible way to avoid this concern is to observe that we can also understand EDasBR as modelling your learning dispositions—i.e., your dispositions to update your credences in the light of expert testimony—rather than your actual updates. EDasBR models expert deference as a belief revision process: the expert report is modelled as a constraint on your posterior credences and is accompanied with a suitable update rule which propagates this constraint through your credal state. It is natural to think that the constraint or learning input may be interpreted as a certain stimulus condition and the suitable update rule as saying how you should be disposed to respond in the stimulus condition.Footnote 16 To see how this might work, let’s get back to Chloe’s case. In response to Bert’s testimony, JCondi tells Chloe how she should be disposed to propagate the learning input modelled as \(c_{{\mathcal {E}}}\left( G \right) = 0.025\) through her credal state. In particular, she should be disposed to adopt the credences \(c_{{\mathcal {E}}}\left( D \right) = 0.105\) and \(c_{{\mathcal {E}}}\left( D\vert G \right) = 0.3\), as shown in equations (6) and (7). Importantly, under this interpretation of EDasBR, she is also disposed to adopt the credence \(c_{{\mathcal {E}}}\left( G \right) = 0.025\), which is Bert’s reported credence for G. After all, JCondi says that one of her learning dispositions is given by \(c_{{\mathcal {E}}}\left( G \right) = c(G\vert G)\cdot 0.025 + c(G\vert \lnot G)\cdot 0.975 = 0.025\). Now, when Chloe changes her conditional credence from \(c_{{\mathcal {E}}}(D\vert G) = 0.3\) to \(c_{\mathcal {EE'}}(D\vert G) = 0.75\) and actually updates her credences by ACondi in response to this learning input, she is still disposed to adopt Bert’s credence in G, for we can show in a consistent way that \(c_{\mathcal {EE'}}(G) = 0.025 = c_{{\mathcal {E}}}(G)\). 
Thus, when both SED and EDasBR concern your dispositions to respond to the expert report rather than your actual updates in the light of expert testimony, EDasBR avoids the inconsistency problem that besets SED.

Besides avoiding the inconsistency given in Proposition 1 and being intuitively correct in cases like Chloe’s, EDasBR has another advantage that is worth mentioning. One might think about Chloe’s case as a situation in which we deal with the reports of two experts delivered at two different times: the first is Bert’s credence for G and the second is the conditional credence for D given G reported by the person who wrote: ‘The odds are 3:1 that patients with a BRCA1 gene mutation will develop a breast cancer’. And these reports are essentially different: the first concerns an unconditional credence, while the second is about the expert’s conditional credence. As I mentioned earlier, SED is not flexible enough to cover such different types of expert report: it models only the first type, and it is not entirely obvious how it could capture the second one. EDasBR, on the contrary, can cope well with this interpretation of Chloe’s case, for it models each type of expert report as a learning input that constrains Chloe’s posterior credence distribution at two different times.

Nonetheless, one might wonder whether EDasBR captures properly some important differences between an expert’s reported credence and any other kind of learning input you might receive. For example, one might claim that a revision of your credence in the proposition that it will rain today prompted by your observation of black clouds in the sky is essentially different from a revision of your credence in that proposition prompted by your deference to the probabilistic judgement of a highly reliable weather forecaster. More specifically, you might reasonably think that the probabilistic weather forecast is more reliable than your own probabilistic judgement about today’s rain, and any approach to expert deference should also model this difference in reliability adequately.

In reply, note that, as argued by Roussos (2021), EDasBR is designed to offer more realistic guidance for non-ideal agents interacting with ideal experts. So understood, this model is properly sensitive to the content of the expert report in the cases that it targets, namely cases in which more realistic agents believe that expert reports raise no suspicions and so the evaluation of expert reliability has negligible impact. That is, this model highlights the fact that, as opposed to general learning experiences, there is something special about expert testimony in such cases: the expert’s reported credence for proposition A gives you information that is directly relevant to your credence for A, and your response to this testimony does not depend on your assessment of the expert’s reliability. In particular, unlike the Bayesian conditioning model, which is intended to capture general learning experiences, EDasBR does not require that, in the face of the expert report \(E_{x}^{A}\), your posterior credence for A depend on your prior for A, \(c\left( A \right) \), your prior for \(E_{x}^{A}\), \(c\left( E_{x}^{A}\right) \), and your likelihood for \(E_{x}^{A}\), \(c\left( E_{x}^{A}\vert A\right) \).

6 In summation

I have shown that there is a puzzling conflict between the standard principle of expert deference, SED, and the update rule ACondi: except in trivial cases, if you defer to the expert’s credence for a proposition A in the manner prescribed by SED, and then update your credences by ACondi in response to a change in your credence for a proposition B given A, you cannot continue to defer to that expert as prescribed by SED, on pain of inconsistency. As I have argued, this inconsistency is puzzling, for neither the Adams input, i.e., a change in your credence for B given A, nor its representation in terms of a Jeffrey input over a specific partition of propositions counts as unambiguously confirmatory for A, and hence it is at least permissible to continue to defer to the expert’s credence for A under these circumstances.

In response to this problem, I have considered two possible ways out. In doing so, I have argued against dropping ACondi as your update rule, and I have shown that we can avoid this puzzling inconsistency by replacing SED with a different approach to expert deference, EDasBR.

Notes

Following Hall’s (2004) terminology, we may say that Bert is both a database-expert and an analyst-expert when it comes to the proposition that Chloe has the mutated gene.

By ‘instances of SED’, I mean various principles of expert deference that come by specifying the kind of expert’s credence in A that one considers. For example, if we take \(E_{x}^{A}\) to stand for the proposition that the objective chance of A is x, we get a version of Lewis’s (1980) Principal Principle. Or, if \(E_{x}^{A}\) stands for the proposition that your future credence for A is x, we get van Fraassen’s (1984) Reflection Principle.

For an excellent overview of these problems, see Roussos (2021).

I focus only on the tip of the iceberg: there is a variety of learning inputs and corresponding update rules that the Bayesian theory covers. For example, another important kind of learning input is a constraint on your expectation value for some random variable. Arguably, it is the most general kind of constraint on one’s posterior credence distribution, and other types of constraint can all be understood as special instances of it (see, e.g., van Fraassen 1981). Also, it is often claimed that the update rule which is appropriate for this kind of constraint is the rule of minimum relative entropy (see, e.g., Uffink 1996, Douven and Romeijn 2011).

A more general form of ACondi than the one presented here is given in Bradley (2017, p. 197). It says that when learning experience rationalizes changing your conditional credences from \(c\left( B\vert A \right) \) to \(c_{{\mathcal {E}}}\left( B\vert A \right) \), your posterior credence function ought to be such that for all \(X \in {\mathcal {F}}\), \(c_{{\mathcal {E}}}(X) = \sum _{A \in {\mathcal {A}}}\left[ \sum _{B \in {\mathcal {B}}}c\left( X\vert A \wedge B \right) \cdot c_{{\mathcal {E}}}\left( B\vert A \right) \right] \cdot c\left( A \right) \). This form of ACondi covers cases in which experience yields changes over one’s conditional credences for each B in some partition \({\mathcal {B}}\) given each conditioning proposition A in another partition \({\mathcal {A}} \). For the sake of simplicity, here I focus on a less general form of ACondi which applies to cases in which one changes conditional credences for B and \(\lnot B\) given only one conditioning proposition A. Also, this choice squares well with Chloe’s case in which, after reading the scientific journal, she changes her conditional credence that she will develop breast cancer given a mutated BRCA1 gene, but we are not told whether she should change her conditional credence that she will develop breast cancer given that she does not have the mutated gene.

Similarly, assuming that \(x = 0\), we can show that if \(c\left( A\vert E_{0}^{A}\right) = 0\) and you satisfy \(C_{2}\) and \(C_{3}\), then you also satisfy \(C_{1}\).

We can think of the learning input gleaned by Chloe from reading the journal as an instance of conditional information which has the following more general form ‘If A, then the odds for \(B_{1},\ldots , B_{j}\) are \(\alpha _{1}:...: \alpha _{j}\)’. Then, it is reasonable to think that when you receive this kind of information, you should set your posterior conditional credence \(c_{{\mathcal {E}}}\left( B_{j}\vert A \right) \) equal to \(\frac{\alpha _{j}}{\sum _{k = 1}^{j}\alpha _{k}}\). Hence, in Chloe’s case, \(c_{{\mathcal {E}}}\left( D\vert G \right) = \frac{3}{1+3} = \frac{3}{4}\).

A proof of this representation is to be found in Bradley (2005, p. 363).

A similar generalization has been proposed and defended by Zynda (1995).

Strictly speaking, they have shown that a particular instance of SED—a version of the Principal Principle—is not preserved under JCondi. But their result can be easily generalized to any instance of SED.

For simplicity, this result concerns a two-element partition \(\left\{ A, \lnot A\right\} \), but it can be generalized to a finite n-element partition \( {\mathcal {A}} = \left\{ A_{1},\ldots , A_{n} \right\} \) of \({\mathcal {W}}\).

More generally, as shown in Jeffrey (1965), JCondi on a partition \({\mathcal {A}}\) is equivalent to the condition called Rigidity. This condition states that for every \(X \in {\mathcal {F}}\) and every \(A \in {\mathcal {A}}\), \(c_{{\mathcal {E}}}(X\vert A) = c\left( X\vert A\right) \).

Thanks to an anonymous referee for bringing this objection to my attention.

For example, Roussos (2021) claims that the prior conditional credence that figures in SED is a deference prior which can be interpreted as your disposition to respond to various pieces of evidence you may learn. Similarly, Titelbaum (2022, ch. 5) argues that expert deference principles like SED should govern your hypothetical prior credence which represents your ultimate epistemic standards or tendencies to respond to whatever evidence you might learn. Of course, when you update your credences by Bayesian conditionalization, satisfying SED has the following consequence: if you are certain that an expert assigns credence x to A, and \(E_{x}^{A}\) is your total evidence relevant to A, then, for all \(A \in {\mathcal {F}}\), \(c_{{\mathcal {E}}}\left( A \right) = c(A\vert E_{x}^{A}) = x\). This consequence is a diachronic norm—the SED-based Update—which says how you actually revise your prior credence in A into a posterior one in response to the expert’s reported credence for A. Yet Proposition 1 does not concern SED-based Update.

References

Armendt, B. (1980). Is there a Dutch book argument for probability kinematics? Philosophy of Science, 47(4), 583–588.

Bradley, R. (2005). Radical probabilism and Bayesian conditioning. Philosophy of Science, 72(2), 342–364.

Bradley, R. (2017). Decision theory with a human face. Oxford University Press.

Dietrich, F., List, C., & Bradley, R. (2016). Belief revision generalized: A joint characterization of Bayes’s and Jeffrey’s rules. Journal of Economic Theory, 162, 352–371.

Douven, I., & Romeijn, J.-W. (2011). A new resolution of the Judy Benjamin problem. Mind, 120(479), 637–670.

Elga, A. (2007). Reflection and disagreement. Noûs, 41(3), 478–502.

Gaifman, H. (1988). A theory of higher order probabilities. In B. Skyrms & W. Harper (Eds.), Causation, Chance, and Credence (Vol. 1, pp. 191–219). Kluwer Academic Publishers.

Gallow, J. D. (2019). Learning and value change. Philosophers’ Imprint, 19, 1–22.

Gallow, J. D. (2021). Updating for externalists. Noûs, 55(3), 487–516.

Hall, N. (2004). Two mistakes about credence and chance. Australasian Journal of Philosophy, 82(1), 93–111.

Harper, W. L., & Kyburg, H. E. (1968). The Jones case. British Journal for the Philosophy of Science, 20(2), 247–251.

Huttegger, S. M. (2013). In defense of Reflection. Philosophy of Science, 80(3), 413–433.

Huttegger, S. M. (2014). Learning experiences and the value of knowledge. Philosophical Studies, 171(2), 279–288.

Jeffrey, R. C. (1965). The Logic of Decision. University of Chicago Press.

Joyce, J. M. (2007). Epistemic deference: The case of chance. Proceedings of the Aristotelian Society, 107(2), 187–206.

Joyce, J. M. (2010). The development of subjective Bayesianism. In D. M. Gabbay, S. Hartmann, & J. Woods (Eds.), Handbook of the history of logic (Vol. 10, pp. 415–476). Elsevier.

Levi, I. (1967). Probability kinematics. British Journal for the Philosophy of Science, 18(3), 197–209.

Lewis, D. (1980). A subjectivist’s guide to objective chance. In R. Jeffrey (Ed.), Studies in inductive logic and probabilities (Vol. 2, pp. 263–293). University of California Press.

Nissan-Rozen, I. (2013). Jeffrey conditionalization, the Principal Principle, the Desire as Belief Thesis, and Adams’s Thesis. British Journal for the Philosophy of Science, 64(4), 837–850.

Pettigrew, R. (2013). A new epistemic utility argument for the Principal Principle. Episteme, 10(1), 19–35.

Pettigrew, R. (2016). Accuracy and the laws of credence. Oxford University Press.

Roussos, J. (2021). Expert deference as a belief revision schema. Synthese, 199, 3457–3484.

Skyrms, B. (1987). Dynamic coherence and probability kinematics. Philosophy of Science, 54(1), 1–20.

Titelbaum, M. G. (2022). Fundamentals of Bayesian Epistemology 1: Introducing Credences. Oxford University Press.

Uffink, J. (1996). The constraint rule of the maximum entropy principle. Studies in History and Philosophy of Science Part B: Studies in History and Philosophy of Modern Physics, 27(1), 47–79.

van Fraassen, B. (1981). A problem for relative information minimizers in probability kinematics. The British Journal for the Philosophy of Science, 32(4), 375–379.

van Fraassen, B. (1984). Belief and the will. Journal of Philosophy, 81(5), 235–256.

van Fraassen, B. C. (1989). Laws and symmetry. Oxford University Press.

van Fraassen, B. C., & Halpern, J. Y. (2017). Updating probability: Tracking statistics as criterion. The British Journal for the Philosophy of Science, 68(3), 725–743.

Zynda, L. (1995). Old evidence and new theories. Philosophical Studies, 77, 67–95.

Acknowledgements

I am extremely grateful to Dominika Dziurosz-Serafinowicz, H. Orri Stefánsson, Rafał Urbaniak as well as two anonymous referees for this journal for helpful comments on earlier versions of this paper. Funding for this research was provided by the National Science Centre, Poland (grant no. 2017/26/D/HS1/00068).

Ethics declarations

Conflict of interest

The author has no competing interests to declare that are relevant to the content of this article.

Appendix

Proof of Proposition 1

In order to prove that \(C_{1}\), \(C_{2}\), and \(C_{3}\) are jointly inconsistent, let us first apply Bayes’s theorem to the conditional credences \(c\left( A\vert E_{x}^{A}\right) \) and \(c_{{\mathcal {E}}}\left( A\vert E_{x}^{A}\right) \):

Then, by condition \(C_{1}\), we get:

which is equivalent to:

Then, by applying condition \(C_{3}\), we get \(c_{{\mathcal {E}}}(A) = c(A)\), and so we can write (13) as:

which reduces to:

Now, since \(\left\{ \lnot A, A \wedge B, A \wedge \lnot B\right\} \) partitions \({\mathcal {W}}\), we can apply the law of total probability to \(c\left( E_{x}^{A} \right) \) and \(c_{{\mathcal {E}}}\left( E_{x}^{A} \right) \). Thus:

By condition \(C_{3}\), we have \(c_{{\mathcal {E}}}\left( E_{x}^{A}\vert A \wedge B \right) = c\left( E_{x}^{A}\vert A \wedge B \right) \), \(c_{{\mathcal {E}}}\left( E_{x}^{A}\vert A \wedge \lnot B \right) = c\left( E_{x}^{A}\vert A \wedge \lnot B \right) \), \(c_{{\mathcal {E}}}\left( E_{x}^{A}\vert \lnot A \right) = c\left( E_{x}^{A}\vert \lnot A \right) \), \(c_{{\mathcal {E}}}\left( \lnot A \right) = c\left( \lnot A \right) \), and so we can write (16) as:

Hence, from (15), (17), and (18), we get:

Since \(\left\{ \lnot A, A \wedge B, A \wedge \lnot B\right\} \) is a partition, by \(C_{3}\) applied to \(c_{{\mathcal {E}}}\left( E_{x}^{A}\vert A\right) \), we get:

Similarly, by the law of total probability applied to \(c\left( E_{x}^{A}\vert A\right) \), we get:

Then, from (19), (20) and (21), we obtain:

By the multiplication rule for conditional probabilities applied to \(c_{{\mathcal {E}}}\left( A \wedge B \right) \), \(c_{{\mathcal {E}}}\left( A \wedge \lnot B \right) \), \(c\left( A \wedge B \right) \), and \(c\left( A \wedge \lnot B \right) \), we can write (22) as:

By \(C_{3}\), we have that \(c_{{\mathcal {E}}}(A) = c(A)\), and hence we can write (23) as:

And because \(c_{{\mathcal {E}}}\left( \lnot B \vert A \right) = 1 - c_{{\mathcal {E}}}\left( B \vert A \right) \) and \(c\left( \lnot B \vert A \right) = 1 - c\left( B \vert A \right) \), we can write (24) as:

Now, since all the terms on both sides of equation (25) are identical and non-zero, except for \(c\left( B \vert A \right) \) and \(c_{{\mathcal {E}}}\left( B \vert A \right) \), it must be the case that:

which contradicts condition \(C_{2}\).

\(\square \)
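The algebra of this proof can be condensed as follows. This is a sketch under assumptions, not a restatement of the numbered displays: the abbreviations \(a\), \(p\), \(q\), \(u\), \(v\), \(w\) are introduced here for illustration only, and the non-triviality assumptions are taken to include \(w\left( 1-a\right) \ne 0\) and \(u \ne v\). Write \(a = c(A) = c_{{\mathcal {E}}}(A)\), \(p = c\left( B\vert A\right) \), \(q = c_{{\mathcal {E}}}\left( B\vert A\right) \), \(u = c\left( E_{x}^{A}\vert A \wedge B\right) \), \(v = c\left( E_{x}^{A}\vert A \wedge \lnot B\right) \), and \(w = c\left( E_{x}^{A}\vert \lnot A\right) \), where by \(C_{3}\) the values \(a\), \(u\), \(v\), \(w\) are the same before and after the update.

\[ \begin{aligned} &\text {By Bayes’s theorem and } C_{1}: \quad \frac{c\left( E_{x}^{A}\vert A\right) \cdot a}{c\left( E_{x}^{A}\right) } = x = \frac{c_{{\mathcal {E}}}\left( E_{x}^{A}\vert A\right) \cdot a}{c_{{\mathcal {E}}}\left( E_{x}^{A}\right) }, \quad \text {so} \quad c\left( E_{x}^{A}\vert A\right) \cdot c_{{\mathcal {E}}}\left( E_{x}^{A}\right) = c_{{\mathcal {E}}}\left( E_{x}^{A}\vert A\right) \cdot c\left( E_{x}^{A}\right) . \\ &\text {By total probability:} \quad c\left( E_{x}^{A}\vert A\right) = up + v(1-p), \qquad c_{{\mathcal {E}}}\left( E_{x}^{A}\vert A\right) = uq + v(1-q), \\ &\qquad c\left( E_{x}^{A}\right) = a\cdot c\left( E_{x}^{A}\vert A\right) + w(1-a), \qquad c_{{\mathcal {E}}}\left( E_{x}^{A}\right) = a\cdot c_{{\mathcal {E}}}\left( E_{x}^{A}\vert A\right) + w(1-a). \\ &\text {Substituting and cancelling the common term:} \quad w(1-a)\left[ c\left( E_{x}^{A}\vert A\right) - c_{{\mathcal {E}}}\left( E_{x}^{A}\vert A\right) \right] = w(1-a)(u-v)(p-q) = 0. \end{aligned} \]

Given the assumed non-triviality conditions, the last line forces \(p = q\), that is, \(c_{{\mathcal {E}}}\left( B\vert A\right) = c\left( B\vert A\right) \), which is what \(C_{2}\) rules out.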

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Dziurosz-Serafinowicz, P. Expert deference and Adams conditionalization. Synthese 201, 176 (2023). https://doi.org/10.1007/s11229-023-04175-6

DOI: https://doi.org/10.1007/s11229-023-04175-6