Abstract

In recent works, Kind (2020a, b) has argued that imagination is a skill, since it possesses the two hallmarks of skill: (i) improvability by practice, and (ii) control. I agree with Kind that (i) and (ii) are indeed hallmarks of skill, and I also endorse her claim that imagination is a skill in virtue of possessing these two features. However, in this paper, I argue that Kind’s case for imagination’s being a skill is unsatisfactory, since it lacks robust empirical evidence. Here, I will provide evidence for (i) by considering data from mental rotation experiments and for (ii) by considering data from developmental experiments. I conclude that imagination is a skill, but there is a further pressing question of how the cognitive architecture of imagination has to be structured to make this possible. I begin by considering how (ii) can be implemented sub-personally. I argue that this can be accounted for by positing a selection mechanism which selects content from memory representations to be recombined into imaginings, using Bayesian generation. I then show that such an account can also explain (i). On this basis, I hold that not only is imagination a skill, but that it is also plausibly implemented sub-personally by a Bayesian selection mechanism.

1 Introduction

Imagination is central to human cognition. It is involved in planning (Suddendorf & Corballis, 2007), decision-making (Paul, 2004), causal reasoning (Walker & Gopnik, 2013), and predicting future affect (Wilson & Gilbert, 2000). In this paper, I argue that imagination is a skill by showing that it meets two hallmarks of skill: improvability by practice and control.Footnote 1

Until recently, there has been little discussion about whether imagination is a skill, despite its status as a mental action (Dorsch, 2012) and the interest in characterising other mental actions as skills (DeKeyser, 2015). Amy Kind’s recent papers (2020a; 2020b) constitute the first attempt to establish imagination as a skill. However, I will argue that her attempt is ultimately unsatisfactory. Though she rightly maintains that in order for it to be a skill, imagination needs to be improvable through practice and controlled, the empirical evidence she uses to show that imagination possesses these two features is wanting.

In this paper, I build on her arguments and make an empirically well-supported case for the claim that imagination is a skill. To show that imagination is improvable by practice, I draw on experimental evidence from the study of mental rotation. To show that imagination is controlled, I first provide a theoretical account of how this could be possible by arguing that controlling our imagination amounts to constraining it in certain ways, and I then provide data from developmental psychology.

Having established that imagination is a skill, I then take up the question of what cognitive architecture imagination needs to be implemented in for this to be the case. I begin by considering the issue of how control can be implemented sub-personally. To this effect, I develop a Bayesian computational account in line with current cognitive science, which details how imaginings are generated from the content of memory representations. I show that this is a plausible hypothesis that sits well with what we already know about the sub-personal organisation of imagination, and which generates a number of interesting testable hypotheses. I also show that this account can explain how imagination is improvable by practice.

I thus have two aims in this paper: firstly, to establish that imagination is a skill; secondly, to develop a robust testable hypothesis for imagination’s underlying cognitive architecture.

2 Improving imagination by practising

We often praise the imaginative abilities of inventors, writers, and the like, and we might have the natural intuition that some are better at imagining than others. This raises the question of whether imagination is a skill that one can improve to expert levels. To demonstrate this point, Kind (2020b) considers three people with incredible imaginative abilities—in particular, with extraordinary visual imagination—namely, the engineer Nikola Tesla, the scientist Temple Grandin, and the origami folder Satoshi Kamiya. Tesla described how he used visual imagination to work out, improve, change, and operate his inventions; Grandin was able to use her imagination to visualise how cattle would react when entering a conveyor track in a slaughtering plant in order to design a new process where cattle would not panic; and Kamiya has said that he uses his visual imagination to figure out how to fold origami, where he visualises the piece completed and then sees in his mind’s eye how it unfolds. Since these people all seem to be very good at imagining, Kind concludes that we should take them to be skilled imaginers.Footnote 2

Before considering whether they are skilled, the reader might wonder what ‘being good at imagining’ means. How good one is at something normally depends on the goal we evaluate it against. For example, it is difficult to evaluate how good someone is at running without knowing if the goal is to run 100 metres or a marathon. Endurance matters to how good one is at running if the goal is to run a marathon, but does not matter if the goal is to run a 100-metre race. The same general point applies to imagination. When using imagination to plan for the future, we could say that someone is good at imagining if they imagine the future as accurately as possible (Suddendorf & Corballis, 2007). If, on the other hand, the goal is to create a fictional story, we can measure one’s imaginative capacities in terms of how vivid or detailed an imagining is (Fulford et al., 2018). For this reason, in this paper, I will not specify a general notion of goodness with respect to imagination, but instead specify it on a case-by-case basis, depending on the imaginer’s goal.

Now, Tesla, Grandin, and Kamiya all use imagination for practical purposes (e.g. solving engineering problems) and it is clear that they are very good at imagining with respect to their goals, since their imaginings are extremely accurate. But it is less clear that they are skilled, since two important pieces are missing from this story. There is widespread agreement among philosophers and psychologists that the two hallmarks of something’s being a skill are: (a) skills can be improved through practice (Anderson, 1982; Stanley & Williamson, 2001; Dreyfus, 2007; Fridland, 2014), and (b) skills involve control (Dreyfus, 2002; Stanley & Krakauer, 2013; Fridland, 2014; Wu, 2016). This provides us with a useful way of testing whether imagination is a skill. In this section, I focus on the first criterion.

The problem with the cases of Tesla, Grandin, and Kamiya is that there are two possible explanations for their exceptional performance, and we cannot tell these two apart: (i) they are exceptionally good at imagining because they improved their imagination by practising; (ii) they were simply born with extraordinary imaginative abilities. In another recent paper, Kind acknowledges this issue, which she labels ‘the nativist objection’ (Kind, 2020a). Specifically, this objection states that the fact that somebody is a very good imaginer does not provide evidence for the claim that imagination is a skill, since that person might simply have an innate capacity to imagine well, rather than having developed this capacity through practice. To address this objection, Kind turns to evidence from sports psychology (Kind, 2020a). She considers how visualisation techniques are used in sports, where athletes imagine themselves achieving the desired outcome (e.g. spinning a ball). Since athletes practise visualising and, purportedly on the basis of this, improve their sport performances, Kind infers that practising visualising improves visual imagination, and this in turn improves the sport performance. Hence, it appears that imagination meets the first hallmark of skill.

The crux of the matter is whether this inference is justified: do we have good reasons to think that by practising visualising athletes become better at visual imagination? Unfortunately for Kind, sports psychology cannot provide an empirical basis for this conclusion, for the following reason: empirical research into athletes’ use of visual imagery does not actually test whether athletes improve at visualising.Footnote 3 Instead, researchers are interested in whether athletes improve their athletic skill, and questionnaires such as the Sport Imagery Questionnaire are designed to test this improvement, rather than to test their potential visualisation improvement (Hall et al., 1998; Munroe et al., 2000; Beauchamp et al., 2002; Williams & Cumming, 2014). Though some studies report on how good athletes are at visualising (often cashed out in terms of how vivid their imaginings are), there is still no comparison between visualisation ability before and after practising (Baddeley & Andrade, 2000).

However, even if improvement in visualisation is not directly measured, one could potentially argue that there is evidence for imagination’s improving with practice, since the sports performance is improved. One could claim that the best explanation for why athletes perform better at their sport is that their capacity to imagine has improved by practice, and this improvement directly affects their performance. Unfortunately, even this conclusion is hard to establish, since it is notoriously difficult to tease out whether and to what extent visualising improves sport performance because of the many confounding factors. For example, athletes are often in physical training as well as using visualising techniques, so visualising cannot easily be singled out as the sole factor that contributes to performance improvement. Some research has also suggested that visualisation techniques do not in fact improve performance directly, but rather work to boost confidence (Munzert & Lorey, 2013), which in turn makes for a better performance. For these reasons, Kind’s inference is not as straightforward as it first seems, and sports psychology cannot currently give us a conclusive answer as to whether imagination is improvable by practice. Luckily, there is another area of research which directly tests whether imagination is improvable by practice, making it more suitable for my purposes here.

3 Mental rotation

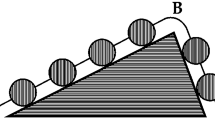

Whether imagination is improvable by practice has been extensively tested using mental rotation tasks. In a mental rotation (or visual rotation) task, a subject is presented with a picture of a 2D or 3D object, next to four other pictures of very similar-looking objects at various angles. The subject’s task is to determine which of the four shapes would match the first shape if the first shape were rotated. In order to solve the task, the subjects are asked to mentally rotate the original shape to ‘see’ if it matches the others. Subjects are put through practice trials where they get to practise on a large number of different shapes before they go through the test-sessions. In both practice trials and test-sessions, subjects are instructed to press one button if they think the shapes are the same, and another one if they think that the shapes are different. Reaction times and accuracy are measured on both occasions. If the subject is able to provide a correct answer more quickly in the test-session than in the practice trial, they are taken to have improved. This is an important measure, since being skilled is positively correlated with performing the task more quickly (Anderson, 1982). This experimental design, and slight variations of it, has repeatedly been used and the results robustly indicate that subjects can improve their capacity for visual rotation (Shepard & Metzler, 1971; Kosslyn, 1994; Habacha et al., 2014).Footnote 4

One might worry about confounding factors here too. For example, these results could be due to subjects using techniques other than mental rotation, such as retrieving the right answer from memory. But there are strong reasons to think that this is not the case. Provost et al. (2013) observed that participants could potentially solve the mental rotation task in either of these ways, so to disentangle these two hypotheses, they introduced a further EEG measurement into the rotation experiment. More precisely, they measured the EEG signal Rotation-Related Negativity (RRN) during task performance, since this positively correlates with mentally rotating shapes, but not with retrieving a shape from memory. RRN signals were present during both practice and test sessions, thus indicating that subjects are indeed mentally rotating the shapes on both occasions. At the same time, subjects reduced their reaction time by an average of 800 ms from practice to test sessions, demonstrating significant improvement through practice.

These experiments show that visualising—in particular, visual rotation—can be practised such that subjects can improve at it. However, my argument in this paper concerns imagination in general, not just mental rotation or visualising, so one possible objection is that because visualisation is just one kind of imagination, we cannot draw such a general conclusion from these studies. We ordinarily distinguish among different kinds of imagination, such as visual imagination, auditory imagination, gustatory imagination, and so on, and these distinctions find support in neuroscience, which has shown that these different types of imagination are also implemented in different brain areas.Footnote 5 It looks possible that visual imagination is improvable, whereas auditory imagination might not be, and hence my conclusion over-generalises.

However, this objection fails to take into account that we have good reasons to think that all kinds of imagination work by means of simulation—that is, they all work by using a certain sensory/perceptual system for a secondary function.Footnote 6 For example, the primary function of the visual system is to see, and the secondary function is to visualise.Footnote 7 Given that this process is universal across different kinds of imagination, it would be very surprising to find that one kind of imagination can be improved, whereas another cannot. Thus, I take it that the case study of visual imagination that I have offered indeed generalises to other kinds of imagination, and hence that imagination is improvable by practice.Footnote 8

4 Control in imagination

Things are looking good for imagination so far. I have shown that there is robust empirical data supporting the thesis that imagination is indeed improvable by practice, but that is not enough to conclude that imagination is a skill, since there is also widespread agreement that skills involve control (Dreyfus, 2002; Stanley & Krakauer, 2013; Fridland, 2014; Wu, 2016; Kind, 2020a). For example, a runner can control the speed they are running at, and a chess player can control their play by setting a strategy for a game. It is less clear what it means to control one’s imagination, so I will first build a theoretical framework of what control in imagination could amount to, before I provide empirical evidence that imagination is indeed controlled in this way.Footnote 9 It should be noted that my account of control spans the personal and sub-personal levels. Hence, I will sometimes speak of a person exercising control in imagination (personal level control), and sometimes of mechanisms exercising control (sub-personal control). As this distinction has been extensively defended elsewhere (see: Fridland, 2014), I will not argue the case here.

4.1 A theoretical framework for control

To build a theoretical framework of control for imagination, I draw on another paper of Kind’s, where she argues that we can constrain our imagination by using the Reality Constraint and Change Constraint (Kind, 2016), and I will argue that controlling imagination could amount to employing these constraints. Footnote 10

Consider the following example. There are many cases where our goal is to imagine things accurately, such as when I moved into my narrow terraced house and tried to accurately imagine if my bedframe would fit up the stairs. But note that imagination is voluntary, and it is not bound by how things actually are (Balcerak Jackson, 2018). That is, I seem to be able to imagine whatever I want irrespective of the state of the world. Given this, how can we ensure that an imagining is accurate? Kind argues that it can be so only if we constrain it by employing the Reality Constraint and the Change Constraint. According to the first, an imagining should be constrained by the world as it actually is. For example, I should imagine the bedframe and the stairs as being the sizes they actually are. According to the second, when we imagine a change in the world, we need to be guided by the consequences of that change. When I imagine the bedframe going up the stairs, I should imagine the consequences of that state of affairs, namely, that the bedframe ends up upstairs. I should not imagine that it suddenly vanishes or that it miraculously shrinks. Note that these constraints are indeed different, as I can employ one without the other; I can choose not to employ the Reality Constraint and imagine something unrealistic, but still employ the Change Constraint to imagine things changing as they normally would. Imagining an alligator driving a car is an example of this: the scenario is clearly unrealistic, but changes in the world remain normal (the alligator turning the steering wheel turns the car’s wheels, etc.). To be clear, these constraints are not to be thought of as genuine limits on our ability to imagine (of course, we can imagine fantastical scenarios too), but rather as strategies that agents can intentionally employ when they want to obtain an accurate imagining (Kind, 2016).

Now, I maintain that this gives us a plausible theoretical picture of how imagination could work in order for it to be controlled: an act of imagining is controlled if the imaginer constrains it by employing the Reality Constraint and the Change Constraint. On this basis, we can assess whether imagination is a skill by considering whether there are actual cases where imagination is controlled in this way. A related interesting question is whether all imaginers are able to employ these constraints. One might think that we need to learn to do so, similarly to how we have to learn to exercise control over our bodily skills by practising (Fridland, 2014). To address both these questions at once, I will use studies from developmental psychology, as this will also give an indication as to when we are first able to employ the constraints.

4.2 Empirical research on control in imagination

Atance and Meltzoff (2005) conducted a study testing children’s ability to imagine and plan for the future. I propose that the differential results they got for different age groups are best explained by the older children’s being able to control imagination by applying the Reality Constraint. Footnote 11

Children of three, four, and five years were asked to imagine themselves in various outdoor locations (such as a desert) and asked what item they wanted to bring with them from a set of three. For example, in the desert case, they could choose from soap, a mirror, or sunglasses. If children applied the Reality Constraint, we should expect them to choose sunglasses, since that would be in accordance with imagining the world as it actually is (deserts are hot and sunny so sunglasses could be useful). In fact, this is what children chose. All age groups performed significantly above chance, with four- and five-year-olds performing significantly better than three-year-olds, and explaining their choice with reference to future needs about two thirds of the time. It looks as though children have the ability to employ the Reality Constraint around the age of three, but that they also gradually improve their performance, possibly by practising.Footnote 12

Further, children’s ability to employ the Change Constraint has been indirectly tested by McColgan & McCormack (2008), who tested three-, four-, and five-year-olds’ ability to control imagination to solve a planning task. Here, children were asked to place a camera on a path along which a doll was going to walk. The doll wanted to take a picture of some zoo animals which appeared further down the path, and the interesting question was whether children would place the camera before or after the zoo animals. It was explained that the doll could not fetch the camera and then go back and take the picture, so the only right solution was to place the camera before the zoo animals. If children are able to employ the Change Constraint, they should imagine that the world changes in different ways depending on whether they place the camera before or after the zoo animals. If they place it before, the doll would be able to get the camera and take the picture. If they place it after, she would not be able to obtain the camera and could not take the picture. Interestingly, only five-year-olds reliably passed this task, with both four- and three-year-olds failing. Thus, it looks like five-year-olds are able to apply the Change Constraint, whereas three- and four-year-olds are not.Footnote 13

From these two experiments, we get evidence that imagination can indeed be controlled by employing the Reality Constraint and the Change Constraint, that the ability to do so is likely to be developed already in the pre-school years, and that these are indeed two different kinds of constraints, as they develop at different times. Since children are able to control their imagination in this way by employing constraints, we should think that adults can do so too.Footnote 14 Taken together with the previous argument about improvability, we can conclude that imagination is a skill, since both hallmarks are now met.

But a pressing question immediately arises, namely: how is imagination implemented sub-personally such that it is improvable and controlled? That is, what are the cognitive mechanisms and architecture that make this possible? I take up this issue by first considering what cognitive architecture could underlie imaginative control. To this effect, I develop a Bayesian computational account of imaginative control. I then show that this model also explains how imagination is improvable by practice.

5 A cognitive architecture for control and improvability in imagination

A common theme from both philosophy (Fridland, 2014) and psychology (Pezzulo & Castelfranchi, 2009) when it comes to giving sub-personal accounts of control is to focus on how control can be implemented in a selection mechanism. In Ellen Fridland’s work, the idea gets applied to bodily actions, and control is said to involve a mechanism that selects the relevant features of the environment. For example, a batsman in cricket focuses their attention on the relevant features of the bowler’s delivery to determine which shot to play. More interestingly given my purpose, Pezzulo and Castelfranchi (2009) develop an account of control for mental actions which they apply to imagination specifically.Footnote 15 They propose that controlled imagination involves a selection mechanism selecting relevant representations from memory to form imaginings, which are then compared to the agent’s goal. As observed earlier, when imagining something, you typically have a goal with that imagining, for example to imagine something accurately in order to solve a problem. Consider a person whose goal is putting some beers in the fridge, and who is trying to solve the task of how to open the fridge door with their hands full, using imagination. Pezzulo and Castelfranchi argue that control is exercised here as follows: the selection mechanism produces an imagining by selecting a relevant content from memory, which is then judged for its aptness. For example, the person can imagine opening the fridge door with their foot, and compare this to their goal representation to find out if they will satisfy it. If the answer is ‘no’, they can use this feedback to form a new imagining.
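The select, compare, and retry cycle that Pezzulo and Castelfranchi describe can be pictured as a simple generate-and-test loop. The following Python fragment is only a minimal sketch under stated assumptions: the function name `controlled_imagining`, the list `memory`, and the toy aptness test `goal` are hypothetical illustrations, not part of their account. Candidate imaginings are simply tried in the order in which they happen to be listed, so nothing in the sketch says why one content should be proposed before another.

```python
from typing import Callable, Iterable, Optional


def controlled_imagining(
    candidates: Iterable[str],
    satisfies_goal: Callable[[str], bool],
) -> Optional[str]:
    """Generate-and-test loop: propose imaginings one at a time and
    return the first one judged apt for the goal (None if none is)."""
    for imagining in candidates:       # content selected from memory, in listed order
        if satisfies_goal(imagining):  # comparison with the goal representation
            return imagining
        # A 'no' verdict is the feedback that prompts the next imagining.
    return None


# Hypothetical memory contents for the fridge example (assumption).
memory = ["open door with hands", "open door with foot", "open door with elbow"]
hands_full = True


def goal(imagining: str) -> bool:
    # Toy aptness test (assumption): an action that needs the hands
    # fails the goal when the hands are full.
    return not (hands_full and "hands" in imagining)


result = controlled_imagining(memory, goal)
print(result)
```

In this toy run the first candidate is rejected because the person's hands are full, and the loop moves on to the next one. The order of the candidates is fixed by hand here, which is exactly the part of the story that a selection mechanism would have to supply.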

I concur with Fridland, Pezzulo, and Castelfranchi, that selection is central to control, and specifically that control in imagination involves selecting relevant memory representations to form an imagining. The problem is that there is no detailed account of the computational operations guiding the selection mechanism. Pezzulo and Castelfranchi tell us that the selection mechanism selects certain contents from memory, but they do not say anything about how the mechanism does so. As a result, we have no explanation for how an agent produces a particular imagining. This in turn will be problematic for explaining how to improve imagination, since this often amounts to producing better imaginings. That is, when improving, particular content that is more apt for the goal needs to be selected over other content. This can only be specified if we give a computational account, since just positing a selection mechanism cannot tell us why one thing is selected over something else. So, in the remainder of this paper, I provide one such computational account.

This account needs to be sensitive to what we already know about the cognitive architecture of memory and imagination. This is why, in Sect. 5.1, I briefly outline a largely agreed-upon model of the relationship between memory and imagination (Schacter & Addis, 2007; Schacter & Addis, 2020). Against this backdrop, I then develop a Bayesian account of the selection mechanism (Sect. 5.2), which further specifies this account of the cognitive architecture. I then show that this account also gives us the tools to explain how imagination can be improved by appealing to the cognitive mechanism becoming better at selecting the content from memory representations relevant to one’s goal (Sect. 5.3).

5.1 On memory and imagination

Drawing on a large body of behavioural (Levine et al., 2002; Addis et al., 2007; Madore et al., 2014, 2016), cognitive (Schacter et al., 2007, 2012; Ward, 2016; Hallford et al., 2018), and neurological evidence (Addis et al., 2007, 2009; Benoit & Schacter, 2015; Axelrod et al., 2017), Donna Rose Addis and Daniel Schacter put forward an extremely influential architecture concerning the relationship between memory and imagination: the constructive episodic simulation hypothesis (Schacter & Addis, 2007; Schacter & Addis, 2020).

The hypothesis centres around the idea that a key function of episodic memory is to support the construction of what they call episodic future events (imaginings about the future), and that it does so by retrieving information about past experiences and flexibly recombining elements of these into novel imaginings. Imagination and episodic memory thus rely on a number of shared processes, such as the capacity for relational processing (i.e. linking together various pieces of information).

Though episodic memory plays a key role on this picture, it does not mean that semantic memory plays no role in imagination (Schacter & Addis, 2007). Information stored in episodic memory can be cashed out as who-what-where-when information, whereas information stored in semantic memory includes semantic knowledge, related facts, commentary, or references to other events including general events. Imagination recruits both information stored in episodic memory and information stored in semantic memory (perhaps to a different extent depending on the imagining).

If we think about how we imagine, we can easily see how this is the case. When a subject intends to imagine something, elements are selected from semantic and episodic memory, before they are recombined. The selected elements are flexibly recombined using a recombination process, which entails that the subject can imagine novel events that they have not previously experienced. For example, the representation of the content horse could be selected from episodic memory, and combined with the representation of the location Iceland from another episodic memory, and semantic information about Iceland, such that I can imagine riding a horse in Iceland, even if I have not experienced this.

Recombining elements in this way is different from simply selecting a whole memory representation and recasting it in a future setting, as I might do when imagining that my kitchen will look the same tomorrow as it did yesterday. Empirical data suggests that the second case does not involve the recombination process to a great extent, and thus requires less effort (Addis et al., 2009; Schacter & Addis, 2020). Notice that this echoes the evidence from mental rotation in Sect. 3, where I argued that different processes underwrite solving the task by mental rotation as opposed to retrieving the answer from memory; this could be interpreted as mental rotation involving recombination, whereas simple retrieval does not. What we see from this discussion is that imagination is generative, rather than simply reproductive, and that this account is well-suited to explain the vast number of different creative imaginings that subjects generate.Footnote 16 With this background in place, the selection mechanism needs to be sensitive to this cognitive architecture, and I will now explain how the selection mechanism selects content from memory representations that is to be recombined.

5.2 Bayesian inference and Bayesian generation

My suggestion is that the selection mechanism works by Bayesian generation. The idea that selection mechanisms operate according to Bayesian principles is common in cognitive science, partly because using Bayesian probabilities is the optimal way of making selections in light of available options. The assumption that we should start with is that the mind works in this optimal way, before experiments potentially prove otherwise (Norris & Cutler, 2021). After all, we should not posit that the mind operates sub-optimally unless we have an empirical reason to do so. There are generally two kinds of Bayesian accounts: Bayesian inference accounts and Bayesian generation accounts, which are used in a wide range of theories. These include Predictive Processing accounts of perception (Clark, 2013; Kirchhoff, 2018; Hohwy, 2020; Williams, 2021), as well as theories about causal reasoning and counterfactual thought (Walker & Gopnik, 2013), language acquisition (Jusczyk, 2003), and word recognition (Norris, 2006). For example, Walker and Gopnik argue that children are rational Bayesian learners of causal models, and that Bayesian inference is actually implemented in a cognitive mechanism. As a consequence, this theory can explain behaviour, because the model attempts to mirror what goes on in the mind. Their appeal to Bayesian inference is thus more than a mere modelling of behaviour, which would not have this explanatory power.Footnote 17 As I will show, Bayesian generation suits my purpose better here, but my claim is otherwise very similar to this, as I hypothesise that the Bayesian generation in imagination is indeed implemented in the selection mechanism. This means that the calculations I describe on the following pages are analogous to ones carried out by the mechanism. The upshot is that my account is also an attempt to explain behaviour, rather than merely model it.

Before describing Bayesian generation, it will be helpful to go over how Bayesian inference works. The general idea of Bayesian inference is that a particular hypothesis can be tested by calculating how likely it is to be true, given the data an agent has observed. For example, if I observe my friend Sally coughing, how likely is it that she is coughing because she has a cold? The data I am observing here is Sally’s coughing, and the hypothesis I am interested in testing is whether she is coughing because she has a cold. There are several hypotheses available, and we will focus on three different ones here: Sally could be coughing because she has a cold, she could be coughing because she has heartburn, or she could be coughing because she has lung cancer.Footnote 18

To calculate which hypothesis is the most likely, we need to set values for likelihoods and priors. These are supposed to capture what we know about the case. The likelihoods capture how likely it is that coughing is a symptom of each of the conditions. Since both colds and lung cancer cause coughing, whereas heartburn does not, the likelihoods favour these options. That is, it is common to cough when one has a cold or lung cancer, but not when one has heartburn. Colds and lung cancer will thus receive higher values and heartburn a lower one (see Table 1). The priors capture how likely it is that one has a cold, heartburn, or lung cancer, independently of any observed symptoms. The priors favour colds and heartburn over lung cancer, since it is more common to have a cold or heartburn than to have lung cancer. We capture this intuition by assigning higher values to colds and heartburn, and a lower one to lung cancer. This can be formalised in the following way, where P is the probability, d is the data observed, and h is a specific hypothesis:

We can then calculate what we are interested in, which is the posterior probability, i.e. the probability that Sally is coughing because she has a cold. We do so using Bayes’ formula:
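In standard notation, with $h$ the hypothesis of interest (here, that Sally has a cold) and $d$ the observed data (her coughing), Bayes' rule can be written as below. The symbol $h'$ is introduced here only as a summation index ranging over the competing hypotheses under consideration (cold, heartburn, lung cancer).

$$P(h \mid d) = \frac{P(d \mid h)\,P(h)}{\sum_{h'} P(d \mid h')\,P(h')}$$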

This gives us:

Hence, the hypothesis that Sally is coughing because she has a cold has a probability of 0.75. We can then perform the same calculation for the other hypotheses, and see which one comes out as the most likely.
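The comparison between hypotheses can be sketched in a few lines of Python. This is only an illustration of Bayes' rule applied to the Sally example: the priors and likelihoods below are assumed values chosen to respect the ordering described in the text (they are not taken from Table 1), and the function name `posterior` is hypothetical. With these assumed values, the posterior for the cold hypothesis comes out at roughly 0.75.

```python
def posterior(priors, likelihoods):
    """Return P(h | d) for every hypothesis h, using Bayes' rule."""
    # Unnormalised score for each hypothesis: P(d | h) * P(h)
    scores = {h: likelihoods[h] * priors[h] for h in priors}
    # P(d): total probability of the data across all hypotheses considered
    evidence = sum(scores.values())
    return {h: score / evidence for h, score in scores.items()}


# ASSUMED illustrative values, not the values of Table 1.
# Priors: colds and heartburn are more common than lung cancer.
priors = {"cold": 0.5, "heartburn": 0.4, "lung cancer": 0.1}
# Likelihoods of coughing: high for cold and lung cancer, low for heartburn.
likelihoods = {"cold": 0.8, "heartburn": 0.1, "lung cancer": 0.9}

post = posterior(priors, likelihoods)
best = max(post, key=post.get)  # hypothesis with the highest posterior

for hypothesis, value in post.items():
    print(f"P({hypothesis} | cough) = {value:.3f}")
print("Most probable hypothesis:", best)
```

Note that lung cancer has the highest likelihood in this sketch but still loses to the cold hypothesis, because its low prior outweighs it; this is the interplay between priors and likelihoods that the account relies on.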

With this in place, let me now elaborate on my account of the cognitive mechanism of control in imagination. Remember, when an imagining is generated, it draws on memory representations in semantic and episodic memory. Given that we have a vast number of different memory representations, there is also a vast array of imaginings that could be generated, and a cognitive mechanism needs to have a structured way of selecting content from one representation over other content. We need to find out how this is accomplished to account for how imagination can be controlled with respect to the cognitive mechanism involved. But it does not look like a Bayesian inference account is apt for this task, as it is unclear what would count as a hypothesis or a piece of evidence when generating imaginings. Instead, a generative account, which does not invoke these notions, is more suitable. This kind of account generally allows us to calculate the probability of two events co-occurring, by utilising the priors and likelihoods. For example, we can find out the probability of Sally having a cold and Sally coughing. Assume that P(Cough|Cold) = 0.8, as it is very common to cough when one has a cold. This gives us:
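By the product rule of probability, the joint probability of the two events is the likelihood multiplied by the prior. In standard notation, with the prior $P(\text{Cold})$ left symbolic here because its value comes from Table 1:

$$P(\text{Cough}, \text{Cold}) = P(\text{Cough} \mid \text{Cold})\,P(\text{Cold})$$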

Plugging in the numbers:

What we have found here is the joint probability: the probability that the two events co-occur. In other words, it is the probability that one event occurs given the other, weighted by how probable that other event is in the first place. This seems more apt for the task at hand, as a generative account could give us the probability of an imagining being generated from the contents of an agent’s memory representations.Footnote 19

But an agent is bound to have more than one memory, so the mechanism needs to calculate the joint probability distribution for multiple contents, and then select a few to be recombined into an imagining. I suggest that the contents of the memory representations assigned the highest probabilities are selected to be recombined into an imagining. But before we get to this point, we need to assign priors and likelihoods just like in the case above. Let us use a simplified example to illustrate this.

An agent, Matilda, tries to accurately imagine what the weather will be like in Croatia in spring, because she wants to decide whether to go there. This is her imaginative project, $i_{\text{Holiday}}$. We are interested in what content is selected for this imagining, given the probabilities that the selection mechanism assigns to the content. For ease of example, say that Matilda only has four memory representations and that these are all mostly episodic: visiting Croatia in the hot summer; a person in Croatia saying it often rains there in spring; visiting a rainy and flooded Venice in spring; and visiting Leeds for shopping in the summer. To make things easier, let us use these categories as shorthand: (1) Croatia; (2) Conversation; (3) Venice; (4) Leeds. Each of these memory representations is composed of different elements, as discussed in Sect. 4.2, such as information relating to who-what-where-when, all of which are candidates for content that could be retrieved and recombined into a novel imagining. For example, Conversation contains both episodic information about a person and semantic information about the weather.

How should the priors be assigned in this case? Recall that in the example of Sally, priors were assigned on the basis of how likely an event was to occur independently of any other event. Could it be the case that priors here too are determined by episodes’ independent probabilities of occurring? This does not seem likely, as the mind does not have access to these probabilities. As mentioned, I am giving a realist account of the selection mechanism, whereby the Bayesian selection mechanism is actually implemented in the mind, and which aims to explain behaviour. For this to be viable, the mind needs to have access to the probabilities that it uses in calculations. But it does not seem like the mind has access to these independent probabilities. For example, there is an independent probability of the event of an apple falling occurring right now, but it would be unfounded to think that my mind has access to this probability. Instead, I suggest that prior probabilities are determined by something that the mind does have access to: the emotional intensity of stored episodes.Footnote 20 This hypothesis predicts that emotional episodes (negative or positive) are retrieved more readily and in greater detail than neutral episodes (Brown & Kulik, 1977; Talarico et al., 2004).Footnote 21 Prima facie, the emotional intensity of episodes seems highly relevant for remembering/imagining, and this suggestion tallies well with evolutionary theories, which suggest that emotionally intense episodes are more useful for an animal to remember/imagine than neutral ones (Suddendorf & Corballis, 2007). To take an extreme but illustrative example, if a pre-historic human is planning a trip to the waterhole, it holds a greater payoff for them to more readily retrieve a memory of a fearful episode of being attacked at the waterhole than a neutral one of having a quiet drink, even if the neutral episodes have happened more frequently. It seems that more readily recalling intense episodes could drastically increase the chances of survival, and it could thus be more useful to more easily retrieve emotionally intense episodes.

Now, going on holiday might not be that emotionally intense, but it still has a significant degree of emotional intensity. Hence, both Croatia and Venice should be assigned high priors, reflecting that they are highly likely to be recalled and used in imaginings. Particular conversations about the weather are not emotionally intense, so Conversation should be assigned a very low prior. Similarly, visiting Leeds for shopping is not likely to be very emotionally intense, so this should also be assigned a low value.Footnote 22

What about the likelihoods? These should capture how likely it is that a particular imagining is created, given the content of a memory representation. Now, we should assign Croatia a high likelihood, since it is likely that a memory of Croatia in the summer will be drawn on when trying to imagine the weather there in spring. Conversation, though having a low prior, should be assigned a high likelihood, since it concerns the weather in Croatia—the very thing the imaginer is trying to imagine. Venice is also fairly likely to be drawn on, since it is a memory of a place geographically close to Croatia and it is a memory of springtime. On the other hand, Leeds seems very unlikely to be drawn on, since the location is nowhere near Croatia, and the memory concerns summer rather than spring.Footnote 23 Below, the priors and likelihoods are assigned to reflect this:

What we are trying to find out, then, is how likely it is that a particular imagining is generated from the content of a given memory representation: $P(i_{\text{Holiday}}, m_{\text{Croatia}})$. In other words, we are trying to find out the joint probability of the imagining and the memory representation. We calculate this in the following way:
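By analogy with the Sally case, and assuming the same product rule is applied, the joint probability is the likelihood of the imagining given the memory representation multiplied by the prior of that memory representation:

$$P(i_{\text{Holiday}}, m_{\text{Croatia}}) = P(i_{\text{Holiday}} \mid m_{\text{Croatia}})\,P(m_{\text{Croatia}})$$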

This gives us:

From this, we can see that the content of the memory representation Croatia is assigned a high probability, making it highly likely to be used in generating the imaginative project. From this alone, it looks likely that Matilda would produce an imagining of a sunny Croatia, but, remember, we have only considered content in the memory representation Croatia so far. Since we are interested in how likely it is that the agent will imagine a certain scenario (rainy spring in Croatia) given all her memory representations, we also need to assess how likely the content from the other memory representations is to be drawn on; I will leave these calculations out here, but the results are as follows:

What we have done above is analogous to the computations carried out by the selection mechanism. But the selection mechanism needs to do more than just calculate the probabilities of various memory contents’ being used in imaginings; it needs to actually select the content too. Just like there is a structured way of calculating probabilities, there needs to be a structured way of selecting content based on these probabilities. A plausible assumption is that only the top-ranking content gets included in the imagining, since otherwise all content would be included to some degree, whatever its joint probability. It will suffice for my demonstration here to assume that the three top-ranking contents are selected. In that case, the contents of Croatia, Conversation, and Venice will be selected to be recombined into an imagining. Since these together contain the vital semantic information about rain in the spring, an episodic representation of rain, and the episodic representation of the location Croatia, Matilda generates an accurate imagining of it raining in the spring in Croatia.Footnote 24
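The two steps just described, scoring each memory content by its joint probability and then keeping the top-ranking contents, can be sketched as follows. This is a minimal illustration rather than the mechanism itself: the numerical priors and likelihoods are assumed values that merely respect the qualitative ordering given in the text (they are not the values of the omitted table), and the function name `select_contents` and the cut-off parameter `k` are hypothetical.

```python
def select_contents(priors, likelihoods, k=3):
    """Rank memory contents by joint probability and keep the top k."""
    # Joint probability for each memory m: P(i | m) * P(m)
    joint = {m: likelihoods[m] * priors[m] for m in priors}
    # Highest joint probability first
    ranked = sorted(joint, key=joint.get, reverse=True)
    return ranked[:k], joint


# ASSUMED illustrative values that follow the qualitative description only.
# Priors track emotional intensity: holidays high, Leeds low, Conversation very low.
priors = {"Croatia": 0.40, "Conversation": 0.05, "Venice": 0.40, "Leeds": 0.15}
# Likelihoods track relevance to imagining spring weather in Croatia.
likelihoods = {"Croatia": 0.80, "Conversation": 0.90, "Venice": 0.60, "Leeds": 0.05}

selected, joint = select_contents(priors, likelihoods, k=3)

for memory in sorted(joint, key=joint.get, reverse=True):
    print(f"{memory}: {joint[memory]:.4f}")
print("Selected for recombination:", selected)
```

Under these assumed values, Conversation is selected despite its very low prior, because its high likelihood lifts it above Leeds, which is the only content left out.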

The reader might at this point worry that this is a ‘just so’ story, which does not make any precise predictions, and lacks any empirical support. Without predictions and support, characterising the selection process as Bayesian might itself seem unfounded.Footnote 25 This would be a considerable problem for the account, but luckily, it is more than a ‘just so’ story. Here is an example of a prediction of the account: if emotional intensity determines priors, emotionally intense episodes should be more likely to be recalled/imagined than neutral episodes. There are two telling instances of this. Firstly, patients with PTSD are more likely to recall/imagine negative emotionally intense episodes than neutral episodes (Pearson et al., 2015; Pearson, 2019). Secondly, multiple studies have found that people often suffer from an intensity bias when imagining/recalling episodes, whereby episodes are imagined/recalled as overly emotionally intense (Wilson et al., 2003; Gilbert & Wilson, 2007; Ebert et al., 2009). Both of these findings are predicted by, and can be explained by appealing to, priors being set by emotional intensity. Note that this is not to say that we cannot imagine neutral episodes; of course we can. Even though an episode could have a low prior due to low emotional intensity, it could still be selected due to high likelihood (recalling cereal at the breakfast table would have a low prior due to not being emotionally intense, but in a context where an agent’s intention is to remember their breakfast table, the likelihood would rise significantly). The claim is rather that, ceteris paribus, emotionally intense episodes are more likely to be chosen than non-emotionally intense ones, due to emotional intensity determining priors.

5.3 Improving imagination

This computational account explains how control as selection could be implemented sub-personally, thus solving half of the problem of the cognitive architecture of imagination necessary to explain how it could be a skill. As it turns out, improvability can easily be accommodated in this architecture too, by appealing to the agent’s ability to change the likelihoods depending on their epistemic feelings. To clarify, my suggestion is that when an imagining has been generated, an agent can evaluate whether they think this imagining is realistic or not depending on their epistemic feelings about the imagining, such as a feeling of certainty or uncertainty (Arango-Muñoz, 2014; Michaelian & Arango-Muñoz, 2014). Feelings of certainty/uncertainty have been shown to be reliable, and could thus be taken as an indicator that the imagining is indeed accurate or inaccurate (Fernandez Cruz et al., 2016). If someone imagines a scenario and has a feeling of uncertainty about it, this could trigger a thought process by which they can realise what is potentially wrong about the imagining. This in turn can trigger a change in the values of the likelihoods of the memory representations, such that when they try to imagine it the next time, these likelihoods have been set differently, and as a result, a different imagining is generated. For example, Matilda might imagine that she would enjoy a holiday in Croatia during the spring despite the rain, but have a feeling of uncertainty about it. When reminded by her friend of how it feels to have soaked shoes for a week, she realises why she was feeling uncertain about it, and revises her imagining. This points to the same dynamic process of imagining scenarios, evaluating imaginings, and re-imagining scenarios that Pezzulo and Castelfranchi (2009) highlight. It also illustrates one of the ways in which one can practise to become better at imagining, which involves improving the cognitive mechanism of control using feedback from personal-level epistemic feelings. Having some kind of feedback loop to adjust likelihoods has proven useful in improving other Bayesian systems, as in machine learning, and it therefore looks viable as a way of improving imagination too (Gopnik & Tenenbaum, 2007). The account can thus also explain improvement sub-personally by appealing to the likelihoods being changed, and hence a new imagining being generated.
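The imagine, evaluate, and re-imagine cycle described above can be sketched as a simple loop. This is a toy illustration only: the text does not specify an update rule, so the one used here (resetting a single likelihood after feedback) is an assumption, as are the memory labels, the numerical values, the boolean `feels_uncertain` standing in for a personal-level epistemic feeling, and the function names `imagine` and `revise`.

```python
def imagine(priors, likelihoods, k=2):
    """Select the k memory contents with the highest joint probability."""
    joint = {m: likelihoods[m] * priors[m] for m in priors}
    return sorted(joint, key=joint.get, reverse=True)[:k]


def revise(likelihoods, memory, new_value):
    """Return a copy of the likelihoods with one value reset after feedback."""
    updated = dict(likelihoods)
    updated[memory] = new_value
    return updated


# HYPOTHETICAL memories and values for Matilda's holiday imagining.
priors = {"SunnyBeach": 0.5, "RainyWalk": 0.3, "SoakedShoes": 0.2}
likelihoods = {"SunnyBeach": 0.7, "RainyWalk": 0.6, "SoakedShoes": 0.1}

# First attempt: the soaked-shoes memory is barely drawn on.
first = imagine(priors, likelihoods)

# Stand-in for the feeling of uncertainty and the friend's reminder.
feels_uncertain = True
if feels_uncertain:
    # ASSUMED update rule: the relevant memory's likelihood is raised.
    likelihoods = revise(likelihoods, "SoakedShoes", 0.95)

# Second attempt: the changed likelihood yields a different selection.
second = imagine(priors, likelihoods)

print("First imagining draws on: ", first)
print("Second imagining draws on:", second)
```

The priors are left untouched throughout, which reflects the suggestion in the text that improvement works through the likelihoods rather than through emotional intensity.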

Before concluding, I will address a worry that the reader might have about the tractability of my account. The reader might think that the account looks computationally intractable, since for any imagining, one’s cognitive mechanism would need to assign a prior and likelihood to every single one of thousands of contents in memory representations. This simply looks like too big a task and it does not seem plausible that this is what goes on sub-personally. There are two different ways to deal with this concern. One could either simplify the Bayesian generation, or one could say that memory representations are stored in a more economical way. I think that both ways are viable, and that combining them overcomes the problem. Firstly, Bayesian accounts can be simplified using variational approximation methods, which are widely used in machine learning and cognitive science (see, for example, Smith, 2021).Footnote 26 Secondly, contents in memory representations are in fact stored schematically, meaning that only memories which relate to certain topics would need to be assigned values (Bartlett, 1932; Brewer & Treyens, 1981), and it has been argued that pre-existing knowledge in the form of schemas also guides the selection and integration of relevant episodic and semantic memory content when imagining something (Irish et al., 2012; Addis, 2018). It is thus plausible that only the content of memory representations within a certain schema is assigned values. If I intend to imagine an office, only memory contents in the office-schema are assigned values and recombined into imaginings, whereas contents in a holiday-schema are not. Which schemas are selected could be accounted for by the cognitive mechanism’s sensitivity to the semantic content of the agent’s intention. If the intention includes ‘holiday’, the cognitive mechanism is sensitive to this.Footnote 27 Finally, another reason to think that this actually is a computationally tractable account is that Bayesian models very similar to this have already been developed in computer science for generating novel virtual worlds from a limited set of ‘memory representations’ (Fisher et al., 2012; Davies, 2020). I thus take it that this is a computationally tractable account of content generation for imagination.
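The schema-based restriction can be sketched as a filtering step that runs before any probabilities are compared. This is a simplified illustration under stated assumptions: schema membership is modelled as a single label per memory, sensitivity to the intention is modelled as plain word matching, and the class `Memory`, the functions `candidates` and `select`, and all values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Memory:
    name: str
    schema: str        # ASSUMED: one schema label per memory
    prior: float
    likelihood: float


def candidates(memories, intention):
    """Keep only memories whose schema label occurs in the intention."""
    words = set(intention.lower().split())
    return [m for m in memories if m.schema in words]


def select(memories, intention, k=2):
    """Score only the schema-relevant memories and keep the top k."""
    pool = candidates(memories, intention)
    ranked = sorted(pool, key=lambda m: m.prior * m.likelihood, reverse=True)
    return [m.name for m in ranked[:k]]


# HYPOTHETICAL memory store with two schemas.
store = [
    Memory("Croatia", "holiday", 0.4, 0.8),
    Memory("Venice", "holiday", 0.4, 0.6),
    Memory("DeskAtWork", "office", 0.1, 0.9),
    Memory("Meeting", "office", 0.1, 0.7),
]

intention = "imagine a spring holiday in Croatia"
pool = candidates(store, intention)
chosen = select(store, intention)

print("Memories scored:", [m.name for m in pool])
print("Memories selected:", chosen)
```

Only the memories in the matching schema are ever scored, so the cost of a single imagining grows with the size of the relevant schema rather than with the size of the whole memory store.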

Importantly, this is a novel account of the selection mechanism, and I have provided indirect evidence for its plausibility. Though it has not yet been tested directly, it makes a number of interesting testable predictions which will generate future research. For example, my account predicts that an imagining which is accompanied by a feeling of uncertainty is less likely to be accurate. This could easily be tested by a method similar to the one used by Fernandez Cruz et al. (2016), who investigated the reliability of epistemic feelings with respect to solving mathematical problems. To test imagination, subjects could for example be asked to imagine how they would feel in various hypothetical scenarios, and subjective uncertainty about imaginings could be measured. Previous research suggests that we are often inaccurate when imagining our future emotions (Wilson & Gilbert, 2000), and together with these findings, my account predicts that an inaccurate imagining about a future emotion would be accompanied by a higher degree of subjective uncertainty. My account also predicts that we learn to become better imaginers by cycling through imaginings and evaluating them in this way, which could be tested by using a qualitative methodology. As with other Bayesian accounts, it could also be modelled using computer simulations, and running computer simulations could tell us more about how this process is optimised.

6 Conclusions

In this paper, I first provided empirical support for the claim that imagination is a skill. I identified improvability by practice and control as two hallmarks of skill, and I argued that imagination meets both. Specifically, I showed that subjects can improve their visual rotation performance by practising, and that imagination is intentionally controlled by employing the Reality Constraint and Change Constraint. After establishing that imagination is a skill, I asked how this could possibly be implemented in a cognitive architecture. Here, I argued that plausibly there is a Bayesian selection mechanism that selects content from memory representations to generate imaginings. This is not only a valuable addition to the literature on skill, but it also contributes to the literature on cognitive architecture by further developing the selection mechanism posited by Pezzulo and Castelfranchi (2009), and it creates new avenues for further research on skilled imagination.

Availability of data and material

N/A.

Code availability

N/A.

Notes

I use ‘imagination’ and ‘the capacity to imagine’ interchangeably in sentences like ‘imagination is a skill’ and ‘the capacity to imagine is a skill’.

See Kind (2016) for a longer discussion of Grandin and Tesla.

See Zvyagintsev et al. (2013) for an overview and details of where visual imagination and auditory imagination are implemented.

Note that my argument does not hinge on the primary/secondary distinction; it only requires that the systems have multiple functions, which Predictive Processing accounts do not dispute.

Note that this is not to say that one kind of imagination is automatically improved when another kind is improved. On the contrary, a person who practises auditory imagination will improve their ability to imagine sounds and melodies, but not their ability to visualise complex scenes or mentally rotate objects, as demonstrated by Habacha et al. (2014). Jakubowski (2020) provides a recent overview of auditory imagery in music, which discusses relevant issues.

Kind also rightly acknowledges that imagination can be controlled, but does not treat this at length and offers no empirical support (Kind, 2020a, 339).

Ideas of constraining imagination have also been developed elsewhere, see Williamson (2016).

When the capacity to imagine matures is a debated topic, and some suggest that it could be as early as 7 months, as demonstrated by violation-of-expectation studies (Onishi & Baillargeon, 2005; Scott & Baillargeon, 2017) and motor planning studies (Claxton et al., 2003). For a general discussion of the development of imagination, see Suddendorf & Redshaw (2013).

It is worth noting that language could also be a confounding factor here, but no non-verbal paradigms have yet been developed; this is a question for future research.

This is uncontroversially assuming that capacities developed in childhood persist to adulthood, rather than deteriorate. But this is not to say that empirical research should not be conducted on adults as well, since this could further shed light on how and when constraints are employed.

Someone might worry that restricting the discussion to control for mental actions excludes an important area of imagination, namely prop-based imagination (Walton, 1990). Prop-based imagination is very common in children, who often imagine everyday objects as being something else (e.g. a stick as a sword), and it can also be seen in adults, such as when using pint glasses to represent football players on a field. I acknowledge that this is an important area of imagination, but due to space constraints in this paper, my discussion will be restricted to non-prop-based imagination. Importantly, the account I develop of the selection mechanism where content is selected from episodic/semantic memory does not preclude extending the cognitive architecture to explain how content can also be selected from perception in certain cases, as in prop-based imagination. My cognitive architecture is not a comprehensive one, and future research should work on extending the account to include prop-based imagination. I am grateful to an anonymous reviewer for raising this point.

I am grateful to two anonymous reviewers for pressing me on a number of issues in this section.

Jones & Love (2011) have termed this approach, which I endorse, ‘Bayesian Enlightenment’.

This example is adapted from Perfors et al. (2011). Though a causal link need not be present between the hypothesis and evidence for there to be a probabilistic dependence between the two, I assume along with Perfors et al. that there is indeed such a link present here. Thanks to an anonymous reviewer for pointing this out.

See Williams (2021) for a discussion of generative Bayesian accounts in imagination.

Note that this proposal does not entail that emotionally neutral episodes cannot be recalled/imagined, as priors alone do not determine the probability. I thank an anonymous reviewer for raising this issue.

This is not true across the board, as suppression mechanisms can sometimes work to suppress negatively valenced episodes, especially if a person has experienced trauma (Ryckman et al., 2018). However, the cases I am concerned with are not cases of trauma, so I assume that no suppression mechanisms are involved here. Thanks to an anonymous reviewer for raising this interesting point.

Some other possible options for setting priors include recency and frequency of recall (Talarico et al., 2004).

It might be helpful to think of the agent as ‘priming’ certain memory representations here. When priming a memory, a subject is presented with a certain cue, and this makes it more likely that they recall memories that are related to the cue (e.g. mouse-cheese) (McNamara, 2005).

Interestingly, there are actually two different probabilities at play here. The probability of Matilda generating an accurate imagining is an objective probability, whereas the probabilities assigned by the selection mechanism to content are subjective probabilities. Subjective probabilities are probabilities represented by a vehicle, whereas objective probabilities are probabilities of representing something. Like most Bayesian theories in cognitive science, the account I have given is concerned with subjective probability. However, if we are interested in modelling behaviour, we can also infer an objective probability from the subjective probabilities, as the subjective probabilities assigned by the selection mechanism bear on the probability that Matilda generates an accurate imagining. See Hájek (2019) for a discussion. I thank an anonymous reviewer for raising this issue.

I am grateful to three anonymous reviewers for pressing me on this point.

This is similar to the linguistic cues discussed by Jones and Wilkinson (2020).

References

Addis, D. R. (2018). Are episodic memories special? On the sameness of remembered and imagined event simulation. Journal of the Royal Society of New Zealand, 48, 64–88. https://doi.org/10.1080/03036758.2018.1439071

Addis, D. R., Pan, L., Vu, M. A., Laiser, N., & Schacter, D. L. (2009). Constructive episodic simulation of the future and the past: Distinct subsystems of a core brain network mediate imagining and remembering. Neuropsychologia, 47, 2222–2238. https://doi.org/10.1016/j.neuropsychologia.2008.10.026

Addis, D. R., Wong, A. T., & Schacter, D. L. (2007). Remembering the past and imagining the future: Common and distinct neural substrates during event construction and elaboration. Neuropsychologia, 45, 1363–1377. https://doi.org/10.1016/j.neuropsychologia.2006.10.016

Anderson, J. R. (1982). Acquisition of Cognitive Skill. Readings in Cognitive Science: A Perspective from Psychology and Artificial Intelligence, 89, 362–380. https://doi.org/10.1016/B978-1-4832-1446-7.50032-7

Arango-Muñoz, S. (2014). The nature of epistemic feelings. Philosophical Psychology, 27, 193–211. https://doi.org/10.1080/09515089.2012.732002

Atance, C. M., & O’Neill, D. K. (2005). Preschoolers’ talk about future situations. First Language, 25, 5–18. https://doi.org/10.1177/0142723705045678

Atance, C., & Meltzoff, A. (2005). My future self: Young children’s ability to anticipate and explain future states. Cognitive Development, 20, 1–341. https://doi.org/10.1038/jid.2014.371

Axelrod, V., Rees, G., & Bar, M. (2017). The default network and the combination of cognitive processes that mediate self-generated thought. Nature Human Behaviour, 1, 896–910. https://doi.org/10.1038/s41562-017-0244-9

Baddeley, A. D., & Andrade, J. (2000). Working memory and the vividness of imagery. Journal of Experimental Psychology: General, 129, 126–145. https://doi.org/10.1037/0096-3445.129.1.126

Balcerak Jackson, M. (2018). Justification by Imagination. In Perceptual Imagination and Perceptual Memory, ed. Fiona Macpherson and Fabian Dorsch. Oxford University Press

Barlassina, L., & Gordon, R. M. (2017). Folk Psychology as Mental Simulation. The Stanford Encyclopedia of Philosophy

Bartlett, F. C. (1932). Remembering: A Study in Experimental and Social Psychology. Cambridge University Press

Beauchamp, M. R., Bray, S. R., & Albinson, J. G. (2002). Pre-competition imagery, self-efficacy and performance in collegiate golfers. Journal of Sports Sciences, 20, 697–705. https://doi.org/10.1080/026404102320219400

Benoit, R. G., & Schacter, D. L. (2015). Specifying the core network supporting episodic simulation and episodic memory by activation likelihood estimation. Neuropsychologia, 75, 450–457. https://doi.org/10.1016/j.neuropsychologia.2015.06.034

Brewer, W. F., & Treyens, J. C. (1981). Role of schemata in memory for places. Cognitive Psychology, 13, 207–230. https://doi.org/10.1016/0010-0285(81)90008-6

Brown, R., & Kulik, J. (1977). Flashbulb Memories. Cognition, 5, 73–99. https://doi.org/10.1016/B978-0-08-097086-8.51035-6.

Clark, A. (2013). Whatever next? Predictive brains, situated agents, and the future of cognitive science. Behavioral and Brain Sciences, 36, 181–204. https://doi.org/10.1017/S0140525X12000477

Claxton, L. J., Keen, R., & McCarty, M. E. (2003). Evidence of motor planning in infant reaching behavior. Psychological Science, 14, 354–356. https://doi.org/10.1111/1467-9280.24421

Damasio, A. (1990). Face Agnosia And The Neural Substrates Of Memory. Annual Review of Neuroscience, 13, 89–109. https://doi.org/10.1146/annurev.neuro.13.1.89

Davies, J. (2020). Artificial Intelligence and Imagination. In A. Abraham (Ed.), The Cambridge Handbook of the Imagination (pp. 162–172). Cambridge University Press. Cambridge Handbooks in Psychology. https://doi.org/10.1017/9781108580298.011

DeKeyser, R. (2015). Skill Acquisition Theory. In B. VanPatten, & J. Williams (Eds.), Theories in Second Language Acquisition (Second Edition). New York: Taylor & Francis

Dorsch, F. (2012). The Unity of Imagining. De Gruyter

Dreyfus, H. L. (2007). The return of the myth of the mental. Inquiry: An Interdisciplinary Journal of Philosophy, 50, 352–365. https://doi.org/10.1080/00201740701489245

Dreyfus, H. L. (2002). Intelligence without representation – Merleau-Ponty’s critique of mental representation. Phenomenology and the Cognitive Sciences, 1

Driskell, J. E., Copper, C., & Moran, A. (1994). Does mental practice enhance performance? Journal of Applied Psychology, 79, 481–492. https://doi.org/10.1037//0021-9010.79.4.481

Ebert, J. E. J., Gilbert, D. T., & Wilson, T. D. (2009). Forecasting and Backcasting: Predicting the Impact of Events on the Future. Journal of Consumer Research, 36, 353–366. https://doi.org/10.1086/598793

Fernandez Cruz, A. L., Arango-Muñoz, S., & Volz, K. G. (2016). Oops, scratch that! Monitoring one’s own errors during mental calculation. Cognition, 146, 110–120. https://doi.org/10.1016/j.cognition.2015.09.005

Fisher, M., Ritchie, D., Savva, M., Funkhouser, T., & Hanrahan, P. (2012). Example-based synthesis of 3D object arrangements. ACM Transactions on Graphics

Fridland, E. (2014). They’ve lost control: Reflections on skill. Synthese, 191, 2729–2750. https://doi.org/10.1007/s11229-014-0411-8

Fulford, J., Milton, F., Salas, D., Smith, A., Simler, A., Winlove, C., & Zeman, A. (2018). The neural correlates of visual imagery vividness – An fMRI study and literature review. Cortex, 105, 26–40. https://doi.org/10.1016/j.cortex.2017.09.014

Geman, S., & Geman, D. (1984). Stochastic Relaxation, Gibbs Distributions, and the Bayesian Restoration of Images. IEEE Transactions on Pattern Analysis and Machine Intelligence, PAMI-6, 721–741. https://doi.org/10.1109/TPAMI.1984.4767596

Gilbert, D. T., & Wilson, T. D. (2007). Prospection: Experiencing the future. Science, 317, 1351–1354. https://doi.org/10.1126/science.1144161

Goldman, A. (2006). Simulating Minds: The Philosophy, Psychology, and Neuroscience of Mindreading. Oxford University Press USA

Gopnik, A., & Tenenbaum, J. B. (2007). Bayesian networks, Bayesian learning and cognitive development. Developmental Science, 10, 281–287. https://doi.org/10.1111/j.1467-7687.2007.00584.x

Guillot, A. (2020). Neurophysiological Foundations and Practical Applications of Motor Imagery. In A. Abraham (Ed.), The Cambridge Handbook of the Imagination (pp. 207–226). Cambridge University Press. Cambridge Handbooks in Psychology. https://doi.org/10.1017/9781108580298.014

Habacha, H., Molinaro, C., & Dosseville, F. (2014). Effects of gender, imagery ability, and sports practice on the performance of a mental rotation task. American Journal of Psychology, 127, 313–323. https://doi.org/10.5406/amerjpsyc.127.3.0313

Hájek, A. (2019). Interpretations of Probability. In E. N. Zalta (Ed.), The Stanford Encyclopedia of Philosophy

Hall, C. R., Mack, D. E., Paivio, A., & Hausenblas, H. A. (1998). Imagery use by athletes: Development of the Sport Imagery Questionnaire. International Journal of Sport Psychology, 29, 73–89

Hallford, D. J., Austin, D. W., Takano, K., & Raes, F. (2018). Psychopathology and episodic future thinking: A systematic review and meta-analysis of specificity and episodic detail. Behaviour Research and Therapy, 102, 42–51. https://doi.org/10.1016/j.brat.2018.01.003

Hohwy, J. (2020). New directions in predictive processing. Mind and Language, 35, 209–223. https://doi.org/10.1111/mila.12281

Irish, M., Addis, D. R., Hodges, J. R., & Piguet, O. (2012). Considering the role of semantic memory in episodic future thinking: Evidence from semantic dementia. Brain, 135, 2178–2191. https://doi.org/10.1093/brain/aws119

Jakubowski, K. (2020). Musical Imagery. In A. Abraham (Ed.), The Cambridge Handbook of the Imagination (pp. 187–206). Cambridge University Press. https://doi.org/10.1017/9781108580298.013

Jones, M., & Love, B. C. (2011). Bayesian fundamentalism or enlightenment? On the explanatory status and theoretical contributions of Bayesian models of cognition. Behavioral and Brain Sciences, 34, 169–188. https://doi.org/10.1017/S0140525X10003134

Jones, M., & Wilkinson, S. (2020). From Prediction to Imagination. In A. Abraham (Ed.), The Cambridge Handbook of the Imagination. Cambridge University Press

Jusczyk, P. W. (2003). Chunking language input to find patterns. Early category and concept development: Making sense of the blooming, buzzing confusion (pp. 27–49). New York, NY, US: Oxford University Press

Kanwisher, N., McDermott, J., & Chun, M. M. (1997). The Fusiform Face Area: A Module in Human Extrastriate Cortex Specialized for Face Perception. The Journal of Neuroscience, 17, 4302–4311

Kind, A. (2016). Imagining Under Constraints. Knowledge Through Imagination. https://doi.org/10.1093/acprof:oso/9780198716808.003.0007

Kind, A. (2020a). The Skill of Imagination. In E. Fridland, & C. Pavese (Eds.), Routledge Handbook of Philosophy of Skill and Expertise (pp. 335–346)

Kind, A. (2020b). What imagination teaches. In J. Schwenkler, & E. Lambert (Eds.), Transformative Experience. Oxford University Press

Kirchhoff, M. D. (2018). Predictive processing, perceiving and imagining: Is to perceive to imagine, or something close to it? Philosophical Studies, 175, 751–767. https://doi.org/10.1007/s11098-017-0891-8

Kosslyn, S. M. (1994). Image and Brain: The Resolution of the Imagery Debate. MIT Press

Kosslyn, S. M., Thompson, W., & Ganis, G. (2006). The Case for Mental Imagery. Oxford University Press

Levine, B., Svoboda, E., Hay, J. F., Winocur, G., & Moscovitch, M. (2002). Aging and autobiographical memory: Dissociating episodic from semantic retrieval. Psychology and Aging, 17, 677–689. https://doi.org/10.1037/0882-7974.17.4.677

Madore, K. P., Gaesser, B., & Schacter, D. L. (2014). Constructive episodic simulation: Dissociable effects of a specificity induction on remembering, imagining, and describing in young and older adults. Journal of Experimental Psychology: Learning, Memory, and Cognition, 40, 609–622. https://doi.org/10.1037/a0034885

Madore, K. P., Jing, H. G., & Schacter, D. L. (2016). Divergent creative thinking in young and older adults: Extending the effects of an episodic specificity induction. Memory and Cognition, 44, 974–988. https://doi.org/10.3758/s13421-016-0605-z

McColgan, K. L., & McCormack, T. (2008). Searching and Planning: Young Children’s Reasoning about Past and Future Event Sequences. Child Development, 79, 1477–1497

McNamara, T. P. (2005). Semantic Priming: Perspectives from memory and word recognition. New York: Psychology Press Taylor and Francis Group

Michaelian, K., & Arango-Muñoz, S. (2014). Epistemic feelings, epistemic emotions: Review and introduction to the focus section. Philosophical inquiries, 2(1), 97–122

Munroe, K. J., Giacobbi, P. R., Hall, C., & Weinberg, R. S. (2000). The four Ws of imagery use: Where, when, why, and what. Sport Psychologist, 14, 119–137. https://doi.org/10.1123/tsp.14.2.119

Munzert, J., & Lorey, B. (2013). Motor and visual imagery in sports. Multisensory Imagery, 319–341. https://doi.org/10.1007/978-1-4614-5879-1_17

Norris, D. (2006). The Bayesian reader: Explaining word recognition as an optimal Bayesian decision process. Psychological Review, 113, 327–357. https://doi.org/10.1037/0033-295X.113.2.327

Norris, D., & Cutler, A. (2021). More why, less how: What we need from models of cognition. Elsevier B.V.

O’Craven, K. M., & Kanwisher, N. (2000). Mental Imagery of Faces and Places Activates Corresponding Stimulus-Specific Brain Regions. Journal of Cognitive Neuroscience, 12, 1013–1023

Onishi, K. K., & Baillargeon, R. (2005). Do 15-month-old infants understand false beliefs? Science, 308, 255–258. https://doi.org/10.1126/science.1107621

Paul, L. A. (2004). Transformative Experience. New York: Oxford University Press

Pearson, J. (2019). The human imagination: the cognitive neuroscience of visual mental imagery. Nature Reviews Neuroscience, 20, 624–634. https://doi.org/10.1038/s41583-019-0202-9

Pearson, J., Naselaris, T., Holmes, E. A., & Kosslyn, S. M. (2015). Mental Imagery: Functional Mechanisms and Clinical Applications. Trends in Cognitive Sciences, 19, 590–602. https://doi.org/10.1016/j.tics.2015.08.003

Perfors, A., Tenenbaum, J. B., Griffiths, T. L., & Xu, F. (2011). A tutorial introduction to Bayesian models of cognitive development. Cognition, 120, 302–321. https://doi.org/10.1016/j.cognition.2010.11.015

Pezzulo, G., & Castelfranchi, C. (2009). Thinking as the control of imagination: A conceptual framework for goal-directed systems. Psychological Research, 73, 559–577. https://doi.org/10.1007/s00426-009-0237-z

Provost, A., Johnson, B., Karayanidis, F., Brown, S. D., & Heathcote, A. (2013). Two routes to expertise in mental rotation. Cognitive Science, 37, 1321–1342. https://doi.org/10.1111/cogs.12042

Ryckman, N. A., Addis, D. R., Latham, A. J., & Lambert, A. J. (2018). Forget about the future: effects of thought suppression on memory for imaginary emotional episodes. Cognition and Emotion, 32, 200–206. https://doi.org/10.1080/02699931.2016.1276049

Schacter, D. L., & Addis, D. R. (2007). The cognitive neuroscience of constructive memory: Remembering the past and imagining the future. Philosophical Transactions of the Royal Society B: Biological Sciences, 362, 773–786. https://doi.org/10.1098/rstb.2007.2087

Schacter, D. L., & Addis, D. R. (2020). Memory and Imagination: Perspectives on Constructive Episodic Simulation. The Cambridge Handbook of the Imagination, 111–131. https://doi.org/10.5860/choice.50-4715

Schacter, D. L., Addis, D. R., & Buckner, R. L. (2007). Remembering the past to imagine the future: The prospective brain. Nature Reviews Neuroscience, 8, 657–661. https://doi.org/10.1038/nrn2213

Schacter, D. L., Addis, D. R., Hassabis, D., Martin, V. C., Spreng, R. N., & Szpunar, K. K. (2012). The Future of Memory: Remembering, Imagining, and the Brain. Neuron, 76, 677–694. https://doi.org/10.1016/j.neuron.2012.11.001

Scott, R. M., & Baillargeon, R. (2017). Early False-Belief Understanding. Trends in Cognitive Sciences, 21, 237–249. https://doi.org/10.1016/j.tics.2017.01.012

Shepard, R. N., & Metzler, J. (1971). Mental rotation of three-dimensional objects. Science, 171(3972), 701–703. https://doi.org/10.1126/science.171.3972.701

Shultz, T. R. (2007). The Bayesian revolution approaches psychological development. Developmental Science, 10, 357–364. https://doi.org/10.1111/j.1467-7687.2007.00588.x

Smith, R. (2021). A Step-by-Step Tutorial on Active Inference and its Application to Empirical Data. https://doi.org/10.31234/osf.io/b4jm6

Stanley, J., & Krakauer, J. W. (2013). Motor skill depends on knowledge of facts. Frontiers in Human Neuroscience, 7

Stanley, J., & Williamson, T. (2001). Knowing How. Journal of Philosophy, 98, 411–444

Suddendorf, T., & Busby, J. (2005). Making decisions with the future in mind: Developmental and comparative identification of mental time travel. Learning and Motivation, 36, 110–125. https://doi.org/10.1016/j.lmot.2005.02.010

Suddendorf, T., & Corballis, M. C. (2007). The evolution of foresight: What is mental time travel, and is it unique to humans? Behavioral and Brain Sciences, 30, 299–351. https://doi.org/10.1017/S0140525X07001975

Suddendorf, T., & Redshaw, J. (2013). The development of mental scenario building and episodic foresight. Annals of the New York Academy of Sciences, 1296, 135–153. https://doi.org/10.1111/nyas.12189

Talarico, J. M., LaBar, K. S., & Rubin, D. C. (2004). Emotional intensity predicts autobiographical memory experience. Memory and Cognition, 32, 1118–1132. https://doi.org/10.3758/BF03196886

Walker, C. M., & Gopnik, A. (2013). Causality and Imagination. In M. Taylor (Ed.), The Oxford Handbook of the Development of Imagination

Walton, K. L. (1990). Mimesis as Make-Believe. Cambridge, MA: Harvard University Press

Ward, A. M. (2016). A critical evaluation of the validity of episodic future thinking: A clinical neuropsychology perspective. Neuropsychology, 30, 887–905. https://doi.org/10.1037/neu0000274

Williams, D. (2021). Imaginative Constraints and Generative Models. Australasian Journal of Philosophy, 99, 68–82. https://doi.org/10.1080/00048402.2020.1719523

Williams, S. E., & Cumming, J. (2014). The Sport Imagery Ability Questionnaire Manual. University of Birmingham, 1–7. https://doi.org/10.13140/RG.2.1.1608.6565

Williamson, T. (2016). Knowing by Imagining. Knowledge Through Imagination, 113–123. https://doi.org/10.1093/acprof:oso/9780198716808.003.0005

Wilson, T. D., & Gilbert, D. T. (2000). Affective Forecasting. Advances in Experimental Social Psychology, 35, 345–411. https://doi.org/10.1016/j.pain.2011.02.015

Wilson, T. D., Meyers, J., & Gilbert, D. T. (2003). “How Happy Was I, Anyway?” A Retrospective Impact Bias. Social Cognition, 21, 421–446. https://doi.org/10.1521/soco.21.6.421.28688

Wu, W. (2016). Experts and Deviants: The Story of Agentive Control. Philosophy and Phenomenological Research, 92

Young, A. W., Humphreys, G. W., Riddoch, M. J., Hellawell, D. J., & de Haan, E. H. F. (1994). Recognition impairments and face imagery. Neuropsychologia, 32, 693–702. https://doi.org/10.1016/0028-3932(94)90029-9

Zacks, J. M. (2008). Neuroimaging studies of mental rotation: A meta-analysis and review. Journal of Cognitive Neuroscience, 20, 1–19. https://doi.org/10.1162/jocn.2008.20013

Zvyagintsev, M., Clemens, B., Chechko, N., Mathiak, K. A., Sack, A. T., & Mathiak, K. (2013). Brain networks underlying mental imagery of auditory and visual information. European Journal of Neuroscience, 37, 1421–1434. https://doi.org/10.1111/ejn.12140

Acknowledgements

For discussions and comments on drafts of this paper, I am grateful to Luca Barlassina, Dorothea Debus, Dominic Gregory, Will Hornett, James Lloyd, Alice Murphy, and six anonymous reviewers. I am also grateful to The White Rose College of the Arts and Humanities (WRoCAH) for funding support.

Funding

This research was funded by The White Rose College of the Arts and Humanities (WRoCAH), grant number 169547374.

Ethics declarations

Conflicts of interest/Competing interests

N/A.

Ethics approval

N/A.

Consent to participate

N/A.

Consent for publication

N/A.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Blomkvist, A. Imagination as a skill: A Bayesian proposal. Synthese 200, 119 (2022). https://doi.org/10.1007/s11229-022-03550-z
