Clearly, science, whose aim is to comprehend nature, must assume the comprehensibility of nature and must reason and investigate according to this presupposition until incontrovertible facts may force it to recognize its limits.

(Helmholtz 1847, p. 4)

Abstract

The relativistic revolution led to varieties of neo-Kantianism in which constitutive principles define the object of scientific knowledge in a domain-dependent and historically mutable manner. These principles are a priori insofar as they are necessary premises for the formulation of empirical laws in a given domain, but they lack the self-evidence of Kant’s a priori and they cannot be identified without prior knowledge of the theory they purport to frame. In contrast, the rationalist endeavors of a few masters of theoretical physics have led to comprehensibility conditions that are easily admitted in a given domain and yet suffice to generate the theory of this domain. The purpose of this essay is to compare these two kinds of relativized a priori, to discuss the nature of the comprehensibility conditions, and to demonstrate their effectiveness in a modular conception of physical theories.

In fundamental theories of physics, we may distinguish between a generic framework and specific laws depending on the subclass of systems considered. For instance, in Newtonian mechanics we have a framework defined by Newton’s three laws of motion and specific laws of force for gravitation, (corpuscular) optics, etc.; in quantum mechanics, we have a framework defined by the Hilbert space of states and the Schrödinger equation on the one hand and specific choices of the Hamiltonian operator on the other hand. Different frameworks may have very different mathematical structures and thus define different sorts of objects, both mathematically and physically. It is therefore tempting to place the framework of a theory at a more fundamental epistemological level than the rest of the theory. For instance, the framework could be an expression of basic constraints for the possibility of experience.

This transcendental view is the one Immanuel Kant propounded for the Newtonian framework in the late eighteenth century. In his system, Newton’s laws result from the application of rigidly and a priori determined categories of our understanding. This view is of course incompatible with the advent of relativistic mechanics and quantum mechanics, which require new frameworks. Historically, there were many attempts to accommodate the Kantian doctrine to the relativity of frameworks.Footnote 1 Two classes of attempts will be considered in this essay. In the first class, the rationalist ambition to derive the possible frameworks by a priori means is given up; their constitutive principles are identified by analysis of the accepted theories of physics; rational justification is limited to the historical replacement of a given framework by another. The accent here is on the way theoretical frameworks constitute the objects and processes of the theory. In the second kind of approach, the accent is on the comprehensibility of the investigated domain: an attempt is made to identify natural conditions of comprehensibility from which the relevant framework derives. Compared to Kant’s transcendental idealism, the implied rationalism is moderate since the conditions of comprehensibility are tentative, local, and refutable. The purpose of the present essay is to compare these two varieties of neo-Kantianism and to argue that the second variety solves some of the difficulties of the first.Footnote 2

In Sect. 1, the constitutive principles of the first variety of neo-Kantianism are illustrated through three examples: Ernst Cassirer’s “forms of knowledge,” the young Hans Reichenbach’s “principles of coordination,” and Michael Friedman’s “relativized a priori.” The first two examples were responses to the challenge of Albert Einstein’s theory of relativity around 1920. Friedman’s much more recent proposal builds on this early neo-Kantianism, on Rudolf Carnap’s linguistic frameworks, and on Thomas Kuhn’s paradigms. It is nowadays the most persuasive and most influential counter-reaction to post-Quinean epistemological holism. Cassirer’s approach is singular in its Marburgian denial of any counterpart to Kant’s sensibility. His forms of knowledge are preconditions of measurement conceived within a purely relational and progressively unified structure, with no pre-conceptual given in perception. In contrast, for Reichenbach and for Friedman there are no constitutive principles without coordination between the theory and the empirically given. While Cassirer’s neglect of coordination implies a certain vagueness of his forms of knowledge, Reichenbach’s and Friedman’s first notions of coordination are so rudimentary that they easily degenerate into mere conventions. This is the reason why Friedman, in more recent writings, endows the target of the coordination with a complex adaptable structure including experimental, technological, and socio-cultural dimensions.Footnote 3

This recent evolution of Friedman’s views suggests that the identification of constitutive frameworks should be subordinated to a sufficiently realistic account of the concrete application of physical theories. Section 2 is a sketch of the account that I proposed a few years ago after inspecting many historical cases. In this conception, physical theories have an evolving substructure that mediates between their symbolic universe and concrete experiments. This substructure implies interpretive schemes acting as blueprints of conceivable experiments, and modular connections with other theories. This modular structure is shown to be essential to the application, comparison, construction, and communication of theories. It also enables us to construct descriptive schemes for experiments in a given domain of physics without yet knowing the relevant theory.Footnote 4

This last point is what permits a sharp formulation of the comprehensibility conditions discussed in Sect. 3. The “comprehensibility of nature” (Begreiflichkeit der Natur) is the expression Hermann Helmholtz used in the nineteenth century to characterize natural but tentative regularity requirements for nature. This could mean, in his earliest works, a Laplacian and Kantian reduction of physical phenomena to central forces acting in pairs of material points, or it could more broadly mean lawfulness (causality) and the measurability of basic quantities.Footnote 5 In the same vein, I define the comprehensibility conditions as specific implementations of ideals of causality, measurability, and correspondence (with earlier theories) in a given domain of experience. From Greek statics to general relativity and quantum mechanics, there were many attempts to prove that assumptions of this kind completely define the theoretical framework that fits the given domain of experience. Two strikingly successful examples are given in this section: Helmholtz’s derivation of the locally Euclidean character of physical space, and Lagrange’s derivation of the general principles of statics. For the history and criticism of similar derivations of other important theories of physics, I refer the reader to my Physics and necessity.Footnote 6

Lastly, I compare constitutive principles and comprehensibility conditions. Whereas the former are obtained by inspection of a theory in its usual presentation, the latter are obtained by implementing regulative ideas of causality, measurability, and correspondence. Intertheoretical relations and epistemological considerations play an important role for supporters of both views. But they occur at different moments. In the first view, they serve to rationalize the transition between successive theories. In the second view, they provide the modular structure and the regulative principles that orient our search for comprehensibility conditions. Most strikingly, comprehensibility principles have a naturalness and a theory-generating power that elude constitutive principles. The conjunction of these two qualities may seem paradoxical: how could easily accepted principles have so far-reaching structural implications? The answer lies in the circumstance that comprehensibility principles are not applied in an epistemological vacuum. They presuppose a general conception of physical theory in which comprehensibility can take a mathematically and empirically precise form.

1 Constitutive principles from Kant to Friedman

1.1 The Kantian heritage

In Kant’s Critique of pure reason, any empirical knowledge requires the mental processing of sensorial data through two separate faculties working in tandem. The first mental faculty, called sensibility, allows us to receive sensorial information, prior to any conceptual synthesis. The resulting imprints on the mind, called intuitions, all have two forms independent of their sensorial content: time as the pure form of internal intuition, and space as the pure form of external intuition. The second faculty, called the understanding, allows us to synthesize our experience in a systematic, unified manner through concepts, rules, and laws. Some concepts of the understanding exist prior to any experience, and they are necessary preconditions of any empirical knowledge. In what Kant calls a transcendental deduction, the existence of these pure concepts or categories derives from the unity of our pre-perceptual thinking (synthetic unity of apperception). There are four categories reflecting the fundamental forms of judgment (quantity, quality, relation, and modality), and each category has three subcategories.Footnote 7

The most basic act of empirical knowledge according to Kant is the application of concepts to intuitions. Since the understanding and sensibility are of a very different nature by definition, Kant bridges these two faculties through schematism. Namely, he relies on temporal imagination to associate a mediating counterpart or schema to each pure concept. For instance, number (of occurrences in time) is the schema of quantity, and order (in time) is the schema of quality. The application of pure concepts to pure intuitions is a priori, since pure concepts, pure intuitions, and the mediating schemata all are so. The results of this application are synthetic, namely: they condition possible experiences. They include Euclidean geometry and ordinary arithmetic, which thereby acquire their synthetic a priori status.

The categories, the intuitions of space and time, and the schemata determine the general form of any scientific knowledge of the world. The content remains largely open. In Kant’s words, transcendental criticism is only a method. In order to provide a foundation of natural sciences, the transcendental apparatus must be supplemented with a “metaphysics” of empirical objects or with some empirical induction. In his Metaphysical foundations of natural science, Kant follows the metaphysical route in defining matter as a continuous distribution of centers of force. Applying the three subcategories of relation (substance, causality, and community) to this representation, he gets three laws of Newtonian mechanics (conservation of mass, rectilinear inertia, equality of action and reaction). Newton’s mechanics thus acquires rational necessity and the only empirical input is the force law (of gravitation for instance).Footnote 8

In the Critique of judgment, Kant describes the empirico-inductive route in which we gradually fill in the contents of the transcendental form of knowledge. In this view, we do not know in advance how the categories, especially those of substance and cause, should be implemented in the empirical world. We can only hope that the laws of a mature science will employ them in a simple, unified manner. In addition to the constitutive principles of the understanding, which determine the transcendental form of knowledge, we need the regulative principles of a parsimonious reason, which guide us in giving empirical content to the form.Footnote 9

There are many obscurities in Kant’s architectonic. The strictly non-conceptual and the non-sensorial character of pure intuition seem hard to reconcile; the deduction of the table of categories is opaque; the time-based schematism seems arbitrary; the necessity of Euclidean geometry is asserted without proof; and the Newtonian concept of matter is not sufficiently justified. The suspicion runs high that Kant arbitrarily rigidified contingent presuppositions of the Aristotelian logic and the Newtonian science that prevailed in his time.Footnote 10

In the nineteenth century, it became clear that Euclidean geometry was not the only conceivable geometry and that mathematics should not be tied to the intuitions of space and time. These blows to Kant’s doctrine were not fatal. In his memoirs on the foundations of geometry around 1870, Helmholtz argued that Kant’s form of external intuition could be preserved if it was restricted to the general idea of space as a continuous, homogeneous manifold. He derived the locally Euclidean structure and the constant curvature of this manifold from the measurability of space by freely mobile rigid bodies, which may loosely be regarded as a rule of the understanding. In this view, experience serves only in the determination of the value of the curvature. Helmholtz thus gave a new content to Kant’s intuitions and categories. He also deeply altered their nature by giving intuition a physiological basis, and by downgrading the categories to tentative assumptions of comprehensibility.Footnote 11

Poincaré adapted Kant in a different manner: he gave up the idea of a passive sensibility and he made the general notion of space depend on the concept of Lie group, which he regarded as a synthetic a priori “form of the understanding.” He judged the choice of the group to be conventional because geometrical laws are never tested independently of dynamical laws (ruling the deformation of bodies or the propagation of light) and the latter laws can always be adjusted to fit a conventionally given geometry. A regulative principle of simplicity induced Poincaré to maintain Euclidean space and Galilean spacetime in the face of his and Einstein’s relativity theory.Footnote 12

In Marburg, the neo-Kantian philosopher Hermann Cohen condemned Kant’s sensibility as an unwitting remnant of a “psychologism” in which objectivity is traced to a fixed, non-conceptual given. What is left of Kant’s theory of cognition after evacuating the theory of sensibility (the transcendental aesthetic), is the theory of the understanding, which Kant calls transcendental logic. This is why Cohen calls his mature philosophy a Logic of pure knowledge (1902). Like Kant, he characterizes this logic through a table of twelve basic judgments. But he gives up the strict connection between kinds of judgment and categories, as well as the justification of the categories through their applicability to the manifold of intuition. In his view, the basic judgments are fixed guidelines for deriving categories (including time, space, substance, and cause) that could evolve together with the sciences of nature. His table of judgments relies on Platonic principles of unity and identity, and on Leibnizian principles of continuity and infinity.Footnote 13

A few characteristics of Cohen’s variety of neo-Kantianism were influential both within and without the Marburg school. For Cohen, the transcendental project is defined as the identification of the a priori conditions of knowledge, and not as a speculative metaphysics of the relation between subject and object (as had been the case in the perverted Kantianism of Naturphilosophie). Knowledge is thereby defined as scientific knowledge in mathematical form, and not as elementary sensuous cognition. The pure, a priori, character of the transcendental apparatus excludes the notion of a fixed given to our thought, not even Kant’s pure intuitions; thought is purely generative. The categories, general laws, and principles of scientific knowledge may evolve in the course of time, although they are subjected to invariable regulative demands. To be sure, the specific way in which Cohen formulated these demands was judged obscure by most of his contemporaries. Their general necessity nonetheless became a commonplace of neo-Kantianism.

1.2 Cassirer’s Substance and function (1910)

Cohen’s most outstanding disciple, Ernst Cassirer, judged the earlier transcendental projects to be misled by an antiquated, Aristotelian conception of logic based on the subject-predicate relation and naturally implying an abstractionist view of concept formation. In this view, concepts are reached inductively by detecting similarities in a collection of individuals. Like Cohen, Cassirer excluded any pre-conceptual given in experience and required a purely intellectual construction of concepts and objects. He found the means of this construction in the new relational logic of Bertrand Russell, in which structures or abstract systems of relations are the sole source of mathematical concepts. In Cassirer’s view, any cognition, from the most rudimentary to the most advanced, relies on a synthesis of our experience through relational structures. The basic expression of this synthesis is a function, through which the value of a given variable can be generated from the value of another variable. It should not be a substance, which would presuppose a hidden fixed being:Footnote 14

Instead of imagining behind the world of perceptions a new Dasein built solely out of the materials of sensation, [knowledge] contents itself with throwing universal intellectual schemata in which the relations and connections of perceptions can be completely represented.

At the most elementary level of perception, we become aware of stable relations between signs and inductively assume their generality. The signs, according to Helmholtz’s theory of perception, are not direct reflections of the properties of external objects. They are elementary data of perception whose correlations prompt us to assume the existence of stable, external objects. In truth, Cassirer concludes, the assumed objects are intellectual constructs, based on the properties of systems of relations.Footnote 15

In more advanced, scientific knowledge, Cassirer goes on, measurement plays an essential role. Contrary to the naive empiricist view, measurement is not a passive collection of empirical data. As Pierre Duhem emphasized, it presupposes concepts that define the conditions and purpose of measurement. In order to create the intellectual preconditions of quantitative, lawful theory, we must depart further and further from the sensory given. Whenever we identify constant structural elements in a theory, we tend to forget the intellectual presuppositions of measurement and theory-making, and we reify these constants just as we do with the objects of ordinary perception. This was the case, in Cassirer’s time, for the ether and for space and time. Independently of relativity theory (which he did not discuss in Substance and function), Cassirer reduced the ether to a “mere unification and concentration of objectively valid, measurable relations” and Newton’s absolute space and time to “pure functions.”Footnote 16

In the history of mathematics and physics, Cassirer saw a gradual substitution of functional forms for naive substantialist descriptions. Typically, the mind’s demand for permanence induces us to reify the stable components of the relational structures through which we organize our experience. At a given stage of science, we are at a resting-point [Haltpunkt] in which this stability remains unchallenged. In the next stage, we reach a higher systematic unity in which the earlier stable components become interrelated and variable:

Thus we stand before a ceaseless progress, in which the fixed fundamental form of being and process that we believed we had gained, seems to escape us. All scientific thought is dominated by the demand for unchanging elements, while on the other hand the empirically given constantly thwarts this urge. We grasp permanent being only to lose it again.

We are thus reminded of the merely functional character of our forms of knowledge.Footnote 17

To sum up, at each stage of the history of science, our theories rely on functional forms (Funktionsformen) that constitute the object of knowledge in an essentially relational manner. These forms are truly hypothetical, although we may mistake them for necessary, strictly a priori conditions of knowledge. The true a priori, in Cassirer’s terminology, should be a necessary premise of any cognition, and therefore cannot be revised in future science. It includes a principle of projected unity, which prompts us to depart more and more from immediate experience in order to reach higher formal unity and generality in our theories. It also entails the possibility of comparing successive forms of knowledge and of judging the superiority of the later ones when the scope of our empirical investigation is increased. The successive stages of theory are rationally related inasmuch as the earlier stage contains the questions answered by the later stage, and because it remains an approximation of the later stage. These regulative principles restrict the choice of the functional forms, so that they are not mere conventions.Footnote 18

Despite the recurrent redefinition of the object of scientific inquiry through new functional forms, and despite the lack of a Kantian sensibility that would yield a fixed given in cognitive processes, the scientific enterprise remains objective because of its ability to self-correct, to reach higher unity and generality under a “common forum of judgment.” Although the functional form and the concomitant definition of the object of knowledge are constantly corrected, they converge toward a final, never reached object because the act of correction follows the rules of reason.Footnote 19

In addition to the regulative demand of progressive unity, Cassirer identifies a few “logical invariants” or categories that could claim to be truly a priori: time, space, number, magnitude, permanence, change, causality, interaction. At the same time, he recognizes that these categories are purely functional and therefore might someday be dissolved into a higher relational unity. In his own words:Footnote 20

The goal of critical analysis would be reached, if we succeeded in isolating … the ultimate common elements of all possible forms of scientific experience; i.e., if we succeeded in conceptually defining the elements that persist in the advance from theory to theory because they are the conditions of any theory. At no given stage of knowledge can this goal be perfectly achieved; nevertheless it remains as a demand, and prescribes a fixed direction to the continuous unfolding and evolution of the systems of experience.

1.3 Cassirer on relativity theory

Cassirer did not discuss special relativity in Substance and function. In 1910, this theory already had a strong hold on German theoretical physics, and it was an obvious challenge to the Newtonian and Kantian views about space and time.Footnote 21 Yet it rarely attracted the attention of philosophers before the advent of general relativity and its spectacular postwar confirmation. Special relativity mixes up the two forms of intuition that Kant had separated, and it downgrades Newtonian mechanics to an approximation of a deeper theory in which the Newtonian law of acceleration no longer holds. General relativity undermines the distinction between inertial and non-inertial forces and brings the metric properties of spacetime to depend on the distribution of matter. The very idea of space and time as a fixed stage for phenomena needs to be given up.

When, around 1920, Cassirer studied Einstein’s theory of relativity, he welcomed it as a confirmation of the general picture of the nature and evolution of science he had given ten years earlier in Substance and function. Newtonian mechanics, special relativity, and general relativity could indeed be seen as three stages in the gradual process of unifying “functionalization” there described. In Cassirer’s analysis, the first, Newtonian stage relies on a synthesis in which spatial and temporal relations are the same in any reference system. In the second, special-relativistic stage, this double constancy is replaced with a higher synthesis based on the constancy of the velocity of light and the constancy of the Lorentz-group structure. In Substance and function, Cassirer had removed a fundamental obstacle to the transition from the first to the second stage by regarding space and time as systems of relations instead of quasi-substantial beings; and he had anticipated the capital role of an analysis of the conditions of measurement in redefining space and time. In the third, general-relativistic stage, the equivalence of all coordinate systems deprives space and time of any fixed structural invariant and their metric structure becomes correlated with their material content. A limited synthetic unity is replaced by a deeper one in which any objectification of space–time as a necessary, fixed background is made impossible. Metric and energetic properties merge in a unified dynamical scheme.Footnote 22

In retrospect, the three stages share a proto-notion of space–time as a non-metric manifold of events, which Cassirer still calls pure intuition (although he has rejected Kant’s purely passive definition of intuition) and defines conceptually as “coordination under the viewpoint of coexistence and proximity, or under the viewpoint of succession” (p. 85). Each stage is structured by specific forms of knowledge. Cassirer names and characterizes these forms in a loose manner. Besides “form of knowledge” (pp. 57, 119: Erkenntnisform), he uses the expressions “form of thought” (p. 88: Denkform), “ordering form” (p. 58: Ordnungsform), “logical system of coordinates to which we refer the phenomena” (p. 24), “point of leverage” (pp. 25, 40: Angelpunkt), “fixed intellectual pole” (p. 35: ruhender Gedanklicher Pole), “rule of the understanding” (p. 82: Regel des Verstands), “norm of investigation” (p. 82: Norm der Forschung), and “prescription for the formation of physical concepts” (p. 97: Vorschrift für unsere physikalische Begriffsbildung). These constitutive forms are of a diverse nature: they include the absolute character of metric space and time (in the Newtonian stage), the constancy of the velocity of light and the principle of relativity (for special relativity), the equivalence principle and the equivalence of all coordinate systems (in general relativity).Footnote 23

Just as described in Substance and function, the motor of the evolution from one form of thought to the next is the regulative principle of convergence toward higher systematic unity, in a mutual adaptation of the forms of thought to experience:Footnote 24

Physics, as an empirical science, is equally bound to the “material” content, which sense perception offers it, and to these form-principles in which are expressed the universal conditions of the “possibility of experience.” It has to “invent” or to derive deductively the one as little as the other, i.e., neither the whole of empirical contents nor the whole of characteristic scientific forms of thought, but its task consists in progressively relating the realm of “forms” to the data of empirical observation and, conversely, the latter to the former. In this way, the sensuous manifold increasingly loses its “contingent” anthropomorphic character and assumes the imprint of thought, the imprint of systematic unity of form. Indeed “form,” just because it represents the active and shaping, the genuinely creative element, must not be conceived as rigid, but as living and moving.

The great merit of Cassirer’s variety of transcendental idealism is its ability to accommodate even the deepest conceptual changes known in the history of science. This was later confirmed by the rationalization of the quantum revolution he offered in his Determinism and indeterminism.Footnote 25 This flexibility results from his reducing the absolute a priori conditions of knowledge to regulative principles of synthetic unity, lawfulness, and progressive convergence. The drawback is the vagueness of the characterization of the constitutive principles or “forms” that operate in the successive stages of a given science. Cassirer never meant his epistemology to be normative; he did not claim to offer working rules for theorizing scientists. On the contrary, he believed the evolving forms of scientific thought and the direction in which they converge could only be identified by critical analysis of our best science in its historical development.

For Cassirer, the relative, hypothetical, and partially empirical character of the forms of thought reduces any a priori derivation of them to an illusion. If that is the case, how can we decide, at a given stage of physics, which principles of the leading theories play a constitutive role? It would be trivial and unhelpful to assume that the theory as a whole constitutes its object. In order to remain in the spirit of the transcendental method, we need to distinguish form from content, if only in a temporary manner. Although Cassirer is frustratingly vague on this point, he gives us some clues about where to find the constitutive principles: in the analysis of the preconditions of measurement (which he regards as an essential part of the critique of knowledge), in unifying symmetries, in invariants and constants of nature, and in constructive thought experiments. Especially important is the idea that when numeric determinations turn out to be relative to the observer, an intrinsic object can be constructed through the group of transformations relating the various determinations.Footnote 26
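The last idea can be illustrated by a standard textbook case, given here as a sketch in modern notation that is not taken from Cassirer’s text. Two inertial observers in relative motion at velocity v along the x axis assign different coordinates (t, x) and (t′, x′) to the same event, yet the Lorentz transformation relating the two determinations leaves one combination of them unchanged:

```latex
\begin{align}
  t' &= \gamma\left(t - \frac{v x}{c^{2}}\right), \qquad
  x' = \gamma\,(x - v t), \qquad
  \gamma = \frac{1}{\sqrt{1 - v^{2}/c^{2}}}, \\
  c^{2}t'^{2} - x'^{2}
     &= \gamma^{2}\left(1 - \frac{v^{2}}{c^{2}}\right)\left(c^{2}t^{2} - x^{2}\right)
      = c^{2}t^{2} - x^{2}.
\end{align}
```

On this reading, the invariant interval, and not the observer-dependent values of t and x, is the candidate for the intrinsic object.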

1.4 Reichenbach’s principles of coordination

Before having seen Cassirer’s treatise on relativity, his former student Hans Reichenbach published his own interpretation of the relativistic revolutions in a neo-Kantian framework. In Kant’s a priori, Reichenbach argued, one should distinguish two aspects: apodictic certainty, and constitutive power. The first has to go, but the second remains indispensable. The mind imposes some order and some resilience not to be found in raw sensory data.Footnote 27

The central concept of Reichenbach’s new theory of knowledge is coordination (Zuordnung). Although he borrows the word from Moritz Schlick’s empiricist theory of a one-to-one (eindeutige) set-theoretical correspondence between theoretical concepts and physical reality,Footnote 28 he believes that the epistemological concept of coordination essentially differs from the set-theoretical concept because the coordinated elements of physical reality (Wirklichkeit) are not defined before the coordination. Somewhat paradoxically, coordination defines the object of experience even though experience is the sole source of the relevant order. The coordinated elements are defined by the coordination itself. In order to be successful, the coordination has to be one to one, surely not in the set-theoretical sense of the word (which presupposes the target elements to be predefined), but in the following empirically testable sense: the value of any measurable quantity must be the same whatever be the data used for its determination.Footnote 29

At this stage of his reasoning, Reichenbach introduces the principles of coordination (Zuordnungsprinzipien) as the principles that make the coordination one to one. These principles constitute the objects of the theory as they define the mathematical form of physical quantities and the kinds of structures they can form. They do not by themselves alone determine the theory; in addition, Reichenbach admits laws of combination (Verknüpfungsaxiome or Verknüpfungsgesetze) that relate different physical quantities in an empirically testable manner (once the principles of coordination are given). For instance, Euclidean geometry and the vector character of forces are principles of coordination in classical mechanics, and a specific law of force is a law of combination. In general relativity, the differential manifold and the rules of tensor calculus on this manifold are principles of coordination, and Einstein’s equations relating (derivatives of) the metric tensor with the energy–momentum tensor are laws of combination.Footnote 30
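For concreteness, the law of combination of general relativity may be written as follows. The notation is the standard modern one and is an assumption of this illustration: Reichenbach’s own symbols and sign conventions may differ, and the cosmological term is omitted.

```latex
\begin{equation}
  R_{\mu\nu} - \tfrac{1}{2}\, R\, g_{\mu\nu} = \frac{8\pi G}{c^{4}}\, T_{\mu\nu}
\end{equation}
```

Here the Ricci tensor R_{μν} and the scalar curvature R are built from the metric tensor g_{μν} and its first and second derivatives, while T_{μν} is the energy–momentum tensor. The manifold and the tensor calculus in which the equation is written belong to the principles of coordination; the equation itself is the empirically testable law of combination.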

In the latter theory, the coordination depends on an arbitrary choice of coordinates and does not require a fixed given metric. On the one hand, this arbitrariness shows the necessity of a subjective form in the physical description. On the other hand, it shows that there are equivalent coordination frameworks. These are equally one-to-one and they are related by differentiable coordinate transformations. The invariants of the theory under these transformations define the objective content of reality (den objektiven Gehalt der Wirklichkeit) according to Reichenbach. The similarity with Cassirer’s notion of intrinsic object is here obvious.Footnote 31

As history teaches us, the principles of coordination do not share the apodictic certainty of Kant’s a priori. Radically new theories such as Einstein’s two theories of relativity require new principles of coordination. Future theories may require still different principles of coordination, as Reichenbach infers from Weyl’s contemporary proposal of a variable gauge for length measurement. Owing to the constitutive value of these principles, any such change implies a new mode of constituting the object of knowledge. In each such change Reichenbach sees a closer and closer approximation to reality. He believes in a “procedure of continual extension” (Verfahren der stetigen Erweiterung) enabling us to move inductively from one mode of coordination to the next.Footnote 32

To sum up, Reichenbach retains the Kantian idea of constitutive principles that define the object of knowledge. He departs from Kant by allowing these principles to vary in the history of physics. In a more apodictic vein, he stipulates the one-to-one character of the coordination provided by the constitutive principles, although he admits that even this meta-principle might have to be given up in a future science. In proper Marburg fashion, he does not distinguish sensibility from understanding. He reduces both of them to a logic of coordination. He does not admit predefined elements of reality as the target of the coordination. Yet the very idea of coordination seems to betray the nostalgia for a fixed given in perception. He indeed maintains a notion of space and time coordinates as a subjective form of description from which the true objects are extracted by constructing invariants. At the end of his book, he proposes to replace the Kantian deduction of categories with the art of extracting invariants from the subjective form of description.Footnote 33

There are several obscurities in Reichenbach’s notion of coordination: It is not clear how the coordination between the mathematical formalism and empirical reality is effectively done; it is not clear how the principles of coordination should be chosen and how they permit one-to-one coordination; and it is not clear how the one-to-one character of the coordination can be tested without knowing what the measured quantities and the measurements should be in the imagined tests. Then how are we supposed to distinguish the constitutive principles from mere conventions? Reichenbach believes that in a given empirical context, the choice of the coordination principles is constrained by the empirical data if other a priori “self-evident” principles are assumed. For instance, he argues that coordination by absolute time is excluded if we accept the relativity principle, the principle of contiguous action, and a principle of “normal induction.” As Reichenbach would himself later realize, this argument is invalid, if only because Poincaré’s version of special relativity satisfies all these principles without giving up the absolute time. In his correspondence with Reichenbach, Schlick similarly argued that nothing distinguished the alleged constitutive principles from conventions à la Poincaré. Shaken by this criticism, Reichenbach soon gave up his neo-Kantian claims and became the advocate of a “relativistic conventionalism.”Footnote 34

We may now compare the ways in which Cassirer and the young Reichenbach accommodated the relativistic revolution. They both saw three stages in the evolution of our concepts of space and time, corresponding to Newtonian mechanics, special relativity, and general relativity; they characterized each stage by its constitutive principles; and they had ways of comparing the successive principles. With some extrapolation and modernization of their identification of these principles, we might say that the Galilean group, the Lorentz group, and the group of diffeomorphisms respectively constitute the object of Newtonian mechanics, special relativity, and general relativity. For Reichenbach, these groups play a double role: they warrant the one-to-one character of the coordination between the mathematical apparatus of the theory and physical reality, and they interconnect equivalent coordinations. For Cassirer, they express systematic unity in the manifold of events, the better with the less metric background.

This difference in the interpretation of the constitutive groups results from a basic difference in the definition of constitutive principles. For Reichenbach, they are “principles of coordination” warranting the one-to-one character of the coordination between theory and physical reality. For Cassirer, they are “forms of knowledge” providing uniform, structuring presuppositions of measurement. For Reichenbach, objectivity remains tied to a presupposed empirical reality, whereas for Cassirer it results from a higher regulative principle of systematic unity. This is why Reichenbach’s neo-Kantianism could easily evolve into a conventionalist empiricism, whereas Cassirer’s strict regulative a priori prevented his forms of thought from degenerating into mere conventions.

Cassirer and the young Reichenbach both situated themselves in the Kantian tradition. Yet they presented their relation to Kant in a different manner. Cassirer saw himself as capturing the essence of Kant’s transcendental project, properly interpreted as a regulative ideal in the search for constitutive principles. In contrast, Reichenbach believed that Kant’s a priori depended on a notion of self-evidence (Evidenz) that led to inconsistencies in the face of the newer physics. In a letter thanking Reichenbach for his text, Cassirer wrote:

Our viewpoints are related – but, as far as I can see for the moment, they do not coincide in regard to the determination of the concept of a priori and in regard to the interpretation of the Kantian doctrine; in my opinion, you interpret this doctrine in a too psychological manner, and you consequently exaggerate the contrast with your “analysis of science.” When understood in a strict “transcendental” manner, Kant is much closer to this conception than you suggest.

What Reichenbach called the method of science analysis (wissenschaftsanalytische Methode) was an inquiry into the implicit presuppositions of modern science. As suggested by Cassirer, this agreed with the transcendental method in Marburg style and contradicted Kant only if the more contingent and psychological aspects of this doctrine (intuition and the self-evidence of some categories) were taken seriously.Footnote 35

1.5 Friedman’s “relativized a priori”

In recent years, Michael Friedman proposed his own neo-Kantian interpretation of constitutive change in physical theory under the label “relativized a priori.” The most detailed account of his views is found in his Dynamics of reason published in 2001. His main sources of inspiration are Reichenbach’s early principles of coordination, Cassirer’s idea of a converging sequence of forms of knowledge, Rudolf Carnap’s linguistic frameworks, and Thomas Kuhn’s paradigms. His main target is the holism of Willard Quine’s “webs of beliefs,” which exclude any distinction between the formal and empirical components of a theory. According to Friedman, Quine’s proofs of the impossibility of this distinction (or of the related analytic/synthetic distinction) only apply when it is expressed in purely logical terms (as is the case for Carnap). They do not apply to the distinction between “constitutive principles” and “properly empirical laws” which Friedman takes to be a central feature of any advanced physical theory (echoing Reichenbach’s distinction between principles of coordination and laws of combination). Like Reichenbach’s principles of coordination, Friedman’s constitutive principles define the object of scientific knowledge without having the apodictic certainty of Kant’s a priori. They may undergo radical changes in revolutionary circumstances. Friedman defines his constitutive principles as basic preconditions for the mathematical formulation and the empirical application of a theory.Footnote 36

Like Reichenbach and Cassirer, Friedman sees some rationality in the transition from one set of constitutive principles to the next and he assumes asymptotic convergence toward stable principles. For Cassirer, increased systematic unity by de-reification was the rational motor of change. For Reichenbach, intertheoretical approximation and a higher principle of normal induction controlled such transitions. These are internal mechanisms of change in which physicists are being their own philosophers. Although Friedman also dwells on intertheoretical relations of approximation and reinterpretation, he does not believe that a purely internal process of theory change suffices to overcome the Kuhnian incommensurability barrier between successive paradigms. In his eyes, philosophical meta-frameworks play an essential role in allowing for a continuous, natural transition toward new constitutive frameworks.Footnote 37

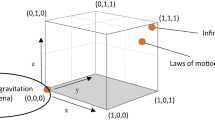

Friedman’s choice of constitutive principles in the three standard examples of Newtonian physics, special relativity, and general relativity differs somewhat from Reichenbach’s and Cassirer’s. For Newtonian physics, the constitutive principles are Euclidean geometry and Newton’s laws of motion, in conformity with Kant’s Metaphysical foundations of natural science. Newton’s law of gravitation has no empirical meaning without the laws of motion. For special relativity, Friedman’s constitutive principles are the light postulate, the relativity principle, and the mathematics needed to develop the consequences of these principles. For general relativity, the relevant principles are the Riemannian manifold structure, the light postulate (used locally), and the equivalence principle (understood as the statement that free-falling particles follow geodesics of the Riemannian manifold)Footnote 38; Einstein’s relation between the Riemann curvature tensor and the energy–momentum tensor is regarded as a “properly empirical law,” whose content cannot be expressed and tested without the constitutive principles.Footnote 39

One might object that some of Friedman’s constitutive principles, notwithstanding their being preconditions of properly empirical laws, are themselves empirical laws. Friedman anticipates this objection through a Poincarean remark: some constitutive principles do have antecedents in merely empirical laws, but they have been “elevated” to a higher status in which they become conventions for the construction of the new theory.Footnote 40 Let us see how this would work in the case of Newtonian physics. Newton’s laws of motion do have empirical content. For instance, the law of inertia implies the testable existence of a reference system in which all free particles travel in straight lines and travel proportional distances in equal times (granted that global synchronization is possible); and the law of acceleration can be tested by comparing the observed motion in an inertial frame with the static measure of the force.Footnote 41 Friedman would reply that the derived “coordinating principles” differ from empirical laws by an element of decision or convention that makes them rigid preconditions for the description of any mechanical behavior and for the expression of further empirical laws such as the law of gravitation. At least this is Friedman’s convincing reply to the similar difficulty for the light postulate and for the relativity principle in the case of special relativity, and for the equivalence principle in the case of general relativity.

Another difficulty concerns the rationality of changes in the systems of constitutive principles. As Friedman has these changes depend on the intellectual context of the time (especially the philosophical debates), he introduces an element of historical contingency that seems incompatible with a rational view of scientific progress. Friedman deflects this charge in three different ways: by insisting on the rational demand that the earlier system should in some sense be an approximation of the later one, by displaying the inner logic of each intellectual context, and by showing a natural evolution of each of these contexts from Kant’s rigid definition of the a priori toward more adaptable neo-Kantian notions.Footnote 42

Following Reichenbach, in his Dynamics of reason Friedman regards the mathematical apparatus of physical theories as purely formal and therefore requires coordinating principles that mediate between abstract mathematics and physical phenomena. As noted by Ryckman, a first difficulty with this view is that it relies on an unnecessarily formal conception of mathematics.Footnote 43 In contrast, the neo-Kantian and Husserlian conceptions of mathematics imply structures that prepare the physical application of mathematical constructs (especially the group structure for Poincaré and Weyl). Another difficulty is the unrealistic simplicity of the modes of coordination imagined by Reichenbach and Friedman. For instance, much background knowledge is needed before the light postulate and the geodetic principleFootnote 44 truly inform the application of general relativity.

Around 2010, some of Friedman’s readers suggested ways out of these troubles with coordination. Scott Tanona recommended a richer definition of the target of the coordination as a pre-structured phenomenal frame, just as Niels Bohr had the interpretation of quantum formalism depend on classical accounts of observed phenomena. Other critiques of coordination instead regarded the idea of a phenomenal target as an undesirable remnant of the Kantian duality between understanding and sensibility. In place of it, Ryckman recommended a Husserlian-Weylian “sense bestowal” based on the inner evidence of consciousness; Thomas Uebel argued for a strictly analytic version of the relativized a priori in Carnapian spirit; Massimo Ferrari and Jonathan Everett advocated a return to Cassirer’s variety of neo-Kantianism. In this last view, the object of scientific inquiry is constituted by evolving forms of lawfulness, under the control of fixed regulative principles.Footnote 45

Friedman then agreed that his original notion of coordination was “too thin” but he distanced himself from attempts to do without something like Kant’s intuition.Footnote 46 In his opinion, there cannot be any genuine constitutive principles without empirical intuition, because the very distinction between regulative and constitutive principles depends on sensibility: In order to constitute objects of experience, the categories of the understanding must be applied to sensible intuitions; the ideas of reason are merely regulative because they do not operate on our sensibility. For the new Friedman, constitutive principles must be able to coordinate the mathematical structure of a physical theory with generalized “physical frames of reference” defined as “ostensively introduced and empirically given systems of coordinates (spatial and temporal) within which empirical phenomena are to be observed, described, and measured.” The frames define the concrete conditions of observation in a manner structured by earlier available theories:Footnote 47

We thus have (relativized) a priori mathematical structure at both the observational and the theoretical levels, and the two are coordinated with one another by a complex developmental interaction in which each informs the other.

Although Friedman’s characterization of the “frames” of observation remains sketchy, he clearly means them to contain much more than the physicists’ notion of reference frame. They encompass the approximate applicability of observational structures, all the technical knowledge accumulated during the earlier application of these theories, as well as broader cultural elements. Friedman’s relativized a priori thus acquires much historical thickness:Footnote 48

Our problem, therefore, is not to characterize a purely abstract mapping between an uninterpreted formalism and sensory perceptions, but to understand the concrete historical process by which mathematical structures, physical theories of space, time, and motion, and mechanical constitutive principles organically evolve together so as to issue, successively, in increasingly sophisticated mathematical representations of experience.

By redefining Kant’s sensibility in a pragmatic, historicized manner, Friedman significantly alters the meaning and function of his earlier constitutive principles. Yet some crucial components of his view have not changed. The constitutive principles still serve the purpose of coordinating a mathematical structure to an observational structure; they still define the object of the theory; and they still remain distinct from the properly empirical laws that determine the behavior of the object. Although Friedman is not quite explicit on this last point, it is trivially needed in order to avoid the degenerate view in which the constitutive principles would define the entire theory (save for the observational structure). What has changed is the kind of coordination provided by the constitutive principles, now involving experimental technologies and lower-level theoretical presuppositions.

Quite explicitly, Friedman introduces this new idea of coordination as an amplification of Kantian sensibility and thus moves further apart from the Marburg school. Yet, ironically, there is a sense in which he has come closer to Cassirer’s structuralism: the theory-ladenness of his frames of reference tends to reduce them to systems of relations. The fixed perceptual basis, if there is any, seems to be at the end of a chain of interlocked models of measurement. Perhaps the only important difference left between Friedman and Cassirer is in the way they conceive the change of constitutive principles. For Friedman, a very rich meta-framework including experimental physics, technology, philosophy, theology, and other cultural elements determines this evolution in rational continuity with Kant’s original project. For Cassirer, the evolution of constitutive principles is more narrowly driven by a regulative principle of systematic unity and by empirical challenging of the invariants assumed in earlier theories.

1.6 Criticism

Granted that constitutive principles are necessary to define the object of scientific inquiries, the problem is to give a precise and effective characterization of these principles. Kant’s original notion was too tied to Newtonian physics to survive the later evolution of physics. The more flexible and more evolvable notions of Reichenbach, Cassirer, and Friedman seem more apt to capture the constitutive component of modern theories. On the one hand, Reichenbach and Friedman define the constitutive principles as the means through which we connect a mathematical structure with physical phenomena. On the other hand, Cassirer defines the constitutive (or regulative) principles as the means through which we satisfy the basic demand of systematic unity in diverse contexts of measurement. The first view has the advantage of addressing the notoriously inscrutable difference between a mathematical theory and a physical theory, and the defect of relying on an ill-defined concept of coordination (as long as Friedman’s notion of frames is not made more precise). The second has the advantage of being based on a universally acceptable demand of unifying synthesis and measurability, and the defect of leaving measurement and physical interpretation in the dark.

Let us look more closely at the first, coordination-based view of constitutive principles. For Reichenbach, these principles are the warrants of the one-to-one character of coordination. This notion is problematic because it cannot make sense without some preconceived idea of what is being coordinated in the physical world, an idea that seems to lead to naive realism or to psychologism. Friedman avoids Reichenbach’s notion of one-to-one and instead propounds a characterization of constitutive principles as the component of the theory that is needed to express and test the properly empirical laws of the theory. This leaves us with a number of questions: How selective is this characterization? Where do the constitutive principles come from? Do they have some sort of necessity or are they merely convenient conventions? How do they connect the mathematical formalism to the world of experience?

The first question is about the legitimacy of Friedman’s distinction between constitutive principles and properly empirical laws. According to Friedman, some constitutive principles such as the light postulate or the equivalence principle do have empirical content (they are empirical generalizations), but unlike ordinary empirical laws they are regarded as preconditions for the expression of any other empirical law. The problem with this view is that it seems to rely on a subjective decision. For instance, in Newtonian physics, why could we not regard both the acceleration law and the law of gravitation as properly empirical laws in a constitutive framework defined by Euclidean geometry and Newton’s first and third laws? Pace Kant, this was the view of Daniel Bernoulli and of later textbook writers who asserted the empirical character of the second law. In order to regard this law as merely constitutive, we would have to demonstrate that it is in some sense more necessary than other empirical laws such as the law of gravitation. Such a demonstration is not to be found in Kant’s writings.Footnote 49

The opposite difficulty occurs in the context of general relativity. Here Friedman regards the geodetic principle (according to which free-falling particles follow geodesics of the spacetime manifold) as constitutive and Einstein’s relation between the curvature tensor and the energy–momentum tensor of matter as properly empirical. Why could we not also regard this relation as constitutive, and confine the properly empirical to the expression of the energy–momentum tensor? This seems to be the more natural choice for physicists accustomed to regarding the principle of least action as constitutive, because this principle together with general covariance and plausible simplicity assumptions leads to both the geodetic principle and the Einstein field equations. In this case it is tempting to admit more constitutive principles than Friedman does, whereas in the case of Newtonian mechanics it may be tempting to admit more properly empirical laws.
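
The route from the principle of least action to both results can be sketched in its standard textbook form. The following is only an illustration under assumptions not stated in the text: units with c = 1, metric signature (−,+,+,+), the curvature scalar R as the simplest generally covariant gravitational Lagrangian (no cosmological term), and an unspecified matter action S_m depending on matter variables ψ.

$$
S[g,\psi] \;=\; \frac{1}{16\pi G}\int R\,\sqrt{-g}\;d^4x \;+\; S_{\mathrm{m}}[g,\psi],
\qquad
T_{\mu\nu} \;\equiv\; -\frac{2}{\sqrt{-g}}\,\frac{\delta S_{\mathrm{m}}}{\delta g^{\mu\nu}}
$$

$$
\frac{\delta S}{\delta g^{\mu\nu}} = 0
\;\;\Longrightarrow\;\;
R_{\mu\nu} - \tfrac{1}{2}\,R\,g_{\mu\nu} \;=\; 8\pi G\,T_{\mu\nu}
$$

$$
S_{\mathrm{p}} \;=\; -m\int d\tau \;=\; -m\int \sqrt{-g_{\mu\nu}\,\frac{dx^\mu}{d\lambda}\frac{dx^\nu}{d\lambda}}\;d\lambda,
\qquad
\delta S_{\mathrm{p}} = 0
\;\;\Longrightarrow\;\;
\frac{d^2x^\mu}{d\tau^2} + \Gamma^{\mu}_{\alpha\beta}\,\frac{dx^\alpha}{d\tau}\frac{dx^\beta}{d\tau} = 0
$$

On this reading, the field equations and the geodesic equation both follow from the variational framework, and only the form of S_m (hence of the energy–momentum tensor) would be left as properly empirical.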

Owing to these ambiguities, Friedman’s notion of constitutive principles seems dangerously close to the Quinean notion of subjectively and contingently “entrenched principles,” which is precisely what Friedman wanted to avoid. It remains true, however, that some empirical laws, for instance the law of universal gravitation, cannot be regarded as preconditions for the formulation of other empirical laws, while others, such as Newton’s laws of motion or Einstein’s field equations, are necessary preconditions for the application of other empirical laws. What is questionable is the decision to call some laws of the latter kind constitutive and others not. This does not affect Friedman’s general idea of a stratification of our epistemic resources; it only shows the difficulty of sharply characterizing this stratification for a given theory.

The second question is about the origin of the constitutive principles. Some of the principles, for instance Euclidean geometry in Newtonian physics or the pseudo-Riemannian manifold structure in general relativity, are mathematical preconditions for the formulation of the theory. They may already be known to mathematicians, or they may require new inventions or adaptations. The rest of the constitutive principles, Friedman tells us, are the “coordinating principles” obtained by elevating empirical laws to a higher constitutive status. Not every empirical law is a candidate for this elevation, only laws that have sufficient generality and appear to play a critical role in a philosophical meta-framework. In a situation of crisis, this elevation allows us to constitute the object of a new theory. Listen to Goethe: “The highest art in intellectual life and in worldly life consists in turning the problem into a postulate that enables us to get through.”Footnote 50

This sounds fine except that there may be other ways to get through the crisis. For instance, it became clear to Poincaré, in 1900, that the velocity of light as measured by moving observers would be the same as in the ether frame and that, as a consequence, the time measured by moving observers who synchronize their clocks by optical means would differ from the time measured in the ether frame. In the spring of 1905, this insight led Poincaré to a version of the theory of relativity that was empirically equivalent to Einstein’s slightly later theory, and yet Poincaré refused to regard the light postulate (constancy of the velocity of light in the ether frame) and the relativity principle as constitutive with respect to the definition of space and time. In general, the underdetermination of theories by experimental data leads to several equivalent options for solving the same difficulties, and these options have different constitutive principles. The choice between these options is largely contingent. In physics narrowly considered, they are conventions the suitability of which is a matter of convenience. At least Poincaré thought so.
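
Poincaré’s point about optically synchronized clocks can be illustrated by a first-order calculation. This is a hedged reconstruction in modern notation, not a quotation of Poincaré’s own argument; the symbols are assumptions introduced here: v is the velocity of the observers through the ether, x the separation of two observers A and B along the direction of motion, t the ether-frame time, and t′ the time shown by their clocks.

$$
\Delta t_{A\to B} \;=\; \frac{x}{c-v} \;\approx\; \frac{x}{c} + \frac{v\,x}{c^{2}},
\qquad
\Delta t_{B\to A} \;=\; \frac{x}{c+v} \;\approx\; \frac{x}{c} - \frac{v\,x}{c^{2}}
$$

$$
\text{Clocks set as if each one-way transit lasted } \frac{x}{c}
\;\;\Longrightarrow\;\;
t' \;\approx\; t - \frac{v\,x}{c^{2}}
$$

To first order in v/c, the round-trip time is the same as for observers at rest in the ether, so the moving observers cannot detect the offset, and their clocks display a “local time” that differs from the ether-frame time by vx/c².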

Friedman is of course aware of this underdetermination and he removes it by appeal to philosophical meta-frameworks in which the new conventions become necessary and thus acquire a constitutive status.Footnote 51 Although his accounts of the genesis of Einstein’s coordinating principles work well as rational reconstructions, their historical pertinence remains debatable. Firstly, the availability of the needed meta-framework at the right moment seems contingent. Secondly, the true historical motivations of the central actor may have differed from those suggested by Friedman. For instance, a Hertzian dislike of redundancies in theoretical representation may have weighed more than Poincaré’s philosophy of geometry when Einstein elevated the constancy of the velocity of light to a principle constitutive of space–time relations. These remarks answer my third question about the necessity of the constitutive principles.Footnote 52

The fourth and last question is about the manner in which the constitutive principles connect the mathematical apparatus of a theory with the world of experience. The relevant principles are Friedman’s “coordinating principles.” Are these principles sufficient to determine the applications of a theory? The textbook definitions of major physical theories suggest as much, because these definitions typically involve a “mathematical formalism” and a few “rules of interpretation” which play a role somewhat similar to Friedman’s coordinating principles. Also, the particular theories Friedman discusses, Newtonian mechanics and relativity theory, seem to provide for their own interpretive resources, unlike other theories such as electromagnetic theory, statistical mechanics, fluid mechanics, or quantum mechanics, whose interpretation requires modular connections with other theories.

Yet, as Friedman himself emphasizes in his more recent writings, simple coordinating principles do not suffice to define the application of theories. It is not by contemplating the textbook definition of a theory that physicists learn how to apply it; it is by applying the theory to a series of exemplars given in any good textbook. That is not only for pedagogical reasons. Knowledge is needed that is not contained in the bare rules of interpretation, even in the simple cases of classical mechanics and relativity theory. Consider general relativity. Any effect involving concrete clocks (for instance the gravitational redshift of spectral lines or the gravitational slowing down of atomic clocks) requires Einstein’s equivalence principle according to which the laws of physics in a free-falling, non-rotating local reference frame are locally the same as in the absence of gravity. This principle is not contained in the geodetic principle that Friedman takes to be the basic coordinating principle (in addition to the light postulate).Footnote 53 Friedman would perhaps have no objection to accepting Einstein’s stronger principle as one of the constitutive principles. This might indeed square well with his recent notion of physical frame of reference. Note, however, that this principle differs from his other coordinating principles by implicitly involving phenomena (and theories) that do not belong to the official domain of the theory under consideration, for instance electromagnetism.
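
The role of Einstein’s equivalence principle in clock effects can be made concrete by the usual first-order derivation of the gravitational redshift. This is a textbook sketch under stated assumptions, not the author’s own derivation: a uniform field of strength g, an emitter at the bottom and a receiver at height h, and the replacement of the laboratory at rest in the field by a laboratory accelerating upward at g in gravity-free space.

$$
t \;\approx\; \frac{h}{c},
\qquad
\Delta v \;\approx\; g\,t \;=\; \frac{g\,h}{c}
$$

$$
\frac{\Delta\nu}{\nu} \;\approx\; -\frac{\Delta v}{c} \;=\; -\frac{g\,h}{c^{2}}
$$

During the transit time t, the receiver acquires the velocity Δv away from the point of emission, so the light is received Doppler-shifted toward the red. The equivalence principle transfers this result to the laboratory at rest in the gravitational field. The argument relies on the Doppler effect for light, that is, on optics and electromagnetism, which lie outside gravitation theory proper.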

Admittedly, there are many concrete consequences of general relativity that do not principally involve extra-theoretical time gauges (clocks whose functioning cannot be described by gravitation theory only). But even in such cases more is needed than just the light postulate and the equivalence principle to interpret relevant experiments. As Friedman himself remarks in recent writings, optical instruments and goniometric techniques are used in any observation of the astronomical consequences of general relativity. Their implying electromagnetic theory is not much of a problem, because additional constitutive principles could be introduced for the electromagnetic sector of the theory. What is more problematic, for anyone interested in the actual application of theories, is the fact that the global theory, as long as it is defined only by its constitutive principles and its general laws, does not provide the means to conceive the experimental setups through which it is applied. For this purpose we rely on previous theoretical and practical knowledge that remains regionally valid.

One might retort that this knowledge is implicitly contained in the new theory because the older theories are regional approximations of the new theory in some operationally meaningful sense. This sort of reductionism is a will-o’-the-wisp because we would generally lack an incentive to consider the regional approximations if we did not have previous knowledge of the relevant regions of experience. Even if we chanced to consider these approximations for purely formal reasons, we would thus access only the formal apparatus of the earlier theories, not the associated laboratory practice. Moreover, the reductions would require the entire theory, not only its constitutive principles, so that the allegedly non-constitutive, empirical component of the theory indirectly plays a coordinating role in defining local conditions of measurement.

To sum up, Friedman’s version of the constitutive a priori has several defects. Firstly, it does not provide a sufficiently sharp criterion for distinguishing the constitutive principles from other assumptions of a theory. Secondly, the coordinating principles are contingent on the availability of a proper philosophical meta-framework. Thirdly, the constitutive principles do not by themselves determine the concrete application of a physical theory. Although Friedman’s recent idea of “physical frames of reference” addresses this last difficulty, it is still too vague to address the application problem in a realistic manner.

An additional remark concerns the scope of the relativized a priori. For the sake of historical continuity with Kant’s transcendental project, Cassirer, Reichenbach, and Friedman adapted their theories of knowledge to the historical evolution of the concepts of space and time from Newton to Einstein, and they focused on three theories of physics: Newtonian mechanics, special relativity, and general relativity. There is no doubt, however, that any mature neo-Kantian epistemology should encompass all the theories of modern physics. As mentioned earlier, the pet physical theories of neo-Kantian philosophers are peculiar in their direct bearing on the most primitive concepts of experience (space and time). Other theories, for instance electrodynamics or thermodynamics, are constructed on the basis of higher-level concepts. There is no guarantee that epistemological notions developed in the limited context of space–time theories would be relevant to physical theory in general.

These critical remarks contain some hints on how to improve on Friedman’s constitutive principles. Firstly, we should investigate the way in which physical theories of all kinds are applied in concrete experimental setups before we decide on their constitutive apparatus. In other words, we need a realistic and nonetheless generic account of coordination. Secondly, we need to distinguish coordination from constitution, which is only a constraint on the form of coordination. Thirdly, we need a way of justifying the constitutive apparatus of a theory without relying on the contingent availability of philosophical meta-frameworks. Here Cassirer’s emphasis on measurement, on its preconditions, and on the regulative demands of systematic unity and lawfulness seems most promising.

2 The coordinating substructures of physical theories

The purpose of this section is to identify the substructures that allow the coordination of physical theories with the phenomenal world and also to investigate the relevant inter-theoretical relations.

2.1 Generic definition of physical theories

Not much can be said on the nature, role, and constitution of physical theories without a sufficiently precise definition. I arrived at the following definition after inspecting the most important physical theories and the ways in which they are concretely applied (Footnote 54).

A physical theory is defined by four components:

(a) a symbolic universe in which systems, states, transformations, and evolutions are defined by means of various magnitudes based on powers of ℝ (or ℂ) and on derived functional spaces and algebras.

(b) theoretical laws that restrict the behavior of systems in the symbolic universe.

(c) interpretive schemes that relate the symbolic universe to idealized experiments.

(d) methods of approximation that enable us to derive the consequences that the theoretical laws have on the interpretive schemes.

To illustrate this definition, take the simple example of the classical mechanics of a finite number of mass points. The symbolic universe comprises systems defined by a number N of mass points, in states defined by the spatial configuration of the particles (N vectors in a three-dimensional Euclidean space), evolutions that give this configuration as a twice differentiable function of a real time parameter, the list of masses of the particles (N real positive constants), and an unspecified force function that determines the forces acting on the particles for a given configuration and at a given time. The basic law is Newton’s law relating force, mass, and acceleration. Interpretive schemes vary. A first possibility is that the scheme consists in a given system (choice of N) and the description of ideal procedures for measuring spatial configuration, time, forces, and masses. Then an idealized experiment may consist in the verification of the motion predicted by the theory for given initial positions and velocities and for a properly selected frame of reference. Or the scheme may involve mass, position, and time measurements only, allowing idealized experiments in which the forces are determined as a function of the configuration. Or else, the scheme may involve mass, position, and time measurements and the choice of a specific force function, allowing idealized experiments in which the motion predicted by the theory is verified for given initial conditions.
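For readers who prefer to see the symbols, the mass-point example can be written out as follows. This is only a sketch: the notation (position vectors \mathbf{r}_i, masses m_i, force function \mathbf{F}_i) is introduced here for illustration and is not taken from the text.

```latex
\begin{align*}
  &\text{state:} && (\mathbf{r}_1, \dots, \mathbf{r}_N) \in (\mathbb{R}^3)^N, \qquad m_i > 0 \quad (i = 1, \dots, N), \\
  &\text{evolution:} && t \mapsto \mathbf{r}_i(t), \quad \text{twice differentiable in } t \in \mathbb{R}, \\
  &\text{law:} && m_i \, \ddot{\mathbf{r}}_i(t) = \mathbf{F}_i\bigl(\mathbf{r}_1(t), \dots, \mathbf{r}_N(t), t\bigr).
\end{align*}
```

In this notation, the interpretive schemes differ in which symbols count as measured. Where forces are to be determined from mass, position, and time measurements, \mathbf{F}_i is the unknown. Where a specific force function is chosen, \mathbf{F}_i is given and the predicted \mathbf{r}_i(t) is what the idealized experiment verifies.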

This first example suggests a more precise definition of an interpretive scheme as the choice of a given system in the symbolic universe together with a list of characteristic quantities that satisfy the following three properties:

(1) They are selected among or derived from the (symbolic) quantities that are attached to this system.

(2) At least for some of them, ideal measuring procedures are known.

(3) The laws of the symbolic universe imply relations among them.

The characteristic quantities of a given interpretive scheme are divided into measured quantities and more theoretical quantities. Only the former quantities are measured in experiments based on the scheme. The theoretical quantities either are the unknowns that the experiments aim to determine, or their value is taken from empirical laws established by preliminary experiments.

Before further comments, let us consider the more difficult example of quantum mechanics. There the symbolic universe involves a Hilbert space of infinitely many dimensions, operators representing physical quantities, and a few real-number parameters such as time, mass, charge, and external fields. The two basic laws are Schrödinger’s equation and the law giving the statistical distribution of a given quantity for a given state. Interpretive schemes involve the various quantities attached to the particles and fields and the parameters. The laws of the symbolic universe imply statistical correlations between the quantities for given values of the parameters. The complexity of the symbolic universe and of the interpretive schemes varies with the type of system considered (single particle in external fields, several interacting particles, quantum fields).
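The two basic laws just mentioned can be stated compactly. The following is a minimal sketch under simplifying assumptions that the text does not make: a normalized pure state \lvert\psi(t)\rangle, and a quantity represented by an operator \hat{A} with discrete, non-degenerate eigenvalues a_n. The symbols \hat{H}, \hat{A}, and a_n are notation introduced here.

```latex
\begin{align*}
  i\hbar \, \frac{d}{dt} \lvert \psi(t) \rangle &= \hat{H} \, \lvert \psi(t) \rangle, \\
  P(a_n) &= \bigl\lvert \langle a_n \vert \psi(t) \rangle \bigr\rvert^2, \qquad \hat{A} \, \lvert a_n \rangle = a_n \lvert a_n \rangle .
\end{align*}
```

The first line governs the evolution of the state in the symbolic universe. The second gives the statistical distribution of the quantity represented by \hat{A}, which is the only route by which the state vector bears on measured quantities.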

In this example, it is obvious that the interpretive schemes do not spontaneously derive from the symbolic universe because there is no direct correspondence between the symbolic state vectors and the measured quantities. In the classical case, one may be tempted to believe the contrary because one can easily imagine an approximate concrete counterpart of the symbolic universe. This would be a mistake, because the symbolic quantities never have a direct concrete counterpart. Their concrete implementation requires ideal measurement procedures that are not completely definable within the symbolic universe of the theory. Most mechanical experiments appeal to position and time measurements, and these require, besides the laws of the symbolic universe, a notion of inertial frame and the means to concretely realize the measurements.

In general, the set of interpretive schemes associated with a theory varies in the course of time. Some schemes are there from the beginning of the theory, as they are associated with its invention. Others come at later stages of the evolution of the theory when it is applied in a more precise or a more extensive manner. In this process, some purely symbolic quantities may be promoted to the schematic level. For instance, late nineteenth-century studies of gas discharge and cathode rays provided experimental access to the invisible motions assumed in the electron theories of Hendrik Lorentz, Joseph Larmor, and Emil Wiechert.

A last remark on the present definition of theories is that all the structures it employs are defined mathematically. In this respect, it agrees with the so-called semantic view, in which physical theories are set-theoretical constructs serving as models of a putative linguistic formulation. The main difference is that it contains evolving substructures, the interpretive schemes, that enable us to conceive blueprints of concrete experiments. In a vague way, we may understand this power of the schemes as a consequence of their being generated all along the history of applications of the theory. But we are still in the dark regarding the precise way in which physicists articulate the relation between symbols, schemes, and experiments. This is where the notion of modules is indispensable.

2.2 Modules

Any advanced theory contains or is constitutionally related to other theories with different domains of application. The latter theories are said to be modules of the former. Modules occur in the symbolic universe, in the interpretive schemes, and in limits of these schemes. Since by definition they are themselves theories, they also contain modules, submodules, and so forth until the most elementary modules are reached. There are (at least) five sorts of modules. In reductionist theories such as the mechanical ether theories of the nineteenth century, there is a reducing module diverted from its original domain in order to build the symbolic universe of another domain. In many theories, the symbolic universe also appeals to defining modules that define some of the basic quantities. For instance, mechanics is a defining module of thermodynamics because it serves to define the basic concepts of pressure and energy. There are schematic modules that occur at the level of interpretive schemes and serve to describe the relevant measurements. These may belong to the symbolic universe, as is the case for pressure in the schemes of a thermodynamic gas system; or they may require additional modules as is the case for position and momentum in the schemes of one-particle quantum mechanics. There are specializing modules that are exact substitutes of a theory for subclasses of schemes under certain conditions. For instance, electrostatics is a specializing module of electrodynamics. Lastly, there are approximating modules that can be obtained by taking the limit of the theory for a given subclass of schemes. For instance, geometrical optics is an approximating module of wave optics. These categories are not mutually exclusive: for example, a schematic module can also be a defining module or an approximating module (Footnote 55).

Thus we see that there are diverse ways in which the full exposition of a given theory calls for other theories. The choice of the word “module” is intended to convey metaphorically this diversity as well as the fact that the same theory can be a module of a number of different theories. For instance, classical mechanics is a module of electrodynamics, thermodynamics, quantum mechanics, general relativity, etc.; and it can be so in different ways. At any given time, any non-trivial theory has a modular structure, namely: it includes a number of modules of the above-defined kinds.
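The recursive character of modular structure (modules are themselves theories, which contain modules in turn) can be pictured as a small data structure. The sketch below is purely illustrative and assumes nothing beyond the five kinds of modules and the examples named in this essay. The class and method names (`Theory`, `ModuleKind`, `add_module`, `all_modules`) are hypothetical, and treating geometry as a schematic module of mechanics is a simplification.

```python
from dataclasses import dataclass, field
from enum import Enum


class ModuleKind(Enum):
    """The five sorts of modules distinguished in the text."""
    REDUCING = "reducing"
    DEFINING = "defining"
    SCHEMATIC = "schematic"
    SPECIALIZING = "specializing"
    APPROXIMATING = "approximating"


@dataclass
class Theory:
    name: str
    # Each entry pairs a module (itself a theory) with the set of roles it
    # plays here; the categories are not mutually exclusive.
    modules: list = field(default_factory=list)

    def add_module(self, module, *kinds):
        self.modules.append((module, frozenset(kinds)))
        return self

    def all_modules(self):
        """Names of modules, submodules, and so forth, down to elementary ones."""
        seen = set()
        stack = [m for m, _ in self.modules]
        while stack:
            theory = stack.pop()
            if theory.name not in seen:
                seen.add(theory.name)
                stack.extend(m for m, _ in theory.modules)
        return seen


# Examples taken from the text; the encoding itself is an assumption.
geometry = Theory("geometry")
mechanics = Theory("mechanics").add_module(geometry, ModuleKind.SCHEMATIC)
thermodynamics = Theory("thermodynamics").add_module(mechanics, ModuleKind.DEFINING)
electrodynamics = Theory("electrodynamics").add_module(
    Theory("electrostatics"), ModuleKind.SPECIALIZING
)
wave_optics = Theory("wave optics").add_module(
    Theory("geometrical optics"), ModuleKind.APPROXIMATING
)
```

Calling `thermodynamics.all_modules()` collects both mechanics and, through it, geometry. Because each relation stores a set of kinds, the same theory can enter different theories in different roles. Nothing in this encoding captures the historical variability of modular structure, which would require the relations themselves to change over time.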

The modular structure of a theory is not unique and invariable. It depends on a number of factors: (1) the conception we have of this theory, (2) the type of experience that is conceivable at a given period of time, (3) the degree of elaboration of the theory, etc. As an example of the first factor, for some nineteenth-century physicists mechanics was a reducing module of electrodynamics; for phenomenologists it was only a defining module; for believers in the electromagnetic worldview, it was a schematic module. As an example of the second factor, approximating modules for the description of stochastic processes appeared in statistical mechanics only after the development of relevant experiments. As an example of the third factor, the boundary-layer approximating module of hydrodynamics appeared only at a late stage of its evolution, even though it concerned an old domain of experience.

This ambiguity and variability of modular structure may explain why philosophers of physics have paid little or no attention to it. This structure seems to elude any formal, rigorous epistemology. It seems too fleeting and too vague to embody the epistemic virtues that philosophers wish to find in physical theories. Against these impressions, it will now be argued that modular structure is essential to the application of theories, to their comparison, to their construction, and to their communication. These four aspects of theorizing activity will thus appear to be intimately related to each other. Modular structure has some sort of necessity: without it physical theories would remain paper theories.

The symbolic universe of a theory never applies directly to a concrete situation. The application is mediated through interpretive schemes that describe ideal devices and quantitative properties of these devices. In order to build a concrete counterpart of a scheme, we must know the correspondence between ideal device and real device, as well as concrete operations that yield the measured quantities. In any advanced theory, this correspondence obtains in a piecewise manner, through the modules involved in the schemes. Even the most superficial observer of a modern test of a theory cannot fail to notice the contrast between the simplicity of the theoretical statement to be tested and the complexity of the experimental setting. What enables physicists to make sense of this complexity is, for the most part, the modular structure of schemes.

The modular insertion of a previously known theory enables us to exploit the competence we have already acquired in applying this theory. This application may involve sub-modules and their schemes, and so forth until the concrete operations become so basic that their description can be expressed in ordinary language. Take the relatively simple case of mechanics. The schemes involve a geometric module, which one already knows how to realize by means of surveying with rigid rods (for example). This knowledge is essential in building the apparatus and realizing the relevant measurements. Other useful modules are those of kinematics and statics.