Abstract

With mobile and embedded devices getting more integrated in our daily lives, the focus is increasingly shifting toward human-friendly interfaces, making automatic speech recognition (ASR) a central player as the ideal means of interaction with machines. ASR is essential for many cognitive computing applications, such as speech-based assistants, dictation systems and real-time language translation. Consequently, interest in speech technology has grown in the last few years, with more systems being proposed and higher accuracy levels being achieved, even surpassing human accuracy. However, highly accurate ASR systems are computationally expensive, requiring on the order of billions of arithmetic operations to decode each second of audio, which conflicts with a growing interest in deploying ASR on edge devices. On these devices, efficient hardware acceleration is key for achieving acceptable performance. In this paper, we propose a technique to improve the energy efficiency and performance of ASR systems, focusing on low-power hardware for edge devices. We focus on optimizing the DNN-based acoustic model evaluation, as we have observed it to be the main bottleneck in popular ASR systems, by leveraging run-time information from the beam search. By doing so, we reduce energy and execution time of the acoustic model evaluation by 25.6 and 25.9 %, respectively, with negligible accuracy loss.

1 Introduction

Nowadays, computers are immensely more powerful than 50 years ago and have become nearly ubiquitous, with more and more people having access to them in the form of mobile devices such as smartphones. However, computer interfaces remain complicated, and most of the time, they require the user to completely focus on the computer or phone in order to use it. Some current trends try to blur the idea of using a computer, by proposing systems that interact with humans in a more natural way (e.g., voice), with interfaces that are familiar, or directly invisible, to us. In such a world of fluid human-computer interactions, the so-called cognitive computing is going to be a key component in many kinds of systems, where the traditional keyboard and mouse, and even touch-screens, are being left behind in favor of more intuitive and yet sophisticated interfaces based on speech recognition.

Cognitive computing [20, 28, 29, 43, 65] is a sub-field of artificial intelligence that refers to technology platforms and systems, hardware and/or software, that aim to simulate and mimic the human brain thought processes and reasoning in order to interpret data, understand natural language, and learn from interactions. The goal is to create and develop intelligent computer systems that can adapt and make decisions similar to the way humans would, tackling complex problems that usually require human cognition. This involves advanced techniques like machine learning, neural networks, natural language processing, speech recognition, computer vision, among other technologies. Unlike traditional programmed systems, cognitive computing solutions can analyze large amounts of data from various sources and identify patterns and insights. They can interpret text, images, speech and make connections across data. Over time, these systems continue to learn from their interactions and experiences.

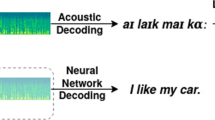

From all the technologies wrapped under the umbrella term cognitive computing, automatic speech recognition (ASR) [38, 79] will play a key role, becoming the next big leap in human–machine interactions. ASR consists of processing an audio signal (utterance) to obtain a written transcription, where the audio is broken down into overlapping fragments and transformed into a sequence of frames. Research in ASR is a marathon that started around fifty years ago with very high expectations, but soon faced important technology limitations, causing the progress in the field to slow down. However, with more powerful and ubiquitous computers, interest in this technology is again gaining popularity, and the industry and academia are working toward meeting the growing demand. Not long ago an important milestone was reached, when some systems claimed to perform better than humans in specific conditions for large vocabulary continuous speech recognition [71].

Modern ASR systems are often classified as Hybrid Deep Neural Network-Hidden Markov Model (Hybrid DNN-HMM) [1] or End-to-End (E2E) [56] systems. The Hybrid approach consists of a DNN-based acoustic model (AM), followed by a Viterbi beam search [58] to generate a word lattice with the most likely transcriptions, that is later re-scored by a Recurrent Neural Network (RNN) based Language Model (LM). To reduce the complexity of the ASR pipeline, E2E solutions aim at generating a transcription from features of audio frames by using a stand-alone DNN. However, most E2E systems include a beam search with an LM to significantly improve their accuracy, as explained in Sect. 2, and therefore, despite being called E2E, they usually combine multiple DNNs and a beam search to achieve the best accuracy.

The outstanding accuracy achieved by modern ASR systems is enabling them to quickly become a mainstream technology for many uses, as witnessed by the proliferation during the last decade of consumer products based on ASR like the Virtual Personal Assistants (VPA) [2, 4, 17, 41]. It comes, however, at the cost of performing inferences with huge DNN models that require on the order of billions of arithmetic operations per second of speech and expensive searches in large graphs (e.g., LM).

The consequence of this high computational cost is that ASR is usually performed on servers rather than on edge devices. Edge devices, such as smartphones and smartwatches, are often ill-equipped to perform highly accurate ASR within reasonable latency and energy consumption. In contrast, servers provide more than enough computing power for the task, which usually comes at the expense of high energy consumption. Energy is an even greater concern on edge devices, where it directly affects battery lifetime. Edge devices generally require hardware acceleration to provide highly accurate decoding within reasonable latency and energy. Despite the advantages of servers for ASR, on-edge ASR is the preferred solution for the long term. Service availability and low latency requirements are difficult or impossible to guarantee when ASR is provided as a cloud service in situations of no internet coverage or slow connection. Even more important are security and privacy issues that arise when sending sensitive audio data to company-owned remote servers.

A system-on-chip (SoC), commonly used in mobile devices with limited resources, can integrate some small accelerators to deliver accurate and robust on-edge ASR. However, even those accelerators may struggle to run modern ASR efficiently. In particular, the DNN-based AM evaluated with a DNN accelerator accounts for the bulk of computations and memory accesses, resulting in the most expensive component of the ASR system in terms of latency and energy consumption.

As an example, we first analyze the behavior of a baseline ASR system when running on an accelerator-rich SoC, detailed in Sect. 3. From the software perspective, our baseline ASR system is representative of recent proposals as it includes a DNN-based acoustic scoring stage [51] and a beam search [58]. From the hardware point of view, our baseline SoC consists of a multi-core ARM CPU, a DNN accelerator [9] and a beam search accelerator [73]. Our preliminary results, discussed in Sect. 4, show that the DNN evaluation used for acoustic scoring is the main performance and energy bottleneck, consuming \(82~\%\) of the execution time and \(68.3~\%\) of the energy in our baseline ASR system. The memory subsystem is a key component due to the huge storage capacity required to completely store the parameters of the DNN, and the high bandwidth requirements. Therefore, optimizations with alternative mechanisms for saving memory bandwidth, such as low-precision data quantization, are effective ways to improve performance and reduce the energy consumption of the DNN accelerator, while maintaining the accuracy of the DNN model.

In this paper, we aim at improving the performance and energy efficiency of the DNN accelerator employed in an ASR system, focusing on local real-time ASR in edge devices. To this end, we take a novel approach based on the observation that not all the input audio frames require the same level of precision. Figure 1 shows the number of hypotheses, a.k.a. tokens, expanded by the beam search at each frame of speech, where the input speech file corresponds to several frames of audio of a single utterance of the LibriSpeech [48] dataset. As can be seen, in some frames the decoder is very confident about the best hypothesis and, hence, the number of tokens expanded is very small, whereas in other parts of the speech the decoder is much less confident, which leads to a large number of hypotheses being considered. Regions of speech where the beam search is not confident require highly accurate DNN scores to avoid discarding the correct hypothesis. However, we show in this work that regions of the speech where the search is highly confident, i.e., where the number of tokens is small, do not require a high level of accuracy to make the correct choice and, hence, precision in DNN evaluation can be relaxed with negligible impact on word error rate (WER).

Then, we present a novel technique that exploits the above observation by leveraging feedback information from the beam search accelerator to select the appropriate accuracy in the DNN accelerator. More specifically, for regions of the speech where the search is highly confident, the DNN is evaluated at low precision (e.g., 4 bits), whereas regions of the speech with low confidence are evaluated at high precision (e.g., 8 bits). By counting the number of tokens at each frame in the beam search accelerator, and comparing it with a threshold, we can decide if the level of confidence is high enough to evaluate the DNN-based AM in low precision. The threshold is computed at run-time so it automatically adapts to the particular situation. By using the number of tokens as a metric of confidence and the run-time threshold, we can save \(16.9~\%\) of the energy and reduce the overall execution time by \(19.6~\%\) of the complete ASR system.
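The selection logic described above can be sketched as follows. This is only an illustration under stated assumptions: the text says that the threshold is computed at run-time but does not say how, so the scaled running mean used here, the `PrecisionSelector` class and its `window` and `scale` parameters are all hypothetical. The sketch also assumes that the token count of the most recently decoded frame drives the precision of the next DNN evaluation.

```python
from collections import deque

LOW_PRECISION_BITS = 4   # example value given in the text
HIGH_PRECISION_BITS = 8  # example value given in the text


class PrecisionSelector:
    """Picks the DNN precision from beam search feedback.

    ASSUMPTION: the run-time threshold is a scaled running mean of the
    token counts seen in recent frames. The paper text only states that
    the threshold is computed at run-time.
    """

    def __init__(self, window=100, scale=1.0):
        self.history = deque(maxlen=window)  # recent per-frame token counts
        self.scale = scale

    def threshold(self):
        if not self.history:
            return 0.0  # no feedback yet, so high precision is selected
        return self.scale * sum(self.history) / len(self.history)

    def select_bits(self, num_tokens):
        """num_tokens: tokens counted by the beam search for the latest frame.

        Returns the bit width to use for the next DNN evaluation.
        """
        thr = self.threshold()          # threshold from earlier frames only
        self.history.append(num_tokens)  # then fold in the new observation
        if num_tokens < thr:
            return LOW_PRECISION_BITS    # few tokens: search is confident
        return HIGH_PRECISION_BITS       # many tokens: keep full precision
```

With this policy, a frame with far fewer tokens than the recent average is flagged as high confidence, while the first frame always falls back to high precision because no feedback is available yet.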

To summarize, the main contributions of this work are the following:

- We characterize the performance and energy consumption of a modern ASR system on an accelerator-rich SoC. We identify the evaluation of the DNN-based acoustic model as the main bottleneck, as it requires 82 and 68.3 % of the total execution time and energy, respectively.
- We analyze the impact of reducing the precision of the DNN-based acoustic model in different regions of the speech. We conclude that frames with a high confidence in the search, i.e., with a small number of tokens expanded, can be evaluated at low precision with negligible impact on WER.
- We present a novel technique that dynamically selects the precision of the DNN accelerator based on the feedback information provided by the beam search accelerator. Our system evaluates the DNN at low precision for \(50~\%\) of the frames, resulting in \(19.6~\%\) reduction in execution time and \(16.9~\%\) energy savings for the entire LibriSpeech test set.

The rest of the paper is organized as follows. Section 2 contains some background and related work about the software and hardware solutions employed on ASR. Section 3 describes our baseline ASR system. In Sect. 4, we present our analysis of the baseline with a discussion of its bottlenecks and a description of the proposed solutions to alleviate them. Section 5 is a detailed description of the changes required in the hardware platform to support our technique. In Sect. 6, we describe our evaluation and methodology, providing some details regarding the configuration of the system and the models employed for its evaluation along our experimental results. Finally, Sect. 7 sums up the main conclusions of this work.

2 Background and related work

This section summarizes some popular software and hardware solutions for ASR.

2.1 ASR software models

The purpose of ASR is to obtain a written transcription from an utterance of audio. Most popular ASR systems do it by following the same algorithm consisting of three steps: (1) feature extraction, (2) acoustic scoring and (3) decoding.

Feature extraction receives the raw signal and generates a sequence of feature frames. Then, each of these frames is classified into acoustic tokens by employing an AM. For most systems, the AM is a DNN that generates, for each frame, a probability distribution over phonetic units (e.g., phonemes). The set of acoustic tokens is defined by the ASR designer. Many recent systems use word-pieces, but characters and phonemes are not rare. The decoding step combines the acoustic scores with scores from other sources to generate transcription hypotheses. The simplest approach for obtaining a transcription consists of selecting the phonetic unit with the highest probability on each frame. However, using more sophisticated approaches, e.g., a Language Model, generally results in better accuracy. When the ASR system includes an LM, the best-scoring transcription cannot be obtained by simply taking the single best-scoring phonetic unit on each frame. Instead, a beam search algorithm traverses the graph of possible transcriptions to find the sequence of phonetic units with the best overall score. When all the scored frames are incorporated in the hypotheses by the decoder, the best hypothesis is regarded as the final transcription for the input utterance.
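The simplest decoding approach mentioned above, picking the best-scoring phonetic unit on each frame, can be sketched in a few lines. The function name `greedy_decode` and the toy scores are hypothetical and serve only as an illustration; a real system would decode with a beam search over a graph instead.

```python
def greedy_decode(frame_scores, units):
    """Return the highest-scoring unit for every frame.

    frame_scores: list of per-frame score lists, one score per unit.
    units: list of unit labels, aligned with the score positions.
    """
    best_units = []
    for scores in frame_scores:
        # index of the maximum score in this frame
        best_index = max(range(len(scores)), key=scores.__getitem__)
        best_units.append(units[best_index])
    return best_units


# Toy example with three hypothetical units and two frames.
example = greedy_decode([[0.1, 0.7, 0.2], [0.6, 0.3, 0.1]], ["a", "b", "c"])
```

Because every frame is decided in isolation, this approach cannot use LM scores, which is why the text above describes a beam search for systems that include an LM.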

Despite the homogeneity implicit in the previous description, there are many alternative approaches to implement those steps. Feature extraction usually consists of a Mel-Frequency Cepstral Coefficients (MFCC) [36] extractor preceded by a signal processing step that enhances certain characteristics of the signal and reduces noise. However, MFCCs are not the only features used for ASR, and signal pre-processing can be performed in a variety of ways. The acoustic scoring consists of a DNN inference, but there is a wide variety of DNNs used among ASR systems (e.g., time delay neural network (TDNN) [51], DeepSpeech [3], Listen Attend and Spell (LAS) [8] or Transformer [67]) and each comes with its own peculiarities.

Decoding can also be performed in a variety of ways. Hybrid systems, such as [54, 78], do it by traversing a complex graph, which contains transition probabilities between a heterogeneous set of symbols, such as tri-phones, phonemes and words. On the other hand, E2E systems rely on simpler graphs. For example, wav2vec [5, 6] systems employ a tree structure of phonemes, word-pieces or words. This structure serves to limit the symbols to which each symbol can transition. However, this graph does not contain scores. Additionally, an LM or DNN can be included in the decoding step of E2E systems to improve accuracy. The LM consists of a graph that contains scores that represent transition probabilities between words.

As previously stated, the acoustic scoring is essentially a classification problem, so it is commonly implemented as a DNN. Our goal in this work is to ease the DNN inference evaluation, as it is the most costly step in terms of computations and memory accesses. However, neural networks are a broad and fast changing domain, with many architectures to choose from and new proposals every year. Most recent ASR systems employ a DNN-based AM inspired by the E2E Transformer architecture with several encoder-decoder blocks of attention layers, such as the Conformer [18], wav2vec [5] and Whisper [59] systems. Attention-based DNNs improve accuracy at the cost of very large and computationally expensive models, and despite being called E2E due to their ability to provide output transcripts, most employ additional post-processing steps in the decoding to improve accuracy, making the system more complex than a traditional Hybrid approach. For instance, the Conformer network achieves \(2.1~\%\) WER on LibriSpeech test_clean with 118.8 M parameters, wav2vec trains a 317 M parameter network that reaches \(2.2~\%\) WER on the same benchmark, while Whisper reaches \(2.0~\%\) WER without an LM but with 1550 M parameters. Such big ASR models are not suitable for low-power edge devices with limited hardware resources.

While E2E ASR systems achieve state-of-the-art results in most benchmarks in terms of accuracy [23], Hybrid systems have been highly optimized for production for decades, so they are still used in a large proportion of commercial ASR systems [31]. In this paper, we choose to evaluate a Hybrid DNN-HMM ASR system with a TDNN-based AM, not only because it offers a good trade-off between accuracy and hardware requirements for on-edge ASR, but also because it accurately represents how many commercial ASR systems work. In addition, we expect that our proposal could also be implemented for E2E systems relying on some sort of graph processing like an LM with a beam search algorithm. Section 3 provides a more detailed description of the components of our baseline ASR system.

2.2 Hardware-accelerated ASR

The field of machine learning has experienced enormous growth in the last few decades, and that has been reflected in computer architecture research, with a high number of proposals to increase the performance of machine learning algorithms. Since heterogeneous systems have also been gaining popularity, many of the new proposals regarding machine learning are related to hardware accelerators for specific algorithms, with DNN acceleration [26] being a popular alternative. However, many of the proposed accelerators focus on exploiting characteristics of specific types of networks, such as CNNs [10, 11, 16] or RNNs [19, 66], which are not a good fit for our baseline ASR system. Our baseline relies on a TDNN acoustic model [51, 55], mostly composed of fully connected layers. Other recent works focus on high performance computing. Chen et al. [37] propose DaDianNao, a modular accelerator for DNN and CNN composed of \(67.72~\text {mm}^2\) nodes, consuming 15.97 W each. Song et al. [21] designed ESE, a DNN accelerator optimized for LSTM layers, consuming 41 W on an FPGA. This work is different because we focus on low-power on-edge computation, with tight constraints in area and power dissipation.

Regarding specific proposals for speech recognition, most prior work focused on older ASR systems [35, 52, 76, 77], and assumed smaller vocabularies and/or acoustic models than modern solutions such as the one considered in this paper. Price et al. [57] designed a chip that covers the whole pipeline, from voice activity detection (VAD) and audio capture to beam search decoding. The area of the chip is \(13.18~\text {mm}^2\), and it consumes 11.27 mW (not including power from off-chip components, such as main memory, which is the main bottleneck according to our models) while running a 145k-word vocabulary benchmark. In contrast, our work focuses on bigger models, including a 200k-word vocabulary decoding graph and a 16.17 MB acoustic model (as opposed to their 3.71 MB model), to achieve state-of-the-art accuracy.

Other proposals try to optimize a specific part using only information local to that component; for example, Yazdani et al. [73, 75] propose a beam search accelerator, consuming 462 mW plus the power dissipated by the GPU (between 2 W and 6 W), which is used for the acoustic model evaluation. Additionally, they optimize the beam search accelerator by performing on-the-fly composition of the WFST-based decoding graph [74]. Our work is different since our aim is not to improve the beam search energy efficiency, but to leverage information known in the beam search accelerator to improve energy and performance of the DNN accelerator.

Another common optimization consists in exploiting computation reuse in the DNN accelerator [22, 25, 47, 60, 62], or compressing the DNN model, e.g., via weight pruning [19, 21, 61, 63]. In this work, we follow a different approach. Instead of optimizing a component by exploiting local properties, we look at the system from a high-level perspective, exploiting the inter-dependencies among ASR stages. Note, however, that our proposal can still be applied on top of any of these optimizations, providing additional gains.

Earlier proposals for hardware-accelerated ASR [13, 34, 42] focused on GMM-based recognizers, with CMU’s Sphinx as a usual software baseline, and vocabularies with fewer than 100k words (e.g., 5k/20k-word Wall Street Journal, 64K-word Broadcast News). More recently, Tabani et al. [68] proposed an accelerator for the PocketSphinx system, configured to decode a 130k-word LibriSpeech-based benchmark. PocketSphinx is based on CMU Sphinx and is aimed at portability. By using that accelerator (a \(0.94~\text {mm}^2\), 110 mW chip), the decoding time and energy are reduced by 5.89x and 241x, respectively, over a mobile GPU implementation. However, this type of system has become less popular nowadays due to its lower accuracy. For instance, Tabani et al. [68] report a WER of 24.14 %, which is much higher than that of current state-of-the-art systems.

3 Baseline ASR system

This section describes the software and hardware of our baseline ASR system. First, we review the software pipeline of a Hybrid DNN-HMM ASR system, which is largely influenced by recent advances in deep learning. Modern ASR systems combine several DNNs used for different sub-tasks, such as Acoustic Scoring or Language Model re-scoring, with other algorithms for speaker adaptation, beam search, etc. Second, we describe a mobile hardware SoC platform for real-time large vocabulary ASR, including a quad-core ARM CPU integrated with recently proposed accelerators for the most computationally demanding tasks in ASR systems.

3.1 ASR pipeline

The top part of Fig. 2 shows the software pipeline of our baseline ASR system. As explained in Sect. 2, the first stage is the feature extraction [14, 24]. This component splits the raw audio signal into overlapping frames of 25 ms of speech. Next, it computes a vector of features to encode each frame, typically MFCC [36]. Furthermore, it also computes an iVector [15, 27] for speaker adaptation [7, 44, 50, 64, 80, 81]. Therefore, each frame is encoded as a vector that is the concatenation of the audio features and the iVector. The objective of this representation is to expose the information that is relevant for the system in a compact manner.
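The framing step can be illustrated with a short sketch. Only the 25 ms frame length comes from the text; the 16 kHz sample rate and the 10 ms frame shift are assumptions chosen for illustration, and `split_into_frames` is a hypothetical helper name.

```python
def split_into_frames(samples, sample_rate=16000, frame_ms=25, shift_ms=10):
    """Split raw audio samples into overlapping frames.

    ASSUMPTIONS: 16 kHz audio and a 10 ms shift between frame starts.
    The text only states that frames are overlapping and 25 ms long.
    """
    frame_len = sample_rate * frame_ms // 1000  # 400 samples at 16 kHz
    shift = sample_rate * shift_ms // 1000      # 160 samples at 16 kHz
    frames = []
    start = 0
    # Keep only complete frames; a trailing partial frame is dropped.
    while start + frame_len <= len(samples):
        frames.append(samples[start:start + frame_len])
        start += shift
    return frames


# One second of dummy audio yields 98 complete, overlapping frames.
frames = split_into_frames(list(range(16000)))
```

Each frame would then be turned into a feature vector (e.g., MFCC) and concatenated with the iVector before being passed to the acoustic model.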

The next pipeline stage is the evaluation of the acoustic model. This model is executed for every frame of speech. It takes as input the acoustic features, i.e., MFCC+iVector, of one frame of speech and its neighbor frames and it computes the probability of that frame representing each of the possible sound units (referred to as sub-phonemes, or senones) included in the model.

The most common solution to implement the AM consists of a DNN trained to compute senones’ probabilities, a.k.a. acoustic scores, for each input frame. Although different deep learning solutions, such as multi-layer perceptrons (MLP) or recurrent neural networks (RNN), have been successfully applied for acoustic scoring, a more effective approach is to use a time delay neural network (TDNN) [69]. TDNNs for speech recognition were proposed long ago, but they have been proven recently to improve the accuracy of modern ASR systems more efficiently than other approaches [51], such as using RNNs or introducing long contexts to an MLP.

The main idea behind a TDNN is to have a network that is suited to recognize sequences, but does not have the overheads of RNNs. A TDNN is a feed-forward network whose layers not only receive their input from the previous layer of the current frame, but also from past and future frames. Figure 3 shows an example of the dependencies between layers on a simple TDNN network. In this case, every layer depends on the output from the previous layer in the time-steps \(t-1\), t and \(t+1\). Using this network instead of an RNN allows for exploiting more parallelism by removing some dependencies between time-steps.
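A single TDNN layer with the \(t-1\), t, \(t+1\) dependencies described above can be sketched as follows. This is a minimal illustration, not the network used in this work: the ReLU activation, the clamping of indices at the utterance boundaries and the function name `tdnn_layer` are assumptions.

```python
def tdnn_layer(inputs, weights, bias, context=(-1, 0, 1)):
    """Apply one TDNN layer to a sequence of input vectors.

    inputs:  list of T vectors (lists of floats) from the previous layer.
    weights: one row per output unit, each of length len(context) * in_dim.
    bias:    one value per output unit.
    ASSUMPTIONS: ReLU activation; edge frames are repeated at the borders.
    """
    num_frames = len(inputs)
    outputs = []
    for t in range(num_frames):
        spliced = []
        for offset in context:
            # Clamp the index so that t-1 and t+1 stay inside the utterance.
            idx = min(max(t + offset, 0), num_frames - 1)
            spliced.extend(inputs[idx])
        frame_out = []
        for row, b in zip(weights, bias):
            pre_activation = sum(w * x for w, x in zip(row, spliced)) + b
            frame_out.append(max(0.0, pre_activation))  # ReLU
        outputs.append(frame_out)
    return outputs


# Toy example: 1-dimensional inputs, one output unit that sums its context.
toy = tdnn_layer([[1.0], [2.0], [3.0]], [[1.0, 1.0, 1.0]], [0.0])
```

Since the output at time-step t depends only on the previous layer, and not on the same layer's output at other time-steps, all time-steps of a layer can be computed independently, which is the source of the extra parallelism mentioned above.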

As discussed in Sect. 2, in an E2E system, a greedy algorithm could be used to obtain a transcription from the acoustic scores computed by the AM: it just selects the sub-phoneme with maximum score for each frame of speech and performs a simple post-processing to provide a transcription. However, this greedy decoding leads to sub-optimal WER, and accuracy is substantially improved by incorporating a lexicon and a language model by means of a beam search. For example, Google’s E2E system LAS [8] reduces its WER from 6.8 to 5.8 % in the LibriSpeech dataset [48] when using a beam search with an LM [49]. NVIDIA’s Jasper improves its WER from 11.9 to 8.7 % for the same dataset when using a beam search and a TransformerXL LM to re-score the likelihoods of the E2E DNN [30]. Baidu’s DeepSpeech obtains a relative improvement of \(41.6~\%\) in WER when using the output likelihoods of the E2E RNN to drive a beam search with a 3-gram LM [3]. Finally, a work from Facebook in E2E systems [67] also reports large improvements in WER when using a beam search and LM re-scoring. Therefore, no matter if the ASR system is Hybrid or E2E, the state-of-the-art solution is to use the DNN-computed acoustic scores to perform a beam search [12, 32, 39, 70], which is the last stage of our pipeline.

The objective of the beam search is to find the best path in a graph that contains all the possible sequences for the transcription, weighted by probabilities. This graph is known as the decoding graph and is usually generated by combining an LM with an HMM and a lexicon. All these models are efficiently represented through a Weighted Finite State Transducer (WFST) [45], a mathematical framework to build graphs specifically for sequence-to-sequence translation. The WFST is a type of weighted graph with a set of defined operations that make it possible to efficiently combine information from several models into the same graph. For example, a WFST makes it possible to merge a lexicon and a language model into a single graph.

In order to efficiently traverse the decoding graph, an algorithm known as Viterbi beam search [58] is used. This algorithm obtains the least costly path, i.e., the most likely transcription, given the acoustic scores and the WFST-based decoding graph. This beam search algorithm works by expanding, for each frame, all the active states in the graph, named tokens. At start time, the only token in the active set of expanded tokens represents a special state from the graph, denoted as start state. For each frame of speech, each token in the active set is replaced by a set of new tokens corresponding to all reachable states from it, each with a weight equal to the addition of the source token weight, the traversed arc’s weight and the score obtained by the AM for the destination state.
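The token expansion for one frame can be sketched as follows. This is a simplified illustration that treats weights as costs (lower is better) and keeps only the best token per destination state, as in a standard Viterbi recursion. The dictionary-based graph representation and the name `expand_tokens` are assumptions, and a real WFST decoder also handles labels, epsilon arcs and back-pointers.

```python
def expand_tokens(active, arcs, acoustic_cost):
    """Expand every active token by one frame.

    active:        dict mapping state -> accumulated cost of its token.
    arcs:          dict mapping state -> list of (dest_state, arc_cost).
    acoustic_cost: dict mapping dest_state -> AM cost for this frame.
    Returns a dict with the new tokens (best cost per destination state).
    """
    new_tokens = {}
    for state, cost in active.items():
        for dest, arc_cost in arcs.get(state, []):
            # source token weight + arc weight + acoustic score
            new_cost = cost + arc_cost + acoustic_cost[dest]
            if dest not in new_tokens or new_cost < new_tokens[dest]:
                new_tokens[dest] = new_cost
    return new_tokens


# Toy graph: the start state 0 reaches states 1 and 2, and 1 reaches 2.
toy_arcs = {0: [(1, 1.0), (2, 0.5)], 1: [(2, 0.2)]}
frame1 = expand_tokens({0: 0.0}, toy_arcs, {1: 0.3, 2: 2.0})
frame2 = expand_tokens(frame1, toy_arcs, {2: 0.1})
```

In the toy graph, state 2 has no outgoing arcs, so its token disappears after the second frame and only the path through state 1 survives.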

To keep the search space manageable, a beam width is used to discard very unlikely tokens. Hence, after expanding the tokens for a given frame, a pruning step is performed: for each token, the distance between its weight and the best token’s weight is computed, and if it is larger than the beam width the token is discarded. Note that, due to this pruning, the number of alternative tokens considered for each frame may vary widely, as shown in Fig. 1. More specifically, for regions of speech where the decoder is highly confident only a few tokens are expanded, whereas the beam search expands a large number of tokens when it is less confident about the correct transcription. In this work, we exploit the degree of confidence in the beam search to perform a more efficient AM evaluation: we argue that precision of acoustic scores is critical when the beam search shows low confidence, but it can be relaxed when the decoder is highly confident.
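The pruning step can be sketched as follows, again treating weights as costs where lower is better. The function name `prune_tokens` and the toy values are hypothetical.

```python
def prune_tokens(tokens, beam_width):
    """Discard tokens whose cost is too far from the best token's cost.

    tokens: dict mapping state -> accumulated cost (lower is better).
    """
    if not tokens:
        return {}
    best_cost = min(tokens.values())
    return {
        state: cost
        for state, cost in tokens.items()
        if cost - best_cost <= beam_width  # keep tokens inside the beam
    }


# Toy frame: the token for state 3 falls outside a beam of width 5.
survivors = prune_tokens({1: 1.0, 2: 3.0, 3: 10.0}, beam_width=5.0)
num_tokens = len(survivors)  # per-frame token count used as confidence
```

When one hypothesis is clearly better than the rest, most tokens fall outside the beam and the count is small, which is the confidence signal exploited in this work.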

The output of the beam search is a word lattice containing the most likely transcriptions for the input audio signal (utterance). At this point, the best path in the lattice could be recovered by following back-pointers with an inexpensive backtracking step. However, to further improve the WER, the word lattice is normally re-scored by using a more sophisticated language model encoded with an RNN or a Transformer Network [32, 67, 70, 72].

3.2 Low-power hardware for ASR

Figure 2 illustrates the mobile SoC assumed in this work. It consists of a quad-core ARM CPU, a DNN accelerator and a beam search accelerator, all of them sharing the same system memory (8GB of LPDDR4). The CPU, an ARM Cortex A57, orchestrates the execution of the ASR pipeline: it prepares the datasets in main memory and issues commands to the accelerators to offload the most computationally intensive parts. Furthermore, it also runs part of the Feature Extraction stage.

The DNN accelerator is inspired by DianNao [9]. It consists of a command processor, local scratchpad memories and 16 neural function units (NFUs). The NFU contains all the units required to perform the DNN computations, including an array of adders and multipliers, in addition to specialized units for the activation functions. The NFU is pipelined in three stages: NFU-1, to multiply the inputs by the weights; NFU-2, to add-reduce the results from NFU-1; and NFU-3, to perform the activation function. The internal memory is composed of three SRAM buffers to store weights (16KB), inputs (1KB) and outputs (1KB).
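A functional view of the three NFU stages for a single output neuron can be sketched as follows. This models only the arithmetic, not the hardware pipeline or its timing; the function names and the choice of ReLU as the activation are assumptions.

```python
def nfu1_multiply(inputs, weights):
    """NFU-1: multiply each input by its weight."""
    return [x * w for x, w in zip(inputs, weights)]


def nfu2_add_reduce(products):
    """NFU-2: add-reduce the products coming from NFU-1."""
    return sum(products)


def nfu3_activation(value):
    """NFU-3: apply the activation function (ASSUMPTION: ReLU)."""
    return max(0.0, value)


def evaluate_neuron(inputs, weights):
    """Chain the three stages to compute one output neuron."""
    return nfu3_activation(nfu2_add_reduce(nfu1_multiply(inputs, weights)))


# Toy example: 1*3 + 2*(-1) = 1.
result = evaluate_neuron([1.0, 2.0], [3.0, -1.0])
```

In hardware the three stages work on different data at the same time, so a new set of multiplications can start while earlier results are still being reduced and activated.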

We employ the beam search accelerator presented in [73]. It contains specialized units to fetch from memory the required data to traverse the decoding graph: state issuer, arc issuer and acoustic likelihood issuer. The likelihood evaluation unit computes the weights of the new tokens by combining source token weight, the arc’s weight and the DNN-computed acoustic score. Finally, the token issuer maintains the list of tokens and generates the word lattice. Regarding the on-chip memories, it includes several caches to speed up the accesses to different data (state cache of 128KB, arc cache of 256KB and token cache of 128KB) and two hash tables to track the tokens for the current frame and next frame of speech (768KB).

Each stage of the ASR software pipeline is executed on the best suited hardware. Figure 4 shows a diagram of the execution of the different components of the ASR pipeline. The extraction of the features from the audio frames is essentially composed of matrix–vector and matrix–matrix operations, so it can be executed almost exclusively in the DNN accelerator. The extraction of the iVector contains matrix–matrix operations, which can be efficiently handled by the DNN accelerator, and other operations not well-suited for DNN hardware that are executed in the CPU. The acoustic model DNN inference and beam search are computed in the DNN and beam search accelerators, respectively.

Fig. 4 Execution of the ASR pipeline on the available hardware. The horizontal axis shows time, whereas the vertical axis contains a row for each hardware component. When a frame is captured, the features are extracted using the CPU and the DNN accelerator. After that, the acoustic scores are obtained by evaluating the acoustic model (AM) in the DNN accelerator. These acoustic scores are then merged with the language model (LM) scores during beam search, executed on the beam search accelerator. The system is in idle state until a new frame arrives.

Although some steps in the ASR pipeline run on different hardware, they cannot be executed in parallel because each step depends on the previous one. In addition, we assume a stream evaluation of the input utterance, in which the frames are evaluated one by one while the utterance is being captured by the ASR front-end; this is commonly referred to as online real-time speech recognition. Because the online ASR system runs on hardware that is faster than real-time, each frame is fully processed before the next one arrives, so we cannot parallelize across frames either. Another limitation for the parallel execution of the beam search and DNN accelerators is that the DNN evaluation requires a large amount of data from main memory, i.e., the LPDDR4 in our SoC. In fact, its performance is mainly limited by the memory bandwidth that it requires.

4 Baseline analysis

In this section, we analyze the baseline ASR system presented in Sect. 3 in terms of execution time and energy consumption, showing the breakdown of all the components in the system and demonstrating, as previously discussed, that the component that consumes the most time and energy is the DNN-based acoustic model evaluation.

4.1 ASR system parameters

We implement the ASR software pipeline described in Sect. 3.1 using Kaldi [53], a widely popular framework for building speech recognition systems. Table 1 shows the relevant parameters of the ASR employed in our baseline system. The acoustic model is a TDNN network, trained to receive as input a 40-dimension MFCC array concatenated with a 100-dimension iVector, complemented with a context of 21 past frames and 21 future frames. The network weights and inputs are linearly quantized to 8 bits. The output of the acoustic model represents the probabilities for the 6056 sub-phoneme elements or senones present in the model. The Decoding Graph is a WFST that combines a lexicon of 200k words and a 4-gram language model.

Then, we execute the ASR system on the hardware platform described in Sect. 3.2. Table 2 shows the parameters for the mobile SoC. We chose a low-power ARM CPU with 8 GB of LPDDR4 DRAM, complemented with two accelerators: one to perform the beam search [73] and the other for the DNN inference [9]. All these subsystems share the same address space, are connected to the main system bus and are served by the same memory controller. The methodology employed to obtain the execution time and energy consumption of this platform is described in Sect. 6.1.

4.2 Breakdown of energy and execution time

We conduct preliminary experiments on the baseline ASR system by running the LibriSpeech test set, which consists of more than five hours of speech from a large number of speakers. More details on the LibriSpeech corpus are provided in Sect. 6.1.

According to our experiments, the distribution of energy consumption among the ASR system components is as shown in Fig. 5, where we can see how most of it is consumed by the DRAM during the DNN inference. Chart 5a shows the breakdown of the energy consumed during the evaluation of the LibriSpeech test set, split by the components of the ASR software pipeline. The most expensive parts are the iVector and the acoustic model evaluation, consuming 28.9 and 68.3 %, respectively. The iVector computation is expensive in terms of energy consumption because it has to be partially executed on the CPU, running at an average power of \(2.7~\text {W}\). Since the AM evaluation is the most costly part, our target is to identify how much of that energy is consumed by each hardware component in the SoC. Chart 5b shows the breakdown of the energy consumed during the AM evaluation by the different hardware components. The LPDDR4 DRAM employed as main memory is the component that consumes the most, with \(85~\%\) of the total energy. The rest of the energy is consumed by the CPU, which is idle during the TDNN evaluation, and the accelerators, of which the beam search accelerator is also idle but is not shut off because it is beneficial to reuse its internal caches and scratchpads across consecutive frames.

Fig. 5 Breakdown of energy consumption during ASR evaluation on the mobile SoC presented in Sect. 3.2. Chart a shows the energy breakdown by ASR software component, where the most costly part is the acoustic model TDNN evaluation, whereas chart b shows the energy breakdown among hardware components during the AM evaluation. Here, it can be seen how reads and writes from the DRAM are responsible for most of the consumed energy

Similarly, the TDNN evaluation for acoustic scoring is also the most time-consuming part of the ASR system, as it takes \(82~\%\) of the total execution time. The feature extraction and the beam search require 14.8 and 3.2 % of the execution time, respectively. The mobile SoC platform meets the real-time constraints for all the utterances in the LibriSpeech test set (more than 2k utterances), achieving real-time factors of 0.05xRT on average and 0.09xRT in the worst case.
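To make the real-time factor figures concrete, the following minimal sketch uses the standard definition of the real-time factor (processing time divided by audio duration). The function names are ours and purely illustrative; they are not part of the evaluated system.

```python
def real_time_factor(processing_seconds, audio_seconds):
    """Real-time factor: time spent decoding divided by the duration of the audio."""
    return processing_seconds / audio_seconds


def speedup_over_real_time(rtf):
    """How many times faster than real time a system with the given RTF runs."""
    return 1.0 / rtf
```

Under this definition, an average factor of 0.05xRT corresponds to decoding about 20 times faster than real time, and the worst case of 0.09xRT to about 11 times faster.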

Summarizing, it is clear from these results that the TDNN is the most energy-intensive and time-consuming component of the baseline ASR system. Furthermore, we have identified that most energy (\(58.1~\%\)) is consumed because of main memory accesses during the TDNN evaluation. We have measured that \(99~\%\) of those accesses are for fetching weights. The weights of the TDNN are stored in the LPDDR4 DRAM of the SoC. We assume that the weights are initially loaded into the SoC main memory during the first evaluation of the TDNN, and later those weights are reused by fetching from the main memory for multiple consecutive executions of the TDNN model with different input frames. This is because the network is too large to be kept in the on-chip memory buffers of the DNN accelerator, and since we are evaluating the input frames one by one, we cannot take advantage of the temporal locality of weights for different input frames. Consequently, the main bottleneck of the baseline ASR system is reading from the main memory due to the limited bandwidth and the huge amount of data that has to be transferred to the DNN accelerator.
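As a quick consistency check of the figures above (our arithmetic, not an additional measurement), the share of total energy spent on main memory accesses during the TDNN evaluation is the product of the AM share of the system energy (Fig. 5a) and the DRAM share within the AM evaluation (Fig. 5b):

\[ 0.683 \times 0.85 \approx 0.581 \]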

4.3 DNN-based AM optimizations

A well-known optimization to alleviate the high costs of the AM evaluation consists of pruning the DNN model by an iterative process of removing some weights and retraining. This approach results in a sparse network, which can be significantly smaller than the dense network. However, the pruning algorithm is expensive, and the inference with a sparse network requires important changes in the DNN accelerator.

Another popular technique is to use aggressive DNN quantization. However, quantizing inputs and weights to fewer than 8 bits results in a significant degradation in recognition accuracy, making it unsuitable when applied uniformly to all frames. Figure 6 shows the WER for test_clean and test_other evaluated with the TDNN-based AM at different levels of quantization. While 8 bits result in minor accuracy loss compared to full precision, going to 4 bits increases the WER by \(49~\%\) in test_clean and \(61~\%\) in test_other, and if the weights are quantized to 2 bits, the system does not work, generating invalid transcriptions.
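To illustrate why fewer bits cost accuracy, the sketch below applies a simple linear quantizer to a handful of weights and measures the worst-case rounding error. It assumes symmetric, per-tensor scaling, which is only one possible form of linear quantization; the text does not specify the exact scheme, and the function names are hypothetical.

```python
def quantize_linear(weights, bits):
    """Symmetric linear quantization of floats to signed `bits`-bit integers.

    Assumption: one scale per weight list, chosen from the largest magnitude.
    """
    qmax = 2 ** (bits - 1) - 1                      # 127 for 8 bits, 7 for 4 bits
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest integer level and clamp to the representable range.
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map integer levels back to approximate real-valued weights."""
    return [v * scale for v in q]


def max_abs_error(weights, bits):
    """Largest absolute difference between the original and quantized weights."""
    q, scale = quantize_linear(weights, bits)
    return max(abs(w - d) for w, d in zip(weights, dequantize(q, scale)))
```

With 4 bits there are only 15 usable levels instead of 255, so the rounding step, and with it the worst-case error, is roughly 18 times larger than at 8 bits for the same weight range.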

In the following sections, we show how the beam search confidence can be leveraged to reduce energy consumption and increase performance by dynamically selecting the appropriate precision for the acoustic model inference.

5 Dynamic DNN precision

This section presents the main contributions of our work. First, we describe our technique to dynamically set the precision of the DNN used for acoustic scoring based on the key observation of the beam search confidence. In Sect. 4, we showed that fetching the DNN weights from main memory takes a large percentage of the total energy consumption. Furthermore, we showed that reducing the DNN precision for all the frames results in a significant accuracy loss. Therefore, we take a different approach and propose to reduce the precision for parts of the speech where the confidence of the decoder is high and, hence, it does not require highly accurate acoustic scores to avoid discarding the correct hypothesis. Then, we describe the hardware support required to implement our dynamic DNN precision technique on top of the DNN accelerator.

5.1 Beam search confidence

Determining in advance which frames require more or less precision is a challenging problem. To solve it, we propose to look at the number of tokens expanded during the beam search, based on the observation that a high number of tokens means that the beam search is not very confident about the correct transcription, whereas a low number of tokens is related to high confidence. Figure 7 compares the WER obtained for test_clean and test_other with different percentages of frames computed at 4 bits. When the precision is reduced for frames with a low number of tokens, we can evaluate many more frames in low precision while maintaining a low WER loss than if the frames are chosen randomly. Therefore, frames with high confidence, i.e., a low number of expanded tokens, are good candidates to be evaluated in low precision in the DNN-based acoustic model. Note that when the DNN is evaluated for a given frame, the number of tokens expanded during the evaluation of the previous frame is already known by the beam search accelerator, and thus, this information can be exposed to the DNN accelerator to set the precision at run-time.

5.2 Static threshold setting

Our technique decides which frames of speech show high or low confidence in the decoding based on the number of tokens expanded during the previous beam search step. If the evaluation of one frame results in few current hypotheses, the DNN inference for the next frame is performed in low precision. The threshold for the number of tokens is set with the goal of achieving a given target percentage of frames evaluated at low precision. There are two options to set this threshold: a per-utterance threshold, meaning that each utterance is forced to keep the same percentage of low-precision frames by using a different threshold value; or a global threshold, meaning that the percentage of low-precision frames differs for each utterance but the target percentage is achieved after computing a number of utterances. We have observed that a global threshold gives better results because different utterances have different precision requirements. If the threshold is set individually for each utterance, we ignore those requirements by forcing the same percentage of low-precision frames in all the utterances, and so the global WER increases.

The easiest solution for a global threshold would be to measure the number of tokens for each frame in the train set, and fix the threshold statically according to the desired percentage of low precision frames. However, we have observed that the threshold obtained with this method does not match the desired percentage of low precision frames in the test sets. In Fig. 8, the train set was evaluated with high precision, then we sorted the frames according to the number of tokens expanded by the beam search, and selected the frames at percentiles 30, 50 and 70. The number of tokens at those frames was used as the threshold to evaluate test_clean and test_other and measure the percentage of frames classified for low precision. This approach for setting the threshold results in a percentage of low precision frames significantly lower than in the train set. The reason is that when low precision is used, the number of tokens expanded during the beam search changes with respect to the case when high precision is always used, modifying the token distribution and moving the percentiles.

Fig. 8 Percentage of frames classified for low precision computation in our technique when using a fixed threshold. To obtain this threshold, the train set is evaluated at high precision. Then, all frames are sorted according to the number of tokens expanded during the beam search, and the number of tokens for the frame at the target percentile is set as threshold for test_clean and test_other evaluation. As we can see, the desired percentage of low precision frames is not achieved in the test sets, since it is much lower than the target percentage used in the train set

Figure 9 shows the cumulative frequency of frames from test_clean and test_other according to the number of tokens expanded, when the DNN is evaluated completely at 8 bits and 4 bits. In this case, the 50th percentile for the test set evaluated at 8 bits is at 883 tokens, whereas when the test set is evaluated at 4 bits, it is at 1961 tokens (vertical dashed red lines). It is clear from the figure that when the acoustic model is evaluated at lower precision, the number of tokens per frame increases. In other words, the overall confidence decreases when low precision is used.
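The static procedure and its failure mode can be sketched in a few lines. This is an illustrative reconstruction under our own assumptions: the function names are hypothetical, the percentile is taken by simple index selection on the sorted counts, and a frame is classified for low precision when its token count is strictly below the threshold.

```python
def static_threshold(train_token_counts, target_pct):
    """Token count of the frame sitting at the target percentile of the profiled set."""
    ordered = sorted(train_token_counts)
    idx = min(len(ordered) - 1, int(len(ordered) * target_pct / 100))
    return ordered[idx]


def low_precision_share(token_counts, threshold):
    """Percentage of frames whose token count falls below the threshold."""
    low = sum(1 for t in token_counts if t < threshold)
    return 100.0 * low / len(token_counts)
```

For example, with profiled counts 100, 200, ..., 1000 and a 50 % target, the threshold is 600 and exactly half of the profiled frames fall below it. If low precision then doubles every token count, only the frames with 200 and 400 tokens remain below 600, so the achieved share drops to 20 %. The doubling factor is made up for illustration; the actual shift is the one shown in Fig. 9.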

5.3 Dynamic threshold setting

We have previously described a naive solution to set the threshold statically based on a profiling of the train set evaluated in full precision. However, this approach does not achieve the expected results because the number of tokens that are expanded in the test sets varies when they are evaluated in low precision. Below, we describe the key contribution of this work, which consists of setting the threshold dynamically.

To overcome the issues of the static method to set the threshold, we propose instead an alternative heuristic that aims to compute \(50~\%\) of the frames at low precision by adjusting the threshold at run time. For that, we keep a variable, h, that holds the difference between the number of frames evaluated in low precision and the number computed at high precision, and try to keep it at 0 by increasing or decreasing the threshold for the number of tokens.

To prevent the threshold from oscillating wildly, we define an additional variable, \(h_l\), which also contains the difference between low-precision and high-precision frames, but constrained to a window of the latest frames. This way, we know whether we need more frames at low or high precision, and also the current tendency. If we have more low-precision than high-precision frames in the local window (\(h_l > 0\)), we assume that the number of frames at low precision is increasing. If the opposite is true, i.e., \(h_l < 0\), we assume that the number of frames evaluated at low precision is decreasing. With these values, we update the threshold (Th) using the following formula:

\[ Th \leftarrow \begin{cases} Th - \Delta & \text{if } h > 0 \text{ and } h_l > 0 \\ Th + \Delta & \text{if } h < 0 \text{ and } h_l < 0 \\ Th & \text{otherwise} \end{cases} \]

When the system has evaluated more low-precision than high-precision frames and the tendency goes toward increasing low-precision frames, the heuristic decreases the threshold by \(\Delta\), so it is more difficult for frames to be classified for low precision. On the other hand, if it has evaluated fewer frames in low precision, both globally and in the local window, the threshold is increased, so more frames are classified as low-precision. Both \(\Delta\) and the starting value of Th are parameters of the system. To avoid using floating point arithmetic, \(\Delta\) is an integer value, and we introduce another parameter to regulate the number of frames between consecutive threshold updates.
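The heuristic described above can be sketched in software as follows. This is a minimal illustration, not the hardware implementation: the class name, the parameter names (`window`, `update_period`) and the choice to leave the threshold unchanged when h and \(h_l\) disagree in sign are our assumptions, and a frame is classified for low precision when the previous token count is strictly below Th.

```python
from collections import deque


class DynamicThreshold:
    """Run-time threshold on the number of expanded tokens (integer arithmetic only)."""

    def __init__(self, initial_th, delta, window, update_period):
        self.th = initial_th                  # Th: current token threshold
        self.delta = delta                    # integer step applied on each update
        self.update_period = update_period    # frames between threshold updates
        self.h = 0                            # global low-minus-high frame count
        self.recent = deque(maxlen=window)    # +1 (low) / -1 (high) for the latest frames
        self.frames = 0

    def next_precision(self, prev_tokens):
        """Return the bit width (4 or 8) for the next frame's DNN evaluation."""
        low = prev_tokens < self.th
        step = 1 if low else -1
        self.h += step
        self.recent.append(step)
        self.frames += 1
        if self.frames % self.update_period == 0:
            h_l = sum(self.recent)            # local low-minus-high count
            if self.h > 0 and h_l > 0:
                self.th = max(0, self.th - self.delta)   # too many low-precision frames
            elif self.h < 0 and h_l < 0:
                self.th += self.delta                    # too few low-precision frames
        return 4 if low else 8
```

In use, the decoder would call `next_precision` once per frame with the number of tokens expanded in the previous beam search step, and pass the returned bit width to the DNN evaluation of the next frame.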

This heuristic means that the threshold is going to fluctuate. To put the amplitude of the threshold oscillations into perspective, we compare it with the number of tokens per frame (Fig. 10). Since the oscillations in both signals are orders of magnitude apart, we conclude that the proposed heuristic leads to a stable threshold.

5.4 DNN accelerator

To implement the technique described above, we quantize the weights from the full-precision DNN-based acoustic model into two levels (8-bit and 4-bit), which we keep stored in the main memory of the SoC. On each frame, depending on the number of tokens expanded by the beam search, we command the DNN accelerator to evaluate the input frame using one model or the other.

The low-precision model is half the size of the high-precision model, so reading it from main memory takes half the time at the same bandwidth between the DNN accelerator and the DRAM. To take advantage of the low-precision model in the most efficient way, we modified the DNN accelerator so that the same hardware can perform computations in base precision or half precision. This doubles the number of operations per cycle when operating in 4-bit mode, with very low area and power overheads over the baseline design.

The DNN accelerator now has to support two different modes of operation: base-precision and half-precision, which in this case means operating at 8 bits or 4 bits.

One of the main parameters of the accelerator is Tn, which configures the number of NFUs and the NFU vector size. In the baseline design, each NFU carries out the computation of a different neuron, whereas the NFU vector size allows several inputs for that neuron to be computed in parallel.

Without loss of generality, we assume a configuration of the DNN accelerator with Tn = 16, using 8-bit weights for base-precision mode and 4-bit weights for half-precision mode. Hence, during base-precision mode, the DNN accelerator computes 16 neurons in parallel, and for each of those, 16 parallel inputs. In order to do so, it receives \(16 \times 16\) weights and 16 inputs each cycle.

For half-precision mode, however, the accelerator receives \(32 \times 16\) weights and 16 inputs per cycle (i.e., 32 neurons are computed in parallel), that is, the size of the input buffer is the same as in the baseline design. We decided not to quantize the inputs to half-precision because we found that doing so has a significant impact on WER for a small benefit in performance. During half-precision mode, we partition each compute unit so it computes 16 inputs for \(2 \times 16\) neurons. In this case, each compute unit would receive \(2 \times 16\) half-precision weights and 16 base-precision inputs. In this solution, we still have to modify the multiplication units to support either base-precision (one \(8 \times 8\)-bit multiplication) or half-precision (two \(8 \times 4\)-bit multiplications). However, since in half-precision mode we are computing two neurons at the same time at each compute unit, every two multiplications share the same 8-bit input operand. We therefore design our multiplication units to either multiply two base-precision operands, or multiply two half-precision operands by the same base-precision operand. Figure 11 shows a diagram of our multiplication unit.
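As a quick check of these figures (our arithmetic, assuming Tn = 16 with 8-bit and 4-bit weights as stated above), the number of weight bits consumed per cycle is the same in both modes, which is consistent with feeding twice as many neurons through an unchanged weight datapath in half-precision mode:

\[ \underbrace{16 \times 16 \times 8}_{\text{base-precision}} = \underbrace{32 \times 16 \times 4}_{\text{half-precision}} = 2048~\text{bits per cycle} \]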

In half-precision mode, each compute unit accumulates two different neurons and, hence, the add-tree must be able to perform one base-precision accumulation of 16 values, or two half-precision accumulations of 16 values each. For that purpose, we modify the adders in the tree so the transmission of the carry from one half to the other is conditioned on the mode of operation. With this simple modification, when the add-tree operates in half-precision mode, each adder operates as two independent adders, and thus the complete tree is unfolded into two separate trees, as shown in Fig. 12. Additionally, since we are merging different levels of precision, the bit-width of the adder units has to be carefully set in order to avoid arithmetic overflow.
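The two dual-mode building blocks can be modelled functionally as below. This is a behavioural sketch under simplifying assumptions of ours, not the circuit of Figs. 11 and 12: operands are treated as unsigned, the two 4-bit weights are assumed to be packed into one 8-bit word (low nibble first), and the function names are hypothetical.

```python
def dual_mode_multiply(x, w, half_precision):
    """Multiply an 8-bit input x by one 8-bit weight, or by two packed 4-bit weights.

    Returns a 1-tuple in base-precision mode and a 2-tuple (one product per
    neuron) in half-precision mode, where both products share the input x.
    """
    if not half_precision:
        return (x * w,)
    w_lo = w & 0xF            # weight of the first neuron
    w_hi = (w >> 4) & 0xF     # weight of the second neuron
    return (x * w_lo, x * w_hi)


def gated_add(a, b, width, split):
    """Add two `width`-bit words; when `split` is set, the carry between the
    lower and upper halves is blocked so the halves act as independent adders."""
    mask = (1 << width) - 1
    if not split:
        return (a + b) & mask
    half = width // 2
    hmask = (1 << half) - 1
    lo = ((a & hmask) + (b & hmask)) & hmask      # carry out of the low half is dropped
    hi = ((a >> half) + (b >> half)) & hmask
    return (hi << half) | lo
```

The dropped carry in the split case is also where overflow would silently occur, which illustrates why the adder bit-widths have to be chosen with care when mixing precisions.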

5.5 DNN activation unit and output buffer

The activation unit performs the neuron activation function after all the neuron inputs have been accumulated. Since this unit is only used at the end of the neuron evaluation, and the accelerator is alternating the computation of several neurons (to leverage temporal locality of inputs), there is a lot of time from one activation to the next, and thus, the activation unit only requires support to serialize the output from the compute unit when operating in half-precision mode. A similar argument applies for the output buffer.

5.6 Beam search accelerator

In order to compute the threshold updates following the proposed heuristic, we introduce some modifications in the beam search accelerator. First, it has to count the generated tokens on each search step, and keep track of the threshold, Th, and the variables h and \(h_l\) used by the heuristic. To this end, we modify the token issuer to include a few adders, a very small buffer for the \(h_l\) window, and a register for each variable, resulting in a negligible area overhead. With these modifications, the token issuer keeps track of the threshold and the number of tokens expanded during each beam search step. After each step, the number of expanded tokens is compared with the threshold, and the required precision is exposed to the DNN accelerator. When a new frame is captured and transformed into a feature vector, the DNN accelerator computes the inference for that frame in the required precision.

6 Experimental evaluation

In this section, we evaluate the performance and energy efficiency of our dynamic DNN precision scheme based on the beam search confidence. First, we describe the methodology employed for the experimental evaluation. Then, we show the speedups and energy savings achieved by our technique compared to the baseline system. The baseline is the mobile SoC platform running the ASR pipeline presented in Sect. 3. It includes a multi-core ARM CPU, a DNN accelerator, and a beam search accelerator. We have implemented our scheme on top of the baseline, as described in Sect. 5. Finally, we discuss the impact on accuracy and the overheads of our proposal.

6.1 Methodology

To measure the performance and energy consumption of the accelerators, we relied on cycle-accurate simulators to count the cycles and usage of the logic units. We model the mobile SoC platform described in Sect. 3.2, illustrated in Fig. 2. The hardware parameters for the experiments are shown in Table 2. To obtain the power values for all the logic units, we modeled them in Verilog, employing IPs from the Synopsys DesignWare Building Block IP library when available, and writing the Verilog ourselves when required. To report accurate power, we employed the Synopsys Power Compiler tool, which requires a switching activity file. These activity files were obtained by simulating the Verilog designs with specific test-benches.

The on-chip SRAM buffers in the accelerators were modeled with a modified version of CACTI [46] included in McPAT [33], whereas the LPDDR4 DRAM used as main memory was modeled with the Micron DRAM Power model [40]. The latter requires the memory usage conditions, which we set by estimating the average read and write bandwidth required by the accelerators when operating at different frequencies.

The clock frequency for each logic unit was derived from the critical path as estimated by the Synopsys synthesis tool from our Verilog code, and the limit established by the bandwidth requirement between the accelerators and the main memory. The clock frequency for the beam search accelerator is constrained by the logic units and was set to \(600~\text {MHz}\), whereas the frequency for the DNN accelerator is limited by the main memory bandwidth and was set to \(55~\text {MHz}\). Note that a DRAM with higher bandwidth could be used to improve the performance, but that would increase the overall system’s power and energy consumption, rendering it less attractive for low-power edge solutions, which are the main focus of this work.

To measure the CPU performance, we used a Jetson TX1 board from NVIDIA, which contains, among other components, the ARM CPU that we are assuming for our baseline system. The tasks previously identified to run on the CPU were executed on this board, while reading the internal performance counters for time and CPU energy. To ensure that the measurements were accurate, we launched the utterance evaluations one by one, making sure that the GPU was not being used, and that this process was the only one running (apart from OS tasks).

The Kaldi Speech Recognition Toolkit [53] has been used to build the software ASR pipeline described in Sect. 3.1, including the training of the TDNN model [51]. Kaldi has several recipes of different ASR models, including the architecture of the DNN model and the scripts with the parameters to perform the training. We employ the recipe of the TDNN model without further changes to perform an initial training. Then, we apply linear quantization over the pre-trained weights to obtain the high-precision (8 bits) and low-precision (4 bits) models required for our technique.

Regarding the speech corpus, we employed the LibriSpeech [48] dataset. LibriSpeech is a large-scale ASR corpus of approximately 1000 h of \(16~\text {kHz}\) read English speech derived from audio-books with several speakers. The data are divided into five sets: train, test_clean, test_other, dev_clean and dev_other. The train set, augmented with different techniques included in Kaldi, was used to train the models (see Table 1), whereas test_clean with 40 different speakers and test_other with 33 speakers, each containing more than 5 h of speech (i.e., around 2k utterances), were used for the evaluations. The difference between the two test sets is that test_other contains more challenging utterances than test_clean, so the WER changes significantly.

6.2 Performance gains and energy savings

Figure 13 shows the distribution of utterances according to the percentage of frames evaluated at low precision. Most of them fall between 40 and \(60~\%\), but a few utterances have more than \(80~\%\) of their frames evaluated at low precision. This is because our heuristic modifies the threshold slowly and, hence, during a single utterance evaluation, the threshold does not change to a large extent. Note that, in the long run, the number of frames evaluated in low precision converges to the \(50~\%\) target.

Figure 14 shows the savings in energy consumption and execution time obtained from the proposed technique compared to the evaluation on the baseline platform. Three cases are plotted: the worst utterance, the best utterance and the test set average. The worst and best utterances are chosen regarding the percentage of frames evaluated at half-precision. Significant improvements are achieved in all cases. By applying our technique, we can save up to \(47.2~\%\) of energy and reduce execution time up to \(47.4~\%\) for the AM evaluation on utterances where the beam search confidence is high for most of its frames. On average, our scheme reduces energy consumption by \(25.6~\%\) and execution time by \(25.9~\%\) when evaluating the complete test set.

Since the low-precision (4-bit weights) AM network is half the size of the high-precision (8-bit weights) network, whenever a frame is evaluated at low precision, we save half the reads from main memory. Operating at half-precision results in significant speedups for two reasons. First, the neural function units (NFUs) are modified so they can operate at double throughput in half-precision mode with negligible hardware overheads. Second, the DNN accelerator is memory bound since data reuse is largely limited in the TDNN and, hence, halving the reads from main memory results in large performance improvements. Therefore, the execution time for the AM evaluation is reduced by approximately one half during low-precision frames.

Similarly, the reduction in energy consumption is also mostly explained by the reduction in reads from main memory. As detailed in Sect. 4, DRAM reads of the DNN-based AM weights are the main bottleneck of the system, contributing to \(85~\%\) of the energy consumed during AM evaluation. Another source of energy savings comes from the reduction in static energy consumed by the rest of the components during the time that the AM is being evaluated. Since around \(50~\%\) of the total number of frames are evaluated at low precision, the observed savings of around \(25~\%\) in time and energy during acoustic model evaluation are consistent.

When we take into account the complete ASR system, the savings obtained in the AM evaluation translate to an average reduction in energy consumption of \(16.9~\%\) and a reduction in execution time of \(19.6~\%\) (Fig. 14). As discussed in Sect. 5, when the low-precision AM is employed, the average confidence of the beam search decreases, which translates to a decrease in the performance of the beam search when our technique is used. Consequently, the energy and time consumed by the beam search are generally increased with respect to the baseline. However, even for the worst cases observed in the test sets, the benefits exceed the overheads, resulting in a net improvement in performance for all the utterances.
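As a rough cross-check (our arithmetic, assuming the cost of the non-AM components were unchanged), scaling the AM-level savings by the AM shares of baseline energy (\(68.3~\%\)) and execution time (\(82~\%\)) reported in Sect. 4.2 gives an upper bound on the system-level savings:

\[ 0.683 \times 0.256 \approx 0.175, \qquad 0.82 \times 0.259 \approx 0.212 \]

The reported system-level figures (\(16.9~\%\) and \(19.6~\%\)) fall slightly below these bounds, which is consistent with the additional beam search cost discussed above.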

6.3 Recognition accuracy

Reducing the precision for the AM evaluation results in a minor degradation in the recognition accuracy when the frames for low precision evaluation are carefully chosen. Our experiments show that by using the proposed heuristic based on the number of tokens, we can compute \(50~\%\) of the test sets' frames at low precision while incurring less than \(1~\%\) absolute WER loss for test_clean, and \(1.35~\%\) for test_other.

To select a target for the percentage of frames computed at low precision, we performed a sensitivity analysis, modifying the percentage from \(0~\%\) (every frame in high precision) to \(100~\%\) (every frame in low precision) with increments of \(10~\%\). Figure 15 shows the relation between WER and savings. Note that the execution time and energy savings are proportional to the percentage of frames evaluated at low precision. If some percentage of frames, \(x~\%\), is evaluated in low precision, we save around \(x/2~\%\) of execution time and energy consumption during the DNN evaluation. This is because the weight precision is halved (i.e., from 8 to 4 bits), so each low-precision frame costs roughly half the time and energy of a high-precision one. Moreover, the DNN accelerator is deterministic, so all frames evaluated with a given precision take the same execution time and energy consumption. The figure shows a curve with an elbow around \(x = 40\) to \(50~\%\) for both test_clean and test_other. Choosing a target in this range grants significant gains with negligible WER loss.
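The \(x/2\) rule can be made explicit with a short derivation. This is a sketch under an idealized assumption of ours, namely that a low-precision frame costs exactly half of a high-precision one; the symbol \(C_8\) for the per-frame cost at 8 bits is also ours, and \(x\) is written here as a fraction rather than a percentage. The average per-frame cost is then

\[ C(x) = (1 - x)\,C_8 + x\,\frac{C_8}{2} = \left(1 - \frac{x}{2}\right) C_8 \]

so the relative saving is \(x/2\). For \(x = 0.5\) this gives 0.25, close to the measured \(25.6~\%\) and \(25.9~\%\).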

6.4 Overheads

In order to implement our technique, the baseline accelerators require some modifications. More specifically, the DNN accelerator must support two operation modes, base-precision and half-precision, whereas the beam search accelerator has to compute the heuristic and maintain the threshold. These modifications, made as described in Sect. 5, result in an area overhead of \(3.1~\%\) over the baseline accelerators, which is negligible over the complete platform, since the accelerators are very small compared to the 8 GB LPDDR4 or the 4-core ARM CPU. The DNN accelerator occupies an area of \(0.42~\text {mm}^2\), split between buffers (\(25.5~\%\)), MULT arrays (\(62.6~\%\)) and AddTrees (\(11.9~\%\)), whereas the beam search accelerator occupies \(3.34~\text {mm}^2\). Since we keep an additional DNN model in main memory (the AM quantized to 4 bits), our solution incurs a memory footprint overhead. However, the additional model is fairly small and, as a result, the overall overhead is just a \(3.8~\%\) increase in memory footprint. The average power of the complete system, including CPU, accelerators and external DRAM, is \(1.17~\text {W}\) (a \(3.3~\%\) increase over the baseline), and the frames are evaluated 20x faster than real time, consuming \(0.7~\text {mJ/frame}\).

7 Conclusions

In this work, we show how the number of tokens expanded during the beam search can be used to improve the performance of the acoustic model evaluation, the main bottleneck of ASR systems. Following the observation that a low number of expanded tokens is associated with high confidence in the partial decoding, we set a threshold on the number of tokens and evaluate the AM in low precision for those frames falling below it. This threshold is computed at run-time by using a heuristic that steers the fraction of frames computed at low precision toward \(50~\%\).

To support this computation scheme, we modified a baseline low-power DNN accelerator, replacing the array multiplication and add-tree units with specifically designed duplex units, so the accelerator can operate either in base or half precision, doubling the throughput for the low-precision frames, with minimal impact on area and power. By using our proposal, the performance of the DNN-based acoustic model evaluation is improved by \(25.9~\%\), whereas the energy consumption is reduced by \(25.6~\%\) on average for the entire LibriSpeech test set.

Data Availability

No datasets were generated or analysed during the current study.

Notes

These sound units, sub-phoneme units, or senones, are normally defined as context-dependent phonemes, the most popular being the tri-phone. A tri-phone is composed of a central phoneme p plus a left and a right phoneme, \(p_{l}-p-p_{r}\).

Acknowledgements

This work has been supported by the CoCoUnit ERC Advanced Grant of the EU’s Horizon 2020 program (grant No 833057), the Spanish State Research Agency (MCIN/AEI) under grant PID2020-113172RB-I00, the Catalan Agency for University and Research (AGAUR) under grant 2021SGR00383, the ICREA Academia program, and the Spanish MICINN Ministry under grant BES-2017-080605.

Funding

Open Access funding provided thanks to the CRUE-CSIC agreement with Springer Nature.

Author information

Contributions

Dennis Pinto wrote the main manuscript text and prepared all figures. All authors reviewed the manuscript.

Ethics declarations

Conflict of interest

The authors declare no competing interests.
