Abstract

In the last decade, the smart grid has utilized many modern technologies and applications compared with the conventional grid. Cloud computing offers numerous managed services and storage solutions for smart grid data. To balance security, privacy, efficiency, and utility, more efforts should be made to keep up with the rapid evolution of technology. Searchable encryption techniques are widely considered an intelligent solution to ensure data privacy and security while maintaining the functionality to search over encrypted data. In this paper, we propose a more reliable and efficient searchable symmetric encryption scheme for smart grid data. It is a dynamic keyword searchable scheme that uses a symmetric cipher based on a key hashing algorithm (DKS-SCKHA) to generate a secure index. This scheme eliminates the false-positive issue associated with the use of bloom filters and narrows the scope of the retrieved search results. Additionally, it efficiently supports both partial and exact query processing on the encrypted database. Both theoretical and security analyses demonstrate the efficiency and security of the DKS-SCKHA scheme compared to other previous schemes. Comprehensive experiments on a smart grid dataset showed that the DKS-SCKHA scheme is 35–68% more efficient than the schemes compared in this paper. The DKS-SCKHA scheme supports three keyword search scenarios: single, conjunctive, and disjunctive. Furthermore, the DKS-SCKHA scheme is extended to support dynamic fuzzy keyword search on the encrypted database (DEFKS-SCKHA). We evaluated the security and efficiency of the DEFKS-SCKHA scheme through security analysis and experimental evaluation. Theoretical analysis shows that the proposed scheme is secure against known-ciphertext and known-background attacks.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

A smart grid is considered the next-generation energy system that can provide high-quality service to the public. Since it incorporates modern sensing, control, monitoring, and communication technologies into legacy grids, it has drawn significant attention from both academics and businesses. It also achieves intelligence by gathering and utilizing data from smart meters, sensors, and various Internet of Things (IOT) devices. The smart grid can intelligently manage equipment, boost operational efficiency, and predict faults through real-time monitoring and data analysis. An investigation into malicious power generation attacks and power supply interruptions was reported in [1], focusing on network vulnerabilities. Consequently, numerous efforts are being made to ensure security [2] and enhance the cyber resilience [3] of power management systems, which are anticipated to be critical in the future of smart grids.

The National Institute of Standards and Technology (NIST) of the USA introduced a well-known conceptual model in the smart grid [4]. This model divides the smart grid into seven categories, including generation, distribution, transmission, customers, operations, markets, and service providers. In the future, smart grid systems may utilize all these components and modules as sources for massive amounts of various data. Despite variations in structure, type, and generation rates, integration, storing, and managing data remain active study areas in the smart grid community [5]. However, the traditional smart grid cannot extract the required knowledge and information from large amounts of data, nor can it keep up with the growing demands for storage and data management [5]. To address these issues, cloud computing provides massive storage space, powerful computing capabilities, and the ability to appropriately outsource and process smart grid data. Research on remote cloud-based administration and storage of smart grid data has recently gained popularity [6]. Complete security can be achieved when owners of smart grid data use private cloud servers to outsource their data or cloud services to manage and analyze it. However, for specific economic reasons, smart grid data organizations may decide to outsource their data to a third party. Technically, the service provider has full access to all sensitive data. Furthermore, the cloud service provider (CSP) may have reasons to be curious about this data. For instance, the advanced metering infrastructure (AMI) data in smart grids may contain personally identifiable information about clients, including payment history, residential location, and hourly measured power usage. The CSP could potentially learn about customers’ routines and preferences through non-intrusive load monitoring [7] and side-channel attacks. Therefore, it is necessary to consider outsourcing sensitive data to a private cloud server to address privacy concerns when dealing with smart grid data. Additionally, different types of smart grid data, such as videos and images, should be hidden using steganography techniques to enhance data confidentiality and protect privacy details [8,9,10].

Encrypting data before uploading to the cloud is an optimal solution to prevent attackers from accessing private information in the smart grid. While data confidentiality is achieved through encryption, query processing capabilities are sacrificed. Keyword searching is one of the most important operations involved in processing data stored on the cloud server. For example, a utility company may wish to inquire about a certain customer’s billing statement. In this case, if the documents are encrypted due to privacy concerns, the CSP cannot offer search features to the utility company. Searchable symmetric encryption (SSE) was introduced to address this issue and has been widely studied since its inception 22 years ago [11]. Using SSE technology, users can store their data in ciphertext format while maintaining the ability to search for keywords within the encrypted data.

Recently, more effective and secure SSE systems have been proposed to handle this problem [12,13,14,15,16,17]. Some schemes [12,13,14] build more efficient indexes and searchable algorithms, although they are limited to single-keyword searches. Smart grid data presents specific challenges. Firstly, it undergoes continuous updates due to rapid development, resulting in the expansion of keyword dictionaries. Secondly, attributes such as user electricity price, identity, and smart meter readings serve as keywords in search operations for each record. These characteristics render current SSE techniques inadequate for smart grid applications.

In this paper, we propose a new SSE scheme designed to enhance the privacy of smart grid data. This scheme is based on constructing an encrypted index for sensitive attributes of data in smart grid. By leveraging these features, we achieve higher space efficiency without compromising information leakage in the smart grid system. Recent studies [13,14,15] have developed SSE schemes to address substring queries, where users recall only a substring of an attribute rather than the exact search attribute. However, these studies only considered substring search queries on a single attribute, which cannot perform compound substring searches across multiple attributes.

Our main contributions can be summarized as follows:

-

1.

We propose a new dynamic keyword search scheme based on the SCKHA algorithm (DKS-SCKHA) for smart grid data. This scheme maintains search functionality with higher efficiency compared to other schemes and may reduce significant communication overhead associated with trapdoor transformations.

-

2.

We generate a dynamic trapdoor that prevents adversaries from tracking trapdoor keywords through the keyword space, thereby resisting keyword-guessing attacks from malicious servers.

-

3.

The DKS-SCKHA scheme supports both partial and exact search capabilities over the encrypted database.

-

4.

The DKS-SCKHA scheme supports both single-keyword and multi-keyword (conjunctive and disjunctive) queries over encrypted databases.

-

5.

We implement and evaluate the proposed scheme using real datasets. The experiments demonstrate that the DKS-SCKHA scheme has faster search and trapdoor times compared to other schemes.

-

6.

Furthermore, we extend our scheme to enhance dynamic fuzzy keyword search (DEFKS-SCKHA) over encrypted smart grid data while preserving keyword privacy.

1.1 Organization of the paper

The rest of the paper is structured as follows: Sect. 2 reviews the existing relevant works. Section 3 introduces the system model and design goals, which includes the preliminaries and primitives used in this paper, as well as the threat model, design goals, and the data format for the outsourced encrypted data on the cloud server. In Sect. 4, we present the proposed SSE scheme for the smart grid, which includes both Exact/Partial and fuzzy keyword SSE schemes. Security analysis for our SSE scheme is introduced in Sect. 5. Section 6 demonstrates the implementation and performance evaluation. Finally, Sect. 7 concludes and provides directions for future work.

2 Related work

Due to the continuous development of smart grids, organizations are increasingly concerned about the confidentiality and privacy of their power data. Steganography and cryptography are techniques used for data privacy. Steganography aims to conceal a piece of information within an alternative medium such as audio or images. Several existing works, such as those in [8, 9], should be employed to hide the privacy details of smart grid videos and images [10] to enhance their confidentiality. Cryptography aims to encode data itself into unreadable formats. Cryptographic techniques are used in many SSE schemes to enable searching over encrypted data. This section includes a review of related works for both exact and fuzzy keyword SSE schemes.

2.1 Exact keyword SSE

Song et al. [11] proposed the first SSE scheme, which includes two-layered encryption to encrypt each keyword. However, this scheme consumes more time because the cloud server searches all documents sequentially. Curtmola et al. [14] presented two effective SSE schemes that allow multiple users to send search queries and achieve sublinear search. The cost of the search operation is proportional to the number of documents containing the queried keyword. Cao et al. [18] were the first to propose a multi-keyword ranked search scheme over cloud-encrypted data with a privacy-preserving technique. This scheme uses a coordinate matching mechanism to retrieve documents related to the queried keywords and adopts inner-product similarity to discover similarity measures. Xia et al. [17] introduced a dynamic and secure multi-keyword ranked search scheme over cloud-encrypted data. This scheme uses a tree-based index combined with both the vector model and TF-IDF (term frequency and inverse document frequency). Wang et al. [19] introduced a novel primitive single-hop unidirectional proxy re-encryption scheme that supports conjunctive keyword search. This proxy first checks if a ciphertext contains specific keywords and then re-encrypts it using the corresponding re-encryption key. Fu et al. [20] created a user model by analyzing individual users’ search histories to retrieve related data from the cloud server. Cao et al. [18] were the first to propose a multi-keyword ranked search scheme over cloud-encrypted data with a privacy-preserving technique. This scheme uses a coordinate matching mechanism to retrieve documents related to the queried keywords and adopts inner-product similarity to discover similarity measures. Xia et al. [17] introduced a dynamic and secure multi-keyword ranked search scheme over cloud-encrypted data. This scheme uses a tree-based index combined with both the vector model and TF-IDF (term frequency and inverse document frequency). Wang et al. [19] introduced a novel primitive single-hop unidirectional proxy re-encryption scheme that supports conjunctive keyword search. This proxy first checks if a ciphertext contains specific keywords and then re-encrypts it using the corresponding re-encryption key. Fu et al. [20] created a user model by analyzing individual users’ search histories to retrieve related data from the cloud server. Two years ago, Andola et al. [21] introduced a secure SSE scheme based on hash indexing, which decreases computational overhead

Due to the fixed data format and frequent updates for smart grid data, current classical SSE schemes are not appropriate. Li et al. [22] proposed a simple, efficient, and easy-to-update SSE scheme for smart grid data, relying on pseudo-random functions to construct the index. However, this scheme suffers from data leakage and false-positive problems. Zhu et al. [23] enhanced the scheme proposed by Li et al. [22] to support multi-keyword and fuzzy keyword searches using the Hamming distance and N-Gram algorithm. Xiong et al. [24] proposed a more secure, efficient, easy-to-update, and non-false-positive SSE scheme for smart grid data based on combining the bloom filter and pseudo-random function. However, both schemes in [22, 24] incur higher computational costs for trapdoor generation and query search. Therefore, we aim to address these issues in our scheme. Authors in [22] achieve index unlinkability by ensuring that the same keyword appears in different codewords for two different datasets, using the row number as the data’s ID. This approach has two drawbacks: firstly, because the index and dataset sorts are identical, the CS will learn the plaintext order. Secondly, the CS is directly exposed to the search results (number of records containing related keywords), allowing it to learn the relationship between the trapdoor and the row numbers of unencrypted data containing the keyword. Authors in [24] customized the ID and disrupted the order of the index after generating it for each unit of data to improve security. Additionally, they hashed the data ID to conceal the relationship between the data ID and the trapdoor. Hiemenz et al. [25] presented a dynamic SSE scheme that enables users to securely outsource geographical data to the cloud. This scheme allows users to encrypt and search their cloud-based data simultaneously. It supports dynamic operations such as deleting old entries and adding new data without requiring an initial phase. Molla et al. [26] proposed a new SSE scheme enabling both range queries and single-keyword searches, based on an innovative inverted index. Wang et al. [27] proposed a secure SSE scheme that supports multi-keyword search queries, allowing multiple users to input more complex queries. This scheme also reduces the probability of leakage of both search patterns and user access to a certain level. Wang et al. [28] proposed an efficient keyword searchable encryption scheme that aims to build a privacy-preserving framework enabling efficient predicate encryption with fine-grained searchable capabilities. This scheme relies on a predicate encryption approach using a dual encryption technique and analyzes the relationship between the two.

2.2 Fuzzy keyword SSE

Most existing SSE schemes only support exact keyword searches. However, these schemes fail to retrieve the required results when the data owner makes morphological modifications or spelling errors. Therefore, these schemes have limited practical importance in real-world applications. Fuzzy keyword search enhances system usability by allowing matches to exact or near-match text for the required keywords, returning the closest approximate results. To address this problem, Li et al. [28] proposed a fuzzy keyword searchable scheme for encrypted data. This scheme uses wildcard techniques when constructing fuzzy keyword sets. The authors used edit distance to measure the similarity between keywords and developed an advanced technique to construct fuzzy keyword sets. This approach helps reduce representation overhead and storage requirements. Wang et al. [29] proposed a multi-keyword fuzzy searchable scheme based on the LSH function and Bloom filter concept, where the keyword is converted into a bi-gram set. Fu et al. [30] proposed another multi-keyword fuzzy ranking search scheme based on the approach in [25]. This scheme uses a new technique for keyword conversion based on unigrams and considers the weight of the keyword when selecting a suitable matching file set. To enhance search accuracy, Zhong et al. [31] constructed a balanced binary tree for the index and proposed a method to retrieve the top-k results. Tong et al. [32] introduced the Verifiable Fuzzy Multi-Keyword Search (VFSA) technique, which builds an index tree based on a graph-based keyword partition algorithm to achieve adaptive sublinear retrieval. VFSA uses locality-sensitive hashing to hash misspelled and correct keywords to the same positions. It utilizes twin Bloom filter for each document to store and mask all keywords within in the document. Additionally, VFSA integrates the Merkle hash tree structure with an adapted multiset accumulator to verify correctness and completeness. Liu et al. [33] proposed an efficient Fuzzy Semantic SSE (FSSE) that provides multi-keyword search of encrypted data in the cloud. This scheme generates keyword fingerprints from the keyword dictionary and query keywords. It employs the Hamming distance to measure similarity between these fingerprints.

3 System model and design goal

3.1 Preliminaries and notations

To make our paper clearer and easier for readers, we list some used notations and symbols in Table 1.

3.2 Primitives

There are some tools and algorithms used for designing cryptographic schemes as follows:

-

1.

Secure SCKHA [34, 35]: It is a stream-cipher technique that keeps the symmetric key secret during the sharing of the encryption key through channels. It uses a hash function \(h\left({M}_{k}\right)\) of the master key \({m}_{k}\) to hide the encryption key, where \(h:{\left\{\text{0,1}\right\}}^{*}\to {\{\text{0,1}\}}^{r}\) is a hash function. When we use MD5 as an example for hash functions, the encryption key of changeable length is 128 bits. This value is represented as a 32-bit (hexadecimal) series. Constructing the encryption key in this way provides many resources to check every potential key. Additionally, using the hash function will create a key space of 2^128, and this is the least key space we can obtain for the SCKHA algorithm. This helps in securing the encryption key against brute-force attacks. Furthermore, in SCKHA, every plaintext character is represented by two ciphertext characters. We can exploit this property to search exactly or partially over encrypted data.

-

2.

Pseudo-Random Function (PRFs): It is an efficiently computed function that converts short random strings into longer “pseudo-random strings” that can deceive statistical tests, making it difficult to distinguish them from purely random strings. The strength of a PRF lies in its ability to deceive these statistical tests, which are modeled as Boolean functions. PRFs are computationally indistinguishable from random functions.

$$F:\left\{ {0, \, 1} \right\}^{n} \times \left\{ {0, \, 1} \right\}^{s} \longrightarrow\left\{ {0, \, 1} \right\}^{l}$$(1) -

3.

Symmetric Key Encryption Protocol: It contains three polynomial-time functions (\(Gen\), \(Enc\), \(Dec\)). \(Gen\) function receives a secure parameter λ and generates a secret key \(SK\). The \(Enc\) algorithm encrypts \(P\) into \(C\) using \(SK\). Finally, the \(Dec\) algorithm decrypts \(C\) into \(P\) using \(SK\). Therefore, we used non-deterministic Advanced Encryption Standard-Galois/Counter Mode (AES-GCM) [36, 37] with a 256-bit key, a 96-bit IV (Initial Vector), and authentication tags to encrypt the records. Using AES-GCM will guarantee both data authenticity and confidentiality. For key derivation, we used Scrypt as a very strong Cryptographic Key-Derivation-Function (KDF) with parameters such as the iteration count for CPU usage (n), block size (r), the parallelization factor of the algorithm (P), and securely generated random salts. This guarantees that an adversary cannot link two queries even if the same queried keywords are used. AES-GCM is secure against both Chosen-Plaintext Attack (CPA) [38] and Chosen-Ciphertext Attack (CCA).

-

4.

Edit Distance (ED) [39]: It is the least amount of required edits operations to convert one string into another. It is used for searching in fuzzy keyword techniques to determine how similar two texts are. The ED (S1, S2) between two strings S1 and S2 is the smallest number of required operations to convert one string S1 to another string S2. In general, the similarity of the two terms increases with decreasing editing distance. Three basic operations are available:

-

(1)

Substitution: an operation to transfer a letter to another in a string.

-

(2)

Deletion: an operation to remove a character from a string.

-

(3)

Insertion: an operation to insert a letter into a string.

-

(1)

For example, the steps needed to convert the string “hidden” to the string “kidding” are as follows:

-

(1)

hidden \(\to\) kidden (substitution of h by k).

-

(2)

Kidden \(\to\) kiddin (substitution of e by i).

-

(3)

Kiddin \(\to\) Kidding (insertion of g).

3.3 System model

In this system model, our primary focus is on outsourcing the AMI data in an encrypted format to a cloud server (CS) while enabling authorized users to query it. As illustrated in Fig. 1, the system comprises three entities: the AMI company, authorized data users (U), and the CS. The AMI company, also known as the data owner (DO), that owns the plaintext of AMI data and continuously collects newly generated data. The DO encrypts plaintext AMI data \(P({p}_{1}, {p}_{2}, \dots , {p}_{n})\) into ciphertexts \(C({c}_{1}, c, \dots , {c}_{n})\) using an appropriate cryptographic algorithm before sending it to the CS. To achieve effective secure search over outsourced encrypted data, the DO must create secure indexes for the original smart grid data using different master key. Now, the DO has two types of encrypted data: encrypted smart grid records (C) and their corresponding secure index records. The DO uploads this data to the CS. The U frequently need to query the encrypted outsourced electricity usage data for their specific tasks. Therefore, when the U wants to search for a keyword w in the outsourced encrypted data, he first computes the trapdoor Tw for the keyword w and send it to the CS. Upon receiving Tw, the CS performs a search operation on the ciphertexts and returns the relevant encrypted records to the U. Finally, the U decrypts the returned encrypted records using the master key (MK).

3.4 Threat model

The DO and the U are assumed to be completely trusted. Specifically, they adhere to the protocol and do not disclose their encryption keys to anyone. While our scheme makes realistic assumptions about the client’s data based on the properties of smart grid data outlined in Sect. 1, it differs from SSE approaches that assume the client’s data consists of random keyword combinations. Similar to most SSE schemes, our threat model assumes that the third party is honest-but-curious. This means the CS should provide standard cloud services without modifying, deleting, or sharing the client’s data with other parties. However, the CS may attempt to find a relationship between trapdoors and smart grid data in its storage system and analyze the content of processed data, such as query keywords. External attackers and unauthorized users might collect and analyze statistical information, including encrypted indices, trapdoor queries, statistical language models, and query results. Therefore, one of the privacy goals of our proposed SSE scheme is to thwart all attackers attempting to analyze and obtain statistical information. We will consider two threat models as shown as follows:

-

Known-ciphertext attack (KCA): in this model, the CS or adversary is assumed to know only the encrypted record collections and the secure indices [40, 41] that are outsourced to the CS.

-

Known-background attack (KBA): in addition to the encrypted information mentioned above, the CS or an adversary possesses further information, including keyword frequency statistics derived from search conditions. The adversary tries to identify these keywords and the search conditions of the trapdoors/indices [40, 42].

3.5 Design goals

Our plan should meet the following specifications:

-

1.

Keyword search: the scheme should be more efficient to retrieve records containing the required keyword \(w\).

-

2.

Privacy-preserving: the secure scheme must not reveal any information to the CS, such as the plaintext of keywords, trapdoor \({T}_{w}\), and encrypted records beyond limited leakage. We consider limited leakage through the following factors:

-

(i)

Index privacy the CS should not be able to find any relationship between the keywords and the records through the secure index.

-

(ii)

Trapdoor privacy the CS should not be able to distinguish between two trapdoors sent in search requests or reveal any information about the queried keyword.

-

(iii)

Keyword privacy the CS should not be able to identify the keyword from the trapdoor or secure indices through statistical analysis, such as keyword frequency.

-

(iv)

Search pattern privacy information derived from returning two or more search results related to the same keyword must be concealed from the CS. This means that when two search queries are performed, the CS cannot link their underlying keywords.

-

(v)

Access pattern privacy information extracted after returning a sequence of search results corresponding to the keyword must not be visible to the CS. This means the CS should not be able to reveal the identifiers of all matching records resulting from the search queries.

-

(i)

3.6 Outsourced data format for smart grid data

A third-party CSP hosts and manages the cloud database on behalf of an organization. Therefore, it is essential that the stored data be encrypted and outsourced in a format that the CSP cannot understand. We assume that the table \({T}_{x,y}\) has \(x\) rows and \(y\) columns. \({T}_{x,y}^{\text{e}}\) represents the encrypted data table. According to SCKHA algorithm, the first part of the ciphertext (\({C}_{1}\)) is computed as shown in Eq. 2, and the second part for the ciphertext (C2) is computed as shown in Eq. 3. The final format for the ciphertext (\({C}_{f}\)) is computed as shown in Eq. 4. Consequently, every plaintext character is represented by two ciphertext characters. We can exploit this ciphertext format as a trapdoor to search over the encrypted data. However, the SCKHA scheme faces a deterministic problem, which generates the same ciphertext for the same plaintext and key. This can leak information to an eavesdropper, who may recognize known ciphertexts through statistical analysis. So, it is necessary for the DO must generate a random string using a pseudo-random function and embed this string within SCKHA’s ciphertext format to make it dynamic while maintaining the ability to search over the encrypted cloud database. Therefore, the generated random character is appended before every character of the encrypted index for each data unit in the outsourced data, as represented in Eq. 5 and shown in Fig. 2. In this way, the DO can create an embedded encrypted index \({I}_{\text{SCKHAEmbd}}\) for the smart grid dataset and generate a trapdoor \({T}_{w}\) for the required word to send to the cloud server.

where \(P\) refers to the plaintext, \({K}_{(L,R)}\) is the key hash side whether it is left or right,\(\text{idx}\) refers to the computed position for the index in the key hash side and refers to the mapped cipher index.

where \({I}_{\text{SCKHA}}\) refers to the encrypted index, and \({I}_{\text{SCKHAEmbd}}\) refers to the encrypted embedded index.

4 The proposed SEE scheme for smart grid data

4.1 The proposed exact/partial keyword SSE scheme

The DKS-SCKHA scheme consists of 8 polynomial-time algorithms, as shown in Fig. 3. The steps of the 8 polynomial-time algorithms for DKS-SCKHA scheme (KeyGen, BuildEncryptedIndex, EmbedSCKHAIndex, Enc, Trapdoor, Clear, Search, Dec) are described as follows:

-

KeyGen (\(\text{MK}\)): It takes a security parameter (\(\text{MK}\)) and computes the hash value \(h (\text{MK})\) where h: {0, 1}* × {0, 1}r is a hash function. It is performed by the DO to generate the two portions \({K}_{L}\), and \({K}_{R}\) from the master key as shown in Algorithm1.

-

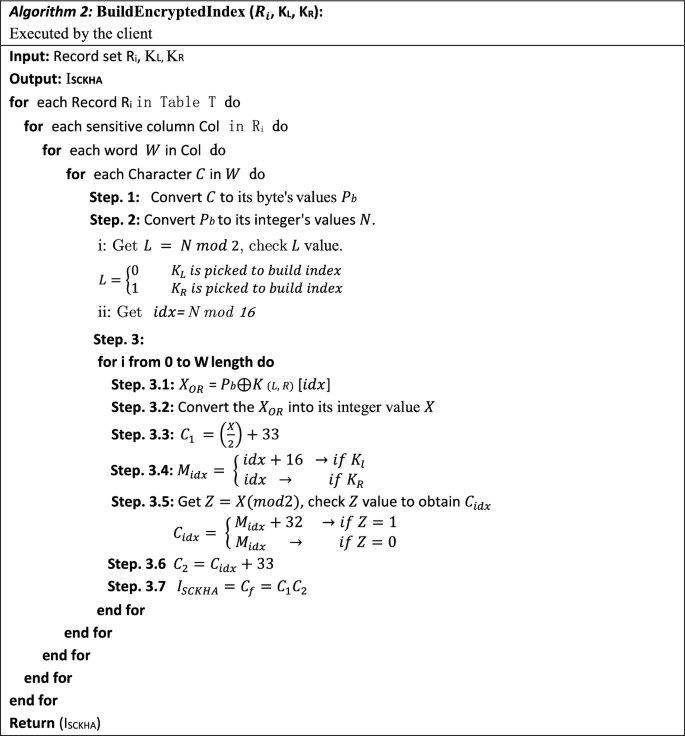

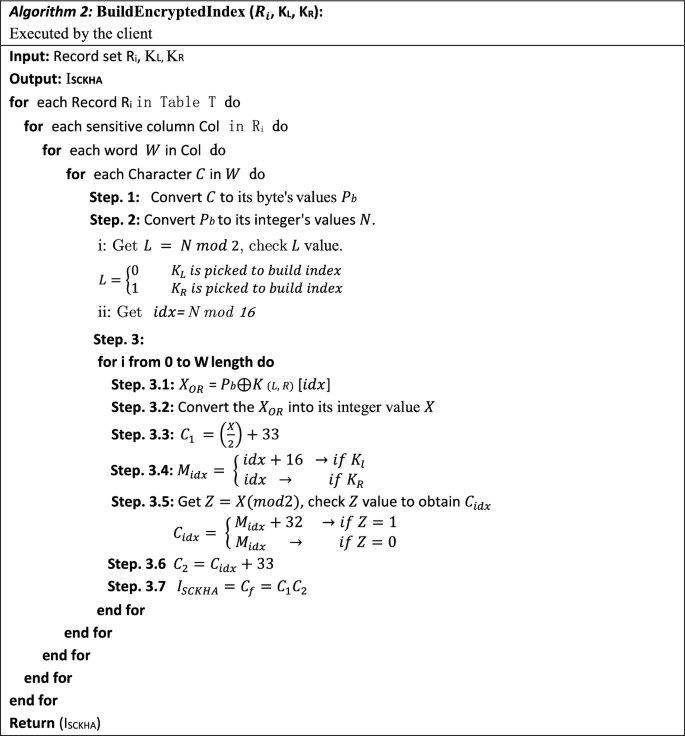

BuildEncryptedIndex \(({R}_{i},{K}_{L},{K}_{R}\)): This function is executed for each data unit \({w}_{i,j}(0\le j\le n)\) in the record set \({R}_{i}\). The DO takes both portions of master key \(({K}_{L},\) \({K}_{R})\), and \({R}_{i}\) as inputs, and then outputs the encrypted index \({I}_{\text{SCKHA}}\) for each column as shown in Algorithm 2.

-

EmbedSCKHAIndex (\({I}_{\text{SCKHA}}\)): This function calculates the codeword by taking the generated encrypted index \({I}_{\text{SCKHA}}\) as input. It uses Pseudo-random functions (PRFs) to generate an embedded random string (ERS), which is then append them across \({I}_{SCKHA}\). Embedding the ERS through the \({I}_{SCKHA}\) is achieved by appending ERS characters before every character in the \({I}_{\text{SCKHA}}\) to produce the codeword list \({I}_{\text{SCKHAEmbd}}\) as shown in Algorithm 3 and Fig. 2. This will randomize the searchable ciphertexts (secure indexes) for all records in the table with keeping the advantages of searching through it. To increase the security, the list order of the codeword \({I}_{\text{SCKHAEmbd}}\) is randomized before sending it to the CS.

-

Encryptcolumns (\({\mathbf{R}}_{{\varvec{i}}},\mathbf{S}\mathbf{K}\)): The DO takes the record of data and the \(\text{SK}\) as inputs. The DO encrypts the sensitive data columns stored in the cloud database using a nondeterministic encryption technique such as AES-GCM with a 256-bit key, using a Scrypt derivation key function for a random 96-bit IV, and including authentication tags to ensure data privacy, as shown in Algorithm 4.

-

Trapdoor (\({K}_{L}\), \({K}_{R}\), \(w\)): This function is a deterministic trapdoor generation executed by the DO. It takes the keyword w and the two parts of the master key (\({K}_{L}\),\({K}_{R}\)) as inputs, and outputs the trapdoor \({T}_{w}\) for the keyword \(w\). To transmit \({T}_{w}\) to the CS securely across channels, the DO needs to obfuscate the trapdoor \({T}_{w}\). This is achieved by embedding random strings across the trapdoor to produce the random trapdoor T′W. Algorithm 5 describes the steps for generating the random trapdoor for the keyword w.

-

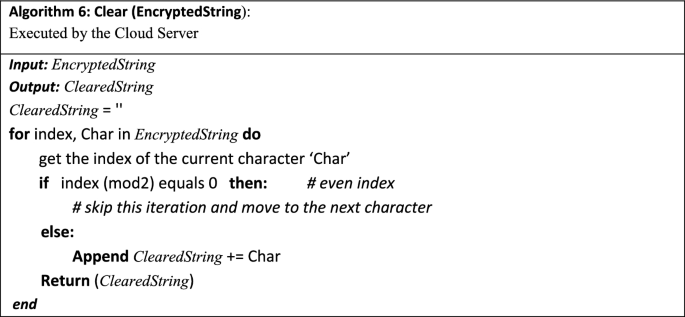

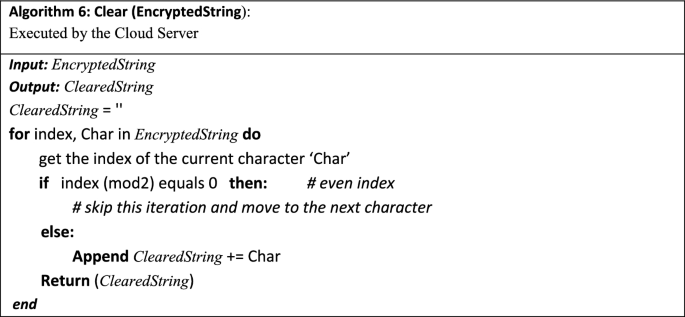

Clear (ERS): The CS removes the ERS from both the random trapdoor T′W and the codeword \({\text{I}}_{{{\text{SCKHAEmbd}}}}\) as shown in Algorithm 6. This algorithm is executed two times: once for \({\text{T}}_{{\text{w}}}\) to extract T′W and once for \({\text{I}}_{{{\text{SCKHAEmbd}}}}\) to extract \({\text{I}}_{\text{SCKHA}}\).

-

Search (\({T}_{w}\), \({I}_{\text{SCKHA}}\)): It is a deterministic search algorithm executed by the CS. It takes the trapdoor \({T}_{w}\) and the encrypted index \({\text{I}}_{\text{SCKHA}}\) as inputs. If the keyword w satisfies the query condition (w ∈ R), the function returns 1; otherwise, it returns 0, as shown in Algorithm 7. When the query condition is satisfied, the CS returns the encrypted records to the user.

-

Dec (\(\text{DecTable},\text{ SK}\)): The DO decrypts the retrieved encrypted records stored in the cloud database using symmetric key SK.

4.1.1 Dynamic DKS-SCKHA for smart grid

As a result of the frequent updates to smart grid data, it is necessary to support modifications to one or more records. Therefore, an updating operation is described as follows:

-

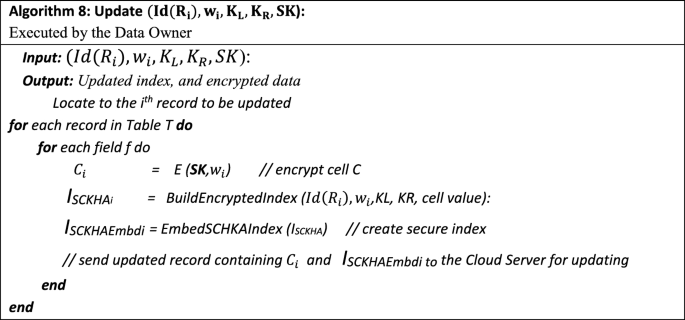

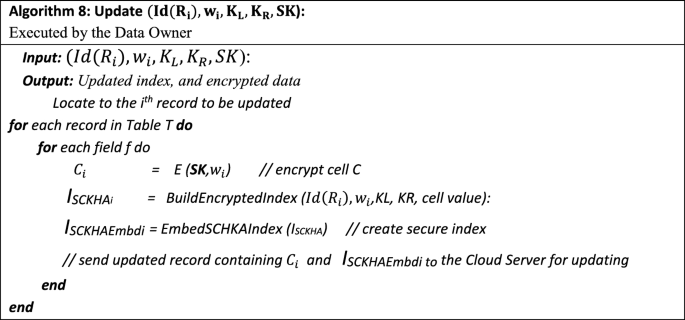

Update \((\text{Id}({R}_{i}), {K}_{L},{K}_{R},{R}_{i},SK\)): If the DO wants to modify a record(s), he/she first locates the position information \(d({R}_{i})\) corresponding to that record(s) and replaces the old record(s) with a new data record(s) after applying both secure index creation and encryption for the keyword wi in the record(s). The DO builds the codeword by taking the two portions of the master key \(({K}_{L},\) \({K}_{R})\), and \({R}_{i}\) as inputs, then outputs the encrypted index \({\text{I}}_{\text{SCKHA}}\) as shown in Algorithm 2. Next, the DO uses PRFs to generate ERS and embed them through the \({I}_{\text{SCKHA}}\) string as shown in Algorithm 3. After that, the DO re-encrypts the keyword wi in the record using SK as shown in Algorithm 4. Finally, the DO sends the updated data record(s) to the CS to replace the old record(s) according to their location, as shown in Algorithm 8.

In our SSE scheme, the embedded index \({\text{I}}_{\text{SCKHAEMbd}}\) of the data, along with the encrypted value, will be outsourced to the cloud database. The reason for outsourcing the data to the CS in this format is to conceal the outsourced data from cryptanalysis attacks. During the search operation, the DO will first generate the trapdoor Tw for the keyword w and then distort it by permeating ERS across the trapdoor to produce \(T^{\prime}_{w} .\) This method prevents the adversary or the CS from guessing the keyword that may have been inferred from earlier trapdoors. Data leakage may be determined by learning the retrieved records for the previous trapdoors and the frequency of identical ciphertext values in the encrypted indexes. Thus, the U can safely send \(T^{\prime}_{w}\) to the CS. On the other side, for each record Ri, the CS first runs the Clear algorithm to remove ERS from both \(T^{\prime}_{w}\) and \(I_{{{\text{SCKHAEMbd}}}}\) converting them back to \({T}_{w}\) and \({I}_{\text{SCKHA}}\) respectively, as described in Fig. 4. Now, it is time to perform the search operation using the Search (\({T}_{{\text{w}}_{i}}\), \({I}_{{\text{SCKHA}}_{\text{i}}}\)) function on the encrypted data to check whether the record Ri contains wi. The CS will return one or more records that contain wi to the DO. Finally, any updates will be done by the DO, who will upload the new encrypted data and \({I}_{\text{SCKHAEmbd}}\) to the outsourced encrypted database as shown in Algorithm 8.

4.2 The proposed fuzzy keyword SSE scheme

In this section, we will extend the DKS-SCKHA scheme to support a dynamic fuzzy keyword search on an encrypted database based on the SCKHA algorithm (DEFKS-SCKHA). The first step in building this scheme is to construct a fuzzy keyword set based on the wildcard technique [43, 44]. The wildcard fuzzy keyword set \({\text{FS}}_{{\text{w}}_{\text{i}},\text{d}}=\{{FS}_{{\text{w}}_{\text{i}},0},{FS}_{{\text{w}}_{\text{i}},1},{FS}_{{\text{w}}_{\text{i}},1},\dots \dots .{FS}_{{\text{w}}_{\text{i}},\text{d}}\}\) uses edit distance d = 1 for the keyword \({w}_{i} \in W (1 \le \text{ i} \le p)\). The total number of variations of the keyword in the wildcard technique is constructed by 2l + 2 when d = 1, instead of (2l + 1) × 26 + 1 as in the straightforward approach. A wildcard indicates all the edit operations at the same position for the keyword wi. For example, taking the keyword ‘ CAPITAL’ , the fuzzy keyword set with d = 1 is: {CAPITAL, *APITAL, C*APITAL, CA*PITAL, CA*ITAL, …., ‘ CAPITA*L, ‘ CAPITA*’ , CAPITAL*’ }. Therefore, the total number of variations for the keyword ‘CAPITAL’ is 16, which is much smaller than the 391 variations in the enumeration (straightforward) method. This helps improve search efficiency and saves storage space. Algorithm 9 shows the detailed operation to construct the fuzzy keyword set. The DEFKS-SCKHA scheme comprises eight polynomial-time algorithms, as described in Sect. 4.1. However, certain algorithms (BuildfuzzyIndex, EmbedfuzzyIndex, Trapdoor, Clear, Search) require some modifications to accommodate the fuzzy keyword set.

We can describe the functionality of the BuildfuzzyIndex, EmbedfuzzyIndex, Trapdoor, Clear, and Search algorithms as follows:

-

BuildfuzzyIndex (\({\text{K}}_{\text{L}}\), \({\text{K}}_{\text{R}}{,\text{ FS}}_{{\text{r}}_{\text{i}},\text{d}}\)): The DO will first apply the fuzzy keyword set algorithm to each data unit in the record to generate a set of keywords \({\text{FS}}_{{\text{rw}}_{\text{i}},\text{d}}\) for each data unit in the attribute. Then, the DO will apply the BuildEncryptedIndex algorithm, as shown in Algorithm 2. This algorithm takes two portions of the master key (KL, KR), and the generated fuzzy keyword set \({\text{FS}}_{{\text{rw}}_{\text{i}},\text{d}}\) as input, and outputs one index vector for each fuzzy keyword.

-

EmbedfuzzyIndex(\({\text{If}}_{\text{SCKHA}}\)): This function calculates the codeword by taking the generated fuzzy encrypted index \({\text{If}}_{\text{SCKHA}}\) as input and concatenating a random character before every character of the fuzzy encrypted index \({\text{If}}_{\text{SCKHA}}\) string as shown in Algorithm 10. The generated random characters are then embedded through the characters of the generated encrypted index \({\text{If}}_{\text{SCKHA}}\) to produce the codeword \({\text{If}}_{\text{SCKHAEmbd}}\). We disturb the order of the list for the codeword \({\text{If}}_{\text{SCKHAEmbd}}\) and send it to the CS.

-

Trapdoor (\({K}_{L}\),\({K}_{R}\),\({\text{FS}}_{{\text{w}}_{\text{i}},\text{d}}\)): This algorithm is run by the DO, taking the fuzzy keyword set \({\text{FS}}_{{\text{w}}_{\text{i}},\text{d}}\) and the two parts of the master key (\({K}_{L}\),\({K}_{R}\)) as inputs. It outputs the fuzzy trapdoor set \({\text{FTs}}_{\text{wi},\text{d}}\) for the keyword\({\text{w}}_{\text{i}}\). To securely transmit \({\text{FTs}}_{\text{wi},\text{d}}\) to the CS across channels, the DO must hide the generated fuzzy trapdoor set\({\text{FTs}}_{\text{wi},\text{d}}\). This is achieved by permeating random strings across the fuzzy trapdoor set strings to produce the fuzzy random trapdoor FT′swi,d

-

Clear (ERS): The CS removes the ERS from both \(T^{\prime}s_{wi,d}\) and \({\text{If}}_{\text{SCKHAEmbd}}\) using the steps shown in Algorithm 6. This algorithm is run twice: once for \(T^{\prime}s_{wi,d}\) to extract the trapdoor set \({\text{Ts}}_{{{\text{wi}},{\text{d}}}}\) and once for \({\text{If}}_{{{\text{SCKHAEmbd}}}}\) to extract the \({\text{If}}_{{{\text{SCKHA}}}}\).

-

Search (\({\text{Ts}}_{\text{wi},\text{d}}\), \({\text{If}}_{\text{SCKHA}}\)): This algorithm is run by the CS, taking the trapdoor set \({\text{Ts}}_{\text{wi},\text{d}}\) and \({\text{If}}_{\text{SCKHA}}\) as inputs. It returns 1 if w ∈ R (satisfying the query condition) or 0 otherwise, as shown in Algorithm 11. The CS returns the encrypted records to the user when the query condition is satisfied.

Finally, any updates will be performed by the DO to upload the new encrypted data and \({\text{If}}_{\text{SCKHAEmbd}}\) to the outsourced encrypted database. The update function is designed to update a dictionary-based index value. It takes the index dictionary and the new value to be added to the index. First, the function clears the ERS and checks if the key of the new value already exists in the index dictionary. If the key exists, the function appends the new value to the list of values associated with that key. If the key does not exist, the function creates a new key-value pair in the index dictionary and saves it to the \({\text{If}}_{\text{SCKHAEmbd}}\) file on the CS.

5 Security analysis

Privacy-preserving schemes may retrieve rows that do not include the requested keyword (false positives) or may miss out on some rows that do contain the keyword (false negatives). This utility loss can be characterized by two metrics: True Positive Rate (TPR) and False Positive Rate (FPR). TPR is the probability that the system returns rows containing the queried keyword as a query’s response. FPR is the probability that the CS returns rows that do not contain the requested keyword as a query’s response. Typically, TPR tends to approach 1, indicating higher accuracy in retrieving relevant results, while FPR tends to approach 0, indicating fewer irrelevant results returned by the CS.

5.1 Security analysis for exact/partial keyword search scheme

Generally, our scheme improves the efficiency of the SSE model while reducing information leakage. This section evaluates the privacy aspects of the DKS-SCKHA scheme, including Known-Ciphertext Attack, Known-Background Attack, Search Pattern Privacy, and Access Pattern Privacy, as described as follows:

5.1.1 Known-ciphertext attack (KCA)

Theorem 1

DKS-SCKHA guarantees that the adversaries cannot learn any keywords or search conditions from indices and/or trapdoor under KCA.

Proof

An adversary may attempt to analyze the polynomial function used in the search operation to predict encrypted keywords. The DKS-SCKHA scheme addresses this through several mechanisms:

-

Nondeterministic encryption: For the outsourced ciphertext, the DO uses a nondeterministic encryption technique (AES-GCM with 256-bit encryption) to encrypt each smart grid row using a shared key (SK). This ensures that the same keywords produce different ciphertexts each time, thereby guaranteeing both data authenticity and confidentiality. Consequently, the CS cannot infer any information about the plaintext rows of the smart grid data.

-

Master key usage: The DO uses another different key (MK) to create the encrypted searchable ciphertext. This MK is concealed by applying a hash function to it. The hashed key is then split into two chunk-keys (KL and KR) using a splitting technique [34, 35]. One of these chunks is chosen randomly based on a calculation performed on the plaintext keyword to construct the encrypted index. Therefore, even if the CS knows the MK, it cannot deduce any information about the encrypted outsourced data. The SCKHA algorithm and Pseudo-random Functions (PRFs) are used together to compute keywords, resulting in a different codeword list that is altered after each query process. This obfuscates the order of the retrieved records, preventing the CS from detecting any pattern.

-

Secure trapdoor construction: For each search query, the SCKHA algorithm and PRFs function are used together to compute a secure trapdoor. This ensures that both the conditions and the keywords are protected under the KCA attack. Thus, the DKS-SCKHA scheme effectively prevents adversaries from learning any keywords or search conditions from indices and/or trapdoors under the KCA.

5.1.2 Known-background attack (KBA)

Theorem 2

DKS-SCKHA guarantees that the CS or adversaries cannot recognize the keywords and conditions of the indices/trapdoors under KBA.

Proof

Currently, the adversary or the CS plans to use their prior knowledge and history information of the dataset to infer keywords based on search frequency. This could lead to the revelation of sensitive information within the smart grid data. The DKS-SCKHA scheme generates different trapdoors for similar keywords due to the randomly generated embedded strings. DKS-SCKHA aims to protect the privacy of keyword search operations, which involves three types of privacy: ciphertext privacy, index privacy, and trapdoor privacy. Ciphertext set privacy is achieved by encrypting the records using a block cipher, such as AES-GCM, which is secure against both Chosen-Plaintext Attacks (CPA) and Chosen-Ciphertext Attacks (CCA). In this way, the CS cannot infer any information without the key SK. The concepts of index unlinkability and trapdoor indistinguishability privacies will be described as follows:

-

Index unlinkability (IND-UN): It is a notation for security that is used to preserve the privacy of SSE schemes [45,46,47]. It prevents the CS from discovering any relationship between the encrypted indexes when more than one query is submitted. Therefore, the CS cannot infer whether two indices contain the same keywords. Assuming that the challenger (Ch) is a trusted confidential sender and the adversary (Adv) is an untrustworthy CSP, IND-UN ensures that the adversary cannot recognize whether two indexes contain the same plaintext keyword. The following content uses \(x\stackrel{R}{\leftarrow }X\) to indicate that x is uniformly random from the set X.

Theorem 3

We suppose that if adversary AdvA can break the created IND-UN with a non-negligible advantage, then there exists another adversary, AdvB, who can break the pseudo-random generated string and the SCKHA algorithm with a non-negligible advantage.

Proof

Setup: \(Ch\) obtains the private key \(MK\stackrel{R}{\leftarrow }KeyGen\) and generates \({K}_{L}\) \({and K}_{R}\).

Query: AdvA chooses two keywords: \({(w}_{0},{ w}_{1})\)∈ \(M\), where; \(M\) refers to the plaintext data in the dataset.

Challenge: Initially, \(Ch\) assumes a Boolean variable and sets \(b=1\) if there exists the same keyword in the two data units; otherwise\(, b = 0\). The \(Ch\) computes the indices of \({w}_{0}\) and\({w}_{1}\). For each keyword wi\(\left( {1 \le i \le n} \right)\), where \(n\) is the number of keywords.

$$T_{{{\text{wib}}}} = f_{{\text{MK }}} \left( {{\text{SCKHA}}_{{{\text{embed}}}} \left( {w_{{{\text{ib}}}} } \right)} \right)$$(7)$$X_{i} = S_{{1\left( {{\text{PRF}}} \right)}} f_{{\text{mk }}} (I_{{{\text{SCKHA}}_{1} }} \left( {w_{{{\text{ib}}}} )} \right) + \ldots + S_{{n\left( {{\text{PRF}}} \right)}} f_{{\text{mk }}} (I_{{{\text{SCKHA}}_{{\text{n}}} }} \left( {w_{{{\text{ib}}}} )} \right)$$(8)We inserted \(n{X}_{i}\) into a list of length \(n\) and shuffle the order of the list. Then, for both keywords, the \(Ch\) calculates the indexes \({I}_{wb}\), \({I}_{wb}\) for \({w}_{0}\), \({w}_{1}\), and sends these indexes to the adversary \(Adv\).

Adversary response: the adversary guesses and assigns\(b{^{\prime}} = 1\), if there exists same keyword in the two pieces of indexes; otherwise,\(b{^{\prime}} = { }0\), with \(b{^{\prime} } \in { }\left\{ {0,{ }1} \right\}.\) The adversary’ s advantage, denoted as \(\left| {Pr\left[ {b = b^{\prime}} \right] - 1/2} \right|\) is used by the AdvB to emulate AdvA and compromise \({S}_{1\left(PRF\right)}\) and\({\text{I}}_{\text{SCKHAEmbd}}\). Embedding the \({S}_{1\left(PRF\right)}\) before every character in the \({I}_{\text{SCKHA}}\) to produce the codeword list \({\text{I}}_{\text{SCKHAEmbd}}\) randomizes the searchable ciphertexts (indexes) for both identical plaintext data units. Additionally, randomizing the first letter in \({\text{I}}_{\text{SCKHAEmbd}}\) for every record prevents identical data units from being found in the same data block. This prevents the adversary from discovering any relation between two identical indexes in the outsourced encrypted data, even if they exist in the same data block. Therefore, adversary \(AdvB\) cannot break \({S}_{1\left(PRF\right)}\) and the SCKHA algorithm, as the adversary is a polynomial-time attacker. Consequently, the advantage is negligible, which indicated that our scheme achieves index unlinkability.

-

Trapdoor indistinguishability (TD-IND): It is a security notation used to maintain the security of SSE schemes. It aims to conceal the underlying keyword information of trapdoors. This prevents the adversary from discovering any relationship between the trapdoors generated from two submitted queries, thus preventing the determination of the underlying keyword. We assume that adversary \(Adv1\) is an untrustworthy CSP and Challenger \(Ch\) is a confidential and trusted sender. TD-IND ensures that the adversary is unable to determine whether two trapdoors correspond to similar plaintext keywords w0 and w1. The following contents uses \(x\stackrel{R}{\leftarrow }X\) to denote \(x\) as uniformly random from the set X, and \(n\) as the number of keywords.

Theorem 4

We suppose that if adversary \(\text{AdvA}\) can break the created TD-IND with a non-negligible advantage, then there is another adversary \(\text{AdvB}\) who can break the pseudo-randomly generated string and the SCKHA algorithm with a non-negligible advantage.

Proof

Setup: challenger \(Ch\) obtains the private key \(MK\stackrel{R}{\leftarrow }KeyGen\) and generate \({K}_{L}\) and \({K}_{R}\).

Query: Adv A will select two keywords \({w}_{0} {\text{and} w}_{1}\)∈ \(M\), where \(M\) denotes the plaintext data in the dataset.

Challenge: \(Ch\) calculates the trapdoor \({T}_{w}\) for the keyword \({w}_{b}\), and then computes the \({T^{\prime}}_{w}\) for \({T}_{w}\) when \(b\stackrel{R}{\leftarrow }\left\{\text{0,1}\right\}\). \({T^{\prime}}_{w}\) for the keyword \({w}_{b}\) can be calculated as described in Eq. 7 and it differs every time from the previous one. After the Ch obtains the codeword for the keyword \({w}_{b}\), he/she submits this \({T^{\prime}}_{w}\) to the adversary \(AdvA\).

Adversary response: It is time for the adversary to make a guess and choose b’ ∈ {0, 1}. Using the advantage of the adversary \(AdvA\) which is \(\left|Pr\left[b={b}^{\prime}\right]-\frac{1}{2}\right|\), the adversary \(AdvB\) will simulate adversary \(AdvA\) to compromise the pseudo-random function and the SCKHA algorithm. Because the adversary is a polynomial time attacker, it is impossible for them to break the SCKHA format or the pseudo-random function. Since the advantage is negligible, our technique satisfies the trapdoor indistinguishability requirement.

5.1.3 Search pattern privacy

Theorem 5

The proposed scheme protects privacy of search patterns.

Proof

When two search queries are launched by the \(\text{DO}\), the \(\text{CS}\) should not be able to determine whether these two queries have the same underlying keyword. This kind of security requirement is called search pattern privacy [48]. In our scheme, as described in TD-IND, when two trapdoors are given, the adversary cannot discover any relation between the generated trapdoors of two submitted queries to determine the underlying keyword.

5.1.4 Access pattern privacy

Theorem 6

Access pattern privacy is protected in the proposed scheme.

Proof

It is a leakage that indicates the matching record identifiers revealed during the search operation. This leakage primarily stems from the records retrieved by the user after obtaining the search results. Using random searchable ciphertext (embedded index), which starts from the first character will prevent them from being in the same data block. Additionally, the locations of the returned records are shuffled after executing each query. In this way, even if a query equivalent to the previously executed one is issued, the \(\text{CS}\) will see a different set of records being queried and returned, making it difficult to deduce patterns. This implies that the access pattern remains protected. Many schemes may insert some redundant irrelevant records on top of the target ones to confuse the \(\text{CS}\). But in our opinion, adding these redundant irrelevant records will decrease \(\text{TPR}\) and increase \(\text{FPR}\).

5.2 Security analysis for fuzzy keyword search scheme

The use of the generated vectors in both index and trapdoor generations requires further explanation in addition to the security analysis mentioned before in Sect. 5.1. Therefore, we can add the security analysis for three aspects: Index and query confidentiality, trapdoor unlinkability, and search privacy.

5.2.1 Index and query confidentiality

In the known ciphertext model, the data available to the CS includes encrypted index records and query trapdoors. However, neither the record index vectors nor the query vectors can be detected by the CS because they are encrypted with a secret key and then embedded with ERS. Additionally, there is no relationship between the record index vectors and the query vectors that the CS can exploit to reveal the data. Identical query requests submitted by authorized users generate different query indexes, preventing the CS from performing cryptanalysis. Consequently, index and query confidentiality are secure against the known ciphertext model.

5.2.2 Trapdoor unlinkability

Embedding the ERS through query trapdoor characters helps in producing distinct trapdoor vectors even for the same search keyword. The CS cannot differentiate between two trapdoors generated by the same keyword within polynomial time. As a result, it is challenging for the \(\text{CS}\) to infer the relation between two query trapdoors from the relevance score, making it impossible to link trapdoors.

5.2.3 Search privacy

Theorem 7

The DEFKS-SCKHA scheme is secure with respect to search privacy.

Proof

The computation of the index and the request for the same keyword are equivalent in the wildcard-based technique. Therefore, using reduction, we only need to demonstrate the privacy of the index. If the DEFKS-SCKHA scheme fails to achieve privacy for the embedded fuzzy encrypted index against the indistinguishability for the Chosen-Keyword-Attack (CKA), it implies that there exists an algorithm \(\text{Alg}\) capable of extracting implied information from the index for a specific keyword. We can construct another algorithm Alg′, which utilizes \({\text{Alg}}\) to determine whether some function \(f^{\prime}\) is a pseudo-random function or a random function. When trapdoor queries are submitted, the adversary Adv generates two keywords \(w_{0}\) and \(w_{1}\) with similar edit distance and length as a challenge \({\text{Ch}}\), which can be relaxed by including some extra trapdoors. Alg′ randomly selects \(b \in \left\{ {0, 1} \right\}\) and submits \(w_{b}\) to \({\text{Ch}}\). Subsequently, Subsequently, Alg′ receives a value V derived either from a random function or a pseudo-random function. Alg′ then forwards \(V\) to\({\text{Alg}}\), who replies with\(b \in \left\{ {0, 1} \right\}\). Assuming \({\text{Alg}}\) correctly guesses \(b\) with non-negligible advantage, this indicates that V is not randomly computed. Consequently, Alg′ concludes that f′ is a pseudo-random function. Based on this assumption, the pseudo-random function cannot be distinguished from the actual random function, and \(\text{Alg}\) is mostly guesses b correctly with estimated probability 1/2. Thus, the search privacy is obtained.

6 Implementation and performance evaluation

Our SSE scheme is implemented in Python (version 3.10) on a Windows 11 Operating System, running on an Intel Core i7-8550U processor (1.80–1.99 GHz), equipped with 16 GB RAM and a fast Ethernet of 140Gbps. The experiments utilize the Advanced Metering Infrastructure (AMI) statistics obtained from the Energy Information Administration (EIA) official website. This dataset is formatted according to EIA-861 M (2016), which is standard for monthly reporting in the power industry sector [49]. The dataset is provided in CSV format comprising 23 data elements including customer ID, customer address, month, year, etc. We host the AMI dataset on Clever Cloud [50], a public cloud storage platform. The dataset includes various data types such as dates and unsigned integers. We selected 13 attributes for analysis, including data ID, year, month, utility number, and name (Table 2). The dataset size ranges from 1000 to 30,000 records.

The analysis includes measuring time for each phase: generating the encrypted embedded index, encrypting the dataset, performing keyword searches, and decrypting data on the client side. For key setup, we hash the master key using SHA256 for generating a secure index. Dataset encryption employs AES-GCM with a 256-bit key. We use the Scrypt derivation key function with parameters (n = 2^14, r = 8, p = 1), along with securely generated random salts. Additionally, a random 96-bit IV is used as additional data during encryption.

6.1 Performance evaluation for exact/partial keyword search scheme

In this section, we will analyze the computation costs of DKS-SCKHA scheme with different related schemes, theoretically and experimentally respectively.

6.1.1 Theoretical analysis

As shown in Table 3, it includes 8 phases including performance functionalities and privacies between our scheme and other ones. The first six columns demonstrate the functionalities and the efficiency of the schemes. The last two columns of the table illustrate the privacy-preserving properties of the chosen SSE schemes. It is evident that schemes in [22], [24], and [52] support only exact search, whereas the remaining schemes support both exact and partial keyword search. Additionally, all schemes support dynamic operations except those described in [53] and [54].

For index generation size, our scheme requires that the \(\text{DO}\) to process a dataset with attributes, resulting in time complexity of \(O\left(2n*m\right)\). The time complexity for search in DKS-SCKHA is \(O ( n*m)\), where \(m\) is the number of rows in the table and n is the length of the keyword. Therefore, the search efficiency compares favorably with the schemes in [22, 24, 51], and [54]. The scheme in [52] offers the best search complexity; however, it is not dynamic, supports only single-keyword searches, and does not facilitate partial searches.

The search operation in [54] is performed first on Index1 with complexity \(O(K)\) returning a set of records that includes a certain false-positive rate. Subsequently, a search on Index2 with a complexity of \(O(2m\)) is executed on the record set that returned from Index1. This step reduces the false-positives rate. Our schemes eliminate false-positives like those in [51,52,53], and [24]. According to DEFKS-SCKHA, it takes more time for the index construction because it first constructs the fuzzy keyword list. Since the total number of variations of the keyword in the wildcard technique is constructed by \(2n+2\), this significantly impacts the creation of the secure index, resulting in a complexity of \(\text{O}(2{n}^{2}*m)\). When a user wants to search for a specific keyword, a fuzzy keyword set is first generated, requiring \(2n+2\) elements for each keyword. Subsequently, their corresponding trapdoor is also generated. However, longer keywords result in significantly larger fuzzy keyword sets (increasing both index and trapdoor sizes). As the number of characters \(|w|\) in the keyword increases, the size of fuzzy keyword set rises rapidly. Consequently, each encrypted element in the trapdoor must be matched with each encrypted element in the index. Specifically, the time complexity for fuzzy keyword search is \(O\left({n}^{2}*m\right)\). This notably reduces the efficiency of fuzzy search in DEFKS-SCKHA. Both DEFKS-SCKHA and the scheme in [54] support exact, partial, and fuzzy search. Other presented works often lack either privacy protections or functionalities. Therefore, it is clear that our schemes scheme can effectively satisfy most phases.

6.1.2 Experimental analysis

Since Li [22], and Xiong [24] schemes only support the single-keyword query, we will compare them with our experiments using three parameters: encrypted embedded index construction time, trapdoor generation time, and average query search time starting from user request to receiving and decrypting the encrypted results.

-

Embedded index construction time: We outsourced both the encrypted data and the embedded encrypted index for these attributes in a CSV file and which we then uploaded to the clever cloud server. To ensure the confidentiality, the outsourced data is encrypted by AES-GCM with the Scrypt derivation key function, and a randomly chosen IV (Initial Vector) is included as additional data. All encrypted values generated are saved in separate columns for each attribute. The time taken to create the encrypted index \({I}_{\text{SCKHAEmbd}}\) is measured and graphically represented in Fig. 5. The computation time for construction \({I}_{\text{SCKHAEmbd}}\) increases linearly with the number of records. DKS-SCKHA takes more time than Li and Xiong due to the increased complexity involved in constructing the \({I}_{\text{SCKHAEmbd}}\). However, it takes less time than the two schemes in two phases: trapdoor generation and keyword search as shown as follows:

-

Trapdoor cost time: the trapdoor generation phase is executed for different keywords, and the computation time for generating the trapdoor is depicted in Fig. 6. We measured the trapdoor time generation for 5 keywords. The graphical representation demonstrates that our scheme is taking less time than the Li and Xiong schemes.

-

Average keyword search computation time: it represents the final phase of accurately searching encrypted records after uploading the embedded encrypted index file to the cloud server and delivering the trapdoor. Figure 7 illustrates the graphical representation generated from executing an exact keyword search. The search time increases linearly for the keyword with the number of records. We queried by the keyword ‘Spruce Finance’ for the \(\text{Name}\) attribute. Our experimental results indicate that the search time for the DKS-SCKHA scheme is faster than both Xiong [24] and Li [22] schemes. Additionally, Fig. 8 depicts the graph generated from executing the partial keyword search stage using the keyword trapdoor. The computation time for partial keyword search also increases linearly. On average, the search efficiency of the DKS-SCKHA scheme is approximately 35% faster than the Xiong [24] scheme and 68% faster than the Li [22] scheme.

We evaluate the query operation through outsourced encrypted records in three scenarios: single-keyword queries, conjunctive keyword queries, and disjunctive keyword queries. Firstly, the computation time for encrypting rows is plotted in Fig. 9, showing linear growth with increasing record numbers. We also measured the number of retrieved records, average search time, and decryption time for the returned records, detailed in Table 4.

For single-keyword queries, we performed the search operation on different numbers of rows: 10, 20, and 30 k. Both the average search time and corresponding decryption time decrease linearly as the number of returned rows decreases.

For conjunctive and disjunctive keyword queries, we executed the search operation on 30 k rows. In conjunctive keyword query, the filtering rate of the returned records is decreases as the number of conditions increases. Conversely, in disjunctive keyword queries, the filtering rate increases with more conditions. In both cases, the average search time decreases linearly as the filtering rate of returned records decreases. In our proposed model, the \(\text{TPR}\) value is approximately 0.9987 and the FPR value is approximately 0.0001.

6.2 Performance evaluation for fuzzy keyword search scheme

Initially, we construct the fuzzy keyword set for each data unit in the record. Changing the length of each data unit (keyword) affects both the fuzzy keywords generated and the time required for their construction. As the length of each keyword increases, the time needed to construct the fuzzy keyword set also increases. Figure 10 illustrates the time cost to generate the fuzzy keyword set \({\text{FS}}_{{\text{w}}_{\text{i}},\text{d}}\) with d = 1.

We evaluated the performance of the DEFKS-SCKHA scheme using three parameters: encrypted embedded index construction time, trapdoor generation time, and average keyword search time. Experiments on the DEFKS-SCKHA scheme were conducted offline using the Utility Name attribute from the dataset.

-

Embedded index construction time: the time cost of constructing the secure index depends on the number of fuzzy keywords and the number of records. Therefore, more time is required for the fuzzy keyword set phase, which is crucial stage for generating an alternative fuzzy embedded encrypted indexes for each data unit. Figure 11 illustrates the time required for generating indexes for the DEFKS-SCKHA scheme. The index generation process involves creating an index vector for each individual data unit, and encrypting these vectors increases the time linearly with the number of distinct keywords.

-

Trapdoor Cost Time: the trapdoor generation phase is executed for different keywords. The computation time for generating the trapdoor is plotted graphically in Fig. 12. We measured the trapdoor generation time for five keywords. The required time to generate the trapdoor increases linearly with the size of the trapdoor keyword. This increase is due to the additional time needed to build a fuzzy keyword set, which is essential stage for generating fuzzy trapdoor alternatives for each keyword.

-

Average keyword search computation time: this phase contains two major steps: comparing the trapdoor with the embedded encrypted fuzzy indexes and extracting the matched index vector. The time cost to compare the trapdoor with the fuzzy indexes relates to the number of secure fuzzy indexes. As depicted in Fig. 13, the average search time for the DEFKS-SCKHA scheme is almost linearly with the number of keywords.

7 Conclusion and future work

We proposed a new DKS-SCKHA scheme tailored for smart grid data characteristics, emphasizing reliability and efficiency. Our scheme supports both partial and exact searches on encrypted databases effectively. We implemented a framework that integrates the search mechanism with a cloud-based MYSQL server. Experimental results demonstrate that our scheme outperforms other schemes in terms of trapdoor generation and keyword search speed. Moreover, extensive experiments on real datasets confirm that the DKS-SCKHA scheme provides a significantly more efficient tool for searching encrypted databases, achieving a performance improvement of 35 and 68% compared to the Xiong and Li schemes, respectively. The DKS-SCKHA scheme supports three scenarios of keyword search: single, conjunctive, and disjunctive. Theoretical analysis confirms that the DKS-SCKHA scheme is secure against both KCA and KBA attacks. We extended the DKS-SCKHA scheme to support a dynamic fuzzy keyword searches on the encrypted database (DEFKS-SCKHA), making it an effective and easily updatable scheme. The construction of the index in DEFKS-SCKHA involves first generating the fuzzy keyword list, which increases the time required. The total number of the variations of the keywords in the wildcard technique is constructed by \(2n+2\). The time complexity of fuzzy keyword search \(\text{is }O(m*{n}^{2})\). Consequently, the time complexity of fuzzy keyword searches is \(O(m*{n}^{2})\), significantly impacting the efficiency of DEFKS-SCKHA’ s fuzzy search capabilities. The security and efficiency of the DEFKS-SCKHA scheme have been assessed through comprehensive security analysis and experimental evaluation.

The DEFKS-SCKHA scheme becomes impractical with large data collections due to the exponential growth of the fuzzy keyword set, leading to excessive resource consumption and memory usage. As a future direction, integrating Locality Sensitive Hashing (LSH) instead of relying on edit distance d could address these scalability challenges.

Additionally, an open area of exploration involves identifying clients who used electricity within a specific range in the previous year. To fulfill this requirement, employing range search queries using cryptographic techniques such as homomorphic encryption (HE) and order-preserving encryption (OPE) will be necessary.

Data availability

The used dataset during the current study is in the standard format for monthly reports of advanced metering in the power industry [35].

References

Adepu S, Kandasamy NK, Zhou J, Mathur A (2019) Attacks on smart grid: power supply interruption and malicious power generation. Int J Inf Secur 19(2):189–211. https://doi.org/10.1007/s10207-019-00452-z.9

Pilz M et al (2019) Security attacks on smart grid scheduling and their defences: a game-theoretic approach. Int J Inf Secur 19(4):427–443. https://doi.org/10.1007/s10207-019-00460-z

Syrmakesis AD, Alcaraz C, Hatziargyriou ND (2022) Classifying resilience approaches for protecting smart grids against cyber threats. Int J Inf Secur 21(5):1189–1210. https://doi.org/10.1007/s10207-022-00594-7

Arnold WG (2010) {NIST} Framework and roadmap for smart grid interoperability standards, release 1.0, National institute of standards and technology, https://doi.org/10.6028/NIST.sp.1108

Tang J and Sui H (2017) Application technology of big data in smart grid and its development prospect in 2017 International conference on computer technology, electronics and communication ({ICCTEC}), IEEE, https://doi.org/10.1109/icctec.2017.00126

Atta-ur-Rahman NM, Ibrahim DM, Mohammed AA, Khan SC, Dash S (2022) Cloud-based smart grids: opportunities and challenges. In: Dehuri S, Mishra BSP, Mallick PK, Cho SB (eds) Biologically inspired techniques in many criteria decision making: proceedings of BITMDM 2021. Springer Nature, Singapore, pp 1–13. https://doi.org/10.1007/978-981-16-8739-6_1

Hart GW (1992) Nonintrusive appliance load monitoring. Proc IEEE 80(12):1870–1891. https://doi.org/10.1109/5.192069

Elhadad A, Tibermacine O and Hamad S (2022) Hiding privacy data in visual surveillance video based on wavelet and flexible function 2022. 2nd International Conference of Smart Systems and Emerging Technologies (SMARTTECH), Riyadh, Saudi Arabia, pp. 85-90, https://doi.org/10.1109/SMARTTECH54121.2022.00031

Elhadad A, Hamad S, Khalifa A et al (2017) High capacity information hiding for privacy protection in digital video files. Neural Comput Applic 28(Suppl 1):91–95. https://doi.org/10.1007/s00521-016-2323-7

Elhadad A and Rashad M (2021) Hiding privacy and clinical information in medical images using QR code 2021. International Telecommunications Conference (ITC-Egypt), Alexandria, Egypt, pp. 1-4, https://doi.org/10.1109/ITC-Egypt52936.2021.9513979

Song DX, Wagner D, and Perrig A (2000) Practical techniques for searches on encrypted data. In: Proceeding 2000 {IEEE} Symposium on Security and Privacy. {IEEE} Comput Soc https://doi.org/10.1109/secpri.2000.848445

Goh EJ (2003) Secure indexes IACR Cryptology ePrint Archive, p.216

. Chang C and Mitzenmacher M (2005) Privacy Preserving Keyword Searches on Remote Encrypted Data. In: Applied Cryptography and Network Security, Springer Berlin Heidelberg, pp. 442–455. https://doi.org/10.1007/11496137_30

Curtmola R, Garay J, Kamara S, and Ostrovsky R (2006) Searchable symmetric encryption,” In: Proceedings of the 13th ACM conference on Computer and communications security, ACM, https://doi.org/10.1145/1180405.1180417

Curtmola R, Garay J, Kamara S, Ostrovsky R (2011) Searchable symmetric encryption: improved definitions and efficient constructions. J Comput Secur 19(5):895–934. https://doi.org/10.3233/jcs-2011-0426

Sun W. et al. (2013) Privacy-preserving multi-keyword text search in the cloud supporting similarity-based ranking. In: Proceedings of the 8th {ACM} {SIGSAC} symposium on Information, computer and communications security, ACM, https://doi.org/10.1145/2484313.2484322

Xia Z, Wang X, Sun X, Wang Q (2016) A secure and dynamic multi-keyword ranked search scheme over encrypted cloud data. IEEE Trans Parallel Distrib Syst 27(2):340–352. https://doi.org/10.1109/tpds.2015.2401003

Cao N, Wang C, Li M, Ren K, and Lou W (2011) Privacy-preserving multi-keyword ranked search over encrypted cloud data. In: 2011 Proceedings {IEEE} {INFOCOM}, IEEE, https://doi.org/10.1109/infcom.2011.5935306.

Wang XA et al (2016) Efficient privacy preserving predicate encryption with fine-grained searchable capability for Cloud storage. Comput Electr Eng 56:871–883

Fu Z, Ren K, Shu J, Sun X, Huang F (2016) Enabling personalized search over encrypted outsourced data with efficiency improvement. IEEE Trans Parallel Distrib Syst 27(9):2546–2559. https://doi.org/10.1109/tpds.2015.2506573

Andola N, Prakash S, Yadav VK, Raghav SV, Verma S (2022) A secure searchable encryption scheme for cloud using hash-based indexing. J Comput Syst Sci 126:119–137. https://doi.org/10.1016/j.jcss.2021.12.004

Li J, Niu X, and Sun JS (2019) A practical searchable symmetric encryption scheme for smart grid data. In: {ICC} 2019 - 2019 {IEEE} International Conference on Communications ({ICC}), IEEE, https://doi.org/10.1109/icc.2019.8761599

Zhu J, Wu T, Li J, Liu Y, Jiang Q (2021) Multi-keyword cipher-text retrieval method for smart grid edge computing. J Phys Conf Ser 1754(1):12076. https://doi.org/10.1088/1742-6596/1754/1/012076

Xiong H et al (2022) An efficient searchable symmetric encryption scheme for smart grid data. Secur Commun Networks 2022:1–11. https://doi.org/10.1155/2022/9993963

Hiemenz B, Krämer M (2018) Dynamic searchable symmetric encryption for storing geospatial data in the cloud. Int J Inf Secur 18(3):333–354. https://doi.org/10.1007/s10207-018-0414-4

Molla E, Rizomiliotis P, Gritzalis S (2023) Efficient searchable symmetric encryption supporting range queries. Int J Inf Secur. https://doi.org/10.1007/s10207-023-00667-1

Wang D, Wu P, Li B, Du H, Luo M (2022) Multi-keyword searchable encryption for smart grid edge computing. Electr Power Syst Res 212:108223. https://doi.org/10.1016/j.epsr.2022.108223

Wang XA, Huang X, Yang X, Liu L, Xuguang W (2012) Further observation on proxy re-encryption with keyword search. J Syst Softw 85(3):643–654. https://doi.org/10.1016/j.jss.2011.09.035

Li J, Wang Q, Wang C, Cao N, Ren K and Lou W (2010) Fuzzy Keyword Search over Encrypted Data in Cloud Computing. In: 2010 Proceedings {IEEE} {INFOCOM}, IEEE, https://doi.org/10.1109/infcom.2010.5462196

Wang B, Yu S, Lou W, and Hou YT (2014) Privacy-preserving multi-keyword fuzzy search over encrypted data in the cloud. In: {IEEE} {INFOCOM} 2014 - {IEEE} Conference on Computer Communications, IEEE, https://doi.org/10.1109/infocom.2014.6848153

Fu Z, Wu X, Guan C, Sun X, Ren K (2016) Toward efficient multi-keyword fuzzy search over encrypted outsourced data with accuracy improvement. IEEE Trans Inf Forensics Secur 11(12):2706–2716. https://doi.org/10.1109/tifs.2016.2596138

Zhong H, Li Z, Cui J, Sun Y, Liu L (2020) Efficient dynamic multi-keyword fuzzy search over encrypted cloud data. J Netw Comput Appl 149:102469. https://doi.org/10.1016/j.jnca.2019.102469

Tong Q, Miao Y, Weng J, Liu X, Choo K-KR, Deng R (2022) Verifiable fuzzy multi-keyword search over encrypted data with adaptive security. IEEE Trans Knowl Data Eng. https://doi.org/10.1109/TKDE.2022.3152033

Liu G, Yang G, Bai S, Zhou Q, Dai H (2020) {FSSE}: an effective fuzzy semantic searchable encryption scheme over encrypted cloud data. IEEE Access 8:71893–71906. https://doi.org/10.1109/access.2020.2966367

Souror S, El-Fishawy N, and Badawy M (2021) SCKHA: A New Stream Cipher Algorithm Based on Key Hashing and Splitting Technique In: 2021 International Conference on Electronic Engineering (ICEEM), IEEE, https://doi.org/10.1109/iceem52022.2021.9480652

Souror S, El-Fishway N, Badawy M (2022) Security analysis for SCKHA algorithm: stream cipher algorithm based on key hashing technique. Chin J Electron CJE. https://doi.org/10.23919/cje.2021.00.383

Rogaway P, Atluri V (2002) Authenticated-encryption with associated-data. In: ACM Conference on Computer and Communications Security, pp. 98–107

Arunkumar B, Kousalya G (2018) Analysis of AES-GCM cipher suites in TLS. In: Thampi SM, Mitra S, Mukhopadhyay J, Li K-C, James AP, Berretti S (eds) Intelligent systems technologies and applications. Springer International Publishing, Cham, pp 102–111. https://doi.org/10.1007/978-3-319-68385-0_9

Katz J, Lindell Y (2020) Introduction to modern cryptography. CRC Press

Levenshtein VI (1965) Binary codes capable of correcting deletions, insertions, and reversals. Probl Inf Transm 1(1):8–17

Xia Z, Wang X, Sun X, Wang Q (2015) A secure and dynamic multikeyword ranked search scheme over encrypted cloud data’. IEEE Trans Parallel Distrib Syst 27(2):340–352

Wong WK, Cheung DW-L, Kao B, and Mamoulis N (2009) Secure KNN computation on encrypted databases. In: Proc. Int Conf Manage Data, pp. 139–152

Zerr S, Olmedilla D, Nejdl W, and Siberski W (2009) Zerber+R: Top-k retrieval from a confidential index. In: Proc. 12nd Int Conf Extending Database Technol Adv Database Technol (EDBT), pp. 439–449

Wang J et al (2013) Efficient verifiable fuzzy keyword search over encrypted data in cloud computing. Comput Sci Inf Syst 10(2):667–684. https://doi.org/10.2298/csis121104028w

Zhu X, Liu Q, and Wang G A (2016) Novel verifiable and dynamic fuzzy keyword search scheme over encrypted data in cloud computing. In: 2016 {IEEE} Trustcom/{BigDataSE}/{ISPA}, IEEE. https://doi.org/10.1109/trustcom.2016.0147

Abdelfattah S et al (2021) Efficient search over encrypted medical data with known-plaintext/background models and unlinkability. IEEE Access 9:151129–151141. https://doi.org/10.1109/ACCESS.2021.3126200

Song F, Qin Z, Liu D, Zhang J, Lin X, Shen X (2021) Privacy-preserving task matching with threshold similarity search via vehicular crowdsourcing. IEEE Trans Veh Technol 70(7):7161–7175. https://doi.org/10.1109/TVT.2021.3088869

Xiong H, Ting W, Qi Y, Shen Y, Zhu Y, Zhang P, Dong X (2022) An efficient searchable symmetric encryption scheme for smart grid data. Security Commun Netw 2022:1–11. https://doi.org/10.1155/2022/9993963

Baron J, El Defrawy K, Minkovich K, Ostrovsky R, Tressler E (2013) 5PM: secure pattern matching. J Comput Secur 21(5):601–625. https://doi.org/10.1007/978-3-642-32928-9_13

EIA Electricity (2021) https://www.eia.gov/electricity/data/eia861m/#ammeter

Yin F, Rongxing L, Zheng Y, Shao J, Yang X, Tang X (2021) Achieve efficient position-heap-based privacy-preserving substring of keyword query over cloud. Comput Secur 110:102432

Liu Q, Tian Y, Wu J, Peng T, Wang G (2019) Enabling verifiable and dynamic ranked search over outsourced data. IEEE Trans Serv Comput 15(1):69–82

Hoang CN, Nguyen MH, Nguyen TTT, Vu HQ (2023) A novel method for designing indexes to support efficient substring queries on encrypted databases. J King Saud Univ Comput Inf Sci 35(3):20–36

Fu S, Zhang Q, Jia N et al (2021) A privacy-preserving fuzzy search scheme supporting logic query over encrypted cloud data. Mobile Netw Appl 26:1574–1585. https://doi.org/10.1007/s11036-019-01493-3

Funding

Open access funding provided by The Science, Technology & Innovation Funding Authority (STDF) in cooperation with The Egyptian Knowledge Bank (EKB).

Author information

Authors and Affiliations