Abstract

In this paper, a new bounded distribution is introduced and some distributional properties of the new distribution are discussed. Moreover, the new distribution is implemented in the field of engineering to the Cpc process capability index. Three unknown parameters of the distribution are estimated with several estimators, and the performances of the estimators are evaluated with a Monte Carlo simulation. A new regression model is introduced based on this new distribution as an alternative to beta and Kumaraswamy models. Furthermore, it is considered one of the first studies where regression model parameters are estimated using least squares, weighted least squares, Cramér–von Mises, and maximum product spacing estimators other than the maximum likelihood. The efficiency of the estimators for the parameters of the regression model is further assessed through a simulation. Real datasets are analyzed to demonstrate the applicability of the new distribution and regression model.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In the field of statistics, numerous distributions are presented, and many of them are referred to as lifetime distributions. These distributions are particularly important when analyzing survival times and reliability. To effectively represent the behavior of the sample, statisticians explore various distribution models, especially in domains such as survival analysis and reliability engineering. The selection of a distribution should be made by the analysis based on the characteristics of the sample and the context of the analysis. Applying lifetime distributions to data expressed as proportions or percentages may lead to results diverging from the intended outcomes. Proportional data are frequently encountered in various situations, including mortality rates, recovery rates, smoking prevalence, efficiency of chemical reactions, percentages of educational attainment, and other scenarios. It is crucial to exercise caution when using lifetime distributions with such data, as their application may not align with the nature of proportional or percentage-based information, potentially resulting in inaccurate outcomes. The number of distributions available for modeling these samples is limited. Among the most well known are the beta and Kumaraswamy [1] distributions. Novel alternative distributions for beta and Kumaraswamy are proposed in the statistical literature. In the proposal of these new alternative distributions, various transformations, such as \(X = \exp \left( { - Y} \right),\,\,X = Y/\left( {Y + 1} \right),\,\,X = 1/\left( {Y + 1} \right),\,\,X = 1 - \exp \left( { - Y} \right),\) are applied to the existing random variables \(\left( Y \right)\) to construct a new unit random variable \(\left( X \right).\) Several alternative distributions derived through these transformations are presented as follows: unit-Lindley distribution is derived by [2] using the transformation \(X = Y/\left( {Y + 1} \right)\) where \(Y\) has the Lindley distribution [3]\(,\) unit-Weibull distribution is obtained by [4] with the transformation \(X = \exp \left( { - Y} \right),\) where \(Y\) has the Weibull distribution; unit Burr-XII distribution is introduced by [5] with the transformation \(X = \exp \left( { - Y} \right),\) where \(Y\) has the Burr-XII distribution; unit Johnson SU distribution is derived by [6] using the transformation \(X = 1 - \exp \left( { - Y} \right),\) where \(Y\) has the Johnson SU distribution; unit-Gamma distribution is obtained by [7] with the transformation \(X = \exp \left( { - Y} \right),\) where \(Y\) has the Gamma distribution; unit-inverse Gaussian distribution is obtained by [8] with the transformation \(X = \exp \left( { - Y} \right),\) where \(Y\) has the inverse Gaussian distribution; unit-Birnbaum–Saunders distribution is obtained by [9] with the transformation \(X = \exp \left( { - Y} \right),\) where \(Y\) has the Birnbaum-Saunders distribution; unit-logistic distribution is introduced by [10] with the transformation \(X = Y/\left( {Y + 1} \right)\) where \(Y\) has the logistic distribution; unit log–log distribution is derived by [11] using the transformation \(X = \exp \left( { - Y} \right),\) where \(Y\) has the log–log distribution and unit Teissier distribution is obtained by [12] with the transformation \(X = \exp \left( { - Y} \right),\) where \(Y\) has the Teissier distribution.

The beta regression model introduced by [13] is commonly used to elucidate the response variable when it is defined on \(\left( {0,1} \right)\). In recent years, several new regression models are proposed as alternatives to the beta regression model. These include the unit-Weibull regression model [4], unit Burr-XII regression model [5], unit log–log regression model [11], unit-Birnbaum–Saunders regression model [14], unit power-logarithmic regression model [15] and unit Burr–Hatke regression model [16]. This study presents an alternative regression model to current regression models. The effectiveness of this new regression model is indicated through its practical application. The cumulative distribution function of the new regression model is expressed in closed form, and it is an advantage over the beta regression model.

Evaluating the capability of a process is one of the crucial steps conducted by the quality controller. There are various process capability indices available to assess the capability of the process. In cases where measurements follow a normal distribution, the most popular process capability indices are Cp [17], Cpk [18], Cpm [19], and Cpmk [20]. However, for situations where measurements do not follow the normal distribution, several new process capability indices are developed, such as Spmk [21] and Cpyk [22]. The Cpc index suggested by [23] evaluates the capability of the process for normal and non-normal measurements. In this study, the Cpc index is examined based on the new distribution.

The main motivation of this paper is to introduce a new bounded distribution to the literature. Additionally, an alternative regression model based on this new distribution is introduced. Furthermore, to demonstrate the application of this new distribution in the field of quality control engineering, a process capability index is examined. In addition to all these significant motivations, one of the major contributions of this article is the utilization of estimation methods other than maximum likelihood estimation for the estimation of the unknown parameters of the proposed regression model. The rest of the paper is organized as follows: The new distribution is introduced and some of its mathematical properties are examined in Sect. 2. The point estimators are discussed in Sect. 3 with a Monte Carlo simulation for new distribution. A new regression analysis is introduced, and point estimation of the new regression model is examined with several estimators in Sect. 4 with a simulation experiment. In Sect. 5, three real data analyses are presented to demonstrate the applicability of the new distribution and regression model. The article is concluded in the section presenting the results and some suggestions in Sect. 6.

2 Unit NET distribution

In this section, a new distribution is presented based on the NET distribution introduced by [24]\(.\) The NET is expressed as “a new exponential distribution with trigonometric functions” in [24]. Let \(Y\) be a random variable following the NET distribution \(\left( {Y \sim NET\left( {\lambda ,\beta ,\alpha } \right)} \right)\) with cumulative distribution function (cdf) and probability density function (pdf) given, respectively, by

where \(\lambda ,\beta > 0\) and \(\alpha \in \left( { - 1,1} \right).\) In the following, the new distribution is introduced. Let \(Y \sim {\text{NET}}\left( {\lambda ,\beta ,\alpha } \right),\) and define the random variable \(X = \exp \left( { - Y} \right).\) Then, the cdf and pdf of the random variable \(X\) are given, respectively, by

and

where \(\lambda ,\beta > 0,\) and \(\alpha \in \left( { - 1,1} \right)\) are parameters of the new distribution. The new distribution, which cdf given in Eq. (1) and pdf in Eq. (2), is called the unit NET (UNET) distribution, and it is denoted by \(UNET\left( {\lambda ,\beta ,\alpha } \right).\) Moreover, the survival function (sf) of the UNET distribution can also be easily obtained as \(S_{{{\text{UNET}}}} \left( {x;\lambda ,\beta ,\alpha } \right) = 1 - F_{{{\text{UNET}}}} \left( {x;\lambda ,\beta ,\alpha } \right).\) The hazard rate function (hf) of the UNET distribution is obtained as

The plots of the pdf and hf for various parameter choices are presented in Figs. 1 and 2, respectively. It can be observed from Fig. 1 that the pdf of the UNET distribution exhibits shapes characterized by decreasing, increasing, unimodal, and decreasing–increasing patterns. It is concluded from Fig. 2 that the hf of the UNET distribution exhibits a decreasing, increasing, and unimodal shape.

2.1 Moments

In this subsection, moments for UNET distribution are derived. Let \(X \sim UNET\left( {\lambda ,\beta ,\alpha } \right),\) then the rth moment of a random variable \(X\) is given by

When \(r = 1,2,3\) and \(4\) are substituted into Eq. (3), the first four moments are obtained, respectively, by

and

The variance of the UNET distribution is obtained using Eqs. (4) and (5)

2.2 Order statistics

In this subsection, some findings related to the order statistics of the UNET distribution are presented. Consider a random sample \(X_{1} ,X_{2} , \ldots ,X_{n}\) from the UNET distribution, and \(X_{\left( 1 \right)} \le X_{\left( 2 \right)} \le \cdots \le X_{\left( n \right)}\) denote the related order statistics. The cdf and pdf of the rth order statistic are presented in general form, respectively, by

and

where \(B\left( {.;\,\,.} \right)\) is the classical beta function, and \(r = 1,2, \ldots ,n.\) The cdf and pdf of the rth order statistic of the UNET distribution are given by

and

Clearly, for \(r = 1\) and \(r = n,\) the cdf and pdf of \(X_{\left( 1 \right)} = \min \left( {X_{1} ,X_{2} , \ldots ,X_{n} } \right)\) and \(X_{\left( n \right)} = \max \left( {X_{1} ,X_{2} , \ldots ,X_{n} } \right)\) are obtained.

2.3 Stochastic ordering

In this subsection, stochastic ordering properties of the UNET distribution are discussed. For positive continuous random variables, stochastic ordering and other forms of ordering serve as crucial tools for assessing their comparative behavior. Stochastic ordering finds applications in various fields such as actuarial science, reliability, insurance, and economics. Detailed information on stochastic ordering can be found in [25].

Theorem 1

Let the random variable \(X \sim {\text{UNET}}\left( {\lambda_{1} ,\beta ,\alpha } \right)\) with pdf \(f_{x} \left( x \right)\) and the random variable \(Y \sim {\text{UNET}}\left( {\lambda_{2} ,\beta ,\alpha } \right)\) with the pdf \(f_{y} \left( x \right).\) Then, it \(\lambda_{1} < \lambda_{2} ,\)\(X\) is smaller than \(Y\) in the likelihood ratio order, i.e., the ratio function of the corresponding pdfs defined by \(g\left( x \right) = f_{x} \left( x \right)/f_{y} \left( x \right)\) is decreasing in \(x.\)

Proof:

After some algebraic manipulations, we get

Upon differentiation with respect to \(x,\) and after some algebraic manipulations, we obtain

It is evident that for \(\lambda_{1} < \lambda_{2} ,\) \(g^{\prime} \left( x \right) < 0,\) implying that \(g\left( x \right)\) is decreasing in \(x,\) and the desired likelihood ratio order is achieved.

2.4 Cpc index for UNET distribution

In this subsection, the Cpc index proposed by [23] is discussed based on the UNET distribution. The Cpc index is given by

where \(p_{0} \in \left( {0,1} \right)\) represents the efficiency of the process and should be taken to be close to 1; LSL and USL are the lower and upper specification limits of the process, respectively. The Cpc index is also considered for exponentiated-exponential distribution in [26].

The Cpc index relies on a crucial definition, namely net sensitivity (NS). The NS is obtained in general form as

where \(f\) represents the pdf. The nature of the process under consideration and the requirements of the customers will determine the \(p_{0}\) value, which should intuitively be close to one. A lower NS value in absolute terms indicates the production of less sensitive and more robust products or components. More sensitive and less durable products or parts are produced as the NS value rises.

Let random variable \(X\) have a UNET distribution, the Cpc index is given by

and NS based on the Cpc index for UNET distribution is given by

2.5 Data generating algorithm for UNET distribution

In this subsection, an algorithm is proposed to generate data from \(UNET\left( {\lambda ,\beta ,\alpha } \right)\) distribution. As the inverse transformation method fails to provide an explicit formula, we suggest employing an acceptance–rejection (AR) sampling in Algorithm 1; the uniform distribution is selected as the proposal distribution. The AR algorithm is outlined as follows.

Algorithm 1.

A1. Generate data on random variable \(W\) s from the standard uniform distribution with pdf \(g\left( w \right) = 1,\,\,0 < w < 1.\)

A2. Generate \(U\) from standard uniform distribution (independent of \(W\)).

A3. If

then set \(X = W\)(“accept”); otherwise, go back to A1 (“reject”), where \(f\) is the pdf of UNET distribution in Eq. (2) and

The output of Algorithm 1 gives a random data \(X\) from \({\text{UNET}}\left( {\lambda ,\beta ,\alpha } \right)\) distribution. It is noted that Algorithm 1 is used for all simulations in the paper.

3 Point estimation for parameters of the UNET distribution with a Monte Carlo simulation

In this section, three unknown parameters of the UNET distribution are estimated using five estimators, including maximum likelihood (ML), least squares (LS), weighted least squares (WLS), Cramér–von Mises (CvM), and maximum product spacing (MPS). Let \(X_{1} ,X_{2} , \ldots ,X_{n}\) be a random sample from the \(UNET\left( {\lambda ,\beta ,\alpha } \right)\) distribution, and \(x_{1} ,x_{2} , \ldots ,x_{n}\) is the observed value of the sample. Let \(X_{\left( 1 \right)} ,X_{\left( 2 \right)} , \ldots ,X_{\left( n \right)}\) be the order statistics based on the sample \(X_{1} ,X_{2} , \ldots ,X_{n}\) with the realization \(x_{\left( 1 \right)} ,x_{\left( 2 \right)} , \ldots ,x_{\left( n \right)} .\) Then, the likelihood and log-likelihood functions are given, respectively, by

and

where \(\Xi = \left( {\lambda ,\beta ,\alpha } \right).\) The ML estimator of \(\Xi ,\) say \(\widehat{\Xi } = \left( {\widehat{\lambda },\widehat{\beta },\widehat{\alpha }} \right)\) are obtained

Other estimators can be obtained by defining the following functions as given below

and

where \(F\left( {x_{0:n} |\lambda ,\beta ,\alpha } \right) = 0,F\left( {x_{n + 1:n} |\lambda ,\beta ,\alpha } \right) = 1,\) and \(F\) is the cdf of the UNET distribution. The LS, WLS, CvM, MPS estimators for parameters \(\Xi\) are obtained by minimizing or maximizing of the Eqs. (8)–(11), respectively, by

and

These equations cannot be minimized or maximized analytically, requiring the utilization of numerical methods such as the Newton–Raphson method for optimization. In this study, the optimization procedures are conducted utilizing the Nelder–Mead method, executed with the optim function [27] in R.

3.1 Monte Carlo simulation study

In this subsection, the bias, mean squared error (MSE), average absolute bias (ABB), and mean relative error (MRE) of ML, LS, WLS, CvM, and MPS estimates of parameters of the UNET distribution are estimated based on 5000 trials (Table 1). With these four criteria, the best estimator is determined in the simulation study. The sample sizes are selected as \(n = 25,50,100,\) and \(500.\) The results are reported in Tables 2, 3, 4, 5. It is concluded from Tables 2, 3, 4, 5 that the ML is the best estimator for the \(\lambda\) parameter based on the bias criterion. In contrast, the LS and CvM estimators perform best for the \(\beta\) parameter, and the ML and CvM arethe most effective estimator for the \(\alpha\) parameter based on the bias criterion. Based on the MSE criterion, the analysis reveals that the ML and WLS prove to be the most effective estimators for the \(\lambda\) parameter. Furthermore, the ML and WLS show superior performance in estimating the \(\beta\), while the ML and MPS are identified as the optimal choices for estimating the \(\alpha\). For the ABB criterion, the simulation results suggest that ML, CvM, and WLS are the most effective estimators for the \(\lambda\). Moreover, for the \(\beta\) and \(\alpha\) parameters, ML, CvM and MPS stand out as the optimal choices. Finally, simulation results based on the MRE criterion indicate that ML and WLS are the preferred methods for estimating the \(\lambda\), while ML and MPS are identified as the most effective methods for the \(\beta\) and \(\alpha\).

4 A novel quantile regression model

This section introduced a novel regression model based on the UNET distribution. Let \(\log \left( {\lambda_{i} } \right) = z_{i}^{T} {{\varvec{\upgamma}}},\) where \({{\varvec{\upgamma}}} = \left( {\gamma_{0} ,\gamma_{1} , \ldots ,\gamma_{p} } \right)^{T} \in {\mathbb{R}}^{p}\) and \(z_{i} = \left( {1,z_{i1} ,z_{i2} , \ldots ,z_{ip} } \right)\) for \(i = 1,2, \ldots ,n.\) Then, the cdf and pdf in Eqs. (1), (2) are re-parameterized, respectively, by

and

where \({{\varvec{\uppsi}}} = \left( {{{\varvec{\upgamma}}},\beta ,\alpha } \right).\) It is specified that for the rest of the paper, the random variable \(X_{i}\) is represented by \(X \sim {\text{RUNET}}\left( {{\varvec{\uppsi}}} \right).\)

4.1 Estimation for regression model parameters

Let \(X_{1} ,X_{2} , \ldots ,X_{n}\) be a random sample from the \({\text{RUNET}}\left( {{\varvec{\uppsi}}} \right)\) distribution, and \(x_{1} ,x_{2} , \ldots ,x_{n}\) is the observed value of the sample. Let \(X_{\left( 1 \right)} ,X_{\left( 2 \right)} , \ldots ,X_{\left( n \right)}\) be the order statistics based on the sample \(X_{1} ,X_{2} , \ldots ,X_{n}\) with the realization \(x_{\left( 1 \right)} ,x_{\left( 2 \right)} , \ldots ,x_{\left( n \right)} .\) The log-likelihood function is given by

The ML of \({{\varvec{\uppsi}}}\) states that \(\widehat{{{\varvec{\uppsi}}}}_{1} = \left( {\widehat{\gamma }_{0} ,\widehat{\gamma }_{1} , \ldots ,\widehat{\gamma }_{p} ,\widehat{\beta },\widehat{\alpha }} \right)\) is attained by maximization of the \(\ell \left( {{\varvec{\uppsi}}} \right)\) as

The asymptotic distribution of \(\left( {\widehat{{{\varvec{\uppsi}}}}_{1} - {{\varvec{\uppsi}}}_{1} } \right)\) is multivariate normal \(N_{p + 1} \left( {0,J^{ - 1} } \right),\) where \(J\) is the expected information matrix when provided some regularity conditions. The observed information matrix is generally used in place of \(J.\) The observed information matrix can be computed using any software. The optim function in R can be used to determine the asymptotic standard errors of estimates based on the observed Fisher information matrix.

Some estimators described in Sect. 3 are used to estimate the unknown parameter of the regression model. It is considered to be one of the first attempts to estimate unknown regression parameters using alternative estimators instead of maximum likelihood estimators. For the four estimators related to the regression model, the related functions are as follows:

and

where \(G\) is the cdf of the RUNET in Eq. (12). The LS, WLS, CvM, and MPS estimators for the unknown parameters of the regression model are obtained by minimizing or maximizing Eq. (15)–(18), respectively, by

and

All optimization problems in Eqs. (14), (19)–(22) can be achieved by the Nelder-Mead method in the optim function of R.

4.2 Simulation studies for regression model parameters

This subsection compares the effectiveness of estimators using a Monte Carlo simulation. The performance of the five proposed methods for estimating unknown parameters of the regression model is assessed according to their bias and MSE criteria. The relationships between independent variables are examined in four different scenarios (Table 6). The independent variables are generated from a multivariate normal distribution, and two different correlation matrices are chosen as

Monte Carlo simulation results for the suggested estimators are presented in Tables 7, 8. For \(n = 25,50,100,\) and \(500\) cases, 5000 trials are simulated. It is evident from Tables 7, 8 that for scenarios 1 and 2, the best estimation methods based on the bias criterion are ML, LS, WLS, CvM and MPS methods and, for the MSE criterion, the best estimation methods are ML and MPS. Similarly, for scenarios 3 and 4, it is observed that the best estimation methods based on the bias criterion are ML, LS, WLS, and MPS methods. Similarly, when considering the MSE criterion, the most effective estimation methods are observed to be ML, CvM, and MPS.

5 Practical examples

In this section, three real data analyses are conducted. The first analysis demonstrates the comparison of the UNET distribution with existing distributions. The second real data analysis demonstrates the applicability of the Cpc index. Finally, the third analysis presents the applicability of the recommended RUNET model as an alternative to the beta and Kumaraswamy regression models.

5.1 Real data example

In this subsection, the UNET distribution is compared to the Kumaraswamy (KW) [1], unit-Weibull (UW) [4], unit Burr-XII (UB-XII) [5], unit-Birnbaum (UB) [9], and unit Muth (UM) distributions [28]. The pdf of these distributions is given, respectively, by

UNET distribution

Kumaraswamy distribution

unit Weibull distribution

unit Burr-XII distribution

unit Birnbaum distribution

and unit Muth distribution

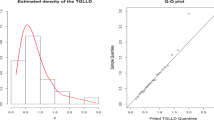

The ML estimates of parameters, estimated log-likelihood value \(\left( {\widehat{\ell }} \right)\), Akaike’s information criteria (AIC), Bayesian information criterion (BIC), consistent AIC (CAIC), Hannan-Quinn information criterion (HQIC), standard error (se) of ML estimate, and Kolmogorov Smirnov (KS) test statistics with related p value are reported in Table 9. The best model can be chosen with a smaller value of the AIC, BIC, CAIC, and HQIC, and larger values of \(\widehat{\ell }\), p values of the KS test. The data set represents the better life index taken from self-reported health (2015) in the link: https://stats.oecd.org/index.aspx?DataSetCode=BLI2015. The data consist of 34 samples. The data are: 0.85, 0.69, 0.74, 0.89, 0.59, 0.6, 0.72, 0.54, 0.65, 0.67, 0.65, 0.74, 0.57, 0.77, 0.82, 0.8, 0.66, 0.3, 0.35, 0.72, 0.66, 0.76, 0.9, 0.76, 0.58, 0.46, 0.66, 0.65, 0.72, 0.81, 0.81, 0.68, 0.74, and 0.88. When the UNET distribution is compared with distributions KW, UW, UB-XII, UB, and UM, it is observed that the UNET distribution has the highest \(\widehat{\ell }\) and KS p values. Additionally, it exhibits the lowest values for AIC, BIC, CAIC, and HQIC criteria. Furthermore, the fit to UNET distribution for better life index data can be observed in Fig. 3 with fitted pdf, cdf, sf, and probability–probability (P–P) plots.

5.2 Real data application for Cpc index

In this subsection, the usability of the Cpc index on the UNET distribution is demonstrated using real data. For some electrical insulating fluid samples exposed to a constant voltage stress of 34 kV (hours), and the dataset represents the failure times. The data are 0.0032, 0.0130, 0.0160, 0.0218, 0.0463, 0.0808, 0.0108, and 0.1225 and can be found in [29]. During this real data analysis for Cpc, ML estimations are used. The \(USL = 0.95\) and \(LSL = 0.6\) are selected, and then the ML estimates, KS test statistic, p value, Cpc, and NS are computed and presented in Table 10.

Based on the findings for the real data set regarding the Cpc index, it is possible to interpret some electrical insulating fluids exposed to 34 kV (hours) of constant voltage stress in the life test experiment as stronger, that is, less sensitive because the NS value is close to zero in absolute value.

5.3 Practical example for regression model

In this subsection, real data are employed to assess the applicability of the new regression model. These real data are accessible in [30] and can be found at https://stats.oecd.org/. The Kumaraswamy regression model (KWR), beta regression model (BR), and log-extended exponential geometric regression model (LEEGR, introduced by [31]) regression models are taken into consideration for comparative analysis. This application aims to correlate the percentage of educational attainment (variable y) in OECD countries with the percentage of voter turnout (variable x1), the murder rate (variable x2), and life satisfaction (variable x3). For four models, ML estimates of parameters, se of ML estimate, log-likelihood values \(\left( {\widehat{\ell }} \right),\) and AIC are computed. The findings are presented in Table 11. From Table 11, it is seen that the RUNET model suggested exhibits superior modeling capabilities compared to all other models from KWR, BR, and LEEGR.

Among the covariate parameters, only \(\gamma_{3}\) is found to be statistically significant at the 5% level in the RUNET regression model. The positive coefficient of \(\gamma_{3}\) indicates that it has a significant effect on increasing the median response.

6 Conclusion

In this study, a new unit distribution and a novel regression model are proposed. Several estimators are investigated to estimate the unknown parameters of both the new distribution and the new regression model. The examination of methods other than ML for estimating the unknown parameters of the regression model enriches the originality of the study. The performance of the estimators is evaluated through a Monte Carlo simulation. According to the simulation for UNET distribution, it is concluded that the generally best estimation methods are ML and MPS. According to the simulation for RUNET model, it is observed that the best estimation method for the bias criterion is ML, while MPS is the best estimator for the MSE criterion. Additionally, the new distribution is explored based on the Cpc index, and its usability in the field of engineering is demonstrated through a real data analysis. Furthermore, the potential of both the new distribution and the proposed regression model is demonstrated through real data applications in comparison to existing models. In the future studies, researchers can be able to use both the new distribution and the regression model to model the datasets. Moreover, interval estimation for unknown parameters of the new distribution and regression model can be addressed for future studies. Furthermore, other process capability indices can be examined based on the UNET distribution.

Data availability

No additional data or materials are available.

References

Kumaraswamy P (1980) A generalized probability density function for double-bounded random processes. J Hydrol 46(1–2):79–88

Mazucheli J, Menezes AFB, Chakraborty S (2019) On the one parameter unit- Lindley distribution and its associated regression model for proportion data. J Appl Stat 46(4):700–714

Lindley DV (1958) Fiducial distributions and Bayes’ theorem. J R Stat Soc Ser B (Methodological) 20:102–107

Mazucheli J, Menezes AFB, Fernandes LB, de Oliveira RP, Ghitany ME (2020) The unit-Weibull distribution as an alternative to the Kumaraswamy distribution for the modeling of quantiles conditional on covariates. J Appl Stat 47(6):954–974

Korkmaz MÇ, Chesneau C (2021) On the unit Burr-XII distribution with the quantile regression modeling and applications. Comput Appl Math 40:29

Gündüz S, Korkmaz MÇ (2020) A new unit distribution based on the unbounded Johnson distribution rule: the unit Johnson SU distribution

Mazucheli J, Menezes AFB, Dey S (2018) Improved maximum-likelihood estimators for the parameters of the unit-gamma distribution. Commun Stat-Theory Methods 47(15):3767–3778

Ghitany M, Mazucheli J, Menezes A, Alqallaf F (2019) The unit-inverse Gaussian distribution: a new alternative to two-parameter distributions on the unit interval. Commun Stat-Theory Methods 48(14):3423–4343

Mazucheli J, Menezes AF, Dey S (2018) The unit-Birnbaum-Saunders distribution with applications. Chilean J Stat 9(1):47–57

Menezes AFB, Mazucheli J, Dey S (2018) The unit-logistic distribution: different methods of estimation. Pesquisa Operacional 38:555–578

Korkmaz MÇ, Korkmaz ZS (2023) The unit log–log distribution: a new unit distribution with alternative quantile regression modeling and educational measurements applications. J Appl Stat 50(4):889–908

Krishna A, Maya R, Chesneau C, Irshad MR (2022) The unit Teissier distribution and its applications. Math Comput Appl 27(1):12

Ferrari S, Cribari-Neto F (2004) Beta regression for modeling rates and proportions. J Appl Stat 31:799815

Mazucheli J, Leiva V, Alves B, Menezes AF (2021) A new quantile regression for modeling bounded data under a unit Birnbaum-Saunders distribution with applications in medicine and politics. Symmetry 13(4):682

Chesneau C (2021) A note on an extreme left skewed unit distribution: theory, modeling, and data fitting. Open Statistics 2(1):1–23

Sağlam Ş, Karakaya K (2022) Unit Burr-Hatke distribution with a new quantile regression model. J Sci Arts 22(3):663–676

Juran JM (1974) Juran’s quality control handbook, 3rd edn. McGraw-Hill, New York

Kane VE (1986) Process capability indices. J Qual Technol 18:41–52

Hsiang TC, Taguchi G (1985) A tutorial on quality control and assurance-the taguchi methods. ASA Annual Meeting, Las Vegas, p 188

Choi BC, Owen DB (1990) A study of a new process capability index. Commun Stat: Theory Methods 19:1232–1245

Chen JP, Ding CG (2001) A new process capability index for non-normal distributions. Int J Qual Reliab Manag 18(7):762–770

Maiti SS, Saha M, Nanda AK (2010) On generalizing process capability indices. J Qual Technol Quant Manag 7(3):279–300

Perakis M, Xekalaki E (2002) A process capability index that is based on the proportion of conformance. J Stat Comput Simul 72(9):707–718

Bakouch HS, Chesneau C, Leao J (2018) A new lifetime model with a periodic hazard rate and an application. J Stat Comput Simul 88(11):2048–2065

Shaked M, Shanthikumar JG (eds) (2007) Stochastic orders. Springer, New York, NY

Saha M, Dey S, Nadarajah S (2022) Parametric inference of the process capability index Cpc for exponentiated exponential distribution. J Appl Stat 49(16):4097–4121

Varadhan R (2014) Numerical optimization in R: beyond optim. J Stat Softw 60:1–3

Maya R, Jodra P, Irshad M, Krishna A (2022) The unit muth distribution: statistical properties and applications. Ricerche di Matematica 1–24

Abd El-Monsef ME, Hassanein WAAEL (2020) Assessing the lifetime performance index for Kumaraswamy distribution under the first-failure progressive censoring scheme for ball bearing revolutions. Qual Reliab Eng Int 36(3):1086–1097

Korkmaz MÇ, Chesneau C, Korkmaz ZS (2021) Transmuted unit rayleigh quantile regression model: alternative to beta and Kumaraswamy quantile regression models. Univ Politeh Buchar Sci Bull Ser Appl Math Phys 83:149–158

Jodra P, Jimenez-Gamero MD (2020) A quantile regression model for bounded responses based on the exponential-geometric distribution. REVSTAT-Stat J 18(4):415–436

Acknowledgements

Kadir Karakaya is the main author of this study. This study is a part of Şule Sağlam’s M.Sc. The thesis entitled “A New Unit Distribution and Real Data Applications” supervised by Asst. Prof. Dr. Kadir Karakaya submitted by Statistics Department, Graduate School of Natural Sciences, Selcuk University, Konya, Turkey. The earlier version of this study is presented III. International Applied Statistics Conference (UYIK 2022) by the same authors.

Funding

Open access funding provided by the Scientific and Technological Research Council of Türkiye (TÜBİTAK).

Author information

Authors and Affiliations

Contributions

All authors contributed equally to this work.

Corresponding author

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Sağlam, Ş., Karakaya, K. An alternative bounded distribution: regression model and applications. J Supercomput 80, 20861–20890 (2024). https://doi.org/10.1007/s11227-024-06233-3

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11227-024-06233-3