Abstract

The trend toward manycore processors makes multithreaded communication more important for avoiding costly global synchronization among cores. One representative approach that requires multithreaded communication is the global task-based programming model. In this model, a program is divided into tasks that each node executes asynchronously, which calls for independent thread-to-thread communication. However, approaches based on the Message Passing Interface (MPI) are inefficient for this purpose because of design issues, such as the serialization of operations issued by multiple threads. In this research, we design and implement the utofu transport layer in Unified Communication X (UCX), an abstracted communication library, for efficient remote direct memory access (RDMA)-based multithreaded communication on Tofu Interconnect D. Evaluation results on Fugaku show that UCX significantly improves multithreaded performance over MPI while maintaining portability between systems. UCX shows about 32.8 times lower latency than Fujitsu MPI with 24 threads in the multithreaded pingpong benchmark, and about 37.8 times higher update rate than Fujitsu MPI with 24 threads on 256 nodes in the multithreaded GUPs benchmark.

1 Introduction

Modern high-performance computing (HPC) systems require higher network performance because of the increased computational capability provided by manycore processors and accelerators such as graphics processing units (GPUs). One recent trend for achieving higher network throughput is to equip each node with multiple network interfaces, because the bandwidth of a single network interface is limited. For example, the Frontier supercomputer [1], operated by Oak Ridge National Laboratory, has four Slingshot network interface cards (NICs) per node to achieve 100 GB/s of bandwidth. Another example is the supercomputer Fugaku [2, 3], operated by the RIKEN Center for Computational Science. Fugaku consists of 158,796 nodes and uses Tofu Interconnect D for inter-node communication. Its network interface has six remote direct memory access (RDMA) engines per node, achieving 40.8 GB/s of injection bandwidth. Although network topologies vary, including fat-tree, dragonfly, and six-dimensional mesh/torus, the effective use of multiple network interfaces is the common key to achieving higher network performance.

1.1 Motivations behind this work

One of our motivations for improving multithreaded communication is our interest in task-based programming approaches that avoid costly global thread synchronization. In the task model, a program is divided into a set of tasks that are executed asynchronously. This interest follows the recent trend toward manycore processors: the Frontier supercomputer uses an AMD EPYC processor with 64 cores, and the supercomputer Fugaku uses a Fujitsu Arm A64FX processor [4] with 48 computational cores. To extend task-based programming to multi-node execution, an efficient thread-to-thread communication framework that exploits multiple network interfaces is required. Because tasks are executed asynchronously, communication techniques such as RDMA are preferable to tag-matching-based approaches. In addition, in massively parallel processing (MPP) systems such as Fugaku, which consists of 158,796 nodes, tag-matching-based communication would require massive buffer resources for eager communication. We therefore consider RDMA communication preferable and expect it to scale better. For our planned distributed task-based programming framework, we mainly target Fugaku, as it is one of the representative MPP systems.

If such communication is implemented using MPI, MPI_THREAD_MULTIPLE support is required. Unfortunately, current implementations of MPI_THREAD_MULTIPLE are relatively inefficient. With MPI, a single endpoint object is shared by multiple threads instead of each thread having its own endpoint. This limits multithreaded performance, because operations invoked from different threads are serialized internally by exclusive controls. MPICH offers the virtual communication interface (VCI) feature to tackle this limitation, but it requires users to add MPICH-specific optimizations, which reduces portability. In addition, the vendor MPI implementation on Fugaku does not support this thread mode. Moreover, tag-matching communication can cause deadlocks because tasks and communications are executed asynchronously [5, 6].

Our solution for better multithreaded performance is to use the one-sided communication of UCX [7, 8]. RDMA communication avoids unnecessary synchronization for message buffer management and allows low-overhead communication on Tofu Interconnect D, which suits the global task programming model. We chose UCX over a native interconnect library because UCX already supports multiple interconnects and is therefore portable between systems. We also chose UCX over MPI because UCX is more lightweight.

In this research, we design and evaluate UCX for Tofu Interconnect D to achieve efficient multithreaded communication performance. Although several methods exist to use multiple network links effectively, such as running multiple MPI processes within a node, this research focuses on multithreaded communication. This paper extends our previous research on UCX for Tofu Interconnect D [9], which presented a preliminary implementation and an evaluation focused on single-thread performance. However, we found that this implementation is not efficient for multithreaded communication, as shown in Sect. 2. In this research, we extend the implementation toward efficient multithreaded performance. Our main contributions beyond the prior research are as follows:

-

We designed and implemented a method that uses multiple network interfaces on manycore-based systems for efficient multithreaded performance, using Tofu Interconnect D as an example

-

We proposed a more explicit hardware resource management design for the UCX transport layer, targeting future network interfaces that expose hardware resource management to users

-

We implemented a memory registration optimization that significantly improves multithreaded communication performance on Tofu Interconnect D over the vendor MPI, without requiring direct use of the utofu library

-

We compared the results on Fugaku with those on InfiniBand-based systems, confirming performance portability between systems

The key highlights of this paper are as follows:

-

The improved UCX design shows 36.7 times lower latency than the prior design in the multithreaded pingpong benchmark, as described in Sect. 5.1

-

The improved UCX design significantly improves multithreaded performance over MPI while maintaining portability, as described in Sect. 4 with the pingpong, message rate, and Giga Updates Per Second (GUPs) benchmarks

-

UCX-based OpenSHMEM also shows about 18.6 times higher performance than MPI on Fugaku, indicating that OpenSHMEM applications can benefit from our work, as described in Sect. 4.3

The rest of this paper is organized as follows. The remainder of this section describes UCX, Tofu Interconnect D, and related work. Section 2 describes the multithreaded performance problem of our previous research. Section 3 presents the current design and implementation for more efficient multithreaded communication, and Sect. 4 evaluates its performance. Section 5 discusses the evaluations and compares them with an InfiniBand-based system. Finally, Sect. 6 concludes this paper.

1.2 Unified Communication X (UCX)

UCX [7, 8, 10] is a modern communication framework developed through a collaboration among industry, laboratories, and academia. In high-performance computing, UCX is used as a lightweight, transparent network abstraction library. For example, Open MPI, one of the most widely used MPI implementations, can use UCX as its underlying communication library. UCX mainly consists of three modules: UC-Transport (UCT), UC-Protocol (UCP), and UC-Services (UCS). UCT abstracts underlying communication libraries such as ibverbs and uGNI, and also supports XPMEM for inter-process communication as well as GPUs. UCP provides higher-level communication APIs implemented on top of UCT. UCS provides utility functions such as data structures and memory pools.

1.2.1 UCX and multithreaded communication

UCP supports two multithreaded communication models. The first is to create a single UCP worker and share it among multiple threads. This is available through UCP's thread-multiple mode, which is similar to MPI's MPI_THREAD_MULTIPLE mode; we call it the single worker model in this paper. It is the simplest to implement, as users only need to create a single UCP worker in thread-multiple mode, and each thread uses it without any additional exclusive control by the user. However, this can cause performance problems because of UCX's internal exclusive controls. For example, when a thread issues a PUT operation through UCP, UCX acquires a lock on the corresponding worker. Therefore, if multiple threads invoke PUT operations on the same UCP worker, those invocations are serialized internally in UCP.

The second method is to create a separate worker for each thread; we call this the multiple worker model in this paper. When each thread in a program has an exclusive worker, multiple PUT invocations can be processed simultaneously. If the underlying communication layer provides multiple operation queues, this method should be more efficient than the former, because those queues can be used in parallel.
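The difference between the two models can be sketched with plain POSIX threads. This is a hypothetical model, not UCX code: `model_worker_t` stands in for a UCP worker in thread-multiple mode whose operations are guarded by a per-worker lock, and `model_put` stands in for a PUT. With a shared worker, every thread contends for the same lock, so PUTs are serialized; with one worker per thread, each lock is only ever taken by its owner.

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>
#include <stdint.h>

#define MODEL_MAX_THREADS 64

/* Hypothetical stand-in for a UCP worker in thread-multiple mode:
   every operation takes the worker's lock, so operations issued
   through the same worker are serialized. */
typedef struct {
    pthread_mutex_t lock;
    uint64_t ops_completed;
} model_worker_t;

/* Models a PUT issued through a worker; the critical section is where a
   real transport would post a descriptor to its hardware queue. */
static void model_put(model_worker_t *w) {
    pthread_mutex_lock(&w->lock);
    w->ops_completed++;
    pthread_mutex_unlock(&w->lock);
}

typedef struct {
    model_worker_t *worker; /* shared or private, depending on the model */
    int nops;
} model_thread_arg_t;

static void *model_thread_main(void *p) {
    model_thread_arg_t *arg = p;
    for (int i = 0; i < arg->nops; i++)
        model_put(arg->worker);
    return NULL;
}

/* Runs nthreads threads that each issue nops PUTs.
   shared != 0: single worker model, every thread uses workers[0].
   shared == 0: multiple worker model, thread t uses workers[t].
   Returns the total number of PUTs recorded by the workers used. */
uint64_t run_model(model_worker_t *workers, int nthreads, int nops, int shared) {
    pthread_t tids[MODEL_MAX_THREADS];
    model_thread_arg_t args[MODEL_MAX_THREADS];
    assert(nthreads > 0 && nthreads <= MODEL_MAX_THREADS);
    int nworkers = shared ? 1 : nthreads;
    for (int w = 0; w < nworkers; w++) {
        pthread_mutex_init(&workers[w].lock, NULL);
        workers[w].ops_completed = 0;
    }
    for (int t = 0; t < nthreads; t++) {
        args[t].worker = shared ? &workers[0] : &workers[t];
        args[t].nops = nops;
        pthread_create(&tids[t], NULL, model_thread_main, &args[t]);
    }
    for (int t = 0; t < nthreads; t++)
        pthread_join(tids[t], NULL);
    uint64_t total = 0;
    for (int w = 0; w < nworkers; w++) {
        total += workers[w].ops_completed;
        pthread_mutex_destroy(&workers[w].lock);
    }
    return total;
}
```

The sketch only shows where the lock sits; it does not measure performance. For example, `run_model(ws, 8, 1000, 1)` funnels all 8000 PUTs through `ws[0]`, whereas `run_model(ws, 8, 1000, 0)` records 1000 PUTs on each of eight private workers.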

1.3 Tofu Interconnect D

Tofu Interconnect D [11] is a six-dimensional mesh/torus network designed for the supercomputer Fugaku [2, 3] and for systems based on the Fujitsu PrimeHPC FX1000 [12]. Users can use the torus network in arbitrary shapes. The network interface is integrated into the Fujitsu A64FX Arm processor. Block diagrams of the A64FX processor and of the tofu interface are shown in Figs. 1 and 2, respectively. A Core Memory Group (CMG) in Fig. 1 is a NUMA node that contains cores and 8 GB of HBM. Tofu Interconnect D is connected to the CPU cores through ring networks. A single tofu interface has six Tofu Network Interfaces (TNIs), each of which is an RDMA engine. Each TNI is connected to a Tofu Network Router (TNR). As shown in Table 1, each TNI has a bandwidth of 6.8 GB/s, so the injection bandwidth of the six TNIs is 6 × 6.8 = 40.8 GB/s.

1.3.1 System architecture

The queue structure is shown in Fig. 3. Each TNI has Control Queues (CQs). A CQ is a hardware queue consisting of three types of queues. The first is the Transmit Order Queue (TOQ), to which each communication request to a remote node is posted. The second is the Transmit Complete Queue (TCQ): a send-completion notification is posted locally to this queue if the UTOFU_ONESIDED_FLAG_TCQ_NOTICE flag is specified when the request is posted to the TOQ. The third is the Message Receive Queue (MRQ), to which the completion notification of an operation such as RDMA READ is posted when a particular flag is specified. For example, if an RDMA READ request is posted to the TOQ with the UTOFU_ONESIDED_FLAG_LOCAL_MRQ_NOTICE flag, the completion notification is posted to the local MRQ. Each CQ is virtualized as a virtual control queue (VCQ), and users access the tofu interface through VCQs.
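The routing of completion notifications among the three queues can be modeled in a few lines of C. The sketch is purely illustrative: the `MODEL_FLAG_*` names and values are invented stand-ins for the roles of UTOFU_ONESIDED_FLAG_TCQ_NOTICE and UTOFU_ONESIDED_FLAG_LOCAL_MRQ_NOTICE, and a request is treated as completing immediately after it is posted.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical model of one CQ; the flag values are invented and do not
   correspond to the real utofu constants. */
enum {
    MODEL_FLAG_TCQ_NOTICE       = 1u << 0, /* post a send-completion notice to the TCQ */
    MODEL_FLAG_LOCAL_MRQ_NOTICE = 1u << 1  /* post an operation-completion notice to the local MRQ */
};

typedef struct {
    int toq_posted;  /* requests posted to the Transmit Order Queue */
    int tcq_notices; /* notices in the Transmit Complete Queue */
    int mrq_notices; /* notices in the local Message Receive Queue */
} model_cq_t;

/* Posts one request to the TOQ and, treating it as completed at once,
   records only the notifications that its flags ask for. */
void model_post(model_cq_t *cq, unsigned flags) {
    assert(cq != NULL);
    cq->toq_posted++;
    if (flags & MODEL_FLAG_TCQ_NOTICE)
        cq->tcq_notices++;
    if (flags & MODEL_FLAG_LOCAL_MRQ_NOTICE)
        cq->mrq_notices++;
}
```

For instance, an RDMA READ posted with `MODEL_FLAG_LOCAL_MRQ_NOTICE` leaves one entry in `mrq_notices` and none in `tcq_notices`, while a request posted with no flags produces no notification at all, so its completion has to be detected some other way, such as by polling the destination buffer.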

The numbers of available CQs and VCQs are shown in Table 1. Users can use up to nine CQs per TNI and create up to eight VCQs per CQ. Users can also create a CQ-exclusive VCQ, that is, a VCQ that is the only VCQ created on a specific CQ. This type of VCQ helps performance because no contention occurs within the CQ. However, if a program uses both Fujitsu MPI and utofu, creating too many CQ-exclusive VCQs degrades the collective communication performance of Fujitsu MPI, which also uses CQ-exclusive VCQs. In this research, we focus on the use of these hardware resources rather than on routing.
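Combining these limits with the six TNIs per node gives the node-wide upper bounds below. The second line is our own derivation, assuming that each CQ hosts exactly one CQ-exclusive VCQ and that no CQs are reserved by other software such as Fujitsu MPI.

```latex
\begin{aligned}
N_{\mathrm{VCQ}}^{\max}  &= N_{\mathrm{TNI}} \times N_{\mathrm{CQ/TNI}} \times N_{\mathrm{VCQ/CQ}} = 6 \times 9 \times 8 = 432,\\
N_{\mathrm{excl}}^{\max} &= N_{\mathrm{TNI}} \times N_{\mathrm{CQ/TNI}} = 6 \times 9 = 54.
\end{aligned}
```

The first figure matches the 432 VCQs mentioned in Sect. 3.1.3.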

1.3.2 utofu library

utofu [13], a library developed by Fujitsu, provides an API to Tofu Interconnect D for user programs. With utofu, users can perform one-sided communication and barrier operations. For one-sided communication, RDMA PUT, RDMA GET, and atomic operations are available. Barrier operations support both synchronization and reduction across multiple nodes; for example, they can be used to implement MPI's MPI_Barrier or MPI_Allreduce operations.

1.4 Related works

Several studies have implemented UCX on different network interfaces. Matthew et al. implemented the uGNI transport layer for the Gemini and Aries networks in UCX and evaluated it with OpenSHMEM-UCX [14]. Nikela et al. conducted a detailed analysis of both the UCP and UCT protocols over InfiniBand [15]. In our research, we implemented a utofu transport layer in UCX to support Fugaku. Because utofu handles virtual memory addresses and the remote keys used for memory protection differently from existing networks such as InfiniBand, we proposed a different approach for utofu. This approach could benefit future interconnects that aim to support UCX.

For utofu support in UCX, we previously conducted a preliminary design and implementation of UCX for Tofu Interconnect D [9]. That work presented a basic design and a single-thread performance evaluation, which showed higher bandwidth and lower latency than MPI for smaller messages. However, we found that multithreaded communication performance with the prior design is limited, as described in Sect. 2. In addition, the prior design lacked memory registration features, which limited performance, as noted in the original work. Therefore, in this work, we focus on improving multithreaded communication performance and on implementing optimizations that remove overhead in the utofu transport layer.

Researchers have studied several approaches to improving multithreaded communication [16,17,18,19]. Pavan et al. studied and pointed out the performance problems of multithreaded communication with MPI [16]. Sridharan et al. proposed an MPI-based approach with an endpoint extension [17]. By preparing an explicit endpoint for each thread, their approach reduces the overhead of exclusive control and therefore improves multithreaded communication performance. Their MPI-based approach appears more portable because many applications are written with MPI, but this feature has not yet been introduced into the MPI standard. UCX-based approaches have also been studied: Aurelien et al. implemented a context mechanism in OpenSHMEM-UCX, in which each context has a separate UCP worker for better multithreaded performance [20]. MPICH's virtual communication interfaces (VCIs), implemented by Zambre et al. [21], are an MPI-based approach to better multithreaded communication performance. VCIs improve multithreaded performance by introducing multiple virtual network interfaces that avoid global exclusive control. However, as pointed out by the same authors, users are required to add hints to the program, and those hints are MPICH-specific [19], which limits portability. For Fugaku, an MPICH for utofu is provided [22]. Unfortunately, that implementation is based on MPICH 3.4 with modifications to MPICH itself, so we could not use VCIs on Fugaku for evaluation.

Optimizing memory registration for RDMA operations has also been studied by several researchers [23, 24].

2 Multithreaded communication with UCX: single worker vs multiple worker

As described in Sect. 1.2.1, UCP supports two execution models for multithreaded communication. This discussion focuses on interconnects that have multiple interfaces and expose hardware resources, such as queue structures, to users through an API. Beyond Tofu Interconnect D, we expect this to be a trend in future interconnects designed for efficient multithreaded communication on manycore processors.

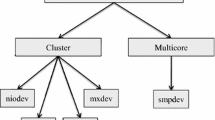

In the single worker model, multiple network resources such as queue structures are largely hidden from users by UCP, which makes programming easier. One possible way to use multiple network resources with the single worker model is to rely on UCP's multi-rail feature, which implicitly uses multiple network interfaces by splitting large messages and sending them through up to four devices. However, this feature does not help Tofu Interconnect D, because its maximum network bandwidth is an aggregate injection bandwidth rather than the theoretical bandwidth between a pair of nodes. In addition, Tofu has six TNIs, whereas multi-rail supports up to four devices. Another possible way is to implement a transport layer that exposes multiple network resources as a single resource. Our preliminary implementation of the utofu transport layer follows this method, as shown in Fig. 4, so users can use the single worker model for easier programming. However, the preliminary implementation did not support the multiple worker model, which limited multithreaded communication performance, as shown at the end of Sect. 4.1.

In the multiple worker model, programming becomes more complicated because users must manage multiple workers themselves. However, this method potentially allows users to manage multiple network resources more explicitly, with less resource contention among threads. For example, one hardware queue can be assigned to each UCP worker. This method exposes more of the underlying layer's structure to users as UCP workers, allowing more explicit hardware resource management than the single worker model. The current utofu transport layer, described in Sect. 3, follows this method: it allows users to have one VCQ per UCP worker, with the VCQs created across the six TNIs. Because each thread has its own UCP worker, threads do not share VCQs, and no resource contention occurs. This type of implementation lets users manage VCQs more explicitly than the single worker model. Because Tofu Interconnect D exposes hardware resources such as TNIs and CQs to users, explicit management benefits multithreaded performance.

3 Design of UCX for the Tofu Interconnect D for multithreaded communication

This section briefly describes the design of UCX for Tofu Interconnect D.

3.1 Basic function mappings between utofu and UCX

3.1.1 RDMA PUT and GET

In our design, we use the utofu_put_piggyback and utofu_put APIs. utofu_put_piggyback is used for messages of up to 32 bytes, because it copies the small message directly into the TOQ descriptor, which eliminates the local memory access and improves PUT latency. For messages larger than 32 bytes, we use the utofu_put API, which requires local memory access.
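The size-based choice between the two APIs can be sketched as follows. The descriptor layout and all names are hypothetical, since the real TOQ descriptor format is defined by the Tofu hardware and utofu; only the 32-byte threshold comes from the text. The point is that a small payload travels inside the descriptor itself, whereas a larger one is referenced through a local steering address and therefore needs a registered source buffer.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Threshold from the text: PUTs of up to 32 bytes use
   utofu_put_piggyback, larger ones use utofu_put. */
#define PIGGYBACK_MAX_BYTES 32

typedef enum { PATH_PIGGYBACK, PATH_PUT } put_path_t;

/* Hypothetical TOQ descriptor, for illustration only. */
typedef struct {
    put_path_t path;
    size_t length;
    uint8_t inline_data[PIGGYBACK_MAX_BYTES]; /* payload carried in the descriptor */
    uint64_t local_stadd;                     /* registered source buffer (put path) */
    uint64_t remote_stadd;                    /* destination on the remote node */
} model_toq_desc_t;

/* Fills a descriptor for a PUT of `length` bytes. Small payloads are
   copied into the descriptor, so the NIC does not need to read local
   memory; larger payloads are referenced by their local steering address. */
void build_put_desc(model_toq_desc_t *d, const void *src, size_t length,
                    uint64_t local_stadd, uint64_t remote_stadd) {
    assert(d != NULL && (length == 0 || src != NULL));
    memset(d, 0, sizeof(*d));
    d->length = length;
    d->remote_stadd = remote_stadd;
    if (length <= PIGGYBACK_MAX_BYTES) {
        d->path = PATH_PIGGYBACK;
        if (length > 0)
            memcpy(d->inline_data, src, length);
    } else {
        d->path = PATH_PUT;
        d->local_stadd = local_stadd;
    }
}
```

Because the piggyback path never refers to a local steering address, it is unaffected by local memory registration, which is consistent with the unchanged latency for messages of up to 32 bytes reported in Sect. 4.1.2.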

For the RDMA GET operation, we utilize the utofu_get function.

3.1.2 Handling of the steering address

To communicate using utofu's RDMA APIs, users need to register a virtual memory region with the tofu interface and obtain the corresponding steering address using the utofu_reg_mem API, as shown in Code 1. The API takes a VCQ, a virtual memory address, a buffer size, and flags (such as read-only) as input, and returns the corresponding steering address. The tofu interface uses the steering address, instead of the virtual memory address, to identify a memory region. When the same virtual memory is registered to multiple VCQs that belong to different CQs, the steering addresses differ even though the virtual address is the same. Since UCX handles virtual memory addresses and not steering addresses, this difference must be addressed to support utofu in UCX. Because utofu requires a steering address but not the remote keys ordinarily used for memory protection, the steering address can be used as the remote key in UCX. Since remote keys are exchanged with remote nodes before RDMA operations, using the steering address as the remote key bridges the addressing gap between utofu and UCX.
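The consequence of per-CQ steering addresses can be illustrated with a small mock. `mock_reg_mem` is a hypothetical stand-in for utofu_reg_mem that gives each CQ its own address range; real steering addresses are assigned by the tofu interface and need not follow this pattern. `mock_va_to_stadd` also assumes, as the buffered copy optimization in Sect. 3.3.1 does, that addresses inside a registered region map linearly onto steering addresses.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define MOCK_MAX_CQS  8
#define MOCK_MAX_REGS 16

typedef uint64_t stadd_t;

typedef struct {
    uintptr_t va;  /* registered virtual address */
    size_t len;    /* registered length in bytes */
    stadd_t stadd; /* steering address of va on this CQ */
} mock_reg_t;

typedef struct {
    mock_reg_t regs[MOCK_MAX_REGS];
    int nregs;
    stadd_t next;  /* next unused steering address on this CQ */
} mock_cq_t;

static mock_cq_t mock_cqs[MOCK_MAX_CQS];

/* Hypothetical stand-in for utofu_reg_mem: registers [addr, addr + len)
   on CQ `cq` and stores its steering address in *out. Each CQ hands out
   addresses from its own range, so the same buffer gets a different
   steering address on each CQ. Returns 0 on success, -1 on error. */
int mock_reg_mem(int cq, const void *addr, size_t len, stadd_t *out) {
    assert(out != NULL);
    if (cq < 0 || cq >= MOCK_MAX_CQS)
        return -1;
    mock_cq_t *c = &mock_cqs[cq];
    if (c->nregs == MOCK_MAX_REGS)
        return -1;
    if (c->next == 0)
        c->next = (stadd_t)(cq + 1) << 32; /* disjoint range per CQ */
    mock_reg_t *r = &c->regs[c->nregs++];
    r->va = (uintptr_t)addr;
    r->len = len;
    r->stadd = c->next;
    c->next += len;
    *out = r->stadd;
    return 0;
}

/* Translates a virtual address inside a region registered on CQ `cq`
   into its steering address; returns 0 if no such region exists. */
stadd_t mock_va_to_stadd(int cq, const void *addr) {
    if (cq < 0 || cq >= MOCK_MAX_CQS)
        return 0;
    uintptr_t va = (uintptr_t)addr;
    const mock_cq_t *c = &mock_cqs[cq];
    for (int i = 0; i < c->nregs; i++) {
        const mock_reg_t *r = &c->regs[i];
        if (va >= r->va && va - r->va < r->len)
            return r->stadd + (stadd_t)(va - r->va);
    }
    return 0;
}
```

Registering one buffer on CQ 0 and CQ 1 yields two different values; in the utofu transport layer, values like these are what would travel in the remote key, and a peer would need the one that matches the CQ it targets.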

Code 1: “Example code of utofu”

Fig. 4: Overview of previous design [9], single worker model

3.1.3 Memory domain

UCX's architecture fits the InfiniBand verbs API naturally, because the request queue and the memory domain are separate. In the utofu API, however, the memory domain and the queue are not separate: every memory registration and RDMA operation requires a VCQ. Therefore, the method used in the InfiniBand transport layer cannot be applied to the utofu transport layer.

To solve this problem, we designed the utofu transport layer shown in Fig. 5. In this design, the memory domain owns several VCQs and is shared by the interface and endpoint objects. When memory registration is performed, the memory domain is referenced directly and registers the memory through a VCQ. When an RDMA operation such as ucp_put_nbx is called, it reaches the endpoint object, which refers to the memory domain object through the interface object. This design may reduce flexibility, because the number of available VCQs is fixed when the memory domain is initialized. Up to 432 VCQs can be created to increase flexibility; however, each memory registration must then be performed on all of the queues, and according to the utofu API specification [13], too many memory registrations may degrade performance.
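The object relationships in this design can be summarized with a few hypothetical structures. The names are illustrative and not taken from the utofu transport layer source, and the steering-address values are placeholders. The sketch shows the two consequences discussed above: an endpoint reaches its VCQ only through the interface and the memory domain, and a single registration touches every VCQ owned by the memory domain.

```c
#include <assert.h>
#include <stdint.h>

#define MD_MAX_VCQS 24

typedef struct {
    int id;
    int nregs;          /* registrations performed on this VCQ */
} model_vcq_t;

/* The memory domain owns the VCQs; their number is fixed at init time. */
typedef struct {
    model_vcq_t vcqs[MD_MAX_VCQS];
    int nvcqs;
} model_md_t;

typedef struct { model_md_t *md; int vcq_index; } model_iface_t;
typedef struct { model_iface_t *iface; } model_ep_t;

/* Registration handle: one steering address per VCQ of the md. */
typedef struct {
    const void *addr;
    uint64_t stadd[MD_MAX_VCQS];
    int n;
} model_memh_t;

void model_md_init(model_md_t *md, int nvcqs) {
    assert(nvcqs > 0 && nvcqs <= MD_MAX_VCQS);
    md->nvcqs = nvcqs;
    for (int i = 0; i < nvcqs; i++) {
        md->vcqs[i].id = i;
        md->vcqs[i].nregs = 0;
    }
}

/* Registers one buffer on every VCQ of the memory domain, because VCQs
   on different CQs may return different steering addresses. */
void model_md_mem_reg(model_md_t *md, const void *addr, model_memh_t *memh) {
    memh->addr = addr;
    memh->n = md->nvcqs;
    for (int i = 0; i < md->nvcqs; i++) {
        md->vcqs[i].nregs++;
        memh->stadd[i] = (uint64_t)(i + 1) << 32; /* placeholder value */
    }
}

/* An RDMA call starts at the endpoint and reaches its VCQ through the
   interface and then the memory domain. */
model_vcq_t *model_ep_vcq(const model_ep_t *ep) {
    return &ep->iface->md->vcqs[ep->iface->vcq_index];
}
```

With 24 VCQs in the memory domain, one call to `model_md_mem_reg` performs 24 registrations, which is why creating many more VCQs (up to 432) multiplies the registration cost.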

3.2 Handling of VCQs for multithreaded execution with multiple worker model

The relationship between VCQs and UCP workers is shown in Fig. 5. Each UCP worker has at least one CQ-exclusive VCQ to maximize concurrent RDMA performance. As described in Sect. 1.2.1, if multiple threads share a single worker, their operations are serialized. To achieve the best multithreaded communication performance, each thread should therefore have an exclusive worker.
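One possible placement of CQ-exclusive VCQs onto workers is sketched below. The round-robin order (spreading consecutive workers across TNIs first) is an assumption for illustration; the text only states that one worker uses 1 TNI and 1 CQ, that 24 workers use 6 TNIs and 4 CQs, and that at most 24 CQ-exclusive VCQs are created.

```c
#include <assert.h>
#include <stddef.h>

#define NUM_TNIS      6
#define CQS_PER_TNI   9  /* hardware limit per TNI (Table 1) */
#define MAX_EXCL_VCQS 24 /* cap used by the utofu transport layer */

_Static_assert(MAX_EXCL_VCQS <= NUM_TNIS * CQS_PER_TNI,
               "the cap must not exceed the available CQs");

typedef struct {
    int tni; /* which of the six TNIs */
    int cq;  /* CQ index on that TNI */
} vcq_placement_t;

/* Hypothetical policy: worker w gets a CQ-exclusive VCQ on TNI (w % 6),
   using CQ slot (w / 6) on that TNI. Returns 0 on success, -1 if the
   worker index exceeds the 24-VCQ cap. */
int place_worker(int worker, vcq_placement_t *out) {
    assert(out != NULL);
    if (worker < 0 || worker >= MAX_EXCL_VCQS)
        return -1;
    out->tni = worker % NUM_TNIS;
    out->cq = worker / NUM_TNIS;
    return 0;
}
```

Under this policy, workers 0 to 23 cover all six TNIs with four CQs each, matching the 6 TNI and 4 CQ configuration evaluated in Sect. 4, and a single worker lands on one TNI and one CQ.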

Because UCX currently limits the remote key length to 255 bytes, and we use the 8-byte steering address as the remote key, the utofu transport layer creates up to 24 CQ-exclusive VCQs. In UCX, the memory domain is associated with UCX's context object, not with the endpoint object. Moreover, registering the same virtual memory to VCQs on different CQs yields different steering addresses, as described in Sect. 3.1.2. Therefore, all steering addresses for a given virtual memory address across the multiple VCQs must be packed into a single remote key. This restriction would be lifted if each memory domain had a single VCQ and users created multiple contexts; however, users would then need to manage multiple UCX contexts explicitly, making programming more complex. Alternatively, the limitation could be removed by increasing the maximum remote key size in UCX.
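The packing constraint can be checked with a short sketch. The layout below (one count byte followed by one 8-byte steering address per VCQ) is a hypothetical format, not the actual remote key format of the utofu transport layer; it shows that 24 steering addresses occupy 1 + 24 × 8 = 193 bytes, which fits within the 255-byte limit stated above.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define RKEY_MAX_BYTES 255 /* UCX remote key limit, as stated in the text */
#define MAX_EXCL_VCQS  24  /* CQ-exclusive VCQs per memory domain */
#define STADD_BYTES    8   /* size of one steering address */

/* Hypothetical layout: [count][stadd 0][stadd 1]...[stadd count-1] */
_Static_assert(1 + MAX_EXCL_VCQS * STADD_BYTES <= RKEY_MAX_BYTES,
               "24 steering addresses must fit in one remote key");

/* Packs n steering addresses into buf. Values are copied in host byte
   order, assuming both peers share the same architecture.
   Returns the number of bytes written, or -1 if they do not fit. */
int rkey_pack(const uint64_t *stadds, int n, uint8_t *buf, size_t buflen) {
    assert(buf != NULL && (n <= 0 || stadds != NULL));
    if (n < 0 || n > MAX_EXCL_VCQS)
        return -1;
    size_t need = 1 + (size_t)n * STADD_BYTES;
    if (need > buflen)
        return -1;
    buf[0] = (uint8_t)n;
    if (n > 0)
        memcpy(buf + 1, stadds, (size_t)n * STADD_BYTES);
    return (int)need;
}

/* Extracts the steering address registered on VCQ idx from a packed
   remote key; returns 0 if idx is out of range. */
uint64_t rkey_stadd(const uint8_t *buf, int idx) {
    uint64_t s;
    if (idx < 0 || idx >= buf[0])
        return 0;
    memcpy(&s, buf + 1 + (size_t)idx * STADD_BYTES, sizeof s);
    return s;
}
```

A peer that targets VCQ i would then extract entry i with `rkey_stadd`.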

3.3 Optimization of memory registration

This section describes how the utofu transport layer optimizes memory registration with the tofu interface for RDMA operations. The purpose of this optimization is to reduce the number of memory registrations with the tofu interface. With utofu, most communication APIs require steering addresses for both local and remote buffers, unlike InfiniBand, which uses virtual addresses directly. Since UCX has no feature for using this type of interface-specific address, we implemented a steering address management system in the utofu transport layer.

One straightforward method is to register the local buffer with the interface before each RDMA operation and deregister it after the operation completes. With this method, however, the registration and deregistration costs are always included in the RDMA operation. Instead, we implemented the optimizations described below to reduce these costs.

3.3.1 Buffered copy operation

For the buffered copy implementation, we use the memory pool feature provided by UCS. The memory pool allocates a large buffer and divides it into chunks of a specified size. To reduce memory registrations, we register the entire buffer of the pool once and keep the head address of the pool and its steering address.

When an RDMA operation is called with the buffered copy mechanism, the runtime takes a chunk from the pool, along with the head address of the entire pool buffer and its steering address. The runtime then calculates the chunk's steering address from the offset between the pool head address and the chunk address, instead of registering and deregistering on every invocation. This method avoids registering many buffers with the interface, which could cause performance degradation.
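The offset calculation amounts to a single addition. In this sketch, `reg_pool_t` and `chunk_stadd` are hypothetical names, and, as the description above implies, steering addresses within a registered region are assumed to be contiguous.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical record kept for a registered UCS memory pool: the whole
   pool buffer is registered once, so only its head address and the
   steering address of that head are stored. */
typedef struct {
    uintptr_t head;      /* head virtual address of the pool buffer */
    uint64_t head_stadd; /* steering address obtained for the head */
    size_t size;         /* total pool size in bytes */
} reg_pool_t;

/* Derives the steering address of a chunk from its offset within the
   pool, with no per-chunk registration. Returns 0 on success, -1 if
   the chunk lies outside the registered pool. */
int chunk_stadd(const reg_pool_t *pool, const void *chunk, uint64_t *out) {
    assert(pool != NULL && out != NULL);
    uintptr_t c = (uintptr_t)chunk;
    if (c < pool->head || c - pool->head >= pool->size)
        return -1;
    *out = pool->head_stadd + (uint64_t)(c - pool->head);
    return 0;
}
```

The bounds check matters in practice: a buffer that did not come from the registered pool has no valid steering address under this scheme and must be handled by a different path.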

3.3.2 Zero copy operation

To optimize the zero-copy operation, which directly uses a user-specified buffer as the source or destination, we designed a method to manage registered buffers and their corresponding Tofu steering addresses. This feature is implemented with Khash, a hash map data structure in the UCS layer that was originally implemented in klib [25]. We prepare as many hash tables as there are VCQs. The key of each hash map is the address of a buffer registered with the tofu interface through the corresponding VCQ, and the value is the steering address.

When an RDMA operation is invoked, the utofu transport layer checks whether the memory is already registered and managed. If so, it reuses the corresponding steering address. Otherwise, it registers the memory and records the buffer address and its steering address. Because too many memory registrations could degrade performance, this feature is optional and can be enabled through environment variables.
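The registration cache can be sketched as one small hash table per VCQ, keyed by buffer address. This sketch substitutes a minimal open-addressing table for UCS's Khash, and `mock_register` is a hypothetical placeholder for utofu_reg_mem that only counts calls; the table sizes are arbitrary.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define NUM_VCQS   4
#define TABLE_SIZE 64  /* power of two; no resizing in this sketch */

typedef struct {
    uintptr_t key;   /* registered buffer address */
    uint64_t stadd;  /* steering address for that buffer on this VCQ */
    int used;
} slot_t;

typedef struct { slot_t slots[TABLE_SIZE]; } table_t;

static table_t tables[NUM_VCQS]; /* one table per VCQ */
static int registrations;        /* number of (mock) registrations performed */

/* Placeholder for registering a buffer through a VCQ; the returned
   value is arbitrary but differs between VCQs. */
static uint64_t mock_register(int vcq, uintptr_t addr) {
    registrations++;
    return ((uint64_t)(vcq + 1) << 48) ^ (uint64_t)addr;
}

static size_t hash_addr(uintptr_t a) {
    return (size_t)(((uint64_t)a >> 4) * 0x9E3779B97F4A7C15ull) & (TABLE_SIZE - 1);
}

/* Looks up the steering address of buf on the given VCQ, registering the
   buffer only on first use. Returns 0 on success, -1 on a bad VCQ index
   or a full table. */
int get_stadd(int vcq, const void *buf, uint64_t *out) {
    assert(out != NULL);
    if (vcq < 0 || vcq >= NUM_VCQS)
        return -1;
    uintptr_t addr = (uintptr_t)buf;
    table_t *t = &tables[vcq];
    size_t i = hash_addr(addr);
    for (int probe = 0; probe < TABLE_SIZE; probe++) {
        slot_t *s = &t->slots[i];
        if (s->used && s->key == addr) {  /* hit: reuse the steering address */
            *out = s->stadd;
            return 0;
        }
        if (!s->used) {                   /* miss: register once and remember */
            s->used = 1;
            s->key = addr;
            s->stadd = mock_register(vcq, addr);
            *out = s->stadd;
            return 0;
        }
        i = (i + 1) & (TABLE_SIZE - 1);   /* linear probing */
    }
    return -1;
}
```

Like the description above, the sketch keys entries on the buffer address alone. A production cache would also need to account for buffer length and to drop entries when memory is freed, since a later allocation may reuse the same address; this is a general concern for registration caches rather than something stated in the text.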

4 Performance evaluation

In this section, we conduct various benchmarks to evaluate the multithreaded performance of UCX for Tofu Interconnect D and compare it with utofu and MPI implementations on Fugaku. The hardware and software specifications of a node are shown in Table 2.

4.1 Multithreaded pingpong benchmark

To evaluate and compare multithreaded communication performance, we run a pingpong benchmark with MPI, UCX, and utofu implementations.

4.1.1 Experimental setup

The flow of the pingpong benchmark and the relationship between communication threads and UCP workers are shown in Figs. 6 and 7. We execute the benchmark program once for each combination of workers and threads and calculate the aggregate bandwidth and average latency. Because an entire rack of nodes is allocated, we expect little interference from other workloads. Completion of the receive operation was detected by polling the last element of the data.

For the MPI implementations, we use Fujitsu MPI and MPICH for Tofu Interconnect D [22]. This MPICH uses libfabric with utofu support and is based on MPICH 3.4, with some modifications to MPICH itself. Because only the MPICH 3.4-based implementation is provided, we did not use a newer MPICH such as MPICH 4. For Fujitsu MPI, we use MPI_THREAD_SERIALIZED mode because Fujitsu MPI does not support MPI_THREAD_MULTIPLE mode, and we serialize RDMA invocations with a pthread mutex. For MPICH, we use MPI_THREAD_MULTIPLE mode.

For the UCX implementation, we use 1 and 24 UCP workers, and each UCP worker has an exclusive utofu VCQ. With one worker, UCX uses only 1 TNI and 1 CQ. With 24 workers, UCX uses 6 TNIs and 4 CQs (four CQs on each of the six TNIs). In addition, we run UCX both with and without the memory registration optimization.

For the utofu implementation, we prepare two versions. The first relies on the utofu library's thread-safe mode, and the second uses a pthread lock when flushing a VCQ. The latter is similar to UCX, which applies exclusive control to each worker when thread-multiple mode is specified. As with UCX, we use 1 and 24 VCQs. When multiple threads use the same VCQ, neighboring threads are assigned to the same VCQ, and we set OMP_PROC_BIND=close to pin threads to cores contiguously.
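One way to realize this binding is to assign threads to VCQs in contiguous blocks, so that threads pinned to neighboring cores by OMP_PROC_BIND=close share a VCQ. The function below is a hypothetical sketch of that mapping, assuming the thread count is a multiple of the VCQ count, as in the 48-thread, 24-VCQ configuration.

```c
#include <assert.h>

/* Hypothetical contiguous-block mapping: with 48 threads and 24 VCQs,
   threads 0-1 share VCQ 0, threads 2-3 share VCQ 1, and so on, so that
   neighboring threads (pinned to neighboring cores with
   OMP_PROC_BIND=close) use the same VCQ. Assumes nthreads is a
   multiple of nvcqs. */
int vcq_for_thread(int tid, int nthreads, int nvcqs) {
    assert(nvcqs > 0 && nthreads >= nvcqs && nthreads % nvcqs == 0);
    assert(tid >= 0 && tid < nthreads);
    int threads_per_vcq = nthreads / nvcqs;
    return tid / threads_per_vcq;
}
```

A block mapping is preferable to a modulo mapping (`tid % nvcqs`) here, because a modulo mapping would make distant threads share a VCQ, which is the opposite of the neighbor-sharing arrangement described above.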

4.1.2 Execution result

For the MPI implementations, both Fujitsu MPI and MPICH show lower aggregate bandwidth and higher latency than UCX, as shown in Figs. 8b and 9b. For MPICH, this is because all operations are serialized internally by exclusive controls. Comparing UCX and Fujitsu MPI for a 4-byte message with 24 threads, the latency of UCX with 6 TNIs and 4 CQs is about 1.7 µs, whereas that of Fujitsu MPI is about 56.0 µs, an improvement of about 32.8 times for UCX.

Regarding the relationship between performance and the number of VCQs, both UCX and utofu show higher bandwidth (Fig. 8a and b) and lower latency (Fig. 9a and b) as the number of VCQs increases. utofu exhibits slightly higher bandwidth and lower latency than UCX. For example, as shown in Fig. 9b, with 24 threads using 24 VCQs, the 32-byte latency of utofu without the intentional pthread lock is approximately 1.3 µs, whereas that of UCX with the optimization is about 1.7 µs.

Another notable observation is the significant latency improvement due to the memory registration optimization in the utofu transport layer. With one thread using 1 CQ and 1 TNI, the latency of a 64-byte message decreases from 1.4 to 1.2 µs with the optimization, as shown in Fig. 9a. Similarly, with 48 threads, 6 TNIs, and 4 CQs, the 64-byte latency decreases from 10.9 to 3.5 µs, as shown in Fig. 9b. Note that performance for messages of up to 32 bytes is unchanged, because UCX uses the utofu_put_piggyback API for short messages, which requires no local memory registration.

For comparison with our previous design [9], we also run the pingpong benchmark with the single worker model shown in Fig. 4. With the previous single worker design, the aggregate bandwidth does not increase with more threads, as shown in Fig. 10, and latency increases with more threads, as shown in Fig. 11. This is because all operations are serialized in the UCP layer, so the underlying communication capabilities of Tofu Interconnect D cannot be exploited.

Fig. 10: Pingpong aggregated bandwidth with previous single worker design [9]: bandwidth decreases with more threads

Fig. 11: Pingpong latency with previous single worker design [9]: latency increases with more threads

4.2 Multithreaded message rate

To evaluate the message rate with multithreaded communication, we conduct a performance evaluation with UCX, utofu, Fujitsu MPI with MPI_THREAD_SERIALIZED, and MPICH with MPI_THREAD_MULTIPLE.

4.2.1 Experimental setup

The experimental setup is the same as for the pingpong benchmark described in Sect. 4.1.1. We execute the benchmark program once for each combination of workers and threads and calculate the aggregate message rate from the execution time of the measured section, which excludes initialization such as endpoint creation. For UCX, we measure the message rate by repeatedly calling UCX's nonblocking immediate API, such as ucp_put_nbi, and then flushing to complete the operations.

For the RDMA GET benchmarks, because of a current limitation of our UCX implementation, the benchmark program flushes after every 200 requests per worker. This limitation comes from the callback implementation for GET operations in the utofu transport layer, because the utofu API allows only 1 byte of callback data for completing a receive operation. We believe that issuing hundreds of RDMA GET operations to a particular node without flushing is an extreme situation, so this limitation should not be a critical problem for most applications.
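The reason for the periodic flush can be modeled as follows. We assume, without confirmation from the text, that the 1-byte callback data serves as a request identifier, which would allow at most 256 GETs to be distinguished at once; under that assumption, flushing every 200 requests keeps the number of outstanding GETs safely below the bound. All names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical model: if a completion carries only 1 byte of callback
   data, at most 256 in-flight GETs can be told apart. Flushing every
   FLUSH_INTERVAL requests (200, as in the benchmark) keeps the number
   of outstanding GETs below that bound. CB_ID_SPACE is an assumption. */
#define CB_ID_SPACE    256
#define FLUSH_INTERVAL 200

_Static_assert(FLUSH_INTERVAL < CB_ID_SPACE,
               "outstanding GETs must fit in 1-byte ids");

typedef struct {
    int outstanding;     /* GETs posted but not yet completed */
    int max_outstanding; /* high-water mark of outstanding GETs */
    int flushes;         /* number of flush calls */
} get_stream_t;

/* Stand-in for a worker flush: waits until all posted GETs complete. */
static void model_flush(get_stream_t *s) {
    s->outstanding = 0;
    s->flushes++;
}

/* Issues n nonblocking GETs, flushing after every FLUSH_INTERVAL
   requests and once more at the end if anything is still pending. */
void issue_gets(get_stream_t *s, int n) {
    assert(s != NULL && n >= 0);
    for (int i = 0; i < n; i++) {
        s->outstanding++;
        if (s->outstanding > s->max_outstanding)
            s->max_outstanding = s->outstanding;
        if (s->outstanding == FLUSH_INTERVAL)
            model_flush(s);
    }
    if (s->outstanding > 0)
        model_flush(s);
}
```

Issuing 1000 GETs in this model triggers five flushes and never has more than 200 GETs in flight; each extra flush is a synchronization point, which is one plausible source of the GET message-rate gap discussed in Sect. 4.2.2.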

4.2.2 Execution result

For PUT operations with one worker or VCQ, as shown in Fig. 12a, UCX zcopy with memory registration optimization matches or slightly trails utofu for message sizes up to 32 bytes. For larger messages, however, the gap widens until performance is limited by the theoretical bandwidth. This is due to additional processing overhead in the utofu transport layer of UCX, such as memory pooling and callback handling. Notably, with 6 TNIs and 4 CQs, the gap between UCX and utofu for messages of 32 bytes or less is larger with 48 threads than with 24 threads, as shown in Fig. 12b, because of the giant lock on UCP workers observed earlier.

The zcopy memory registration optimization in the utofu transport layer also makes a notable difference, significantly improving the message rate. With 6 TNIs, 4 CQs, and 24 threads (Fig. 12b), the 64-byte message rate with the optimization is about 23.5 million messages per second, whereas without it the rate is only about 0.28 million. For both MPI implementations, the message rate does not improve as the number of threads increases. The maximum message rate of MPICH with 4-byte messages is about 0.29 million messages per second, obtained with a single thread. This is about 107 times lower than UCX zcopy with memory registration optimization using 6 TNIs and 4 CQs with 24 threads, which reaches about 31.0 million messages per second.
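The ratios quoted above follow directly from the reported rates; a quick check using only the numbers in the text:

```python
# Message rates in million messages per second, as reported above.
ucx_opt_64b = 23.5    # UCX zcopy with registration optimization, 6 TNIs x 4 CQs, 24 threads
ucx_noopt_64b = 0.28  # same configuration without the optimization
ucx_opt_4b = 31.0     # UCX zcopy with optimization, 4-byte messages, 24 threads
mpich_4b = 0.29       # best MPICH rate, 4-byte messages, one thread

registration_gain = ucx_opt_64b / ucx_noopt_64b
ucx_over_mpich = ucx_opt_4b / mpich_4b

print(f"registration optimization: {registration_gain:.1f}x")
print(f"UCX over MPICH: {ucx_over_mpich:.1f}x")
```

The registration optimization alone accounts for a factor of roughly 84 at 64 bytes, and UCX exceeds the best MPICH rate by roughly 107 times.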

For GET operations, utofu achieves more than twice the message rate of UCX zcopy with optimization when a VCQ or UCP worker is not shared among threads, as shown in Fig. 13b. Specifically, with 6 TNIs, 4 CQs, 4-byte messages, and 24 threads, UCX zcopy achieves about 6.3 million messages per second, whereas utofu achieves about 14.7 million. As with PUT, this difference is partly due to UCX’s additional processing for its callback mechanism; the limitation described in Sect. 4.2.1 also affects UCX’s performance. Notably, the memory registration optimization in the utofu transport layer significantly improves GET message rates. For instance, in Fig. 13b, the 4-byte message rate with 6 TNIs, 4 CQs, and 24 threads reaches approximately 6.3 million with the optimization, compared to only 2.8 million without it.
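The same arithmetic for the GET results, again using only the values reported in the text:

```python
# GET message rates in million messages per second: 4-byte messages,
# 6 TNIs and 4 CQs, 24 threads, as reported above.
utofu_get = 14.7
ucx_get_opt = 6.3    # UCX zcopy with registration optimization
ucx_get_noopt = 2.8  # UCX zcopy without the optimization

print(f"utofu / UCX (optimized): {utofu_get / ucx_get_opt:.2f}")
print(f"UCX optimized / unoptimized: {ucx_get_opt / ucx_get_noopt:.2f}")
```

utofu is about 2.3 times faster than optimized UCX, and the optimization itself improves UCX by about 2.25 times.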

4.3 Multithreaded giga updates per second (GUPs) benchmark

We also use the GUPs benchmark to evaluate multithreaded random-access performance on Fugaku.

4.3.1 Experimental setup

We used the GUPs_threaded program from the Oak Ridge OpenSHMEM Benchmark [26, 27] as the original GUPs source code and modified it to implement utofu, UCX, and MPI versions (with Fujitsu MPI and MPICH). In addition, to evaluate OSSS-UCX [28], an OpenSHMEM implementation with UCX, we modified the original program to use up to 24 contexts and added exclusive control so that contexts can be shared by multiple threads. This is because each context has one UCP worker, and the current utofu transport layer allows at most 24 workers due to the restrictions discussed in Sect. 5.2. We also modified the original OSSS-UCX implementation to use Fujitsu MPI’s barrier API instead of hcoll’s API, because hcoll is not optimized for Tofu Interconnect D.
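Sharing at most 24 contexts among more threads under exclusive control can be modeled as a lock-protected pool. This is a sketch only: `ContextPool` and its round-robin assignment are hypothetical, OpenSHMEM contexts are not modeled, and the actual modification was made in C to GUPs_threaded and OSSS-UCX.

```python
import threading
from contextlib import contextmanager

MAX_CONTEXTS = 24  # one UCP worker per context; the transport allows up to 24


class ContextPool:
    """Hypothetical pool: thread i uses context i % n under that context's lock."""

    def __init__(self, n_contexts: int):
        if not 1 <= n_contexts <= MAX_CONTEXTS:
            raise ValueError("number of contexts must be in 1..24")
        self.locks = [threading.Lock() for _ in range(n_contexts)]
        self.ops = [0] * n_contexts

    @contextmanager
    def acquire(self, thread_id: int):
        idx = thread_id % len(self.locks)
        with self.locks[idx]:  # exclusive use while the thread communicates
            yield idx

    def update(self, thread_id: int) -> None:
        with self.acquire(thread_id) as idx:
            self.ops[idx] += 1  # stands in for a put/get on the context


pool = ContextPool(24)


def worker_thread(tid: int) -> None:
    for _ in range(100):
        pool.update(tid)


threads = [threading.Thread(target=worker_thread, args=(t,)) for t in range(48)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(pool.ops[:3])  # [200, 200, 200]
```

With 48 threads, every context is shared by two threads, so each context's lock is contended; this is the situation analyzed for UCP workers in Sect. 5.2.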

The parameters and software used for this benchmark are shown in Table 3. We run the benchmark program once for each combination of workers and threads and compute the update rate from the execution time of the measurement section, which excludes initialization such as creating the endpoint objects. Because one rack of nodes is allocated, we expect little interference from other workloads. Performance is measured by repeatedly retrieving 8-byte values from randomly selected nodes and positions and updating them with RDMA GET and PUT operations. For the evaluation, we set the variable TotalMem to 20,000,000. We allocate 288 nodes in the shape of \(4\times 9\times 8\) in torus mode and use from 32 to 256 of them for the evaluation. Note that performance may depend on the node shape, because the network topology is a six-dimensional mesh/torus.
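The random-access kernel can be summarized with a single-process Python model: each update picks a random node and table position, reads the 8-byte word (the RDMA GET), modifies it, and writes it back (the RDMA PUT); the update rate is the number of updates divided by the time of the measurement section. The XOR update and the in-memory `tables` list standing in for remote nodes are assumptions borrowed from the classic RandomAccess kernel; the function `gups` is hypothetical.

```python
import random
import time


def gups(n_nodes: int, words_per_node: int, n_updates: int, seed: int = 1):
    rng = random.Random(seed)
    # Initialization, outside the measurement section.
    tables = [[0] * words_per_node for _ in range(n_nodes)]

    start = time.perf_counter()
    for _ in range(n_updates):
        node = rng.randrange(n_nodes)         # randomly selected node
        pos = rng.randrange(words_per_node)   # randomly selected position
        value = tables[node][pos]             # stands in for the RDMA GET
        value ^= rng.getrandbits(64)          # assumed XOR update of a 64-bit word
        tables[node][pos] = value             # stands in for the RDMA PUT
    elapsed = time.perf_counter() - start

    return tables, n_updates / elapsed / 1e9  # update rate in billions per second


tables, rate = gups(n_nodes=4, words_per_node=16, n_updates=1000)
```

Each update is a dependent GET followed by a PUT to an unpredictable node, so the benchmark stresses small-message latency and message rate rather than bandwidth.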

4.3.2 Execution result

As in the previous evaluations, the performance of both MPI versions does not improve as the number of threads increases. From Fig. 14c, the update rate of Fujitsu MPI on 256 nodes is about 0.019 billion with 48 threads. With 24 threads on 256 nodes, UCX zcopy with optimization shows about 37.8 times higher update rate than Fujitsu MPI: the update rate of UCX zcopy with optimization is about 0.85 billion, and that of Fujitsu MPI is about 0.02 billion.

Comparing utofu and UCX with 24 VCQs or UCP workers in Fig. 14c, utofu shows about 20% higher update rate than UCX when a VCQ or UCP worker is not shared by threads. However, when two threads share a VCQ or worker, as with 48 threads on 256 nodes, the update rate of UCX zcopy with optimization decreases from about 0.85 to 0.61 billion, while that of utofu increases from 1.04 to 1.38 billion, so utofu becomes 2.26 times faster than UCX. As the number of VCQs increases, both show higher update rates, as shown in Fig. 14a–c, unless two or more threads share the same worker in UCX.

For OSSS-UCX, as seen in Fig. 14c, the update rate with 24 threads using 24 contexts on 256 nodes is approximately 18.6 times higher than that of Fujitsu MPI, but around 2.034 times lower than that of UCX. One possible reason is OSSS-UCX’s additional processing steps, such as remote key resolution, since OSSS-UCX is implemented on top of the UCP APIs.
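The GUPs ratios above can be reproduced from the reported update rates and speedups. The UCX/OSSS-UCX ratio is obtained by dividing the two speedups over Fujitsu MPI; small differences from the quoted figures presumably come from rounding of the reported inputs.

```python
# Update rates in billion updates per second on 256 nodes, as reported above.
ucx_24t, ucx_48t = 0.85, 0.61      # UCX zcopy with optimization
utofu_24t, utofu_48t = 1.04, 1.38  # utofu

# Speedups over Fujitsu MPI reported in the text (24 threads, 256 nodes).
ucx_vs_mpi, osss_vs_mpi = 37.8, 18.6

print(f"utofu / UCX, 24 threads: {utofu_24t / ucx_24t:.2f}")      # 1.22
print(f"utofu / UCX, 48 threads: {utofu_48t / ucx_48t:.2f}")      # 2.26
print(f"UCX / OSSS-UCX:          {ucx_vs_mpi / osss_vs_mpi:.2f}")  # 2.03
```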

5 Discussion

5.1 Performance improvement over previous single worker model approach

This section compares the performance of the previous single worker model implementation with that of the current multiple worker model implementation, which are shown in Sects. 2 and 4.1. With the previous implementation, the latency of a 4-byte message with 24 and 48 threads is about 28.6 µs and 83.1 µs, respectively. With the current implementation using 6 TNIs, 4 CQs, and zcopy optimization, the corresponding latencies are about 1.7 µs and 2.3 µs, an improvement of about 16.7 times and 36.7 times, respectively. These improvements come from adopting the multiple worker model, which reduces global exclusive control. Although the performance of the current implementation with 48 threads is not ideal because two threads share the same worker, the multiple worker model provides significantly better multithreaded communication performance than the single worker model.

5.2 UCX performance using 24 workers with 48 threads on Fugaku

Through several evaluations on Fugaku, we found that the performance of 48 threads using 24 UCP workers was worse than that of 24 threads. This is because two threads share a UCP worker when 48 threads run on 24 workers. As described before, the UCP protocol takes a giant lock on the worker for every operation, and the locked region includes processing such as callbacks and memory pool operations, which limits performance. Because this trend is also observed on InfiniBand-based systems, as described in Sect. 5.3, it is better to assign an exclusive UCP worker to each thread for higher multithreaded communication performance.
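The sharing that appears at 48 threads follows from simple counting. This sketch assumes that the 24-worker limit corresponds to one CQ-exclusive VCQ on each of the 6 TNIs × 4 CQs used in the evaluation; `threads_per_worker` is a hypothetical helper, and the round-robin mapping is an assumption.

```python
import math

TNIS = 6         # Tofu network interfaces per node
CQS_PER_TNI = 4  # CQs used per TNI in the evaluated configuration
MAX_WORKERS = TNIS * CQS_PER_TNI  # assumed: one CQ-exclusive VCQ per worker


def threads_per_worker(n_threads: int, n_workers: int = MAX_WORKERS) -> int:
    """Threads sharing the busiest worker under a round-robin mapping."""
    return math.ceil(n_threads / n_workers)


for n in (12, 24, 48):
    print(f"{n} threads -> {threads_per_worker(n)} thread(s) per worker")
```

Up to 24 threads, each thread can own a worker; at 48 threads, every worker is shared by two threads and its giant lock becomes contended.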

One possible solution for the utofu transport layer is to increase UCP’s maximum remote key length so that 48 CQ-exclusive VCQs, and therefore 48 UCP workers, can be supported. However, this could cause performance problems if users combine MPI collective operations with UCX one-sided operations, because fewer CQ-exclusive VCQs would remain available for MPI operations. Implementing collective communication on top of the existing UCP workers would be one workaround, but optimizing it for the six-dimensional mesh/torus network would be difficult, and utofu’s collective communication features, such as reductions and barriers, could not be used in this way.

Another solution is to create two VCQs per CQ, as shown in Fig. 15. If users register memory with two VCQs created on the same CQ, the corresponding steering address is the same. This is a more flexible solution than the first method. However, the current utofu library does not appear to provide a way to create a VCQ on a specific CQ; users can create either a VCQ exclusive to a CQ or a shared VCQ without specifying a CQ number. Using multiple UCP contexts with only one VCQ per context would also be possible, but it requires more complex programming, because users must manage contexts explicitly, and it consumes more memory.
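The appeal of two VCQs per CQ can be shown with a counting model: if the steering address depends only on the CQ, doubling the VCQs does not add steering addresses that a remote key would have to carry. This is a hypothetical illustration of that reasoning only; `steering_address` is not a utofu function, and the assumption that remote key length scales with the number of distinct steering addresses is inferred from the discussion above.

```python
TNIS, CQS_PER_TNI = 6, 4


def steering_address(tni: int, cq: int) -> tuple:
    """Hypothetical: a VCQ's steering address depends only on its (TNI, CQ)."""
    return (tni, cq)


def rkey_entries(vcqs_per_cq: int) -> int:
    """Count distinct steering addresses a remote key would need to carry."""
    addresses = {
        steering_address(tni, cq)
        for tni in range(TNIS)
        for cq in range(CQS_PER_TNI)
        for _ in range(vcqs_per_cq)  # extra VCQs on one CQ add no new address
    }
    return len(addresses)


for per_cq in (1, 2):
    n_vcqs = TNIS * CQS_PER_TNI * per_cq
    print(f"{n_vcqs} VCQs -> {rkey_entries(per_cq)} distinct steering addresses")
```

Under these assumptions, 48 VCQs (enough for 48 workers) need no more remote key entries than 24.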

5.3 Comparison with InfiniBand

We use two nodes of the Pre-PACS-X (PPX) system operated by the Center for Computational Sciences at the University of Tsukuba. The system is summarized in Table 4. Each node has two Xeon E5-2660 v4 CPUs, two NVIDIA P100 GPUs, and one Mellanox ConnectX-4 EDR InfiniBand card. We use only socket 0, to which the InfiniBand card is connected via the PCIe bus.

We evaluate UCX and three MPI implementations: Open MPI, MPICH, and MVAPICH, all configured to use UCX as the underlying communication layer. For MPI, we use the MPI_THREAD_MULTIPLE mode. For InfiniBand, we use the dynamically connected (DC) transport by setting the environment variable UCX_TLS to dc_x.

5.3.1 Multithreaded pingpong

The performance trend with an increasing number of threads is similar to that of the Fugaku pingpong evaluation. As the number of UCP workers increases, the aggregated bandwidth increases, as shown in Fig. 16a and b, and the latency decreases, as shown in Fig. 17a and b.

All MPI implementations show similar trends. Because their latency increases with more threads, these MPI implementations appear to use only a single UCP worker.

5.3.2 Multithreaded message rate

The multithreaded message rates of PUT and GET operations are shown in Figs. 18 and 19. As in the Fugaku evaluation, the message rate of UCX increases with more UCP workers, whereas that of MPI does not increase with more threads. For UCX, the message rate decreases when more threads share the UCP workers, again as in the Fugaku evaluation. For example, in Fig. 18a, the 4-byte PUT message rate with one thread using one UCP worker is about 3.9 million messages per second, while that with four threads is about 2.4 million.

5.4 UCX and multithreaded performance

To achieve better multithreaded communication performance with the UCP protocol of UCX, users need to use multiple UCP workers, as shown in this research. This is due to how the UCP protocol implements the thread multiple mode: it takes a giant lock on the worker for each operation. However, using multiple workers requires users to modify their programs. If the UCP protocol supported fine-grained locking instead of giant locking, so that it could exploit more of the parallelism of the underlying layers, such as multiple queues, multithreaded performance with a single UCP worker might improve.

Although the best multithreaded performance would still be achieved with multiple UCP workers, such fine-grained locking would let users benefit without modifying their applications. Similarly, if users use the UCT protocol directly and the transport layer ensures thread safety through fine-grained locking, they might get better multithreaded performance with a single UCT worker. However, most users are expected to use the UCP protocol rather than the UCT protocol.
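The difference between a worker-wide (giant) lock and fine-grained locking can be sketched with two hypothetical worker classes. In `GiantLockWorker`, every operation takes one lock; in `FineGrainedWorker`, each operation locks only the queue it uses, so threads mapped to different queues never contend. Neither class reflects actual UCP or UCT internals; the number of queues and the thread-to-queue mapping are assumptions.

```python
import threading


class GiantLockWorker:
    """Every operation serializes on one worker-wide lock."""

    def __init__(self, n_queues: int):
        self.lock = threading.Lock()
        self.queues = [0] * n_queues

    def post(self, thread_id: int) -> None:
        with self.lock:
            self.queues[thread_id % len(self.queues)] += 1


class FineGrainedWorker:
    """Each queue has its own lock; operations on different queues never contend."""

    def __init__(self, n_queues: int):
        self.locks = [threading.Lock() for _ in range(n_queues)]
        self.queues = [0] * n_queues

    def post(self, thread_id: int) -> None:
        q = thread_id % len(self.queues)
        with self.locks[q]:
            self.queues[q] += 1


def drive(worker, n_threads: int, ops: int = 500) -> list:
    def body(tid: int) -> None:
        for _ in range(ops):
            worker.post(tid)

    ts = [threading.Thread(target=body, args=(t,)) for t in range(n_threads)]
    for t in ts:
        t.start()
    for t in ts:
        t.join()
    return worker.queues
```

Both variants produce the same per-queue results, so the choice affects only contention: with the fine-grained variant, a single shared worker could exploit multiple hardware queues without changes to application code.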

6 Conclusion

In this research, we designed and implemented a method to efficiently utilize multiple network interfaces on manycore-based systems for efficient multithreaded communication, using Tofu Interconnect D as an example. Our method gives users more explicit control over hardware resources, which should help when implementing additional UCX transport layers for future network interfaces with multiple network links, since multithreaded communication performance is an essential factor for achieving good performance, especially on manycore processors. To implement the utofu transport layer, we designed and implemented two main features: support for multiple UCP workers and memory registration optimizations.

Our transport-layer design for effective multithreaded communication can also be applied to future interconnect networks that expose multiple hardware resources to users through their APIs. In the multithreaded pingpong benchmark, the latency of UCX using 6 TNIs and 4 CQs with 24 threads is about 32.8 times lower than that of the vendor-provided Fujitsu MPI. In the GUPs benchmark, UCX also improves the update rate over MPICH for Tofu Interconnect D, which supports the MPI_THREAD_MULTIPLE mode: with 6 TNIs, 4 CQs, and 24 threads on 256 nodes, UCX achieves about 42 times the update rate of MPICH. These results show that UCX for Tofu Interconnect D can be an alternative for users who need better multithreaded performance on Fugaku and other Tofu Interconnect D-based systems, while maintaining portability between systems. The comparison of UCX and utofu shows room for improvement, especially with 48-thread execution, for which we discussed possible solutions.

Data availability

Data will be available on request.

Change history

13 August 2024

A Correction to this paper has been published: https://doi.org/10.1007/s11227-024-06421-1

References

[1] Oak Ridge National Laboratory: Frontier. https://www.olcf.ornl.gov/frontier. [Online; accessed 23 July 2023]

[2] Sato M, Ishikawa Y, Tomita H, Kodama Y, Odajima T, Tsuji M, Yashiro H, Aoki M, Shida N, Miyoshi I, et al. (2020) Co-design for A64FX manycore processor and “Fugaku”. In: SC20: International Conference for High Performance Computing, Networking, Storage and Analysis, pp 1–15. IEEE

[3] RIKEN Center for Computational Science: About Fugaku. https://www.r-ccs.riken.jp/en/fugaku/about/. [Online; accessed 23 July 2023]

[4] Fujitsu global: FUJITSU Processor A64FX. https://www.fujitsu.com/global/products/computing/servers/supercomputer/a64fx/. [Online; accessed 23 July 2023]

[5] Sala K, Bellón J, Farré P, Teruel X, Perez JM, Peña AJ, Holmes D, Beltran V, Labarta J (2018) Improving the interoperability between MPI and task-based programming models. In: Proceedings of the 25th European MPI Users’ Group Meeting (EuroMPI ’18). Association for Computing Machinery, New York, NY, USA. https://doi.org/10.1145/3236367.3236382

[6] Sala K, Teruel X, Perez JM, Peña AJ, Beltran V, Labarta J (2019) Integrating blocking and non-blocking MPI primitives with task-based programming models. Parallel Comput 85:153–166. https://doi.org/10.1016/j.parco.2018.12.008

[7] Shamis P, Venkata MG, Lopez MG, Baker MB, Hernandez O, Itigin Y, Dubman M, Shainer G, Graham RL, Liss L, et al. (2015) UCX: an open source framework for HPC network APIs and beyond. In: 2015 IEEE 23rd Annual Symposium on High-Performance Interconnects, pp 40–43. IEEE

[8] GitHub openucx/ucx. https://github.com/openucx/ucx. [Online; accessed 23 July 2023]

[9] Watanabe Y, Sato M, Tsuji M, Murai H, Boku T (2022) Design and performance evaluation of UCX for Tofu-D interconnect with OpenSHMEM-UCX on Fugaku. In: 2022 IEEE/ACM Parallel Applications Workshop: Alternatives to MPI+X (PAW-ATM), pp 52–61. IEEE

[10] The Unified Communication X Library. http://www.openucx.org. [Online; accessed 23 July 2023]

[11] Ajima Y, Kawashima T, Okamoto T, Shida N, Hirai K, Shimizu T, Hiramoto S, Ikeda Y, Yoshikawa T, Uchida K, et al. (2018) The Tofu interconnect D. In: 2018 IEEE International Conference on Cluster Computing (CLUSTER), pp 646–654. IEEE

[12] Fujitsu global: FUJITSU Supercomputer PRIMEHPC. https://openucx.org/introduction/. [Online; accessed 23 July 2023]

[13] Fujitsu: Technical Computing Suite V4.0L20 Development Studio uTofu User’s Guide. https://software.fujitsu.com/jp/manual/manualfiles/m210007/j2ul2482/02enz003/j2ul-2482-02enz0.pdf. [Online; accessed 23 July 2023]

[14] Baker M, Aderholdt F, Venkata MG, Shamis P (2016) OpenSHMEM-UCX: evaluation of UCX for implementing OpenSHMEM programming model. In: OpenSHMEM and Related Technologies. Enhancing OpenSHMEM for Hybrid Environments: Third Workshop, OpenSHMEM 2016, Baltimore, MD, USA, August 2–4, 2016, Revised Selected Papers 3, pp 114–130. Springer

[15] Papadopoulou N, Oden L, Balaji P (2017) A performance study of UCX over InfiniBand. In: 2017 17th IEEE/ACM International Symposium on Cluster, Cloud and Grid Computing (CCGRID), pp 345–354. IEEE

[16] Balaji P, Buntinas D, Goodell D, Gropp W, Thakur R (2010) Fine-grained multithreading support for hybrid threaded MPI programming. Int J High Perform Comput Appl 24(1):49–57

[17] Sridharan S, Dinan J, Kalamkar DD (2014) Enabling efficient multithreaded MPI communication through a library-based implementation of MPI endpoints. In: SC’14: Proceedings of the International Conference for High Performance Computing, Networking, Storage and Analysis, pp 487–498. IEEE

[18] Patinyasakdikul T, Eberius D, Bosilca G, Hjelm N (2019) Give MPI threading a fair chance: a study of multithreaded MPI designs. In: 2019 IEEE International Conference on Cluster Computing (CLUSTER), pp 1–11. IEEE

[19] Zambre R, Chandramowlishwaran A (2022) Lessons learned on MPI+threads communication. In: SC22: International Conference for High Performance Computing, Networking, Storage and Analysis, pp 1–16. IEEE

[20] Bouteiller A, Pophale S, Boehm S, Baker MB, Venkata MG (2018) Evaluating contexts in OpenSHMEM-X reference implementation. In: OpenSHMEM and Related Technologies. Big Compute and Big Data Convergence: 4th Workshop, OpenSHMEM 2017, Annapolis, MD, USA, August 7–9, 2017, Revised Selected Papers 4, pp 50–62. Springer

[21] Zambre R, Chandramowlishwaran A, Balaji P (2020) How I learned to stop worrying about user-visible endpoints and love MPI. In: Proceedings of the 34th ACM International Conference on Supercomputing (ICS ’20). Association for Computing Machinery, New York, NY, USA. https://doi.org/10.1145/3392717.3392773

[22] RIKEN Center for Computational Science: An overview of RIKEN MPI (MPICH-Tofu). https://www.r-ccs.riken.jp/wp/wp-content/uploads/2021/01/MPICH-Tofu.pdf. [Online; accessed 23 July 2023]

[23] Bell C, Bonachea D (2003) A new DMA registration strategy for pinning-based high performance networks. In: Proceedings International Parallel and Distributed Processing Symposium, p 10. IEEE

[24] Ou L, He X, Han J (2009) An efficient design for fast memory registration in RDMA. J Netw Comput Appl 32(3):642–651

[25] Klib: a generic library in C. https://attractivechaos.github.io/klib/. [Online; accessed 23 March 2024]

[26] Naughton T, Aderholdt F, Baker M, Pophale S, Gorentla Venkata M, Imam N (2019) Oak Ridge OpenSHMEM benchmark suite. In: OpenSHMEM and Related Technologies. OpenSHMEM in the Era of Extreme Heterogeneity: 5th Workshop, OpenSHMEM 2018, Baltimore, MD, USA, August 21–23, 2018, Revised Selected Papers 5, pp 202–216. Springer

[27] GitHub ornl-languages/osb. https://github.com/ornl-languages/osb. [Online; accessed 23 July 2023]

[28] Baker M, Aderholdt F, Venkata MG, Shamis P (2016) OpenSHMEM-UCX: evaluation of UCX for implementing OpenSHMEM programming model. In: OpenSHMEM and Related Technologies. Enhancing OpenSHMEM for Hybrid Environments: Third Workshop, OpenSHMEM 2016, Baltimore, MD, USA, August 2–4, 2016, Revised Selected Papers 3, pp 114–130. Springer

Acknowledgements

This research used the computational resources of the supercomputer Fugaku provided by the RIKEN Center for Computational Science and Pre-PACS-X system provided by the Center for Computational Sciences, University of Tsukuba. This study was supported by JSPS KAKENHI Grant Number 21H04869. The authors would like to thank Dr. Tony Curtis at Stony Brook University and Dr. Steve Poole at Los Alamos National Laboratory for the valuable discussion about implementing the utofu transport layer for UCX.

Funding

Not applicable.

Author information

Contributions

All authors contributed to the study conception and design. Software design, implementation, and evaluation were performed by Y.W. and supervised by M.S. and T.B. Discussions and analysis were performed by all authors.

Ethics declarations

Conflict of interest

Not applicable.

Ethical approval

Not applicable.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The original online version of this article was revised: the unit “million” was mistakenly used in Sect. 4.3.2; the correct unit is “billion”. A line of the Acknowledgements section was missing from this article and should have read ‘This study was supported by JSPS KAKENHI Grant Number 21H04869’.

The original article has been corrected.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Watanabe, Y., Tsuji, M., Murai, H. et al. Design and performance evaluation of UCX for the Tofu Interconnect D on Fugaku towards efficient multithreaded communication. J Supercomput 80, 20715–20742 (2024). https://doi.org/10.1007/s11227-024-06201-x

DOI: https://doi.org/10.1007/s11227-024-06201-x