Abstract

In recent years, free energy perturbation calculations have garnered increasing attention as tools to support drug discovery. The lead optimization mapper (Lomap) was proposed as an algorithm to calculate the relative free energy between ligands efficiently. However, Lomap requires checking whether each edge in the FEP graph is removable, which necessitates checking the constraints for all edges. Consequently, conventional Lomap requires significant computation time, at least several hours for cases involving hundreds of compounds, and is impractical for cases with more than tens of thousands of edges. In this study, we aimed to reduce the computational cost of Lomap to enable the construction of FEP graphs for hundreds of compounds. We can reduce the overall number of constraint checks required from an amount dependent on the number of edges to one dependent on the number of nodes by using the chunk check process to check the constraints for as many edges as possible simultaneously. Based on the analysis of the execution profiles, we also improved the speed of cycle constraint and diameter constraint checks. Moreover, the output graph is the same as that obtained using the conventional Lomap, enabling direct replacement of the original one with our method. With our improvement, the execution was hundreds of times faster than that of the original Lomap.

1 Introduction

In the early stages of drug discovery, hit-to-lead optimization is conducted to identify lead compounds with sufficient affinity and desirable pharmacological properties from hit compounds that bind weakly to the target receptor [1]. The alchemical free energy perturbation (FEP) calculation [2,3,4] is a popular tool for lead optimization because it uses a perturbation-based approach to calculate the exact relative binding free energies between a candidate compound and its target receptor from molecular dynamics simulations [5, 6].

Closed thermodynamic cycles are crucial for minimizing errors in the relative binding free energy calculations [7,8,9]. The sum of the relative free energies of the thermodynamically closed paths is theoretically zero. The error in a closed cycle is called the cycle closure error or hysteresis [7]. Cycle closure methods, which reduce errors based on closed cycles, can improve the prediction accuracy of perturbation calculations. Therefore, to guarantee the accuracy of the FEP calculations, the FEP graph must include as many closed cycles as computational resources allow. Figure 1 illustrates an example FEP graph of a TYK2 target benchmark set by Wang et al. [2] generated with Lomap [7].

Here, we introduce the types of FEP graphs and their generation methods. First, the most common FEP graph is the star graph, which connects all compounds to a single reference compound. No particular graph construction algorithm is required, and for \(N\) compounds the perturbation calculation is performed only \(N-1\) times. However, the accuracy of the FEP calculation cannot be guaranteed because the star graph contains no closed thermodynamic cycle. PyAutoFEP [10], an open-source tool for automating FEP calculations, provides an implementation that builds wheel-shaped and optimal graphs using ant colony optimization.

DiffNet [8] provides a theoretical paradigm for optimizing the covariance matrix for errors. HiMap [9] also provides a statistically optimal design based on optimization of the covariance matrix, similar to DiffNet.

Lomap [7] is an automated planning algorithm for calculating relative free energy. Lomap calculates the scores between compound pairs based on several design criteria for proper relative free energy calculations, and FEP graphs are constructed based on these scores. Here, Lomap constructs an FEP graph based on the criterion that all molecules must be part of at least one closed cycle. However, Lomap was designed to generate FEP graphs for only a few dozen compounds, whereas current applications must consider hundreds of FEP calculations.

Recently, active learning [11,12,13], a machine learning approach for planning experiments for lead optimization, has been applied to relative binding free energy calculations [14,15,16]. Active learning involves hundreds or thousands of extensive FEP calculations. However, conventional Lomap calculations incur a substantial computational cost for FEP graphs of several hundred compounds. Therefore, applying the Lomap graph directly to active learning cases requires significant effort. Conventional active learning methods with FEP only attempt to perform star-shaped FEP calculations without closed cycles [15, 16] or perform more detailed calculations for a limited number of compounds [14]. Therefore, simplifying the introduction of FEP cycles, even for hundreds of compounds, is imperative to support FEP calculations by active learning.

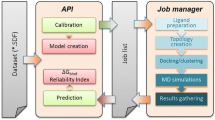

The main reason why Lomap calculations require a considerable amount of time is that the constraints are checked for every edge in the initial graph. Figure 2 displays the execution profile of generating an FEP graph for 500 nodes with the Lomap algorithm. A significant portion of the execution time of the build_graph function, shown in the first line of Fig. 2, was consumed by the check_constraints function, shown in the fourth line of the same figure.

In this paper, we propose an improved Lomap algorithm that introduces a chunk check process in which edges are processed collectively as much as possible, so that the number of constraint checks depends on the number of nodes rather than on the number of edges. We also improved the calculation of graph diameters and bridges. The proposed method can construct FEP graphs several hundred times faster for hundreds of compounds. Furthermore, the output FEP graph is the same as that of the original Lomap.

We published a preliminary version of this work in conference proceedings [17], which included limited experiments and discussion. In this paper, we propose a new mechanism for streamlining constraint checks and add it to the implementation to further speed up Lomap. We also add accompanying experiments that significantly extend the results and discussion.

2 Methods

2.1 Lomap algorithm

Lomap is available as an open-source implementation using RDKit (https://github.com/OpenFreeEnergy/Lomap), and this study builds on and refines it. In this section, we describe the algorithm used in the original Lomap. Lomap generates an undirected graph that determines the compound pairs for which relative binding free energy calculations will be conducted. To obtain valid results in FEP calculations, the target compound pairs must be sufficiently similar to each other. Therefore, the FEP graph uses the link score, an index based on the similarity between two compounds, to evaluate whether a compound pair is valid for FEP calculation. The link score is calculated based on the maximum common substructure (MCS) of a compound pair using the following equation:

\[ S = \exp \left\{ -\beta \left( N_A + N_B - 2N_{\rm MCS} \right) \right\} \]

where \(\beta\) is a parameter, and \(N_A\), \(N_B\) and \(N_{\rm MCS}\) are the numbers of heavy atoms in the two input compounds and their MCS, respectively. The quantity \(N_A + N_B - 2N_{\rm MCS}\) is the number of insertions and deletions required for the perturbation. The score is defined between 0 and 1, with a maximum of 1 when the two compounds are the same. The score also includes a correction that takes a product of other scores calculated based on whether the pair is valid for the perturbation calculation, such as having the same net charge and preserving the rings as much as possible. A crucial aspect of graph generation is that edges with low link scores should be removed except when they favorably impact a closed cycle. Computing the link scores among all the compounds is required to generate an FEP graph using the Lomap algorithm. In this study, the score calculation for each compound pair was not subject to acceleration because it can be parallelized. In addition, the score calculation is excluded from the execution time measurement because the scores are calculated in advance.
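As a minimal sketch of the score described above, assuming the exponential form \(\exp\{-\beta(N_A+N_B-2N_{\rm MCS})\}\) of the original Lomap [7]: the function name `link_score` and the default \(\beta=0.1\) are illustrative assumptions, and the correction factors for net charge and ring preservation are omitted.

```python
import math


def link_score(n_a: int, n_b: int, n_mcs: int, beta: float = 0.1) -> float:
    """Similarity score in (0, 1]; equals 1 when both compounds coincide with the MCS."""
    if n_mcs > min(n_a, n_b):
        raise ValueError("the MCS cannot be larger than either compound")
    changed_atoms = n_a + n_b - 2 * n_mcs  # insertions plus deletions
    return math.exp(-beta * changed_atoms)


# Identical compounds give the maximum score; each changed heavy atom lowers it.
print(link_score(20, 20, 20))
print(link_score(20, 22, 18))
```

With these assumed values, a pair that differs by six heavy atoms scores below a pair that differs by two, which is the ordering the edge removal relies on.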

Lomap’s FEP graph generation is explained as follows:

1. Compute a scoring matrix for all compound-to-compound pairs.

2. The initial graph should consist of only the edges of pairs whose link scores are above the threshold. The initial graph may be partitioned into multiple subgraphs.

3. The edge removal process is executed for each connected component of the initial graph. First, sort the edges in the subgraph in order of descending link score.

4. Begin with the edge having the lowest link score and assess whether the constraints are met upon its removal. If the constraints are satisfied, remove the edge; otherwise, leave it in place. The four constraints are as follows (shown in Fig. 3):

   - (a) Connectivity constraints: The subgraph is connected.

   - (b) Cycle constraints: The number of nodes not included in the cycles does not change. This constraint can be checked by confirming that the number of bridges in the subgraph does not change.

   - (c) Diameter constraints: The diameter of the subgraph is less than or equal to the threshold MAXDIST.

   - (d) Distance constraints (if necessary): The distance from the known active ligand is less than or equal to the threshold.

5. Connect each connected component.

Fig. 3 Constraints for Lomap edge deletion decisions. (a) Removing an edge disconnects node 4. (b) Removing an edge leaves node 4 outside every cycle. (c) Removing an edge increases the distance between node 4 and node 3 to 2, so the constraint is not satisfied (when MAXDIST=1). (d) Removing an edge increases the distance from node 2 (known active ligand) to node 4 to 2, so the constraint is not satisfied (for threshold=1).

Here, Process (4) requires the most time when the graph is large. This is because Process (4) checks every edge in the subgraph to determine whether it can be removed, and each check recalculates the graph diameter and the bridges. If the number of nodes in the graph is N and the number of edges is M, the computational complexity of the diameter is O(MN), and that of the bridges is \(O(M+N)\). Because the graph changes each time an edge is removed, these quantities must be recalculated for each of the M candidate edges, so the overall computational complexity becomes at least \(O(M^2N)\). The most significant factor in this computational complexity is that all edges are checked individually to determine whether they can be removed. Moreover, the Lomap algorithm removes edges in order from the worst score, and this order must be preserved. Therefore, the graph-generation phase cannot be computed in parallel.
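The one-by-one procedure analyzed above can be sketched as follows. This is a minimal illustration rather than the Lomap implementation: the graph is a plain adjacency dictionary, the names `bfs_dist`, `constraints_ok` and `prune_sequential` are hypothetical, and only the connectivity and diameter constraints are checked (the cycle and distance constraints are left out for brevity). Every edge triggers one full constraint check, which is where the cost comes from.

```python
from collections import deque


def bfs_dist(adj, src):
    """Hop distances from src to every node reachable from it."""
    dist = {src: 0}
    queue = deque([src])
    while queue:
        u = queue.popleft()
        for v in adj[u]:
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return dist


def constraints_ok(adj, max_dist):
    """Connectivity and diameter constraints only (simplifying assumption)."""
    for src in adj:
        dist = bfs_dist(adj, src)
        if len(dist) < len(adj) or max(dist.values()) > max_dist:
            return False
    return True


def prune_sequential(adj, scored_edges, max_dist):
    """Try to remove edges one at a time, worst link score first; return the number of checks."""
    checks = 0
    for _, u, v in sorted(scored_edges):  # ascending score
        adj[u].discard(v)
        adj[v].discard(u)
        checks += 1
        if not constraints_ok(adj, max_dist):
            adj[u].add(v)  # the edge is unremovable: restore it
            adj[v].add(u)
    return checks


# Toy input: four fully connected compounds with made-up link scores.
scored_edges = [(0.9, 0, 1), (0.8, 1, 2), (0.7, 2, 3),
                (0.6, 0, 3), (0.5, 0, 2), (0.45, 1, 3)]
adj = {n: set() for n in range(4)}
for _, u, v in scored_edges:
    adj[u].add(v)
    adj[v].add(u)
checks = prune_sequential(adj, scored_edges, max_dist=2)
remaining = sorted((u, v) for u in adj for v in adj[u] if u < v)
print(checks, remaining)
```

In this toy case the two lowest-scoring edges are removed and the remaining four form a single cycle, after one constraint check per edge.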

2.2 Chunk check process

In this section, we propose FastLomap, which introduces an algorithm called the chunk check process that streamlines the Lomap algorithm. If the edges are not pruned with a link score threshold, the number of edges in the initial graph is proportional to the square of the number of nodes, and the ratio of edges that do not satisfy the constraints (i.e., unremovable edges) is inversely proportional to the number of nodes. In other words, the larger the number of nodes, the greater the proportion of edges that can be removed. Moreover, unremovable edges are unevenly distributed toward the end of the checking process. Figure 4 illustrates this tendency during the edge removal process. This is because numerous redundant edges exist at the outset of the process, so the constraints are easily satisfied after an edge is removed. However, as the procedure progresses, the conditions become more stringent, making edge removal more difficult. Therefore, in the blank areas that contain no unremovable edges, as many edges as possible should be removed in a single check, whereas edges need to be checked individually in areas with a concentration of unremovable edges.
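The inverse proportionality can be made concrete with a rough count. Assume, purely for illustration, that the final FEP graph retains about \(cN\) edges for some constant \(c\) (this constant is not taken from the experiments). Without threshold pruning, the initial graph has \(M=N(N-1)/2\) edges, so the fraction of unremovable edges is approximately

\[ \frac{cN}{N(N-1)/2} = \frac{2c}{N-1}, \]

which decreases as \(1/N\). Under this assumption, doubling the number of compounds roughly halves the share of edges that must be kept.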

Given these observations, we propose a chunk check process. Figure 5 provides an overview of the chunk check process.

First, let the chunk scale \(s\) be a parameter that determines the size of the chunks, let the chunk level \(k\) be the depth of the layer of the chunk process, and let \(C_{k}\) be a chunk at chunk level \(k\). In addition, for the number of edges \(M\), \(k_{\max}=\lfloor \log _{s}M \rfloor\) represents the maximum chunk level, indicating the number of times that chunk processing can be executed recursively. The chunk size at the initial level \(k=0\) is \(S_0=s^{k_{\max}}\). For example, when \(M=5100\) and \(s=10\), \(k_{\max}=3\) and \(S_0=s^{k_{\max}}=10^3\). The algorithmic procedure for the chunk check process is as follows:

1. Check the constraints of step (2) for every \(S_0\) edges, sorted in order of decreasing link score.

2. Constraint check. If the constraint is not satisfied (i.e., there are some unremovable edges in the chunk), the check fails, all edges are restored, and the process goes to step (3). The constraint check succeeds when the constraint is satisfied even if the \(S_0\) edges are removed. If the constraint check succeeds for all edges, return to step (1).

3. With \(S_{k}=\max (\lfloor S_{k-1}/s\rfloor , 1)\), check the constraints of chunk \(C_k\) for every \(S_{k}\) edges in chunk \(C_{k-1}\).

4. If an unremovable edge is identified, check the constraints for the remaining edges of chunk \(C_{k-1}\). If the constraint is satisfied, complete the chunk process for chunk \(C_{k-1}\) and return to step (2). If not satisfied, continue with step (3) until the chunk check of all edges in the chunk succeeds.

The chunk check process allows the removal of each large chunk in areas with few unremovable edges. When the chunk scale is large, unremovable edges can be identified quickly in areas with numerous unremovable edges.
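The following is a simplified recursive sketch of the chunk check, not the FastLomap code. The names `chunk_sizes`, `within_diameter`, `set_edges` and `prune_chunked` are hypothetical, only the connectivity and diameter constraints are used, and the shortcut of step (4), which rechecks the rest of a parent chunk at once, is omitted. The sketch relies on these constraints being monotone: removing more edges can break them but never repair them, so a successful chunk check implies that every single-edge check inside the chunk would also have succeeded.

```python
from collections import deque
from itertools import combinations


def chunk_sizes(num_edges, scale):
    """Chunk sizes S_0, S_1, ..., 1, where S_0 is the largest power of scale <= num_edges."""
    size = 1
    while size * scale <= num_edges:  # integer loop avoids floating-point log errors
        size *= scale
    sizes = [size]
    while sizes[-1] > 1:
        sizes.append(max(sizes[-1] // scale, 1))
    return sizes


def within_diameter(adj, max_dist):
    """True if the graph is connected and its diameter is at most max_dist."""
    for src in adj:
        dist = {src: 0}
        queue = deque([src])
        while queue:
            u = queue.popleft()
            for v in adj[u]:
                if v not in dist:
                    dist[v] = dist[u] + 1
                    queue.append(v)
        if len(dist) < len(adj) or max(dist.values()) > max_dist:
            return False
    return True


def set_edges(adj, chunk, present):
    """Insert (present=True) or delete (present=False) every edge of the chunk."""
    for u, v in chunk:
        if present:
            adj[u].add(v)
            adj[v].add(u)
        else:
            adj[u].discard(v)
            adj[v].discard(u)


def prune_chunked(adj, edges, ok, size, scale):
    """Try to delete edges (worst score first) in chunks of `size`; return the number of checks."""
    checks = 0
    for start in range(0, len(edges), size):
        chunk = edges[start:start + size]
        set_edges(adj, chunk, present=False)
        checks += 1
        if ok(adj):
            continue  # the whole chunk is removable
        set_edges(adj, chunk, present=True)  # restore, then look closer
        if len(chunk) > 1:
            # Finer chunks; capped by the chunk length so a short tail is not rechecked whole.
            finer = max(min(size, len(chunk)) // scale, 1)
            checks += prune_chunked(adj, chunk, ok, finer, scale)
    return checks


# Illustrative input: complete graph on 12 nodes with an arbitrary removal order.
nodes = range(12)
edges = sorted(combinations(nodes, 2), key=lambda e: (7 * e[0] + 13 * e[1]) % 17)
adj = {n: set(nodes) - {n} for n in nodes}
sizes = chunk_sizes(len(edges), 5)
checks = prune_chunked(adj, edges, lambda g: within_diameter(g, 6), sizes[0], 5)
print(sizes, checks, sum(len(nb) for nb in adj.values()) // 2)
```

Calling `chunk_sizes(5100, 10)` reproduces the sizes of the example in the text (1000, 100, 10, 1), and calling `prune_chunked` with `size=1` degenerates to the one-by-one procedure, which makes it easy to confirm that both produce the same graph.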

2.3 Streamlining constraint checks

In FastLomap, we accelerated the diameter and cycle constraint checks, which had been bottlenecks. First, we explain the speedup of the diameter constraint. The original Lomap implementation used the NetworkX [18] eccentricity method, but we changed it to calculate the diameter of the graph using the bounding diameters algorithm [19, 20]. This algorithm is available in the NetworkX diameter function with the usebounds option. The diameter calculation usually has a computational complexity of O(MN) because it computes all-pairs shortest paths (APSP). In contrast, the bounding diameters algorithm uses the relationships between the lower and upper bounds on node eccentricities and the diameter to determine the exact diameter within a computation time proportional to O(N). Border cases involving complete graphs and circular graphs need to be considered, but they are acceptable when constructing FEP graphs.
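To illustrate how eccentricity bounds avoid running a search from every node, here is a pure-Python sketch in the spirit of the bounding diameters algorithm [19]. It is not the NetworkX or FastLomap code; the names `bfs_dist` and `bounded_diameter` are hypothetical, and the graph is assumed to be connected and given as an adjacency dictionary.

```python
from collections import deque


def bfs_dist(adj, src):
    """Hop distances from src to every node reachable from it."""
    dist = {src: 0}
    queue = deque([src])
    while queue:
        u = queue.popleft()
        for v in adj[u]:
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return dist


def bounded_diameter(adj):
    """Return (exact diameter, number of BFS runs) for a connected graph."""
    n = len(adj)
    lower = dict.fromkeys(adj, 0)  # lower bound on each node's eccentricity
    upper = dict.fromkeys(adj, n)  # upper bound on each node's eccentricity
    candidates = set(adj)
    diam_lower, diam_upper = 0, n
    pick_high = True
    bfs_runs = 0
    while candidates and diam_lower < diam_upper:
        # Alternate between the largest upper bound and the smallest lower bound.
        if pick_high:
            v = max(candidates, key=upper.get)
        else:
            v = min(candidates, key=lower.get)
        pick_high = not pick_high
        dist = bfs_dist(adj, v)
        if len(dist) != n:
            raise ValueError("graph must be connected")
        bfs_runs += 1
        ecc = max(dist.values())
        diam_lower = max(diam_lower, ecc)
        diam_upper = min(diam_upper, 2 * ecc)
        for w in list(candidates):
            d = dist[w]
            lower[w] = max(lower[w], d, ecc - d)
            upper[w] = min(upper[w], ecc + d)
            # w cannot raise the lower bound or reduce the upper bound any further.
            cannot_help = upper[w] <= diam_lower and 2 * lower[w] >= diam_upper
            if cannot_help or lower[w] == upper[w]:
                candidates.discard(w)
    return diam_lower, bfs_runs


# A path of six nodes: the diameter is found without a BFS from every node.
path = {i: {j for j in (i - 1, i + 1) if 0 <= j < 6} for i in range(6)}
diameter, bfs_runs = bounded_diameter(path)
print(diameter, bfs_runs)
```

On graphs such as rings, where the bounds stay loose, the loop may still visit every node, which is consistent with the border cases mentioned above.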

Next, we explain the speedup of the cycle constraint. The original Lomap maintains the cycle constraint by checking that the number of bridges does not change from the initial graph. It uses a bridge calculation based on the chain decomposition method [21] implemented in NetworkX [18]. Here, we replaced this bridge calculation with Tarjan's method because it is faster. Tarjan’s method is an \(O(N+M)\) algorithm that decomposes the graph into strongly connected components by considering the smallest reachable index, called lowlink. We also evaluate the effects of these constraint check changes through experiments.
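A lowlink-based bridge search can be sketched as below. This is a textbook depth-first formulation for undirected simple graphs, written for illustration; the function name `find_bridges` is hypothetical, and whether FastLomap implements the search in exactly this form is not stated in the text. The recursion is fine for small graphs, while large graphs would need an iterative traversal.

```python
def find_bridges(adj):
    """Return the set of bridges of an undirected simple graph in O(N + M)."""
    index = {}    # discovery order of each node in the depth-first search
    lowlink = {}  # smallest index reachable from the node's subtree via one back edge
    bridges = set()

    def dfs(u, parent):
        index[u] = lowlink[u] = len(index)
        for v in adj[u]:
            if v == parent:
                continue  # valid because the graph has no parallel edges
            if v in index:
                lowlink[u] = min(lowlink[u], index[v])  # back edge
            else:
                dfs(v, u)
                lowlink[u] = min(lowlink[u], lowlink[v])
                if lowlink[v] > index[u]:
                    # Nothing below v reaches u or above, so (u, v) is a bridge.
                    bridges.add((min(u, v), max(u, v)))

    for root in adj:
        if root not in index:
            dfs(root, None)
    return bridges


# Triangle 0-1-2 with a pendant node 3: only the pendant edge is a bridge.
graph = {0: {1, 2}, 1: {0, 2}, 2: {0, 1, 3}, 3: {2}}
before = find_bridges(graph)

# Removing edge (0, 1) opens the triangle, so the number of bridges changes
# and the cycle constraint would reject this removal.
pruned = {0: {2}, 1: {2}, 2: {0, 1, 3}, 3: {2}}
after = find_bridges(pruned)
print(before, len(after) == len(before))
```

Comparing the bridge count before and after a tentative removal is all the cycle constraint needs, so a single linear-time pass per check suffices.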

2.4 Code availability

Our proposed improved version of the Lomap code (called FastLomap) is available at https://github.com/ohuelab/FastLomap under the same MIT license as the original Lomap.

3 Experiments

3.1 Thompson TYK2 dataset

In this study, we used 100–1000 nodes for extensive FEP graph construction experiments. Therefore, we used a congeneric series of TYK2 inhibitors based on the aminopyrimidine scaffold provided by Thompson et al. [22].

- (a) Thompson TYK2 dataset: From the original dataset, we randomly selected 1000 cases and constructed subsets of the experimental set ranging from 100 to 1000 nodes.

- (b) Thompson TYK2\(_{0.7}\) dataset: From the compounds in the original dataset with a link score of 0.7 or higher with the reference ligand (PDB: 4GIH [23]), the experimental sets were constructed from 100 to 1000 cases in the same way. This dataset is used to evaluate the effectiveness of the proposed method when the number of edges is extremely large.

Figure 6 illustrates the number of edges in the initial graph against the number of nodes for the Thompson TYK2 and Thompson TYK2\(_{0.7}\) datasets. The edges of the initial graph were pruned with a link score threshold of 0.4. As a result, the number of edges in the Thompson TYK2\(_{0.7}\) dataset, which consists of similar compounds, was up to five times larger than that in the Thompson TYK2 dataset. Figure 7 shows the distribution of link scores for the two datasets. The peak of the link score distribution for the Thompson TYK2\(_{0.7}\) dataset was higher than that for the Thompson TYK2 dataset. The number of edges in the generated FEP graph for each number of nodes is shown in Fig. 8. Both datasets have a similar number of edges in the FEP graph. Note that the generated graphs are the same for the proposed method and the existing Lomap.

3.2 Wang dataset

The Wang dataset [2] was used as a benchmark to evaluate RBFE prediction performance and contained several dozen ligands for each of the eight targets. Our proposed algorithm assumes that the number of compounds exceeds 100, and its benefit is small for such a dataset. However, we used the Wang dataset to demonstrate that the proposed method can be applied to a general use case.

3.3 Setup for graph generation

First, we generated a complex structure because no complex structure was provided for the Thompson TYK2 dataset. Complex structures were generated by the superposition of a reference ligand (PDB: 4GIH [23]) using the alignment tools (very permissive option) of Cresset Flare [24].

All link scores were computed using Lomap’s default 3D mode. Note that the link score calculation for all pairs of 1000 compounds takes 26.4 min in parallel processing on 28 CPUs.

We experimented with the original Lomap calculation as a baseline and with chunk scales \(s=2, 5, 10, 50\) for the proposed method. To generate the FEP graph, we used the default settings of Lomap, that is, a graph diameter of 6, no tree structure allowed and a link score cutoff of 0.4.

4 Results and discussion

4.1 Results of Thompson TYK2 dataset

Figures 9 and 10 show the execution time and the number of constraint checks for each number of nodes in the Thompson TYK2 and Thompson TYK2\(_{0.7}\) datasets. Here, all methods are compared using the fastest constraint checking method. The baseline method did not complete the calculation within 24 h for \(N\ge 700\) in the Thompson TYK2\(_{0.7}\) dataset. First, we compared the baseline and the proposed methods. For the Thompson TYK2 dataset, the proposed method is up to about 20 times faster at \(N=1000\). In the case of the Thompson TYK2\(_{0.7}\) dataset, FEP graph construction was a hundred times faster at \(N=600\), and the baseline method could not construct an FEP graph at \(N\ge 700\). The Thompson TYK2\(_{0.7}\) dataset has many edges in the initial graph because threshold pruning has little effect. Consequently, the baseline method, which performs as many constraint checks as there are edges, is unsuitable for such cases. In contrast, the proposed method is effective for cases involving many edges because its number of constraint checks depends on the number of nodes.

Next, the effect of the chunk scale was evaluated. For both datasets, the execution time and the number of constraint checks were lower for \(s=5, 10\) than for \(s=2, 50\). These settings were better than \(s=2\) because a larger chunk scale reduces the maximum chunk level \(k_{\max}\) and promptly identifies unremovable edges. Conversely, if the chunk scale is too large, such as \(s=50\), the chunk process becomes less effective in areas with few unremovable edges because a single chunk check failure leads to splitting into much finer chunks. Moreover, the number of constraint checks and the execution time do not directly correspond because deleting and restoring edges also consumes time. The number of constraint checks for \(s=50\) is lower than that for \(s=2\) in Fig. 10, but the execution times are comparable owing to the time required to remove and restore edges.

4.2 Results of Wang dataset

Figure 11 shows the execution time and the number of constraint checks for each number of nodes in the Wang dataset. Although the execution time increases with the number of nodes, the execution time for \(s=5, 10\) is slightly shorter than that of the baseline. Even for the baseline, the process took less than 3 s at most. Therefore, the proposed method has no negative impact on general use cases.

4.3 Results of streamlining constraint checks

We compared the following 4 variations on the implementation of constraint checks (chunk scale \(s=5\)):

- Version 1: Existing Lomap case with no changes to either cycle or diameter constraints.

- Version 2: The case with speedup of cycle constraints only.

- Version 3: The case with speedup of diameter constraints only.

- Version 4: The fastest case with both cycle and diameter constraints changed.

Figure 12 shows the execution time for each number of nodes in the Thompson TYK2 and Thompson TYK2\(_{0.7}\) datasets for the 4 variations. Figure 13 shows how many times faster each version is than Version 1 in the Thompson TYK2 dataset (the number of nodes is fixed at \(N=500\)). Version 4, in which both constraint checks were modified, is the fastest and is more than 10 times faster than Version 1, in which no constraint checks were changed. In addition, Version 3, in which only the diameter constraint check was modified, is faster than Version 2, in which only the cycle constraint check was modified. Therefore, the change to the diameter constraint makes the most significant contribution to the speedup.

Finally, Fig. 14 shows how many times faster the proposed method FastLomap is compared to conventional Lomap for Version 1 and Version 4. (The number of nodes is fixed at \(N=500\).) FastLomap is 7.7 times faster than Lomap, and combined with faster constraint checks, it is 100 times faster.

5 Conclusion

In this study, we proposed a chunk check process to improve the Lomap algorithm and make it scalable to larger numbers of compounds. The proposed method may have limited effectiveness for general use cases involving a few dozen compounds; however, it demonstrates significant efficiency and is several dozen times faster when applied to several hundred compounds. In addition to the chunk check process, Lomap can be made about one hundred times faster by streamlining the cycle constraint and diameter constraint checks. As the output graph of the proposed method is the same as that of the original Lomap algorithm, it can be used immediately to replace existing methods.

Although not addressed in this study, accelerating the link score calculation is an issue for future research. Because link score calculations are required for all compound pairs, the computational cost grows quadratically with the number of compounds. The recently proposed HiMap [9] uses the DBSCAN method for unsupervised clustering of similar compounds. Clustering using compound fingerprints and calculating the scores based on the maximum common substructure for each cluster may reduce the overall computational cost. In addition, further discussion is required on whether Lomap graphs are appropriate for large FEP designs. It is crucial to analyze the impact of cycle closure redundancy on the accuracy of perturbation maps in large FEP graphs.

Our proposed method can generate graphs up to hundreds of times faster than the original Lomap for cases with fewer edges and up to several hundred times faster for those with numerous edges. The proposed method is not only applicable to the planning of large-scale FEP experiments but is also promising as a component of active learning approaches for relative binding free energy calculations.

Availability of data and materials

Not applicable.

Code availability

FastLomap is available at https://github.com/ohuelab/FastLomap under the MIT license.

References

Bleicher KH, Böhm H-J, Müller K, Alanine AI (2003) Hit and lead generation: beyond high-throughput screening. Nat Rev Drug Discov 2(5):369–378

Wang L, Wu Y, Deng Y, Kim B, Pierce L, Krilov G, Lupyan D, Robinson S, Dahlgren MK, Greenwood J, Romero DL, Masse C, Knight JL, Steinbrecher T, Beuming T, Damm W, Harder E, Sherman W, Brewer M, Wester R, Murcko M, Frye L, Farid R, Lin T, Mobley DL, Jorgensen WL, Berne BJ, Friesner RA, Abel R (2015) Accurate and reliable prediction of relative ligand binding potency in prospective drug discovery by way of a modern free-energy calculation protocol and force field. J Am Chem Soc 137(7):2695–2703

Kuhn M, Firth-Clark S, Tosco P, Mey AS, Mackey M, Michel J (2020) Assessment of binding affinity via alchemical free-energy calculations. J Chem Inf Model 60(6):3120–3130

Gapsys V, Pérez-Benito L, Aldeghi M, Seeliger D, Van Vlijmen H, Tresadern G, De Groot BL (2020) Large scale relative protein ligand binding affinities using non-equilibrium alchemy. Chem Sci 11(4):1140–1152

Muegge I, Hu Y (2023) Recent advances in alchemical binding free energy calculations for drug discovery. ACS Med Chem Lett 14(3):244–250

Schindler CEM, Baumann H, Blum A, Böse D, Buchstaller H-P, Burgdorf L, Cappel D, Chekler E, Czodrowski P, Dorsch D, Eguida MKI, Follows B, Fuchß T, Grädler U, Gunera J, Johnson T, Jorand Lebrun C, Karra S, Klein M, Knehans T, Koetzner L, Krier M, Leiendecker M, Leuthner B, Li L, Mochalkin I, Musil D, Neagu C, Rippmann F, Schiemann K, Schulz R, Steinbrecher T, Tanzer E-M, Unzue Lopez A, Viacava Follis A, Wegener A, Kuhn D (2020) Large-scale assessment of binding free energy calculations in active drug discovery projects. J Chem Inf Model 60(11):5457–5474

Liu S, Wu Y, Lin T, Abel R, Redmann JP, Summa CM, Jaber VR, Lim NM, Mobley DL (2013) Lead optimization mapper: automating free energy calculations for lead optimization. J Comput Aided Mol Des 27(9):755–770

Xu H (2019) Optimal measurement network of pairwise differences. J Chem Inf Model 59(11):4720–4728

Pitman M, Hahn DF, Tresadern G, Mobley DL (2023) To design scalable free energy perturbation networks, optimal is not enough. J Chem Inf Model 63(6):1776–1793

Carvalho Martins L, Cino EA, Ferreira RS (2021) PyAutoFEP: An automated free energy perturbation workflow for GROMACS integrating enhanced sampling methods. J Chem Theory Comput 17(7):4262–4273

Settles B (2009) Active learning literature survey

Reker D, Schneider G (2015) Active-learning strategies in computer-assisted drug discovery. Drug Discov Today 20(4):458–465

Reker D, Schneider P, Schneider G, Brown JB (2017) Active learning for computational chemogenomics. Future Med Chem 9(4):381–402

Konze KD, Bos PH, Dahlgren MK, Leswing K, Tubert-Brohman I, Bortolato A, Robbason B, Abel R, Bhat S (2019) Reaction-based enumeration, active learning, and free energy calculations to rapidly explore synthetically tractable chemical space and optimize potency of cyclin-dependent kinase 2 inhibitors. J Chem Inf Model 59(9):3782–3793

Gusev F, Gutkin E, Kurnikova MG, Isayev O (2023) Active learning guided drug design lead optimization based on relative binding free energy modeling. J Chem Inf Model 63(2):583–594

Khalak Y, Tresadern G, Hahn DF, Groot BL, Gapsys V (2022) Chemical space exploration with active learning and alchemical free energies. J Chem Theory Comput 18(10):6259–6270

Furui K, Ohue M (2023) Faster lead optimization mapper algorithm for large-scale relative free energy perturbation. In: Proceedings of The 29th International Conference on Parallel & Distributed Processing Techniques and Applications (PDPTA’23), pp 2126–2132

Hagberg AA, Schult DA, Swart PJ (2008) Exploring network structure, dynamics, and function using NetworkX. In: Proceedings of the 7th Python in Science Conference, pp 11–15

Takes FW, Kosters WA (2011) Determining the diameter of small world networks. In: Proceedings of the 20th ACM International Conference on Information and Knowledge Management, pp 1191–1196

Borassi M, Crescenzi P, Habib M, Kosters WA, Marino A, Takes FW (2015) Fast diameter and radius BFS-based computation in (weakly connected) real-world graphs: with an application to the six degrees of separation games. Theor Comput Sci 586:59–80

Schmidt JM (2013) A simple test on 2-vertex- and 2-edge-connectivity. Inf Process Lett 113(7):241–244

Thompson J, Walters WP, Feng JA, Pabon NA, Xu H, Goldman BB, Moustakas D, Schmidt M, York F (2022) Optimizing active learning for free energy calculations. Artif Intell Life Sci 2:100050

Liang J, Tsui V, Van Abbema A, Bao L, Barrett K, Beresini M, Berezhkovskiy L, Blair WS, Chang C, Driscoll J, Eigenbrot C, Ghilardi N, Gibbons P, Halladay J, Johnson A, Kohli PB, Lai Y, Liimatta M, Mantik P, Menghrajani K, Murray J, Sambrone A, Xiao Y, Shia S, Shin Y, Smith J, Sohn S, Stanley M, Ultsch M, Zhang B, Wu LC, Magnuson S (2013) Lead identification of novel and selective TYK2 inhibitors. Eur J Med Chem 67:175–187

Bauer MR, Mackey MD (2019) Electrostatic complementarity as a fast and effective tool to optimize binding and selectivity of protein-ligand complexes. J Med Chem 62(6):3036–3050

Funding

This study was partially supported by JST FOREST (JPMJFR216J), JST ACT-X (JPMJAX20A3), JSPS KAKENHI (20H04280, 22K12258, 23H04887) and AMED BINDS (JP23ama121026).

Author information

Contributions

KF and MO conceived the idea of the study. KF developed the computational methods, conducted implementation and analyses, and drafted the original manuscript. MO contributed to the interpretation of the results and supervised the conduct of this study. All authors reviewed the manuscript draft and revised it critically on intellectual content. All authors approved the final version of the manuscript to be published.

Ethics declarations

Conflict of interest

The authors have no conflicts of interest to declare.

Ethics approval

Not applicable.

Consent to participate

Not applicable.

Consent for publication

Not applicable.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Furui, K., Ohue, M. Fastlomap: faster lead optimization mapper algorithm for large-scale relative free energy perturbation. J Supercomput 80, 14417–14432 (2024). https://doi.org/10.1007/s11227-024-06006-y

DOI: https://doi.org/10.1007/s11227-024-06006-y