Abstract

The brain is the most vital component of the neurological system. Therefore, brain tumor classification is a very challenging task in the field of medical image analysis. There has been a qualitative leap in the field of artificial intelligence, deep learning, and their medical imaging applications in the last decade. The importance of this remarkable development has emerged in the field of biomedical engineering due to the sensitivity and seriousness of the issues related to it. The use of deep learning in the field of detecting and classifying tumors in general and brain tumors in particular using magnetic resonance imaging (MRI) is a crucial factor in the accuracy and speed of diagnosis. This is due to its great ability to deal with huge amounts of data and avoid errors resulting from human intervention. The aim of this research is to develop an efficient automated approach for classifying brain tumors to assist radiologists instead of consuming time looking at several images for a precise diagnosis. The proposed approach is based on 3064 T1-weighted contrast-enhanced brain MR images (T1W-CE MRI) from 233 patients. In this study, the proposed system is based on the results of five different models to use the combined potential of multiple models, trying to achieve promising results. The proposed system has led to a significant improvement in the results, with an overall accuracy of 99.31%.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Cancer is one of the leading causes of death worldwide, and it is a significant barrier to improving life expectancy. A brain tumor is caused by the growth of abnormal cells inside the brain, which damages the brain’s key tissues and progresses to cancer [1]. The American Cancer Society (ASC) predicts that 24, 810 people will have malignant tumors by 2023, with 18, 990 dying as a result [2]. There are about 150 different types of brain tumors that may be found in humans. There are (i) benign tumors; (ii) malignant tumors among them [3].While benign tumors develop slowly and do not spread to other tissues, destructive malignant tumors grow quickly [4]. The malignant tumors can be categorized into gliomas, meningiomas, and pituitary tumors as they are the most common types of brain tumors [5]. Gliomas grow from glial cells in the brain. Meningioma tumors often develop on the protective membrane of the brain and spinal cord [6]. Pituitary brain tumors are benign and develop in the pituitary glands, which are the underlying layer of the brain that produces some of the essential hormones in the body. In clinics, MRI can be the most effective tool for in vivo and noninvasive visualization of brain tumor anatomy and functionality [7]. Early diagnosis and accurate classification of brain tumors are urgent for saving a human’s life. There is a great difficulty in using manual technique due to its responsibility for about 10-30% of all misdiagnoses, so the use of computer-aided diagnosis (CAD) is vital for helping radiologists improve the accuracy and needed time for image classification [8,9,10]. In radiology, the application of artificial intelligence (AI) decreases error rates further than human effort [11]. Machine learning and deep learning are branches of artificial intelligence that can enable radiologists to locate and classify tumors quickly without requiring surgical intervention. [12]. A convolutional neural network (CNN) is a branch of a deep learning approach that has achieved great success recently in the field of medical imaging problems [13]. CNNs can extract the most useful features automatically and decrease the dimensions [14, 15]. So using CNN, the traditional handcrafted features are no longer necessary because it automatically learns the important features to provide final output predictions on its own. Various CNN models were used for brain tumor classification (i.e., GoogleNet [16], AlexNet [17], SqueezeNet [18], ShuffleNet [19], and NASNet-Mobile [20]). CNN models have achieved great success in the field of medical classification, such as skin lesion classification, breast cancer classification, COVID-19 classification, diabetic retinopathy classification, and arrhythmia classification [21,22,23,24,25]. By applying these pre-trained CNN models to classify brain tumors using majority voting, the strengths of each of the five CNN models were well exploited using the 3064 T1W-CE MRI dataset [26]. Nevertheless, brain tumor classification is extremely challenging due to changing morphological structure, complicated tumor appearance in an image, and irregular illumination effects, requiring the employment of an effective technique regarding brain tumor classification to support the radiologist’s choice. Every year, new classification methodologies emerge to overcome limitations in prior approaches. The contribution of this study could be summarized as follows:

-

This research proposes a hybrid technique based on majority voting to make the final classification decision for three categories of brain tumors (meningioma, glioma, and pituitary) using five fine-tuned pre-trained models to offer reliable and precise tumor classification and provide radiologists with an accurate opinion;

-

The majority voting technique is based on the prediction outputs of five fine-tuned, pre-trained models: GoogleNet, AlexNet, ShuffleNet, SqueezeNet, and NASNet-Mobile which are able to perform classification tasks well and compete with more advanced CNNs;

-

We have used a public brain tumor image dataset with minimal pre-processing steps in the training phase, and in the testing phase, images have been tested without any pre-processing steps;

-

Finally, the performance of our suggested technique has proved its efficient against state-of-the-art based on a variety of metrics, such as accuracy, recall, precision, specificity, confusion matrix, and f1-score, to classify three types of brain tumors.

This paper is organized as follows: the next part deals with the associated related work about brain tumor classification; Section 3 proposes methods that are described in detail; additionally, Section 4 emphasizes results of the experiments and discussion; and finally, Section 5 gives the conclusion and discusses future work.

2 Related works

Machine learning and deep learning are the two main techniques used for brain tumor classification [27, 28]. Machine learning has been utilized in various studies such as k-nearest neighbor (KNN), support vector machines (SVM), decision trees, and genetic algorithms [28,29,30,31]. Cheng et al. [26] proposed an approach to brain tumor classification that augmented the region of the tumor to improve the performance of classification. They utilized three techniques for feature extraction: First, a gray-level co-occurrence matrix, second, a bag of words; and finally, an intensity histogram. They obtained an accuracy of 91.28%. On the other hand, CNN can extract features well, classify with the last layers of fully connected layers, and achieve high results in medical imaging. Recent human works on medical image detection, segmentation, and classification of brain tumors from MR images are a very urgent task to determine the appropriate treatment in a timely manner [32,33,34]. For example, Cherukuri et al. [35] used Xception as a backbone model and proposed a multi-level attention network (MANet) that included both spatial and cross-channel attention on the 3064 T1W-CE MRI dataset. They achieved an accuracy of 96.51% on the T1W-CE MRI dataset. One of the major disadvantages of long-short-term memory (LSTM) networks is that they are more computationally expensive and require more complex tuning of the network. Furthermore, since LSTMs are more complicated than CNNs and RNNs, it can be difficult to debug them and identify any problems that may arise.

Guan et al. [36] first improved visual quality of the input image using contrast optimization and nonlinear strategies. Secondly, the tumor locations were acquired based on both segmentation and clustering techniques. Then, these locations were scored, used in parallel with the corresponding input image, and provided to EfficientNet to extract features. Thirdly, these locations were further optimized to improve detection performance. Finally, these locations were aligned and used to define tumor classes and their locations. They achieved accuracy of 98.04% with fivefold cross-validation on the T1W-CE MRI dataset. However, this study has the drawback of increasing the computational cost because it requires the training of many networks. Badža. et al. [37] proposed a developed CNN with 22 layers to classify three types of tumors (meningioma, glioma, and pituitary) in the T1W-CE MRI dataset. They used subject-wise and record-wise tenfold cross-validation on both the augmented and original image databases for the network test. The best accuracy obtained using record-wise tenfold cross-validation with an augmented dataset was 96.56% on the 3064 T1W-CE MRI dataset. Deepak et al. [38] used deep learning and machine learning as they modified a pre-trained GoogleNet with the Adam optimizer. When SVM or KNN was employed instead of the classification layer within the transfer learning model, the system’s accuracy increased. They obtained accuracy of 92.3%, 97.8%, and 98% for GoogleNet, SVM, and KNN, respectively, with fivefold cross-validation on the T1W-CE MRI dataset. This research supports the ability of the proposed system to precisely classify the three types of brain tumors, but it has many drawbacks, including: first, as an independent classifier, the transfer-learned model performed relatively poorly. Second, there was a significant misclassification in meningioma class samples.

Díaz-Pernas et al. [39] proposed a method for brain tumor segmentation and classification on the T1W-CE MRI dataset. Sliding window segmentation, with each pixel classified using a N \(\times \) N window. They used data augmentation for the input data to prevent overfitting. Classification CNN has three pathways (small, medium, and large feature scales) for extracting features. The multiscale CNN can effectively segment and classify the three different types of tumors with an accuracy of 97.3% on the T1W-CE MRI dataset. The lowest value of sensitivity for meningioma is because of the lower intensity of contrast between the tumor and healthy areas, which can be considered a limitation. Alhassan et al. [40] proposed a CNN with a hard-swish-based RELU activation function. The proposed approach included three steps: First, an image pre-processing step using the normalization technique was applied to enhance the visualization of the brain images. Then, they used the HOG feature descriptor for extracting the feature vectors from normalized images. Finally, for the classification step, a hard-swish-based RELU activation function was used as a CNN classifier to classify gliomas, meningiomas, and pituitary tumors. They attained accuracy of 98.6% for brain tumor classification on the T1W-CE MRI dataset.

Ghassemi et al. [41] utilized a pre-trained deep convolutional neural network as a GAN discriminator (DCGAN). The discriminator can learn and extract robust features from MR image structures. Then, a softmax layer was used within the GAN discriminator instead of the last fully connected layer. They achieved accuracy of 95.6% for brain tumor classification on the T1W-CE MRI dataset. One of the drawbacks of the study is the limitations of GAN, as the size of the network was 64\(\times \)64 and this prevents the use of pre-trained architectures as discriminators because they need larger input sizes. Noreen et al. [42] used deep learning and machine learning for brain tumor classification. They applied a fine-tuned Inception-v3 to extract features, and then, classification was performed by replacing the final layer with a softmax layer, SVM, KNN, random forest (RF), and ensemble technique using machine learning. They applied a fine-tuned Xception to extract features, and then, classification was performed by replacing the final layer with a softmax layer, SVM, KNN, random forest (RF), and ensemble technique using machine learning. Inception-v3-Ensemble method produced the best testing accuracy of 94.34% among all proposed methods for brain tumor classification on the T1W-CE MRI dataset. Proposed methods suffer from time-consuming and high computational costs.

Gumaei et al. [43] proposed a regularized extreme learning machine (RELM) as a hybrid feature extraction method. First, the step of pre-processing the brain images using min–max normalization to boost the contrast of brain edges. Next, features of brain tumors were extracted based on hybrid methods called PCA-NGIST that used a Normalized GIST descriptor with PCA to extract the significant features. Finally, a RELM was used to classify brain tumor types. They achieved accuracy of 94.233% with fivefold cross-validation on the T1W-CE MRI dataset.

Haq et al. [44] produced the DCNN technique for detection and classification of brain tumors, which included three stages: first, pre-processing step was applied using (a) N4ITK, (b) normalization, (c) for each pixel in the image, the mean intensity value and standard deviation were calculated, (d) after completing N4ITK and normalization, the proposed model parameters were initialized, and (e) the values of each parameter were then updated. Data augmentation methods were applied to flipping, translation, and rotation. Second, they used a GoogleNet variant model with back-propagation with and without using conditional random fields (CRF) as a post-processing step that can assign image pixel features and their associated labels. They achieved 97.3%, and 95.1% accuracy with and without CRF, respectively, on the T1W-CE MRI dataset. The proposed model has disadvantages, such as not being suitable for classification tasks involving small amounts of data. When the network receives erroneous information from various imaging modalities of patients, the classification performance suffers. A lot of pre-processing steps.

Ghosal et al. [45] used a DCNN-based SE-ResNet-101 architecture that was fine-tuned to fit training data. For the pre-processing step, they applied ROI segmentation, intensity zero-centering, intensity normalization, and data augmentation methods such as elastic transform, flip, shear with different transformation degrees, and rotate. The SE-ResNet-101 model, which combines ResNet-101 with squeeze and excitation (SE) blocks, was then trained to classify three brain tumors (meningiomas, gliomas, and pituitary) on the T1W-CE MRI dataset. They achieved overall accuracy of 89.93% and 93.83% without and with data augmentation, respectively. Nawaz et al. [46] utilized three steps to classify brain tumors: firstly, they developed annotations to identify the exact location of the interest region. Second, to extract the deep features from the suspected samples, they implemented a custom CornerNet with a base network of DenseNet-41. Finally, they employed the one-stage detector with CornerNet to locate and classify several brain tumors. They achieved 98.8% based on the T1W-CE MRI dataset. The proposed method has the advantage of providing a low-cost solution to brain tumor classification, as CornerNet employs a one-stage object identification framework.

Verma et al. [47] suggested Hyper-Sphere Angular Deep Metric-based Learning (HSADML) with MobileNet as the backbone network, which used deep angular metric learning using SphereFace Loss to improve generalization and robustness in classification. They achieved 98.69% of overall accuracy based on the T1W-CE MRI dataset. The suggested method had the benefit of enhancing intra-class separability and decreasing intra-class variability, which resulted in significant efficiency increases. The weakness of this research is that it did not emphasize on the backbone network; thus, in future aspects of the work, there is a gap for the introduction of particular attention-based domain-specific networks.

Cinar et al. [48] applied image cropping initially to eliminate any unneeded areas in the image and make sure that the model focused only on those areas. Then, they implemented different data augmentation techniques on the original images: rotating, flipping, zooming, cropping, and translation. They designed a CNN from scratch to classify different types of brain tumors based on the T1W-CE MRI dataset. They achieved an overall accuracy of 98.09%, 98.32%, and 96.35% where the dataset was divided at different rates (70–30%, 80–20%, and 90–10%) for training and testing, respectively. The suggested CNN has the advantage of demonstrating that categorization can be done without the aid of deep networks. The model also has some drawbacks; the training period is very long without the use of the transfer learning method. As a result, working with larger datasets proved impractical, and training the model with a large dataset like ImageNet would have been impossible on normal computers.

Deepak et al. [49] proposed a custom CNN model that consisted of five convolutional layers and two fully connected layers, where each convolution layer was followed by a batch normalization layer, then a ReLU as the activation function, and max pooling was provided after each ReLU activation function. For the pre-processing steps, the dataset was resized into (256\(\times \)256) and then normalized between [0 and 1]. They used two experiments using a fivefold cross-validation method: first, the CNN with a softmax classifier, and second, The CNN with a SVM, which improved classification results from 94.2 to 95.8% where the dataset was divided into 70–30% for training and testing based on the T1W-CE MRI dataset.

Deepak et al. [50] introduced two approaches to enhance the expert system’s performance: the first, deep feature fusion, concerned with the fusion of deep features extracted from CNN models trained with different loss functions. Common models such as SVM and KNN have been used to categorize the fused deep features. In the second approach, majority voting, the outputs for three distinct feature sets were extracted from CNN models trained with separate loss functions. The same classifier was applied to three separate feature sets using a majority voting approach. The experiments were applied to the TL ResNet-18 model to validate the suggested idea. For all experiments, the dataset was divided into training sets, validation sets, and test sets (60:20:20). On CNN, they achieved accuracy of 94.8%, 95.3%, 94.9%, and 95.6% for deep feature fusion with SVM, deep feature fusion with KNN, majority voting with SVM, and majority voting with KNN, respectively. On CNN, they achieved Balanced accuracy of 94.2%, 94.5%, 93.9%, and 94.8% for deep feature fusion with SVM, deep feature fusion with KNN, majority voting with SVM, and majority voting with KNN, respectively. On TL, they achieved accuracy of 94.1%, and 94.1% for deep feature fusion with KNN and majority voting with SVM, respectively. On TL, they achieved accuracy of 94.8% and 94.8% for deep feature fusion with KNN, and majority voting with SVM, respectively. Compared with the existing methods, the proposed approaches based on CNN trained with cross-entropy loss significantly enhanced the predictions of the three types of brain tumors. On the other hand, the proposed approaches have a drawback of increasing computation involved as they required training the CNN using three different loss functions, with 0.34G MAC operations have used in each training iteration.

Kumar et al. [51] used three CNN models, which were AlexNet, ResNet 50, and Inception V3. For the pre-processing step, the images were resized, normalized between [0] and [1], and some augmentation techniques were performed, such as sheer transformation, fill-up, crop, flips, translation, and rotation. This work demonstrated the efficiency of the CNN architecture in identifying enhanced MRI brain tumor images based on their results using different techniques like ResNet50, AlexNet, and Inception V3. They achieved accuracy of 93.51%, 98.24%, and 92.07%, respectively. This work also has some disadvantages: (1) Due to an operation like max pool, a CNN is much slower. (2) If the CNN has multiple layers, the training procedure will take a long time if the computer’s GPU is not up to par. (3) For a ConvNet, a large amount of data needs to be trained.

Briefly, although distinct techniques and algorithms have been discussed in related works for the classification of brain tumors, the majority of prior techniques have some limitations, such as: (1) Traditional (ML) classifiers depend on handcrafted features, which are time-consuming, memory-intensive, and reducing the efficiency of the system. (2) On the other hand, CNN approaches are getting more attention because they can extract features directly from input data, but they require high complexity, fixed input image sizes for each model, and expensive (3) In addition, the challenging task of selecting a CNN model with suitable hyperparameters to maximize the model’s performance for the classification process. (4) Furthermore, most of the previous works suffered from a large number of pre-processing steps. In this research, to overcome these limitations, a minimum number of pre-processing steps are used, along with an accurate choice of deep learning models with suitable hyperparameters. Furthermore, as previously noted, CNN-based classification of brain tumor images was promoted as the single best-performing model in previous research studies. As a result, it is necessary to make the best use of the potential of the hybrid technique for this challenging task. In order to accomplish this, five fine-tuned pre-trained CNN models have been used: GoogleNet [16], AlexNet [17], ShuffleNet [19], NASNet-Mobile [20], and SqueezeNet [18], respectively. These CNN models have been used in medical classification tasks and achieved good results, discussed in [22, 23, 25, 52,53,54]. Fine-tuned pre-trained models achieved superior or equal classification results compared to other, more complicated CNNs for COVID-19 classification [23, 54]. Therefore, the hybrid technique of using multiple effective models can offer additional benefits over a stand-alone model, allowing for a remarkable accuracy rate that outperforms other previous solutions.

3 Proposed methods

The proposed research methodology is illustrated in Fig. 1, which depicts an abstract representation of the proposed hybrid technique for classifying brain tumors using MRI images. The basic steps of the proposed hybrid technique for classifying brain tumors are as follows: Firstly, we downloaded the freely accessible T1W-CE MRI dataset [26], including meningioma, glioma, and pituitary MR images, and we randomly split the dataset into 70% training and 30% testing. Thirdly, we used a data augmentation technique for training images only and testing images without any pre-processing; thus, only original images from the dataset were used to test the trained models. In the fourth stage, the input MRI images of the dataset were resized to fit the suggested CNN model’s input image size. Next, we employed different five fine-tuned pre-trained models, i.e., GoogleNet, AlexNet, ShuffleNet, SqueezeNet, and NASNet-Mobile to distinguish their performance in classifying different categories of brain tumors. The suggested CNN models contained layers from pre-trained networks, with the last three layers replaced to accommodate the new image classes (meningioma, pituitary, and glioma) except for in the pre-trained SqueezeNet, the 1-by-1 convolutional layer was changed instead of the last learnable 1-by-1 convolutional learnable layer with the same number of filters as the number of classes, where WeightLearnRateFactor and BiasLearnRateFactor were changed to 10 to make the learning rates faster than the transferred layers. Then, the majority voting technique was applied using three models, which have the highest accuracy among others. Finally, the proposed majority voting technique was applied to the combination of the outputs of the five models, treating them as a decision-making committee. The voting approach is effective in covering the classification error of the individual models in the proposed methodology. The performance of the proposed technique was evaluated using standard performance measures such as overall accuracy, specificity, sensitivity, f1-score, and confusion matrix.

3.1 GoogLeNet

GoogleNet was the winner of the ILSVRC 2014 competition, which has 138 million to 4 million parameters. The GoogleNet architecture utilizes nine inception modules and consists of 22 learning layers with four maximum pooling layers and one average pooling layer, as shown in Fig. 2a. To reduce overfitting, each fully connected layer contains a rectified linear activation (ReLU) function [16, 55]. The main objective of the inception module is to run multiple operations (pooling and convolution) with multiple filter sizes (1\(\times \)1,3\(\times \)3, and 5\(\times \)5) in parallel, as shown in Fig. 2b. It can capture various patterns of data using different sizes of kernels and filters, to combine features and provide effective output. GoogleNet was fine-tuned by replacing the last three layers, ”loss3-classifier,” ”prob,” and ”output” layer by a ”fully connected layer,” a ”softmax layer,” and a ”classification output” layer to classify new images with types of tumors (meningiomas, gliomas, and pituitary).

3.2 AlexNet

AlexNet was the first deep CNN architecture trained using 1000 different classes on 1.2 million images to win the ILSVRC competition in 2012 [17]. Figure 3 shows that it consists of five convolutional layers and three fully connected layers. The first two convolutional layers are connected by overlapping max-pooling layers in order to extract deep features. The third, fourth, and fifth convolutional layers are directly connected to the fully connected layers. All of the outputs of the convolutional and fully connected layers are connected to the ReLU nonlinear activation function [56]. A softmax activation layer is connected to fully connected layers to produce 1000 different classes. The input image for this network has a size of \(227 \times 227 \times 3\). The last three layers must be fine-tuned and replaced to classify the three types of brain tumors (meningiomas, gliomas, and pituitary).

3.3 SqueezeNet

SqueezeNet is a CNN that employs design strategies to reduce the number of parameters, in particular with the use of fire modules. Figure 4a shows that it contains 15 layers with 5 different layers: 2 layers of convolution, 3 layers of max pooling, 9 layers of fire, 1 layer of global average pooling, and 1 output layer of softmax [18].Initially, a stand-alone convolutional layer named ”conv1” is applied to an input image. An individual filter exists in a squeeze convolutional layer. These are input into an expanded layer that combines 1\(\times \)1 and 3\(\times \)3 convolution filters to extract features at different scales (capture spatial information). Following this layer are eight ”fire modules,” denoted by the numbers ”fire2” through ”fire9.” Then, ”Conv1,” ”Fire4,” ”Fire8,” and ”Conv10” are followed by max pooling with a stride of 2. Figure 4b illustrates that a fire module is formed of the squeeze, the ReLU activation, and expand layers which form the fire layers between the layers of convolution [57]. Assume that FM is feature maps, c is channels, and the output layer of the squeeze operation using kernel w is called f{y}, which can be expressed in an Equation (1) [58]:

This CNN model must be fine-tuned to classify the three types of brain tumors (meningioma, glioma, and pituitary) as follows:

-

The last learnable layer and a final classification layer use the image features extracted by the network’s convolutional layers to categorize the input image;

-

Set a new convolutional2dlayer instead “conv10” which has filter size [1,1] with numfilters of 3 as the number of classes;

-

Set WeightLearnRateFactor and BiasLearnRateFactor to 10 to make the learning rates faster in the new layer than in the transferred layers.

3.4 ShuffleNet

Shufflenet is a CNN that is computationally effective and is designed essentially for devices with limited computational abilities, such as mobiles [19]. It can achieve better accuracy with low computational costs as it utilizes two operations (”channel shuffle” and ”point wise group”). It has 172 layers with input image size \(224 \times 224 \times 3\). In Fig. 5a on the bottleneck feature map, there is a 3\(\times \)3 depthwise convolution for the 3\(\times \)3 layer In Fig. 5b, A ShuffleNet unit is created by replacing the initial 1\(\times \)1 layer with pointwise group convolution and channel shuffle. The second pointwise group convolution’s objective is to restore the channel dimension to match the shortcut path [59]. In Fig. 5c, there are two modifications:

-

Use a \(3\times 3\) average pooling to find the shortest path.

-

Replace element-wise addition with channel concatenation to easily increase channel dimension with a minimum extra computation cost.

All components of the ShuffleNet unit can be calculated effectively thanks to pointwise group convolution with channel shuffle. The ShuffleNet model must be fine-tuned to classify the brain tumor classes by replacing the last three layers of the model. The last three layers of the model, i.e., the ”fully connected layer,” the ”softmax layer,” and the ”classification output layer” were replaced by a new ”fully connected layer,” ”softmax layer,” and ”classification output layer” to classify new images with types of tumor (meningioma, glioma, and pituitary).

3.5 NASNet-Mobile

The Google Brain team developed the Neural Architecture Search Network (NASNet) [20], which is shown in Figure. 6a. NASNet, which framed the problem of determining the best CNN architecture as a reinforcement learning problem with the ability to recall information in order to improve learning accuracy. Figure. 6b shows that the smallest unit in NASNet architecture is the block, and the combination of blocks is called a cell. It has two types of convolutional cells that are repeated several times [60, 61]. These are normal and reduction cells; both of these yield a feature map as shown in Fig. 7:

-

A normal cell, which determined the size of the feature map.

-

A reduction cell, which sorted the reduction feature map’s dimension.

There are two types of NASNet architecture: NASNet-Large and NASNet-Mobile. NASNet-Mobile has 53,26,716 parameters, but NASNet-Large has 8,894,918. Therefore, NASNet-Mobile is more reliable. The NASNet-Mobile model must be fine-tuned to classify the brain tumor classes by replacing the last three layers of the model. The model’s final three layers, ”fully connected layer,” ”softmax layer,” and ”classification output layer” were replaced by a new ”fully connected layer,” ”softmax layer,” and ”classification output layer” to classify new images with tumor types (meningioma, glioma, and pituitary).

3.6 Majority voting technique

The suggested technique employs a hybrid technique that combines five prediction outputs from fundamental models to offer a robust predictive model for classifying brain tumors into meningioma, glioma, and pituitary tumors. The five deep learning models used to categorize brain tumors are GoogleNet, AlexNet, SqueezeNet, ShuffleNet, and NASNet-Mobile. Although each of the aforementioned models performs well in classifying brain tumors individually, they are still far from an optimum classification system. These models’ capabilities can be combined to approximate the performance of the suggested technique and develop an effective brain tumor classification technique. So, when the above five models are combined, all three or four learning models may cover the mistake of the fifth learning model, reducing the total error of the system in the classification task as shown in Fig. 8a. In the following sections, we will discuss the results of each model and then demonstrate that the proposed technique of a majority vote improved outcomes when compared to related work.

3.7 Fivefold cross-validation

For the experiments, the fivefold cross-validation methodology was used. Five distinct sets were generated at random from each dataset using this technique. There were various patient samples in each fold, or training and test set as represented in Fig. 8b. It can evaluate the performance of the model more accurately and provide a more reliable prediction of how the model will perform on untested data.

4 Results and evaluation

4.1 Dataset and pre-processing

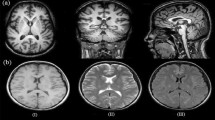

Public brain tumor data was used from a Figshare source, which consists of 3,064 T1-CE brain MRI slices acquired from 233 patients and presented by Cheng et al. in 2017. These images were obtained over 5 years from Nanfang Hospital, Guangzhou, China, and Tianjian Medical University General Hospital, China, in 2015 and updated in 2017. It has three types of brain tumors: 1426 (glioma), 708 (meningioma), and 930 (pituitary tumor), each with three views: axial, sagittal, and coronal. Images in the dataset are in 2D volumes with a resolution of 512\(\times \)512 and pixel sizes of \(0.49 \times 0.49 \, \text {mm}^2\) in.mat format. Furthermore, three experienced radiologists bordered the tumor region in the MRI manually [26]. The data was randomly split into 70% for training and 30% for testing. Figure 9 shows three different types of brain tumors, as follows: meningioma, glioma, and pituitary, which have different acquisition views (axial, coronal, and sagittal) and are shown from left to right, respectively. Table 1 provides supplementary details on the description of the T1W-CE MRI dataset.

Pre-processing data aids in optimizing input data for the next training step. We convert data from.mat files into.png format, then duplicate them to provide m\(\times \)n\(\times \)3 images. Data augmentation techniques can improve model training capabilities [37, 38]. The five models make use of two augmentation techniques: translation on both the x and y axes and reflection on the x axis. These techniques enable CNN to look everywhere in the image to capture it. Then, the MR images were resized to fit the input image size for each CNN model: (224\(\times \)224\(\times \)3) for GoogleNet, ShuffleNet, and NASNet-Mobile, and (227\(\times \)227\(\times \)3) for AlexNet and SqueezeNet.

4.2 Evaluation metrics

The suggested model’s performance was evaluated based on its overall accuracy, specificity, sensitivity, precision, f1-score, confusion matrix, Matthews correlation coefficient (MCC), kappa, and classification success index (CSI). It is easy to obvious the harmonic mean of recall and precision values through the f1-score value. Considering the extreme cases is the reason why a harmonic mean is more preferable than a simple mean. In a case of simple average calculation, a model with a recall value of 1 and a precision value of 0 might have 0.5 f1-score, and this might cause misleading [62,63,64,65]. The mathematical representations are defined in Eq. 2:Eq. 11 as follows:

where Po = the observed agreement, and Pe = the expected agreement which were calculated in Eqs. 9, and 10

The classification success index (CSI) is an evaluation tool that measures a classification model’s efficiency by calculating the percentage of the samples that were correctly classified relative to all samples.

4.3 Hyper-parameters

The goal of hyperparameter optimization is to improve the performance of a particular deep learning model by selecting the most appropriate hyperparameter. Table 2 demonstrates that all models, stochastic gradient descent (SGD), 50 epochs, false verbose, every-epoch shuffle, and a minibatch size of 10 images were employed that all utilized models, stochastic gradient descent (SGD), 50 epochs, verbose of false, shuffle of every-epoch, and a minibatch size of 10 images were used. But GoogleNet and AlexNet models used a learning rate of \(10^{-4}\), while SqueezeNet, ShuffleNet, and NasNet-Mobile used a learning rate of \(2 \times 10^{-4}\), which depended on a trial-and-error method. All experimentations were applied using laptop computer with MATLAB© 2023a. Dell G5-5587 Core i7-8750 H 16 g 1T hard desk drive (HDD) 256 G solid state drive (SSD) GTX1050Ti 4 G Windows10.

4.4 Experimental results and discussion

This section discusses the performance of different five pre-trained DL models and the suggested hybrid technique for brain tumor classification using the T1W-CE MRI dataset into meningiomas, gliomas, and pituitary tumors. At each training phase, 70% of the dataset is used for model training and validation. The performance of the suggested model was assessed using the remaining 30% of the dataset. Figure 10 represents the accuracy and loss of the training and test phases for the ShuffleNet and SqueezeNet models over fifty epochs. A confusion matrix is constructed based on the model’s positive and false predictions to evaluate the effectiveness of the proposed system.

Figure 11 outlines the confusion matrices acquired during experiments, where class ”1” indicates ”meningioma,” ”2” indicates ”glioma,” and ”3” indicates ”pituitary.” It was noticed that the pituitary class has the highest classification proportion. Table 3 presents a comparison between the five fine-tuned pre-trained models that were selected based on their effectiveness in classification tasks to help select an appropriate model for image classification. To achieve the goal, the performance of five pre-trained networks, including GoogleNet, AlexNet, SqueezeNet, ShuffleNet, and NasNet-Mobile, was also examined based on the testing results, which achieved an accuracy of 96.08%, 95.16%, 96.67%, 97.17%, and 97.5%, respectively. NasNet-Mobile has achieved the greatest accuracy of 97.50%, as well as outperforming various assessment measures such as sensitivity (recall), specificity, precision, f1-score, MCC, K., and CSI of 97.4%, 98.52%, 97.04%, 97.47%, 97.03%, and 97.61%, respectively. However, GoogleNet achieved the lowest accuracy of 95.16%, in addition to other evaluation metrics such as sensitivity (recall), specificity, precision, f1-score, MCC, K., and CSI of 95.21%, 97.58%, 95.15%, 95.16%, 95.13%,95.15%, and 95.18%, respectively.

The majority voting technique was applied based on three models (ShuffleNet, SqueezeNet, and NASNet-Mobile), which have the highest accuracy among the five utilized models, and achieved an accuracy of 98.5%. But to get more accurate image classification, the majority voting technique was applied using five fine-tuned pre-trained models, including GoogleNet, AlexNet, SqueezeNet, ShuffleNet, and NasNet-Mobile, achieved an accuracy of 99.31% and outperformed against other utilized fine-tuned models and majority voting based on three models. Thus, it has been shown to be the most effective technique for brain tumor classification.

We compared the results obtained from the hybrid technique with the latest results in the literature, which considered evaluations on a 70–30% training–testing data split against [46,47,48]. Table 4represents a comparison of our proposed technique with that of Deepak et al. [50], who applied the majority technique and divided the same dataset into 60:20:20 for training, validation, and testing. The comparative results indicate a better efficiency of the majority voting technique over the other techniques. It is also important to mention that the most unique point of our proposed hybrid technique is that it is based on more than one model, so it is less likely to make errors than what could happen to a single model. The proposed method provides a 0.51% performance boost in terms of classification accuracy. Furthermore, it can also provide an average improvement of 1.24%, 1.1%, and 0.2% in precision, recall, and F1-score, respectively. Therefore, it is possible to deduce that the proposed hybrid technique is more robust for brain tumor classification. So that it can be used to develop a classification of brain tumors using MR images. Moreover, it will aid radiologists and surgeons in treating the deadly tumor, which causes so many deaths.

Using fivefold cross-validation, the five different pre-trained models from GoogleNet, ShuffleNet, SqueezeNet, AlexNet, and NASNet-Mobile were implemented and achieved accuracy of 96.31%, 97.55%, 97.9%, 96.7%, and 98.3%, respectively. Table 5 illustrates the results accomplished from the five fine-tuned with fivefold cross-validation method. NASNet-Mobile outperformed other utilized models by achieving an accuracy of 98.3%. Comparing with Cheng et al. [26] and Deepak et al. [38]. NASNet-Mobile has performed very well in classifying brain tumors and has achieved good results, where GoogleNet achieved less accuracy compared to other proposed fine-tuned models.

5 Conclusion and future work

This research provides an efficient hybrid brain tumor classification technique using minimal pre-processing. The proposed technique applied the concept of majority voting to the prediction outputs of five different CNN models to get an accurate classification of brain tumors based on the T1W-CE MRI dataset. The system demonstrated the highest classification accuracy when compared with related work on similar datasets. Several metrics have been used to assess the system’s performance in order to determine its robustness. The proposed hybrid classification technique for classifying brain tumors achieves an accuracy of 99.31%. Our robust automated classification technique will vastly reduce the amount of effort and time required to classify brain tumors. This hybrid technique outflanks the existing models; therefore, radiologists can utilize this system as a second opinion, as it reduces computation time while increasing accuracy and reducing the rate of misclassification. Even with the improvements described in this research, there are significant limitations, such as the need for much more patient data, especially for the meningioma class, which has the lowest number of images among the three studied categories. Moreover, the efficiency of the proposed technique will be investigated for various types of medical image analysis, such as lung cancer, multi-skin lesions, and breast cancer classification. Finally, by including normal brain CE MRI images in the dataset, further distinction for tumor classification may be provided.

Availability of data and materials

The study’s data is available publicly and can be easily accessed from the internet.

References

Hossain A, Islam MT, Abdul Rahim SK, Rahman MA, Rahman T, Arshad H, Khandakar A, Ayari MA, Chowdhury ME (2023) A lightweight deep learning based microwave brain image network model for brain tumor classification using reconstructed microwave brain (rmb) images. Biosensors 13(2):238

T. A. C. S. medical and editorial content team, “Key statistics for brain and spinal cord tumors.” https://www.cancer.org/cancer/brain-spinal-cord-tumors-adults/about/key-statistics.html. Accessed: 2023-03-20

Pradhan A, Mishra D, Das K, Panda G, Kumar S, Zymbler M (2021) On the classification of mr images using elm-ssa coated hybrid model. Mathematics 9(17):2095

Rasool M, Ismail NA, Boulila W, Ammar A, Samma H, Yafooz WM, Emara A-HM (2022) A hybrid deep learning model for brain tumour classification. Entropy 24(6):799

Abd El-Wahab BS, Nasr ME, Khamis S, Ashour AS (2023) Btc-fcnn: fast convolution neural network for multi-class brain tumor classification. Health Inf Sci Syst 11(1):3

Louis DN, Perry A, Reifenberger G, Von Deimling A, Figarella-Branger D, Cavenee WK, Ohgaki H, Wiestler OD, Kleihues P, Ellison DW (2016) The 2016 world health organization classification of tumors of the central nervous system: a summary. Acta Neuropathol 131:803–820

Wang S, Feng Y, Chen L, Yu J, Van Ongeval C, Bormans G, Li Y, Ni Y (2022) Towards updated understanding of brain metastasis. Am J Cancer Res 12(9):4290–4311

Dutta P, Upadhyay P, De M, Khalkar R (2020) “Medical image analysis using deep convolutional neural networks: Cnn architectures and transfer learning,” in 2020 International Conference on Inventive Computation Technologies (ICICT), pp. 175–180, IEEE

Chahal PK, Pandey S, Goel S (2020) A survey on brain tumor detection techniques for mr images. Multimedia Tools Appl 79:21771–21814

Hamed G, Marey M, Amin S, Tolba M (2021) Comparative study and analysis of recent computer aided diagnosis systems for masses detection in mammograms. Int J Intell Comput Inf Sci 21(1):33–48

McBee MP, Awan OA, Colucci AT, Ghobadi CW, Kadom N, Kansagra AP, Tridandapani S, Auffermann WF (2018) Deep learning in radiology. Acad Radiol 25(11):1472–1480

Mansour RF, Escorcia-Gutierrez J, Gamarra M, Díaz VG, Gupta D, Kumar S (2021) “Artificial intelligence with big data analytics-based brain intracranial hemorrhage e-diagnosis using ct images,” Neural Computing and Applications, pp. 1–13,

Özcan H, Emiroğlu BG, Sabuncuoğlu H, Özdoğan S, Soyer A, Saygı T(2021) “A comparative study for glioma classification using deep convolutional neural networks,” Molecular Biology and Evolution

Arabahmadi M, Farahbakhsh R, Rezazadeh J (2022) Deep learning for smart healthcare-a survey on brain tumor detection from medical imaging. Sensors 22(5):1960

Lundervold AS, Lundervold A (2019) An overview of deep learning in medical imaging focusing on mri. Zeitschrift für Medizinische Physik 29(2):102–127

Szegedy C, Liu W, Jia Y, Sermanet P, Reed S, Anguelov D, Erhan D, Vanhoucke V, Rabinovich A (2015) Going deeper with convolutions. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 1–9

Krizhevsky A, Sutskever I, Hinton GE (2017) Imagenet classification with deep convolutional neural networks. Commun ACM 60(6):84–90

Iandola FN, Han S, Moskewicz MW, Ashraf K, Dally WJ, Keutzer K (2016) “Squeezenet: Alexnet-level accuracy with 50x fewer parameters and \(< 0.5\) mb model size,” arXiv preprint arXiv:1602.07360

Zhang X, Zhou X, Lin M, Sun J (2018) Shufflenet: An extremely efficient convolutional neural network for mobile devices. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 6848–6856

Zoph B, Vasudevan V, Shlens J, Le QV (2018) Learning transferable architectures for scalable image recognition. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 8697–8710

Arora G, Dubey AK, Jaffery ZA, Rocha A (2022) A comparative study of fourteen deep learning networks for multi skin lesion classification (mslc) on unbalanced data. Neural Computing and Applications 1–27

Morovati B, Lashgari R, Hajihasani M, Shabani H (2023) “Reduced deep convolutional activation features (r-decaf) in histopathology images to improve the classification performance for breast cancer diagnosis,” arXiv preprint arXiv:2301.01931

Pham TD (2021) Classification of covid-19 chest x-rays with deep learning: new models or fine tuning? Health Inf Sci Syst 9:1–11

Yasser I, Khalifa F, Abdeltawab H, Ghazal M, Sandhu HS, El-Baz A (2022) Automated diagnosis of optical coherence tomography angiography (octa) based on machine learning techniques. Sensors 22(6):2342

Tesfai H, Saleh H, Al-Qutayri M, Mohammad MB, Tekeste T, Khandoker A, Mohammad B (2022) Lightweight shufflenet based cnn for arrhythmia classification. IEEE Access 10:111842–111854

Cheng J, Huang W, Cao S, Yang R, Yang W, Yun Z, Wang Z, Feng Q (2015) Enhanced performance of brain tumor classification via tumor region augmentation and partition. PloS one 10(10):e0140381

Ding Y, Zhang C, Lan T, Qin Z, Zhang X, Wang W (2015) “Classification of alzheimer’s disease based on the combination of morphometric feature and texture feature,” in 2015 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), pp. 409–412, IEEE

Ahmad I, Ullah I, Khan WU, Ur Rehman A, Adrees MS, Saleem MQ, Cheikhrouhou O, Hamam H, Shafiq M (2021) Efficient algorithms for e-healthcare to solve multiobject fuse detection problem. J Healthcare Eng 2021:1–16

Ahmad I, Liu Y, Javeed D, Ahmad S (2020) “A decision-making technique for solving order allocation problem using a genetic algorithm,” in IOP Conference Series: Materials Science and Engineering, vol. 853, p. 012054, IOP Publishing

Binaghi E, Omodei M, Pedoia V, Balbi S, Lattanzi D, Monti E (2014) Automatic segmentation of mr brain tumor images using support vector machine in combination with graph cut. IJCCI (NCTA) 152–157

Zikic D, Glocker B, Konukoglu E, Criminisi A, Demiralp C, Shotton J, Thomas OM, Das T, Jena R, Price SJ (2012) Decision forests for tissue-specific segmentation of high-grade gliomas in multi-channel mr. MICCAI 3:369–376

Ait Amou M, Xia K, Kamhi S, Mouhafid M (2022) “A novel mri diagnosis method for brain tumor classification based on cnn and bayesian optimization,” in Healthcare, vol. 10, p. 494, MDPI

Biswas A, Islam MS (2023) A hybrid deep cnn-svm approach for brain tumor classification. J Inf Syst Eng Bus Intell 9(1)

Poonguzhali R, Ahmad S, Sivasankar PT, Anantha Babu S, Joshi P, Joshi GP, Kim SW (2023) Automated brain tumor diagnosis using deep residual u-net segmentation model. Comput Mater Continua 74(1):2179–2194

Shaik NS, Cherukuri TK (2022) Multi-level attention network: application to brain tumor classification. Signal Imag Video Process 16(3):817–824

Guan Y, Aamir M, Rahman Z, Ali A, Abro WA, Dayo ZA, Bhutta MS, Hu Z (2021) “A framework for efficient brain tumor classification using mri images,”

Badža MM, Barjaktarović MČ (2020) Classification of brain tumors from mri images using a convolutional neural network. Appl Sci 10(6):1999

Deepak S, Ameer P (2019) Brain tumor classification using deep cnn features via transfer learning. Comput Biol Med 111:103345

Díaz-Pernas FJ, Martínez-Zarzuela M, Antón-Rodríguez M, González-Ortega D (2021) “A deep learning approach for brain tumor classification and segmentation using a multiscale convolutional neural network,” in Healthcare, vol. 9, p. 153, MDPI

Alhassan AM, Zainon WMNW (2021) Brain tumor classification in magnetic resonance image using hard swish-based relu activation function-convolutional neural network. Neural Comput Appl 33:9075–9087

Ghassemi N, Shoeibi A, Rouhani M (2020) Deep neural network with generative adversarial networks pre-training for brain tumor classification based on mr images. Biomed Signal Process Control 57:101678

Noreen N, Palaniappan S, Qayyum A, Ahmad I, Alassafi MO (2021) Brain tumor classification based on fine-tuned models and the ensemble method. Comput Mater Continua 67(3):3967–3982

Gumaei A, Hassan MM, Hassan MR, Alelaiwi A, Fortino G (2019) A hybrid feature extraction method with regularized extreme learning machine for brain tumor classification. IEEE Access 7:36266–36273

Haq EU, Jianjun H, Li K, Haq HU, Zhang T (2021) An mri-based deep learning approach for efficient classification of brain tumors. J Amb Intell Human Comput 1–22

Ghosal P, Nandanwar L, Kanchan S, Bhadra A, Chakraborty J, Nandi D (2019) “Brain tumor classification using resnet-101 based squeeze and excitation deep neural network,” in 2019 Second International Conference on Advanced Computational and Communication Paradigms (ICACCP), pp. 1–6, IEEE

Nawaz M, Nazir T, Masood M, Mehmood A, Mahum R, Khan MA, Kadry S, Thinnukool O (2021) Analysis of brain mri images using improved cornernet approach. Diagnostics 11(10):1856

Verma A, Singh VP (2022) “Hsadml: hyper-sphere angular deep metric based learning for brain tumor classification,” in Proceedings of the Satellite Workshops of ICVGIP 2021, pp. 105–120, Springer

Cinar N, Kaya M, Kaya B (2022) “A novel convolutional neural network-based approach for brain tumor classification using magnetic resonance images,” Int J Imag Syst Technol

Deepak S, Ameer P (2021) Automated categorization of brain tumor from mri using cnn features and svm. J Amb Intell Human Comput 12:8357–8369

Deepak S, Ameer P (2023) Brain tumor categorization from imbalanced mri dataset using weighted loss and deep feature fusion. Neurocomputing 520:94–102

Kumar KK, Dinesh P, Rayavel P, Vijayaraja L, Dhanasekar R, Kesavan R, Raju K, Khan AA, Wechtaisong C, Haq MA et al (2023) Brain tumor identification using data augmentation and transfer learning approach. Comput Syst Sci Eng 46(2)

Medhat S, Abdel-Galil H, Aboutabl AE, Saleh H (2022) Skin cancer diagnosis using convolutional neural networks for smartphone images: a comparative study. J Radiat Res Appl Sci 15(1):262–267

Mohammed AHM, Çevik M (2022) “Googlenet cnn classifications for diabetics retinopathy,” in 2022 International Congress on Human-Computer Interaction, Optimization and Robotic Applications (HORA), pp. 1–4, IEEE

Kc K, Yin Z, Wu M, Wu Z (2021) Evaluation of deep learning-based approaches for covid-19 classification based on chest x-ray images. Signal Imag Video Process 15:959–966

Pawara P, Okafor E, Surinta O, Schomaker L, Wiering MA (2017) Comparing local descriptors and bags of visual words to deep convolutional neural networks for plant recognition. ICPRAM 479(2017):486

Grm K, Štruc V, Artiges A, Caron M, Ekenel HK (2018) Strengths and weaknesses of deep learning models for face recognition against image degradations. Iet Biometrics 7(1):81–89

Rasool M, Ismail NA, Al-Dhaqm A, Yafooz WM, Alsaeedi A (2022) A novel approach for classifying brain tumours combining a squeezenet model with svm and fine-tuning. Electronics 12(1):149

Ucar F, Korkmaz D (2020) Covidiagnosis-net: Deep bayes-squeezenet based diagnosis of the coronavirus disease 2019 (covid-19) from x-ray images. Med Hypo 140:109761

Ullah N, Khan JA, El-Sappagh S, El-Rashidy N, Khan MS (2023) A holistic approach to identify and classify covid-19 from chest radiographs, ecg, and ct-scan images using shufflenet convolutional neural network. Diagnostics 13(1):162

Radhika K, Devika K, Aswathi T, Sreevidya P, Sowmya V, Soman K (2020) Performance analysis of nasnet on unconstrained ear recognition. Nature inspired computing for data science 57–82

Addagarla SK, Chakravarthi GK, Anitha P (2020) Real time multi-scale facial mask detection and classification using deep transfer learning techniques. Int J 9(4):4402–4408

Özkaraca O, Bağrıaçık Oİ, Gürüler H, Khan F, Hussain J, Khan J, Ue Laila (2023) Multiple brain tumor classification with dense cnn architecture using brain mri images. Life 13(2):349

Asad R, Rehman SU, Imran A, Li J, Almuhaimeed A, Alzahrani A (2023) Computer-aided early melanoma brain-tumor detection using deep-learning approach. Biomedicines 11(1):184

Altheneyan A, Alhadlaq A (2023) Big data ml-based fake news detection using distributed learning. IEEE Access 11:29447–29463

Alkaissy M, Arashpour M, Golafshani EM, Hosseini MR, Khanmohammadi S, Bai Y, Feng H (2023) Enhancing construction safety: machine learning-based classification of injury types. Safety Sci 162:106102

Funding

Open access funding provided by The Science, Technology & Innovation Funding Authority (STDF) in cooperation with The Egyptian Knowledge Bank (EKB).

Author information

Authors and Affiliations

Contributions

SEN did the whole research and wrote the manuscript under the supervision of MAM, the major supervisor, and IY and HMA, the co-supervisors. All authors read and approved the final manuscript.

Corresponding authors

Ethics declarations

Conflict of interest

No conflicts of interest are disclosed by the authors.

Ethical approval

The research is compatible with ethical standards.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Nassar, S.E., Yasser, I., Amer, H.M. et al. A robust MRI-based brain tumor classification via a hybrid deep learning technique. J Supercomput 80, 2403–2427 (2024). https://doi.org/10.1007/s11227-023-05549-w

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11227-023-05549-w