Abstract

The creativity of an excellent design work generally comes from the inspiration and innovation of its main visual features. The similarity among primary visual elements stands as a paramount indicator when it comes to identifying plagiarism in design concepts. This factor carries immense importance, especially in safeguarding cultural heritage and upholding copyright protection. This paper aims to develop an efficient similarity evaluation scheme for graphic design. A novel deep visual saliency feature extraction generative adversarial network is proposed to deal with the problem of lack of training examples. It consists of two networks: One predicts a visual saliency feature map from an input image and the other takes the output of the first to distinguish whether a visual saliency feature map is a predicted one or ground truth. Unlike traditional saliency generative adversarial networks, a residual refinement module is connected after the encoding and decoding network. Design importance maps generated by professional designers are used to guide the network training. A saliency-based segmentation method is developed to locate the optimal layout regions and notice insignificant regions. Priorities are then assigned to different visual elements. Experimental results show the proposed model obtains state-of-the-art performance among various similarity measurement methods.

1 Introduction

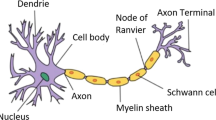

Human beings perceive the external world mainly through information obtained via the auditory, visual, olfactory, gustatory, and tactile sensory pathways. Among all the information processing subsystems of the brain, the visual processing system occupies the most important position because more than 70% of external information comes from visual perception. Images offer intuitive and explicit content, easy acquisition, convenient dissemination, and rich information, which makes them the most essential carrier of visual information in daily human activities. With the rapid development of society, breakthroughs in science and technology, and the increasing popularity of the Internet, the means of obtaining images have become increasingly convenient and flexible, and the amount of image data has grown rapidly. Thanks to the visual attention mechanism for complex scenes, we can process a vast amount of information in real time. People can quickly locate the salient or interesting content in a visual scene and process it further while ignoring inconspicuous or uninteresting content.

Cognitive psychologists and neurophysiologists explore the psychological and biophysical essence of the attention principle from human psychological activities and neuroanatomy. Since the 1990s, more and more computer vision studies have focused on the visual attention mechanism area. In the cognitive theory of visual attention [1], salience is usually defined as:

- Certain parts of the visual scene are intuitively salient relative to their surrounding parts, which may be certain objects or certain regions.

Visual saliency detection (VSD) [2] finds salient regions in the visual scene and estimates their saliency. By leveraging visual saliency to allocate computing resources preferentially, the interference caused by redundant information in image analysis and processing can be eliminated, which improves both the speed and the accuracy of computer vision algorithms. VSD has a wide range of applications in many fields, such as image or video compression [3], content-aware image scaling [4], image rendering [5], image retrieval [6], image segmentation [7], target detection and recognition [8], behavior recognition [9], and target tracking [10].

However, the wide sharing and rapid dissemination of design artworks have brought about serious problems of design homogeneity. Since the main visual features in image design reflect the designer’s ideas and creativity well [11], the similarity evaluation of the primary visual features is not only conducive to content-based image retrieval (CBIR) but also helps to detect plagiarism of design concepts, which is of great significance for cultural protection and copyright protection. The widespread and unprecedented distribution of digital artworks (e.g., posters, illustrations, advertisements) puts them at a higher risk of plagiarism [12]. The plagiarism of designs is often based on other people’s ideas (such as layout, form, and creative concept) and is often carried out by hand drawing. This makes it difficult to describe the similarity between plagiarized designs and to generate quantitative indicators [13]. Figure 1 presents two graphic posters with a similar design concept and layout structure. Although they are entirely different at the pixel level, they would still be suspected of plagiarism.

Two graphic posters with similar design concepts and layout structures. Available: https://ent.sina.cn/tv/jp_kr/2022-09-05/detail-imizmscv9236093.d.html

This paper proposes a novel visual saliency features (VSF) extraction network named VSFGAN. It consists of two networks: One predicts VSF maps from an input image, and the other takes the output of the first to discriminate whether a VSF map is a predicted one or ground truth. Different from traditional saliency GANs, we propose a specific loss function for the VSF of design. The VSF map is segmented based on saliency features guided by the aesthetic rule. We apply the diffusion equation to compute the probability maps for non-dominant visual regions. Finally, a multi-weight similarity measure is developed based on SSIM [14]. The highlights of this paper can be summarized as follows:

- A similarity evaluation scheme is proposed for graphic design, which can be used for plagiarism detection.

- A novel VSF extraction model based on GAN is developed. A residual refinement module is connected after the encoding and decoding network. Design importance maps generated by professional designers are used to guide the network training.

- According to the aesthetic rules, the minor visual element regions should be placed in the non-salient areas of the image, yet these regions still need to be considered in the similarity calculation of design works. We propose an algorithm to calculate the minor visual element probability map.

The rest of this paper is organized as follows. In Sect. 2, related works are presented and discussed. The proposed method is presented in Sect. 3. Experiments and results are shown in Sect. 4. Finally, the conclusion and future work are presented in Sect. 5.

2 Related works

2.1 Visual saliency detection

In 1998, a classic saliency calculation model based on the neurophysiological mechanism of visual attention and cognitive psychology was proposed by Itti and Koch [15], which laid the foundation for saliency research in computer vision. Since then, the field of visual saliency detection has begun to flourish, bringing computer vision closer to human vision.

From the perspective of the information processing mechanism, VSD methods can be roughly divided into two categories [16]: task-driven top–down (TD) models [17] and data-driven bottom–up (BU) models [18]. In TD models, saliency mapping is mainly guided by task-specific or prior knowledge learned from training scenarios [2]. In contrast, BU models are unconscious, guided by underlying visual features present in the visual field, such as color, orientation, texture, and intensity, without specific task guidance. The main difference between the two models is whether the feature integration computation considers indicators from volitional tasks or learned priors. TD methods generally need a large amount of data annotated with ground truth for training, or high-level information to guide the saliency detection under a specific task. Compared with BU methods, TD methods are therefore more limited in application.

Previous VSD methods mainly utilize the low-level feature (color, orientation, intensity, etc.) contrasts and calculate saliency through linear or nonlinear combinations. With the in-depth research on visual saliency detection, some new salient features have been used, such as uniqueness, distribution, focus, and objectness. At the same time, more and more frameworks are also introduced, such as saliency detection based on cellular automata.

Itti and Koch [15] attempted to model the bottom-up processing performed by early vision systems to detect salient regions and thus estimate visual fixation locations. The model detects salient regions using central–peripheral differences in color, brightness, and orientation. A saliency map is then computed by linearly combining the resulting feature maps. Three primary features were Gaussian filtered to obtain a multi-scale feature pyramid, and the central–peripheral operation is to calculate the difference between different scales in each feature dimension. The final saliency map obtained is a grayscale image, and points with high pixel values have high saliency. Harel et al. [19] proposed a graph-based visual saliency (GBVS) model to improve the model proposed in [15]. Similar to [15], GBVS simulates the visual principle in the feature extraction stage. But it introduces the Markov chain in generating the saliency map to improve the accuracy of saliency detection. The FES proposed by Tavakoli et al. [20] can be considered as a model for simulating visual processing because it also designs a central–peripheral mechanism. FES applies the Bayesian framework to multi-scale central–peripheral analysis, and the required distribution in the Bayesian formula is obtained through sparse sampling and kernel density estimation. Borji [21] combined low-level features such as orientation, color, intensity, and saliency maps of previous best bottom-up models with top-down cognitive visual features (e.g., faces, humans, cars, etc.), and the direct mapping is learned from those features to eye fixations using regression, SVM, and AdaBoost classifiers.

Like many computer vision applications, recent studies have entered the era of using deep learning for feature extraction, and these solutions have greatly improved the performance of VSD. The remainder of this section mainly reviews VSD schemes based on deep learning techniques.

Liu et al. [22] assumed that the saliency of image elements could be derived from the relevance of the saliency seeds (i.e., the most representative salient elements). In this view, they developed a regular linear elliptic system with a Dirichlet boundary to match the diffusion from seeds to other relevant points. Li and Yu [23] found that the salience model can be derived from multi-scale features obtained using deep convolutional neural networks. They used fully connected layers on the top of a CNN, responsible for extracting the features at different levels. Although CNNs have substantially improved human attention prediction, Wang and Shen [24] have improved the CNN-based attention models by efficiently leveraging multi-scale features. Hierarchical saliency information is captured by the visual attention network from deep coarse layers with global saliency information to shallow fine layers with local saliency responses. In this model, supervision is directly fed into multi-level layers. Cornia et al. [25] used a convolutional long short-term memory (LSTM) network [26] to iteratively obtain the most salient area from input to refine the predicted saliency map. Moreover, a set of prior maps generated with Gaussian functions are learned to tackle the center bias typical of human eye fixations.

With the rapid development of GAN models [27,28,29], more and more GAN-based VSD methods have been proposed. Pan et al. [30] proposed a deep CNN for visual saliency prediction named SalGAN. In the generator, weights are learned by back-propagation computed from a binary cross-entropy loss over downsampled versions of the saliency maps. To resolve the distinction between the saliency map produced by the generation stage and the ground-truth map, the generated predictions are processed by a trained discriminator network.

Most previous studies were aimed at improving the detection accuracy of the region. To obtain clear salient object detection boundaries, Qin et al. [31] proposed a hybrid training loss to better preserve the original image’s structure. Their architecture consists of two main components: a densely supervised encoder-decoder network and a residual refinement module. The encoder-decoder network is designed to predict saliency in images, while the residual refinement module focuses on refining the saliency maps generated by the encoder-decoder network. The primary objective is to assess the saliency model's ability to accurately predict where humans typically look in images. As VSD research has developed, many image databases and evaluation metrics have been proposed to evaluate the performance of saliency detection methods and are now widely used by researchers, which in turn promotes the development of the field. Bylinskii et al. [32] analyzed eight evaluation metrics and their properties. With the help of systematic experiments and visualization of metric computations, they added interpretability and transparency to the evaluation of saliency models. They recommended metric selection under certain assumptions and specific applications based on the metric properties and behavior differences.

2.2 Similarity evaluation of graphic designs

The evaluation of the similarity of graphic designs has always been an unavoidable problem in the visual design domain, especially for copyright protection. Most plagiarized works are based on other people's creative ideas, show a certain but incomplete visual similarity (such as layout, form, and color matching), and are often done by hand. This makes it difficult to describe the similarity between plagiarized designs and to generate quantitative metrics. Since the high-level similarity of graphic designs generally does not result from directly copying the original image, most traditional image forensics methods also struggle to detect such plagiarism. As shown in Fig. 2, the left and right posters are similar in terms of composition, spatial organization, and object properties in each space, but they are not similar in terms of pixels and image features.

Posters suspected of plagiarism. Available: https://www.shrx.org/plus/view-60913-1.html

Most plagiarism identification of graphic designs is based on human eye observation and comparison, leading to high subjectivity in similarity judgments. With the continuous improvement of computer vision technology, researchers try to use computers to calculate the similarity of graphic designs. Garrett and Robinson developed iTrace [33] to explore the possibility of detecting plagiarism in visual works based on image similarity. Bozkr and Sezer [34] tried to evaluate the layout similarity of web pages. Spatial pyramid matching was used to classify web page elements. Finally, the histogram intersection mode was used to capture and measure the visual similarity of partial and entire page layouts. Morphological analysis [35] is a method based on morphological theory to analyze target objects. Its principle is to decompose a problem that needs to be studied into small individual elements and conduct separate processing. These small independent elements are arranged and combined in a network diagram to produce a systematic solution to a problem or a method. Artistic style can be used for image classification [36]. The curvature of the line is used to describe the fluidity of the lines in the image, and the color contrast is used to describe the characteristics of the color style of the image. The similarity rules of artistic image style were generated to classify images based on artistic style.

Lang et al. [37] studied the plagiarized clothing retrieval problem. They proposed a novel network called Plagiarized-Search-Net (PS-Net) based on region representations, and then, the utilized landmarks were used to guide the learning of region representations. Finally, the suspected fashion items were compared region by region. In addition, they proposed a plagiarized fashion database for plagiarized clothes retrieval, which provides a meaningful addition to the existing field of fashion retrieval. Cui et al. [38] elaborate on eight elements that form unique posters and six judgment criteria for plagiarism using an exploratory study with designers. They proposed models leveraging the combination of primary elements and criteria of plagiarism to find suspect instances in a retrieval process. The models are trained under the context of modern artwork and evaluated on the poster plagiarism dataset. Finally, they showed through experiments that the proposed method outperforms the baseline with excellent Top-K accuracy (33%) and retrieval performance (42%).

Although many scholars have in recent years begun to study higher-dimensional image similarity calculations (i.e., cognitive dimensions), similarity studies on design and artwork remain rare because of the highly abstract and aesthetic features of these works. In this paper, we propose to analyze the similarity of graphic designs in the cognitive dimension through visual saliency features.

3 Method

Visual saliency detection refers to simulating the human visual attention mechanism through computer vision algorithms, calculating the importance of information in images, and extracting salient regions (regions of interest) [39]. In this paper, we aim to simulate the visual attention area of people when viewing art and design works through deep learning techniques. Our VSFGAN consists of two networks: One predicts VSF maps from an input image and the other one takes the output of the first one to discriminate whether a VSF map is a predicted one or ground truth. Different from traditional saliency GANs, we proposed a specific loss function for the VSF of design. Visual elements in the image will be assigned different priorities, and secondary visual elements will be greatly suppressed.

The proposed scheme is shown in Fig. 3.

VSD is the calculation of the visual importance of different elements in natural images or graphic designs. Most previous traditional methods are aimed at visual saliency detection of natural images rather than graphic design. Although some images appear to consist mainly of natural images, they are still valued as elements in graphic design. Therefore, every background image element needs to be considered as a graphic design element at the VSD stage.

3.1 Design importance map from human vision (DIM-HV)

When creating a design, controlling the perceived importance of various elements is crucial, and designers often arrange elements to convey their importance. Color, size, and position all affect the position of an element in a design, but it is not easy to quantify this position through mathematical formulas. There is a clear relative difference in the importance of elements in a design: A large graphic in the center will be much more important than a small text in the corner [40]. However, how important is typography to the similarity judgment of the same type of graphic designs? How does the significance of other elements depend on it? The importance of the image is correlated with its salience. To define plagiarism in the context of graphic design, professionals should consider the following instructions:

- Composition/layout: A design that closely replicates the arrangement or structure of another design without significant modifications.

- Concept/idea: Directly copying the core concept or idea behind a design from someone else's work.

- Visual elements: Illegitimately using or imitating unique visual elements such as illustrations, icons, patterns, or typography.

- Branding/identity: Creating designs that bear striking resemblance to existing brands or their visual identities.

- Copyrighted material: Unauthorized use of copyrighted images, illustrations, fonts, or other protected materials.

- Design style/execution: Excessively imitating a specific designer's style, resulting in designs that lack originality.

Inspired by Judd [41], DIM-HV is generated by the following data-driven process (Fig. 4):

1. We downloaded 500 graphic designs from datasets in [40] and departmental repository.

2. Eight professional designers were asked to mark the essential regions of graphic designs that can be identified as plagiarism.

3. The responses over all users were averaged, and the DIM-HV of each design work was obtained by normalizing the responses. Let I be the image with pixel values represented by I(i, j), where 0 ≤ i < H and 0 ≤ j < W. The averaged image Iavg(i, j) is:

$$I_{avg} \left( {i,\;j} \right) = \frac{{\mathop \sum \nolimits_{v = 1}^{8} I_{v} \left( {i,\;j} \right)}}{8}$$(1)

where Iv is the responsive image marked up by the vth professional designer.

4. The normalized image I'(i, j) is:

$$I^{\prime}\left( {i,\;j} \right) = \frac{{I_{avg} \left( {i,\;j} \right) - min\_val}}{max\_val - min\_val}$$(2)

where min_val = min(I(i, j)) and max_val = max(I(i, j)) for all i and j in the image.

5. The DIM-HV of each design work is used as the ground-truth mask for our collected experimental images.
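The averaging and normalization in Eqs. (1) and (2) can be sketched in a few lines of NumPy. This is only an illustrative sketch: the function name `dim_hv` and the array layout (one mask per designer, stacked along the first axis) are assumptions, and the minimum and maximum are taken over the averaged map so that the result spans [0, 1].

```python
import numpy as np

def dim_hv(annotations):
    """Average designer masks (Eq. 1) and min-max normalise the result (Eq. 2).

    annotations: array-like of shape (V, H, W), one marked-up mask per designer
    (hypothetical layout, chosen for this sketch).
    """
    stack = np.asarray(annotations, dtype=np.float64)
    avg = stack.mean(axis=0)              # Eq. (1): mean over the V designers
    lo, hi = avg.min(), avg.max()         # assumption: extrema of the averaged map
    if hi == lo:                          # flat map: avoid division by zero
        return np.zeros_like(avg)
    return (avg - lo) / (hi - lo)         # Eq. (2): rescale to [0, 1]
```

For example, a pixel marked by all eight designers maps to 1, a pixel marked by four of them maps to 0.5, and an unmarked pixel maps to 0, provided at least one pixel is unmarked by everyone.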

3.2 Visual saliency features GAN

Many VSD schemes need to train the network with a specifically designed loss function to achieve satisfactory performance. However, it is difficult to measure the saliency effect in a unified way because design ideas are elusive. Inspired by SalGAN [30], we introduce adversarial training instead of focusing on complex loss functions, expecting the generator to produce saliency maps close to the real ones through the adversarial process. GAN is an unsupervised learning method that learns through a game between two neural networks: a generative network and a discriminative network. The generative network takes random samples from the latent space as input, and its output must mimic the real samples in the training set as closely as possible. The input of the discriminative network is either a real sample or the output of the generative network, and its purpose is to distinguish the two as well as possible. The generative network, in turn, should deceive the discriminative network as much as possible. The two networks compete with each other and constantly adjust their parameters. The ultimate goal is to make the discriminative network unable to judge whether the output of the generative network is real. Since GAN does not require many training samples, it is suitable for scenarios that lack plagiarism samples of designs. To this end, we propose a novel visual saliency features extraction network. It consists of two networks: One predicts VSF maps from an input image, and the other takes the output of the first to discriminate whether a VSF map is a predicted one or ground truth. The overall model framework is based on the encoder–decoder architecture.

VSD requires the input and output images to be consistent in pixel and size. Therefore, we adopt the scheme of downsampling first and then upsampling to restore the output image to the same size as the input image. The encoder–decoder architecture is based on pre-trained VGG-16. To enable the proposed network to simultaneously capture the global high-level semantic information and the low-level detail information of graphic designs, a residual refinement module (RRM) [42] is connected after the encoding and decoding network. RRM is a residual block with spatial attention and is adopted to refine the features effectively. It mainly consists of an input, a downsampling, a bridge, an upsampling, and an output layer. The input stage has 64 3 × 3 convolution kernels, followed by batch normalization and a ReLU function. The RRM refines the predicted saliency map by learning the residual between the predicted saliency map and the real one. The architecture of the proposed VSFGAN is presented in Fig. 5.

Tables 1 and 2 list the implementation details of the proposed generator and discriminator, respectively.

Different from BASNet [31], which focuses on detecting and segmenting salient objects, the goal of the proposed VSD model is to evaluate the pixel-level visual saliency map. That is, the saliency value of each pixel lies in the real range [0, 1]. There are several differences between the loss of VSFGAN and that of previous GAN models.

1. The goal is to generate the actual significance value instead of getting a real image from random noise; in this case, the input to the generator is no longer random noise but a design image.

2. The generator not only generates a saliency map indistinguishable from the real one but also makes them correspond to the same input; therefore, the design image and the corresponding DSIM are used as the input of the discriminator.

3. When updating the parameters of the generating function, using the loss function of the combination of discriminator error and cross-entropy relative to ground truth can improve the stability and convergence speed of training.

We used a hybrid loss function for VSFGAN:

where LBCE is the content loss function. When looking at a design, a user may notice more than just a single pixel, so it makes more sense to treat each prediction as independent of the others. Thus, the binary cross-entropy (BCE) is calculated by averaging the individual BCEs over all pixels.

where Sj and \(\widehat{{S}_{j}}\) are the ground-truth normalized VSF and the predicted normalized VSF of the input, respectively.
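As an illustration of the pixel-averaged BCE described above, the following NumPy sketch uses the standard binary cross-entropy, which we assume is the intended form. The function name `bce_loss` and the clipping constant `eps` are illustrative choices, not part of the proposed model.

```python
import numpy as np

def bce_loss(s_true, s_pred, eps=1e-7):
    """Mean of per-pixel binary cross-entropies between two normalised VSF maps.

    s_true: ground-truth normalised VSF values in [0, 1].
    s_pred: predicted normalised VSF values in [0, 1].
    eps:    hypothetical clipping constant that keeps log() finite.
    """
    s_true = np.asarray(s_true, dtype=np.float64)
    s_pred = np.clip(np.asarray(s_pred, dtype=np.float64), eps, 1.0 - eps)
    per_pixel = -(s_true * np.log(s_pred)
                  + (1.0 - s_true) * np.log(1.0 - s_pred))
    return per_pixel.mean()               # average over all pixels
```

Each pixel contributes its own cross-entropy term, so several salient regions in one design can be penalized independently of each other.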

LSSIM(Θ) is the loss function of SSIM [14], and Θ represents the parameters of the visual saliency detection network. SSIM loss function can capture the structural information of each element in the image. It is a region-level measurement method. It will give higher weight to the element boundary when the model predicts the same salient value between pixels. This will help to obtain clear element boundaries in VSF maps. Suppose x = [xn|n = 1, 2, …, N] and y = [ym|m = 1,2,…, M] denote two images extracted from VSF maps Sj and \(\widehat{{S}_{j}}\), respectively. The SSIM loss function can be defined as:

where \(\mu_x\), \(\mu_y\) and \(\sigma_x^2\), \(\sigma_y^2\) represent the mean and variance of x and y, respectively, and \(\sigma_{xy}\) is their covariance. We set \(C_1 = 0.01^2\) and \(C_2 = 0.03^2\) based on experimental experience. Experiments have shown that the model performs best when the hyperparameter α in function (1) is set to 0.005.
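The SSIM loss on a pair of patches can be sketched as follows, assuming the commonly used form in which the loss is one minus the SSIM index computed from the means, variances, and covariance defined above. The function name `ssim_loss` is illustrative, and the defaults for C1 and C2 are the conventional values 0.01² and 0.03², taken here as an assumption about the intended constants.

```python
import numpy as np

def ssim_loss(x, y, c1=0.01 ** 2, c2=0.03 ** 2):
    """1 - SSIM for two patches x and y taken from the two VSF maps.

    Statistics are computed over the whole patch (no sliding window),
    which is a simplification made for this sketch.
    """
    x = np.asarray(x, dtype=np.float64).ravel()
    y = np.asarray(y, dtype=np.float64).ravel()
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()
    ssim = ((2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)) / (
        (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    )
    return 1.0 - ssim
```

Identical patches give a loss of zero, while patches with opposite structure give a loss above one because their covariance is negative.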

3.3 Saliency-based segmentation

After obtaining a saliency map of an image using a visual saliency detection network, the input image should be segmented to evaluate the similarity of different regions. Designers often use grids or rectangular areas to organize elements. Observers perceive this structure and associate alignment, grouping, and symmetry with these regions. The global position features can be used for segmentation which includes the distance to the “third lines,” power points (intersections of the third lines), image center, boundaries, and diagonals. To compare design similarities, we estimate layout structures based on DSF and assign weights for visual importance.

The minor visual element (MVE) regions should be placed in the non-salient areas of the image according to the aesthetic rules. To calculate the optimal layout area, an intuitive method is to exhaustively enumerate the possible positions and sizes of all MVE regions and use the visual saliency value of each area as the score of the layout. However, this method has three shortcomings. First, since there are many inconspicuous background areas whose saliency values are all small and close to each other, it is not easy to determine the most suitable MVE layout area directly from the saliency values. Second, since the saliency value at the edge of the image is usually small, considering only the visual saliency value will place the MVE near the edge of the image, which violates the aesthetic rules and leads to a poor visual presentation. Third, similar saliency values in the background region also lead to a vast search space and increase the amount of computation.

We propose an algorithm to address the above shortcomings. First, the diffusion equation is used to calculate the MVE probability map, which represents the probability of the MVE layout at the corresponding position. Then, the candidate area generation algorithm is used to obtain the design layout.

We apply the diffusion equation to compute the probability maps for MVE regions, which are defined as follows:

where ∇X and ∇Y represent the gradients in the horizontal and vertical directions of the pixel, respectively. cX and cY represent the diffusion coefficients in the two directions, respectively. The goal of the diffusion equation we defined is to calculate the probability maps of the MVE regions. The diffusion coefficients cX and cY are set to 1 and 0.6, respectively, according to the aesthetic rules.

In the initial probability map, many regions share the same probability value. Because the diffusion equation considers the visual saliency distribution of the image elements around each possible region, the number of suitable MVE regions decreases continuously during the iterative process. The iteration stops when the difference between the MVE probability map and the initial saliency map exceeds a threshold.
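The iterative process can be illustrated with a simple explicit finite-difference scheme. The sketch below is only one possible discretization and is not claimed to match the exact equation used in this paper: the function name `diffuse_saliency`, the time step `dt`, the threshold value, the iteration cap, the zero-flux border handling, and the use of the mean absolute difference as the stopping measure are all assumptions. Only the coefficients cX = 1 and cY = 0.6 and the stopping rule itself come from the text.

```python
import numpy as np

def diffuse_saliency(saliency, c_x=1.0, c_y=0.6, dt=0.2,
                     threshold=0.05, max_iter=500):
    """Diffuse a saliency map with direction-dependent coefficients.

    Stops once the mean absolute difference from the initial map exceeds
    `threshold` (assumed measure), or after `max_iter` steps.
    """
    s0 = np.asarray(saliency, dtype=np.float64)
    p = s0.copy()
    for _ in range(max_iter):
        padded = np.pad(p, 1, mode="edge")                  # zero-flux borders
        d2x = padded[1:-1, 2:] - 2 * p + padded[1:-1, :-2]  # horizontal 2nd difference
        d2y = padded[2:, 1:-1] - 2 * p + padded[:-2, 1:-1]  # vertical 2nd difference
        p = p + dt * (c_x * d2x + c_y * d2y)
        if np.abs(p - s0).mean() > threshold:
            break
    return p
```

With cX larger than cY, values spread faster horizontally than vertically. The step size 0.2 keeps this explicit scheme stable for the coefficients above, since dt · 2(cX + cY) = 0.64 ≤ 1.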

In object detection tasks, many methods for generating bounding boxes have been developed. However, most of them need to consider the local relationship between the main visual region and the surrounding image elements, so the generated candidate boxes are hard to apply to design layout segmentation. We used the hierarchical segmentation algorithm in [40] to segment the design image. Different from [40], we used the probability maps of the MVE regions computed by Eq. 3 as segmentation input. Furthermore, the main visual region is fixed and set to the highest energy term. The proposed algorithm takes as input a layout, a binary mask for each element, and the element class (graphic or text); the output is a hierarchical segmentation of the design into non-overlapping rectangular regions.

Given a rectangular region R, a cut c is defined as a point (x,y) in R that divides the region into two rectangular subregions, r1 and r2. The energy term penalizes cuts based on the distance to each element’s bounding box. Cuts near the center are weighted more, while cuts near the region’s border are given less weight.

where p ∈ c denotes the pixels p along the cut c, \({I}_{i}^{p}\) is an indicator variable indicating whether element i overlaps with pixel p, and disci(p) is the distance of pixel p to the bounding box of element i. This distance depends on the type of the cut c. The energy function Felm(c) considers the two regions r1 and r2 and counts the number of elements of the same class (text or graphics) in both regions.

where N(r) is the number of same class elements in region r, and 0 means no element.

The algorithm tends to divide regions evenly with the regional center. Thus, we normalize the distance of the cut:

where rc is the regional center’s location and rl is the length of the region. A cut F(c) is then evaluated by:

We set vint = 50, velm = 100, and vcen = 1 to obtain the best experimental results.

3.4 Similarity evaluation

Similarity evaluation calculates the similarity distance between feature vectors using a measurement algorithm. Commonly used measurement algorithms include Euclidean distance, cosine distance, hash distance, and mutual information, while many recent image similarity calculation methods are based on deep learning models. Because the layout similarity of graphic designs alone is not enough to judge the similarity or plagiarism of the works, it is also necessary to evaluate the similarity of the conceptual design of the main visual region. We propose a multi-weight similarity measure based on the Structural SIMilarity (SSIM) index [14] since the segmented image already has a relatively obvious element relationship structure.

where Ri is the ith segmented region of the image, and SSIM is computed for each segmented region. C is the number of segmented image regions. The weighted VSF of region Ri is calculated by the function WV(x).

where VSFx is the normalized VSF of Rx. wx and hx represent the width and height of the segmented region Rx (the segmented region is a rectangle), respectively; and W and H are the width and height of an image, respectively.
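The idea of a region-weighted SSIM can be sketched as follows. This is a minimal pure-Python illustration under stated assumptions: the weight in `region_weight` (normalized VSF multiplied by the relative area of the region) and the weighted average in `weighted_similarity` are hypothetical forms chosen to match the symbols defined above, and `global_ssim` uses a single window over the whole region with the commonly used constants rather than the multiscale variant cited in [14]. Images are assumed to be grayscale lists of rows with values in [0, 1].

```python
def global_ssim(a, b, dynamic_range=1.0):
    """Single-window SSIM between two equal-length lists of pixel values."""
    n = len(a)
    c1 = (0.01 * dynamic_range) ** 2
    c2 = (0.03 * dynamic_range) ** 2
    mu_a = sum(a) / n
    mu_b = sum(b) / n
    var_a = sum((p - mu_a) ** 2 for p in a) / n
    var_b = sum((q - mu_b) ** 2 for q in b) / n
    cov = sum((p - mu_a) * (q - mu_b) for p, q in zip(a, b)) / n
    return ((2 * mu_a * mu_b + c1) * (2 * cov + c2)) / (
        (mu_a ** 2 + mu_b ** 2 + c1) * (var_a + var_b + c2))


def crop(img, x, y, w, h):
    """Flatten the rectangular region (x, y, w, h) of an image into a list."""
    return [px for row in img[y:y + h] for px in row[x:x + w]]


def region_weight(vsf, w, h, img_w, img_h):
    """ASSUMED form of WV: normalized saliency times relative region area."""
    return vsf * (w * h) / (img_w * img_h)


def weighted_similarity(img_a, img_b, regions):
    """ASSUMED combination: weight-normalized sum of per-region SSIM.

    regions: list of (x, y, w, h, vsf) tuples, one per segmented rectangle.
    """
    img_h, img_w = len(img_a), len(img_a[0])
    num = den = 0.0
    for x, y, w, h, vsf in regions:
        wt = region_weight(vsf, w, h, img_w, img_h)
        num += wt * global_ssim(crop(img_a, x, y, w, h),
                                crop(img_b, x, y, w, h))
        den += wt
    return num / den if den else 0.0
```

Under these assumptions, a change confined to a highly salient region lowers the score more than the same change in a region with low saliency, which is the behavior a multi-weight measure is meant to capture.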

4 Evaluation and discussion

4.1 Experimental setup

Two datasets are used to train and test our proposed scheme. (1) The Plagiarized Poster dataset [38] contains 22,624 images with 224 query images; each poster has an average of 4.92 plagiarized designs. It is used to train and test the similarity evaluation ability of our proposed scheme on visual saliency in poster design. (2) The Graphic Design Importance dataset by O’Donovan et al. [40] comes with importance annotations for 1,078 graphic designs from Flickr. It is used to test the similarity evaluation ability of our proposed scheme on the layout in poster design. Some samples are shown in Fig. 6.

Experiments were run on a PC server with two Nvidia GeForce GTX Titan X GPUs. The proposed models are trained with a learning rate of 0.0002. Seventy percent of the samples were selected as the training set, and the rest were used for testing. As few studies have addressed the plagiarism issue for graphic designs, we compared our scheme to the method proposed in [38], which focuses on retrieving plagiarized posters. Test metrics similar to those in [38] are chosen to make the test results clearer and more comparable.

- Top-k accuracy. The number of correctly identified plagiarized samples retrieved among the top K ranked images. K is set to 10 and 20.

- Normalized discounted cumulative gain (NDCG) [43]. It measures the quality of a ranked result list by rewarding relevant items that appear near the top of the ranking.
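The two metrics can be sketched in Python as below. This is a minimal illustration, assuming binary relevance (a retrieved image either is or is not a plagiarized version of the query) and the common logarithmic discount for NDCG; the function names are hypothetical, and the exact normalization used to report top-k accuracy as a percentage in [38] is not reproduced here, so `hits_at_k` returns the raw count.

```python
import math


def hits_at_k(ranked_ids, relevant_ids, k):
    """Count how many of the top-k retrieved items are truly plagiarized."""
    return sum(1 for item in ranked_ids[:k] if item in relevant_ids)


def dcg(gains):
    """Discounted cumulative gain with a log2(rank + 1) discount."""
    return sum(g / math.log2(rank + 1)
               for rank, g in enumerate(gains, start=1))


def ndcg_at_k(ranked_ids, relevant_ids, k):
    """NDCG@k with binary gains (ASSUMED: gain 1 for a plagiarized sample)."""
    gains = [1.0 if item in relevant_ids else 0.0 for item in ranked_ids[:k]]
    ideal = [1.0] * min(k, len(relevant_ids))
    ideal_dcg = dcg(ideal)
    return dcg(gains) / ideal_dcg if ideal_dcg > 0 else 0.0


# Toy query: three plagiarized posters exist, two are ranked in the top 3.
ranked = ["a", "x", "b", "y", "c"]
relevant = {"a", "b", "c"}
print(hits_at_k(ranked, relevant, 3), ndcg_at_k(ranked, relevant, 5))
```

A ranking that places all plagiarized samples first yields an NDCG of 1, and the score drops as relevant items move down the list.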

4.2 Evaluation results

We implemented six related image similarity measurement methods for comparison. Two of them [44, 45] focus on copy-move forgery detection since plagiarism detection can be considered a kind of clone forensics. The other methods [13, 34, 37, 38] were developed for artwork plagiarism detection. The experimental results on the Plagiarized Poster dataset [38] are shown in Table 3.

Our proposed method performs best on all metrics (i.e., top-10 accuracy is 78.36%, top-20 accuracy is 63.38%, and NDCG is 0.94). This result stems from the use of VSDGAN and the saliency-based segmentation algorithm. Since a GAN does not require a large number of training samples, it is suitable for scenarios that lack plagiarism samples of designs. As long as an image database and a loss function for training are available, the applicability of this generative adversarial approach is significantly improved. That is why the VAE-WGAN-based method [13] can still handle some types of plagiarism in artworks. As shown in Fig. 7, when "tower" is used as the main similarity detection element, it can correctly retrieve plagiarized images. However, method [13] was developed for computing the cognitive similarity of graphic logos and does not perform well on images with complex compositions and rich elements. The two copy–move forgery detection methods [44, 45] can barely retrieve plagiarism in graphic designs, which means that traditional clone forensics cannot be directly applied to perceptual similarity measurement. Notice that the spatial pyramid matching method [34], developed for website design, failed in this application because it focuses only on the similarity of the website layout structure; a layout similarity measure alone is insufficient to detect plagiarism in graphic designs. The closest to our performance is method [38] (i.e., top-10 accuracy is 67.45%). However, since this method ignores the importance of compositional similarity in plagiarism identification, it has difficulty detecting similar images produced by element replacement (for example, the image in the last row and eighth column in Fig. 7). Figure 7 shows the retrieval results of the proposed method and the five other comparison methods. (We omit method [34] as it only focuses on website design.)

To show the effect of using the RRM module and the MVE region in the proposed scheme, we tried four different strategies (i.e., non-RRM and non-MVE, RRM without MVE, MVE without RRM, and RRM with MVE) to evaluate our method. The experiment results shown in Table 4 demonstrate the importance of using the RRM module and the MVE region to evaluate the similarity of graphic designs.

The relevant ablation experiment results shown in Fig. 8 demonstrate the effectiveness of the RRM and MVE components. Note that, to make the effect clearer, we did not apply morphological processing or thresholding to the images.

As can be seen, MVE is more important than RRM in the proposed network architecture. This is because the MVE regions enable the similarity evaluation algorithm to notice insignificant regions, which is essential for higher-dimensional similarity calculations according to the aesthetic rules of designs. In column (c) of Fig. 8, the proposed network model with MVE noticed the title text area, which is an important element of poster design and is often used as an aid to determine plagiarism.

5 Conclusions

Similarity studies of designs and artworks are rare due to their highly abstract and aesthetic features. We propose to analyze the similarity of graphic designs in the cognitive dimension through visual saliency features. A novel visual saliency feature extraction network based on the GAN model is developed. The RRM module enables the proposed network to simultaneously capture global high-level semantic information and low-level detail information of graphic designs. Finally, since the segmented image already has a relatively prominent element relationship structure, a multi-weight similarity measure based on SSIM is developed. Our scheme currently has some limitations. The optimization and learning process needs to be more efficient for real-time interaction. Predicting element importance is time-consuming, and simpler models could be investigated in future work. In addition, the similarity evaluation performance still has much room for improvement.

References

Borji A, Itti L (2013) State-of-the-Art in visual attention modeling. IEEE Trans Pattern Anal Mach Intell 35(1):185–207. https://doi.org/10.1109/TPAMI.2012.89

Niu Z, Zhong G, Yu H (2021) A review on the attention mechanism of deep learning. Neurocomputing 452:48–62

Ross J, Simpson R, Tomlinson B (2011) Media richness, interactivity and retargeting to mobile devices: a survey. Int J Arts Technol 4(4):442–459

Garg A, Negi A, Jindal P (2021) Structure preservation of image using an efficient content-aware image retargeting technique. SIViP 15(1):185–193

Nasiripour R, Farsi H, Mohamadzadeh S (2019) Visual saliency object detection using sparse learning. IET Image Proc 13(13):2436–2447

Shamir L (2015) What makes a Pollock Pollock: a machine vision approach. Int J Arts Technol 8(1):1–10

Liu Y, Zhang D, Zhang Q, Han J (2021) Part-object relational visual saliency. IEEE Trans Pattern Anal Mach Intell. https://doi.org/10.1109/TPAMI.2021.3053577

Yang Y, Zhang Y, Huang S, Zuo Y, Sun J (2020) Infrared and visible image fusion using visual saliency sparse representation and detail injection model. IEEE Trans Instrum Meas 70:1–15

Zhu Y, Zhai G, Yang Y, Duan H, Min X, Yang X (2021) Viewing behavior supported visual saliency predictor for 360° videos. IEEE Trans Circ Syst Video Technol 32(7):4188–4201

Zhang C, He Y, Tang Q, Chen Z, Mu T (2021) Infrared small target detection via interpatch correlation enhancement and joint local visual saliency prior. IEEE Trans Geosci Rem Sens 60:1–14

Yang B, Wei L, Pu Z (2020) Measuring and improving user experience through artificial intelligence-aided design. Front Psychol 11(3):595374. https://doi.org/10.3389/fpsyg.2020.595374

Farhan NS and Abdulmunem ME (2019) Image plagiarism system for forgery detection in maps design. In: 2019 2nd Scientific Conference of Computer Sciences (SCCS). IEEE, pp. 51–56.

Yang B (2021) Perceptual similarity measurement based on generative adversarial neural networks in graphics design. Appl Soft Comput 110:107548. https://doi.org/10.1016/j.asoc.2021.107548

Wang Z, Simoncelli EP and Bovik AC (2003) Multiscale structural similarity for image quality assessment. In: The Thirty-Seventh Asilomar Conference on Signals, Systems & Computers, vol 2. IEEE, pp. 1398–1402.

Itti L, Koch C, Niebur E (1998) A model of saliency-based visual attention for rapid scene analysis. IEEE Trans Pattern Anal Mach Intell 20(11):1254–1259

Tsotsos JK, Culhane SM, Kei Wai WY, Lai Y, Davis N, Nuflo F (1995) Modeling visual attention via selective tuning. Artif Intell 78(1):507–545. https://doi.org/10.1016/0004-3702(95)00025-9

Marchesotti L, Cifarelli C and Csurka G (2009) A framework for visual saliency detection with applications to image thumbnailing In: 2009 IEEE 12th International Conference on Computer Vision. IEEE, pp. 2232–2239.

Xia C, Qi F, Shi G (2016) Bottom–up visual saliency estimation with deep autoencoder-based sparse reconstruction. IEEE Trans Neural Netw Learn Syst 27(6):1227–1240

Harel J, Koch C, Perona P (2006) Graph-based visual saliency. Adv Neural Inf Process Syst 19:1

Rezazadegan Tavakoli H, Rahtu E, and Heikkilä J (2011) Fast and efficient saliency detection using sparse sampling and kernel density estimation In: Scandinavian Conference on Image Analysis. Springer, pp. 666–675.

Borji A (2012) Boosting bottom-up and top-down visual features for saliency estimation. In: 2012 IEEE Conference on Computer Vision and Pattern Recognition. IEEE. pp. 438–445.

Liu R, Cao J, Lin Z and Shan S (2014) Adaptive partial differential equation learning for visual saliency detection In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition pp. 3866–3873.

Li G, Yu Y (2016) Visual saliency detection based on multiscale deep CNN features. IEEE Trans Image Process 25(11):5012–5024

Wang W, Shen J (2017) Deep visual attention prediction. IEEE Trans Image Process 27(5):2368–2378

Cornia M, Baraldi L, Serra G, Cucchiara R (2018) Predicting human eye fixations via an lstm-based saliency attentive model. IEEE Trans Image Process 27(10):5142–5154

Phan HTH, Kumar A, Feng D, Fulham M, Kim J (2019) Unsupervised two-path neural network for cell event detection and classification using spatio-temporal patterns. IEEE Trans Med Imaging 38(6):1477–1487. https://doi.org/10.1109/tmi.2018.2885572

Sbai O, Elhoseiny M, Bordes A, Lecun Y, and Couprie C (2018) DeSIGN: Design inspiration from generative networks In: Computer Vision – ECCV 2018 Workshops: Munich, Germany, September 8–14, 2018, Proceedings, Part III. Springer, Cham.

Elgammal A, Liu B, Elhoseiny M and Mazzone M (2017) CAN: Creative adversarial networks, generating "Art" by learning about styles and deviating from style norms. In: The Eighth International Conference on Computational Creativity (ICCC), Atlanta, GA, June 20–22, 2017. https://arxiv.org/abs/1706.07068

Andries M, Dehban A, Santos-Victor J (2020) Automatic generation of object shapes with desired affordances using voxelgrid representation. Front Neurorobotics 14:05–14. https://doi.org/10.3389/fnbot.2020.00022

Pan J et al. (2017) Salgan: visual saliency prediction with generative adversarial networks. arXiv preprint arXiv:1701.01081.

Qin X, Zhang Z, Huang C, Gao C, Dehghan M and Jagersand M (2019) Basnet: Boundary-aware salient object detection In: Proceedings of the IEEE/CVF Conference on Computer Vision And Pattern Recognition, pp. 7479–7489.

Bylinskii Z, Judd T, Oliva A, Torralba A, Durand F (2018) What do different evaluation metrics tell us about saliency models? IEEE Trans Pattern Anal Mach Intell 41(3):740–757

Garrett L and Robinson A (2012) Spot the difference! Plagiarism identification in the visual arts.

Bozkr AS and Sezer EA (2014) SimiLay: a developing web page layout based visual similarity search engine In: 10th International Conference on Machine Learning and Data Mining MLDM.

Álvarez A, Ritchey T (2015) Applications of general morphological analysis. Acta Morphol Gen 4(1):40

Cetinic E, Lipic T, Grgic S (2018) Fine-tuning convolutional neural networks for fine art classification. Expert Syst Appl 114:107–118

Lang Y, He Y, Yang F, Dong J, and Xue H (2020) Which is plagiarism: Fashion image retrieval based on regional representation for design protection In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 2595–2604.

Cui S, Liu F, Zhou T and Zhang M (2022) Understanding and identifying artwork plagiarism with the wisdom of designers: a case study on poster artworks In: Proceedings of the 30th ACM International Conference on Multimedia, pp. 1117–1127.

Huo C, Zhou Z, Ding K, Pan C (2017) Online target recognition for time-sensitive space information networks. IEEE Trans Comput Imaging 3(2):254–263. https://doi.org/10.1109/TCI.2017.2655448

O’Donovan P, Agarwala A, Hertzmann A (2014) Learning layouts for single-page graphic designs. IEEE Trans Visual Comput Graph 20(8):1200–1213. https://doi.org/10.1109/TVCG.2014.48

Judd T, Ehinger K, Durand F and Torralba A (2009) Learning to predict where humans look In 2009 IEEE 12th International Conference on Computer Vision, IEEE. pp. 2106–2113.

Zhu Y, Chen C, Yan G, Guo Y, Dong Y (2020) AR-Net: adaptive attention and residual refinement network for copy-move forgery detection. IEEE Trans Industr Inf 16(10):6714–6723

Distinguishability C (2013) A theoretical analysis of normalized discounted cumulative gain (NDCG) ranking measures. Peking University, Peking

Yang B, Sun X, Guo H, Xia Z, Chen X (2018) A copy-move forgery detection method based on CMFD-SIFT. Multimed Tools Appl 77(1):837–855. https://doi.org/10.1007/s11042-016-4289-y

Zhong J-L, Pun C-M (2020) An end-to-end dense-inceptionnet for image copy-move forgery detection. IEEE Trans Inf Forensics Secur 15:2134–2146. https://doi.org/10.1109/TIFS.2019.2957693

Acknowledgements

This work was partly supported by the National Social Science Foundation of China (21BG131).

Author information

Contributions

All authors have contributed equally to this work.

Ethics declarations

Conflict of interest

The authors declare that they have no conflicts of interest in this work.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Liu, Z., Yang, B., An, J. et al. Similarity evaluation of graphic design based on deep visual saliency features. J Supercomput 79, 21346–21367 (2023). https://doi.org/10.1007/s11227-023-05468-w

DOI: https://doi.org/10.1007/s11227-023-05468-w