Abstract

Cache prefetching is a traditional way to reduce memory access latency. In multi-core systems, however, aggressive prefetching may harm overall performance. Previous prefetch throttling strategies usually set thresholds on certain metrics and throttle an aggressive prefetcher when a threshold is exceeded. These strategies work well in homogeneous multi-core systems but not in heterogeneous multi-core systems. This paper considers the performance differences between cores in an asymmetric multi-core architecture. Using an improved hill-climbing method, we control the prefetching aggressiveness of each core separately and improve per-core IPC. Experiments show that, compared with previous strategies, the average performance of big cores improves by up to 3.8% and that of little cores by up to 24.9%.



1 Introduction

The cache sits between the CPU and main memory and plays an important role in balancing processor speed against memory capacity. Cache prefetching is a mature technique that reduces cache misses and improves core IPC. Its basic idea is to predict which cache blocks will miss in the future and request them from memory in advance. If a miss is predicted correctly, it is avoided and system performance improves.

Cache prefetching is governed by two parameters: prefetch distance and prefetch degree. Taking the commonly used stride prefetcher as an example, it records miss addresses that follow a regular stride. When the number of such stride-consistent misses reaches a certain threshold, the prefetcher infers that subsequent addresses are likely to miss with the same stride; in this paper, this threshold is called the prefetch distance. Once the prefetch distance is reached, the prefetcher may issue requests for several subsequent addresses; the number of these requests is called the prefetch degree.
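The mechanism just described can be illustrated with a toy stride prefetcher. This is a minimal sketch, not the gem5 prefetcher: the per-PC table, the 64-byte block size, and the class and method names are our own assumptions, while `distance` and `degree` follow the definitions used in this paper.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class StreamEntry:
    last_addr: int                # last miss address (block-aligned)
    stride: Optional[int] = None  # most recently observed stride
    confirmations: int = 0        # consecutive misses that matched `stride`


class StridePrefetcher:
    """Toy stride prefetcher using this paper's terminology.

    distance: stride-consistent misses required before prefetching starts
    degree:   number of prefetch requests issued once triggered
    """

    def __init__(self, distance: int, degree: int, block_size: int = 64):
        self.distance = distance
        self.degree = degree
        self.block_size = block_size
        self.table: Dict[int, StreamEntry] = {}  # hypothetical per-PC table

    def on_miss(self, pc: int, addr: int) -> List[int]:
        """Record a demand miss; return the block addresses to prefetch."""
        addr -= addr % self.block_size
        entry = self.table.get(pc)
        if entry is None:                     # first miss from this PC
            self.table[pc] = StreamEntry(last_addr=addr)
            return []
        stride = addr - entry.last_addr
        if stride != 0 and stride == entry.stride:
            entry.confirmations += 1          # pattern continues
        else:
            entry.stride = stride             # new (or broken) pattern
            entry.confirmations = 1 if stride != 0 else 0
        entry.last_addr = addr
        if entry.stride and entry.confirmations >= self.distance:
            return [addr + entry.stride * k for k in range(1, self.degree + 1)]
        return []


pf = StridePrefetcher(distance=2, degree=3)
for a in (0, 64, 128):
    print(pf.on_miss(pc=0x400, addr=a))  # [], [], [192, 256, 320]
```

Raising `distance` makes the prefetcher wait for more evidence before issuing requests, and raising `degree` makes each trigger issue more requests; both increase or decrease aggressiveness.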

With prefetching, a computer system issues two types of memory requests. The first is issued when the CPU misses on data it needs; this is called a demand request. Demand requests make the CPU wait for data and therefore directly affect system performance. The second type is the prefetch request, which improves performance by reducing CPU stall time when missing addresses are predicted correctly. On single-core processors, aggressive prefetching can work well, but on multi-core processors it may have the opposite effect: too many prefetch requests can saturate the bandwidth of the shared last-level cache and memory, which lengthens the response time of demand requests, increases CPU stall time, and degrades system performance.

Prefetch throttling strategies can rein in aggressive prefetching. In a multi-core system, they reduce excessive prefetch requests and thus make saturation of last-level cache and memory bandwidth less likely. However, most previous throttling strategies rely on indirect indicators such as prefetch accuracy, bandwidth demand, and cache pollution. When these indicators reach a certain threshold, the system is deemed to be prefetching too aggressively, and the control strategy lowers the prefetching aggressiveness. Such strategies are tuned for specific architectures; when they are applied to heterogeneous architectures, throttling based on these empirical thresholds may not be effective.

ARM big.LITTLE is an asymmetric multi-core architecture in which all cores share the same instruction set but have significantly different microarchitectures. The big cores and the little cores are typically grouped into separate clusters; in this paper, each cluster is placed on its own die (DIE). The big core has a higher frequency and a deeper pipeline, which suits compute-intensive tasks. The little core has a lower frequency and a shallower pipeline; compared with the big core, it consumes less power and is better suited to memory-intensive tasks.

In this paper, we use gem5 [1] to build a big.LITTLE architecture with a directory-based MESI coherence protocol, in which each core has private L1 and L2 caches and the cores share a last-level cache (LLC). Based on this architecture, we analyze the shortcomings of previous prefetch throttling strategies. We then use an improved hill-climbing method for prefetch throttling, which lets each core control its own prefetching aggressiveness and achieve better performance, thereby improving overall system performance.

The main contributions of this paper are:

1. We build a big.LITTLE architecture in gem5 and experimentally verify that big and little cores differ in their sensitivity to aggressive prefetching.

2. We propose an improved hill-climbing method, a simple and effective way to control aggressive prefetching in heterogeneous architectures.

3. We evaluate a heterogeneous system consisting of a big-core DIE and a little-core DIE and demonstrate the effectiveness of the method by comparing it with HPAC [2] and Band-Pass [3]. In our experiments, the average performance of big cores improves by up to 3.8% and that of little cores by up to 24.9%.

2 Background

2.1 Prefetch strategy and prefetch control strategy

To maximize system performance, prefetcher design requires a delicate balance between coverage and accuracy. Achieving both is especially challenging for workloads with complex address patterns, which may require large amounts of history to predict future addresses accurately. The Signature Path Prefetcher (SPP) provided an efficient solution [4]. The Bingo prefetcher associated access patterns with both short and long events and selected the best pattern for prefetching, achieving high accuracy without losing prediction opportunities [5]. T2 targeted only the canonical stride access pattern, achieving wider coverage with much less memory traffic, lower energy consumption, and better average performance [6]. The decoupled look-ahead architecture (DLA) exploited implicit parallelism, using separate thread contexts to run successive look-aheads that improve the data and instruction supply to the main pipeline [7]. The per-page most-offset prefetcher (PMOP) minimized hardware cost while improving accuracy and maintaining coverage and timeliness [8]. The variable length delta prefetcher (VLDP) built a history of deltas between consecutive cache-line misses within a physical page and used these histories to predict the order of cache-line misses in new pages [9]. Expert prefetch prediction predicted the usefulness of prefetched addresses by following the best predictor in a pool of predictors [10].

Aggressive prefetching strategies usually bring larger performance gains to applications whose prefetch requests are accurate, but they also put greater pressure on last-level cache and memory bandwidth. When this bandwidth is saturated, demand requests take longer to be served, which hurts overall system performance. Many researchers have studied this problem. HPAC dynamically adjusted the aggressiveness of the prefetchers of multiple cores to control the inter-core interference they cause [2]. SPAC explored the interaction between the throttling decisions of different prefetchers and throttled them based on fair-speedup improvement in multi-core systems [11]. CAFFEINE was a model-based approach that analyzed the effectiveness of a prefetcher and used a metric called net utility to control its aggressiveness [12]. Band-Pass consisted of local and global prefetcher aggressiveness control components and kept the ratio of prefetch to demand requests within a given range [3]. Heirman et al. proposed near-side throttling based on detecting late prefetches [13]. Lee et al. studied the effect of memory diversity on prefetcher parameter selection and proposed a dynamic parameter search mechanism to tune prefetch aggressiveness under various memory architectures [14]. Lu et al. proposed the pure prefetch coverage (PPC) metric, which identifies accurate prefetch coverage under a concurrent memory access model, and then APAC, an adaptive prefetching framework built on PPC [15]. Sun et al. coordinated prefetcher control with cache partitioning and proposed a combined control strategy for the two [16]. Holtryd et al. proposed CBP, a mechanism that coordinates prefetch throttling, cache partitioning, and bandwidth partitioning to reduce average memory access time and improve performance [17]. Hiebel et al. proposed a framework for learning a prefetcher control policy that tunes hardware prefetchers at runtime based on workload performance behavior [18].

Previous prefetch control strategies usually assume a homogeneous architecture, in which all cores have the same configuration and the same control requirements. Thresholds are set for indicators such as prefetch accuracy, prefetch coverage, and prefetch-induced cache pollution, and aggressive prefetching is throttled when an indicator reaches its threshold. However, such strategies may not suit heterogeneous architectures such as big.LITTLE, as explained next.
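To make this limitation concrete, the following sketch shows a generic threshold-driven throttle of the kind described above. It is not HPAC or Band-Pass: the aggressiveness levels, the threshold values, and the metric definitions are all hypothetical. Because the same constants apply to every core, a big core and a little core that report the same statistics receive the same decision, even if their best prefetch settings differ.

```python
from dataclasses import dataclass

# Hypothetical aggressiveness levels: (prefetch distance, prefetch degree),
# ordered from least to most aggressive.
LEVELS = [(1, 1), (2, 2), (4, 4), (8, 8)]

# Hypothetical thresholds, shared by every core regardless of its type.
ACC_LOW, ACC_HIGH = 0.40, 0.75
POLLUTION_HIGH = 0.05


@dataclass
class IntervalStats:
    useful_prefetches: int    # prefetched blocks later hit by demand accesses
    issued_prefetches: int
    polluting_evictions: int  # demand misses caused by prefetch-induced evictions
    demand_accesses: int


def next_level(level: int, s: IntervalStats) -> int:
    """Pick the aggressiveness level (index into LEVELS) for the next interval."""
    accuracy = s.useful_prefetches / max(s.issued_prefetches, 1)
    pollution = s.polluting_evictions / max(s.demand_accesses, 1)
    if accuracy < ACC_LOW or pollution > POLLUTION_HIGH:
        return max(level - 1, 0)                # throttle down
    if accuracy > ACC_HIGH:
        return min(level + 1, len(LEVELS) - 1)  # throttle up
    return level                                # keep the current setting


big = IntervalStats(30, 100, 2, 1000)     # statistics reported by a big core
little = IntervalStats(30, 100, 2, 1000)  # identical statistics from a little core
print(next_level(2, big), next_level(2, little))  # 1 1: identical decisions
```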

2.2 big.LITTLE architecture

As an asymmetric multiprocessor architecture, big.LITTLE has been widely used in modern embedded platforms. In current designs, big and little cores can work simultaneously to support more flexible application scenarios. Butko et al. explored architectural design based on ARM big.LITTLE technology by modeling performance and power consumption in the gem5 and McPAT frameworks [19]. Yu et al. proposed a generic asymmetry-aware scheduler for multithreaded multiprogrammed workloads, which estimates the performance of each thread on each type of core and identifies communication patterns and bottleneck threads [20]. Guo et al. proposed a selective asymmetry-aware work-stealing runtime system to address workload imbalance, severe shared-cache misses, and remote memory accesses in asymmetric multi-core architectures [21]. Garcia-Garcia et al. proposed CAMPS, a contention-aware fair scheduler for asymmetric multicore processors (AMPs) that targets long-running compute-intensive workloads [22]. Kim et al. investigated fairness in asymmetric multi-core systems and proposed four scheduling strategies that guarantee a minimum performance bound while increasing overall throughput and reducing performance variation [23]. Considering how to assign jobs to asymmetric cores running at tunable frequencies, Lin et al. [24] proposed an energy-efficient scheduler for throughput-guaranteed jobs on asymmetric multi-core platforms. Wolff et al. used a simple trained hardware model of cache interconnect characteristics, together with real-time hardware monitors, to continually adjust core frequencies and maximize system performance [25]. Bringye et al. developed a user-mode alternative to the CPUFreq governor that provides a framework for developing governors that exploit the features of the big.LITTLE architecture [26].

Next, we show how the characteristics of big and little cores affect cache prefetching. As in prior network-on-chip studies [27, 28], we use gem5 to build a flexible and configurable core architecture. The architecture is shown in Fig. 1, and the system configuration is listed in Table 1.

The architecture consists of a big-core DIE and a little-core DIE. Each core has a private L1 cache and a private L2 cache, and the L2 caches, the last-level cache, and memory are connected through a network. We use the directory-based MESI protocol to maintain cache coherence. Each L1 cache is equipped with a prefetcher (not shown in the figure) that prefetches data missed by the core.

To measure the memory bandwidth requirements of big and little cores, we use PARSEC, a multithreaded benchmark suite whose programs can run on several cores simultaneously. The bandwidth results are shown in Fig. 2: while the program runs, the big core demands more memory bandwidth than the little core.

To test how big and little cores differ in their requirements for prefetching aggressiveness, we used SPEC06. We launched four instances of the same benchmark and pinned each instance to a different core. We then measured the IPC of each core under different combinations of prefetch distance and prefetch degree. The IPC of each core was normalized, and the results are shown in Figs. 3 and 4.

From these experimental results, we conclude that: 1. big and little cores place different demands on memory and shared-cache bandwidth while running the same program; and 2. under the same prefetch distance and prefetch degree, big and little cores achieve different IPCs, and the prefetch configuration that yields the best performance also differs widely between them.

Applying the same prefetch control strategy to every core is therefore unsuitable for heterogeneous multi-core architectures; complex and changeable system architectures call for a more flexible, per-core prefetch control strategy. Lee et al. previously studied the impact of memory diversity on prefetchers [14] and tuned the prefetching strategy with the hill-climbing method. However, because the IPC of big and little cores does not increase monotonically with prefetch distance and prefetch degree, hill climbing can become trapped in a local optimum. We therefore propose a control strategy based on an improved hill-climbing method in the next section.

3 Method

3.1 Flexible configuration of big.LITTLE core dies

We first introduce a flexible big/little-core DIE model, built by modifying the basic model of gem5. A schematic of the module is shown in Fig. 5.

In this architecture, each core has a private L1 cache and a private L2 cache. The L2 caches are connected to the last-level cache through a network, and all cores share the last-level cache. The memory controller is also connected to the network. We use the three-level MESI protocol as the cache coherence protocol of the whole system.

To simulate the dual-DIE architecture, we modified the system architecture as shown in Fig. 6. We place the last-level cache outside the DIEs and share it among them. The cores on each DIE can be configured either as higher-performance big cores or as lower-performance little cores, and the number of cores is freely configurable.
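The topology described above can be summarized with a small, purely descriptive model. This is a hypothetical sketch, not gem5's configuration API: all class and field names are our own, and the cache sizes and latencies listed in Table 1 are deliberately omitted. It captures only the structure: one big-core DIE, one little-core DIE, private L1 and L2 caches per core, a prefetcher at each L1, and a last-level cache shared by both DIEs.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Core:
    kind: str                            # "big" or "little"
    private_caches: tuple = ("L1", "L2")
    l1_prefetcher: bool = True           # each private L1 has its own prefetcher


@dataclass
class Die:
    cores: List[Core]


@dataclass
class DualDieSystem:
    dies: List[Die]
    llc: str = "shared, outside the DIEs"
    coherence: str = "directory-based MESI (three-level)"

    def count(self, kind: str) -> int:
        """Number of cores of the given kind across all DIEs."""
        return sum(core.kind == kind for die in self.dies for core in die.cores)


def dual_die(n_big: int, n_little: int) -> DualDieSystem:
    """One DIE of big cores and one DIE of little cores; counts are free."""
    return DualDieSystem(dies=[Die([Core("big") for _ in range(n_big)]),
                               Die([Core("little") for _ in range(n_little)])])


four_core = dual_die(2, 2)    # the system evaluated in Sect. 4.1
eight_core = dual_die(2, 6)   # the system evaluated in Sect. 4.2
print(four_core.count("little"), eight_core.count("little"))  # 2 6
```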

3.2 Hill-climbing algorithm

Hill climbing is a greedy search strategy: it compares the current result of the system with the result of the previous execution and, based on that comparison, keeps moving in the direction that improves the result. In this paper, hill climbing is the basic algorithm for searching for the best prefetch distance and prefetch degree. We use IPC as the only objective, because the ultimate goal is to improve core IPC. Hill climbing is simple and efficient: it only needs the results of the previous two executions and no global information. Its disadvantages are also obvious. First, hill climbing suits problems with a single variable, whereas we need to search two variables, the prefetch distance and the prefetch degree. Second, hill climbing easily becomes trapped in a local optimum; the solution it finds may be only locally optimal rather than globally optimal. To adapt hill climbing to this scenario, this paper combines it with ideas from the simulated annealing algorithm.
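A minimal sketch of plain hill climbing over one parameter (the prefetch distance, with the prefetch degree held fixed) shows both its simplicity and its weakness. This is the textbook steepest-ascent variant, and the candidate distances and toy IPC values are invented for illustration; the point is that a non-monotonic IPC curve stops the search at a local optimum.

```python
from typing import Callable, Sequence


def hill_climb(values: Sequence[int], measure: Callable[[int], float],
               start: int) -> int:
    """Steepest-ascent hill climbing over an ordered list of settings.

    Moves to the better neighbour until neither neighbour improves IPC,
    then returns the setting it stopped at (a local optimum).
    """
    i = start
    best = measure(values[i])
    while True:
        neighbours = [k for k in (i - 1, i + 1) if 0 <= k < len(values)]
        if not neighbours:
            return values[i]
        ipc, j = max((measure(values[k]), k) for k in neighbours)
        if ipc <= best:
            return values[i]
        i, best = j, ipc


# Hypothetical, non-monotonic IPC as a function of prefetch distance.
TOY_IPC = {1: 1.00, 2: 1.10, 4: 1.05, 8: 1.20, 16: 1.30, 32: 1.25}
DISTANCES = sorted(TOY_IPC)
print(hill_climb(DISTANCES, TOY_IPC.__getitem__, start=0))  # 2 (global best is 16)
```

Starting from distance 1, the search stops at distance 2 because distance 4 is worse, even though distance 16 gives the highest IPC.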

3.3 Simulated annealing algorithm

In previous experiments, we found that IPC does not change monotonically for some benchmarks, so plain hill climbing can become trapped in a local optimum. Simulated annealing improves on hill climbing by accepting a worse solution with some probability. At the start, an initial temperature T and a cooling rate R are set. In each round, if the new result, denoted F(a), is higher than the old result, denoted F(b), the new result is accepted; otherwise, it is accepted with probability p, calculated by Eq. 1.
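For reference, the conventional simulated-annealing acceptance rule (the Metropolis criterion) is written below in this section's notation, with IPC as the objective F. It is given as the standard textbook form; we assume, rather than confirm, that Eq. 1 has this shape.

```latex
p =
\begin{cases}
1, & F(a) > F(b),\\[4pt]
\exp\!\left(\dfrac{F(a) - F(b)}{T}\right), & F(a) \le F(b).
\end{cases}
```

Because \(F(a) - F(b) \le 0\) in the second case, \(0 < p \le 1\), and p shrinks as T decreases.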

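At the end of each round, the temperature for the next round is updated as \(T \leftarrow T \cdot R\) (with \(0 < R < 1\)), so worse results become less likely to be accepted as the search proceeds. Accepting worse results, especially early in the search, helps avoid becoming trapped in a local optimum.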
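The acceptance test and the cooling schedule can be sketched in a few lines. This assumes the Metropolis form of p (the usual choice in simulated annealing); the function names are hypothetical. Since IPC differences are small numbers, the initial temperature must be chosen relative to typical IPC changes, or p will be close to 0 or 1 from the start.

```python
import math
import random


def acceptance_probability(new_ipc: float, old_ipc: float, temperature: float) -> float:
    """Probability of accepting the new result (assumed Metropolis form)."""
    if new_ipc > old_ipc:
        return 1.0                      # improvements are always accepted
    if temperature <= 0:
        return 0.0                      # fully cooled: behave like hill climbing
    return math.exp((new_ipc - old_ipc) / temperature)


def accept(new_ipc: float, old_ipc: float, temperature: float,
           rng: random.Random) -> bool:
    """Draw a uniform random number and accept with the probability above."""
    return rng.random() < acceptance_probability(new_ipc, old_ipc, temperature)


def cool(temperature: float, rate: float) -> float:
    """Geometric cooling T <- T * R, with 0 < R < 1."""
    return temperature * rate


T, R = 0.1, 0.5
for _ in range(3):
    # a 0.05 IPC drop is accepted less and less often as T falls
    print(round(acceptance_probability(1.00, 1.05, T), 4))  # 0.6065, 0.3679, 0.1353
    T = cool(T, R)
```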

3.4 Improved hill-climbing method

To handle the two-dimensional search, we vary one parameter at a time. The search is divided into rounds: each round fixes a different prefetch degree and then searches for the prefetch distance using hill climbing combined with simulated annealing.

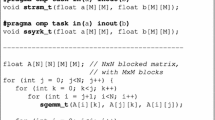

We define a prefetch distance set \( PD_{is} \), a prefetch degree set \( PD_{eg} \), and a candidate node set C, and iterate over the values in \( PD_{eg} \) in turn. With the prefetch degree fixed, we search the prefetch distance using hill climbing combined with simulated annealing and record the prefetch distance and prefetch degree that yield the highest IPC. At the end of each distance search, this best pair is added to the candidate node set. After all prefetch degrees have been searched, each subsequent adjustment selects the best configuration from the candidate node set and keeps updating the values stored in it. This process is shown in Algorithm 1.

The algorithm works as follows. First, we select a prefetch degree and a prefetch distance and initialize the parameters (lines 2–5 of Algorithm 1). Then we search for the best prefetch distance for this prefetch degree over S rounds. In each round, the system runs for a period of time to obtain a stable IPC under the current combination of prefetch degree and prefetch distance. From the second round on, we compare the IPCs of the current and previous rounds. If the current round's IPC is higher than all previous IPCs, the recorded optimum is updated with the current prefetch degree, prefetch distance, and IPC. This step records the best prefetch distance for the current prefetch degree (lines 7–16).

Next, the prefetch distance is adjusted based on the IPCs of the previous and current rounds. We first draw a random value and compute the simulated annealing probability p. If the current round's IPC is higher than the previous round's, the prefetch distance keeps moving in the same direction, while remaining within \( PD_{is} \) (lines 17–22). If the current round's IPC is lower than the previous round's, simulated annealing is applied: the prefetch distance moves in the same direction with probability p and in the opposite direction otherwise. The temperature and the round counter are then updated (lines 23–35). After S rounds of prefetch distance search, the best prefetch distance, prefetch degree, and IPC are added to the candidate node set C, the current prefetch degree is removed from \( PD_{eg} \), and the search moves on to the next prefetch degree until \( PD_{eg} \) is empty (lines 23–37).
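The search phase just described (one outer round per prefetch degree, S inner rounds of hill climbing with simulated-annealing moves) can be sketched as follows. This is a simplified illustration under stated assumptions, not the authors' Algorithm 1: `sample_ipc` is a hypothetical hook that runs the core for one interval with the given setting and returns its IPC; the search starts at the smallest distance; the temperature is reset for each degree; and p is assumed to take the Metropolis form.

```python
import math
import random
from typing import Callable, List, Tuple

Candidate = Tuple[float, int, int]  # (ipc, prefetch_distance, prefetch_degree)


def search_distance(distances: List[int], degree: int,
                    sample_ipc: Callable[[int, int], float],
                    rounds: int, t0: float, rate: float,
                    rng: random.Random) -> Candidate:
    """S rounds of hill climbing with SA moves over PD_is, degree fixed."""
    assert len(distances) >= 2, "need at least two candidate distances"
    i, step, temp = 0, +1, t0            # start at smallest distance, move upward
    prev_ipc = None
    best: Candidate = (float("-inf"), distances[0], degree)
    for _ in range(rounds):
        ipc = sample_ipc(distances[i], degree)  # run one interval, measure IPC
        if ipc > best[0]:
            best = (ipc, distances[i], degree)  # best distance for this degree
        if prev_ipc is not None and ipc < prev_ipc:
            # Worse than last round: keep direction with probability p,
            # otherwise reverse (assumed Metropolis form of p).
            p = math.exp((ipc - prev_ipc) / temp) if temp > 0 else 0.0
            if rng.random() >= p:
                step = -step
        nxt = i + step
        if not 0 <= nxt < len(distances):     # stay inside PD_is
            step = -step
            nxt = i + step
        i, prev_ipc, temp = nxt, ipc, temp * rate
    return best


def explore(distances: List[int], degrees: List[int],
            sample_ipc: Callable[[int, int], float], rounds: int,
            t0: float = 0.1, rate: float = 0.9, seed: int = 0) -> List[Candidate]:
    """One distance search per degree in PD_eg; returns the candidate set C."""
    rng = random.Random(seed)
    pending = list(degrees)                 # PD_eg
    candidates: List[Candidate] = []        # C
    while pending:
        degree = pending.pop(0)             # remove this degree from PD_eg
        candidates.append(search_distance(distances, degree, sample_ipc,
                                          rounds, t0, rate, rng))
    return candidates
```

For example, `explore([1, 2, 4, 8], [1, 2], lambda d, g: d + g, rounds=4)` climbs to distance 8 for both degrees and returns `[(9, 8, 1), (10, 8, 2)]`.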

When the prefetch degree set \( PD_{eg} \) is empty, we select the prefetch distance and prefetch degree with the highest IPC from the candidate node set C, sample the system with that configuration, and update its IPC. The updated prefetch distance, prefetch degree, and IPC are then written back to C. This process continues until the program ends (lines 39–45). The time complexity of the algorithm is \( O(n^{2}) \) when exploring combinations of prefetch degree and prefetch distance and \( O(n) \) when exploring the candidate node set, so the overall time complexity is \( O(n^{2}) \).
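The second phase can be sketched in the same hedged way. Again, `sample_ipc` is a hypothetical measurement hook, and candidates are stored as `(ipc, distance, degree)` tuples. Each step is a linear scan over C, matching the O(n) cost stated above, and the list is updated in place, so a configuration whose IPC drops (for example, after a program phase change) can lose its place to another candidate.

```python
from typing import Callable, List, Tuple

Candidate = Tuple[float, int, int]  # (ipc, prefetch_distance, prefetch_degree)


def exploit(candidates: List[Candidate],
            sample_ipc: Callable[[int, int], float],
            intervals: int) -> List[Tuple[int, int]]:
    """Repeatedly apply the best candidate in C, re-measure it, write it back.

    Returns the (distance, degree) setting applied in each interval.
    """
    applied = []
    for _ in range(intervals):
        # Linear scan for the candidate with the highest recorded IPC.
        k = max(range(len(candidates)), key=lambda j: candidates[j][0])
        _, dist, deg = candidates[k]
        applied.append((dist, deg))
        candidates[k] = (sample_ipc(dist, deg), dist, deg)  # refresh its IPC
    return applied


C = [(1.2, 8, 2), (1.0, 4, 1)]
phase = {(8, 2): 0.9, (4, 1): 1.1}   # hypothetical IPCs after a phase change
print(exploit(C, lambda d, g: phase[(d, g)], intervals=3))  # [(8, 2), (4, 1), (4, 1)]
```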

4 Evaluation

4.1 Four-core simulation results

In this section, we evaluate the big.LITTLE dual-DIE model built in gem5. The heterogeneous system contains two big cores and two little cores, with the big cores and the little cores placed on different DIEs. The SPEC06 benchmark combinations we use are listed in Table 2.

We compare the improved hill-climbing method with the classic throttling algorithms HPAC and Band-Pass. To our knowledge, Band-Pass is a state-of-the-art prefetch control strategy, while HPAC is a classic algorithm in this field. The results are shown in Figs. 7 and 8. For big cores, the improved hill-climbing method improves performance by 3.7% on average over Band-Pass and by 3.4% over HPAC. For little cores, it improves performance by 12.4% on average over Band-Pass and by 5.6% over HPAC. The improved hill-climbing method therefore outperforms both Band-Pass and HPAC, with larger gains on the little cores.

Band-Pass performs worse because it regulates prefetch traffic between the last-level cache and memory by throttling the most aggressive prefetcher. In a big.LITTLE system, the most aggressive prefetchers may belong to the little cores, which usually need higher prefetch aggressiveness than the big cores. Because Band-Pass does not distinguish between big and little cores, it keeps throttling the little cores, which greatly reduces their performance.

The comparison with HPAC shows that applying the same control strategy to big and little cores limits the performance of both. HPAC applies the same control conditions to all cores: once any core reaches the specified threshold, its prefetching aggressiveness is adjusted. However, our earlier experiments show that big and little cores have different requirements for memory bandwidth and prefetch aggressiveness, so a single threshold cannot satisfy both, and HPAC cannot control the prefetch aggressiveness of big and little cores well.

4.2 Eight-core simulation results

To show the effectiveness of the strategy with more cores, we configure two big cores and six little cores in gem5, with the big cores and the little cores distributed across two DIEs. The results are shown in Figs. 9 and 10. For big cores, the improved hill-climbing method improves performance by 3.8% on average over Band-Pass and by 2.2% over HPAC. For little cores, it improves performance by 24.9% on average over Band-Pass and by 8.5% over HPAC. As the number of cores increases, competition between cores intensifies; compared with previous control strategies, the improved hill-climbing method controls prefetcher aggressiveness better and improves system performance.

4.3 Prefetch accuracy

Next, we present the effect of the improved hill-climbing method on prefetch accuracy. We use the dual-DIE model with two big cores and two little cores, run several SPEC06 benchmarks, and simulate the first 500 million instructions of each program. The results are shown in Tables 3 and 4. Hit is the number of prefetch hits. Partial_hit is the number of prefetches that predicted the address correctly but did not bring the data into the cache in time. Miss is the number of prefetch misses. Accuracy is calculated by Eq. 2, and partial_accuracy by Eq. 3. A higher partial_accuracy indicates worse prefetcher timeliness, and a lower partial_accuracy indicates better timeliness.

The average prefetch accuracy of big cores increased from 4.81% to 5.8%, and that of little cores from 6.75% to 11.95%. By adjusting prefetching aggressiveness, the improved hill-climbing method improves prefetch accuracy; the effect is pronounced on the little cores and slight on the big cores. This also indicates that the algorithm improves performance in part by improving prefetch accuracy.

5 Conclusion

This paper constructs a flexible, configurable dual-DIE model and characterizes how different cores in an asymmetric multi-core architecture respond differently to prefetching. Our experiments show that: 1. big and little cores place different demands on memory and shared-cache bandwidth while running programs; and 2. under the same prefetch distance and prefetch degree, big and little cores achieve different IPCs, and their best-performing prefetch configurations also differ.

Because previous prefetch throttling strategies do not consider the differences between cores, they are not well suited to asymmetric multi-core architectures. For such complex systems, we use an improved hill-climbing method to control the prefetch aggressiveness of each core over time. Compared with previous prefetch throttling strategies, our method slightly improves the IPC of big cores and significantly improves the IPC of little cores, thereby improving overall system IPC to a certain extent. These results can guide the design of prefetch control strategies for future heterogeneous multi-core systems.

6 Future work

In the future, we will study asymmetric multi-core prefetch control in more complex scenarios, such as different cache associativities, cache sizes, and larger core counts. This paper characterizes the different requirements of big and little cores for memory bandwidth and prefetch aggressiveness and, based on this observation, proposes a simple but effective improvement strategy. In future work, we will consider more advanced heuristic strategies for more complex application scenarios.

Data availability

All data generated or analyzed during this study are included in this published article.

References

Lowe-Power J, Ahmad AM, Akram A, Alian M, Amslinger R, Andreozzi M, Armejach A, Asmussen N, Bharadwaj S, Black G, Bloom G, Bruce BR, Carvalho DR, Castrillón J, Chen L, Derumigny N, Diestelhorst S, Elsasser W, Fariborz M, Farahani AF, Fotouhi P, Gambord R, Gandhi J, Gope D, Grass T, Hanindhito B, Hansson A, Haria S, Harris A, Hayes T, Herrera A, Horsnell M, Jafri SAR, Jagtap R, Jang H, Jeyapaul R, Jones TM, Jung M, Kannoth S, Khaleghzadeh H, Kodama Y, Krishna T, Marinelli T, Menard C, Mondelli A, Mück T, Naji O, Nathella K, Nguyen H, Nikoleris N, Olson LE, Orr MS, Pham B, Prieto P, Reddy T, Roelke A, Samani M, Sandberg A, Setoain J, Shingarov B, Sinclair MD, Ta T, Thakur R, Travaglini G, Upton M, Vaish N, Vougioukas I, Wang Z, Wehn N, Weis C, Wood DA, Yoon H, Zulian ÉF (2020) The gem5 simulator: version 20.0+. CoRR abs/2007.03152

Ebrahimi E, Mutlu O, Lee CJ, Patt YN (2009) Coordinated control of multiple prefetchers in multi-core systems. In: 42nd Annual IEEE/ACM International Symposium on Microarchitecture (MICRO-42 2009), December 12–16, 2009, New York, New York, USA

Sridharan A, Seznec A, Panda B (2017) Band-pass prefetching: an effective prefetch management mechanism using prefetch-fraction metric in multi-core systems. ACM Trans Archit Code Optim 14:1–27

Kim J, Pugsley SH, Gratz PV, Reddy A, Chishti Z (2016) Path confidence based lookahead prefetching. In: IEEE/ACM International Symposium on Microarchitecture

Bakhshalipour M, Shakerinava M, Lotfi-Kamran P, Sarbazi-Azad H (2019) Bingo spatial data prefetcher. In: 2019 IEEE International Symposium on High Performance Computer Architecture (HPCA)

Kondguli S, Huang M (2017) T2: a highly accurate and energy efficient stride prefetcher. In: IEEE International Conference on Computer Design, pp 373–376

Kondguli S, Huang M (2019) R3-DLA (reduce, reuse, recycle): a more efficient approach to decoupled look-ahead architectures. In: 2019 IEEE International Symposium on High Performance Computer Architecture (HPCA), pp 533–544. https://doi.org/10.1109/HPCA.2019.00064

Kim K, Lee W, Choi S (2019) PMOP: efficient per-page most-offset prefetcher. IEICE Trans Inf Syst E102.D(7):1271–1279

Shevgoor M, Koladiya S, Wilkerson C, Chishti Z, Balasubramonian R (2015) Efficiently prefetching complex address patterns. In: IEEE/ACM International Symposium on Microarchitecture

Panda B, Balachandran S (2015) Expert prefetch prediction: an expert predicting the usefulness of hardware prefetchers. IEEE Comput Archit Lett 15:13–16

Panda B (2016) SPAC: a synergistic prefetcher aggressiveness controller for multi-core systems. IEEE Trans Comput 65:3740–3753

Panda B, Balachandran S (2015) CAFFEINE: a utility-driven prefetcher aggressiveness engine for multicores. ACM Trans Archit Code Optim 12(3):1–25

Heirman W, Du Bois K, Vandriessche Y, Eyerman S, Hur I (2018) Near-side prefetch throttling: adaptive prefetching for high-performance many-core processors. In: Proceedings of the 27th International Conference on Parallel Architectures and Compilation Techniques, PACT 2018, Limassol, Cyprus, November 01–04, 2018, pp 1–11

Lee J, Kim T, Huh J (2016) Dynamic prefetcher reconfiguration for diverse memory architectures. In: IEEE International Conference on Computer Design

Lu X, Wang R, Sun XH (2020) APAC: an accurate and adaptive prefetch framework with concurrent memory access analysis. In: 2020 IEEE 38th International Conference on Computer Design (ICCD)

Sun G, Shen J, Veidenbaum AV (2019) Combining prefetch control and cache partitioning to improve multicore performance. In: 2019 IEEE International Parallel and Distributed Processing Symposium (IPDPS)

Holtryd NR, Manivannan M, Stenström P, Pericàs M (2021) CBP: coordinated management of cache partitioning, bandwidth partitioning and prefetch throttling

Hiebel J, Brown LE, Wang Z (2019) Machine learning for fine-grained hardware prefetcher control. In: Proceedings of the 48th International Conference on Parallel Processing, pp 1–9

Butko A, Bruguier F, Gamatié A, Sassatelli G, Novo D, Torres L, Robert M (2016) Full-system simulation of big.LITTLE multicore architecture for performance and energy exploration. In: 2016 IEEE 10th International Symposium on Embedded Multicore/Many-Core Systems-on-Chip (MCSOC), pp 201–208

Yu T, Petoumenos P, Janjic V, Leather H, Thomson J (2020) Colab: a collaborative multi-factor scheduler for asymmetric multicore processors. In: CGO ’20: 18th ACM/IEEE International Symposium on Code Generation and Optimization

Guo H, Quan C, Guo M, Xu L (2016) Saws: selective asymmetry-aware work-stealing for asymmetric multi-core architectures. In: 2016 IEEE 18th International Conference on High Performance Computing and Communications; IEEE 14th International Conference on Smart City; IEEE 2nd International Conference on Data Science and Systems (HPCC/SmartCity/DSS)

Garcia-Garcia A, Saez JC, Prieto-Matias M (2018) Contention-aware fair scheduling for asymmetric single-ISA multicore systems. IEEE Trans Comput 67:1703–1719

Kim C, Huh J (2018) Exploring the design space of fair scheduling supports for asymmetric multicore systems. IEEE Trans Comput 67:1136–1152

Lin CC, Li HH, Wu JJ, Liu P (2017) An energy-efficient scheduler for throughput guaranteed jobs on asymmetric multi-core platforms. In: IEEE International Conference on Parallel & Distributed Systems

Wolff W, Porter B (2020) Performance optimization on big.LITTLE architectures: a memory-latency aware approach. In: LCTES ’20: 21st ACM SIGPLAN/SIGBED Conference on Languages, Compilers, and Tools for Embedded Systems

Bringye Z, Sima D, Kozlovszky M (2019) Power consumption aware big.LITTLE scheduler for Linux operating system. In: 2019 IEEE International Work Conference on Bioinspired Intelligence (IWOBI)

Fang J, Yu L, Liu S, Lu J, Chen T (2015) Kl_ga: an application mapping algorithm for mesh-of-tree (mot) architecture in network-on-chip design. J Supercomput 71:4056–4071. https://doi.org/10.1007/s11227-015-1504-y

Fang J, Ma A (2021) IoT application modules placement and dynamic task processing in edge-cloud computing. IEEE Internet Things J 8(16):12771–12781. https://doi.org/10.1109/JIOT.2020.3007751

Acknowledgements

This work is supported by Beijing Natural Science Foundation (4192007), and supported by the National Natural Science Foundation of China (61202076), along with other government sponsors. The authors would like to thank the reviewers for their efforts and for providing helpful suggestions that have led to several important improvements in our work. We would also like to thank all teachers and students in our laboratory for helpful discussions.

Funding

This work is supported by Beijing Natural Science Foundation (4192007), and supported by the National Natural Science Foundation of China (61202076), along with other government sponsors.

Author information

Contributions

To better control the prefetch aggressiveness of each core in heterogeneous multi-core systems, we propose a prefetch control strategy based on an improved hill-climbing method. Han Kong and Min Cai ran the comparative experiments on big and little cores under different degrees of prefetch aggressiveness and wrote the second half of Section 2. Juan Fang and Yixiang Xu designed the improved hill-climbing method and wrote Section 3. Yixiang Xu prepared all figures and tables in the manuscript and analyzed the statistics presented in Section 4. All authors wrote the rest of the manuscript and reviewed the whole manuscript.

Ethics declarations

Conflict of interest

The authors declare no potential conflicts of interest with respect to the research, authorship, or publication of this article.

Ethical approval

Not applicable.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Fang, J., Xu, Y., Kong, H. et al. A prefetch control strategy based on improved hill-climbing method in asymmetric multi-core architecture. J Supercomput 79, 10570–10588 (2023). https://doi.org/10.1007/s11227-023-05078-6

DOI: https://doi.org/10.1007/s11227-023-05078-6