Abstract

We develop a comprehensive methodological workflow for Bayesian modelling of high-dimensional spatial extremes that lets us describe both weakening extremal dependence at increasing levels and changes in the type of extremal dependence class as a function of the distance between locations. This is achieved with a latent Gaussian version of the spatial conditional extremes model that allows for computationally efficient inference with R-INLA. Inference is made more robust using a post hoc adjustment method that accounts for possible model misspecification. This added robustness makes it possible to extract more information from the available data during inference using a composite likelihood. The developed methodology is applied to the modelling of extreme hourly precipitation from high-resolution radar data in Norway. Inference is performed quickly, and the resulting model fit successfully captures the main trends in the extremal dependence structure of the data. The post hoc adjustment is found to further improve model performance.


1 Introduction

The effects of climate change and the increasing availability of large high-quality data sets have led to a surge of research on the modelling of spatial extremes (e.g., Simpson and Wadsworth 2021; Vandeskog et al. 2022; Opitz et al. 2018; Richards et al. 2022; Koch et al. 2021; Castro-Camilo et al. 2019; Shooter et al. 2019; Koh et al. 2023). Such modelling is challenging for two main reasons: (1) classical extreme value models are often not flexible enough to provide realistic descriptions of extremal dependence, and (2) inference can be computationally demanding or intractable, so modellers must often rely on less efficient inference methods; see Huser and Wadsworth (2022) for a review of these challenges. In this paper, we propose a comprehensive methodological workflow, as well as practical strategies, for performing efficient, robust and flexible high-dimensional modelling of spatial extremes.

An important focus in spatial extreme value analysis is the characterisation of a random process’ asymptotic dependence properties (e.g., Coles et al. 1999). Two random variables with a positive limiting probability to experience their extremes simultaneously are denoted asymptotically dependent. Otherwise, they are denoted asymptotically independent. As demonstrated by Sibuya (1960), two asymptotically independent random variables may still be highly correlated and display strong so-called sub-asymptotic dependence. Thus, correct estimation of both asymptotic and sub-asymptotic dependence properties is of utmost importance when assessing the risks of spatial extremes.

Most classical spatial extreme value models are based on max-stable processes (Davison et al. 2012, 2019). These allow for rich modelling of asymptotic dependence, but are often too rigid in their descriptions of asymptotic independence and sub-asymptotic dependence. Other approaches have been proposed, such as scale-mixture models (Engelke et al. 2019; Huser and Wadsworth 2019), which allow for rich modelling of both asymptotic dependence and independence, and a more flexible description of sub-asymptotic dependence. However, these models require that all location pairs share the same asymptotic dependence class, which is problematic, as one would expect neighbouring locations to be asymptotically dependent and far-away locations to be asymptotically independent. Max-mixture models (Wadsworth and Tawn 2012) allow for more flexible descriptions of sub-asymptotic dependence, and for changes in the asymptotic dependence class as a function of distance. However, it is often difficult to estimate the key model parameter, which describes the transition between extremal dependence classes. Additionally, these models must often rely on less efficient inference methods. Further improvements are given by the kernel convolution model of Krupskii and Huser (2022), more recent scale-mixture models such as that of Hazra et al. (2021), and the spatial conditional extremes model of Wadsworth and Tawn (2022), all allowing for flexible modelling of different extremal dependence classes as a function of distance. The spatial conditional extremes model allows for a particularly simple way of modelling spatial extremes. It is based on the conditional extremes model of Heffernan and Tawn (2004), Heffernan and Resnick (2007), which describes the behaviour of a random vector conditional on one of its components being extreme, and it can be interpreted as a semi-parametric regression model, which makes it intuitive and simple to tailor or extend. 
Due to its high flexibility and conceptual simplicity, this is our chosen model for high-dimensional spatial extremes.

To make the spatial conditional extremes model computationally efficient in higher dimensions, Wadsworth and Tawn (2022) propose to model spatial dependence using a residual random process with a Gaussian copula and delta-Laplace marginal distributions. However, inference for Gaussian processes typically requires computing the inverse of the covariance matrix, whose cost scales cubically with the model dimension. Thus, Simpson et al. (2023) replace the delta-Laplace process by a Gaussian Markov random field (Rue and Held 2005) created using the so-called stochastic partial differential equations (SPDE) approach of Lindgren et al. (2011). Then, to achieve fast Bayesian inference, Simpson et al. (2023) change the spatial conditional extremes model into a latent Gaussian model, to perform inference using integrated nested Laplace approximations (INLA; Rue et al. 2009), implemented in the R-INLA software (Rue et al. 2017). In this paper, we build upon the work of Simpson et al. (2023) and develop a more general methodology for modelling spatial conditional extremes with R-INLA. We also point out a theoretical weakness in the constraining methods proposed by Wadsworth and Tawn (2022) and used by Simpson et al. (2023), and we demonstrate a computationally efficient way of fixing it.

Most statistical models for extremes rely on asymptotic arguments and assumptions. Therefore, some misspecification will always be present when modelling finite data sets. Model choices made purely for computational reasons, such as the Markov assumption of the SPDE model, may produce further misspecification. This complicates Bayesian inference and can result in misleading posterior distributions (Ribatet et al. 2012; Kleijn and van der Vaart 2012). Shaby (2014) proposes a method for more robust inference through a post hoc transformation of posterior samples created using Markov chain Monte Carlo (MCMC) methods. We develop a refined version of his method, and use it to achieve more robust inference with R-INLA.

As extreme behaviour is, by definition, rare, inference with the conditional extremes model often relies on a composite likelihood that combines data from different conditioning sites under the working assumption of independence (Heffernan and Tawn 2004; Shooter et al. 2019; Wadsworth and Tawn 2022; Richards et al. 2022; Simpson and Wadsworth 2021). However, composite likelihoods can lead to large amounts of misspecification (Ribatet et al. 2012). Simpson et al. (2023) therefore abstain from using them when performing Bayesian inference with R-INLA. Shooter et al. (2019) perform Bayesian inference with a composite likelihood, but they justify their choice by stating that correct estimation of the model uncertainty is of secondary importance for their work. We show that our post hoc adjustment method accounts for the misspecification caused by a composite likelihood, thus allowing for more efficient and reliable inference using considerably more data.

In summary, we develop a workflow for high-dimensional modelling of spatial extremes using the spatial conditional extremes model. We improve upon the work of Simpson et al. (2023) by developing a more general, flexible and computationally efficient methodology for modelling spatial conditional extremes with R-INLA and the SPDE approach. Then, we make inference more robust towards misspecification by extending the post hoc adjustment method of Shaby (2014), and we use this adjustment to obtain efficient inference by combining information from multiple conditioning sites.

The remainder of the paper is organised as follows: In Sect. 2, the spatial conditional extremes model is presented as a flexible choice for modelling spatial extremes. Modifications and assumptions that allow for computationally efficient inference with improved data utilisation are also presented. In Sect. 3, we develop a general methodology for implementing a large variety of spatial conditional extremes models in R-INLA. Section 4 examines the problems that can occur when performing Bayesian inference based on a misspecified likelihood, and demonstrates how to perform more robust inference with R-INLA by accounting for possible misspecification. In Sect. 5, our workflow is applied to modelling extreme hourly precipitation from high-resolution radar data in Norway. Finally, we conclude in Sect. 6 with some discussion and perspectives on future research.

2 Flexible modelling with spatial conditional extremes

2.1 The spatial conditional extremes model

Let \(Y(\varvec{s})\) be a random process defined over space \((\varvec{s} \in \mathcal S \subset \mathbb R^2)\) with Laplace margins. For this random process, Wadsworth and Tawn (2022) assume the existence of standardising functions \(a(\varvec{s}; \varvec{s}_0, y_0)\) and \(b(\varvec{s}; \varvec{s}_0, y_0)\) such that, for a large enough threshold \(t\),

\[\left[ Y(\varvec{s}) \mid Y(\varvec{s}_0) = y_0 > t\right] \overset{d}{=} a(\varvec{s}; \varvec{s}_0, y_0) + b(\varvec{s}; \varvec{s}_0, y_0) Z(\varvec{s}; \varvec{s}_0), \quad \varvec{s} \in \mathcal S, \tag{1}\]

where \(Z(\varvec{s}; \varvec{s}_0)\) is a random process satisfying \(Z(\varvec{s}_0; \varvec{s}_0) = 0\) almost surely, and \(a(\varvec{s}; \varvec{s}_0, y_0) \le y_0\), with equality when \(\varvec{s} = \varvec{s}_0\). Asymptotic dependence can be measured with the extremal correlation coefficient

\[\chi (\varvec{s}_1, \varvec{s}_2) = \lim _{p \rightarrow 1} \chi _p(\varvec{s}_1, \varvec{s}_2), \quad \chi _p(\varvec{s}_1, \varvec{s}_2) = \text {P}\left( Y(\varvec{s}_1) > F^{-1}_Y(p) \mid Y(\varvec{s}_2) > F^{-1}_Y(p)\right) ,\]

where \(F^{-1}_Y(p)\) is the marginal quantile function of \(Y(\varvec{s})\). If \(\chi (\varvec{s}_1, \varvec{s}_2) > 0\), then \(Y(\varvec{s}_1)\) and \(Y(\varvec{s}_2)\) are asymptotically dependent, whereas if \(\chi (\varvec{s}_1, \varvec{s}_2) = 0\), they are asymptotically independent. Furthermore, \(Y(\varvec{s})\) and \(Y(\varvec{s}_0)\) are asymptotically dependent when \(a(\varvec{s}; \varvec{s}_0, y_0) = y_0\) and \(b(\varvec{s}; \varvec{s}_0, y_0) = 1\), while they are asymptotically independent when \(a(\varvec{s}; \varvec{s}_0, y_0) < y_0\) (Heffernan and Tawn 2004). However, under asymptotic independence, the convergence of \(\chi _p(\cdot )\) to \(\chi (\cdot )\) is slower for larger values of \(a(\cdot )\) and \(b(\cdot )\).
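To make the distinction between \(\chi _p\) and its limit \(\chi \) concrete, the following minimal sketch estimates \(\chi _p\) empirically for a simulated pair with a Gaussian copula, which is asymptotically independent whenever the correlation is below one (Sibuya 1960). The function name `empirical_chi`, the sample size and the correlation of 0.8 are illustrative assumptions, not part of the methodology of this paper.

```python
import random

def empirical_chi(x1, x2, p):
    """Empirical chi_p: share of exceedances of x2 that are also exceedances of x1."""
    n = len(x1)
    k = min(int(p * n), n - 1)  # position of the empirical p-quantile
    q1, q2 = sorted(x1)[k], sorted(x2)[k]
    exceed = [i for i in range(n) if x2[i] > q2]
    if not exceed:
        raise ValueError("no exceedances above the chosen quantile")
    return sum(1 for i in exceed if x1[i] > q1) / len(exceed)

rng = random.Random(1)
n = 20000
z1 = [rng.gauss(0.0, 1.0) for _ in range(n)]
# Second variable: correlation 0.8 with the first (Gaussian copula, hypothetical choice)
z2 = [0.8 * z + 0.6 * rng.gauss(0.0, 1.0) for z in z1]

# chi_p at increasing levels; only ranks matter, so no marginal transformation is needed
chi_levels = {p: empirical_chi(z1, z2, p) for p in (0.9, 0.99, 0.999)}
for p, chi in chi_levels.items():
    print(f"p = {p}: chi_p = {chi:.3f}")
```

For such data one would expect the estimates to decrease as \(p\) grows, up to sampling noise, even though the two variables are strongly correlated. This is the kind of sub-asymptotic dependence that the model should be able to capture.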

Wadsworth and Tawn (2022) provide some guidance on parametric functions for \(a(\cdot )\) and \(b(\cdot )\), together with parametric distributions for \(Z(\cdot )\), that cover a large range of already existing extreme value models. For \(a(\cdot )\) they propose the parametric function

\[a(\varvec{s}; \varvec{s}_0, y_0) = y_0 \alpha (\Vert \varvec{s} - \varvec{s}_0\Vert ), \quad \alpha (d) = {\left\{ \begin{array}{ll} 1, &{} d \le \Delta , \\ \exp \left\{ -\left[ (d - \Delta ) / \lambda _a\right] ^{\kappa _a}\right\} , &{} d > \Delta , \end{array}\right. }\]

with parameters \(\Delta \ge 0\) and \(\lambda _a, \kappa _a > 0\). This may lead to asymptotic dependence for locations closer to \(\varvec{s}_0\) than the distance \(\Delta \), and asymptotic independence for distances larger than \(\Delta \), with weakening sub-asymptotic dependence as the distance to \(\varvec{s}_0\) increases. To the best of our knowledge, this model (and its sub-models) is the most popular choice for \(a(\cdot )\) in the literature. Several functions are proposed for \(b(\cdot )\), including \(b(\varvec{s}; \varvec{s}_0, y_0) = y_0^\beta \), given that \(\Delta = 0\). This results in asymptotic independence with positive dependence, where the \(\beta \) parameter helps to control the speed of convergence of \(\chi _p(\varvec{s}_1, \varvec{s}_2)\) to \(\chi (\varvec{s}_1, \varvec{s}_2)\). A weakness of this form is that it enforces the same positive dependence for all distances \(\Vert \varvec{s} - \varvec{s}_0\Vert \), including large ones where \(Y(\varvec{s})\) should be independent of \(Y(\varvec{s}_0)\). To remedy this issue, Wadsworth and Tawn (2022) also propose the model \(b(\varvec{s}; \varvec{s}_0, y_0) = 1 + a(\varvec{s}; \varvec{s}_0, y_0)^\beta \), which converges to one as the distance increases. Alternatively, Shooter et al. (2021) and Richards et al. (2022) propose different models of the form \(b(\varvec{s}; \varvec{s}_0, y_0) = y_0^{\beta (\Vert \varvec{s} - \varvec{s}_0\Vert )}\), where they let the function \(\beta (d)\) converge to zero as \(d \rightarrow \infty \).
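The behaviour of these standardising functions can be sketched in a few lines. The snippet below assumes that \(a(\varvec{s}; \varvec{s}_0, y_0) = y_0 \alpha (d)\) with \(d = \Vert \varvec{s} - \varvec{s}_0\Vert \), \(\alpha (d) = 1\) for \(d \le \Delta \) and \(\alpha (d) = \exp \{-[(d - \Delta )/\lambda _a]^{\kappa _a}\}\) otherwise. This piecewise form is our reading of the parametrisation described above and should be treated as an assumption, as should all function names and default parameter values.

```python
import math

def alpha(d, delta, lam, kappa):
    """Assumed decay: equal to 1 up to distance delta, then a stretched exponential."""
    if d <= delta:
        return 1.0
    return math.exp(-(((d - delta) / lam) ** kappa))

def a_fun(d, y0, delta=0.0, lam=5.0, kappa=1.0):
    """Location function a = y0 * alpha(d); default values are purely illustrative."""
    return y0 * alpha(d, delta, lam, kappa)

def b_power(y0, beta):
    """b = y0^beta: the same scaling at every distance."""
    return y0 ** beta

def b_one_plus_a(d, y0, beta, **a_args):
    """b = 1 + a^beta: tends to one as the distance grows."""
    return 1.0 + a_fun(d, y0, **a_args) ** beta

y0 = 4.0
for d in (0.0, 2.0, 10.0, 50.0):
    print(d, round(a_fun(d, y0, delta=3.0), 4), round(b_one_plus_a(d, y0, 0.5, delta=3.0), 4))
```

Within the distance \(\Delta \) the function returns \(y_0\) itself, and beyond it the value decays towards zero, while \(1 + a^\beta \) decays towards one.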

Clearly, the choice of \(a(\cdot )\) and \(b(\cdot )\) should depend on the application. Therefore, in Sect. 3, we develop a general methodology for implementing the spatial conditional extremes model in R-INLA for any function \(a(\cdot )\) and \(b(\cdot )\). In addition, in Sect. 5, we provide practical guidance and diagnostics on how to choose \(a(\cdot )\) and \(b(\cdot )\).

2.2 Modifications for high-dimensional modelling

To perform high-dimensional inference, Wadsworth and Tawn (2022) propose to model \(Z(\cdot )\) as a random process with a Gaussian copula and delta-Laplace marginal distributions. Their model has later been used by, e.g., Shooter et al. (2021), Shooter et al. (2022), Shooter et al. (2021) and Richards et al. (2022). However, to perform fast Bayesian inference with R-INLA, Simpson et al. (2023) modify (1) into a latent Gaussian model by adding a Gaussian nugget effect and requiring \(Z(\cdot )\) to be a fully Gaussian random field. This gives the model

\[\left[ Y(\varvec{s}) \mid Y(\varvec{s}_0) = y_0 > t\right] \overset{d}{=} a(\varvec{s}; \varvec{s}_0, y_0) + b(\varvec{s}; \varvec{s}_0, y_0) Z(\varvec{s}; \varvec{s}_0) + \epsilon (\varvec{s}; \varvec{s}_0), \tag{3}\]

where \(\epsilon (\varvec{s}; \varvec{s}_0)\) is Gaussian white noise with constant variance, satisfying \(\epsilon (\varvec{s}_0; \varvec{s}_0) = 0\) almost surely. They further assume that \(Z(\cdot )\) is a Gaussian Markov random field (GMRF), i.e., a Gaussian random field with a sparse and low-rank precision matrix, created using the SPDE approach of Lindgren et al. (2011). The fact that \(Y(\varvec{s})\) has Laplace marginal distributions, but is modelled using a fully Gaussian random field, clearly leads to some model misspecification. We nevertheless adopt the same assumption, as we find it necessary for performing truly high-dimensional Bayesian inference with R-INLA. Unlike Simpson et al. (2023), we account for this misspecification using the robustifying approach described in Sect. 4.

2.3 Efficient data utilisation with a composite likelihood

The spatial conditional extremes model describes a spatial process given that it experiences extreme behaviour at a predefined conditioning site. When the conditioning site only contains few extremes, inference can be challenging. It is therefore common to assume stationarity, in the sense that the parameters of \(a(\cdot )\), \(b(\cdot )\), \(Z(\cdot )\) and \(\epsilon (\cdot )\) are independent of the conditioning site. Under such stationarity, information from multiple conditioning sites can be combined into one global model fit, using the composite likelihood of Heffernan and Tawn (2004) and Wadsworth and Tawn (2022). Given observations \(\mathcal Y = \{y_i(\varvec{s}_j): i = 1, 2, \ldots , n, j = 1, 2, \ldots , m\}\) from \(n\) time points and \(m\) locations, the composite log-likelihood may be expressed as

\[\ell _c(\varvec{\theta }; \mathcal Y) = \sum _{j = 1}^m \sum _{i = 1}^n \ell (\varvec{\theta }; \varvec{y}_{i, -j} \mid y_i(\varvec{s}_j)) I(y_i(\varvec{s}_j) > t), \tag{4}\]

where \(\ell (\cdot )\) is the log-likelihood of the conditional extremes model, \(\varvec{y}_{i, -j}\) is an \((m - 1)\)-dimensional vector containing observations from time point \(i\), for all locations except \(\varvec{s}_j\), \(I(\cdot )\) is the indicator function and \(\varvec{\theta }\) contains all parameters of the spatial conditional extremes model. If \(m\) is too large, one may choose to build the composite likelihood using only a subset of the available conditioning sites, and \(\varvec{y}_{i, -j}\) may be modified to contain only a subset of the available observations from time point \(i\), which may vary with both \(i\) and \(j\).
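The structure of the composite log-likelihood, a double sum over time points and conditioning sites that keeps only the terms whose conditioning value exceeds the threshold, can be sketched as follows. The function `toy_loglik` is a hypothetical stand-in for the conditional extremes log-likelihood (independent Gaussian residuals around \(\alpha y_0\)), chosen only to keep the example self-contained. The data values are made up.

```python
import math

def composite_loglik(theta, data, threshold, loglik):
    """Sum loglik over every (time, site) pair whose conditioning value exceeds the threshold."""
    total = 0.0
    for y_i in data:                      # one list of observations per time point
        for j, y0 in enumerate(y_i):      # every site is a candidate conditioning site
            if y0 > threshold:
                others = y_i[:j] + y_i[j + 1:]   # observations at all other sites
                total += loglik(theta, others, y0)
    return total

def toy_loglik(theta, others, y0):
    """Hypothetical stand-in: independent N(alpha * y0, sigma^2) terms, not the real model."""
    alpha, sigma = theta
    return sum(
        -0.5 * math.log(2.0 * math.pi * sigma ** 2) - 0.5 * ((y - alpha * y0) / sigma) ** 2
        for y in others
    )

# Three time points, three sites (made-up numbers on the Laplace scale)
data = [[3.2, 2.9, 0.4], [0.1, -0.3, 0.2], [1.0, 4.1, 3.8]]
threshold = 3.0
n_terms = sum(1 for y_i in data for y in y_i if y > threshold)
value = composite_loglik((0.8, 1.0), data, threshold, toy_loglik)
print(n_terms, value)
```

Note that the third time point contributes two terms that share observations, which is exactly why the composite likelihood is not a valid likelihood.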

The composite likelihood is not a valid likelihood, since multiple terms in (4) may contain the same observations. Incorrectly interpreting the composite likelihood as a true likelihood is therefore tantamount to specifying a model in which \([\varvec{y}_{i, -j} \mid y_i(\varvec{s}_j)]\) is (wrongly) assumed to be independent from \([\varvec{y}_{i, -k} \mid y_i(\varvec{s}_k)]\) for all time points \(i\) and location pairs \((\varvec{s}_j,\varvec{s}_k)\) with \(y_i(\varvec{s}_j) > t\) and \(y_i(\varvec{s}_k) > t\). This interpretation is, however, implicit when we perform inference using a composite likelihood. This form of model misspecification should be accounted for before drawing conclusions from the model fit. We show how to do this in Sect. 4. To the best of our knowledge, this is the first attempt to perform Bayesian inference for the spatial conditional extremes model based on a composite likelihood, while also attempting to adjust the uncertainty estimates to account for any misspecification caused by the composite likelihood.

3 Fast inference using R-INLA

3.1 Latent Gaussian model framework

R-INLA performs inference on latent Gaussian models of the form

\[\begin{aligned} \varvec{y} \mid \varvec{u}, \varvec{\theta }_1 &\sim \prod _{i = 1}^n \pi (y_i \mid \eta _i(\varvec{u}), \varvec{\theta }_1), \\ \varvec{u} \mid \varvec{\theta }_2 &\sim \mathcal N\left( \varvec{\mu }(\varvec{\theta }_2), \varvec{Q}(\varvec{\theta }_2)^{-1}\right) , \\ \varvec{\theta }= (\varvec{\theta }_1^{\top }, \varvec{\theta }_2^{\top })^{\top } &\sim \pi (\varvec{\theta }_1) \pi (\varvec{\theta }_2), \end{aligned}\]

where \(\varvec{u}\) is a latent Gaussian field with mean \(\varvec{\mu }(\varvec{\theta }_2)\) and precision matrix \(\varvec{Q}(\varvec{\theta }_2)\), the hyperparameters \(\varvec{\theta }= (\varvec{\theta }_1^{\top }, \varvec{\theta }_2^{\top })^{\top }\) are assigned priors \(\pi (\varvec{\theta }_1)\) and \(\pi (\varvec{\theta }_2)\), and observations \(\varvec{y} = (y_1, \ldots , y_n)^{\top }\) are linked to the latent field through the linear predictor \(\varvec{\eta }= (\eta _1(\varvec{u}), \ldots , \eta _n(\varvec{u}))^{\top } = \varvec{A} \varvec{u}\), where \(\varvec{A}\) is a known design matrix. This linear predictor defines the location parameter of the likelihood \(\pi (\varvec{y} \mid \varvec{\eta }, \varvec{\theta }_1)\), via a possibly non-linear link function. All observations are assumed to be conditionally independent given \(\varvec{\eta }\) and \(\varvec{\theta }_1\), so that \(\pi (\varvec{y}\mid \varvec{\eta }, \varvec{\theta }_1) = \prod _{i = 1}^n\pi ( y_i\mid \eta _i(\varvec{u}), \varvec{\theta }_1)\). The linear predictor can be decomposed into \(N \ge 1\) components, \(\varvec{\eta }= \varvec{A}^{(1)} \varvec{u}^{(1)} + \dots + \varvec{A}^{(N)}\varvec{u}^{(N)}\), where each component represents, e.g., an intercept term, a linear combination of regression coefficients, an SPDE component, etc. R-INLA offers a large pool of predefined likelihoods, priors and components. In addition, the user is able to implement new priors and components, but not new likelihoods.

The spatial conditional extremes model in (3) is a latent Gaussian model with a Gaussian likelihood, where the linear predictor is equal to \(a(\varvec{s}; \varvec{s}_0, y_0) + b(\varvec{s}; \varvec{s}_0, y_0) Z(\varvec{s}; \varvec{s}_0)\), and the hyperparameter \(\varvec{\theta }_2\) contains the parameters of \(a(\cdot )\), \(b(\cdot )\) and \(Z(\cdot )\), while \(\theta _1\) is the variance of the nugget effect. R-INLA does not contain suitable predefined model components for the linear predictor for most choices of \(a(\cdot )\) and \(b(\cdot )\), so we must define these manually. To define a new R-INLA component \(\varvec{u}^{(N + 1)}\), with parameters \(\varvec{\theta }^{(N + 1)}\), one can use the rgeneric/cgeneric frameworks. Necessary inputs are functions, written in R or C, respectively, that compute the mean vector, precision matrix and prior density of \(\varvec{u}^{(N + 1)}\) for any value of \(\varvec{\theta }^{(N + 1)}\). The cgeneric framework is faster but requires knowledge of the lower-level C programming language. In this paper, we propose a method for defining the linear predictor using the rgeneric/cgeneric frameworks, for any kind of functions \(a(\cdot )\) and \(b(\cdot )\). A code example, used in Sect. 5, is also provided in the online Supplementary Material.

3.2 Defining \(b(\varvec{s}; \varvec{s}_0, y_0) Z(\varvec{s}; \varvec{s}_0)\) in R-INLA

The SPDE approach creates a GMRF \({\widehat{Z}}(\varvec{s})\) that approximates a Gaussian random field \(Z(\varvec{s})\) with Matérn covariance function

\[\text {Cov}\left( Z(\varvec{s}), Z(\varvec{s}')\right) = \frac{\sigma ^2}{2^{\nu - 1} \Gamma (\nu )} \left( \kappa \Vert \varvec{s} - \varvec{s}'\Vert \right) ^\nu K_\nu \left( \kappa \Vert \varvec{s} - \varvec{s}'\Vert \right) ,\]

where \(\sigma ^2\) is the marginal variance, \(\nu > 0\) is a smoothness parameter, \(\rho = \sqrt{8 \nu } / \kappa \) is a range parameter and \(K_\nu \) is the modified Bessel function of the second kind and order \(\nu \). The smoothness parameter \(\nu \) is difficult to estimate from data and is therefore often given a fixed value (Lindgren and Rue 2015). Moreover, in the default R-INLA implementation of the SPDE approximation, it is in fact not possible to estimate the smoothness parameter, which must be fixed when performing inference. A rational approximation approach for the SPDE method has been developed that makes it possible to estimate the smoothness parameter (Bolin et al. 2024; Bolin and Simas 2023; Bolin and Kirchner 2020). However, to keep our cgeneric component for \(b(\varvec{s}, \varvec{s}_0, y_0) Z(\varvec{s}, \varvec{s}_0)\) as simple and robust as possible, we base our model on the default SPDE representation with a fixed smoothness parameter.

The SPDE approximation \({\widehat{Z}}(\varvec{s})\) is constructed as a linear combination of Gaussian Markov random variables on a triangulated mesh, i.e., \({\widehat{Z}}(\varvec{s}) = \sum _{i = 1}^M \phi _i(\varvec{s}) W_i\), where the random variables \(W_1, \ldots , W_M\) are from a Gaussian Markov random field and \(\phi _1, \ldots , \phi _M\) are piecewise linear basis functions. To approximate the non-stationary Gaussian random field \(b(\varvec{s}; \varvec{s}_0, y_0) Z(\varvec{s})\) with the SPDE approach, for any function \(b(\varvec{s}; \varvec{s}_0, y_0)\), we modify the weights \(W_i\) to get

\[{\widehat{Z}}_b(\varvec{s}; \varvec{s}_0, y_0) = \sum _{i = 1}^M \phi _i(\varvec{s}) b(\varvec{s}_i; \varvec{s}_0, y_0) W_i,\]

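where \(\varvec{s}_1, \ldots , \varvec{s}_M\) are the locations of the \(M\) mesh nodes. This shares some similarities with the approach of Ingebrigtsen et al. (2014) for implementing non-stationary SPDE fields. Since \({\widehat{Z}}_b(\cdot )\) is a linear combination of Gaussian random variables, its variance equals

\[\text {Var}\left( {\widehat{Z}}_b(\varvec{s}; \varvec{s}_0, y_0)\right) = \sum _{i = 1}^M \sum _{j = 1}^M \phi _i(\varvec{s}) \phi _j(\varvec{s}) b(\varvec{s}_i; \varvec{s}_0, y_0) b(\varvec{s}_j; \varvec{s}_0, y_0) \text {Cov}(W_i, W_j),\]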

which differs from \(b(\varvec{s}; \varvec{s}_0, y_0)^2 \sigma ^2\), the variance of \(b(\varvec{s}; \varvec{s}_0, y_0) Z(\varvec{s})\). If \(\varvec{s}\) coincides with a mesh node, then one of the basis functions equals \(1\), while the others equal \(0\), giving \(\text {Var}\left( {\widehat{Z}}_b(\varvec{s}; \varvec{s}_0, y_0)\right) = b(\varvec{s}; \varvec{s}_0, y_0)^2 \text {Var}(W_i)\), which is much closer to the correct variance. If, however, \(\varvec{s}\) is far away from a mesh node, the variance of \({\widehat{Z}}_b(\varvec{s}; \varvec{s}_0, y_0)\) may be considerably different from \(b(\varvec{s}; \varvec{s}_0, y_0)^2 \sigma ^2\). We therefore recommend using a fine mesh, so that all observation locations are close enough to a mesh node.
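The effect of the distance to the nearest mesh node can be illustrated with a one-dimensional toy mesh consisting of two nodes at \(0\) and \(1\), with piecewise linear basis functions \(\phi _1(s) = 1 - s\) and \(\phi _2(s) = s\). The sketch below evaluates the double sum \(\sum _{i, j} \phi _i \phi _j b_i b_j \text {Cov}(W_i, W_j)\) for this mesh. The values of \(b\), the variance and the correlation between the two weights are illustrative assumptions.

```python
def var_zb(s, b_nodes, cov_w):
    """Variance of sum_i phi_i(s) * b_i * W_i on a two-node mesh over [0, 1]."""
    phi = [1.0 - s, s]  # piecewise linear basis functions evaluated at s
    return sum(
        phi[i] * phi[j] * b_nodes[i] * b_nodes[j] * cov_w[i][j]
        for i in range(2)
        for j in range(2)
    )

sigma2, corr = 1.0, 0.6                 # hypothetical Var(W_i) and Corr(W_1, W_2)
cov_w = [[sigma2, corr * sigma2], [corr * sigma2, sigma2]]
b_nodes = [2.0, 2.0]                    # b evaluated at the two mesh nodes

at_node = var_zb(0.0, b_nodes, cov_w)   # equals b^2 * Var(W_1)
midpoint = var_zb(0.5, b_nodes, cov_w)  # smaller, since Corr(W_1, W_2) < 1
print(at_node, midpoint)
```

The variance deficit at the midpoint grows as the correlation between neighbouring weights decreases, that is, as the mesh becomes coarser relative to the range of the field.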

The process \({\widehat{Z}}_b(\cdot )\) approximates an unconstrained Gaussian random field. What we eventually need is to approximate the constrained field \(b(\varvec{s}; \varvec{s}_0, y_0) Z(\varvec{s}; \varvec{s}_0)\), where \(Z(\varvec{s}_0; \varvec{s}_0) = 0\) almost surely. Wadsworth and Tawn (2022) describe two methods for turning an unconstrained Gaussian field \(Z(\varvec{s})\) into a constrained field \(Z(\varvec{s}; \varvec{s}_0)\). The first is constraining by conditioning, i.e., \(Z(\varvec{s}; \varvec{s}_0) = [Z(\varvec{s}) \mid Z(\varvec{s}_0) = 0]\); and the second is constraining by subtraction, i.e., \(Z(\varvec{s}; \varvec{s}_0) = Z(\varvec{s}) - Z(\varvec{s}_0)\). In their case studies, Wadsworth and Tawn (2022) use the first method, while Simpson et al. (2023) use the second method. We argue that constraining by subtraction yields unrealistic dependence structures, and should be avoided if other alternatives are available. A quick computation indeed shows that, if \(Z(\varvec{s}; \varvec{s}_0)\) is a process obtained by constraining a stationary random process through subtraction, then the limiting correlation between \(Z(c \varvec{s}; \varvec{s}_0)\) and \(Z(-c \varvec{s}; \varvec{s}_0)\), as \(c \rightarrow \infty \) equals \(1/2\). Furthermore, the limiting correlation of \(Z(\varvec{s}_0 + \Delta \varvec{s}; \varvec{s}_0)\) and \(Z(\varvec{s}_0 - \Delta \varvec{s}; \varvec{s}_0)\) as \(\Vert \Delta \varvec{s}\Vert \rightarrow 0\) is often negative. Such a limiting correlation (at infinitesimal distances) is equal to \(0\)/\(-1\) if the unconstrained random field has an exponential/Gaussian correlation function, respectively. Thus, points that are infinitely distant are strongly correlated while points that are infinitesimally close to each other might be negatively correlated or independent.
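The limiting correlations stated above can be checked numerically. For a stationary, unit-variance field with correlation function \(r(\cdot )\), the covariance between \(Z(\varvec{s}_1) - Z(\varvec{s}_0)\) and \(Z(\varvec{s}_2) - Z(\varvec{s}_0)\) is \(r(h_{12}) - r(h_1) - r(h_2) + 1\), and the variances are \(2 - 2 r(h_1)\) and \(2 - 2 r(h_2)\), where \(h_1\), \(h_2\) and \(h_{12}\) denote the relevant distances. The sketch below evaluates this for points placed symmetrically around \(\varvec{s}_0\) on a line. The function names and the unit range parameters are our own choices.

```python
import math

def corr_subtracted(h1, h2, h12, r):
    """Correlation of Z(s1) - Z(s0) and Z(s2) - Z(s0) for a unit-variance stationary field.

    h1 = |s1 - s0|, h2 = |s2 - s0|, h12 = |s1 - s2|, r = correlation function.
    """
    cov = r(h12) - r(h1) - r(h2) + 1.0
    var1 = 2.0 - 2.0 * r(h1)
    var2 = 2.0 - 2.0 * r(h2)
    return cov / math.sqrt(var1 * var2)

def exponential(h):
    return math.exp(-h)

def gaussian(h):
    return math.exp(-h * h)

# Points at +c and -c around s0 = 0, so the distance between them is 2c
far = corr_subtracted(50.0, 50.0, 100.0, exponential)
near_exp = corr_subtracted(1e-3, 1e-3, 2e-3, exponential)
near_gauss = corr_subtracted(1e-3, 1e-3, 2e-3, gaussian)
print(far, near_exp, near_gauss)
```

The three values are close to \(1/2\), \(0\) and \(-1\), respectively, in agreement with the limits given in the text.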

Constraining by conditioning for the random field \(\widehat{Z}_b(\cdot )\) can easily be implemented in R-INLA by using the extraconstr option. However, this entails extra computations involving an \((n \times k)\)-dimensional dense matrix, where \(n\) is the number of rows of the precision matrix and \(k\) is the number of added constraints. In practice, we find that this extra computational cost quickly becomes prohibitive for large data sets.

We propose another way of constraining \({\widehat{Z}}_b(\cdot )\) by conditioning. It is known that, for a zero-mean Gaussian random vector \(\varvec{y} = (\varvec{y}_1^{\top }, \varvec{y}_2^{\top })^{\top }\) with precision matrix \(\varvec{Q} = \left( \begin{array}{ll} \varvec{Q}_{11} &{} \varvec{Q}_{12} \\ \varvec{Q}_{21} &{} \varvec{Q}_{22} \end{array}\right) \), the conditional distribution of \([\varvec{y}_1 \mid \varvec{y}_2 = \varvec{0}]\) is Gaussian with zero mean and precision matrix \(\varvec{Q}_{11}\) (Rue and Held 2005). Thus, if we ensure that a mesh node coincides with \(\varvec{s}_0\), we can constrain \(Z(\cdot )\) by removing all rows and columns of \(\varvec{Q}\) that correspond to the mesh node at \(\varvec{s}_0\). This requires no extra computational effort, and it is easily achievable using rgeneric/cgeneric within R-INLA.
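The precision-matrix identity behind this construction can be verified on a small example. The sketch below builds a hypothetical tridiagonal precision matrix for four nodes, lets node 1 play the role of \(\varvec{s}_0\), and compares the covariance obtained by deleting the corresponding row and column of \(\varvec{Q}\) with the classical conditional covariance \(\varvec{\Sigma }_{11} - \varvec{\Sigma }_{12} \varvec{\Sigma }_{22}^{-1} \varvec{\Sigma }_{21}\). The dense Gauss-Jordan inverse is used only for checking the identity. In practice one would never invert a sparse precision matrix this way.

```python
def inverse(m):
    """Dense Gauss-Jordan inverse with partial pivoting (for small checks only)."""
    n = len(m)
    aug = [list(map(float, row)) + [float(i == j) for j in range(n)] for i, row in enumerate(m)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [vr - f * vc for vr, vc in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]

def drop(m, k):
    """Remove row k and column k from a square matrix."""
    return [[v for j, v in enumerate(row) if j != k] for i, row in enumerate(m) if i != k]

# Hypothetical sparse precision matrix of a small GMRF; node k sits at s_0
Q = [[2.0, -1.0, 0.0, 0.0],
     [-1.0, 2.5, -1.0, 0.0],
     [0.0, -1.0, 2.5, -1.0],
     [0.0, 0.0, -1.0, 2.0]]
k = 1

# Route 1: delete row and column k of Q, then invert
cov_from_precision = inverse(drop(Q, k))

# Route 2: classical conditioning formula applied to the covariance matrix
sigma = inverse(Q)
keep = [i for i in range(len(Q)) if i != k]
cov_classical = [
    [sigma[i][j] - sigma[i][k] * sigma[k][j] / sigma[k][k] for j in keep] for i in keep
]
```

Both routes give the same covariance matrix, while the first one only requires dropping entries from a sparse matrix.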

3.3 Defining \(a(\varvec{s}; \varvec{s}_0, y_0)\) in R-INLA

In R-INLA, all latent Gaussian field components must be Gaussian random variables. However, \(a(\varvec{s}; \varvec{s}_0, y_0)\) is a deterministic function. Nevertheless, we can approximate any deterministic vector \(\varvec{a}\) as \(\varvec{a} + \varvec{\epsilon }\), where \(\varvec{\epsilon }\) is Gaussian with zero mean and a diagonal covariance matrix with small and fixed marginal variances. By using rgeneric/cgeneric, we can approximate any deterministic function \(a(\varvec{s}; \varvec{s}_0, y_0)\) with a latent Gaussian random field. Such a field has mean \(a(\varvec{s}; \varvec{s}_0, y_0)\) and a diagonal covariance matrix with marginal variances set to, e.g., \(\delta ^2 = \exp (-15)\).

4 Robust inference using post hoc adjustments

4.1 Adjusting posterior samples

All models are misspecified, because data always deviate from the model assumptions to a certain extent. This is particularly true for extreme value models that rely on imposing asymptotically justified assumptions onto finite amounts of data. It is also notable in the context of high-dimensional spatial models, where strict assumptions of (un)conditional independence and Gaussianity are often made for computational convenience. Accounting for this misspecification is therefore an important step for modelling high-dimensional spatial extremes.

Given \(n\) independent realisations \(\mathcal Y = \{\varvec{y}_1, \ldots , \varvec{y}_n\}\) of a random vector \(\varvec{Y}\) with true distribution \(G\), we aim to extract information about certain properties of G, using some function \(\ell (\varvec{\theta }; \varvec{y})\), by estimating the parameters \(\varvec{\theta }^*\) that give E\([\nabla _{\varvec{\theta }} \ell (\varvec{\theta }^*; \varvec{Y})] = 0\). The estimator, \(\hat{\varvec{\theta }}\), of \(\varvec{\theta }^*\), is then computed by solving \(\sum _{i = 1}^n \nabla _{\varvec{\theta }} \ell (\hat{\varvec{\theta }}; \varvec{y}_i) = 0\). The function \(\ell \) is most commonly chosen as a log-likelihood function, but it does not need to be so. Under some mild regularity conditions on G and \(\ell \), the estimator \(\hat{\varvec{\theta }}\) is asymptotically Gaussian (Godambe 1960; Godambe and Heyde 1987), i.e. \({\varvec{\mathcal {I}}}(\varvec{\theta }^*)^{1/2} \left( \widehat{\varvec{\theta }} - \varvec{\theta }^*\right) \rightsquigarrow \mathcal N(\varvec{0}, \varvec{I}),\) as \(n \rightarrow \infty ,\) where \(\varvec{I}\) is the identity matrix, and \({\varvec{\mathcal {I}}}(\varvec{\theta })\) is known as the Godambe sandwich information matrix,

\[{\varvec{\mathcal {I}}}(\varvec{\theta }) = \varvec{H}(\varvec{\theta }) \varvec{J}(\varvec{\theta })^{-1} \varvec{H}(\varvec{\theta }), \quad \varvec{J}(\varvec{\theta }) = \text {E}\left[ \nabla _{\varvec{\theta }} \ell (\varvec{\theta }; \varvec{Y}) \nabla _{\varvec{\theta }} \ell (\varvec{\theta }; \varvec{Y})^{\top }\right] , \quad \varvec{H}(\varvec{\theta }) = -\text {E}\left[ \nabla ^2_{\varvec{\theta }} \ell (\varvec{\theta }; \varvec{Y})\right] ,\]

where all expectations are taken with respect to \(G\). If \(\ell (\varvec{\theta }^*; \cdot )\) is the log-likelihood corresponding to \(G\), then \(\varvec{J}(\varvec{\theta }^*) = \varvec{H}(\varvec{\theta }^*)\), and \({\varvec{\mathcal {I}}}(\varvec{\theta }^*)\) reduces to \(\varvec{H}(\varvec{\theta }^*)\). If it is not, we say that we have a misspecified model, even in the case where \(\ell \) is, e.g. a composite log-likelihood, and thus not the logarithm of any valid likelihood function.

From a Bayesian perspective, given a prior \(\pi (\varvec{\theta })\) and appropriate regularity conditions, the generalised posterior density, \(\pi (\varvec{\theta }\mid \mathcal Y) \propto \exp \{\ell (\varvec{\theta }; \mathcal Y)\} \pi (\varvec{\theta })\), is known to converge asymptotically to a Gaussian density with mean \(\varvec{\theta }^*\) and covariance matrix \(\varvec{H}(\varvec{\theta }^*)^{-1}\) (Ribatet et al. 2012; Kleijn and van der Vaart 2012). As the sample size increases and the effect of the prior distribution diminishes, credible intervals and confidence intervals should ideally coincide. However, if the model is misspecified so \({\varvec{\mathcal {I}}}(\varvec{\theta }^*) \ne \varvec{H}(\varvec{\theta }^*)\), then the asymptotic \((1 - \alpha )\)-credible interval differs from all well-calibrated asymptotic \((1 - \alpha )\)-confidence intervals, and we say that they attain poor frequency properties. Ribatet et al. (2012) illustrate how easily a misspecified model can lead to misleading inference through posterior intervals with poor frequency properties.

Several approaches have been proposed for robustifying inference under a misspecified model (Chandler and Bate 2007; Pauli et al. 2011; Ribatet et al. 2012; Syring and Martin 2018), but all of these are based on modifying \(\ell \) before inference, which is impossible to do within the R-INLA framework. However, Shaby (2014) proposes a post hoc adjustment method that properly accounts for model misspecification by an affine transformation of posterior samples when performing MCMC-based inference. Since this is a post hoc adjustment method, it is possible to extend it for usage with R-INLA. Given a sample \(\varvec{\theta }\) from a generalised posterior distribution, the adjusted posterior sample is defined as

\[\varvec{\theta }_{\text {adj}} = \varvec{\theta }^* + \varvec{C} (\varvec{\theta }- \varvec{\theta }^*),\]

where the matrix \(\varvec{C}\) is chosen so the asymptotic distribution of \(\varvec{\theta }_{\text {adj}}\), as \(n \rightarrow \infty \), is Gaussian with mean \(\varvec{\theta }^*\) and covariance matrix \({\varvec{\mathcal {I}}}(\varvec{\theta }^*)^{-1}\). This can be achieved by setting \(\varvec{C} = \left( \varvec{M}_1^{-1} \varvec{M}_2 \right) ^{\top }\), where \(\varvec{M}_1^{\top } \varvec{M}_1 = \varvec{H}({\varvec{\theta }}^*)^{-1}\) and \(\varvec{M}_2^{\top } \varvec{M}_2 = {\varvec{\mathcal {I}}}({\varvec{\theta }}^*)^{-1}\). The matrix square roots \(\varvec{M}_1\) and \(\varvec{M}_2\) can be computed using, e.g., singular value decomposition.
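The construction of \(\varvec{C}\) can be checked on a two-parameter toy problem. The sketch below assumes the affine form \(\varvec{\theta }_{\text {adj}} = \varvec{\theta }^* + \varvec{C}(\varvec{\theta }- \varvec{\theta }^*)\), uses made-up matrices \(\varvec{H}\) and \(\varvec{J}\), and computes the matrix square roots with a Cholesky factorisation instead of the singular value decomposition mentioned above, since any \(\varvec{M}\) with \(\varvec{M}^{\top } \varvec{M}\) equal to the target matrix will do. All helper names are hypothetical.

```python
import math

def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(a):
    return [list(col) for col in zip(*a)]

def inv2(a):
    """Inverse of a 2x2 matrix."""
    (p, q), (r, s) = a
    det = p * s - q * r
    return [[s / det, -q / det], [-r / det, p / det]]

def chol_upper2(a):
    """Upper-triangular M with M^T M = a, for a symmetric positive definite 2x2 matrix."""
    m11 = math.sqrt(a[0][0])
    m12 = a[0][1] / m11
    m22 = math.sqrt(a[1][1] - m12 ** 2)
    return [[m11, m12], [0.0, m22]]

# Made-up sensitivity (H) and variability (J) matrices at theta*
H = [[4.0, 1.0], [1.0, 3.0]]
J = [[9.0, 2.0], [2.0, 5.0]]

H_inv = inv2(H)
godambe_inv = matmul(matmul(H_inv, J), H_inv)   # inverse of H J^{-1} H

M1 = chol_upper2(H_inv)          # M1^T M1 = H^{-1}
M2 = chol_upper2(godambe_inv)    # M2^T M2 = inverse Godambe information
C = transpose(matmul(inv2(M1), M2))

def adjust(theta, theta_star):
    """Affine adjustment of one posterior sample (assumed form, see lead-in)."""
    diff = [[t - s] for t, s in zip(theta, theta_star)]
    shifted = matmul(C, diff)
    return [s + d[0] for s, d in zip(theta_star, shifted)]

# Covariance of adjusted samples when unadjusted samples have covariance H^{-1}
adjusted_cov = matmul(matmul(C, H_inv), transpose(C))
```

Here `adjusted_cov` agrees with `godambe_inv` up to rounding error, and a sample located exactly at \(\varvec{\theta }^*\) is left unchanged by `adjust`.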

A problem with this adjustment method is that it distorts the contributions of the prior distribution. Using the formula for the probability density function of a transformed random variable, one can show that the distribution of the adjusted samples is

\[\pi _{\text {adj}}(\varvec{\theta }_{\text {adj}} \mid \mathcal Y) = |\varvec{C}|^{-1} \pi \left( \varvec{\theta }^* + \varvec{C}^{-1}(\varvec{\theta }_{\text {adj}} - \varvec{\theta }^*) \mid \mathcal Y\right) \propto \exp \left\{ \ell \left( \varvec{\theta }^* + \varvec{C}^{-1}(\varvec{\theta }_{\text {adj}} - \varvec{\theta }^*); \mathcal Y\right) \right\} \pi \left( \varvec{\theta }^* + \varvec{C}^{-1}(\varvec{\theta }_{\text {adj}} - \varvec{\theta }^*)\right) .\]

However, the prior distribution reflects our prior knowledge about \(\varvec{\theta }\), and it should not be affected when adjusting for the misspecification from \(\ell \). If the prior is not overly informative and the sample size is large enough, this may not matter, as the contribution of the prior will be minimal. However, if that is not the case, we propose to additionally adjust the prior distribution before inference as

\[\pi _{\text {adj}}(\varvec{\theta }) = \pi \left( \varvec{\theta }^* + \varvec{C}(\varvec{\theta }- \varvec{\theta }^*)\right) ,\]

such that the adjusted posterior samples have distribution

\[ \pi (\varvec{\theta }_{\text {adj}} \mid \mathcal Y) \propto \exp \left\{ \ell \left( \varvec{\theta }^* + \varvec{C}^{-1} (\varvec{\theta }_{\text {adj}} - \varvec{\theta }^*); \mathcal Y\right) \right\} \, \pi (\varvec{\theta }_{\text {adj}}). \]

Using the rgeneric/cgeneric framework, one can easily define a model in R-INLA with the adjusted prior distribution \(\pi _{\text {adj}}(\varvec{\theta })\).
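The idea behind the adjusted prior can be illustrated with a short sketch. It is written in Python for illustration only; in practice the prior would be coded inside an rgeneric/cgeneric model definition. The sketch assumes that the adjusted prior is obtained by evaluating the original prior at \(\varvec{\theta }^* + \varvec{C}(\varvec{\theta } - \varvec{\theta }^*)\), up to a normalising constant. The standard normal prior and all numerical values are hypothetical.

```python
import numpy as np


def make_adjusted_log_prior(log_prior, theta_star, c):
    """Wrap a log-prior so it is evaluated at theta* + C (theta - theta*)."""
    def adjusted_log_prior(theta):
        theta = np.asarray(theta, dtype=float)
        return log_prior(theta_star + c @ (theta - theta_star))
    return adjusted_log_prior


def std_normal_log_prior(theta):
    """Hypothetical prior: independent standard normal components."""
    return -0.5 * float(theta @ theta) - 0.5 * theta.size * np.log(2 * np.pi)


# Hypothetical values of theta* and C, for illustration only
theta_star = np.array([0.5, 1.0])
c = np.array([[2.0, 0.0], [0.3, 1.5]])
log_prior_adj = make_adjusted_log_prior(std_normal_log_prior, theta_star, c)
```

Evaluating `log_prior_adj` at an unadjusted sample gives the original log-prior at the corresponding adjusted sample, so the affine adjustment leaves the prior contribution unchanged.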

4.2 Estimating \(\varvec{C}\) and \(\varvec{\theta }^*\)

Here, we detail how to estimate the parameters \(\varvec{\theta }^*\) and the matrix \(\varvec{C}\) when performing Bayesian inference based on the composite log-likelihood \(\ell _c\) in (4). Our approach can also be applied in settings where inference is based on valid likelihood functions or other types of loss functions.

The parameter \(\varvec{\theta }^*\) can be estimated by the mode of the posterior \(\pi (\varvec{\theta }\mid \mathcal Y)\), denoted \(\hat{\varvec{\theta }}^*\). The estimator \(\hat{\varvec{\theta }}^*\) indeed provides a good approximation to \(\varvec{\theta }^*\) if the prior is not overly informative and the sample size is large enough, but other estimators, such as the maximum composite likelihood estimator, may be more suitable if this does not hold.

To estimate \(\varvec{C}\) one must first estimate \(\varvec{H}(\varvec{\theta }^*)\) and \(\varvec{J}(\varvec{\theta }^*)\). To estimate \(\varvec{J}(\varvec{\theta }^*)\) we must first compute the gradients \(\nabla _{\varvec{\theta }} \ell (\hat{\varvec{\theta }}^*; \varvec{y}_{i, -j} \mid y_i(\varvec{s}_j))\), with \(\ell \) defined in (4), for all \(j = 1, 2, \ldots , m\) and \(i = 1, 2, \ldots , n\), such that \(y_i(\varvec{s}_j) > t\). These gradients can be computed analytically or estimated using numerical derivation. We then estimate \(\varvec{J}(\varvec{\theta }^*)\) with

\[ \hat{\varvec{J}}(\hat{\varvec{\theta }}^*) = \sum _{i = 1}^n \sum _{j = 1}^m I\left( y_i(\varvec{s}_j) > t\right) \sum _{(i', j') \in \Delta (i, j)} \nabla _{\varvec{\theta }} \ell (\hat{\varvec{\theta }}^*; \varvec{y}_{i, -j} \mid y_i(\varvec{s}_j)) \left( \nabla _{\varvec{\theta }} \ell (\hat{\varvec{\theta }}^*; \varvec{y}_{i', -j'} \mid y_{i'}(\varvec{s}_{j'}))\right) ^{\top }, \tag{10} \]

where \(\Delta (i, j)\) is the set of neighbours of \((i, j)\), i.e., \((i', j') \in \Delta (i, j)\) if and only if \(\nabla _{\varvec{\theta }} \ell (\cdot ; \varvec{y}_{i', -j'} \mid y_{i'}(\varvec{s}_{j'}))\) is correlated with \(\nabla _{\varvec{\theta }} \ell (\cdot ; \varvec{y}_{i, -j} \mid y_{i}(\varvec{s}_j))\). Summing over all non-correlated pairs of tuples introduces unnecessary noise that could cause the estimator to approximately equal zero (Lumley and Heagerty 1999). In practice one can often compute (10) using a sliding window approach. This is an improvement over the proposed estimation methods of Shaby (2014), which require either that all log-likelihood terms are independent or that it is possible to simulate data from the true and unknown distribution of the data.
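A sliding window estimator of this kind can be sketched as follows. The sketch is in Python with NumPy and is not the code used in the paper. It assumes that the estimator sums outer products of gradients over neighbouring pairs, and that two log-likelihood terms are neighbours whenever their time stamps differ by at most `half_width`, regardless of location. The function name and argument layout are hypothetical.

```python
import numpy as np


def estimate_j(grads, times, half_width):
    """Sum g_k g_l^T over all pairs (k, l) with |t_k - t_l| <= half_width.

    grads: array of shape (N, p), one gradient per log-likelihood term.
    times: array of shape (N,), the time stamp of each term.
    """
    grads = np.asarray(grads, dtype=float)
    times = np.asarray(times)
    order = np.argsort(times, kind="stable")
    grads, times = grads[order], times[order]
    # Prefix sums let us get the sum of gradients in each window in O(1)
    csum = np.vstack([np.zeros(grads.shape[1]), np.cumsum(grads, axis=0)])
    lo = np.searchsorted(times, times - half_width, side="left")
    hi = np.searchsorted(times, times + half_width, side="right")
    window_sums = csum[hi] - csum[lo]
    # sum_k g_k (sum of neighbouring gradients)^T
    return grads.T @ window_sums
```

A window of width zero with distinct time stamps reduces to the independence estimator \(\sum _k \varvec{g}_k \varvec{g}_k^{\top }\), while a window covering all time stamps gives the outer product of the summed gradients, which is close to zero at the mode. This illustrates why the sum should be restricted to correlated pairs.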

Estimation of \(\varvec{H}(\varvec{\theta }^*)\) is often easier than that of \(\varvec{J}(\varvec{\theta }^*)\). The law of large numbers implies that, for \(n\) and \(m\) large enough, a good estimator for \(\varvec{H}(\varvec{\theta }^*)\) is simply \(\hat{\varvec{H}}(\hat{\varvec{\theta }}^*) = - \nabla ^2_{\varvec{\theta }} \ell _c(\hat{\varvec{\theta }}^*; \mathcal Y)\). Thus, all we need for estimating \(\varvec{H}(\varvec{\theta }^*)\) is to compute the Hessian of the composite log-likelihood at \(\hat{\varvec{\theta }}^*\). For users of R-INLA, this is especially simple, since the program returns the inverse of the Hessian matrix

\[ \tilde{\varvec{H}}(\hat{\varvec{\theta }}^*) = - \nabla ^2_{\varvec{\theta }} \left\{ \ell _c(\hat{\varvec{\theta }}^*; \mathcal Y) + \log \pi (\hat{\varvec{\theta }}^*)\right\} . \]

Thus, if the prior is not overly informative and the sample size is large enough, we can estimate \(\varvec{H}(\varvec{\theta }^*)\) with \(\tilde{\varvec{H}}(\hat{\varvec{\theta }}^*)\). If this is not the case, we can still estimate \(\varvec{H}(\varvec{\theta }^*)\) by simply subtracting the contribution of the prior distribution from \(\tilde{\varvec{H}}(\hat{\varvec{\theta }}^*)\).
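Removing the prior contribution can be sketched as follows, in Python with NumPy. The sketch assumes that \(\tilde{\varvec{H}}(\hat{\varvec{\theta }}^*)\) is the negative Hessian of the log-posterior at the mode, so that adding the Hessian of the log-prior recovers the likelihood part. The posterior covariance matrix is assumed to have been exported from the fitted model beforehand, and the finite-difference Hessian and all function names are hypothetical choices made for illustration.

```python
import numpy as np


def numerical_hessian(f, x, step=1e-4):
    """Central finite-difference Hessian of a scalar function f at x."""
    x = np.asarray(x, dtype=float)
    p = x.size
    hess = np.empty((p, p))
    for a in range(p):
        for b in range(p):
            ea = np.zeros(p)
            eb = np.zeros(p)
            ea[a] = step
            eb[b] = step
            hess[a, b] = (f(x + ea + eb) - f(x + ea - eb)
                          - f(x - ea + eb) + f(x - ea - eb)) / (4 * step**2)
    return hess


def likelihood_hessian(posterior_cov, log_prior, theta_hat):
    """Estimate H by removing the prior part from the inverse posterior covariance."""
    h_tilde = np.linalg.inv(posterior_cov)  # negative Hessian of the log-posterior
    # h_tilde = H - Hessian(log prior), hence H = h_tilde + Hessian(log prior)
    return h_tilde + numerical_hessian(log_prior, theta_hat)
```

For a Gaussian prior with precision matrix \(\varvec{Q}\), the Hessian of the log-prior is \(-\varvec{Q}\), so the correction amounts to subtracting \(\varvec{Q}\) from \(\tilde{\varvec{H}}(\hat{\varvec{\theta }}^*)\).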

If we adjust the prior distribution as in (9), it may be necessary to run R-INLA twice: once for estimating \(\varvec{\theta }^*\) and \(\varvec{C}\), and once for performing inference with the adjusted prior.

A small numerical example in the Supplementary Material demonstrates the ability of our method to recover the frequency properties of a posterior distribution based on a misspecified model.

5 Case study: extreme precipitation in Norway

We apply our workflow to modelling extreme hourly precipitation in Norway. Data are presented in Sect. 5.1 and the inference is described in Sect. 5.2. Results are presented and evaluated in Sect. 5.3.

5.1 Data

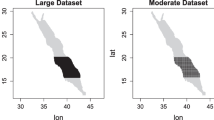

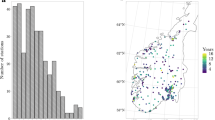

We consider \(1 \times 1\) km\(^2\) maps of mean hourly precipitation, produced by the Norwegian Meteorological Institute by processing raw reflectivity data from the weather radar located in Rissa (\(63^\circ 41' 26''\)N, \(10^\circ 12' 14''\)E) in central Norway. The data are available online (https://thredds.met.no), dating back to 1 January 2010. We extract data from a \(31\times 31\) km\(^2\) domain, close to the Rissa radar. Denote the set of all grid points in this domain as \(\mathcal S\). We then have \(|\mathcal S| = 961\) unique locations containing hourly precipitation estimates. A map containing \(\mathcal S\) and the Rissa radar is displayed in Fig. 1. For each \(\varvec{s} \in \mathcal S\), we extract all hourly observations from the summer months (June, July and August) for the years 2010–2021. Removing missing data and correcting a few obvious artefacts gives a total of 25,512 observations at each location. Of these, between 3500 and 4500 per location are nonzero.

5.2 Modelling and inference

The conditional extremes model in (3) is defined for a random process with Laplace margins. Thus, we standardise the marginal distributions of the precipitation data using the probability integral transform. For details, see the Supplementary Material. Note that the marginal standardisation is performed separately and prior to fitting the spatial conditional extremes model, i.e., no uncertainty propagation takes place. In future research, it would be interesting to explore ways to fit the marginal distributions and the extremal dependence structure jointly, in order to take all sources of uncertainty into account.

The first step in our workflow is the choice of models for \(a(\varvec{s}; \varvec{s}_0, y_0)\) and \(b(\varvec{s}; \varvec{s}_0, y_0)\). We assume that all model parameters are independent of the choice of conditioning sites, so that \(a(\varvec{s}; \varvec{s}_0, y_0)=a(d; y_0)\) and \(b(\varvec{s}; \varvec{s}_0, y_0)= b(d; y_0)\) depend only on the distance \(d = \Vert \varvec{s} - \varvec{s}_0\Vert \) and the threshold exceedance \(y_0\). With these assumptions, we can visualise the shapes of \(a(d; y_0)\) and \(b(d; y_0)\) by empirically computing conditional means and variances of the data. In our model, all random variables with distance \(d\) from \(\varvec{s}_0\) have conditional mean \(\mu (d; y_0) = a(d; y_0)\) and conditional variance \(\zeta ^2(d; y_0) = \sigma ^2(d) b^2(d; y_0) + \tau ^{-1}\), where \(\sigma ^2(d)\) is the variance of the residual field at distance \(d\) from the conditioning site, and \(\tau \) is the precision of the nugget effect. These empirical conditional moments of the data can be computed using a sliding window approach over the values of \(d\) and \(y_0\). We choose a rectangular window with a width of \(1\) km in the \(d\)-direction and of \(0.1\) in the \(y_0\)-direction. Similarly, we can empirically estimate the extremal correlation \(\chi _p(d)\) using a sliding window approach, by counting the number of threshold exceedances for all observations within a distance \(d \pm 0.5\) km from a conditioning site.
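The sliding window computation of the empirical conditional moments can be sketched as follows, in Python with NumPy. This is an illustration under assumptions and not the code used in the paper. The inputs are assumed to be flat arrays holding, for every pair of a threshold exceedance and an observed location, the distance `d`, the conditioning value `y0` and the observed value `y`. The function name is hypothetical.

```python
import numpy as np


def window_moments(d, y0, y, d_mid, y0_mid, d_width=1.0, y0_width=0.1):
    """Empirical conditional mean and standard deviation in a rectangular window.

    The window is centred at (d_mid, y0_mid) and has width d_width (km) in the
    d-direction and y0_width in the y0-direction.
    """
    d, y0, y = (np.asarray(v, dtype=float) for v in (d, y0, y))
    inside = (np.abs(d - d_mid) <= d_width / 2) & (np.abs(y0 - y0_mid) <= y0_width / 2)
    if inside.sum() < 2:
        return np.nan, np.nan  # too few observations in the window
    return y[inside].mean(), y[inside].std(ddof=1)
```

Evaluating the function over a grid of window centres gives empirical surfaces for \(\mu (d; y_0)\) and \(\zeta (d; y_0)\) that can be inspected before choosing parametric forms for \(a(d; y_0)\) and \(b(d; y_0)\).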

This initial data exploration shows that the threshold \(t\) must be very large for a model with \(a(d; y_0) = \alpha (d) y_0\) and \(b(d; y_0) = y_0^{\beta (d)}\) to provide a good fit. Consequently, we choose a threshold equal to the \(99.97\%\) quantile of the Laplace distribution, which yields between 0 and 5 threshold exceedances at each conditioning site. See the Supplementary Materials for more details and discussion on this choice. Estimators for \(\chi _p(d)\), \(\mu (d; y_0)\) and \(\zeta (d; y_0)\), created using this threshold, are displayed in the leftmost column of Fig. 2. Based on the lack of changes in \({\widehat{\zeta }}(\cdot ; y_0)\) as \(y_0\) varies, we choose to model \(b(d; y_0)\) as a function not depending on \(y_0\), and set \(b(d; y_0) \equiv b(d) = 1 + b_0 \exp \left\{ -\left( d / \lambda _b\right) ^{\kappa _b}\right\} \), with positive parameters \(b_0\), \(\lambda _b\) and \(\kappa _b\), while we use the model (2) for \(a(\cdot )\).
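The threshold and the chosen form of \(b(d)\) can be evaluated with a few lines of Python. The sketch assumes standard Laplace margins (location 0, scale 1). The parameter values passed to `b_function` in any usage are hypothetical and are not estimates from the paper.

```python
import numpy as np


def laplace_quantile(p):
    """Quantile function of the standard Laplace distribution."""
    p = np.asarray(p, dtype=float)
    return -np.sign(p - 0.5) * np.log1p(-2.0 * np.abs(p - 0.5))


def b_function(d, b0, lambda_b, kappa_b):
    """b(d) = 1 + b0 * exp(-(d / lambda_b) ** kappa_b), with positive parameters."""
    d = np.asarray(d, dtype=float)
    return 1.0 + b0 * np.exp(-(d / lambda_b) ** kappa_b)


def conditional_sd(sigma2, b, tau):
    """zeta(d) = sqrt(sigma^2(d) * b(d)^2 + 1 / tau)."""
    return np.sqrt(sigma2 * b**2 + 1.0 / tau)


# Threshold at the 99.97% quantile of the standard Laplace distribution
threshold = float(laplace_quantile(0.9997))  # approximately 7.42
```

Under this form, \(b(0) = 1 + b_0\) and \(b(d) \rightarrow 1\) as \(d \rightarrow \infty \), so the residual field is scaled up close to the conditioning site and left unscaled far away from it.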

With only one or two threshold exceedances at most conditioning sites, separate inference for each conditioning site is highly challenging. Inference is therefore performed using the composite likelihood, based on every single conditioning site in \(\mathcal S\). We remove the last two years of data before performing inference. These are then used to compare the performance of the adjusted and the unadjusted model fits. Just as in the simulation study (see the Supplementary Material), some of the observations far away from the conditioning sites are discarded during inference, and we define different triangulated meshes for the SPDE approximations based on each conditioning site. The Matérn smoothness parameter \(\nu \) is fixed to \(1\). This value is argued by Whittle (1954) to be a natural choice for many spatial models, and it is the most extensively tested and default value of the parameter when using the SPDE approach within R-INLA (Lindgren and Rue 2015). Prior distributions are displayed in Table 1. Finally, the post hoc adjustment method is performed on the output of R-INLA. Here, the sample size is large enough that we choose not to adjust the prior distribution as in (9). Estimates for \(\varvec{\theta }^*\) and \(\varvec{H}(\varvec{\theta }^*)\) are provided directly from R-INLA, while \(\varvec{J}(\varvec{\theta }^*)\) is estimated using (10) with a sliding window that has a width of \(10\) hours.

5.3 Results

We simulate \(10^5\) extreme realisations from the adjusted and unadjusted model fits. Statistics of the simulated data are displayed in the two rightmost columns of Fig. 2. There are noticeable differences between the samples from the two model fits. However, both model fits seem to capture most of the trends in the transformed precipitation data well. Interestingly, the adjusted conditional second moments \({\widehat{\zeta }}(d; y_0)\) differ more from those of the original data than those of the unadjusted model fit do. However, this is not reflected in the estimated extremal correlation coefficients, \({\widehat{\chi }}_p(d)\). Since our main goal is to capture the trends in \(\chi _p(d)\), the adjusted model fit seems to outperform the unadjusted one overall, especially for higher values of \(p\). Neither model fit fully captures the rate of weakening dependence with increasing thresholds, which probably requires a more complex model for \(b(d; y_0)\). As discussed in the Supplementary Material, this is outside the scope of this paper and would require further investigation in future research. However, any such model extension can easily be implemented using our proposed R-INLA methodology.

Posterior distributions for all parameters of the two model fits are displayed in Fig. 3. There are considerable differences between the adjusted and the unadjusted posterior distributions, the latter being too concentrated due to the working assumption of independence in the composite likelihood.

To further compare the two model fits, composite log-scores are computed using the last two years of the available data (see Section 2 of the Supplementary Material for more details on how to compute the composite log-scores). These are estimated using \(n_s = 1000\) posterior samples. This results in a composite log-score of \(-96,520\) for the unadjusted model fit, and \(-88,029\) for the adjusted model fit, meaning that the adjusted model fit seems to perform considerably better. Nonparametric bootstrapping of all time points in the test data is performed to examine if the difference is significant. Using \(5000\) bootstrap samples, we find that the difference in composite log-score is significantly different from zero at a \(0.1\%\) significance level, so we conclude that the adjusted model fit outperforms the unadjusted model fit.
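The bootstrap comparison can be sketched as follows, in Python with NumPy. This is a minimal illustration, not the procedure of the Supplementary Material. It assumes that the composite log-score of each model fit decomposes into one contribution per time point in the test data, so that whole time points can be resampled with replacement. The function name and its inputs are hypothetical.

```python
import numpy as np


def bootstrap_score_difference(scores_a, scores_b, n_boot=5000, seed=0):
    """Bootstrap the difference in total log-score by resampling time points.

    scores_a, scores_b: per-time-point log-score contributions of the two fits.
    Returns an array with n_boot bootstrapped differences in total log-score.
    """
    diff = np.asarray(scores_a, dtype=float) - np.asarray(scores_b, dtype=float)
    rng = np.random.default_rng(seed)
    # Each row holds the indices of one bootstrap sample of time points
    idx = rng.integers(0, diff.size, size=(n_boot, diff.size))
    return diff[idx].sum(axis=1)
```

A difference can then be called significant at a given level if the corresponding bootstrap percentile interval excludes zero. For long test periods the index matrix becomes large, so the bootstrap samples may need to be drawn in batches.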

6 Conclusion

We propose an efficient workflow for robust modelling of spatial high-dimensional extremes using the spatial conditional extremes model with a composite likelihood and R-INLA, and a post hoc adjustment method that corrects for possible model misspecification. The workflow is applied to the modelling of spatial high-dimensional extremes of Norwegian precipitation data. The fitted model performs well, and we are able to capture the main extremal dependence trends in the data.

Framing the spatial conditional extremes model as a latent Gaussian model, as proposed by Simpson et al. (2023), makes inference for truly high-dimensional problems computationally tractable. The post hoc adjustment we propose leads to more robust inference by accounting for misspecification caused, e.g., by modelling data with Laplace marginals using a Gaussian process. It also makes inference considerably more efficient, since the added robustness allows us to use multiple conditioning sites within a composite likelihood.

In developing our workflow, we describe a flaw in previously-used methods to constrain the residual field in the spatial conditional extremes model, and develop a novel method that is fast and easy to use when performing inference with R-INLA. We also propose and demonstrate a general methodology for selecting appropriate forms for the standardising functions \(a(\varvec{s}; \varvec{s}_0, y_0)\) and \(b(\varvec{s}; \varvec{s}_0, y_0)\), and for defining and implementing these in R-INLA using the rgeneric/cgeneric frameworks. Additionally, we propose an improved extension to the post hoc adjustment method that allows for correct model contributions from the prior distribution.

For transforming precipitation data onto Laplace marginals, a nonparametric method is used for estimating the marginal distributions of the original data. This method can be problematic if the aim is to estimate properties of the original and untransformed process. Further work should therefore focus on improving the transformation method when modelling extremes with the spatial conditional extremes model. The marginal modelling is also performed separately and prior to the spatial conditional extremes modelling, so no uncertainty propagation takes place. While it would be interesting in future research to develop ways to fit the margins and the spatial extremal dependence structure jointly, this is currently impossible within the R-INLA framework. A possibility could be to adapt the INLA-within-MCMC approach of Gómez-Rubio and Rue (2017), though this would likely be computationally prohibitive.

Even though the spatial conditional extremes model provides good fits to the data in the case study, there are still some small differences between properties of the data and properties of the model fits. These differences can probably be reduced by choosing better, possibly more complex, forms for \(a(\varvec{s}; \varvec{s}_0, y_0)\) and \(b(\varvec{s}; \varvec{s}_0, y_0)\), such as, e.g., \(a(\varvec{s}; \varvec{s}_0, y_0) = \alpha (\varvec{s}; \varvec{s}_0, y_0) y_0\) or \(b(\varvec{s}; \varvec{s}_0, y_0) = y_0^{\beta (\varvec{s}; \varvec{s}_0, y_0)}\). Further work should therefore focus on the theoretical properties of more complex models for \(a(\cdot )\) and \(b(\cdot )\), and on how to best perform model selection with the spatial conditional extremes model.

The smoothness parameter \(\nu \) of the SPDE approximation was fixed to a value of 1. Other values of the parameter were also tested. Lower values of \(\nu \) typically lead to a smaller nugget variance, as a less smooth field can explain more of the variance in the data. A less smooth field also leads to minor changes in the estimated shapes of the means, variances and threshold probabilities in Fig. 2. However, none of these changes are considerable enough to alter any of the findings of our work. In principle, it should be possible to apply our non-stationary SPDE extension to the rational SPDE approximation of Bolin et al. (2024). This could be an interesting avenue for future research to further improve the proposed model framework.

Finally, the selection of a threshold \(t\) for the spatial conditional extremes model can have great importance for the resulting model fit, as seen in the case study. However, to the best of our knowledge, little attention has so far been given to the problem of threshold selection in this context. Further work should therefore focus on methods for choosing thresholds that are large enough to provide a somewhat correct model fit and small enough to perform inference with low uncertainty.

Code and data availability

The necessary code and data for achieving these results are available online at https://github.com/siliusmv/spatialConditionalExtremes.

References

Bolin, D., Kirchner, K.: The rational SPDE approach for Gaussian random fields with general smoothness. J. Comput. Gr. Stat. 29(2), 274–285 (2020). https://doi.org/10.1080/10618600.2019.1665537

Bolin, D., Simas, A.B.: rspde: Rational approximations of fractional stochastic partial differential equations [Computer software manual]. (R package version 2.3.3) (2023)

Bolin, D., Simas, A.B., Xiong, Z.: Covariance-based rational approximations of fractional SPDEs for computationally efficient Bayesian inference. J. Comput. Gr. Stat. 33(1), 64–74 (2024). https://doi.org/10.1080/10618600.2023.2231051

Castro-Camilo, D., Huser, R., Rue, H.: A spliced gamma-generalized Pareto model for short-term extreme wind speed probabilistic forecasting. J. Agric. Biol. Environ. Stat. 24(3), 517–534 (2019). https://doi.org/10.1007/s13253-019-00369-z

Chandler, R.E., Bate, S.: Inference for clustered data using the independence loglikelihood. Biometrika 94(1), 167–183 (2007). https://doi.org/10.1093/biomet/asm015

Coles, S., Heffernan, J., Tawn, J.: Dependence measures for extreme value analyses. Extremes 2(4), 339–365 (1999). https://doi.org/10.1023/A:1009963131610

Davison, A.C., Huser, R., Thibaud, E.: Spatial extremes. In: Gelfand, A.E., Fuentes, M., Hoeting, J.A., Smith, R.L. (eds.) Handbook of Environmental and Ecological Statistics, pp. 711–744. Chapman and Hall/CRC (2019)

Davison, A.C., Padoan, S.A., Ribatet, M.: Statistical modeling of spatial extremes. Stat. Sci. 27(2), 161–186 (2012). https://doi.org/10.1214/11-STS376

Engelke, S., Opitz, T., Wadsworth, J.L.: Extremal dependence of random scale constructions. Extremes 22(4), 623–666 (2019). https://doi.org/10.1007/s10687-019-00353-3

Fuglstad, G.-A., Simpson, D., Lindgren, F., Rue, H.: Constructing priors that penalize the complexity of Gaussian random fields. J. Am. Stat. Assoc. 114(525), 445–452 (2019). https://doi.org/10.1080/01621459.2017.1415907

Godambe, V.P.: An optimum property of regular maximum likelihood estimation. Ann. Math. Stat. 31(4), 1208–1211 (1960)

Godambe, V.P., Heyde, C.C.: Quasi-likelihood and optimal estimation. Int. Stat. Rev. 55(3), 231–244 (1987)

Gómez-Rubio, V., Rue, H.: Markov chain Monte Carlo with the integrated nested Laplace approximation. Stat. Comput. 28, 1033–1051 (2017). https://doi.org/10.1007/s11222-017-9778-y

Hazra, A., Huser, R., Bolin, D.: Realistic and fast modeling of spatial extremes over large geographical domains. arXiv: 2112.10248 (2021)

Heffernan, J.E., Resnick, S.I.: Limit laws for random vectors with an extreme component. Ann. Appl. Probab. 17(2), 537–571 (2007). https://doi.org/10.1214/105051606000000835

Heffernan, J.E., Tawn, J.A.: A conditional approach for multivariate extreme values (with discussion). J. R. Stat. Soc. Ser. B (Stat. Methodol.) 66(3), 497–546 (2004). https://doi.org/10.1111/j.1467-9868.2004.02050.x

Huser, R., Wadsworth, J.L.: Modeling spatial processes with unknown extremal dependence class. J. Am. Stat. Assoc. 114(525), 434–444 (2019). https://doi.org/10.1080/01621459.2017.1411813

Huser, R., Wadsworth, J.L.: Advances in statistical modeling of spatial extremes. WIREs Comput. Stat. 14(1), e1537 (2022). https://doi.org/10.1002/wics.1537

Ingebrigtsen, R., Lindgren, F., Steinsland, I.: Spatial models with explanatory variables in the dependence structure. Spatial Stat. 8, 20–38 (2014). https://doi.org/10.1016/j.spasta.2013.06.002

Kleijn, B., van der Vaart, A.: The Bernstein-Von-Mises theorem under misspecification. Electron. J. Stat. 6, 354–381 (2012). https://doi.org/10.1214/12-EJS675

Koch, E., Koh, J., Davison, A.C., Lepore, C., Tippett, M.K.: Trends in the extremes of environments associated with severe U.S. thunderstorms. J. Clim. 34(4), 1259–1272 (2021). https://doi.org/10.1175/JCLI-D-19-0826.1

Koh, J., Pimont, F., Dupuy, J.-L., Opitz, T.: Spatiotemporal wildfire modeling through point processes with moderate and extreme marks. Ann. Appl. Stat. 17(1), 560–582 (2023). https://doi.org/10.1214/22-AOAS1642

Krupskii, P., Huser, R.: Modeling spatial tail dependence with Cauchy convolution processes. Electron. J. Stat. 16(2), 6135–6174 (2022). https://doi.org/10.1214/22-EJS2081

Lindgren, F., Rue, H.: Bayesian spatial modelling with R-INLA. J. Stat. Softw. 63(19), 1–25 (2015). https://doi.org/10.18637/jss.v063.i19

Lindgren, F., Rue, H., Lindström, J.: An explicit link between Gaussian fields and Gaussian Markov random fields: the stochastic partial differential equation approach. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 73(4), 423–498 (2011). https://doi.org/10.1111/j.1467-9868.2011.00777.x

Lumley, T., Heagerty, P.: Weighted empirical adaptive variance estimators for correlated data regression. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 61(2), 459–477 (1999). https://doi.org/10.1111/1467-9868.00187

Opitz, T., Huser, R., Bakka, H., Rue, H.: INLA goes extreme: Bayesian tail regression for the estimation of high spatio-temporal quantiles. Extremes 21(3), 441–462 (2018). https://doi.org/10.1007/s10687-018-0324-x

Pauli, F., Racugno, W., Ventura, L.: Bayesian composite marginal likelihoods. Stat. Sin. 21(1), 149–164 (2011)

Ribatet, M., Cooley, D., Davison, A.C.: Bayesian inference from composite likelihoods, with an application to spatial extremes. Stat. Sin. 22(2), 813–845 (2012)

Richards, J., Tawn, J.A., Brown, S.: Modelling extremes of spatial aggregates of precipitation using conditional methods. Ann. Appl. Stat. 16(4), 2693–2713 (2022). https://doi.org/10.1214/22-AOAS1609

Rue, H., Held, L.: Gaussian Markov Random Fields: Theory and Applications. CRC Press, Cambridge (2005)

Rue, H., Martino, S., Chopin, N.: Approximate Bayesian inference for latent Gaussian models by using integrated nested Laplace approximations. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 71(2), 319–392 (2009). https://doi.org/10.1111/j.1467-9868.2008.00700.x

Rue, H., Riebler, A., Sørbye, S.H., Illian, J.B., Simpson, D.P., Lindgren, F.K.: Bayesian computing with INLA: a review. Ann. Rev. Stat. Its Appl. 4(1), 395–421 (2017). https://doi.org/10.1146/annurev-statistics-060116-054045

Shaby, B.A.: The open-faced sandwich adjustment for MCMC using estimating functions. J. Comput. Gr. Stat. 23(3), 853–876 (2014). https://doi.org/10.1080/10618600.2013.842174

Shooter, R., Ross, E., Ribal, A., Young, I.R., Jonathan, P.: Spatial dependence of extreme seas in the North East Atlantic from satellite altimeter measurements. Environmetrics 32(4), e2674 (2021). https://doi.org/10.1002/env.2674

Shooter, R., Ross, E., Ribal, A., Young, I.R., Jonathan, P.: Multivariate spatial conditional extremes for extreme ocean environments. Ocean Eng. 247, 110647 (2022). https://doi.org/10.1016/j.oceaneng.2022.110647

Shooter, R., Ross, E., Tawn, J., Jonathan, P.: On spatial conditional extremes for ocean storm severity. Environmetrics 30(6), e2562 (2019). https://doi.org/10.1002/env.2562

Shooter, R., Tawn, J., Ross, E., Jonathan, P.: Basin-wide spatial conditional extremes for severe ocean storms. Extremes 24(2), 241–265 (2021). https://doi.org/10.1007/s10687-020-00389-w

Sibuya, M.: Bivariate extreme statistics. Ann. Inst. Stat. Math. 11(2), 195–210 (1960)

Simpson, E.S., Opitz, T., Wadsworth, J.L.: High-dimensional modeling of spatial and spatio-temporal conditional extremes using INLA and Gaussian Markov random fields. Extremes (2023). https://doi.org/10.1007/s10687-023-00468-8

Simpson, E.S., Wadsworth, J.L.: Conditional modelling of spatio-temporal extremes for Red Sea surface temperatures. Spat. Stat. 41, 100482 (2021). https://doi.org/10.1016/j.spasta.2020.100482

Syring, N., Martin, R.: Calibrating general posterior credible regions. Biometrika 106(2), 479–486 (2018). https://doi.org/10.1093/biomet/asy054

Vandeskog, S.M., Martino, S., Castro-Camilo, D., Rue, H.: Modelling sub-daily precipitation extremes with the blended generalised extreme value distribution. J. Agric. Biol. Environ. Stat. 27(4), 598–621 (2022). https://doi.org/10.1007/s13253-022-00500-7

Wadsworth, J.L., Tawn, J.A.: Dependence modelling for spatial extremes. Biometrika 99(2), 253–272 (2012). https://doi.org/10.1093/biomet/asr080

Wadsworth, J.L., Tawn, J.A.: Higher-dimensional spatial extremes via single-site conditioning. Spat. Stat. 51, 100677 (2022). https://doi.org/10.1016/j.spasta.2022.100677

Whittle, P.: On stationary processes in the plane. Biometrika 41(3/4), 434–449 (1954). https://doi.org/10.2307/2332724

Acknowledgements

The authors are grateful to Jordan Richards, Håvard Rue and Geir-Arne Fuglstad for many helpful discussions.

Funding

Open access funding provided by NTNU Norwegian University of Science and Technology (incl St. Olavs Hospital - Trondheim University Hospital). Raphaël Huser was partially supported by the King Abdullah University of Science and Technology (KAUST) Office of Sponsored Research (OSR) under Award No. OSR-CRG2020-4394.

Author information

Contributions

All authors contributed to the conceptualisation of the manuscript. S.V. wrote the code, created the figures and wrote the main manuscript text. S.M. and R.H. provided continuous feedback during the development process. All authors reviewed the manuscript.

Ethics declarations

Conflict of interest

The authors report that there are no conflicts of interest to declare.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Vandeskog, S.M., Martino, S. & Huser, R. An efficient workflow for modelling high-dimensional spatial extremes. Stat Comput 34, 137 (2024). https://doi.org/10.1007/s11222-024-10448-y

DOI: https://doi.org/10.1007/s11222-024-10448-y
