Abstract

Semi-parametric Gaussian mixtures of non-parametric regressions (SPGMNRs) are a flexible extension of Gaussian mixtures of linear regressions (GMLRs). The model assumes that the component regression functions (CRFs) are non-parametric functions of the covariate(s) whereas the component mixing proportions and variances are constants. Unfortunately, the model cannot be reliably estimated using traditional methods. A local-likelihood approach for estimating the CRFs requires that we maximize a set of local-likelihood functions. Using the Expectation-Maximization (EM) algorithm to separately maximize each local-likelihood function may lead to label-switching. This is because the posterior probabilities calculated at the local E-step are not guaranteed to be aligned. The consequence of this label-switching is wiggly and non-smooth estimates of the CRFs. In this paper, we propose a unified approach to address label-switching and obtain sensible estimates. The proposed approach has two stages. In the first stage, we propose a model-based approach to address the label-switching problem. We first note that each local-likelihood function is a likelihood function of a Gaussian mixture model (GMM). Next, we reformulate the SPGMNRs model as a mixture of these GMMs. Lastly, using a modified version of the Expectation Conditional Maximization (ECM) algorithm, we estimate the mixture of GMMs. In addition, using the mixing weights of the local GMMs, we can automatically choose the local points where local-likelihood estimation takes place. In the second stage, we propose one-step backfitting estimates of the parametric and non-parametric terms. The effectiveness of the proposed approach is demonstrated on simulated data and real data analysis.

1 Introduction

Finite mixture models have become a useful tool for studying any variable, say y, that takes its values from a population made up of an a priori known number, say K, of sub-populations mixed randomly in proportion to their relative sizes \(\pi _1,\pi _2,\dots ,\pi _K\). Each sub-population, known as a component, is usually assumed to follow a parametric distribution with density function \(f(\cdot |\theta _k)\), for \(k=1,2,\dots ,K\). The component parameters \(\theta _k\), often vector-valued, are distinct across the components, and the relative sizes (weights) are positive and sum to unity.

The most frequently used mixture model for a univariate variable y arises when each component density is assumed to be normal, henceforth Gaussian. In this case, the parameter vector is \(\varvec{\theta }_k=(\mu _k,\sigma ^{2}_k)\). The mixture density function of y is a convex combination of the Gaussian component densities

\[f(y)=\sum _{k=1}^{K}\pi _k{\mathcal {N}}\{y|\mu _k,\sigma ^{2}_k\}, \tag{1}\]

where \({\mathcal {N}}\{\cdot |\mu ,\sigma ^2\}=f(\cdot |\mu ,\sigma ^2)\) denotes a Gaussian density with mean \(\mu \) and variance \(\sigma ^2\). The weights \(\pi _k\) are also known as mixing proportions or probabilities. Model (1) is known as a Gaussian mixture model (GMM). For the theory and application of GMMs and mixture models in general, see Titterington et al. (1985), McLachlan and Peel (2000) and, more recently, Frühwirth-Schnatter et al. (2019).
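As a small illustration of model (1), the following sketch evaluates a univariate GMM density in plain Python. The function names `gaussian_pdf` and `gmm_pdf` are illustrative choices made here and do not come from any referenced software.

```python
import math


def gaussian_pdf(y, mu, sigma2):
    """Gaussian density N{y | mu, sigma2} with mean mu and variance sigma2."""
    return math.exp(-0.5 * (y - mu) ** 2 / sigma2) / math.sqrt(2.0 * math.pi * sigma2)


def gmm_pdf(y, weights, means, variances):
    """Convex combination of K Gaussian component densities, as in model (1)."""
    # The mixing proportions must be positive and sum to unity.
    if min(weights) <= 0.0 or abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("mixing proportions must be positive and sum to one")
    return sum(p * gaussian_pdf(y, m, s2)
               for p, m, s2 in zip(weights, means, variances))
```

For example, `gmm_pdf(0.0, [0.5, 0.5], [-1.0, 1.0], [1.0, 1.0])` evaluates an equally weighted two-component mixture at the midpoint between the component means.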

Suppose the variable y depends on a set of D covariates \({\textbf{x}}=(x_1,x_2,\dots ,x_D)\) and we are interested in studying this dependence. In this case, each component, known as a regression component, is typically a linear regression model of y on \({\textbf{x}}\) having a Gaussian error distribution. The resulting model is a Gaussian mixture of linear regressions (GMLRs) given as

\[f(y|{\textbf{x}})=\sum _{k=1}^{K}\pi _k{\mathcal {N}}\{y|m_k({\textbf{x}}),\sigma ^{2}_k\}, \tag{2}\]

where \(m_k({\textbf{x}})={\textbf{x}}^{\intercal }\varvec{\beta }_k\) is the regression function of the \(k^{th}\) regression component, \({\textbf{x}}=(x_0,x_1,x_2,\dots ,x_D)\) is the covariate vector augmented with \(x_0=1\), and \(\varvec{\beta }_k=(\beta _{k0},\beta _{k1},\dots ,\beta _{kD})\) is the regression parameter vector of the \(k^{th}\) regression component.

GMLRs were first introduced by Quandt (1972) as switching regression models. The models have been widely adopted in areas such as economics (Quandt and Ramsey 1978), marketing (DeSarbo and Cron 1988), machine learning (Jacobs et al. 1991), environmental economics (Hurn et al. 2003) and medicine (Schlattmann 2009), among many other fields. See Chapter 8 of Frühwirth-Schnatter (2006) for more details on the theory of GMLRs, in particular, and mixtures of regression models, in general.

The linearity assumption imposed on model (2), through the component regression functions (CRFs), is quite restrictive. The main reason for this assumption is that an additive covariate effect makes for ease of interpretation (Hastie and Tibshirani 1990). Efforts to relax this assumption, partly or completely, while retaining the desirable additive covariate effect have emerged in the literature. The proposed models assume that some of the covariates are linearly related to the response variable y while the relationship between y and the other covariates is characterised by additive non-parametric univariate functions. Partitioning the covariates as \(({\textbf{x}},{\textbf{t}})\), the general form of this class of models is

\[f(y|{\textbf{x}},{\textbf{t}})=\sum _{k=1}^{K}\pi _k{\mathcal {N}}\{y|m_k({\textbf{x}},{\textbf{t}}),\sigma ^{2}_k\}, \tag{3}\]

where \(m_{k}({\textbf{x}},{\textbf{t}})={\textbf{x}}^{\intercal }\varvec{\beta }_{k}+\sum _{r=1}^{D_2}g_{k}(t_r)\).

In model (3), the covariates \({\textbf{x}}\in {\mathbb {R}}^{D_1}\) are assumed to enter the model linearly (hence, parametric) characterised by the regression parameters \(\varvec{\beta _{k}}\), for \(k=1,2,\dots ,K\). On the other hand, the covariates \({\textbf{t}}\in {\mathbb {R}}^{D_2}\) are assumed to be characterised by smooth unknown (hence, non-parametric) additive univariate functions \(g_{k}(t_r)\) of the covariates \(t_r, r=1,2,\dots ,D_2\), respectively. Thus, the CRFs are semi-parametric functions.

Model (3) was first introduced and studied by Zhang (2020) as a finite semi-parametric Gaussian mixture of partially linear additive models (SPGMPLAMs). For identifiability, \({\mathbb {E}}\{g_k(t_r)\}=0\), for \(r=1,2,\dots ,D_2\). Moreover, without loss of generality, we assume that the covariates \(t_r:r=1,2,\dots ,D_2\) take values on the compact interval [a, b], where \(a<b\).

If \(K=1\), model (3) reduces to an additive partially linear model (APLM) (Opsomer 1999). If each \(g_{k}(t_r)\), for \(r=1,2,\dots ,D_2\), is a linear function of the corresponding covariate, then model (3) is the same as model (2). Thus, model (3) is a natural extension of both an APLM and a GMLRs model.

Model (3) encompasses many Gaussian mixtures of regressions some of which were introduced recently. The following list is in no way exhaustive:

1. If \(D_1=0\) and \(D_2=1\), model (3) reduces to the semi-parametric Gaussian mixture of non-parametric regressions model (SPGMNRs) introduced by Xiang and Yao (2018).

2. If \(D_2=1\), model (3) reduces to the semi-parametric Gaussian mixture of partially linear models (SPGMPLMs) introduced by Wu (2016).

3. If \(D_1=0\), model (3) reduces to the semi-parametric Gaussian mixture of additive regressions model (SPGMARs) introduced by Zhang (2017).

From a statistical inference point of view, the advantage of model (3) is that it combines the flexibility of a non-parametric model with the simplicity, in particular the interpretability, of a parametric model. However, in practice, due to the presence of both parametric and non-parametric terms, model (3) poses an estimation and computational challenge. First, a likelihood approach for estimating the non-parametric functions requires that we maximize a set of locally defined likelihood functions. Using the Expectation-Maximization (EM) algorithm to separately maximize each local likelihood function may lead to label switching (Huang 2012; Huang and Li 2013). This problem is illustrated in Sect. 3. Second, efficient parametric estimation requires all the observed data whereas non-parametric estimation uses only the data in the neighbourhood of a local point. Thus, how can we construct an estimation procedure that is appropriate for estimating both the parametric and non-parametric terms?

In this paper, we propose a unified approach to address all of these challenges. The proposed approach has two stages. In the first stage, we propose a model-based approach to address the label-switching problem. Briefly, we first note that each local likelihood function is a likelihood function of a GMM (1). Next, we rewrite model (3) as a mixture of these GMMs. Lastly, using a modified Expectation-Conditional-Maximization (ECM) algorithm, we estimate the mixture of GMMs thus simultaneously estimating the component non-parametric functions. We refer to this approach as a model-based approach. More details are given in Sect. 4. In the second stage, we propose one-step backfitting estimates of the parametric and non-parametric terms.

To aid the reader’s comprehension of the novelty of the proposed ideas, in this paper we develop the proposed estimation approach for a simple special case of the general model (3), the SPGMNRs given by

\[f(y|x)=\sum _{k=1}^{K}\pi _k{\mathcal {N}}\{y|m_k(x),\sigma ^{2}_k\}. \tag{4}\]

An extension of the method proposed in this paper to the general model (3) can be found in Appendix A. Throughout the paper, we assume that the number of components K is known. In practice, K is unknown and its optimal value is obtained using a data-driven approach such as an information criterion (see Huang and Li (2013)).

The rest of the paper is organized as follows. Section 2 presents the traditional (naive) local-likelihood approach used to estimate model (4). Section 3 discusses the label-switching problem encountered when estimating the non-parametric term. Section 4 presents the proposed estimation strategy to estimate model (4) and address label-switching. Sections 5.2 and 6 present a simulation study and two real data applications, respectively, to demonstrate the performance of the proposed approach. Section 7 concludes the paper and provides directions for future research.

2 Estimation

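Consider a random sample \(\{(x_i,y_i):i=1,2,\dots ,n\}\) of size n obtained from model (4). The corresponding log-likelihood function is given as

\[\ell (\varvec{\theta })=\sum _{i=1}^{n}\log \left[ \sum _{k=1}^{K}\pi _k{\mathcal {N}}\{y_i|m_k(x_i),\sigma ^{2}_k\}\right] , \tag{5}\]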

where \(\varvec{\theta }=(\varvec{\pi },{\textbf{m}},\varvec{\sigma }^{2})= (\pi _1,\dots ,\pi _K;{\textbf{m}}_{1},\dots ,{\textbf{m}}_{K};\sigma _{1}^{2},\dots ,\sigma _{K}^{2})\), with \({\textbf{m}}_{k}=(m_{k}(x_1),\dots ,m_{k}(x_n))\), for \(k=1,2,\dots ,K\), is the vector of all the model parameters.

In order to estimate model (4), we must estimate \(\varvec{\theta }\), which is done using a likelihood approach. Direct maximization of the log-likelihood function (5) with respect to \(\varvec{\theta }\) poses a challenge due to the presence of both a parametric term \((\varvec{\pi },\varvec{\sigma }^{2})\) (henceforth, global parameters) and a non-parametric term \({\textbf{m}}\). It is straightforward to maximize (5) with respect to either \(\varvec{\pi }\) or \(\varvec{\sigma }^{2}\); however, this is not the case for \({\textbf{m}}\). Maximizing (5) with respect to \({\textbf{m}}\) without any constraints or restrictions on the component regression functions would result in estimates that are practically useless due to overfitting (Tibshirani and Hastie 1987). To overcome this problem, we make use of local-likelihood estimation (LLE), an extension of local-polynomial kernel estimation (see Fan and Gijbels (1996)) to likelihood-based models (see Tibshirani and Hastie (1987) for more details).

2.1 Local-polynomial likelihood (LPL) estimator

The local polynomial likelihood (LPL) estimation procedure proceeds as follows. Let \({\mathcal {U}}=\{u_1,u_2,\dots ,u_N\}\) be a set of N local points on the domain of the covariate x. Assume that at each \(u\in {\mathcal {U}}\), each component regression function \(m_{k}(x)\), for \(k=1,2,\dots ,K\), has a \((p+1)^{th}\) derivative. By Taylor expansion, a \(p^{th}\) degree polynomial function can be used to locally approximate each component regression function \(m_{k}(x)\), for \(k=1,2,\dots ,K\), in the neighbourhood of u, as

\[m_k(x)\approx \sum _{j=0}^{p}\frac{m_k^{(j)}(u)}{j!}(x-u)^j=\sum _{j=0}^{p}m_{kj}(u)(x-u)^j, \tag{6}\]

where \(m_k^{(j)}(u)\) denotes the \(j^{th}\) derivative of \(m_k(x)\) at local point u and \(m_{kj}(u)=\frac{m_k^{(j)}(u)}{j!}\), for \(j=0,1,\dots ,p\) and \(k=1,2,\dots ,K\).

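Let \({\textbf{m}}(u)=({\textbf{m}}_{1}(u),\dots ,{\textbf{m}}_{K}(u))\), with \({\textbf{m}}_k(u)=(m_{k0}(u),m_{k1}(u),\dots ,m_{kp}(u))\), be the vector of all local parameters at local point u. The estimate of \({\textbf{m}}_k(u)\), denoted \(\hat{{\textbf{m}}}_k(u)\), for \(k=1,2,\dots ,K\) and \(u\in {\mathcal {U}}\), is obtained by maximizing the following weighted (local) log-likelihood function

\[\ell (\varvec{\theta }(u))=\sum _{i=1}^{n}\log \left[ \sum _{k=1}^{K}\pi _k(u){\mathcal {N}}\{y_i|m_k(u),\sigma ^{2}_k(u)\}\right] K_h(x_i-u), \tag{7}\]

where \(\varvec{\theta }(u)=(\varvec{\pi }(u),{\textbf{m}}(u),\varvec{\sigma }^{2}(u))\) collects the local parameters at u, \(m_k(u)=\sum _{j=0}^{p}m_{kj}(u)[x_i-u]^j\) is shorthand for the local polynomial evaluated at \(x_i\), and \(K_{h}(x)=K(x/h)/h\). Here \(K(\cdot )\) is a kernel function (not to be confused with the number of components K) used to assign weights to the data points in the neighbourhood of a given local point u, and \(h>0\) is the bandwidth that specifies the size of the neighbourhood.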

From (6), evaluating the local polynomial at \(x=u\), we obtain the estimator of \(m_k(u)\), denoted by \({\hat{m}}_{k}(u)\), for \(k=1,2,\dots ,K\), as

\[{\hat{m}}_{k}(u)={\hat{m}}_{k0}(u). \tag{8}\]

\({\hat{m}}_{k0}(u)\) can be referred to as a local polynomial likelihood (LPL) estimator. To estimate \(m_{k}(u)\), for all \(u\in {\mathcal {U}}\), we repeat the above maximization. To obtain the estimated CRFs \({\hat{m}}_{k}(x_i)\), for \(i=1,2,\dots ,n\) and \(k=1,2,\dots ,K\), we interpolate over \({\hat{m}}_{k}(u_t)\), for \(t=1,2,\dots ,N\) and \(k=1,2,\dots ,K\).
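The interpolation step can be sketched as follows. This is a minimal illustration that assumes piecewise-linear interpolation on a sorted grid of local points and constant extrapolation outside the grid; the extrapolation rule and the function name `interpolate_crf` are assumptions made here for the example.

```python
from bisect import bisect_right


def interpolate_crf(grid, values, x):
    """Interpolate the local estimates `values` (one per point of the sorted
    `grid`) linearly at x; outside the grid the nearest estimate is returned."""
    if x <= grid[0]:
        return values[0]
    if x >= grid[-1]:
        return values[-1]
    j = bisect_right(grid, x)          # grid[j - 1] <= x < grid[j]
    w = (x - grid[j - 1]) / (grid[j] - grid[j - 1])
    return (1.0 - w) * values[j - 1] + w * values[j]
```

Applying `interpolate_crf` to each \(x_i\), separately for each component k, yields the fitted values \({\hat{m}}_{k}(x_i)\) from the grid estimates \({\hat{m}}_{k}(u_t)\).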

2.2 Local-likelihood fitting algorithm

To maximize the likelihood function for any mixture model, the standard algorithm is the Expectation-Maximization (EM) algorithm (Dempster et al. 1977). Recall that we have both global and local parameters. As already mentioned, estimation of the latter uses only the data in a neighbourhood of some local point whereas efficient estimation of the former requires the use of all the observed data. Thus, to satisfy these competing interests, the estimation procedure must be implemented in two stages. In the first stage, we locally maximize (7) with respect to \({\textbf{m}}(u)\), \(\varvec{\pi }(u)\) and \(\varvec{\sigma }^2(u)\), for \(u\in {\mathcal {U}}\). Let \({\hat{m}}_{k}(u)={\hat{m}}_{k0}(u)\), for \(k=1,2,\dots ,K\), be the resulting local parameter estimates obtained from maximizing (7) at local point u. Obtain \({\hat{m}}_{k}(x_i)\), for \(i=1,2,\dots ,n\) and \(k=1,2,\dots ,K\), by linear interpolation. In the second stage, given \({\hat{m}}_{k}(x_i)\), for \(i=1,2,\dots ,n\) and \(k=1,2,\dots ,K\), globally estimate \(\varvec{\pi }\) and \(\varvec{\sigma }^2\) by maximizing

\[\ell (\varvec{\pi },\varvec{\sigma }^{2})=\sum _{i=1}^{n}\log \left[ \sum _{k=1}^{K}\pi _k{\mathcal {N}}\{y_i|{\hat{m}}_k(x_i),\sigma ^{2}_k\}\right] \tag{9}\]

with respect to \(\varvec{\pi }\) and \(\varvec{\sigma }^2\). Let \(\hat{{\textbf{m}}}\) and (\(\hat{\varvec{\pi }}, \hat{\varvec{\sigma }}^2\)) be the resulting estimates from the first-stage and second-stage, respectively. These estimators are the so-called one-step estimators of the local and global parameters, see Carroll et al. (1997). The one-step algorithm is an intermediate step of the one-step backfitting algorithm of Xiang and Yao (2018) for fitting model (4). The one-step procedure is summarized in Algorithm 1.
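The second stage can be sketched as a standard EM iteration for the global parameters with the fitted CRFs held fixed. The sketch below shows a single iteration and assumes the usual closed-form M-step updates for a Gaussian mixture with known component means; the function names and the data layout (`m_hat[k][i]` holding \({\hat{m}}_k(x_i)\)) are illustrative assumptions.

```python
import math


def normal_pdf(y, mean, var):
    """Gaussian density with the given mean and variance."""
    return math.exp(-0.5 * (y - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)


def global_em_step(y, m_hat, pi, var):
    """One EM iteration for (pi, sigma^2), holding the fitted CRFs fixed."""
    n, K = len(y), len(pi)
    # E-step: responsibilities under the current global parameters.
    resp = []
    for i in range(n):
        num = [pi[k] * normal_pdf(y[i], m_hat[k][i], var[k]) for k in range(K)]
        total = sum(num)
        resp.append([v / total for v in num])
    # M-step: closed-form updates of the mixing proportions and variances.
    new_pi, new_var = [], []
    for k in range(K):
        n_k = sum(resp[i][k] for i in range(n))
        rss = sum(resp[i][k] * (y[i] - m_hat[k][i]) ** 2 for i in range(n))
        new_pi.append(n_k / n)
        new_var.append(rss / n_k)
    return new_pi, new_var
```

Iterating `global_em_step` until the parameter changes fall below a tolerance would give the second-stage estimates; the stopping rule is left to the user.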

Algorithm 1: One-step algorithm for the SPGMNRs model (4)

Notice that, in Stage 1 of Algorithm 1, we must define N local likelihood functions, where N is the number of local points (see (7)). Thereafter, the natural way to proceed is to apply the EM algorithm to each local likelihood function in turn. This is demonstrated below.

For each \(u\in {\mathcal {U}}\), the EM algorithm to maximize (7) proceeds as follows. Define a K-dimensional latent variable \({\textbf{z}}_i=(z_{i1},z_{i2},\dots ,z_{iK})^\intercal \) for \(i=1,2,\dots ,n\). The \(k^{th}\) element of this variable is set equal to 1 if observation \((x_i,y_i)\) belongs to the \(k^{th}\) component and the rest of the elements are set equal to 0. Let \(\{(x_i,y_i,{\textbf{z}}_i):i=1,2,\dots ,n\}\) be the complete data. Then the corresponding complete-data log-likelihood is

\[\ell ^{c}(\varvec{\theta }(u))=\sum _{i=1}^{n}\sum _{k=1}^{K}z_{ik}\log \left[ \pi _k(u){\mathcal {N}}\{y_i|m_k(u),\sigma ^{2}_k(u)\}\right] K_h(x_i-u), \tag{10}\]

where \(\varvec{\theta }(u)=(\varvec{\pi }(u),{\textbf{m}}(u),\varvec{\sigma }^{2}(u))\), with \(\varvec{\pi }(u)=(\pi _{1}(u),\dots ,\pi _{K}(u))\), \({\textbf{m}}(u)=(m_{1}(u),\dots ,m_{K}(u))\) and \(\varvec{\sigma }^{2}(u)=(\sigma ^{2}_{1}(u),\dots ,\sigma ^{2}_{K}(u))\), is a vector of the local parameters at local point u. At the E-step, we calculate the expected value of \(\ell ^{c}(\varvec{\theta }(u))\) with respect to the conditional distribution of \({\textbf{z}}\), denoted \(Q\{\varvec{\theta }(u)|\varvec{\theta }^{(r)}(u)\}\). This corresponds to estimating the latent variable \(z_{ik}\), for \(i=1,2,\dots ,n\) and \(k=1,2,\dots ,K\), by its conditional expectation \({\mathbb {E}}(z_{ik}|x_i,y_i,\varvec{\theta }^{(r)}(u))\) as

\[\gamma ^{(r+1)}_{ik}(u)=\frac{\pi ^{(r)}_k(u){\mathcal {N}}\{y_i|m^{(r)}_k(u),\sigma ^{2(r)}_k(u)\}}{\sum _{l=1}^{K}\pi ^{(r)}_l(u){\mathcal {N}}\{y_i|m^{(r)}_l(u),\sigma ^{2(r)}_l(u)\}}, \tag{11}\]

for \(i=1,2,\dots ,n\) and \(k=1,2,\dots ,K\).

\(\gamma ^{(r+1)}_{ik}(u)\) is referred to as the responsibility of the \(k^{th}\) component for the \(i^{th}\) observation (see Bishop (2006) and Hastie et al. (2009)). It gives the posterior probability, under the current local parameter values, that the \(i^{th}\) observation belongs to the \(k^{th}\) component.
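The local E-step for a single observation can be sketched as below. The sketch assumes the usual Bayes-rule form of the responsibilities, with the component mean given by the local polynomial evaluated at \(x_i\); the function names and argument layout (`coef_u[k]` holding \((m_{k0}(u),\dots ,m_{kp}(u))\)) are assumptions made for illustration.

```python
import math


def normal_pdf(y, mean, var):
    """Gaussian density with the given mean and variance."""
    return math.exp(-0.5 * (y - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)


def local_responsibilities(xi, yi, u, pi_u, coef_u, var_u):
    """Responsibilities gamma_ik(u), k = 1..K, of the K components for the
    observation (xi, yi) at local point u."""
    weighted = []
    for p_k, coef, s2 in zip(pi_u, coef_u, var_u):
        # Local polynomial mean: sum_j m_kj(u) * (xi - u)^j.
        mean = sum(c * (xi - u) ** j for j, c in enumerate(coef))
        weighted.append(p_k * normal_pdf(yi, mean, s2))
    total = sum(weighted)
    return [w / total for w in weighted]
```

Because the function is called with the parameters of one local point at a time, nothing ties the ordering of the K returned values at one local point to the ordering at another.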

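From (11), it follows that \(Q\{\varvec{\theta }(u)|\varvec{\theta }^{(r)}(u)\}\) is

\[Q\{\varvec{\theta }(u)|\varvec{\theta }^{(r)}(u)\}=\sum _{i=1}^{n}\sum _{k=1}^{K}\gamma ^{(r+1)}_{ik}(u)\left[ \log \pi _k(u)+\log {\mathcal {N}}\{y_i|m_k(u),\sigma ^{2}_k(u)\}\right] K_h(x_i-u). \tag{12}\]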

At the M-step, we maximize \(Q(\varvec{\theta }(u)|\varvec{\theta }^{(r)}(u))\) to update \(\varvec{\theta }(u)\). For instance, to update \(m^{(r)}_k(u)\), for \(u\in {\mathcal {U}}\) and \(k=1,2,\dots ,K\), let \(({\hat{m}}^{(r+1)}_{k0}(u),{\hat{m}}^{(r+1)}_{k1}(u),\dots ,{\hat{m}}^{(r+1)}_{kp}(u))\) be the maximizers of

\[\sum _{i=1}^{n}\gamma ^{(r+1)}_{ik}(u)\log {\mathcal {N}}\Big \{y_i\Big |\sum _{j=0}^{p}m_{kj}(u)(x_i-u)^j,\sigma ^{2}_k(u)\Big \}K_h(x_i-u). \tag{13}\]

Then \(m^{(r+1)}_k(u)={\hat{m}}^{(r+1)}_{k0}(u)\), for \(k=1,2,\dots ,K\). An expression for \({\hat{m}}^{(r+1)}_{k0}(u)\) in matrix notation is useful and can be easily obtained.

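Let

\[{\textbf{X}}=\begin{pmatrix}1&(x_1-u)&\cdots &(x_1-u)^p\\ \vdots &\vdots &\ddots &\vdots \\ 1&(x_n-u)&\cdots &(x_n-u)^p\end{pmatrix}\]

be the design matrix at local point u and set \({\textbf{y}}=(y_1,y_2,\dots ,y_n)^\intercal \) and \({\textbf{m}}_k(u)=(m_{k0}(u),m_{k1}(u),\dots ,m_{kp}(u))^\intercal \). Moreover, let

\[{\textbf{W}}_k=\text{diag}\{\gamma ^{(r+1)}_{1k}(u)K_h(x_1-u),\dots ,\gamma ^{(r+1)}_{nk}(u)K_h(x_n-u)\}\]

be the \(n\times n\) diagonal matrix of the weights at local point u. The maximum likelihood criterion can be written as the weighted least-squares problem of minimizing

\[({\textbf{y}}-{\textbf{X}}{\textbf{m}}_k(u))^\intercal {\textbf{W}}_k({\textbf{y}}-{\textbf{X}}{\textbf{m}}_k(u)) \tag{15}\]

with respect to \({\textbf{m}}_k(u)\). Solving (15) gives the following expression for \({\hat{m}}^{(r+1)}_{k0}(u)\) (and consequently \(m^{(r+1)}_k(u)\))

\[{\hat{m}}^{(r+1)}_{k0}(u)={\textbf{e}}^\intercal {\textbf{A}}_k^{-1}{\textbf{B}}_k{\textbf{y}}, \tag{16}\]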

where \({\textbf{A}}_k={\textbf{X}}^\intercal {\textbf{W}}_k{\textbf{X}}\), \({\textbf{B}}_k={\textbf{X}}^\intercal {\textbf{W}}_k\) and \({\textbf{e}}\) is a \((p+1)\)-dimensional vector whose first entry is 1 and whose other entries are zero. The local estimators of the other local parameters \((\varvec{\pi }(u), \varvec{\sigma }^{2}(u))\) can be obtained in a similar fashion. However, note that for \(p>0\), the LPL estimator of \(\varvec{\pi }(u)\) does not have a closed-form expression. Thus, we estimate this local parameter using the local constant estimator (LCE), that is, the LPL estimator with \(p=0\). Furthermore, under the assumption that the regression components are homoscedastic, an LCE can also be used to estimate \(\varvec{\sigma }^{2}(u)\), since the additional improvement from using an LPL estimator with \(p>0\) will be negligible.
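For the local-linear case (\(p=1\)), the weighted least-squares update of the local regression parameters can be written out explicitly, since \({\textbf{X}}^\intercal {\textbf{W}}_k{\textbf{X}}\) is then a \(2\times 2\) matrix. The sketch below assumes a Gaussian kernel and takes the responsibilities of component k as given; both the kernel choice and the function names are illustrative assumptions.

```python
import math


def gaussian_kernel(x, h):
    """Rescaled kernel K_h(x) = K(x / h) / h, with K the standard normal density."""
    return math.exp(-0.5 * (x / h) ** 2) / (h * math.sqrt(2.0 * math.pi))


def local_linear_update(x, y, resp_k, u, h):
    """Return (m_k0(u), m_k1(u)) solving the weighted least-squares problem
    at local point u for p = 1."""
    s0 = s1 = s2 = t0 = t1 = 0.0
    for xi, yi, g in zip(x, y, resp_k):
        w = g * gaussian_kernel(xi - u, h)   # diagonal entry of W_k
        d = xi - u
        s0 += w                              # entries of X^T W_k X
        s1 += w * d
        s2 += w * d * d
        t0 += w * yi                         # entries of X^T W_k y
        t1 += w * d * yi
    det = s0 * s2 - s1 * s1
    if det <= 0.0:
        raise ValueError("X^T W_k X is singular at this local point")
    m0 = (s2 * t0 - s1 * t1) / det           # first entry of the solution
    m1 = (s0 * t1 - s1 * t0) / det
    return m0, m1
```

The first returned value plays the role of \({\hat{m}}_{k0}(u)\); on data that are exactly linear the update recovers the line, which gives a quick sanity check.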

The above EM algorithm proceeds by repeatedly iterating between the E-Step and M-Step until convergence.

3 Label-switching problem

In this Section, we give a description of the label-switching problem encountered when using Algorithm 1 and we review previous work proposed to address the problem.

3.1 A brief description of the label-switching problem

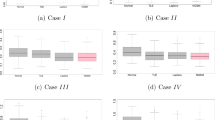

In order to obtain \({\hat{m}}_{k}(x_i)\), for \(x_i\notin {\mathcal {U}}\) and \(k=1,2,\dots ,K\), we interpolate over \({\hat{m}}_k(u)\), for \(u\in {\mathcal {U}}\). Let \(\hat{{\textbf{m}}}_{k}=({\hat{m}}_{k}(x_1),{\hat{m}}_{k}(x_2),\dots ,{\hat{m}}_{k}(x_n))\), for \(k=1,2,\dots ,K\), be the resulting component non-parametric functions. The latter may be non-smooth, exhibiting irregular and non-uniform behaviour. This is because, for each local point \(u\in {\mathcal {U}}\), the M-step is based on a unique set of local responsibilities \(\{\gamma _{ik}(u):i=1,2,\dots ,n;k=1,2,\dots ,K\}\). These sets of local responsibilities are not guaranteed to be aligned across the local points. In the event of a misalignment, the labels attached to the mixture components may switch from one local grid point to the next. The practical consequence of this label-switching is estimates of the non-parametric functions that are characterised by discontinuities near the points where the switch took place. Figure 1 illustrates this label-switching phenomenon. The figure shows a simple example of a \(K=2\) component mixture of non-parametric regressions where the regression function of one component is consistently above that of the other component (given by the solid black curves). Consider maximising the local-likelihood functions at three local points, \(u=-1\), 0 and 1, using Algorithm 1. There are \((2!)^{3}=8\) possible configurations of the component regression functions when we join the local parameter estimates at the three local points. Figure 1 shows two of these configurations: the true configuration, given by the solid curves, and another configuration, given by the dotted curves, where the labels of the local parameter estimates at local points \(-1\) and 1 have switched. Note that only 2 of the 8 configurations result in the correct CRFs. Thus, if all configurations are equally likely, there is a probability of \(1-2/8=0.75\) that Algorithm 1 will result in label-switching. In general, with K components and N local points, this probability is \(1-(K!)^{1-N}\), which is approximately 1 for \(K>2\).

Thus, Algorithm 1 cannot be relied upon to produce correctly labelled estimates. Henceforth, we refer to Algorithm 1 as the naive EM algorithm.
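The counting argument above can be checked numerically. The sketch below assumes, as the argument implicitly does, that every one of the \((K!)^{N}\) labelling configurations is equally likely and that exactly \(K!\) of them are consistently labelled across the local points; the function name is an illustrative choice.

```python
from math import factorial


def label_switching_probability(n_components, n_local_points):
    """Probability that independently labelled local fits are misaligned,
    assuming all (K!)^N labelling configurations are equally likely."""
    total = factorial(n_components) ** n_local_points
    aligned = factorial(n_components)   # one aligned configuration per relabelling
    return 1.0 - aligned / total
```

With two components and three local points the function returns 0.75, in agreement with the example, and the value increases towards 1 as either argument grows.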

3.2 Previous work addressing label-switching

This form of the label-switching problem was first mentioned by Huang (2012) and subsequently by Huang and Li (2013). To address the problem, the authors proposed a modified EM-type algorithm that simultaneously maximizes the complete-data local-likelihood functions (12) using the same (common) responsibilities \(\gamma ^{(r)}_{ik}=\gamma ^{(r)}_{ik}(u)\) for all \(u\in {\mathcal {U}}\). In other words, the responsibilities are independent of the local points. This algorithm has been applied by many authors to estimate models of the form (3). It is used in the estimation procedure (PL-EM) of Wu (2016) for estimating SPGMPLMs. It is an intermediate part of the one-step backfitting (LEM) algorithm of Xiang and Yao (2018) for estimating model (4) and of the spline-backfitted kernel (SBK) EM algorithm of Zhang (2017) for estimating SPGMARs.

In particular, the LEM algorithm is a modified version of Algorithm 1 where in Stage 1, the responsibilities (11) at the E-step are replaced by

That is, the responsibilities do not depend on the local grid points. This implies that the LEM algorithm and the other above-mentioned EM-type algorithms do not directly maximize the observed local log-likelihood functions but rather the complete-data local log-likelihood functions. Thus, the calculation of the common responsibilities does not take into account the local information. In previous work (Skhosana et al. 2022), the authors of the current paper proposed a novel EM-type algorithm that obtains the common responsibilities \(\{\gamma _{ik}:i=1,2,\dots ,n;k=1,2,\dots ,K\}\) from the local responsibilities \(\{\gamma _{ik}(u):i=1,2,\dots ,n; k=1,2,\dots ,K;u\in {\mathcal {U}}\}\), thus incorporating the local information. As with the LEM algorithm, that EM-type algorithm is a modified version of Algorithm 1. Briefly, the algorithm replaces the responsibilities (11) with common responsibilities selected as the local responsibilities that correspond to the smoothest estimates of the component non-parametric functions. See the paper for more details. The algorithm was later extended to estimate SPGMPLMs (Skhosana et al. 2023). In the next section, we propose an alternative estimation strategy to address label-switching.

4 The proposed approach

In this section, we propose to address label-switching by reformulating model (4) as a mixture of GMMs. Estimating the mixture of GMMs is, in effect, equivalent to simultaneously estimating all the parameters of each local GMM and hence the component non-parametric functions. Note that, in contrast to existing estimation strategies, this implies that the proposed estimation strategy estimates all the local parameters by maximizing only one likelihood function. Nevertheless, the strategies follow the same principle, simultaneous maximization (estimation) of the local likelihood functions (parameters).

At the end of Sect. 4.1, we show that the proposed approach encompasses, as a special case, an estimation strategy similar to the one proposed in Skhosana et al. (2022).

4.1 The mixture of GMMs

As discussed in Sect. 3, label-switching takes place when estimating the local parameters by separately maximizing each local-likelihood function (7). In the following, we propose an estimation strategy that can

1. simultaneously estimate the local parameters in order to address label switching; and

2. select the optimal set of local grid points.

Towards that end, we reformulate model (4) by introducing a second source of missing information. We assume that the parameters \(\pi _k\) and \(\sigma ^2_k\) are also non-parametric functions of x and let \({\mathcal {U}}=\{u_1,u_2,\dots ,u_N\}\) be a set of N local points in the domain of the covariate x. It follows that, at each local point \(u_t\), for \(t=1,2,\dots ,N\), model (4) is a GMM (1)

\[f_{u_t}(y)=\sum _{k=1}^{K}\pi _k{\mathcal {N}}\{y|\mu _k,\sigma ^{2}_k\}, \tag{18}\]

where \(\pi _k=\pi _k(u_t)\), \(\mu _k=m_k(u_t)\) and \(\sigma ^2_k=\sigma ^2_k(u_t)\).

One of these local GMMs can be viewed as the distribution of the response variable y. Since we do not observe the identity of this local GMM, y follows a mixture of these local GMMs

\[f(y)=\sum _{t=1}^{N}\lambda _tf_{u_t}(y), \tag{19}\]

where \(\lambda _t>0\) (satisfying \(\sum _{t=1}^{N}\lambda _t=1\)) is the mixing proportion, probability or weight. As a mixing proportion, \(\lambda _t\) can be viewed as the relative number of data points that were generated by the \(t^{th}\) local GMM. As a mixing probability, \(\lambda _t\) can be interpreted as the probability that a given data point, say \(y_i\), was generated by the \(t^{th}\) local GMM. Thus, the larger the value of \(\lambda _t\), the more data will be associated with the \(t^{th}\) local GMM. Alternatively, the larger the value of \(\lambda _t\), the more likely it is that a given data point was generated by the local model \(f_{u_t}(y)\). As a mixing weight, \(\lambda _t\) can be viewed as specifying the relative importance of the \(t^{th}\) local GMM. The larger the weight, the more significant the local model is to the overall model. Stated differently, a local model with a small weight (\(\lambda _t\approx 0\)) is indicative of a sparse local region with few to no data points in the neighbourhood of the local point. This in turn implies that the local model has little to no information about the data and consequently about the overall model. Thus, the use of the corresponding local point is of little value to the overall fit of the model.

From the previous discussion, the benefits of the weights \((\lambda _1,\lambda _2,\dots ,\lambda _N)\) become apparent. They can be used in various innovative ways as we discuss below.

To estimate model (19), we first need to specify the set of local grid points \({\mathcal {U}}\). We can follow convention and use the observed covariate values or a set of equally-spaced values from the domain of the covariate. Alternatively, we can use the weights as follows. We begin by setting \({\mathcal {U}}\) to all the observed covariate values. Next, we modify the EM algorithm by introducing a step between the E- and M-steps that identifies all the weights that fall below a certain threshold of relative importance, say \(\lambda _0\). Recall that each weight corresponds to a local grid point. Thus, all the local grid points whose corresponding weights are below \(\lambda _0\) are removed and the algorithm continues with the remaining local grid points. We repeat the steps of this modified EM algorithm until convergence. The advantage of this approach is that it finds both the number, N, and the location of the grid points.
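The pruning step described above can be sketched as follows. Rescaling the surviving weights so that they again sum to one is an assumption made here to keep the result a valid set of mixing weights; the text does not say how the remaining weights are treated. The function name is likewise illustrative.

```python
def prune_local_points(local_points, weights, threshold):
    """Drop local points whose mixing weight does not exceed `threshold`
    and rescale the remaining weights to sum to one (assumed convention)."""
    kept = [(u, w) for u, w in zip(local_points, weights) if w > threshold]
    if not kept:
        raise ValueError("threshold removes every local point")
    total = sum(w for _, w in kept)
    return [u for u, _ in kept], [w / total for _, w in kept]
```

For instance, with weights `[0.5, 0.01, 0.49]` and a threshold of 0.05, the middle local point is discarded and the two remaining weights are rescaled.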

Another benefit of the weights is in suggesting an alternative approach to address label-switching. As mentioned before, estimating model (19) is equivalent to simultaneously estimating all the local parameters thus addressing label-switching. Moreover, the estimation can be done using the classical EM algorithm or the modified EM algorithm described above. An alternative strategy to addressing label-switching is to estimate all the local GMMs and choose the one with the largest weight and use its resulting local responsibilities as the common responsibilities used to maximise all the local-likelihood functions. In this manner, this proposed alternative approach is, in principle, similar to the approach proposed in Skhosana et al. (2022).

Note that since the set of local points \({\mathcal {U}}\) is determined by the range \({\mathcal {X}}\) of the covariate x, model (19) represents a reformulation of model (4). Moreover, due to the mixture-of-mixtures structure of (19), the new model is hierarchical. To highlight this hierarchy, model (4) can be written as

\[f(y|x)=\sum _{t=1}^{N}\lambda _t\sum _{k=1}^{K}\pi _{t,k}{\mathcal {N}}\{y|m_{t,k},\sigma ^{2}_{t,k}\}, \tag{20}\]

where \(\pi _{t,k}=\pi _k(u_t)\), \(m_{t,k}=m_{k}(u_t)\) and \(\sigma ^2_{t,k}=\sigma ^{2}_{k}(u_t)\).

4.2 Estimation procedure

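In this section, we propose an estimation procedure for model (20). Consider a random sample \(\{(x_i,y_i):i=1,2,\dots ,n\}\) from model (20). The corresponding log-likelihood function is

\[\ell (\varvec{\lambda },\varvec{\theta })=\sum _{i=1}^{n}\log \left[ \sum _{t=1}^{N}\lambda _t\sum _{k=1}^{K}\pi _{t,k}{\mathcal {N}}\{y_i|m_{t,k},\sigma ^{2}_{t,k}\}\right] , \tag{21}\]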

where \(\varvec{\lambda }=(\lambda _1,\dots ,\lambda _N)\) and \(\varvec{\theta }=(\varvec{\theta }(u_1),\dots ,\varvec{\theta }(u_N))\) with \(\varvec{\theta }(u_t)=(\varvec{\pi }_{t\cdot },{\textbf{m}}_{t\cdot },\varvec{\sigma }^2_{t\cdot })\), \(\varvec{\pi }_{t\cdot }=(\pi _{t,1},\dots ,\pi _{t,K})\), \({\textbf{m}}_{t\cdot }=(m_{t,1},\dots ,m_{t,K})\) and \(\varvec{\sigma }^2_{t\cdot }=(\sigma ^2_{t,1},\dots ,\sigma ^2_{t,K})\), for \(t=1,2,\dots ,N\).

We propose a modified Expectation Conditional Maximization (ECM)-type algorithm (Meng and Rubin 1993) to maximize (21). The ECM algorithm is a modified version of the classical EM algorithm where the M-step is split into simpler M-steps, also known as conditional M (CM-) steps. Note that we now have two latent variables. The first latent variable serves as an indicator variable for the identity of the local model that generated a given data point. For each data point, we define this latent variable as \({\textbf{v}}_i=(v_{i1},v_{i2},\dots ,v_{iN})\), where \(v_{it}=1\) if the \(i^{th}\) data point belongs to, or was generated by, the \(t^{th}\) local model and 0 otherwise. The second latent variable, \({\textbf{z}}_{it}\), serves as an indicator variable for the identity of the Gaussian component, from the \(t^{th}\) local model, that generated a given data point. Thus, \({\textbf{z}}_{it}=(z_{it1},z_{it2},\dots ,z_{itK})\), where \(z_{itk}=1\) if the \(i^{th}\) data point was generated by the \(k^{th}\) component from the \(t^{th}\) local mixture model and 0 otherwise. Given the complete data \(\{(x_i,y_i,{\textbf{z}}_{it},{\textbf{v}}_i):i=1,2,\dots ,n;t=1,2,\dots ,N\}\), the corresponding (complete-data) log-likelihood is

where

with \(\varvec{\pi }=(\varvec{\pi }_{t\cdot })_{1\le t\le N}\) and \(\varvec{\theta }=({\textbf{m}}_{t\cdot },\varvec{\sigma }^2_{t\cdot })_{1\le t\le N}\).

Let \({\mathcal {T}}=\{t|\lambda _t>\lambda _0\}\) be the set of all indices of the local models where the weights \(\lambda _t\)’s are greater than some constant \(0<\lambda _0<1\). The constant \(\lambda _0\) is a threshold that specifies a level beyond which a local point can be considered to be significant in the sense discussed above. The threshold \(\lambda _0\) is a free parameter (hyperparameter) that can be chosen subjectively or objectively based on the data. More details will be given below.

At the \((r+1)^{th}\) iteration of the E-step, we calculate the conditional expected value of \(\ell ^{1c}_{0}(\varvec{\lambda })\), \(\ell ^{2c}_{0}(\varvec{\pi })\) and \(\ell ^{3c}_{0}(\varvec{\theta })\), denoted by \(Q(\varvec{\lambda }|\varvec{\lambda }^{(r)})\), \(Q(\varvec{\pi }|\varvec{\pi }^{(r)})\) and \(Q(\varvec{\theta }|\varvec{\theta }^{(r)})\), respectively, with respect to the conditional distribution of \({\textbf{v}}\) and \({\textbf{z}}\). This corresponds to estimating the latent variables \(v_{it}\) and \(z_{itk}\) for \(i=1,2,\dots ,n\), \(k=1,2,\dots ,K\) and \(t\in {\mathcal {T}}^{(r)}\) using \({\mathbb {E}}[v_{it}|x_i,y_i,\lambda _t^{(r)},\varvec{\pi }^{(r)}_{t\cdot },\varvec{\theta }^{(r)}(u_t)]\) and \({\mathbb {E}}[z_{itk}|x_i,y_i,{\textbf{v}}_i,\varvec{\pi }^{(r)}_{t\cdot },\varvec{\theta }^{(r)}(u_t)]\), respectively. Using Bayes’ theorem, the latter are calculated as

and

Note that (24) is similar to (11), with the difference being that we now have to take into account the value of \({\textbf{v}}_i\), for \(i=1,2,\dots ,n\). Expression (23), \({\hat{v}}^{(r+1)}_{it}\), can be interpreted as the probability that the \(i^{th}\) data point was generated by the \(t^{th}\) local model. In other words, it represents the responsibility of the \(t^{th}\) local model for the \(i^{th}\) data point. Given that the \(i^{th}\) data point belongs to the \(t^{th}\) local model, \({\hat{z}}_{itk}^{(r+1)}\) has the same interpretation as \(\gamma _{ik}(u_t)\).

After replacing \(v_{it}\) with \({\hat{v}}_{it}\) and \(z_{itk}\) with \({\hat{z}}_{itk}\) in (22), we obtain \(Q(\varvec{\lambda }|\varvec{\lambda }^{(r)})\), \(Q(\varvec{\pi }|\varvec{\pi }^{(r)})\) and \(Q(\varvec{\theta }|\varvec{\theta }^{(r)})\).
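As a rough illustration of the E-step, the following Python sketch computes the two sets of responsibilities by Bayes' theorem. It assumes that the \(t^{th}\) local model is a GMM with density \(\sum _k\pi _{t,k}{\mathcal {N}}(y|m_{t,k},\sigma ^2_{t,k})\) weighted by \(\lambda _t\); the function names `normal_pdf` and `e_step` and the nested-list data layout are hypothetical and are not taken from the original implementation.

```python
import math


def normal_pdf(y, mean, var):
    """Density of N(mean, var) evaluated at y."""
    return math.exp(-0.5 * (y - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)


def e_step(y, lam, pi, m, sigma2):
    """Responsibilities for a mixture of local GMMs (illustrative sketch).

    y      : list of n responses
    lam    : list of local-model weights lambda_t for the active local points
    pi     : pi[t][k], mixing proportions of local model t
    m      : m[t][k], component means of local model t
    sigma2 : sigma2[t][k], component variances of local model t
    Returns (v_hat, z_hat) with v_hat[i][t] and z_hat[i][t][k].
    """
    v_hat, z_hat = [], []
    for yi in y:
        # Assumed joint weight: lambda_t * pi_tk * N(y_i | m_tk, sigma2_tk).
        joint = [
            [lam[t] * pi[t][k] * normal_pdf(yi, m[t][k], sigma2[t][k])
             for k in range(len(pi[t]))]
            for t in range(len(lam))
        ]
        local = [sum(row) for row in joint]  # lambda_t times the local GMM density
        total = sum(local)
        # Responsibility of local model t for observation i.
        v_hat.append([lt / total for lt in local])
        # Responsibility of component k, given local model t.
        z_hat.append([[jk / lt for jk in row] for row, lt in zip(joint, local)])
    return v_hat, z_hat
```

For each observation, the entries of `v_hat[i]` sum to one over the local models and, for each local model, the entries of `z_hat[i][t]` sum to one over the components. A practical implementation would work on the log scale to guard against underflow.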

At the first CM-step of the \((r+1)^{th}\) iteration, we update \(\varvec{\lambda }^{(r)}\) by maximizing \(Q(\varvec{\lambda }|\varvec{\lambda }^{(r)})\), given \({\mathcal {T}}^{(r)}\), to obtain

To update \({\mathcal {T}}^{(r)}\), let

At the second CM-step, we update \(\varvec{\pi }^{(r)}\) and \(\varvec{\theta }^{(r)}\) by maximizing \(Q(\varvec{\pi }|\varvec{\pi }^{(r)})\) and \(Q(\varvec{\theta }|\varvec{\theta }^{(r)})\), respectively. Note that if we maximize, say, \(Q(\varvec{\theta }|\varvec{\theta }^{(r)})\) with respect to \(m_{t,k}\), for \(t\in {\mathcal {T}}^{(r)}\), the resulting estimated function \(m_k(x_i)\), for \(i=1,2,\dots ,n\), may exhibit wild oscillations. This is because, at each local point \(u_t\), all the covariate values \(\{x_1,x_2,\dots ,x_n\}\) contribute equally to the likelihood function. Thus, the local parameter estimate, say \({\hat{m}}_{t,k}\), will be sensitive to values of the covariate that are not within its neighbourhood. This may lead to a biased estimate.

To remedy this, we propose to maximize kernel weighted versions of these complete-data log-likelihood functions

where the kernel function \(K_h(x_i-u_t)\) is used to weight \(x_i\) relative to the local point \(u_t\). Note that \(Q(\varvec{\pi }|\varvec{\pi }^{(r)})\) and \(Q(\varvec{\theta }|\varvec{\theta }^{(r)})\) are implicitly kernel weighted, where the kernel function is uniform. Thus, if we choose \(K_h(\cdot )\) as the uniform kernel function, the above problem persists.

Maximizing (27) with respect to \(\pi _{t,k}\), we get

Maximizing (28) with respect to \(m_{t,k}\) and \(\sigma ^2_{t,k}\) we get

where \(w^{(r+1)}_{itk}={\hat{v}}^{(r+1)}_{it}{\hat{z}}^{(r+1)}_{itk}K_h(x_i-u_t)\).

We repeat the above E- and CM-steps until convergence.

The derivations of (29), (30) and (31) are given in Appendix B.
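To make the role of the weights \(w_{itk}\) concrete, the following Python sketch gives one plausible local-constant form of the second CM-step at a single local point \(u_t\). It assumes that the updates are weighted averages with weights \({\hat{v}}_{it}{\hat{z}}_{itk}K_h(x_i-u_t)\) and that \(K_h\) is the Gaussian kernel; the exact expressions are those derived in Appendix B, and the function names here are hypothetical.

```python
import math


def gaussian_kernel(u, h):
    """Rescaled Gaussian kernel K_h(u) = phi(u / h) / h."""
    return math.exp(-0.5 * (u / h) ** 2) / (h * math.sqrt(2.0 * math.pi))


def cm_step2_local(x, y, v_t, z_t, u_t, h):
    """Kernel-weighted updates at one local point u_t (local-constant sketch).

    v_t[i]    : responsibility of local model t for observation i
    z_t[i][k] : responsibility of component k within local model t
    Returns (pi_t, m_t, sigma2_t), each a list of length K.
    """
    n, K = len(y), len(z_t[0])
    kern = [gaussian_kernel(x[i] - u_t, h) for i in range(n)]
    denom_pi = sum(v_t[i] * kern[i] for i in range(n))
    pi_t, m_t, sigma2_t = [], [], []
    for k in range(K):
        # w_itk = v_it * z_itk * K_h(x_i - u_t)
        w = [v_t[i] * z_t[i][k] * kern[i] for i in range(n)]
        sw = sum(w)
        mean = sum(w[i] * y[i] for i in range(n)) / sw
        var = sum(w[i] * (y[i] - mean) ** 2 for i in range(n)) / sw
        pi_t.append(sw / denom_pi)  # assumed normalisation over components
        m_t.append(mean)
        sigma2_t.append(var)
    return pi_t, m_t, sigma2_t
```

Because the kernel down-weights observations far from \(u_t\), each local estimate is driven mainly by the data in its neighbourhood, which is the motivation given above for the kernel-weighted objective.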

Let \(r=R\) be the iteration index at convergence. To obtain \({\hat{m}}_{k}(x_i)\) for \(i=1,2,\dots ,n\) and \(k=1,2,\dots ,K\), we linearly interpolate over \(m^{(R)}_{t,k}\), for \(k=1,2,\dots ,K\) and \(t\in {\mathcal {T}}^{(R)}\). The first-stage estimates of the other non-parametric functions can be obtained in a similar manner.
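The interpolation step can be sketched in Python as follows. The sketch assumes that the retained grid points are sorted and that values outside the retained grid are set to the nearest end value; the latter is an assumption, and the helper names are hypothetical.

```python
from bisect import bisect_right


def interpolate_linear(grid, values, x):
    """Piecewise-linear interpolation of (grid, values) at x.

    grid must be sorted in increasing order. Outside the grid the nearest
    end value is returned (an assumption made for this sketch).
    """
    if x <= grid[0]:
        return values[0]
    if x >= grid[-1]:
        return values[-1]
    j = bisect_right(grid, x)  # grid[j - 1] <= x < grid[j]
    w = (x - grid[j - 1]) / (grid[j] - grid[j - 1])
    return (1.0 - w) * values[j - 1] + w * values[j]


def crf_at_data(grid, m_k, x):
    """Evaluate one fitted CRF at every covariate value in x."""
    return [interpolate_linear(grid, m_k, xi) for xi in x]
```

Here `grid` would hold the local points \(u_t\), \(t\in {\mathcal {T}}^{(R)}\), and `m_k` the corresponding values \(m^{(R)}_{t,k}\) for one component.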

We refer to the above algorithm as the model-based EM-type (henceforth, MB-EM) algorithm. It is model-based because of its hierarchical mixture-of-mixtures structure and its ability to select the local grid points in a principled manner by making use of a probability distribution (model), and it is EM-type because it is a modified version of the classical EM algorithm.

Note the following properties of the MB-EM algorithm:

- Choice of \(\lambda _0\): The empirical evidence in Sect. 5.2 shows that the algorithm is not sensitive to the choice of the parameter \(\lambda _0\), provided that it is not chosen too large;

- Ascent property: An important and attractive property of the classical EM algorithm is the ascent property. That is, at each iteration \(\ell ^{(r+1)}_0(\varvec{\lambda },\varvec{\beta })\ge \ell ^{(r)}_0(\varvec{\lambda },\varvec{\beta })\). Empirical evidence shows that the MB-EM algorithm also has this property;

- Convergence: The convergence of the algorithm can be evaluated in either one of the following ways: (1) Stop the algorithm when the increase in the likelihood from one iteration to the next is below some small pre-specified threshold. (2) Stop the algorithm when the change in the estimated parameters from one iteration to the next is smaller than some small value. For instance, \(||\varvec{\lambda }^{(r+1)}-\varvec{\lambda }^{(r)}||_{1}<10^{-5}\) or \(||\varvec{\lambda }^{(r+1)}-\varvec{\lambda }^{(r)}||_{2}<10^{-5}\), where \(||\cdot ||_{1}\) and \(||\cdot ||_{2}\) denote the \(L_{1}\) and \(L_{2}\) norm, respectively, on \({\mathbb {R}}^{N^{(r+1)}}\). The superscript \(N^{(r+1)}\) is used to denote the number of local grid points at the \((r+1)^{th}\) iteration.

- Algorithm complexity: At each iteration of the MB-EM algorithm, the overall time complexity of the E-step is \(O(n\times N^{(r+1)}\times K)\). In comparison, the time complexity of the NaiveEM algorithm and the LEM algorithm is \(O(n\times N\times K)\) and \(O(n\times K)\), respectively. It is known that the slow convergence of the classical EM algorithm is largely a result of the E-step computations (see Chapter 2 of Frühwirth-Schnatter et al. (2019)). This implies that the LEM algorithm should be computationally faster than the proposed algorithm. Note that the overall time complexity of the proposed CM-steps is \(O(n\times N^{(r+1)}\times K)\) and that of the M-step of both the NaiveEM algorithm and the LEM algorithm is \(O(n\times N\times K)\). However, as shown in the simulations, the computational advantage of the LEM comes at the cost of inaccurate estimation.
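The two stopping rules described above can be sketched in Python as follows. Because the set of retained local points may shrink between iterations, this sketch stores the weights in dictionaries keyed by the grid index and compares only the indices retained at the new iteration; that bookkeeping choice, like the function names, is an assumption.

```python
import math


def lambda_change(lam_new, lam_old, norm="l1"):
    """L1 or L2 norm of the change in the local-model weights.

    lam_new, lam_old : dicts mapping a grid index t to lambda_t. Only the
    indices retained at the new iteration are compared.
    """
    diffs = [lam_new[t] - lam_old.get(t, 0.0) for t in lam_new]
    if norm == "l1":
        return sum(abs(d) for d in diffs)
    if norm == "l2":
        return math.sqrt(sum(d * d for d in diffs))
    raise ValueError("norm must be 'l1' or 'l2'")


def converged_by_likelihood(loglik_new, loglik_old, tol=1e-5):
    """Rule (1): the increase in the log-likelihood is below tol."""
    return loglik_new - loglik_old < tol


def converged_by_parameters(lam_new, lam_old, tol=1e-5, norm="l1"):
    """Rule (2): the change in the weights is below tol."""
    return lambda_change(lam_new, lam_old, norm) < tol
```

Note that rule (1), as written, also stops the iterations if the log-likelihood decreases, so in practice it may be worth logging such an event given that the ascent property is only supported empirically.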

Let (\(\hat{\varvec{\pi }},\hat{{\textbf{m}}},\hat{\varvec{\sigma }}^{2}\)) be the estimates of the parametric and non-parametric terms \((\varvec{\pi },\varvec{\sigma }^2)\) and \({\textbf{m}}\), respectively, obtained from estimating model (20). Note that when defining the mixture of GMMs (20), we assumed that the global parameters \((\varvec{\pi },\varvec{\sigma }^2)\) were local. However, to obtain efficient estimates of the global parameters, we must use all of the data during estimation. Thus, in an effort to improve the estimates (\(\hat{\varvec{\pi }},\hat{\varvec{\sigma }}^2\)), given \(\hat{{\textbf{m}}}\), we propose updated estimates \(\tilde{\varvec{\pi }}\) and \(\tilde{\varvec{\sigma }}^{2}\) obtained by maximizing the global log-likelihood function

Given the global parameter estimates \(\tilde{\varvec{\pi }}\) and \(\tilde{\varvec{\sigma }}^{2}\), we can improve the local estimate \(\hat{{\textbf{m}}}\). To achieve this, we propose the estimate \(\tilde{{\textbf{m}}}\) obtained by maximizing the local log-likelihood function

over all grid points \(u_t, t=1,2,\dots ,N\).

Note that the global parameter estimates \(\tilde{\varvec{\pi }}\) and \(\tilde{\varvec{\sigma }}^{2}\) are well labelled. This implies that the local log-likelihood functions (33) can be maximized separately without being concerned about label switching.

In summary, the proposed estimation procedure proceeds in two stages. In the first stage, we obtain the estimates (\(\hat{\varvec{\pi }},\hat{{\textbf{m}}},\hat{\varvec{\sigma }}^{2}\)). Thereafter, in the second stage, we obtain the estimates (\(\tilde{\varvec{\pi }},\tilde{\varvec{\sigma }}^2,\tilde{{\textbf{m}}}\)).

We refer to the second-stage estimates \((\tilde{\varvec{\pi }},\tilde{\varvec{\sigma }}^{2},\tilde{{\textbf{m}}})\) as the one-step backfitting estimates.

4.3 One-step backfitting algorithm

In this section, we propose a one-step backfitting algorithm to obtain the one-step backfitting estimates \(\tilde{\varvec{\theta }}\). The MB-EM algorithm is an intermediate part of this algorithm.

4.3.1 Stage 0: Initializing the algorithm

Obtain appropriate initial estimates of the global parameters and the non-parametric functions, denoted \((\varvec{\pi }^{(0)},\varvec{\sigma }^{2(0)})\) and \({\textbf{m}}^{(0)}\), respectively, using, say, a mixture of regression splines (see Xiang and Yao (2018)). Moreover, let \({\mathcal {U}}\) be the set of N grid points, let \({\mathcal {T}}^{(0)}=\{1,2,\dots ,N\}\) be the initial set of indices, and specify \(\lambda _0\). In our preliminary numerical experiments, we found that the model estimates are not sensitive to the specified value of \(\lambda _0\), provided that the value is not too large. If \(\lambda _0\) is too large, the algorithm may fail because it uses few to no local points; in the extreme case, \({\mathcal {T}}\) will be empty. Thus, we recommend using the parameter value \(\lambda _0=1\times 10^{-5}\).

4.3.2 Stage 1: MB-EM algorithm to maximize \(\ell _0\)

Let \(\varvec{\lambda }^{(r)}\), \(\varvec{\pi }^{(r)}\) and \(\varvec{\theta }^{(r)}\) be the parameter estimates obtained at the \(r^{th}\) iteration.

E-Step: At the \((r+1)^{th}\) iteration, calculate \(Q(\varvec{\lambda }|\varvec{\lambda }^{(r)})\), \(Q(\varvec{\pi }|\varvec{\pi }^{(r)})\) and \(Q(\varvec{\theta }|\varvec{\theta }^{(r)})\) by first estimating \({\textbf{v}}_i\) and \({\textbf{z}}_{it}\), for \(i=1,2,\dots ,n\) and \(t\in {\mathcal {T}}^{(r)}\), using (23) and (24), respectively.

CM-Step 1: Maximize \(Q(\varvec{\lambda }|\varvec{\lambda }^{(r)})\) to obtain \(\varvec{\lambda }^{(r+1)}\) and \({\mathcal {T}}^{(r+1)}\) using (25) and (26), respectively.

CM-Step 2: Given \({\mathcal {T}}^{(r+1)}\), maximize \(Q(\varvec{\pi }|\varvec{\pi }^{(r)})\) and \(Q(\varvec{\theta }|\varvec{\theta }^{(r)})\) to obtain \(\varvec{\pi }^{(r+1)}\) and \(\varvec{\theta }^{(r+1)}\) using (29), (30) and (31), respectively.

Repeat the above E- and CM-steps until convergence.
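The first CM-step, including the update of the index set, can be illustrated with the following Python sketch. It assumes that \(\lambda _t\) is updated as the average responsibility of the \(t^{th}\) local model, that the retained set consists of the indices with \(\lambda _t>\lambda _0\), and that the retained weights are renormalised to sum to one. The renormalisation and the function name `cm_step1` are assumptions; the exact updates are given by (25) and (26).

```python
def cm_step1(v_hat, active, lambda0=1e-5):
    """Update the local-model weights and prune negligible local points.

    v_hat[i][t] : responsibility of local model t for observation i
    active      : list of currently retained grid indices
    lambda0     : threshold below which a local point is dropped
    Returns (lam, kept): a dict of weights and the retained indices.
    """
    n = len(v_hat)
    # Average responsibility of each active local model.
    lam = {t: sum(row[t] for row in v_hat) / n for t in active}
    kept = [t for t in active if lam[t] > lambda0]
    if not kept:
        # Corresponds to the failure case of an overly large threshold.
        raise ValueError("lambda0 is too large: no local points retained")
    total = sum(lam[t] for t in kept)
    # Renormalise over the retained points (an assumption of this sketch).
    return {t: lam[t] / total for t in kept}, kept
```

With the recommended \(\lambda _0=1\times 10^{-5}\), only local points with essentially no responsibility for any observation are dropped.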

4.3.3 Stage 2(a): EM algorithm to maximize \(\ell _{1}\)

Given \(\hat{{\textbf{m}}}\) obtained from Stage 1, we obtain the global estimates \(\tilde{\varvec{\pi }}\) and \(\tilde{\varvec{\sigma }}^{2}\) of the global parameters \(\varvec{\pi }\) and \(\varvec{\sigma }^{2}\), respectively, by maximizing \(\ell _{1}\) in (32) using the usual EM algorithm.

E-Step: At the \((r+1)^{th}\) iteration, calculate the expected value of the latent variable as

M-Step: We obtain the global parameter estimates \(\varvec{\pi }^{(r+1)}\) and \(\varvec{\sigma }^{2(r+1)}\) using the following equations

Repeat the above E- and M-steps until convergence.
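A minimal Python sketch of Stage 2(a) is given below. It assumes the usual EM updates for a Gaussian mixture in which the component means are held fixed at the first-stage fitted values \({\hat{m}}_k(x_i)\): the mixing proportions are the average responsibilities and the variances are responsibility-weighted mean squared residuals. The function names and the stopping rule are hypothetical.

```python
import math


def normal_pdf(y, mean, var):
    """Density of N(mean, var) evaluated at y."""
    return math.exp(-0.5 * (y - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)


def stage2a_em(y, m_hat, pi0, sigma2_0, tol=1e-8, max_iter=500):
    """EM for the global (pi, sigma2) with the CRF values held fixed.

    m_hat[i][k] : fitted value of the k-th CRF at x_i (from Stage 1)
    """
    n, K = len(y), len(pi0)
    pi, sigma2 = list(pi0), list(sigma2_0)
    for _ in range(max_iter):
        # E-step: responsibilities gamma_ik under the current (pi, sigma2).
        gamma = []
        for i in range(n):
            dens = [pi[k] * normal_pdf(y[i], m_hat[i][k], sigma2[k]) for k in range(K)]
            s = sum(dens)
            gamma.append([d / s for d in dens])
        # M-step: closed-form updates of the global parameters.
        nk = [sum(g[k] for g in gamma) for k in range(K)]
        new_pi = [nk[k] / n for k in range(K)]
        new_sigma2 = [
            sum(gamma[i][k] * (y[i] - m_hat[i][k]) ** 2 for i in range(n)) / nk[k]
            for k in range(K)
        ]
        change = sum(abs(a - b) for a, b in zip(new_pi + new_sigma2, pi + sigma2))
        pi, sigma2 = new_pi, new_sigma2
        if change < tol:
            break
    return pi, sigma2
```

Since all \(n\) observations enter every update, the resulting estimates use the full data, in line with the motivation for the second stage.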

4.3.4 Stage 2(b): EM algorithm to maximize \(\ell _{2}\)

Given \(\tilde{\varvec{\pi }}\) and \(\tilde{\varvec{\sigma }}^{2}\) obtained from Stage 2(a), we propose an improved estimate of the component non-parametric functions, denoted by \(\tilde{{\textbf{m}}}\), obtained by maximizing each local log-likelihood function in (33) using the usual EM algorithm.

E-Step: At the \((r+1)^{th}\) iteration, calculate the expected value of the latent variable as

M-Step: We obtain \(m_k^{(r+1)}(u_t)\), for \(t=1,2,\dots ,N\), using (16).

Repeat the above E- and M-steps until convergence.

At convergence of the EM algorithm of Stage 2(b), we obtain \(\tilde{{\textbf{m}}}=(\tilde{{\textbf{m}}}_1,\tilde{{\textbf{m}}}_2,\dots ,\tilde{{\textbf{m}}}_K)\), where \(\tilde{{\textbf{m}}}_k=({\tilde{m}}_{k}(x_1),\dots ,{\tilde{m}}_{k}(x_n))\) by linear interpolation over \(m^{(R)}_{k}(u_t)\) for \(t=1,2,\dots ,N\) and \(k=1,2,\dots ,K\).
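Stage 2(b) can be sketched in Python for a single grid point as follows. The sketch assumes a local-constant estimator, so that the M-step is a kernel- and responsibility-weighted average of the responses; the general local polynomial update is the one given by (16). The global parameters are held fixed at their Stage 2(a) values, and the function names are hypothetical.

```python
import math


def gaussian_kernel(u, h):
    """Rescaled Gaussian kernel K_h(u) = phi(u / h) / h."""
    return math.exp(-0.5 * (u / h) ** 2) / (h * math.sqrt(2.0 * math.pi))


def normal_pdf(y, mean, var):
    """Density of N(mean, var) evaluated at y."""
    return math.exp(-0.5 * (y - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)


def stage2b_local_em(x, y, u, h, pi, sigma2, m0, tol=1e-8, max_iter=500):
    """Local-constant EM at grid point u with global (pi, sigma2) fixed.

    m0 : starting values of m_k(u), e.g. the Stage 1 estimates.
    Returns the list of updated values m_k(u), k = 1, ..., K.
    """
    n, K = len(y), len(pi)
    kern = [gaussian_kernel(xi - u, h) for xi in x]
    m = list(m0)
    for _ in range(max_iter):
        # E-step: local responsibilities using the current m_k(u).
        gamma = []
        for yi in y:
            dens = [pi[k] * normal_pdf(yi, m[k], sigma2[k]) for k in range(K)]
            s = sum(dens)
            gamma.append([d / s for d in dens])
        # M-step: kernel-weighted average of y for each component.
        new_m = []
        for k in range(K):
            w = [gamma[i][k] * kern[i] for i in range(n)]
            new_m.append(sum(wi * yi for wi, yi in zip(w, y)) / sum(w))
        change = max(abs(a - b) for a, b in zip(new_m, m))
        m = new_m
        if change < tol:
            break
    return m
```

Running this function independently at every grid point uses the same `pi` and `sigma2` throughout, which is why the component labels stay aligned across grid points.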

We refer to the estimates \(\tilde{\varvec{\pi }}\), \(\tilde{\varvec{\sigma }}^{2}\) and \(\tilde{{\textbf{m}}}\) as the one-step backfitting estimates. To further improve the one-step backfitting estimates, we can repeat Stages 2(a) and 2(b) of the algorithm until convergence.

Remark 1

Note that label-switching is not a concern when obtaining the non-parametric estimates \(\tilde{{\textbf{m}}}\). This is because the global parameter estimates \(\tilde{\varvec{\pi }}\) and \(\tilde{\varvec{\sigma }}^{2}\) are the same across all the local points in Stage 2(b).

5 Simulations

In this section, we perform numerical experiments to demonstrate the performance of the proposed method. The purpose of these experiments is twofold. First, we want to demonstrate the effectiveness of the proposed method towards addressing label-switching. Second, we want to evaluate the accuracy of the proposed one-step backfitting estimators. Moreover, we want to demonstrate the practical suitability of the fitted model based on these estimators. For the rest of the paper, we refer to the proposed model-based one-step backfitting algorithm simply as the MB-EM algorithm. All numerical experiments are performed using the R programming language (R Core Team 2023).

5.1 Choosing the bandwidth, h

Among other things, local polynomial fitting requires a bandwidth, h. In practice, the bandwidth is usually chosen using a data-driven approach such as cross-validation (CV). In this paper, we propose a generalized CV (GCV) approach (see Craven and Wahba (1979)) for bandwidth selection. The GCV approach is less computationally intensive than the ordinary multi-fold CV approach, it alleviates the tendency of the ordinary CV approach to undersmooth (Hastie et al. 2009) and, more importantly, it allows us to express the CV error as a function of the complexity (number of parameters) of the estimator. This is important when comparing two different local polynomial estimators, as will be shown in Sect. 5.2.

Let \(\hat{{\textbf{y}}}_k=({\hat{y}}_{1k},\dots ,{\hat{y}}_{nk})^\intercal \) be the vector of fitted values, where \({\hat{y}}_{ik}={\tilde{m}}_k(x_i)\) is the one-step backfitting estimate of \(m_k(x_i)\). Using (16), it can be shown that \({\tilde{m}}_k(x_i)={\textbf{s}}(x_i){\textbf{y}}\), where \({\textbf{s}}(x_i)={\textbf{e}}^\intercal {\textbf{A}}_k^{-1}{\textbf{B}}_k\) after replacing u by \(x_i\). Then

where \({\textbf{S}}_{hk}=({\textbf{s}}(x_1),{\textbf{s}}(x_2),\dots ,{\textbf{s}}(x_n))^\intercal \) is known as the smoother matrix; see Buja et al. (1989) for more details. The first subscript shows that the smoother matrix depends on, among other things, the bandwidth h. We propose the following GCV error

where ASE\(_k\)=\(({\textbf{y}}-\hat{{\textbf{y}}}_k)^{\intercal }{\textbf{W}}_k({\textbf{y}}-\hat{{\textbf{y}}}_k)/n_k\), with \(n_k=\sum _{i=1}^{n}{\hat{\gamma }}_{ik}\), is the average squared error (ASE) of the fitted \(k^{th}\) CRF, \({\textbf{W}}_k=\text {diag}({\hat{\gamma }}_{1k},{\hat{\gamma }}_{2k},\dots ,{\hat{\gamma }}_{nk})\) is the diagonal matrix of the responsibilities of the \(k^{th}\) component obtained based on the one-step backfitting estimates \(\tilde{\varvec{\theta }}=(\tilde{\varvec{\pi }},\tilde{\varvec{\sigma }}^{2},\tilde{{\textbf{m}}})\) and

where \(s_{ii}\), for \(i=1,2,\dots ,n\), are the diagonal entries of the smoother matrix \({\textbf{S}}_{hk}\). Expression (40) denotes the degrees of freedom of the \(k^{th}\) component. The latter quantifies the complexity of the fitted CRF as it gives the effective number of parameters used to estimate the \(k^{th}\) CRF, see Buja et al. (1989) for more details. This concept is very useful for comparing local polynomial estimates of different degrees. We will demonstrate this in our simulation study.
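The two ingredients of the GCV error that are defined explicitly above, namely ASE\(_k\) and the degrees of freedom of the \(k^{th}\) component, can be sketched in Python as follows. For illustration, the smoother matrix is built for a local-constant estimator with a Gaussian kernel and responsibility weights; this specific form of the smoother, and the function names, are assumptions.

```python
import math


def gaussian_kernel(u, h):
    """Rescaled Gaussian kernel K_h(u) = phi(u / h) / h."""
    return math.exp(-0.5 * (u / h) ** 2) / (h * math.sqrt(2.0 * math.pi))


def local_constant_smoother(x, gamma_k, h):
    """Smoother matrix S with fitted values y_hat = S y (local-constant case).

    Row i holds the normalised weights gamma_jk * K_h(x_j - x_i).
    """
    n = len(x)
    S = []
    for i in range(n):
        w = [gamma_k[j] * gaussian_kernel(x[j] - x[i], h) for j in range(n)]
        sw = sum(w)
        S.append([wj / sw for wj in w])
    return S


def degrees_of_freedom(S):
    """Sum of the diagonal entries s_ii of the smoother matrix."""
    return sum(S[i][i] for i in range(len(S)))


def ase_k(y, y_hat_k, gamma_k):
    """Responsibility-weighted average squared error of the k-th CRF."""
    n_k = sum(gamma_k)
    return sum(g * (yi - fi) ** 2 for g, yi, fi in zip(gamma_k, y, y_hat_k)) / n_k
```

As a sanity check on the interpretation of the degrees of freedom, a very small bandwidth makes the smoother close to the identity (degrees of freedom near \(n\)), whereas a very large bandwidth makes it close to a weighted mean (degrees of freedom near 1).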

5.2 Simulation studies

For each of our numerical experiments, we generate 500 data sets of sizes \(n=250\), 500, 1000 and 2000. We make use of \(N=100\) local points chosen uniformly on the domain of x. In all our simulations, the covariate x is generated from a uniform distribution on the interval (0, 1). We make use of the Gaussian kernel function.

To initialize the proposed method, we make use of the mixture of regression splines (MRS) (Xiang and Yao 2018). To estimate the MRS, we make use of the bs and ns functions from the R package splines. The knots are chosen as the quartiles of x.

To evaluate the performance of the proposed method, we make use of the following measures:

- Root average squared error (RASE):

$$\begin{aligned} \text {RASE}^2({\textbf{y}})= & {} \sum _{k=1}^{K}\text {ASE}_k \end{aligned}$$(41)

$$\begin{aligned} \text {RASE}^{2}({\textbf{m}})= & {} \frac{1}{n}\sum _{i=1}^{n}\sum _{k=1}^{K}\bigg [{\tilde{m}}_{k}(x_i)-m_{k}(x_i)\bigg ]^2 \end{aligned}$$(42)

$$\begin{aligned} \text {RASE}^{2}(f_{\varvec{\theta }})= & {} \frac{1}{n}\sum _{i=1}^{n}\bigg [{\hat{f}}_{\tilde{\varvec{\theta }}}(y_i|x_i)-f_{\varvec{\theta }}(y_i|x_i)\bigg ]^2 \end{aligned}$$(43)

- Adjusted Rand index (ARI; Hubert and Arabie 1985), which is used to evaluate the clustering ability of the fitted model.

- Kolmogorov-Smirnov (KS) statistic, which is used to assess the goodness of the fit \({\hat{F}}_{\tilde{\varvec{\theta }}}\) as

$$\begin{aligned} \text {KS}=\text {max}_{i}|F_{\varvec{\theta }}(y_i|x_i)-{\hat{F}}_{\tilde{\varvec{\theta }}}(y_i|x_i)|, \end{aligned}$$(44)

for \(i=1,2,\dots ,n\),

where \(f_{\varvec{\theta }}\) and \(F_{\varvec{\theta }}\) are the true conditional probability distribution (4) and the corresponding cumulative conditional probability distribution, respectively, and \({\hat{f}}_{\tilde{\varvec{\theta }}}\) and \({\hat{F}}_{\tilde{\varvec{\theta }}}\) are the respective estimates. Finally, to evaluate the accuracy of the estimated parameters \((\tilde{\varvec{\pi }},\tilde{\varvec{\sigma }}^2)\), we make use of the ASE.
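For concreteness, RASE\(({\textbf{m}})\) in (42) and the KS statistic in (44) can be computed as in the following Python sketch. It uses the fact that the conditional distribution function of a Gaussian mixture is the \(\pi _k\)-weighted sum of Gaussian distribution functions; the function names and the nested-list layout are hypothetical.

```python
import math


def rase_m(m_fit, m_true):
    """Square root of (42): m_fit[i][k] and m_true[i][k] are CRF values at x_i."""
    n = len(m_fit)
    total = sum(
        (f - t) ** 2
        for row_fit, row_true in zip(m_fit, m_true)
        for f, t in zip(row_fit, row_true)
    )
    return math.sqrt(total / n)


def mixture_cdf(y, pi, means, sigma2):
    """Conditional CDF of a Gaussian mixture evaluated at y."""
    return sum(
        p * 0.5 * (1.0 + math.erf((y - mu) / math.sqrt(2.0 * s2)))
        for p, mu, s2 in zip(pi, means, sigma2)
    )


def ks_statistic(y, pi_true, m_true, s2_true, pi_fit, m_fit, s2_fit):
    """Maximum absolute difference between true and fitted CDFs, as in (44)."""
    return max(
        abs(mixture_cdf(y[i], pi_true, m_true[i], s2_true)
            - mixture_cdf(y[i], pi_fit, m_fit[i], s2_fit))
        for i in range(len(y))
    )
```

Both measures require the true model and are therefore only available in the simulation studies, not in the real data applications.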

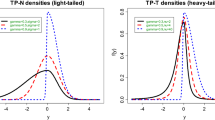

Evaluating the performance of the proposed method towards addressing label-switching

We first demonstrate that the proposed method is less sensitive to label-switching and produces reliable model estimates. To begin, we consider data generated from a \(K=2\) component SPGMNRs given in Table 1.

The CRFs, \(m_{k}(x)'\)s, in Table 1 are given in Fig. 2a. We fit model (4) for \(K=2\) on the generated data using the LCEs obtained via the naive EM algorithm (naiveEM), the proposed MB-EM algorithm and the local EM algorithm of Xiang and Yao (2018). The bandwidths were chosen as 0.05, 0.045, 0.04 and 0.035 for the sample sizes \(n=250\), 500, 1000 and 2000, respectively.

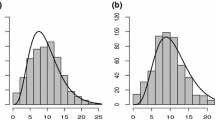

Fig. 3 shows examples of the fitted CRFs for typical samples of sizes \(n=250\), 500, 1000 and 2000. These fitted CRFs were each chosen from the fitted models, among the 500 replicates, with the largest likelihood value based on the results of the naiveEM. As can be seen from the figure, the estimates based on the naiveEM (left-column) are wiggly and non-smooth whereas those based on both the proposed MB-EM (center) and the LEM (right-column) algorithm appear to be stable. For a full picture of the performance of the proposed method compared with both the naiveEM and LEM algorithm, Table 2 gives the averages and standard deviations of the performance measures over all the 500 replicates. The results from Table 2 show that the proposed MB-EM algorithm significantly outperforms the naiveEM. Moreover, for small sample sizes, MB-EM generally gives stable (small standard deviations) and slightly better estimates compared with the LEM algorithm.

Next, we consider data generated from the model given in Table 3. The CRFs in Table 3 are plotted in Fig. 2b.

We fitted model (4) for \(K=2\) on the data using the LCEs obtained via the naiveEM, the MB-EM and the LEM. The results are given in Table 4. The results from Table 4 show that MB-EM performs slightly better than both the naiveEM and the LEM algorithm. To further emphasise this last point, Fig. 4 shows examples of the fitted CRFs for typical samples of sizes \(n=250\), 500, 1000 and 2000 chosen as before. As can be seen from the figure, the fitted CRFs based on MB-EM appear to be stable and, more importantly, in line with the true CRFs. In contrast, the fitted CRFs based on the naiveEM exhibit wild oscillations and hence are unstable whereas the estimates based on the LEM, although stable, may not be in line with the true CRFs. Thus, given the instability of the naiveEM, the latter is not useful in practice. Moreover, the estimates based on the LEM cannot be relied upon as they may lead to wrong conclusions. This serves as a further motivation for the proposed method.

Fig. 3 True (black curves) and fitted (red curves) CRFs obtained via the NaiveEM algorithm (left-column), the MB-EM algorithm (center) and LEM algorithm (right-column) for samples of sizes \(n=250\) (first-row), 500 (second-row), 1000 (third-row) and 2000 (fourth-row) generated from the model in Table 1. These CRFs were chosen from the fitted models with the largest likelihood value based on the NaiveEM.

Fig. 4 True (black curves) and fitted (red curves) CRFs obtained via the NaiveEM algorithm (left-column), the MB-EM algorithm (center) and LEM algorithm (right-column) for samples of sizes \(n=250\) (first-row), 500 (second-row), 1000 (third-row) and 2000 (fourth-row) generated from the model in Table 3. These CRFs were chosen from the fitted models with the largest likelihood value based on the NaiveEM.

Local-constant estimator vs. local-linear estimator

Next, we compare the LCEs and LLEs obtained using the proposed MB-EM. The data for this experiment are generated from the model in Table 5. A plot of the CRFs is given in Fig. 2c. It is known that the first and second derivatives of the regression function are multiplicative and additive terms, respectively, in the theoretical bias of an LCE of the regression function (see Fan (1992)). Thus, the CRF for component 2 was chosen so that its first and second derivatives are large. Since we are interested in the performance of the estimators in estimating the CRFs, we only report the \(\text {RASE}({\textbf{m}})\).

Following Buja et al. (1989), we obtain the bandwidths such that the two estimators have the same total degrees of freedom (tdf), \(\sum _{k=1}^{K}\text {df}_k\), where df\(_k\) is given by (40). This is done so that we can compare the results based on the LCE and LLE (see Buja et al. (1989) for more details). Table 6 gives the averages and standard deviations of the RASE, over all the 500 replicates, using the LCEs and LLEs obtained via the proposed MB-EM. As can be seen from the table, the LLEs perform better than the LCEs for estimating the CRFs. This is not unexpected. As alluded to above, if the true non-parametric function has large first and second derivatives, then the LCEs will be subject to bias (see Fan (1992)).

Evaluating the sensitivity of the proposed MB-EM algorithm to the value of the parameter \(\lambda _0\)

Next, we evaluate the sensitivity of the proposed MB-EM algorithm to the value of the parameter \(\lambda _0\). Intuitively, the value of \(\lambda _0\) should not be too large because this might lead to the choice of an inadequately small (or zero) number of local points. In the extreme case, the algorithm will fail. On the other hand, if \(\lambda _0\) is chosen too small, the algorithm may not be able to select the optimal set of local points. The resulting local neighbourhood will include all the initial local points.

We evaluate the sensitivity of the fitted model to the value of \(\lambda _0\) using data generated from the models in Tables 1 and 3. For a sample of size \(n=500\), Table 7 gives the results of the MB-EM algorithm for a range of values of \(\lambda _0\). Note that \(1\times 10^{-5}=0.00001\).

As can be seen from the table, for values of \(\lambda _0\) at most \(1\times 10^{-4}\), the performance of the algorithm is virtually the same. However, when \(\lambda _0\) is chosen greater than \(1\times 10^{-4}\), the performance deteriorates. In terms of choosing the number of local points where the estimation takes place, for \(\lambda _0=1\times 10^{-2}\), the algorithm tends to choose 2 to 10 local points, thus resulting in an inadequate fit. On the other hand, for \(\lambda _0=1\times 10^{-8}\), the algorithm tends to choose 95 to 100 local points. These results are consistent with the above intuition. Thus, any value of \(\lambda _0\) that is not too small (to prevent a large non-local neighbourhood) and not too large (to prevent empty neighbourhoods) will suffice. Clearly, the latter scenario results in the most undesirable outcome. In our simulations and applications, we chose our value of \(\lambda _0\) to be sufficiently small.

Note that the above results still hold if we increase the sample size to say \(n=1000\). The results can be provided upon request from the authors.

Evaluating the computational time

Finally, we evaluate the computational time when practically implementing the proposed algorithm compared with the LEM algorithm. The simulations were conducted on a computer with 2 Skylake CPUs, each with 24 cores at 2.6 GHz, and 512 GB of RAM. Table 8 gives the average time (in minutes) it takes to run the MB-EM algorithm and the LEM algorithm for samples of sizes \(n=250\), 500, 1000 and 2000 using data generated from the models in Tables 1 and 3. The results show that the LEM algorithm is computationally faster than the proposed MB-EM algorithm. However, we believe that the practical performance of the MB-EM algorithm, in terms of producing accurate estimates as clearly shown in Table 4 and Fig. 4, justifies the computational cost of the algorithm.

6 Applications

In this section, we demonstrate the practical usefulness of the proposed method on real data. For real data analysis,

1. we present results based on the proposed MB-EM algorithm and compare them with the results based on the LEM algorithm;

2. we initialize each fitting algorithm by making use of the fitted model based on the local constant estimator;

3. we use the GCV criterion to select the bandwidth for the local constant estimator. We then choose a bandwidth for the local linear estimator such that the total degrees of freedom (tdf) of the two estimators are the same. As before, this renders the fit based on the two estimators comparable;

4. we measure the goodness-of-fit using the RASE and the Bayesian information criterion (BIC)

$$\begin{aligned} \text {BIC}=-2\ell +\text {df}\times \text {log}(n) \end{aligned}$$(45)

where \(\text {df}=\text {tdf}+2K-1\) is the overall model degrees of freedom and \(\ell \) is the maximum log-likelihood value. Moreover, we assess the predictive ability of the fitted model using the mean squared prediction error (MSPE). Following Xiang and Yao (2018), we calculate the MSPE via a Monte Carlo cross validation (MCCV) procedure. The MCCV procedure randomly partitions the data into a training set with size \(n(1-r)\) and a test set with size nr, where r is the proportion of data in the test set. The model is estimated using the data in the training set and then validated using data in the test set. The procedure is repeated T times and we take the average of the MSPEs. We use \(r=0.1\) and \(T=200\); and

5. lastly, we use a conditional bootstrap approach to calculate the pointwise 95% confidence intervals of the fitted CRFs and the 95% confidence intervals of the component mixing proportions and variances. That is, for a given value of x, we sample the corresponding value of the response, denoted by \(y^*\), from the fitted SPGMNRs model \(\sum _{k=1}^K{\hat{\pi }}_k{\mathcal {N}}\{y|{\hat{m}}_k(x),{\hat{\sigma }}^2_k\}\). We repeat this sampling process n times to get a bootstrap sample \({\mathcal {S}}=\{(x_i,y_i^*):i=1,2,\dots ,n\}\). We generate B bootstrap samples \({\mathcal {S}}^{(1)},{\mathcal {S}}^{(2)},\dots ,{\mathcal {S}}^{(B)}\) in the above manner. We fit the SPGMNRs model (4) on each of these bootstrap samples, thus generating a sampling distribution of \({\hat{\pi }}_k\), \({\hat{\sigma }}^2_k\) and \({\hat{m}}_k(x)\). To compute the 95% confidence intervals, we take the \(2.5^{th}\) and \(97.5^{th}\) percentiles of the sampling distributions as the lower and upper limits, respectively, of the interval. We set \(B=200\).
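The evaluation steps listed above can be illustrated with the following Python sketch, which covers the BIC in (45), a single MCCV partition, one conditional bootstrap sample and the percentile interval. The function names are hypothetical, the percentile rule uses linear interpolation between order statistics (one of several common conventions), and the model-fitting step that would be applied to each training set or bootstrap sample is deliberately left out.

```python
import math
import random


def bic(loglik, tdf, K, n):
    """BIC as in (45), with overall degrees of freedom df = tdf + 2K - 1."""
    return -2.0 * loglik + (tdf + 2 * K - 1) * math.log(n)


def mccv_split(n, r, rng):
    """Random partition of indices 0..n-1 into training and test sets."""
    idx = list(range(n))
    rng.shuffle(idx)
    n_test = round(n * r)
    return idx[n_test:], idx[:n_test]


def conditional_bootstrap_sample(x, pi_hat, m_hat, sigma2_hat, rng):
    """Draw y* at each x_i from the fitted mixture; m_hat[i][k] = m_k(x_i)."""
    sample = []
    for i, xi in enumerate(x):
        k = rng.choices(range(len(pi_hat)), weights=pi_hat)[0]  # component label
        y_star = rng.gauss(m_hat[i][k], math.sqrt(sigma2_hat[k]))
        sample.append((xi, y_star))
    return sample


def percentile_interval(values, lower=2.5, upper=97.5):
    """Percentile interval using linear interpolation between order statistics."""
    s = sorted(values)

    def pct(p):
        pos = (len(s) - 1) * p / 100.0
        lo = int(math.floor(pos))
        hi = min(lo + 1, len(s) - 1)
        return s[lo] + (pos - lo) * (s[hi] - s[lo])

    return pct(lower), pct(upper)
```

In use, `conditional_bootstrap_sample` would be called \(B\) times, the model refitted on each sample, and `percentile_interval` applied to the \(B\) refitted values of each quantity of interest.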

6.1 South African Covid-19 data

For our first application, we consider the Covid-19 infection rates (\(r_t\)) over time (t) in two South African provinces, KwaZulu-Natal (KZN) and the Eastern Cape (EC), for the period December 2020 to 15 February 2021. This data set was previously used by Millard and Kanfer (2022), where a description can be found. The data were collected from the Data Science for Social Impact COVID-19 data repository.

Figure 5a gives a scatter plot of the data along with the identity of the province that generated each data point. The purpose of this application is to demonstrate the effectiveness of the proposed method in addressing label-switching and identify each data point with the province that generated it. Thus, we take province as a latent variable. It is clear from Fig. 5a that the relationship between the infection rate, \(r_t\), and time, t, is non-linear in each province. Thus, we fit a \(K=2\) component SPGMNRs to the data.

The GCV criterion gave a bandwidth of 1.0249 for the local constant estimator, which corresponds to a tdf of about 71. The bandwidth for the local linear estimator with about the same tdf is 1.0468.

Table 9 gives the results of the fitted model obtained using the MB-EM algorithm and the LEM algorithm. Since we know the actual component (province) to which each data point belongs, we also measure the clustering ability of the fitted models using the ARI. For this data, the local constant estimate is slightly better than the local linear estimate, with a smaller BIC and RASE. However, the predictive ability of the two estimates is virtually the same. The results based on the proposed MB-EM and the LEM algorithm are virtually the same for this data set.

Figure 5b shows the fitted component regression functions (CRFs) using the proposed MB-EM algorithm. We can see that the proposed method was able to detect the "latent" structure.

6.2 African CO2 data

For our next analysis on real data, we consider the relationship between carbon dioxide (CO\(_2\)) emissions, a measure of environmental degradation, and gross domestic product (GDP), a measure of the monetary value produced by a country in a given period. Figure 6a shows a scatter plot of CO\(_2\) per capita (in metric tons) on GDP per capita (in US$) for a group of 51 African countries in 2014. The countries include, among others, South Africa (ZAF), Botswana (BWA) and Zimbabwe (ZWE). The data were obtained from the World Bank’s World Development Indicators database (accessed on 10 April 2023). A quick visual inspection of Fig. 6a reveals two clusters (groups) of countries based on the relationship between CO\(_2\) and GDP. Moreover, this relationship is not linear in either of the two groups. A mixture of non-parametric regressions analysis is therefore apt for this data. Such an analysis can assist us in answering questions such as

- What development path is adopted by each group of countries, especially the low-GDP countries?

- Which countries, if any, are pursuing economic growth at a high cost to the environment?

- Is a linear relationship between CO\(_2\) and GDP appropriate for each group of countries?

- Are there more than two groups of countries?

After standardizing the variables, we fit a \(K=2\) component SPGMNRs model to the data in Fig. 6a in an attempt to answer some of the questions above. The GCV criterion chose a bandwidth of 0.1725 for the LCE, which corresponds to a tdf of about 14. To obtain about the same tdf, the bandwidth of the LLE was chosen to be 0.2343. To confirm that there are indeed two groups and the regression relationships are non-linear, we also fitted the SPGMNRs and GMLRs models with \(K=1,3,4\) and 5 components and compared them based on the BIC. The SPGMNRs and the GMLRs for \(K=1\) are essentially the non-parametric regression and linear regression models, respectively. These models were fitted using the R functions locfit (Loader 2023) and glm, respectively.

The results (Table 10) show that the \(K=2\) component SPGMNRs model is appropriate for this data having the smallest value of the BIC. Thus, we have confirmed that there are indeed two groups of countries. We therefore proceed with the fitted \(K=2\) component SPGMNRs model.

Table 11 gives the results from the fitted model. It can be seen that the model based on the local linear estimator is the best, as it attains the best overall model goodness-of-fit and good performance on out-of-sample prediction. Moreover, the overall performance of the proposed MB-EM algorithm is slightly better than that of the LEM algorithm for this data set.

Based on the proposed local linear one-step backfitting estimators via the MB-EM algorithm, the mixing proportions and variances, along with their 95% bootstrap confidence intervals, were obtained as 0.4775 (0.2425 - 0.5054), 0.5225 (0.4946 - 0.7576), 0.0106 (0.0047 - 0.0343) and 0.0053 (0.0010 - 0.0148), respectively. Figures 6c and 6d give the fitted CRFs obtained using the proposed LLEs via the MB-EM algorithm, together with their 95% pointwise bootstrap confidence intervals. The estimated CRFs based on the LEM are similar and are therefore omitted.
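Given a set of bootstrap replicates, intervals of this kind can be computed as sketched below. The sketch assumes percentile intervals, which may differ from the bootstrap interval actually used here, and it does not show how the replicates are generated; `percentile_ci` and `pointwise_band` are hypothetical names.

```python
import numpy as np

def percentile_ci(replicates, level=0.95):
    """Percentile interval for a scalar parameter from B bootstrap replicates."""
    alpha = 1.0 - level
    lower, upper = np.percentile(replicates, [100 * alpha / 2, 100 * (1 - alpha / 2)])
    return float(lower), float(upper)

def pointwise_band(curve_replicates, level=0.95):
    """Pointwise band for a fitted curve.

    curve_replicates has shape (B, G): the curve re-estimated on each of B
    bootstrap samples and evaluated on a common grid of G points.
    Returns an array of shape (2, G) with the lower and upper limits.
    """
    alpha = 1.0 - level
    return np.percentile(curve_replicates,
                         [100 * alpha / 2, 100 * (1 - alpha / 2)], axis=0)
```

The band is pointwise in the sense that the percentiles are taken separately at each grid point, so it does not provide simultaneous coverage over the whole curve.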

The estimated CRF in Fig. 6c reveals an interesting phenomenon. CO\(_2\) emissions increase up to a certain level of GDP; beyond this level, further increases in emissions slow down. This is consistent with the well-known environmental Kuznets curve (EKC) hypothesis in environmental economics (see Dinda (2004)). The EKC hypothesis says that, in the early development phase, a country’s economy grows at a high cost to the environment due to high carbon emissions from the industrialization process. Beyond a certain level of growth, this effect is reversed and economic growth leads to lower carbon emissions. The hypothesis thus implies a non-linear, inverted-parabola-like relationship between CO\(_2\) and GDP. Assuming that all countries follow the same EKC, for a cross-section of countries, the estimated EKCs in Fig. 6 show countries at different stages of development (Dinda 2004). Using model-based clustering (see McNicholas (2016)), we can use the fitted model to assign each country to a given group. The results are given in Fig. 6b. We find that the developmental path given by the curve in Fig. 6c is made up of countries such as Namibia, Swaziland and Botswana, in which the energy mix is becoming less dominated by fossil fuels, whereas the developmental path given by the curve in Fig. 6d is made up of countries such as South Africa, Morocco and Egypt, in which the energy mix is still heavily dominated by fossil fuels.
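The model-based assignment of observations to groups can be sketched as a maximum a posteriori rule. The sketch assumes Gaussian components with constant mixing proportions and variances and takes the fitted CRF values at each observation as given; the function names and the toy inputs are hypothetical and do not come from the fitted model.

```python
import numpy as np

def posterior_probs(y, mu, pi, sigma2):
    """Posterior component probabilities for each observation.

    y: (n,) responses; mu: (n, K) fitted CRF values at each observation;
    pi: (K,) mixing proportions; sigma2: (K,) component variances.
    """
    log_dens = -0.5 * np.log(2 * np.pi * sigma2) - 0.5 * (y[:, None] - mu) ** 2 / sigma2
    log_w = np.log(pi) + log_dens
    log_w -= log_w.max(axis=1, keepdims=True)  # stabilize before exponentiating
    w = np.exp(log_w)
    return w / w.sum(axis=1, keepdims=True)

def map_labels(y, mu, pi, sigma2):
    """Assign each observation to the component with the largest posterior."""
    return posterior_probs(y, mu, pi, sigma2).argmax(axis=1)

# Toy inputs for illustration only.
y = np.array([0.0, 1.0])
mu = np.array([[0.0, 1.0], [0.0, 1.0]])
labels = map_labels(y, mu, pi=np.array([0.5, 0.5]), sigma2=np.array([0.01, 0.01]))
```

Working on the log scale avoids numerical underflow when an observation lies far from one of the component curves.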

7 Conclusion

This paper addressed the label-switching problem encountered when estimating semi-parametric Gaussian mixtures of non-parametric regressions (SPGMNRs) using local-likelihood methods. Applying the EM algorithm to maximize each local-likelihood function separately does not guarantee that the component labels on the local parameter estimates will be aligned. We proposed a two-stage approach to (1) address label-switching and (2) obtain good estimates of the parametric and non-parametric terms of the model. In the first stage, we use a model-based approach to, in effect, simultaneously maximize the local-likelihood functions, thus addressing label-switching. Within the model-based framework, we use a modified ECM algorithm to automatically choose the number and location of the local points. In the second stage, to improve the first-stage estimates, we propose one-step backfitting estimates of the parametric and non-parametric terms. We demonstrated the effectiveness and usefulness of the proposed method on simulated data and two real data sets.

The proposed approach incorporates a tuning parameter (threshold) \(\lambda _0\) which was shown by simulation, under different scenarios, to have little influence on the results provided it is chosen to be neither too small nor too large. This is similar to the bias-variance trade-off when choosing the optimal bandwidth. As with the bandwidth, we can use a data-driven approach to choose the optimal \(\lambda _0\). This will be a subject for future studies. Given the success of the proposed method when only the CRFs are semi- or non-parametric, it would be interesting, and of practical use for future studies, to investigate the effectiveness of the approach when, in addition to the CRFs, the mixing proportions and/or variances are also non-parametric.

Availability of data and materials

All the data and software used in this research can be accessed through the link: Data and Software

References

Bishop, C.M.: Pattern Recognition and Machine Learning. Springer, New York (2006)

Buja, A., Hastie, T., Tibshirani, R.: Linear smoothers and additive models. Ann. Stat. 17(2), 453–510 (1989)

Carroll, R.J., Fan, J., Gijbels, I., et al.: Generalized partially linear single-index models. J. Am. Stat. Assoc. (1997). https://doi.org/10.1080/01621459.1997.10474001

Craven, P., Wahba, G.: Smoothing noisy data with spline functions: estimating the correct degree of smoothing by the method of generalized cross-validation. Numer. Math. 31(4), 377–403 (1979)

Dempster, A.P., Laird, N.M., Rubin, D.B.: Maximum likelihood from incomplete data via the EM algorithm. J. Roy. Stat. Soc.: Ser. B Methodol. 39(1), 1–22 (1977)

DeSarbo, W.S., Cron, W.L.: A maximum likelihood methodology for clusterwise linear regression. J. Classif. 5, 249–282 (1988)

Dinda, S.: Environmental Kuznets curve hypothesis: a survey. Ecol. Econ. 49(4), 431–455 (2004)

Fan, J.: Design-adaptive nonparametric regression. J. Am. Stat. Assoc. 87(420), 998–1004 (1992)

Fan, J., Gijbels, I.: Local Polynomial Modelling and its Applications. CRC Press, New York (1996)

Frühwirth-Schnatter, S.: Finite Mixture and Markov Switching Models. Springer Series in Statistics, Springer, New York (2006)

Frühwirth-Schnatter, S., Celeux, G., Robert, C.P.: Handbook of Mixture Analysis. CRC Press, New York (2019)

Hastie, T., Tibshirani, R., Friedman, J.H.: The Elements Of Statistical Learning: Data Mining, Inference, and Prediction. Springer, New York (2009)

Hastie, T.J., Tibshirani, R.J.: Generalized Additive Models. Taylor & Francis, New York (1990)

Huang, M., Yao, W.: Mixture of regression models with varying mixing proportions: a semiparametric approach. J. Am. Stat. Assoc. (2012). https://doi.org/10.1080/01621459.2012.682541

Huang, M., Li, R., Wang, S.: Nonparametric mixture of regression models. J. Am. Stat. Assoc. (2013). https://doi.org/10.1080/01621459.2013.772897

Hubert, L., Arabie, P.: Comparing partitions. J. Classif. 2, 193–218 (1985)

Hurn, M., Justel, A., Robert, C.P.: Estimating mixtures of regressions. J. Comput. Graph. Stat. (2003). https://doi.org/10.1198/1061860031329

Jacobs, R.A., Jordan, M.I., Nowlan, S.J., et al.: Adaptive mixtures of local experts. Neural Comput. 3(1), 79–87 (1991)

Loader, C.: locfit: local regression, likelihood and density estimation. R package version 1.5-9.8 (2023). https://CRAN.R-project.org/package=locfit

McLachlan, G., Peel, D.: Finite Mixture Models. Wiley Series in Probability and Statistics, Toronto (2000)

McNicholas, P.D.: Model-based clustering. J. Classif. 33, 331–373 (2016)

Meng, X., Rubin, D.B.: Maximum likelihood estimation via the ECM algorithm: a general framework. Biometrika 80(2), 267–278 (1993)

Millard, S.M., Kanfer, F.H.J.: Mixtures of semi-parametric generalised linear models. Symmetry (2022). https://doi.org/10.3390/sym14020409

Opsomer, J.D., Ruppert, D.: A root-n consistent backfitting estimator for semiparametric additive modeling. J. Comput. Graph. Stat. (1999). https://doi.org/10.1080/10618600.1999.10474845

Quandt, R.E.: A new approach to estimating switching regressions. J. Am. Stat. Assoc. 67(338), 306–310 (1972)

Quandt, R.E., Ramsey, J.B.: Estimating mixtures of normal distributions and switching regressions. J. Am. Stat. Assoc. 73(364), 730–738 (1978)

R Core Team: R: a language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria (2023). https://www.R-project.org/

Schlattmann, P.: Medical Applications of Finite Mixture Models. Springer, Berlin (2009)

Skhosana, S.B., Kanfer, F.H.J., Millard, S.M.: Fitting non-parametric mixture of regressions: introducing an EM-type algorithm to address the label-switching problem. Symmetry (2022). https://doi.org/10.3390/sym14051058

Skhosana, S.B., Millard, S.M., Kanfer, F.H.J.: A novel EM-type algorithm to estimate semi-parametric mixtures of partially linear models. Mathematics (2023). https://doi.org/10.3390/math11051087

Tibshirani, R., Hastie, T.: Local likelihood estimation. J. Am. Stat. Assoc. 82(398), 559–567 (1987)

Titterington, D.M., Smith, A.F.M., Makov, U.E.: Statistical Analysis of Finite Mixture Distributions. Wiley, New York (1985)

Wu, X., Liu, T.: Estimation and testing for semiparametric mixtures of partially linear models. Commun. Stat. Theory Method. (2016). https://doi.org/10.1080/03610926.2016.1189569

Xiang, S., Yao, W.: Semiparametric mixtures of nonparametric regressions. Ann. Inst. Statistical Math. (2018). https://doi.org/10.1007/s10463-016-0584-7

Zhang, Y., Pan, W.: Estimation and inference for mixture of partially linear additive models. Commun. Stat. Theory Method. (2022). https://doi.org/10.1080/03610926.2020.1777305

Zhang, Y., Zheng, Q.: Semiparametric mixture of additive regression models. Commun. Stat. Theory Method. (2018). https://doi.org/10.1080/03610926.2017.1310243

Acknowledgements

The authors would like to thank Dr. Jannie Pretorius from the Center for the Advancement of Scholarship at the University of Pretoria for providing the computing platform used to perform the numerical data analysis presented in this research.

Funding

Open access funding provided by University of Pretoria. Partial financial support was received from STATOMET at the University of Pretoria and the New Generation of Academics programme (nGAP), Department of Higher Education, South Africa.

Author information

Contributions

Conceptualization: [Sphiwe B. Skhosana; Salomon M. Millard and Frans H. J. Kanfer], Methodology: [Sphiwe B. Skhosana; Salomon M. Millard and Frans H. J. Kanfer], Formal analysis and investigation: [Sphiwe B. Skhosana], Writing - original draft preparation: [Sphiwe B. Skhosana]; Writing - review and editing: [Sphiwe B. Skhosana; Salomon M. Millard and Frans H. J. Kanfer], Supervision: [Salomon M. Millard and Frans H. J. Kanfer]. All authors have read and approved the final manuscript.

Ethics declarations

Conflict of interest

The authors have no conflicts of interest to declare that are relevant to the content of this article.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A

In this appendix, we show how the proposed estimation strategy can be extended to estimate the general model (3).

Let \({\bar{\pi }}_k\), \(\bar{\varvec{\beta }}_k\), \({\bar{\sigma }}^2_k\) and \({\bar{g}}_k(t_r)\), for \(r=1,2,\dots ,D_2\) and \(k=1,2,\dots ,K\), be the pilot or initial estimates of \(\pi _k\), \(\varvec{\beta }_k\), \(\sigma ^2_k\) and \(g_k(t_r)\), for \(r=1,2,\dots ,D_2\) and \(k=1,2,\dots ,K\), respectively. To estimate \(g_k(t_q)\), for \(k=1,2,\dots ,K\), using the model-based approach, define the pseudo response variable \(y_q=y-\sum _{k=1}^K{\bar{\pi }}_k\big [{\textbf{x}}^\intercal \bar{\varvec{\beta }}_k+\sum _{r\ne q}{\bar{g}}_k(t_r)\big ]\) corresponding to the covariate \(t_q\). Then, model (3) reduces to

Model (A1) is the SPGMNRs model (4). It can be estimated in the same way as model (4), as discussed in Section 4.
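Assuming that model (4) has the form described in the abstract, that is, constant mixing proportions and variances with non-parametric CRFs, the reduced model for the pseudo response can be sketched as follows. The notation \({\mathcal {N}}\{\mu ,\sigma ^2\}\) for a Gaussian distribution with mean \(\mu \) and variance \(\sigma ^2\) is an assumption of this sketch.

\[
y_q \mid t_q \;\sim \; \sum _{k=1}^{K}\pi _k\,{\mathcal {N}}\{g_k(t_q),\,\sigma ^2_k\}
\]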

Let \({\hat{g}}_k(t_q)\), for \(k=1,2,\dots ,K\), be the estimates of \(g_k(t_q)\), for \(k=1,2,\dots ,K\), obtained from fitting model (A1).

To obtain the estimates \({\hat{g}}_k(t_r)\), for \(r\ne q\) and \(k=1,2,\dots , K\), for the other non-parametric additive functions, we repeat the above procedure.

Finally, let \({\hat{g}}_k(t_r)\), for \(r=1,2,\dots ,D_2\) and \(k=1,2,\dots , K\), be the model-based estimates of the non-parametric additive functions.

Given the estimates \({\hat{g}}_k(t_r)\), for \(r=1,2,\dots ,D_2\) and \(k=1,2,\dots , K\), we can improve the estimates \({\bar{\pi }}_k\), \(\bar{\varvec{\beta }}_k\) and \({\bar{\sigma }}^2_k\) by maximizing the log-likelihood function

Let \({\tilde{\pi }}_k\), \(\tilde{\varvec{\beta }}_k\) and \({\tilde{\sigma }}^2_k\) be the global parameter estimates obtained from maximizing (A2).
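A log-likelihood consistent with this step can be sketched as follows. The sketch assumes that model (3) has Gaussian components with means \({\textbf{x}}^\intercal \varvec{\beta }_k+\sum _{r=1}^{D_2}g_k(t_r)\), as suggested by the pseudo response defined above; the observation index \(i=1,2,\dots ,n\) and the symbol \(\phi (\cdot |\mu ,\sigma ^2)\) for the Gaussian density are notational assumptions. The non-parametric functions are held fixed at their model-based estimates.

\[
\ell (\varvec{\pi },\varvec{\beta },\varvec{\sigma }^2)=\sum _{i=1}^{n}\log \sum _{k=1}^{K}\pi _k\,\phi \Big (y_i\,\Big |\,{\textbf{x}}_i^\intercal \varvec{\beta }_k+\sum _{r=1}^{D_2}{\hat{g}}_k(t_{ir}),\,\sigma ^2_k\Big )
\]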

Given \({\tilde{\pi }}_k\), \(\tilde{\varvec{\beta }}_k\) and \({\tilde{\sigma }}^2_k\) and \({\hat{g}}_k(t_r)\), for \(r\ne q\) and \(k=1,2,\dots , K\), we can improve the estimates of the non-parametric additive functions \({\hat{g}}_k(t_q)\), for \(k=1,2,\dots , K\), by maximizing the local-likelihood function

for \(u\in {\mathcal {U}}\), where \({\mathcal {U}}\) is the set of all the local grid points in the domain of the covariate \(t_q\).
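A local-constant version of such a local-likelihood function can be sketched as follows. It assumes Gaussian components, a kernel function \(K_h(\cdot )\) with bandwidth \(h\), and local parameters \(g_1,\dots ,g_K\) standing for the values \(g_1(u),\dots ,g_K(u)\); these symbols are assumptions of the sketch, and a local linear version would replace \(g_k\) with a local line in \(t_{iq}-u\).

\[
\ell _u(g_1,\dots ,g_K)=\sum _{i=1}^{n}\log \Big [\sum _{k=1}^{K}{\tilde{\pi }}_k\,\phi \Big (y_i\,\Big |\,{\textbf{x}}_i^\intercal \tilde{\varvec{\beta }}_k+g_k+\sum _{r\ne q}{\hat{g}}_k(t_{ir}),\,{\tilde{\sigma }}^2_k\Big )\Big ]K_h(t_{iq}-u)
\]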

Let \({\tilde{g}}_k(t_q)\), for \(k=1,2,\dots ,K\), be the new estimates of \(g_k(t_q)\), for \(k=1,2,\dots ,K\) and set \({\hat{g}}_k(t_q)={\tilde{g}}_k(t_q)\), for \(k=1,2,\dots ,K\). We repeat the above procedure to obtain the estimates \({\tilde{g}}_k(t_r)\), for \(r\ne q\) and \(k=1,2,\dots ,K\).

Finally, let \({\tilde{\pi }}_k\), \(\tilde{\varvec{\beta }}_k\), \({\tilde{\sigma }}^2_k\) and \({\tilde{g}}_k(t_r)\), for \(r=1,2,\dots ,D_2\) and \(k=1,2,\dots ,K\), be the one-step backfitting model-based EM estimates.

The above strategy for estimating model (3) is a three-stage procedure. In the first stage, we obtain the pilot or initial estimates of the parameters and non-parametric additive functions. This can be done using B-splines as in Zhang (2020). In the second stage, we use the proposed model-based approach to estimate the non-parametric additive functions. Finally, in the third stage, we re-estimate the parameters and then the non-parametric additive functions.
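The pseudo response \(y_q\) used in the second stage can be computed directly from the pilot estimates. The sketch below follows the definition given at the start of this appendix; the array layout and the name `pseudo_response` are hypothetical, and the pilot values \({\bar{g}}_k(t_{ir})\) are assumed to have been evaluated at the observed covariates.

```python
import numpy as np

def pseudo_response(y, X, g_bar, pi_bar, beta_bar, q):
    """Pseudo response for the q-th non-parametric covariate.

    y: (n,) responses; X: (n, p) parametric covariates;
    g_bar: (n, K, D2) pilot values of g_k(t_r) at each observation;
    pi_bar: (K,) pilot mixing proportions; beta_bar: (K, p) pilot coefficients;
    q: index (0-based) of the covariate whose functions are being estimated.
    """
    linear = X @ beta_bar.T                      # (n, K): x' beta_k
    others = g_bar.sum(axis=2) - g_bar[:, :, q]  # (n, K): sum over r != q
    return y - (linear + others) @ pi_bar        # subtract the pi-weighted sum

# Toy inputs for illustration only.
y = np.array([10.0, 20.0])
X = np.array([[1.0], [2.0]])
beta_bar = np.array([[1.0], [3.0]])
pi_bar = np.array([0.5, 0.5])
g_bar = np.empty((2, 2, 2))
g_bar[:, :, 0] = 100.0  # the q-th functions are excluded from the adjustment
g_bar[:, :, 1] = 1.0
y_q = pseudo_response(y, X, g_bar, pi_bar, beta_bar, q=0)
```

Repeating the call for each \(q\) gives the sequence of pseudo responses to which the model-based approach is applied in turn.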

Appendix B Derivations

B.1 Derivation of \(\pi ^{(r+1)}_{t,k}\)

Note that \(\sum _{k=1}^K\pi _{t,k}=1\), for \(t\in {\mathcal {T}}^{(r+1)}\). Thus, the maximization of \(Q^w(\varvec{\theta }|\varvec{\theta }^{(r)})\) with respect to \(\pi _{t,k}\) is subject to the above constraint.