Abstract

Consider bivariate observations \((X_1,Y_1), \ldots , (X_n,Y_n) \in {\mathbb {R}}\times {\mathbb {R}}\) with unknown conditional distributions \(Q_x\) of Y, given that \(X = x\). The goal is to estimate these distributions under the sole assumption that \(Q_x\) is isotonic in x with respect to likelihood ratio order. If the observations are identically distributed, a related goal is to estimate the joint distribution \(\mathcal {L}(X,Y)\) under the sole assumption that it is totally positive of order two. An algorithm is developed which estimates the unknown family of distributions \((Q_x)_x\) via empirical likelihood. The benefit of the stronger regularization imposed by likelihood ratio order over the usual stochastic order is evaluated in terms of estimation and predictive performances on simulated as well as real data.

Similar content being viewed by others

Explore related subjects

Find the latest articles, discoveries, and news in related topics.Avoid common mistakes on your manuscript.

1 Introduction

Consider a univariate regression setting with observations \((X_1,Y_1)\), \((X_2,Y_2)\), ..., \((X_n,Y_n)\) in \({\mathfrak {X}} \times {\mathbb {R}}\), where \({\mathfrak {X}}\) is an arbitrary real set. We assume that conditional on \(\varvec{X} := (X_i)_{i=1}^n\), the observations \(Y_1,Y_2,\ldots ,Y_n\) are independent with distributions \(\mathcal {L}(Y_i \,|\, \varvec{X}) = Q_{X_i}\), where the distributions \(Q_x\), \(x \in {\mathfrak {X}}\), are unknown. The goal is to estimate the latter under the sole assumption that \(Q_x\) is isotonic in x in a certain sense. That means, if (X, Y) denotes a generic observation, the larger (or smaller) the value of X, the larger (or smaller) Y tends to be. An obvious notion of order would be the usual stochastic order, which states that \(Q_{x_1} \le _{\textrm{st}}Q_{x_2}\) whenever \(x_1 \le x_2\), that is, \(Q_{x_1}((-\infty ,y]) \ge Q_{x_2}((-\infty ,y])\) for all \(y\in {\mathbb {R}}\). This concept has been investigated and generalized by numerous authors, see Henzi et al. (2021b) and Mösching and Dümbgen (2020) and the references cited therein. The latter paper illustrates the application of isotonic distributional regression in weather forecasting, and Henzi et al. (2021a) use it to analyze the length of stay of patients in Swiss hospitals.

The present paper investigates a stronger notion of order, the so-called likelihood ratio order. The usual definition is that for arbitrary points \(x_1 < x_2\) in \({\mathfrak {X}}\), the distributions \(Q_{x_1}\) and \(Q_{x_2}\) have densities \(g_{x_1}\) and \(g_{x_2}\) with respect to some dominating measure such that \(g_{x_2}/g_{x_1}\) is isotonic on the set \(\{g_{x_1} + g_{x_2} > 0\}\), and this condition will be denoted by \(Q_{x_1} \le _{\textrm{lr}}Q_{x_2}\). At first glance, this looks like a rather strong assumption coming out of thin air, but it is familiar from mathematical statistics or discriminant analyses and has interesting properties. For instance, \(Q_{x_1} \le _{\textrm{lr}}Q_{x_2}\) if and only if \(Q_{x_1}(\cdot \,|\, B) \le _{\textrm{st}}Q_{x_2}(\cdot \,|\, B)\) for any real interval B such that \(Q_{x_1}(B), Q_{x_2}(B) > 0\), where \(Q_{x_j}(A \,|\, B) := Q_{x_j}(A\cap B)/Q_{x_j}(B)\). Furthermore, likelihood ratio ordering is a frequent assumption or implication of models in mathematical finance, see Beare and Moon (2015) and Jewitt (1991). The notion of likelihood ratio order is reviewed thoroughly in Dümbgen and Mösching (2023), showing that it defines a partial order on the set of all probability measures on the real line which is preserved under weak convergence. That material generalizes definitions and results in Shaked and Shanthikumar (2007).

Thus far, estimation of distributions under a likelihood ratio order constraint was mainly limited to settings with two or finitely many samples and populations. First, Dykstra et al. (1995) estimated the parameters of two multinomial distributions that are likelihood ratio ordered via a restricted maximum likelihood approach. After reparametrization, they found that the maximization problem at hand had reduced to a specific bioassay problem treated by Robertson et al. (1988) and which makes use of the theory of isotonic regression. It is then suggested that their approach generalizes well to any two distributions that are absolutely continuous with respect to some dominating measure. Later, Carolan and Tebbs (2005) focused on testing procedures for the equality of two distributions \(Q_1\) and \(Q_2\) versus the alternative hypothesis that \(Q_1 \le _{\textrm{lr}}Q_2\), in the specific case where the cumulative distribution functions \(G_i\) of \(Q_i\), \(i=1,2\), are continuous. To this end, they made use of the equivalence between likelihood ratio order and the convexity of the ordinal dominance curve \(\alpha \mapsto G_2\bigl (G_1^{-1}(\alpha )\bigr )\), \(\alpha \in [0,1]\), which holds in case of \(G_2\) being absolutely continuous with respect to \(G_1\). The convexity of the ordinal dominance curve was also exploited by Westling et al. (2023) to provide nonparametric maximum likelihood estimators of \(G_1\) and \(G_2\) under likelihood ratio order for discrete, continuous, as well as mixed continuous-discrete distributions using the greatest convex minorant of the empirical ordinal dominance curve. However, this method still necessitates the restrictive assumption that \(G_2\) is absolutely continuous with respect to \(G_1\). Other attempts at estimating two likelihood ratio ordered distributions include Yu et al. (2017) who treat the estimation problem with a maximum smoothed likelihood approach, requiring the choice of a kernel and bandwidth parameters, and Hu et al. (2023) who suppose absolutely continuous distributions and model the logarithm of the ratio of densities as a linear combination of Bernstein polynomials.

To the best of our knowledge, only Dardanoni and Forcina (1998) considered the problem of estimating an arbitrary fixed number \(\ell \ge 2\) of likelihood ratio ordered distributions \(Q_1,Q_2,\ldots ,Q_\ell \), all of them sharing the same finite support. They showed that the constrained maximum likelihood problem may be reparametrized to obtain a convex optimization problem with linear inequality constraints, and they propose to solve the latter via a constrained version of the Fisher scoring algorithm. At each step of their procedure, it is necessary to solve a quadratic programming problem.

Within the setting of distributional regression, we follow an empirical likelihood approach (Owen 1988, 2001) to estimate the family \((Q_x)_{x\in {\mathfrak {X}}}\) for arbitrary real sets \({\mathfrak {X}}\). After a reparametrization similar to that of Dardanoni and Forcina (1998), we show that the problem of maximizing the (empirical) likelihood under the likelihood ratio order constraint yields again a finite-dimensional convex optimization problem with linear inequality constraints. We did experiments with active set algorithms in the spirit of Dümbgen et al. (2021) which are similar to the algorithms of Dardanoni and Forcina (1998). But, as explained later, the computational burden may become too heavy for large sample sizes n. Alternatively, we devise an algorithm which adapts and extends ideas from Jongbloed (1998) and Dümbgen et al. (2006) for the present setting. It makes use of a quasi-Newton approach, and new search directions are obtained via multiple isotonic weighted least squares regression.

There is an interesting aspect of the present estimation problem. If we assume that the observations \((X_i,Y_i)\) are independent copies of a generic random pair (X, Y), the new estimation method may also be interpreted as an empirical likelihood estimator of the joint distribution of (X, Y), hypothesizing that the latter is bivariate totally positive of order two (TP2). That is, for arbitrary intervals \(A_1, A_2\) and \(B_1, B_2\) such that \(A_1 < A_2\) and \(B_1 < B_2\) element-wise,

If the joint distribution of (X, Y) has a density h with respect to Lebesgue measure on \({\mathbb {R}}\times {\mathbb {R}}\), or if it is discrete with probability mass function h, then TP2 is equivalent to requiring that

and this is just a special case of multivariate total positivity of order two (Karlin 1968). For further equivalences and results in dimension two, see Dümbgen and Mösching (2023). Interestingly, this TP2 constraint is symmetric in X and Y, and our algorithm exploits this symmetry. A different, more restrictive approach to the estimation of a TP2 distribution is proposed by Hütter et al. (2020). They assume that the distribution of (X, Y) has a smooth density with respect to Lebesgue measure on a given rectangle and devise a sieve maximum likelihood estimator.

The rest of the article is structured as follows. Section 2 explains why empirical likelihood estimation of a family of likelihood ratio ordered distributions is essentially equivalent to the estimation of a discrete bivariate TP2 distribution. In Sect. 3 we present an algorithm to estimate a bivariate TP2 distribution. In Sect. 4, a simulation study illustrates the benefits of the new estimation paradigm compared to the usual stochastic order constraint. Proofs and technical details are deferred to the appendix.

2 Two versions of empirical likelihood modelling

With our observations \((X_i,Y_i)\in {\mathfrak {X}}\times {\mathbb {R}}\), \(1 \le i \le n\), let

with \(x_1< \cdots < x_\ell \) and \(y_1< \cdots < y_m\). For an index pair (j, k) with \(1 \le j \le \ell \) and \(1 \le k \le m\), let

That means, the empirical distribution \({\widehat{R}}_{\textrm{emp}}\) of the observations \((X_i,Y_i)\) can be written as \({\widehat{R}}_{\textrm{emp}} = n^{-1} \sum _{j=1}^\ell \sum _{k=1}^m w_{jk}^{} \delta _{(x_j,y_k)}^{}\).

2.1 Estimating the conditional distributions \(Q_x\)

To estimate \((Q_x)_{x \in {\mathfrak {X}}}\) under likelihood ratio ordering, we first estimate \((Q_{x_j})_{1 \le j \le \ell }\). If that results in \(({\widehat{Q}}_{x_j})_{1 \le j \le \ell }\), we may define

This piecewise linear extension preserves isotonicity with respect to \(\le _{\textrm{lr}}\), see Lemma 3.

To estimate \(Q_{x_1}, \ldots , Q_{x_\ell }\), we restrict our attention to distributions with support \(\{y_1,\ldots ,y_m\}\). That means, we assume temporarily that for \(1 \le j \le \ell \),

with weights \(q_{j1}, \ldots , q_{jm} \ge 0\) summing to one. The empirical log-likelihood for the corresponding matrix \(\varvec{q} = (q_{jk})_{j,k} \in [0,1]^{\ell \times m}\) equals

Then the goal is to maximize this log-likelihood over all matrices \(\varvec{q} \in [0,1]^{\ell \times m}\) such that

The latter constraint is equivalent to saying that \(Q_{x_j}\) is isotonic in \(j \in \{1,\ldots ,\ell \}\) with respect to \(\le _{\textrm{lr}}\).

2.2 Estimating the distribution of (X, Y)

Suppose that the observations \((X_i,Y_i)\) are independent copies of a random pair (X, Y) with unknown TP2 distribution R on \({\mathbb {R}}\times {\mathbb {R}}\). An empirical likelihood approach to estimating R is to restrict one’s attention to distributions

with \(\ell m\) weights \(h_{jk} \ge 0\) summing to one. The empirical log-likelihood of the corresponding matrix \(\varvec{h} = (h_{jk})_{j,k}\) equals \(L_{\textrm{raw}}(\varvec{h})\) with the function \(L_{\textrm{raw}}\) defined in (1). But now the goal is to maximize \(L_\textrm{raw}(\varvec{h})\) over all matrices \(\varvec{h} \in [0,1]^{\ell \times m}\) satisfying the constraints

and (3). As mentioned in the introduction, requirement (3) for \(\varvec{h}\) is equivalent to R being TP2. One can get rid of the constraint (4) via a Lagrange trick and maximize

over all \(\varvec{h}\) satisfying (3), where \(h_{++} := \sum _j \sum _k h_{jk}\). Indeed, if \(\varvec{h}\) is a matrix in \([0,\infty )^{\ell \times m}\) such that \(L_{(\textrm{raw})}(\varvec{h}) > - \infty \), then \(\tilde{\varvec{h}} := (h_{jk}/h_{++})_{j,k}\) satisfies (3) if and only if \(\varvec{h}\) does, and

with equality if and only if \(h_{++} = 1\), that is, \(\varvec{h} = \tilde{\varvec{h}}\).

2.3 Equivalence of the two estimation problems

For any matrix \(\varvec{a} \in {\mathbb {R}}^{\ell \times m}\) define the row sums \(a_{j+} := \sum _k a_{jk}\) and column sums \(a_{+k} := \sum _j a_{jk}\). If \(\varvec{h}\) is an arbitrary matrix in \([0,\infty )^{\ell \times m}\) such that \(L_{\textrm{raw}}(\varvec{h}) > - \infty \), and if we write

then \(\varvec{h}\) satisfies (3) if and only if \(\varvec{q}\) does. Furthermore, \(\varvec{q}\) satisfies (2), and elementary algebra shows that

The unique maximizer \(\varvec{p} = (p_j)_j\) of \(\sum _j (w_{j+} \log p_j - n p_j + w_{j+})\) is the vector \((w_{j+}/n)_j\), and this implies the following facts:

-

If \(\widehat{\varvec{h}}\) is a maximizer of \(L(\varvec{h})\) under the constraints (3), then \({\widehat{h}}_{j+} = w_{j+}/n\) for all j, and \({\widehat{q}}_{jk} := {\widehat{h}}_{jk}/{\widehat{h}}_{j+}\) defines a maximizer \(\widehat{\varvec{q}}\) of \(L_{\textrm{raw}}(\varvec{q})\) under the constraints (2) and (3).

-

If \(\widehat{\varvec{q}}\) is a maximizer of \(L_{\textrm{raw}}(\varvec{q})\) under the constraints (2) and (3), then \({\widehat{h}}_{jk} := (w_{j+}/n) {\widehat{q}}_{jk}\) defines a maximizer \(\widehat{\varvec{h}}\) of \(L(\varvec{h})\) under the constraints (3).

As a final remark, note that the two estimation problems are monotone equivariant in the following sense: If (X, Y) is replaced with \(({\tilde{X}}, {\tilde{Y}})=(\sigma (X), \tau (Y))\) with strictly isotonic functions \(\sigma :{\mathfrak {X}}\rightarrow {\mathbb {R}}\) and \(\tau :{\mathbb {R}}\rightarrow {\mathbb {R}}\), then \({\mathcal {L}}({\tilde{Y}}|{\tilde{X}}=\sigma (x)) = {\mathcal {L}}(\tau (Y)|X = x)\) for \(x\in {\mathfrak {X}}\). Furthermore, the constraints of likelihood ratio ordered conditional distributions or of a TP2 joint distribution remain valid under such transformations.

2.4 Calibration of rows and columns

The previous considerations motivate to find a maximizer \(\widehat{\varvec{h}} \in [0,\infty )^{\ell \times m}\) of \(L(\varvec{h})\) under the constraint (3), even if the ultimate goal is to estimate the conditional distributions \(Q_x\), \(x \in {\mathfrak {X}}\). They also indicate two simple ways to improve a current candidate \(\varvec{h}\) for \(\widehat{\varvec{h}}\). Let \(\tilde{\varvec{h}}\) be defined via

i.e. we rescale the rows of \(\varvec{h}\) such that the new row sums \({\tilde{h}}_{j+}\) coincide with the empirical weights \(w_{j+}/n\). Then

with equality if and only if \(\tilde{\varvec{h}} = \varvec{h}\). Similarly, one can improve \(\varvec{h}\) by rescaling its columns, i.e. replacing \(\varvec{h}\) with \(\tilde{\varvec{h}}\), where

3 Estimation

3.1 Dimension reduction

The minimization problem mentioned before involves a parameter \(\varvec{h} \in [0,\infty )^{\ell \times m}\) under \(\left( {\begin{array}{c}\ell \\ 2\end{array}}\right) \left( {\begin{array}{c}m\\ 2\end{array}}\right) \) nonlinear inequality constraints. The parameter space and the number of constraints may be reduced as follows.

Lemma 1

Let \(\mathcal {P}\) be the set of all index pairs (j, k) such that there exist indices \(1 \le j_1 \le j \le j_2 \le \ell \) and \(1 \le k_1 \le k \le k_2 \le m\) with \(w_{j_1k_2}, w_{j_2k_1} > 0\).

(a) If \(\varvec{h} \in [0,\infty )^{\ell \times m}\) satisfies (3) and \(L(\varvec{h}) > - \infty \), then \(h_{jk} > 0\) for all \((j,k) \in \mathcal {P}\).

(b) If such a matrix \(\varvec{h}\) is replaced with \(\tilde{\varvec{h}}\! := \!\bigl ( 1_{[(j,k) \!\in \mathcal {P}]} h_{jk} \bigr )_{j,k}\), then \(\tilde{\varvec{h}}\) satisfies (3), too, and \(L(\tilde{\varvec{h}}) \ge L(\varvec{h})\) with equality if and only if \(\tilde{\varvec{h}} = \varvec{h}\).

(c) If \(\varvec{h} \in [0,\infty )^{\ell \times m}\) such that \(\{(j,k):h_{jk} > 0\} = \mathcal {P}\), then constraint (3) is equivalent to

All in all, we may restrict our attention to parameters \(\varvec{h} \in (0,\infty )^{\mathcal {P}}\) satisfying (5), where \(h_{jk} := 0\) for \((j,k) \not \in \mathcal {P}\). Note that (5) involves only \((\ell -1)(m-1)\) inequalities, and the inequality for one particular index pair (j, k) is nontrivial only if the two pairs \((j-1,k),(j,k-1)\) belong to \(\mathcal {P}\).

The set \(\mathcal {P}\) consists of all pairs (j, k) such that the support of the empirical distribution \({\widehat{R}}_{\textrm{emp}}\) contains a point \((x_{j_1},y_{k_2})\) “northwest” and a point \((x_{j_2},y_{k_1})\) “southeast” of \((x_j,y_k)\). If \(\mathcal {P}\) contains two pairs \((j_2,k_1), (j_1,k_2)\) with \(j_1 < j_2\) and \(k_1 < k_2\), then it contains the whole set \(\{j_1,\ldots ,j_2\} \times \{k_1,\ldots ,k_2\}\). Figure 1 illustrates the definition of \(\mathcal {P}\). It also illustrates two alternative codings of \(\mathcal {P}\): An index pair (j, k) belongs to \(\mathcal {P}\) if and only if \(m_j \le k \le M_j\), where

Note that \(m_j \le M_j\) for all j, \(1 = m_1 \le \cdots \le m_\ell \), and \(M_1 \le \cdots \le M_\ell = m\). Analogously, a pair (j, k) belongs to \(\mathcal {P}\) if and only if \(\ell _k \le j \le L_k\), where

Here \(\ell _k \le L_k\) for all k, \(1 = \ell _1 \le \cdots \le \ell _M\), and \(L_1 \le \cdots \le L_m = \ell \).

Note that by definition, for any index pair (j, k),

3.2 Reparametrization and reformulation

If we replace a parameter \(\varvec{h} \in (0,\infty )^{\mathcal {P}}\) with its component-wise logarithm \(\varvec{\theta }\in {\mathbb {R}}^{\mathcal {P}}\), then property (5) is equivalent to

The set of all \(\varvec{\theta }\in {\mathbb {R}}^{\mathcal {P}}\) satisfying (8) is a closed convex cone and is denoted by \(\Theta \).

Now our goal is to minimize

over all \(\varvec{\theta }\in \Theta \).

Theorem 1

There exists a unique minimizer \({\widehat{\varvec{\theta }}}\) of \(f(\varvec{\theta })\) over all \(\varvec{\theta }\in \Theta \).

Uniqueness follows directly from f being strictly convex, but existence is less obvious, unless \(w_{jk} > 0\) for all (j, k). With \({\widehat{\varvec{\theta }}}\) at hand, the corresponding solution \(\widehat{\varvec{h}} \in [0,\infty )^{\ell \times m}\) of the original problem is given by

In the proof of Theorem 1 and from now on, we view \({\mathbb {R}}^{\mathcal {P}}\) as a Euclidean space with inner product \(\langle \varvec{x},\varvec{y}\rangle := \sum _{(j,k) \in \mathcal {P}} x_{jk}^{} y_{jk}^{}\) and the corresponding norm \(\Vert \varvec{x}\Vert := \langle \varvec{x},\varvec{x}\rangle ^{1/2}\). For a differentiable function \(f : {\mathbb {R}}^{\mathcal {P}} \rightarrow {\mathbb {R}}\), its gradient is defined as \(\nabla f(\varvec{x}) := \bigl ( \partial f(\varvec{x}) / \partial x_{jk}^{} \bigr )_{(j,k) \in \mathcal {P}}\).

Let us explain briefly why traditional optimization algorithms may become infeasible for large sample sizes n. Depending on the input data, the set \(\mathcal {P}\) may contain more than \(cn^2\) parameters, and the constraint (8) may involve at least \(cn^2\) linear inequalities, where \(c > 0\) is some generic constant. Even if we restrict our attention to parameters \(\varvec{\theta }\in \Theta \) such that a given subset of the inequalities in (8) are equalities, they span a linear space of dimension at least \(\max (\ell ,m)\), because all parameters \(\theta _{jm_j}\) and \(\theta _{\ell _kk}\) are unconstrained, and \(\max (\ell ,m)\) may be at least cn. Just determining a gradient and Hessian matrix of the target function f within this linear subspace would then require at least \(cn^4\) steps. Consequently, traditional minimization algorithms involving exact Newton steps may be computationally infeasible. Alternatively, we propose an iterative algorithm with quasi Newton steps each of which has running time \(O(n^2)\), and the required memory is of this order, too.

3.3 Finding a new proposal

Version 1. To determine whether a given parameter \(\varvec{\theta }\in {\mathbb {R}}^{\mathcal {P}}\) is already optimal and, if not, to obtain a better one, we reparametrize the problem a second time. Let \(\tilde{\varvec{\theta }}= T(\varvec{\theta }) \in {\mathbb {R}}^{\mathcal {P}}\) be given by

Then \(\varvec{\theta }= T^{-1}(\tilde{\varvec{\theta }}) = \bigl ( \sum _{k'=m_j}^k {\tilde{\theta }}_{jk'} \bigr )_{j,k}\), and \(f(\varvec{\theta })\) is equal to

More importantly, we may represent \(\mathcal {P}\) as

where the latter equation follows from (6) and (7). Now the constraints (8) read

Here \({\mathbb {R}}_\uparrow ^d := \{ \varvec{x} \in {\mathbb {R}}^d : x_1 \le \cdots \le x_d\}\). The set of \(\tilde{\varvec{\theta }}\in {\mathbb {R}}^{\mathcal {P}}\) satisfying (10) is denoted by \({\tilde{\Theta }}\).

For given \(\varvec{\theta }\) and \(\tilde{\varvec{\theta }}= T(\varvec{\theta })\), we approximate \({\tilde{f}}(\tilde{\varvec{x}})\) by the quadratic function

with

This quadratic function of \(\tilde{\varvec{x}}\) is easily minimized over \({\tilde{\Theta }}\) via the pool-adjacent-violators algorithm, applied to the subtuple \(({\tilde{x}}_{jk})_{j=\ell _k}^{L_{k-1}}\) for each \(k=2,\ldots ,m\) separately. Then we obtain the proposal

Interestingly, if \(\varvec{\theta }\) is row-wise calibrated in the sense that \(n \sum _{k=m_j}^{M_j} \exp (\theta _{jk}) = w_{j+}\) for \(1 \le j \le \ell \), then \({\tilde{\gamma }}_{jm_j}(\varvec{\theta }) = {\tilde{\theta }}_{jm_j}\) and thus \(\Psi ^{\textrm{row}}_{jm_j}(\varvec{\theta }) = \theta _{jm_j}\) for \(1 \le j \le \ell \).

Version 2. Instead of reparametrizing \(\varvec{\theta }\in \Theta \) in terms of its values \(\theta _{jm_j}\), \(1 \le j \le \ell \), and its increments within rows, one could reparametrize it in terms of its values \(\theta _{\ell _kk}\), \(1 \le k \le m\), and its increments within columns, leading to a proposal \(\Psi ^{\textrm{col}}(\varvec{\theta })\). Here, \(\Psi ^{\textrm{col}}_{\ell _kk}(\varvec{\theta }) = \theta _{\ell _kk}\) for \(1 \le k \le m\), provided that \(\varvec{\theta }\) is column-wise calibrated.

3.4 Calibration

In terms of the log-parametrization with \(\varvec{\theta }\in \Theta \), the row-wise calibration mentioned earlier for \(\varvec{h}\) means to replace \(\theta _{jk}\) with

Analogously, replacing \(\theta _{jk}\) with

leads to a column-wise calibrated parameter \(\varvec{\theta }\). Iterating these calibrations alternatingly, leads to a parameter which is (approximately) calibrated, row-wise as well as column-wise.

3.5 From new proposal to new parameter

Both functions \(\Psi = \Psi ^{\textrm{row}}, \Psi ^{\textrm{col}}\) have some useful properties summarized in the next lemma.

Lemma 2

The function \(\Psi \) is continuous on \(\Theta \) with \(\Psi ({\widehat{\varvec{\theta }}}) = {\widehat{\varvec{\theta }}}\). For \(\varvec{\theta }\in \Theta \setminus \{{\widehat{\varvec{\theta }}}\}\),

and

with continuous functions \(\beta _1, \beta _2 : \Theta \rightarrow (0,\infty )\).

In view of this lemma, we want to replace \(\varvec{\theta }\ne {\widehat{\varvec{\theta }}}\) with \((1 - t_*) \varvec{\theta }+ t_* \Psi (\varvec{\theta })\) for some suitable \(t_* = t_*(\varvec{\theta }) \in [0,1]\) such that \(f(\varvec{\theta })\) really decreases. More specifically, with

our goals are that for some constant \(\kappa \in (0,1]\),

and in case of \(\rho _{\varvec{\theta }}\) being (approximately) a quadratic function, \(t_*\) should be (approximately) equal to \(\mathop \mathrm{arg\,max}_{t \in [0,1]} \rho _{\varvec{\theta }}(t)\). For that, we proceed similarly as in Dümbgen et al. (2006). We determine \(t_o := 2^{-n_o}\) with \(n_o\) the smallest integer such that \(\rho _{\varvec{\theta }}(2^{-n_o}) \ge 0\). Then we define a Hermite interpolation of \(\rho _{\varvec{\theta }}\):

This new function is such that \({\tilde{\rho }}_{\varvec{\theta }}(t)=\rho _{\varvec{\theta }}(t)\) for \(t = 0, t_o\), and \({\tilde{\rho }}_{\varvec{\theta }}'(0) = \rho _{\varvec{\theta }}'(0) > 0\). Since \({\tilde{\rho }}_{\varvec{\theta }}'(t) = \rho _{\varvec{\theta }}'(0) - 2 t c_o\), the maximizer of \({\tilde{\rho }}_{\varvec{\theta }}\) over \([0,t_o]\) is given by

As shown in Lemma 1 of Dümbgen et al. (2006), this choice of \(t_*\) fulfils the requirements just stated, where \(\kappa = 1/4\).

3.6 Complete algorithms

A possible starting point for the algorithm is given by \(\varvec{\theta }^{(0)} := ( - \log (\#\mathcal {P}) )_{(j,k) \in \mathcal {P}}\), but any other parameter \(\varvec{\theta }^{(0)} \in \Theta \) would work, too. Suppose we have determined already \(\varvec{\theta }^{(0)}, \ldots , \varvec{\theta }^{(s)}\) such that \(f(\varvec{\theta }^{(0)}) \ge \cdots \ge f(\varvec{\theta }^{(s)})\). Let \(\Psi (\varvec{\theta }^{(s)})\) be a new proposal with \(\Psi = \Psi ^{\textrm{row}}\) or \(\Psi = \Psi ^{\textrm{col}}\), and let \(\varvec{\theta }^{(s+1)} = (1 - t_*^{(s)}) \varvec{\theta }^{(s)} + t_*^{(s)} \Psi (\varvec{\theta }^{(s)})\) with \(t_*^{(s)} = t_*(\varvec{\theta }^{(s)}) \in [0,1]\) as described before. No matter which proposal function \(\Psi \) we are using in each step, the resulting sequence \((\varvec{\theta }^{(s)})_{s\ge 0}\) will always converge to \({\widehat{\varvec{\theta }}}\).

Theorem 2

Let \((\varvec{\theta }^{(s)})_{s \ge 0}\) be the sequence just described. Then \(\lim _{s \rightarrow \infty } \varvec{\theta }^{(s)} = {\widehat{\varvec{\theta }}}\).

Our numerical experiments showed that a particularly efficient refinement is as follows: Before computing a new proposal \(\Psi (\varvec{\theta }^{(s)})\), one should calibrate \(\varvec{\theta }^{(s)}\) in the sense that it is row-wise and column-wise calibrated. If s is even, we compute \(\Psi ^{\textrm{row}}(\varvec{\theta }^{(s)})\) to determine the next candidate \(\varvec{\theta }^{(s+1)}\). If s is odd, we compute \(\Psi ^\textrm{col}(\varvec{\theta }^{(s)})\) to obtain \(\varvec{\theta }^{(s+1)}\). The algorithm stops as soon as \(\delta (\varvec{\theta }^{(s)}) = \bigl \langle \nabla f(\varvec{\theta }^{(s)}), \varvec{\theta }^{(s)} - \Psi (\varvec{\theta }^{(s)}) \bigr \rangle \) is smaller than a prescribed small threshold. Table 1 provides corresponding pseudo code.

4 Simulation study

In this section, we compare estimation and prediction performances of the likelihood ratio order constrained estimator presented in this article with the estimator under usual stochastic order obtained via isotonic distributional regression. The latter estimator was mentioned briefly in the introduction. It is extensively discussed in Henzi et al. (2021b) and Mösching and Dümbgen (2020).

4.1 A Gamma model

We choose a parametric family of distributions from which we draw observations. We will then use these data to provide distribution estimates which we then compare with the truth. The specific model we have in mind is a family \((Q_x)_{x\in {\mathfrak {X}}}\) of Gamma distributions with densities

with respect to Lebesgue measure on \((0,\infty )\), with some shape function \(a:{\mathfrak {X}}\rightarrow (0,\infty )\) and scale function \(b:{\mathfrak {X}}\rightarrow (0,\infty )\). Then \(Q_x\) is isotonic in \(x \in {\mathfrak {X}}\) with respect to likelihood ratio ordering if and only if both functions a and b are isotonic. Recall that since the family is increasing in likelihood ratio order, it is also increasing with respect to the usual stochastic order.

The specific shape and scale functions used for this study are

defined for \(x\in {\mathfrak {X}}:=[1,4]\). Figure 2 displays corresponding true conditional distribution functions for a selection of x’s.

4.2 Sampling method

Let \(\ell _o\in \{50,1000\}\) be a predefined number and let

For a given sample size \(n\in {\mathbb {N}}\), the sample \((X_1,Y_1),(X_2,Y_2),\ldots ,(X_n,Y_n)\) is obtained as follows: Draw \(X_1,X_2,\ldots ,X_n\) uniformly from \({\mathfrak {X}}_o\) and sample independently each \(Y_k\) from \(Q_{X_k}\). This yields unique covariates \(x_1<\cdots <x_\ell \) as well as unique responses \(y_1< \cdots < y_m\), for some \(1\le \ell ,m \le n\).

For each such sample, we compute estimates of \((Q_{x_j})_{j=1}^\ell \) under likelihood ratio order and usual stochastic order constraints. Using linear interpolation, we complete both families of estimates with covariates originally in \(\{x_j\}_{j=1}^\ell \) to families of estimates with covariates in the full set \({\mathfrak {X}}_o\), see Lemma 3. We therefore obtain estimates \(({\widehat{Q}}_{x})_{x\in {\mathfrak {X}}_o}\) and  under likelihood ratio order and usual stochastic order constraint, respectively. The corresponding families of cumulative distribution functions are written \(({\widehat{G}}_x)_{x\in {\mathfrak {X}}_o}\) and

under likelihood ratio order and usual stochastic order constraint, respectively. The corresponding families of cumulative distribution functions are written \(({\widehat{G}}_x)_{x\in {\mathfrak {X}}_o}\) and  , whereas the truth is denoted by \((G_x)_{x\in {\mathfrak {X}}_o}\). Although the performance of the empirical distribution is worse than those of the two order constrained estimators, it is still useful to study its behaviour, for instance to better understand boundary effects. The family of empirical cumulative distribution functions will be written \((\widehat{{\mathbb {G}}}_x)_{x\in {\mathfrak {X}}_o}\).

, whereas the truth is denoted by \((G_x)_{x\in {\mathfrak {X}}_o}\). Although the performance of the empirical distribution is worse than those of the two order constrained estimators, it is still useful to study its behaviour, for instance to better understand boundary effects. The family of empirical cumulative distribution functions will be written \((\widehat{{\mathbb {G}}}_x)_{x\in {\mathfrak {X}}_o}\).

4.3 Single sample

Selection of \(\beta \)-quantile curves. Specifically, a taut-string (Dümbgen and Kovac 2009) is computed between the lower \({\mathfrak {X}}\ni x\mapsto \min \{y\in {\mathbb {R}}: {\tilde{G}}_x(y)\ge \beta \}\) and upper \({\mathfrak {X}}\ni x\mapsto \inf \{y\in {\mathbb {R}}: {\tilde{G}}_x(y)> \beta \}\) quantile curves for each  (corresponding respectively to ‘Truth’, ‘LR’ and ‘ST’) and \(\beta \in \{0.1,0.25,0.5,0.75,0.9\}\)

(corresponding respectively to ‘Truth’, ‘LR’ and ‘ST’) and \(\beta \in \{0.1,0.25,0.5,0.75,0.9\}\)

Figure 2 provides a visual comparison of a selection of true conditional distribution functions with their corresponding estimates under order constraint for a single sample generated in the setting \(\ell _o=1000\) and \(n=1000\). It shows that the estimates under likelihood ratio order constraint are much smoother than those under usual stochastic order constraint. The former are in general also closer to the truth than the latter. This fact is in reality true on average, as demonstrated in the next paragraph. Smoothness and greater precision in estimation resulting from the likelihood ratio order is also apparent in Fig. 3, which displays a selection of quantile curves for each  .

.

Monte Carlo simulations to evaluate estimation performances with a simple score. First row: Simple scores with \({\tilde{G}}\) being either \({\widehat{G}}\) (solid line),  (dashed line) or \(\widehat{{\mathbb {G}}}\) (dotted line). Second row: Relative change of score when enforcing a likelihood ratio order constraint over the usual stochastic order constraint. The thicker line is the median variation, whereas the thin lines are the first and third quartiles. Negative values represent an improvement in score

(dashed line) or \(\widehat{{\mathbb {G}}}\) (dotted line). Second row: Relative change of score when enforcing a likelihood ratio order constraint over the usual stochastic order constraint. The thicker line is the median variation, whereas the thin lines are the first and third quartiles. Negative values represent an improvement in score

4.4 A simple score

To assess the ability of each estimator to retrieve the truth, we produce Monte-Carlo estimates of the median of the score

for each estimator  and for each \(x\in {\mathfrak {X}}_o\). The above score may be decomposed as a sum of simple expressions involving the evaluation of \({\tilde{G}}_x\) and \(G_x\) on the finite set of unique responses, see Sect. 3. We also compute Monte-Carlo quartiles of the relative change in score

and for each \(x\in {\mathfrak {X}}_o\). The above score may be decomposed as a sum of simple expressions involving the evaluation of \({\tilde{G}}_x\) and \(G_x\) on the finite set of unique responses, see Sect. 3. We also compute Monte-Carlo quartiles of the relative change in score

Monte Carlo simulations to evaluate prediction performances using a CRPS-type score. First row: CRPS scores with \({\tilde{G}}\) being either \({\widehat{G}}\) (solid line),  (dashed line) or \(\widehat{{\mathbb {G}}}\) (doted line). Second row: Relative change of score when enforcing a likelihood ratio order constraint over the usual stochastic order constraint

(dashed line) or \(\widehat{{\mathbb {G}}}\) (doted line). Second row: Relative change of score when enforcing a likelihood ratio order constraint over the usual stochastic order constraint

The results of the simulations are displayed in Fig. 4. A first observation is that the performance of all three estimators decreases towards the boundary points of \({\mathfrak {X}}\), and this effect is more pronounced for the two order constrained estimators. This is a known phenomenon from shape constrained inference. However, in the interior of \({\mathfrak {X}}\), taking the stochastic ordering into account pays off. The second row of plots in Fig. 4 shows the relative change in score when estimating the family of distributions with a likelihood ratio order constraint instead of the usual stochastic order constraint. It is observed that the improvement in score becomes larger and occurs on a wider sub-interval of \({\mathfrak {X}}\) as \(\ell _o\) and n increase. Only towards the boundary, the usual stochastic order seems to have better performance.

4.5 Theoretical predictive performances

Using the same Gamma model, we evaluate predictive performances of both estimators using the continuous ranked probability score

The CRPS is a sctrictly proper scoring rule which allows for comparisons of probabilistic forecasts, see Gneiting and Raftery (2007) and Jordan et al. (2019). It can be seen as an extension of the mean absolute error for probabilistic forecasts. The CRPS is therefore interpreted in the same unit of measurement as the true distribution or data.

Because the true underlying distribution is known in the present simulation setting, the expected CRPS score is given by

where \(y_0:=0\), \(y_{m+1}:=+\infty \) and \(B(\cdot ,\cdot )\) is the beta function. As shown in Sect. 3, the above sum of integrals may be rewritten as a sum of elementary expressions involving the evaluation of \({\tilde{G}}_{x}\) and \(G_{x}\) on the finite set of unique responses, as well as two simple integrals which are computed via numerical integration. Consequently, we compute Monte-Carlo estimates of the median of each score \(S_x({\tilde{G}}, G)\),  , as well as estimates of quartiles of the relative change in score when choosing \({\widehat{G}}\) over

, as well as estimates of quartiles of the relative change in score when choosing \({\widehat{G}}\) over  .

.

Figure 5 outlines the results of the simulations. Similar boundary effects as for the simple score are observed. On the interior of \({\mathfrak {X}}\), the usual stochastic order improves the naive empirical estimator, and the likelihood ratio order yields the best results. In terms of relative change in score, it appears that imposing a likelihood ratio order constraint to estimate the family of distributions yields an average score reduction of about \(0.5\%\) in comparison with the usual stochastic order estimator for a sample of \(n=50\). For \(n=1000\), this improvement occurs on a wider subinterval of \({\mathfrak {X}}\) and more frequently, as shown by the third quartile curve. Note further that the expected CRPS increases on the interior of \({\mathfrak {X}}\). This is due to the fact that the CRPS has the same unit of measurement as the response variable. Since the scale of the response characterized by b increases with x, then so does the corresponding score.

Monte Carlo simulations to evaluate prediction performances using an empirical CRPS score. First row: empirical CRPS scores with \({\tilde{G}}\) being either \({\widehat{G}}\) (solid line),  (dashed line, hardly distinguishable from solid line) or \(\widehat{{\mathbb {G}}}\) (dotted line). Second row: Relative change of score when enforcing a likelihood ratio order constraint over the usual stochastic order constraint

(dashed line, hardly distinguishable from solid line) or \(\widehat{{\mathbb {G}}}\) (dotted line). Second row: Relative change of score when enforcing a likelihood ratio order constraint over the usual stochastic order constraint

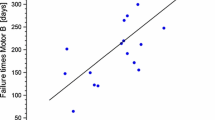

4.6 Empirical predictive performances

We use the weight for age dataset already studied in Mösching and Dümbgen (2020). It comprises the age and weight of \(n=16\,432\) girls whose age in years lies within \({\mathfrak {X}}:=[2,16]\). A subsample of these data of size \(2\,000\) is presented in Fig. 6, along with estimated quantile curves under likelihood ratio order using that subsample. The dataset was publicly released as part of the National Health and Nutrition Examination Survey conducted in the US between 1963 and 1991 (data available from www.cdc.gov) and was analyzed by Kuczmarski et al. (2002) with parametric models to produce smooth quantile curves.

Although the likelihood ratio order constraint is harder to justify than the very natural stochastic order constraint, we are interested in the effect of a stronger regularization imposed by the former constraint.

The forecast evaluation is performed using a leave-\(n_{\text {train}}\)-out cross-validation scheme. More precisely, we choose random subsets \(\mathcal {D}_{\text {train}}\) of \(n_{\text {train}}\) observations which we use to train our estimators. Using the rest of the \(n_{\text {test}}:=n-n_{\text {train}}\) data pairs in \(\mathcal {D}_{\text {test}}\), we evaluate predictive performance by computing the sample median of \({\widehat{S}}_x({\tilde{G}},\mathcal {D}_{\text {test}})\) for each estimator  and each \(x\in {\mathfrak {X}}_o\), where

and each \(x\in {\mathfrak {X}}_o\), where

Quartile estimates of the relative change in score are also computed.

Figure 7 shows the forecast evaluation results. As expected, the empirical CRPS increases with age, since the spread of the weight increases with age. As to the relative change in score, improvements of about \(0.5\%\) can be seen for both training sample sizes. The region of \({\mathfrak {X}}\) where the estimator under likelihood ratio order constraint shows better predictive performances is the widest for the largest training sample size. These results show the benefit of a stronger regularization.

References

Beare, B.K., Moon, J.-M.: Nonparametric tests of density ratio ordering. Economet. Theor. 31, 471–492 (2015)

Carolan, C.A., Tebbs, J.M.: Nonparametric tests for and against likelihood ratio ordering in the two-sample problem. Biometrika 92, 159–171 (2005)

Dardanoni, V., Forcina, A.: A unified approach to likelihood inference on stochastic orderings in a nonparametric context. J. Am. Stat. Assoc. 93, 1112–1123 (1998)

Dümbgen, L., Freitag-Wolf, S., Jongbloed, G.: Estimating a unimodal distribution from interval-censored data. J. Am. Stat. Assoc. 101, 1094–1106 (2006)

Dümbgen, L., Kovac, A.: Extensions of smoothing via taut strings. Electron. J. Stat. 3, 41–75 (2009)

Dümbgen, L., Mösching, A.: On stochastic orders and total positivity. ESAIM Probab. Stat. 27, 461–481 (2023)

Dümbgen, L., Mösching, A., Strähl, C.: Active set algorithms for estimating shape-constrained density ratios. Comput. Stat. Data Anal. 163(107300), 19 (2021)

Dykstra, R., Kochar, S., Robertson, T.: Inference for likelihood ratio ordering in the two-sample problem. J. Am. Stat. Assoc. 90, 1034–1040 (1995)

Gneiting, T., Raftery, A.E.: Strictly proper scoring rules, prediction, and estimation. J. Am. Stat. Assoc. 102, 359–378 (2007)

Henzi, A., Kleger, G.-R., Hilty, M.P., Wendel Garcia, P.D., Ziegel, J.F.: Strictly proper scoring rules, prediction, and estimation. PLoS ONE 16, e0247265 (2021)

Henzi, A., Ziegel, J.F., Gneiting, T.: Isotonic distributional regression. J. R. Stat. Soc. Ser. B Stat. Methodol. 83, 963–993 (2021)

Hu, D., Yuan, M., Yu, T., Li, P.: Statistical inference for the two-sample problem under likelihood ratio ordering, with application to the ROC curve estimation. Stat. Med. 42(20), 3649–3664 (2023)

Hütter, J.-C., Mao, C., Rigollet, P., Robeva, E.: Optimal rates for estimation of two-dimensional totally positive distributions. Electron. J. Stat. 14(2), 2600–2652 (2020)

Jewitt, I.: Applications of likelihood ratio orderings in economics. In: Stochastic Orders and Decision Under Risk (Hamburg, 1989), vol. 19 of IMS Lecture Notes Monogr. Ser. Inst. Math. Statist. Hayward, pp. 174–189 (1991)

Jongbloed, G.: The iterative convex minorant algorithm for nonparametric estimation. J. Comput. Graph. Stat. 7, 310–321 (1998)

Jordan, A., Krüger, F., Lerch, S.: Evaluating probabilistic forecasts with scoringrules. J. Stat. Softw. 90, 1–37 (2019)

Karlin, S.: Total Positivity, vol. I. Stanford University Press, Stanford (1968)

Kuczmarski, R.J., Ogden, C.L., Guo, S.S., Grummer-Strawn, L.M., Flegal, K.M., Mei, Z., Wei, R., Curtin, L.R., Roche, A.F., Johnson, C.L.: CDC growth charts for the united states: methods and development. Vital Health Stat. 246 (2002)

Mösching, A., Dümbgen, L.: Monotone least squares and isotonic quantiles. Electron. J. Stat. 14, 24–49 (2020)

Owen, A.B.: Empirical likelihood ratio confidence intervals for a single functional. Biometrika 75, 237–249 (1988)

Owen, A.B.: Empirical likelihood. No. 92 in Monographs on Statistics and Applied Probability. Chapman and Hall/CRC (2001)

Robertson, T., Wright, F.T., Dykstra, R.L.: Order Restricted Statistical Inference. Wiley Series in Probability and Mathematical Statistics: Probability and Mathematical Statistics. Wiley, Chichester (1988)

Shaked, M., Shanthikumar, J.G.: Stochastic Orders. Springer Series in Statistics, Springer, New York (2007)

Westling, T., Downes, K.J., Small, D.S.: Nonparametric maximum likelihood estimation under a likelihood ratio order. Stat. Sin.33 (2023) (in press)

Yu, T., Li, P., Qin, J.: Density estimation in the two-sample problem with likelihood ratio ordering. Biometrika 104, 141–152 (2017)

Acknowledgements

The authors are grateful to Johanna Ziegel, Alexander Jordan and Tilmann Gneiting for stimulating discussions and useful hints. We also thank a reviewer for constructive comments. This work was supported by Swiss National Science Foundation.

Funding

Open access funding provided by University of Bern. This work was supported by Swiss National Science Foundation (Grant No. 172549).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors have no competing interests to declare that are relevant to the content of this article.

Code availability

Our procedure is implemented in the R-package LRDistReg and is available from GitHub: https://github.com/AlexandreMoesching/LRDistReg. Its implementation includes C++ code which is then integrated in R using Rcpp.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Proofs and technical details

Proofs and technical details

1.1 Proofs for Sections 2 and 3

Lemma 3

Let \(Q_0\) and \(Q_1\) be probability distributions on \({\mathbb {R}}\) such that \(Q_0 \le _{\textrm{lr}}Q_1\). If we define \(Q_t := (1 - t) Q_0 + t Q_1\) for \(0< t < 1\), then \(Q_s \le _{\textrm{lr}}Q_t\) for \(0 \le s < t \le 1\).

Proof

By assumption, there exist densitites \(g_0\) of \(Q_0\) and \(g_1\) of \(Q_1\) with respect to some dominating measure \(\mu \) such that \(g_1/g_0\) is isotonic on \(\{g_0 + g_1 > 0\}\), and this is equivalent to the property that

Now, \(Q_t\) has density \(g_t := (1 - t) g_0 + t g_1\) with respect to \(\mu \), and elementary algebra reveals that for \(0 \le s < t \le 1\) and arbitrary \(x < y\),

is nonnegative, whence \(Q_s \le _{\textrm{lr}}Q_t\). \(\square \)

Proof of Lemma 1

Let \(\varvec{h} \in [0,\infty )\) satisfy (3) and \(L(\varvec{h}) > - \infty \).

As for part (a), it follows from \(L(\varvec{h}) > - \infty \) that \(h_{jk} > 0\) whenever \(w_{jk}> 0\). We have to show that for arbitrary index pairs \((j_1,k_2), (j_2,k_1)\) with \(j_1 \le j_2\), \(k_1 \le k_2\) and \(w_{j_1k_2}, w_{j_2k_1} > 0\), also \(h_{jk} > 0\) for all \(j \in \{j_1,\ldots ,j_2\}\) and \(k \in \{k_1,\ldots ,k_2\}\).

Since \(h_{j_1k_2}, h_{j_2k_1} > 0\), it follows from (3) that \(h_{j_1k_1}, h_{j_2k_2} > 0\), too. (If \(j_1 = j_2\) or \(k_1 = k_2\), this conclusion is trivial.) This type of argument will reappear several times, so we denote it by \(A(j_1,j_2,k_1,k_2)\).

Next we show that \(h_{jk_1}, h_{jk_2} > 0\) for \(j_1< j < j_2\). Indeed, there exists an index \(k_*\) such that \(w_{jk_*} > 0\), whence \(h_{jk_*} > 0\). If \(k_* \le k_2\), we may conclude from \(A(j_1,j,k_*,k_2)\) that \(h_{j,k_2} > 0\), and then it follows from \(A(j,j_2,k_1,k_2)\) that \(h_{jk_1} > 0\). Similarly, if \(k_* \ge k_1\), we may conclude from \(A(j,j_2,k_1,k_*)\) that \(h_{jk_1} > 0\), and then \(A(j_1,j,k_1,k_2)\) shows that \(h_{jk_2} > 0\).

Analogously, one can show that \(h_{j_1k}, h_{j_2k} > 0\) for \(k_1< k < k_2\).

Finally, if \(j_1< j < j_2\) and \(k_1< k < k_2\), then we may apply \(A(j_1,j,k_1,k)\) or \(A(j,j_2,k,k_2)\) to deduce that \(h_{jk} > 0\).

As to part (b), since \(\mathcal {P}\) contains all pairs (j, k) with \(w_{jk} > 0\), we know that \(L_{\textrm{raw}}(\tilde{\varvec{h}}) = L_\textrm{raw}(\varvec{h})\), and \(n - n {\tilde{h}}_{++} \ge n - n h_{++}\) with equality if and only if \(\tilde{\varvec{h}} = \varvec{h}\). This proves the assertions about \(L(\tilde{\varvec{h}})\) and \(L(\varvec{h})\). That \(\tilde{\varvec{h}}\) inherits property (3) from \(\varvec{h}\) can be deduced from the fact that for indices \(j_1 < j_2\) and \(k_1 < k_2\), it follows from \({\tilde{h}}_{j_1k_2} {\tilde{h}}_{j_2k_1} > 0\), that \((j_1,k_2), (j_2,k_1) \in \mathcal {P}\), so \((j_1,k_1), (j_2,k_2) \in \mathcal {P}\) as well, and \({\tilde{h}}_{j_1k_1} {\tilde{h}}_{j_2k_2} - {\tilde{h}}_{j_1k_2} {\tilde{h}}_{j_2k_1}\) is identical to \(h_{j_1k_1} h_{j_2k_2} - h_{j_1k_2} h_{j_2k_1} \ge 0\).

Concerning part (c), we have to show that (5) implies (3). To this end, let \((j_1,k_2), (j_2,k_1) \in \mathcal {P}\) with \(j_1 < j_2\) and \(k_1 < k_2\). Since \(\{j_1,\ldots ,j_2\} \times \{k_1,\ldots ,k_2\} \subset \mathcal {P}\), one can write

for \(j_1 < j \le j_2\), and

so (3) is satisfied as well. \(\square \)

Proof of Theorem 1

Since f is strictly convex and \(\Theta \) is convex, f has at most one minimizer in \(\Theta \). To prove existence of a minimizer, it suffices to show that

Suppose that (11) is false. Then there exists a sequence \((\varvec{\theta }^{(s)})_s\) in \(\Theta \) such that \(\Vert \varvec{\theta }\Vert \rightarrow \infty \) but \(\bigl ( f(\varvec{\theta }^{(s)}) \bigr )_s\) is bounded. With \(r_s := \Vert \varvec{\theta }^{(s)}\Vert \) and \(\varvec{u}^{(s)} := r_s^{-1} \varvec{\theta }^{(s)}\), we may assume without loss of generality that \(\varvec{u}^{(s)} \rightarrow \varvec{u}\) as \(s \rightarrow \infty \) for some \(\varvec{u}\in \Theta \) with \(\Vert \varvec{u}\Vert = 1\). For any fixed \(t > 0\) and sufficiently large s, convexity and differentiablity of f imply that

Since \(\lim _{s \rightarrow \infty } f(t \varvec{u}^{(s)}) = f(t \varvec{u})\) and \(\lim _{s \rightarrow \infty } \partial f(t \varvec{u}^{(s)}) / \partial t = \partial f(t \varvec{u}) / \partial t\), we conclude that

But as \(t \rightarrow \infty \), the directional derivative \(\partial f(t \varvec{u}) / \partial t = \sum _{(j,k) \in \mathcal {P}} \bigl ( - w_{jk} u_{jk} + u_{jk} \exp (t u_{jk}) \bigr )\) converges to

Consequently, the limiting direction \(\varvec{u}\) lies in \(\Theta \cap (-\infty ,0]^{\mathcal {P}}\) and satisfies \(u_{jk} = 0\) whenever \(w_{jk} > 0\). But as shown below, this implies that \(\varvec{u}= \varvec{0}\), a contradiction to \(\Vert \varvec{u}\Vert = 1\).

The proof of \(\varvec{u} = \varvec{0}\) is very similar to the proof of Lemma 1. If \(j_1 \le j_2\) and \(k_1 \le k_2\) are indices such that \(u_{j_1k_2} = u_{j_2k_1} = 0\), then it follows from \(\varvec{u}\in (-\infty ,0]^{\mathcal {P}}\) and (8) that \(u_{j_1k_1} + u_{j_2k_2} \ge 0\), whence \(u_{j_1k_1} = u_{j_2k_2} = 0\). Repeating this argument as in the proof of Lemma 1, one can show that for arbitrary \((j_1,k_2), (j_2,k_1) \in \mathcal {P}\) with \(j_1 \le j_2\), \(k_1 \le k_2\), and \(w_{j_1k_2}, w_{j_2,k_1} > 0\), we have \(u_{jk} = 0\) for \(j_1 \le j \le j_2\) and \(k_1 \le k \le k_2\). By definition of \(\mathcal {P}\), this means that \(\varvec{u}= \varvec{0}\). \(\square \)

Proof of Lemma 2

With the linear bijection \(T : {\mathbb {R}}^{\mathcal {P}} \rightarrow {\mathbb {R}}^{\mathcal {P}}\) and \({\tilde{\Theta }} = T(\Theta )\), \(\tilde{\varvec{\theta }}= T(\varvec{\theta })\), \({\tilde{f}} = f \circ T^{-1}\), one can show that for arbitrary \(\varvec{x}\in {\mathbb {R}}^{\mathcal {P}}\) and \(\tilde{\varvec{x}}= T(\varvec{x})\),

so

with

and \({\tilde{v}}_{jk}(\varvec{\theta }) := \partial ^2 {\tilde{f}}(\tilde{\varvec{\theta }})/\partial {\tilde{\theta }}_{jk}^2\). It follows from parts (i) and (ii) of Lemma 4 in Sect. 2 that \(\Psi \) is continuous on \({\mathbb {R}}^{\mathcal {P}}\), and that \(\delta (\varvec{\theta }) = \bigl \langle \nabla f(\varvec{\theta }), \varvec{\theta }- \Psi (\varvec{\theta }) \bigr \rangle > 0\) for \(\varvec{\theta }\in \Theta \setminus \{{\widehat{\varvec{\theta }}}\}\). Moreover,

But

so

with \(\beta _1(\varvec{\theta })\) being the square root of \(6 \max _{(j,k) \in \mathcal {P}} \, {\tilde{v}}_{jk}(\varvec{\theta })\). In case of \(\Psi = \Psi ^{\textrm{row}}\) and \(\varvec{\theta }\) being row-wise calibrated, \(\beta _1(\varvec{\theta })^2\) is no larger than \(6 \max _{1 \le j \le \ell } w_{j+}\), and in case of \(\Psi = \Psi ^{\textrm{col}}\) and \(\varvec{\theta }\) being column-wise calibrated, \(\beta _1(\varvec{\theta })^2 \le 6 \max _{1 \le k \le m} w_{+k}\).

Concerning the lower bound for the maximum of \(f(\varvec{\theta }) - f \bigl ( (1 - t)\varvec{\theta }+ t \Psi (\varvec{\theta }) \bigr )\) over all \(t \in [0,1]\), note that for arbitrary \(\varvec{\theta }', \varvec{\theta }'' \in {\mathbb {R}}^{\mathcal {P}}\),

Thus part (iii) of Lemma 4 yieds the asserted lower bound with

\(\square \)

Proof of Theorem 2

It follows from Lemma 2 and the construction of the sequence \((\varvec{\theta }^{(s)})_{s \ge 0}\) that

for all \(s \ge 0\) with some continuous function \(\beta : \Theta \rightarrow [0,\infty )\) such that \(\beta > 0\) on \(\Theta \setminus \{{\widehat{\varvec{\theta }}}\}\). Note that \(f(\varvec{\theta }^{(s)})\) is antitonic in \(s \ge 0\), so the sequence \((\varvec{\theta }^{(s)})_{s \ge 0}\) stays in the compact set \(R_0 := \bigl \{ \varvec{\theta }\in \Theta : f(\varvec{\theta }) \le f(\varvec{\theta }^{(0)}) \bigr \}\). For each \(\varvec{\theta }\in R_0 \setminus \{{\widehat{\varvec{\theta }}}\}\), there exists a \(\delta _{\varvec{\theta }} > 0\) such that the open ball \(U(\varvec{\theta },\delta _{\varvec{\theta }})\) with center \(\varvec{\theta }\) and radius \(\delta _{\varvec{\theta }}\) satisfies

In particular, if \(\varvec{\theta }^{(s)} \in U(\varvec{\theta },\delta _{\varvec{\theta }})\) for some \(s \ge 0\), then \(f(\varvec{\theta }^{(s+1)}) < f(\varvec{\theta }) - \beta (\varvec{\theta })/3\). Consequently, \(\varvec{\theta }^{(s)} \in U(\varvec{\theta },\delta _{\varvec{\theta }})\) for at most one index \(s \ge 0\). But for each \(\epsilon > 0\), the compact set \(\bigl \{ \varvec{\theta }\in R_0 : \Vert \varvec{\theta }- {\widehat{\varvec{\theta }}}\Vert \ge \epsilon \bigr \}\) can be covered by finitely many of these balls \(U(\varvec{\theta },\delta _{\varvec{\theta }})\). Hence, \(\Vert \varvec{\theta }^{(s)} - {\widehat{\varvec{\theta }}}\Vert \ge \epsilon \) for at most finitely many indices \(s \ge 0\). \(\square \)

1.2 Minimizing convex functions via quadratic approximations

Let \(f : {\mathbb {R}}^d \rightarrow {\mathbb {R}}\) be a strictly convex and differentiable function, and let \(\Theta \subset {\mathbb {R}}^d\) be a closed, convex set such that a minimizer

exists. For \(\varvec{\theta }_o \in \Theta \) and some nonsingular matrix \(\varvec{A}\in {\mathbb {R}}^{d\times d}\) consider the quadratic approximation

of \(f(\varvec{x})\). By construction, \(f_o(\varvec{\theta }_o) = f(\varvec{\theta }_o)\) and \(\nabla f_o(\varvec{\theta }_o) = \nabla f(\varvec{\theta }_o)\), and there exists a unique minimizer

The next lemma clarifies some connections between \(\varvec{\theta }_*\) and \({\widehat{\varvec{\theta }}}\) in terms of the directional derivative

Lemma 4

(i) The point \(\varvec{\theta }_*\) equals \(\varvec{\theta }_o\) if and only if \(\varvec{\theta }_o = {\widehat{\varvec{\theta }}}\). Furthermore,

and

(ii) If f is continuously differentiable, the minimizer \(\varvec{\theta }_*\) is a continuous function of \(\varvec{\theta }_o \in \Theta \) and \(\varvec{A}\).

(iii) If f is even twice differentiable such that for some constant \(c_o > 0\) and any \(t \in [0,1]\),

then in case of \(\varvec{\theta }_o \ne {\widehat{\varvec{\theta }}}\),

Proof

By strict convexity of f, \(\varvec{\theta }_o = {\widehat{\varvec{\theta }}}\) if and only if

But since \(f_o\) is strictly convex, too, with \(\nabla f_o(\varvec{\theta }_o) = \nabla f(\varvec{\theta }_o)\), the latter displayed condition is also equivalent to \(\varvec{\theta }_o = \varvec{\theta }_*\).

Since the asserted inequalities are trivial in case of \(\varvec{\theta }_o = {\widehat{\varvec{\theta }}} = \varvec{\theta }_*\), let us assume in the sequel that \(\varvec{\theta }_* \ne \varvec{\theta }_o \ne {\widehat{\varvec{\theta }}}\). By convexity of f and \(f_o\),

and

On the other hand, since \(\varvec{\theta }_*\) minimizes \(f_o\) over \(\Theta \),

is nonnegative, so

Moreover, with \({\widehat{\delta }} := \nabla f(\varvec{\theta }_o)^\top (\varvec{\theta }_o - {\widehat{\varvec{\theta }}})\) and \({\widehat{\gamma }} := \Vert \varvec{A}\varvec{\theta }_o - \varvec{A}{\widehat{\varvec{\theta }}}\Vert ^2\),

where \(t_o := \min \bigl ( 1, {\widehat{\delta }}/{\widehat{\gamma }} \bigr )\). In case of \({\widehat{\delta }} \ge {\widehat{\gamma }}\), we may conclude that \(2\delta _o \ge 2{\widehat{\delta }} - {\widehat{\gamma }} \ge {\widehat{\delta }}\), so \({\widehat{\delta }} \le 2 \delta _o\), and otherwise, \(2 \delta _o \ge {\widehat{\delta }}^2 / {\widehat{\gamma }}\), whence \({\widehat{\delta }} \le \sqrt{2 \delta _o {\widehat{\gamma }}}\). This proves part (i).

As to part (ii), let \((\varvec{\theta }_o^{(s)})_{s \ge 1}\) be a sequence in \(\Theta \) with limit \(\varvec{\theta }_o\), and let \((\varvec{A}^{(s)})_{s \ge 1}\) be a sequence of nonsingular matrices in \({\mathbb {R}}^{d\times d}\) converging to a nonsingular matrix \(\varvec{A}\). Definining \(f_o^{(s)}\) as \(f_o\) with \((\varvec{\theta }_o^{(s)}, \varvec{A}^{(s)})\) in place of \((\varvec{\theta },\varvec{A})\), we know that \(f_o^{(s)} \rightarrow f_o\) as \(s \rightarrow \infty \) uniformly on any bounded subset of \({\mathbb {R}}^d\). Consequently, for any fixed \(\epsilon > 0\) and \(R_\epsilon := \bigl \{ \varvec{\theta }\in \Theta : \Vert \varvec{\theta }- \varvec{\theta }_*\Vert = \epsilon \bigr \}\),

as \(s \rightarrow \infty \). But as soon as \(\gamma _\epsilon ^{(s)} > 0\), it follows from convexity of \(\Theta \) and \(f^{(s)}\) that the minimizer \(\varvec{\theta }_*^{(s)}\) of \(f_o^{(s)}\) satisfies \(\Vert \varvec{\theta }_*^{(s)} - \varvec{\theta }_*\Vert < \epsilon \).

Part (iii) follows from

where \(t_o := \min \bigl ( 1, \delta _o / (c_o \Vert \varvec{\theta }_o - \varvec{\theta }_*\Vert ^2) \bigr )\). \(\square \)

1.3 Technical details for Section 4

For fixed \(\ell _o,n\in {\mathbb {N}}\), let \(({\widehat{G}}_x)_{x\in {\mathfrak {X}}_o}\),  and \((\widehat{{\mathbb {G}}}_x)_{x\in {\mathfrak {X}}_o}\) be estimates of \((G_x)_{x\in {\mathfrak {X}}_o}\) from a sample \(\{(X_i,Y_i)\}_{i=1}^n\) as described in Sect. 4.2. Then, for all

and \((\widehat{{\mathbb {G}}}_x)_{x\in {\mathfrak {X}}_o}\) be estimates of \((G_x)_{x\in {\mathfrak {X}}_o}\) from a sample \(\{(X_i,Y_i)\}_{i=1}^n\) as described in Sect. 4.2. Then, for all  and \(x\in {\mathfrak {X}}_o\), the estimate \({\tilde{G}}_{x}\) is a step function with jumps in the set \(\{y_1,\ldots ,y_m\}\) of unique observations. For convenience, we further denote \(y_0:=0\), \(y_{m+1}:=\infty \), and define

and \(x\in {\mathfrak {X}}_o\), the estimate \({\tilde{G}}_{x}\) is a step function with jumps in the set \(\{y_1,\ldots ,y_m\}\) of unique observations. For convenience, we further denote \(y_0:=0\), \(y_{m+1}:=\infty \), and define

for all \(1\le j \le \ell _o\) and  .

.

For the remainder of this section, we fix \(1\le j \le \ell _o\) and  . Observe that \(R_{x_j}({\tilde{G}}, G)\) is the sum of the terms

. Observe that \(R_{x_j}({\tilde{G}}, G)\) is the sum of the terms

defined for \(0 \le k \le m\), where \(g_{x_j}\) is the density of \(Q_{x_j}\) with respect to Lebesgue measure. But since

we find that

for \(1\le k < m\), where \(\rho (z_1, z_2) \ := \ z_1z_2 - z_2^2/2\).

Similarly, the computation of the CRPS involves the sum of the following integrals

defined for \(0 \le k \le m\). But integration by parts yields

where \(c_j := b(x_j)\Gamma (a(x_j)+1)/\Gamma (a(x_j))\) and \({\bar{G}}_{x_j}\) denotes the cumulative distribution function of a Gamma distribution with shape \(a(x_j)+1\) and scale \(b(x_j)\). In consequence, if we define \({\bar{G}}_{jk}:= {\bar{G}}_{x_j}(y_k)\) and

for \(1 \le k < m\), we obtain

where the above two integrals are computed numerically.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Mösching, A., Dümbgen, L. Estimation of a likelihood ratio ordered family of distributions. Stat Comput 34, 58 (2024). https://doi.org/10.1007/s11222-023-10370-9

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s11222-023-10370-9

Keywords

- Empirical likelihood

- Likelihood ratio order

- Order constraint

- Quasi–Newton method

- Stochastic order

- Total positivity

are displayed from left to right for

are displayed from left to right for