Abstract

Maximum likelihood estimation in logistic regression with mixed effects is known to often result in estimates on the boundary of the parameter space. Such estimates, which include infinite values for fixed effects and singular or infinite variance components, can cause havoc to numerical estimation procedures and inference. We introduce an appropriately scaled additive penalty to the log-likelihood function, or an approximation thereof, which penalizes the fixed effects by the Jeffreys’ invariant prior for the model with no random effects and the variance components by a composition of negative Huber loss functions. The resulting maximum penalized likelihood estimates are shown to lie in the interior of the parameter space. Appropriate scaling of the penalty guarantees that the penalization is soft enough to preserve the optimal asymptotic properties expected by the maximum likelihood estimator, namely consistency, asymptotic normality, and Cramér-Rao efficiency. Our choice of penalties and scaling factor preserves equivariance of the fixed effects estimates under linear transformation of the model parameters, such as contrasts. Maximum softly-penalized likelihood is compared to competing approaches on two real-data examples, and through comprehensive simulation studies that illustrate its superior finite sample performance.

1 Introduction

Generalized linear mixed models (GLMMs; McCulloch et al. 2008, Chapter 7) are a potent class of statistical models that can associate Gaussian and non-Gaussian responses, such as counts, proportions, positive responses, and so on, with covariates, while accounting for complex multivariate dependencies. This is achieved by linking the expectation of a response to a linear combination of covariates and parameters (fixed effects), and sources of extra variation (random effects) with known distributions. Among GLMMs, mixed effects logistic regression is arguably the most frequently used model to analyse binary response outcomes. Although these models find application in numerous fields such as biology, ecology and the social sciences (Bolker et al. 2009), estimation of GLMMs is not straightforward in practice because their likelihood is generally an intractable integral.

Maximum likelihood (ML) methods for GLMMs maximize the GLMM likelihood or an approximation thereof, which can in principle be made arbitrarily accurate (see, for example, Raudenbush et al. 2000; Pinheiro and Chao 2006). Such methods are pervasive in contemporary GLMM practice because the resulting estimators are consistent under general model regularity conditions, and the resulting estimates and the (approximate) likelihood can be used for likelihood-based inferential devices, such as likelihood-ratio or Wald tests, and model selection procedures based on information criteria. An alternative approach is to use Bayesian posterior update procedures (see, for example, Zhao et al. 2006; Browne and Draper 2006). However, such procedures come with various technical difficulties, such as determining the scaling of the covariates, selecting appropriate priors, devising efficient posterior sampling algorithms, and determining burn-in times of chains for reliable estimation. Yet another alternative is the class of maximum penalized quasi-likelihood methods (Schall 1991; Wolfinger and O’Connell 1993; Breslow and Clayton 1993), which essentially fit a linear mixed model to transformed pseudo-responses. However, the penalized quasi-likelihood may not yield an accurate approximation of the GLMM likelihood. As a result, these estimators can have large bias when the random effects variances are large (Bolker et al. 2009; Rodriguez and Goldman 1995) and are not necessarily consistent (Jiang 2017, Chapter 3.1).

Despite the pervasiveness of ML methods in the statistical practice for GLMMs, certain data configurations can place the estimate of the variance-covariance matrix of the random effects distribution on the boundary of the parameter space, with infinite or zero estimated variances or, more generally, a singular estimate of the variance-covariance matrix. In addition, as is the case in ML estimation for Bernoulli-response generalized linear models (GLMs; see, for example, McCullagh and Nelder 1989, Chapter 4), the ML estimates of Bernoulli-response GLMMs, such as the mixed effects logistic regression model, can be infinite. As is well-acknowledged in the GLMM literature (see, for example, Bolker et al. 2009; Bolker 2015; Pasch et al. 2013), both instances of estimates on the boundary of the parameter space can cause havoc to numerical optimization procedures, and if such estimates go undetected, they may substantially impact first-order inferential procedures, like Wald tests, resulting in spuriously strong or weak conclusions; see Chung et al. (2013, Section 2.1) for an excellent discussion. In contrast to the numerous approaches to detect (see, for example, Kosmidis and Schumacher 2021 for the detectseparation R (R Core Team 2022) package that implements the methods in Konis 2007) and handle (see, for example, Kosmidis and Firth 2021; Heinze and Schemper 2002; Gelman et al. 2008) infinite estimates in logistic regression with fixed effects, little methodology or guidance is available on how to detect or deal with degenerate estimates in logistic regression with mixed effects. For this reason, it is practically desirable to have access to methods that are guaranteed to return estimates away from the boundary of the parameter space, while preserving the key properties of the maximum likelihood estimator.

We introduce a maximum softly-penalized (approximate) likelihood (MSPL) procedure for mixed effects logistic regression that returns estimators that are guaranteed to take values in the interior of the parameter space, and are also consistent, asymptotically normally distributed, and Cramér-Rao efficient under assumptions that are typically employed for establishing consistency, and asymptotic normality of ML estimators. The composite penalty we propose consists of appropriately scaled versions of Jeffreys’ invariant prior for the model with no random effects to ensure the finiteness of the fixed effects estimates, and of compositions of the negative Huber loss functions to prevent variance components estimates on the boundary of the parameter space. We show that the MSPL estimates are guaranteed to be in the interior of the parameter space, and scale the penalty appropriately to guarantee that (i) penalization is soft enough for the MSPL estimator to have the same optimal asymptotic properties expected by the ML estimator and (ii) that the fixed effects estimates are equivariant under linear transformations of the model parameters, such as contrasts, in the sense that the MSPL estimates of linear transformations of the fixed effects parameters are the linear transformations of the MSPL estimates. Other prominent penalization procedures, for which open-source software implementations exist (for example, the bglmer routine of the blme R package; see Chung et al. 2013, 2015 for details) do not necessarily have these properties. Maximum softly-penalized likelihood is compared to prominent competing approaches through two real-data examples and comprehensive simulation studies that illustrate its superior finite-sample performance. 
Although the developments here are for logistic regression with mixed effects, they provide a blueprint for the construction of penalties and estimators of the fixed effects and/or the variance components with values in the interior of the parameter space for any GLMM and, more generally, for M-estimation settings where boundary estimates occur.

The remainder of the paper is organized as follows. Section 2 defines the mixed effects logistic regression model and Sect. 3 gives a motivating real-data example of degenerate ML estimates in mixed effects logistic regression. Section 4 introduces the proposed composite penalty, which gives non-boundary MSPL estimates (Sect. 5), is equivariant under linear transformations of fixed effects (Sect. 6) and achieves ML asymptotics (Sect. 7). Section 8 demonstrates the performance of MSPL on another real-data example for mixed effects logistic regression with a bivariate random effects structure and presents the results of a simulation study based on that data set, and Sect. 9 provides concluding remarks. Proofs are provided in the Appendix to this paper. Further material related to the examples and the simulations is given in the accompanying Supplementary Material document, along with additional simulation studies that illustrate the performance of MSPL relative to alternative methods in artificial mixed effects logistic regression settings with extreme fixed effects and variance components.

2 Mixed effects logistic regression

Suppose that response vectors \(\varvec{y}_1, \ldots , \varvec{y}_k\) are observed, where \(\varvec{y}_i = (y_{i1}, \ldots , y_{in_i})^\top \in \{0, 1\}^{n_i}\), possibly along with covariate matrices \(\varvec{V}_1, \ldots , \varvec{V}_k\), respectively, where \(\varvec{V}_i\) is a \(n_i \times s\) matrix. A logistic regression model with mixed effects assumes that \(\varvec{y}_1, \ldots , \varvec{y}_k\) are realizations of independent random vectors \(\varvec{Y}_1, \ldots , \varvec{Y}_k\), where the entries \(Y_{i1}, \ldots , Y_{in_i}\) are independent Bernoulli random variables conditionally on a vector of random effects \(\varvec{u}_i\) \((i = 1, \ldots , k)\). The vectors \(\varvec{u}_1, \ldots , \varvec{u}_k\) are assumed to be independent draws from a multivariate normal distribution. The conditional mean of each Bernoulli random variable \(Y_{ij}\) is associated with a linear predictor \(\eta _{ij}\), which is a linear combination of covariates with fixed and random effects, through the logit link function. Specifically,

\[
Y_{ij} \mid \varvec{u}_i \sim \textrm{Bernoulli}(\mu _{ij}), \quad \log \frac{\mu _{ij}}{1 - \mu _{ij}} = \eta _{ij} = \varvec{x}_{ij}^\top \varvec{\beta }+ \varvec{z}_{ij}^\top \varvec{u}_i, \quad \varvec{u}_i \sim \textrm{N}(\varvec{0}_q, \varvec{\Sigma }) \quad (i = 1, \ldots , k;\ j = 1, \ldots , n_i), \tag{1}
\]

where \(\mu _{ij} = P(Y_{ij} = 1 \mid \varvec{u}_i, \varvec{x}_{ij}, \varvec{z}_{ij})\). The vector \(\varvec{x}_{ij}\) is the jth row of the \(n_i \times p\) model matrix \(\varvec{X}_{i}\) associated with the p-vector of fixed effects \(\varvec{\beta }\in \Re ^p\), and \(\varvec{z}_{ij}\) is the jth row of the \(n_i \times q\) model matrix \(\varvec{Z}_{i}\) associated with the q-vector of random effects \(\varvec{u}_i\). The model matrices \(\varvec{X}_i\) and \(\varvec{Z}_i\) are formed from subsets of columns of \(\varvec{V}_i\), and the matrices \(\varvec{X}\) and \(\varvec{V}\) with row blocks \(\varvec{X}_1, \ldots , \varvec{X}_k\) and \(\varvec{V}_1, \ldots , \varvec{V}_k\) are assumed to be full rank. The variance-covariance matrix \(\varvec{\Sigma }\) collects the variance components and is assumed to be symmetric and positive definite. The marginal likelihood about \(\varvec{\beta }\) and \(\varvec{\Sigma }\) for model (1) is proportional to

\[
\det (\varvec{\Sigma })^{-k/2} \prod _{i = 1}^{k} \int _{\Re ^q} \prod _{j = 1}^{n_i} \mu _{ij}^{y_{ij}} (1 - \mu _{ij})^{1 - y_{ij}} \exp \{ b(\varvec{u}_i, \varvec{\Sigma }) \} \, d\varvec{u}_i, \tag{2}
\]

where \(b(\varvec{u}_i, \varvec{\Sigma }) = -\frac{\varvec{u}_i^\top \varvec{\Sigma }^{-1}\varvec{u}_i}{2}\).

Formally, the ML estimator is the maximizer of (2) with respect to \(\varvec{\beta }\) and \(\varvec{\Sigma }\). However, (2) involves intractable integrals, which are typically approximated before maximization. For example, the popular glmer routine of the R package lme4 (Bates et al. 2015) uses adaptive Gauss-Hermite quadrature for one-dimensional random effects and Laplace approximation for higher-dimensional random effects. A detailed account of those approximation methods can be found in Pinheiro and Bates (1995); see also Liu and Pierce (1994) for adaptive Gauss-Hermite quadrature rules.
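
To illustrate how the integrals in the marginal likelihood can be approximated, the following minimal Python sketch applies a non-adaptive 5-point Gauss-Hermite rule to a single cluster of a random-intercept logistic regression. The function name `cluster_marginal_likelihood`, the hard-coded 5-point nodes and weights, and the toy data are assumptions made for illustration only; glmer uses an adaptive rule, which is not reproduced here.

```python
import math

# 5-point Gauss-Hermite nodes and weights for the weight function exp(-t^2)
# (standard tabulated values, hard-coded here for illustration)
GH_NODES = [-2.0201828705, -0.9585724646, 0.0, 0.9585724646, 2.0201828705]
GH_WEIGHTS = [0.0199532421, 0.3936193232, 0.9453087205, 0.3936193232, 0.0199532421]


def expit(eta):
    """Inverse logit, written to avoid overflow for large |eta|."""
    if eta >= 0:
        return 1.0 / (1.0 + math.exp(-eta))
    e = math.exp(eta)
    return e / (1.0 + e)


def cluster_marginal_likelihood(y, fixed_part, sigma):
    """Approximate the integral, over u ~ N(0, sigma^2), of
    prod_j mu_j^y_j (1 - mu_j)^(1 - y_j) with logit(mu_j) = fixed_part[j] + u.

    The substitution u = sqrt(2) * sigma * t turns the normal density into
    exp(-t^2) / sqrt(pi), which matches the Gauss-Hermite weight function.
    """
    total = 0.0
    for t, w in zip(GH_NODES, GH_WEIGHTS):
        u = math.sqrt(2.0) * sigma * t
        conditional = 1.0
        for y_j, f_j in zip(y, fixed_part):
            mu = expit(f_j + u)
            conditional *= mu if y_j == 1 else 1.0 - mu
        total += w * conditional
    return total / math.sqrt(math.pi)


# Toy cluster: two Bernoulli responses with fixed-effects parts 0.5 and -0.5
lik = cluster_marginal_likelihood([1, 0], [0.5, -0.5], sigma=1.0)
```

Multiplying such cluster contributions over all clusters gives an approximation to the full marginal likelihood. More quadrature points, or an adaptive rule centred at the conditional mode of each random effect, would typically be needed for an accurate approximation.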

3 Motivating example

This section provides a real-data working example that motivates the developments in this paper. The data are a reduced version of those in McKeon et al. (2012), as provided in the worked examples of Bolker (2015).

The data, which are given in Table 1, come from a randomized complete block design involving coral-eating sea stars Culcita novaeguineae (hereafter Culcita) attacking coral that harbor differing combinations of protective symbionts. There are four treatments, namely no symbionts, crabs only, shrimp only, both crabs and shrimp, and ten temporal blocks with two replications per block and treatment, which gives a total of 80 observations on whether predation was present (recorded as one) or not (recorded as zero). By mere inspection of the responses in Table 1, we note that predation becomes more prevalent with increasing block number and that predation gets suppressed when either crabs or shrimp are present, and more so when both symbionts are present. The only observation that deviates from this general trend is the observation in block 10 with no predation and no symbionts.

A logistic regression model with one random intercept per block can be used here to associate predation with treatment effects while accounting for heterogeneity between blocks, i.e.

\[
Y_{ij} \mid u_i \sim \textrm{Bernoulli}(\mu _{ij}), \quad \log \frac{\mu _{ij}}{1 - \mu _{ij}} = \eta _{ij} = \beta _0 + u_i + \beta _{a(j)}, \quad u_i \sim \textrm{N}(0, \sigma ^2) \quad (i = 1, \ldots , 10;\ j = 1, \ldots , 8), \tag{3}
\]

where \(a(j) = \lceil j/2 \rceil \) is the ceiling of j/2. In the above expressions, \((Y_{i1}, Y_{i2})^\top \), \((Y_{i3}, Y_{i4})^\top \), \((Y_{i5}, Y_{i6})^\top \), \((Y_{i7}, Y_{i8})^\top \) correspond to the two responses for each of “none”, “crabs”, “shrimp”, and “both”, respectively. We set \(\beta _1 = 0\) for identifiability purposes, effectively using “none” as a reference category. The logarithm of the likelihood (2) about the parameters \(\varvec{\beta }= (\beta _0, \beta _2, \beta _3, \beta _4)^\top \) and \(\psi = \log \sigma \) of model (3) is approximated by an adaptive quadrature rule as implemented in glmer with \(Q = 100\) quadrature points, which is the maximum the current glmer implementation allows. All parameter estimates of model (3) reported in the current example are computed after removal of the atypical observation with zero predation in block 10 when there are no symbionts. This is also done in Bolker (2015, Section 13.5.6) and the corresponding worked examples, which are available at https://bbolker.github.io/mixedmodels-misc/ecostats_chap.html. Estimates based on all data points are provided in Table S1 of the Supplementary Material document.

The ML estimates of \(\varvec{\beta }\) and \(\psi \) in Table 2 are computed using the numerical optimization procedures BFGS and CG (ML[BFGS] and ML[CG], respectively), as these are provided by the optimx R package (see Nash 2014, Section 3 for details), with the same starting values. The ML[BFGS] and ML[CG] estimates are different and notably extreme on the logistic scale. This is due to the two optimization procedures stopping early at different points in the parameter space after having prematurely declared convergence. The large estimated standard errors are indicative of an almost flat approximate log-likelihood around the estimates. In this case, the ML estimates of the fixed effects \(\beta _0, \beta _2, \beta _3, \beta _4\) are in reality infinite in absolute value, which has also been observed in Bolker (2015, Section 13.5.6).
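
The behaviour described above, where an optimizer declares convergence on an almost flat log-likelihood whose maximizer lies at infinity, can be reproduced in a much simpler setting. The sketch below uses a hypothetical, perfectly separated toy data set for a fixed-effects logistic regression with a single slope (not the Culcita data, and not a mixed model) and evaluates the log-likelihood along increasing values of the slope.

```python
import math

# Hypothetical, perfectly separated toy data: y = 1 exactly when x > 0
x = [-2.0, -1.0, 1.0, 2.0]
y = [0, 0, 1, 1]


def log_likelihood(beta):
    """Bernoulli log-likelihood for logit(mu_i) = beta * x_i."""
    total = 0.0
    for x_i, y_i in zip(x, y):
        eta = beta * x_i
        signed = eta if y_i == 1 else -eta
        # log of the fitted probability of the observed outcome:
        # log(expit(signed)) = -log(1 + exp(-signed))
        total -= math.log1p(math.exp(-signed))
    return total


for beta in (1.0, 5.0, 10.0, 20.0):
    print(f"beta = {beta:5.1f}  log-likelihood = {log_likelihood(beta):.3e}")
```

In this toy example the log-likelihood increases towards zero as the slope grows, so there is no finite maximizer, and the surface becomes so flat that a gradient-based stopping rule can be met at an arbitrary large value of the slope.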

Parameter estimates are also obtained using the bglmer routine of the blme R package (Chung et al. 2013) that has been developed to ensure that parameter estimates from GLMMs are away from the boundary of the parameter space. The estimates shown in Table 2 are obtained using a penalty for \(\sigma \) inspired by a gamma prior (default in bglmer; see Chung et al. 2013 for details) and two of the default prior specifications for the fixed effects: (i) independent normal priors (bglmer[n]), and (ii) independent t priors (bglmer[t]), as these are implemented in blme; see bmerDist-class in the help pages of blme for details. We also show the estimates obtained using the MSPL estimation method that we propose in the current work.

The maximum penalized likelihood estimates from bglmer and the corresponding estimated standard errors appear to be finite. Nevertheless, the use of the default priors directly breaks parameterization equivariance under contrasts, which ML estimates enjoy. For example, Table 2 also shows the estimates of model (3) with \(\eta _{ij} = \gamma _0 + u_i + \gamma _{a(j)}\), where \(\gamma _{4} = 0\), i.e. setting “both” as a reference category. Hence, the identities \(\gamma _0 = \beta _0 + \beta _4\), \(\gamma _1 = -\beta _4\), \(\gamma _2 = \beta _2 - \beta _4\), \(\gamma _3 = \beta _3 - \beta _4\) hold, and it is natural to expect those identities from the estimates of \(\varvec{\beta }\) and \(\varvec{\gamma }\). As is evident from Table 2, the bglmer estimates with either normal or t priors can deviate substantially from those identities. For example, the bglmer estimate of \(\gamma _1\) based on normal priors is 5.75 while that for \(\beta _4\) is \(-\,4.73\), and the estimate of \(\log \sigma \) is 1.54 in the \(\varvec{\beta }\) parameterization and 1.66 in the \(\varvec{\gamma }\) parameterization. Furthermore, different contrasts give varying amounts of deviations from these identities. On the other hand, the approximate likelihood is invariant under monotone parameter transformations. As a result, the corresponding identities hold exactly for the ML estimates, with the deviations observed in Table 2 being due to early stopping of the optimization routines. The bglmer estimates are typically closer to zero in absolute value than the ML estimates because the normal and t priors are all centered at zero. Note that the estimates using normal priors tend to shrink more towards zero than those using t priors because the latter have heavier tails.
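
The identities between the two parameterizations can be written as \(\varvec{\gamma }= \varvec{C}\varvec{\beta }\) for a fixed contrast matrix. The following Python sketch, in which the matrix `C`, the helper names, and the numerical values of `beta` are illustrative assumptions rather than quantities from Table 2, checks that both parameterizations give the same fixed part of the linear predictor for every response in a block.

```python
import math

# Rows of C give gamma = C beta for beta = (beta_0, beta_2, beta_3, beta_4)
# and gamma = (gamma_0, gamma_1, gamma_2, gamma_3), following the identities above
C = [
    [1, 0, 0, 1],   # gamma_0 = beta_0 + beta_4
    [0, 0, 0, -1],  # gamma_1 = -beta_4
    [0, 1, 0, -1],  # gamma_2 = beta_2 - beta_4
    [0, 0, 1, -1],  # gamma_3 = beta_3 - beta_4
]


def to_gamma(beta):
    """Apply the contrast matrix to a vector of fixed effects."""
    return [sum(c * b for c, b in zip(row, beta)) for row in C]


def eta_beta(beta, j):
    """Fixed part of the predictor for response j = 1..8, "none" as reference."""
    effects = [0.0, beta[1], beta[2], beta[3]]  # beta_1 = 0
    return beta[0] + effects[math.ceil(j / 2) - 1]


def eta_gamma(gamma, j):
    """Same predictor with "both" as reference."""
    effects = [gamma[1], gamma[2], gamma[3], 0.0]  # gamma_4 = 0
    return gamma[0] + effects[math.ceil(j / 2) - 1]


beta = [3.0, -2.5, -1.5, -4.0]  # arbitrary illustrative values, not estimates
gamma = to_gamma(beta)
max_gap = max(abs(eta_beta(beta, j) - eta_gamma(gamma, j)) for j in range(1, 9))
```

Because the two parameterizations describe the same model, an equivariant estimation method should return estimates that satisfy the same linear map, up to numerical error.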

Fig. 1 Simulation results based on \(10\,000\) samples from (3) at the ML estimates in Table S1 in the Supplementary Material document. Estimates are obtained using ML, bglmer, and MSPL, with a 100-point adaptive Gauss-Hermite quadrature approximation to the likelihood. Parameter estimates on the boundary are discarded for the calculation of the simulation summaries. The number of estimates used for the calculation of summaries is given in the top right panel (R). The top left panel shows the centered sampling distribution of the estimators for MSPL, bglmer and ML. The bottom panels give simulation-based estimates of the bias, variance, mean squared error (MSE), probability of underestimation (PU), and coverage based on 95% Wald-confidence intervals.

In order to assess the impact of shrinkage on the frequentist properties of the estimators, we simulate \(10\,000\) independent samples of responses for the randomized complete block design in Table 1, at the ML estimates in the \(\varvec{\beta }\) parameterization, when all data points are used (see Table S1 of the Supplementary Material document). For each sample, we compute the ML and MSPL estimates, as well as the bglmer estimates based on normal and t priors. Figure 1 shows boxplots for the sampling distributions of the estimators, centered at the true values, the estimated finite-sample bias, variance, mean squared error, and probability of underestimation for each estimator, along with the estimated coverage of 95% Wald confidence intervals based on the estimates and estimated standard errors from the negative Hessian of the approximate log-likelihood at the estimates. The plotting range for the support of the distributions has been restricted to \((-11, 11)\), which does not contain all ML estimates in the simulation study but contains all estimates for the other methods. Note that apart from the estimated probability of underestimation, estimates for the other summaries are not well-defined for ML, because the probability of boundary estimates is positive. In fact, there were issues with at least one of the ML estimates for \(9.25\%\) of the simulated samples. These issues are either due to convergence failures or because the estimates or estimated standard errors have been found to be atypically large in absolute value. The displayed summaries for ML are computed based only on estimates which have not been found to be problematic. Clearly, the amount of shrinkage induced by the normal and t priors is excessive. Although the resulting estimators have small finite-sample variance (with the one based on normal priors having the smallest), they have excessive finite-sample bias, which is often of the order of the standard deviation. The combination of small variance and large bias results in large mean squared errors and in sampling distributions that are located far from the respective true values, impacting first-order inferences; Wald-type confidence intervals about the fixed effects are found to systematically undercover the true parameter value. Finally, neither bglmer[n] nor bglmer[t] appears to prevent extreme positive variance estimates.
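
The simulation summaries discussed above can be computed along the following lines. This is a minimal Python sketch for a single scalar parameter; the function name `simulation_summaries`, the use of the population variance, the 1.96 normal quantile, and the toy inputs are assumptions made for illustration and are not taken from the simulation code behind Fig. 1.

```python
import statistics


def simulation_summaries(estimates, std_errors, truth, z=1.96):
    """Bias, variance, MSE, probability of underestimation (PU) and coverage of
    Wald intervals for one scalar parameter, computed from estimates that have
    already been screened for boundary values."""
    n = len(estimates)
    bias = statistics.fmean(estimates) - truth
    # Population variance, so that mse == variance + bias ** 2 holds exactly
    variance = statistics.pvariance(estimates)
    mse = statistics.fmean((e - truth) ** 2 for e in estimates)
    pu = sum(e < truth for e in estimates) / n
    coverage = sum(abs(e - truth) <= z * s for e, s in zip(estimates, std_errors)) / n
    return {"bias": bias, "variance": variance, "mse": mse, "pu": pu, "coverage": coverage}


# Hypothetical estimates and standard errors, for illustration only
summaries = simulation_summaries([0.8, 1.1, 1.4, 0.9], [0.2, 0.2, 0.1, 0.3], truth=1.0)
```

The decomposition of the mean squared error into variance plus squared bias makes explicit why an estimator with small variance can still have a large mean squared error when shrinkage induces substantial bias.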

As is apparent from Table 2, the MSPL estimates are equivariant under contrasts. The identities between the \(\varvec{\beta }\) and \(\varvec{\gamma }\) parameterization of the model hold exactly for the proposed MSPL estimates, where the small observed deviations are attributed to rounding and the stopping criteria used for the numerical optimization of the penalized log-likelihood. Furthermore, we see from Fig. 1 that the penalty we propose not only ensures that estimates are away from the boundary of the parameter space, but its soft nature leads to estimators that possess the good frequentist properties that would be expected by the ML estimator had it not taken boundary values.

4 Composite penalty

We define a penalty for the log-likelihood, or an approximation thereof, for mixed effects logistic regression that returns maximum penalized likelihood (MPL) estimators that are always in the interior of the parameter space and are equivariant under scaled contrasts of the fixed effects. The penalty is appropriately scaled to be soft enough to return MPL estimators that are consistent and asymptotically normally distributed. For this reason, the resulting MPL estimators are termed maximum softly-penalized likelihood (MSPL) estimators.

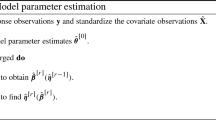

Let \(\varvec{\theta }= (\varvec{\beta }^\top , \varvec{\psi }^\top )^\top \) and \(\ell (\varvec{\theta }) = \log L(\varvec{\beta }, s(\varvec{\psi }))\) with \(s(\varvec{\psi }) = \varvec{\Sigma }\), where \(L(\varvec{\beta }, s(\varvec{\psi }))\) is (2). For clarity of presentation, we shall write \(\ell (\varvec{\theta })\) to denote either the log-likelihood or an appropriate approximation of the log-likelihood that is bounded from above. Sufficient conditions for consistency and asymptotic normality of the MSPL estimator using the exact likelihood and an approximation thereof are provided in Section B of the Appendix. The parameter vector \(\varvec{\psi }\) is defined as \(\varvec{\psi }= (\log l_{11}, \ldots , \log l_{qq}, l_{21}, \ldots , l_{q1}, l_{32}, \ldots , l_{q2}, \ldots , l_{q,q-1})^\top \), where \(l_{ij}\) \((i \ge j)\) is the (i, j)th element of the lower-triangular Cholesky factor \(\varvec{L}\) of \(\varvec{\Sigma }\), i.e. \(\varvec{\Sigma }= \varvec{L}\varvec{L}^\top \). Consider the estimator

\[
\tilde{\varvec{\theta }} = \arg \max _{\varvec{\theta }\in \Theta } \left\{ \ell (\varvec{\theta }) + P(\varvec{\theta }) \right\} , \quad P(\varvec{\theta }) = c_1 P_{(f)}(\varvec{\beta }) + c_2 P_{(v)}(\varvec{\psi }),
\]

where \(c_{1} > 0\), \(c_{2} > 0\), and \(P_{(f)}(\varvec{\beta })\) and \(P_{(v)}(\varvec{\psi })\) are unscaled penalty functions for the fixed effects and variance components, respectively.

For the unscaled fixed effects penalty, we use the logarithm of Jeffreys’ prior for the corresponding GLM, that is

\[
P_{(f)}(\varvec{\beta }) = \frac{1}{2} \log \det \left\{ \sum _{i = 1}^{k} \varvec{X}_i^\top \varvec{W}_i \varvec{X}_i \right\} , \tag{4}
\]

where \(\varvec{X}_i\) collects the covariates for the fixed effects in model (1), and \(\varvec{W}_i\) is a diagonal matrix with jth diagonal element \(\mu _{ij}^{(f)} (1 - \mu _{ij}^{(f)})\) with \(\mu _{ij}^{(f)} = \exp (\eta _{ij}^{(f)}) / \{1 + \exp (\eta _{ij}^{(f)})\}\) and \(\eta _{ij}^{(f)} = \varvec{x}_{ij}^\top \varvec{\beta }\). For the variance components penalty, we use a composition of negative Huber loss functions on the components of \(\varvec{\psi }\). In particular,

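\[
P_{(v)}(\varvec{\psi }) = \sum _{i = 1}^{q} D(\log l_{ii}) + \sum _{i > j} D(l_{ij}), \tag{5}
\]

where

\[
D(x) = {\left\{ \begin{array}{ll} -\frac{1}{2} x^2, &{} \text {if } |x| \le 1, \\ -|x| + \frac{1}{2}, &{} \text {otherwise}. \end{array}\right. }
\]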

5 Non-boundary MPL estimates

Denote by \(\partial \Theta \) the boundary of the parameter space \(\Theta \) and let \(\varvec{\theta }(r)\), \(r \in \Re \), be a path in the parameter space such that \(\lim _{r \rightarrow \infty }\varvec{\theta }(r) \in \partial \Theta \). A common approach to resolving issues with ML estimates being in \(\partial \Theta \), like those encountered in the example of Sect. 3, is to introduce an additive penalty \(P(\varvec{\theta })\) to the (approximate) log-likelihood that satisfies \(\lim _{r \rightarrow \infty } P(\varvec{\theta }(r)) = -\infty \). Hence, if \(\ell (\varvec{\theta })\) is bounded from above and there is at least one point \(\varvec{\theta }\in \Theta \) such that \(\ell (\varvec{\theta }) + P(\varvec{\theta }) > -\infty \), then the maximizer \({\tilde{\varvec{\theta }}}\) of the penalized log-likelihood is in the interior of \(\Theta \).

Kosmidis and Firth (2021, Theorem 1) show that if the matrix \(\varvec{X}\) with row blocks \(\varvec{X}_1, \ldots , \varvec{X}_k\) is full rank, then the limit of (4) is \(-\infty \) as \(\varvec{\beta }\) diverges to any point with at least one infinite component. This result holds for a range of link functions including the commonly-used logit, probit, complementary log–log, log–log, and the cauchit link (see Kosmidis and Firth 2021, Section 3.1, for details). Now, noting that (2) is always bounded from above by one as a probability mass function, the penalized log-likelihood \(\ell (\varvec{\theta }) + P(\varvec{\theta })\) diverges to \(-\infty \) as \(\varvec{\beta }\) diverges, for any value of \(\varvec{\psi }\). Hence, the MPL estimates for the fixed effects always have finite components as long as there is at least one point in \(\Theta \) such that \(\ell (\varvec{\theta })\) is not \(-\infty \).
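
The divergence of the fixed-effects penalty can be seen numerically. The Python sketch below assumes that the unscaled penalty is \(\frac{1}{2} \log \det (\varvec{X}^\top \varvec{W} \varvec{X})\), the logarithm of Jeffreys’ prior for a logistic regression with no random effects, and evaluates it along a ray \(t \varvec{\beta }\) for a small hypothetical model matrix. The function name, the model matrix, and the direction of the ray are illustrative assumptions.

```python
import math


def log_jeffreys_penalty(X, beta):
    """0.5 * log det(X^T W X) for logistic regression, W = diag{mu (1 - mu)}."""
    p = len(beta)
    info = [[0.0] * p for _ in range(p)]
    for row in X:
        eta = sum(x * b for x, b in zip(row, beta))
        w = 1.0 / (2.0 + 2.0 * math.cosh(eta))  # equals mu * (1 - mu)
        for s in range(p):
            for t in range(p):
                info[s][t] += w * row[s] * row[t]
    # Cholesky factor of the information matrix; 0.5 * log det equals the
    # sum of the logarithms of its diagonal entries
    chol = [[0.0] * p for _ in range(p)]
    for s in range(p):
        for t in range(s + 1):
            acc = info[s][t] - sum(chol[s][k] * chol[t][k] for k in range(t))
            chol[s][t] = math.sqrt(acc) if s == t else acc / chol[t][t]
    return sum(math.log(chol[s][s]) for s in range(p))


# Hypothetical full-rank model matrix: intercept and one dummy variable
X = [[1.0, 0.0], [1.0, 0.0], [1.0, 1.0], [1.0, 1.0]]
direction = [1.0, -2.0]
values = [log_jeffreys_penalty(X, [t * d for d in direction]) for t in (0, 5, 10, 20, 30)]
```

Along this ray the penalty decreases roughly linearly in \(t\), so it eventually dominates any log-likelihood that is bounded from above.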

The penalty (5) on the variance components takes value \(-\infty \) whenever at least one component of \(\varvec{\psi }\) diverges. Hence, by parallel arguments to those in the previous paragraph, the penalized log-likelihood \(\ell (\varvec{\theta }) + P(\varvec{\theta })\) diverges to \(-\infty \) as any component of \(\varvec{\psi }\) diverges, for any value of \(\varvec{\beta }\). Hence, the MPL estimates for \(\varvec{\psi }\) have finite components and the value of \({\tilde{\varvec{\Sigma }}} = s(\tilde{\varvec{\psi }})\) is guaranteed to be non-degenerate in the sense that it is positive definite with finite entries, implying correlations away from one in absolute value (see Theorem A.1), as long as there is at least one point in \(\Theta \) such that \(\ell (\varvec{\theta })\) is not \(-\infty \).
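
The link between finite values of \(\varvec{\psi }\) and a non-degenerate \(\varvec{\Sigma }\) can be illustrated with a short Python sketch of the map \(s(\varvec{\psi })\), assuming the ordering of \(\varvec{\psi }\) given in Sect. 4 (log-diagonal elements of the Cholesky factor first, then the strictly lower-triangular elements column by column). The function names and the numerical value of \(\varvec{\psi }\) are hypothetical.

```python
import math


def sigma_from_psi(psi, q):
    """Map psi = (log l_11, ..., log l_qq, l_21, ..., l_q1, l_32, ...) to
    Sigma = L L^T, with L lower triangular with positive diagonal."""
    L = [[0.0] * q for _ in range(q)]
    for i in range(q):
        L[i][i] = math.exp(psi[i])
    pos = q
    for j in range(q):  # strictly lower triangle, column by column
        for i in range(j + 1, q):
            L[i][j] = psi[pos]
            pos += 1
    return [[sum(L[i][k] * L[j][k] for k in range(q)) for j in range(q)] for i in range(q)]


def correlation(sigma, i, j):
    """Correlation implied by the variance-covariance matrix sigma."""
    return sigma[i][j] / math.sqrt(sigma[i][i] * sigma[j][j])


# Hypothetical value of psi for q = 2: (log l_11, log l_22, l_21)
sigma = sigma_from_psi([0.0, -3.0, 5.0], q=2)
rho = correlation(sigma, 0, 1)
det = sigma[0][0] * sigma[1][1] - sigma[0][1] * sigma[1][0]
```

Even for this fairly extreme but finite \(\varvec{\psi }\), the determinant is strictly positive and the correlation is strictly below one in absolute value; degeneracy would require some component of \(\varvec{\psi }\) to diverge.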

The condition on the boundedness of (2) from above is just one sufficient condition for the finiteness of the MPL estimates, which is also satisfied by a vanilla (non-adaptive) Gauss-Hermite quadrature or simulation-based approximations of the likelihood (see, for example, McCulloch 1997). A weaker sufficient condition is that the penalized objective diverges to \(-\infty \) as \(\varvec{\theta }(r)\) diverges to \(\partial \Theta \), or, in other words, that the penalty dominates the likelihood in absolute value for any divergent path. From the numerous numerical experiments we carried out, we encountered no evidence that this weaker condition does not hold for the adaptive quadrature and Laplace approximations to the log-likelihood that the glmer routine of the R package lme4 employs.

The penalties arising from the independent normal and independent t prior structures implemented in blme are such that \(\lim _{r \rightarrow \infty } P(\varvec{\theta }(r)) = -\infty \), whenever \(\varvec{\theta }(r)\) diverges to the boundary of the parameter space for the fixed effects. As a result, the bglmer[n] and bglmer[t] estimates for the fixed effects are always finite, as also illustrated in Table 2. Nevertheless, the default gamma-prior like penalty used in bglmer for the variance component \(\sigma \) is \(1.5 \log \sigma \), which, while it ensures that the estimate of \(\log \sigma \) is not minus infinity, does not guard against estimates that are \(+\infty \). This is apparent in Fig. 1, where several extreme positive bglmer[n] and bglmer[t] estimates are observed for \(\log \sigma \).
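
The asymmetry of the \(1.5 \log \sigma \) penalty is easy to see next to a negative Huber loss. The Python sketch below assumes a negative Huber loss with unit threshold, which is consistent with the derivative bound \(|d D(x) / dx| \le 1\) used in Sect. 7 but is an assumption about the exact form of \(D\); the function names are illustrative.

```python
def negative_huber(x):
    """Negative Huber loss with unit threshold (an assumed form of D)."""
    return -0.5 * x * x if abs(x) <= 1.0 else -abs(x) + 0.5


def gamma_like_penalty(log_sigma):
    """The 1.5 * log(sigma) penalty, written as a function of log(sigma)."""
    return 1.5 * log_sigma


for log_sigma in (-20.0, -1.0, 0.0, 1.0, 20.0):
    print(log_sigma, negative_huber(log_sigma), gamma_like_penalty(log_sigma))
```

The gamma-like penalty decreases without bound as \(\log \sigma \rightarrow -\infty \) but increases as \(\log \sigma \rightarrow +\infty \), so it cannot rule out arbitrarily large variance estimates, whereas the negative Huber loss decreases in both directions.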

6 Equivariance under linear transformations of fixed effects

The ML estimates are known to be equivariant under transformations of the model parameters (see, for example Zehna 1966). A particularly useful class of transformations in mixed effects logistic regression, and more generally GLMMs with categorical covariates, is the collection of scaled linear transformations \(\varvec{\beta }' = \varvec{C}\varvec{\beta }\) of the fixed effects for known, invertible, real matrices \(\varvec{C}\).

Equivariance of the ML estimates guarantees that one can obtain ML estimates and corresponding estimated standard errors for arbitrary sets of scaled parameter contrasts of the fixed effects, when estimates for one of those sets of contrasts are available, with no need to re-estimate the model. It also eliminates any estimation and inferential ambiguity when two independent researchers analyze the same data set using the same model but with different contrasts for the fixed effects, for example, due to software defaults.

Following the argument in Zehna (1966), the condition required for achieving equivariance of MPL estimators is that the penalty for the fixed effects parameters behaves like the log-likelihood under linear transformations; that is

\[
P_{(f)}(\varvec{C}\varvec{\beta }) = P_{(f)}(\varvec{\beta }) + a, \tag{6}
\]

where \(a \in \Re \) is a scalar that does not depend on \(\varvec{\beta }\).

Let \(\eta _{ij} = \varvec{x}_{ij}^\top \varvec{C}^{-1} \varvec{\gamma }+ \varvec{z}_{ij}^\top \varvec{u}_i\) in (1) for a known, invertible real matrix \(\varvec{C}\). Then, \(\varvec{\gamma }= \varvec{C}\varvec{\beta }\), and the penalty for the fixed effects in the \(\varvec{\gamma }\) parameterization is \(P_{(f)}(\varvec{\gamma }) = P_{(f)}(\varvec{\beta }) - \log |{\textrm{det}}(\varvec{C})|\), which is of the form of (6). In contrast, the penalties arising from the normal and t prior structures used to compute the bglmer[n] and bglmer[t] fixed effect estimates in Table 2 do not satisfy (6). Hence, the bglmer[n] and bglmer[t] estimates are not equivariant under linear transformations of the parameters.
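
The form of the penalty in the \(\varvec{\gamma }\) parameterization can be checked directly. The following is a sketch of the calculation, assuming that the fixed-effects penalty is \(\frac{1}{2} \log \det (\varvec{X}^\top \varvec{W} \varvec{X})\), with \(\varvec{W}\) the block-diagonal matrix with blocks \(\varvec{W}_i\), and that \(\varvec{C}\) is invertible. Because \(\varvec{X}\varvec{C}^{-1}\varvec{\gamma }= \varvec{X}\varvec{\beta }\), the weights in \(\varvec{W}\) are the same in both parameterizations and only the model matrix changes, from \(\varvec{X}\) to \(\varvec{X}\varvec{C}^{-1}\), so that

\[
P_{(f)}(\varvec{\gamma }) = \frac{1}{2} \log \det \left\{ \varvec{C}^{-\top } \varvec{X}^\top \varvec{W} \varvec{X} \varvec{C}^{-1} \right\} = \frac{1}{2} \log \det \left( \varvec{X}^\top \varvec{W} \varvec{X} \right) + \log \left| \det (\varvec{C}^{-1}) \right| = P_{(f)}(\varvec{\beta }) - \log \left| \det (\varvec{C}) \right| .
\]

The additive term does not depend on \(\varvec{\beta }\), which is what the equivariance argument requires; the absolute value matters only when \(\det (\varvec{C})\) is negative.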

7 Consistency and asymptotic normality of the MSPL estimator

To mitigate any distortion of the estimates by the penalization of the log-likelihood, and preserve ML asymptotics, we choose the scaling factors \(c_1,c_2\) to be “soft” enough to control \({\left\| \nabla _{\varvec{\theta }} P(\varvec{\theta }) \right\| }\) in terms of the rate of information accumulation \(\varvec{r}_n = (r_{n1},\ldots ,r_{nd})^\top \in \Re ^d\) with \(d = p + q(q + 1)/2\). The components of the rate of information accumulation are defined to diverge to \(+\infty \) and satisfy \(R_n^{-1/2}J(\varvec{\theta })R_n^{-1/2} \overset{p}{\rightarrow }\ I(\varvec{\theta })\) as \(n \rightarrow \infty \), where \(R_n\) is a diagonal matrix with the elements of \(\varvec{r}_n\) on its main diagonal, \(J(\varvec{\theta }) = -\nabla _{\varvec{\theta }} \nabla _{\varvec{\theta }}^\top \ell (\varvec{\theta })\) is the observed information matrix, and \(I(\varvec{\theta })\) is a \(\mathcal {O}(1)\) matrix. In this way, we allow for scenarios where the rate of information accumulation varies across the components of the parameter vector.

According to Theorem C.1 in the Appendix, the gradient of the logarithm of the Jeffreys’ prior in (4) can be bounded as \({\left\| \nabla _{\varvec{\beta }} P_{(f)}(\varvec{\beta }) \right\| } \le p^{3/2} \max _{s,t} |x_{st}|/2\), where \(x_{st}\) is the tth element in the sth row of \(\varvec{X}\) as defined in Sect. 5 and \({\left\| \cdot \right\| }\) is the Euclidean norm. Furthermore, \({\left\| \nabla _{\varvec{\psi }} P_{(v)}(\varvec{\psi }) \right\| } \le \sqrt{q(q+1)/2}\) because \(|d D(x) / dx| \le 1\). Hence, an application of the triangle inequality gives that \({\left\| \nabla _{\varvec{\theta }} P(\varvec{\theta }) \right\| } \le c_1 p^{3/2} \max _{s, t} |x_{st}| / 2 + c_2 \sqrt{q(q+1) / 2}\). For the scaling factors \(c_1\) and \(c_2\), we propose using \(c_1 = c_2 = c\) to be the square root of the average of the approximate variances of \(\hat{\eta }_{ij}^{(f)}\) at \(\varvec{\beta }= \varvec{0}_p\). A delta method argument gives that \(c = 2 \sqrt{p/n}\). Therefore,

\[
{\left\| \nabla _{\varvec{\theta }} P(\varvec{\theta }) \right\| } \le \frac{p^2}{\sqrt{n}} \max _{s, t} |x_{st}| + \sqrt{\frac{2 p q (q + 1)}{n}}.
\]

Hence, as long as \(\max _{s, t} |x_{st}| = O_p(n^{1/2})\), it holds that \( {\sup }_{{\varvec{\theta }\in \Theta }} {\left\| R_n^{-1} \nabla _{\varvec{\theta }} P(\varvec{\theta }) \right\| } = o_p(1)\), and the conditions for consistency and asymptotic normality of \(\tilde{\varvec{\theta }}\) in Theorems B.1 and B.2, respectively, are satisfied. The condition on the maximum of the absolute elements of the model matrix is not unreasonable in practice. It certainly holds for bounded covariates such as dummy variables, as encountered in the real-data examples in the current paper. It also holds for model matrices with subgaussian random variables with common variance proxy \(\sigma ^2\), in which case \(\max _{s,t} |x_{st} | = {\mathcal {O}_p(\sqrt{2\sigma ^2 \log (2np)})}\) (see, for example, Rigollet 2015, Theorem 1.14).
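
The scaling argument above can be checked numerically. The following is a minimal sketch, assuming only the expressions quoted in this section (\(c = 2\sqrt{p/n}\) and the triangle-inequality bound); the function names and the dimensions used in the example are hypothetical and are not taken from the paper's code or data.

```python
import math


def scaling_factor(n: int, p: int) -> float:
    """Scaling factor c = 2 * sqrt(p / n) quoted in the text."""
    return 2.0 * math.sqrt(p / n)


def penalty_gradient_bound(n: int, p: int, q: int, max_abs_x: float) -> float:
    """Upper bound c * p^(3/2) * max|x| / 2 + c * sqrt(q (q + 1) / 2)."""
    c = scaling_factor(n, p)
    fixed_effects_part = c * p ** 1.5 * max_abs_x / 2.0
    variance_part = c * math.sqrt(q * (q + 1) / 2.0)
    return fixed_effects_part + variance_part


if __name__ == "__main__":
    # Hypothetical dimensions (not the paper's examples): p fixed effects,
    # a q x q random effects covariance, and dummy covariates (max |x| = 1).
    p, q, max_abs_x = 4, 2, 1.0
    for n in (100, 400, 1600, 6400):
        print(n, round(penalty_gradient_bound(n, p, q, max_abs_x), 4))
```

With bounded covariates the bound shrinks at rate \(n^{-1/2}\), so quadrupling \(n\) halves it, which is the sense in which the penalization is "soft".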

8 Conditional inference data

To demonstrate the performance of the MSPL mixed effects logistic regression with a multivariate random effects structure, we consider a subset of the data analyzed by Singmann et al. (2016). As discussed on CrossValidated (https://stats.stackexchange.com/questions/38493), this data set exhibits both infinite fixed effects estimates as well as degenerate variance components estimates when a Bernoulli-response GLMM is fitted by ML.

The data set, originally collected as a control condition of experiment (3)(b) in Singmann et al. (2016) and therein analyzed in a different context, comes from an experiment in which participants worked on a probabilistic conditional inference task. Participants were presented with the conditional inferences modus ponens (MP; “If p then q. p. Therefore q.”), modus tollens (MT; “If p then q. Not q. Therefore not p.”), affirmation of the consequent (AC; “If p then q. q. Therefore p”), and denial of the antecedent (DA, “If p then q. Not p. Therefore not q”), and asked to estimate the probability that the conclusion (“Therefore...”) follows from the conditional rule (“If p then q.”) and the minor premise (“p.”, “not p.”, “q.”, “not q.”). The material of the experiment consisted of the following four conditional rules with varying degrees of counterexamples (alternatives, disablers; indicated in parentheses below).

1. If a predator is hungry, then it will search for prey. (few disablers, few alternatives)

2. If a person drinks a lot of coke, then the person will gain weight. (many disablers, many alternatives)

3. If a girl has sexual intercourse with her partner, then she will get pregnant. (many disablers, few alternatives)

4. If a balloon is pricked with a needle, then it will quickly lose air. (few disablers, many alternatives)

To illustrate, for MP and conditional rule 1, a participant was asked: “If a predator is hungry, then it will search for prey. A predator is hungry. How likely is it that the predator will search for prey?” Additionally, participants were asked to estimate the probability of the conditional rule, e.g. “How likely is it that if a predator is hungry it will search for prey?”, and the probability of the minor premises, e.g. “How likely is it that a predator is hungry?”.

The response variable of this data set is then a binary response indicating whether, given a certain conditional rule, the participants’ probabilistic inference is p-valid. An inference is deemed p-valid if the uncertainty of the conclusion does not exceed the summed uncertainty of the premises, where uncertainty of a statement x is defined as one minus the probability of x. For example, for MP, a respondent’s inference is p-valid if \(1-Pr({``q''}) \le 1 - Pr({``If \; p \; then \; q''}) + 1-Pr({``p''}) \), where \(Pr(x)\) indicates the participant’s estimated probability of statement x (p-valid inferences are recorded as zero, p-invalid inferences as one). The covariates are the categorical variables “counterexamples” (“many”, “few”), which indicates the degree of available counterexamples to a conditional rule, “type” (“affirmative”, “denial”), which describes the type of inference (MP and AC are affirmative, MT and DA are denial), and “p-validity” (“valid”, “invalid”), which indicates whether an inference is p-valid in standard probability theory where premise and conclusions are seen as events (MP and MT are p-valid, while AC and DA are not). For each of the 29 participants, there exist 16 observations corresponding to all possible combinations of inference and conditional rule, giving a total of 464 observations, which are grouped along individuals by the clustering variable “code”. A mixed effects logistic regression model can be employed to investigate the probabilistic validity of conditional inference given the type of inference and conditional rule as captured by the covariates and all possible interactions thereof. A random intercept and random slope for the variable “counterexamples” are introduced to account for response heterogeneity between participants. Hence the model under consideration is given by

Fig. 2 Simulation-based estimates of the bias, variance, mean squared error (MSE), and probability of underestimation (PU) for the MSPL, bglmer, and ML estimators, based on \(10\,000\) samples from (7) at the MSPL estimates in Table 3. Parameter estimates on the boundary are discarded from the calculation of the simulation summaries. The number of samples used for the calculation of summaries per parameter is given in the rightmost panel (R)

where \(\varvec{\beta }= (\beta _0,\beta _1,\ldots ,\beta _8)\) are the fixed effects pertaining to the model matrix of the R model formula response \(\sim \) type * p.validity * counterexamples + (1+counterexamples|code). Gauss–Hermite quadrature is computationally challenging for multivariate random effect structures. For this reason, glmer and bglmer do not offer it as an option. We approximate the likelihood of (7) about the parameters \(\varvec{\beta }\), \(\varvec{L}\) using Laplace’s method. We estimate the parameters \(\varvec{\beta }\), \(\varvec{L}\) by ML using the optimization routines CG (ML[CG]) and BFGS (ML[BFGS]) of the optimx R package (Nash 2014), and by bglmer from the blme R package (Chung et al. 2013) using independent normal (bglmer[n]) and t (bglmer[t]) priors for the fixed effects and the default Wishart prior for the multivariate variance components. We also estimate the parameters using the proposed MSPL estimator with the fixed and random effects penalties of Sect. 4. The estimates are given in Table 3, where we denote the entries of \(\varvec{L}\) by \(l_{st}\), for \(s,t=1,2\). As in the Culcita example of Sect. 3, we encounter fixed effects estimates that are extreme on the logistic scale for ML[BFGS] and ML[CG]. We note that the strongly negative estimates for \(l_{22}\) in conjunction with the inflated asymptotic standard errors of the ML[BFGS] estimates are highly indicative of parameter estimates on the boundary of the parameter space, meaning that \(l_{22}\) is effectively estimated as zero. The degeneracy of the estimated variance components is even more striking for the bglmer estimates, which give estimates of \(l_{11},l_{21}\) greater than 28 in absolute value, which corresponds to estimated variance components greater than 800 in absolute value.
This underlines that, as with the gamma prior penalty for univariate random effects, the Wishart prior penalty, while effective in preventing variance components from being estimated as zero, cannot guard against infinite estimates for the variance components. We finally note that for the MSPL, all parameter estimates as well as their estimated standard errors appear to be finite. Further, while the variance components penalty guards against estimates that are effectively zero, the penalty-induced shrinkage towards zero is not as strong as with the Wishart prior penalty of the bglmer routine.

To investigate the frequentist properties of the estimators on this data set, we repeat the simulation design of the Culcita data example from Sect. 3 for the conditional inference data at the MSPL estimate of Table 3. We point out the extremely low percentage of bglmer estimates without estimation issues that were used in the summary of Fig. 2. We note that the MSPL outperforms ML and bglmer, which incur substantial bias and variance due to their singular and infinite variance components estimates. Table 4, which shows the percentiles of the centered variance components estimates for each estimator, shows that ML and bglmer return heavily distorted variance components estimates, reflecting the fact that these estimators are unable to fully guard against degenerate variance components estimates. A comprehensive simulation summary for all parameters is given in Figure S1 and Table S2 of the Supplementary Material document.
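
The p-validity criterion that defines the binary response in this section can be sketched as follows. This is an illustration only, assuming the inequality stated for MP (uncertainty of the conclusion compared with the summed uncertainty of the premises) and the stated coding of the response; the function names and the probabilities in the example are hypothetical and are not values from the data set.

```python
def uncertainty(probability: float) -> float:
    """Uncertainty of a statement: one minus its estimated probability."""
    return 1.0 - probability


def is_p_valid(prob_conclusion: float, prob_premises: list) -> bool:
    """True if the conclusion's uncertainty is at most the premises' summed uncertainty."""
    summed_premise_uncertainty = sum(uncertainty(p) for p in prob_premises)
    return uncertainty(prob_conclusion) <= summed_premise_uncertainty


def response_code(prob_conclusion: float, prob_premises: list) -> int:
    """Response coding described in the text: 0 for p-valid, 1 for p-invalid."""
    return 0 if is_p_valid(prob_conclusion, prob_premises) else 1


if __name__ == "__main__":
    # Hypothetical MP judgements: Pr("If p then q") = 0.9, Pr("p") = 0.8.
    premises = [0.9, 0.8]
    print(response_code(0.75, premises))  # conclusion uncertainty 0.25
    print(response_code(0.60, premises))  # conclusion uncertainty 0.40
```

In the first call the conclusion's uncertainty is below the summed premise uncertainty (about 0.3), so the inference is coded as p-valid; in the second it exceeds it and is coded as p-invalid.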

9 Concluding remarks

This paper proposed the MSPL estimator for stable parameter estimation in mixed effects logistic regression. The method has been found, both theoretically and empirically, to have superior finite sample properties to the maximum penalized likelihood estimator proposed in Chung et al. (2013). We showed that penalizing the log-likelihood by scaled versions of the Jeffreys’ prior for the model with no random effects and of a composition of the negative Huber loss gives estimates in the interior of the parameter space. Scaling the penalty function appropriately preserves the optimal ML asymptotics, namely consistency, asymptotic normality and Cramér-Rao efficiency.

We note that the conditions of Theorems B.1 and B.2 that are used for establishing the consistency and asymptotic normality of the MSPL estimator in Sect. 7 are merely sufficient; there may be other sets of conditions that lead to the same results.

While the MSPL is particularly relevant for mixed effects logistic regression, the concept is far more general and we expect it to be useful in other settings, for which degenerate ML estimates are known to occur, such as GLMMs with categorical or ordinal responses. The composite negative Huber loss penalty can be readily applied to other GLMMs, to prevent singular variance components estimates. We point out that the bound on the partial derivatives of the Jeffreys’ prior in Theorem C.1 for a logistic GLM extends to the cauchit link up to a constant; bounds for other link functions, like the probit and the complementary log-log are the subject of current work.

10 Supplementary materials

The supplementary material to this paper is available at https://github.com/psterzinger/softpen_supplementary, and consists of the three folders “Scripts”, “Data”, “Results”, and a Supplementary Material document, which provides further outputs and additional simulation studies. The “Scripts” directory contains all R scripts to reproduce the numerical analyses, simulations, graphics and tables in the main text and the Supplementary Material document. The “Data” directory contains the data used for the numerical examples in Sects. 3 and 8, and the “Results” directory provides all results from the numerical experiments and analyses in the main text and the Supplementary Material. All numerical results are replicable in R version 4.2.2 (2022-10-31), and with the following packages: blme 1.0-5 (Chung et al. 2013), doMC 1.3.8 (Revolution Analytics and Weston 2022), dplyr 1.0.10 (Wickham et al. 2022), lme4 1.1-31 (Bates et al. 2015), MASS 7.3-58 (Venables and Ripley 2002), Matrix 1.5-3 (Bates et al. 2022), numDeriv 2016.8-1.1 (Gilbert and Varadhan 2019), optimx 2022-4.30 (Nash 2014).

References

Bates, D., Mächler, M., Bolker, B., Walker, S.: Fitting linear mixed-effects models using lme4. J. Stat. Softw. 67(1), 1–48 (2015)

Bates, D., Mächler, M., Jagan, M.: Matrix: Sparse and Dense Matrix Classes and Methods. R package version 1.5-3 (2022)

Bolker, B.M.: Linear and generalized linear mixed models. In: Fox, G.A., Negrete-Yankelevich, S., Sosa, V.J. (eds.) Ecological Statistics, pp. 309–333. Oxford University Press, Oxford (2015)

Bolker, B.M., Brooks, M.E., Clark, C.J., Geange, S.W., Poulsen, J.R., Stevens, M.H.H., White, J.-S.S.: Generalized linear mixed models: a practical guide for ecology and evolution. Trends Ecol. Evol. 24(3), 127–135 (2009)

Breslow, N.E., Clayton, D.G.: Approximate inference in generalized linear mixed models. J. Am. Stat. Assoc. 88(421), 9–25 (1993)

Browne, W.J., Draper, D.: A comparison of Bayesian and likelihood-based methods for fitting multilevel models. Bayesian Anal. 1(3), 473–514 (2006)

Chung, Y., Rabe-Hesketh, S., Dorie, V., Gelman, A., Liu, J.: A nondegenerate penalized likelihood estimator for variance parameters in multilevel models. Psychometrika 78(4), 685–709 (2013)

Chung, Y., Gelman, A., Rabe-Hesketh, S., Liu, J., Dorie, V.: Weakly informative prior for point estimation of covariance matrices in hierarchical models. J. Educ. Behav. Stat. 40(2), 136–157 (2015)

Gelman, A., Jakulin, A., Pittau, M.G., Su, Y.-S.: A weakly informative default prior distribution for logistic and other regression models. Ann. Appl. Stat. 2(4), 1360–1383 (2008)

Gilbert, P., Varadhan, R.: numDeriv: Accurate Numerical Derivatives. R package version 2016.8-1.1 (2019)

Harville, D.A.: Matrix algebra from a statistician’s perspective (1998)

Heinze, G., Schemper, M.: A solution to the problem of separation in logistic regression. Stat. Med. 21(16), 2409–2419 (2002)

Jiang, J.: Asymptotic Analysis of Mixed Effects Models: Theory, Applications, and Open Problems. Chapman and Hall/CRC, Boca Raton (2017)

Jin, S., Andersson, B.: A note on the accuracy of adaptive Gauss–Hermite quadrature. Biometrika 107(3), 737–744 (2020)

Konis, K.: Linear programming algorithms for detecting separated data in binary logistic regression models. Ph.D. thesis, University of Oxford (2007)

Kosmidis, I., Firth, D.: Jeffreys-prior penalty, finiteness and shrinkage in binomial-response generalized linear models. Biometrika 108(1), 71–82 (2021)

Kosmidis, I., Schumacher, D.: detectseparation: Detect and Check for Separation and Infinite Maximum Likelihood Estimates. R package version 0.2 (2021)

Liu, Q., Pierce, D.A.: A note on Gauss–Hermite quadrature. Biometrika 81(3), 624–629 (1994)

Magnus, J.R., Neudecker, H.: Matrix Differential Calculus with Applications in Statistics and Econometrics. Wiley, Hoboken (2019)

McCullagh, P., Nelder, J.A.: Generalized Linear Models, 2nd edn. Chapman & Hall/CRC, Boca Raton (1989)

McCulloch, C.E.: Maximum likelihood algorithms for generalized linear mixed models. J. Am. Stat. Assoc. 92(437), 162–170 (1997)

McCulloch, C.E., Searle, S.R., Neuhaus, J.M.: Generalized, Linear, and Mixed Models, vol. 2. Wiley, Hoboken (2008)

McKeon, C.S., Stier, A.C., McIlroy, S.E., Bolker, B.M.: Multiple defender effects: synergistic coral defense by mutualist crustaceans. Oecologia 169(4), 1095–1103 (2012)

Nash, J.C.: On best practice optimization methods in R. J. Stat. Softw. 60(2), 1–14 (2014)

Ogden, H.: On asymptotic validity of Naive inference with an approximate likelihood. Biometrika 104(1), 153–164 (2017)

Ogden, H.: On the error in Laplace approximations of high-dimensional integrals. Stat 10(1), e380 (2021)

Pasch, B., Bolker, B.M., Phelps, S.M.: Interspecific dominance via vocal interactions mediates altitudinal zonation in neotropical singing mice. Am. Nat. 182(5), E161–E173 (2013)

Pinheiro, J.C., Bates, D.M.: Approximations to the log-likelihood function in the nonlinear mixed-effects model. J. Comput. Graph. Stat. 4(1), 12–35 (1995)

Pinheiro, J.C., Chao, E.C.: Efficient Laplacian and adaptive Gaussian quadrature algorithms for multilevel generalized linear mixed models. J. Comput. Graph. Stat. 15(1), 58–81 (2006)

R Core Team: R: A Language and Environment for Statistical Computing. R Foundation for Statistical Computing, Vienna (2022)

Raudenbush, S.W., Yang, M.-L., Yosef, M.: Maximum likelihood for generalized linear models with nested random effects via high-order, multivariate Laplace approximation. J. Comput. Graph. Stat. 9(1), 141–157 (2000)

Revolution Analytics, Weston, S.: doMC: Foreach Parallel Adaptor for ‘parallel’. R package version 1.3.8 (2022)

Rigollet, P.: 18.S997 High-dimensional statistics. Massachusetts Institute of Technology, MIT OpenCourseWare (2015). https://ocw.mit.edu

Rodriguez, G., Goldman, N.: An assessment of estimation procedures for multilevel models with binary responses. J. R. Stat. Soc. Ser. A (Stat. Soc.) 158(1), 73–89 (1995)

Schall, R.: Estimation in generalized linear models with random effects. Biometrika 78(4), 719–727 (1991)

Singmann, H., Klauer, K.C., Beller, S.: Probabilistic conditional reasoning: disentangling form and content with the dual-source model. Cogn. Psychol. 88, 61–87 (2016)

Stringer, A., Bilodeau, B.: Fitting generalized linear mixed models using adaptive quadrature (2022)

Vaart, A.W.: Asymptotic Statistics. Cambridge Series in Statistical and Probabilistic Mathematics, Cambridge University Press, Cambridge (1998)

Venables, W.N., Ripley, B.D.: Modern Applied Statistics with S, 4th edn. Springer, New York. ISBN 0-387-95457-0 (2002)

Wickham, H., François, R., Henry, L., Müller, K.: dplyr: a grammar of data manipulation. R Package Vers. 1, 9 (2022)

Wolfinger, R., O’Connell, M.: Generalized linear mixed models: a pseudo-likelihood approach. J. Stat. Comput. Simul. 48(3–4), 233–243 (1993)

Zehna, P.W.: Invariance of maximum likelihood estimators. Ann. Math. Stat. 37(3), 744–744 (1966)

Zhao, Y., Staudenmayer, J., Coull, B.A., Wand, M.P.: General design Bayesian generalized linear mixed models. Stat. Sci. 21(1), 35–51 (2006)

Ethics declarations

Conflict of interest

The authors have no relevant financial or non-financial interests to disclose.

Appendices

A Theorem A.1

Theorem A.1

Let \(\tilde{\varvec{L}} \in \Re ^{q \times q}\) be a real, lower triangular matrix with finite entries and strictly positive entries on its main diagonal. Then \(\tilde{\varvec{\Sigma }} = \tilde{\varvec{L}}\tilde{\varvec{L}}^\top \) is not degenerate.

Proof

Recall that a variance–covariance matrix is not degenerate if it is positive definite with finite entries, implying correlations away from one in absolute value. We prove each property in turn. To see that \(\tilde{\varvec{\Sigma }}\) is positive definite, take any \(\varvec{x}\in \Re ^q:\varvec{x}\ne \varvec{0}_q\). Then by straightforward manipulations

\[ \varvec{x}^\top \tilde{\varvec{\Sigma }} \varvec{x} = \varvec{x}^\top \tilde{\varvec{L}} \tilde{\varvec{L}}^\top \varvec{x} = \langle \tilde{\varvec{L}}^\top \varvec{x}, \tilde{\varvec{L}}^\top \varvec{x} \rangle \ge 0 \, , \tag{9} \]

where \(\langle \cdot ,\cdot \rangle \) denotes the standard Euclidean inner product. Hence \(\tilde{\varvec{\Sigma }}\) is positive semi-definite. Suppose that there is some \(\varvec{x} \in \Re ^q\) such that \(\varvec{x}^\top \tilde{\varvec{\Sigma }}\varvec{x}=0\). Then by (9), \(\langle \tilde{\varvec{L}}^\top \varvec{x}, \tilde{\varvec{L}}^\top \varvec{x} \rangle = 0\), which holds if and only if \(\tilde{\varvec{L}}^\top \varvec{x}=\varvec{0}_q\). Now since \(\tilde{\varvec{L}}\) is lower triangular with strictly positive diagonal entries, it is full rank. But then \(\tilde{\varvec{L}}^\top \varvec{x}=\varvec{0}_q\) implies that \(\varvec{x} = \varvec{0}_q\), so that \(\tilde{\varvec{\Sigma }}\) is positive definite. To prove that \(\tilde{\varvec{\Sigma }}\) has finite entries, note that \(\tilde{\varvec{\Sigma }}_{st} = \langle \tilde{\varvec{l}}_s,\tilde{\varvec{l}}_t \rangle \), where \(\tilde{\varvec{l}}_s\) is the sth row vector of \(\tilde{\varvec{L}}\). Since all elements of \(\tilde{\varvec{l}}_s,\tilde{\varvec{l}}_t\) are finite, so is their inner product. Finally, towards a contradiction, assume that \(\tilde{\varvec{\Sigma }}\) implies correlations of one in absolute value. Then there exist indices \(s,t, s \ne t\) such that

\[ \frac{|\tilde{\varvec{\Sigma }}_{st}|}{\sqrt{\tilde{\varvec{\Sigma }}_{ss} \tilde{\varvec{\Sigma }}_{tt}}} = \frac{|\langle \tilde{\varvec{l}}_s,\tilde{\varvec{l}}_t \rangle |}{\Vert \tilde{\varvec{l}}_s\Vert \, \Vert \tilde{\varvec{l}}_t\Vert } = 1 \, , \]

where \(\Vert \varvec{x}\Vert = \sqrt{\langle \varvec{x},\varvec{x}\rangle }\) is the induced inner product norm. It follows from the Cauchy-Schwarz inequality that the last equality holds if and only if \(\tilde{\varvec{l}}_s,\tilde{\varvec{l}}_t\) are linearly dependent. Since \(\tilde{\varvec{L}}\) is lower triangular, this is only possible if \(\tilde{\varvec{l}}_s,\tilde{\varvec{l}}_t\) have zeros in the same positions. But since all diagonal entries of \(\tilde{\varvec{L}}\) are strictly positive, this is not possible. \(\square \)
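
Theorem A.1 can be illustrated numerically in the bivariate case. The sketch below is an illustration under stated assumptions and not part of the paper's supplementary code: the helper names and the two factors are hypothetical, and positive definiteness is checked with Sylvester's criterion, which is used here only for the \(2 \times 2\) case.

```python
import math


def covariance_from_factor(L):
    """Return Sigma = L L^T for a square lower triangular factor L (list of rows)."""
    q = len(L)
    return [[sum(L[s][k] * L[t][k] for k in range(q)) for t in range(q)]
            for s in range(q)]


def is_positive_definite_2x2(S):
    """Sylvester's criterion for a symmetric 2 x 2 matrix."""
    det = S[0][0] * S[1][1] - S[0][1] * S[1][0]
    return S[0][0] > 0 and det > 0


def correlation(S, s, t):
    """Correlation implied by the covariance matrix S for components s and t."""
    return S[s][t] / math.sqrt(S[s][s] * S[t][t])


if __name__ == "__main__":
    # Hypothetical factor with strictly positive diagonal, as in the theorem.
    interior = covariance_from_factor([[2.0, 0.0], [-1.0, 0.5]])
    # Hypothetical boundary case: the second diagonal entry is exactly zero.
    boundary = covariance_from_factor([[2.0, 0.0], [-1.0, 0.0]])
    for name, S in (("interior", interior), ("boundary", boundary)):
        print(name, S, is_positive_definite_2x2(S), correlation(S, 0, 1))
```

The second factor violates the theorem's assumption of strictly positive diagonal entries, and the implied covariance matrix is singular with a correlation of minus one, which is the kind of degenerate estimate the variance components penalty is designed to avoid.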

B Consistency and asymptotic normality of MPL estimators

B.1 Setup

Suppose that we observe the values \(\varvec{y}_1, \ldots , \varvec{y}_k\) of a sequence of random vectors \(\varvec{Y}_1, \ldots , \varvec{Y}_k\) with \(\varvec{y}_i = (y_{i1}, \ldots , y_{in_i})^\top \in \mathcal {Y} \subset \Re ^{n_i}\), possibly with a sequence of covariate vectors \(\varvec{v}_1, \ldots , \varvec{v}_k\), with \(\varvec{v}_i = (v_{i1}, \ldots , v_{is})^\top \in \mathcal {X} \subset \Re ^{s}\). Let \(\varvec{Y}= (\varvec{Y}_1^\top , \ldots , \varvec{Y}_k^\top )^\top \), and denote by \(\varvec{V}\) the set of \(\varvec{v}_1, \ldots , \varvec{v}_k\). Further, assume that the data generating process of \(\varvec{Y}\) conditional on \(\varvec{V}\) has a density or probability mass function \(f(\varvec{Y}\mid \varvec{V}; \varvec{\theta })\), indexed by a parameter \(\varvec{\theta }\in \Theta \subset \Re ^d\). Denote the parameter that identifies the conditional distribution of \(\varvec{Y}\) given \(\varvec{V}\) by \(\varvec{\theta }_0\in \Theta \).

Define the ML estimator as \({\hat{\varvec{\theta }}} = \arg \max _{\varvec{\theta }\in \Theta } \ell (\varvec{\theta })\), where \(\ell (\varvec{\theta }) = \log f(\varvec{Y}\mid \varvec{V}; \varvec{\theta })\), and let \({\tilde{\varvec{\theta }}}\) be the MPL estimator \({\tilde{\varvec{\theta }}} = \arg \max _{\varvec{\theta }\in \Theta }\{\ell (\varvec{\theta }) + P(\varvec{\theta }) \}\), where \(P(\varvec{\theta })\) is an additive penalty to \(\ell (\varvec{\theta })\) that may depend on \(\varvec{Y}\) and \(\varvec{V}\). Consistency and asymptotic normality of the proposed MPL estimator follow readily from similar such results for ML estimators in Ogden (2017) where the approximation error to the log-likelihood is an additive error term. In fact, the results presented in this section are a direct translation of the work in Ogden (2017), where the term “approximation error” is replaced by “penalty function”, and by allowing the rate of information accumulation to vary across the components of the parameter vector \(\varvec{\theta }\). Finally, let \({\left\| \cdot \right\| }\) be some vector norm and \({\left| \left| \left| \cdot \right| \right| \right| }\) be the corresponding operator norm.

B.2 Consistency

The consistency of \(\tilde{\varvec{\theta }}\) can be established under the following regularity conditions on the log-likelihood gradient (see Vaart 1998 Chapter 5) and the penalty gradient.

- A1: \(\ell (\varvec{\theta })\) is differentiable with gradient \(S(\varvec{\theta })\).

- A2: \({\sup }_{\varvec{\theta }\in \Theta } \; {\left\| R_n^{-1} S(\varvec{\theta }) - S_0(\varvec{\theta }) \right\| } \overset{p}{\rightarrow }0\) for some deterministic function \(S_0(\varvec{\theta })\), where \(R_n\) is a diagonal matrix whose diagonal elements diverge to \(+\infty \) as n grows.

- A3: For all \(\varepsilon >0\), \({\inf }_{\varvec{\theta }\in \Theta : {\left\| \varvec{\theta }-\varvec{\theta }_0 \right\| }\ge \varepsilon } {\left\| S_0(\varvec{\theta }) \right\| }>0 = {\left\| S_0(\varvec{\theta }_0) \right\| }\).

- A4: \(P(\varvec{\theta })\) is differentiable with gradient \(A(\varvec{\theta })\).

Define \(\hat{\varvec{\theta }}\) and \(\tilde{\varvec{\theta }}\) to be such that \(S(\hat{\varvec{\theta }}) = \varvec{0}_d\) and \(S(\tilde{\varvec{\theta }}) + A(\tilde{\varvec{\theta }}) = \varvec{0}_d\), respectively.

Theorem B.1

(Consistency) Suppose that A1–A4 hold and \({\sup }_{\varvec{\theta }\in \Theta } \, {\left\| R_n^{-1}A(\varvec{\theta }) \right\| } = o_p(1)\). Then, \(\tilde{\varvec{\theta }}\overset{p}{\rightarrow }\ \varvec{\theta }_0\).

Proof

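The proof is analogous to the proof of Ogden (2017, Theorem 1) and follows from Vaart (1998, Theorem 5.9) on the consistency of M-estimators. Vaart (1998, Theorem 5.9) states that under assumptions A2 and A3 about the log-likelihood gradient, if \({\left\| R_n^{-1}S(\tilde{\varvec{\theta }}) \right\| } = o_p(1)\) then \(\tilde{\varvec{\theta }}\overset{p}{\rightarrow }\ \varvec{\theta }_0\). It holds that

\[ {\left\| R_n^{-1}S(\tilde{\varvec{\theta }}) \right\| } = {\left\| R_n^{-1}\left\{ S(\tilde{\varvec{\theta }}) + A(\tilde{\varvec{\theta }}) \right\} - R_n^{-1}A(\tilde{\varvec{\theta }}) \right\| } = {\left\| R_n^{-1}A(\tilde{\varvec{\theta }}) \right\| } \le {\sup }_{\varvec{\theta }\in \Theta } \, {\left\| R_n^{-1}A(\varvec{\theta }) \right\| } = o_p(1) \, . \]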

The second equality follows from the definition of \({\tilde{\varvec{\theta }}}\), and the last equality follows from the assumption that \({\sup }_{\varvec{\theta }\in \Theta } \, {\left\| R_n^{-1}A(\varvec{\theta }) \right\| } = o_p(1)\).

\(\square \)

B.3 Asymptotic normality

The asymptotic normality of \(\tilde{\varvec{\theta }}\) can be established under the following conditions

- A5: \(\ell (\varvec{\theta })\) is three times differentiable.

- A6: \(\sup _{\varvec{\theta }\in \Theta } {\left| \left| \left| R_n^{-1/2}J(\varvec{\theta })R_n^{-1/2} -I(\varvec{\theta }) \right| \right| \right| } \overset{p}{\rightarrow }\ 0\) for some positive definite O(1) matrix \(I(\varvec{\theta })\), that is continuous in \(\varvec{\theta }\) in a neighbourhood around \(\varvec{\theta }_0\), where \(R_n\) is a diagonal matrix whose diagonal elements diverge to \(+\infty \) as n grows.

- A7: \(R_n^{1/2}({\hat{\varvec{\theta }}}-\varvec{\theta }_0) \overset{d}{\rightarrow } \text {N}(0,I(\varvec{\theta }_0)^{-1})\).

- A8: \(\tilde{\varvec{\theta }}\overset{p}{\rightarrow }\ \varvec{\theta }_0\).

- A9: \(P(\varvec{\theta })\) is three times differentiable.

Theorem B.2

(Asymptotic Normality) Suppose that A5–A9 hold, and \({\sup }_{\varvec{\theta }\in \Theta } \, {\left\| R_n^{-1/2}A(\varvec{\theta }) \right\| } = o_p(1)\). Then, \(R_n^{1/2}(\tilde{\varvec{\theta }}-\varvec{\theta }_0) \overset{d}{\rightarrow } \text {N}(0,I(\varvec{\theta }_0)^{-1})\).

Proof

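We show that \(R_n^{1/2}(\tilde{\varvec{\theta }} -\hat{\varvec{\theta }}) = o_p(1)\), which, by A7, establishes the claim. Let \(S(\varvec{\theta })\) be the gradient of \(\ell (\varvec{\theta })\) and \(J(\varvec{\theta }) = -\nabla \nabla ^\top \ell (\varvec{\theta })\) and consider a first-order Taylor expansion of \(S(\varvec{\theta })\) around \(\hat{\varvec{\theta }}\). Then premultiplying by \(R_n^{-1}\) gives

\[ R_n^{-1}S(\varvec{\theta }) = R_n^{-1}S(\hat{\varvec{\theta }}) + R_n^{-1}\nabla \nabla ^\top \ell (\varvec{\theta }^*)(\varvec{\theta }- \hat{\varvec{\theta }}) = -R_n^{-1}J(\varvec{\theta }^*)(\varvec{\theta }- \hat{\varvec{\theta }}) \, , \tag{11} \]

where \(\varvec{\theta }^*\) lies between \(\varvec{\theta }\) and \(\hat{\varvec{\theta }}\) and the second equality follows by the definition of \(\hat{\varvec{\theta }}\) and \(J(\varvec{\theta })\). Hence

\[ \varvec{0}_d = R_n^{-1}\left\{ S(\tilde{\varvec{\theta }}) + A(\tilde{\varvec{\theta }}) \right\} = -R_n^{-1}J(\varvec{\theta }^*)(\tilde{\varvec{\theta }} - \hat{\varvec{\theta }}) + R_n^{-1}A(\tilde{\varvec{\theta }}) \, , \]

where the first equality follows by the definition of \(\tilde{\varvec{\theta }}\), and the second from substituting the right hand side of (11) for \(S(\tilde{\varvec{\theta }})\). Therefore

\[ R_n^{1/2}(\tilde{\varvec{\theta }} - \hat{\varvec{\theta }}) = \left[ R_n^{-1/2}J(\varvec{\theta }^*)R_n^{-1/2} \right] ^{-1} R_n^{-1/2}A(\tilde{\varvec{\theta }}) \, . \]

Note that by A6, and an application of the continuous mapping theorem (see, for example, Vaart 1998, Theorem 2.1), \({\left| \left| \left| [R_n^{-1/2}J(\varvec{\theta }^*)R_n^{-1/2}]^{-1}\right| \right| \right| } = {\mathcal {O}_p(1)}\). Hence,

\[ \begin{aligned} {\left\| R_n^{1/2}(\tilde{\varvec{\theta }} - \hat{\varvec{\theta }}) \right\| }&\le {\left| \left| \left| [R_n^{-1/2}J(\varvec{\theta }^*)R_n^{-1/2}]^{-1}\right| \right| \right| } \, {\left\| R_n^{-1/2}A(\tilde{\varvec{\theta }}) \right\| } \\&= {\mathcal {O}_p(1)} \, o_p(1) = o_p(1) \, , \end{aligned} \]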

where the first inequality follows from the definition of the operator norm, and the last line from the assumption of the Theorem. Therefore \(R_n^{1/2}(\tilde{\varvec{\theta }} -\hat{\varvec{\theta }}) = o_p(1)\) as required. \(\square \)

B.4 Approximate likelihoods

We note that the large sample results for the MPL estimator derived here operate under the assumption that \(\ell (\varvec{\theta })\) is the exact log-likelihood. If \(\bar{\ell }(\varvec{\theta })\) is an approximation to the exact log-likelihood and \(\bar{\varvec{\theta }}\) is the maximizer of the penalized approximate likelihood, then consistency and asymptotic normality of \(\bar{\varvec{\theta }}\) can be established under extra conditions on \(\bar{S}(\varvec{\theta })\), the gradient of the approximate likelihood \(\bar{\ell }(\varvec{\theta })\). In particular, for consistency it is sufficient that \({\sup }_{\varvec{\theta }\in \Theta } \, {\left\| R_n^{-1} \left\{ \bar{S}(\varvec{\theta })-S(\varvec{\theta }) \right\} \right\| }=o_p(1)\), and for asymptotic normality it is sufficient that \(\bar{\ell }(\varvec{\theta })\) is three-times differentiable and \({\sup }_{\varvec{\theta }\in \Theta } \, {\left\| R_n^{-1/2} \left\{ \bar{S}(\varvec{\theta })-S(\varvec{\theta }) \right\} \right\| }=o_p(1)\). In this instance, one can replace all occurrences of \(A(\varvec{\theta })\) by \(A(\varvec{\theta }) + \bar{S}(\varvec{\theta })-S(\varvec{\theta })\) in the proofs of Theorems B.1 and B.2. A simple application of the triangle inequality establishes that \({\sup }_{\varvec{\theta }\in \Theta } {\left\| R_n^{-c}\left\{ A(\varvec{\theta }) + \bar{S}(\varvec{\theta })-S(\varvec{\theta })\right\} \right\| } = o_p(1)\) as in the assumptions of Theorem B.1 with \(c = 1\), and Theorem B.2 with \(c = 1/2\), and thus the proofs apply. 
We refer the reader to Ogden (2021) for approximation errors to the log-likelihood in clustered GLMMs using Laplace’s method, Ogden (2017) for approximation errors to the gradient of the log-likelihood with an example for an intercept-only Bernoulli-response GLMM, Stringer and Bilodeau (2022) for approximation errors to the log-likelihood in clustered GLMMs using adaptive Gauss–Hermite quadrature and Jin and Andersson (2020) for general approximation errors for adaptive Gauss–Hermite quadrature.

C Bound on the gradient of the logarithm of the Jeffreys’ prior

Theorem C.1

(Bound on the partial derivative of the logarithm of Jeffreys’ prior)

Let \(\varvec{X} \) be the \(n\times p\) full column rank matrix defined in Sect. 2, and \(\varvec{W}\) a block-diagonal matrix with blocks \(\varvec{W}_i\) as defined in Sect. 4 (\(i=1,\ldots ,k\)). Then

where \(x_{ts}\) denotes the tth element in the sth column of \(\varvec{X}\).

Proof

We shall find it notationally convenient to neglect the block-structure of \(\varvec{X}\) and refer to the tth element of the sth column of \(\varvec{X}\) by \(x_{ts}\) and define \(\mu _t^{(f)}(\varvec{\beta }) = \exp (\eta _t^{(f)}(\varvec{\beta }))/(1+\exp (\eta _t^{(f)}(\varvec{\beta })))\) for \(\eta _t^{(f)}(\varvec{\beta }) = \varvec{x}_t^\top \varvec{\beta }\), and where \(\varvec{x}_t^\top \) is the tth row of \(\varvec{X}\). It is noted without proof that

where \(\widetilde{\varvec{W}}_s\) is a diagonal matrix with main-diagonal entries \(\widetilde{w}^{(s)}_{t}= x_{ts}(1-2\mu _t^{(f)}(\varvec{\beta }))\). Now by the cyclical property of the trace, it follows that

For notational brevity, denote the projection matrix \(\varvec{X}(\varvec{X}^\top \varvec{W}\varvec{X})^{-1}\varvec{X}^\top \varvec{W}\) by \(\varvec{P}\). Since \(\widetilde{\varvec{W}}_s\) is a diagonal matrix, one gets that

Here the second line is due to the triangle inequality. The third line follows by nonnegativity of the main-diagonal elements of \(\varvec{P}\). To see this, note that \(\varvec{X}^\top \varvec{W}\varvec{X}\) is positive definite as \(\varvec{X}\) has full column rank and \(\varvec{W}\) is a diagonal matrix with positive entries. It thus follows that \((\varvec{X}^\top \varvec{W}\varvec{X})^{-1}\) is positive definite. Hence for any \(\varvec{y} \in \Re ^{n}, \Vert \varvec{y}\Vert _2\ne 0\), \(\varvec{y}^\top \varvec{X}(\varvec{X}^\top \varvec{W}\varvec{X})^{-1}\varvec{X}^\top \varvec{y} = \tilde{\varvec{y}}^\top (\varvec{X}^\top \varvec{W}\varvec{X})^{-1} \tilde{\varvec{y}} \ge 0\), for \(\tilde{\varvec{y}} = \varvec{X}^\top \varvec{y}\), so that \(\varvec{X}(\varvec{X}^\top \varvec{W}\varvec{X})^{-1}\varvec{X}^\top \) is positive semi-definite. It is well known that the main diagonal entries of a positive semi-definite matrix are nonnegative. Hence, as \(\varvec{W}\) is a diagonal matrix with nonnegative diagonal entries, it follows that the main diagonal entries of \(\varvec{P}\), which are the elementwise product of the diagonals of \(\varvec{X}(\varvec{X}^\top \varvec{W}\varvec{X})^{-1}\varvec{X}^\top \) and \(\varvec{W}\), are nonnegative. The fifth line follows since \(\varvec{P}\) is an idempotent matrix of rank p, and the fact that the trace of an idempotent matrix equals its rank (Harville 1998, Corollary 10.2.2). The fact that \(\varvec{P}\) has rank p follows from the assumption that \(\varvec{X}\) has full column rank and since \(\varvec{W}\) is invertible for any \(\varvec{\beta }\in \Re ^p,\varvec{X}\in \Re ^{n \times p}\) by construction, and is a standard result in linear algebra (see, for example, Magnus and Neudecker 2019, Chapter 1.7). The last line follows since \(\mu _t^{(f)}(\varvec{\beta }) \in (0,1)\).

\(\square \)
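
For readers following the proof without its displayed equations, the chain of steps it describes can be sketched as below. This is a reconstruction from the surrounding text and not a verbatim copy of the paper's displays. It assumes that \(\varvec{W}\) is diagonal with entries \(\mu _t^{(f)}(\varvec{\beta })\{1-\mu _t^{(f)}(\varvec{\beta })\}\) and that the logarithm of the Jeffreys’ prior is \(\log \det (\varvec{X}^\top \varvec{W}\varvec{X})/2\) up to an additive constant; \(P_{tt}\) denotes the tth main-diagonal entry of \(\varvec{P}\).

```latex
% Derivative of the log-determinant, using dW/d(beta_s) = W * W~_s
% and the cyclical property of the trace.
\[
\frac{\partial }{\partial \beta _s} \log \det (\varvec{X}^\top \varvec{W}\varvec{X})
  = \mathrm{tr}\left\{ (\varvec{X}^\top \varvec{W}\varvec{X})^{-1}
      \varvec{X}^\top \varvec{W}\widetilde{\varvec{W}}_s \varvec{X} \right\}
  = \mathrm{tr}\left( \varvec{P}\widetilde{\varvec{W}}_s \right) .
\]
% Six-line chain matching the line references in the proof.
\begin{align*}
\left| \mathrm{tr}\left( \varvec{P}\widetilde{\varvec{W}}_s \right) \right|
  &= \Bigl| \sum _{t=1}^{n} P_{tt} \, \widetilde{w}^{(s)}_{t} \Bigr| \\
  &\le \sum _{t=1}^{n} \bigl| P_{tt} \bigr| \, \bigl| \widetilde{w}^{(s)}_{t} \bigr| \\
  &= \sum _{t=1}^{n} P_{tt} \, \bigl| \widetilde{w}^{(s)}_{t} \bigr| \\
  &\le \max _{t} \bigl| \widetilde{w}^{(s)}_{t} \bigr| \, \mathrm{tr}(\varvec{P}) \\
  &= p \, \max _{t} \, |x_{ts}| \, \bigl| 1 - 2\mu _t^{(f)}(\varvec{\beta }) \bigr| \\
  &\le p \, \max _{t} |x_{ts}| .
\end{align*}
```

Under these assumptions each partial derivative of the logarithm of the Jeffreys’ prior is at most \(p \max _t |x_{ts}| / 2\) in absolute value, and taking the Euclidean norm over the p components gives the bound \(p^{3/2} \max _{s,t} |x_{st}| / 2\) used in Sect. 7.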

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Sterzinger, P., Kosmidis, I. Maximum softly-penalized likelihood for mixed effects logistic regression. Stat Comput 33, 53 (2023). https://doi.org/10.1007/s11222-023-10217-3

DOI: https://doi.org/10.1007/s11222-023-10217-3