Abstract

Herding is a deterministic algorithm used to generate data points regarded as random samples satisfying input moment conditions. This algorithm is based on a high-dimensional dynamical system and rooted in the maximum entropy principle of statistical inference. We propose an extension, entropic herding, which generates a sequence of distributions instead of points. We derived entropic herding from an optimization problem obtained using the maximum entropy principle. Using the proposed entropic herding algorithm as a framework, we discussed a closer connection between the herding and maximum entropy principle. Specifically, we interpreted the original herding algorithm as a tractable version of the entropic herding, the ideal output distribution of which is mathematically represented. We further discussed how the complex behavior of the herding algorithm contributes to optimization. We argued that the proposed entropic herding algorithm extends the herding to probabilistic modeling. In contrast to the original herding, the entropic herding can generate a smooth distribution such that both efficient probability density calculation and sample generation become possible. To demonstrate the viability of these arguments in this study, numerical experiments were conducted, including a comparison with other conventional methods, on both synthetic and real data.


1 Introduction

In a scientific study, we often collect data on the object of study; however, complete information about it cannot be obtained. Therefore, we often have to perform statistical inference based on the available data, assuming a certain background distribution. One popular approach to statistical inference consists of adopting the distribution with the largest uncertainty that is consistent with the available information. This is referred to as the maximum entropy principle (Jaynes 1957) because entropy is often used to measure uncertainty.

Specifically, for a distribution that has a probability mass (or density) function p(x), the (differential) entropy is defined as

\[ H(p)=-{\mathbb {E}}_{p}\left[ \log p(x)\right] , \tag{1} \]

where \({\mathbb {E}}_p\) denotes the expectation over p. Assume that the collected data are a set of averages \(\mu _m\) of feature values \(\varphi _m(x)\in {\mathbb {R}}\) for \(m\in {\mathcal {M}}\), where \({\mathcal {M}}\) is a set of indices. Based on the maximum entropy principle, we make the weakest possible assumption on the probability distribution p, namely that the expectations of the feature values \({\mathbb {E}}_{p}\left[ \varphi _m(x)\right] \) are equal to the given values \(\mu _m\). Thus, the maximum entropy principle can be explicitly represented as the following optimization problem:

\[ \max _{p}\ H(p) \tag{2} \]

\[ \text {subject to}\quad {\mathbb {E}}_{p}\left[ \varphi _m(x)\right] =\mu _m\quad \forall m\in {\mathcal {M}}. \tag{3} \]

We can also represent it as the equivalent minimization problem \(\min _p \textrm{KL}({p}\Vert {q})\) with the same equality constraints when q is the uniform distribution, where \(\textrm{KL}({p}\Vert {q})\equiv {\mathbb {E}}_{p}\left[ \log (p(x)/q(x))\right] \) denotes the Kullback–Leibler (KL) divergence.
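The equivalence between entropy maximization and KL minimization can be checked numerically on a finite state space. The following is a minimal sketch, assuming a uniform reference distribution; the helper names `entropy` and `kl_divergence` and the example distribution are illustrative and not part of the original study.

```python
import numpy as np

def entropy(p):
    """Shannon entropy H(p) = -E_p[log p(x)] of a discrete distribution (natural log)."""
    p = np.asarray(p, dtype=float)
    nz = p > 0  # the term 0 * log 0 is taken as 0
    return float(-np.sum(p[nz] * np.log(p[nz])))

def kl_divergence(p, q):
    """KL(p || q) = E_p[log(p(x) / q(x))], assuming q > 0 wherever p > 0."""
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    nz = p > 0
    return float(np.sum(p[nz] * (np.log(p[nz]) - np.log(q[nz]))))

# Illustrative distribution on K = 4 states and a uniform reference (assumption).
p = np.array([0.1, 0.2, 0.3, 0.4])
u = np.full(4, 0.25)

# KL(p || u) = log K - H(p), so maximizing H(p) is the same as minimizing KL(p || u).
gap = kl_divergence(p, u) - (np.log(4) - entropy(p))
```

Because \(\textrm{KL}({p}\Vert {u})\) and \(-H(p)\) differ only by the constant \(\log K\), both problems share the same optimizer under the same constraints.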

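The distribution p that the maximum entropy principle adopts is known as the Gibbs distribution:

\[ \pi _{\varvec{\theta }}(x)=\frac{1}{Z_{\varvec{\theta }}}\exp \left( -\sum _{m\in {\mathcal {M}}}\theta _m\varphi _m(x)\right) , \tag{4} \]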

where \({\varvec{\theta }}\) is the vector consisting of parameters \(\theta _m\) for \(m\in {\mathcal {M}}\), and \(Z_{\varvec{\theta }}\) is the coefficient that makes the total probability mass one (refer to Chen and Welling 2016 for further details). The parameters can be chosen by maximizing the likelihood of the data. However, if the distribution is defined in a high-dimensional or continuous space, parameter learning becomes difficult because approximations of the averages over the distribution are required.
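To make the parameter learning step concrete, the following sketch fits a Gibbs distribution on a small finite grid, where the expectations can be computed exactly. It assumes the sign convention \(\pi _{\varvec{\theta }}(x)\propto \exp (-\sum _m\theta _m\varphi _m(x))\); the grid, the two features, the learning rate, and the function names are hypothetical choices for illustration only.

```python
import numpy as np

def gibbs(theta, feats):
    """Gibbs distribution pi_theta(x) proportional to exp(-sum_m theta_m phi_m(x))."""
    logits = -feats @ theta
    logits -= logits.max()            # stabilize the exponentials
    w = np.exp(logits)
    return w / w.sum()

def fit_gibbs(mu, feats, lr=0.5, n_iter=5000):
    """Gradient descent on the convex negative log-likelihood
    theta . mu + log Z_theta, whose gradient is mu - E_pi[phi]."""
    theta = np.zeros(feats.shape[1])
    for _ in range(n_iter):
        pi = gibbs(theta, feats)
        theta -= lr * (mu - pi @ feats)
    return theta

x = np.linspace(-1.0, 1.0, 41)            # hypothetical finite state space
feats = np.stack([x, x**2], axis=1)       # phi_1(x) = x, phi_2(x) = x^2
theta_true = np.array([1.0, 2.0])
mu = gibbs(theta_true, feats) @ feats     # target feature means

theta_hat = fit_gibbs(mu, feats)
moment_error = np.abs(gibbs(theta_hat, feats) @ feats - mu).max()
```

Each gradient step needs the model expectation `pi @ feats`. On this grid it is an exact sum over 41 states; in a high-dimensional or continuous space it must be approximated, which is the difficulty mentioned above.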

The herding algorithm (Welling 2009b; Welling and Chen 2010) is another method that can be used to avoid parameter learning. This algorithm, which is represented as a dynamical system in a high-dimensional space, deterministically generates an apparently random sequence of data, where the average of feature values converges to the input target value \(\mu _m\). The generated sequence is treated as a set of pseudo-samples generated from the background distribution in the following analysis. Thus, we can bypass the difficult step of parameter learning of the Gibbs distribution model. A study by Welling (2009b) describes its motivation concerning the maximum entropy principle.

In this study, we propose an extension of herding, which is called the entropic herding. We use the proposed entropic herding as a framework to analyze the close connection between the herding and maximum entropy principle. The entropic herding outputs a sequence of distributions instead of points that greedily minimize the target function obtained from an explicit representation of the maximum entropy principle. The target function is composed of the error term of the average feature value and entropy term. We mathematically analyze the minimizer, which can be regarded as the ideal output of the entropic herding. We also argue that the original herding is obtained as a tractable version of the entropic herding and discuss the inherent characteristics of the herding concerning its complex behavior.

We further discuss the advantages of the proposed entropic herding, compared to the original, which we call the point herding. The advantages include the smoothness of the output distribution, availability of model validation based on the likelihood, and feasibility of random sampling from the output distribution.

This paper is organized as follows: We first introduce the original herding algorithm and notations in Sect. 2. Then, we derive the proposed entropic herding in Sect. 3. Using it as a framework, we discuss the relationship among the entropic herding, original herding, and maximum entropy principle in Sect. 4. In particular, in Sect. 4.5, we discuss the advantages of the proposed entropic herding over the point herding. In Sect. 5, we show numerical examples using synthetic and real data. In Sect. 6, we further discuss its applications, its relationship to other existing methods, and future perspectives. Finally, we conclude our study in Sect. 7.

2 Herding

Let \({\mathcal {X}}\) be a set of possible data points. Let us consider a set of feature functions \(\varphi _m:{\mathcal {X}}\rightarrow {\mathbb {R}}\) indexed with \(m\in {\mathcal {M}}\), where \({\mathcal {M}}\) is the set of indices. Let M be the size of \({\mathcal {M}}\), and let the bold symbols denote M-dimensional vectors with indices in \({\mathcal {M}}\). Let \(\mu _m\) be the input target value of the feature mean, which can be obtained from the empirical mean of the features over the collected samples. The herding generates a sequence of points \(x^{(T)}\in {\mathcal {X}}\) for \(T=1,2,\ldots ,T_{\text {max}}\) according to the following equations:

\[ x^{(T)}=\mathop {\mathrm {arg\,max}}\limits _{x\in {\mathcal {X}}}\sum _{m\in {\mathcal {M}}}w_m^{(T-1)}\varphi _m(x), \tag{5} \]

\[ w_m^{(T)}=w_m^{(T-1)}+\mu _m-\varphi _m(x^{(T)}), \tag{6} \]

where \(w_m^{(T)}\) are auxiliary weight variables, starting from initial values \(w_m^{(0)}\). These equations can be regarded as a dynamical system for \(w_m^{(T)}\) in M-dimensional space. The average of the features over the generated sequence converges as

\[ \frac{1}{T_{\text {max}}}\sum _{T=1}^{T_{\text {max}}}\varphi _m(x^{(T)})\rightarrow \mu _m \tag{7} \]

at the rate of \(O(1/T_{\text {max}})\) (Welling 2009b). Therefore, the generated sequence reflecting the given information can be used as a set of pseudo-samples from the background distribution. This convergence is derived using equation \(w_m^{(T_{\text {max}})}-w_m^{(0)}=T_{\text {max}}\mu _m-\sum _{T=1}^{T_{\text {max}}}\varphi _m(x^{(T)})\) and the boundedness of \({\varvec{w}}^{(T_{\text {max}})}\). The equation is obtained by summing both sides of Eq. (6) for \(T=1,\ldots ,T_{\text {max}}\). The boundedness of \({\varvec{w}}^{(T_{\text {max}})}\) is guaranteed under some mild assumptions using the optimality of \(x^{(T)}\) in the maximization of Eq. (5) (Welling 2009b).
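The iteration and the convergence argument can be illustrated with a short sketch on a finite state space. It assumes the standard herding form, in which the point maximizing \(\sum _m w_m\varphi _m(x)\) is selected and the weights are then updated by \(\mu _m-\varphi _m(x)\); the grid, the two features, and the uniform target are illustrative assumptions rather than settings from this study.

```python
import numpy as np

def point_herding(mu, feats, n_steps, w0=None):
    """Herding on a finite state space.
    feats[i] holds the feature vector phi(x_i); returns chosen indices and final weights."""
    w = np.zeros_like(mu) if w0 is None else np.array(w0, dtype=float)
    chosen = np.empty(n_steps, dtype=int)
    for t in range(n_steps):
        i = int(np.argmax(feats @ w))   # x^(T) = argmax_x sum_m w_m^(T-1) phi_m(x)
        w = w + mu - feats[i]           # w^(T) = w^(T-1) + mu - phi(x^(T))
        chosen[t] = i
    return chosen, w

x = np.linspace(-1.0, 1.0, 21)
feats = np.stack([x, x**2], axis=1)
p_target = np.full(x.size, 1.0 / x.size)   # illustrative target: uniform on the grid
mu = p_target @ feats

T = 2000
chosen, w_final = point_herding(mu, feats, T)
avg = feats[chosen].mean(axis=0)
err = np.abs(avg - mu).max()               # moment error after T steps
```

With \({\varvec{w}}^{(0)}={\varvec{0}}\), the moment error equals \(\Vert {\varvec{w}}^{(T_{\text {max}})}\Vert /T_{\text {max}}\) componentwise, so a bounded weight vector directly yields the \(O(1/T_{\text {max}})\) rate.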

The probabilistic model (4) is also regarded as a Markov random field (MRF). The herding can be extended to the case in which only a subset of variables in an MRF is observed (Welling 2009a). Furthermore, it has been combined with the kernel method to generate a pseudo-sample sequence for an arbitrary distribution (Chen et al 2010). The steps of the herding can be applied to the update steps of Gibbs sampling (Chen et al 2016), and the convergence analysis is provided by Yamashita and Suzuki (2019).

3 Entropic herding and its target function representing the maximum entropy principle

In this section, we derive the proposed algorithm, which we refer to as the entropic herding. We introduce the target function in Sect. 3.1 and derive the algorithm from its optimization in Sect. 3.2.

Before deriving the proposed algorithm, we first present its overview. Let us suppose the same situation as in Sect. 2. For simplicity, we assume that \({\mathcal {X}}\) is continuous, although the arguments also hold when \({\mathcal {X}}\) is discrete. Let \(\varphi _m\) be the feature functions and \(\mu _m\) be the input target value of the feature mean. Additionally, two parameters, the step size \(\varepsilon ^{(T)}\) and the scale parameter \(\Lambda _m\) for each condition \(m\in {\mathcal {M}}\), are introduced to the algorithm. As described below, \(\Lambda _m\) also controls the penalty for violating the condition \({\mathbb {E}}_{p}\left[ \varphi _m\right] =\mu _m\). The proposed algorithm is an iterative process similar to the original herding; it also maintains time-varying weights \(a_m\) for each feature. Each iteration of the proposed algorithm, indexed with T, is composed of two steps as in the herding: the first step solves an optimization problem, and the second updates the parameters based on the solution. The proposed algorithm outputs a sequence of distributions \((r^{(T)})\) instead of the points output by the original herding. The two steps in each time step, which will be derived later, are represented as follows:

\[ r^{(T)}=\mathop {\mathrm {arg\,min}}\limits _{q\in {\mathcal {Q}}}\left( \sum _{m\in {\mathcal {M}}}a_m^{(T-1)}\eta _m(q)\right) -H(q), \tag{8} \]

\[ a_m^{(T)}=a_m^{(T-1)}+\varepsilon ^{(T)}\left( \Lambda _m\left( \eta _m(r^{(T)})-\mu _m\right) -a_m^{(T-1)}\right) , \tag{9} \]

where \({\mathcal {Q}}\) is the set of candidate distributions of the output for each step and \(\eta _m(q)\equiv {\mathbb {E}}_{q}\left[ \varphi _m(x)\right] \) is the feature mean for the distribution q. The pseudocode of the algorithm is provided in Algorithm 1. The exact solution for the optimization problem Eq. (8) sometimes becomes computationally expensive. Thus, as will be described later, we can modify the algorithm by changing the set of candidate distributions \({\mathcal {Q}}\) and optimization method to reduce the computational cost, allowing a suboptimal solution for Eq. (8).

3.1 Target function

The proposed procedure summarized above is derived from the minimization of a target function as presented below.

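Let us consider the problem of minimizing the following function:

\[ {\mathcal {L}}(p)=\frac{1}{2}\sum _{m\in {\mathcal {M}}}\Lambda _m\left( \eta _m(p)-\mu _m\right) ^2-H(p). \tag{10} \]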

This problem minimizes the difference between feature means over p and the target vector, and simultaneously maximizes the entropy of p. \(\Lambda _m\) can be regarded as the weights for moment conditions \(\eta _m(p)=\mu _m\). When \(\Lambda _m\rightarrow +\infty \) for all \(m\in {\mathcal {M}}\), the problem becomes an entropy maximization problem that requires a solution to satisfy the moment condition exactly. Thus, this can be interpreted as a relaxed form of the maximum entropy principle. Function \({\mathcal {L}}\) is convex because the negated entropy function \(-H(p)\) is convex.

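Let \(\pi _{\varvec{\theta }}\) be the Gibbs distribution, whose density function is defined as Eq. (4). Suppose that there exists \({{\varvec{\theta }}^*}\) that satisfies the following equations:

\[ \theta ^*_m=\Lambda _m\left( \eta _m(\pi _{{\varvec{\theta }}^*})-\mu _m\right) \quad \forall m\in {\mathcal {M}}. \tag{11} \]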

The conditions for the solution existence are provided in “Appendix C”. The functional derivative \(\frac{\delta {\mathcal {L}}}{\delta p}(x)\) of \({\mathcal {L}}\) at \(\pi _{{\varvec{\theta }}^*}\) is obtained as follows:

Suppose that we perturb the distribution \(\pi _{{\varvec{\theta }}^*}\) to p. Then, because \(\int _{{\mathcal {X}}}(\pi _{{\varvec{\theta }}^*}(x)-p(x))dx=1-1=0\), the inner product \(\langle \frac{\delta {\mathcal {L}}}{\delta p}, p-\pi _{{\varvec{\theta }}^*}\rangle \) becomes zero, where the inner product is defined as \(\langle f, g\rangle =\int _{{\mathcal {X}}} f(x)g(x)dx\). Therefore, \(\pi _{{\varvec{\theta }}^*}\) is the optimal distribution for the problem owing to the convexity of \({\mathcal {L}}\). If \(\Lambda _m\) is sufficiently large, the feature mean \(\eta _m(\pi _{{\varvec{\theta }}^*})\) for the optimal distribution is close to the target value \(\mu _m\) from Eq. (11).
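The vanishing inner product relies on the functional derivative being constant in x. A sketch of this step follows, assuming that the target function has the form \({\mathcal {L}}(p)=\frac{1}{2}\sum _m\Lambda _m(\eta _m(p)-\mu _m)^2-H(p)\), that the Gibbs density is \(\pi _{\varvec{\theta }}(x)=\exp (-\sum _m\theta _m\varphi _m(x))/Z_{\varvec{\theta }}\), and that \({{\varvec{\theta }}^*}\) satisfies \(\theta ^*_m=\Lambda _m(\eta _m(\pi _{{\varvec{\theta }}^*})-\mu _m)\); these forms are inferred from the surrounding definitions.

\[
\begin{aligned}
\frac{\delta {\mathcal {L}}}{\delta p}(x)\Big |_{p=\pi _{{\varvec{\theta }}^*}}
&=\sum _{m\in {\mathcal {M}}}\Lambda _m\left( \eta _m(\pi _{{\varvec{\theta }}^*})-\mu _m\right) \varphi _m(x)+\log \pi _{{\varvec{\theta }}^*}(x)+1\\
&=\sum _{m\in {\mathcal {M}}}\theta ^*_m\varphi _m(x)-\sum _{m\in {\mathcal {M}}}\theta ^*_m\varphi _m(x)-\log Z_{{\varvec{\theta }}^*}+1\\
&=1-\log Z_{{\varvec{\theta }}^*}.
\end{aligned}
\]

Under these assumptions the derivative does not depend on x, so its inner product with \(p-\pi _{{\varvec{\theta }}^*}\) equals \((1-\log Z_{{\varvec{\theta }}^*})\int _{{\mathcal {X}}}(p(x)-\pi _{{\varvec{\theta }}^*}(x))dx=0\).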

3.2 Greedy minimization

Let us consider the following greedy construction of the solution for the minimization problem of \({\mathcal {L}}\). Let \(r^{(T)}\) be a distribution that is selected at time T and \(p^{(T)}\) be the distribution to be constructed as the weighted average of the sequence of distributions \(\{r^{(t)}\}_{t = 0}^{T}\):

where \(\rho ^{(t)}\) are given as a fixed sequence of parameters. We greedily construct tentative solution \(p^{(T)}\) by choosing \(r^{(T)}\) for each time step \(T=1,\ldots ,T_{\text {max}}\) by minimizing the loss value \({\mathcal {L}}^{(T)}\equiv {\mathcal {L}}(p^{(T)})\) for

where \(\rho ^{t,T}=\rho ^{(t)}/(\sum _{t=0}^{T}\rho ^{(t)})\). Feature mean \(\eta _m(p^{(T)})\) for each step is also represented as a weighted sum:

For particular types of weight sequences \((\rho ^{(T)})\), the feature means can be iteratively computed based on previous time instances as follows:

For example, if the coefficients geometrically decay at rate \(\rho \in (0,1)\) as \(\rho ^{(T)}=\rho ^{T_{\text {max}}-T}\) for \(T>0\) and \(\rho ^{(0)} = \rho ^{T_{\text {max}}} / (1-\rho )\), we can update the feature mean with \(\varepsilon ^{(T)}=1-\rho \). If \(\rho ^{(T)}\) is constant over T, then we set \(\varepsilon ^{(T)}=\frac{1}{T+1}\).
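Both choices of step size can be verified numerically. The sketch below assumes the iterative form \(\eta \leftarrow (1-\varepsilon ^{(T)})\eta +\varepsilon ^{(T)}\eta _m(r^{(T)})\) for the feature mean; the random values stand in for \(\eta _m(r^{(t)})\), and the function name `running_mean` is illustrative.

```python
import numpy as np

def running_mean(values, eps):
    """Apply m <- (1 - eps_T) * m + eps_T * v_T for T = 1..T_max, starting from m = v_0."""
    m = values[0]
    for T in range(1, len(values)):
        m = (1.0 - eps[T]) * m + eps[T] * values[T]
    return m

rng = np.random.default_rng(0)
T_max = 50
values = rng.normal(size=T_max + 1)        # stand-ins for eta_m(r^(t)), t = 0..T_max
steps = np.arange(T_max + 1)

# Constant weights rho^(t): eps^(T) = 1 / (T + 1) reproduces the plain average.
uniform_result = running_mean(values, 1.0 / (steps + 1))

# Geometric weights: rho^(t) = rho^(T_max - t) for t > 0, rho^(0) = rho^T_max / (1 - rho).
rho = 0.9
weights = rho ** (T_max - steps).astype(float)
weights[0] = rho**T_max / (1.0 - rho)
geometric_direct = np.sum(weights * values) / np.sum(weights)
geometric_result = running_mean(values, np.full(T_max + 1, 1.0 - rho))
```

The special value of \(\rho ^{(0)}\) makes the partial sums \(\sum _{t=0}^{T}\rho ^{(t)}\) equal to \(\rho ^{T_{\text {max}}-T}/(1-\rho )\), which is why the normalized weight of the newest component is always \(1-\rho \).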

We approximate the entropy term \(H(p^{(T)})\) by the weighted sum of the components:

Similarly, the update equation for \(\tilde{H}\) is obtained as

The minimization problem to be solved at time T is given as follows:

From the convexity of negated entropy, \(\tilde{H}^{(T)}\) is the lower bound of \(H(p^{(T)})\); hence, \(\tilde{{\mathcal {L}}}^{(T)}\) is the upper bound of the original target function \({\mathcal {L}}^{(T)}\).

The step of Eq. (8) is derived from the greedy construction of the solution that minimizes \(\tilde{{\mathcal {L}}}\) as follows. Using Eqs. (18) and (20), the target function can be rewritten as

For all T, let us define

Subsequently, \(\tilde{{\mathcal {L}}}^{(T)}\) also follows the update rule similar to Eq. (18) up to the linear term regarding \(\varepsilon ^{(T)}\):

If \(\varepsilon ^{(T)}\) is small, by neglecting the higher-order term \(O((\varepsilon ^{(T)})^2)\), the optimization problem at time T can be reduced to a minimization of \(F^{(T-1)}(q)\) for \(q\in {\mathcal {Q}}\), where

From Eq. (18) and (23), the coefficients \(a_m^{(T)}\) follow the update rule as

which is equivalent to Eq. (9).

In summary, the proposed algorithm is derived from the greedy construction of the solution of the optimization problem \(\min {\mathcal {L}}(q)\): it selects distribution \(r^{(T)}\) in each step by minimizing the tentative loss. The minimization can be reduced to the minimization of \(F^{(T-1)}(r^{(T)})\) that has a parametrized form, and the algorithm updates the parameters by calculating their temporal differences. The algorithm thus derived, referred to as the entropic herding, has a form similar to the original herding.
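The two-step iteration can be sketched as follows. This is not the procedure used in the experiments (which is described in “Appendix A”): here the optimization step is replaced by an exhaustive search over a finite grid of normal distributions \({\mathcal {N}}(m,s^2)\), the features are assumed to be \(x,x^2,x^3,x^4\), and the optimization and update steps are assumed to take the forms \(r^{(T)}=\arg \min _q\sum _m a_m^{(T-1)}\eta _m(q)-H(q)\) and \(a_m^{(T)}=a_m^{(T-1)}+\varepsilon ^{(T)}(\Lambda _m(\eta _m(r^{(T)})-\mu _m)-a_m^{(T-1)})\). All names and numerical settings are hypothetical.

```python
import numpy as np

def normal_features(m, s):
    """Feature means eta(q) of q = N(m, s^2) for phi(x) = (x, x^2, x^3, x^4)."""
    return np.stack([m, m**2 + s**2, m**3 + 3 * m * s**2,
                     m**4 + 6 * m**2 * s**2 + 3 * s**4], axis=-1)

def normal_entropy(s):
    """Differential entropy of N(m, s^2)."""
    return 0.5 * np.log(2.0 * np.pi * np.e * s**2)

def entropic_herding(mu, lam, cand_m, cand_s, n_steps):
    """Grid-search variant: Q is the finite set of N(m, s^2) on the candidate grid."""
    M, S = np.meshgrid(cand_m, cand_s, indexing="ij")
    eta_q = normal_features(M.ravel(), S.ravel())    # shape (n_candidates, 4)
    ent_q = normal_entropy(S.ravel())
    eta_p = eta_q[0].copy()          # r^(0): arbitrary initial component (assumption)
    a = lam * (eta_p - mu)           # initial weights consistent with r^(0)
    picks = [0]
    for T in range(1, n_steps + 1):
        k = int(np.argmin(eta_q @ a - ent_q))        # minimize sum_m a_m eta_m(q) - H(q)
        eps = 1.0 / (T + 1)                          # equal weights on all components
        a = a + eps * (lam * (eta_q[k] - mu) - a)    # weight update
        eta_p = (1.0 - eps) * eta_p + eps * eta_q[k] # feature mean of the mixture
        picks.append(k)
    return np.array(picks), a, eta_p

# Illustrative target moments: an equal mixture of N(-1, 0.5^2) and N(1, 0.5^2).
mu = 0.5 * (normal_features(-1.0, 0.5) + normal_features(1.0, 0.5))
lam = np.full(4, 10.0)
picks, a, eta_p = entropic_herding(mu, lam, np.linspace(-2, 2, 41),
                                   np.linspace(0.2, 1.5, 14), n_steps=200)
```

In this sketch the weights satisfy \(a_m^{(T)}=\Lambda _m(\eta _m(p^{(T)})-\mu _m)\) at every step, so only \({\varvec{a}}\) needs to be stored; the output mixture is recovered from `picks` with equal coefficients.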

4 Entropy maximization in herding

Let us further study the relationship between the entropic herding, the original herding, and the maximum entropy principle. First, in Sect. 4.1, we compare the entropic herding with the parameter learning of the Gibbs distribution in terms of the maximum entropy principle. We then discuss the similarities and differences between the two herding algorithms. By introducing a tractable version of the entropic herding by restricting the search space in Sect. 4.2, we show that it is an extension of the original herding algorithm in Sect. 4.3. We investigate the roles of each component of the two herding algorithms by comparing them in Sect. 4.4, and we compare them from an application perspective in Sect. 4.5.

4.1 Target function minimization and parameter learning of the Gibbs distribution

Figure 1 is a schematic of the relationship and difference between the entropic herding and parameter learning of the Gibbs distribution. Each point in the figure represents a probability distribution in the space of all distributions on \({\mathcal {X}}\). The vertical axis, which is represented by the dotted arrow, represents the entropy value.

If we fix the value of the moment vector \({\varvec{\mu }}\), we can find various distributions that satisfy all the moment conditions \(\eta _m(p)=\mu _m\) (\(\forall m\in {\mathcal {M}}\)), which are represented by the vertical dotted line. The top-most point of the line represents the distribution with the highest entropy value under the moment condition. Thus, for moment vector \({\varvec{\mu }}\), Gibbs distribution \(\pi _{\hat{\varvec{\theta }}}\) is obtained by entropy maximization (red arrow) whose search space is represented by the dotted line.

The family of Gibbs distributions represented as Eq. (4) are denoted by the black solid curve. We can also try to find \(\pi _{\hat{\varvec{\theta }}}\) by the parameter learning of \({\varvec{\theta }}\) represented by the green arrow along this curve.

The process of entropic herding, or more specifically, the sequence of tentative solutions \(p^{(T)}\), is represented by the arrowed blue curve. Contrary to the parameter learning of the Gibbs distribution, it approaches the target \(\pi _{\hat{\theta }}\) by minimizing target function \({\mathcal {L}}\) that simultaneously represents both moment condition and entropy maximization. The search space, which is represented by Eq. (15), is different from the above two.

4.2 Tractable entropic herding

We neglected \(O((\varepsilon ^{(T)})^2)\) terms in Eq. (26) because we are assuming that \(\varepsilon ^{(T)}\) is small. Then, we have

because \(\tilde{H}^{(T-1)}\le H(p^{(T-1)})\). If we can find the optimum distribution at time T, then \(F^{(T-1)}(r^{(T)})\) is less than \(F^{(T-1)}(p^{(T-1)})\) unless \(p^{(T-1)}\) is the optimum. Then, \(\tilde{{\mathcal {L}}}^{(T)}\) will be smaller than \(\tilde{{\mathcal {L}}}^{(T-1)}\). This corresponds to the theorem of the herding (Corollary, Welling 2009b Section 4) stating that \(\sum ((1/T_{\text {max}})\sum _{T=1}^{T_{\text {max}}}\varphi _m(x^{(T)})-\mu _m)^2\) is bounded if we can find the optimum \(x^{(T)}\) in each optimization (Eq. (5)).

Let \(\pi _{{\varvec{a}}^{(T-1)}}\) be the Gibbs distribution defined as Eq. (4) with parameters \({\varvec{\theta }}= {\varvec{a}}^{(T-1)}\). The optimization problem of minimizing \(F^{(T-1)}(q)\) is equivalent to minimizing KL divergence \(\textrm{KL}({q}\Vert {\pi _{{\varvec{a}}^{(T-1)}}})\), the solution of which is given by \(q=\pi _{{\varvec{a}}^{(T-1)}}\) as follows:

However, the Gibbs distribution is known to be difficult to handle in applications, for example in the calculation of expectations. Fortunately, a suboptimal distribution \(r^{(T)}\) may be sufficient for decreasing \(\tilde{{\mathcal {L}}}\), as in the tractable version of the herding introduced in Welling (2009a). Practically, we can perform the optimization on a restricted set of distributions, as in variational inference, and allow a suboptimal solution for the optimization step (Eq. (8)). Let \({\mathcal {Q}}\) denote the set of candidate distributions. We refer to this tractable version of the proposed algorithm as the entropic herding, in contrast to the algorithm using the exact solution \(r^{(T)}=\pi _{{\varvec{a}}^{(T-1)}}\).
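The equivalence between minimizing \(F^{(T-1)}\) and minimizing the KL divergence to the Gibbs distribution can be checked on a finite state space. The sketch assumes \(F(q)=\sum _m a_m\eta _m(q)-H(q)\) and \(\pi _{{\varvec{a}}}(x)\propto \exp (-\sum _m a_m\varphi _m(x))\), in which case \(F(q)=\textrm{KL}({q}\Vert {\pi _{{\varvec{a}}}})-\log Z_{{\varvec{a}}}\); the random feature table and weights are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
n_states, n_feats = 30, 3
feats = rng.normal(size=(n_states, n_feats))   # hypothetical feature table phi_m(x)
a = rng.normal(size=n_feats)                   # weights a_m^(T-1)

energy = feats @ a
log_Z = np.log(np.sum(np.exp(-energy)))
pi_a = np.exp(-energy - log_Z)                 # Gibbs distribution with theta = a

def F(q):
    """F(q) = sum_m a_m eta_m(q) - H(q) for a strictly positive discrete q."""
    return float(q @ energy + np.sum(q * np.log(q)))

def kl(q, p):
    """KL(q || p) for strictly positive discrete distributions."""
    return float(np.sum(q * (np.log(q) - np.log(p))))

q = rng.dirichlet(np.ones(n_states))           # arbitrary candidate distribution
identity_gap = F(q) - (kl(q, pi_a) - log_Z)
```

Since \(\log Z_{{\varvec{a}}}\) does not depend on q, any candidate set \({\mathcal {Q}}\) yields the member closest to \(\pi _{{\varvec{a}}^{(T-1)}}\) in this KL sense, which is the same criterion as in variational inference.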

4.3 Point herding

Subsequently, we show that the proposed algorithm can be interpreted as an extension of the original herding. Recall that, in the original herding in Sect. 2, the optimization problem to generate sample \(x^{(T)}\) and the update rule of weight \(w_m^{(T)}\) are represented in Eq. (5) and (6), respectively.

Let us consider the proposed algorithm with the candidate distribution set \({\mathcal {Q}}\) restricted to point distributions. Then, the distribution \(r^{(T)}\) is the point distribution at \(x^{(T)}\), which is obtained by solving

because the entropy term of the optimization problem Eq. (8) can be dropped. Let us further suppose that \(\varepsilon ^{(T)}=\frac{1}{T+1}\) and \(\Lambda _m\) are the same for all features. We introduce the variable transformation of weights as \({w'}_m^{(T+1)}=-\frac{T+1}{\Lambda _m}a_m^{(T)}\). Then, the above optimization problem is equivalent to Eq. (5) with relationship \(w_m={w'}_m\).

The update rule for \({w'}_m^{(T)}\) is

Because \(\eta _m(r^{(T)})=\varphi _m(x^{(T)})\) holds, this update rule is also equivalent to Eq. (6).

Therefore, the original herding can be interpreted as the tractable version of the proposed method by restricting \({\mathcal {Q}}\) to the point distributions. In the following, we refer to the original algorithm as point herding. In this case, weights \(\rho ^{(T)}\) in Eq. (15) are constant for all T because we set \(\varepsilon ^{(T)}=\frac{1}{T+1}\). This agrees with the fact that the point herding puts equal weights on the output sequence while taking the average of the features, the difference of which with the input moment is minimized (see Eq. (7)).

4.4 Diversification of output distributions by dynamic mixing in herding

The minimizer of Eq. (10) is Gibbs distribution \(\pi _{{{\varvec{\theta }}^*}}\), where \({{\varvec{\theta }}^*}\) is given by Eq. (11), but there is a gap between the distribution obtained from the tractable entropic herding and minimizer. This is because it solves the optimization problem with the approximate target function and by using sub-optimal greedy updates, as described in Sects. 3.2 and 4.2. Here, we discuss the inherent characteristics of the entropic herding to decrease the gap, which cannot be fully described as greedy optimization.

The target function for greedy optimization in the (tractable) entropic herding is \(\tilde{{\mathcal {L}}}\) (Eq. (21)). While deriving \(\tilde{{\mathcal {L}}}\) from \({\mathcal {L}}\), we introduced an approximation for the entropy term \(H(p^{(T)})\) by its lower bound \(\tilde{H}^{(T)}\), which is the weighted average of the entropy values of the components as defined in Eq. (19). The gap between \(H\) and \(\tilde{H}\) can be calculated using the following equations:

where \(H_{\rho }^{(T)}=-\sum _{t=0}^{T}\rho ^{t,T}\log \rho ^{t,T}\), \(H_{c}^{(T)}(x)=-\sum _{t=0}^{T}\frac{\rho ^{t,T}r^{(t)}(x)}{p^{(T)}(x)}\log \frac{\rho ^{t,T}r^{(t)}(x)}{p^{(T)}(x)}\). Then, the gap is represented by

If the coefficients \(\rho ^{t,T}\) are fixed, the gap is determined by \(H_{c}^{(T)}\). If this gap is positive, we have an additional decrease in the true target function \({\mathcal {L}}\) compared to the approximate target \(\tilde{{\mathcal {L}}}\) of the greedy minimization.

Suppose that \(t'\) is a random variable drawn with a probability of \(\rho ^{t',T}\), and x is drawn from \(r^{(t')}\). Then, \(H_{c}^{(T)}(x)\) can be interpreted as the average entropy of the conditional distribution of \(t'\), given x. If the probability weights assigned to x by components t, represented as \(\rho ^{t,T}r^{(t)}(x)\) are unbalanced over t, then \(H_{c}^{(T)}(x)\) is small such that gap \(H(p^{(T)})-\tilde{H}^{(T)}\) is large. Contrarily, if \(r^{(t)}\) are the same for all t such that \(r^{(t)}=p^{(T)}\), then \(H_{c}^{(T)}(x)=H_{\rho }^{(T)}\) for all x. Then, gap \(H(p^{(T)})-\tilde{H}^{(T)}\) becomes zero, which is the minimum value. That is, we achieve an additional decrease in \({\mathcal {L}}\) if the generated sequence of distributions \(r^{(t)}\) is diverse. This can happen if \({\varvec{a}}^{(t)}\) are diverse, as the optimization problems in Eq. (8), defined by the parameters \({\varvec{a}}^{(t)}\), become diverse. In the original herding, the weight dynamics is weakly chaotic, and thus, it can generate diverse samples. We also expect that the coupled system of Eqs. (8) and (9) in the high-dimensional space exhibits complex behavior as the original coupled system of the herding (Welling 2009b) does, and that it achieves diversity in \(\{{\varvec{a}}^{(t)}\}\) and \(\{r^{(t)}\}\). The extra decrease is bounded from above by \(H_{\rho }^{(T)}\), which usually increases as T increases. For example, if \(\rho ^{t,T}\) is constant over t, then \(H_{\rho }^{(T)}=\log (T+1)\).
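The relation between the gap and the diversity of the components can be checked on discrete distributions. The sketch below assumes the decomposition \(H(p^{(T)})-\tilde{H}^{(T)}=H_{\rho }^{(T)}-{\mathbb {E}}_{p^{(T)}}[H_{c}^{(T)}(x)]\), which follows from writing the joint entropy of \((t',x)\) in two ways; the random components are hypothetical.

```python
import numpy as np

def H(p):
    """Shannon entropy of a discrete distribution (natural log)."""
    p = p[p > 0]
    return float(-np.sum(p * np.log(p)))

def entropy_gap(rho, r):
    """Return H(p) - H_tilde, H_rho, and E_p[H_c] for the mixture p = sum_t rho_t r_t."""
    p = rho @ r                                    # mixture p^(T)
    H_tilde = float(rho @ np.array([H(rt) for rt in r]))
    post = (rho[:, None] * r) / p                  # conditional distribution of t given x
    H_c = -np.sum(post * np.log(post), axis=0)     # entropy of t given x, per state
    return H(p) - H_tilde, H(rho), float(p @ H_c)

rng = np.random.default_rng(1)
n_comp, n_states = 5, 12
rho = np.full(n_comp, 1.0 / n_comp)                # coefficients rho^{t,T}

r_diverse = rng.dirichlet(np.ones(n_states), size=n_comp)  # distinct components
gap, H_rho, mean_H_c = entropy_gap(rho, r_diverse)

r_same = np.tile(r_diverse[0], (n_comp, 1))        # identical components
gap_same, _, mean_H_c_same = entropy_gap(rho, r_same)
```

For identical components the conditional distribution of \(t'\) is \(\rho ^{t,T}\) for every x, so the gap vanishes; the more the components differ, the closer the gap gets to its upper bound \(H_{\rho }^{(T)}\).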

In summary, the proposed algorithm, which is a generalization of the herding, minimizes loss function \({\mathcal {L}}\) using the following two means:

(a) Explicit optimization: greedy minimization of \(\tilde{{\mathcal {L}}}\), which is the upper bound of \({\mathcal {L}}\), by solving Eq. (8) with the entropy term.

(b) Implicit diversification: extra decrease of \({\mathcal {L}}\) from the diversity of components \(r^{(T)}\) achieved by the complex behavior of the coupled dynamics of the optimization and the update step (Eqs. (8) and (9), respectively).

The complex behavior of the herding contributes to the optimization implicitly through an increase of gap \(H-\tilde{H}\) by diversification of the samples. In addition, the proposed entropic herding can improve the output through explicit entropy maximization.

Thus, we can generalize the concept of the herding by regarding it as an iterative algorithm having two components as described above.

4.5 Probabilistic modeling with entropic herding

The extension of the herding to the proposed entropic herding expands its application as follows.

The first important difference introduced by the extension is that the output becomes a mixture of probabilistic distributions. Thus, we can consider the use of the entropic herding in probabilistic modeling. Let \(\pi _{\hat{\varvec{\theta }}}\) be the distribution obtained from the maximum entropy principle. For each time step T, the tentative distribution \(p^{(T)}=\sum _{t=0}^{T}\rho ^{t,T}r^{(t)}(x)\) is obtained by minimizing \({\mathcal {L}}\), which represents the maximum entropy principle. Then, we can expect it to be close to \(\pi _{\hat{\varvec{\theta }}}\) and use it as probabilistic model \(p_{\text {output}}\) derived from the data. It should be noted that the model thus obtained \(p_{\text {output}}=p^{(T)}\) is different from \(\pi _{\hat{\varvec{\theta }}}\). It includes the difference between \(\pi _{\hat{\varvec{\theta }}}\) and minimizer \(\pi _{{{\varvec{\theta }}^*}}\) of \({\mathcal {L}}\) by the finiteness of weights \(\Lambda _m\) in Eq. (10) and the difference between \(\pi _{{\varvec{\theta }}^*}\) and \(p_{\text {output}}\) because of the inexact optimization. We can also obtain another model \(p_{\text {output}}\) by further aggregating tentative distributions \(p^{(T)}\), expecting additional diversification. For example, we can use average \(p_{\text {output}}=\frac{1}{T_{\text {max}}}\sum _{T=1}^{T_{\text {max}}}p^{(T)}\) as the output probabilistic model. This is again a mixture of output sequence \((r^{(T)})\) that only differs from \(p^{(T_{\text {max}})}\) in the coefficients. A more specific method for output aggregation is described in “Appendix A”.

If \({\mathcal {Q}}\) is set appropriately and the number of components is not significantly large, the probability density function of output \(p_{\text {output}}\) can be easily calculated. The availability of the density function value enables us to use likelihood-based model evaluation tools in statistics and machine learning, such as cross-validation. This can also be used to select the parameters of the algorithm such as \(\Lambda _m\).

If \({\mathcal {X}}\) is continuous, we cannot use the point herding to model the probability density because the sample points can only represent the non-smooth delta functions. Even if \({\mathcal {X}}\) is discrete, when the number of samples T is much smaller than the number of possible states \(|{\mathcal {X}}|\), the zero-probability weight should be assigned to a large fraction of states. The zero-probability weight causes infiniteness when the log-likelihood is calculated. Moreover, the probability mass can only take a multiple of 1/T, which causes inaccuracy, particularly for a state with a small probability mass. Many samples are required to obtain accurate probability mass values for likelihood-based methods. Conversely, the output of the entropic herding has sample efficiency because each output component can assign non-zero probability mass to many states. This is demonstrated by numerical examples in the next section.

Another important difference is that the entropic herding explicitly handles the entropy in each optimization step, not depending only on the complex behavior of herding. In each optimization step, because entropy term H(p) is included in the target function and the search space \({\mathcal {Q}}\) is extended from the point distribution, it can generate a distribution \(r^{(T)}\) that has a higher entropy value than the point distribution of point herding. Thus, we can expect that final collection \(p_{\text {output}}\) of the output also has a higher entropy value than that of the point herding. Further, we can control how it focuses on the entropy or moment information of the data by changing parameter \(\Lambda _m\) in the target function. While the point herding diversifies the sample points by its complex behavior, the optimization problem solved in each time step does not explicitly contain \(\Lambda _m\) such that the balance between entropy maximization and moment error minimization is not controlled.

Moreover, if we restrict candidate distribution set \({\mathcal {Q}}\) to be appropriately simple, we can easily obtain any number of random samples from \(p_{\text {output}}\) by sampling from \(r^{(t)}\) for each randomly sampled index t. This sample generation process can also be parallelized because each sample generation is independent. For example, we can use independent random variables following normal distributions or Bernoulli distributions as \({\mathcal {Q}}\), as shown in the numerical examples below.
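Density evaluation and sampling for such an output can be sketched as follows, assuming that \(p_{\text {output}}\) is a finite mixture of one-dimensional normal distributions. The three components and their coefficients are made-up values, and the function names are illustrative.

```python
import numpy as np

def sample_mixture(rng, weights, means, stds, n):
    """Draw n samples: pick a component index t with probability weights[t],
    then sample from the normal component r^(t)."""
    t = rng.choice(len(weights), size=n, p=weights)
    return rng.normal(means[t], stds[t])

def mixture_logpdf(x, weights, means, stds):
    """Log density of the normal mixture, computed with a stable log-sum-exp."""
    x = np.asarray(x, dtype=float)[..., None]
    log_comp = (np.log(weights) - 0.5 * np.log(2.0 * np.pi * stds**2)
                - 0.5 * ((x - means) / stds) ** 2)
    mx = log_comp.max(axis=-1, keepdims=True)
    return (mx + np.log(np.exp(log_comp - mx).sum(axis=-1, keepdims=True)))[..., 0]

# Hypothetical output mixture with three components.
weights = np.array([0.5, 0.3, 0.2])
means = np.array([-1.0, 0.5, 2.0])
stds = np.array([0.4, 0.6, 0.3])

rng = np.random.default_rng(0)
samples = sample_mixture(rng, weights, means, stds, 20000)

# The density integrates to one (Riemann sum on a fine grid).
grid = np.linspace(-6.0, 6.0, 12001)
dx = grid[1] - grid[0]
total_mass = np.sum(np.exp(mixture_logpdf(grid, weights, means, stds))) * dx

# Average log-likelihood of the samples, usable for likelihood-based validation.
mean_loglik = mixture_logpdf(samples, weights, means, stds).mean()
```

Each sample is drawn independently, so the sampling can be split across workers, and the cost of one density evaluation grows linearly with the number of components.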

Theoretically, the exact solution of problem Eq. (8) is obtained as \(\pi _{{\varvec{a}}^{(T-1)}}\). However, we can enjoy the advantages of the entropic herding described above when \({\mathcal {Q}}\) is restricted to simple, analytic, and smooth distributions, although the optimization performance is suboptimal.

In summary, the entropic herding has the merits of both the herding and probabilistic modeling using the distribution mixture.

5 Numerical examples

In this section, we present numerical examples of the entropic herding and its characteristics. We also present comparisons between the entropic herding and several conventional methods.

We show the numerical results for three target distributions. The first two distributions, the one-dimensional distribution in Sect. 5.1 and multi-dimensional distribution in Sects. 5.2–5.3 are synthetic, and the last distribution in Sect. 5.4 is from real data. A detailed description of the experimental procedure is provided in “Appendix A”. The definitions of the detailed parameters, not described here, are also provided in the “Appendix”.

Fig. 2 Output sequences of (a) the point herding and (b) the entropic herding for the one-dimensional bimodal distribution \(p_{\text {bi}}\). The horizontal axis represents the index of the step denoted by t in the text. The color represents the probability mass for each bin, where yellow corresponds to a larger mass. The probability mass values were normalized to [0, 1] in each plot before being colorized. Panels (c) and (d) show output sequences of the point herding and the entropic herding with stochastic optimization steps, respectively. (Color figure online)

5.1 One-dimensional bimodal distribution

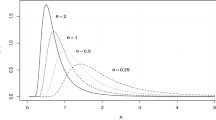

As a target distribution, we took a one-dimensional bimodal distribution

where Z is the normalizing factor. This distribution is indicated by the orange curves in each panel of Fig. 3. For this distribution, we consider a set of four polynomial feature functions for the herding as follows:

From the moment values \(\mu _m={\mathbb {E}}_{p_{\text {bi}}}\left[ \varphi _m(x)\right] \), the maximum entropy principle reproduces the target distribution \(p_{\text {bi}}\). Using this feature set, we ran the point herding and entropic herding. Figure 2 shows the time series of the generated sequence, and Fig. 3 shows the output compared to target distribution \(p_{\text {bi}}\). For the entropic herding, we set the candidate distribution set \({\mathcal {Q}}\) as

where \({\mathcal {N}}(\mu ,\sigma ^2)\) denotes a one-dimensional normal distribution with mean \(\mu \) and variance \(\sigma ^2\).

Figures 2a and 3a are for the point herding. We observed the periodic sequence, and this caused a large difference between the distributions around \(x=0\). The point herding exhibits complex dynamics of the high-dimensional weight vector that achieves random-like behavior even in the deterministic system. However, in this case, the dimension of the weight vector was only four. This low dimensionality of the system can be a cause of the periodic output.
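As a rough illustration of the point herding baseline discussed above, the following sketch runs the standard herding update (choose the point maximizing the weighted features, then move the weights toward the target moments) on a discretized line. Several pieces are assumptions made for illustration only: the features are taken to be \(\varphi _m(x)=x^m\) for \(m=1,\ldots ,4\), the bimodal density is a hypothetical stand-in for \(p_{\text {bi}}\), and the sign convention may differ from that of Eqs. (5) and (6).

```python
import numpy as np

def features(x):
    # Assumed polynomial features phi_m(x) = x**m for m = 1..4.
    return np.stack([x, x**2, x**3, x**4], axis=-1)

def point_herding(grid, mu, T):
    """Standard herding on a finite grid:
    x_t = argmax_x <w, phi(x)>, then w <- w + mu - phi(x_t)."""
    phi = features(grid)                 # shape (n_grid, 4)
    w = np.zeros(phi.shape[1])           # four-dimensional weight vector
    samples = np.empty(T)
    for t in range(T):
        k = np.argmax(phi @ w)           # deterministic optimization step
        samples[t] = grid[k]
        w = w + mu - phi[k]              # weight update
    return samples, w

grid = np.linspace(-3.0, 3.0, 601)
# Hypothetical bimodal stand-in for p_bi (not the density used in the paper).
density = np.exp(-grid**4 / 4 + grid**2)
density /= density.sum()
mu = density @ features(grid)            # target moments on the grid

T = 1000
samples, w = point_herding(grid, mu, T)
# Moment error of the empirical distribution of the herding output.
moment_gap = features(samples).mean(axis=0) - mu
```

Because the weight updates telescope, the moment error equals \(-w^{(T)}/T\) when the weights start at zero, so the error decays as 1/T whenever the weight vector stays bounded.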

In contrast, Figs. 2b and 3b show the results of the entropic herding. Although this trajectory is periodic as well, the output in each step is a distribution with a positive variance such that it can represent a more diverse distribution. The difference between the distributions was reduced, as shown in Fig. 3b.

Additionally, the periodic behavior of the point herding can be mitigated by introducing a stochastic factor into the system. Figures 2c and 3c show the results of the point herding with the stochastic Metropolis update (see “Appendix A” for details). We observed some improvements in the difference between the distributions. The stochastic update increases the diversity of samples, as described in (b) in Sect. 4.4. Thus, this algorithm is conceptually in line with the entropic herding, although not included in the proposed mathematical formulation. The entropic herding, which generates a sequence of distributions, can also be combined with a stochastic update. The results of this combination are shown in Figs. 2d and 3d, respectively. Clearly, the periodic behavior of the point herding is diversified. The improvements in the difference between the distributions are significant particularly for the point herding (Fig. 3a,c).

Fig. 3 Output distribution of (a) the point herding and (b) the entropic herding compared to the one-dimensional bimodal target distribution \(p_{\text {bi}}\). The horizontal axis represents space \({\mathcal {X}}={\mathbb {R}}\). The vertical axis represents the probability mass of each bin. Panels (c) and (d) show the output distributions of the point herding and entropic herding with stochastic optimization steps, respectively. The length of the output sequence used in the plot was \(T_{output}=1000\) (a, c) or \(T_{output}=100\) (b, d)

5.2 Boltzmann machine

Next, we present a numerical example of the entropic herding for a higher dimensional distribution. We consider the Boltzmann machine with \(N=10\) variables. The state vector was \({{\varvec{x}}}=(x_1,\ldots ,x_N)^{\top }\), where \(x_i\in \{-1,+1\}\) for all \(i\in \{1,\ldots , N\}\). The target distribution is defined as follows:

where \(W_{ij}\) are the randomly drawn coupling weights, and Z is the normalizing factor. For simplicity, we did not include bias terms. For this distribution, we used a set of feature functions \(\{\varphi _{ij} \mid i<j\}\), as follows:

The dimension of the weight vector was \(N(N-1)/2\). With this feature set, we ran the entropic herding and obtained the output sequence of 320 distributions. The input target value \({\varvec{\mu }}\) was obtained from feature mean \({\mathbb {E}}_{p_{\text {BM}}}\left[ \varphi _{ij}\right] \) calculated with definition Eq. (48). We used candidate distribution set \({\mathcal {Q}}\), defined as the set of the following distributions:

where \(p_i\in [0, 1]\) are the parameters and \(x_1,\ldots , x_N\) are independent. We also generated 320 identically and independently distributed random samples from target \(p_{\text {BM}}\) for comparison.
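The setup above is small enough to enumerate exactly. The sketch below computes the target feature means over all 1,024 states and the feature means and entropy of an independent-binary candidate. It assumes the pairwise features \(\varphi _{ij}({{\varvec{x}}})=x_ix_j\) and a target of the form \(p_{\text {BM}}({{\varvec{x}}})\propto \exp (\sum _{i<j}W_{ij}x_ix_j)\); the sign convention and the coupling values drawn here are illustrative assumptions, not the settings of the experiment.

```python
import itertools
import numpy as np

N = 10
rng = np.random.default_rng(0)
# Hypothetical random couplings; only the upper triangle (i < j) is used.
W = np.triu(rng.normal(scale=0.3, size=(N, N)), k=1)

# All 2**N states with entries in {-1, +1}.
states = np.array(list(itertools.product([-1, 1], repeat=N)))   # (1024, 10)
iu, ju = np.triu_indices(N, k=1)
phi = states[:, iu] * states[:, ju]      # assumed features x_i * x_j, (1024, 45)

# Assumed target: p_BM(x) proportional to exp(sum_{i<j} W_ij x_i x_j).
logits = phi @ W[iu, ju]
p_bm = np.exp(logits - logits.max())
p_bm /= p_bm.sum()
mu = p_bm @ phi                          # target feature means

def candidate_moments_and_entropy(p):
    """Independent x_i with P(x_i = +1) = p_i.
    Then E[x_i x_j] = m_i m_j with m_i = 2 p_i - 1, and the entropy is a sum
    of binary entropies."""
    m = 2.0 * p - 1.0
    eta = m[iu] * m[ju]
    pc = np.clip(p, 1e-12, 1 - 1e-12)    # avoid log(0) at the boundary
    entropy = -np.sum(pc * np.log(pc) + (1 - pc) * np.log(1 - pc))
    return eta, entropy
```

The closed forms for the candidate are what keep each optimization step cheap: neither the feature means nor the entropy of a candidate requires a sum over the 1,024 states.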

Figure 4 is a scatter plot comparing the probability mass between the output and target distribution for each state. Figure 4a shows the result of the empirical distribution of random samples. The Boltzmann machine had 1,024 states for \(N=10\), but we generated only 320 samples in this experiment. Therefore, the mass values for the empirical distribution had discrete values. Furthermore, there were many states with mass values of zero. There was a large difference in mass value between the empirical distribution and target distribution.

Contrarily, Fig. 4b shows the results of the entropic herding. As in the above example for bimodal distribution, each output of the entropic herding can represent a more diverse distribution than a single sample. For most of the states that have large probability weights, the difference in mass value was within a factor of 1.5. This is significantly smaller than that in the case of random samples.

Fig. 4 Probability mass values of (a) the empirical distribution of random samples and (b) the output distribution of the entropic herding for the Boltzmann machine compared to the target distribution. Each state of the target Boltzmann machine is represented by a point. The states with zero probability mass are represented by a small value of \(10^{-5}\) and by red plus signs. The vertical axis represents the probability mass of the obtained distribution, whereas the horizontal axis represents that of the target distribution. A logarithmic scale was used for both axes. A point on the dashed diagonal line indicates that the two probability masses are identical. A point between the two dotted lines indicates that the difference in mass value is within a factor of 1.5. The result with \(\lambda =13\) was chosen by model validation. The length of the output sequence used in the plot is \(T_{output}=320\). (Color figure online)

5.3 Model selection

Using the Boltzmann machine above, we present a numerical example of the dependency of parameter choice on output and model selection for the entropic herding. In the experiment, \(\Lambda _m\) for all m was proportional to the scalar parameter \(\lambda \). Figure 5 shows the moment error and entropy of the output distribution for different values of \(\lambda \) and number of samples. The error is measured by the sum squared error between the feature mean of the output and target distribution. The results of the identical number of samples are represented by points connected by black broken lines. By comparing these results, we observe that the error in the feature means mostly decreases with increasing \(\lambda \). Conversely, we can obtain a more diverse distribution with a large entropy value by decreasing \(\lambda \). Therefore, there is a trade-off between accuracy and diversity when choosing parameter values.

Fig. 5 Moment error and the entropy value of the output distribution \(p_{\text {output}}\) for various values of weight parameter \(\lambda \) and output length \(T_{\text {output}}\in \{20,40,80,160,320\}\). The mean values of 10 trials are shown, and the standard errors of the mean are represented by error bars. The horizontal axis represents the sum squared error of the feature on a logarithmic scale, which is defined as \(SSE=\sum _m(\eta _m(p_{\text {output}})-\mu _m)^2\). The vertical axis represents the entropy value, \(H(p_{\text {output}})\). The horizontal dashed line represents the entropy of target \(p_{\text {BM}}\). The results for each \(\lambda \) are represented as points with the same color and are connected by lines. The points corresponding to identical \(T_{\text {output}}\) are connected by black lines. (Color figure online)

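We can compare the output for various parameters by comparing the KL-divergence

\[ \textrm{KL}({p_{\text {BM}}}\Vert {p_{\text {output}}})=\sum _{{{\varvec{x}}}}p_{\text {BM}}({{\varvec{x}}})\log \frac{p_{\text {BM}}({{\varvec{x}}})}{p_{\text {output}}({{\varvec{x}}})} \]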

between target distribution \(p_{\text {BM}}\) and output distribution \(p_{\text {output}}\), where value \(p_{\text {output}}(x)\) can be easily evaluated for each x. Note that if we have a validation dataset instead of the target distribution, we can also compare the negative log-likelihood for the validation set. Figure 6 shows the KL-divergence for various values of \(\lambda \) and the number of samples. Clearly, optimal \(\lambda \) depends on the number of samples.
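Since \(p_{\text {output}}\) is a uniform mixture of independent-binary components, its mass at every state is available in closed form, and the KL-divergence \(\sum _x p(x)\log (p(x)/q(x))\) can be evaluated by direct summation when the state space is small. The sketch below illustrates this; the function names and the array layout (one row of success probabilities per component) are assumptions for illustration.

```python
import itertools
import numpy as np

def mixture_pmf(states, P):
    """Mass function of a uniform mixture of independent-binary components.

    states: (S, N) array with entries in {-1, +1}
    P:      (T, N) array, P[t, i] = probability that x_i = +1 under component t
            (entries strictly between 0 and 1)
    """
    up = (states == 1).astype(float)                 # (S, N)
    down = 1.0 - up
    # log q_t(x) = sum_i [x_i=+1] log p_ti + [x_i=-1] log(1 - p_ti)
    log_q = up @ np.log(P).T + down @ np.log1p(-P).T  # (S, T)
    return np.exp(log_q).mean(axis=1)

def kl_divergence(p, q):
    """KL(p || q) over a finite state space; states with p = 0 contribute 0."""
    mask = p > 0
    return float(np.sum(p[mask] * (np.log(p[mask]) - np.log(q[mask]))))

# Small usage example with hypothetical parameters.
rng = np.random.default_rng(1)
states = np.array(list(itertools.product([-1, 1], repeat=4)))
P = rng.uniform(0.1, 0.9, size=(5, 4))
p_out = mixture_pmf(states, P)
p_target = np.full(len(states), 1.0 / len(states))
kl_value = kl_divergence(p_target, p_out)
```

With a validation dataset instead of a known target, the same `mixture_pmf` values could be used to compute the negative log-likelihood mentioned above.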

5.4 UCI wine quality dataset

Finally, we present an example of an application of the entropic herding to real data. We used a wine quality dataset (Cortez et al 2009) from the UCI data repository (see Notes). It was composed of 11 physicochemical features of 4,898 wines. The wines are classified into red and white, which have different distributions of feature values. We applied some pre-processing to the data, including log-transformation and z-score standardization. A summary of the features and pre-processing methods is provided in Table 1.

A simple model for this distribution is a multivariate normal distribution, defined as follows:

where \({{\varvec{x}}}=(x_1,\ldots ,x_{11})^{\top }\) is the vector of the feature values, and Z is the normalizing factor. Parameter W in this model can be easily estimated from the covariance matrix of the features. This model is unimodal and has a symmetry such that it is invariant under transformation \({{\varvec{x}}}\leftarrow -({{\varvec{x}}}-{\varvec{\mu }})+{\varvec{\mu }}\).

However, as shown in Fig. 7a, b, the distribution of this dataset is asymmetric, and bimodal distributions can be observed in the pair plots. To model such a distribution, we improved the model by introducing higher-order terms as follows:

The direct parameter estimation for this model is difficult. However, the entropic herding can be applied to draw inferences from the feature statistics of the data. We used the following feature set:

where each feature is defined as

We added \(\varphi ^{(1)}_i\) to control the mean of each variable. Using the maximum entropy principle with the moment values taken from an assumed background distribution Eq. (53), we reproduce the distribution where the coefficients corresponding to \(\varphi ^{(1)}_i\) are zero.

Fig. 6 KL-divergence \(\textrm{KL}({p_{\text {BM}}}\Vert {p_{\text {output}}})\) for different values of weight parameter \(\lambda \) and output length \(T_{\text {output}}\in \{20,40,80,160,320\}\). The mean values of 10 trials are shown, and the standard errors of the mean are represented by error bars. The horizontal and vertical axes represent \(T_{\text {output}}\) and KL-divergence, respectively. A logarithmic scale was used for both axes. The results for each \(\lambda \) are represented as points and are connected by lines. For \(\lambda =16\), we also fitted \(\log \textrm{KL}\) linearly on \(\log T\), and the fitted line is represented by the black dotted line

We used candidate distribution set \({\mathcal {Q}}\), defined as a set of the following distributions:

where \(\mu _i\in {\mathbb {R}}\) and \(\sigma _i > 0.01\) for all \(i\in \{1,\ldots ,11\}\) are the parameters and \(x_1,\ldots , x_{11}\) are independent.

Figure 7 shows the pair plot of the distribution of the dataset and distribution obtained from the entropic herding. We picked three variables in the plot for ease of comparison. The plot for all variables is presented in “Appendix B”. We observed that the distribution obtained well represents the characteristics of the dataset distribution. Particularly, the asymmetry and two modes were well represented by the output. Figure 8 illustrates some components in the output distribution \(p_{\text {output}}\).

Fig. 7 Pair plot of the distribution of the dataset and distribution obtained from the entropic herding. For ease of comparison, we selected three variables \((x_1, x_4, x_8)\), which are shown from top to bottom and from left to right in each panel. The plots for all variables are presented in the “Appendix”. The plots on the diagonal represent the marginal distribution of each variable. The vertical axis has its scale of probability mass and does not correspond to the scale shown in the plot. The other plots represent the marginal distribution of two variables for each. The horizontal and vertical axes represent the variables corresponding to rows and columns, respectively. The left (a, c) and right (b, d) panels correspond to the red and white wine, respectively. The top (a, b) and bottom (c, d) panels are for the dataset and entropic herding with \(T_{output}=500\), respectively. (Color figure online)

Fig. 8 Distribution of \(x_4\) (horizontal axis) and \(x_8\) (vertical axis) obtained by the entropic herding with \(T_{output}=500\) for white wine. The red circles represent 20 distributions \(r^{(T)}\) randomly drawn from the output. The sizes of the circles along the horizontal and vertical axes represent the standard deviations of \(x_4\) and \(x_8\), respectively. (Color figure online)

We can use the herding output as a probabilistic model. Figure 9 shows the negative log-likelihood of the validation data. Clearly, the model corresponding to the true class of wine assigns larger likelihood values than the other models. We used the results for the classification of red and white wines using the difference in the log-likelihood as a score. The AUC for the validation set was 0.998. The score was close to the AUC value obtained using the log-likelihood of fitted multivariate normal distribution (0.995) and linear logistic regression (0.998).
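The classification score described above only needs the log-density of each class model, which for a uniform mixture of independent normal components can be evaluated stably with a log-sum-exp. The following sketch shows one way to do this together with a rank-based AUC; the function names and array layout are assumptions for illustration, and the experiment itself may have used different tooling.

```python
import numpy as np

def mixture_log_density(X, means, stds):
    """log p_output(x) for a uniform mixture of diagonal normal components.

    X:     (n, d) data points
    means: (T, d) component means
    stds:  (T, d) component standard deviations (positive)
    """
    d = X.shape[1]
    z = (X[:, None, :] - means[None]) / stds[None]          # (n, T, d)
    log_comp = (-0.5 * np.sum(z**2, axis=2)
                - np.sum(np.log(stds), axis=1)[None]
                - 0.5 * d * np.log(2 * np.pi))               # (n, T)
    # Stable log of the mean over components.
    m = log_comp.max(axis=1, keepdims=True)
    return (m + np.log(np.exp(log_comp - m).mean(axis=1, keepdims=True)))[:, 0]

def auc(scores_pos, scores_neg):
    """Probability that a positive example outscores a negative one
    (ties count as 1/2)."""
    diff = scores_pos[:, None] - scores_neg[None, :]
    return float((diff > 0).mean() + 0.5 * (diff == 0).mean())
```

Given fitted parameters for the two class models, a score such as `mixture_log_density(X, means_red, stds_red) - mixture_log_density(X, means_white, stds_white)` (with hypothetical parameter names) could be passed to `auc` after splitting by the true class.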

Fig. 9 Negative log-likelihood for the validation data. The red crosses and green plus signs represent the red and white wines in the validation data, respectively. The horizontal and vertical axes represent the negative log-likelihood \(-\log p_{\text {output}}({{\varvec{x}}})\) for models obtained by the entropic herding with \(T_{output}=500\) for red and white wines, respectively. The dashed line represents where the two negative log-likelihoods are identical. (Color figure online)

The simple analytic form of the output distribution can also be used for the probabilistic estimation of missing values. We generated a dataset with missing values by dropping \(x_4\) from the validation set for white wine. The output distribution is \(p_{\text {output}}(x)=\frac{1}{T_{\text {max}}}\sum _{T=1}^{T_{\text {max}}} r^{(T)}(x)\), where \(r^{(T)}\in {\mathcal {Q}}\) is given by the parameter \((\mu _i^{(T)}, \sigma _i^{(T)})\) for \(i=1,\ldots ,11\). Let \(r_i(x_i;\mu _i^{(T)},\sigma _i^{(T)})\) denote the marginal distribution of \(x_i\) for \(r^{(T)}\), which is a normal distribution. The conditional distribution of \(x_4\) on the other variables is expressed as follows:

\[ p_{\text {output}}(x_4\mid \{x_i\}_{i\ne 4})=\frac{1}{Z}\sum _{T=1}^{T_{\text {max}}}w^{(T)}\,r_4(x_4;\mu _4^{(T)},\sigma _4^{(T)}) \]

where \(w^{(T)}=\prod _{i\ne 4}r_i(x_i;\mu _i^{(T)},\sigma _i^{(T)})\) and \(Z=\sum _{T=1}^{T_{\text {max}}}w^{(T)}\). Figure 10a shows a violin plot of the conditional distribution for 50 randomly sampled data points. Figure 10b shows the results of the multivariate normal distribution. The standard deviations of the estimations shown in Fig. 10b are identical because they are from the same multivariate normal distribution. Comparing these plots, the entropic herding clearly yields a more flexible model than the multivariate normal distribution. The dotted horizontal line shows the true value, and the short horizontal lines show the 10% and 90% quantiles of the estimated distribution. We counted the number of data points whose true values fall in this range, whose nominal coverage is 80%. The proportion of such data was 79.7% for the entropic herding and 51.1% for the multivariate normal distribution. We conclude that the estimation by the entropic herding was better calibrated.
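The conditional distribution described above is itself a one-dimensional normal mixture: each component keeps its own marginal for the missing variable and is reweighted by how well it explains the observed variables. A minimal sketch follows, with hypothetical function names and a zero-based index `k` for the missing variable; weights are computed in the log domain for numerical stability, and constant factors common to all components are dropped because they cancel on normalization.

```python
import numpy as np

def normal_pdf(x, mu, sigma):
    return np.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * np.sqrt(2 * np.pi))

def conditional_mixture(x_obs, k, means, stds):
    """Normalized weights w^(T)/Z and per-component parameters of x_k.

    x_obs: (d,) observation; the entry at index k is ignored
    means, stds: (T, d) component parameters
    """
    keep = np.arange(means.shape[1]) != k
    z = (x_obs[keep] - means[:, keep]) / stds[:, keep]
    # log w^(T) up to an additive constant shared by all components.
    log_w = -0.5 * np.sum(z**2, axis=1) - np.sum(np.log(stds[:, keep]), axis=1)
    w = np.exp(log_w - log_w.max())
    return w / w.sum(), means[:, k], stds[:, k]

def conditional_pdf(x_k, weights, mu_k, sigma_k):
    """Density of x_k given the observed variables."""
    return float(np.sum(weights * normal_pdf(x_k, mu_k, sigma_k)))
```

Quantiles such as the 10% and 90% points can then be obtained numerically from the mixture, for example by evaluating its cumulative distribution on a grid.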

Fig. 10 Violin plots of the conditional distribution of \(x_4\) given other variables for 50 randomly sampled validation data points. The horizontal axis represents the data index. The vertical axis corresponds to the value of \(x_4\). The dotted horizontal line represents the true values in the data. The short horizontal lines represent the 10% and 90% quantiles of the estimated distribution. (a) Output distribution of the entropic herding with \(T_{output}=500\). (b) Multivariate normal distribution

6 Discussion

In this section, we further discuss the proposed algorithm compared to other algorithms to place this study in the context of previous research. First, in Sect. 6.1, we highlight the uniqueness of the entropic herding that differs from both the point herding and other mixture methods. We further focus on the difference between the entropic herding and mixture models in Sect. 6.2. In Sect. 6.3, we give remarks and future perspectives on the practice of the entropic herding. Finally, we refer to studies from different research areas in Sect. 6.4 to show the similarities in the dynamical mechanisms between the herding and these different algorithms, which is insightful for future study.

6.1 Comparison of entropic and point herding

The most significant difference between the proposed entropic herding and original point herding is that the entropic herding represents the output distribution as a mixture of probability distributions. As for the applications of the entropic herding, some of the desirable properties of density calculation and sampling, discussed in Sect. 4.5, are the results of using the distribution mixture for the output. Many probabilistic modeling methods use the distribution mixture, such as the Gaussian mixture model and kernel density estimation (Parzen 1962). All of these methods, including the entropic herding, share the above characteristics.

The most important difference between the entropic herding and other methods is that it does not require specific data points and only uses the aggregated moment information of the features. The use of aggregated information has recently attracted increasing attention (Sheldon and Dietterich 2011; Law et al 2018; Tanaka et al 2019a, b; Zhang et al 2020). Sometimes, we can only use the aggregated information for privacy reasons. For example, statistics, such as population density or traffic volumes, are often aggregated to mean values by spatial regions, which often have various granularities (Law et al 2018; Tanaka et al 2019a, b). In addition to data availability, features can be selected to avoid irrelevant information depending on the focus of the study and data quality. These advantages are common to the entropic and point herding methods, but nonetheless distinctive when compared with other probabilistic modeling methods. Notably, the kernel herding (Chen et al 2010) is a prominent variant of the herding that has a convergence guarantee; however, it does not share the aforementioned advantages because it requires individual datapoints to use the features defined in the reproducing kernel Hilbert space.

Note that the entropic herding has a drawback, compared to the point herding, that the optimization step becomes more intricate and costly. Particularly, the calculation and optimization with \({\mathbb {E}}_{q}\left[ \varphi _m(x)\right] \) are more complicated and computationally intensive than those for \(\varphi _m(x)\). The evaluation of the probability density value on output distribution \(p_{\text {output}}\) can also be in practice computationally demanding, although it is not available for the point herding (particularly in the case that \({\mathcal {X}}\) is continuous).

6.2 Comparison of entropic herding to other methods

The proposed entropic herding estimates the target distribution with mixture models. We assumed use cases for the entropic herding that differ from other existing mixture models.

For estimated mixture models, we often expect that each component represents a cluster of datapoints with a common characteristic. Therefore, the number of components used for the mixture model is usually relatively small.

Nevertheless, in the learning process of mixture models, we have to jointly optimize many parameters whose number linearly grows with the number of components. Then, the optimization algorithm and hyperparameters should be carefully designed to ensure the numerical stability of the algorithm and avoid the problem of local optima.

Contrarily, for the entropic herding, even if the number of components and their parameters increase, the optimization problem for each component is independent of the others, and the algorithm design is kept simple. Therefore, we suppose that the entropic herding is more suited when the target distribution is more complicated such that it requires a larger number of components, although the interpretation of each component is less informative.

The method of moments (MoM) is another approach similar to the entropic herding in that both match moment information. MoM chooses a distribution from an assumed family of distributions such that the moments of statistics \({\mathbb {E}}_{p}\left[ \varphi _m(x)\right] \) match the given input. To fit the complexity of the target distribution, we require a complex model of distributions. Such a model is likely to have many degrees of freedom, but this number must be smaller than the number of input moments \((\mu _m)\) to avoid an ill-posed problem in MoM. For example, when we model the bimodal distribution in Fig. 3 by the mixture of two normal distributions, we have five degrees of freedom, including the mean and variance for each component and the mixture weight. However, we only have four input moments \(\mu _m\) for \(m=1,\ldots ,4\). Even when we can use a complex model with a sufficiently large number of inputs, the optimization process of MoM is more complicated than that of the entropic herding. Conversely, for the entropic herding, we can use simple \({\mathcal {Q}}\) and a large number of components even in such cases. This is because we can rely on the complex behavior of the herding to achieve the required complexity of the output.

6.3 Remarks for application of entropic herding

The framework of entropic herding in this study is written in a general form such that it does not depend on a choice of feature functions \(\varphi _m\) and candidate distribution set \({\mathcal {Q}}\). In the application of the entropic herding, we have to specify parameters including these components. Specifically, we should focus on the choice of \({\mathcal {Q}}\) and T because the characteristics of the output largely depend on them. Although we can say little about the expressive power and convergence behavior of the output, we present a general idea for choosing \({\mathcal {Q}}\) and T below.

In one respect, a larger \({\mathcal {Q}}\), which is large as a set of distributions and has larger expressive power, can be considered a desirable choice, because we can obtain a better solution for the optimization (Eq. (8)) and then a better output mixture \(p_{\text {output}}\). However, in another respect, a simple \({\mathcal {Q}}\) is also a good choice, because it simplifies the optimizations in the algorithm and the use of the output. The extreme choice in the former respect is \({\mathcal {Q}}\) that includes Gibbs distributions \(\{\pi _\theta \}\). However, in this case, the problem is simply reduced to the parameter learning of the Gibbs distribution. The extreme choice in the latter respect is using the set of point distributions for \({\mathcal {Q}}\); however, as argued above, it is equivalent to the existing point herding. The framework of the entropic herding allows us to choose \({\mathcal {Q}}\) between these two extremes.

The choice of \({\mathcal {Q}}\) is also relevant to the required number of components or, equivalently, T. For example, suppose that the target distribution has a large number of modes. If \({\mathcal {Q}}\) is simple such that it only includes unimodal distributions, the required number T of components from \({\mathcal {Q}}\) to represent the target is at least as large as the number of its modes. We expect that this number is reduced by using a larger \({\mathcal {Q}}\). Generally, choosing a simple \({\mathcal {Q}}\) will increase the number of components, and the computational cost of using the output mixture increases only linearly with T. However, with a simple \({\mathcal {Q}}\), each computational process becomes simple. Thus, choosing a simple \({\mathcal {Q}}\) is sometimes worth the increase in the number of components T.

Summarizing the above, we can enjoy the merits of the entropic herding when we use relatively simple \({\mathcal {Q}}\). The complexity of the target distribution such as multimodality and dependency is handled by increasing T, although the optimal choice depends on applications. The numerical examples in this study, where we used the distributions of independent random variables for \({\mathcal {Q}}\), are also informative from this viewpoint.

In general, determining the optimal T value in advance from the data is difficult. It is similar to the problem of choosing the number of components when using mixture models. In the case of a multimodal distribution as above, running the entropic herding with a sufficiently large T and clustering the obtained distributions \(\{r_t\}\) may help estimate the optimal number of components or T if the computational budget allows. Note that this clustering of the collection of distributions is not identical to that of samples, which may not even be possible when we only have moments \((\mu _m)\). When we have sample points or the target density function, we can also quantitatively validate the output distribution as in Sect. 5.3, in the same way as for other probabilistic models.

Additionally, we make two remarks about the future improvement in the optimization step of the entropic herding, which is one of the most computationally intensive parts at present.

First, the gradient descent in the entropic herding is easily realizable if the analytic form of entropy and expectation of the feature over the candidate distribution \(q\in {\mathcal {Q}}\) are available. However, if the analytic form is not available, we should resort to more sophisticated optimization methods. The problem solved in the optimization step is equivalent to obtaining the distribution approximation by minimizing the KL-divergence (see Eq. (34)). This problem often appears in the field of machine learning, such as in variational Bayes inference. To extend the application of the herding, it can be combined with recent optimization techniques developed in this field.

Second, it is observed that slightly different optimization problems, which are modified by the weight dynamics, are repeatedly solved in the algorithm. If the amount of weight update in each step is small, we can assume that the problems in the consecutive steps are similar. As described in the “Appendix”, we used gradient descent from the latest solution for the optimization of the experiment and demonstrated its feasibility. We expect that exploiting the characteristics of repetitive optimization will produce further improvement.
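The two remarks above can be made concrete with a small sketch for a one-dimensional normal candidate, for which the feature expectations and the entropy are analytic. Everything specific here is an assumption for illustration: the features \(\varphi _m(x)=x^m\) for \(m=1,\ldots ,4\), the per-step objective \(\sum _m a_m{\mathbb {E}}_q[\varphi _m]-H(q)\), the schematic weight update \(a\leftarrow a+\varepsilon (\eta (r)-\mu )\) (the update in Eq. (9) may differ, for example in its scaling by \(\Lambda _m\)), and the numerical values. Each step warm-starts the descent from the previous solution.

```python
import numpy as np

def gaussian_moments(m, s):
    """E[x^k] for k = 1..4 under N(m, s^2)."""
    return np.array([m,
                     m**2 + s**2,
                     m**3 + 3 * m * s**2,
                     m**4 + 6 * m**2 * s**2 + 3 * s**4])

def objective(params, a):
    """Assumed per-step objective sum_m a_m E_q[phi_m] - H(q),
    with q = N(m, exp(log_s)^2)."""
    m, log_s = params
    entropy = 0.5 * np.log(2 * np.pi * np.e) + log_s
    return float(a @ gaussian_moments(m, np.exp(log_s)) - entropy)

def descend(params, a, steps=50, lr=0.1, h=1e-6):
    """Finite-difference gradient descent with backtracking.
    A step is accepted only if it lowers the objective."""
    params = np.asarray(params, dtype=float)
    f = objective(params, a)
    for _ in range(steps):
        grad = np.array([(objective(params + h * e, a)
                          - objective(params - h * e, a)) / (2 * h)
                         for e in np.eye(2)])
        step = lr
        while step > 1e-10:
            cand = params - step * grad
            f_cand = objective(cand, a)
            if f_cand < f:
                params, f = cand, f_cand
                break
            step *= 0.5
        else:
            break  # no improving step found; stop early
    return params, f

# Hypothetical weights, target moments, and initial candidate.
a = np.array([0.0, -1.0, 0.0, 0.25])
mu = np.array([0.0, 1.5, 0.0, 3.5])
params = np.array([0.5, 0.0])            # (mean, log standard deviation)
eps = 0.01
components = []
for T in range(5):
    params, _ = descend(params, a)       # warm start from the latest solution
    eta = gaussian_moments(params[0], np.exp(params[1]))
    components.append(params.copy())
    a = a + eps * (eta - mu)             # schematic weight update (assumption)
a_final = a
```

When the weights change little between steps, the previous solution is already close to a local minimum of the new objective, so only a few descent iterations are typically needed.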

In this study, we proposed the framework of entropic herding in a general form with the expectation of future extension. In recent years, many methods have been developed for the generative expression of a probability distribution with high expressive power and simultaneously equipped with stable and efficient optimizing algorithms. For example, we can find studies that used neural networks (Goodfellow et al 2014; Kingma and Welling 2014) and decision trees (Criminisi 2012). We also expect that this study will serve as a theoretical framework for the more advanced use of these sophisticated generative models, which can use them as a mixture.

6.4 Algorithmic roles of dynamical feature weighting in herding and other research areas

The herding requires the complex behavior of the process to obtain a random-like sequence. However, we currently have to only rely on the empirical observation that the coupled system of Eqs. (5) and (6) or Eqs. (8) and (9) exhibits complex behavior in high-dimensional space. Theoretical understanding of the behavior of the herding as a nonlinear dynamical system is a challenging but important direction for future study.

In the optimization step (Eq. (5)), the herding used the weighted average of features, whose weights were changed dynamically depending on the process state. The combination of dynamically changing weights and optimization of functions weighted by them, which we call the dynamical feature weighting, has also been employed in other research areas that are not directly related to the herding, as in the following examples. In these examples, the weight dynamics is combined with the process that takes the weighted sum of functions as the energy and is interpreted as minimizing the energy, such as the gradient flow.

The first example is from the field of machine learning similar to the herding. The maximum likelihood learning of MRF (4) is often performed using the following gradient:

The expectation in the second term can be approximated using the Markov chain Monte Carlo (MCMC) (Hinton 2002; Tieleman 2008) on the potential function \(E(x)=-\log p(x)+\textrm{const}\). However, it is known that MCMC often suffers from slow mixing because of the sharp local minima of the potential. In the case of MRF (4), the potential function is \(E(x)=\sum _{m\in {\mathcal {M}}}\theta _m\varphi _m(x)\), which also has the form of a weighted sum of the features. Parameters \(\theta _m\) were updated during the learning process. Tieleman and Hinton (2009) demonstrated that the efficiency of learning can be improved by adding extra time variation to parameters \(\theta _m\). We can interpret this as that the dynamics of MCMC on energy E(x) is combined with dynamical weights \(\theta _m\) to increase the complexity of the MCMC dynamics and improve its mixing.

Combinatorial optimization is another example of the application of dynamical feature weighting. The Boolean satisfiability problem (SAT) is the problem of finding a binary assignment to a set of variables satisfying the given Boolean formula. A Boolean formula can be represented in a conjunctive normal form (CNF), which is a set of constraints called clauses combined by logical conjunctions. This problem can be regarded as the minimization of the weighted sum of features, where the features are defined by the violations of the constraints in the CNF. Several methods (Morris 1993; Wu and Wah 2000; Thornton 2005; Cai and Su 2011) solve the SAT problem by repeatedly improving the assignment for the target function and changing its weights when the process is trapped in a local minimum. Ercsey-Ravasz and Toroczkai (2011), Ercsey-Ravasz and Toroczkai (2012) proposed a continuous-time dynamical system to solve the SAT problem, which implements the local improvement and time variation of the weight values. It is also shown that this system is effective in solving MAX-SAT, which is the optimization version of SAT problem (Molnár et al 2018). One advantage of using deterministic dynamics is the possibility of efficient physical implementation, as suggested by Welling (2009b) and demonstrated by Yin et al (2018). In these cases, to avoid the search process halting, the technique of dynamical feature weighting was used to increase the complexity of the search process.

As illustrated in these examples, the technique of dynamical feature weighting underlies various computational methods not limited to the herding. Thus, theoretical studies of the herding can lead to a better understanding of such methods. We expect that the arguments in this study, which connect the herding and entropy, also work as the first step of the research from this perspective.

7 Conclusion

In this study, we proposed an entropic herding algorithm as an extension of the herding.

By using the proposed algorithm as a framework, we discussed the connection between the herding and maximum entropy principle. Specifically, the entropic herding is based on the minimization of target function \({\mathcal {L}}\). This function, which is composed of the feature moment error and entropy term, represents the maximum entropy principle. The herding minimizes this function in two ways: The first is the minimization of the upper bound of the target function by solving the optimization problem in each step. The second is the diversification of the samples by the complex dynamics of the high-dimensional weight vector. We also investigated the output of the entropic herding through a mathematical analysis of the optimal distribution of this minimization problem.

We also clarified, both theoretically and numerically, how the entropic herding differs from the point herding in applications. We demonstrated that the point herding can be extended by explicitly considering entropy maximization in the optimization step, using distributions rather than points as the candidates. The output sequence of the entropic herding is more sample-efficient than that of the point herding because each output is a distribution that can assign a probability mass to many states. The output sequence of distributions can be used as a mixture distribution that enables independent sample generation. The mixture distribution also has an analytic form. Therefore, model validation using likelihood and inference through conditional distributions is also possible.

As discussed in Sect. 6, the entropic herding enables flexibility in the choice of the feature set and candidate distribution. We expect the entropic herding to be used as a framework for developing effective algorithms based on the distribution mixture.

Notes

https://archive.ics.uci.edu/ml/datasets/Wine+Quality, Accessed November 16, 2021

References

Bergstra, J., Breuleux, O., Bastien, F., et al.: Theano: a CPU and GPU math expression compiler. In: Proceedings of the Python for Scientific Computing Conference (SciPy) 2010 (2010)

Cai, S., Su, K.: Local search with configuration checking for SAT. In: 2011 IEEE 23rd International Conference on Tools with Artificial Intelligence, pp. 59–66 (2011)

Chen, Y., Welling, M.: Herding as a learning system with edge-of-chaos dynamics. In: Hazan, T., Papandreou, G., Tarlow, D. (eds.) Perturbations, Optimization, and Statistics. MIT Press, Cambridge (2016)

Chen, Y., Welling, M., Smola, A.: Super-samples from kernel herding. In: Proceedings of the Twenty-Sixth Conference on Uncertainty in Artificial Intelligence, pp. 109–116 (2010)

Chen, Y., Bornn, L., Freitas, N.D., et al.: Herded Gibbs sampling. J. Mach. Learn. Res. 17(1), 263–291 (2016)

Cortez, P., Cerdeira, A., Almeida, F., et al.: Modeling wine preferences by data mining from physicochemical properties. Decis. Support Syst. 47(4), 547–553 (2009)

Criminisi, A.: Decision forests: a unified framework for classification, regression, density estimation, manifold learning and semi-supervised learning. Found. Trends Comput. Graph. Vis. 7(2–3), 81–227 (2012)

Ercsey-Ravasz, M., Toroczkai, Z.: Optimization hardness as transient chaos in an analog approach to constraint satisfaction. Nat. Phys. 7, 966–970 (2011)

Ercsey-Ravasz, M., Toroczkai, Z.: The chaos within Sudoku. Sci. Rep. 2, 725 (2012)

Goodfellow, I.J., Pouget-Abadie, J., Mirza, M., et al.: Generative adversarial nets. In: Advances in Neural Information Processing Systems 27 (NIPS 2014), pp. 2672–2680 (2014)

Granas, A., Dugundji, J.: Fixed Point Theory. Springer Monographs in Mathematics. Springer, New York (2003)

Hinton, G.E.: Training products of experts by minimizing contrastive divergence. Neural Comput. 14(8), 1771–1800 (2002)

Jaynes, E.T.: Information theory and statistical mechanics. Phys. Rev. 106(4), 620–630 (1957)

Kingma, D.P., Ba, J.: Adam: a method for stochastic optimization. In: International Conference on Learning Representations (ICLR) (2015)

Kingma, D.P., Welling, M.: Auto-encoding variational Bayes. In: Proceedings of the 2nd International Conference on Learning Representations, ICLR 2014 (2014)

Kulpa, W.: The Poincaré–Miranda theorem. Am. Math. Mon. 104(6), 545–550 (1997)

Law, H.C.L., Sejdinovic, D., Cameron, E., et al.: Variational learning on aggregate outputs with Gaussian processes. In: Advances in Neural Information Processing Systems 31 (NeurIPS 2018), pp. 6084–6094 (2018)

Miranda, C.: Un’osservazione su una teorema di Brouwer. Boll. Unione Mat. Ital. 3(2), 5–7 (1940)

Molnár, B., Molnár, F., Varga, M., et al.: A continuous-time MaxSAT solver with high analog performance. Nat. Commun. 9, 4864 (2018)

Morris, P.: The breakout method for escaping from local minima. In: Proceedings of the Eleventh National Conference on Artificial Intelligence, pp. 40–45 (1993)

Parzen, E.: On estimation of a probability density function and mode. Ann. Math. Stat. 33(3), 1065–1076 (1962)

Poincaré, H.: Sur certaines solutions particulières du problème des trois corps. C. R. Acad. Sci. Paris 97, 251–252 (1883)

Poincaré, H.: Sur certaines solutions particulières du problème des trois corps. Bull. Astronomique 1, 63–74 (1884)

Sheldon, D.R., Dietterich, T.G.: Collective graphical models. In: Advances in Neural Information Processing Systems 24 (NIPS 2011), pp. 1161–1169 (2011)

Tanaka, Y., Iwata, T., Tanaka, T., et al.: Refining coarse-grained spatial data using auxiliary spatial data sets with various granularities. In: Proceedings of the Thirty-Third AAAI Conference on Artificial Intelligence (AAAI-19), pp. 5091–5100 (2019a)

Tanaka, Y., Tanaka, T., Iwata, T., et al.: Spatially aggregated Gaussian processes with multivariate areal outputs. In: Advances in Neural Information Processing Systems 32 (NeurIPS 2019) (2019b)

Thornton, J.: Clause weighting local search for SAT. J. Autom. Reason. 35, 97–142 (2005)

Tieleman, T.: Training restricted Boltzmann machines using approximations to the likelihood gradient. In: Proceedings of the 25th International Conference on Machine Learning, pp. 1064–1071 (2008)

Tieleman, T., Hinton, G.: Using fast weights to improve persistent contrastive divergence. In: Proceedings of the 26th Annual International Conference on Machine Learning, pp. 1033–1040 (2009)

Welling, M.: Herding dynamic weights for partially observed random field models. In: Proceedings of the Twenty-Fifth Conference on Uncertainty in Artificial Intelligence, pp. 599–606 (2009a)

Welling, M.: Herding dynamical weights to learn. In: Proceedings of the 26th Annual International Conference on Machine Learning, pp. 1121–1128 (2009b)

Welling, M., Chen, Y.: Statistical inference using weak chaos and infinite memory. J. Phys. Conf. Ser. 233, 012005 (2010)

Wu, Z., Wah, B.W.: An efficient global-search strategy in discrete Lagrangian methods for solving hard satisfiability problems. In: Proceedings of the Seventeenth National Conference on Artificial Intelligence (AAAI-00), pp. 310–315 (2000)

Yamashita, H., Suzuki, H.: Convergence analysis of herded-Gibbs-type sampling algorithms: effects of weight sharing. Stat. Comput. 29(5), 1035–1053 (2019)

Yin, X., Sedighi, B., Varga, M., et al.: Efficient analog circuits for Boolean satisfiability. IEEE Trans. Very Large Scale Integr. (VLSI) Syst. 26(1), 155–167 (2018)

Zhang, Y., Charoenphakdee, N., Wu, Z., et al.: Learning from aggregate observations. In: Advances in Neural Information Processing Systems 33 (NeurIPS 2020) (2020)

Acknowledgements

This work is partially supported by JST CREST (JP-MJCR18K2), by AMED (JP21dm0307009), by UTokyo Center for Integrative Science of Human Behavior (CiSHuB), by the International Research Center for Neurointelligence (WPI-IRCN) at the University of Tokyo Institutes for Advanced Study (UTIAS), and by JST Moonshot R&D Grant Number JPMJMS2021.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A Details of optimization algorithms and experiments

Here, we present detailed descriptions of the methods used for the experiments in this study.

The pre-processing applied to the feature functions is detailed below. Let \(\varphi _m\) be the mth feature function provided to the algorithm. Let \(x_1,\ldots , x_D\) be the input data points. We calculated the mean \(\mu _m\) and standard deviation \(\sigma _m\) of the feature values, namely, \(\varphi _m(x_1),\ldots ,\varphi _m(x_D)\). We then standardized the feature values as follows:

\[\varphi '_m(x) = \frac{\varphi _m(x)-\mu _m}{\sigma _m}.\]

The feature value for the distribution is defined as \(\eta _m'(p)\equiv {\mathbb {E}}_{p}\left[ \varphi '_m(x)\right] \). After standardization, the average of the feature values used for the target value became zero, and we used the same \(\Lambda _m\) for all m. Namely, we used

\[a_m^{(T)} = \lambda \,\eta _m'(p^{(T)})\]

instead of using Eq. (23). Note that this is equivalent to \(a_m^{(T)}=\frac{\lambda }{\sigma _m}(\eta _m(p^{(T)})-\mu _m)\), which can also be obtained by substituting \(\Lambda _m=\lambda /\sigma _m\) into Eq. (23). Here, the parameter tuning of \(\Lambda _m\) was simplified to optimize the single global parameter \(\lambda \). Note that the pre-processing simplifies parameter tuning and is not generally necessary. When we do not have information on the standard deviation, we can still use the entropic herding by tuning each \(\Lambda _m\).
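The pre-processing and the stated equivalence can be checked numerically with a short sketch. It assumes the usual standardization \(\varphi '_m(x)=(\varphi _m(x)-\mu _m)/\sigma _m\) and the population standard deviation; neither detail is confirmed by this section, and all function names are hypothetical.

```python
from statistics import fmean, pstdev

def standardize_feature(phi, data):
    """Return (phi_std, mu, sigma) with phi_std(x) = (phi(x) - mu) / sigma."""
    values = [phi(x) for x in data]
    mu = fmean(values)
    sigma = pstdev(values)   # assumption: population standard deviation
    return (lambda x: (phi(x) - mu) / sigma), mu, sigma

def weight_from_standardized(lam, eta_std):
    """a_m = lambda * eta'_m(p): a single global lambda on the standardized feature."""
    return lam * eta_std

def weight_from_raw(lam, eta, mu, sigma):
    """a_m = (lambda / sigma_m) * (eta_m(p) - mu_m): the same weight on the raw feature."""
    return (lam / sigma) * (eta - mu)
```

Both weight functions return the same value whenever `eta_std == (eta - mu) / sigma`, which is why tuning the single parameter \(\lambda \) on standardized features corresponds to choosing \(\Lambda _m=\lambda /\sigma _m\) on the raw ones.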

The optimization problem in Eq. (8) was solved using the gradient method. A different parameterized candidate distribution set \({\mathcal {Q}}\) was used for each case, and the parameter was optimized by repeating small updates following the gradient of the target function. The optimization for each T was performed by running \(k_{\text {update}}\) optimization steps. The obtained state \(r^{(T)}\) was used as the initial state for the next optimization at \(T+1\). The update in each optimization step was rescaled using the Adam method (Kingma and Ba 2015) to maintain the numerical stability of the procedure. The hyperparameters in Adam were set to \((\beta _1, \beta _2, \varepsilon ) = (0.8, 0.99, 10^{-8})\) (see Kingma and Ba 2015). The gradient after this rescaling was multiplied by the learning rate, denoted by \(\eta _{\text {learn}}\). At the beginning of the inner loop of optimization, for each T, the running means maintained by Adam were reset to their initial value of zero.
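The structure of this inner loop can be sketched as follows. This is a minimal illustration in plain Python and not the Theano implementation used in the experiments. The function names are hypothetical, and resetting the bias-correction step counter together with the running means is an assumption.

```python
import math

def adam_inner_loop(grad, theta, k_update, lr, beta1=0.8, beta2=0.99, eps=1e-8):
    """Run k_update Adam steps; the running means start from zero on every call."""
    m = [0.0] * len(theta)   # running mean of the gradient
    v = [0.0] * len(theta)   # running mean of the squared gradient
    theta = list(theta)
    for k in range(1, k_update + 1):
        g = grad(theta)
        for i, gi in enumerate(g):
            m[i] = beta1 * m[i] + (1.0 - beta1) * gi
            v[i] = beta2 * v[i] + (1.0 - beta2) * gi * gi
            m_hat = m[i] / (1.0 - beta1 ** k)   # bias correction (Kingma and Ba 2015)
            v_hat = v[i] / (1.0 - beta2 ** k)
            theta[i] -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta

def run_outer_loop(make_grad, theta0, t_max, k_update, lr):
    """Warm-start the optimization at step T from the solution found at T - 1."""
    theta, history = list(theta0), []
    for t in range(1, t_max + 1):
        theta = adam_inner_loop(make_grad(t), theta, k_update, lr)
        history.append(theta)
    return history
```

Here `make_grad(t)` stands for the gradient of the target function at outer step T, which changes with T because the weights change. Only the parameters are carried over between outer steps; the Adam statistics are not.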

In some cases, we used modified weight values depending on the optimization state. Instead of \(a_m^{(T-1)}\), we used weight values \({a^{'}_{m}}\) defined as follows:

where \(q_{cur}\) denotes the current state in the inner loop of the optimization. Note that this is different from \(r^{(T-1)}\), except at the beginning of the inner loop. This modification was also used for numerical stability. It is justified as follows: Eq. (24) is equivalent to

The terms dependent on \(r^{(T)}\) are

By neglecting the small higher-order term \(O((\varepsilon ^{(T)})^2)\), the minimization is reduced to solving \(r^{(T)}=\mathop {\mathrm { arg~min}}\limits _{q\in {\mathcal {Q}}}\left( \left( \sum _{m\in {\mathcal {M}}}{a^{'}_{m}}\eta _m(q)\right) -H(q)\right) \), which is equivalent to Eq. (8) with \(a_m\) replaced by \({a^{'}_{m}}\).

We introduced a stochastic jump in the optimization step in some cases. In this case, a jump was proposed with a probability of \(p_{\text {jump}}\) in each optimization step. The candidate distribution \(q'\in {\mathcal {Q}}\) was drawn randomly and accepted as the next state if it had a better target function-value than the distribution of the current state. The method of candidate generation is described for each problem in the following sections.
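The jump rule described here is a greedy accept-if-better move. A minimal sketch follows, in which `propose` is a hypothetical, problem-specific candidate generator and `objective` stands for the target function being minimized.

```python
import random

def maybe_jump(current, objective, propose, p_jump, rng):
    """With probability p_jump, draw a random candidate and keep it only if it
    has a lower objective value than the current state."""
    if rng.random() < p_jump:
        candidate = propose(rng)
        if objective(candidate) < objective(current):
            return candidate
    return current
```

Because a proposal is accepted only when it improves the objective, the jump can move the state out of a poor local minimum without ever making the current solution worse.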

The step size of the update, denoted by \(\varepsilon ^{(T)}\), was held constant across steps. Namely, we set \(\varepsilon ^{(T)}=\varepsilon _{\text {herding}}\) for each T. This means that the distribution weights \(\rho ^{t,T}\) in Sect. 3.2 decayed geometrically at the rate of \(\rho =1-\varepsilon _{\text {herding}}\).
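The geometric decay can be made concrete with a small sketch. It assumes the mixture is updated as \(p^{(T)}=(1-\varepsilon )p^{(T-1)}+\varepsilon r^{(T)}\), a recursion that is not restated in this appendix; the function name is hypothetical.

```python
def mixture_weights(t_max, eps):
    """Weights of r^(1), ..., r^(t_max) in p^(t_max) under the assumed update
    p^(T) = (1 - eps) * p^(T-1) + eps * r^(T)."""
    weights = []
    for _ in range(t_max):
        weights = [w * (1.0 - eps) for w in weights]  # older components decay by rho = 1 - eps
        weights.append(eps)                           # the newest component enters with weight eps
    return weights
```

Under this assumption, the component added t steps ago carries weight \(\varepsilon (1-\varepsilon )^{t}\), and the remaining mass \((1-\varepsilon )^{T}\) belongs to the initial distribution.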

To eliminate nonstationary behavior depending on the initial condition, we set a burn-in period in the algorithm similar to conventional MCMC algorithms. After the herding ran with \(T_{\text {max}}=T_{\text {burnin}}+T_{\text {output}}\), the output sequence, except for the burn-in period, was aggregated into an output mixture distribution. The output mixture was obtained as follows:

We implemented the method above using the automatic differentiation provided by the Theano (Bergstra et al 2010) framework. The default settings for the above parameters are summarized in Table 2. The values in the table were used unless explicitly stated otherwise.

A.1 One-dimensional bimodal distribution

Using the Metropolis–Hastings method, 10,000 samples were generated and used as the input. We used candidate distribution set \({\mathcal {Q}}\) defined as

The four feature means over the candidate distribution, denoted by \(\eta _i(q)={\mathbb {E}}_{q}\left[ \varphi _i(x)\right] ={\mathbb {E}}_{q}\left[ x^i\right] \), are obtained as follows:

As they all have analytic expressions, the gradients regarding the parameters can be easily obtained.

We applied variable transformation \(l=\log \sigma \) and optimized parameter set \((\mu ,l)\in {\mathbb {R}}\times [\log 0.01,+\infty )\) in the algorithm. We reported the results for \(\lambda =100\) in this study.
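As an illustration of such analytic expressions, the sketch below assumes that the candidate set consists of normal distributions \(N(\mu ,\sigma ^2)\). The \((\mu ,\log \sigma )\) parameterization suggests this, but the definition of \({\mathcal {Q}}\) is not reproduced in this section. Clipping l to its lower bound is likewise an assumption about how the constraint is enforced.

```python
import math

L_MIN = math.log(0.01)   # lower bound on l = log(sigma) quoted in the text

def gaussian_raw_moments(mu, sigma):
    """E[x], E[x^2], E[x^3], E[x^4] for x ~ N(mu, sigma^2)."""
    s2 = sigma * sigma
    return (
        mu,
        mu ** 2 + s2,
        mu ** 3 + 3 * mu * s2,
        mu ** 4 + 6 * mu ** 2 * s2 + 3 * s2 ** 2,
    )

def gaussian_entropy(l):
    """Differential entropy of N(mu, sigma^2) written in terms of l = log(sigma)."""
    return l + 0.5 * math.log(2.0 * math.pi * math.e)

def moments_from_params(mu, l):
    """Evaluate the four moments in the (mu, l) parameterization, keeping l in its domain."""
    l = max(l, L_MIN)    # assumption: the bound is enforced by clipping
    return gaussian_raw_moments(mu, math.exp(l))
```

In this parameterization the entropy is linear in l, so the entropy term contributes a constant gradient with respect to l, while the moment terms are polynomials in \(\mu \) and \(e^{l}\).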

For the case of the entropic herding with stochastic update, \(p_{\text {jump}}=0.1\) was used. When a random jump was proposed, the candidate distribution was determined using the current value of l and drawing \(\mu \) randomly from \([\mu _{min}, \mu _{max}]\), where \(\mu _{min}\) and \(\mu _{max}\) are the minimum and maximum values of \(\mu \) that have so far appeared in the procedure, respectively.

Point herding with Metropolis updates was implemented for comparison. In this case, a random jump was proposed in each step (\(p_{\text {jump}}=1\)). The candidate was accepted based on the Metropolis rule; that is, it was accepted with probability \(\min (1, \exp (-\Delta F))\), where \(\Delta F\) is the increase in the target function \(F_{{\varvec{a}}^{(T-1)}}(x)\). In this case, the modified weight values \({a^{'}_{m}}\) were not used.
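The Metropolis acceptance rule quoted above can be written as a short function. This is a generic sketch; the function name and the explicit random number generator argument are choices made for illustration.

```python
import math
import random

def metropolis_accept(delta_f, rng):
    """Accept a proposal with probability min(1, exp(-delta_f)),
    where delta_f is the increase in the target function."""
    if delta_f <= 0.0:
        return True                       # improvements are always accepted
    return rng.random() < math.exp(-delta_f)
```

Unlike the accept-if-better jump used for the entropic herding, this rule occasionally accepts a candidate that increases the target function.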

A.2 Boltzmann machine

Parameters \(W_{ij}\) (\(i<j\)) for \(p_{\text {BM}}\) were drawn from a normal distribution with mean zero and variance \((0.2)^2/N\). We then added some structural interactions to increase nontrivial correlations; we assigned \(W_{45}=0\) and \(W_{i(i+1)}=-0.3\) for \(i\ne 4\). We obtained the values of \(\mu _m\) and \(\sigma _m\) used for feature standardization by calculating the expectation following the definition of target model \(p_{\text {BM}}\).
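The construction of the coupling parameters can be sketched as follows. The number of variables N is not restated in this section, so `n` is a free argument, and the structural values are read here as overwriting the random draws. The function name and the seed are hypothetical.

```python
import random

def make_bm_weights(n, seed=0):
    """Return {(i, j): W_ij} for 1 <= i < j <= n, using the 1-based indices of the text."""
    rng = random.Random(seed)
    std = 0.2 / n ** 0.5                  # standard deviation for variance (0.2)^2 / N
    w = {(i, j): rng.gauss(0.0, std)
         for i in range(1, n + 1) for j in range(i + 1, n + 1)}
    for i in range(1, n):
        # Nearest-neighbour couplings: W_45 = 0 and W_i(i+1) = -0.3 otherwise.
        w[(i, i + 1)] = 0.0 if i == 4 else -0.3
    return w
```

For small N, the feature means and standard deviations under the target model can then be obtained by enumerating all states, which is consistent with calculating the expectations directly from the definition of \(p_{\text {BM}}\).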

We used candidate distribution set \({\mathcal {Q}}\), defined as consisting of the following set of random variables: