Abstract

Developing efficient MCMC algorithms is indispensable in Bayesian inference. In parallel tempering, multiple interacting MCMC chains run to more efficiently explore the state space and improve performance. The multiple chains advance independently through local moves, and the performance enhancement steps are exchange moves, where the chains pause to exchange their current sample amongst each other. To accelerate the independent local moves, they may be performed simultaneously on multiple processors. Another problem is then encountered: depending on the MCMC implementation and inference problem, local moves can take a varying and random amount of time to complete. There may also be infrastructure-induced variations, such as competing jobs on the same processors, which arises in cloud computing. Before exchanges can occur, all chains must complete the local moves they are engaged in to avoid introducing a potentially substantial bias (Proposition 1). To solve this issue of randomly varying local move completion times in multi-processor parallel tempering, we adopt the Anytime Monte Carlo framework of (Murray, L. M., Singh, S., Jacob, P. E., and Lee, A.: Anytime Monte Carlo. arXiv preprint arXiv:1612.03319, (2016): we impose real-time deadlines on the parallel local moves and perform exchanges at these deadlines without any processor idling. We show our methodology for exchanges at real-time deadlines does not introduce a bias and leads to significant performance enhancements over the naïve approach of idling until every processor’s local moves complete. The methodology is then applied in an ABC setting, where an Anytime ABC parallel tempering algorithm is derived for the difficult task of estimating the parameters of a Lotka–Volterra predator-prey model, and similar efficiency enhancements are observed.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Consider a set of m observations \(y = \left\{ y_1, \ldots , y_m\right\} \in {\mathcal {Y}}\) following a probability model with underlying parameters \(\theta \in \Theta \) and associated likelihood \(f(y_1, \ldots , y_m\,|\,\theta )\) which we abbreviate to \(f(y \,|\, \theta )\). In most cases, the posterior \(\pi (\text {d}\theta )\) of interest is intractable and must be approximated using computational tools such as the commonly used Metropolis-Hastings (M-H) algorithm (Robert and Casella (2004)) with random walk proposals, for example. However, as models become more complex, the exploration of the posterior using such basic methods quickly becomes inefficient (Beskos et al. (2009)). Furthermore, the model itself can pose its own challenges such as the likelihood becoming increasingly costly or even impossible to evaluate (Tavaré et al. (1997)); the Lotka-Volterra predator-prey model of Sect. 5 is a concrete example.

Parallel tempering, initially proposed by Swendsen and Wang (1986) and further developed under the name Metropolis-coupled Markov chain Monte Carlo (MC)\(^3\) by Geyer (1991), is a generic method for improving the efficiency of MCMC that can be very effective without significantly altering the original MCMC algorithm, beyond perhaps tuning its local proposals for each temperature. The parallel tempering algorithm runs multiple interacting MCMC chains to more efficiently explore the state space. The multiple MCMC chains are advanced independently, in what is known as the local moves, and the performance enhancement steps are the exchange moves, where the chains pause and attempt to swap their current sample amongst each other. Parallel tempering allows for steps of various sizes to be made when exploring the parameter space, which makes the algorithm effective, even when the distribution we wish to sample from has multiple modes. In order to reduce the real time taken to perform the independent local moves, they may be performed simultaneously on multiple processors, a feature we will focus on in this work.

Let the parallel tempering MCMC chain be \(\left( X_n^{1:\Lambda }\right) _{n=1}^{\infty } = \left( X_n^1, \ldots , X_n^{\Lambda }\right) _{n=1}^{\infty }\) with initial state \(\left( X^{1:\Lambda }_0\right) \) and target distribution

where the \(\pi _{\lambda }(\, \cdot \,)\) are independent marginals corresponding to the target distribution of each of \(\Lambda \) chains, running in parallel at different temperatures indexed by \(\lambda \). One of these chains, say \(\lambda =\Lambda \), is the cold chain, and its target distribution \(\pi _{\Lambda } = \pi \) is the posterior of interest. At each step n of parallel tempering (Geyer (2011)), one of two types of updates is used to advance the Markov chain \(X_n^{1:\Lambda }\) to its next state:

-

1.

Independent local moves: for example, a standard Gibbs or Metropolis-Hastings update, applied to each tempered chain \(X^{\lambda }_n\) in parallel.

-

2.

Interacting exchange moves: propose to swap the states \(x \sim \pi _{\lambda }\) and \(x' \sim \pi _{\lambda '}\) of one or more pairs of adjacent chains. For each pair, accept a swap with probability

$$\begin{aligned} a_{swap}(x', x) = \min \left\{ 1, \frac{\pi _{\lambda }(x') \pi _{\lambda '}(x)}{\pi _{\lambda }(x) \pi _{\lambda '}(x')}\right\} , \end{aligned}$$(2)otherwise, the chains in the pair retain their current states.

With the cold chain providing the desired precision and the warmer chains more freedom of movement when exploring the parameter space, the combination of the two types of update allows all chains to mix much faster than any one of them would mix on its own. This provides a way to jump from mode to mode in far fewer steps than would be required under a standard non-tempered implementation using, say, the Metropolis-Hastings algorithm.

A particular advantage of parallel tempering is that it is possible to perform the independent local moves in parallel on multiple processors in order to reduce the real time taken to complete them. Unfortunately, this gives rise to the following problem: depending on the MCMC implementation and the inference problem itself, the local moves can take a varying and random amount of time to complete, which depends on the part of the state space it is exploring (see the Lotka-Volterra predator-prey model in Sect. 5.3 for a specific real example). Thus, before the exchange moves can occur, all chains must complete the local move they are engaged in to avoid introducing a potentially substantial bias (see Proposition 1). Additionally, the time taken to complete local moves may also reflect computing infrastructure induced variations, for example, due to variations in processor hardware, memory bandwidth, network traffic, I/O load, competing jobs on the same processors, as well as potential unforeseen interruptions, all of which affect the compute time of local moves. Local moves in parallel tempering algorithms can also have temperature-dependent completion times. This is the case of the approximate Bayesian computation (ABC) application in Sect. 4. In Earl and Deem (2004), the authors consider a similar problem of temperature \(\lambda \) dependent real completion times of local moves. To tackle the problem, they redistribute the chains among the processors in order to minimise processor idling that occurs while waiting for all local moves to finish. This strategy is a deterministic allocation of processor time to simulation and entails completing part of a simulation on one processor and then continuing on another. Our approach to removing idling doesn’t involve redistributing partially completed simulations, and instead imposes real-time deadlines at which simulations are stopped to perform exchange moves before resuming work on their respective processors. The contributions of this paper are as follows.

Firstly, to solve the problem of randomly distributed local move completion times when parallel tempering is implemented on a multi-processor computing resource, we adopt the Anytime Monte Carlo framework of Murray et al. (2016): we guarantee the simultaneous readiness of all chains by imposing real-time deadlines on the parallelly computed local moves, and perform exchange moves at these deadlines without any idling, i.e. without waiting for the slowest of them to complete their local moves. Idling is both a financial cost, for example in a cloud computing setting, and can also significantly reduce the effective Monte Carlo sample size returned. We show that hard deadlines introduce a bias which we mitigate using the Anytime framework (see Proposition 2).

Secondly, we illustrate our gains through detailed numerical work. The first experiment considered is a mixture model where the biased and de-biased target distributions can be characterised for ease of comparison with the numerical results. We then apply our Anytime parallel tempering methodology in the realm of ABC ( Tavaré et al. (1997); Pritchard et al. (1999)). In ABC, simulation is used instead of likelihood evaluations, which makes it particularly useful for Bayesian problems where the likelihood is unavailable or too costly to compute. In Lee (2012), a more efficient MCMC kernel for ABC (as measured by the effective sample size), called the 1-hit MCMC kernel, was devised to significantly improve the probability that a good proposal in the direction of a higher posterior density is accepted, thus more closely mimicking exact likelihood evaluations. This new MCMC kernel was subsequently shown in Lee and Łatuszyński (2014) to also theoretically outperform competing ABC methods. The 1-hit kernel has a random execution time that depends on the part of the parameter space being explored, and is thus a good candidate for our Anytime parallel tempering method. In this paper, we show that we can improve the performance of the 1-hit MCMC kernel by introducing tempering and exchange moves, and embed the resulting parallel tempering algorithm within the Anytime framework to mitigate processor idling due to random local move completion times. Parallel tempering for ABC has been proposed by Baragatti et al. (2013), but hasn’t been studied in the Anytime context as we do for random local move completion times, nor has the more efficient 1-hit MCMC kernel been employed. We perform a detailed numerical study of the Lotka-Volterra predator-prey model, which has an intractable likelihood and is a popular example used to contrast methods in the ABC literature ( Fearnhead and Prangle (2012); Toni et al. (2009); Prangle et al. (2017)). The time taken to simulate from the Lotka-Volterra model is random and parameter value dependent; this randomness is in addition to that induced by the 1-hit kernel.

The Anytime parallel tempering framework can be applied in several contexts. For example, another candidate for our framework is reversible jump MCMC (RJ-MCMC) by Green (1995), which is a variable-dimension Bayesian model inference algorithm. An instance of RJ-MCMC within a parallel tempering algorithm is given in Jasra et al. (2007), where multiple chains are simultaneously updating states of variable dimensions (depending on the model currently considered on each chain), and the real completion time of local moves depends on the dimension of the state space under the current model. Additionally, in the fixed dimension parallel tempering setting, if the local moves use any of the following MCMC kernels, then they have a parameter dependent completion time and thus could benefit from an Anytime formulation: the no-U-turn sampler (NUTS) ( Hoffman and Gelman (2014)) and elliptical slice sampling ( Murray et al. (2010); Nishihara et al. (2014)). Even if the local moves do not take a variable random time to complete by design ( Friel and Pettitt (2008); Calderhead and Girolami (2009)), computer infrastructure induced variations, such as memory bandwidth, competing jobs, etc. can still affect the real completion time of local moves in a parallel tempering algorithm, such as in Rodinger et al. (2006). In the statistical mechanics literature, there are also parallel tempering-based simulation problems where the local move completion time is temperature- and parameter-dependent as well as random, e.g. see Hritz and Oostenbrink (2007); Karimi et al. (2011); Wang and Jordan (2003); Earl and Deem (2004), and thus could benefit from our Anytime formulation. Finally, the Anytime framework has not been tested beyond the SMC\(^2\) example of Murray et al. (2016), but it can be applied to any parallelisable population-based MCMC algorithm which includes local moves and interacting moves where all processors must communicate, such as sequential Monte Carlo (SMC) samplers ( Del Moral et al. (2006)), or parallelised generalised elliptical slice sampling ( Nishihara et al. (2014)).

This paper is structured as follows. Sections 2 and 3 develop our Anytime Parallel Tempering Monte Carlo (APTMC) algorithm and then Sect. 4 extends our framework further for the 1-hit MCMC kernel of Lee (2012) for ABC. Experiments are run in Sect. 5 and include a carefully constructed synthetic example to demonstrate the workings and salient features of Anytime parallel tempering. Section 5 also presents an application of Anytime parallel tempering to the problem of estimating the parameters of a stochastic Lotka-Volterra predator-prey model. Finally, Sect. 6 provides a summary and some concluding remarks.

2 Anytime Monte Carlo

Let \(\left( X_n\right) _{n=0}^{\infty }\) be a Markov chain with initial state \(X_0\), evolving on state space \({\mathcal {X}}\), with transition kernel \(X_n \, | \, x_{n-1} \sim \kappa (\text {d}x_n | x_{n-1})\) and target distribution \(\pi (\text {d}x)\). Define the hold time \(H_{n-1}\) as the random and positive real time required to complete the computations necessary to transition from state \(X_{n-1}\) to \(X_n\) via the kernel \(\kappa \). Then let \(H_{n-1} \, | \, x_{n-1} \sim \tau (\text {d}h_{n-1}|x_{n-1})\) where \(\tau \) is the hold time distribution.

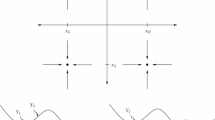

(Murray et al. (2016), Fig. 1) Real-time realisation of a Markov chain with states \(\left( X_n\right) _{n=0}^{\infty }\), arrival times \(\left( A_n\right) _{n=0}^{\infty }\) and hold times \(\left( H_n\right) _{n=0}^{\infty }\).

Assume that the hold time \(H> \epsilon >0\) for minimal time \(\epsilon \), \(\sup _{x \in {\mathcal {X}}} {\mathbb {E}}\left[ H\,|\,x\right] < \infty \), and the hold time distribution \(\tau \) is homogeneous in time. In general, nothing is known about the hold time distribution \(\tau \) except how to sample from it, i.e. by recording the time taken by the algorithm to simulate \(X_n \, | \, x_{n-1}\). Let \(\kappa (\text {d}x_n, \text {d}h_{n-1} | x_{n-1}) = \kappa (\text {d}x_n | h_{n-1}, x_{n-1}) \tau (\text {d}h_{n-1}|x_{n-1})\) be a joint kernel. The transition kernel \(\kappa (\text {d}x_n | x_{n-1})\) is the marginal of the joint kernel over all possible hold times \(H_{n-1}\). Denote by \(\left( X_n\right) _{n=0}^{\infty }\) and \(\left( H_n\right) _{n=0}^{\infty }\) the states and hold times of the joint process, and define the arrival time of the n-th state as

where \(a_0 := 0\). A possible realisation of the joint process is illustrated in Fig. 1.

Let the process \(N(t) := \sup \left\{ n:A_n\le t \right\} \) count the number of arrivals by time t. From this, construct a continuous Markov jump process \(\left( X, L\right) (t)\) where \(X(t) := X_{N(t)}\) and \(L(t):=t-A_{N(t)}\) is the lag time elapsed since the last jump. This continuous process describes the progress of the computation in real time.

Proposition 1

(Murray et al. (2016), Proposition 1) The continuous Markov jump process \(\left( X, L\right) (t)\) has stationary distribution given by

where \(\bar{F}_{\tau }\left( l \, | \, x \right) = 1-F_{\tau }\left( l \, | \, x \right) \), and \(F_{\tau }\left( l \, | \, x \right) \) is the cumulative distribution function (cdf) of \(\tau (\text {d}h_n|x_{n})\).

Corollary 1

( Murray et al. (2016), Corollary 2) The marginal \(\alpha (\mathrm {d}x)\) of the density in (3) is length-biased with respect to the target density \(\pi (\mathrm {d}x)\) by expected hold time, i.e.

The proofs of Proposition 1 and Corollary 1 are given in Murray et al. (2016).

The distribution \(\alpha \) is referred to as the anytime distribution and is the stationary distribution of the Markov jump process. Note that Proposition 1 suggests that when the real time taken to draw a sample depends on the state of the Markov chain, i.e. \({\mathbb {E}}[H\,|\,x]\ne {\mathbb {E}}[H]\), a length bias with respect to computation time is introduced. In other words, when interrupted at real time t, the state of a Monte Carlo computation targeting \(\pi \) is distributed according to the anytime distribution \(\alpha \), which can essentially be seen as a length-biased target distribution. This bias diminishes with time, and when an empirical approximation or average over all post burn-in samples is required, it may be rendered negligible for a long enough computation. However, the bias in the final state does not diminish with time, and when this final state is important—which is the case in parallel tempering—the bias cannot be avoided by running the algorithm for longer. We now discuss the approach in Murray et al. (2016) to correct this bias. The main idea is to make it so expected hold time is independent of X, which leads to \({\mathbb {E}}\left[ H\,|\,x\right] = {\mathbb {E}}\left[ H\right] \) and hence \(\alpha (\text {d}x) = \pi (\text {d}x)\), following Corollary 1. This is trivially the case for iid sampling as \(\kappa (\text {d}x|x_{n-1})=\pi (\text {d}x)\), so the hold time \(H_{n-1}\) for \(X_{n-1}\) is the time taken to sample \(X_n \sim \pi (\text {d}x)\), and therefore independent of the state \(X_{n-1}\). One approach to non-iid sampling involves simulating \(K+1\) Markov chains for \(K > 0\), where we assume for now that all the Markov chains are targeting \(\pi \) and using the same transition kernel \(\kappa \) and hold time distribution \(\tau \). These \(K+1\) chains are simulated on the same processor in a serial schedule. This ensures that whenever the real-time deadline t is reached, states from all but one of the chains, say the \((K+1)\)-th chain, are independently distributed according to the target \(\pi \). Since the \((K+1)\)-th chain is the currently working chain, i.e. the latest to go through the simulation process, its state at the real-time deadline is distributed according to the anytime distribution \(\alpha \). Simply discarding or ignoring the state of this \((K+1)\)-th chain eliminates the length bias. See Murray et al. (2016) (Section 2.1) for more details.

Using this multiple chain construction, it is thus possible draw samples from \(\pi \) by interrupting the process at any time t. This sets the basis for the focus of this paper: the Anytime Parallel Tempering Monte Carlo (APTMC) algorithm, described next. From this point onward, the number of chains on a given worker or processor within the Anytime framework is referred to as K rather than \(K+1\) for simplicity.

3 Anytime parallel tempering Monte Carlo (APTMC)

3.1 Overview

Consider the problem in which we wish to sample from target distribution \(\pi (\text {d}x)\). In a parallel tempering framework, construct \(\Lambda \) Markov chains where each individual chain \(\lambda \) targets the tempered distribution

and is associated with kernel \(\kappa _{\lambda }(\text {d}x_{n} \, | \, \text {d}x_{n-1})\) and hold time distribution \(\tau _{\lambda }(\text {d}h_{n}\,|\,x_{n})\). In this setting, the hold time distribution is not assumed to be homogeneous across all chains, and may be temperature-dependent. Assume that all \(\Lambda \) chains are running concurrently on \(\Lambda \) processors. We aim to interrupt the computations on a real-time schedule of times \(t_1, t_2, t_3, \ldots \) to perform exchange moves between adjacent pairs of chains before resuming the local moves. To illustrate the challenge of this task, we discuss the case where \(\Lambda =2\). Let \(\pi _2\) be the desired posterior and \(\pi _1\) the ‘warm’ chain, with associated hold time distributions \(\tau _1\) and \(\tau _2\), respectively. When the two chains are interrupted at some time t, assume that the current sample on chain 1 is \(X_m^{1}\) and that of chain 2 is \(X_n^2\). It follows from Corollary 1 that

and similarly for \(X_n^2\). Exchanging the samples using the acceptance probability in (2) is incorrect. Indeed, exchanging using the current samples \(X_m^1\) and \(X_n^2\), if accepted, will result in the sample sets \(\left\{ X_1^1, X_2^1, \ldots \right\} \) and \(\left\{ X_1^2, X_2^2, \ldots \right\} \) being corrupted with samples which arise from their respective length-biased, anytime distributions \(\alpha _1\) and \(\alpha _2\), as opposed to being exclusively from \(\pi _1\) and \(\pi _2\). Furthermore, the expressions for \(\alpha _1\) and \(\alpha _2\) will most often be unavailable, since their respective hold time distributions \(\tau _1\) and \(\tau _2\) are not explicitly known but merely implied by the algorithm used to simulate the two chains. Finally, we could wait for chains 1 and 2 complete their computation of \(X_{m+1}^1\) and \(X_{n+1}^2\) respectively, and then accept/reject the exchange \(\left( X_{m+1}^1, X_{n+1}^2\right) \rightarrow \left( X_{n+1}^2, X_{m+1}^1\right) \) according to (2). This approach won’t introduce a bias but can result in one processor idling while the slower computation finishes. We show this can result in significant idling in numerical examples.

In the next section, we describe how to correctly implement exchange moves within the Anytime framework.

3.2 Anytime exchange moves

Here, we adapt the multi-chain construction devised to remove the bias present when sampling from \(\Lambda \) Markov chains, where each chain \(\lambda \) targets the distribution \(\pi _{\lambda }\) for \(\lambda = 1, \ldots , \Lambda \). Associated with each chain is MCMC kernel \(\kappa _{\lambda }(\text {d}x^{\lambda }_n \, | \, \text {d}x^{\lambda }_{n-1})\) and hold time distribution \(\tau _{\lambda }(\text {d}h \, | \, x)\).

Proposition 2

Let \(\pi _\lambda (\mathrm {d}x)\), \(\lambda = 1\ldots , \Lambda \) be the stationary distributions of \(\Lambda \) Markov chains with associated MCMC kernels \(\kappa _{\lambda }(\mathrm {d}x^{\lambda }_n \, | \, \mathrm {d}x^{\lambda }_{n-1})\) and hold time distributions \(\tau _{\lambda }(\mathrm {d}h \, | \, x)\). Assume the chains are updated sequentially and let j be the index of the currently working chain. The joint anytime distribution is the following generalisation of Proposition 1

The proof of Proposition 2 is given in Appendix A.1. Conditioning on \(x^j\), j and l we obtain

Therefore, if exchange moves on the conditional \(A(\text {d}x^{1:\Lambda \setminus j}\, | \, x^j, l, j)\) are performed by ‘eliminating’ the j-th chain to obtain the expression in (5), they are being performed involving only chains distributed according to their respective targets \(\pi _\lambda \) and thus the bias is eliminated.

3.3 Implementation

On a single processor, the algorithm may proceed as in Algorithm 1, where in Step 3 the \(\Lambda \) chains are simulated one at a time in a serial schedule. Figure 2 provides an illustration of how the algorithm works.

Illustration of the progression of three chains in the APTMC algorithm on a single processor. The green (local move) and blue (exchange move) dots represent samples from the posterior being recorded as their respective local and exchange moves are completed. When exchange moves occur at \(t_1\), chain \(\lambda =1\) is currently moving and cannot participate in exchange moves without introducing a bias. Therefore it is ignored, and the exchange moves are performed on the remaining (inactive) chains. Similarly, at time \(t_2\) chain \(\lambda =2\) is excluded from the exchange. The widths of intervals \(t_1\) and \(t_2\) are for illustrating the exchange procedure only. (Color figure online)

When multiple processors are available, the \(\Lambda \) chains can be allocated to them. However, running a single chain on each processor means that when the real-time deadline occurs, all chains will be distributed according to their respective anytime distributions \(\alpha _{\lambda }\), and thus be biased as exchange moves occur. Therefore, all processors must contain at least two chains. A typical scenario would be each processor is allocated two or more temperatures to sample from. The implementation is defined as described in Algorithm 3. Note that the multiple chain construction eliminates the intractable densities in the acceptance ratio for the exchange step when \(\tau \) differs between processors, since exchange moves are performed between chains that are not currently working (i.e. on density (5) for a single processor and (6) for multiple processors), so the hold time distribution does not factor in.

Depending on the problem at hand and computing resources available, there are various approaches to distributing the chains across workers. We distinguish three possible scenarios. The first is an ideal scenario, where the number of processors exceeds \(\Lambda \) and the communication overhead between workers is negligible. In this scenario, each worker implements \(K=2\) chains running at the same temperature. For example, with \(W = \Lambda \) workers, worker \(w = \lambda \) contains 2 chains targeting \(\pi _{\lambda }\). The second scenario arises when the number of workers available is limited, but communication overhead is still negligible. In this case, the chains, sorted in increasing order of temperature, are divided evenly among workers. For example, with \(W = \frac{\Lambda }{2}\) workers, worker w could contain two chains, one with target \(\pi _{2w-1}\) and one with target \(\pi _{2w}\). The third scenario deals with non-negligible inter-processor communication overhead (which only affects the exchange moves). To account for this, exchange moves are divided into two types:

-

1.

Within-worker exchange move: performed on each individual worker in parallel, between a pair of adjacent chains. No communication between workers is necessary in this case.

-

2.

Between-worker exchange move: performed by selecting a pair of adjacent workers and exchanging between the warmest eligible chain from the first worker and coldest from the second. Thus, an exchange move between two adjacent chains is effectively being performed, except this time communication between workers is required.

3.4 Tuning considerations

In this section we discuss the issue of tuning Anytime parallel tempering by drawing on various ideas from the literature. The main concerns are the selection of the number of chains and their temperatures, the tuning of the local moves for each chain and the selection of appropriate hard deadlines for the exchange moves to occur. In our setting, the computational budget determines the number of chains \(\Lambda \), and for such a fixed budget we aim to improve sampling of the cold chain through the adoption of parallel tempering stages. The issue of determining the temperature of adjacent chains has been considered in Rathore et al. (2005); Kone and Kofke (2005); Atchadé et al. (2011) where it was shown that an exchange success rate of approximately 20-25% for adjacent chains is optimal, in an appropriate sense, and is demonstrated to confer the most benefit to sampling the coldest chain. However, the optimality curve (Kone and Kofke (2005); Atchadé et al. (2011)) has a broad mode, and even 40% seems appropriate. To achieve this 25% acceptance rate of exchange moves, other than employing pilot runs, adaptive tuning is possible and Miasojedow et al. (2013) use a Robbins-Munro scheme to adjust the temperatures to target a 25% acceptance rate during runtime. The next issue is local proposals, and how large a change of state one should attempt (for the local accept/reject step). This subject has received ample attention in the literature following the seminal paper by Roberts et al. (2001), where a 25% local move acceptance rate is again optimal. The local proposal can be a Gaussian proposal whose mean and covariance matrix are tuned online (Miasojedow et al. (2013)) via a Robbins-Munro scheme to achieve the 25% local move acceptance rate. The tuning of Gaussian proposals for MCMC in general was popularised by the seminal paper of Haario et al. (2001).

When performing exchange moves, rather than selecting a single pair of adjacent chains from \(\left\{ (1, 2), (2, 3), \ldots ,\right. \) \(\left. (\Lambda -1, \Lambda )\right\} \) for an exchange, it is common to propose to swap multiple pairs of chains simultaneously, as the exchange move is relatively cheap. To avoid selecting the same chain twice, they are divided into odd \(\left\{ (1, 2), (3, 4), \ldots \right\} \) and even \(\left\{ (2, 3), (4, 5), \ldots \right\} \) pairs of indices in Lingenheil et al. (2009), and all odd or even pairs are selected for exchange with equal probability. It is however shown in Syed et al. (2019) that it is better to deterministically cycle between exchanging odd and even pairs.

Although thus far we have suggested tuning the number of chains and annealing schedule for APTMC as if one were tuning a standard parallel tempering algorithm, there are some caveats which we now highlight. Selecting chains for exchange moves can be applied by omitting the currently working chains and relabelling the indices of the remaining, inactive or eligible chains. However, note that by the nature of the Anytime exchange moves, the Anytime version of an optimised parallel tempering algorithm can be suboptimal, since one or more temperature(s) might be missing from exchange moves. Considering the example in Fig. 2 and assuming the chains are all running at increasing temperatures, at \(t_2\), chain 2 is working, so the exchange move is performed between chains 1 and 3. In a practical example, these chains would be further apart, which would lead to a lower exchange move acceptance rate. Selecting adjacent chains to target a slightly higher successful exchange rate, say 40%, would mitigate this issue; noting that even 40% is close to optimal Kone and Kofke (2005); Atchadé et al. (2011). In our implementation, we only experienced a small drop in acceptance rate due to attempting to swap two eligible chains that are not immediately adjacent, and this event becomes less likely as the number of chains increases.

Another important facet of tuning APTMC is the issue of determining the real-time schedule \(t_1, t_2, \ldots \) of exchange moves. Let \(\delta \) be the real-time interval or deadline between exchange moves, so that \(t_i = i\delta \) for \(i=1, 2, \ldots \) We now present guidelines for calibrating \(\delta \). Let K be the number of chains, labelled \(k=1, \ldots , K\), on the slowest processor \(w_s\) (generally the one containing the cold chain), our experiments have shown that exchange moves should occur once every chain on this processor has completed at least one local move (see Dupuis et al. (2012) for an alternative view advocating an infinite exchange frequency version of parallel tempering). The expected hold time of one set of local moves on processor \(w_s\), denoted \({\mathsf {H}} := \sum _{k=1}^K{\mathbb {E}}[H_{k}]\), can be estimated by repeatedly measuring the time taken for one set of local moves to complete, and averaging across all measurements. Using a pilot run, an estimate \(\widehat{{\mathsf {H}}}\) of this expected hold time can be obtained, then set \(\delta = \widehat{{\mathsf {H}}}\) for running the APTMC algorithm. This \(\delta \) value can also be calibrated in real time, denoted \(\delta (t)\) where t is the real time. At \(t=0\), initialise \(\delta (0) = \delta _0\) such that \(\delta _0>0\) is an initial, user-defined guess. Similarly as before, record a hold time sample every time a set of local move occurs on processor \(w_s\), then after every exchange move, recompute \(\widehat{{\mathsf {H}}}\) and update \(\delta (t) = \widehat{{\mathsf {H}}}\). An advantage of this second approach is that \(\delta (t)\) then adapts to a potentially time-inhomogeneous hold time, due e.g. to competing jobs on the processors starting mid-algorithm and suddenly slowing down the computation time of local moves.

A scenario we encountered in our experiments was non-negligible communication overhead between workers to execute the exchange moves, and this overhead was comparable to local move times which were themselves lengthy. To mitigate the communication overhead, as described in Sect. 3.3, exchange moves are divided into within- and between- workers. On a given worker with K chains, a set of worker specific moves is performed before inter-worker exchanges. These were K local moves, one (set of) within-worker exchange moves, then K more local moves, before inter-worker communication occurs for between-worker exchange moves. Given that within-worker exchanges are instant, this amounts in real time to performing 2K local moves on this worker before inter-worker communication occurs. Therefore, the real-time deadline in the Anytime version of the algorithm for this scenario is set to be \(\delta = 2{\mathsf {H}}\) and can be determined as above. See Sect. 5.3.2 for an example.

Finally, Sect. 5.1.3 details other, empirical tools that help with tuning by assessing the efficiency of each chain. These include evaluating the sample autocorrelation function (acf), as well as the integrated autocorrelation time (IAT) and effective sample size (ESS).

4 Application to approximate Bayesian computation (ABC)

In this section we adapt the APTMC framework to ABC.

4.1 Overview of ABC

The notion of ABC was developed by Tavaré et al. (1997) and Pritchard et al. (1999). It can be seen as a likelihood-free way to perform Bayesian inference, using instead simulations from the model or system of interest, and comparing them to the observations available.

Let \(y \in {\mathbb {R}}^d\) be some data with underlying unknown parameters \(\theta \sim p(\text {d}\theta )\), where \(p(\theta )\) denotes the prior for \(\theta \in \Theta \). Suppose we are in the situation in which the likelihood \(f(y \, | \, \theta )\) is either intractable or too computationally expensive, which means that MCMC cannot be performed as normal. Assuming that it is possible to sample from the density \(f(\, \cdot \, | \, \theta )\) for all \(\theta \in \Theta \), approximate the likelihood by introducing an artificial likelihood \(f^{\varepsilon }\) of the form

where \(B_{\varepsilon }(y)\) denotes a metric ball centred at y of radius \(\varepsilon > 0\) and Vol\((\varepsilon )\) is its volume. The resulting approximate posterior is given by

The likelihood \(f^{\varepsilon }(y\,|\,\theta )\) cannot be evaluated either, but an MCMC kernel can be constructed to obtain samples from the approximate posterior \(\pi ^{\varepsilon }(\theta , x)\) defined as

where \(\mathbb {1}_{\varepsilon }(x)\) is the indicator function for \(x \in B_{\varepsilon }(y)\). This is referred to as hitting the ball \(B_{\varepsilon }(y)\). In the MCMC kernel, one can propose \(\theta ' \sim q(\text {d}\theta ' \,|\,\theta )\) for some proposal density q, simulate the dataset \(x \sim f(\text {d}x \,|\,\theta ')\) and accept \(\theta '\) as a sample from the posterior if \(x \in B_{\varepsilon }(y)\).

The 1-hit MCMC kernel, proposed by Lee (2012) and described in Algorithm 4 introduces local moves in the form of a ‘race’: given current and proposed parameters \(\theta \) and \(\theta '\), respectively simulate corresponding datasets x and \(x'\) sequentially. The state associated with the first dataset to hit the ball \(B_{\varepsilon }(y)\) ‘wins’ and is accepted as the next sample in the Markov chain. The proposal \(\theta '\) is also accepted if both x and \(x'\) hit the ball at the same time.

4.2 Anytime parallel tempering Monte Carlo for approximate Bayesian computation (ABC-APTMC)

Including the 1-hit kernel in the local moves of a parallel tempering algorithm is straightforward. Exchange moves must however be adapted to this new likelihood-free setting. Additionally, the race that occurs takes a random real time to complete, and this time is temperature-dependent, as it is quicker to hit a ball of larger radius. This provides good motivation for the use of Anytime Monte Carlo.

4.2.1 Exchange moves

The exchange moves for ABC are derived similarly as in Baragatti et al. (2013). Let \((\theta , x)\) and \((\theta ', x')\) be the states of two chains targeting \(\pi ^{\varepsilon }\) and \(\pi ^{\varepsilon '}\), respectively, where \(\varepsilon '>\varepsilon \). Here, this is equivalent to saying \(\theta '\) is the state of the ‘warmer’ chain. We already know that \(x'\) falls within \(\varepsilon '\) of the observations y, i.e. \(x' \in B_{\varepsilon '}(y)\). Similarly, we also know that \(x \in B_{\varepsilon }(y)\), and trivially that \(x \in B_{\varepsilon '}(y)\). If \(x'\) also falls within \(\varepsilon \) of y, then swap the states, otherwise do not swap. The odds ratio is

so the probability of the swap being accepted is the probability of \(x'\) also hitting the ball of radius \(\varepsilon \) centred at y. This type of exchange move is summarised in Algorithm 5.

4.2.2 Implementation

The full implementation of the ABC-APTMC algorithm on a single processor (ABC-APTMC-1) is described in Algorithm 6. The multi-processor algorithm can similarly be modified to reflect these new exchange moves and the resulting algorithm is referred to as ABC-APTMC-W.

5 Experiments

In this section, we first illustrate the workings of the algorithms presented in Sect. 3.3 on a simple model, in which real-time behaviour is simulated using virtual time and an artificial hold distribution. The model is also employed to demonstrate the gain in efficiency provided by the inclusion of exchange moves. Then, the ABC version of the algorithms, as presented in Sect. 4, is applied to two case studies. The first case is a simple model and serves to verify the workings of the ABC algorithm, including bias correction. The second case considers the problem of estimating the parameters of a stochastic Lotka-Volterra predator-prey model − in which the likelihood is unavailable − and serves to evaluate the performance of the Anytime parallel tempering version of the ABC-MCMC algorithm, as opposed to the standard versions (with and without exchange moves) on both a single and multiple processors. The exchange moves are set up so that multiple pairs could be swapped at each iteration. All experiments in this paper were run on Matlab and the code is available at https://github.com/alixma/ABCAPTMC.git.

5.1 Analytic example: Gamma mixture model

In this example we attempt to sample from an equal mixture of two Gamma distributions using the APTMC algorithm. Define the target \(\pi (\text {d}x)\) and an ‘artificial’ hold time \(\tau (\text {d}h \, | \, x)\) distribution as follows:

with mixture coefficients \(\phi = \frac{1}{2}\) and \(\psi \), where Gamma\((\,\cdot \,,\,\cdot \,)\) denotes the probability density function of a Gamma distribution, with shape and scale parameters \((k_1, \theta _1)\) and \((k_2, \theta _2)\) for each components, respectively, and with polynomial degree p, assuming it remains constant for both components of the mixture.

In the vast majority of experiments, the explicit form of the hold time distribution \(\tau \) is not known, but observed in the form of the time taken by the algorithm to simulate X. For this example, so as to avoid external factors such as competing jobs affecting the hold time, we assume an explicit form for \(\tau \) is known and simulate virtual hold times. This consists of simulating a hold time \(h \sim \tau (\text {d}h \, | \, x)\) and advancing the algorithm forward for h units of virtual time without updating the chains, effectively ‘pausing’ the algorithm. These virtual hold times are introduced such that what in a real-time example would be the effects of constant \((p=0)\), linear \((p=1)\), quadratic \((p=2)\) and cubic \((p=3)\) computational complexity can be studied. Another advantage is that the anytime distribution \(\alpha _{\Lambda }(\text {d}x)\) of the cold chain can be computed analytically and is the following mixture of two Gamma distributions

where

Density estimates of the cold chain for bias corrected and uncorrected runs of the single (APTMC-1) and multi-processor (APTMC-W) algorithms on various hold time distributions \(p \in \left\{ 0, 1, 2, 3\right\} \). In the single-processor case, the cold chain is made up entirely of updates resulting from exchange moves. The solid dark grey line represents the true posterior density \(\pi \) and the solid light grey line the anytime distribution \(\alpha \). The case \(p=0\) represents an instance in which, in a real-time situation, the local moves do not take a random time to complete, and therefore all densities are identical. The two green dashed lines represent bias corrected densities and the red dot-dashed represent uncorrected densities. For \(p\ge 1\), the two corrected densities are identical to the posterior, indicating that the bias correction was successful. (Color figure online)

We refer the reader to Appendix A.2 for the proof of (8). In the anytime distribution, one of the components of the Gamma distribution will have an associated mixture coefficient \(\varphi (p, k_{1:2}, \theta _{1:2})\) or \(1-\varphi (p, k_{1:2}, \theta _{1:2})\) which increases with p while the coefficient of the other component decreases proportionally. Note that for constant \((p=0)\) computational complexity, the anytime distribution is equal to the target distribution \(\pi \).

5.1.1 Implementation

On a single processor, the Anytime Parallel Tempering Monte Carlo algorithm (referred to as APTMC-1) is implemented as follows: simulate \(\Lambda = 8\) Markov chains, each targeting the distribution \(\pi _{\lambda }(\text {d}x) = \pi (\text {d}x)^{\frac{\lambda }{\Lambda }}\). To construct a Markov chain \((X^{\lambda })_{n=0}^{\infty }\) with target distribution

for \(\lambda = 1, \ldots , \Lambda \), use a Random Walk Metropolis update, i.e. symmetric Gaussian proposal distribution \({\mathcal {N}}(x_n^{\lambda }, \sigma ^2)\) with mean \(x_n^{\lambda }\) and standard deviation \(\sigma = 0.5\). Set \((k_1, k_2) = (3, 20)\), \((\theta _1, \theta _2) = (0.15, 0.25)\) and use \(p \in \left\{ 0, 1, 2, 3\right\} \). The single processor algorithm is run for \(T = 10^8\) units of virtual time, with exchange moves alternating between occurring on all odd (1, 2), (3, 4), (5, 6) and all even (2, 3), (4, 5), (6, 7) pairs of inactive chains every \(\delta = 5\) units of virtual time. When the algorithm is running, a sample is recorded every time a local or exchange move occurs.

On multiple processors, the APTMC algorithm (referred to as APTMC-W) is implemented similarly. A number of \(W = \Lambda = 8\) processors is used, where each worker \(w = \lambda \) contains \(K=2\) chains, all targeting the same \(\pi _{\lambda }\) for \(\lambda = 1, \ldots , \Lambda \). The multiple processor algorithm is run for \(T = 10^7\) units of virtual time, with exchange moves alternating between occurring on all odd (1, 2), (3, 4), (5, 6), (7, 8) and all even (2, 3), (4, 5), (6, 7) pairs of workers every \(\delta =5\) units of virtual time. On each worker, the chain which was not working when calculations were interrupted is the one included in the exchange moves.

5.1.2 Verification of bias correction

To check that the single and multiple processor algorithms are successfully correcting for bias, they are also run uncorrected, i.e. not excluding the currently working chain. This means that exchange moves are also performed on samples distributed according to \(\alpha \) instead of \(\pi \), thus causing the algorithm to yield biased results. Since the bias is introduced by the exchange moves (when they are performed on \(\alpha \)), we attempt to create a ‘worst case scenario’, i.e. maximise the amount of bias present when the single processor algorithm is uncorrected. The algorithm is further adjusted such that local moves are not performed on the cold chain and it is instead solely made up of samples resulting from exchange moves with the warmer chains. The multi-processor APTMC-W algorithm is not run in a ‘worst case scenario’, so local moves on the cold chain of the multi-processor algorithm are therefore allowed. This is meant to reveal how the bias caused by failing to correct when performing exchange moves across workers is still apparent, if less strongly.

Figure 3 shows kernel density estimates of the post burn-in cold chains resulting from runs of the APTMC-1 and APTMC-W algorithms, uncorrected and corrected for bias. As expected, when the hold time does not depend on x, which corresponds to the case there \(p=0\), no bias is observed. On the other hand, the cold chains for the single-processor algorithm with computational complexity \(p \in \left\{ 1, 2, 3\right\} \) have been corrupted by biased samples and converged to a shifted distribution which puts more weight the second Gamma mixture component, instead of an equal weight. Additionally, the bias becomes stronger as computational complexity p increases. A similar observation can be made for the cold chains from the multi-processor experiment − which display a milder bias due to local moves occurring on the cold chain. The green dashed densities indicate that when the algorithms are corrected, i.e. when the currently working chain is not included in exchange moves, it successfully eliminates the bias for all \(p \in \left\{ 1, 2, 3\right\} \) to return the correct posterior \(\pi \) − despite even this being the ‘worst case scenario’ in the case of the APTMC-1 algorithm. Note that the uncorrected density estimates do not exactly correspond to the anytime distributions. This has nothing to do with burn-in, but with the proportion of biased samples (from exchange moves) present in the chain.

5.1.3 Performance evaluation

Next we verify that introducing the parallel tempering element to the Anytime Monte Carlo algorithm improves performance. A standard MCMC algorithm is run for computational complexities \(p \in \left\{ 0, 1, 2, 3\right\} \), applying the random walk Metropolis update described in Sect. 5.1.1. The single and multiple processor APTMC algorithms are run again for the same amount of virtual time, with exchange moves occurring every \(\delta _{0:2}=5\) units of virtual time for \(p\le 2\) and every \(\delta _3 = 30\) units when \(p=3\). The single processor version is run on \(\Lambda _s = 8\) chains, and the multi-processor on \(W = 8\) workers, with \(K=2\) chains per worker, so \(\Lambda _m = 16\) chains in total. This time, local moves are performed on the cold chain of the single processor APTMC-1 algorithm.

To compare results, kernel density estimates of the posterior are obtained from the post burn-in cold chains for each algorithm using the kde function in MATLAB (2019), developed by Botev et al. (2010). It is also important to note that even though all algorithms run for the same (virtual) duration, the standard MCMC algorithm is performing local moves on a single chain uninterrupted until the deadline, while the APTMC-1 algorithm has to update \(\Lambda =8\) chains in sequence, and each worker w of the APTMC-W algorithm has to update \(K=2\) chains in sequence before exchange moves occur. Therefore, by (virtual) time T the algorithms will not have returned samples of similar sizes. For a fair performance comparison, the sample autocorrelation function (acf) is estimated first of all. When available, the acf is averaged over multiple chains to reduce variance in its estimates. Other tools employed are

-

Integrated Autocorrelation Time (IAT), the computational inefficiency of an MCMC sampler. Defined as

$$\begin{aligned} IAT_s = 1+2\sum _{\ell =1}^{\infty } \rho _s({\ell }), \end{aligned}$$where \(\rho _s({\ell })\) is the autocorrelation at the \(\ell \)-th lag of chain s. It measures the average number of iterations required for an independent sample to be drawn, or in other words the number of correlated samples with same variance as one independent sample. Hence, a more efficient algorithm will have lower autocorrelation values and should yield a lower IAT value. Here, the IAT is estimated using a method initially suggested in Sokal (1997) and Goodman and Weare (2010), and implemented in the Python package emcee by Foreman-Mackey et al. (2013) (Section 3). Let

$$\begin{aligned} {\hat{IAT}}_s = 1 + 2\sum _{\ell =1}^{M} {\hat{\rho }}_s({\ell }), \end{aligned}$$where M is a suitably chosen cutoff, such that noise at the higher lags is reduced. Here, the smallest M is chosen such that \(M \ge C{\hat{\rho }}_s(M)\) where \(C\approx 6\). More information on the choice of C is available in Sokal (1997).

-

Effective Sample Size (ESS), the amount of information obtained from an MCMC sample. It is closely linked to the IAT by definition:

$$\begin{aligned} ESS_s = \frac{N_s}{IAT_s}, \end{aligned}$$where \(N_s\) is the size of the current sample s. The ESS measures the number of independent samples obtained from MCMC output.

As per Foreman-Mackey et al. (2013), when multiple repeat runs of an experiment are performed (see Sect. 5.3), the IAT for a given algorithm is obtained by averaging the acf returned by this algorithm over the repeat runs, and the resulting ESSs of each run are summed to obtain a cumulative ESS for this algorithm.

Density estimates of the cold posterior for runs of the single (orange) and multiple (blue) processor APTMC algorithms (APTMC-1 and APTMC-W, respectively) as well as the standard (green) MCMC algorithm. The grey line represents the true posterior density \(\pi \). Each plot corresponds to a different hold time distribution \(p \in \left\{ 0, 1, 2, 3\right\} \). While the multi processor density has successfully converged for all p − as evidenced by the perfect overlap between the grey and dark blue lines −, the other two algorithms tend to struggle more and more to estimate the first mode of the posterior as p increases. (Color figure online)

The resulting ESS and IAT for different algorithms and computational complexities are computed and shown in Table 1. If an exchange move is accepted, the new state of the chain does not depend on the value of the previous state. This means that the autocorrelation in a chain containing a significant proportion of (accepted) samples originating from exchange moves will be lower. For low p, significantly more local moves occur before each deadline, as hold times are short, while for a higher p, the hold times are longer and hence fewer local moves are able to occur. Therefore, higher values of p will yield a higher proportion of samples from exchange moves, and thus a more notable increase in efficiency.

In Fig. 4 we observe, that the quality of the posterior estimates decreases as p increases. As a matter of fact, \(10^7\) units of virtual time tend to not be enough for the some of the posterior chains to completely converge. Indeed, while the standard MCMC algorithm performs reasonably well for \(p=0\), it becomes increasingly harder for it to fully converge for higher computational complexities. Similarly, the single processor APTMC-1 algorithm returns reasonably accurate posterior estimates for \(p\le 2\) but then visibly underestimates the first mode of the true posterior for \(p=3\). In general, the multi-processor APTMC-W algorithm returns results closest to the true cold posterior for all p.

As for efficiency, Table 1 displays a much lower IAT and much higher ESS for both APTMC algorithms, indicating that they are much more efficient than the standard MCMC algorithm. This is further supported by the sample autocorrelation decaying much more quickly for APTMC algorithms than for the MCMC algorithm for all p in Fig. 5. The multi-processor APTMC-W algorithm also yields IAT values that are lower than those returned by the single processor APTMC-1 algorithm for \(p<3\), and similarly yields ESSs that are higher for all p. The ESS and IAT values for chains that have not converged to their posterior (their resulting kernel density estimates significantly under or overestimate modes in Fig. 4) have been omitted from the table.

Next, we consider an application of the APTMC framework to ABC, a class of algorithms that are well-adapted to situations in which the likelihood is either intractable or computationally prohibitive. ABC features a real hold time at each MCMC iteration, making it an ideal candidate for adaptation to the Anytime parallel tempering framework.

Plots of the sample autocorrelation function up to lag 2500 of the post burn-in cold chain for runs of the single (orange) and multiple (blue) processor APTMC algorithms (APTMC-1 and APTMC-W, respectively) as well as for the output of the standard Anytime Monte Carlo (MCMC) algorithm (green). Each plot corresponds to a different computational complexity \(p \in \left\{ 0, 1, 2\right\} \). The two APTMC algorithms perform considerably better than standard MCMC for all p. The sample acf plot for \(p=3\) has been omitted due to both the APTMC-1 and MCMC chains not having fully converged to their posterior. (Color figure online)

5.2 ABC example: univariate Normal distribution

To validate the results of Sect. 4.2, consider another simple example, initially featured in Lee (2012), and adapted here within the APTMC framework. Let Y be a Gaussian random variable, i.e. \(Y \sim {\mathcal {N}}(\theta , \sigma ^2)\), where the standard deviation \(\sigma \) is known but the mean \(\theta \) is not. The ABC likelihood here is

for \(\varepsilon > 0\) where \(\Phi (\,\cdot \,)\) is the cumulative distribution function (cdf) of a standard Gaussian. Using numerical integration tools in Matlab, it is possible to obtain a good approximation of the true posterior for any \(\varepsilon \) for visualisation. Let \(y=3\) be an observation of Y and \(\sigma ^2=1\), and put the prior \(p(\theta ) = {\mathcal {N}}\left( 0, 5\right) \) on \(\theta \). In this example, the exact posterior distribution for \(\theta \) can straightforwardly be shown to be \({\mathcal {N}}\left( \frac{5}{2}, \frac{5}{6}\right) \).

Kernel density estimates of all chains for corrected and uncorrected runs of the single processor ABC-APTMC algorithm. In each subplot, the light grey line is fixed and represents the cold posterior for reference, the dark grey line represents each chain’s target posterior (obtained by numerical integration), the dot-dashed green lines are kernel density estimates of the chain’s posterior returned by the corrected algorithm and are indistinguishable from the dark grey line. The orange lines are kernel density estimates for the uncorrected algorithm, and do not agree with the dark grey line, as expected. (Color figure online)

When performing local moves (Algorithm 4), use a Gaussian random walk proposal with standard deviation \(\xi =0.5\). The real-time Markov jump process is run using \(\Lambda = 10\) chains. The algorithm is run on a single processor for one hour or \(T=3600\) seconds in real time after a 30 second burn-in, with exchange moves occurring every \(\delta _T=5 \times 10^{-4}\) seconds (or 0.5 milliseconds). The radii of the balls \(\varepsilon ^{1:\Lambda }\) are defined to vary between \(\varepsilon ^1=0.1\) and \(\varepsilon ^{\Lambda }=1.1\).

We verify that bias correction must be applied for all chains to converge to the correct posterior. This is done by visually comparing density estimates of each of the post burn-in chains to the true corresponding posterior (obtained by numerical integration). When bias correction is not applied, the ABC-APTMC algorithm does not exclude the currently working chain j in its exchange moves. In this case, every chain converges to an erroneous distribution which overestimates the mode of its corresponding posterior, as is visible in Fig. 6. On the other hand, correcting the algorithm for such bias ensures that every chain converges to the correct corresponding posterior.

Next, we compare the performance of the ABC-APTMC algorithm to that of a standard ABC (referred to as standard ABC) algorithm. For that, a more applied parameter estimation example is considered, for which the adoption of a likelihood-free approach is necessary.

5.3 Stochastic Lotka-Volterra model

In this section, we consider the stochastic Lotka-Volterra predator-prey model ( Lotka (1926), Volterra (1927)), a model which has been explored in the past ( Wilkinson (2011); Boys et al. (2008)), including in an ABC setting (Lee and Łatuszyński (2014); Fearnhead and Prangle (2012); Toni et al. (2009); Prangle et al. (2017)). Let \(X_{1:2}(t)\) be a bivariate, integer-valued pure jump Markov process with initial values \(X_{1:2}(0) = (50, 100)\), where \(X_1(t)\) represents the number of prey and \(X_2(t)\) the number of predators at time t. For small time interval \(\Delta t\), we describe the predator-prey dynamics in the following way:

In this example, the only observations available are the number of prey, i.e. \(X_1\) at 10 discrete time points. Following theory in Wilkinson (2011) (Chapter 6), the process can be simulated and discretised using the Gillespie (1977) algorithm, in which the inter-jump times follow an exponential distribution. The observations employed were simulated in Lee and Łatuszyński (2014) with true parameters \(\theta = (1, 0.005, 0.6)\), giving \(y = \left\{ 88, 165, 274, 268, 114, 46, 32, 36, 53, 92\right\} \) at times \(\left\{ 1, \ldots , 10\right\} \).

This case study presents various challenges. The first challenge is that the posterior is intractable, and some of the components of the parameters \(\theta :=\theta _{1:3}\) (namely \(\theta _2\) and \(\theta _3\)) exhibit strong correlations. Therefore, ABC must be employed, and the ‘ball’ considered takes the following form for \(\varepsilon >0\):

So a set of simulated \(X_1(t)\) is considered as ‘hitting the ball’ if all 10 simulated data points are at most \(e^\varepsilon \) times (and at least \(e^{-\varepsilon }\) times) the magnitude of the corresponding observation in y.

In Lee and Łatuszyński (2014) (Algorithm 3), the 1-hit MCMC kernel (referred to here as standard ABC) is shown to return the most reliable results by comparison with other MCMC kernels, which are not considered here. The second challenge is that while this algorithm can be reasonably fast, it is highly inefficient as it has a very low acceptance rate, and thus the autocorrelation between samples for low lags is very high.

We have established that the race step in Algorithm 4 takes a random time to complete. In addition to that, its hold time distribution for the Lotka-Volterra model is a mixture between quick and lengthy completion times, as the simulation steps within the 1-hit kernel race are capable of taking a considerable amount of time despite often being almost instant. Indeed, when simulating observations using the discretised Gillespie algorithm, if the number of predators is low, prey numbers will flourish and the simulation will take longer. Hence, the third challenge in this particular model is that the race can sometimes get stuck for extended periods of time if the number of prey to simulate is especially high. Therefore, we aim to first of all improve performances by introducing exchange moves on a single processor (ABC-PTMC). Then − and most importantly − we further improve the algorithm by implementing it within the Anytime framework (ABC-APTMC), a method which works especially well on multiple processors.

5.3.1 One processor

The first part of this case study is run on a single processor and serves to demonstrate the gain in efficiency introduced by the exchange moves described in Algorithm 5. Define the prior on \(\theta \in \left[ 0, \infty \right) ^3\) for the single processor experiment to be \(p(\theta ) = \exp \left\{ -\theta _1 - \theta _2 - \theta _3\right\} \). The proposal distribution is a truncated normal, i.e. \(\theta ' \, | \, \theta \sim {{\mathcal {T}}}{{\mathcal {N}}}(\theta , \Sigma )\), \(\theta ' \in (0, 10)\) with mean \(\theta \) and covariance \(\Sigma =\text {diag}\left( 0.25, 0.0025, 0.25\right) \). The truncated normal is used in order to ensure that all proposals remain non-negative. For reference, 2364 independent samples from the posterior are obtained via ABC rejection sampling with \(\varepsilon =1\) and the density estimates in Figure 6 of Lee and Łatuszyński (2014) are reproduced. To obtain these posterior samples, \(10^7\) independent samples from the prior were required, yielding the very low \(0.024\%\) acceptance rate. This method of sampling from the posterior is therefore extremely inefficient, and the decision to resort to MCMC kernels in order to improve efficiency is justified.

On a single processor, the three algorithms considered are the vanilla 1-hit MCMC kernel (standard ABC) defined in Algorithm 4, the single processor version of the algorithm with added exchange moves (ABC-PTMC-1) and the same but within the Anytime framework (ABC-APTMC-1). They are run nine times for 100800 seconds (28 hours) − after 3600 seconds (1 hour) of burn-in − and their main settings are summarised in Table 2.

Given the aim is to compare the performance of these algorithms, it is important to note that the parallel tempering algorithms, having to deal with updating multiple chains sequentially, are likely to return cold chains with fewer samples. The algorithms must therefore be properly set up such that the gain in efficiency introduced by exchange moves is not overshadowed by the greater number of chains and computational cost of having to update them all. In this experiment, the parallel tempering algorithms are run on \(\Lambda =6\) chains, each targeting posteriors associated with balls of radii \(\varepsilon ^{1:6} = \left\{ 1, 1.1447, 1.3104, 1.5, 11, 15\right\} \) and the proposal distribution has covariance \(\Sigma ^{1:6}\) where \(\Sigma ^{\lambda } = \text {diag}\left( \sigma ^{\lambda } , \sigma ^{\lambda } 10^{-2}, \sigma ^{\lambda } \right) \) and \(\sigma ^{1:6} = \left\{ 0.008, 0.025, 0.05, 0.09, 0.25, 0.5\right\} \). Exchange moves are performed as described in Algorithm 5 and alternate between odd (1, 2), (3, 4), (5, 6) (excluding (5, 6) in the Anytime version) and even (2, 3), (4, 5) pairs of eligible chains. As per Sect. 3.4, exchange moves for the ABC-PTMC-1 algorithm are performed every \(\Lambda =6\) local moves, and the real-time deadline \(\delta \) for exchange moves in the ABC-APTMC-1 algorithm is determined by repeatedly measuring the times taken by the ABC-PTMC-1 algorithm to perform these 6 moves and setting \(\delta \) to be the median over all measured times.

5.3.2 Multiple processors

Next, we demonstrate the gain in efficiency introduced by running the parallel tempering algorithm within the Anytime framework on multiple processors. The algorithms considered are the multi-processor ABC-PTMC-W and ABC-APTMC-W algorithms and their single processor counterparts ABC-PTMC-1 and ABC-APTMC-1 . This time, we define a uniform prior between 0 and 3. The proposal distribution is still a truncated normal, but with tighter limits (corresponding to the prior) i.e. \(\theta ' \, | \, \theta \sim T{\mathcal {N}}(\theta , \Sigma )\), \(\theta ' \in (0, 3)\).

The two algorithms are run on \(\Lambda = 20\) chains, each targeting posteriors associated with balls of radii ranging from \(\varepsilon ^{1} = 1\) to \(\varepsilon ^{20} = 11\) and proposal distribution covariances \(\Sigma ^{1:20}\) where \(\Sigma ^{\lambda } = \text {diag}\left( \sigma ^{\lambda } , \sigma ^{\lambda } 10^{-2}, \sigma ^{\lambda } \right) \) for chain \(\lambda =1, \ldots , 20\) and where values range from \(\sigma ^1 = 0.008\) to \(\sigma ^{20} = 0.5\) (see Table 6). The algorithms are run four times for 864000 seconds (24 hours) and their main settings are summarised in Table 3. Given the non-negligible 1.1 second communication overhead, this experiment is run according to the third scenario from Sect. 3.3, i.e. dividing exchange moves into within- and between-worker exchange moves. As described in Sect. 3.4, a full set of parallel moves here consists of \(K=5\) local moves, followed by within-worker exchange moves between a pair of adjacent chains selected at random, followed by 5 more local moves. The between-worker exchange moves are performed after a full set of parallel moves on the master by selecting a pair of adjacent workers at random and exchanging between the warmest eligible chain from the first worker and coldest from the second so that they are adjacent.

5.3.3 Performance evaluation

All algorithms returned density estimates that were close to those obtained via rejection sampling. In order to compare the performance of the algorithms, they are set to run for the same real time period. The IAT and cumulative ESS over all repeat runs are computed for all algorithms. The ESS is particularly important here, as it gives us how many effective samples the different algorithms can return within a fixed time frame. For example, a very fast algorithm could still return a higher ESS even if it has a much higher IAT. Additionally, to illustrate how the Anytime version of the parallel tempering algorithms works compared to standard ABC-PTMC, the real times all algorithms take to perform local and exchange moves are measured and their timelines plotted in Fig. 8.

One processor

Both the ABC-PTMC-1 and ABC-APTMC-1 algorithm display an improvement in performances: they return IATs that are respectively 3.2 and 1.6 times lower on average than those of the standard ABC algorithm in Table 4, and display a steeper decay in sample autocorrelation in Fig. 7. In the 28 hours (post burn-in) during which the algorithms ran, both parallel algorithms also yielded an increased ESS. The effect of the Anytime framework on the behaviour of the parallel tempering algorithm is demonstrated in Fig. 8. The timeline of local moves for the ABC-PTMC-1 algorithm illustrates the fact that local moves take a random amount of time to complete. In the Anytime version of the algorithm, this is mitigated since a deadline was implemented. As a result, the bottom plot in Fig. 8 displays more consistent local move times.

Note that in Table 4, while the improvement in IAT is significant, the increase in ESS after 28 hours is not particularly huge. This is due to the previously mentioned erratic behaviour of the hold time distribution for this example. Other examples explored such as the moving average example in Marin et al. (2012) (not reported here) yielded a much more significant increase in ESS after introducing exchange moves. We also note that in this example, the ABC-PTMC-1 algorithm returned a lower IAT than its Anytime counterpart but Anytime had a larger ESS. There are two potential reasons to account for the IAT. The first is the many swaps which are cycling the same samples among the held chains of Anytime. The second, as mentioned in Sect. 3.4, is that the Anytime algorithms cannot always exchange the samples of adjacent chains, because they must exclude the chain that is currently computing, and this causes a slightly higher rejection rate compared to the standard version (in the multi-processor example with more chains, this is mitigated). However, the many more swap moves of Anytime does then result in more returned samples, which leads to a higher ESS. The single processor experiment was mainly designed to demonstrate the performance improvements brought by adding exchange moves to the 1-hit MCMC kernel (referred to as standard ABC) and to show that Anytime does match the performance of parallel tempering on a single processor. Since a single processor is never idling, the strength of the Anytime framework is best illustrated in a multi-processor setting.

Plots of the sample autocorrelation function up to lag 200 of the cold chain for runs of the standard ABC (green), ABC-PTMC-1 (blue) and ABC-APTMC-1 (orange) algorithms to estimate the posterior distributions of the parameters \(\theta = \left( \theta _1, \theta _2, \theta _3\right) \) of a stochastic Lotka-Volterra model. The inclusion of exchange moves boosts efficiency and leads to a steeper decay in the parallel tempering algorithms. (Color figure online)

Timeline of local and exchange moves for the ABC-PTMC-1 and ABC-APTMC-1 algorithms for the first 300 seconds. The exchange moves are represented by the white and red lines and the local moves by the dark blue and orange coloured blocks. Local moves take a random amount of time to complete, as illustrated by the times consumed by local moves for the ABC-PTMC-1 algorithm. The Anytime (ABC-APTMC-1) version effectively implements a hard deadline for the exchange moves (without introducing a bias), as can be seen by the regularity of local move times in the bottom figure. (Color figure online)

5.3.4 Multiple processors

In the multi-processor case study, both the ABC-PTMC-1 and ABC-PTMC-W were set so that on each worker, an exchange move occurred after all chains had been updated locally once, as described in Table 3. The total number of samples returned by the ABC-PTMC-W algorithm is higher for all chains (see Table 6). However, the ABC-PTMC-W algorithm is just as affected by the distribution of the hold times being a mixture of quick and lengthy completion times as its single processor counterpart, and is just as prone to getting stuck in a race for an extended period. During this time, all processors sit idle while waiting for the race to complete, as illustrated in Fig. 9. Therefore, the ABC-PTMC-W algorithm struggles to properly boost the total sample size output by the cold chain, and the ESS is not markedly higher on average in Table 5. On the other hand, thanks to the real time deadlines implemented, the ABC-APTMC-W algorithm is able to more than double the total size of the samples output (see Table 6), and the ESSs for the cold chain returned in Table 5 are on average 3.41 times higher than those of the single processor version.

As for the main comparison − namely Anytime vs standard ABC with exchange moves − both single and multi-processor Anytime algorithms return an ESS larger than their respective standard versions in Table 5. While the improvement on a single processor is modest, the ESS has more than quadrupled on multiple processors. Figures 9 and 10 illustrate well the advantage of implementing a real-time deadline to local moves. At most local moves, the issue in which all workers sit idle waiting for the slowest to finish arises for the ABC-PTMC-W algorithm. On the other hand, Fig. 10 shows that the Anytime version of the algorithm is making better use of the allocated computational resources. The Anytime framework ensures that none of the workers need to wait for the slowest among them to finish, allowing for more exploration of the sample space in the faster workers. Additionally, the real time deadline ensures that even if chain k on worker w remains stuck in a race for an extended period of time, the other workers are still updating. Therefore, while the remaining four chains on worker w wait for chain k to complete its race, they also continue to be updated at regular intervals thanks to the exchange moves with other workers. The addition of ABC exchange moves in his case study proved fruitful, as the ESS for the parameters of the Lotka-Volterra model was increased.

6 Conclusion

In an effort to increase the efficiency of MCMC algorithms, in particular for use on multiple processors, and for situations in which compute times of the algorithms depend on their current states, the APTMC algorithm was developed. The algorithm combines the enhanced exploration of the state space, provided by the between-chain exchange moves in parallel tempering, with control over the real-time budget and robustness to interruptions available within the Anytime Monte Carlo framework. Then, an application of APTMC to problems where the likelihood is unavailable and an ABC MCMC kernel, in particular the 1-hit MCMC kernel, must be employed instead was considered.

Timeline of local and exchange moves for the ABC-PTMC-W algorithm for the first 300 seconds. Within and between worker exchange moves are represented by the white lines on the Global timeline and blue lines on the various Worker timelines, respectively. Local moves on each worker are represented by the light blue coloured blocks and the dark blue coloured blocks correspond to the global time all workers spend running in parallel, including communication overhead. Significant idle time is apparent on all workers as they always have to wait for the slowest among them to complete its set of local moves. (Color figure online)

Timeline of local and exchange moves for the ABC-APTMC-W algorithm for the first 300 seconds. Within and between worker exchange moves are represented by the red lines. Local moves on each worker are represented by the various orange coloured blocks, with the brighter blocks corresponding to the global time all workers spend running in parallel, including communication overhead. The significant idle time from Fig. 9 has been greatly reduced thanks to the deadlines implemented

Initially, the construction of the Anytime Monte Carlo algorithm with the inclusion of exchange moves on a single and multiple processors was verified on a Gamma mixture example. The performance improvements they brought were then demonstrated by comparing the algorithm to a standard MCMC algorithm. Subsequently, the exchange moves were adapted for pairing with the 1-hit MCMC kernel, a simulation-based algorithm within ABC framework, which provides an attractive, likelihood-free approach to MCMC. The construction of the adapted ABC algorithm was verified using a univariate normal example. Then, the increased efficiency of the inclusion of exchange moves was demonstrated in comparison to that of a standard ABC algorithm on a parameter estimation problem. The problem involved the parameters of a stochastic Lotka-Volterra predator-prey model based on partial and discrete data, and the likelihood of this model is intractable. On a single processor, it was shown that introducing exchange moves provides an improvement in performance and an increase in the effective sample size compared to that of the standard, single chain ABC algorithm. The Anytime results for a single processor matches the efficiency of standard parallel tempering, which is to be expected since the single processor is never idling in both the Anytime and non-Anytime versions. The ESS gains of Anytime become significant in a multi-processor setting since one slow processor will lead to all the others idling in standard parallel tempering.

One major class of applications with local moves that have state-dependent real completion times and could benefit from the APTMC framework are transdimensional applications, such as RJ-MCMC ( Green (1995)), which has a parallel tempering implementation in Jasra et al. (2007). The performance of parallel tempering algorithms with temperature-dependent completion times, as addressed in Earl and Deem (2004), can also be improved by the Anytime framework. Examples of such algorithms occur in Hritz and Oostenbrink (2007); Karimi et al. (2011); Wang and Jordan (2003). From a purely computing infrastructure-related perspective, exogenous factors such as processor hardware, competing jobs, memory bandwidth, network traffic or I/O load also affect the completion time of local moves even if they are not state-dependent within the algorithm itself. This is the case in Rodinger et al. (2006). In a more general setting, any population-based MCMC algorithm such as parallel tempering, SMC samplers ( Del Moral et al. (2006)), or parallelised generalised elliptical slice sampling ( Nishihara et al. (2014)), which combines a parallel updates step (e.g. local moves) and an inter-processor communication step (e.g. exchange moves, resampling) can benefit from the APTMC framework in future implementations.

As a final comment, we note the potential relevance of the work of Dupuis et al. (2012) in studying efficiency as a function of exchange frequency. As exchange steps of Anytime parallel tempering become more frequent, i.e. many occur between the stalled chains before the local move completes, it would be interesting to explore if our Anytime parallel tempering algorithm could be understood in the framework of the infinite swapping limit version of parallel tempering which has been shown in Dupuis et al. (2012) to dominate in numerical examples and in a specific theoretical context. However, their analysis ignores the cost of performing exchanges, which is non-negligible when communicating across processors, and thus cannot be plainly advocated without more consideration.

References

Atchadé, Y.F., Roberts, G.O., Rosenthal, J.S.: Towards optimal scaling of Metropolis-coupled Markov chain Monte Carlo. Stat. Comput. 21(4), 555–568 (2011)

Baragatti, M., Grimaud, A., Pommeret, D.: Likelihood-free parallel tempering. Stat. Comput. 23(4), 535–549 (2013)

Beskos, A., Roberts, G., Stuart, A., et al.: Optimal scalings for local Metropolis-Hastings chains on nonproduct targets in high dimensions. Ann. Appl. Probab. 19(3), 863–898 (2009)

Botev, Z.I., Grotowski, J.F., Kroese, D.P., et al.: Kernel density estimation via diffusion. Ann. Stat. 38(5), 2916–2957 (2010)

Boys, R.J., Wilkinson, D.J., Kirkwood, T.B.: Bayesian inference for a discretely observed stochastic kinetic model. Stat. Comput. 18(2), 125–135 (2008)

Calderhead, B., Girolami, M.: Estimating Bayes factors via thermodynamic integration and population MCMC. Comput. Stat. Data Anal. 53(12), 4028–4045 (2009)

Del Moral, P., Penev, S.: Stochastic Processes: From Applications to Theory. CRC Press, Boca Raton (2017)

Del Moral, P., Doucet, A., Jasra, A.: Sequential Monte Carlo samplers. J. R. Stat. Soc. Ser. B 68(3), 411–436 (2006)

Dupuis, P., Liu, Y., Plattner, N., Doll, J.D.: On the infinite swapping limit for parallel tempering. Multiscale Model. Simul. 10(3), 986–1022 (2012)

Earl, D.J., Deem, M.W.: Optimal allocation of replicas to processors in parallel tempering simulations. J. Phys. Chem. B 108(21), 6844–6849 (2004)

Fearnhead, P., Prangle, D.: Constructing summary statistics for approximate Bayesian computation: semi-automatic approximate Bayesian computation. J R. Stat. Soc. Ser. B 74(3), 419–474 (2012)