Abstract

Recent years have seen a huge development in spatial modelling and prediction methodology, driven by the increased availability of remote-sensing data and the reduced cost of distributed-processing technology. It is well known that modelling and prediction using infinite-dimensional process models is not possible with large data sets, and that both approximate models and, often, approximate-inference methods, are needed. The problem of fitting simple global spatial models to large data sets has been solved through the likes of multi-resolution approximations and nearest-neighbour techniques. Here we tackle the next challenge, that of fitting complex, nonstationary, multi-scale models to large data sets. We propose doing this through the use of superpositions of spatial processes with increasing spatial scale and increasing degrees of nonstationarity. Computation is facilitated through the use of Gaussian Markov random fields and parallel Markov chain Monte Carlo based on graph colouring. The resulting model allows for both distributed computing and distributed data. Importantly, it provides opportunities for genuine model and data scalability and yet is still able to borrow strength across large spatial scales. We illustrate a two-scale version on a data set of sea-surface temperature containing on the order of one million observations, and compare our approach to state-of-the-art spatial modelling and prediction methods.

References

Aune, E., Simpson, D.P., Eidsvik, J.: Parameter estimation in high dimensional Gaussian distributions. Stat. Comput. 24, 247–263 (2014)

Banerjee, S., Gelfand, A.E., Finley, A.O., Sang, H.: Gaussian predictive process models for large spatial data sets. J. R. Stat. Soc. B 70, 825–848 (2008)

Bender, E.A., Wilf, H.S.: A theoretical analysis of backtracking in the graph coloring problem. J. Algorithms 6, 275–282 (1985)

Berliner, L.M.: Hierarchical Bayesian time series models. In: Hanson, K.M., Silver, R.N. (eds.) Maximum Entropy and Bayesian Methods, pp. 15–22. Springer, New York (1996)

Besag, J., Green, P., Higdon, D., Mengersen, K.: Bayesian computation and stochastic systems. Stat. Sci. 10, 3–41 (1995)

Brown, D.A., McMahan, C.S., Self, S.W.: Sampling strategies for fast updating of Gaussian Markov random fields. Am. Stat. (2019). https://doi.org/10.1080/00031305.2019.1595144

Cao, C., Xiong, J., Blonski, S., Liu, Q., Uprety, S., Shao, X., Bai, Y., Weng, F.: Suomi NPP VIIRS sensor data record verification, validation, and long-term performance monitoring. J. Geophys. Res. Atmos. 118, 11664–11678 (2013)

Cressie, N., Johannesson, G.: Fixed rank kriging for very large spatial data sets. J. R. Stat. Soc. B 70, 209–226 (2008)

Cressie, N., Wikle, C.K.: Statistics for Spatio-Temporal Data. Wiley, Hoboken (2011)

Dewar, M., Scerri, K., Kadirkamanathan, V.: Data-driven spatio-temporal modeling using the integro-difference equation. IEEE Trans. Signal Process. 57, 83–91 (2009)

Eberly, L.E., Carlin, B.P.: Identifiability and convergence issues for Markov chain Monte Carlo fitting of spatial models. Stat. Med. 19, 2279–2294 (2000)

Finley, A.O., Datta, A., Cook, B.C., Morton, D.C., Andersen, H.E., Banerjee, S.: Efficient algorithms for Bayesian nearest-neighbor Gaussian processes. J. Comput. Graph. Stat. 28, 401–414 (2019)

Gelfand, A.E., Carlin, B.P., Trevisani, M.: On computation using Gibbs sampling for multilevel models. Stat. Sin. 11, 981–1003 (2001)

Gelman, A., Carlin, J.B., Stern, H.S., Dunson, D.B., Vehtari, A., Rubin, D.B.: Bayesian Data Analysis, 3rd edn. Chapman & Hall/CRC Press, Boca Raton (2013)

Gneiting, T., Raftery, A.E.: Strictly proper scoring rules, prediction, and estimation. J. Am. Stat. Assoc. 102, 359–378 (2007)

Gonthier, G.: Formal proof—the four-color theorem. Notices of the AMS 55, 1382–1393 (2008)

Gonzalez, J., Low, Y., Gretton, A., Guestrin, C.: Parallel Gibbs sampling: from colored fields to thin junction trees. In: Proceedings of the Fourteenth International Conference on Artificial Intelligence and Statistics, pp. 324–332 (2011)

Jensen, C.S., Kjærulff, U., Kong, A.: Blocking Gibbs sampling in very large probabilistic expert systems. Int. J. Hum. Comput. Stud. 42, 647–666 (1995)

Katzfuss, M.: A multi-resolution approximation for massive spatial datasets. J. Am. Stat. Assoc. 112, 201–214 (2017)

Katzfuss, M., Hammerling, D.: Parallel inference for massive distributed spatial data using low-rank models. Stat. Comput. 27, 363–375 (2017)

Knorr-Held, L., Rue, H.: On block updating in Markov random field models for disease mapping. Scand. J. Stat. 29, 597–614 (2002)

Lauritzen, S.L.: Graphical Models. Clarendon Press, Oxford (1996)

Lindgren, F., Rue, H.: Bayesian spatial modelling with R-INLA. J. Stat. Softw. 63(19), 1–25 (2015)

Lindgren, F., Rue, H., Lindström, J.: An explicit link between Gaussian fields and Gaussian Markov random fields: the stochastic partial differential equation approach. J. R. Stat. Soc. B 73, 423–498 (2011)

Monterrubio-Gómez, K., Roininen, L., Wade, S., Damoulas, T., Girolami, M.: Posterior inference for sparse hierarchical non-stationary models. Comput. Stat. Data Anal. 148, 106954 (2020)

Nordhausen, K., Oja, H., Filzmoser, P., Reimann, C.: Blind source separation for spatial compositional data. Math. Geosci. 47, 753–770 (2015)

Nychka, D., Bandyopadhyay, S., Hammerling, D., Lindgren, F., Sain, S.: A multiresolution Gaussian process model for the analysis of large spatial datasets. J. Comput. Graph. Stat. 24, 579–599 (2015)

R Core Team: R: A Language and Environment for Statistical Computing. R Foundation for Statistical Computing, Vienna (2019)

Rue, H., Held, L.: Gaussian Markov Random Fields: Theory and Applications. Chapman and Hall/CRC Press, Boca Raton (2005)

Rue, H., Tjelmeland, H.: Fitting Gaussian Markov random fields to Gaussian fields. Scand. J. Stat. 29, 31–49 (2002)

Sahr, K.: Location coding on icosahedral aperture 3 hexagon discrete global grids. Comput. Environ. Urban Syst. 32, 174–187 (2008)

Sahu, S.K., Mardia, K.V.: A Bayesian kriged Kalman model for short-term forecasting of air pollution levels. J. R. Stat. Soc. Ser. C 54, 223–244 (2005)

Sang, H., Huang, J.Z.: A full scale approximation of covariance functions for large spatial data sets. J. R. Stat. Soc. B 74, 111–132 (2012)

Scherer, P.O.J.: Computational Physics: Simulation of Classical and Quantum Systems, 3rd edn. Springer, Cham (2017)

Simpson, D., Illian, J.B., Lindgren, F., Sørbye, S.H., Rue, H.: Going off grid: computationally efficient inference for log-Gaussian Cox processes. Biometrika 103, 49–70 (2016)

Van Dyk, D.A., Park, T.: Partially collapsed Gibbs samplers: theory and methods. J. Am. Stat. Assoc. 103, 790–796 (2008)

Wikle, C.K., Zammit-Mangion, A., Cressie, N.: Spatio-Temporal Statistics with R. Chapman & Hall/CRC, Boca Raton (2019)

Wilkinson, D.J.: Parallel Bayesian computation. In: Kontoghiorghes, E.J. (ed.) Handbook of Parallel Computation and Statistics, pp. 477–508. CRC Press, Boca Raton (2006)

Zammit-Mangion, A., Cressie, N.: FRK: an R package for spatial and spatio-temporal prediction with large datasets. J. Stat. Softw. (2020). https://arxiv.org/pdf/1705.08105.pdf

Zammit-Mangion, A., Sanguinetti, G., Kadirkamanathan, V.: Variational estimation in spatiotemporal systems from continuous and point-process observations. IEEE Trans. Signal Process. 60, 3449–3459 (2012)

Zammit-Mangion, A., Cressie, N., Shumack, C.: On statistical approaches to generate Level 3 products from satellite remote sensing retrievals. Remote Sens. 10, 155 (2018)

Acknowledgements

We thank Yuliya Marchetti for providing the sea-surface temperature data set, Bohai Zhang for providing the MATLAB code for the implementation of the FSA, Matt Moores for general discussions on improving MCMC mixing, and Quan Vu for providing comments on an early version of this manuscript. AZ–M was supported by the Australian Research Council (ARC) Discovery Early Career Research Award, DE180100203.

Appendices

Appendix A: Targeted distribution of the Markov chain

In Sect. 3.2 we asserted that it is important to update \({\varvec{\eta }}_k\) concurrently with \({\varvec{\theta }}_k\), even though \({\varvec{\eta }}_k\) is later re-updated (Sect. 3.3). If one does not do this, \({\varvec{\eta }}_k^{\mathrm {rest}}\) in (14) would be ‘out of sync’ with the updated parameters \({\varvec{\theta }}_k\); as a consequence, when \({\varvec{\eta }}_k^{{\mathcal {T}}_1}\) or \({\varvec{\eta }}_k^{{\mathcal {T}}_2}\) is updated in (14), an incorrect distribution would be targeted. This phenomenon occurs when marginalising (termed ‘trimming’ by Van Dyk and Park 2008) in Gibbs samplers.

We show the importance of resampling on a very simple spatial model, where we have two sets of parameters, \({\varvec{\theta }}_1\) and \({\varvec{\theta }}_2\), and two sets of basis-function coefficients, \({\varvec{\eta }}_1\) and \({\varvec{\eta }}_2\). In what follows we omit conditioning on the data \({\mathbf {Z}}\), since this is implicit in all the distributions. We denote the target (posterior) distribution as \({{\,\mathrm{\mathrm {pr}}\,}}_0({\varvec{\varTheta }})\) where \({\varvec{\varTheta }}\equiv \{{\varvec{\theta }}_1, {\varvec{\theta }}_2, {\varvec{\eta }}_1, {\varvec{\eta }}_2\}\). In MCMC we seek a transition kernel \(q({\varvec{\varTheta }}' \mid {\varvec{\varTheta }})\) such that

\[\int q({\varvec{\varTheta }}' \mid {\varvec{\varTheta }})\, {{\,\mathrm{\mathrm {pr}}\,}}_0({\varvec{\varTheta }})\, \mathrm {d}{\varvec{\varTheta }} = {{\,\mathrm{\mathrm {pr}}\,}}_0({\varvec{\varTheta }}'). \qquad \text {(A.17)}\]

If (A.17) holds, then we say that the Markov chain preserves the target distribution, \({{\,\mathrm{\mathrm {pr}}\,}}_0\).

In a vanilla Gibbs sampler, one constructs the transition kernel from full conditional distributions of the target distribution:

Successive updating of the parameters in this fashion preserves the target distribution. To see this, substitute (A.18) in (A.17) to obtain

as required. Now, this vanilla sampler does not mix well due to the correlation a posteriori between \({\varvec{\eta }}_i\) and \({\varvec{\theta }}_i\), \(i = 1,2\) (Knorr-Held and Rue 2002). Since our model is in a linear, Gaussian, setting, one might be tempted to instead use the following transition kernel:

A similar treatment to the vanilla Gibbs case reveals that this only targets the correct distribution if \({{\,\mathrm{\mathrm {pr}}\,}}_0({\varvec{\theta }}_1, {\varvec{\theta }}_2, {\varvec{\eta }}_1, {\varvec{\eta }}_2) = {{\,\mathrm{\mathrm {pr}}\,}}_0({\varvec{\theta }}_1, {\varvec{\theta }}_2){{\,\mathrm{\mathrm {pr}}\,}}_0({\varvec{\eta }}_1,{\varvec{\eta }}_2)\), which is almost certainly not the case in our spatial models. Therefore, (A.19) is not a target-preserving kernel. In our MCMC scheme this is important: updating \({\varvec{\theta }}_k\) in blocks and subsequently updating \({\varvec{\eta }}_k\) in blocks will not yield samples from the posterior distribution.

A kernel which preserves the target can be constructed by updating \({\varvec{\eta }}_1\) and \({\varvec{\eta }}_2\) twice, with the intermediate quantities then discarded. As in Algorithm 1, denote these intermediate quantities as \({\varvec{\eta }}_1^*\) and \({\varvec{\eta }}_2^*\), respectively, and consider the transition kernel

This kernel preserves the target (posterior) distribution since

as required.
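The argument of this appendix can be checked numerically on a toy discrete analogue. The Python sketch below is an illustration only and does not reproduce the kernels of this appendix: it assumes a hypothetical target over three binary variables (standing in for one parameter \({\varvec{\theta }}\) and two coefficients \({\varvec{\eta }}_1, {\varvec{\eta }}_2\)) with arbitrary random probabilities, writes each update as a transition matrix \(\mathbf{P }\), and tests the discrete form of the invariance condition, \({\varvec{\pi }}^\top \mathbf{P } = {\varvec{\pi }}^\top \). The function name `update_kernel` and the three composite kernels are assumptions made for this example.

```python
import itertools
import numpy as np

# Hypothetical toy target pr_0 over three binary variables (theta, eta1, eta2).
# The probabilities are arbitrary; nothing here comes from the spatial model.
rng = np.random.default_rng(0)
p = rng.random((2, 2, 2))
p /= p.sum()
states = list(itertools.product(range(2), repeat=3))  # same order as p.ravel()
pi = p.ravel()


def update_kernel(p, axis, marginalise=()):
    """Transition matrix that redraws variable `axis` from its conditional
    under p, given every other variable except those in `marginalise`
    (these are integrated out of the conditional but left unchanged)."""
    marg = p.sum(axis=marginalise, keepdims=True) if marginalise else p
    cond = marg / marg.sum(axis=axis, keepdims=True)
    K = np.zeros((len(states), len(states)))
    for i, s in enumerate(states):
        for j, t in enumerate(states):
            # Only the updated variable is allowed to change.
            if all(s[a] == t[a] for a in range(3) if a != axis):
                idx = tuple(0 if a in marginalise else t[a] for a in range(3))
                K[i, j] = cond[idx]
    return K


K_theta = update_kernel(p, 0)                         # theta | eta1, eta2
K_theta_marg = update_kernel(p, 0, marginalise=(1,))  # theta | eta2, eta1 integrated out
K_eta1 = update_kernel(p, 1)                          # eta1 | theta, eta2
K_eta2 = update_kernel(p, 2)                          # eta2 | theta, eta1

# Vanilla Gibbs: every step uses a full conditional of the target.
P_vanilla = K_theta @ K_eta1 @ K_eta2
# Marginalised theta update, with eta1 left 'out of sync' while eta2 is updated.
P_out_of_sync = K_theta_marg @ K_eta2 @ K_eta1
# Marginalised theta update, with eta1 redrawn at once and re-updated later.
P_resampled = K_theta_marg @ K_eta1 @ K_eta2 @ K_eta1

for name, P in [("vanilla", P_vanilla),
                ("out of sync", P_out_of_sync),
                ("resampled", P_resampled)]:
    print(f"{name:12s} preserves target: {np.allclose(pi @ P, pi)}")
```

In this toy setting the vanilla and resampled kernels leave \({\varvec{\pi }}\) unchanged by construction, whereas the out-of-sync kernel would only do so if \({\varvec{\theta }}\) and \({\varvec{\eta }}_1\) were conditionally independent given \({\varvec{\eta }}_2\), which a generic target does not satisfy.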

Appendix B: Simulation experiment illustrating the benefit of alternating tilings

Consider a GMRF \({\varvec{\eta }}\sim \mathrm {Gau}({\mathbf {0}}, \mathbf{Q }^{-1})\), where \(\mathbf{Q }\) is the sparse tridiagonal matrix

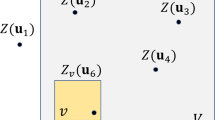

where omitted entries are zero, \(\phi \) is a length-scale parameter, and \(\sigma ^2_v\) a variance parameter. We consider the case where \({\varvec{\eta }}\in {\mathbb {R}}^n\) with \(n = 99\), and consider the following tilings,

Here, we compare Markov chain behaviour when sampling N times from this distribution using the two samplers, Sampler 1 and Sampler 2, detailed in Algorithms 2 and 3, respectively. Sampler 1 is a blocked Gibbs sampler which samples \({\varvec{\eta }}\) using only \({\mathcal {T}}_{11}\) and \( {\mathcal {T}}_{12}\), while Sampler 2 changes the tiling used, \(\{{\mathcal {T}}_{11},{\mathcal {T}}_{12}\}\) or \(\{{\mathcal {T}}_{21},{\mathcal {T}}_{22},{\mathcal {T}}_{23}\}\), at each iteration.

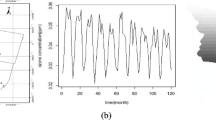

In our simulation experiment we simulated \({\varvec{\eta }}\) using \(\phi = 0.9\) and \(\sigma ^2_v = 0.2\), and let \(N = 10{,}000\). We then generated two Markov chains, one using Sampler 1 and another using Sampler 2, and for each chain took the last 5000 samples and applied a thinning factor of 2. In Fig. 9 we show the empirical auto-correlation functions computed from the trace plots of \({\varvec{\eta }}^{\{49\}}\) from both samplers. We see that samples of \({\varvec{\eta }}^{\{49\}}\) from Sampler 1 are highly correlated due to the proximity of this variable to the tiling boundary. Samples of \({\varvec{\eta }}^{\{49\}}\) from Sampler 2 are virtually uncorrelated. Hence, a system of shifting tiles in a Gibbs sampler for spatial GMRFs (as done in Algorithm 1) can virtually eliminate any auto-correlation that may appear due to tile boundaries. Note that a thinning factor greater than the number of tilings needs to be used to effectively remove any auto-correlation.
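The experiment can be imitated with a short Python sketch. Several details are assumptions rather than the settings used above: \(\mathbf{Q }\) is taken to be the precision matrix of a stationary first-order autoregressive process (one tridiagonal form consistent with the description), the first tiling is assumed to split the indices into \(\{1,\dots ,49\}\) and \(\{50,\dots ,99\}\), the second into three equal thirds, and a shorter chain of 4000 iterations with dense linear algebra is used for speed. All function names are hypothetical.

```python
import numpy as np


def ar1_precision(n, phi, sigma2_v):
    """Assumed form of Q: precision matrix of a stationary AR(1) process."""
    Q = np.diag(np.full(n, 1.0 + phi**2))
    Q[0, 0] = Q[-1, -1] = 1.0
    off = np.full(n - 1, -phi)
    return (Q + np.diag(off, 1) + np.diag(off, -1)) / sigma2_v


def make_block_sampler(Q, block):
    """Return a function that redraws eta[block] given the remaining entries."""
    rest = np.setdiff1d(np.arange(Q.shape[0]), block)
    L = np.linalg.cholesky(Q[np.ix_(block, block)])  # Q_AA = L L^T
    Q_AB = Q[np.ix_(block, rest)]

    def draw(eta, rng):
        # eta_A | eta_B ~ Gau(-Q_AA^{-1} Q_AB eta_B, Q_AA^{-1})
        b = -Q_AB @ eta[rest]
        mean = np.linalg.solve(L.T, np.linalg.solve(L, b))
        z = rng.standard_normal(len(block))
        eta[block] = mean + np.linalg.solve(L.T, z)

    return draw


def run_chain(Q, tilings, n_iter, rng, monitor=48):
    """Blocked Gibbs sampler that cycles through `tilings`, one per iteration."""
    samplers = [[make_block_sampler(Q, b) for b in tiling] for tiling in tilings]
    eta = np.zeros(Q.shape[0])
    trace = np.empty(n_iter)
    for it in range(n_iter):
        for draw in samplers[it % len(samplers)]:
            draw(eta, rng)
        trace[it] = eta[monitor]  # index 48 is eta^{49} in 1-based notation
    return trace


def acf(x, lag):
    """Empirical auto-correlation of x at the given lag."""
    x = x - x.mean()
    return float(x[:-lag] @ x[lag:] / (x @ x))


n, phi, sigma2_v = 99, 0.9, 0.2
Q = ar1_precision(n, phi, sigma2_v)
tiling_1 = [np.arange(0, 49), np.arange(49, 99)]                       # assumed
tiling_2 = [np.arange(0, 33), np.arange(33, 66), np.arange(66, 99)]    # assumed

rng = np.random.default_rng(1)
trace_1 = run_chain(Q, [tiling_1], 4000, rng)            # fixed tiling
trace_2 = run_chain(Q, [tiling_1, tiling_2], 4000, rng)  # alternating tilings

# Compare at lag 2, mirroring the thinning factor of 2 used in the text.
acf_1 = acf(trace_1[2000:], 2)
acf_2 = acf(trace_2[2000:], 2)
print(f"lag-2 auto-correlation, fixed tiling:       {acf_1:.2f}")
print(f"lag-2 auto-correlation, alternating tiling: {acf_2:.2f}")
```

Under these assumptions the monitored variable sits next to the boundary of the first tiling but in the interior of a tile in the second, so the alternating sampler should show a markedly lower auto-correlation at lag 2.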

Appendix C: Simulation experiment illustrating the sensitivity of the predictions to the chosen basis functions

In this section we conduct a simulation experiment that demonstrates the effect of a coarse discretisation, and the corresponding basis-function representation, on the prediction performance of the multi-scale model. The experiment is done in a one-dimensional, two-scale-process setting.

Consider a 1D Gaussian process on \(D = [0,1]\), which has as covariance function a sum of two exponential covariance functions, \(C_0(\cdot )\) and \(C_1(\cdot )\). The exponential covariance functions have as parameters the variances \(\sigma ^2_k\) and ranges \(\tau _k\), \(k = 0, 1\), and are given by

\[C_k(h) = \sigma ^2_k \exp (-|h| / \tau _k), \quad k = 0, 1,\]

where \(h\) is the separation between two points in \(D\).

We model the process of interest \(Y(\cdot )\) as a sum of the two processes \(Y_k(\cdot ) = \mathbf{a }_k(\cdot )^\top {\varvec{\eta }}_k, k = 0, 1\), where \(\mathbf{a }_k(\cdot )\) are basis functions and \({\varvec{\eta }}_k\) are basis-function coefficients. Now, let the basis functions \(\mathbf{a }_k(\cdot ), k = 0, 1,\) be piecewise constants on a regular partitioning of D. The basis functions have width \(\delta _0\) and \(\delta _1\), respectively, where \(\delta _1 < \delta _0\). We are interested in what the effect of a poor choice for \(\delta _0\) and \(\delta _1\) is when predicting \(Y(\cdot )\) from noisy observations \({\mathbf {Z}}\).

If \(\delta _k \rightarrow 0\), then the kth scale of the original process is reconstructed exactly if we let \({\varvec{\eta }}_k \sim \mathrm {Gau}({\mathbf {0}}, \mathbf{Q }_k^{-1}), k = 0,1,\) where \(\mathbf{Q }_k\) is the (sparse) tridiagonal matrix given by (B.20) with \(\sigma ^2_v\) replaced with \(\sigma ^2_{v,k}\) and \(\phi \) replaced with \(\phi _k\). Here, \(\phi _k = \exp (-\delta _k / \tau _k)\) and \(\sigma ^2_{v,k} = \sigma ^2_k(1 - \phi _k^2)\). In a simulation environment where we have access to \(\sigma ^2_k, \tau _k, k = 0,1\), we can therefore obtain accurate GMRF representations of our processes at the individual scales when \(\delta _k\) is small. We can then also see what happens as \(\delta _k\) grows (this corresponds to coarsening a triangulation in 2D).
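The link between the exponential covariance function and the GMRF parameters can be checked numerically. The Python sketch below assumes that (B.20) is the precision matrix of a stationary first-order autoregressive process, and uses \(\tau _0 = 0.4\), \(\sigma ^2_0 = 1\) and an illustrative width \(\delta _0 = 0.01\). It verifies that, at the centres of the basis functions, \(\mathbf{Q }_0^{-1}\) coincides with the exponential covariance matrix. The function name `ar1_precision` is hypothetical.

```python
import numpy as np


def ar1_precision(n, phi, sigma2_v):
    """Assumed form of (B.20): precision matrix of a stationary AR(1) process."""
    Q = np.diag(np.full(n, 1.0 + phi**2))
    Q[0, 0] = Q[-1, -1] = 1.0
    off = np.full(n - 1, -phi)
    return (Q + np.diag(off, 1) + np.diag(off, -1)) / sigma2_v


tau, sigma2, delta = 0.4, 1.0, 0.01  # tau_0, sigma^2_0, and an illustrative delta_0
n = int(round(1.0 / delta))          # number of piecewise-constant basis functions

phi = np.exp(-delta / tau)           # phi_k = exp(-delta_k / tau_k)
sigma2_v = sigma2 * (1.0 - phi**2)   # sigma^2_{v,k} = sigma^2_k (1 - phi_k^2)
Q = ar1_precision(n, phi, sigma2_v)

# Exponential covariance evaluated between the centres of the basis functions.
centres = delta * (np.arange(n) + 0.5)
C = sigma2 * np.exp(-np.abs(centres[:, None] - centres[None, :]) / tau)

# If Q is the inverse of C, then Q C is the identity up to rounding error.
max_abs_error = np.max(np.abs(Q @ C - np.eye(n)))
print(f"max |QC - I| = {max_abs_error:.2e}")
```

The match is exact only at the basis-function centres; between centres the piecewise-constant representation introduces the discretisation error that this appendix investigates.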

In our simulation environment we fixed \(\tau _0 = 0.4, \tau _1 = 0.04, \sigma ^2_0 = 1\), and \(\sigma ^2_1 = 0.05\), and conducted 100 Monte Carlo simulations, where in each simulation we did the following:

1. Randomly established an ‘unobserved’ region in D, \(D_{\text {gap}}\) say, where \(|D_{\text {gap}}| = 0.2\).

2. Generated 1100 observations on D, with 1000 in \(D \backslash D_{\text {gap}}\) and 100 in \(D_{\text {gap}}\) with measurement-error variance \(\sigma ^2_\varepsilon = 0.0002\). Five hundred of those in \(D\backslash D_{\text {gap}}\) were used as training data \({\mathbf {Z}}\), the remaining 500 in \(D \backslash D_{\text {gap}}\) as validation data \({\mathbf {Z}}_v^{(1)}\), and those 100 in \(D_{\text {gap}}\) as validation data \({\mathbf {Z}}_v^{(2)}\).

3. For various values of \(\delta _0\) and \(\delta _1\), we constructed \(\mathbf{Q }_0\) and \(\mathbf{Q }_1\) according to (B.20) and used these, the true measurement-error variance, and \({\mathbf {Z}}\), to predict \(Y(\cdot )\) at all validation data locations.

4. Computed the RMSPE and CRPS at the validation locations.
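A single replicate of the steps above can be sketched in Python as follows. This is a simplified illustration under stated assumptions, not the code used for the experiment: the unobserved region \(D_{\text {gap}}\) is omitted, (B.20) is assumed to be the precision matrix of a stationary first-order autoregressive process, the widths \(\delta _0 = 0.02\) and \(\delta _1 = 0.005\) are arbitrary, dense linear algebra is used, and all function names are hypothetical. Each exponential-covariance process is simulated exactly through its Markov (autoregressive) representation at sorted locations.

```python
import numpy as np


def ar1_precision(n, phi, sigma2_v):
    """Assumed form of (B.20): precision matrix of a stationary AR(1) process."""
    Q = np.diag(np.full(n, 1.0 + phi**2))
    Q[0, 0] = Q[-1, -1] = 1.0
    off = np.full(n - 1, -phi)
    return (Q + np.diag(off, 1) + np.diag(off, -1)) / sigma2_v


def simulate_exponential_gp(s_sorted, tau, sigma2, rng):
    """Exact draw of a zero-mean GP with exponential covariance at sorted 1D
    locations, using its Markov property."""
    y = np.empty(len(s_sorted))
    y[0] = rng.normal(scale=np.sqrt(sigma2))
    phi = np.exp(-np.diff(s_sorted) / tau)
    for i in range(1, len(s_sorted)):
        sd = np.sqrt(sigma2 * (1.0 - phi[i - 1] ** 2))
        y[i] = phi[i - 1] * y[i - 1] + rng.normal(scale=sd)
    return y


def basis_matrix(s, delta):
    """Piecewise-constant basis functions of width delta on [0, 1]."""
    n_cells = int(round(1.0 / delta))
    cell = np.minimum((s / delta).astype(int), n_cells - 1)
    A = np.zeros((len(s), n_cells))
    A[np.arange(len(s)), cell] = 1.0
    return A


def predict(s_obs, z, s_pred, deltas, taus, sigma2s, sigma2_eps):
    """Predictive mean and variance of noisy data at s_pred under the
    two-scale basis-function model with known parameters."""
    n_cells = [int(round(1.0 / d)) for d in deltas]
    Q = np.zeros((sum(n_cells), sum(n_cells)))
    start = 0
    for n, d, tau, s2 in zip(n_cells, deltas, taus, sigma2s):
        phi = np.exp(-d / tau)
        Q[start:start + n, start:start + n] = ar1_precision(n, phi, s2 * (1.0 - phi**2))
        start += n
    A_obs = np.hstack([basis_matrix(s_obs, d) for d in deltas])
    A_pred = np.hstack([basis_matrix(s_pred, d) for d in deltas])
    # Gaussian conjugacy: posterior precision and covariance of (eta_0, eta_1).
    cov_eta = np.linalg.inv(Q + A_obs.T @ A_obs / sigma2_eps)
    mean = A_pred @ (cov_eta @ (A_obs.T @ z) / sigma2_eps)
    # Variance of the process plus measurement error (we validate on noisy data).
    var = np.einsum("ij,jk,ik->i", A_pred, cov_eta, A_pred) + sigma2_eps
    return mean, var


rng = np.random.default_rng(2)
taus, sigma2s, sigma2_eps = (0.4, 0.04), (1.0, 0.05), 0.0002
s = np.sort(rng.uniform(0.0, 1.0, 1000))
y = sum(simulate_exponential_gp(s, tau, s2, rng) for tau, s2 in zip(taus, sigma2s))
z = y + rng.normal(scale=np.sqrt(sigma2_eps), size=len(s))

train = rng.permutation(len(s))[:500]             # training data Z
val = np.setdiff1d(np.arange(len(s)), train)      # validation data
mean, var = predict(s[train], z[train], s[val], deltas=(0.02, 0.005),
                    taus=taus, sigma2s=sigma2s, sigma2_eps=sigma2_eps)
rmspe = np.sqrt(np.mean((z[val] - mean) ** 2))
print(f"RMSPE at validation locations: {rmspe:.3f}")
```

Repeating the call to `predict` over a grid of `deltas` values, and over many simulated data sets, would give the averaged scores described in this appendix.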

Each Monte Carlo simulation provided us with an RMSPE and a CRPS corresponding to a combination of \(\{\delta _0, \delta _1\}\). We then averaged over the 100 simulations to provide averaged RMSPEs and CRPSs corresponding to each combination of \(\{\delta _0, \delta _1\}\). This experiment allows us to analyse the detrimental effect of a large \(\delta _0\) or \(\delta _1\) on our predictions.
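For reference, the two scores can be computed as in the following Python sketch. It assumes Gaussian predictive distributions, for which the CRPS has a closed form (Gneiting and Raftery 2007), and uses the negatively oriented convention in which smaller values are better. The function names and the averaging over validation locations are assumptions made for this example.

```python
import math
import numpy as np


def rmspe(z_val, pred_mean):
    """Root-mean-squared prediction error over the validation locations."""
    err = np.asarray(z_val, dtype=float) - np.asarray(pred_mean, dtype=float)
    return float(np.sqrt(np.mean(err**2)))


def crps_gaussian(z_val, pred_mean, pred_sd):
    """CRPS of Gaussian predictive distributions Gau(pred_mean, pred_sd^2),
    evaluated at the validation data (smaller is better)."""
    z = (np.asarray(z_val, dtype=float) - pred_mean) / pred_sd
    cdf = 0.5 * (1.0 + np.vectorize(math.erf)(z / math.sqrt(2.0)))
    pdf = np.exp(-0.5 * z**2) / math.sqrt(2.0 * math.pi)
    return pred_sd * (z * (2.0 * cdf - 1.0) + 2.0 * pdf - 1.0 / math.sqrt(math.pi))


# Toy validation data and predictions (made-up numbers for illustration).
z_val = np.array([0.10, -0.30, 0.25])
pred_mean = np.array([0.05, -0.20, 0.40])
pred_sd = np.array([0.10, 0.10, 0.20])

print(f"RMSPE:     {rmspe(z_val, pred_mean):.4f}")
print(f"mean CRPS: {np.mean(crps_gaussian(z_val, pred_mean, pred_sd)):.4f}")
```

The CRPS penalises both a biased predictive mean and a poorly calibrated predictive standard deviation, which is why it is reported alongside the RMSPE.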

The results from this experiment are summarised in Fig. 10. The figure clearly shows that the RMSPE increases considerably in regions where data is dense (\({\mathbf {Z}}_v^{(1)}\), left panels) and \(\delta _1\) is large; on the other hand, \(\delta _0\) does not have much of an effect in these regions. The situation is reversed in regions where data is missing in large contiguous blocks (\({\mathbf {Z}}_v^{(2)}\), right panels). Here \(\delta _1\) does not play a big role while \(\delta _0\) does. When doing simple kriging (with the exact model) the mean RMSPEs were 0.07 and 0.41, respectively, while the mean CRPSs were 0.033 and 0.24, respectively, which are relatively close to what was obtained with the smallest values we chose for \(\delta _0\) and \(\delta _1\). Therefore, the way in which we discretise both scales is important, and both \(\delta _0\) and \(\delta _1\) should be made as small as needed for their respective scale; in this experiment \(\delta _1 = 0.001\) and \(\delta _0 = 0.01\) are suitable choices. In practice, the coarseness of the grid (or triangulation in 2D) will be determined through computational considerations. Fortunately, we see that predictive performance deteriorates at a reasonably slow rate as the discretisations become coarser. A more in-depth analysis would need to take into account the convergence rates of finite-element approximations.

Cite this article

Zammit-Mangion, A., Rougier, J. Multi-scale process modelling and distributed computation for spatial data. Stat Comput 30, 1609–1627 (2020). https://doi.org/10.1007/s11222-020-09962-6

DOI: https://doi.org/10.1007/s11222-020-09962-6